IV.14 Dynamics

Bodil Branner

1 Introduction

Dynamical systems are used to describe the way systems evolve in time, and have their origin in the laws of nature that NEWTON [VI. 14] formulated in Principia Mathematica (1687). The associated mathematical discipline, the theory of dynamics, is closely related to many parts of mathematics, in particular analysis, topology, measure theory, and combinatorics. It is also highly influenced and stimulated by problems from the natural sciences, such as celestial mechanics, hydrodynamics, statistical mechanics, meteorology, and other parts of mathematical physics, as well as reaction chemistry, population dynamics, and economics.

Computer simulations and visualizations play an important role in the development of the theory; they have changed our views about what should be considered typical, rather than special and atypical.

There are two main branches of dynamical systems: continuous and discrete. The main focus of this paper will be holomorphic dynamics, which concerns discrete dynamical systems of a special kind. These systems are obtained by taking a HOLOMORPHIC FUNCTION [I.3 §5.6] f defined on the complex numbers and applying it repeatedly. An important example is when f is a quadratic polynomial.

1.1 Two Basic Examples

It is interesting to note that both types of dynamical system, continuous and discrete, can be well illustrated by examples that date back to Newton.

(i) The N-body problem models the motion in the solar system of the sun and N - 1 planets, and does so in terms of differential equations. Each body is represented by a single point, namely its center of mass, and the motion is determined by Newton’s universal law of gravitation, also called the inverse square law. This says that the gravitational force between two bodies is proportional to each of their masses and inversely proportional to the square of the distance between them. Let $r_i$ denote the position vector of the $i$th body, $m_i$ its mass, and $g$ the universal gravitational constant. Then the force on the $i$th body due to the $j$th has magnitude $g m_i m_j / \|r_j - r_i\|^2$, and its direction is along the line from $r_i$ to $r_j$. We can work out the total force on the $i$th body by adding up all these forces for $j \ne i$. Since a unit vector in the direction from $r_i$ to $r_j$ is $(r_j - r_i)/\|r_j - r_i\|$, we obtain a force of

$$\sum_{j \ne i} \frac{g\, m_i m_j\, (r_j - r_i)}{\|r_j - r_i\|^3}.$$

(There is a cube on the bottom rather than a square in order to compensate for the magnitude of rj - ri.) A solution to the N-body problem is a set of differentiable vector functions (r1 (t), . . . , rN(t)), depending on time t, that satisfy the N differential equations

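$$m_i\, r_i''(t) = \sum_{j \ne i} \frac{g\, m_i m_j\, (r_j(t) - r_i(t))}{\|r_j(t) - r_i(t)\|^3}, \qquad i = 1, \dots, N,$$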

which result from Newton’s second law, which states that force = mass × acceleration.

Newton was able to solve the two-body problem explicitly. By neglecting the influence of other planets, he derived the laws formulated by Johannes Kepler, which describe how each planet moves in an elliptic orbit around the sun. However, the jump to N > 2 makes an enormous difference to the complication of the problem: except in very special cases, the system of equations can no longer be solved explicitly (see THE THREE-BODY PROBLEM [V.33]). Nevertheless, Newton’s equations are of great practical importance when it comes to guiding satellites and other space missions.
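The total force in the N-body problem is straightforward to evaluate numerically, even though the resulting equations cannot usually be solved explicitly. The following is a minimal sketch in Python, not a production integrator: the function name `gravitational_forces` is hypothetical, and the default value g = 1 is an illustrative choice of units rather than the physical constant.

```python
import math

def gravitational_forces(positions, masses, g=1.0):
    """Return the total gravitational force on each body.

    positions: list of (x, y, z) tuples, one per body.
    masses:    list of positive floats, one per body.
    g:         gravitational constant (1.0 is an illustrative unit choice).
    """
    n = len(positions)
    forces = []
    for i in range(n):
        total = [0.0, 0.0, 0.0]
        for j in range(n):
            if j == i:
                continue
            # Vector from body i to body j and its length.
            diff = [positions[j][c] - positions[i][c] for c in range(3)]
            dist = math.sqrt(sum(d * d for d in diff))
            # Magnitude g*m_i*m_j/dist^2 times the unit vector diff/dist:
            # this is why the denominator carries a cube.
            scale = g * masses[i] * masses[j] / dist ** 3
            for c in range(3):
                total[c] += scale * diff[c]
        forces.append(tuple(total))
    return forces
```

For two bodies the forces returned are equal in magnitude and opposite in direction, as the formula requires.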

(ii) NEWTON’S METHOD [II.4§2.3] for solving equations is quite different and does not involve differential equations. We consider a differentiable function f of one real variable and wish to determine a zero of f, that is, a solution to the equation f (x) = 0. Newton’s idea was to define a new function:

$$N_f(x) = x - \frac{f(x)}{f'(x)}.$$

To put this more geometrically, Nf(x) is the x-coordinate of the point where the tangent line to the graph y = f(x) at the point (x, f(x)) crosses the x-axis. (If f′(x) = 0, then this tangent line is horizontal and Nf(x) is not defined.)

Under many circumstances, if x is close to a zero of f, then Nf(x) is significantly closer. Therefore, if we start with some value x0 and form the sequence obtained by repeated application of Nf, that is, the sequence x0, x1, x2 , . . . , where x1 = Nf(x0), x2 = Nf(x1), and so on, we can expect that this sequence will converge to a zero of f. And this is true: if the initial value x0 is sufficiently close to a zero, then the sequence does indeed converge toward that zero, and does so extremely quickly, basically doubling the number of correct digits in each step. This rapid convergence makes Newton’s method very useful for numerical computations.
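The rapid convergence is easy to observe in a small experiment. The sketch below, in Python, iterates the Newton function for the illustrative choice f(x) = x² - 2 starting from x0 = 1; the helper names `newton_step` and `newton_orbit` are hypothetical, and the example function is an assumption made only for demonstration.

```python
def newton_step(f, df, x):
    """Apply the Newton function once: x - f(x)/f'(x)."""
    return x - f(x) / df(x)

def newton_orbit(f, df, x0, steps):
    """Return the list [x0, x1, ..., x_steps] of Newton iterates."""
    orbit = [x0]
    for _ in range(steps):
        orbit.append(newton_step(f, df, orbit[-1]))
    return orbit

# Illustrative example: f(x) = x^2 - 2, whose positive zero is sqrt(2).
orbit = newton_orbit(lambda x: x * x - 2, lambda x: 2 * x, 1.0, 6)
errors = [abs(x - 2 ** 0.5) for x in orbit]
```

Printing `errors` shows the error shrinking roughly quadratically from one step to the next until it reaches the limit of floating-point precision.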

1.2 Continuous Dynamical Systems

We can think of a continuous dynamical system as a system of first-order differential equations, which determine how the system evolves in time. A solution is called an orbit or trajectory, and is parametrized by a number t, which one usually thinks of as time, that takes real values and varies continuously: hence the name “continuous” dynamical system. A periodic orbit of period T is a solution that repeats itself after time T, but not earlier.

The differential equation $x''(t) = -x(t)$ is of second order, but it is nevertheless a continuous dynamical system because it is equivalent to the system of two first-order differential equations $x_1'(t) = x_2(t)$ and $x_2'(t) = -x_1(t)$. In a similar way, the system of differential equations of the N-body problem can be brought into standard form by introducing new variables. The equations are equivalent to a system of 6N first-order differential equations in the variables of the position vectors $r_i = (x_{i1}, x_{i2}, x_{i3})$ and the velocity vectors $r_i' = (y_{i1}, y_{i2}, y_{i3})$. Thus, the N-body problem is a good example of a continuous dynamical system.
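Once a system is in first-order form it can be handed to any standard numerical integrator. As a hedged illustration, the Python sketch below follows the orbit of the two-variable system above through the point (1, 0), using the classical fourth-order Runge-Kutta scheme; that scheme is an assumption chosen for the example and is not discussed in this article, and the function names are hypothetical. The exact solution is (cos t, -sin t), a periodic orbit of period 2π.

```python
import math

def harmonic_field(state):
    """Right-hand side of the system x1' = x2, x2' = -x1."""
    x1, x2 = state
    return (x2, -x1)

def rk4_step(f, state, h):
    """One classical fourth-order Runge-Kutta step of size h."""
    def shifted(s, k, c):
        return tuple(si + c * ki for si, ki in zip(s, k))
    k1 = f(state)
    k2 = f(shifted(state, k1, h / 2))
    k3 = f(shifted(state, k2, h / 2))
    k4 = f(shifted(state, k3, h))
    return tuple(
        s + h / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def integrate(f, state, t_end, steps):
    """Advance the state from time 0 to t_end in equal steps."""
    h = t_end / steps
    for _ in range(steps):
        state = rk4_step(f, state, h)
    return state

# After one full period the numerical orbit should return close to (1, 0).
final = integrate(harmonic_field, (1.0, 0.0), 2 * math.pi, 1000)
```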

In general, if we have a dynamical system consisting of n equations, then we can write the ith equation in the form

$$x_i'(t) = f_i(x_1(t), \dots, x_n(t)),$$

or alternatively we can write all the equations at once in the form $x'(t) = f(x(t))$, where $x(t)$ is the vector $(x_1(t), \dots, x_n(t))$ and $f = (f_1, \dots, f_n)$ is a function from $\mathbb{R}^n$ to $\mathbb{R}^n$. Note that f is assumed not to depend on t. If it does, then the system can be brought into standard form by adding the variable $x_{n+1} = t$ and the differential equation $x_{n+1}'(t) = 1$, which increases the dimension of the system from n to n + 1.

The simplest systems are linear ones, where f is a linear map: that is, f(x) is given by Ax for some constant n × n matrix A. The system above, $x_1'(t) = x_2(t)$ and $x_2'(t) = -x_1(t)$, is an example of a linear system. Most systems, however, including the one for the N-body problem, are nonlinear. If the function f is “nice” (for instance, continuously differentiable), then uniqueness and existence of solutions are guaranteed for any initial point $x_0$. That is, there is exactly one solution that passes through the point $x_0$ at time t = 0. For example, in the N-body problem there is exactly one solution for any given set of initial position vectors and initial velocity vectors. It also follows from uniqueness that any pair of orbits must either coincide or be totally disjoint. (Bear in mind that the word “orbit” in this context does not mean the set of positions of a single point mass, but rather the evolution of the vector that represents all the positions and velocities of all the masses.)

Although it is seldom possible to express solutions to nonlinear systems explicitly, we know that they exist, and we call the dynamical system deterministic since solutions are completely determined by their initial conditions. For a given system and given initial conditions it is therefore theoretically possible to predict its entire future evolution.

1.3 Discrete Dynamical Systems

A discrete dynamical system is a system that evolves in jumps: “time,” in such a system, is best represented by an integer rather than a real number. A good example is Newton’s method for solving equations. In this instance, the sequence of points we saw earlier, $x_0, x_1, \dots, x_k, \dots$, where $x_k = N_f(x_{k-1})$, is called the orbit of $x_0$. We say that it is obtained by iteration of the function $N_f$, i.e., by repeated application of the function.

This idea can easily be generalized to other mappings F : X → X, where X could be the real axis, an interval in the real axis, the plane, a subset of the plane, or some more complicated space. The important thing is that the output F(x) of any input x can be used as the next input. This guarantees that the orbit of any $x_0$ in X is defined for all future times. That is, we can define a sequence $x_0, x_1, \dots, x_k, \dots$, where $x_k = F(x_{k-1})$ for every k. If the function F has an inverse $F^{-1}$, then we can iterate both forwards and backwards and obtain the full orbit of $x_0$ as the bi-infinite sequence $\dots, x_{-2}, x_{-1}, x_0, x_1, x_2, \dots$, where $x_k = F(x_{k-1})$ and, equivalently, $x_{k-1} = F^{-1}(x_k)$, for all integer values of k.

The orbit of $x_0$ is periodic of period k if it repeats itself after time k, but not earlier, i.e., if $x_k = x_0$, but $x_j \ne x_0$ for $j = 1, \dots, k - 1$. The orbit is called pre-periodic if it is eventually periodic, in other words if there exist $\ell \ge 1$ and $k \ge 1$ such that $x_\ell$ is periodic of period k, but none of the $x_j$ for $0 \le j < \ell$ are periodic. The notion of pre-periodicity has no counterpart in continuous dynamics.

A discrete dynamical system is deterministic, since the orbit of any given initial point $x_0$ is completely determined once you know $x_0$.
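Because the orbit is completely determined by its starting point, periodicity and pre-periodicity can be detected mechanically by recording when a value first recurs. Here is a small sketch in Python under the assumption that the map can be evaluated exactly (for instance on integers), so that equality tests are reliable; the function name and the example map x² - 1 are illustrative choices, not taken from the text.

```python
def preperiod_and_period(F, x0, max_steps=1000):
    """Return (l, k), where l is the number of steps before the orbit of x0
    reaches a periodic point and k is the period of that point.

    l == 0 means x0 itself is periodic; l >= 1 means x0 is pre-periodic.
    Returns None if no value recurs within max_steps iterations.
    """
    first_seen = {}
    x = x0
    for step in range(max_steps + 1):
        if x in first_seen:
            l = first_seen[x]
            return l, step - l
        first_seen[x] = step
        x = F(x)
    return None

def example_map(x):
    """Illustrative map on the integers: x -> x^2 - 1."""
    return x * x - 1
```

For this example map, the orbit of 0 is 0, -1, 0, ..., which is periodic of period 2, while the orbit of 1 is 1, 0, -1, 0, ..., which is pre-periodic.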

1.4 Stability

The modern theory of dynamics was greatly influenced by the work of POINCARÉ [VI.61], and in particular by his prize-winning memoir on the three-body problem, succeeded by three more elaborate volumes on celestial mechanics, all from the late nineteenth century. The memoir was written in response to a competition where one of the proposed problems concerned stability of the solar system. Poincaré introduced the so-called restricted three-body problem, where the third body is assumed to have an infinitely small mass: it does not influence the motion of the other two bodies but it is influenced by them. Poincaré’s work became the prelude to topological dynamics, which focuses on topological properties of solutions to dynamical systems and takes a qualitative approach to them.

Of special interest is the long-term behavior of a system. A periodic orbit is called stable if all orbits through points sufficiently close to it stay close to it at all future times. It is called asymptotically stable if all sufficiently close orbits approach it as time tends to infinity. Let us illustrate this by two linear examples in discrete dynamics. For the real function F(x) = -x, all points have a periodic orbit: 0 has period 1 and all other x have period 2. Every orbit is stable, but none is asymptotically stable. The real function $G(x) = \tfrac{1}{2}x$ has only one periodic orbit, namely 0. Since G(0) = 0, this orbit has period 1, and we call it a fixed point. If you take any number and repeatedly divide it by 2, then the resulting sequence will approach 0, so the fixed point 0 is asymptotically stable.

One of the methods introduced by Poincaré during his study of the three-body problem was a reduction from a continuous dynamical system, in dimension n, say, to an associated discrete dynamical system, a mapping in dimension n - 1. The idea is as follows. Suppose we have a periodic orbit of period T > 0 in some continuous system. Choose a point x0 on the orbit and a hypersurface Σ through x0, for instance part of a hyperplane, such that the orbit cuts through Σ at x0. For any point in Σ that is sufficiently close to x0, one can follow its orbit around and see where it next intersects Σ. This defines a transformation, known as the Poincaré map, which takes the original point to the next point of intersection of its orbit with Σ. It follows from the fact that dynamical systems have unique solutions that every Poincaré map is injective in the neighborhood of x0 (within Σ) for which the Poincaré map is defined. One can perform both forwards and backwards iterations. Note that the periodic orbit of x0 in the continuous system is stable (respectively, asymptotically stable) exactly when the fixed point x0 of the Poincaré map in the discrete system is stable (respectively, asymptotically stable).

1.5 Chaotic Behavior

The notion of chaotic dynamics arose in the 1970s. It has been used in different settings, and there is no single definition that covers all uses of the term. However, the property that best characterizes chaos is the phenomenon of sensitive dependence on initial conditions. Poincaré was the first to observe sensitivity to initial conditions in his treatment of the three-body problem.

Instead of describing his observations let us look at a much simpler example from discrete dynamics. Take as a dynamical space X the half-open unit interval [0, 1), and let F be the function that doubles a number and reduces it modulo 1. That is, $F(x) = 2x$ when $0 \le x < \tfrac{1}{2}$ and $F(x) = 2x - 1$ when $\tfrac{1}{2} \le x < 1$. Let $x_0$ be a number in X and let its iterates be $x_1 = F(x_0)$, $x_2 = F(x_1)$, and so on. Then $x_k$ is the fractional part of $2^k x_0$. (The fractional part of a real number t is what you get when you subtract the largest integer less than or equal to t.)

A good way to understand the behavior of the sequence $x_0, x_1, x_2, \dots$ of iterates is to consider the binary expansion of $x_0$. Suppose, for example, that this begins 0.110100010100111 . . . . To double a number when it is written in binary, all you have to do is shift every digit to the left (just as one does in the decimal system when multiplying by 10). So $2x_0$ will have a binary expansion that begins 1.10100010100111 . . . . To obtain $F(x_0)$, we have to take the fractional part of this, which we do by subtracting the initial 1. This gives us $x_1$ = 0.10100010100111 . . . . Repeating the process we find that $x_2$ = 0.0100010100111 . . . , $x_3$ = 0.100010100111 . . . , and so on. (Notice that when we calculated $x_3$ from $x_2$ there was no need to subtract 1, since the first digit after the “decimal point” was a 0.) Now consider a different choice of initial number, $\tilde{x}_0$ = 0.110100010110110 . . . . The first ten digits after the decimal point are the same as the first ten digits of $x_0$, so $\tilde{x}_0$ is very close to $x_0$. However, if we apply F ten times to $x_0$ and $\tilde{x}_0$, then their respective eleventh digits have shifted leftwards and become the first digits of $x_{10}$ = 0.00111 . . . and $\tilde{x}_{10}$ = 0.10110 . . . . These two numbers differ by almost $\tfrac{1}{2}$, so they are not at all close.

In general, if we know x0 to an accuracy of k binary digits and no more, then after k iterations of the map F we have lost all information: xk could lie anywhere in the interval [0, 1). Therefore, even though the system is deterministic, it is impossible to predict its long-term behavior without knowing x0 with perfect accuracy.
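The loss of information can be checked with exact rational arithmetic. The following Python sketch truncates the two binary expansions used in this section to the fifteen digits displayed (an assumption, since the actual numbers continue beyond those digits) and applies the doubling map ten times; the helper names are hypothetical.

```python
from fractions import Fraction

def doubling(x):
    """The map F(x) = 2x mod 1 on the interval [0, 1)."""
    y = 2 * x
    return y - 1 if y >= 1 else y

def from_binary(digits):
    """Exact value of the finite binary expansion 0.d1 d2 d3 ..."""
    return sum(Fraction(int(d), 2 ** (i + 1)) for i, d in enumerate(digits))

# Two starting points that agree in their first ten binary digits.
x = from_binary("110100010100111")
y = from_binary("110100010110110")
gap_start = abs(x - y)      # less than 2**-11

for _ in range(10):
    x, y = doubling(x), doubling(y)
gap_after = abs(x - y)      # now close to 1/2
```

Exact fractions are used deliberately: with ordinary floating-point numbers the doubling map discards one bit of the mantissa at each step, so a simulation would itself run out of information after a few dozen iterations.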

This is true in general: it is impossible to make long-term predictions in any part of a dynamical system that shows sensitivity to initial conditions unless the initial conditions are known exactly. In practical applications this is never the case. For instance, when applying a mathematical model to perform weather forecasts, one does not know the initial conditions exactly, and this is why reliable long-term forecasting is impossible.

Sensitivity is also important in the notion of so-called strange attractors. A set A is called an attractor if all orbits that start in A stay in A and if all orbits through nearby points get closer and closer to A. In continuous systems, some simple sets that can be attractors are equilibrium points, periodic orbits (limit cycles), and surfaces such as a torus. In contrast to these examples, strange attractors have both complicated geometry and complicated dynamics: the geometry is fractal and the dynamics sensitive. We shall see examples of fractals later on.

The best-known strange attractor is the Lorenz attractor. In the early 1960s, the meteorologist Edward N. Lorenz studied a three-dimensional continuous dynamical system that gave a simplified model of heat flow. While doing so, he noticed that if he restarted his computer with its initial conditions chosen as the output of an earlier calculation, then the trajectory started to diverge from the one he had previously observed. The explanation he found was that the computer used more precision in its internal calculations than it showed in its output. For this reason, it was not immediately apparent that the initial conditions were in fact very slightly different from before. Because the system was sensitive, this tiny difference eventually made a much bigger difference. He coined the poetic phrase “the butterfly effect” to describe this phenomenon, suggesting that a small disturbance such as a butterfly flickering its wings could in time have a dramatic effect on the long-term evolution of the weather and trigger a tornado thousands of miles away. Computer simulations of the Lorenz system indicate that solutions are attracted to a complicated set that “looks like” a strange attractor. The question of whether it actually was one remained open for a long time. It is not obvious how trustworthy computer simulations are when one is studying sensitive systems, since the computer rounds off the numbers in each step. In 1998 Warwick Tucker gave a computer-assisted proof that the Lorenz attractor is in fact a strange attractor. He used interval arithmetic, where numbers are represented by intervals and estimates can be made precise.

For topological reasons, sensitivity to initial conditions is possible for continuous dynamical systems only when the dimension is at least 3. For discrete systems where the map F is injective, the dimension must be at least 2. However, for noninjective mappings, sensitivity can occur for one-dimensional systems, as we saw with the example given earlier. This is one of the reasons that discrete one-dimensional dynamical systems have been intensively studied.

1.6 Structural Stability

Two dynamical systems are said to be topologically equivalent if there is a homeomorphism (a continuous map with continuous inverse) that maps the orbits of one system onto the orbits of the other, and vice versa. Roughly speaking, this means that there is a continuous change of variables that turns one system into the other.

As an example, consider the discrete dynamical system given by the real quadratic polynomial F(x) = 4x(1 - x). Suppose we were to make the substitution y = -4x + 2. How could we describe the system in terms of y? Well, if we apply F, then we change x to 4x(1 - x), which means that y = -4x + 2 changes to -4F(x) + 2 = -16x(1 - x) + 2. But

$$\begin{aligned}
-16x(1 - x) + 2 &= 16x^2 - 16x + 2 \\
&= (-4x + 2)^2 - 2 \\
&= y^2 - 2.
\end{aligned}$$

Therefore, the effect of applying the polynomial function F to x is to apply a different polynomial function to y, namely $Q(y) = y^2 - 2$. Since the change of variables from x to -4x + 2 is continuous and invertible, one says that the functions F and Q are conjugate.

Because F and Q are conjugate, the orbit of any $x_0$ under F becomes, after the change of variables, the orbit of the corresponding point $y_0 = -4x_0 + 2$ under Q. That is, for every k we have $y_k = -4x_k + 2$. The two systems are topologically equivalent: if you want to understand the dynamics of one of them, you can do so by studying the other, since its dynamics will be qualitatively the same.
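The conjugacy relation can be verified along any particular orbit. The Python sketch below does this with exact fractions; the names `h` for the change of variables and `conjugacy_holds` for the checking function are hypothetical, and the starting point 1/3 is an arbitrary illustrative choice.

```python
from fractions import Fraction

def F(x):
    """The quadratic polynomial F(x) = 4x(1 - x)."""
    return 4 * x * (1 - x)

def Q(y):
    """The conjugate polynomial Q(y) = y^2 - 2."""
    return y * y - 2

def h(x):
    """The change of variables y = -4x + 2."""
    return -4 * x + 2

def conjugacy_holds(x0, steps):
    """Check that h(F(x)) == Q(h(x)) at each of the first `steps` orbit points."""
    x = x0
    for _ in range(steps):
        if h(F(x)) != Q(h(x)):
            return False
        x = F(x)
    return True

ok = conjugacy_holds(Fraction(1, 3), 6)
```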

For continuous dynamical systems the notion of equivalence is slightly looser in that we allow a homeomorphism between two topologically equivalent systems to map one orbit onto another without respecting the exact time evolution, but for discrete dynamical systems we must demand that the time evolution is respected as in the example above: in other words, we insist on conjugacy.

The term dynamical system was coined by Stephen Smale in the 1960s and has taken off since then. Smale developed the theory of robust systems, also named structurally stable systems, a notion that was introduced in the 1930s by Alexander A. Andronov and Lev S. Pontryagin. A dynamical system is called structurally stable if all systems sufficiently close to it, belonging to some specified family of systems, are in fact topologically equivalent to it. We say that they all have the same qualitative behavior. An example of the kind of family one might consider is the set of all real quadratic polynomials of the form $x^2 + a$. This family is parametrized by a, and the systems close to a given polynomial $x^2 + a_0$ are all the polynomials $x^2 + a$ for which a is close to $a_0$. We shall return to the question of structural stability when we discuss holomorphic dynamics later.

If a family of dynamical systems parametrized by a variable a is not structurally stable, it may still be that the system with parameter a0 is topologically equivalent to all systems with parameter a in some region that contains a0. A major goal of research into dynamics is to understand not just the qualitative structure of each system in the family, but also the structure of the parameter space, that is, how it is divided up into such regions of stability. The boundaries that separate these regions form what is called the bifurcation set: if a0 belongs to this set, then there will be parameters a arbitrarily close to a0 for which the corresponding system has a different qualitative behavior.

A description and classification of structurally stable systems and a classification of possible bifurcations is not within reach for general dynamical systems. However, one of the success stories in the subject, holomorphic dynamics, studies a special class of dynamical systems for which many of these goals have been attained. It is time to turn our attention to this class.

2 Holomorphic Dynamics

Holomorphic dynamics is the study of discrete dynamical systems where the map to be iterated is a HOLOMORPHIC FUNCTION [I.3 §5.6] of the COMPLEX NUMBERS [I.3 §1.5]. Complex numbers are typically denoted by z. In this article, we shall consider iterations of complex polynomials and rational functions (that is, functions like $(z^2 + 1)/(z^3 + 1)$ that are ratios of polynomials), but much of what we shall say about them is true for more general holomorphic functions, such as EXPONENTIAL [III.25] and TRIGONOMETRIC [III.92] functions.

Whenever one restricts attention to a special kind of dynamical system, there will be tools that are specially adapted to that situation. In holomorphic dynamics these tools come from complex analysis. When we concentrate on rational functions, there are more special tools, and if we restrict further to polynomials, then there are yet others, as we shall see.

Why might one be interested in iterating rational functions? One answer arose in 1879, when CAYLEY [VI.46] had the idea of trying to find roots of complex polynomials by extending Newton’s method, which we discussed in the introduction, from real numbers to complex numbers. Given any polynomial P, the corresponding Newton function NP is a rational function, given by the formula

$$N_P(z) = z - \frac{P(z)}{P'(z)}.$$

To apply Newton’s method, one iterates this rational function.

The study of the iteration of rational functions flourished at the beginning of the twentieth century, thanks in particular to work of Pierre Fatou and Gaston Julia (who independently obtained many of the same results). Part of their work concerned the study of the local behavior of functions in the neighborhoods of a fixed point. But they were also concerned about global dynamical properties and were inspired by the theory of so-called normal families, then recently established by Paul Montel. However, research on holomorphic dynamics almost came to a stop around 1930, because the fractal sets that lay behind the results were so complicated as to be almost beyond imagination. The research came back to life in around 1980 with the vastly extended calculating powers of computers, and in particular the possibility of making sophisticated graphic visualizations of these fractal sets. Since then, holomorphic dynamics has attracted a lot of attention. New techniques continue to be developed and introduced.

To set the scene, let us start by looking at one of the simplest of polynomials, namely $z^2$.

2.1 The Quadratic Polynomial $z^2$

The dynamics of the simplest quadratic polynomial, $Q_0(z) = z^2$, plays a fundamental role in the understanding of the dynamics of any quadratic polynomial. Moreover, the dynamical behavior of $Q_0$ can be analyzed and understood completely.

If $z = re^{i\theta}$, then $z^2 = r^2 e^{2i\theta}$, so squaring a complex number squares its modulus and doubles its argument. Therefore, the unit circle (the set of complex numbers of modulus 1) is mapped by $Q_0$ to itself, while a circle of radius r < 1 is mapped onto a circle closer to the origin, and a circle of radius r > 1 is mapped onto a circle farther away.

Let us look more closely at what happens to the unit circle. A typical point in the circle, $e^{i\theta}$, can be parametrized by its argument θ, which we can take to lie in the interval [0, 2π). When we square this number, we obtain $e^{2i\theta}$, which is parametrized by the number 2θ if 2θ < 2π, but if 2θ ≥ 2π, then we subtract 2π so that the argument, 2θ - 2π, still lies in [0, 2π). This is strongly reminiscent of the dynamical system we considered in section 1.5. In fact, if we replace the argument θ by its modified argument θ/2π, which amounts to writing $e^{2\pi i\theta}$ instead of $e^{i\theta}$, then it becomes exactly the same system. Therefore, the behavior of $z^2$ on the unit circle is chaotic.

As for the rest of the complex plane, the origin is an asymptotically stable fixed point, $Q_0(0) = 0$. For any point $z_0$ inside the unit circle the iterates $z_k$ converge to 0 as k tends to infinity. For any point $z_0$ outside the unit circle the distance $|z_k|$ between the iterates $z_k$ and the origin tends to infinity as k tends to infinity. The set of initial points $z_0$ with bounded orbit is equal to the closed unit disk, i.e., all points for which $|z_0| \le 1$. Its boundary, the unit circle, divides the complex plane into two domains with qualitatively different dynamical behavior.
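The three regimes can be seen directly by tracking the modulus of the iterates. The short Python sketch below does so for three illustrative starting points, one inside the unit circle, one on it, and one outside it; the function name `square_orbit_moduli` and the chosen starting points are assumptions made for the example.

```python
def square_orbit_moduli(z0, steps):
    """Return [|z_0|, |z_1|, ..., |z_steps|] for the iteration z -> z^2."""
    z = z0
    moduli = [abs(z)]
    for _ in range(steps):
        z = z * z
        moduli.append(abs(z))
    return moduli

inside = square_orbit_moduli(0.9j, 10)    # moduli shrink toward 0
on_circle = square_orbit_moduli(1j, 5)    # moduli stay equal to 1
outside = square_orbit_moduli(1.1, 10)    # moduli grow without bound
```

Since the modulus is squared at every step, the kth entry of each list is the starting modulus raised to the power 2^k, which explains how quickly the first list collapses and the third one blows up.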

Some orbits of $Q_0$ are periodic. In order to determine which ones, we first notice that the only possibility off the unit circle is the fixed point at the origin, since all other points, when you repeatedly square them, either get steadily closer and closer to the origin, or get steadily farther and farther away. So now let us look at the unit circle, and consider the point $e^{2\pi i\theta_0}$, with modified argument $\theta_0$. If this point is periodic with period k, we must have $2^k\theta_0 \equiv \theta_0 \pmod 1$: that is, $(2^k - 1)\theta_0$ must be an integer. Because of this, it is convenient to parametrize a point on the unit circle by its modified argument. From now on, when we say “the point θ,” we shall mean the point $e^{2\pi i\theta}$, and when we say “argument” we shall mean modified argument.

We have just established that the point θ is periodic with period k only if $(2^k - 1)\theta$ is an integer. It follows that there is one point of period 1, namely $\theta_0 = 0$. There are two points of period 2, forming one orbit, namely $\tfrac{1}{3} \mapsto \tfrac{2}{3} \mapsto \tfrac{1}{3}$. There are six points of period 3, forming two orbits, namely $\tfrac{1}{7} \mapsto \tfrac{2}{7} \mapsto \tfrac{4}{7} \mapsto \tfrac{1}{7}$ and $\tfrac{3}{7} \mapsto \tfrac{6}{7} \mapsto \tfrac{5}{7} \mapsto \tfrac{3}{7}$. (At each stage, we double the number we have, and subtract 1 if that is needed to get us back into the interval [0, 1).) The points of period 4 are fractions with denominator 15, but the converse is not true: the fractions $\tfrac{5}{15} = \tfrac{1}{3}$ and $\tfrac{10}{15} = \tfrac{2}{3}$ have the lower period 2. The periodic points on the unit circle are dense in the unit circle, meaning that arbitrarily close to any point is a periodic point. This follows from the observation that all repeating binary expansions, such as 0.1100011000110001100011000 . . . are periodic, and any finite sequence of 0s and 1s is the start of a repeating sequence. One can, in fact, show that the periodic points on the unit circle are exactly the points whose argument is a fraction p/q in [0, 1) with q odd. Any fraction with even denominator can be written in the form $p/(2^\ell q)$ for some odd number q. After $\ell$ iterations, such a fraction will land on a periodic point, so the initial point is pre-periodic. Points with rational argument in [0, 1) have a finite orbit, while points with irrational argument have an infinite orbit. The reason for taking modified arguments is now justified: the behavior of the dynamics depends on whether $\theta_0$ is rational or irrational.
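These counts of periodic points can be reproduced by brute force. The sketch below, in Python with exact fractions, lists every argument of exact period k by testing the candidates p/(2^k - 1); the function names `exact_period` and `points_of_period` are hypothetical.

```python
from fractions import Fraction

def exact_period(theta):
    """Smallest k >= 1 with 2^k * theta = theta (mod 1).

    Assumes theta is periodic under doubling, i.e. a fraction in [0, 1)
    with odd denominator; otherwise the loop would not terminate.
    """
    k = 1
    current = (2 * theta) % 1
    while current != theta:
        current = (2 * current) % 1
        k += 1
    return k

def points_of_period(k):
    """All arguments in [0, 1) whose orbit under doubling has exact period k."""
    q = 2 ** k - 1
    candidates = {Fraction(p, q) for p in range(q)}
    return sorted(t for t in candidates if exact_period(t) == k)
```

Calling `points_of_period(4)` returns twelve of the fifteen fractions with denominator 15: the argument 0 is excluded because it has period 1, and 5/15 and 10/15 are excluded because they have period 2.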

When θ0 is irrational its orbit may or may not be dense in [0, 1). This is another fact that is easy to see if one considers binary expansions. For instance, a very special example of a θ0 with a dense orbit is given by the binary expansion

θ0 = 0.0100011011000001010011100101110111 . . . ,

where one obtains this expansion by simply listing all finite binary sequences in turn: first the blocks of length one, 0 and 1, then the blocks of length two, 00, 01, 10, and 11, and so on. When we iterate, this binary expansion shifts to the left and all possible finite sequences appear at some time or another at the beginning of some iterate θk.
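The construction of this expansion is easy to reproduce for blocks up to a given length. The sketch below builds the finite beginning of the expansion and finds the iterate at which a given block first appears at the front; the function names are hypothetical, and only finitely many digits are generated.

```python
from itertools import product


def all_blocks_expansion(max_len):
    """Concatenate every binary block of length 1, 2, ..., max_len in order."""
    return "".join(
        "".join(bits)
        for n in range(1, max_len + 1)
        for bits in product("01", repeat=n)
    )


def first_shift_starting_with(digits, block):
    """Smallest k such that the k-fold left shift of digits starts with block.

    Returns -1 if the block does not occur in the digits generated so far.
    """
    return digits.find(block)
```

If the k-th shift begins with a block of length n, then the iterate θk agrees with any number whose expansion begins with that block to within 2^(-n), which is the sense in which the orbit comes close to every point of [0, 1).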

2.2 Characterization of Periodic Points

Let z0 be a fixed point of a holomorphic map F. How do the iterates of points near z0 behave? The answer depends crucially on a number ρ, called the multiplier of the fixed point, which is defined to be F´ (z0). To see why this is relevant, notice that if z is very close to z0, then F(z) is, to a first-order approximation, equal to F(z0) + F´(z0)(z - z0) = z0 + ρ(z - z0). Thus, when you apply F to a point near z0, its difference from z0 approximately multiplies by ρ. If |ρ| < 1, then nearby points will get closer to z0, in which case z0 is called an attracting fixed point. If ρ = 0, then this happens very quickly and z0 is called super-attracting. If |ρ| > 1, then nearby points get farther away and z0 is called repelling. Finally, if |ρ| = 1, then one says that z0 is indifferent.

If z0 is indifferent, then its multiplier will take the form $\rho = e^{2\pi i\theta}$, and near z0 the map F will be approximately a rotation about z0 by an angle of 2πθ. The behavior of the system depends very much on the precise value of θ. We call the fixed point rationally or irrationally indifferent if θ is rational or irrational, respectively. The dynamics is not yet completely understood in all irrational cases.

A periodic point z0 of period k will be a fixed point of the kth iterate $F^k = F \circ \cdots \circ F$ of F. For this reason we define its multiplier by $\rho = (F^k)'(z_0)$. It follows from the chain rule that

$$(F^k)'(z_0) = \prod_{j=0}^{k-1} F'(z_j), \qquad z_j = F^j(z_0),$$

and therefore that the derivative of $F^k$ is the same at all points of the periodic orbit. This formula also implies that a super-attracting periodic orbit must contain a critical point (that is, a point where the derivative of F is zero): if $(F^k)'(z_0) = 0$, then at least one $F'(z_j)$ must be 0.

Note that 0 is a super-attracting fixed point of $Q_0$, and that any periodic orbit of $Q_0$ of period k on the unit circle has multiplier $2^k$. All periodic orbits on the unit circle are therefore repelling.
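The value of the multiplier can be confirmed numerically by multiplying the derivatives along a periodic orbit, as the chain rule suggests. This is only a floating-point sketch for the map z → z^2; the function name `cycle_multiplier` is hypothetical.

```python
import cmath


def cycle_multiplier(theta, k):
    """Product of the derivatives 2*z_j of z -> z*z along k iterates.

    The orbit starts at the point exp(2*pi*i*theta) of the unit circle.
    If theta has period k under doubling, this is the multiplier of the orbit.
    """
    z = cmath.exp(2j * cmath.pi * theta)
    rho = 1
    for _ in range(k):
        rho *= 2 * z  # derivative of z*z at the current orbit point
        z = z * z
    return rho
```

For the argument 1/7, which has period 3, the result is close to 8, and for 1/3, which has period 2, it is close to 4.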

2.3 A One-Parameter Family of Quadratic Polynomials

The quadratic polynomial $Q_0$ sits at the center of the one-parameter family of quadratic polynomials of the form $Q_c(z) = z^2 + c$. (We considered this family earlier, but then z and c were real rather than complex.) For each fixed complex number c we are interested in the dynamics of the polynomial $Q_c$ under iteration. The reason we do not need to study more general quadratic polynomials is that they can be brought into this form by a simple substitution w = az + b, similar to the substitution in the real example in section 1.6. For any given quadratic polynomial P we can find exactly one substitution w = az + b and one c such that

$$a\,P(z) + b = (az + b)^2 + c \quad \text{for all } z.$$

Therefore, if we understand the dynamics of the polynomials Qc, then we understand the dynamics of all quadratic polynomials.
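Comparing coefficients in the identity above gives the substitution explicitly. The sketch below assumes that P is written as αz^2 + βz + γ with α ≠ 0; this parametrization and the function name `normalize_quadratic` are introduced here for illustration and are not taken from the article.

```python
def normalize_quadratic(alpha, beta, gamma):
    """Return (a, b, c) with a*P(z) + b == (a*z + b)**2 + c for all z.

    Here P(z) = alpha*z**2 + beta*z + gamma with alpha != 0.
    Matching the z**2 terms gives a = alpha, the z terms give b = beta/2,
    and the constant terms give c = alpha*gamma + b - b**2.
    """
    a = alpha
    b = beta / 2
    c = alpha * gamma + b - b * b
    return a, b, c
```

For instance, `normalize_quadratic(1, lam, 0)` returns the substitution and the parameter c for a polynomial of the form λz + z^2.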

There are other representative families of quadratic polynomials that can be useful. One example is the family $F_\lambda(z) = \lambda z + z^2$. The substitution $w = z + \tfrac{1}{2}\lambda$ changes $F_\lambda$ into $Q_c$, where $c = \tfrac{1}{2}\lambda - \tfrac{1}{4}\lambda^2$. We shall return to the expression of c in terms of λ later on. In the family of polynomials $Q_c$, the parameter $c = Q_c(0)$ coincides with the only critical value of $Q_c$ in the plane: as we shall see later, critical orbits play an essential role in the analysis of the global dynamics. In the family of polynomials $F_\lambda$ the parameter λ is equal to the multiplier of the fixed point at the origin of $F_\lambda$, which sometimes makes this family more convenient.

2.4 The Riemann Sphere

To understand further the dynamics of polynomials it is best to regard them as a special case of rational functions. Since a rational function can sometimes be infinite, the natural space to consider is not the complex plane $\mathbb{C}$ but the extended complex plane, which is the complex plane together with the point “∞.” This space is denoted $\hat{\mathbb{C}} = \mathbb{C} \cup \{\infty\}$. A geometrical picture (see figure 1) is obtained by identifying the extended complex plane with the Riemann sphere. This is simply the unit sphere $\{(x_1, x_2, x_3) : x_1^2 + x_2^2 + x_3^2 = 1\}$ in three-dimensional space. Given a number z in the complex plane, the straight line joining z to the north pole N = (0, 0, 1) intersects this sphere in exactly one place (apart from N itself). This place is the point in the sphere that is associated with z. Notice that the bigger |z| is, the closer the associated point is to N. We therefore regard N as corresponding to the point ∞.
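The point of the sphere associated with z can be written down explicitly. The sketch below assumes that the complex plane is placed in three-dimensional space as the plane x3 = 0, with z = x + iy at (x, y, 0); that placement and the function name `to_sphere` are assumptions made for illustration.

```python
def to_sphere(z):
    """Project z onto the unit sphere along the line through N = (0, 0, 1).

    The line N + t*((x, y, 0) - N) meets the sphere again at
    t = 2 / (|z|**2 + 1), which gives the formula below.
    """
    z = complex(z)
    s = abs(z) ** 2
    return (2 * z.real / (s + 1), 2 * z.imag / (s + 1), (s - 1) / (s + 1))
```

Under this convention 0 goes to the south pole, the unit circle goes to the equator, and the third coordinate approaches 1 as |z| grows.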

Let us now think of $Q_0(z) = z^2$ as a function from $\hat{\mathbb{C}}$ to $\hat{\mathbb{C}}$. We have seen that 0 is a super-attracting fixed point of $Q_0$. What about ∞, which is a fixed point as well? The classification we gave in terms of multipliers does not work at ∞, but a standard trick in this situation is to “move” ∞ to 0. If one wishes to understand the behavior of a function f with a fixed point at ∞, one can look instead at the function g(z) = 1/f(1/z), which has a fixed point at 0 (since 1/f(1/0) = 1/f(∞) = 1/∞ = 0). When $f(z) = z^2$, g(z) is also $z^2$, so ∞ is also a super-attracting fixed point of $Q_0$.

Figure 2 The Douady rabbit. The filled Julia set of $Q_{c_0}$, where $c_0$ is the one root of the polynomial $(c^2 + c)^2 + c$ that has positive imaginary part. This corresponds to one of the three possible c values for which the critical orbit $0 \mapsto c \mapsto c^2 + c \mapsto (c^2 + c)^2 + c = 0$ is periodic of period 3. The critical orbit is marked with three white dots inside the filled Julia set: 0 in the black, $c_0$ in the light gray, and $c_0^2 + c_0$ in the gray. The corresponding three attracting basins of $Q_{c_0}^3$ are marked in black, light gray, and gray, respectively. The Julia set is the common boundary of the black, light gray, and gray basins of attraction as well as of $A_{c_0}(\infty)$.
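The parameter of the Douady rabbit can be approximated numerically. The sketch below applies Newton's method to the cubic factor that remains after dividing (c^2 + c)^2 + c by c; the starting guess and the function name `rabbit_parameter` are assumptions chosen for illustration, not part of the article.

```python
def rabbit_parameter(guess=-0.1 + 0.7j, steps=50):
    """Approximate the root of c**3 + 2*c**2 + c + 1 near the given guess.

    This cubic is ((c**2 + c)**2 + c) / c, so its roots are the nonzero
    parameters for which the critical orbit 0 -> c -> c**2 + c -> 0 closes up.
    """
    c = guess
    for _ in range(steps):
        p = c**3 + 2 * c**2 + c + 1
        dp = 3 * c**2 + 4 * c + 1
        c -= p / dp  # Newton step
    return c
```

Starting from a guess in the upper half-plane, the iteration should settle on the root with positive imaginary part; a guess in the lower half-plane would be expected to find its complex conjugate.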

In general, if P is any nonconstant polynomial, then it is natural to define P(∞) to be ∞. Applying the above trick, we obtain a rational function. For example, if $P(z) = z^2 + 1$, then $1/P(1/z) = z^2/(z^2 + 1)$. If P has degree at least 2, then ∞ is a super-attracting fixed point.

The connection between $\hat{\mathbb{C}}$ and rational functions is expressed by the following fact: a function $F : \hat{\mathbb{C}} \to \hat{\mathbb{C}}$ is holomorphic everywhere (with suitable definitions at ∞) if and only if it is a rational function. This is not obvious, but is typically proved in a first course in complex analysis. Among the rational functions, the polynomials are the ones for which $F(\infty) = \infty = F^{-1}(\infty)$.

A polynomial P of degree d has d - 1 critical points in the plane (not including ∞). These are the roots of the derivative P´, counted with multiplicity. The critical point at ∞ has multiplicity d - 1, as can again be seen by looking at the map 1/P(1/z). In particular, quadratic polynomials have exactly one critical point in the plane. The degree of a rational function P/Q (where P and Q have no common roots) is defined to be the maximal degree of the polynomials P and Q. A rational function of degree d has 2d - 2 critical points in $\hat{\mathbb{C}}$, as we have just seen for polynomials.

2.5 Julia Sets of Polynomials

It can be shown that the only invertible holomorphic maps from $\mathbb{C}$ to $\mathbb{C}$ are polynomials of degree 1, that is, functions of the form az + b with a ≠ 0. The dynamical behavior of these maps is simple and easy to analyze, and hence not interesting.

From now on, therefore, we shall consider only polynomials P of degree at least 2. For all such polynomials, ∞ is a super-attracting fixed point, from which it follows that the plane is split into two disjoint sets with qualitatively different dynamics, one consisting of points that are attracted to ∞ and the other consisting of points that are not. The attracting basin of ∞, denoted by $A_P(\infty)$, consists of all initial points z such that $P^k(z) \to \infty$ as $k \to \infty$. (Here, $P^k(z)$ stands for the result of applying P to z k times.) The complement of $A_P(\infty)$ is called the filled Julia set, and is denoted by $K_P$. It can be defined as the set of all points z such that the sequence $z, P(z), P^2(z), P^3(z), \ldots$ is bounded. (It is not hard to show that sequences of this kind either tend to ∞ or are bounded.)

The attracting basin of ∞ is an open set and the filled Julia set is a closed, bounded set (i.e., a COMPACT SET [III.9]). The attracting basin of ∞ is always connected. For this reason the boundary of $K_P$ is equal to the boundary of $A_P(\infty)$. The common boundary is called the Julia set of P and is denoted by $J_P$. The three sets $K_P$, $A_P(\infty)$, and $J_P$ are completely invariant, i.e., $P(K_P) = K_P = P^{-1}(K_P)$, and so on. If we replace P by any iterate $P^k$, then the filled Julia set, the attracting basin of ∞, and the Julia set of $P^k$ are the same sets as those of P.

For the polynomial Q0, we showed earlier that the filled Julia set is the closed unit disk, {z : | z | ≤ 1}; the attracting basin of ∞ is its complement, {z : | z | > 1}; and the Julia set is the unit circle, {z : | z | = 1}.
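The definition of the filled Julia set by bounded orbits suggests a simple numerical membership test for the quadratic family z → z^2 + c. The sketch below is a heuristic: it relies on the standard bound, not stated in the text, that an orbit point of modulus greater than max(2, |c|) must escape to ∞, and a negative answer means only that no escape was seen within the chosen number of steps. The function name `escapes` is hypothetical.

```python
def escapes(c, z, max_iter=200):
    """Return True if the orbit of z under z -> z*z + c is seen to escape.

    Escape is detected when an orbit point has modulus greater than
    max(2, |c|); from then on the moduli increase to infinity.
    """
    radius = max(2.0, abs(c))
    for _ in range(max_iter):
        if abs(z) > radius:
            return True
        z = z * z + c
    return abs(z) > radius
```

With c = 0 this reproduces the description above for points that are not too close to the unit circle: `escapes(0, 0.5)` is false and `escapes(0, 1.5)` is true.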

The name “filled Julia set” refers to the fact that KP is equal to JP with all its holes (or, more formally, the bounded components of its complement) filled in. The complement of the Julia set is called the Fatou set and any connected component of it is called a Fatou component.

Figures 2-6 show different examples of Julia sets of quadratic polynomials $Q_c$. For simplicity we set $K_c = K_{Q_c}$, $J_c = J_{Q_c}$, and $A_c(\infty) = A_{Q_c}(\infty)$. Note that all Julia sets $J_c$ are symmetric around 0, owing to the symmetry in the formula: $Q_c(-z) = Q_c(z)$, which implies that if a point z belongs to $J_c$, then so does -z.

Figure 3 The Julia set of $Q_{1/4}$. Every point inside the Julia set (including the critical point 0) is attracted (under repeated applications of $Q_{1/4}$) to the rationally indifferent fixed point 1/2 with multiplier ρ = 1, which belongs to $J_{1/4}$.

Figure 4 The Julia set of $Q_c$ with a so-called Siegel disk around an irrationally indifferent fixed point of multiplier ![](). The corresponding c-value is equal to $\tfrac{1}{2}\rho - \tfrac{1}{4}\rho^2$. In the Siegel disk, the Fatou component containing the fixed point, the action of $Q_c$ can, after a suitable change of variables, be expressed as $w \mapsto \rho w$. The fixed point is marked and so are some orbits of points in its vicinity. The critical orbit is dense in the boundary of the Siegel disk.

2.6 Properties of Julia Sets

In this section we shall list several common properties of Julia sets. The proofs of these, which are beyond the scope of this article, mostly depend on the theory of normal families.

- The Julia set is the set of points for which the system displays sensitivity to initial conditions, i.e., the chaotic subset of the dynamical system.

- The repelling orbits belong to the Julia set and form a dense subset of the set. That is, any point in the Julia set can be approximated arbitrarily well by a repelling point. This is the definition originally used by Julia. (Of course, the name “Julia set” was used only later.)

- For any point z in the Julia set, the set of iterated preimages forms a dense subset of the Julia set. This property is used when one is making computer pictures of Julia sets.

- In fact, for any point z in $\hat{\mathbb{C}}$ (with at most one or two exceptions), the closure of the set of iterated preimages contains the Julia set.

- For any point z in the Julia set and any neighborhood $U_z$ of z, the iterated images $F^k(U_z)$ cover all of $\hat{\mathbb{C}}$ except at most one or two exceptional points. This property demonstrates an extreme sensitivity to initial conditions.

- If Ω is a union of Fatou components that is completely invariant (that is, $F(\Omega) = \Omega = F^{-1}(\Omega)$), then the boundary of Ω coincides with the Julia set. This justifies the definition of the Julia set of a polynomial as the boundary of the attracting basin of ∞. Compare also with figure 2, where the attracting basins of $Q_{c_0}^3$ and $A_{c_0}(\infty)$ are examples of such completely invariant sets.

- The Julia set is either connected or consists of uncountably many connected components. An example of the latter is shown in figure 6.

- The Julia set is typically a fractal: when one zooms in on it, one finds that the complication of the set is repeated at all scales. It is also self-similar, in the following sense: for any noncritical point z in the Julia set, any sufficiently small neighborhood $U_z$ of z is mapped bijectively onto $F(U_z)$, a neighborhood of F(z). The Julia set in $U_z$ and the Julia set in $F(U_z)$ look alike.

All but the last two properties can easily be verified in the example Q0. In this case the exceptional points are 0 and ∞.
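The property about iterated preimages is the basis of a common way of drawing Julia sets of the quadratic family z → z^2 + c: one follows preimages ±sqrt(z - c) backward from a non-exceptional starting point. The sketch below follows a single randomly chosen branch at each step, which is one possible variant and not necessarily the method used for the figures in this article; the function names, the starting point, and the burn-in length are assumptions.

```python
import cmath
import random


def backward_orbit(c, z, steps, rng):
    """Follow one random branch of the preimages +/- sqrt(z - c), `steps` times."""
    for _ in range(steps):
        z = cmath.sqrt(z - c)
        if rng.random() < 0.5:
            z = -z
    return z


def sample_julia(c, n_points=1000, burn_in=40, seed=0):
    """Return n_points iterated preimages that lie close to the Julia set."""
    rng = random.Random(seed)
    # Discard the first preimages, which may still be far from the Julia set.
    z = backward_orbit(c, 3 + 1j, burn_in, rng)
    points = []
    for _ in range(n_points):
        z = backward_orbit(c, z, 1, rng)
        points.append(z)
    return points
```

For c = 0 each backward step takes the square root of the modulus, so the sampled points crowd onto the unit circle. For other parameters the sampled points may cover some parts of the Julia set much more thinly than others.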

2.7 Böttcher Maps and Potentials

2.7.1 Böttcher Maps

Consider the quadratic polynomial $Q_{-2}(z) = z^2 - 2$. If z belongs to the interval [-2, 2], then $z^2$ belongs to the interval [0, 4], so $Q_{-2}(z)$ also belongs to the interval [-2, 2]. It follows that this interval is contained in the filled Julia set $K_{-2}$.

Figure 5 (a) Some equipotentials and external rays $R_0(\theta)$ of $Q_0$ in $A_0(\infty)$, the set of complex numbers of modulus greater than 1. (b) The corresponding equipotentials and external rays $R_{-2}(\theta)$ of $Q_{-2}$ in $A_{-2}(\infty)$, the set of complex numbers not in $K_{-2} = J_{-2} = [-2, 2]$. The external rays that are drawn have arguments $\theta = \tfrac{1}{12}p$, where p = 0, 1, . . . , 11.

The polynomial $Q_{-2}(z)$ is not topologically equivalent to $Q_0(w) = w^2$, but when z is big enough, it behaves in a similar way, since 2 is small compared with $z^2$. We can express this similarity with an appropriate holomorphic change of variables. Indeed, suppose that z = w + 1/w. Then when w changes to $w^2$, z changes to $w^2 + 1/w^2$. But this equals

$$(w + 1/w)^2 - 2 = z^2 - 2 = Q_{-2}(z).$$

The reason this does not show that $Q_0$ and $Q_{-2}$ are equivalent is that the change of variables cannot be inverted. However, in a suitable region it can. If z = w + 1/w, then $w^2 - wz + 1 = 0$. Solving this quadratic equation we find that $w = \tfrac{1}{2}(z \pm \sqrt{z^2 - 4})$, which leaves us with the problem of which square root to take. It can be shown that for one choice |w| < 1 and for the other choice |w| > 1, as long as z does not lie in the interval [-2, 2]. If we always choose the square root for which |w| > 1, then it turns out that the resulting function of z is a continuous function (in fact, holomorphic) from the set $\mathbb{C} \setminus [-2, 2]$ of complex numbers not in [-2, 2] to the set {w : |w| > 1} of complex numbers of modulus greater than 1.
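The change of variables and its inverse on the complement of [-2, 2] can be tried out directly. The sketch below picks the root of the quadratic equation with modulus greater than 1 by testing both candidates, which sidesteps the question of which square root the library returns; the function names are hypothetical.

```python
import cmath


def psi_minus2(w):
    """The change of variables w -> w + 1/w."""
    return w + 1 / w


def phi_minus2(z):
    """Inverse of psi_minus2 for z outside [-2, 2].

    The two roots of w**2 - w*z + 1 = 0 have product 1, so exactly one of
    them has modulus greater than 1 when z is not in [-2, 2].
    """
    root = cmath.sqrt(z * z - 4)
    w = (z + root) / 2
    return w if abs(w) > 1 else (z - root) / 2
```

For a point z outside the interval, `phi_minus2(z * z - 2)` should agree with `phi_minus2(z) ** 2` up to rounding, which is the statement that the change of variables turns z → z^2 - 2 into squaring.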

Once this is established, it follows that the behavior of $Q_{-2}$ on the set $\mathbb{C} \setminus [-2, 2]$ is topologically the same as the behavior of $Q_0$ on the set {w : |w| > 1}. In particular, points in $\mathbb{C} \setminus [-2, 2]$ have orbits that tend to infinity under iteration by $Q_{-2}$. Therefore, the attracting basin $A_{-2}(\infty)$ of $Q_{-2}$ is $\mathbb{C} \setminus [-2, 2]$, and the filled Julia set $K_{-2}$ and the Julia set $J_{-2}$ are both equal to [-2, 2].

Let us write $\psi_{-2}(w)$ for w + 1/w. The function $\psi_{-2}$, which we used to change variables, maps circles of radius greater than 1 onto ellipses, and takes each radial line $R_0(\theta)$, which consists of all complex numbers of a given argument θ and modulus greater than 1, to a half-branch of a hyperbola. Since the ratio of $\psi_{-2}(w)$ to w tends to 1 as w → ∞, each radial line will be the asymptote of the corresponding hyperbola half-branch (see figure 5).

It turns out that what we have just done for the polynomial $Q_{-2}$ can be done for any quadratic polynomial $Q_c$. That is, for sufficiently large complex numbers there is a holomorphic function, denoted $\phi_c$, called the Böttcher map, that changes variables in such a way that $Q_c$ turns into $Q_0$, in the sense that $\phi_c(Q_c(z)) = \phi_c(z)^2$. (The map $\psi_{-2}$ described above is the inverse of the Böttcher map in the case c = -2, rather than the map itself.) After the change of variables, the new coordinates are called Böttcher coordinates.

More generally, for all monic polynomials P of degree d (i.e., polynomials with leading coefficient 1) there is a unique holomorphic change of variables $\phi_P$ that converts P into the function $z \mapsto z^d$ for large enough z, in the sense that $\phi_P(P(z)) = \phi_P(z)^d$, and has the property that $\phi_P(z)/z \to 1$ as z → ∞. The inverse of $\phi_P$ is written $\psi_P$.

2.7.2 Potentials

As we have noted already, if one repeatedly squares a complex number z of modulus greater than 1, then it will escape to infinity. The larger the modulus of z, the faster the iterates will tend to infinity. If instead of squaring, one applies a monic polynomial P of degree d, then for large enough z it is again true that the iterates $z, P(z), P^2(z), \ldots$ tend to infinity. It follows from the formula $\phi_P(P(z)) = \phi_P(z)^d$ that $\phi_P(P^k(z)) = \phi_P(z)^{d^k}$. Therefore, the speed at which the iterates tend to infinity depends not on |z| but on $|\phi_P(z)|$: the larger the value of $|\phi_P(z)|$, the faster the convergence. For this reason, the level sets of $|\phi_P|$, that is, sets of the form $\{z \in \mathbb{C} : |\phi_P(z)| = r\}$, are important.

For many purposes it is useful to look not at the function $\phi_P$ itself but at the function $g_P(z) = \log|\phi_P(z)|$. This function is called the potential, or Green’s function. It has the same level sets as $|\phi_P(z)|$, but has the advantage that it is a HARMONIC FUNCTION [IV.24 §5.1].

Clearly, $g_P$ is defined whenever $\phi_P$ is defined. But we can in fact extend the definition of $g_P$ to the whole of the attracting basin $A_P(\infty)$. Given any z for which the iterates $P^k(z)$ tend to infinity, one chooses some k such that $\phi_P(P^k(z))$ is defined and one sets $g_P(z)$ to be $d^{-k} \log|\phi_P(P^k(z))|$. Notice that $\phi_P(P^{k+1}(z)) = \phi_P(P^k(z))^d$, so $\log|\phi_P(P^{k+1}(z))| = d \log|\phi_P(P^k(z))|$, from which it is easy to deduce that the value of $d^{-k} \log|\phi_P(P^k(z))|$ does not depend on the choice of k.
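This recipe also gives a practical way to approximate the potential for the quadratic family z → z^2 + c. The sketch below replaces the logarithm of the modulus of the Böttcher map by the logarithm of the modulus of the point itself once the orbit is very large, which is justified only approximately by the fact that the ratio of the two tends to 1 at ∞. Returning 0.0 for orbits that do not become large is a convention adopted here, and the function name `green` and the threshold are assumptions.

```python
import math


def green(c, z, max_iter=200, big=1e100):
    """Approximate the potential of z for the map z -> z*z + c.

    Iterates until the orbit point has modulus greater than `big`, say at
    step k, and returns log|orbit point| / 2**k. Returns 0.0 if the orbit
    has not grown that large after max_iter steps.
    """
    z = complex(z)
    for k in range(max_iter):
        if abs(z) > big:
            return math.log(abs(z)) / 2**k
        z = z * z + c
    return 0.0
```

For c = 0 the result agrees with log|z| outside the unit disk, and for any c the value at z^2 + c should be twice the value at z, up to rounding.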

The level sets of $g_P$ are called equipotentials. Notice that the equipotential of potential $g_P(z)$ is mapped by P onto the equipotential of potential $g_P(P(z)) = d\,g_P(z)$. As we shall see, useful information about the dynamics of the polynomial P can be deduced from information about its equipotentials.

If $\psi_P$ is defined everywhere on the circle $C_r$ of radius r, for some r > 1, then it maps it to $\{z : |\phi_P(z)| = r\}$, which is the equipotential of potential log r. For large enough r, this equipotential is a simple closed curve encircling $K_P$, and it shrinks as r decreases. It is possible for two parts of this curve to come together so that it forms a figure-of-eight shape and then splits into two, like an amoeba dividing, but this can happen only if the curve crosses a critical point of P. Therefore, if all the critical points of P belong to the filled Julia set $K_P$ (as in the example $Q_{-2}$, where $0 \in K_{-2} = [-2, 2]$), then it cannot happen. In this case, the Böttcher map $\phi_P$ can be defined on the whole of the attracting basin $A_P(\infty)$, and it is a bijection from $A_P(\infty)$ to the attracting basin $A_0(\infty) = \{w \in \mathbb{C} : |w| > 1\}$ of the polynomial $z^d$. There are equipotentials of potential t for every t > 0 and they are all simple closed curves. (Compare with figure 5.) As t approaches 0, the equipotential of potential t, together with its interior, forms a shape that gets closer and closer to the filled Julia set $K_P$. It follows that $K_P$ is a connected set, as is the Julia set $J_P$.

Figure 6 The Julia set of a quadratic polynomial $Q_c$ for which the critical point 0 escapes to infinity under iteration. The Julia set is totally disconnected. The figure-of-eight-shaped curve with 0 at its intersection point is the equipotential through 0. The simple closed curve surrounding it is the equipotential through the critical value c.

On the other hand, if at least one of the critical points in the plane belongs to AP(∞), then at a certain point the image of Cr splits into two or more pieces. In particular, the equipotential containing the fastest escaping critical point (i.e., the critical point with the highest value of the potential gP) has at least two loops, as is illustrated in figure 6. The inside of each loop is mapped by P onto the inside of the equipotential of the corresponding critical value, which is a simple closed curve (since the potential of the critical value is greater than the potential of any critical point). Inside each loop there must be points from the filled Julia set KP, so this set must be disconnected. The Böttcher map can always be defined on the outside of the equipotential of the fastest escaping critical point and can therefore always be applied to the fastest escaping critical value.

If $Q_c$ is a quadratic polynomial for which 0 escapes to infinity under iteration, then the filled Julia set turns out to be totally disconnected, which means that the connected components of $K_c$ are points. None of these points is isolated: they can all be obtained as limits of sequences of other points of $K_c$. A set which is compact, totally disconnected, and with no isolated points is called a CANTOR SET [III.17], since such a set is homeomorphic to Cantor’s middle-thirds set. Note that in this case $K_c = J_c$. For $Q_c$ we have the following dichotomy: the Julia set $J_c$ is connected if 0 has a bounded orbit, and it is totally disconnected if 0 escapes to infinity under iteration. We shall return to this dichotomy when we come to define the Mandelbrot set later in this article.
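The dichotomy turns the question of connectedness into a question about a single orbit, which can be explored numerically. The sketch below is a heuristic under two assumptions: it uses the standard fact, not proved in the text, that the critical orbit escapes once it leaves the closed disk of radius 2, and it treats an orbit that stays in that disk for the chosen number of steps as bounded. The function name is hypothetical.

```python
def julia_set_is_connected(c, max_iter=500):
    """Heuristic test of whether the Julia set of z -> z*z + c is connected.

    Returns False as soon as the critical orbit 0, c, c*c + c, ... leaves
    the closed disk of radius 2, and True if it has not done so after
    max_iter steps.
    """
    z = 0
    for _ in range(max_iter):
        if abs(z) > 2:
            return False
        z = z * z + c
    return abs(z) <= 2
```

A True answer is only evidence of a bounded critical orbit: parameters whose critical orbit escapes very slowly need a larger `max_iter` to be classified correctly.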

2.7.3 External Rays of Polynomials with Connected Julia Set

We have just obtained information by looking at the images under $\psi_P$ of circles of radius greater than 1. We can obtain complementary information from the images of radial lines, which cut all these circles at right angles. If the Julia set is connected, then, as we saw in the discussion of potentials, the Böttcher map $\phi_P$ is a bijection from the attracting basin $A_P(\infty)$ to the attracting basin of $z^d$, which is the complement {w : |w| > 1} of the closed unit disk. As before, let $R_0(\theta)$ denote the half-line that consists of all complex numbers of argument θ and modulus greater than 1. Because $\phi_P(z)/z \to 1$ as z → ∞, the image of $R_0(\theta)$ under $\psi_P$ is a half-infinite curve consisting of points with arguments getting closer and closer to θ. This curve is denoted by $R_P(\theta)$, and is known as the external ray of argument θ of P. Note that $R_0(\theta)$ is the external ray of argument θ of $z^d$.

One can think of equipotentials as contour lines of the potential function, and of external rays as the lines of steepest ascent. Between the two of them, they provide a parametrization of the attracting basin, just as modulus and argument provide a parametrization of {z : |z| > 1}: if you know the potential at a certain complex number z, and you also know which external ray it lies on, then you know what z is. Moreover, a ray of argument θ is mapped by P onto the ray of argument dθ, just as $z^d$ lies on the half-line $R_0(d\theta)$ whenever z lies on the half-line $R_0(\theta)$.
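For the polynomial z → z^2 - 2, where the inverse of the Böttcher map is w → w + 1/w, this parametrization can be written out explicitly: the point with potential t on the ray of argument θ is the image of exp(t + 2πiθ). The sketch below uses that formula; the function names are hypothetical.

```python
import cmath


def psi_minus2(w):
    """Inverse Böttcher map for z -> z*z - 2, namely w -> w + 1/w."""
    return w + 1 / w


def point_in_basin(t, theta):
    """Point with potential t > 0 on the external ray of argument theta."""
    return psi_minus2(cmath.exp(t + 2j * cmath.pi * theta))
```

Applying z → z^2 - 2 to `point_in_basin(t, theta)` should give `point_in_basin(2 * t, 2 * theta)` up to rounding, so the polynomial doubles both the potential and the argument.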

We say that an external ray lands if $\psi_P(re^{2\pi i\theta})$ converges to a limit as $r \searrow 1$. If this happens, then the limit is called the landing point. However, it may happen that the end of the ray oscillates so much that there is a continuum of different limit points. In this case the ray is nonlanding. It can be shown that all rational rays land. Since a rational ray is either periodic or pre-periodic under iteration by P, the landing point of a rational ray must be either a periodic or a pre-periodic point in the Julia set. Much of the structure of the Julia set can be picked up from knowledge about common landing points. In the example illustrated in figure 2, the closures of the three Fatou components containing the critical orbit have one point in common. This point is a repelling fixed point and the common landing point of the rays of argument 1/7, 2/7, and 4/7. The rays of argument 1/7 and 2/7 are adjacent to the Fatou component containing the critical value $c_0$. These two arguments will show up again in the parameter plane and tell us where $c_0$ is situated.

2.7.4 Local Connectedness

In the example illustrated in figure 5 the inverse of the Böttcher map (the function ψ₋₂) is defined on the set {w : |w| > 1} of all complex numbers w of modulus greater than 1. However, it can be continuously extended to a function defined on the larger set {w : |w| ≥ 1}. If we use the formula ψ₋₂(w) = w + 1/w, then we have ψ₋₂(e^(2πiθ)) = 2 cos(2πθ), which is the landing point of the external ray R₋₂(θ). For an arbitrary connected filled Julia set KP, we have the following result of Carathéodory: the inverse ψP of the Böttcher map has a continuous extension from {w : |w| > 1} to {w : |w| ≥ 1} if and only if KP is locally connected. To understand what this means, imagine a set that is shaped like a comb. From any point in this set to any other point there is a continuous path that lies in the set, but it is possible for the two points to be very close and for the shortest path to be very long. This happens, for example, if the two points are the ends of neighboring teeth of the comb. A connected set X is called locally connected if every point has arbitrarily small connected neighborhoods. It is possible to build comb-like sets (with infinitely many teeth) that contain points for which all connected neighborhoods have to be large. The filled Julia sets in the examples in figures 2–5 are locally connected, but there are examples of filled Julia sets that are not locally connected. When KP is locally connected, all external rays land, and the landing point is a continuous function of the argument. Under these circumstances, we have a natural and useful parametrization of the Julia set JP.
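The formula for the inverse Böttcher map in the case c = -2 can be checked numerically. The following Python sketch assumes that the polynomial in question is Q(z) = z² - 2; the function names are illustrative and do not come from the text. It checks that w + 1/w conjugates squaring to Q, and that the boundary value at e^(2πiθ) is 2 cos(2πθ).

```python
import cmath
import math

def psi_minus2(w):
    """Inverse Böttcher map for c = -2: psi(w) = w + 1/w, for |w| >= 1."""
    return w + 1 / w

def q_minus2(z):
    """The quadratic polynomial z^2 + c with c = -2 (assumed form of Q_c)."""
    return z * z - 2

def landing_point(theta):
    """Boundary value psi(e^{2 pi i theta}); it should equal 2 cos(2 pi theta)."""
    return psi_minus2(cmath.exp(2j * math.pi * theta))

# Conjugacy: psi(w^2) = Q(psi(w)) for a sample point outside the unit disk.
w = 1.5 + 0.7j
conjugacy_error = abs(psi_minus2(w * w) - q_minus2(psi_minus2(w)))

# Boundary values: compare with 2 cos(2 pi theta) at theta = 1/3.
ray_error = abs(landing_point(1 / 3) - 2 * math.cos(2 * math.pi / 3))
```

Both errors should be at the level of rounding error. The same conjugacy shows that the landing point of the ray of argument θ is mapped by Q to the landing point of the ray of argument 2θ.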

2.8 The Mandelbrot Set M

We shall now restrict our attention to quadratic polynomials of the form Qc. These are parametrized by the complex number c, and in this context we shall refer to the complex plane as the parameter plane, or c-plane. We would like to understand the family of dynamical systems that arise when we iterate the polynomials Qc. Our goal will be to do this by dividing the c-plane into regions that correspond to polynomials with qualitatively the same dynamics. These regions will be separated by their boundaries, which together form the so-called bifurcation set. This consists of “unstable” c-values: that is, values of c for which there are other values arbitrarily nearby that give rise to qualitatively different dynamical behavior. In other words, a parameter c belongs to the bifurcation set if a small perturbation of c can make an important difference to the dynamics.

Recall the dichotomy that we stated earlier: the Julia set Jc is connected if the critical point 0 belongs to the filled Julia set Kc and is totally disconnected if 0 belongs to the attracting basin Ac (∞). This dichotomy motivates the following definition: the Mandelbrot set M consists of the c-values for which Jc is connected. That is,

M = {c ∈ ℂ : 0 ∈ Kc}.

Since the Julia set represents the chaotic part of the dynamical system given by Qc, the dynamical behavior is certainly qualitatively affected by whether c belongs to M or not. We have therefore made a start toward our goal, but the division of the plane into M and ℂ \ M is very coarse, and it does not obviously give us the complete understanding we are looking for.
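The definition of M suggests a simple numerical test: follow the orbit of the critical point 0 and see whether it escapes. The Python sketch below assumes Qc(z) = z² + c and uses the standard fact, not stated in the text, that an orbit which leaves the disk of radius 2 tends to infinity. The function name and the iteration cap are illustrative choices. A finite computation of this kind can certify that c lies outside M, but a result of True only means that no escape was detected.

```python
def in_mandelbrot(c, max_iter=500, escape_radius=2.0):
    """Return False if the orbit of 0 under z -> z^2 + c is seen to escape.

    True means only that no escape was detected within max_iter steps.
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > escape_radius:
            return False
    return True

# Sample parameters: 0, -1 and i have bounded critical orbits; 1 does not.
samples = {c: in_mandelbrot(c) for c in (0, -1, 1j, 1)}
```

For c = 1 the critical orbit is 0, 1, 2, 5, 26, ..., so the test reports escape after three steps.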

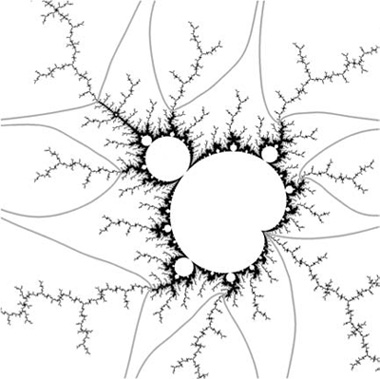

The important set is in fact not M, but its boundary ∂M, which is illustrated in figure 7. Notice that this set has a number of “holes” (in fact, infinitely many). The Mandelbrot set itself is obtained by filling in all these holes. More precisely, the complement of ∂M consists of an infinite collection of connected components, of which one, the outside of the set, stretches off to infinity, while all the others are bounded. The “holes” are the bounded components.

This way of arriving at ∂M mirrors the definition of the Julia set of a polynomial: the filled Julia set is easy to define, and the Julia set is then defined as its boundary. The Julia set provides a lot of structure in the dynamical plane, the z-plane. The Mandelbrot set is similarly easy to define, and its boundary provides a lot of structure in the c-plane. Remarkably, even though each Julia set concerns just one dynamical system, while the Mandelbrot set concerns an entire family of systems, there are close analogies between them, as will become clear.

Figure 7 The boundary ∂M of the Mandelbrot set.

Pioneering work on holomorphic dynamics in general and quadratic polynomials in particular was carried out in the early 1980s by Adrien Douady and John H. Hubbard. They introduced the name “Mandelbrot set” and proved several results about it. In particular, they defined a sort of Böttcher map, denoted by ΦM, for the Mandelbrot set, which is a map from the complement of the Mandelbrot set to the complement of the closed unit disk.

The definition of ΦM is actually quite simple: for each c let ΦM(c) equal φc(c), where φc is the Böttcher map for the parameter c. However, Douady and Hubbard did more than merely define ΦM: they proved that it is a holomorphic bijection with holomorphic inverse.

Once we have ΦM we can make further definitions, just as we did with the Böttcher map. For instance, we can define a potential G on the complement of the Mandelbrot set by setting G(c) = gc(c) = log |ΦM(c)|. An equipotential is then a level set of |ΦM| (that is, a set of the form {c ∈ ℂ : |ΦM(c)| = r} for some r > 1) and the external ray of argument θ is the set {c ∈ ℂ : arg(ΦM(c)) = 2πθ} (that is, the inverse image of a radial line R0(θ)). The latter is denoted by RM(θ) and it is asymptotic to the radial line of argument θ. The rational external rays are known to land (see figure 8).
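The potential G can be approximated numerically. The Python sketch below assumes Qc(z) = z² + c and the standard limit formula gc(z) = lim 2^(-n) log |Qc^n(z)| for the potential of the attracting basin of infinity, which this section does not restate; the function name, the iteration cap, and the bailout radius are illustrative choices. It evaluates G(c) = gc(c) by iterating the critical value c until the orbit is far from the origin.

```python
import math

def mandelbrot_potential(c, max_iter=200, radius=1e6):
    """Approximate G(c) = g_c(c) by 2^(-n) log |Q_c^n(c)| once |Q_c^n(c)| > radius.

    Returns 0.0 if the orbit of c has not left the disk of the given radius
    after max_iter steps (c is then treated as lying in M, where G vanishes).
    """
    z = c  # z holds Q_c^n(c), starting with n = 0
    for n in range(max_iter):
        if abs(z) > radius:
            return math.log(abs(z)) / 2 ** n
        z = z * z + c
    return 0.0

g_at_1 = mandelbrot_potential(1)        # c = 1 lies outside M
g_at_minus1 = mandelbrot_potential(-1)  # c = -1 has a periodic critical orbit
```

Points with the same value of G lie on the same equipotential, so evaluating this function on a grid and drawing its contour lines gives a rough picture of curves like those in figure 8.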

It follows from the above that as t approaches zero, the equipotential of potential t, together with its interior, gets closer and closer to M: that is, M is the intersection of all such sets. Hence, M is a connected, closed, bounded subset of the plane.

Figure 8 Some equipotentials of M and the external rays of arguments θ of periods 1, 2, 3, and 4. In counterclockwise direction the arguments between 0 and 1/2 are 0, 1/15, 2/15, 1/7, 1/5, 4/15, 2/7, 1/3, 2/5, 3/7, and 7/15; and symmetrically in clockwise direction they are 1 - ζ with ζ as above. The external rays of argument 1/7 and 2/7 land at the root point of the hyperbolic component that has c0, the parameter value of the Douady rabbit in figure 2, as its center. The rays of argument ![]() and ![]() land at the root point of the copy of M shown in figure 9.

2.8.1 J-Stability

As we have mentioned and as figure 7 suggests, the complement of ∂M has infinitely many connected components. These components are of great dynamical significance: if c and c′ are two parameters taken from the same component, then the dynamical systems arising from Qc and Qc′ can be shown to be essentially the same. To be precise, they are J-equivalent, which means that there is a continuous change of variables that converts the dynamics on one Julia set to the dynamics on the other. If c belongs to the boundary ∂M, then there are parameter values c′ arbitrarily close to c for which Qc and Qc′ are not J-equivalent, so ∂M is the “bifurcation set with respect to J-stability.” We shall comment on the global structural stability later.

2.8.2 Hyperbolic Components

From now on, we shall use the word “component” to refer to the holes of the Mandelbrot set—that is, to the bounded components of the complement of ∂M.

We start by considering the component containing c = 0, the central component H0. Recall from section 2.3 that, after a suitable change of variables, one can change the polynomial Fλ(z) = λz + z² into the polynomial Qc, where the parameters λ and c are related by the equation c = λ/2 - λ²/4. The parameter λ has a dynamical meaning: the origin is a fixed point of Fλ and λ is its multiplier. This knowledge tells us that the corresponding Qc has a fixed point of multiplier λ; we denote the fixed point by αc. For |λ| < 1 the fixed point is attracting.

The unit disk {λ : |λ| < 1} corresponds to the central component H0, and the function that takes a parameter c in H0 to the corresponding parameter λ in the unit disk is called the multiplier map, and is denoted by ρH0. Thus, ρH0(c) is the multiplier of the fixed point αc of the polynomial Qc. The multiplier map ρH0 is a holomorphic isomorphism from H0 to the unit disk. As we have just seen, the inverse map is given by ρH0⁻¹(λ) = λ/2 - λ²/4. This map extends continuously to the unit circle, and thereby gives us a parametrization of the boundary of the central component H0 by points λ of modulus 1. The image of the unit circle under the map λ ↦ λ/2 - λ²/4 is a cardioid. This explains the heartlike shape of the largest part of the Mandelbrot set, which can be seen in figure 7.
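The relation between the multiplier λ and the parameter c can be checked directly. Assuming Qc(z) = z² + c, the derivative is 2z, so a fixed point with multiplier λ must be z = λ/2, and the fixed point equation z² + c = z then gives c = λ/2 - λ²/4. The Python sketch below verifies this and traces the boundary of the central component; the function names are illustrative and not taken from the text.

```python
import cmath
import math

def c_from_multiplier(lam):
    """Parameter c for which z^2 + c has a fixed point of multiplier lam."""
    return lam / 2 - lam * lam / 4

def check_fixed_point(lam):
    """Return (alpha, multiplier, residual) for the fixed point alpha = lam / 2."""
    c = c_from_multiplier(lam)
    alpha = lam / 2
    residual = alpha * alpha + c - alpha  # zero when alpha is a fixed point
    return alpha, 2 * alpha, residual

def cardioid_point(t):
    """Boundary point of the central component for lam = e^{2 pi i t}."""
    return c_from_multiplier(cmath.exp(2j * math.pi * t))

cusp = cardioid_point(0.0)      # lam = 1 gives c = 1/4
leftmost = cardioid_point(0.5)  # lam = -1 gives c = -3/4
```

Sampling `cardioid_point(t)` for t between 0 and 1 produces points on the cardioid; the cusp at c = 1/4 is the parameter at which the two fixed points of the polynomial coincide.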

Any quadratic polynomial has two fixed points if we count with multiplicity (in fact, two distinct ones unless c = 1/4). The central component H0 is characterized as the component of c-values for which Qc has an attracting fixed point. For any c outside the cardioid, Qc has two repelling fixed points, but it may have an attracting periodic orbit of a period greater than 1. It is an important fact that the attracting basin of an attracting periodic orbit always contains a critical orbit. Therefore, for any quadratic polynomial there can be at most one attracting periodic orbit.
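As an illustration, take c = -1, which lies outside the cardioid. The Python sketch below assumes Qc(z) = z² + c, so that the fixed points are the roots of z² - z + c = 0 and the multiplier of a periodic orbit is the product of 2z along the orbit; the function names are illustrative and not from the text. It confirms that both fixed points are repelling, while the critical orbit 0, -1, 0, ... is a super-attracting orbit of period 2.

```python
import cmath

def fixed_point_multipliers(c):
    """Return [(fixed point, multiplier)] for z^2 + c; the multiplier is 2z."""
    s = cmath.sqrt(1 - 4 * c)
    return [(z, 2 * z) for z in ((1 + s) / 2, (1 - s) / 2)]

def cycle_multiplier(c, z0, period):
    """Product of the derivative 2z along `period` steps of the orbit of z0."""
    m, z = 1, z0
    for _ in range(period):
        m *= 2 * z
        z = z * z + c
    return m

multipliers_at_minus1 = [abs(m) for _, m in fixed_point_multipliers(-1)]
period2_multiplier = cycle_multiplier(-1, 0, 2)  # orbit 0 -> -1 -> 0
```

The two multipliers of the fixed points always add up to 2, so at most one of them can have modulus less than 1.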

We call a component H of the Mandelbrot set a hyperbolic component if, for every parameter c in H, the polynomial Qc has an attracting periodic orbit. For any given hyperbolic component, the periods of the attracting periodic orbits will be the same. There is a corresponding multiplier map ρH, from H to the unit disk, which assigns to each parameter c in H the multiplier of the attracting periodic orbit. This multiplier map is always a holomorphic isomorphism that extends continuously to the boundary ∂H of H.

The points ρH⁻¹(0) and ρH⁻¹(1) are called the center and the root of H. The center of H is the unique c in H for which the periodic orbit of Qc is super-attracting. As for the root, if the period of the component is k, then it will be the landing point for a pair of external rays of periodic arguments of period k. (For the central component H0 there is only one ray assigned.) Conversely, every external ray with such an argument lands at the root point of a hyperbolic component of period k. Thus, the arguments of these rays give addresses to the hyperbolic components. This can be seen in figure 8, from which one can read off the mutual positions of all the components of periods 1–4.
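The correspondence between rays and roots gives a way to count components by counting arguments. The Python sketch below assumes that the map on arguments is doubling modulo 1 (the case d = 2 of θ ↦ dθ), so that an argument of period k is a fraction p/(2^k - 1) that returns to itself after exactly k doublings. It also assumes that distinct components have distinct roots. The function names are illustrative and not from the text.

```python
from fractions import Fraction

def exact_period(theta):
    """Smallest n >= 1 with 2^n * theta = theta (mod 1), for periodic theta."""
    n, t = 1, (2 * theta) % 1
    while t != theta:
        t = (2 * t) % 1
        n += 1
    return n

def periodic_arguments(k):
    """All arguments in [0, 1) of exact period k under doubling, in order."""
    denom = 2 ** k - 1
    candidates = {Fraction(p, denom) for p in range(denom)}
    return sorted(t for t in candidates if exact_period(t) == k)

def component_count(k):
    """Number of hyperbolic components of period k: one ray for k = 1, pairs otherwise."""
    n = len(periodic_arguments(k))
    return 1 if k == 1 else n // 2

# Arguments of periods 1 to 4 lying in [0, 1/2), in counterclockwise order.
lower_half = sorted(
    t for k in range(1, 5) for t in periodic_arguments(k) if t < Fraction(1, 2)
)
counts = [component_count(k) for k in range(1, 5)]
```

Under these assumptions the counts for periods 1 to 4 come out as 1, 1, 3, and 6, and the list `lower_half` can be compared with the rays drawn in figure 8.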

As a consequence of the above, the number of hyperbolic components corresponding to a certain period k can be determined both as the number of roots in the polynomial Qc^k(0) that are not roots in