IV.12 Partial Differential Equations

Sergiu Klainerman

Introduction

Partial differential equations (or PDEs) are an important class of functional equations: they are equations, or systems of equations, in which the unknowns are functions of more than one variable. As a very crude analogy, PDEs are to functions as polynomial equations (such as $x^2 + y^2 = 1$, for example) are to numbers. The distinguishing feature of PDEs, as opposed to more general functional equations, is that they involve not only unknown functions, but also various partial derivatives of those functions, in algebraic combination with each other and with other, fixed, functions. Other important kinds of functional equations are integral equations, which involve various integrals of the unknown functions, and ordinary differential equations (ODEs), in which the unknown functions depend on only one independent variable (such as a time variable $t$) and the equation involves only ordinary derivatives $d/dt$, $d^2/dt^2$, $d^3/dt^3$, ... of these functions.

Given the immense scope of the subject the best I can hope to do is to give a very crude perspective on some of the main issues and an even cruder idea of the multitude of current research directions. The difficulty one faces in trying to describe the subject of PDEs starts with its very definition. Is it a unified area of mathematics, devoted to the study of a clearly defined set of objects (in the way that algebraic geometry studies solutions of polynomial equations or topology studies manifolds, for example), or is it rather a collection of separate fields, such as general relativity, several complex variables, or hydrodynamics, each one vast in its own right and centered on a particular, very difficult, equation or class of equations? I will attempt to argue below that, even though there are fundamental difficulties in formulating a general theory of PDEs, one can nevertheless find a remarkable unity between various branches of mathematics and physics that are centered on individual PDEs or classes of PDEs. In particular, certain ideas and methods in PDEs have turned out to be extraordinarily effective across the boundaries of these separate fields. It is thus no surprise that the most successful book ever written about PDEs did not mention PDEs in its title: it was Methods of Mathematical Physics by COURANT [VI.83] and HILBERT [VI.63].

As it is impossible to do full justice to such a huge subject in such limited space I have been forced to leave out many topics and relevant details; in particular, I have said very little about the fundamental issue of breakdown of solutions, and there is no discussion of the main open problems in PDEs. A longer and more detailed version of the article, which includes these topics, can be found at

http://press.princeton.edu/titles/8350.html

1 Basic Definitions and Examples

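The simplest example of a PDE is the LAPLACE EQUATION [I.3 §5.4]

$$\Delta u = 0. \tag{1}$$

Here, $\Delta$ is the Laplacian, that is, the differential operator that transforms functions $u = u(x_1, x_2, x_3)$ defined from $\mathbb{R}^3$ to $\mathbb{R}$ according to the rule

$$\Delta u(x_1, x_2, x_3) = \partial_1^2 u(x_1, x_2, x_3) + \partial_2^2 u(x_1, x_2, x_3) + \partial_3^2 u(x_1, x_2, x_3),$$

where $\partial_1$, $\partial_2$, $\partial_3$ are standard shorthand for the partial derivatives $\partial/\partial x_1$, $\partial/\partial x_2$, $\partial/\partial x_3$. (We will use this shorthand throughout the article.) Two other fundamental examples (also described in [I.3 §5.4]) are the heat equation and the wave equation:

$$-\partial_t u + k\Delta u = 0, \tag{2}$$

$$-\partial_t^2 u + c^2 \Delta u = 0. \tag{3}$$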

In each case one is asked to find a function $u$ that satisfies the corresponding equation. For the Laplace equation $u$ will depend on $x_1$, $x_2$, and $x_3$, and for the other two it will depend on $t$ as well. Observe that equations (2) and (3) again involve the symbol $\Delta$, but also partial derivatives with respect to the time variable $t$. The constants $k$ (which is positive) and $c$ are fixed and represent the rate of diffusion and the speed of light, respectively. However, from a mathematical point of view they are not important, since if $u(t, x_1, x_2, x_3)$ is a solution of (3), for example, then $v(t, x_1, x_2, x_3) = u(t, cx_1, cx_2, cx_3)$ satisfies the same equation with $c = 1$. Thus, when one is studying the equations one can set these constants to be 1. Both equations are called evolution equations because they are supposed to describe the change of a particular physical object as the time parameter $t$ varies. Observe that (1) can be interpreted as a particular case of both (2) and (3): if $u = u(t, x_1, x_2, x_3)$ is a solution of either (2) or (3) that is independent of $t$, then $\partial_t u = 0$, so $u$ must satisfy (1).
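The normalization of the constant $c$ can be checked numerically. The sketch below is only an illustration under stated assumptions: it works in one space variable instead of three, writes the wave equation as $-\partial_t^2 u + c^2 \partial_x^2 u = 0$, picks the hypothetical value `C = 3.0`, and uses the traveling wave $\sin(x - ct)$ as a sample solution. It approximates derivatives by central differences and compares the residuals of $u$ and of the rescaled function $v(t, x) = u(t, cx)$.

```python
import math

C = 3.0  # hypothetical wave speed, chosen only for illustration


def u(t, x):
    # Traveling wave sin(x - C t); it solves -u_tt + C^2 u_xx = 0.
    return math.sin(x - C * t)


def v(t, x):
    # Rescaled function v(t, x) = u(t, C x).
    return u(t, C * x)


def wave_residual(f, speed, t, x, h=1e-3):
    # Central-difference approximation of -f_tt + speed^2 f_xx at (t, x).
    f_tt = (f(t + h, x) - 2.0 * f(t, x) + f(t - h, x)) / h**2
    f_xx = (f(t, x + h) - 2.0 * f(t, x) + f(t, x - h)) / h**2
    return -f_tt + speed**2 * f_xx


res_u = wave_residual(u, C, 0.4, 0.7)      # u against the speed-C equation
res_v = wave_residual(v, 1.0, 0.4, 0.7)    # v against the speed-1 equation
res_bad = wave_residual(u, 1.0, 0.4, 0.7)  # u itself against the speed-1 equation
```

Up to discretization error, `res_u` and `res_v` are close to zero while `res_bad` is not, which is what the change of variables predicts.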

In all three examples mentioned above, we tacitly assume that the solutions we are looking for are sufficiently differentiable for the equations to make sense. As we shall see later, one of the important developments in the theory of PDEs was the study of more refined notions of solutions, such as DISTRIBUTIONS [III.18], which require only weak versions of differentiability.

Here are some further examples of important PDEs. The first is THE SCHRÖDINGER EQUATION [III.83],

$$i\partial_t u + k\Delta u = 0, \tag{4}$$

where $u$ is a function from $\mathbb{R} \times \mathbb{R}^3$ to $\mathbb{C}$. This equation describes the quantum evolution of a massive particle, $k = \hbar/2m$, where $\hbar > 0$ is Planck’s constant and $m$ is the mass of the particle. As with the heat equation, one can set $k$ to equal 1 after a simple change of variables. Though the equation is formally very similar to the heat equation, it has very different qualitative behavior. This illustrates an important general point about PDEs: that small changes in the form of an equation can lead to very different properties of solutions.

A further example is the Klein-Gordon equation

$$-\partial_t^2 u + c^2 \Delta u - \left(\frac{mc^2}{\hbar}\right)^2 u = 0. \tag{5}$$

This is the relativistic counterpart to the Schrödinger equation: the parameter $m$ has the physical interpretation of mass and $mc^2$ has the physical interpretation of rest energy (reflecting Einstein’s famous equation $E = mc^2$). One can normalize the constants $c$ and $mc^2/\hbar$ so that they both equal 1 by applying a suitable change of variables to time and space.

Though all five equations mentioned above first appeared in connection with specific physical phenomena, such as heat transfer for (2) and propagation of electromagnetic waves for (3), they have, miraculously, a range of relevance far beyond their original applications. In particular there is no reason to restrict their study to three space dimensions: it is very easy to generalize them to similar equations in $n$ variables $x_1, x_2, \ldots, x_n$.

All the PDEs listed so far obey a simple but fundamental property called the principle of superposition: if $u_1$ and $u_2$ are two solutions to one of these equations, then any linear combination $a_1u_1 + a_2u_2$ of these solutions is also a solution. In other words, the space of all solutions is a VECTOR SPACE [I.3 §2.3]. Equations that obey this property are known as homogeneous linear equations. If the space of solutions is an affine space (that is, a translate of a vector space) rather than a vector space, we say that the PDE is an inhomogeneous linear equation; a good example is Poisson’s equation:

$$\Delta u = f, \tag{6}$$

where $f : \mathbb{R}^3 \to \mathbb{R}$ is a function that is given to us and $u : \mathbb{R}^3 \to \mathbb{R}$ is the unknown function. Equations that are neither homogeneous linear nor inhomogeneous linear are known as nonlinear. The following equation, the MINIMAL SURFACE EQUATION [III.94 §3.1], is manifestly nonlinear:

$$\partial_1\!\left(\frac{\partial_1 u}{(1 + |\partial u|^2)^{1/2}}\right) + \partial_2\!\left(\frac{\partial_2 u}{(1 + |\partial u|^2)^{1/2}}\right) = 0. \tag{7}$$

The graphs of solutions $u : \mathbb{R}^2 \to \mathbb{R}$ of this equation are area-minimizing surfaces (like soap films).
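The difference between a vector space and an affine space of solutions can be illustrated with a small computation. The following sketch makes several simplifying assumptions that are not in the text: it uses two variables rather than three, a five-point finite-difference Laplacian (which happens to be exact on quadratic polynomials), and hand-picked polynomial solutions. The names `laplacian`, `u1`, `u2`, `p1`, `p2` are hypothetical.

```python
def laplacian(f, x, y, h=0.5):
    # Five-point finite-difference Laplacian in two variables.
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h)
            - 4.0 * f(x, y)) / h**2


def u1(x, y):
    return x * x - y * y  # harmonic: solves the Laplace equation


def u2(x, y):
    return x * y  # harmonic as well


def combo(x, y):
    return 2.0 * u1(x, y) - 3.0 * u2(x, y)  # a linear combination


def p1(x, y):
    return x * x + y * y  # solves Poisson's equation with f = 4


def p2(x, y):
    return p1(x, y) + u2(x, y)  # another solution with the same f


point = (1.0, 2.0)
lap_combo = laplacian(combo, *point)
lap_sum = laplacian(lambda x, y: p1(x, y) + p2(x, y), *point)
lap_diff = laplacian(lambda x, y: p1(x, y) - p2(x, y), *point)
```

Here `lap_combo` vanishes (superposition for the homogeneous equation), `lap_sum` equals 8 rather than 4 (the sum of two Poisson solutions is not a solution), and `lap_diff` vanishes (the difference of two Poisson solutions is harmonic).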

Equations (1), (2), (3), (4), (5) are not just linear: they are all examples of constant-coefficient linear equations. This means that they can be expressed in the form

$$\mathcal{P}[u] = 0, \tag{8}$$

where $\mathcal{P}$ is a differential operator that involves linear combinations, with constant real or complex coefficients, of mixed partial derivatives of $u$. (Such operators are called constant-coefficient linear differential operators.) For instance, in the case of the Laplace equation (1), $\mathcal{P}$ is simply the Laplacian $\Delta$, while for the wave equation (3) (with $c$ normalized to 1), $\mathcal{P}$ is the d’Alembertian

$$\Box = -\partial_t^2 + \Delta = -\partial_t^2 + \partial_1^2 + \partial_2^2 + \partial_3^2.$$

The characteristic feature of linear constant-coefficient operators is translation invariance. Roughly speaking, this means that if you translate a function $u$, then you translate $\mathcal{P}u$ in the same way. More precisely, if $v(x)$ is defined to be $u(x - a)$ (so the value of $u$ at $x$ becomes the value of $v$ at $x + a$; note that $x$ and $a$ belong to $\mathbb{R}^3$ here), then $\mathcal{P}v(x)$ is equal to $\mathcal{P}u(x - a)$. As a consequence of this basic fact we infer that solutions to the homogeneous, linear, constant-coefficient equation (8) are still solutions when translated.

Since symmetries play such a fundamental role in PDEs we should stop for a moment to make a general definition. A symmetry of a PDE is any invertible operation $T: u \mapsto T(u)$ from functions to functions that preserves the space of solutions, in the sense that $u$ solves the PDE if and only if $T(u)$ solves the same PDE. A PDE with this property is then said to be invariant under the symmetry $T$. The symmetry $T$ is often a linear operation, though this does not have to be the case. The composition of two symmetries is again a symmetry, as is the inverse of a symmetry, and so it is natural to view a collection of symmetries as forming a GROUP [I.3 §2.1] (which is typically a finite- or infinite-dimensional LIE GROUP [III.48 §1]).

Because the translation group is intimately connected with THE FOURIER TRANSFORM [III.27] (indeed, the latter can be viewed as the representation theory of the former), this symmetry strongly suggests that Fourier analysis should be a useful tool to solve constant-coefficient PDEs, and this is indeed the case.

Our basic constant-coefficient linear operators, the Laplacian $\Delta$ and the d’Alembertian $\Box$, are formally similar in many respects. The Laplacian is fundamentally associated with the geometry of EUCLIDEAN SPACE [I.3 §6.2] $\mathbb{R}^3$ and the d’Alembertian is similarly associated with the geometry of MINKOWSKI SPACE [I.3 §6.8] $\mathbb{R}^{1+3}$. This means that the Laplacian commutes with all the rigid motions of the Euclidean space $\mathbb{R}^3$, while the d’Alembertian commutes with the corresponding class of Poincaré transformations of Minkowski spacetime. In the former case this simply means that invariance applies to all transformations of $\mathbb{R}^3$ that preserve the Euclidean distances between points. In the case of the wave equation, the Euclidean distance has to be replaced by the spacetime distance between points (which would be called events in the language of relativity): if $P = (t, x_1, x_2, x_3)$ and $Q = (s, y_1, y_2, y_3)$, then the distance between them is given by the formula

$$d_M(P, Q)^2 = -(t - s)^2 + (x_1 - y_1)^2 + (x_2 - y_2)^2 + (x_3 - y_3)^2.$$

As a consequence of this basic fact we infer that the space of solutions to the wave equation (3) is invariant under translations and LORENTZ TRANSFORMATIONS [I.3 §6.8]: applying such a transformation to a solution produces another solution.

Our other evolution equations (2) and (4) are clearly invariant under rotations of the space variables $x = (x_1, x_2, x_3) \in \mathbb{R}^3$, when $t$ is fixed. They are also Galilean invariant, which means, in the particular case of the Schrödinger equation (4) with $k$ normalized to 1, that whenever $u = u(t, x)$ is a solution so is the function $u_v(t, x) = e^{i(x \cdot v)} e^{-it|v|^2} u(t, x - 2vt)$ for any vector $v \in \mathbb{R}^3$.
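Galilean invariance of the Schrödinger equation can be tested on a concrete solution. The sketch below is an illustration under assumptions that are not part of the text: one space dimension, $k = 1$, the Gaussian wave packet $u(t,x) = (1+4it)^{-1/2} e^{-x^2/(1+4it)}$ as the sample solution, the boost written in the form $e^{ixv} e^{-itv^2} u(t, x - 2vt)$, and a hypothetical boost parameter `V = 0.5`. It evaluates the residual $i\partial_t f + \partial_x^2 f$ by central differences.

```python
import cmath


def u(t, x):
    # Gaussian wave packet solving i u_t + u_xx = 0 (one space dimension, k = 1).
    a = 1.0 + 4.0j * t
    return cmath.exp(-x * x / a) / cmath.sqrt(a)


V = 0.5  # hypothetical boost parameter


def u_boost(t, x):
    # Boosted function e^{i x V} e^{-i t V^2} u(t, x - 2 V t).
    phase = cmath.exp(1j * (x * V - t * V * V))
    return phase * u(t, x - 2.0 * V * t)


def schrodinger_residual(f, t, x, h=1e-3):
    # Central-difference approximation of i f_t + f_xx at (t, x).
    f_t = (f(t + h, x) - f(t - h, x)) / (2.0 * h)
    f_xx = (f(t, x + h) - 2.0 * f(t, x) + f(t, x - h)) / h**2
    return 1j * f_t + f_xx


res_u = schrodinger_residual(u, 0.3, 0.7)
res_boost = schrodinger_residual(u_boost, 0.3, 0.7)
```

Both residuals are zero up to discretization error, so in this example the boosted function is again a solution.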

Poisson’s equation (6), on the other hand, is an example of a constant-coefficient inhomogeneous linear equation, which means that it takes the form

$$\mathcal{P}[u] = f, \tag{9}$$

for some constant-coefficient linear differential operator $\mathcal{P}$ and known function $f$. To solve such an equation requires one to understand the invertibility or otherwise of the linear operator $\mathcal{P}$: if it is invertible then $u$ will equal $\mathcal{P}^{-1} f$, and if it is not invertible then either there will be no solution or there will be infinitely many solutions. Inhomogeneous equations are closely related to their homogeneous counterpart; for instance, if $u_1$, $u_2$ both solve the inhomogeneous equation (9) with the same inhomogeneous term $f$, then their difference $u_1 - u_2$ solves the corresponding homogeneous equation (8).

Linear homogeneous PDEs satisfy the principle of superposition but they do not have to be translation invariant. For example, suppose that we modify the heat equation (2) so that the coefficient $k$ is no longer constant but rather an arbitrary, positive, smooth function of $(x_1, x_2, x_3)$. Such an equation models the flow of heat in a medium in which the rate of diffusion varies from point to point. The corresponding space of solutions is not translation invariant (which is not surprising as the medium in which the heat flows is not translation invariant). Equations like this are called linear equations with variable coefficients. It is more difficult to solve them and describe their qualitative features than it is for constant-coefficient equations. (See, for example, STOCHASTIC PROCESSES [IV.24 §5.2] for an approach to equations of type (2) with variable $k$.) Finally, nonlinear equations such as (7) can often still be written in the form (8), but the operator $\mathcal{P}$ is now a nonlinear differential operator. For instance, the relevant operator for (7) is given by the formula

$$\mathcal{P}[u] = \partial_1\!\left(\frac{\partial_1 u}{(1 + |\partial u|^2)^{1/2}}\right) + \partial_2\!\left(\frac{\partial_2 u}{(1 + |\partial u|^2)^{1/2}}\right),$$

where $|\partial u|^2 = (\partial_1 u)^2 + (\partial_2 u)^2$. Operators such as these are clearly not linear. However, because they are ultimately constructed from algebraic operations and partial derivatives, both of which are “local” operations, we observe the important fact that $\mathcal{P}$ is at least still a “local” operator. More precisely, if $u_1$ and $u_2$ are two functions that agree on some open set $D$, then the expressions $\mathcal{P}[u_1]$ and $\mathcal{P}[u_2]$ also agree on this set. In particular, if $\mathcal{P}[0] = 0$ (as is the case in our example), then whenever $u$ vanishes on a domain, $\mathcal{P}[u]$ will also vanish on that domain.

So far we have tacitly assumed that our equations take place in the whole of a space such as $\mathbb{R}^3$, $\mathbb{R}^+ \times \mathbb{R}^3$, or $\mathbb{R} \times \mathbb{R}^3$. In reality one is often restricted to a fixed domain of that space. Thus, for example, equation (1) is usually studied on a bounded open domain of $\mathbb{R}^3$ subject to a specified boundary condition. Here are some basic examples of boundary conditions.

Example. The Dirichlet problem for Laplace’s equation on an open domain $D \subset \mathbb{R}^3$ is the problem of finding a function $u$ that behaves in a prescribed way on the boundary of $D$ and obeys the Laplace equation inside.

More precisely, one specifies a continuous function $u_0: \partial D \to \mathbb{R}$ and looks for a continuous function $u$, defined on the closure $\overline{D}$ of $D$, that is twice continuously differentiable inside $D$ and solves the equations

$$\Delta u(x) = 0 \quad \text{for all } x \in D, \qquad u(x) = u_0(x) \quad \text{for all } x \in \partial D. \tag{10}$$

A basic result in PDEs asserts that if the domain $D$ has a sufficiently smooth boundary, then there is exactly one solution to the problem (10) for any prescribed function $u_0$ on the boundary $\partial D$.
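A discrete analogue of the Dirichlet problem gives a feel for how boundary values determine the interior. The following is a minimal sketch under assumptions that go beyond the text: the domain is the unit square in two dimensions (not a domain in $\mathbb{R}^3$), the Laplacian is replaced by its five-point finite-difference version, and the resulting linear system is solved by Gauss-Seidel sweeps, a technique chosen here only for brevity. The function name `solve_dirichlet` and the boundary data $x^2 - y^2$ are hypothetical choices.

```python
def solve_dirichlet(boundary, n=10, sweeps=500):
    # Grid on the unit square with spacing h = 1/n; boundary(x, y) supplies
    # the prescribed values on the edge of the square.
    h = 1.0 / n
    u = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        for j in range(n + 1):
            if i in (0, n) or j in (0, n):
                u[i][j] = boundary(i * h, j * h)
    # Gauss-Seidel sweeps: each interior value becomes the average of its
    # four neighbors, which is the discrete form of the Laplace equation.
    for _ in range(sweeps):
        for i in range(1, n):
            for j in range(1, n):
                u[i][j] = 0.25 * (u[i + 1][j] + u[i - 1][j]
                                  + u[i][j + 1] + u[i][j - 1])
    return u, h


def u0(x, y):
    return x * x - y * y  # a harmonic function used as boundary data


grid, h = solve_dirichlet(u0)
max_err = max(abs(grid[i][j] - u0(i * h, j * h))
              for i in range(11) for j in range(11))
```

Since $x^2 - y^2$ is harmonic and also satisfies the discrete equation, the computed interior values should reproduce it, consistent with the uniqueness statement above.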

Example. The Plateau problem is the problem of finding the surface of minimal total area that bounds a given curve.

When the surface is the graph of a function u on some suitably smooth domain D, in other words a set of the form {(x, y, u(x,y)) : (x,y) ∈ D}, and the bounding curve is the graph of a function u0 over the boundary ∂D of D, then this problem turns out to be equivalent to the Dirichlet problem (10), but with the linear equation (1) replaced by the nonlinear equation (7). For the above equations, it is also often natural to replace the Dirichlet boundary condition u(x) = u0(x) on the boundary ∂D with another boundary condition, such as the Neumann boundary condition n(x) · ∇xu(x) = u1(x) on ∂D, where n(x) is the outward normal (of unit length) to D at x. Generally speaking, Dirichlet boundary conditions correspond to “absorbing” or “fixed” barriers in physics, whereas Neumann boundary conditions correspond to “reflecting” or “free” barriers.

Natural boundary conditions can also be imposed for our evolution equations (2)-(4). The simplest one is to prescribe the values of $u$ when $t = 0$. We can think of this more geometrically. We are prescribing the values of $u$ at each spacetime point of the form $(0, x, y, z)$, and the set of all such points is a hyperplane in $\mathbb{R}^{1+3}$: it is an example of an initial time surface.

Example. The Cauchy problem (or initial value problem, sometimes abbreviated to IVP) for the heat equation (2) asks for a solution $u : \mathbb{R}^+ \times \mathbb{R}^3 \to \mathbb{R}$ on the spacetime domain $\mathbb{R}^+ \times \mathbb{R}^3 = \{(t,x) : t > 0,\ x \in \mathbb{R}^3\}$, which equals a prescribed function $u_0 : \mathbb{R}^3 \to \mathbb{R}$ on the initial time surface $\{0\} \times \mathbb{R}^3 = \partial(\mathbb{R}^+ \times \mathbb{R}^3)$.

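In other words, the Cauchy problem asks for a sufficiently smooth function $u$, defined on the closure of $\mathbb{R}^+ \times \mathbb{R}^3$ and taking values in $\mathbb{R}$, that satisfies the conditions

$$-\partial_t u(t,x) + k\Delta u(t,x) = 0 \quad \text{for all } (t,x) \in \mathbb{R}^+ \times \mathbb{R}^3, \qquad u(0,x) = u_0(x) \quad \text{for all } x \in \mathbb{R}^3. \tag{11}$$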

The function $u_0$ is often referred to as the initial conditions, or initial data, or just data, for the problem. Under suitable smoothness and decay conditions, one can show that this equation has exactly one solution $u$ for each choice of data $u_0$. Interestingly, this assertion fails if one replaces the future domain $\mathbb{R}^+ \times \mathbb{R}^3 = \{(t, x) : t > 0,\ x \in \mathbb{R}^3\}$ by the past domain $\mathbb{R}^- \times \mathbb{R}^3 = \{(t,x) : t < 0,\ x \in \mathbb{R}^3\}$.
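A heuristic way to see why the past direction behaves so differently (a sketch, not the proof) is to look at single Fourier modes. In one space dimension, and with the heat equation written as $-\partial_t u + k\,\partial_x^2 u = 0$, the function $e^{-k\xi^2 t}\sin(\xi x)$ is a solution for every frequency $\xi$ and every real $t$. The code below, whose names and sample frequencies are hypothetical, tabulates how the amplitude of such a mode changes over a short time interval in each direction.

```python
import math

K = 1.0  # diffusion constant, normalized to 1 for this illustration


def amplification(t, xi):
    # Factor by which the mode exp(-K xi^2 t) sin(xi x) changes in amplitude
    # between time 0 and time t (t may be negative).
    return math.exp(-K * xi * xi * t)


frequencies = (1, 5, 10)
forward = [amplification(0.1, xi) for xi in frequencies]    # toward the future
backward = [amplification(-0.1, xi) for xi in frequencies]  # toward the past
```

Forward in time every mode is damped, and high frequencies are damped the most. Backward in time the same modes are amplified without any bound that is uniform in the frequency, which suggests that generic data cannot be evolved into the past.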

A similar formulation of the IVP holds for the Schrödinger equation (4), though in this case we can solve both to the past and to the future. However, in the case of the wave equation (3) we need to specify not just the initial position u(0, x) = u0(x) on the initial time surface t = 0, but also an initial velocity ∂tu(0, x) = u1(x), since equation (3) (unlike (2) or (4)) cannot formally determine ∂tu in terms of u. One can construct unique smooth solutions (both to the future and to the past of the initial hyperplane t = 0) to the IVP for (3) for very general smooth initial conditions u0, u1.

Many other boundary-value problems are possible. For instance, when analyzing the evolution of a wave in a bounded domain $D$ (such as a sound wave), it is natural to work with the spacetime domain $\mathbb{R} \times D$ and prescribe both Cauchy data (on the initial boundary $\{0\} \times D$) and Dirichlet or Neumann data (on the spatial boundary $\mathbb{R} \times \partial D$). On the other hand, when the physical problem under consideration is the evolution of a wave outside a bounded obstacle (for example, an electromagnetic wave), one considers instead the evolution in $\mathbb{R} \times (\mathbb{R}^3 \setminus D)$ with a boundary condition on the boundary of $D$.

The choice of boundary condition and initial conditions for a given PDE is very important. For equations of physical interest these arise naturally from the context in which they are derived. For example, in the case of a vibrating string, which is described by solutions of the one-dimensional wave equation $\partial_t^2 u - \partial_x^2 u = 0$ in the domain $(a,b) \times \mathbb{R}$, the initial conditions $u = u_0$ and $\partial_t u = u_1$ at $t = t_0$ amount to specifying the original position and velocity of the string. The boundary condition $u(a) = u(b) = 0$ is what tells us that the two ends of the string are fixed.
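The roles of the two initial conditions and of the fixed-end boundary condition become concrete in a simple finite-difference simulation. The sketch below is an illustration under assumptions not taken from the text: the string occupies $(0, 1)$, the wave speed is 1, the scheme is the standard explicit leapfrog scheme with time step equal to the grid spacing, and the function name `vibrate` is hypothetical. The sample data $u_0(x) = \sin(\pi x)$, $u_1 = 0$ has the exact solution $\cos(\pi t)\sin(\pi x)$, which is used for comparison.

```python
import math


def vibrate(u0, u1, n=50, steps=20):
    # String on (0, 1) with fixed ends; grid spacing dx = 1/n, time step dt = dx.
    dx = 1.0 / n
    dt = dx
    xs = [j * dx for j in range(n + 1)]
    prev = [u0(x) for x in xs]       # initial position
    prev[0] = prev[n] = 0.0          # boundary condition: fixed ends
    # First time step from a Taylor expansion that uses the initial velocity.
    cur = [0.0] * (n + 1)
    for j in range(1, n):
        cur[j] = 0.5 * (prev[j + 1] + prev[j - 1]) + dt * u1(xs[j])
    # Leapfrog update for the remaining steps; the end values stay at zero.
    for _ in range(steps - 1):
        nxt = [0.0] * (n + 1)
        for j in range(1, n):
            nxt[j] = cur[j + 1] + cur[j - 1] - prev[j]
        prev, cur = cur, nxt
    return xs, cur, steps * dt


xs, final, t_end = vibrate(lambda x: math.sin(math.pi * x), lambda x: 0.0)
exact = [math.cos(math.pi * t_end) * math.sin(math.pi * x) for x in xs]
max_err = max(abs(a - b) for a, b in zip(final, exact))
```

Without the initial velocity `u1` the first time step could not be formed, which mirrors the need for two pieces of Cauchy data for the wave equation.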

So far we have considered just scalar equations. These are equations where there is only one unknown function $u$, which takes values either in the real numbers $\mathbb{R}$ or in the complex numbers $\mathbb{C}$. However, many important PDEs involve either multiple unknown scalar functions or (equivalently) functions that take values in a multidimensional vector space such as $\mathbb{R}^m$. In such cases, we say that we have a system of PDEs. An important example of a system is that of the CAUCHY-RIEMANN EQUATIONS [I.3 §5.6]:

$$\partial_1 u_2 + \partial_2 u_1 = 0, \qquad \partial_1 u_1 - \partial_2 u_2 = 0, \tag{12}$$

where $u_1, u_2: \mathbb{R}^2 \to \mathbb{R}$ are real-valued functions on the plane. It was observed by CAUCHY [VI.29] that a complex function $w(x+iy) = u_1(x,y) + iu_2(x,y)$ is HOLOMORPHIC [I.3 §5.6] if and only if its real and imaginary parts $u_1$, $u_2$ satisfy the system (12). This system can still be represented in the form of a constant-coefficient linear PDE (8), but $u$ is now a vector $\begin{pmatrix} u_1 \\ u_2 \end{pmatrix}$, and $\mathcal{P}$ is not a scalar differential operator, but rather a matrix of operators $\begin{pmatrix} \partial_2 & \partial_1 \\ \partial_1 & -\partial_2 \end{pmatrix}$.
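The link between holomorphic functions and the Cauchy-Riemann system can be checked on an example. The sketch below assumes the system in the form $\partial_1 u_2 + \partial_2 u_1 = 0$, $\partial_1 u_1 - \partial_2 u_2 = 0$, approximates the partial derivatives by central differences, and compares the real and imaginary parts of $z^3$ (holomorphic) with those of $\bar{z}$ (not holomorphic). All function names are hypothetical.

```python
def d1(f, x, y, h=1e-4):
    # Central-difference approximation of the partial derivative in x.
    return (f(x + h, y) - f(x - h, y)) / (2.0 * h)


def d2(f, x, y, h=1e-4):
    # Central-difference approximation of the partial derivative in y.
    return (f(x, y + h) - f(x, y - h)) / (2.0 * h)


def cauchy_riemann(u1, u2, x, y):
    # Left-hand sides of the two Cauchy-Riemann equations at the point (x, y).
    return (d1(u2, x, y) + d2(u1, x, y), d1(u1, x, y) - d2(u2, x, y))


def cube_re(x, y):
    return x**3 - 3.0 * x * y**2  # real part of (x + iy)^3


def cube_im(x, y):
    return 3.0 * x**2 * y - y**3  # imaginary part of (x + iy)^3


def conj_re(x, y):
    return x  # real part of the conjugate x - iy


def conj_im(x, y):
    return -y  # imaginary part of the conjugate x - iy


res_cube = cauchy_riemann(cube_re, cube_im, 0.8, -0.3)
res_conj = cauchy_riemann(conj_re, conj_im, 0.8, -0.3)
```

Both components of `res_cube` are zero up to discretization error, whereas the second component of `res_conj` is close to 2, so complex conjugation fails the system.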

The system (12) contains two equations and two unknowns. This is the standard situation for a determined system. Roughly speaking, a system is called overdetermined if it contains more equations than unknowns and underdetermined if it contains fewer equations than unknowns. Underdetermined equations typically have infinitely many solutions for any given set of prescribed data; conversely, overdetermined equations tend to have no solutions at all, unless some additional compatibility conditions are imposed on the prescribed data.

Observe also that the Cauchy-Riemann operator $\mathcal{P}$ has the following remarkable property:

$$\mathcal{P}^2[u] = \mathcal{P}[\mathcal{P}[u]] = \begin{pmatrix} \Delta u_1 \\ \Delta u_2 \end{pmatrix}.$$

Thus $\mathcal{P}$ can be viewed as a square root of the two-dimensional Laplacian $\Delta$. One can define a similar type of square root for the Laplacian in higher dimensions and, more surprisingly, even for the d’Alembertian operator $\Box$ in $\mathbb{R}^{1+3}$. To achieve this we need to have four $4 \times 4$ complex matrices $\gamma^1, \gamma^2, \gamma^3, \gamma^4$ that satisfy the property

$$\gamma^\alpha \gamma^\beta + \gamma^\beta \gamma^\alpha = -2m^{\alpha\beta} I.$$

Here, $I$ is the unit $4 \times 4$ matrix and $m^{\alpha\beta} = -1$ when $\alpha = \beta = 1$, $+1$ when $\alpha = \beta \neq 1$, and 0 otherwise, where the index 1 labels the time variable, so that $m^{\alpha\beta}\partial_\alpha\partial_\beta = \Box$. Using the $\gamma$ matrices we can introduce the Dirac operator as follows. If $u = (u_1, u_2, u_3, u_4)$ is a function on $\mathbb{R}^{1+3}$ with values in $\mathbb{C}^4$, then we set $Du = i\gamma^\alpha \partial_\alpha u$. It is easy to check that, indeed, $D^2 u = \Box u$. The equation

$$Du = ku$$

is called the Dirac equation and it is associated with a free, massive, relativistic particle such as an electron.
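The algebraic condition on the $\gamma$ matrices can be verified for one explicit choice. The sketch below assumes the sign convention in which $m^{\alpha\beta}$ is diagonal with entries $(-1, 1, 1, 1)$ and the first index is the time index, and it uses the standard Dirac representation built from the Pauli matrices. Other representations work equally well, and the helper names are hypothetical. It checks $\gamma^\alpha\gamma^\beta + \gamma^\beta\gamma^\alpha = -2m^{\alpha\beta}I$ entry by entry.

```python
def matmul(a, b):
    # Product of two square matrices stored as nested lists.
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def neg(m):
    return [[-x for x in row] for row in m]


def block(a, b, c, d):
    # Assemble a 4 x 4 matrix from four 2 x 2 blocks [[a, b], [c, d]].
    return [a[0] + b[0], a[1] + b[1], c[0] + d[0], c[1] + d[1]]


ZERO = [[0, 0], [0, 0]]
ID2 = [[1, 0], [0, 1]]
PAULI = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]

# gamma^1 (time index) followed by the three spatial gamma matrices.
GAMMA = [block(ID2, ZERO, ZERO, neg(ID2))]
GAMMA += [block(ZERO, s, neg(s), ZERO) for s in PAULI]
M_DIAG = [-1, 1, 1, 1]  # diagonal entries of m^{alpha beta}


def satisfies_relations():
    for a in range(4):
        for b in range(4):
            ab = matmul(GAMMA[a], GAMMA[b])
            ba = matmul(GAMMA[b], GAMMA[a])
            m = M_DIAG[a] if a == b else 0
            for i in range(4):
                for j in range(4):
                    target = -2 * m if i == j else 0
                    if ab[i][j] + ba[i][j] != target:
                        return False
    return True


relations_hold = satisfies_relations()
```

Given these relations, the cross terms in $D^2 = -\gamma^\alpha\gamma^\beta\partial_\alpha\partial_\beta$ cancel in pairs and the diagonal terms give $m^{\alpha\beta}\partial_\alpha\partial_\beta$, which is the d’Alembertian under the stated convention.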

One can extend the concept of a PDE further to cover unknowns that are not, strictly speaking, functions taking values in a vector space, but are instead sections of a VECTOR BUNDLE [IV.6 §5], or perhaps a map from one MANIFOLD [I.3 §6.9] to another; such generalized PDEs play an important role in geometry and modern physics. A fundamental example is given by the EINSTEIN FIELD EQUATIONS [IV.13]. In the simplest, “vacuum,” case, they take the form

$$\mathrm{Ric}(g) = 0,$$

where Ric(g) is the RICCI CURVATURE [III.78] tensor of the spacetime manifold M = (M, g). In this case the spacetime metric itself is the unknown to be solved for. One can often reduce such equations locally to more traditional PDE systems by selecting a suitable choice of coordinates, but the task of selecting a “good” choice of coordinates, and working out how different choices are compatible with each other, is a nontrivial and important one. Indeed, the task of selecting a good set of coordinates in order to solve a PDE can end up being a significant PDE problem in its own right.

PDEs are ubiquitous throughout mathematics and science. They provide the basic mathematical framework for some of the most important physical theories: elasticity, hydrodynamics, electromagnetism, general relativity, and nonrelativistic quantum mechanics, for example. The more modern relativistic quantum field theories lead, in principle, to equations in an infinite number of unknowns, which lie beyond the scope of PDEs. Yet, even in that case, the basic equations preserve the locality property of PDEs. Moreover, the starting point of a QUANTUM FIELD THEORY [IV.17 §2.1.4] is always a classical field theory, which is described by systems of PDEs. This is the case, for example, in the standard model of weak and strong interactions, which is based on the so-called Yang-Mills-Higgs field theory. If we also include the ordinary differential equations of classical mechanics, which can be viewed as one-dimensional PDEs, we see that essentially all of physics is described by differential equations. As examples of PDEs underlying some of our most basic physical theories we refer to the articles that discuss THE EULER AND NAVIER–STOKES EQUATIONS [III.23], THE HEAT EQUATION [III.36], THE SCHRÖDINGER EQUATION [III.83], and THE EINSTEIN EQUATIONS [IV.13].

An important feature of the main PDEs is their apparent universality. Thus, for example, the wave equation, first introduced by D’ALEMBERT [VI.20] to describe the motion of a vibrating string, was later found to be connected with the propagation of sound and electromagnetic waves. The heat equation, first introduced by FOURIER [VI.25] to describe heat propagation, appears in many other situations in which dissipative effects play an important role. The same can be said about the Laplace equation, the Schrödinger equation, and many other basic equations.

It is even more surprising that equations that were originally introduced to describe specific physical phenomena have played a fundamental role in several areas of mathematics that are considered to be “pure,” such as complex analysis, differential geometry, topology, and algebraic geometry. Complex analysis, for example, which studies the properties of holomorphic functions, can be regarded as the study of solutions to the Cauchy-Riemann equations (12) in a domain of $\mathbb{R}^2$. Hodge theory is based on studying the space of solutions to a class of linear systems of PDEs on manifolds that generalize the Cauchy-Riemann equations: it plays a fundamental role in topology and algebraic geometry. THE ATIYAH–SINGER INDEX THEOREM [V.2] is formulated in terms of a special class of linear PDEs on manifolds, related to the Euclidean version of the Dirac operator. Important geometric problems can be reduced to finding solutions to specific PDEs, typically nonlinear. We have already seen one example: the Plateau problem of finding surfaces of minimal total area that pass through a given curve. Another striking example is the UNIFORMIZATION THEOREM [V.34] in the theory of surfaces, which takes a compact Riemannian surface $S$ (a two-dimensional surface with a RIEMANNIAN METRIC [I.3 §6.10]) and, by solving the PDE

![]()

(which is a nonlinear variant of the Laplace equation (1)), uniformizes the metric so that it is “equally curved” at all points on the surface (or, more precisely, has constant SCALAR CURVATURE [III.78]) without changing the conformal class of the metric (i.e., without distorting any of the angles subtended by curves on the surface). This theorem is of fundamental importance to the theory of such surfaces: in particular, it allows one to give a topological classification of compact surfaces in terms of a single number χ(S), which is called the EULER CHARACTERISTIC [I.4 §2.2] of the surface S. The three-dimensional analogue of the uniformization theorem, the GEOMETRIZATION CONJECTURE [IV.7 §2.4] of Thurston, has recently been established by Perelman, who did so by solving yet another PDE; in this case, the equation is the RICCI FLOW [III.78] equation

![]()

which can be transformed into a nonlinear version of the heat equation (2) after a carefully chosen change of coordinates. The proof of the geometrization conjecture is a decisive step toward the total classification of all three-dimensional compact manifolds, in particular establishing the well-known POINCARÉ CONJECTURE [IV.7 §2.4]. To overcome the many technical details in establishing this conjecture, one needs to make a detailed qualitative analysis of the behavior of solutions to the Ricci flow equation, a task which requires just about all the advances made in geometric PDEs in the last hundred years.

Finally, we note that PDEs arise not only in physics and geometry but also in many fields of applied science. In engineering, for example, one often wants to control some feature of the solution u to a PDE by carefully selecting whatever components of the given data one can directly influence; consider, for instance, how a violinist controls the solution to the vibrating string equation (closely related to (3)) by modulating the force and motion of a bow on that string in order to produce a beautiful sound. The mathematical theory dealing with these types of issues is called control theory.

When dealing with complex physical systems, one cannot possibly have complete information about the state of the system at any given time. Instead, one often makes certain randomness assumptions about various factors that influence it. This leads to the very important class of equations called stochastic differential equations (SDEs), where one or more components of the equation involve a RANDOM VARIABLE [III.71 §4] of some sort. An example of this is in the BLACK-SCHOLES MODEL [VII.9 §2] in mathematical finance. A general discussion of SDEs can be found in STOCHASTIC PROCESSES [IV.24 §6].

The plan for the rest of this article is as follows. In section 2 I shall describe some of the basic notions and achievements of the general theory of PDEs. The main point I want to make here is that, in contrast with ordinary differential equations, for which a general theory is both possible and useful, partial differential equations do not lend themselves to a useful general theoretical treatment because of some important obstructions that I shall try to describe. One is thus forced to discuss special classes of equations such as elliptic, parabolic, hyperbolic, and dispersive equations. In section 3 I will try to argue that, despite the impossibility of developing a useful general theory that encompasses all, or most, of the important examples, there is nevertheless an impressive unifying body of concepts and methods for dealing with various basic equations, and this gives PDEs the feel of a well-defined area of mathematics. In section 4 I develop this further by trying to identify some common features in the derivation of the main equations that are dealt with in the subject. An additional source of unity for PDEs is the central role played by the issues of regularity and breakdown of solutions, which is discussed only briefly here. In the final section we shall discuss some of the main goals that can be identified as driving the subject.

2 General Equations

One might expect, after looking at other areas of mathematics such as algebraic geometry or topology, that there was a very general theory of PDEs that could be specialized to various specific cases. As I shall argue below, this point of view is seriously flawed and very much out of fashion. It does, however, have important merits, which I hope to illustrate in this section. I shall avoid giving formal definitions and focus instead on representative examples. The reader who wants more precise definitions can consult the online version of this article.

For simplicity we shall look mostly at determined systems of PDEs. The simplest distinction, which we have already made, is between scalar equations, such as (1)-(5), which consist of only one equation and one unknown, and systems of equations, such as (12) and (13). Another simple but important concept is that of the order of a PDE, which is defined to be the highest derivative that appears in the equation; this concept is analogous to that of the degree of a polynomial. For instance, the five basic equations (1)-(5) listed earlier are second order in space, although some (such as (2) or (4)) are only first order in time. Equations (12) and (13), as well as the Maxwell equations, are first order.1

We have seen that PDEs can be divided into linear and nonlinear equations, with the linear equations being divided further into constant-coefficient and variable-coefficient equations. One can also divide nonlinear PDEs into several further classes depending on the “strength” of the nonlinearity. At one end of the scale, a semilinear equation is one in which all the nonlinear components of the equation have strictly lower order than the linear components. For instance, equation (15) is semilinear, because the nonlinear component $e^u$ is of zero order, i.e., it contains no derivatives, whereas the linear component $\Delta_S u$ is of second order. These equations are close enough to being linear that they can often be effectively viewed as perturbations of a linear equation. A more strongly nonlinear class of equations is that of quasilinear equations, in which the highest-order derivatives of u appear in the equation only in a linear manner but the coefficients attached to those derivatives may depend in some nonlinear manner on lower-order derivatives. For instance, the second-order equation (7) is quasilinear, because if one uses the product rule to expand the equation, then it takes the quasilinear form

$$F_{11}(\partial_1 u, \partial_2 u)\,\partial_1^2 u + F_{12}(\partial_1 u, \partial_2 u)\,\partial_1\partial_2 u + F_{22}(\partial_1 u, \partial_2 u)\,\partial_2^2 u = 0$$

for some explicit algebraic functions $F_{11}$, $F_{12}$, $F_{22}$ of the lower-order derivatives of u. While quasilinear equations can still sometimes be analyzed by perturbative techniques, this is generally more difficult to accomplish than it is for an analogous semilinear equation. Finally, we have fully nonlinear equations, which exhibit no linearity properties whatsoever. A typical example is the Monge-Ampère equation

$$\det(D^2 u) = F(x, u, Du),$$

where $u : \mathbb{R}^n \to \mathbb{R}$ is the unknown function, $Du$ is the GRADIENT [I.3 §5.3] of $u$, $D^2 u = (\partial_i\partial_j u)_{1 \le i,j \le n}$ is the Hessian matrix of $u$, and $F : \mathbb{R}^n \times \mathbb{R} \times \mathbb{R}^n \to \mathbb{R}$ is a given function. This equation arises in many geometric contexts, ranging from manifold-embedding problems to the complex geometry of CALABI-YAU MANIFOLDS [III.6]. Fully nonlinear equations are among the most difficult and least well-understood of all PDEs.

Remark. Most of the basic equations of physics, such as the Einstein equations, are quasilinear. However, fully nonlinear equations arise in the theory of characteristics of linear PDEs, which we discuss below, and also in geometry.

2.1 First-Order Scalar Equations

It turns out that first-order scalar PDEs in any number of dimensions can be reduced to systems of first-order ODEs. As a simple illustration of this important fact consider the following equation in two space dimensions:

$$a_1(x_1, x_2)\,\partial_1 u(x_1, x_2) + a_2(x_1, x_2)\,\partial_2 u(x_1, x_2) = f(x_1, x_2), \tag{17}$$

where $a_1$, $a_2$, $f$ are given real functions in the variables $x = (x_1, x_2) \in \mathbb{R}^2$. We associate with (17) the first-order 2 × 2 system

$$\frac{dx_1}{ds}(s) = a_1(x_1(s), x_2(s)), \qquad \frac{dx_2}{ds}(s) = a_2(x_1(s), x_2(s)). \tag{18}$$

To simplify matters, let us assume that f = 0.

Suppose now that $x(s) = (x_1(s), x_2(s))$ is a solution of (18), and let us consider how $u(x_1(s), x_2(s))$ varies as $s$ varies. By the chain rule we know that

$$\frac{d}{ds}\,u(x_1(s), x_2(s)) = \partial_1 u\,\frac{dx_1}{ds} + \partial_2 u\,\frac{dx_2}{ds},$$

and equations (17) and (18) imply that this equals zero (by our assumption that f = 0). In other words, any solution $u = u(x_1, x_2)$ of (17) with f = 0 is constant along any parametrized curve of the form $x(s) = (x_1(s), x_2(s))$ that satisfies (18).

Thus, in principle, if we know the solutions to (18), which are called characteristic curves for the equation (17), then we can find all solutions to (17). I say “in principle” because, in general, the nonlinear system (18) is not so easy to solve. Nevertheless, ODEs are simpler to deal with, and the fundamental theorem of ODEs, which we will discuss later in this section, allows us to solve (18) at least locally and for a small interval in s.
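The statement that u is constant along the solutions of (18) is easy to test numerically. The following is a minimal sketch, not a general solver: it assumes the illustrative coefficients $a_1(x) = -x_2$, $a_2(x) = x_1$ (our choice, not taken from the text), for which the characteristic curves are circles about the origin and $u(x) = x_1^2 + x_2^2$ solves (17) with f = 0. The function names and the Runge-Kutta integrator are likewise assumptions made for the illustration.

```python
import math

def a(x):
    """Assumed coefficients (a1, a2) = (-x2, x1) of equation (17)."""
    x1, x2 = x
    return (-x2, x1)

def u(x):
    """A solution of a1*d1(u) + a2*d2(u) = 0 for the coefficients above."""
    x1, x2 = x
    return x1 * x1 + x2 * x2

def characteristic(x0, s_end, steps=1000):
    """Integrate the characteristic system dx/ds = a(x) with classical RK4."""
    h = s_end / steps
    x = tuple(x0)
    path = [x]
    for _ in range(steps):
        k1 = a(x)
        k2 = a((x[0] + 0.5 * h * k1[0], x[1] + 0.5 * h * k1[1]))
        k3 = a((x[0] + 0.5 * h * k2[0], x[1] + 0.5 * h * k2[1]))
        k4 = a((x[0] + h * k3[0], x[1] + h * k3[1]))
        x = (x[0] + h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6,
             x[1] + h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6)
        path.append(x)
    return path

# Follow one characteristic curve and record u along it.
path = characteristic((1.0, 0.5), s_end=2.0)
values = [u(x) for x in path]
# Largest deviation of u from its initial value along the curve.
drift = max(abs(v - values[0]) for v in values)
```

Up to discretization error, `drift` should be negligible, which is the numerical counterpart of the chain-rule computation above.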

The fact that u is constant along characteristic curves allows us to obtain important qualitative information even when we cannot find explicit solutions. For example, suppose that the coefficients $a_1$, $a_2$ are smooth (or real analytic) and that the initial data is smooth (or real analytic) everywhere on the set $\mathcal{H}$ where it is defined, except at some point $x_0$ where it is discontinuous. Then the solution u remains smooth (or real analytic) at all points except along the characteristic curve Γ that starts at $x_0$, or, in other words, along the solution to (18) that satisfies the initial condition $x(0) = x_0$. That is, the discontinuity at $x_0$ propagates precisely along Γ. We see here the simplest manifestation of an important principle, which we shall explain in more detail later: singularities of solutions to PDEs propagate along characteristics (or, more generally, hypersurfaces).

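One can generalize equation (17) to allow the coefficients $a_1$, $a_2$, and $f$ to depend not only on $x = (x_1, x_2)$ but also on $u$:

$$a_1(x, u(x))\,\partial_1 u(x) + a_2(x, u(x))\,\partial_2 u(x) = f(x, u(x)). \tag{19}$$

The associated characteristic system becomes

$$\frac{dx_1}{ds}(s) = a_1(x(s), u(x(s))), \qquad \frac{dx_2}{ds}(s) = a_2(x(s), u(x(s))). \tag{20}$$

As a special example of (19) consider the scalar equation in two space dimensions,

$$\partial_t u + u\,\partial_x u = 0, \tag{21}$$

which is called the Burgers equation. Here we have set $a_1(x, u(x)) = 1$ and $a_2(x, u(x)) = u(x)$. With this choice of $a_1$, $a_2$, we can take $x_1(s)$ to be $s$ in (20). Then, renaming $x_2(s)$ as $x(s)$, we derive the characteristic equation in the form

$$\frac{dx}{ds}(s) = u(s, x(s)). \tag{22}$$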

For any given solution u of (21) and any characteristic curve (s, x(s)) we have (d/ds)u(s,x(s)) = 0. Thus, in principle, knowing the solutions to (22) should allow us to determine the solutions to (21). However, this argument seems worryingly circular, since u itself appears in (22).

To see how this difficulty can be circumvented, consider the IVP for (21): that is, look for solutions that satisfy $u(0, x) = u_0(x)$. Consider an associated characteristic curve $x(s)$ such that, initially, $x(0) = x_0$. Then, since $u$ is constant along the curve, we must have $u(s, x(s)) = u_0(x_0)$. Hence, going back to (22), we infer that $dx/ds = u_0(x_0)$ and thus $x(s) = x_0 + s\,u_0(x_0)$. We thus deduce that

$$u(s, x_0 + s\,u_0(x_0)) = u_0(x_0), \tag{23}$$

which implicitly gives us the form of the solution u. We see once more, from (23), that if the initial data is smooth (or real analytic) everywhere except at a point x0 of the line t = 0, then the corresponding solution is also smooth (or real analytic) everywhere in a small neighborhood V of x0, except along the characteristic curve that begins at x0. The smallness of V is necessary here because new singularities can form at large scales. Indeed, u has to be constant along the lines x + su0(x), whose slopes depend on u0(x). At a point where these lines cross we would obtain different values of u, which is impossible unless u becomes singular by this point. This blow-up phenomenon occurs for any smooth, nonconstant initial data u0.
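The implicit description of the solution, and the time at which characteristics first cross, can both be made concrete. The sketch below assumes the illustrative initial data $u_0(x) = \sin x$ (our choice). The characteristics $x_0 + s\,u_0(x_0)$ first meet at $s^* = -1/\min u_0'$, which equals 1 for this data; for $0 \le s < s^*$ the map $x_0 \mapsto x_0 + s\,u_0(x_0)$ is increasing, so the foot of the characteristic through a given point can be found by bisection. All function names are hypothetical.

```python
import math

def u0(x):
    """Assumed smooth initial data (an illustrative choice)."""
    return math.sin(x)

def du0(x):
    """Derivative of the assumed initial data."""
    return math.cos(x)

def breaking_time(lo=-math.pi, hi=math.pi, samples=10001):
    """First time at which two characteristics x0 + s*u0(x0) meet:
    s* = -1 / min u0', with the minimum estimated on a grid
    (infinite if u0' >= 0 everywhere on the grid)."""
    m = min(du0(lo + (hi - lo) * k / (samples - 1)) for k in range(samples))
    return -1.0 / m if m < 0 else math.inf

def solve_burgers(s, x, lo=-10.0, hi=10.0, tol=1e-12):
    """Evaluate u(s, x) from the implicit relation for 0 <= s < breaking time.
    The map x0 -> x0 + s*u0(x0) is then increasing, so bisection on the
    bracket [lo, hi] finds the unique foot x0 of the characteristic."""
    g = lambda x0: x0 + s * u0(x0) - x
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            hi = mid
        else:
            lo = mid
    return u0(0.5 * (lo + hi))
```

For example, `solve_burgers(0.5, 0.3)` returns a value `v` satisfying `v ≈ u0(0.3 - 0.5 * v)`, which is the implicit relation rewritten in terms of the point (s, x). Beyond `breaking_time()` the bisection is no longer justified, because several characteristics may pass through the same point.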

Remark. There is an important difference between the linear equation (17) and the quasilinear equation (19). The characteristics of the first depend only on the coefficients a1 (x), a2 (x), while the characteristics of the second depend explicitly on a particular solution u of the equation. In both cases, singularities can only propagate along the characteristic curves of the equation. For nonlinear equations, however, new singularities can form at large distance scales, whatever the smoothness of the initial data.

The above procedure extends to fully nonlinear scalar equations in $\mathbb{R}^d$ such as the Hamilton-Jacobi equation

$$\partial_t u + H(x, Du) = 0, \qquad u(0, x) = u_0(x), \tag{24}$$

where $u : \mathbb{R} \times \mathbb{R}^d \to \mathbb{R}$ is the unknown function, $Du$ is the gradient of $u$, and the HAMILTONIAN [III.35] $H : \mathbb{R}^d \times \mathbb{R}^d \to \mathbb{R}$ and the initial data $u_0 : \mathbb{R}^d \to \mathbb{R}$ are given. For instance, the eikonal equation $\partial_t u = |Du|$ is a special instance of a Hamilton-Jacobi equation. We associate with (24) the ODE system

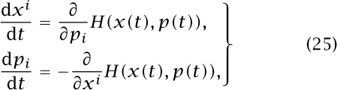

where i runs from 1 to d. The equations (25) are known as a Hamiltonian system of ODEs. The relationship between this system and the corresponding Hamilton-Jacobi equation is a little more involved than in the cases discussed above. Briefly, we can construct a solution u to (24) based only on the knowledge of the solutions (x(t), p(t)) to (25), which are called the bicharacteristic curves of the nonlinear PDE. Once again, singularities can only propagate along bicharacteristic curves (or hypersurfaces). As in the case of the Burgers equation, singularities will occur for more or less any smooth data. Thus, a classical, continuously differentiable solution can only be constructed locally in time. Both Hamilton-Jacobi equations and Hamiltonian systems play a fundamental role in classical mechanics as well as in the theory of the propagation of singularities in linear PDEs. The deep connection between Hamiltonian systems and first-order Hamilton-Jacobi equations played an important role in the introduction of the Schrödinger equation into quantum mechanics.
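To get a feeling for bicharacteristic curves, one can integrate a Hamiltonian system numerically. The sketch below assumes that the system has the standard form $dx_i/dt = \partial H/\partial p_i$, $dp_i/dt = -\partial H/\partial x_i$, and it uses the illustrative Hamiltonian $H(x, p) = (p^2 + x^2)/2$ in one space dimension; both the Hamiltonian and the leapfrog scheme are our own choices for the illustration.

```python
import math

def H(x, p):
    """Assumed Hamiltonian H(x, p) = (p**2 + x**2) / 2 (illustrative)."""
    return 0.5 * (p * p + x * x)

def grad_H(x, p):
    """Partial derivatives (dH/dx, dH/dp) of the assumed Hamiltonian."""
    return x, p

def bicharacteristic(x0, p0, t_end, steps=10000):
    """Integrate dx/dt = dH/dp, dp/dt = -dH/dx with the leapfrog scheme.
    The scheme as written is appropriate for separable Hamiltonians,
    where dH/dx depends only on x and dH/dp only on p."""
    h = t_end / steps
    x, p = x0, p0
    for _ in range(steps):
        p -= 0.5 * h * grad_H(x, p)[0]   # half step in p
        x += h * grad_H(x, p)[1]         # full step in x
        p -= 0.5 * h * grad_H(x, p)[0]   # half step in p
    return x, p

# One full period of the assumed oscillator, starting from (x, p) = (1, 0).
x_end, p_end = bicharacteristic(1.0, 0.0, 2 * math.pi)
energy_drift = abs(H(x_end, p_end) - H(1.0, 0.0))
```

For this Hamiltonian the exact curve is $(x(t), p(t)) = (\cos t, -\sin t)$, so after one period the computed point should return close to its starting position, and H should be nearly unchanged along the curve.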

2.2 The Initial Value Problem for ODEs

Before we can continue with our general presentation of PDEs we need first to discuss, for the sake of comparison, the IVP for ODEs. Let us start with a first-order ODE

$$\partial_x u = f(x, u(x)) \tag{26}$$

subject to the initial condition

$$u(x_0) = u_0. \tag{27}$$

Let us also assume for simplicity that (26) is a scalar equation and that $f$ is a well-behaved function of $x$ and $u$, such as $f(x, u) = u^3 - u + 1 + \sin x$. From the initial data $u_0$ we can determine $\partial_x u(x_0)$ by substituting $x_0$ into (26). If we now differentiate the equation (26) with respect to $x$ and apply the chain rule, we derive the equation

$$\partial_x^2 u(x) = \partial_x f(x, u(x)) + \partial_u f(x, u(x))\,\partial_x u(x),$$

which for the example just defined works out to be $\cos x + 3u^2(x)\,\partial_x u(x) - \partial_x u(x)$. Hence,

$$\partial_x^2 u(x_0) = \partial_x f(x_0, u_0) + \partial_u f(x_0, u_0)\,\partial_x u(x_0),$$

and since $\partial_x u(x_0)$ has already been determined we find that $\partial_x^2 u(x_0)$ can also be explicitly calculated from the initial data $u_0$. This calculation also involves the function $f$ and its first partial derivatives. Taking higher derivatives of the equation (26) we can recursively determine $\partial_x^3 u(x_0)$ as well as all other higher derivatives of $u$ at $x_0$. Therefore, one can in principle determine $u(x)$ with the help of the Taylor series

$$u(x) = \sum_{k \ge 0} \frac{1}{k!}\,\partial_x^k u(x_0)\,(x - x_0)^k = u(x_0) + \partial_x u(x_0)\,(x - x_0) + \frac{1}{2!}\,\partial_x^2 u(x_0)\,(x - x_0)^2 + \cdots.$$

We say “in principle” because there is no guarantee that the series converges. There is, however, a very important theorem, called the Cauchy-Kovalevskaya theorem, which asserts that if the function f is real analytic, as is certainly the case for our function $f(x, u) = u^3 - u + 1 + \sin x$, then there will be some neighborhood J of $x_0$ where the Taylor series converges to a real-analytic solution u of the equation. It is then easy to show that the solution thus obtained is the unique solution to (26) that satisfies the initial condition (27). To summarize: if f is a well-behaved function, then the initial value problem for ODEs has a solution, at least in some time interval, and that solution is unique.
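The first steps of this recursion can be carried out explicitly for the example $f(x, u) = u^3 - u + 1 + \sin x$. The following sketch computes $\partial_x u(x_0)$ and $\partial_x^2 u(x_0)$ from the initial data and compares the resulting second-order Taylor polynomial with a numerical reference solution. The function names and the choice of a Runge-Kutta reference are assumptions made for illustration only.

```python
import math

def f(x, u):
    """Right-hand side of the example equation."""
    return u**3 - u + 1 + math.sin(x)

def first_two_derivatives(x0, u0):
    """u'(x0) from the equation itself, and u''(x0) from the
    differentiated equation cos x + 3 u^2 u' - u'."""
    d1 = f(x0, u0)
    d2 = math.cos(x0) + 3 * u0**2 * d1 - d1
    return d1, d2

def taylor2(x, x0, u0):
    """Second-order Taylor polynomial of the solution about x0."""
    d1, d2 = first_two_derivatives(x0, u0)
    return u0 + d1 * (x - x0) + 0.5 * d2 * (x - x0) ** 2

def rk4(x0, u0, x_end, steps=1000):
    """Reference solution of u' = f(x, u) by classical Runge-Kutta."""
    h = (x_end - x0) / steps
    x, u = x0, u0
    for _ in range(steps):
        k1 = f(x, u)
        k2 = f(x + 0.5 * h, u + 0.5 * h * k1)
        k3 = f(x + 0.5 * h, u + 0.5 * h * k2)
        k4 = f(x + h, u + h * k3)
        u += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        x += h
    return u
```

With $x_0 = 0$ and $u_0 = 1$ the recursion gives $\partial_x u(x_0) = 1$ and $\partial_x^2 u(x_0) = 3$, and the difference between `taylor2` and `rk4` shrinks like the cube of the distance to $x_0$, as one expects from a truncated Taylor series.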

The same result does not always hold if we consider a more general equation of the form

$$a(x, u(x))\,\partial_x u = f(x, u(x)), \qquad u(x_0) = u_0. \tag{28}$$

Indeed, the recursive argument outlined above breaks down in the case of the scalar equation $(x - x_0)\,\partial_x u = f(x, u)$ for the simple reason that we cannot even determine $\partial_x u(x_0)$ from the initial condition $u(x_0) = u_0$. A similar problem occurs for the equation $(u - u_0)\,\partial_x u = f(x, u)$. An obvious condition that allows us to extend our previous recursive argument to (28) is to insist that $a(x_0, u_0) \ne 0$. Otherwise, we say that the IVP (28) is characteristic. If both $a$ and $f$ are also real analytic, the Cauchy-Kovalevskaya theorem applies again and we obtain a unique, real-analytic solution of (28) in a small neighborhood of $x_0$. In the case of an N × N system,

$$A(x, u(x))\,\partial_x u = F(x, u(x)), \qquad u(x_0) = u_0,$$

$A = A(x, u)$ is an N × N matrix, and the noncharacteristic condition becomes

$$\det A(x_0, u_0) \ne 0. \tag{29}$$

It turns out, and this is extremely important in the development of the theory of ODEs, that, while the nondegeneracy condition (29) is essential to obtain a unique solution of the equation, the analyticity condition is not at all important: it can be replaced by a simple local Lipschitz condition for A and F. It suffices to assume, for example, that their first partial derivatives exist and that they are locally bounded. This is always the case if the first derivatives of A and F are continuous.

Theorem (the fundamental theorem of ODEs). If the matrix $A(x_0, u_0)$ is invertible and if A and F are continuous and have locally bounded first derivatives, then there is some time interval $J \subset \mathbb{R}$ that contains $x_0$, and a unique solution2 u defined on J that satisfies the initial conditions $u(x_0) = u_0$.

The proof of the theorem is based on the Picard iteration method. The idea is to construct a sequence of approximate solutions u(n)(x) that converge to the desired solution. Without loss of generality we can assume A to be the identity matrix.3 One starts by setting u(0)(x) = u0 and then defines, recursively,

$$\partial_x u^{(n)}(x) = F(x, u^{(n-1)}(x)), \qquad u^{(n)}(x_0) = u_0.$$

Observe that at every stage all we need to solve is a very simple linear problem, which makes Picard iteration easy to implement numerically. As we shall see below, variations of this method are also used for solving nonlinear PDEs.
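The iteration is simple enough to carry out exactly in a small example. The sketch below assumes the illustrative scalar right-hand side $F(x, u) = u^2$ with $x_0 = 0$ (our choice), for which every iterate is a polynomial; polynomials are stored as lists of exact rational coefficients, lowest degree first. The helper names are hypothetical.

```python
from fractions import Fraction

def poly_mul(a, b):
    """Product of two polynomials given as coefficient lists
    (lowest degree first)."""
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def poly_integrate(a):
    """Antiderivative that vanishes at x = 0."""
    return [Fraction(0)] + [c / (k + 1) for k, c in enumerate(a)]

def picard(u0, n):
    """n-th Picard iterate for du/dx = u**2, u(0) = u0, with x0 = 0."""
    u = [Fraction(u0)]                       # u^(0)(x) = u0
    for _ in range(n):
        integral = poly_integrate(poly_mul(u, u))
        integral[0] += Fraction(u0)          # impose u^(n)(0) = u0
        u = integral
    return u

def poly_eval(a, x):
    """Evaluate a coefficient list at the float x."""
    return sum(float(c) * x**k for k, c in enumerate(a))
```

For $u_0 = 1$ the second iterate is $1 + x + x^2 + x^3/3$, and in general the n-th iterate agrees with the Taylor series $1 + x + x^2 + \cdots$ of the exact solution $1/(1 - x)$ through order n. Each step only requires squaring and integrating a known polynomial, which reflects the remark that each stage of the iteration is a simple linear problem.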

Remark. In general, the local existence theorem is sharp, in the sense that its conditions cannot be relaxed. We have seen that the invertibility condition for A(x0, u0) is necessary. Also, it is not always possible to extend the interval J in which the solution exists to the whole of the real line. As an example, consider the nonlinear equation ∂xu = u2 with initial data u = u0 at x = 0, for which the solution u = u0/(1 - xu0) becomes infinite in finite time: in the terminology of PDEs, it blows up.
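The blow-up in this example can be observed numerically. The following sketch compares the closed-form solution with a Runge-Kutta approximation shortly before the blow-up point $x = 1/u_0$; the step count and function names are assumptions chosen for the illustration, and the numerical method is only meaningful strictly before the blow-up point.

```python
def exact(x, u0):
    """Closed-form solution u0 / (1 - x*u0) of du/dx = u**2, u(0) = u0."""
    return u0 / (1.0 - x * u0)

def blow_up_point(u0):
    """For u0 > 0 the solution becomes infinite as x increases to 1/u0."""
    return 1.0 / u0

def rk4(u0, x_end, steps=20000):
    """Classical Runge-Kutta approximation of u(x_end), starting at x = 0."""
    h = x_end / steps
    u = u0
    for _ in range(steps):
        k1 = u * u
        k2 = (u + 0.5 * h * k1) ** 2
        k3 = (u + 0.5 * h * k2) ** 2
        k4 = (u + h * k3) ** 2
        u += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return u
```

With $u_0 = 2$ the blow-up point is $x = 0.5$: at $x = 0.45$ the solution has already grown from 2 to 20, and at $x = 0.499$ it is about 1000.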

In view of the fundamental theorem and the example mentioned above, one can define the main goals of the mathematical theory of ODEs as follows.

(i) Find criteria for global existence. In the case of blow-up describe the limiting behavior.

(ii) In the case of global existence describe the asymptotic behavior of solutions and families of solutions.

Though it is impossible to develop a general theory that achieves both goals (in practice one is forced to restrict oneself to special classes of equations motivated by applications), the general local existence and uniqueness theorem mentioned above provides a powerful unifying theme. It would be very helpful if a similar situation were to hold for general PDEs.

2.3 The Initial Value Problem for PDEs

In the one-dimensional situation one specifies initial conditions at a point. The natural higher-dimensional analogue is to specify them on hypersurfaces $\mathcal{H} \subset \mathbb{R}^d$, that is, (d - 1)-dimensional subsets (or, to be more precise, submanifolds). For a general equation of order k, that is, one that involves k derivatives, we need to specify the values of u and of its first k - 1 derivatives in the direction normal to $\mathcal{H}$. For example, in the case of the second-order wave equation (3) and the initial hyperplane t = 0 we need to specify initial data for u and $\partial_t u$.

If we wish to use initial data of this kind to start obtaining a solution, it is important that the data should not be degenerate. (We have already seen this in the case of ODEs.) For this reason, we make the following general definition.

Definition. Suppose that we have a kth-order quasilinear system of equations, and the initial data comes in the form of the first k - 1 normal derivatives that a solution u must satisfy on a hypersurface $\mathcal{H}$. We say that the system is noncharacteristic at a point $x_0$ of $\mathcal{H}$ if we can use the initial data to determine formally all the other higher partial derivatives of u at $x_0$, in terms of the data.

As a very rough picture to have in mind, it may be helpful to imagine an infinitesimally small neighborhood of $x_0$. If the hypersurface $\mathcal{H}$ is smooth, then its intersection with this neighborhood will be a piece of a (d - 1)-dimensional affine subspace. The values of u and the first k - 1 normal derivatives on this intersection are given by the initial data, and the problem of determining the other partial derivatives is a problem in linear algebra (because everything is infinitesimally small). To say that the system is noncharacteristic at $x_0$ is to say that this linear algebra problem can be uniquely solved, which is the case provided that a certain matrix is invertible. This is the nondegeneracy condition referred to earlier.

To illustrate the idea, let us look at first-order equations in two space dimensions. In this case $\mathcal{H}$ is a curve Γ, and since k - 1 = 0 we must specify the restriction of u to $\Gamma \subset \mathbb{R}^2$ but we do not have to worry about any derivatives. Thus, we are trying to solve the system

$$a_1(x, u(x))\,\partial_1 u(x) + a_2(x, u(x))\,\partial_2 u(x) = f(x, u(x)), \qquad u|_{\Gamma} = u_0, \tag{30}$$

where $a_1$, $a_2$, and $f$ are real-valued functions of $x$ (which belongs to $\mathbb{R}^2$) and $u$. Assume that in a small neighborhood of a point $p$ the curve Γ is described parametrically as the set of points $x = (x_1(s), x_2(s))$. We denote by $n(s) = (n_1(s), n_2(s))$ a unit normal to Γ.

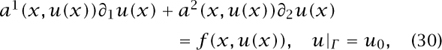

As in the case of ODEs, which we looked at earlier, we would like to find conditions on Γ such that for a given point in Γ we can determine all derivatives of u from the data $u_0$, the derivatives of u along Γ, and the equation (30). Out of all possible curves Γ we distinguish in particular the characteristic ones we have already encountered above (see (20)):

$$\frac{dx_1}{ds} = a_1(x(s), u(x(s))), \qquad \frac{dx_2}{ds} = a_2(x(s), u(x(s))).$$

One can prove the following fact:

Along a characteristic curve, the equation (30) is degenerate. That is, we cannot determine the first-order derivatives of u uniquely in terms of the data u0.

In terms of the rough picture above, at each point there is a direction such that if the hypersurface, which in this case is a line, is along that direction, then the resulting matrix is singular. If you follow this direction, then you travel along a characteristic curve.

Conversely, if the nondegeneracy condition

$$a_1(p, u(p))\,n_1(p) + a_2(p, u(p))\,n_2(p) \ne 0 \tag{31}$$

is satisfied at some point $p = x(0) \in \Gamma$, then we can determine all higher derivatives of $u$ at $p$ uniquely in terms of the data $u_0$ and its derivatives along Γ. If the curve Γ is given by the equation $\psi(x_1, x_2) = 0$, with nonvanishing gradient $D\psi(p) \ne 0$, then the condition (31) takes the form

$$a_1(p, u(p))\,\partial_1\psi(p) + a_2(p, u(p))\,\partial_2\psi(p) \ne 0.$$
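The nondegeneracy condition in its gradient form is a one-line computation. The sketch below tests it for coefficients of Burgers type, $(a_1, a_2) = (1, u)$, against two straight lines; the function name, the tolerance, and the two sample curves are hypothetical choices made for the illustration.

```python
def is_noncharacteristic(a, grad_psi, x, u, tol=1e-12):
    """Test a1*d1(psi) + a2*d2(psi) != 0 at the point x for the curve
    {psi = 0}.  a(x, u) returns the coefficients (a1, a2) and
    grad_psi(x) returns the gradient of psi at x."""
    a1, a2 = a(x, u)
    g1, g2 = grad_psi(x)
    return abs(a1 * g1 + a2 * g2) > tol

# Burgers-type coefficients (a1, a2) = (1, u), with x = (t, y).
burgers = lambda x, u: (1.0, u)

# The initial line t = 0, i.e. psi(t, y) = t, with gradient (1, 0).
line_t0 = lambda x: (1.0, 0.0)

# The line y - 2t = 0, with gradient (-2, 1); it is tangent to the
# characteristic direction (1, u) exactly when u = 2.
line_c2 = lambda x: (-2.0, 1.0)
```

Here `is_noncharacteristic(burgers, line_t0, (0.0, 0.0), u)` holds for every value of u, which is why the line t = 0 is always an admissible initial curve for the Burgers equation, whereas the line y = 2t fails the test precisely at points where the data takes the value 2.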

With a little more work one can extend the above discussion to higher-order equations in higher dimensions, and even to systems of equations. Particularly important is the case of a second-order scalar equation in $\mathbb{R}^d$,

$$\sum_{i,j=1}^{d} a^{ij}(x)\,\partial_i\partial_j u = f(x), \tag{32}$$

together with a hypersurface $\mathcal{H}$ in $\mathbb{R}^d$ defined by the equation $\psi(x) = 0$, where $\psi$ is a function with nonvanishing gradient $D\psi$. Define the unit normal at a point $x_0 \in \mathcal{H}$ to be $n = D\psi/|D\psi|$, or, in component form, $n_i = \partial_i\psi/|D\psi|$. As initial conditions for (32) we prescribe the values of $u$ and its normal derivative $n[u](x) = n_1(x)\,\partial_1 u(x) + n_2(x)\,\partial_2 u(x) + \cdots + n_d(x)\,\partial_d u(x)$ on $\mathcal{H}$:

$$u(x) = u_0(x), \qquad n[u](x) = u_1(x), \qquad x \in \mathcal{H}.$$

It can be shown that $\mathcal{H}$ is noncharacteristic (with respect to equation (32)) at a point $p$ (that is, we can determine all derivatives of $u$ at $p$ in terms of the initial data $u_0$, $u_1$) if and only if

$$\sum_{i,j=1}^{d} a^{ij}(p)\,n_i(p)\,n_j(p) \ne 0. \tag{33}$$

On the other hand, $\mathcal{H}$ is a characteristic hypersurface for (32) if

$$\sum_{i,j=1}^{d} a^{ij}(x)\,n_i(x)\,n_j(x) = 0 \tag{34}$$

for every $x$ in $\mathcal{H}$.

Example. If the coefficients $a^{ij}$ of (32) satisfy the condition

$$\sum_{i,j=1}^{d} a^{ij}(x)\,\xi_i\xi_j > 0 \quad \text{for all } \xi \in \mathbb{R}^d \setminus \{0\} \text{ and all } x \in \mathbb{R}^d, \tag{35}$$

then clearly, by (34), no surface in $\mathbb{R}^d$ can be characteristic. This is the case, in particular, for the Laplace equation Δu = f. Consider also the minimal surface equation (7) written in the form

$$\sum_{i,j=1}^{2} h^{ij}(\partial u)\,\partial_i\partial_j u = 0, \tag{36}$$

with $h^{11}(\partial u) = 1 + (\partial_2 u)^2$, $h^{22}(\partial u) = 1 + (\partial_1 u)^2$, $h^{12}(\partial u) = h^{21}(\partial u) = -\partial_1 u\,\partial_2 u$. It is easy to check that the quadratic form associated with the symmetric matrix $h^{ij}(\partial u)$ is positive definite for every $\partial u$. Indeed, for every $\xi \ne 0$,

$$\sum_{i,j=1}^{2} h^{ij}(\partial u)\,\xi_i\xi_j = (1 + |\partial u|^2)\,|\xi|^2 - (\xi \cdot \partial u)^2 \ge |\xi|^2 > 0,$$

where the inequality follows from the Cauchy-Schwarz inequality $(\xi \cdot \partial u)^2 \le |\xi|^2\,|\partial u|^2$.

Thus, even though (36) is not linear, we see that all surfaces in $\mathbb{R}^2$ are noncharacteristic.
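The positivity of this quadratic form can also be checked by direct computation. The sketch below builds the matrix with entries $1 + (\partial_2 u)^2$, $-\partial_1 u\,\partial_2 u$, $1 + (\partial_1 u)^2$ from the coefficients listed above and compares the resulting quadratic form with the expression $(1 + |\partial u|^2)|\xi|^2 - (\xi \cdot \partial u)^2$ obtained by expanding the sum, which is at least $|\xi|^2$ by the Cauchy-Schwarz inequality. The function names are hypothetical.

```python
import random

def h(du):
    """Coefficient matrix of the minimal surface equation at the
    gradient du = (d1 u, d2 u)."""
    p, q = du
    return ((1 + q * q, -p * q), (-p * q, 1 + p * p))

def quadratic_form(du, xi):
    """Sum over i, j of h_ij(du) * xi_i * xi_j."""
    m = h(du)
    return sum(m[i][j] * xi[i] * xi[j] for i in range(2) for j in range(2))

def closed_form(du, xi):
    """(1 + |du|^2) |xi|^2 - (xi . du)^2, from expanding the sum."""
    norm2 = lambda v: v[0] * v[0] + v[1] * v[1]
    dot = xi[0] * du[0] + xi[1] * du[1]
    return (1 + norm2(du)) * norm2(xi) - dot * dot

def sample_check(trials=1000, seed=0):
    """Compare both expressions on random inputs; return the largest
    discrepancy and the smallest value of (form - |xi|^2) observed."""
    rng = random.Random(seed)
    worst, slack = 0.0, float("inf")
    for _ in range(trials):
        du = (rng.uniform(-5, 5), rng.uniform(-5, 5))
        xi = (rng.uniform(-5, 5), rng.uniform(-5, 5))
        q = quadratic_form(du, xi)
        worst = max(worst, abs(q - closed_form(du, xi)))
        slack = min(slack, q - (xi[0] ** 2 + xi[1] ** 2))
    return worst, slack
```

A random test of this kind is of course no substitute for the algebraic identity, but it is a quick guard against sign errors in the coefficients.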

Example. Consider the wave equation $\Box u = f$ in $\mathbb{R}^{1+d}$. All hypersurfaces of the form $\psi(t, x) = 0$ for which

$$(\partial_t\psi)^2 = \sum_{i=1}^{d} (\partial_i\psi)^2 \tag{37}$$

are characteristic. This is the famous eikonal equation, which plays a fundamental role in the study of wave propagation. Observe that it splits into two Hamilton-Jacobi equations (see (24)):

$$\partial_t\psi = \pm\Bigl(\sum_{i=1}^{d} (\partial_i\psi)^2\Bigr)^{1/2}. \tag{38}$$

The bicharacteristic curves of the associated Hamiltonians are called bicharacteristic curves of the wave equation. As particular solutions of (37) we find $\psi_+(t, x) = (t - t_0) + |x - x_0|$ and $\psi_-(t, x) = (t - t_0) - |x - x_0|$, whose level surfaces $\psi_\pm = 0$ correspond to forward and backward light cones with their vertex at $p = (t_0, x_0)$. These represent, physically, the union of all light rays emanating from a point source at $p$. The light rays are given by the equation $(t - t_0)\,\omega = (x - x_0)$, for $\omega \in \mathbb{R}^3$ with $|\omega| = 1$, and are precisely the (t, x) components of the bicharacteristic curves of the Hamilton-Jacobi equations (38). More generally, the characteristics of the linear wave equation

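$$-a^{00}(t, x)\,\partial_t^2 u + \sum_{i,j=1}^{d} a^{ij}(x)\,\partial_i\partial_j u = 0, \tag{39}$$

with $a^{00} > 0$ and $a^{ij}$ satisfying (35), are given by the Hamilton-Jacobi equations:

$$-a^{00}(t, x)\,(\partial_t\psi)^2 + \sum_{i,j=1}^{d} a^{ij}(x)\,\partial_i\psi\,\partial_j\psi = 0$$

or, equivalently,

$$\partial_t\psi = \pm\Bigl((a^{00}(t, x))^{-1} \sum_{i,j=1}^{d} a^{ij}(x)\,\partial_i\psi\,\partial_j\psi\Bigr)^{1/2}.$$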

The bicharacteristics of the corresponding Hamiltonian systems are called bicharacteristic curves of (39).
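That the light-cone functions $(t - t_0) \pm |x - x_0|$ satisfy the eikonal equation can be confirmed with finite differences. The sketch below takes three space dimensions and evaluates $-(\partial_t\psi)^2 + |D\psi|^2$ by central differences away from the vertex, where the functions are smooth; the step size and function names are assumptions made for the illustration.

```python
import math

def psi(sign, t, x, t0=0.0, x0=(0.0, 0.0, 0.0)):
    """Light-cone function (t - t0) + sign * |x - x0|, with sign = +1 or -1."""
    r = math.sqrt(sum((xi - ci) ** 2 for xi, ci in zip(x, x0)))
    return (t - t0) + sign * r

def eikonal_residual(sign, t, x, h=1e-5):
    """Central-difference value of -(d_t psi)^2 + |D psi|^2 at (t, x)."""
    dt = (psi(sign, t + h, x) - psi(sign, t - h, x)) / (2 * h)
    grad2 = 0.0
    for i in range(len(x)):
        xp = list(x)
        xm = list(x)
        xp[i] += h
        xm[i] -= h
        grad2 += ((psi(sign, t, xp) - psi(sign, t, xm)) / (2 * h)) ** 2
    return -dt * dt + grad2
```

The residual vanishes up to discretization error at every point with $x \ne x_0$, and, for instance, the point $(t, x) = (5, (3, 4, 0))$ lies on the level surface of the function with the minus sign, since $|x| = 5$ there.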

Remark. In the case of the first-order scalar equations (17) we have seen how knowledge of characteristics can be used to find, implicitly, general solutions. We have also seen that singularities propagate only along characteristics. In the case of second-order equations the characteristics are not sufficient to solve the equations, but they continue to provide important information, such as how the singularities propagate. For example, in the case of the wave equation $\Box u = 0$ with smooth initial data $u_0$, $u_1$ everywhere except at a point $p = (t_0, x_0)$, the solution u has singularities present at all points of the light cone $-(t - t_0)^2 + |x - x_0|^2 = 0$ with vertex at $p$. A more refined version of this fact shows that the singularities propagate along bicharacteristics. The general principle here is that singularities propagate along characteristic hypersurfaces of a PDE. Since this is a very important principle, it pays to give it a more precise formulation that extends to general boundary conditions, such as the Dirichlet condition for (1).

Propagation of singularities. If the boundary conditions or the coefficients of a PDE are singular at some point p, and otherwise smooth (or real analytic) everywhere in some small neighborhood V of p, then a solution of the equation cannot be singular in V except along a characteristic hypersurface passing through p. In particular, if there are no such characteristic hypersurfaces, then any solution of the equation must be smooth (or real analytic) at every point of V other than p.

Remarks. (i) The heuristic principle mentioned above is invalid, in general, at large scales. Indeed, as we have shown in the case of the Burgers equation, solutions to nonlinear evolution equations can develop new singularities whatever the smoothness of the initial conditions. Global versions of the principle can be formulated for linear equations based on the bicharacteristics of the equation. See (iii) below.

(ii) According to the principle, it follows that any solution of the equation Δu = f, satisfying the boundary condition u|∂D = u0 with a boundary value u0 that merely has to be continuous, is automatically smooth everywhere in the interior of D provided that f itself is smooth there. Moreover, the solution is real analytic if f is real analytic.

(iii) More precise versions of this principle, which plays a fundamental role in the general theory, can be given for linear equations. In the case of the general wave equation (39), for example, one can show that singularities propagate along bicharacteristics. These are the bicharacteristic curves associated with the Hamilton-Jacobi equation (40).

2.4 The Cauchy-Kovalevskaya Theorem

In the case of ODEs we have seen that a noncharacteristic IVP always admits solutions locally (that is, in some time interval about a given point). Is there a higher-dimensional analogue of this fact? The answer is yes, provided that we restrict ourselves to the real-analytic situation, which is covered by an appropriate extension of the Cauchy-Kovalevskaya theorem. More precisely, one can consider general quasilinear equations, or systems, with real-analytic coefficients, real-analytic hypersurfaces ℋ, and appropriate real-analytic initial data on ℋ.

Theorem (Cauchy-Kovalevskaya (CK)). If all the real-analyticity conditions made above are satisfied and if the initial hypersurface ℋ is noncharacteristic at x0,⁴ then in some neighborhood of x0 there is a unique real-analytic solution u(x) that satisfies the system of equations and the corresponding initial conditions.

In the special case of linear equations, an important companion theorem, due to Holmgren, asserts that the analytic solution given by the CK theorem is unique in the class of all smooth solutions, for smooth noncharacteristic hypersurfaces ℋ. The CK theorem shows that, given the noncharacteristic condition and the analyticity assumptions, the following straightforward way of finding solutions works: look for a formal expansion of the kind u(x) = Σ_α C_α (x - x0)^α by determining the constants C_α recursively from simple algebraic formulas arising from the equation and initial conditions on ℋ. More precisely, the theorem ensures that the naive expansion obtained in this way converges in a small neighborhood of x0 ∈ ℋ.
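The recursive determination of the coefficients can be made concrete on a toy problem. The sketch below is an illustration chosen here, not an example from the text: it takes the transport equation ∂_t u = ∂_x u with the analytic data u(0, x) = 1/(1 - x) on the noncharacteristic line t = 0, expands u = Σ C[j][k] t^j x^k, and reads the recursion (j + 1) C[j+1][k] = (k + 1) C[j][k+1] off the equation. The function names and the truncation order are assumptions.

```python
from fractions import Fraction

def ck_coefficients(order):
    """Coefficients C[j][k] of t^j x^k for u_t = u_x with u(0, x) = 1/(1 - x)."""
    C = [[Fraction(0)] * (order + 1) for _ in range(order + 1)]
    # Initial data on t = 0: 1/(1 - x) = sum_k x^k, so C[0][k] = 1.
    for k in range(order + 1):
        C[0][k] = Fraction(1)
    # Matching the coefficient of t^j x^k in u_t = u_x gives
    # (j + 1) * C[j+1][k] = (k + 1) * C[j][k+1].
    for j in range(order):
        for k in range(order - j):
            C[j + 1][k] = Fraction(k + 1, j + 1) * C[j][k + 1]
    return C

def partial_sum(C, t, x):
    """Sum of C[j][k] t^j x^k over all terms with j + k <= order."""
    order = len(C) - 1
    return sum(float(C[j][k]) * t ** j * x ** k
               for j in range(order + 1)
               for k in range(order + 1 - j))

C = ck_coefficients(30)
approx = partial_sum(C, 0.1, 0.2)
exact = 1.0 / (1.0 - 0.2 - 0.1)  # closed-form solution u = 1/(1 - x - t)
```

Here the formal series is the expansion of 1/(1 - x - t), which converges only for |x| + |t| < 1. This matches the local nature of the theorem: convergence is guaranteed near the initial point, not globally.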

It turns out, however, that the analyticity conditions required by the CK theorem are much too restrictive, and therefore the apparent generality of the result is misleading. A first limitation becomes immediately apparent when we consider the wave equation □u = 0. A fundamental feature of this equation is finite speed of propagation, which means, roughly speaking, that if at some time t a solution u is zero outside some bounded set, then the same must be true at all later times. However, analytic functions cannot have this property unless they are identically zero (see SOME FUNDAMENTAL MATHEMATICAL DEFINITIONS [I.3 §5.6]). Therefore, it is impossible to discuss the wave equation properly within the class of real-analytic solutions. A related problem, first pointed out by HADAMARD [VI.65], concerns the impossibility of solving the Cauchy problem, in many important cases, for arbitrary smooth nonanalytic data. Consider, for example, the Laplace equation Δu = 0 in ℝ^d. As we have established above, any hypersurface ℋ is noncharacteristic, yet the Cauchy problem u|ℋ = u0, n[u]|ℋ = u1, for arbitrary smooth initial conditions u0, u1, may admit no local solutions in a neighborhood of any point of ℋ. Indeed, take ℋ to be the hyperplane x1 = 0 and assume that the Cauchy problem can be solved for given nonanalytic smooth data in a domain that includes a closed ball B centered at the origin. The corresponding solution can also be interpreted as the solution to the Dirichlet problem in B, with the values of u prescribed on the boundary ∂B. But this solution, according to our heuristic principle (which can easily be made rigorous in this case), must be real analytic everywhere in the interior of B, contradicting our assumptions about the initial data.

On the other hand, the Cauchy problem for the wave equation □u = 0 in ℝ^{d+1} has a unique solution for any smooth initial data u0, u1 that is prescribed on a spacelike hypersurface. This means a hypersurface ψ(t, x) = 0 such that at every point p = (t0, x0) that belongs to it the normal vector at p lies inside the light cone (either in the future direction or in the past direction). To say this analytically,

|∂_tψ(p)| > (Σ_{i=1}^{d} |∂_iψ(p)|^2)^{1/2}.

This condition is clearly satisfied by a hyperplane of the form t = t0, but any other hypersurface close to this is also spacelike. By contrast, the IVP is ill-posed for a timelike hypersurface, i.e., a hypersurface for which

|∂_tψ(p)| < (Σ_{i=1}^{d} |∂_iψ(p)|^2)^{1/2}.

That is, we cannot, for general non-real-analytic initial conditions, find a solution of the IVP. An example of a timelike hypersurface is given by the hyperplane x1 = 0. Let us explain the term “ill-posed” more precisely.

Definition. A given problem for a PDE is said to be well-posed if both existence and uniqueness of solutions can be established for arbitrary data that belongs to a specified large space of functions, which includes the class of smooth functions.⁵ Moreover, the solutions must depend continuously on the data. A problem that is not well-posed is called ill-posed.

The continuous dependence on the data is very important. Indeed, the IVP would be of little use if very small changes in the initial conditions resulted in very large changes in the corresponding solutions.
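A classical illustration of how continuous dependence can fail, usually attributed to Hadamard and not spelled out in the text above, is the family u_n(x1, x2) = sinh(n x1) sin(n x2) / n^2 of harmonic functions in the plane. On the line x1 = 0 its Cauchy data are u_n = 0 and ∂_{x1} u_n = sin(n x2) / n, which tend to zero uniformly as n grows, while u_n itself grows without bound at any fixed x1 ≠ 0. The sketch below tabulates the two sizes. The function names and sample values are assumptions made for illustration.

```python
import math

def hadamard_solution(n, x1, x2):
    """u_n(x1, x2) = sinh(n x1) sin(n x2) / n^2, a harmonic function in the plane."""
    return math.sinh(n * x1) * math.sin(n * x2) / n ** 2

def data_size(n):
    """Sup norm of the Cauchy data on x1 = 0: u = 0 and d_{x1} u = sin(n x2) / n."""
    return 1.0 / n

def solution_size(n, x1):
    """Sup over x2 of |u_n(x1, x2)|, attained where |sin(n x2)| = 1."""
    return math.sinh(n * abs(x1)) / n ** 2

# The data shrink like 1/n while the solution at x1 = 0.5 grows exponentially in n.
table = [(n, data_size(n), solution_size(n, 0.5)) for n in (1, 10, 100)]
```

Arbitrarily small changes in the data on x1 = 0 thus produce arbitrarily large changes in the solution a fixed distance away, which is exactly the behavior the definition of well-posedness rules out.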

2.5 Standard Classification

The different behavior of the Laplace and wave equations mentioned above illustrates the fundamental difference between ODEs and PDEs and the illusory generality of the CK theorem. Given that these two equations are so important in geometric and physical applications, it is of great interest to find the broadest classes of equations with which they share their main properties. The equations modeled by the Laplace equation are called elliptic, while those modeled by the wave equation are called hyperbolic. The other two important models are the heat equation (see (2)) and the Schrödinger equation (see (4)). The general classes of equations that they resemble are called parabolic and dispersive, respectively.

Elliptic equations are the most robust and the easiest to characterize: they are the ones that admit no characteristic hypersurfaces.

Definition. A linear, or quasilinear, N × N system with no characteristic hypersurfaces is called elliptic.
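For a scalar second-order operator in two variables with constant principal part a ∂_1^2 u + 2b ∂_1∂_2 u + c ∂_2^2 u, the definition reduces to a test on a quadratic form: a real characteristic direction ξ ≠ 0 satisfies a ξ_1^2 + 2b ξ_1 ξ_2 + c ξ_2^2 = 0, and such a ξ exists exactly when b^2 - ac ≥ 0. The sketch below encodes this special case only. The function names are assumptions, and the general N × N definition involves a determinant condition that is not covered here.

```python
def characteristic_directions_exist(a, b, c):
    """True if a*xi1^2 + 2*b*xi1*xi2 + c*xi2^2 = 0 has a real solution (xi1, xi2) != (0, 0)."""
    # The quadratic form has a nontrivial real zero exactly when it is not definite,
    # that is, when its determinant a*c - b*b is not positive.
    return b * b - a * c >= 0

def is_elliptic(a, b, c):
    """Elliptic means there are no real characteristic directions."""
    return not characteristic_directions_exist(a, b, c)

laplace = is_elliptic(1, 0, 1)   # principal part of u_xx + u_yy
wave = is_elliptic(-1, 0, 1)     # principal part of -u_tt + u_xx
heat = is_elliptic(0, 0, 1)      # second-order part of u_t - u_xx in (t, x)
```

With these inputs only the Laplace operator passes the test. The wave operator has the two characteristic directions ξ_1 = ±ξ_2, and for the heat operator the direction ξ = (1, 0), normal to the lines t = const, is characteristic.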

Equations of type (32) whose coefficients a^{ij} satisfy condition (35) are clearly elliptic. The minimal surface equation (7) is also elliptic. It is also easy to verify that the Cauchy-Riemann system (12) is elliptic. As was pointed out by Hadamard, the IVP is not well-posed for elliptic equations. The natural way of parametrizing the set of solutions to an elliptic PDE is to prescribe conditions for u, and some of its derivatives (the number of derivatives will be roughly half the order of the equation) at the boundary of a domain D ⊂ ℝ^n. These are called boundary-value problems (BVPs). A typical example is the Dirichlet boundary condition u|∂D = u0 for the Laplace equation Δu = 0 in a domain D ⊂ ℝ^n. One can show that, if the domain D satisfies certain mild regularity assumptions and the boundary value u0 is continuous, then this problem admits a unique solution that depends continuously on u0. We say that the Dirichlet problem for the Laplace equation is well-posed. Another well-posed problem for the Laplace equation is given by the Neumann boundary condition n[u]|∂D = f, where n is the exterior unit normal to the boundary. This problem is well-posed for all continuous functions f defined on ∂D with zero mean. A typical problem of the general theory is to classify all well-posed BVPs for a given elliptic system.
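The well-posedness of the Dirichlet problem can be observed on a discrete model. The sketch below is an assumption-laden illustration, not a method from the text: it replaces Δu = 0 on the unit square by the five-point difference equation on a small grid, solves it by Jacobi iteration, and then perturbs the boundary data by at most eps. The grid size, sweep count, and function names are all choices made here.

```python
import math

def solve_dirichlet(boundary, n=9, sweeps=500):
    """Jacobi iteration for the five-point discrete Laplace equation on [0, 1]^2."""
    h = 1.0 / (n - 1)
    u = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i in (0, n - 1) or j in (0, n - 1):
                u[i][j] = boundary(i * h, j * h)  # prescribed boundary values
    for _ in range(sweeps):
        new = [row[:] for row in u]
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                # Each interior value becomes the average of its four neighbors.
                new[i][j] = 0.25 * (u[i - 1][j] + u[i + 1][j] + u[i][j - 1] + u[i][j + 1])
        u = new
    return u

def g(x, y):
    """Boundary data taken from the harmonic polynomial x^2 - y^2."""
    return x * x - y * y

eps = 1e-3
n, h = 9, 1.0 / 8
u = solve_dirichlet(g)
v = solve_dirichlet(lambda x, y: g(x, y) + eps * math.cos(5 * x + 3 * y))

# Error against the exact harmonic function, and change caused by the perturbation.
max_error = max(abs(u[i][j] - g(i * h, j * h)) for i in range(n) for j in range(n))
max_change = max(abs(u[i][j] - v[i][j]) for i in range(n) for j in range(n))
```

Because x^2 - y^2 satisfies the five-point equation exactly, max_error measures only the iteration error. The averaging step cannot increase the maximum of the difference between the two solutions, so max_change stays below eps, a discrete counterpart of continuous dependence on u0.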