Chapter 12

Sound Design

Myths and Realities

As my partner Ken Sweet and I seriously immersed ourselves in sound effects development chores for John Carpenter's The Thing, we decided to try using a sound designer for the first time. The term had hardly been born, and few pictures presented the sound crew with the time and budget to allow experimentation. We called some colleagues to get recommendations for sound design talent.

The first place we contracted used a 24-track machine, something we did not use much in feature film sound work. The concept of layering sounds over themselves for design purposes appeared promising. The film-based Moviola assembly at sound editorial facilities like ours just could not layer and combine prior to the predub rerecording phase. Ken and I sat patiently for half the day as the multi-track operator ran the machine back and forth, repeatedly layering up the same cicada shimmer wave. The owner of the facility and the operator kept telling us how “cool” it would sound, but when they played their compilation back for us, we knew the emperor had no clothes on, at least at this facility.

We called around again and someone recommended a young man who used something called a Fairlight. We arranged for a design session and arrived with our ¼″ source material. We told the eager young man exactly what kinds of sounds we needed to develop. Ken and I could hear the concepts in our heads, but we did not know how to run the fancy gear to expose the sounds within us. We explained the shots we wanted, along with the specially designed cues of sound desired at those particular moments. The young man turned to his computer and keyboard in a room stacked with various equipment rented for the session and dozens of wire feeds spread all over the floor.

Once again, Ken and I sat patiently. An hour passed as the young man dabbled at this and fiddled with that. A second hour passed. By the end of the third hour, I had not heard one thing anywhere near the concepts we had articulated. Finally, I asked the “sound designer” if he had an inkling of how to make the particular sound we so clearly had defined.

He said that he actually did not know how to make it, that his technique was just to play around and adjust things until he made something that sounded kind of cool, and then he would lay it down on sound tape. His previous clients would just pick whatever cues they wanted to use from his “creations.”

Deciding it was going to be a long day, I turned to our assistant and asked him to run to the nearest convenience store and pick up some snacks and soft drinks. While I was digging in my pocket for some money, a quarter slipped out, falling onto the vocoder unit. Suddenly, a magnificent metal shring-shimmer ripped through the speakers. Ken and I looked up with a renewed hope. “Wow!! Now that would be great for the opening title ripping effect! What did you do?”

The young man lifted his hands from the keyboard. “I didn't do anything.”

I glanced down at the vocoder, seeing the quarter. It was then that I understood what had happened. I dropped another quarter, and again an eerie metal ripping shring resounded. “That's it! Lace up some tape; we're going to record this.”

Ken and I began dropping coins as our sound designer sat helpless, just rolling tape to record. Ken started banging on the vocoder, delivering whole new variants of shimmer rips. “Don't do that!” barked the young man. “You'll break it!”

“The studio will buy you a new one!” Ken snapped back. “At least we're finally designing some sound!”

Ken and I knew that to successfully extract the sounds we could hear within our own heads, we would have to learn and master the use of the new signal-processing equipment. It was one of the most important lessons we learned.

I always have had a love-hate relationship with the term “sound designer.” While it suggests someone with a serious mindset for the development of a soundtrack, it also rubs me the wrong way because so many misunderstand and misuse what “sound design” truly is, cheapening what it has been, what it should be, and what it could be. This abuse and ignorance led the Academy of Motion Picture Arts and Sciences to decide that the job title “sound designer” would not be eligible for any Academy Award nominations or subsequent awards.

I have worked on mega-million-dollar features that have had a sound designer contractually listed in the credits whose work was not used. One project was captured by a sound editorial company only because it promised to contract the services of a particular sound designer, yet during the critical period of developing the concept sound effects for the crucial sequences, the contracted sound designer was on a beach in Tahiti. (Contrary to what you might think, he was not neglecting his work. Actually, he had made an agreement with the supervising sound editor, who knew he had been burned out from the previous picture. They both knew that, to the client, sound design was a perceived concept—a concept nonetheless that would make the difference between contracting the sound job or losing the picture to another sound editorial firm.)

By the time the sound designer returned from vacation, the crucial temp dub had just been mixed, with the critical sound design already completed. Remember, they were not sound designing for a final mix. They were designing for the temp dub, which in this case was more important politically than the final mix, because it instilled confidence and comfort for the director and studio. Because of the politics, the temp dub would indelibly set the design concept, with little room for change.

Regardless of what you may think, the contracted sound designer is one of the best in the business. At that moment in time and schedule, the supervising sound editor only needed to use his name and title to secure the show; he knew that several of us on his editorial staff were more than capable of accomplishing the sound design chores for the picture.

THE “BIG SOUND”

In July 1989 two men from Finland came to my studio: Antti Hytti was a music composer, and Paul Jyrälä was a sound supervisor/mixer. They were interested in a tour of my facility and transfer bay, in particular. I proudly showed them through the sound editorial rooms as I brought them to the heart of our studio—transfer. I thought it odd that Paul simply glanced over the Magna-Tech and Stellavox, only giving a passing acknowledgment to the rack of processing gear. He turned his attention to studying the room's wraparound shelves of tapes and odds and ends.

Paul spoke only broken English, so I turned to the composer with curiosity. “Antti, what is he looking for?”

Antti shrugged, then asked Paul. After a short interchange Antti turned back to me. “He says that he is looking for the device that makes the Big Sound.”

I was amused. “There is no device that makes the Big Sound. It's a philosophy, an art—an understanding of what sounds good together to make a bigger sound.”

Antti interpreted to Paul, who in turn nodded with understanding as he approached me. “You must come to Finland so we make this Big Sound.”

I resisted the desire to chuckle, as I was up to my hips in three motion pictures simultaneously. I shook their hands as I wished the two men well, assuming I would not see them again. Several weeks later, I received a work-in-progress video of the picture on which Paul had asked me to take part. Still in the throes of picture editorial, it was falling increasingly further behind schedule. My wife and I watched the NTSC (National Television System Committee) transfer of the PAL (phase alternating line) video as we sat down to dinner. I became transfixed as I watched images of thousands of troops in this 1930s-era epic, with T-26 Russian armor charging across snow-covered battlefields.

The production recordings were extremely good but, like most production tracks, focused on spoken dialog. In a picture filled with men, tanks, airplanes, steam trains, and weaponry, much sound effects work still had to be done. The potential sound design grew within my head as my imagination started filling the gaps and action sequences. If any picture cried out for the Big Sound, this was the one. Talvisota: The Winter War was the true-life story of the war between Finland and the Soviet Union in 1939. It proved one of the most important audio involvements of my professional career. It was not a question of money. It was an issue of passion: the heart and soul of a nation beckoned from the rough work-in-progress video.

I made arrangements to go to Helsinki and work with Paul Jyrälä to co-supervise this awesome challenge. I put together a bag filled with sound effect DAT tapes and a catalog printout to add to the sound design lexicon that Paul and I would have to use.

This project was especially challenging, as this was before the nonlinear workstation. The film was shot on 35-mm with a 1.66:1 aspect ratio. They did not use 35-mm stripe or fullcoat for sound editing, but they did use 17.5-mm fullcoat and had one of the best 2-track stereo transfer bays I have had the privilege to work in. To add to the challenge, we only had nine 17.5-mm Perfectone playback machines on the re-recording stage, which meant that we had to be extremely frugal about how wide our “A,” “B,” “C” predub groups could be built out. We had no Moviolas, no synchronizers, no Acmade coding machines, no cutting tables with rewinds, and no Rivas splicers. We did have two flatbed Steenbecks, a lot of veteran know-how, and a sauna.

AMERICAN SOUND DESIGN

Properly designed sound has a timbre all its own. Timbre is the violin—the vibration resonating emotionally with the audience. Timbre sets good sound apart from a pedestrian soundtrack.

Sound as we have seen it grow in the United States has distinguished American pictures on a worldwide market. Style, content, slickness of production, rapidity of storytelling—all made the sound of movies produced in the United States a benchmark and inspiration for sound craftspersonship throughout the rest of the world. For a long time, many foreign crews considered sound only a background behind the actors. They have only in recent years developed a Big Sound concept of their own.

For those clients who understand that they need a theatrical soundtrack for their pictures, the first hurdle is not understanding what a theatrical soundtrack sounds like, but how to achieve it. When you say “soundtrack,” the vast majority of people think only of the music score. The general audience believes that almost all nonmusical sounds are actually recorded on the set when the film is shot. It does not occur to them that far more time and effort were put into the nonmusical portion of the audio experience of the storytelling than was put into composing and orchestrating the theme of the music score itself.

Some producers and filmmakers fail to realize that theatrical sound is not a format; it is not a commitment to spend giga-dollars or hire a crew the size of a combat battalion. It is a philosophy, an art form that only years of experience can help you understand.

The keys to a great soundtrack are its dynamics and variation, with occasional introductions of subtle, unexpected things: the hint of hot gases on a close-up of a recently fired gun barrel, or an unusual spatial inversion, such as a delicate sucking-up sound juxtaposed against a well-oiled metallic movement for a shot of a high-tech device being snapped open.

The Sound Design Legacy

When you ask a film enthusiast about sound design, the tendency is to recall legendary pictures with memorable sound, such as Apocalypse Now and the Star Wars series. I could not agree more. Many of us were greatly influenced by the work of Walter Murch and Ben Burtt (who worked on the above films, respectively). They not only had great product opportunities to practice their art form, but they also had producer-directors who provided the latitude and financial means to achieve exceptional accomplishments.

Without taking any praise away from Walter or Ben, let us remember that sound design did not begin in the 1970s. Did you ever study the soundtracks to George Pal's War of the Worlds or The Naked Jungle? Have you considered the low-budget constrictions that director Robert Wise faced while making The Day the Earth Stood Still, or the challenges confronting his sound editorial team in creating both the flying saucer and alien ray weapons? Who dreamed up using soda fizz as the base sound effect for the Marabunta, the army ants that terrorized Charlton Heston's South American plantation in The Naked Jungle? Speaking of ants, imagine thinking up the brilliant idea of looping a squeaky pickup truck fan belt for the shrieks of giant ants in Them! Kids in the theatre wanted to hide for safety when that incredible sound came off the screen.

When these fine craftspeople labored to make such memorable sound events for your entertainment pleasure, they made them without the help of today's high-tech digital tools, without harmonizers or vocoders, without a Synclavier or a Fairlight. They designed these sounds with their own imaginations, working with the tools they had at hand and being extraordinarily innovative. They understood what sounds to put together to create new sound events: how to play them backward, slow them down, cut, clip, and scrape them with a razor blade (when magnetic soundtrack became available in 1953), or paint them with blooping ink (when they still cut sound effects on optical track 35-mm film).

DO YOU DO SPECIAL EFFECTS TOO?

In the late summer of 1980 I had completed Roger Corman's Battle Beyond the Stars. I was enthusiastic about the picture, mainly because I had survived the film's frugal sound editorial budget as well as all the daily changes due to the myriad of special-effects shots that came in extremely late in the process.

I had to come up with seven different-sounding spacecraft, each with unique results, such as the Nestor ship, which we created from human voices. The voices belonged to the community choir from my hometown college in Coalinga, California. Choral director Bernice Isham had conducted her sopranos, altos, tenors, and basses through a whole maze of interesting vocal gymnastics, which were later processed to turn 40 voices into million-pound thrust engines for the Nestor ship, manned by clone humanoids. You can listen to several examples of the sound effects creations developed from the choral voices, which continue to be used in many films to this day.

We had developed Robert Vaughn's ship from the root recordings of a dragster car, then processed it heavily to give it a menacing and powerful “magnetic-flux” force, just the kind of quick-draw space chariot a space-opera gunslinger would drive.

The day after the cast and crew screening, I showed up at Roger's office to discuss another project. As was the custom, I was met by his personal secretary. I could not help but beam with pride regarding my work on Battle, so I asked her if she had attended the screening, and if so, what did she think of the sound effects?

She had gone to the screening, but she struggled to remember the soundtrack. “The sound effects were okay, for what few you had.”

“The few I had?”

The secretary shrugged. “Well, you know, there were so many special effects in the picture.”

“Special effects! Where do you think the sound for all of those special effects came from?” I snapped back.

She brightened up. “Oh, do you do that too?”

“Do that too?” I was dumbfounded. “Who do you think makes those little plastic models with the twinky-lights sound like powerful juggernauts? Sound editors do, not model builders!”

It became obvious to me that the viewing audience either cannot separate visual special effects from sound effects or has a hard time understanding where one ends and the other begins.

A good friend of mine got into hot water once with the Special Effects Committee when, in a heated argument, he had the temerity to suggest that, to have a truly fair appraisal of their work in award evaluation competition, they needed to turn the soundtrack off. After all, the work of the sound designer and the sound editors was vastly affecting the perception of visual special effects. The committee neither appreciated nor heeded my friend's suggestion, even though my friend had more than 30 years and 400 feature credits of experience behind his statement.

I have had instances where visual special effects artists would drop by to hear what I was doing with their work-in-progress “animatic” special effect shots, only to be inspired by something that we were doing that they had not thought of. In turn, they would go back to continue work on these shots, factoring in new thinking that had been born out of our informal get-together.

SOUND DESIGN MISINFORMATION

A couple of years ago I read an article in a popular audio periodical in which a new, “flavor-of-the-month” sound designer had been interviewed. He proudly boasted of something no one else supposedly had done: he had synthesized Clint Eastwood's famous .44 Magnum gunshot from Dirty Harry into a laser shot. I sighed. We had done the same thing nearly 20 years before for the Roger Corman space opera, Battle Beyond the Stars. For nearly two decades Robert Vaughn's futuristic hand weapon had boldly fired the sharpest, most penetrating laser shot imaginable, which we developed from none other than Clint Eastwood's famous pistol.

During the mid-1980s I had hired a young enthusiastic graduate from a prestigious southern California university film school. He told me he felt very honored to start his film career at my facility, as one of his professors had lectured about my sound design techniques for Carpenter's The Thing.

Momentarily venerated, I felt a rush of pride, which was swiftly displaced by curious suspicion. I asked the young man what his professor had said. He joyously recounted his professor's explanation about how I had deliberately and painstakingly designed the heartbeat throughout the blood test sequence in reel 10, which subconsciously got into a rhythmic pulse, bringing the audience to a moment of terror.

I stood staring at my new employee with bewilderment. What heartbeat? My partner, Ken Sweet, and I had discussed the sound design for the project very thoroughly, and one sound we absolutely had stayed away from because it had screamed of cliché was any kind of heartbeat.

I could not take it any longer. “Heartbeat?! Horse hockey!! The studio was too cheap to buy fresh fullcoat stock for the stereo sound effect transfers! They used reclaim which had been sitting on a steel film rack that sat in the hallway across from the entrance to dubbing 3. Nobody knew it at the time, but the steel rack was magnetized, which caused a spike throughout the stock. A magnetized spike can't be removed by bulk degaussing. Every time the roll of magnetic stock turns 360 degrees there is a very low frequency ‘whomp.’ You can't hear it on a Moviola. We didn't discover it until we were on the dubbing stage—by then it was too late!!”

The young man shrugged. “It still sounded pretty neat.”

Pretty neat? I guess I'm not as upset about the perceived sound design where none was intended as I am about the fact that the professor had not researched the subject on which he lectured. He certainly never had called to ask about the truth, let alone to inquire about anything of consequence that would have empowered his classroom teachings. He just made up a fiction, harming the impressionable minds of students with misinformation.

In that same sequence of The Thing I can point out real sound design. As John Carpenter headed north to Alaska to shoot the Antarctica base-camp sequences, he announced that one could not go too far in designing the voice of The Thing. To that end, I tried several ideas, with mixed results. Then one morning I was taking a shower when I ran my fingers over the soap-encrusted fiberglass wall. It made the strangest unearthly sound. I was inspired. Turning off the shower, I grabbed my tape recorder, and dangled microphones from a broomstick taped in place from wall to wall at the top of the shower enclosure.

I carefully performed various “vocalities” with my fingertips on the fiberglass: moans, cries, attack shrieks, painful yelps, and other creature movements. When John Carpenter returned from Alaska, I could hardly wait to play the new concept for him. I set the tape recorder on the desk in front of him and depressed the “Play” button.

I learned a valuable lesson that day. What a client requests is not necessarily what he or she really wants, or particularly what he or she means. Rather than developing a vocal characterization for a creature never truly heard before, both director and studio executives actually meant, “We want the same old thing everyone expects to hear and what has worked in the past, only give it a little different spin, you know.” The only place I could sneak a hint of my original concept for The Thing's vocal cords was in the blood test scene where Kurt Russell stuck the hot wire into the petri dish and the blood leaped out in agony onto the floor.

The point where the blood turned and scurried away was where I cut those sound cues of my fingertips on the shower wall, called “Tentacles tk-2.” I wonder what eerie and horrifying moments we could have conjured up instead of the traditional cliché lion roars and bear growls we were compelled to use in the final confrontation between Kurt Russell and the mutant Thing.

DAS BOOT: MIKE LE-MARE

I believe “legendary” is the only word to properly describe the audio storytelling achievement that Mike Le-Mare (Blow-Up, Ronin, Without a Clue, Bloodsport, New Jack City, Reindeer Games, The Terminator, Enemy Mine, and The Neverending Story) and Karola Storr, along with their British-German team, accomplished in 1981 with Das Boot, a film directed by Wolfgang Petersen.

Never before had anyone been nominated twice for an Academy Award in the sound arts on the same picture. Mike Le-Mare was nominated for Best Sound as well as Best Sound Effects Editing.

Many attribute the successful experience of Das Boot to the careful and thoughtful sounds Mike gave to the film. The sound effects, by design and intent, were profoundly responsible for the psychological, claustrophobic terror during sequences such as the depth charge attack, which left the audience with sweaty palms, gripping the armrests. Who could not be affected by the stress and creaks of the metal hull of the U-boat enduring the pressures of the North Atlantic? Mike's broad palette of audio textures (unsettling air expulsions, pit-of-the-stomach ronks, and low-end growls) seized the audience, making real to them the vulnerability and mortality of the submarine crew in a way that transcended the visual picture.

Such a track did not magically happen because Le-Mare could hear the potential sound design in his head. Nor did it happen because he willed it. Granted, such beginnings are vital, and the will to strive for excellence is essential. But without rolling up his sleeves and attending to the countless details and processes, without challenging himself and his crew to take the extra effort to achieve more, such sound experiences are not possible.

Le-Mare had been brought onto the project early enough to be able to do a significant amount of custom recording. The production company had access to a World War II-era German U-boat. To record the authentic diesel (for surface) and electric (for underwater maneuvering) engines, Le-Mare was allowed access to a permanently moored U-boat at Wasserburg where he made carefully controlled recordings of the submarine engines. Many variations were required for the various sequences; precise notes kept; strict attention paid to microphone aspect; engine gears and revolutions per minute (rpm) were written down, such as U-boat: CLOSE—diesel engine startup and CONSTANT—nice tappets, then engine shuts down (in 2nd gear at 202 rpm). Recordings were made of the engine in all gears, under strain, cruising, at flank speed, reversing—recorded close up, medium perspective, down the companionway, or as heard from the conning tower.

Le-Mare made most of his recordings with a ¼″ Uher 4200 Report Monitor and Nagra III tape machines, using both Sennheiser and Neumann microphones. He obtained some authentic German hydrophone recordings that had extremely detailed notations, such as HYDROPHONE: destroyer approaches and passes overhead, 250 rpm slows to 160 rpm (2 shaft—4 blade) then slows to stop TK-1.

From his own extensive sound effect library back in London, Le-Mare pulled a variety of ship horns, bilge water slops, tanks flooding with sea water, tanks blowing with air, vents, safety pressure valves, ASDIC pings, and all the metal and metal-related groans and rubs he could put together.

To help focus and define the authenticity of his sound design, Le-Mare brought in numerous German naval veterans who had served on U-boats during the war. He would tell them the use of each sound cue grouping, and then he would play it.

“No, it did not sound like that,” the submariner would say. “It sounds more like the bulkheads crying out in agony.”

Sometimes Le-Mare would get emotional reactions from the men as the sounds evoked still-present realities from their memories.

Figure 12.1 Mike Le-Mare and Karola Storr (Das Boot) tackle the challenge of John Frankenheimer's action-thriller Ronin.

“There were times that I would have conflicting opinions,” Le-Mare explained. “I resorted to acquiring World War II-era recordings, unsuitable for theatrical use, but I would listen to the texture and timbre of them and do an A-B comparison to see that our audio recreations were on the right track. This and the U-boat servicemen really helped me out a lot.”

Unlike most submarine movie crews that build the practical set open on the side so that the cameras can get the shots easier, U-96 was built in two sections, front and rear. To this day, the two halves (now joined together) are still on display at Bavaria Studios in Munich. Le-Mare recalled:

Le-Mare brought onto the Foley stage all kinds of electric motors for the sounds of the echo range finder, compass, depth gauge, generator, and other equipment for controlled isolated recordings. He brought in switches and various mechanisms for periscope handle movements and fine adjustments, ballast tank operations, rudder controls, hydroplane wheel turns, and so forth. Each item was carefully recorded, making numerous variations for the future sound editorial process.

Le-Mare had Moviolas and Acmade's Pic-Sync Compeditors shipped in from London to supply his British sound editorial crew with the equipment it felt most comfortable and confident using, while the German crew used flatbed Steenbecks. With Das Boot, German re-recording stages were challenged by the number of tracks it took to mount the required sound. With a limited number of soundtracks generally used in film production, picture editors ran the mixing sessions. Not with Das Boot. Aside from Klaus Doldinger's haunting music score, Mike Le-Mare came onto the dubbing stage with as many as 110 hard-effect tracks and an average of 20 Foley tracks in addition to the dialog, ADR, and backgrounds. In fact, Le-Mare was asked to get behind the console and help in the mixing at some busy moments, because he was the only one who had a grasp of the material and how it all would work together. For all this, Le-Mare was nominated not only for Best Sound Effects Editing as the supervising sound editor, but also for Best Sound as one of the re-recording mixers.

It was one of the most complex soundtracks ever to be mixed on Stage “A” at Bavaria Studios. So thorough was the feature's preparation and the attention to detail given it that, a month after Le-Mare and his sound crew had finished the two-and-a-half-hour feature version, the director and producers decided that Le-Mare and his team should prepare the television version. They went back to the drawing board to prepare the new five-and-a-half-hour version. Of course, the conventional method would have been to cut down the feature version, but, in this case, new scenes were actually added and existing materials were extended to generate a longer picture.

More producers should consider hiring quality theatrical editors to handle foreign language conversions, but it comes down to money. Remember this—you get what you pay for. So many foreign language remixes seem comical and often slipshod. The producers must be willing to pay for fine craftspersonship to achieve a high-quality standard.

THE DIFFERENCE BETWEEN DESIGN AND MUD

David Stone

Supervising sound editor David Stone, winner of the Academy Award for Best Sound Effects Editing for 1992's Bram Stoker's Dracula, is a quiet and reserved audio artist. As sound design field commanders go, he is not flamboyant, nor does he use showmanship as a crutch. What he does is quietly and systematically deliver the magic of his craft: his firm grasp of storytelling through sound. Though he loves to work on animated films, having helmed projects such as Beauty and the Beast, A Goofy Movie, Cats Don't Dance, as well as Pooh's Grand Adventure: The Search for Christopher Robin, and The Lion King II, one should never try to typecast him.

Stone has time and again shown his various creative facets and the ability to mix his palette of sounds in different ways to suit the projects at hand. He leaves the slick illusionary double-talk to others. For himself, he stands back and studies the situation for a while. He makes no pretense that he is not an expert in all the fancy audio software or that he was up half the night trying to learn how to perform a new DSP (digital signal processing) function. Stone offered the following insight:

Figure 12.2 David Stone, supervising sound editor and Academy Award winner for Best Sound Effects Editing for Bram Stoker's Dracula (1992).

There was a time when Hollywood soundtracks kept getting better because freelance sound people were circulating and integrating into different groups for each film. So skills and strategies were always growing in a fertile environment. It's the social power that makes great films extraordinary … the same thing that audiences enjoy in films that have more ensemble acting, where the art is more than the sum of its parts.

I think if film students could study the history of the soundtracks they admire, they'd see this pattern of expert collaboration, and that's quite the opposite of what happens when too few people rely on too much tech to make movie sound.

Being a computer vidiot is not what creates good sound design—understanding what sounds go together and when to use them does.

Many times I have watched young sound editors simply try to put together two or more sound effects to achieve a larger audio event, often without satisfaction. A common mistake is to simply lay one pistol shot on top of another. They do not necessarily get a bigger pistol shot; in fact, they often only diminish the clarity and character of the weapon because they are putting together two sounds that have too many common frequency dynamics to complement one another. Instead, the result is what we call mud.
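For readers who like to see the idea in numbers, here is a minimal sketch of how one might estimate how much two layers compete for the same frequency ranges before stacking them. Everything in it is an assumption made for illustration: the synthetic decaying tones stand in for real recordings, and the band edges, the function names, and the overlap score are hypothetical choices, not an established industry measure.

```python
import math

SAMPLE_RATE = 8000   # Hz, kept low so the naive DFT below stays fast
NUM_SAMPLES = 512
BAND_EDGES = [0, 250, 500, 1000, 2000, 4000]  # hypothetical band layout, in Hz


def decaying_tone(freq_hz):
    """Synthetic stand-in for a recording: a sine burst that dies away."""
    return [
        math.exp(-i / 200.0) * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        for i in range(NUM_SAMPLES)
    ]


def band_profile(samples):
    """Fraction of the signal's energy that falls in each band (naive DFT)."""
    n = len(samples)
    energies = [0.0] * (len(BAND_EDGES) - 1)
    for k in range(1, n // 2):
        freq = k * SAMPLE_RATE / n
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        for b in range(len(energies)):
            if BAND_EDGES[b] <= freq < BAND_EDGES[b + 1]:
                energies[b] += power
                break
    total = sum(energies) or 1.0
    return [e / total for e in energies]


def spectral_overlap(profile_a, profile_b):
    """Score from 0 (no shared bands) to 1 (identical band distribution)."""
    return sum(min(a, b) for a, b in zip(profile_a, profile_b))


# Two "shots" that live in the same band, and a low thump that does not.
shot_a = band_profile(decaying_tone(1400))
shot_b = band_profile(decaying_tone(1500))
low_thump = band_profile(decaying_tone(120))

similar = spectral_overlap(shot_a, shot_b)       # high: likely to turn to mud
different = spectral_overlap(shot_a, low_thump)  # low: layers stay distinct

print(f"shot + shot overlap:  {similar:.2f}")
print(f"shot + thump overlap: {different:.2f}")
```

A high score only flags a pairing worth a second listen; your ears remain the final judge of whether two layers complement or smother each other.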

A good example of this is the Oliver Stone film Any Given Sunday. The sound designer did not pay much attention to the frequency characteristics of his audio elements, or, for that matter, to overmodulation. As I listened to the presentation at the Academy theatre (just so you will not think that a lesser theatre with a sub-standard speaker system was the performance venue) I was completely taken out of the film itself by the badly designed football effects. Clarity and “roundness” of sound were replaced by overdriven effects with an emphasis in the upper midrange that cannot be described as anything other than distortion.

A newly initiated audio jockey may naively refer to such sound as befitting the type of film, the “war” of sports needing a “war” of sound. Frankly, such an answer is horse dung. Listen to the sound design of Road to Perdition or Saving Private Ryan. These are also sounds of violence and war in the extreme, and yet they are designed with full “roundness” and clarity, with each audio event having a very tactile linkage with its visual counterpart.

I have harped on this throughout this book and will continue to do so, because it is the keystone of the sound design philosophy: if you take the audience out of the film because of bad sound design, then you have failed as a sound designer. It's just that simple.

I hope you will be patient with me for a few pages as I am going to introduce you (if you do not already know these sound artists) to several individuals whom you may want to use as role models as you develop your own style of work and professional excellence. As you read about them, you will see a common thread, a common factor in all of them. They do not just grind out their projects like so much sausage in a meat grinder. They structure their projects as realistically as they can within the budgetary and schedule restraints; they know how to shift gears and cut corners when and if they have to without sacrificing the ultimate quality of the soundtrack that they are entrusted with. They custom record, they try new things, and they know how to put together an efficient team to accomplish the mission.

Gary Rydstrom

Sound designer/sound re-recording mixer Gary Rydstrom has developed a stellar career with credits such as Backdraft, Terminator 2: Judgment Day, both Jurassic Parks, Strange Days, The Haunting, Artificial Intelligence, Minority Report, and the stunning sound design and mixing efforts of Saving Private Ryan.

Richard King

This supervising sound editor/sound designer is no stranger to challenging and complex sound design. Winning the Academy Award for Best Sound Editing for Master and Commander: The Far Side of the World, Richard has also helmed such diverse feature films as Rob Roy, GATTACA, The Jackal, Unbreakable, Signs, Lemony Snicket's A Series of Unfortunate Events, Steven Spielberg's epic version of War of the Worlds, and Firewall, to name but a few. King says:

Figure 12.3 Supervising sound editor/sound designer Richard King. On the left, Richard and his team are custom recording canvas sail movement for Master and Commander: The Far Side of the World, which earned Richard an Academy Award for Best Sound Editing (2004).

Figure 12.4 Supervising sound editor/sound designer Jon Johnson. Academy Award winner for Best Sound Editing in 2000 for the WWII sea action-thriller U-571.

Jon Johnson

Supervising sound editor Jon Johnson first learned his craft on numerous television projects in the early 1980s and then designed and audio processed sound effects for Stargate and later for Independence Day. Taking the helm as supervising sound editor, Jon has successfully overseen the sound design and editorial work on projects such as The Patriot, A Knight's Tale, Joy Ride, The Rookie, The Alamo, The Great Raid, and Amazing Grace, to name a few. In 2000, Jon won the Academy Award for Best Sound Editing for the World War II submarine action-thriller U-571.

John A. Larsen

Supervising sound editor John Larsen is one of the few sound craftspeople-artists who is also a smart and successful businessman. His sound editorial department on the 20th Century Fox lot continually takes on extremely difficult and challenging projects and carries them successfully through the postproduction process.

Figure 12.5 John Larsen reviewing material with assistant sound editor Smokey Cloud.

One of his first important sound editing jobs came when he worked on the 1980 Popeye, starring Robin Williams. He continued to practice his craft on Personal Best, Table for Five, Brainstorm, and Meatballs II. In 1984 he served as the supervising sound editor for the television series Miami Vice, where he really learned the often hard lessons of solving problems under compressed schedules and with tight crews.

After sound editing on Fandango and Back to the Future, John picked up the gauntlet to supervise feature films in 1985. Since then he has handled a wide breadth of style and demands from pictures like Lord of Illusions, Down Periscope, Chain Reaction, and Jingle All the Way to high-design projects such as Alien 4: Resurrection, The X Files feature film, The Siege, the 2001 version of Planet of the Apes, I, Robot, Daredevil, Elektra, Fantastic 4, and all three X-Men features. He has literally become the sound voice of Marvel Comics for 20th Century Fox.

“How can anyone who loves sound not be excited about the potential creation of unknown and other worldly characters? It is a dream come true! We should all strive to be so lucky,” he said.

John understands exactly how to steer difficult, and often highly politically “hot-to-handle,” projects through the ever-changing, never-locked-picture realm of high budgets, fast schedules, and high expectations. John adds:

Richard Anderson

Supervising sound editor/sound designer Richard Anderson actually started his career as a picture editor, but in the late 1970s became the supervising sound editor at Gomillion Sound, overseeing numerous projects of all sorts and helming several Roger Corman classics, such as Ron Howard's first directorial effort, Grand Theft Auto. A couple of years later Richard won the Academy Award, along with Ben Burtt, as co-supervising sound editors for Best Sound Effect Editing for Raiders of the Lost Ark.

Figure 12.6 Richard Anderson sitting in front of the giant server that feeds all of the work-stations with instant access to one of the most powerful sound libraries in the business.

Richard teamed up with Stephen Hunter Flick as they worked together on projects such as Star Trek: The Motion Picture, Final Countdown, Oliver Stone's The Hand, Poltergeist, 48 Hours, Under Fire, and many others.

As one of the best role models that I could think of for those who would aspire to enter the field of entertainment sound, Richard Anderson's personal work habits, ethics, and superb style of stage etiquette and leadership are extraordinarily evident as he supervised such projects as 2010, Predator, Beetle Juice, Edward Scissorhands, The Lion King, Being John Malkovich, Dante's Peak, and more recently Madagascar, Shark Tale, and Over the Hedge. But perhaps one of Richard's more satisfying efforts was in the field of audio restoration for the director's cut version of David Lean's Lawrence of Arabia.

Audio restoration takes two forms. One is resurrecting and expanding a film to the version that a director had originally intended, which has become a very popular process in recent years. The other is cleaning up an existing historic soundtrack and bringing it back to the way it sounded when it was first mixed: removing optical crackle, hiss, transfer ticks, and anomalies that include, but are not limited to, nasty hiss presence due to sloppy copies, made from copies, made from copies, rather than going back to the original source.

Stephen Hunter Flick

Supervising sound editor Stephen Hunter Flick started his career in the late 1970s, a veteran of Roger Corman's Deathsport, which happened to be my first supervising sound editor's job and where I first met Stephen. He soon teamed up with Richard Anderson and helped tackle numerous robustly sound designed projects, serving as supervising sound editor on no less than Pulp Fiction, Apollo 13, Twister, Predator 2, Starship Troopers, Spider-Man, Terminator 3: Rise of the Machines, Zathura: A Space Adventure, and many others. In addition Stephen has won two Academy Awards for Best Sound Effect Editing for two action-driven features, Robocop and Speed.

Figure 12.7 Stephen Hunter Flick taking a break from organizing a project in his cutting rooms on the Sony Lot in Culver City.

Having worked with Stephen from time to time on numerous projects, I would also encourage the reader to learn from Stephen's leadership techniques. Many supervising sound editors, when faced with conflict or the giant finger-pointing-of-fault that often arises during the postproduction process, will sacrifice their editors to save their own skin as they are first and foremost afraid of losing a client, or worse, having a picture pulled from their hands.

Stephen has, time and time again, demonstrated his leadership character to protect his talent. He is the supervising sound editor—he is responsible, which means he is responsible. If his team members make mistakes or have problems, he does not sacrifice them up to protect his own skin. He will deal with the problem within “the family,” adopting a more basic and mature wisdom that comes from years of veteran know-how, rather than finding the guilty or throwing a scapegoat to the clients—just fix and solve the problem. He has earned great respect and loyalty from hundreds of craftspeople who have worked with him and for him over the past 30 years.

I was in between pictures at my own editorial shop when Stephen asked if I would come over to Weddington to help cut sound effects on Predator 2 for him. Several days later, John Dunn, another sound effects editor, asked if I could bring some of my weapon effects to the opening shootout in reel 1. I brought a compilation DAT the following day with several gun effects I thought would contribute toward the effort.

Just after lunch, Flick burst into my room (as is his habit, earning him the affectionate nickname of “Tsunami”) and demanded to know, “How come your guns are bigger than mine?!”

“I never said my guns are bigger than yours, Steve.”

Steve shrugged. “I mean, I know I have big guns, but yours are—dangerous!”

With that, he whirled and disappeared down the hall just as suddenly as he had appeared. I sat in stunned aftermath, pondering his description of “dangerous.” After due consideration, I agreed. The style by which I set my microphones up when I record weapon fire, and the combinations of elements if I editorially manufacture weapon fire to create audio events that are supposed to scare and frighten, have a “bite” to them.

Gunfire is an in-your-face crack! Big guns are not made by pouring tons of low-end frequency into the audio event. Low-end does not have any punch or bite. Low-end is fun, when used appropriately, but the upper mid-range and high-end bite with a low-end under bed will bring the weapon to life. Dangerous sounds are not heard at arm's length where it is safe, but in your face. They are almost close enough to reach out and touch you. Distance, what we call “proximity,” triggers your subconscious emotional responses to determine the danger.

Figure 12.8 The “Mauser rifle” Pro Tools session.

I liken blending multiple sound cues together in a new design to being a choir director. Designing a sound event is exactly like mixing and moving the various voices of the choir to create a new vocal event with richness and body. I think of each sound as being soprano, alto, tenor, or bass. Putting basses together will not deliver a cutting punch and a gutsy depth of low end. All I will get is muddy low-end with no cutting punch!

The Pro Tools session shown in Figure 12.8 is a rifle combination I put together for the 1998 action-adventure film Legionnaire, a circa 1925 period film. We had several thousand rifle shots in the battle scenes; for each, I painstakingly cut 4 stereo pairs of sounds that made up the basic signature of a single 7.62 Mauser rifle shot. The reason is simple. Recording a gunshot with all the spectrum characteristics envisioned in a single recording by the supervising sound editor is impossible. Choice of microphone, placement of microphone, selection of recording medium—all these factors determine the voice of the rifle report.

Listen to the individual cues and the combination mix of the “designed” 7.62 Mauser rifle shot on the DVD provided with this book.

As an experienced sound designer, I review recordings at my disposal and choose those cues that comprise the soprano, alto, tenor, and bass of the performance. I may slow down or speed up one or more of the cues. I may pitch-shift or alter the equalization, as I deem necessary. I then put them together, very carefully lining up the leading edge of each discharge as accurately as I can (to well within 1/100th of a second), then I play them together as one new voice. I may need to lower the tenor or raise the alto, or I may discover that the soprano is not working and that I must choose a new soprano element.
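The lining-up and balancing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Pro Tools workflow itself: the function names, the threshold used to find the leading edge, the gain values, and the tiny made-up sample lists are all hypothetical stand-ins for real recordings. For scale, at an assumed 48 kHz sample rate 1/100th of a second is 480 samples, so lining cues up to the sample is comfortably inside that tolerance.

```python
def leading_edge(samples, threshold=0.1):
    """Return the index of the first sample whose magnitude reaches threshold."""
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            return i
    return 0  # no transient found; treat the start as the edge


def align_and_mix(layers, gains, threshold=0.1):
    """Shift each layer so all leading edges coincide, then sum with per-layer gain."""
    edges = [leading_edge(layer, threshold) for layer in layers]
    latest = max(edges)
    length = max(latest - e + len(layer) for e, layer in zip(edges, layers))
    mix = [0.0] * length
    for layer, edge, gain in zip(layers, edges, gains):
        offset = latest - edge  # silence padded in front of this layer
        for i, s in enumerate(layer):
            mix[offset + i] += gain * s
    return mix


# Toy "voices" (hypothetical data): the discharge starts at a different
# sample in each recording.
soprano = [0.0, 0.0, 0.9, 0.3]
alto = [0.0, 0.8, 0.4]
tenor = [0.7, 0.5, 0.2]
bass = [0.0, 0.0, 0.0, 0.6, 0.6]

# Balance the choir: full soprano bite, bass kept as an under bed.
shot = align_and_mix([soprano, alto, tenor, bass], gains=[1.0, 0.5, 0.5, 0.25])
```

Changing a value in `gains` is the code equivalent of lowering the tenor or raising the alto; swapping one list for another is choosing a new soprano.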

If possible, I will not commit the mixing of these elements at the sound editorial stage. After consulting with the head re-recording mixer and considering the predubbing schedule, I will decide whether we can afford cutting the elements abreast and leaving the actual mixing to the sound effects mixer during predubbing. If the budget of the postproduction sound job is too small and the sound effects predubbing too short (or nonexistent), I will mix the elements together prior to the sound editorial phase, thereby throwing the die of commitment to how the individual elements are balanced together in a new single stereo pair.

The same goes for rifle bolt actions. I may have the exact recordings of the weapon used in the action sequence in the movie. When I cut them to sync, I play them against the rest of the tracks. The bolt actions played by themselves sound great, but now they are mixed with the multitude of other sounds present. They just do not have the same punch or characterization as when they stood alone. As a sound designer, I must pull other material, creating the illusion and perception of historical audio accuracy, but I must do it by factoring in the involvement of the other sounds of the battle. Their own character and frequency signatures will have a profound influence on the sound I am trying to create.

This issue was demonstrated on Escape from New York. During sound effect predubs, the picture editor nearly dumped the helicopter skid impacts I had made for the Hueys upon descent and landing in the streets of New York. Played by themselves, they sounded extremely loud and sharp. The experienced, veteran sound effect mixer, Gregg Landaker, advised the picture editor that it was not necessary or wise to dump the effects at that time; let them stay as they were in a separate set of channels because they could be easily dumped later if not wanted.

Later, as we rehearsed the sequence for the final mix, it became apparent that Gregg's advice not to make a hasty decision paid off. Now that the heavy pounding of the Huey helicopter blades filled the theatre along with the haunting siren-like score, what had sounded like huge and ungainly metal impacts in the individual predub now became a gentle, even subtle, helicopter skid touchdown on asphalt.

WHAT MAKES EXPLOSIONS BIG

For years following Christine, people constantly asked about the magic I used to bring such huge explosions to the screen. Remember, Christine was mixed before digital technology, when we still worked to an 85-dB maximum. The simple answer should carry your design thinking into areas well beyond explosions. I see sound editors laying on all kinds of low-end or adding shotgun blasts into the explosion combination, and certainly a nice low-end wallop is cool and necessary, but it isn't dangerous. All it does is muddy it up. What makes an explosion big and dangerous is not the boom, but the debris thrown around.

I first realized this while watching footage of military C4 explosives igniting. Smokeless and seemingly nothing as a visual entity unto themselves, they wreak havoc, tearing apart trees, vehicles, masonry—the debris makes C4 so visually awesome. The same is true with sound. Go back and listen to the sequence in reel 7 of Christine, the sequence where the gas station blows up. Listen to the glass debris flying out the window, the variations of metal, oil cans, crowbars, tires, tools that come flying out of the service bay. Listen to the metal sidings of the gas pumps flying up and impacting the light overhang atop the fueling areas. Debris is the key to danger. Prepare tracks so that the re-recording mixer can pan debris cues into the surround channels, bringing the audience into the action, rather than allowing it to watch the scene at a safe distance.

THE SATISFACTION OF SUBTLETY

Sound design often calls to mind the big, high-profile audio events that serve as landmarks in a film. For every stand-out moment, however, dozens of other equally important moments designate sound design as part of the figurative chorus line. These moments are not solo events, but supportive and transparent performances that enhance the storytelling continuity of the film.

Undoubtedly, my reputation is one of action sound effects. I freely admit that I enjoy designing the hardware and firepower and wrath of nature; yet some of my most satisfying creations have been the little things that hardly are noticed: the special gust of wind through the hair of the hero in the night desert, the special seat compression with a taste of spring action as a passenger swings into a car seat and settles in, the delicacy of a slow door latch as a child timidly enters the master bedroom—little golden touches that fortify and sweetly satisfy the idea of design.

REALITY VS. ENTERTAINMENT

One of the first requirements for the successful achievement of a soundtrack is becoming audio educated with the world. I know that advice sounds naive and obvious, but it is not. Listen and observe life around you. Listen to the components of sound and come to understand how things work. Learn the difference between a Rolls-Royce Merlin engine of a P-51 and the Pratt & Whitney of an AT-6, the difference between a hammer being cocked on a .38 service revolver and a hammer being cocked on a .357 Smith & Wesson. What precise audio movements separate the actions of a hundred-ton metal press? What is the audio difference between a grass fire and an oil fire? Between pine burning and oak? What is the difference between a rope swish and a wire or dowel swish? How can one distinguish blade impacts of a fencing foil from a cutlass or a saber? What kind of metallic ring-off would go with each?

A supervising sound editor I knew was thrown off a picture because he did not know what a Ford Cobra was, and insisted that his effect editors cut sound cues from an English sports car series. It is not necessary to be a walking encyclopedia that can regurgitate information about the South American Kerrington mating call or the rate of fire of a BAR (that is, if you even know what a BAR is). What is important is that you diligently do research so that you can walk onto the re-recording stage with the proper material.

I remember predubbing a helicopter warming up on stage. Suddenly the engine wind-up bumped hard with a burst from the turbine. The sound effects mixer quickly tried to duck it out, as he thought it was a bad sound cut on my part. The director immediately corrected him; indeed that was absolutely the right sound at exactly the right spot. The mixer asked how I knew where to cut the turbine burst. I told him that, in studying the shot frame by frame, I had noticed two frames of the exhaust that were a shade lighter than the rest. It seemed to me that that was where the turbo had kicked in.

Reality, however, is not necessarily the focus of sound design. There is reality, and there is the perception of reality. We were rehearsing for the final mix of reel 3 of Escape from New York, where the Huey helicopters descend and land in an attempt to find the president. I had been working very long hours and was exhausted. After I had dozed off and fallen out of my chair several nights before, Don Rogers had supplied a roll-around couch for me to sleep on during the mixing process. The picture editor paced behind the mixers as they rehearsed the reel. He raised his hand for them to stop and announced that he was missing a “descending” sound.

Gregg Landaker and Bill Varney tried to determine to what element of sound the picture editor was referring. The exact components of the helicopters were all there. From an authenticity point of view, nothing was missing.

I rolled over and raised my hand. “Roll back to 80 feet, take effects 14 off the line. Take the feed and the take-up reels off the machine and switch them; then put on a 3-track head stack. Leaving effects 14 off the line, roll back to this shot, then place effects 14 on the line. I think you will get the desired effect.”

Everybody turned to look at me with disbelief, certain I was simply talking in my sleep. Bill Varney pressed the talkback button on the mixing console so that the recordist in the machine room could hear. “Would you please repeat that, Mr. Yewdall?”

I repeated the instructions. The picture editor had heard enough. “What is that supposed to accomplish?”

I explained. “At 80 feet is where Lee Van Cleef announces he is ‘going in.’ The next shot is the fleet of Hueys warming up and taking off. If you check the cue sheets, I think that you will see that effects 14 has a wind-up from a cold start. Played forward, it is a jet whine ascending with no blade rotation. If we play that track over the third channel position of a 3-channel head stack, which means the track is really playing backward while we are rolling forward, I think we will achieve a jet whine descending.” Listen to the sound cue of “Huey helicopter descending” on the DVD provided with this book.

From then on, more movies used helicopter jet whine warm-ups (prior to blade rotation) both forward and reversed to sell the action of helicopters rising or descending, as the visual action dictated. It is not reality, but it is the entertaining perception of reality.
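For readers working digitally, the head-stack trick amounts to reversing the order of the samples. The sketch below is an illustration only: it synthesizes a rising sine sweep as a hypothetical stand-in for the turbine wind-up (the sample rate and frequencies are arbitrary assumptions), reverses it, and uses a crude zero-crossing count to confirm that the pitch now falls instead of rising.

```python
import math


def wind_up(duration_s=1.0, rate=8000, f_start=200.0, f_end=1200.0):
    """Synthesize a rising sine sweep as a stand-in for a turbine wind-up."""
    n = int(duration_s * rate)
    samples, phase = [], 0.0
    for i in range(n):
        freq = f_start + (f_end - f_start) * i / n  # pitch climbs linearly
        phase += 2 * math.pi * freq / rate
        samples.append(math.sin(phase))
    return samples


def zero_crossings(samples):
    """Count sign changes, a rough proxy for pitch."""
    return sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))


forward = wind_up()          # jet whine ascending
backward = forward[::-1]     # same cue played end to start: whine descending
half = len(forward) // 2

# Forward, the second half is higher pitched than the first;
# backward, the relationship flips.
rises = zero_crossings(forward[:half]) < zero_crossings(forward[half:])
falls = zero_crossings(backward[:half]) > zero_crossings(backward[half:])
```

Nothing about the recording changes except the direction of travel, which is why the reversed cue still sounds like the same machine.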

In today's postproduction evolution, the tasks of equalization and signal processing, once considered sacred ground for the re-recording mixers, increasingly have become the working domain of sound designers and supervising sound editors. Accepting those chores, however, also brings about certain responsibilities and ramifications should your work be inappropriate and cost the client additional budget dollars to unravel what you have done.

If you twist those signal-processing knobs, then know the accompanying burden of responsibility. Experienced supervising sound editors have years of “combat” on the re-recording mix stage to know what they and their team members should do, and what should be left for re-recording mixers. I liken my work to getting the rough diamond into shape. If I overpolish the material, I risk trapping the mixer with an audio cue that he or she may not be able to manipulate and appropriately use. Do not polish sound to a point that a mixer has no maneuvering room. You will thwart a collaborative relationship with the mixer.

The best sound design is done with a sable brush, not with a 10-pound sledgehammer. If your sound design distracts the audience from the story, you have failed. If your sound design works in concert with and elevates the action to a new level, you have succeeded. It is just that simple.

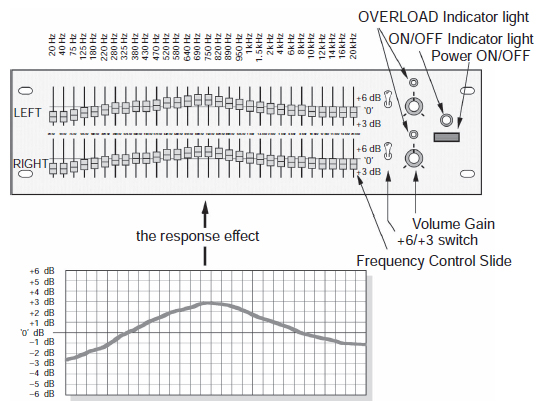

Sound design does not mean that you have to have a workstation stuffed full of fancy gear and complex software. One of my favorite signal-processing devices is the simple Klark-Teknik DN 30/30, a 30-band graphic equalizer (see Figure 12.9). Taking a common but carefully chosen wood flame steady, I used the equalizer in real time, undulating the individual sliders wildly in waves to cause the “mushrooming” fireball sensation.

Listen to the audio cue on the DVD provided with this book to hear the before and after of “mushrooming flames.”

“WORLDIZING” SOUND

Charles Maynes wrote a perfect article on the subject of “worldizing” of sound and so with permission from Charles Maynes and the Editors Guild Magazine (in which it appeared in April 2004), we are proud to share it with you here.

“WORLDIZING: Take Studio Recordings into the Field to Make Them Sound Organic”

By Charles Maynes

For some of us in sound and music circles, “worldizing” has long held a special sense of the exotic. Worldizing is the act of playing back a recording in a real-world environment, allowing the sound to react to that environment, and then re-recording it so that the properties of the environment become part of the newly recorded material. The concept is simple, but its execution can be remarkably complex.

Figure 12.9 The Klark-Teknik DN 30/30 graphic equalizer. If you turn the volume gain fully to the right (which equals a +6 dB level) and flip the +6/+3 dB switch up into the +6 position, this gives you a total of +12 dB or -12 dB of “gain” or “cut.” Note how the settings of the frequency faders affect the response curve.

Figure 12.10 Re-recording pre-recorded sounds under freeway overpasses in the “concrete jungle” of the city. (Photo by Alec Boehm.)

In Walter Murch's superb essay on the reconstruction of the Orson Welles film Touch of Evil, he quotes from a 58-page memo that Welles wrote to Universal to lay out his vision for the movie. At one point, Welles describes how he wants to treat the music during a scene between Janet Leigh and Akim Tamiroff, and he offers as elegant a description of worldizing as I can think of:

The music itself should be skillfully played but it will not be enough, in doing the final sound mixing, to run this track through an echo chamber with a certain amount of filter. To get the effect we're looking for, it is absolutely vital that this music be played back through a cheap horn in the alley outside the sound building. After this is recorded, it can then be loused up even further in the process of re-recording. But a tiny exterior horn is absolutely necessary, and since it does not represent very much in the way of money, I feel justified in insisting upon this, as the result will be really worth it.

At the time, Universal did not revise Touch of Evil according to these notes, but the movie's recent reconstruction incorporates these ideas. Worldizing is now a technique that has been with us for some time and will likely be used and refined for years to come.

WALTER MURCH AND WORLDIZING

The practice of worldizing—and, I believe, the term itself—started with Walter Murch, who has used the technique masterfully in many films. However, it has received most of its notoriety from his use of it in American Graffiti and in the granddaddy of the modern war film, Apocalypse Now.

In American Graffiti, recordings of the Wolfman Jack radio show were played through practical car radios and re-recorded with both stationary and moving microphones to recreate the ever-changing quality of the multiple moving speaker sources the cars were providing. On the dub stage, certain channels were mechanically delayed to simulate echoes of the sound bouncing off the buildings. All of these channels, in addition to a dry track of the source, were manipulated in the mix to provide the compelling street-cruising ambience of the film.

In Apocalypse Now, the most obvious use of this technique was on the helicopter communications ADR, which was re-recorded through actual military radios in a special isolation box. The ground-breaking result has been copied on many occasions.

SOUND EFFECTS

In the previous examples, worldizing was used for dialogue or music, but it has also been used very effectively for sound effects. One of my personal favorite applications of the technique was on the film GATTACA, which required dramatically convincing electric car sounds. Supervising sound editor Richard King and his crew devised a novel method to realize these sounds by installing a speaker system on the roof of a car, so that they could play back various sounds created for the vehicles.

According to King, the sounds were made up of recordings that ranged from mechanical sources such as surgical saws and electric motors to propane blasts and animal and human screams. In the studio, King created pads of these sounds, which were then used for playback.

Richard and Patricio Libenson recorded a variety of vehicle maneuvers, with the prepared sounds being played through the car-mounted speakers and re-recorded by microphones. They recorded drive-bys, turns, and other moves to give the sounds a natural acoustic perspective. As King points out, one of the most attractive aspects of worldizing is the way built-in sonic anomalies happen as sound travels through the atmosphere.

King also used worldizing to create a believable sound for a literal rain of frogs in the film Magnolia. To simulate the sound of frogs’ bodies falling from the sky, King and recordist Eric Potter began by taking pieces of chicken and ham into an abandoned house and recording their impacts on a variety of surfaces, including windows, walls and roofs. Using this source material, King created a continuous bed of impacts that could then be played back and re-recorded. For the recording environment, King chose a canyon, where he and Potter set up some distant mics to provide a somewhat “softened” focus to the source material. A loudspeaker system projected the recordings across the canyon to impart acoustic movement.

In addition to this, King and Potter moved the speakers during the recordings to make the signal go on and off axis. This provided an additional degree of acoustic variation. King and Potter created other tracks by mounting the speakers on a truck and driving around, which provided believable Doppler effects for the shifting perspectives of the sequence.

Another interesting application was Gary Rydstrom's treatment of the ocean sounds during the D-Day landing sequence in Saving Private Ryan, where he used tubes and other acoustic devices to treat the waves during the disorienting Omaha Beach sequence.

MYSTERY MEN

Charles Maynes contributed the following thoughts:

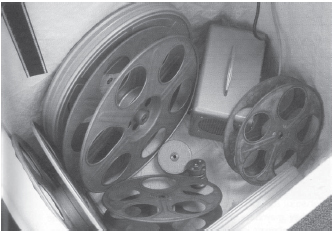

Figure 12.11 Charles Maynes found that a squawk box in a trim bin creates a distinctive sound for the doomsday machine in Mystery Men. (Photo by Steven Cohen.)

I used worldizing in the film Mystery Men, a superhero comedy that required a distinctive sound for a doomsday device called the “psycho-defraculator.” The device could render time and space in particularly unpleasant ways, yet it was home-made and had to have a somewhat rickety character. I was after a sound like the famous “Inertia Starter” (the Tasmanian Devil's sound) from Warner Bros. cartoons, but I also wanted to give the sense that the machine was always on the verge of self-destruction.

We started by generating a number of synthesized tones, which conveyed the machine's ever-accelerating character. After finding a satisfying basic sound, we needed to find a suitable way to give the impression of impending collapse. By exhaustively trolling through the sound library, I found various recordings of junky cars and broken machines, and I began to combine them with the synthetic tones. I spent a considerable amount of time vari-speeding the elements but was never really satisfied with the result.

Since this was in 1999, we still had a film bench nearby, with a sync block and squawk box. I remembered how gnarly that squawk box speaker sounded and thought, worldize the synth, that's the answer! So I took the squawk box into my editing room, plugged the synthesizer into it and was quickly satisfied with the distortion it provided.

Then I realized that using the squawk box inside a trim bin might be even better. So in came the trim bin, which became home to the speaker. As I listened, I noticed that the bin was vibrating at certain pitches and immediately tried to find which were the best ones to work with. After some trial and error, I started to put various bits of metal and split reels into the bin and noticed that it started really making a racket when the volume was turned up. I had arrived at my sound design destination. The compelling thing about this rig was that I changed the frequency of the synthesizer so that different objects would vibrate and bang against one another, creating a symphony of destruction. The sound was simultaneously organic and synthetic and gave the feeling that the machine was about to vibrate itself to pieces, like a washing machine during the spin cycle, with all the fasteners holding it together removed.

IN THE FUTURE

Charles continued:

Traditionally, it has been difficult to impart the acoustic qualities of real-world locations to our sound recordings using signal processors and electronic tone shaping, but this may well be changing. A new wave of processors now appearing on the market use a digital process called “convolution” to precisely simulate natural reverb and ambience. Using an actual recording made in a particular space, they separate out the reverb and other acoustic attributes of the sound, then apply those to a new recording.

The source recordings are generally created with a sine wave sweep or an impulse, typically from a starter's pistol, which is fired in the space being sampled. Hardware devices incorporating this technology are available from both Yamaha and Sony; software convolution reverb for Apple-based digital audio workstations (including Digidesign Pro Tools, Steinberg Nuendo and Cubase, Apple Logic Audio and Mark of the Unicorn Digital Performer) is available from the Dutch company AudioEase.

While it seems as though this might be a Rosetta stone that could be used for matching ADR to production dialogue, there are still limitations to the technology. The main one is that the reverb impulse is being modeled on a single recorded moment in time, so the same reverb is applied to each sound being processed with the particular sample. However, the acoustic character of this process is significantly more natural than the digital reverbs we are accustomed to. This tool was used very effectively to process some of the ADR on Lord of the Rings to make it sound as though it had been recorded inside a metal helmet.
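The idea of applying a sampled space to a new recording can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the impulse response below is a made-up handful of numbers rather than a real recording of a room, the function name `convolve` is ours, and commercial processors use much faster FFT-based methods rather than this direct loop. What it does show is the limitation described above: one fixed impulse response is stamped onto every sample of the dry sound.

```python
def convolve(dry, impulse_response):
    """Direct convolution: each dry sample triggers a scaled copy
    of the impulse response, and the copies are summed."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


# Hypothetical impulse response: the direct sound followed by two
# decaying reflections (a real one would be thousands of samples long).
impulse_response = [1.0, 0.0, 0.5, 0.0, 0.25]

# Hypothetical dry recording: a click, then an inverted click.
dry = [1.0, 0.0, 0.0, -1.0]

wet = convolve(dry, impulse_response)
print(wet)
```

Feeding a single click (`[1.0]`) through `convolve` simply returns the impulse response itself, which is why firing a starter's pistol in a space is enough to capture its character.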

One of our goals as sound designers is to imbue our recordings with the physical imperfection of the real world, so that they will fit seamlessly into the world of our film. We want the control that the studio offers us, but we aim to create a sound that feels as natural as a location recording. Worldizing is one way to do this, and so are the new digital tools that help to simulate it. But sound editors are infinitely inventive. The fun of our job is to combine natural and artificial sounds in new ways to create things that no one has ever heard before!

Charles Maynes is a sound designer and supervising sound editor. His credits include Spider-Man, Twister, and U-571. Special thanks to Richard King, John Morris, Walter Murch, and Gary Rydstrom for their patient help with this article.

WORLDIZING DAYLIGHT

I think one of the more interesting applications of worldizing was the work done by David Whittaker, M.P.S.E., on the Sylvester Stallone action-adventure film Daylight. In the last part of the film, when the traffic tunnel started to fill up with water, the sound designing chores beckoned for a radical solution. After the sound editing process and premixing was complete on the scenes to be treated “underwater,” all the channels of the premixes were transferred to a DA-88 with time code. Whittaker tells about it:

We purchased a special underwater speaker, made by Electro-Voice. We took a portable mixing console, an amplifier, a water-proofable microphone, a microphone preamp, and two DA-88 machines—one to play back the edited material and the other one to re-record the new material onto. We did the work in my backyard where I have a large swimming pool—it's thirty-eight feet long by eighteen feet wide.

We chose a Countryman lavalier production mike, because it tends to be amazingly resistant to moisture. To waterproof it we slipped it into an unlubricated condom, sealing the open end with thin stiff wire twisted tight around the lavalier's cable. After some experimentation we settled on submerging the speaker about three feet down in the corner of one end, with the mike dropped into the water about the same distance down at the opposite corner of the pool, thus giving us 42 feet of water between the speaker and the microphone. We found we needed as much water as possible between the speaker and the microphone to get a strong effect. We literally hung the mike off a fishing pole—it looked pretty hilarious.
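The 42-foot figure is consistent with the pool's diagonal, assuming a rectangular pool and ignoring the small difference made by the three-foot depth at each end. A quick check of the arithmetic (the variable names are ours):

```python
import math

# Pool dimensions as given in the account above, in feet.
pool_length_ft = 38.0
pool_width_ft = 18.0

# Corner-to-corner distance across a rectangle (Pythagorean theorem).
diagonal_ft = math.hypot(pool_length_ft, pool_width_ft)
print(f"{diagonal_ft:.1f} feet")
```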

We then played back and re-recorded each channel one at a time, using the time code to keep the DA-88 machines in exact sync phase. The worldizing radically modified the original sounds. The low frequencies tended to disappear, and the high frequencies took on a very squirrely character, nothing like the way one hears in air.

The result became a predub pass unto itself; when we ran it at the re-recording mixing stage, we could play it against the “dry” original sound channels and control how “wet” and “murky” we wanted the end result. Actually, I think it was very effective, and it certainly always made me smile when I would go swimming in my pool after that.
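Playing the worldized pass against the dry original amounts to a blend between two synchronized channels. The sketch below is a simplified illustration, not a description of how the Daylight mix was actually done: the function `blend`, the single `wetness` control, and the sample values are all hypothetical, and on a real mixing stage the dry and wet channels would each have their own fader.

```python
def blend(dry, wet, wetness):
    """Linear mix of two time-aligned channels.
    wetness = 0.0 gives only the dry original,
    wetness = 1.0 gives only the worldized pass."""
    if not 0.0 <= wetness <= 1.0:
        raise ValueError("wetness must be between 0.0 and 1.0")
    return [(1.0 - wetness) * d + wetness * w for d, w in zip(dry, wet)]


# Hypothetical sample values for the same three moments in each channel.
dry = [1.0, -0.5, 0.25]
wet = [0.5, 0.0, -0.25]

half_murky = blend(dry, wet, 0.5)
print(half_murky)
```

The blend only works because both passes were locked to the same time code; if the worldized channel drifted even slightly, the two would smear against each other instead of combining.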

SOME FINAL ADVICE TO CONSIDER

I learned more than one valuable lesson on Escape from New York. As with magicians, never tell the client how you made the magic.

Escape from New York's budget was strained to the limit and the job was not done yet. The special effect shots had not been completed, and the budget could not bear the weight of being made at a traditional feature special effect shop. They decided to contract the work to Roger Corman's company, as he had acquired the original computer-tracking special effect camera rig that George Lucas had used on Star Wars. Corman was making a slew of special effect movies to amortize the cost of acquiring the equipment, in addition to offering special effect work to outside production companies, Escape from New York being one.

The first shots were delivered. John Carpenter (director), Debra Hill (producer), Todd Ramsay (picture editor), Dean Cundey (director of photography), Bob Kizer (special effects supervisor), and several members of the visual special effects team sat down in Projection “A” at Goldwyn to view the footage. It has been suggested that the quality expectation from the production team was not very high. After all, this was only Corman stuff, how good could it be?

The first shot flickered onto the screen—the point of view of Air Force One streaking over New York harbor at night, heading into the city just prior to impact. Carpenter, Hill, and Cundey were amazed by the high quality of the shot. After the lights came up, John asked the visual special effects team how it was done. The team members, proud and happy that Carpenter liked their work, blurted out, “Well, first we dumped black paint on the concrete floor, then we let it dry halfway. Then we took paint rollers and roughed it up, to give it the ‘wave’ effect of water at night. Then we made dozens of cardboard cut-outs of the buildings and cut out windows… .”

Carpenter's brow furrowed as he halted their explanation. He pressed the talk-back button to projection. “Roll it again, please.”

Now they viewed the footage again, only with the discerning eye of foreknowledge of the illusion's creation. Now they could see the imperfections, could see how it was done. The reel ran out and the lights came up.

Carpenter thought a moment, and then turned to the visual special effects team. “No—I am going with what I saw the first time. You fooled me. If I ever ask you how you did something again, don't tell me.”

The lesson of that afternoon was burned indelibly into my brain and I have referred to it time and time again in dealing with clients who want to know how something was made. Do not destroy the illusion and magic of your creation by revealing the secrets of how you made it, especially to your clients.

Here endeth the lesson. Now go forth ye inspired and create great audio masterpieces so that the world may dream.