As you know, natural language processing has many applications:

To perform these tasks, we need to calculate features such as TF-IDF scores (refer to Stemming, lemmatizing, filtering, and TF-IDF scores). Especially, with large datasets, it makes sense to store the features for easy processing. Search engines use inverted indices, which map words to web pages. This is similar to the association table pattern (refer to Implementing association tables).

We will implement the association table pattern with three tables. One table contains the words, another will implement the association table pattern with three tables. One table contains the words, another table holds the information about the documents, and the third table links the other two tables as shown in the following schema:

The program is in the terms_database.py file in this book's code bundle:

- The imports are as follows:

from sqlalchemy.ext.declarative import declarative_base from sqlalchemy import Column from sqlalchemy import ForeignKey from sqlalchemy import Float from sqlalchemy import Integer from sqlalchemy import String from sqlalchemy.orm import backref from sqlalchemy.orm import relationship import os import dautil as dl from nltk.corpus import brown from sqlalchemy import func import ch8util Base = declarative_base()

- Define the following class for the text documents:

class Text(Base): __tablename__ = 'texts' id = Column(Integer, primary_key=True) file = Column(String, nullable=False, unique=True) terms = relationship('Term', secondary='text_terms') def __repr__(self): return "Id=%d file=%s" % (self.id, self.file) - Define the following class for the words in the articles:

class Term(Base): __tablename__ = 'terms' id = Column(Integer, primary_key=True) word = Column(String, nullable=False, unique=True) def __repr__(self): return "Id=%d word=%s" % (self.id, self.word) - Define the following class for the association of documents and words:

class TextTerm(Base): __tablename__ = 'text_terms' text_id = Column(Integer, ForeignKey('texts.id'), primary_key=True) term_id = Column(Integer, ForeignKey('terms.id'), primary_key=True) tf_idf = Column(Float) text = relationship('Text', backref=backref('term_assoc')) term = relationship('Term', backref=backref('text_assoc')) def __repr__(self): return "text_id=%s term_id=%s" % (self.text_id, self.term_id) - Define the following function to insert entries in the texts table:

def populate_texts(session): if dl.db.not_empty(session, Text): # Cowardly refusing to continue return fids = brown.fileids(categories='news') for fid in fids: session.add(Text(file=fid)) session.commit() - Define the following function to insert entries in the terms table:

def populate_terms(session): if dl.db.not_empty(session, Term): # Cowardly refusing to continue return terms = ch8util.load_terms() for term in terms: session.add(Term(word=term)) session.commit() - Define the following function to insert entries in the association table:

def populate_text_terms(session): if dl.db.not_empty(session, TextTerm): # Cowardly refusing to continue return text_ids = dl.collect.flatten(session.query(Text.id).all()) term_ids = dl.collect.flatten(session.query(Term.id).all()) tfidf = ch8util.load_tfidf() logger = dl.log_api.conf_logger(__name__) for text_id, row, in zip(text_ids, tfidf): logger.info('Processing {}'.format(text_id)) arr = row.toarray()[0] session.get_bind().execute( TextTerm.__table__.insert(), [{'text_id': text_id, 'term_id': term_id, 'tf_idf': arr[i]} for i, term_id in enumerate(term_ids) if arr[i] > 0] ) session.commit() - Define the following function to perform a search with keywords:

def search(session, keywords): terms = keywords.split() fsum = func.sum(TextTerm.tf_idf) return session.query(TextTerm.text_id, fsum). join(Term, TextTerm). filter(Term.word.in_(terms)). group_by(TextTerm.text_id). order_by(fsum.desc()).all() - Call the functions we defined with the following code:

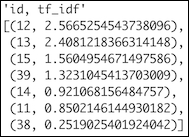

if __name__ == "__main__": dbname = os.path.join(dl.data.get_data_dir(), 'news_terms.db') session = dl.db.create_session(dbname, Base) populate_texts(session) populate_terms(session) populate_text_terms(session) printer = dl.log_api.Printer() printer.print('id, tf_idf', search(session, 'baseball game'))

We performed a search for "baseball game." Refer to the following screenshot for the end result (file IDs and TF-IDF sums):

We stored TF-IDF scores using the association table database pattern. As an example of using the database, we queried for "baseball game." The query looked up the IDs of both words in the terms table and then summed the related TF-IDF scores in the association table. The sums serve as a relevancy score. Then, we presented the corresponding file IDs with relevancy scores in descending order. If you are showing the result to end users, you will have to do at least one more query to replace the file IDs with filenames. As it happens, the files we are analyzing are named ca01 to ca44, so the query is not strictly necessary.

Because I had the TF-IDF scores already, I found it convenient to store them directly. However, you can also decide to store the term frequency and inverse document frequency and derive the TF-IDF scores from those. You only need to determine the term frequency for each new document and the words in the document. All the inverse document frequencies need to be updated when documents are added or removed. However, establishing a link via the association table is already enough to calculate the term frequency, inverse document frequency, and TF-IDF scores.

- The Wikipedia page about search engine indexing at https://en.wikipedia.org/wiki/Search_engine_indexing (retrieved October 2015)