A great deal of time and effort is spent developing the AI for the average modern game. Years ago, AI was often an afterthought for a single gameplay programmer, but these days most game projects employ at least one dedicated AI specialist, and entire AI teams are becoming increasingly common. At the same time, more and more developers are coming to realize that, even in multiplayer games, AI is not only a critical component for providing fun gameplay, but it is also essential if we are going to continue to increase the sense of realism and believability that were previously the domain of physics and rendering. It does no good to have a brilliantly rendered game with true-to-life physics if your characters feel like cardboard cutouts or zombie robots.

Given this increase in team size, the increasing prominence of AI in the success or failure of a game, and the inevitable balancing and feature creep that occur toward the end of every project, it behooves us to search for AI techniques that enable fast implementation, shared conventions between team members, and easy modification. Toward that end, this gem describes methods for applying patterns to our AI in such a way as to allow it to be built, tuned, and extended in a modular fashion.

The key insight that drives the entirety of this work is that the decisions made by the AI can typically be broken down into much smaller considerations, individual tests used in combination to make a single decision. The same considerations can apply to many different decisions. Furthermore, the evaluation of those considerations can be performed independent of the larger decision, and then the results can be combined as necessary into a final decision. Thus, we can implement the logic for each consideration once, test it extensively, and then reuse that logic throughout our AI.

None of the core principles described here are new. They can be found throughout software engineering and academic AI in a variety of forms. However, all too often we game programmers rush into building code, trying to solve our specific problem of the day and get the product out the door, without taking a step back and thinking about how to structure that code well. With some thought and organization, it could be made easier to change, extend, and even reuse in bits and pieces on future projects. Taking the time to do that would pay off both in the short run, making life easier (and thus improving the final result) for the current title, and in the long run, as more AI code is carried forward from game to game.

Let’s begin with a real-world example that illustrates the general ideas behind this work. Imagine that you have just taken a new job as an AI engineer at Middle of Nowhere Games, and you are in the process of searching for an apartment in some faraway city. You might visit a variety of candidates, write down a list of the advantages and disadvantages of each, and then use that list to guide your final decision.

For an apartment in a large complex near a busy shopping area, for example, your list might look something like this:

606 Automobile Way, Apt 316

| Pros | Cons |
|---|---|
| Close to work | Great view…of a used car lot |
| Easy highway access | No off-street parking |
| Convenient shopping district | Highway noise |

Another apartment, located in the attic of a kindly old lady’s country house, might have a wholly different list:

10-B Placid Avenue

| Pros | Cons |
|---|---|
| Low rent | 45-minute commute |
| Nearby woods, biking trails | No shopping nearby |
| Electricity and water included | Thin walls, landlady downstairs |

These lists clearly reflect the decision-maker’s personal taste—in fact, it seems likely that if two people were to make lists for the same apartment, their lists would have little in common. It is not the actual decision being made that’s important, but rather the process being used to arrive at that decision. That is to say, given a large decision (Where should I live for the next several years of my life?), this process breaks that decision down into a number of independent considerations, each of which can be evaluated in isolation. Only after each consideration has been properly evaluated do we tackle the larger decision.

There is a relatively small set of common considerations (such as the rent, ease of commute, size of the apartment, aesthetics of the apartment and surrounding environment, and so forth) that apartment shoppers commonly take into account. From an AI point of view, if we can encode those considerations, we can then share the logic for them from actor to actor, and in some cases even from decision to decision.

As an example of the latter advantage, imagine that we wanted to select a location for a picnic. Several of the considerations used for apartments—such as overall cost, the aesthetics of the surrounding environment, and the length of the drive to get there—would also be used when picking a picnic spot. Again, if it were an NPC making this decision, then cleverly designed code could be shared in a modular way and could perhaps even be configured by a designer once the initial work of implementing the overall architecture and individual considerations was complete.

Another advantage of this type of approach is that it supports extensibility. For example, imagine that after visiting several apartments, we came across one that had a hot tub and pool or a tennis court. Previously, we had not even considered the availability of these features. However, we can now add this consideration to our list of pros and cons without disturbing the remainder of our logic.

At this point we have made a number of grandiose claims—hopefully sufficient to pique the reader’s interest—but we clearly have some practical problems as well. What is described above is a wholly human approach to decision-making, not easily replicated in code. Our next step, then, should be to see whether we can apply a similar approach to making relatively simple decisions, such as those that rely on a single yes or no answer.

Many common architectures rely on simple Boolean logic at their core. For example, from a functional point of view, finite state machines have a Boolean decision-maker attached to each transition, determining whether to take that transition given the current situation. Behavior trees navigate the tree through a series of Boolean decisions (take this branch or don’t take this branch) until they arrive at an action they want to take. Rule-based architectures consist of a series of “rules,” each of which is a Boolean decision stating whether or not to execute the associated action. And so forth.

To build a pattern-based architecture for our AI, we first need to define a shared interface for our considerations and then decide how to combine the results of their evaluation into a final decision. For Boolean decisions, consider the following interface:

```cpp
class DecisionContext;  // Game state needed to make a decision
class DataNode;         // Data that configures a consideration

class IConsiderationBoolean
{
public:
    IConsiderationBoolean() {}
    virtual ~IConsiderationBoolean() {}

    // Evaluate this consideration
    virtual bool Evaluate(const DecisionContext& context) = 0;

    // Load the data that controls our decisions
    virtual void LoadData(const DataNode& node) = 0;
};
```

Every consideration will inherit from this interface and therefore will be required to specify the Evaluate() and LoadData() methods.

Evaluate() takes a DecisionContext as its only argument. The context contains whatever information might be needed to make a decision. For example, it might include the current game time, a pointer to the actor being controlled, a pointer to the game world, and so on. Alternatively, it might simply be a pointer to the actor’s knowledge base, where beliefs about the world are stored. Regardless, Evaluate() processes the state of the world (as contained in the context) and then returns true if execution is approved or false otherwise.

The other mandatory function is LoadData(). The data being loaded specifies how the decision should be made. We will go into more detail on this issue later in this gem.

Games are rife with examples of considerations that can be encoded in this way. For example, we might have a health consideration that will only allow an action to be taken if a character’s hit points are in a specified range. A time-of-day consideration might only allow an action to take place during the day (or at night or between noon and 1:00 p.m.). A cool-down consideration could prevent an action from being taken if it has been executed in the recent past.
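As a concrete illustration, here is a minimal sketch of a cool-down consideration built on the IConsiderationBoolean interface. The DataNode and DecisionContext definitions below are hypothetical stand-ins (a string-keyed table of tuning values, and a table of when each action type was last taken); a real engine would supply its own versions. The data keys "Action" and "Duration" are likewise assumptions.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for the engine's data node (e.g., a parsed XML element).
struct DataNode {
    std::map<std::string, float> floats;
    std::map<std::string, std::string> strings;
    float GetFloat(const std::string& key) const { return floats.at(key); }
    std::string GetString(const std::string& key) const { return strings.at(key); }
};

// Hypothetical stand-in for the decision context.
struct DecisionContext {
    float currentTime = 0.0f;
    // Time (in seconds) at which each action type was last executed.
    std::map<std::string, float> lastActionTime;
};

class IConsiderationBoolean {
public:
    virtual ~IConsiderationBoolean() {}
    virtual bool Evaluate(const DecisionContext& context) = 0;
    virtual void LoadData(const DataNode& node) = 0;
};

// Returns true only if at least m_Duration seconds have passed since the
// action named m_Action was last taken (or if it has never been taken).
class CsdrCoolDown : public IConsiderationBoolean {
public:
    void LoadData(const DataNode& node) override {
        m_Action = node.GetString("Action");
        m_Duration = node.GetFloat("Duration");
        assert(m_Duration >= 0.0f);
    }

    bool Evaluate(const DecisionContext& ctxt) override {
        auto it = ctxt.lastActionTime.find(m_Action);
        if (it == ctxt.lastActionTime.end())
            return true;  // Never taken, so no cool-down applies.
        return ctxt.currentTime - it->second >= m_Duration;
    }

private:
    std::string m_Action;
    float m_Duration = 0.0f;
};
```

The same class serves "two seconds since our last shot" and "five seconds since we last dodged"; only the data differs.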

The simplest approach for combining Boolean considerations into a final decision is to give each consideration veto power on the overall decision. That is, take the action being gated by the decision if and only if every consideration returns true. Obviously, more robust techniques can be implemented, up to and including full predicate logic, but this simple approach works well for a surprisingly large number of cases and has the advantage of being extremely simple to implement.
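The veto rule can be sketched as a decision object that owns a list of considerations and passes only if every one of them returns true. This is a minimal sketch assuming a simplified DecisionContext with just two fields; the Decision class and the two sample considerations (CsdrLineOfSight, CsdrPlayerAlive) are hypothetical names used for illustration.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical, minimal stand-ins for the engine's types.
struct DataNode {};
struct DecisionContext {
    bool hasLineOfSight = false;
    float playerHealth = 100.0f;
};

class IConsiderationBoolean {
public:
    virtual ~IConsiderationBoolean() {}
    virtual bool Evaluate(const DecisionContext& context) = 0;
    virtual void LoadData(const DataNode& node) = 0;
};

// A decision (for example, one FSM transition) gated by a list of
// considerations, each of which has veto power.
class Decision {
public:
    void AddConsideration(std::unique_ptr<IConsiderationBoolean> c) {
        assert(c != nullptr);
        m_Considerations.push_back(std::move(c));
    }

    // True only if every consideration returns true; stops at the first
    // veto. A decision with no considerations always passes.
    bool Evaluate(const DecisionContext& ctxt) const {
        for (const auto& c : m_Considerations)
            if (!c->Evaluate(ctxt))
                return false;
        return true;
    }

private:
    std::vector<std::unique_ptr<IConsiderationBoolean>> m_Considerations;
};

// Two trivial considerations for illustration; neither needs tuning data.
class CsdrLineOfSight : public IConsiderationBoolean {
public:
    bool Evaluate(const DecisionContext& c) override { return c.hasLineOfSight; }
    void LoadData(const DataNode&) override {}
};

class CsdrPlayerAlive : public IConsiderationBoolean {
public:
    bool Evaluate(const DecisionContext& c) override { return c.playerHealth > 0.0f; }
    void LoadData(const DataNode&) override {}
};
```

Because the loop stops at the first false result, ordering cheap checks (such as cool-downs) before expensive ones (such as line-of-sight raycasts) can save time without changing the outcome.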

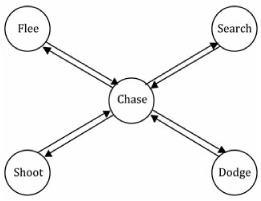

As an example of this approach in a game environment, consider the simple state machine in Figure 3.2.1, which is a simplified version of what might be found in the combat AI of a typical first-person shooter.

In this AI, Chase is our default state. We exit it briefly to dodge or take a shot, but then return to it as soon as we’re done with that action. Here are the considerations we might attach to each transition:

Chase ⇒ Shoot:

We have a line of sight to the player.

It has been at least two seconds since our last shot.

It has been at least one second since we last dodged.

The player’s health is over 0 percent (that is, he’s not dead yet).

Chase ⇒ Dodge:

We have a line of sight to the player.

The player is aiming at us.

It has been at least one second since our last shot.

It has been at least five seconds since we last dodged.

Our health is below 60 percent. (As we take more damage, we become more cautious.)

Our health is less than 1.2 times the player’s health. (If we’re winning, we become more aggressive.)

Shoot ⇒ Chase:

We’ve completed the Shoot action.

Dodge ⇒ Chase:

We’ve completed the Dodge action.

One advantage of breaking down our logic in this way is that we can encapsulate the shared logic from each decision in a single place. Here are the considerations used above:

Line of Sight Consideration. Checks the line of sight from our actor to the player (or, more generally, from our actor to an arbitrary target that can be specified in the data or the DecisionContext).

Aiming At Consideration. Checks whether the player’s weapon is pointed toward our actor.

Cool-down Consideration. Checks elapsed time since a specified type of action was last taken.

Absolute Health Consideration. Checks whether the current health of our actor (or the player) is over (or under) a specified cutoff.

Health Comparison Consideration. Checks the ratio between our actor’s health and the player’s health.

Completion Consideration. Checks whether the current action is complete.

Each of those considerations represents a common pattern that can be found not only in this specific example, but also in a great many decisions in a great many games. These patterns are not unique to these particular decisions or even this particular genre. In fact, many of them can be seen in one form or another in virtually every game that has ever been written. Line-of-sight checks, for example, or cool-downs to prevent abilities from being used too frequently…these are basic to game AI.

At this point we have all the pieces we need. We know what behaviors we plan to support (in this case, one behavior per state), we know all of the decisions that need to be made (represented by the transitions), and we know the considerations that go into each decision. However, there is still some work to do to put it all together.

Most of what needs to be done is straightforward. We can implement a character class that contains an AI. The AI contains a set of states. Each state contains a list of transitions, and each transition contains a list of considerations.
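The containment hierarchy described above might look something like the following sketch. For brevity, considerations are stored as std::function callables rather than IConsiderationBoolean objects, and states are looked up by name. The AI takes the first transition out of the current state whose considerations all pass; that policy is an assumption, since the text does not specify how competing transitions are resolved.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical context holding only what the example considerations read.
struct DecisionContext {
    bool hasLineOfSight = false;
    bool actionComplete = false;
};

// For brevity, a consideration here is any callable returning bool,
// rather than an IConsiderationBoolean subclass.
using Consideration = std::function<bool(const DecisionContext&)>;

// A transition names its target state and lists the considerations
// that gate it; every one must pass (the veto rule).
struct Transition {
    std::string target;
    std::vector<Consideration> considerations;

    bool Passes(const DecisionContext& ctxt) const {
        for (const auto& c : considerations)
            if (!c(ctxt))
                return false;
        return true;
    }
};

// A state owns the transitions leading out of it. Its behavior
// (chasing, shooting, ...) would live here too and is omitted.
struct State {
    std::string name;
    std::vector<Transition> transitions;
};

// The AI owns all states and tracks the current one; a character class
// would hold one of these.
class AI {
public:
    AI(std::vector<State> states, std::string initial)
        : m_States(std::move(states)), m_Current(std::move(initial)) {}

    // Assumed policy: take the first outgoing transition whose
    // considerations all pass; otherwise stay in the current state.
    void Update(const DecisionContext& ctxt) {
        const State* current = Find(m_Current);
        assert(current != nullptr && "current state missing from data");
        if (current == nullptr)
            return;
        for (const auto& t : current->transitions) {
            if (t.Passes(ctxt)) {
                m_Current = t.target;
                return;
            }
        }
    }

    const std::string& CurrentState() const { return m_Current; }

private:
    const State* Find(const std::string& name) const {
        for (const auto& s : m_States)
            if (s.name == name)
                return &s;
        return nullptr;
    }

    std::vector<State> m_States;
    std::string m_Current;
};
```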

Keep in mind that the considerations don’t always do the same thing. For example, both Chase ⇒ Shoot and Chase ⇒ Dodge contain an Absolute Health consideration, but those considerations are expected to return true under very different conditions. For Chase ⇒ Shoot, we return true if the health of the player is above 0 percent. For Chase ⇒ Dodge, on the other hand, we return true if the health of our actor is below 60 percent. More generally, each decision that includes this condition also needs to specify whether it should examine the health of the player or our actor, whether it should return true when that value is above or below the cutoff, and what cutoff it should use. The information used to specify how each instance of a consideration should evaluate the world is obtained through the LoadData() function:

```cpp
void CsdrHealth::LoadData(const DataNode& node)
{
    // If true we check the player's health, otherwise
    // we check our actor's health.
    m_CheckPlayer = node.GetBoolean("CheckPlayer");

    // The cutoff for our health check; it may be the
    // upper or lower limit, depending on the value
    // of m_HighIsGood.
    m_Cutoff = node.GetFloat("Cutoff");

    // If true then we return true when the checked health
    // is above the cutoff, otherwise we return true when
    // it is below the cutoff.
    m_HighIsGood = node.GetBoolean("HighIsGood");
}
```

As you can see, this is fairly straightforward. We simply acquire the three values the Evaluate() function will need from our data node. With that in mind, here is the Evaluate() function itself:

```cpp
bool CsdrHealth::Evaluate(const DecisionContext& ctxt)
{
    // Get the health that we're checking, either ours
    // or the player's.
    float health;
    if (m_CheckPlayer)
        health = ctxt.GetHealth(ctxt.GetPlayer());
    else
        health = ctxt.GetHealth(ctxt.GetMyActor());

    // Do the check.
    if (m_HighIsGood)
        return health >= m_Cutoff;
    else
        return health <= m_Cutoff;
}
```

Again, there’s nothing complicated here. We get the appropriate health value (either ours or the player’s, depending on what LoadData() told us) from the context and compare it to the cutoff.

The simplicity of this code is in many ways the entire point. Each consideration is simple and easy to test in its own right, but combined they become powerfully expressive.

Now that we have built a functional core AI for our shiny new FPS game, it’s time to start iterating on that AI, finding ways in which it’s less than perfect, and fixing them. As a first step, let’s imagine that we wanted to add two new states: Flee and Search (as seen in Figure 3.2.2). Like all existing states, these new states transition to and from the Chase state. Here are the considerations for our new transitions:

Chase ⇒ Flee:

Our health is below 15 percent.

The player’s health is above 10 percent. (If he’s almost dead, finish him.)

Flee ⇒ Chase:

The player’s health is below 10 percent.

We have a line of sight to the player.

Chase ⇒ Search:

We don’t have a line of sight to the player.

Search ⇒ Chase:

We have a line of sight to the player.

As you can see, adding these states should be fairly simple. We will need to implement the new behaviors, but all of the considerations needed to decide whether to execute those behaviors already exist.

Of course, not all changes can be made using existing considerations. For example, imagine that we want to make two further changes to our AI:

QA complains that dodging is too predictable. Instead of always using a five-second cool-down, they want us to use a cool-down that varies randomly between three and seven seconds.

The artists would like to add a really cool-looking animation for drawing your weapon. In order to support this, the designers have asked us to have the characters draw their weapons when they enter Chase and then holster them again if they go into Search.

The key is to find a way to modify our existing code so that we can support these new specifications without affecting any other portion of the AI. We certainly don’t want to have to go through the entire AI for every character, find every place that these considerations are used, and change the data for all of them.

Implementing the variable cool-down is fairly straightforward. Previously the Cool-Down consideration took a single argument to specify the length of the cool-down. We’ll modify it to optionally take minimum and maximum values instead. Thus, all the existing cases will continue to work (with an exact value specified), but our Cool-Down consideration will now have improved functionality that can be used to fix this bug and can also be used in the future as we continue to build the AI. We do need to make sure that exactly one type of cool-down is specified: the user must specify either an exact cool-down or a variable cool-down, but not both. An Assert in LoadData() should be sufficient.
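A sketch of the extended LoadData() and Evaluate() follows, assuming hypothetical data keys Duration (exact) or MinDuration and MaxDuration (variable), and a context that records when the gated action was last taken. When to draw the random duration isn’t specified above; here we assume a new value is rolled each time the action is taken again, detected as a change in the last-action timestamp. The class is shown without the IConsiderationBoolean base for brevity, and the fixed RNG seed is only for reproducibility.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>

// Hypothetical DataNode stand-in that can report whether a key exists.
struct DataNode {
    std::map<std::string, float> floats;
    bool Has(const std::string& key) const { return floats.count(key) != 0; }
    float GetFloat(const std::string& key) const { return floats.at(key); }
};

// Hypothetical context: the current time and when the gated action
// was last taken (negative means "never").
struct DecisionContext {
    float currentTime = 0.0f;
    float lastActionTime = -1.0f;
};

class CsdrCoolDown {
public:
    void LoadData(const DataNode& node) {
        const bool exact = node.Has("Duration");
        const bool hasMin = node.Has("MinDuration");
        const bool hasMax = node.Has("MaxDuration");
        // Exactly one form: an exact Duration, or both Min and Max.
        assert(exact ? (!hasMin && !hasMax) : (hasMin && hasMax));
        if (exact) {
            m_Min = m_Max = node.GetFloat("Duration");
        } else {
            m_Min = node.GetFloat("MinDuration");
            m_Max = node.GetFloat("MaxDuration");
        }
        assert(m_Min <= m_Max);
    }

    bool Evaluate(const DecisionContext& ctxt) {
        if (ctxt.lastActionTime < 0.0f)
            return true;  // Never taken, so no cool-down applies.
        // Assumption: roll a new duration whenever the action has been
        // taken again, detected as a change in lastActionTime.
        if (ctxt.lastActionTime != m_RolledFor) {
            m_RolledFor = ctxt.lastActionTime;
            if (m_Min == m_Max) {
                m_Duration = m_Min;  // Exact cool-down: no randomness.
            } else {
                std::uniform_real_distribution<float> dist(m_Min, m_Max);
                m_Duration = dist(m_Rng);
            }
        }
        return ctxt.currentTime - ctxt.lastActionTime >= m_Duration;
    }

private:
    float m_Min = 0.0f;
    float m_Max = 0.0f;
    float m_Duration = 0.0f;
    float m_RolledFor = -1.0f;
    std::mt19937 m_Rng{12345};  // Fixed seed for reproducibility only.
};
```

Existing data that specifies only Duration takes the exact branch and behaves as before, which is what lets this change ship without touching any other transition.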

For the second change, we can make the Chase behavior automatically draw the weapon (if it’s not drawn already), so that doesn’t require a change to our decision logic. We do need to ensure that we don’t start shooting until the weapon is fully drawn, however. In order to do that, we simply implement an Is Weapon Drawn consideration and add it to the transition from Chase to Shoot.

One thing to notice is that all of the values required to specify our AI are selected at game design time. That is, we determine up front what decisions our AI needs to make, what considerations are necessary to support those decisions, and what tuning values we should specify for each consideration. Once the game is running, they are always the same. For example, our actor’s hit points might go up or down, but if a decision uses an Absolute Health consideration, then the threshold at which we switch between true and false never changes during gameplay.

Since none of this changes during gameplay, nearly all of the decision-making logic can be specified in data. We can specify the AI for each character, where the AI contains a set of states, each state contains a list of transitions, each transition contains a list of considerations, and each consideration contains the tuning values used for that portion of the AI. This sort of hierarchical structure is something that XML does well, making it an excellent choice for our data specification.
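One common way to turn that data into objects (an assumption here, not something this section prescribes) is a factory table that maps each consideration’s type name, as it appears in the data, to a function that creates it; each new object then configures itself through LoadData(). In this sketch the DataNode struct stands in for a parsed XML element, and CsdrHealthBelow, Factory, and BuildConsiderations are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a parsed data element.
struct DataNode {
    std::string type;  // e.g., the element name or a "type" attribute
    std::map<std::string, float> floats;
    float GetFloat(const std::string& k) const { return floats.at(k); }
};
struct DecisionContext { float myHealth = 100.0f; };

class IConsiderationBoolean {
public:
    virtual ~IConsiderationBoolean() {}
    virtual bool Evaluate(const DecisionContext& context) = 0;
    virtual void LoadData(const DataNode& node) = 0;
};

class CsdrHealthBelow : public IConsiderationBoolean {
public:
    void LoadData(const DataNode& node) override { m_Cutoff = node.GetFloat("Cutoff"); }
    bool Evaluate(const DecisionContext& c) override { return c.myHealth < m_Cutoff; }
private:
    float m_Cutoff = 0.0f;
};

// Maps the type name used in data to a function that creates that type.
using Factory =
    std::map<std::string, std::function<std::unique_ptr<IConsiderationBoolean>()>>;

// Builds one transition's consideration list from its data nodes.
std::vector<std::unique_ptr<IConsiderationBoolean>>
BuildConsiderations(const Factory& factory, const std::vector<DataNode>& nodes) {
    std::vector<std::unique_ptr<IConsiderationBoolean>> result;
    for (const auto& node : nodes) {
        auto it = factory.find(node.type);
        if (it == factory.end()) {
            assert(false && "unknown consideration type in data");
            continue;  // Skip bad data in release builds.
        }
        auto c = it->second();
        c->LoadData(node);
        result.push_back(std::move(c));
    }
    return result;
}
```

With this in place, adding a new consideration type means writing the class and registering one factory entry; the data that uses it needs no further code changes.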

As with all data-driven architectures, the big advantage is that if we want to change the way the decisions are made, we only have to change data, not code. No recompile is required. If you’ve implemented the ability to reload the data while the game is live, you don’t even need to restart the game, which can save a lot of time when testing a situation that is tricky to re-create in-game. Of course, some changes, such as implementing an entirely new consideration, will require code changes, but much of the tuning and tweaking—and sometimes even more sizeable adjustments—will not.

One thing to consider when taking this approach is whether it’s worth investing some time into the tools you use for data specification. Our experience has been that the time spent specifying and tuning behavior is significantly greater than the time spent writing the core reasoner. Good tools can not only make those adjustments quicker and easier (especially if they’re integrated into the game so that adjustments can be made in real time), but they can also include error checking for common mistakes and help to avoid subtle behavioral bugs that might otherwise be hard to catch. Further, since many of these considerations are broadly applicable to a variety of games, not only the considerations but also the tools for specifying them could be carried from game to game as part of your engine (or even integrated into a new engine). Finally, it is often possible to allow designers and artists to specify AI logic if you create good tools for doing so, giving them more direct control over the look and feel of the game and freeing you up to focus on issues that require your technical expertise.

One of the benefits of this approach is that it reduces the amount of duplicate code in your AI. For example, if there are seven different decisions that evaluate the player’s hit points, instead of writing that evaluation code in seven places we write it once, in the form of the Absolute Health consideration.

As our work on the AI progresses, we might quickly find that we also have an Absolute Mana consideration, an Absolute Stamina consideration, a Time of Day consideration, a Cool-Down consideration, and a Distance to Player consideration. Although each of these considers a different aspect of the situation in-game, under the covers they each do exactly the same thing. That is, they compare a floating-point value from the game (such as the player’s hit points, the current time of day, the distance to the player, and so forth) to one or two static values that are specified in data.

With that in mind, it’s worth looking for opportunities to further reduce duplicate code by building meta-considerations, which is to say high-level considerations that handle the implementation of more specific, low-level considerations, such as the ones given above. This has the advantage of not only further reducing the duplication of code, but also enforcing a uniform set of conventions for specifying data. In other words, if all of those considerations inherit from a Float Comparison consideration base class, then the data for them is likely to look remarkably similar, and a designer specifying data for one that he hasn’t used before is likely to get the result he expects on his first try, because it works the same way as every other Float Comparison consideration that he’s used before.
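A Float Comparison base class might look like the sketch below. Subclasses only say which value to read from the context; the base class owns the data format and the comparison. The Min/Max data convention (either bound may be omitted) and the context fields are assumptions chosen for illustration, and they differ from the CheckPlayer/Cutoff/HighIsGood convention used by CsdrHealth earlier.

```cpp
#include <cassert>
#include <limits>
#include <map>
#include <string>

// Hypothetical stand-ins for the engine's types.
struct DataNode {
    std::map<std::string, float> floats;
    bool Has(const std::string& k) const { return floats.count(k) != 0; }
    float GetFloat(const std::string& k) const { return floats.at(k); }
};
struct DecisionContext {
    float myHealth = 100.0f;
    float distanceToPlayer = 0.0f;
};

class IConsiderationBoolean {
public:
    virtual ~IConsiderationBoolean() {}
    virtual bool Evaluate(const DecisionContext& context) = 0;
    virtual void LoadData(const DataNode& node) = 0;
};

// Meta-consideration: compares one float from the game against an
// optional lower and/or upper bound loaded from data.
class CsdrFloatComparison : public IConsiderationBoolean {
public:
    void LoadData(const DataNode& node) override {
        // A missing bound means "unbounded" on that side.
        const float inf = std::numeric_limits<float>::infinity();
        m_Min = node.Has("Min") ? node.GetFloat("Min") : -inf;
        m_Max = node.Has("Max") ? node.GetFloat("Max") : inf;
        assert(m_Min <= m_Max);
    }

    bool Evaluate(const DecisionContext& ctxt) override {
        const float value = GetValue(ctxt);
        return value >= m_Min && value <= m_Max;
    }

protected:
    // The only thing each concrete consideration has to supply.
    virtual float GetValue(const DecisionContext& ctxt) const = 0;

private:
    float m_Min = 0.0f;
    float m_Max = 0.0f;
};

class CsdrAbsoluteHealth : public CsdrFloatComparison {
protected:
    float GetValue(const DecisionContext& c) const override { return c.myHealth; }
};

class CsdrDistanceToPlayer : public CsdrFloatComparison {
protected:
    float GetValue(const DecisionContext& c) const override { return c.distanceToPlayer; }
};
```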

While nearly all decisions are ultimately Boolean (that is, an AI either takes an action or it doesn’t), it is often useful to evaluate the suitability of a variety of options and then allow that evaluation to guide our decisions. As with Boolean decisions, there are many ways to do this, and they are discussed throughout the AI literature; a few game-specific examples include [Dill06, Dill08, Mark09]. For the purposes of this gem, however, the interesting question is not how and when to use a float-based approach, but rather how to build modular, pattern-based evaluation functions when we do.

Imagine that we are responsible for building the opposing-player AI for a real-time strategy game. Such an AI would need to decide when and where to attack. In order to do this, we might periodically score several prospective targets. For each target we would consider a number of factors, including its economic value (that is, whether it generates revenue or costs money to maintain), its impact on the strategic situation (for example, would it allow you access to the enemy’s territory, consolidate your defenses, or protect your lines of supply), and the overall military situation (in other words, whether you can win this fight).

Our next step is to find a way for each of these considerations to evaluate the situation independently while still letting us easily combine the results of all of those evaluations into a single score, which can then be used to make our final decision.

Creating an evaluation function is as much art as science, and in fact there is an entire book dedicated to this subject [Mark09]. However, just as with Boolean decisions, there are simple tricks that can be used to handle the vast majority of situations. Specifically, we can modify our Evaluate() function so that it returns two values: a base priority and a final multiplier. When every consideration has been evaluated, we first add all of the base priorities together and then multiply that total by the product of the final multipliers. This allows us to create considerations that are either additive or multiplicative in nature, which are two of the most common techniques for creating priority values.

Coming back to our example, the considerations for economic value and strategic value might both return base priorities between –500 and 500, generating an overall base priority between –1,000 and 1,000 for each target. They would return a negative value if, from their point of view, taking this action would be a bad idea. For example, capturing a building that has an ongoing upkeep cost might receive a negative value from the economic consideration (unless it had some economic benefit to offset that cost), because once you own it, you’ll have to start paying its upkeep. Similarly, attacking a position that, if obtained, would leave you overextended and exposed would receive a negative value from the strategic consideration. These considerations could return a final multiplier of 1.

The consideration for the military situation, however, could be multiplicative in nature. That is, it would return a base priority of 0 but would return a final multiplier between 0 and 3. (For a better idea of how to generate that multiplier, see our previous work [Dill06].) Thus if the military situation is completely untenable (in other words, the defensive forces are much stronger than the units we would use to attack), then we could return a very small multiplier (such as 0.000001), making it unlikely that this target would be chosen no matter how attractive it is from an economic or strategic standpoint. On the other hand, if the military situation is very favorable, then we would strongly consider this target (by specifying a multiplier of 3), even if it is not tremendously important in an economic or strategic sense. If we have no military units to attack with, then this consideration might even return a multiplier of 0.

We do not execute an action whose overall priority is less than or equal to zero. Thus if all of the base priorities add up to a negative value, or if any consideration returns a final multiplier of zero, then the action will not be executed. Just as in the Boolean logic case, a single consideration can effectively veto an action by returning a final multiplier of zero.
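The base-priority and final-multiplier rule from the preceding paragraphs can be sketched as follows. The ConsiderationResult struct and the function names are hypothetical; the sketch sums the base priorities, multiplies that sum by the product of the final multipliers, and treats any score of zero or below as "do not execute."

```cpp
#include <cassert>
#include <vector>

// What each float-based consideration returns (assumed layout).
struct ConsiderationResult {
    float basePriority = 0.0f;     // Additive contribution.
    float finalMultiplier = 1.0f;  // Multiplicative contribution.
};

// Sum all base priorities, then scale by the product of all multipliers.
float CombineScores(const std::vector<ConsiderationResult>& results) {
    float base = 0.0f;
    float multiplier = 1.0f;
    for (const auto& r : results) {
        // A negative multiplier would flip the sign of the score.
        assert(r.finalMultiplier >= 0.0f);
        base += r.basePriority;
        multiplier *= r.finalMultiplier;
    }
    return base * multiplier;
}

// Actions whose overall priority is zero or below are never executed.
bool ShouldExecute(float score) { return score > 0.0f; }
```

Using the RTS example, an economic score of 200 and a strategic score of 100 (both with multiplier 1), plus a military multiplier of 1.5, combine to (200 + 100) × 1.5 = 450; a military multiplier of 0 drives the score to 0 and vetoes the attack.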

As with the previous examples, the greatest value to be found is that these same considerations can be reused elsewhere in the AI. For example, the economic value might be used when selecting buildings to build or technologies to research. The strategic value might be used when selecting locations for military forts and other defensive structures. The military situation would be considered not only for attacks, but also when deciding where to defend, and perhaps even when deciding whether to build structures in contested areas of the map.

One weakness of the aforementioned approach is that it only allows considerations to have additive or multiplicative effects on one another. There are certainly many other ways to combine values; in fact, much of the field of mathematics addresses this topic! One common trick, for example, is to use an exponent to change the shape of the curve when comparing two values. We could extend our architecture to support this, perhaps by adding an exponent to the set of values returned by our Evaluate() function and applying all of the exponents after the final multipliers. Doing so significantly increases the complexity of our AI, however, because this new value needs to be taken into account with every consideration we implement and every decision we make. This may not seem like a big deal, but it can make the task of specifying, tuning, and debugging the AI significantly harder than it would otherwise be, especially if it is to be done by non-technical folks who may not have the same intuitive sense of how the numbers combine as an experienced AI engineer would.

Along the same lines, in many cases even the final multiplier is overkill. For simpler decisions (such as those in an FPS game or an action game), we can often have the Evaluate() function return a base priority as before, but instead of returning a multiplier, it can simply return a Boolean value that specifies whether it wants to veto this action. If any consideration returns false, then the action is not taken (the score is set to 0); otherwise, the score is the sum of the base priorities.
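The simpler veto variant replaces the multiplier with a Boolean. A minimal sketch, with a hypothetical VetoResult struct standing in for whatever Evaluate() returns in this variant:

```cpp
#include <cassert>
#include <vector>

// Assumed per-consideration result: an additive priority plus a veto
// flag in place of a final multiplier.
struct VetoResult {
    float basePriority = 0.0f;
    bool allowed = true;  // false means "veto this action"
};

// Returns 0 if any consideration vetoes the action; otherwise the sum
// of the base priorities.
float ScoreWithVeto(const std::vector<VetoResult>& results) {
    float score = 0.0f;
    for (const auto& r : results) {
        if (!r.allowed)
            return 0.0f;
        score += r.basePriority;
    }
    return score;
}
```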

This gem has presented a set of approaches for building modular, pattern-based architectures for game AI. All of these approaches function by breaking a decision into separate considerations and then encoding each consideration independently. There are several advantages to techniques of this type:

Code duplication between decisions is dramatically reduced, because the code for each consideration goes in a single place.

Considerations are reusable not only within a single project, but in some cases also between multiple projects. As a result, AI implementation will become easier as the library of considerations grows larger and more robust.

Much of the AI can be specified in data, with all the advantages that implies.

With proper tools and a good library of considerations, designers and artists can be enabled to specify AI logic directly, both getting them more directly involved in the game AI and freeing up the programmer for other tasks.