If we have many features (explanatory variables), it is tempting to include them all in our model. However, we then run the risk of overfitting—getting a model that works very well for the training data and very badly for unseen data. Not only that, but the model is bound to be relatively slow and require a lot of memory. We have to weigh accuracy (or an other metric) against speed and memory requirements.

We can try to ignore features or create new better compound features. For instance, in online advertising, it is common to work with ratios, such as the ratio of views and clicks related to an ad. Common sense or domain knowledge can help us select features. In the worst-case scenario, we may have to rely on correlations or other statistical methods. The scikit-learn library offers the RFE class (recursive feature elimination), which can automatically select features. We will use this class in this recipe. We also need an external estimator. The RFE class is relatively new, and unfortunately there is no guarantee that all estimators will work together with the RFE class.

- The imports are as follows:

from sklearn.feature_selection import RFE from sklearn.svm import SVC from sklearn.svm import SVR from sklearn.preprocessing import MinMaxScaler import dautil as dl import warnings import numpy as np

- Create a SVC classifier and an RFE object as follows:

warnings.filterwarnings("ignore", category=DeprecationWarning) clf = SVC(random_state=42, kernel='linear') selector = RFE(clf) - Load the data, scale it using a

MinMaxScalerfunction, and add the day of the year as a feature:df = dl.data.Weather.load().dropna() df['RAIN'] = df['RAIN'] == 0 df['DOY'] = [float(d.dayofyear) for d in df.index] scaler = MinMaxScaler() for c in df.columns: if c != 'RAIN': df[c] = scaler.fit_transform(df[c]) - Print the first row of the data as a sanity check:

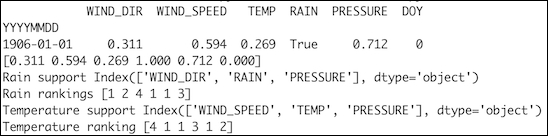

dl.options.set_pd_options() print(df.head(1)) X = df[:-1].values np.set_printoptions(formatter={'all': '{:.3f}'.format}) print(X[0]) np.set_printoptions() - Determine support and rankings for the features using rain or no rain as classes (in the context of classification):

y = df['RAIN'][1:].values selector = selector.fit(X, y) print('Rain support', df.columns[selector.support_]) print('Rain rankings', selector.ranking_) - Determine support and rankings for the features using temperature as a feature:

reg = SVR(kernel='linear') selector = RFE(reg) y = df['TEMP'][1:].values selector = selector.fit(X, y) print('Temperature support', df.columns[selector.support_]) print('Temperature ranking', selector.ranking_)

Refer to the following screenshot for the end result:

The code for this recipe is in the feature_elimination.py file in this book's code bundle.

The RFE class selects half of the features by default. The algorithm is as follows:

- Train the external estimator on the data and assign weights to the features.

- The features with smallest weights are removed.

- Repeat the procedure until we have the necessary number of features.

- The documentation for the

RFEclass at http://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.RFE.html (retrieved November 2015)