NET Privacy

Marco Cremonini, University of Milan

Chiara Braghin, University of Milan

Claudio Agostino Ardagna, University of Milan

In recent years, large-scale computer networks have become an essential aspect of our daily computing environment. We often rely on a global information infrastructure for ebusiness activities such as home banking, ATM transactions, or shopping online. One of the main scientific and technological challenges in this setting has been to provide security to individuals who operate in possibly untrusted and unknown environments. However, beside threats directly related to computer intrusions, epidemic diffusion of malware, and outright frauds conducted online, a more subtle though increasing erosion of individuals’ privacy has progressed and multiplied. Such an escalating violation of privacy has some direct harmful consequences—for example, identity theft has spread in recent years—and negative effects on the general perception of insecurity that many individuals now experience when dealing with online services.

Nevertheless, protecting personal privacy from the many parties—business, government, social, or even criminal—that examine the value of personal information is an old concern of modern society, now increased by the features of the digital infrastructure. In this chapter, we address these privacy issues in the digital society from different points of view, investigating:

• The various aspects of the notion of privacy and the debate that the intricate essence of privacy has stimulated

• The most common privacy threats and the possible economic aspects that may influence the way privacy is (and especially is not, in its current status) managed in most firms

• The efforts in the computer science community to face privacy threats, especially in the context of mobile and database systems

• The network-based technologies available to date to provide anonymity in user communications over a private network

1. Privacy in the Digital Society

Privacy in today’s digital society is one of the most debated and controversial topics. Many different opinions about what privacy actually is and how it could be preserved have been expressed, but still we can set no clear-cut border that cannot be trespassed if privacy is to be safeguarded.

The Origins, The Debate

As often happens when a debate heats up, the extremes speak louder and, about privacy, the extremes are those that advocate the ban of the disclosure of whatever personal information and those that say that all personal information is already out there, therefore privacy is dead. Supporters of the wide deployment and use of anonymizing technologies are perhaps the best representatives of one extreme. The chief executive officer of Sun Microsystems, Scott McNealy, with his “Get over it” comment, has gained large notoriety for championing the other extreme opinion.1

However, these are just the extremes; in reality net privacy is a fluid concept that such radical positions cannot fully contain. It is a fact that even those supporting full anonymity recognize that there are several limitations to its adoption, either technical or functional. On the other side, even the most skeptical cannot avoid dealing with privacy issues, either because of laws and norms or because of common sense. Sun Microsystems, for example, is actually supporting privacy protection and is a member of the Online Privacy Alliance, an industry coalition that fosters the protection of individuals’ privacy online.

Looking at the origins of the concept of privacy, Aristotle’s distinction between the public sphere of politics and the private sphere of the family is often considered the root. Much later, the philosophical and anthropological debate around these two spheres of an individual’s life evolved. John Stuart Mill, in his essay, On Liberty, introduced the distinction between the realm of governmental authority as opposed to the realm of self-regulation. Anthropologists such as Margaret Mead have demonstrated how the need for privacy is innate in different cultures that protect it through concealment or seclusion or by restricting access to secret ceremonies.

More pragmatically, back in 1898, the concept of privacy was expressed by U.S. Supreme Court Justice Brandeis, who defined privacy as “The right to be let alone.”2 This straightforward definition represented for decades the reference of any normative and operational privacy consideration and derivate issues and, before the advent of the digital society, a realistically enforceable ultimate goal. The Net has changed the landscape because the very concept of being let alone while interconnected becomes fuzzy and fluid.

In 1948, privacy gained the status of fundamental right of any individual, being explicitly mentioned in the United Nations Universal Declaration of Human Rights (Article 12): “No one shall be subjected to arbitrary interference with his privacy, family, home or correspondence, nor to attacks upon his honour and reputation. Everyone has the right to the protection of the law against such interference or attacks.”3 However, although privacy has been recognized as a fundamental right of each individual, the Universal Declaration of Human Rights does not explicitly define what privacy is, except for relating it to possible interference or attacks.

About the digital society, less rigorously but otherwise effectively in practical terms, in July 1993, The New Yorker published a brilliant cartoon by Peter Steiner that since then has been cited and reproduced dozen of times to refer to the supposed intrinsic level of privacy—here in the sense of anonymity or hiding personal traits—that can be achieved by carrying out social relations over the Internet. That famous cartoon shows one dog that types on a computer keyboard and says to the other one: “On the Internet, no one knows you’re a dog.”4 The Internet, at least at the very beginning of its history, was not perceived as threatening to individuals’ privacy; rather, it was seen as increasing it, sometimes too much, since it could easily let people disguise themselves in the course of personal relationships. Today that belief may look naïve with the rise of threats to individual privacy that have accompanied the diffusion of the digital society. Nevertheless, there is still truth in that cartoon because, whereas privacy is much weaker on the Net than in real space, concealing a person’s identity and personal traits is technically even easier. Both aspects concur and should be considered.

Yet an unambiguous definition of the concept of privacy has still not been produced, nor has an assessment of its actual value and scope. It is clear, however, that with the term privacy we refer to a fundamental right and an innate feeling of every individual, not to a vague and mysterious entity. An attempt to give a precise definition at least to some terms that are strictly related to (and often used in place of) the notion of privacy can be found in5,6, where the differences among anonymity, unobservability, and unlinkability are pointed out. In the digital society scenario, anonymity is defined as the state of not being identifiable, unobservability as the state of being indistinguishable, and unlinkability as the impossibility of correlating two or more actions/items/pieces of information. Privacy, however defined and valued, is a tangible state of life that must be attainable in both the physical and the digital society.

The reason that in the two realms—the physical and the digital—privacy behaves differently has been widely debated, too, and many of the critical factors that make a difference in the two realms, the impact of technology and the Internet, have been spelled out clearly. However, given the threats and safeguards that technologies make possible, it often remains unclear what the goal of preserving net privacy should be—being that extreme positions are deemed unacceptable.

Lessig, in his book Free Culture,7 provided an excellent explanation of the difference between privacy in the physical and in the digital world: “The highly inefficient architecture of real space means we all enjoy a fairly robust amount of privacy. That privacy is guaranteed to us by friction. Not by law […] and in many places, not by norms […] but instead, by the costs that friction imposes on anyone who would want to spy. […] Enter the Internet, where the cost of tracking browsing in particular has become quite tiny. […] The friction has disappeared, and hence any ‘privacy’ protected by the friction disappears, too.”

Thus, privacy can be seen as the friction that reduces the spread of personal information, that makes it more difficult and economically inconvenient to gain access to it. The merit of this definition is to put privacy into a relative perspective, which excludes the extremes that advocate no friction at all or so much friction to stop the flow of information. It also reconciles privacy with security, being both aimed at setting an acceptable level of protection while allowing the development of the digital society and economy rather than focusing on an ideal state of perfect security and privacy.

Even in an historic perspective, the analogy with friction has sense. The natural path of evolution of a technology is first to push for its spreading and best efficiency. When the technology matures, other requirements come to the surface and gain importance with respect to mere efficiency and functionalities. Here, those frictions that have been eliminated because they are a waste of efficiency acquire new meaning and become the way to satisfy the new requirements, in terms of either safety or security, or even privacy. It is a sign that a technology has matured but not yet found a good balance between old and new requirements when nonfunctional aspects such as security or privacy become critical because they are not well managed and integrated.

Privacy Threats

Threats to individual privacy have become publicly appalling since July 2003, when the California Security Breach Notification Law8 went into effect. This law was the first one to force state government agencies, companies, and nonprofit organizations that conduct business in California to notify California customers if personally identifiable information (PII) stored unencrypted in digital archives was, or is reasonably believed to have been, acquired by an unauthorized person.

The premise for this law was the rise of identity theft, which is the conventional expression that has been used to refer to the illicit impersonification carried out by fraudsters who use PII of other people to complete electronic transactions and purchases. The California Security Breach Notification Law lists, as PII: Social Security number, driver’s license number, California Identification Card number, bank account number, credit- or debit-card number, security codes, access codes, or passwords that would permit access to an individual’s financial account.8 By requiring by law the immediate notification to the PII owners, the aim is to avoid direct consequences such as financial losses and derivate consequences such as the burden to restore an individual’s own credit history. Starting on January 1, 2008, California’s innovative data security breach notification law also applies to medical information and health insurance data.

Besides the benefits to consumers, this law has been the trigger for similar laws in the United States—today, the majority of U.S. states have one—and has permitted the flourishing of regular statistics about privacy breaches, once almost absent. Privacy threats and analyses are now widely debated, and research focused on privacy problems has become one of the most important. Figure 28.1 shows a chart produced by plotting data collected by Attrition.org Data Loss Archive and Database,9 one of the most complete references for privacy breaches and data losses.

Figure 28.1 Privacy breaches from the Attrition.org Data Loss Archive and Database up to March 2008 (X-axis: Years 2000–2008; Y-axis (logarithmic): PII records lost).

Looking at the data series, we see that some breaches are strikingly large. Etiolated.org maintains some statistics based on Attrition.org’s database: In 2007, about 94 million records were hacked at TJX stores in the United States; confidential details of 25 million children have been lost by HM Revenue & Customs, U.K.; the Dai Nippon Printing Company in Tokyo lost more than 8 million records; data about 8.5 million people stored by a subsidiary of Fidelity National Information Services were stolen and sold for illegal usage by a former employee. Similar paths were reported in previous years as well. In 2006, personal data of about 26.5 million U.S. military veterans was stolen from the residence of a Department of Veterans Affairs data analyst who improperly took the material home. In 2005, CardSystems Solutions—a credit card processing company managing accounts for Visa, MasterCard, and American Express—exposed 40 million debit- and credit-card accounts in a cyber break-in. In 2004, an employee of America Online Inc. stole 92 million email addresses and sold them to spammers. Still recently, in March 2008, Hannaford Bros. supermarket chain announced that, due to a security breach, about 4.2 million customer credit and debit card numbers were stolen.10

Whereas these incidents are the most notable, the phenomenon is distributed over the whole spectrum of breach sizes (see Figure 28.1). Hundreds of privacy breaches are reported in the order of a few thousand records lost and all categories of organizations are affected, from public agencies, universities, banks and financial institutions, manufacturing and retail companies, and so on.

The survey Enterprise@Risk: 2007 Privacy & Data Protection, conducted by Deloitte & Touche and Ponemon Institute,11 provides another piece of data about the incidence of privacy breaches. Among the survey’s respondents, over 85% reported at least one breach and about 63% reported multiple breaches requiring notification during the same time period. Breaches involving over 1000 records were reported by 33.9% of respondents; of those, almost 10% suffered data losses of more than 25,000 records. Astonishingly, about 21% of respondents were not able to estimate the record loss. The picture that results is that of a pervasive management problem with regard to PII and its protection, which causes a continuous leakage of chunks of data and a few dramatic breakdowns when huge archives are lost or stolen.

It is interesting to analyze the root causes for such breaches and the type of information involved. One source of information is the Educational Security Incidents (ESI) Year in Review–2007,12 by Adam Dodge. This survey lists all breaches that occurred worldwide during 2007 at colleges and universities around the world.

Concerning the causes of breaches, the results over a total of 139 incidents are:

Therefore, incidents to be accounted for by mismanagement by employees (unauthorized disclosure and loss) account for 47%, whereas criminal activity (penetration/hacking and theft) account for 40%.

With respect to the type of information exposed during these breaches, the result is that:

• PII have been exposed in 42% of incidents

• Social Security numbers in 34%

Again, rather than direct economic consequences or illicit usage of computer facilities, such breaches represents threats to individual privacy.

Privacy Rights Clearinghouse is another organization that provides excellent data and statistics about privacy breaches. Among other things, it is particularly remarkable for its analysis of root causes for different sectors, namely the private sector, the public sector (military included), higher education, and medical centers.13 Table 28.1 reports its findings for 2006.

Table 28.1

Root causes of data breaches, 2006

Source: Privacy Rights Clearinghouse.

Comparing these results with the previous statistics, the Educational Security Incidents (ESI) Year in Review–2007, breaches caused by hackers in universities look remarkably different. Privacy Rights ClearingHouse estimates as largely prevalent the external criminal activity (hackers and theft), which accounts for 77%, and internal problems, which account for 19%, whereas in the previous study the two classes were closer with a prevalence of internal problems.

Hasan and Yurcik14 analyzed data about privacy breaches that occurred in 2005 and 2006 by fusing datasets maintained by Attrition.org and Privacy Rights ClearingHouse. The overall result partially clarifies the discrepancy that results from the previous two analyses. In particular, it emerges that considering the number of privacy breaches, education institutions are the most exposed, accounting for 35% of the total, followed by companies (25%) and state-level public agencies, medical centers, and banks (all close to 10%). However, by considering personal records lost by sector, companies lead the score with 35.5%, followed by federal agencies with 29.5%, medical centers with 16%, and banks with 11.6%. Educational institutions record a lost total of just 2.7% of the whole. Therefore, though universities are victimized by huge numbers of external attacks that cause a continuous leakage of PII, companies and federal agencies are those that have suffered or provoked ruinous losses of enormous archives of PII. For these sectors, the impact of external Internet attacks has been matched or even exceeded by internal fraud or misconduct.

The case of consumer data broker ChoicePoint, Inc., is perhaps the one that got the most publicity as an example of bad management practices that led to a huge privacy incident.15 In 2006, the Federal Trade Commission charged that ChoicePoint violated the Fair Credit Reporting Act (FCRA) by furnishing consumer reports—credit histories—to subscribers who did not have a permissible purpose to obtain them and by failing to maintain reasonable procedures to verify both their identities and how they intended to use the information.16

The opinion that threats due to hacking have been overhyped with respect to others is one shared by many in the security community. In fact, it appears that root causes of privacy breaches, physical thefts (of laptops, disks, and portable memories) and bad management practices (sloppiness, incompetence, and scarce allocation of resources) need to be considered at least as serious as hacking. This is confirmed by the survey Enterprise@Risk: 2007 Privacy & Data Protection,11 which concludes that most enterprise privacy programs are just in the early or middle stage of the maturity cycle. Requirements imposed by laws and regulations have the highest rates of implementation; operational processes, risk assessment, and training programs are less adopted. In addition, a minority of organizations seem able to implement measurable controls, a deficiency that makes privacy management intrinsically feeble. Training programs dedicated to privacy, security, and risk management look at the weakest spot. Respondents report that training on privacy and security is offered just annually (about 28%), just once (about 36.5%), or never (about 11%). Risk management is never the subject of training for almost 28% of respondents. With such figures, it is no surprise if internal negligence due to unfamiliarity with privacy problems or insufficient resources is such a relevant root cause for privacy breaches.

The ChoicePoint incident is paradigmatic of another important aspect that has been considered for analyzing privacy issues. The breach involved 163,000 records and it was carried out with the explicit intention of unauthorized parties to capture those records. However, actually, in just 800 cases (about 0.5%), that breach leads to identity theft, a severe offense suffered by ChoicePoint customers. Some analysts have questioned the actual value of privacy, which leads us to discuss an important strand of research about economic aspects of privacy.

2. The Economics of Privacy

The existence of strong economic factors that influence the way privacy is managed, breached, or even traded off has long been recognized.17,18 However, it was with the expansion of the online economy, in the 1990s and 2000s, that privacy and economy become more and more entangled. Many studies have been produced to investigate, from different perspectives and approaches, the relation between the two. A comprehensive survey of works that analyzed the economic aspects of privacy can be found in19.

Two issues among the many have gained most of the attention: assessing the value of privacy and examining to what extent privacy and business can coexist or are inevitably conflicting one with the other. For both issues the debate is still open and no ultimate conclusion has been reached yet.

The Value of Privacy

To ascertain the value of privacy on the one hand, people assign high value to their privacy when asked; on the other hand, privacy is more and more eroded and given away for small rewards. Several empirical studies have tested individuals’ behavior when they are confronted with the decision to trade off privacy for some rewards or incentives and when confronted with the decision to pay for protecting their personal information. The approaches to these studies vary, from investigating the actual economic factors that determine people’s choices to the psychological motivation and perception of risk or safety.

Syverson,20 then Shostack and Syverson,21 analyzed the apparently irrational behavior of people who claim to highly value privacy and then, in practice, are keen to release personal information for small rewards. The usual conclusion is that people are not actually able to assess the value of privacy or that they are either irrational or unaware of the risks they are taking. Though there is evidence that risks are often miscalculated or just unknown by most people, there are also some valid reasons that justify such paradoxical behavior. In particular, the analysis points to the cost of examining and understanding privacy policies and practices, which often make privacy a complex topic to manage. Another observation regards the cost of protecting privacy, which is often inaccurately allocated. Better reallocation would also provide government and business with incentives to increase rather than decrease protection of individual privacy.

One study that dates to 1999, by Culnan and Armstrong,22 investigated how firms that demonstrate to adopt fair procedures and ethical behavior can mitigate consumer concerns about privacy. Their finding was that consumers who perceive that the collection of personal information is ruled by fair procedures are more willing to release their data for marketing use. This supported the hypothesis that most privacy concerns are motivated by an unclear or distrustful stance toward privacy protection that firms often exhibit.

In 2007, Tsai et al.23 published research that addresses much the same issue. The effect of privacy concerns on online purchasing decisions has been tested and the results are again that the role of incomplete information in privacy-relevant decisions is essential. Consumers are sensitive to the way privacy is managed and to what extent a merchant is trustful. However, in another study, Grosslack and Acquisti24 found that individuals almost always choose to sell their personal information when offered small compensation rather than keep it confidential.

Hann, Lee, Hui, and Png have carried out a more analytic work in two studies about online information privacy. This strand of research25 estimated how much privacy is worth for individuals and how economic incentives, such as monetary rewards and future convenience, could influence such values. Their main findings are that individuals do not esteem privacy as an absolute value; rather, information is available to trade off for economic benefits, and that improper access and secondary use of personal information are the most important classes of privacy violation. In the second work,26 the authors considered firms that tried to mitigate privacy concerns by offering privacy policies regarding the handling and use of personal information and by offering benefits such as financial gains or convenience. These strategies have been analyzed in the context of the information processing theory of motivation, which considers how people form expectations and make decisions about what behavior to choose. Again, whether a firm may offer only partially complete privacy protection or some benefits, economic rewards and convenience have been found to be strong motivators for increasing individuals’ willingness to disclose personal information.

Therefore, most works seems to converge to the same conclusion: Whether individuals react negatively when incomplete or distrustful information about privacy is presented, even a modest monetary reward is often sufficient for disclosing one’s personal information.

Privacy and Business

The relationship between privacy and business has been examined from several angles by considering which incentives could be effective for integrating privacy with business processes and, instead, which disincentives make business motivations to prevail over privacy.

Froomkin27 analyzed what he called “privacy-destroying technologies” developed by governments and businesses. Examples of such technologies are collections of transactional data, automated surveillance in public places, biometric technologies, and tracking mobile devices and positioning systems. To further aggravate the impact on privacy of each one of these technologies, their combination and integration result in a cumulative and reinforcing effect. On this premise, Froomkin introduces the role that legal responses may play to limit this apparently unavoidable “death of privacy.”

Odlyzko28,29,30,31 is a leading author that holds a pessimistic view of the future of privacy, calling “unsolvable” the problem of granting privacy because of price discrimination pressures on the market. His argument is based on the observation that the markets as a whole, especially Internet-based markets, have strong incentives to price discriminate, that is, to charge varying prices when there are no cost justifications for the differences. This practice, which has its roots long before the advent of the Internet and the modern economy—one of the most illustrative examples is 19th-century railroad pricing practices—provides relevant economic benefits to the vendors and, from a mere economic viewpoint, to the efficiency of the economy. In general, charging different prices to different segments of the customer base permits vendors to complete transactions that would not take place otherwise. On the other hand, the public has often contrasted plain price discrimination practices since they perceive them as unfair. For this reason, many less evident price discrimination practices are in place today, among which bundling is one of the most recurrent. Privacy of actual and prospective customers is threatened by such economic pressures toward price discrimination because the more the customer base can be segmented—and thus known with greatest detail—the better efficiency is achieved for vendors. The Internet-based market has provided a new boost to such practices and to the acquisition of personal information and knowledge of customer habits.

Empirical studies seem to confirm such pessimistic views. A first review of the largest privately held companies listed in the Forbes Private 5032 and a second study of firms listed in the Fortune 50033 demonstrate a poor state of privacy policies adopted in such firms. In general, privately held companies are more likely to lack privacy policies than public companies and are more reluctant to publicly disclose their procedures relative to fair information practices. Even the larger set of the Fortune 500 firms exhibited a large majority of firms that are just mildly addressing privacy concerns.

More pragmatically, some analyses have pointed out that given the current privacy concerns, an explicitly fair management of customers’ privacy may become a positive competitive factor.34 Similarly, Hui et al.35 have identified seven types of benefits that Internet businesses can provide to consumers in exchange for their personal information.

3. Privacy-Enhancing Technologies

Technical improvements of Web and location technologies have fostered the development of online applications that use the private information of users (including physical position of individuals) to offer enhanced services. The increasing amount of available personal data and the decreasing cost of data storage and processing make it technically possible and economically justifiable to gather and analyze large amounts of data. Also, information technology gives organizations the power to manage and disclose users’ personal information without restrictions. In this context, users are much more concerned about their privacy, and privacy has been recognized as one of the main reasons that prevent users from using the Internet for accessing online services. Today’s global networked infrastructure requires the ability for parties to communicate in a secure environment while preserving their privacy. Support for digital identities and definition of privacy-enhanced protocols and techniques for their management and exchange then become fundamental requirements.

A number of useful privacy-enhancing technologies (PETs) have been developed for dealing with privacy issues, and previous works on privacy protection have focused on a wide variety of topics.36,37,38,39,40,41 In this section, we discuss the privacy protection problem in three different contexts. We start by describing languages for the specification of access control policies and privacy preferences. We then describe the problem of data privacy protection, giving a brief description of some solutions. Finally, we analyze the problem of protecting privacy in mobile and pervasive environments.

Languages for Access Control andPrivacy Preferences

Access control systems have been introduced for regulating and protecting access to resources and data owned by parties. However, the importance gained by privacy requirements has brought with it the definition of access control models that are enriched with the ability of supporting privacy requirements. These enhanced access control models encompass two aspects: to guarantee the desired level of privacy of information exchanged between different parties by controlling the access to services/resources, and to control all secondary uses of information disclosed for the purpose of access control enforcement.

In this context, many languages for access control policies and privacy preferences specification have been defined, among which eXtensible Access Control Markup Language (XACML),42 Platform for Privacy Preferences Project (P3P),38,43 and Enterprise Privacy Authorization Language (EPAL)44,45 stand out.

The eXtensible Access Control Markup Language (XACML),42 which is the result of a standardization effort by OASIS, proposes an XML-based language to express and interchange access control policies. It is not specifically designed for managing privacy, but it represents a relevant innovation in the field of access control policies and has been used as the basis for following privacy-aware authorization languages. Main features of XACML are: (1) policy combination, a method for combining policies on the same resource independently specified by different entities; (2) combining algorithms, different algorithms representing ways of combining multiple decisions into a single decision; (3) attribute-based restrictions, the definition of policies based on properties associated with subjects and resources rather than their identities; (4) multiple subjects, the definition of more than one subject relevant to a decision request; (5) policy distribution, policies can be defined by different parties and enforced at different enforcement points; (6) implementation independence, an abstraction layer that isolates the policy-writer from the implementation details; and (7) obligations,46 a method for specifying the actions that must be fulfilled in conjunction with the policy enforcement.

Platform for Privacy Preferences Project (P3P)38,43 is a World Wide Web Consortium (W3C) project aimed at protecting the privacy of users by addressing their need to assess that the privacy practices adopted by a server provider comply with users’ privacy requirements. P3P provides an XML-based language and a mechanism for ensuring that users can be informed about privacy policies of the server before the release of personal information. Therefore, P3P allows Web sites to declare their privacy practices in a standard and machine-readable XML format known as P3P policy. A P3P policy contains the specification of the data it protects, the data recipients allowed to access the private data, consequences of data release, purposes of data collection, data retention policy, and dispute resolution mechanisms. Supporting privacy preferences and policies in Web-based transactions allows users to automatically understand and match server practices against their privacy preferences. Thus, users need not read the privacy policies at every site they interact with, but they are always aware of the server practices in data handling. In summary, the goal of P3P is twofold: It allows Web sites to state their data-collection practices in a standardized, machine-readable way, and it provides users with a solution to understand what data will be collected and how those data will be used.

The corresponding language that would allow users to specify their preferences as a set of preference rules is called a P3P Preference Exchange Language (APPEL).47 APPEL can be used by users’ agents to reach automated or semiautomated decisions regarding the acceptability of privacy policies from P3P-enabled Web sites. Unfortunately, as stated in, interactions between P3P and APPEL48 had shown that users can explicitly specify just what is unacceptable in a policy, whereas the APPEL syntax is cumbersome and error prone for users.

Finally, Enterprise Privacy Authorization Language (EPAL)44,45 is another XML-based language for specifying and enforcing enterprise-based privacy policies. EPAL is specifically designed to enable organizations to translate their privacy policies into IT control statements and to enforce policies that may be declared and communicated according to P3P specifications.

In this scenario, the need for access control frameworks that integrate policy evaluation and privacy functionalities arose. A first attempt to provide a uniform framework for regulating information release over the Web has been presented by Bonatti and Samarati.49 Later a solution that introduced a privacy-aware access control framework was defined by Ardagna et al.50 This framework allows the integration, evaluation, and enforcement of policies regulating access to service/data and release of personal identifiable information, respectively, and provides a mechanism to define constraints on the secondary use of personal data for the protection of users’ privacy. In particular, the following types of privacy policies have been specified:

• Access control policies. These govern access/release of data/services managed by the party (as in traditional access control).

• Release policies. These govern release of properties/credentials/personal identifiable information (PII) of the party and specify under which conditions they can be released.

• Data handling policies. These define how personal information will be (or should be) dealt with at the receiving parties.51

An important feature of this framework is to support requests for certified data, issued and signed by trusted authorities, and uncertified data, signed by the owner itself. It also allows defining conditions that can be satisfied by means of zero-knowledge proof52,53 and based on physical position of the users.54 In the context of the Privacy and Identity Management for Europe (PRIME),55 a European Union project for which the goal is the development of privacy-aware solutions and frameworks has been created.

Data Privacy Protection

The concept of anonymity was first introduced in the context of relational databases to avoid linking between published data and users’ identity. Usually, to protect user anonymity, data holders encrypt or remove explicit identifiers such as name and Social Security number (SSN). However, data deidentification does not provide full anonymity. Released data can in fact be linked to other publicly available information to reidentify users and to infer data that should not be available to the recipients. For instance, a set of anonymized data could contain attributes that almost uniquely identify a user, such as, race, date of birth, and ZIP code. Table 28.2A and Table 28.2B show an example of where the anonymous medical data contained in a table are linked with the census data to reidentify users. It is easy to see that in Table 28.2a there is a unique tuple with a male born on 03/30/1938 and living in the area with ZIP code 10249. As a consequence, if this combination of attributes is also unique in the census data in Table 28.2b, John Doe is identified, revealing that he suffers from obesity.

Table 28.2A

Census Data

Table 28.2B

User reidentification

If in the past limited interconnectivity and limited computational power represented a form of protection against inference processes over large amounts of data, today, with the advent of the Internet, such an assumption no longer holds. Information technology in fact gives organizations the power to gather and manage vast amounts of personal information.

To address the problem of protecting anonymity while releasing microdata, the concept of k-anonymity has been defined. K-anonymity means that the observed data cannot be related to fewer than k respondents.56 Key to achieving k-anonymity is the identification of a quasi-identifier, which is the set of attributes in a dataset that can be linked with external information to reidentify the data owner. It follows that for each release of data, every combination of values of the quasi-identifier must be indistinctly matched to at least k tuples.

Two approaches to achieve k-anonymity have been adopted: generalization and suppression. These approaches share the important feature that the truthfulness of the information is preserved, that is, no false information is released.

In more detail, the generalization process generalizes some of the values stored in the table. For instance, considering the ZIP code attribute in Table 28.2B and supposing for simplicity that it represents a quasi-identifier, the ZIP code can be generalized by dropping, at each step of generalization, the least significant digit. As another example, the date of birth can be generalized by first removing the day, then the month, and eventually by generalizing the year.

On the contrary, the suppression process removes some tuples from the table. Again, considering Table 28.2B, the ZIP codes, and a k-anonymity requirement for k=2, it is clear that all tuples already satisfy the k=2 requirement except for the last one. In this case, to preserve the k=2, the last tuple could be suppressed.

Research on k-anonymity has been particularly rich in recent years. Samarati56 presented an algorithm based on generalization hierarchies and suppression that calculates the minimal generalization. The algorithm relies on a binary search on the domain generalization hierarchy to avoid an exhaustive visit of the whole generalization space. Bayardo and Agrawal57 developed an optimal bottom-up algorithm that starts from a fully generalized table (with all tuples equal) and then specializes the dataset into a minimal k-anonymous table. LeFevre et al.58 are the authors of Incognito, a framework for providing k-minimal generalization. Their algorithm is based on a bottom-up aggregation along dimensional hierarchies and a priori aggregate computation. The same authors59 also introduced Mondrian k-anonymity, which models the tuples as points in d-dimensional spaces and applies a generalization process that consists of finding the minimal multidimensional partitioning that satisfy the k preference.

Although there are advantages of k-anonymity for protecting respondents’ privacy, some weaknesses have been demonstrated. Machanavajjhala et al.60 identified two successful attacks to k-anonymous table: the homogeneity attack and the background knowledge attack. To explain the homogeneity attack, suppose that a k-anonymous table contains a single sensitive attribute. Suppose also that all tuples with a given quasi-identifier value have the same value for that sensitive attribute, too. As a consequence, if the attacker knows the quasi-identifier value of a respondent, the attacker is able to learn the value of the sensitive attribute associated with the respondent. For instance, consider the 2-anonymous table shown in Table 28.3 and assume that an attacker knows that Alice is born in 1966 and lives in the 10212 ZIP code. Since all tuples with quasi-identifier <1966,F,10212> suffer anorexia, the attacker can infer that Alice suffers anorexia. Focusing on the background knowledge attack, the attacker exploits some a priori knowledge to infer some personal information. For instance, suppose that an attacker knows that Bob has quasi-identifier <1984,M,10249> and that Bob is overweight. In this case, from Table 28.3, the attacker can infer that Bob suffers from HIV.

Table 28.3

An example of a 2-Anonymous table

To neutralize these attacks, the concept of l-diversity has been introduced.60 In particular, a cluster of tuples with the same quasi-identifier is said to be l-diverse if it contains at least l different values for the sensitive attribute (disease, in the example in Table 28.3). If a k-anonymous table is l-diverse, the homogeneity attack is ineffective, since each block of tuples has at least l>=2 distinct values for the sensitive attribute. Also, the background knowledge attack becomes more complex as l increases.

Although l-diversity protects data against attribute disclosure, it leaves space for more sophisticated attacks based on the distribution of values inside clusters of tuples with the same quasi-identifier.61 To prevent this kind of attack, the t-closeness requirement has been defined. In particular, a cluster of tuples with the same quasi-identifier is said to satisfy t-closeness if the distance between the probabilistic distribution of the sensitive attribute in the cluster and the one in the original table is lower than t. A table satisfies t-closeness if all its clusters satisfy t-closeness.

In the next section, where the problem of location privacy protection is analyzed, we also discuss how the location privacy protection problem has adapted the k-anonymity principle to a pervasive and distributed scenario, where users move on the field carrying a mobile device.

Privacy for Mobile Environments

The widespread diffusion of mobile devices and the accuracy and reliability achieved by positioning techniques make available a great amount of location information about users. Such information has been used for developing novel location-based services. However, if on one side such a pervasive environment provides many advantages and useful services to the users, on the other side privacy concerns arise, since users could be the target of fraudulent location-based attacks. The most pessimistic have even predicted that the unrestricted and unregulated availability of location technologies and information could lead to a “Big Brother” society dominated by total surveillance of individuals.

The concept of location privacy can be defined as the right of individuals to decide how, when, and for which purposes their location information could be released to other parties. The lack of location privacy protection could be exploited by adversaries to perform various attacks62:

• Unsolicited advertising, when the location of a user could be exploited, without her consent, to provide advertisements of products and services available nearby the user position

• Physical attacks or harassment, when the location of a user could allow criminals to carry out physical assaults on specific individuals

• User profiling, when the location of a user could be used to infer other sensitive information, such as state of health, personal habits, or professional duties, by correlating visited places or paths

• Denial of service, when the location of a user could motivate an access denial to services under some circumstances

A further complicating factor is that location privacy can assume several meanings and introduce different requirements, depending on the scenario in which the users are moving and on the services the users are interacting with. The following categories of location privacy can then be identified:

• Identity privacy protects the identities of the users associated with or inferable from location information. To this purpose, protection techniques aim at minimizing the disclosure of data that can let an attacker infer a user identity. Identity privacy is suitable in application contexts that do not require the identification of the users for providing a service.

• Position privacy protects the position information of individual users by perturbing corresponding information and decreasing the accuracy of location information. Position privacy is suitable for environments where users’ identities are required for a successful service provisioning. A technique that most solutions exploit, either explicitly or implicitly, consists of reducing the accuracy by scaling a location to a coarser granularity (from meters to hundreds of meters, from a city block to the whole town, and so on).

• Path privacy protects the privacy of information associated with individuals movements, such as the path followed while travelling or walking in an urban area. Several location-based services (personal navigation systems) could be exploited to subvert path privacy or to illicitly track users.

Since location privacy definition and requirements differ depending on the scenario, no single technique is able to address the requirements of all the location privacy categories. Therefore, in the past, the research community focusing on providing solutions for the protection of location privacy of users has defined techniques that can be divided into three main classes: anonymity-based, obfuscation-based, and policy-based techniques. These classes of techniques are partially overlapped in scope and could be potentially suitable to cover requirements coming from one or more of the categories of location privacy. It is easy to see that anonymity-based and obfuscation-based techniques can be considered dual categories. Anonymity-based techniques have been primarily defined to protect identity privacy and are not suitable for protecting position privacy, whereas obfuscation-based techniques are well suited for position protection and not appropriate for identity protection. Anonymity-based and obfuscation-based techniques could also be exploited for protecting path privacy. Policy-based techniques are in general suitable for all the location privacy categories, although they are often difficult for end users to understand and manage.

Among the class of techniques just introduced, current research on location privacy has mainly focused on supporting anonymity and partial identities. Beresford and Stajano63,64 proposed a method, called mix zones, which uses an anonymity service based on an infrastructure that delays and reorders messages from subscribers. Within a mix zone (i.e., an area where a user cannot be tracked), a user is anonymous in the sense that the identities of all users coexisting in the same zone are mixed and become indiscernible. Other works are based on the concept of k-anonymity. Bettini et al.65 designed a framework able to evaluate the risk of sensitive location-based information dissemination. Their proposal puts forward the idea that the geo-localized history of the requests submitted by a user can be considered as a quasi-identifier that can be used to discover sensitive information about the user. Gruteser and Grunwald66 developed a middleware architecture and an adaptive algorithm to adjust location information resolution, in spatial or temporal dimensions, to comply with users’ anonymity requirements. To this purpose, the authors introduced the concepts of spatial cloaking. Spatial cloaking guarantees the k-anonymity by enlarging the area where a user is located to an area containing k indistinguishable users. Gedik and Liu67 described another k-anonymity model aimed at protecting location privacy against various privacy threats. In their proposal, each user is able to define the minimum level of anonymity and the maximum acceptable temporal and spatial resolution for her location measurement. Mokbel et al.68 designed a framework, named Casper, aimed at enhancing traditional location-based servers and query processors with anonymous services, which satisfies both k-anonymity and spatial user preferences in terms of the smallest location area that can be released. Ghinita et al.69 proposed PRIVE, a decentralized architecture for preserving query anonymization, which is based on the definition of k-anonymous areas obtained exploiting the Hilbert space-filling curve. Finally, anonymity has been exploited to protect the path privacy of the users70,71,72 Although interesting, these solutions are still at an early stage of development.

Alternatively, when the users’ identity is required for location-based service provision, obfuscation-based techniques has been deployed. The first work providing an obfuscation-based technique for protecting location privacy was by Duckham and Kulik.62 In particular, their framework provides a mechanism for balancing individual needs for high-quality information services and for location privacy. The idea is to degrade location information quality by adding n fake positions to the real user position. Ardagna et al.73 defined different obfuscation-based techniques aimed at preserving location privacy by artificially perturbing location information. These techniques degrade the location information accuracy by (1) enlarging the radius of the measured location, (2) reducing the radius, and (3) shifting the center. In addition, a metric called relevance is used to evaluate the level of location privacy and balance it with the accuracy needed for the provision of reliable location-based services.

Finally, policy-based techniques are based on the notion of privacy policies and are suitable for all the categories of location privacy. In particular, privacy policies define restrictions that must be enforced when location of users is used by or released to external parties. The IETF Geopriv working group74 addresses privacy and security issues related to the disclosure of location information over the Internet. The main goal is to define an environment supporting both location information and policy data.

4. Network Anonymity

The wide diffusion of the Internet for many daily activities has enormously increased interest in security and privacy issues. In particular, in such a distributed environment, privacy should also imply anonymity: a person shopping online may not want her visits to be tracked, the sending of email should keep the identities of the sender and the recipient hidden from observers, and so on. That is, when surfing the Web, users want to keep secret not only the information they exchange but also the fact that they are exchanging information and with whom. Such a problem has to do with traffic analysis, and it requires ad hoc solutions. Traffic analysis is the process of intercepting and examining messages to deduce information from patterns in communication. It can be performed even when the messages are encrypted and cannot be decrypted. In general, the greater the number of messages observed or even intercepted and stored, the more can be inferred from the traffic. It cannot be solved just by encrypting the header of a packet or the payload: In the first case, the packet could still be tracked as it moves through the network; the second case is ineffective as well since it would still be possible to identify who is talking to whom.

In this section, we first describe the onion routing protocol,75,76,77 one of the better-known approaches that is not application-oriented. Then we provide an overview of other techniques for assuring anonymity and privacy over networks. The key approaches we discuss are mix networks,78,79 the Crowds system,80 and the Freedom network.81

Onion Routing

Onion routing is intended to provide real-time bidirectional anonymous connections that are resistant to both eavesdropping and traffic analysis in a way that’s transparent to applications. That is, if Alice and Bob communicate over a public network by means of onion routing, they are guaranteed that the content of the message remains confidential and no external observer or internal node is able to infer that they are communicating.

Onion routing works beneath the application layer, replacing socket connections with anonymous connections and without requiring any change to proxy-aware Internet services or applications. It was originally implemented on Sun Solaris 2.4 in 1997, including proxies for Web browsing (HTTP), remote logins (rlogin), email (SMTP), and file transfer (FTP). The Tor82 generation 2 onion routing implementation runs on most common operating systems. It consists of a fixed infrastructure of onion routers, where each router has a longstanding socket connection to a set of neighboring ones. Only a few routers, called onion router proxies, know the whole infrastructure topology. In onion routing, instead of making socket connections directly to a responding machine, initiating applications make a socket connection to an onion routing proxy that builds an anonymous connection through several other onion routers to the destination. In this way, the onion routing network allows the connection between the initiator and responder to remain anonymous. Although the protocol is called onion routing, the routing that occurs during the anonymous connection is at the application layer of the protocol stack, not at the IP layer. However, the underlying IP network determines the route that data actually travels between individual onion routers. Given the onion router infrastructure, the onion routing protocol works in three phases:

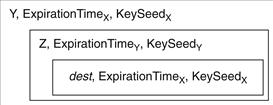

During the first phase, the initiator application, instead of connecting directly with the destination machine, opens a socket connection with an onion routing proxy (which may reside in the same machine, in a remote machine, or in a firewall machine). The proxy first establishes a path to the destination in the onion router infrastructure, then sends an onion to the first router of the path. The onion is a layered data structure in which each layer of the onion (public-key encrypted) is intended for a particular onion router and contains (1) the identity of the next onion router in the path to be followed by the anonymous connection; (2) the expiration time of the onion; and (3) a key seed to be used to generate the keys to encode the data sent through the anonymous connection in both directions. The onion is sent through the path established by the proxy: an onion router that receives an onion peels off its layer, identifies the next hop, records on a table the key seed, the expiration time and the identifiers of incoming and outgoing connections and the keys that are to be applied, pads the onion and sends it to the next onion router. Since the most internal layer contains the name of the destination machine, the last router of the path will act as the destination proxy and open a socket connection with the destination machine. Note that only the intended onion router is able to peel off the layer intended to it. In this way, each intermediate onion router knows (and can communicate with) only the previous and the next-hop router. Moreover, it is not capable of understanding the content of the following layers of the onion. The router, and any external observer, cannot know a priori the length of the path since the onion size is kept constant by the fact that each intermediate router is obliged to add padding to the onion corresponding to the fixed-size layer that it removed.

Figure 28.2 shows an onion for an anonymous connection following route WXYZ; the router infrastructure is as depicted in Figure 28.3, with W the onion router proxy.

Figure 28.2 Onion message.

Figure 28.3 Onion routing network infrastructure.

Once the anonymous connection is established, data can be sent in both directions. The onion proxy receives data from the initiator application, breaks it into fixed-size packets, and adds a layer of encryption for each onion router in the path using the keys specified in the onion. As data packets travel through the anonymous connection, each intermediate onion router removes one layer of encryption. The last router in the path sends the plaintext to the destination through the socket connection that was opened during the setup phase. This encryption layering occurs in the reverse order when data is sent backward from the destination machine to the initiator application. In this case, the initiator proxy, which knows both the keys and the path, will decrypt each layer and send the plaintext to the application using its socket connection with the application. As for the onion, data passed along the anonymous connection appears different to each intermediate router and external observer, so it cannot be tracked. Moreover, compromised onion routers cannot cooperate to correlate the data stream they see.

When the initiator application decides to close the socket connection with the proxy, the proxy sends a destroy message along the anonymous connection and each router removes the entry of the table relative to that connection.

There are several advantages in the onion routing protocol. First, the most trusted element of the onion routing infrastructure is the initiator proxy, which knows the network topology and decides the path used by the anonymous connection. If the proxy is moved in the initiator machine, the trusted part is under the full control of the initiator. Second, the total cryptographic overhead is the same as for link encryption but, whereas in link encryption one corrupted router is enough to disclose all the data, in onion routing routers cannot cooperate to correlate the little they know and disclose the information. Third, since an onion has an expiration time, replay attacks are not possible. Finally, if anonymity is also desired, then all identifying information must be additionally removed from the data stream before being sent over the anonymous connection. However, onion routing is not completely invulnerable to traffic analysis attacks: if a huge number of messages between routers is recorded and usage patterns analyzed, it would be possible to make a close guess about the routing, that is, also about the initiator and the responder. Moreover, the topology of the onion router infrastructure must be static and known a priori by at least one onion router proxy, which make the protocol little adaptive to node/router failures.

Tor82 generation 2 onion routing addresses some of the limitations highlighted earlier, providing a reasonable trade-off among anonymity, usability, and efficiency. In particular, it provides perfect forward secrecy and it does not require a proxy for each supported application protocol.

Anonymity Services

Some other approaches offer some possibilities for providing anonymity and privacy, but they are still vulnerable to some types of attacks. For instance, many of these approaches are designed for World Wide Web access only; being protocol-specific, these approaches may require further development to be used with other applications or Internet services, depending on the communication protocols used in those systems.

David Chaum78,79 introduced the idea of mix networks in 1981 to enable unobservable communication between users of the Internet. Mixes are intermediate nodes that may reorder, delay, and pad incoming messages to complicate traffic analysis. A mix node stores a certain number of incoming messages that it receives and sends them to the next mix node in a random order. Thus, messages are modified and reordered in such a way that it is nearly impossible to correlate an incoming message with an outgoing message. Messages are sent through a series of mix nodes and encrypted with mix keys. If participants exclusively use mixes for sending messages to each other, their communication relations will be unobservable, even if the attacker records all network connections. Also, without additional information, the receiver does not have any clue about the identity of the message’s sender. As in onion routing, each mix node knows only the previous and next node in a received message’s route. Hence, unless the route only goes through a single node, compromising a mix node does not enable an attacker to violate either the sender nor the recipient privacy. Mix networks are not really efficient, since a mix needs to receive a large group of messages before forwarding them, thus delaying network traffic. However, onion routing has many analogies with this approach and an onion router can be seen as a real-time Chaum mix.

Reiter and Rubin80 proposed an alternative to mixes, called crowds, a system to make only browsing anonymous, hiding from Web servers and other parties information about either the user or the information she retrieves. This is obtained by preventing a Web server from learning any information linked to the user, such as the IP address or domain name, the page that referred the user to its site, or the user’s computing platform. The approach is based on the idea of “blending into a crowd,” that is, hiding one’s actions within the actions of many others. Before making any request, a user joins a crowd of other users. Then, when the user submits a request, it is forwarded to the final destination with probability p and to some other member of the crowd with probability 1-p. When the request is eventually submitted, the end server cannot identify its true initiator. Even crowd members cannot identify the initiator of the request, since the initiator is indistinguishable from a member of the crowd that simply passed on a request from another.

Freedom network81 is an overlay network that runs on top of the Internet, that is, on top of the application layer. The network is composed of a set of nodes called anonymous Internet proxies, which run on top of the existing infrastructure. As for onion routing and mix networks, the Freedom network is used to set up a communication channel between the initiator and the responder, but it uses different techniques to encrypt the messages sent along the channel.

5. Conclusion

In this chapter we discussed net privacy from different viewpoints, from historical to technological. The very nature of the concept of privacy requires such an enlarged perspective because it often appears indefinite, being constrained into the tradeoff between the undeniable need of protecting personal information and the evident utility, in many contexts, of the availability of the same information. The digital society and the global interconnected infrastructure eased accessing and spreading of personal information; therefore, developing technical means and defining norms and fair usage procedures for privacy protection are now more demanding than in the past.

Economic aspects have been introduced since they are likely to strongly influence the way privacy is actually managed and protected. In this area, research has provided useful insights about the incentive and disincentives toward better privacy.

We presented some of the more advanced solutions that research has developed to date, either for anonymizing stored data, hiding sensitive information in artificially inaccurate clusters, or introducing third parties and middleware in charge of managing online transactions and services in a privacy-aware fashion. Location privacy is a topic that has gained importance in recent years with the advent of mobile devices and that is worth a specific consideration.

Furthermore, the important issue of anonymity over the Net has been investigated. To let individuals surf the Web, access online services, and interact with remote parties in an anonymous way has been the goal of many efforts for years. Some important technologies and tools are available and are gaining popularity.

To conclude, whereas privacy over the Net and in the digital society does not look to be in good shape, the augmented sensibility of individuals to its erosion, the many scientific and technological efforts to introduce novel solutions, and a better knowledge of the problem with the help of fresh data contribute to stimulating the need for better protection and fairer use of personal information. For this reason, it is likely that Net privacy will remain an important topic in the years to come and more innovations toward better management of privacy issues will emerge.

1P. Sprenger, “Sun on privacy: ‘get over it,’” WIRED, 1999, www.wired.com/politics/law/news/1999/01/17538.

2S. D. Warren, and L. D. Brandeis, “The right to privacy,” Harvard Law Review, Vol. IV, No. 5, 1890.

3United Nations, Universal Declaration of Human Rights, 1948, www.un.org/Overview/rights.html.

4P. Steiner, “On the Internet, nobody knows you’re a dog,” Cartoonbank, The New Yorker, 1993, www.cartoonbank.com/item/22230.

5A. Pfitzmann, and M. Waidner, “Networks without user observability – design options,” Proceedings of Workshop on the Theory and Application of Cryptographic Techniques on Advances in Cryptology (EuroCrypt’85), Vol. 219 LNCS Springer, Linz, Austria, pp. 245–253, 1986.

6A. Pfitzmann, and M. Köhntopp, “Anonymity, unobservability, and pseudonymity—a proposal for terminology,” in Designing Privacy Enhancing Technologies, Springer Berlin, pp. 1–9, 2001.

7L. Lessig, Free Culture, Penguin Group, 2003, www.free-culture.cc/.

8California Security Breach Notification Law, Bill Number: SB 1386, February 2002, http://info.sen.ca.gov/pub/01-02/bill/sen/sb_1351-1400/sb_1386_bill_20020926_chaptered.html.

9Attrition.org Data Loss Archive and Database (DLDOS), 2008, http://attrition.org/dataloss/.

10Etiolated.org, “Shedding light on who’s doing what with your private information,” 2008, http://etiolated.org/.

11Deloitte & Touche LLP and Ponemon Institute LLC, Enterprise@Risk: 2007 Privacy & Data Protection Survey, 2007, www.deloitte.com/dtt/cda/doc/content/us_risk_s%26P_2007%20Privacy10Dec2007final.pdf.

12A. Dodge, Educational Security Incidents (ESI) Year in Review 2007, 2008. www.adamdodge.com/esi/yir_2006.

13Privacy Rights ClearingHouse, Chronology of Data Breaches 2006: Analysis, 2007, www.privacyrights.org/ar/DataBreaches2006-Analysis.htm.

14R. Hasan, and W. Yurcik, “Beyond media hype: Empirical analysis of disclosed privacy breaches 2005–2006 and a dataset/database foundation for future work,” Proceedings of Workshop on the Economics of Securing the Information Infrastructure, Washington, 2006.

15S. D. Scalet, “The five most shocking things about the ChoicePoint data security breach,” CSO online, 2005, www.csoonline.com/article/220340.

16Federal Trade Commission (FTC), “ChoicePoint settles data security breach charges; to pay $10 million in civil penalties, $5 million for consumer redress, 2006,” www.ftc.gov/opa/2006/01/choicepoint.shtm.

17J. Hirshleifer, “The private and social value of information and the reward to inventive activity,” American Economic Review, Vol. 61, pp. 561–574, 1971.

18R. A. Posner, “The economics of privacy,” American Economic Review, Vol. 71, No. 2, pp. 405–409, 1981.

19K. L. Hui, and I. P. L. Png, “Economics of privacy,” in terrence hendershott (ed.), Handbooks in Information Systems, Vol. 1, Elsevier, pp. 471–497, 2006.

20P. Syverson, “The paradoxical value of privacy,” Proceedings of the 2nd Annual Workshop on Economics and Information Security (WEIS 2003), 2003.

21A. Shostack, and P. Syverson, “What price privacy? (and why identity theft is about neither identity nor theft),” In Economics of Information Security, Chapter 11, Kluwer Academic Publishers, 2004.

22M. Culnan, and P. Armstrong, “Information privacy concerns, procedural fairness, and impersonal trust: an empirical evidence,” Organization Science, Vol. 10, No. 1, pp. 104–1, 51999.

23J. Tsai, S. Egelman, L. Cranor, and A. Acquisti, “The effect of online privacy information on purchasing behavior: an experimental study,” Workshop on Economics and Information Security (WEIS 2007), 2007.

24J. Grossklags, and A. Acquisti, “When 25 cents is too much: an experiment on willingness-to-sell and willingness-to-protect personal information,” Proceedings of Workshop on the Economics of Information Security (WEIS), Pittsburgh, 2007.

25Il-H. Hann, K. L. Hui, T. S. Lee, and I. P. L. Png, “Online information privacy: measuring the cost-benefit trade-off,” Proceedings, 23rd International Conference on Information Systems, Barcelona, Spain, 2002.

26Il-H. Hann, K. L. Hui, T. S. Lee, and I. P. L. Png, “Analyzing online information privacy concerns: an information processing theory approach,” Journal of Management Information Systems, Vol. 24, No. 2, pp. 13–42, 2007.

27A. M. Froomkin, “The death of privacy?,” 52 Stanford Law Review, pp. 1461–1469, 2000.

28Odlyzko, A. M., “Privacy, economics, and price discrimination on the internet,” Proceedings of the Fifth International Conference on Electronic Commerce (ICEC2003), N. Sadeh (ed.), ACM, pp. 355–366, 2003.

29A. M. Odlyzko, “The unsolvable privacy problem and its implications for security technologies,” Proceedings of the 8th Australasian Conference on Information Security and Privacy (ACISP 2003), R. Safavi-Naini and J. Seberry (eds.), Lecture Notes in Computer Science 2727, Springer, pp. 51–54, 2003.

30A. M. Odlyzko, “The evolution of price discrimination in transportation and its implications for the Internet,” Review of Network Economics, Vol. 3, No. 3, pp. 323–346, 2004.

31A. M. Odlyzko, “Privacy and the clandestine evolution of ecommerce,” Proceedings of the Ninth International Conference on Electronic Commerce (ICEC2007), ACM, 2007.

32A. R. Peslak, “Privacy policies of the largest privately held companies: a review and analysis of the Forbes private 50,” Proceedings of the ACM SIGMIS CPR Conference on Computer Personnel Research, Atlanta, 2005.

33K. S. Schwaig, G. C. Kane, and V. C. Storey, “Compliance to the fair information practices: how are the fortune 500 handling online privacy disclosures?,” Inf. Manage., Vol. 43, No. 7, pp. 805–820, 2006.

34M. Brown, and R. Muchira, “Investigating the relationship between internet privacy concerns and online purchase behavior,” Journal Electron. Commerce Res, Vol. 5, No. 1, pp. 62–70, 2004.

35K. L. Hui, B. C. Y. Tan, and C. Y. Goh, “Online information disclosure: Motivators and measurements,” ACM Transaction on Internet Technologies, Vol. 6, No. 4, pp. 415–441, 2006.

36C. A. Ardagna, E. Damiani, S. De Capitani di Vimercati, and P. Samarati, “Toward privacy-enhanced authorization policies and languages,” Proceedings of the 19th IFIP WG11.3 Working Conference on Data and Application Security, Storrs, CT, pp. 16–27, 2005.

37R. Chandramouli, “Privacy protection of enterprise information through inference analysis,” Proceedings of IEEE 6th International Workshop on Policies for Distributed Systems and Networks (POLICY 2005), Stockholm, Sweden, pp. 47–56, 2005.

38L. F. Cranor, Web Privacy with P3P, O’Reilly & Associates, 2002.

39G. Karjoth, and M. Schunter, “Privacy policy model for enterprises,” Proceedings of the 15th IEEE Computer Security Foundations Workshop, Cape Breton, Nova Scotia, pp. 271–281, 2002.

40B. Thuraisingham, “Privacy constraint processing in a privacy-enhanced database management system,” Data & Knowledge Engineering, Vol. 55, No. 2, pp. 159–188, 2005.

41M. Youssef, V. Atluri, and N. R. Adam, “Preserving mobile customer privacy: An access control system for moving objects and customer profiles,” Proceedings of the 6th International Conference on Mobile Data Management (MDM 2005), Ayia Napa, Cyprus, pp. 67–76, 2005.

42eXtensible Access Control Markup Language (XACML) Version 2.0, February 2005, http://docs.oasis-open.org/xacml/2.0/access_control-xacml-2.0-core-spec-os.pdf.

43World Wide Web Consortium (W3C), Platform for privacy preferences (P3P) project, 2002, www.w3.org/TR/P3P/.

44P. Ashley, S. Hada, G. Karjoth, and M. Schunter, “E-P3P privacy policies and privacy authorization,” Proceedings of the ACM Workshop on Privacy in the Electronic Society (WPES 2002), Washington, pp. 103–109, 2002.

45P. Ashley, S. Hada, G. Karjoth, C. Powers, and M. Schunter, “Enterprise privacy authorization language (epal 1.1),” 2003, www.zurich.ibm.com/security/enterprise-privacy/epal.

46C. Bettini, S. Jajodia, X. S. Wang, and D. Wijesekera, “Provisions and obligations in policy management and security applications,” Proceedings of 28th Conference Very Large Data Bases (VLDB ’02), Hong Kong, pp. 502–513, 2002.

47World Wide Web Consortium (W3C). A P3P Preference Exchange Language 1.0 (APPEL1.0), 2002, www.w3.org/TR/P3P-preferences/.

48R. Agrawal, J., Kiernan, R., Srikant, and Y. Xu, “An XPath based preference language for P3P,” Proceedings of the 12th International World Wide Web Conference, Budapest, Hungary, pp. 629–639, 2003.

49P. Bonatti, and P. Samarati, “A unified framework for regulating access and information release on the web,” Journal of Computer Security, Vol. 10, No. 3, pp. 241–272, 2002.

50C. A. Ardagna, M. Cremonini, S. De Capitani di Vimercati, and P. Samarati, “A privacy-aware access control system,” Journal of Computer Security, 2008.

51C. A. Ardagna, S. De Capitani di Vimercati, and P. Samarati, “Enhancing user privacy through data handling policies,” Proceedings of the 20th Annual IFIP WG 11.3 Working Conference on Data and Applications Security, Sophia Antipolis, France, pp. 224–236, 2006.

52J. Camenisch, and A. Lysyanskaya, “An efficient system for non-transferable anonymous credentials with optional anonymity revocation,” Proceedings of the International Conference on the Theory and Application of Cryptographic Techniques (EUROCRYPT 2001), Innsbruck, Austria, pp. 93–118, 2001.

53J. Camenisch, and E. Van Herreweghen, “Design and implementation of the idemix anonymous credential system,” Proceedings of the 9th ACM Conference on Computer and Communications Security (CCS 2002), Washington, pp. 21–30, 2002.

54C. A. Ardagna, M. Cremonini, E. Damiani, S. De Capitani di Vimercati, and P. Samarati, “Supporting location-based conditions in access control policies,” Proceedings of the ACM Symposium on Information, Computer and Communications Security (ASIACCS ’06), Taipei, pp. 212–222, 2006.

55Privacy and Identity Management for Europe (PRIME), 2004, www.prime-project.eu.org/.

56P. Samarati, “Protecting respondents’ identities in microdata release,” IEEE Transactions on Knowledge and Data Engineering, Vol. 13, No. 6, pp. 1010–1027, 2001.

57R. J. Bayardo, and R. Agrawal, “Data privacy through optimal k-anonymization,” Proceedings of the 21st International Conference on Data Engineering (ICDE’05), Tokyo, pp. 217–228, 2005.

58K. LeFevre, D. J. DeWitt, and R. Ramakrishnan, “Incognito: Efficient full-domain k-anonymity,” Proceedings of the 24th ACM SIGMOD International Conference on Management of Data, Baltimore, pp. 49–60, 2005.

59K. LeFevre, D. J. DeWitt, and R. Ramakrishnan, “Mondrian multidimensional k-anonymity,” Proceedings of the 22nd International Conference on Data Engineering (ICDE’06), Atlanta, 2006.

60A. Machanavajjhala, J. Gehrke, D. Kifer, and M. Venkitasubramaniam, “l-diversity: Privacy beyond k-anonymity,” Proceedings of the International Conference on Data Engineering (ICDE ’06), Atlanta, 2006.

61N. Li, T. Li, and S. Venkatasubramanian, “t-closeness: Privacy beyond k-anonymity and l-diversity,” Proceedings of the 23nd International Conference on Data Engineering, Istanbul, Turkey, pp. 106–115, 2007.

62M. Duckham, and L. Kulik, “Location privacy and location-aware computing,” Dynamic & Mobile GIS: Investigating Change in Space and Time, pp. 34–51, Taylor & Francis, 2006.

63A. R. Beresford, and F. Stajano, “Location privacy in pervasive computing,” IEEE Pervasive Computing, vol. 2, no. 1, pp. 46–55, 2003.

64A. R. Beresford, and F. Stajano, “Mix zones: User privacy in location-aware services,” Proceedings of the 2nd IEEE Annual Conference on Pervasive Computing and Communications Workshops (PERCOMW04), Orlando, pp. 127–131, 2004.

65C. Bettini, X. S. Wang, and S. Jajodia, “Protecting privacy against location-based personal identification,” Proceedings of the 2nd VLDB Workshop on Secure Data Management (SDM’05), Trondheim, Norway, pp. 185–199, 2005.

66M. Gruteser, and D. Grunwald, “Anonymous usage of location-based services through spatial and temporal cloaking,” Proceedings of the 1st International Conference on Mobile Systems, Applications, and Services (MobiSys), San Francisco, pp. 31–42, 2003.

67B. Gedik, and L. Liu, “Protecting location privacy with personalized k-anonymity: Architecture and algorithms,” IEEE Transactions on Mobile Computing, vol. 7, no. 1, pp. 1–18, 2008.

68M. F. Mokbel, C. Y. Chow, and W. G. Aref, “The new Casper: Query processing for location services without compromising privacy,” Proceedings of the 32nd International Conference on Very Large Data Bases (VLDB 2006), Seoul, South Korea, pp. 763–774, 2006.

69G. Ghinita, P. Kalnis, and S. Skiadopoulos, “PrivE: Anonymous location-based queries in distributed mobile systems,” Proceedings of the International World Wide Web Conference (WWW 2007), Banff, Canada, pp. 371–380, 2007.

70M. Gruteser, J. Bredin, and D. Grunwald, “Path privacy in location-aware computing,” Proceedings of the Second International Conference on Mobile Systems, Application and Services (MobiSys2004), Boston, 2004.