Capacity analysis and performance optimization are two intertwined processes that are too often neglected; however, these processes address common questions when you are faced with designing or supporting a network environment. The reasons for the neglect are endless, but most often IT professionals are simply tied up with daily administration and firefighting, or the perception of mystery surrounding these procedures is intimidating. These processes require experience and insight. Equally important is the fact that handling these processes is an art, but it doesn’t require a crystal ball. If you invest time in these processes, you will spend less time troubleshooting or putting out fires, thus making your life less stressful and also reducing business costs.

Much of capacity analysis consists of minimizing unknown or immeasurable variables, such as the number of gigabytes or terabytes of storage the system will need in the next few months or years, so that you can size a system adequately. There are so many unknown variables largely because network environments, business policies, and people are constantly changing. As a result, capacity analysis is as much an art as it is a matter of experience and insight.

If you’ve ever had to specify configuration requirements for a new server, or to estimate whether a configuration will have enough power to sustain various workloads now and in the foreseeable future, you know how much proper capacity analysis can help with design and configuration. Capacity-analysis processes help weed out the unknowns and help you make decisions as accurately as possible by giving you a greater understanding of your Windows Server 2003 environment. You can then apply that understanding when designing and supporting an infrastructure. The result is that you gain more control over the environment, reduce maintenance and support costs, minimize firefighting, and make more efficient use of your time.

Businesses depend on network systems for a variety of operations, such as performing transactions or providing security, so that they can function as efficiently as possible. Systems that are underutilized are probably wasting money and are of little value. On the other hand, systems that are overworked or can’t handle their workloads prevent the business from completing tasks or transactions in a timely manner, may cause a loss of opportunity, or may keep users from being productive. Either way, such systems are of little benefit to the business. To keep network systems well tuned for their workloads, capacity analysis seeks a balance between the resources available and the workload demanded of those resources. That balance provides just the right amount of computing power for current and anticipated workloads.

This concept of balancing resources extends beyond the technical details of server configuration to include issues such as gauging the number of administrators that may be needed to maintain various systems in your environment. Many of these questions relate to capacity analysis, and the answers aren’t readily known because they can’t be predicted with complete accuracy.

To lessen the burden and dispel some of the mysteries of estimating resource requirements, capacity analysis provides the processes to guide you. These processes include vendor guidelines, industry benchmarks, analysis of present system resource utilization, and more. Through these processes, you’ll gain as much understanding as possible of the network environment and step away from the compartmentalized or limited understanding of the systems. In turn, you’ll also gain more control over the systems and increase your chances of successfully maintaining the reliability, serviceability, and availability of your system.

There is no set or formal way to start your capacity-analysis processes. However, a proven and effective means to begin to proactively manage your system is to first establish system-wide policies and procedures. Policies and procedures, discussed shortly, help shape service levels and users’ expectations. After these policies and procedures are classified and defined, you can more easily start characterizing system workloads, which will help gauge acceptable baseline performance values.

The benefits of capacity analysis are considerable. Capacity analysis helps define and gauge overall system health by establishing baseline performance values, and it then provides valuable insight into where the system is heading. It can uncover both current and potential bottlenecks and can reveal how changes in management activities may affect performance today and tomorrow. It also allows you to identify and resolve performance issues proactively, before they become problems or are noticed by management.

Another benefit of capacity analysis is that it applies to small environments and scales well to enterprise-level systems. The effort needed to get the capacity-analysis processes started will vary depending on the size of your environment, its geography, and its political divisions. With a little upfront effort, you’ll save time and expense and gain a wealth of knowledge about, and control over, the network environment.

As mentioned earlier, it is recommended that you begin by defining policies and procedures regarding service levels and objectives. Because each environment varies in design, the policies that you create can’t be cookie-cutter; you need to tailor them to your particular business practices and environment. In addition, you should strive to create policies that set user expectations and, more importantly, reduce the amount of empirical data you must sift through.

Essentially, policies and procedures define how the system is supposed to be used—establishing guidelines to help users understand that the system can’t be used in any way they see fit. Many benefits are derived from these policies and procedures. For example, in an environment where policies and procedures are working successfully and where network performance becomes sluggish, it would be safer to assume that groups of people weren’t playing a multiuser network game, that several individuals weren’t sending enormous email attachments to everyone in the global address list, or that a rogue Web or FTP server wasn’t placed on the network.

The network environment is shaped more by the business than by the IT department. Therefore, it’s equally important to understand users’ expectations and requirements through interviews, questionnaires, surveys, and similar means. Examples of end-user policies and procedures that you could implement in your environment include the following:

Message size can’t exceed 2MB.

Beta software can be installed only on lab equipment (that is, not on client machines or servers in the production environment).

All computing resources are for business use only (in other words, no gaming or personal use of computers is allowed).

Only certain applications will be supported and allowed on the network.

All home directories will be limited to 300MB per user.

Users must either fill out the technical support Outlook form or request assistance through the advertised help desk phone number.

Policies and procedures, however, aren’t just for your end users. They can also be established and applied to IT personnel. In this scenario, policies and procedures can serve as guidelines for technical issues, rules of engagement, or simply an internal set of rules to abide by. The following list provides some examples of policies and procedures that might be applied to the IT personnel:

System backups must include system state data and should be completed by 5:00 a.m. each workday.

Routine system maintenance should be performed only on Saturday mornings between 5:00 and 8:00 a.m.

Basic technical support requests should be attended to within two business days.

Priority technical support requests should be attended to within four hours of the request.

Technical support staff should try Remote Desktop on client machines before attempting to solve the problem locally.

Any planned downtime for servers must be approved by the IT Director at least one week in advance.

If you’ve begun defining policies and procedures, you’re already cutting down the number of immeasurable variables and amount of empirical data that challenge your decision-making process. The next step to prepare for capacity analysis is to begin gathering baseline performance values.

Baselines give you a starting point against which to compare results. For the most part, determining baseline performance levels involves working with hard numbers that represent the health of a system. However, several other factors accompany these statistics: workload characterization, vendor requirements or recommendations, industry-recognized benchmarks, and the data that you collect.
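To make the idea of comparing against a baseline concrete, here is a minimal Python sketch. It assumes you have exported counter samples (for example, from a Performance Console log) as plain numbers. The function names, the sample values, and the rule of flagging anything more than two standard deviations above the baseline mean are illustrative assumptions, not a Microsoft-defined method.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize counter samples collected while the system runs normally."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to build a baseline")
    return {"mean": mean(samples), "stdev": stdev(samples), "max": max(samples)}


def deviates_from_baseline(value, baseline, k=2.0):
    """Return True if value is more than k standard deviations above the mean.

    The k=2.0 default is an illustrative assumption, not a standard threshold.
    """
    return value > baseline["mean"] + k * baseline["stdev"]


# Hypothetical % Processor Time samples from a quiet week.
baseline = build_baseline([30, 35, 32, 40, 33, 31])
print(baseline["mean"])                      # 33.5
print(deviates_from_baseline(55, baseline))  # True
print(deviates_from_baseline(36, baseline))  # False
```

In practice you would rebuild the baseline periodically, because the point of capacity analysis is to notice when "normal" itself is drifting.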

It is unlikely that each system in your environment is a separate entity that has its own workload characterization. Most, if not all, network environments have systems that depend on other systems or are even intertwined among different workloads. This makes workload characterization difficult at best.

Workloads are defined by how processes or tasks are grouped, the resources they require, and the type of work being performed. Examples of how workloads can be characterized include departmental functions, time of day, the type of processing required (such as batch or real-time), companywide functions (such as payroll), volume of work, and much more.
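As a rough illustration of characterizing a workload by time of day, the following sketch groups samples by hour and averages them. The (hour, value) input format, the function names, and the sample numbers are hypothetical; the same grouping approach would work for departments or for batch versus real-time processing.

```python
from collections import defaultdict
from statistics import mean


def characterize_by_hour(samples):
    """Group (hour_of_day, value) samples and return the average per hour."""
    by_hour = defaultdict(list)
    for hour, value in samples:
        by_hour[hour].append(value)
    return {hour: mean(values) for hour, values in sorted(by_hour.items())}


def peak_hour(profile):
    """Return the hour whose average load is highest."""
    return max(profile, key=profile.get)


# Hypothetical samples: a nightly batch job at 02:00, interactive use by day.
samples = [(2, 80), (2, 90), (10, 40), (10, 50), (14, 45)]
profile = characterize_by_hour(samples)
print(profile)
print(peak_hour(profile))  # 2
```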

So, why is workload characterization so important? Identifying systems’ workloads allows you to determine the appropriate resource requirements for each of them. This way, you can properly plan the resources according to the performance levels the workloads expect and demand.

Benchmarks are a means of measuring the performance of a variety of products, including operating systems, virtually all computer components, and even entire systems. Many vendors use benchmarks to gain a competitive advantage because so many professionals rely on them to help determine what’s appropriate for their network environment.

As you would suspect, sales and marketing departments all too often exploit benchmark results to win IT professionals over. For this reason, it’s important to investigate both the benchmark results and the companies or organizations that produced them. Vendors are, for the most part, honest about their results, but it’s always a good idea to check other sources, especially if the results seem suspicious. For example, if a vendor has supplied benchmarks for a particular product, check that they are consistent with benchmarks produced by third-party organizations (such as magazines, benchmark organizations, and in-house testing labs). If none are available, try to gain insight from other IT professionals or run benchmarks on the product yourself before implementing it in production.

Although sales and marketing techniques may cast some suspicion on benchmarks, their real purpose is to indicate the performance levels you can expect when using a product. Benchmarks can be extremely beneficial for decision-making, but they shouldn’t be your sole source for evaluating and measuring performance. During capacity analysis, use benchmark results only as a guideline or starting point, and pay close attention to how they are interpreted.

Table 35.1 lists companies or organizations that provide benchmark statistics and benchmark-related information, and some also offer tools for evaluating product performance.

A growing number of tools originating and evolving from the Windows NT4, Windows 2000, and Unix operating system platforms can be used in data collection and analysis on Windows Server 2003. Some of these tools are even capable of forecasting system capacity, depending on the amount of information they are given.

In the past year, Microsoft has released several sizing tools. Probably the best known is the Microsoft Operations Manager (MOM) sizing tool, but similar tools are available for a number of Microsoft applications. They take the form of interactive Microsoft Excel spreadsheets: you enter data describing your environment, and the tool returns a recommended hardware configuration to support it. These tools are based on Microsoft best practices and have been fairly accurate in practical use.

Microsoft also offers some handy utilities that are either inherent to Windows Server 2003 or are sold as separate products. Some of these utilities are included with the operating system, such as Task Manager, Network Monitor, and Performance Console (also known as Performance or System Monitor). Data that is collected from these applications can be exported to other applications, such as Microsoft Excel or Access, for inventory and analysis. Other Microsoft utilities that are sold separately are Systems Management Server (SMS) and MOM.

Windows Server 2003’s arsenal of utilities for capacity-analysis purposes includes command-line and GUI-based tools. This section discusses the Task Manager, Network Monitor, and Performance Console, which are bundled with the Windows Server 2003 operating system.

The Windows Server 2003 Task Manager is similar to its Windows 2000 predecessor in that it offers multifaceted functionality. You can view and monitor processor-, memory-, application-, network-, and process-related information in real-time for a given system. This utility is great for getting a quick view of key system health indicators with the lowest performance overhead.

To begin using Task Manager, use any of the following methods:

Press Ctrl+Shift+Esc.

Right-click the taskbar and select Task Manager.

Press Ctrl+Alt+Delete and then click Task Manager.

When you start the Task Manager, you’ll see a screen similar to that in Figure 35.1.

The Task Manager window contains the following five tabs:

Applications: This tab lists the user applications that are currently running. You can also start and end applications from this tab.

Processes: This tab shows performance metrics for the processes currently running on the system.

Performance: This tab gives a graphical and tabular representation of key system parameters in real-time.

Networking: This tab displays the network traffic coming to and from the machine. The displayed network usage metric is a percentage of the total available network capacity of a particular adapter.

Users: This tab displays the users who are currently logged on to the system.
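The Networking tab's percentage can be reproduced by hand: convert the adapter's traffic from bytes to bits, then divide by the link speed. This sketch assumes you read the two inputs yourself (for instance, from the Performance Console's Network Interface Bytes Total/sec and Current Bandwidth counters, the latter reported in bits per second); the function name is hypothetical.

```python
def network_utilization_pct(bytes_per_sec, link_bits_per_sec):
    """Percentage of an adapter's capacity in use.

    bytes_per_sec: observed traffic in bytes per second.
    link_bits_per_sec: adapter speed in bits per second.
    """
    if link_bits_per_sec <= 0:
        raise ValueError("link speed must be positive")
    # Convert bytes to bits (x8), scale to a percentage (x100), then divide.
    return bytes_per_sec * 8 * 100 / link_bits_per_sec


# 2,500,000 bytes/sec on a 100 Mbps adapter.
print(network_utilization_pct(2_500_000, 100_000_000))  # 20.0
```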

In addition to the Task Manager tabs, the Task Manager is, by default, configured with a status bar at the bottom of the window. This status bar, shown in Figure 35.2, displays the number of running processes, CPU utilization percentage, and the amount of memory currently being used.

As you can see, the Task Manager presents a variety of valuable real-time performance information. This tool is particularly useful for determining what processes or applications are problematic and gives you an overall picture of system health.

There are limitations, however, that keep it from being useful for long-term or historical analysis. For example, the Task Manager can’t store the performance information it collects, it can monitor only certain aspects of system health, and the information it displays pertains only to the local machine (you must be logged on locally or connected via Terminal Services to gauge performance with the Task Manager). For these reasons alone, the Task Manager isn’t a prime candidate for capacity-planning purposes.

There are two versions of Network Monitor that you can use to check network performance. The first is bundled within Windows Server 2003, and the other is a part of Systems Management Server. Although both have the same interface, like the one shown in Figure 35.3, the one bundled with the operating system is slightly scaled down in terms of functionality when compared to the SMS version.

The Network Monitor that is built into Windows Server 2003 is designed to monitor only the local machine’s network activity. This design stems from security concerns about capturing and monitoring traffic on remote machines: if the operating system version had this capability, anyone who installed the Network Monitor could potentially use it to gain unauthorized access to systems. Therefore, this version captures only frames traveling to or from the local machine.

To install the Network Monitor, perform the following steps:

Select Add or Remove Programs from the Start, Control Panel menu.

In the Add or Remove Programs window, click Add/Remove Windows Components.

Within the Windows Components Wizard, select Management and Monitoring Tools and then click Details.

In the Management and Monitoring Tools window, select Network Monitor Tools and then click OK.

If you are prompted for additional files, insert your Windows Server 2003 CD or type a path to the location of the files on the network.

After the installation, locate and run the Network Monitor from the Start, Programs, Administrative Tools menu.

As mentioned earlier, the SMS version of the Network Monitor is a full version of the one integrated into Windows Server 2003. The most significant difference between the two versions is that the SMS version can run indiscriminately throughout the network (that is, it can monitor and capture network traffic to and from remote machines). It is also equipped to locate routers on the network, provide name-to-IP address resolution, and generally monitor all the traffic traveling throughout the network.

Because the SMS version of Network Monitor is capable of capturing and monitoring all network traffic, it poses possible security risks. Any unencrypted network traffic can be compromised; therefore, it’s imperative that you limit the number of IT personnel who have the necessary access to use this utility.

On the other hand, the SMS version of Network Monitor is more suitable for capacity-analysis purposes because it is flexible enough to monitor network traffic from a centralized location. It also allows you to monitor in real-time and capture for historical analysis. For all practical purposes, however, it wouldn’t make much sense to install SMS just for the Network Monitor capabilities, especially considering that you can purchase more robust third-party utilities.

Many IT professionals rely on the Performance Console because it is bundled with the operating system and allows you to capture and monitor every measurable system object within Windows Server 2003. This tool is a Microsoft Management Console (MMC) snap-in, so it takes little effort to become familiar with it. You can find and start the Performance Console from within the Administrative Tools group on the Start menu.

The Performance Console, shown in Figure 35.4, is by far the best utility provided in the operating system for capacity-analysis purposes. With this utility, you can analyze data from virtually all aspects of the system, both in real-time and historically. You can view the data through charts, reports, and logs, and logs can be stored so that you can later scrutinize data from specific periods of time.

Because the Performance Console is available to everyone running the operating system and it has a lot of built-in functionality, we’ll assume that you’ll be using this utility.

Without a doubt, many third-party utilities are excellent for capacity-analysis purposes. Most of them provide functionality not found in Windows Server 2003’s Performance Console, but they cost more, too. You may want to evaluate some third-party utilities to see which features they offer beyond the Performance Console. Generally speaking, these utilities enhance the functionality inherent to the Performance Console with features such as scheduling, enhanced reporting, superior storage capabilities, the ability to monitor non-Windows systems, or algorithms for future trend analysis. Some of these third-party tools are listed in Table 35.2.

Although most third-party products do add functionality to your capacity-analysis procedures, there are still pros and cons to using them instead of the free Performance Console. The most obvious drawback is the expense of purchasing software licenses for monitoring the enterprise, but some less obvious factors include the following:

The number of administrators needed to support the product in capacity-analysis procedures is higher.

Some third-party products have higher learning curves associated with them. This increases the need for either vendor or in-house training just to support the product.

The key is to decide what you need to adequately and efficiently perform capacity-analysis procedures in your environment. You may find that the Performance Console is more than adequate, or you may find that your network environment, because of complexities, requires a third-party product that can encompass all the intricacies.

Capacity analysis is not about how much information you can collect; it is about collecting the appropriate system health indicators and the right amount of information. Without a doubt, you can capture and monitor an overwhelming amount of information from performance counters. There are more than 1,000 counters, so you’ll want to choose carefully what to monitor. Otherwise, you may collect so much information that the data will be hard to manage and difficult to decipher. Keep in mind that more is not necessarily better with regard to capacity analysis; this process is more about efficiency. Therefore, you need to tailor your capacity-analysis monitoring as closely as possible to how the server is configured.

Every Windows Server 2003 system has a common set of resources that can affect performance, reliability, stability, and availability. For this reason, it’s important that you monitor this common set of resources.

Four resources make up the common set: memory, processor, disk subsystem, and network subsystem. They are also the most common contributors to performance bottlenecks. A bottleneck can be defined in two ways. The most common perception of a bottleneck is that it is the slowest part of your system. It can be either hardware or software, but generally speaking, hardware is usually faster than software. When a resource is overburdened or simply not equipped to handle higher workloads, the system may slow down. For any system, the slowest component is, by definition, considered the bottleneck. For example, a Web server may be equipped with ample RAM, disk space, and a high-speed network interface card (NIC), but if the disk subsystem has older, relatively slow drives, the Web server may not be able to handle requests effectively. The bottleneck (that is, the antiquated disk subsystem) can drag the other resources down.
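The "slowest component" definition amounts to taking the minimum of each component's sustainable throughput. In this sketch, the per-component capacities for the Web server example are invented numbers for illustration only, and the function name is hypothetical; in practice you would estimate the capacities from your own measurements.

```python
def find_bottleneck(capacities):
    """Return (component, capacity) for the component with the lowest capacity.

    capacities maps a component name to the requests per second it can
    sustain. The system as a whole can go no faster than this component.
    """
    if not capacities:
        raise ValueError("no components given")
    slowest = min(capacities, key=capacities.get)
    return slowest, capacities[slowest]


# Hypothetical figures for the Web server example: ample RAM and a fast NIC,
# but an older disk subsystem.
web_server = {"memory": 5000, "network": 3000, "processor": 2500, "disk": 400}
print(find_bottleneck(web_server))  # ('disk', 400)
```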

A less common but equally important form of bottleneck occurs when a system has significantly more RAM, processors, or other resources than the application requires. In these cases, the system creates extremely large pagefiles and has to manage very large disk or memory sets, yet never uses the resources. When an application needs to access memory, processors, or disks, the system may be busy managing the idle resources, creating an unnecessary bottleneck caused by having too many resources allocated to it. Thus, performance optimization means not only avoiding too few resources but also avoiding too many.

In addition to the common set of resources, the functions that a Windows Server 2003 system performs influence what you should monitor. For example, you would monitor certain aspects of system performance on a file server differently than on a domain controller (DC). Windows Server 2003 can serve in many functional roles (such as file and print sharing, application sharing, database functions, Web server duties, domain controller roles, and more), and it is important to understand all the roles that each server performs. By identifying these functions and monitoring them along with the common set of resources, you gain much greater control and understanding of the system.

The following sections go more in depth on what specific counters you should monitor for the different components that comprise the common set of resources. It’s important to realize though that there are several other counters that you should consider monitoring in addition to the ones described in this chapter. You should consider the following material a baseline of the minimum number of counters to begin your capacity-analysis and performance-optimization procedures.

Later in the chapter, we will identify several server roles and cover monitoring baselines, describing the minimum number of counters to monitor.

The key elements to begin your capacity analysis and performance optimization are the common contributors to bottlenecks. They are memory, processor, disk subsystem, and network subsystem.

Available system memory is the most common source of performance problems on a system, usually simply because an incorrect amount of memory is installed. Windows Server 2003 tends to consume a lot of memory. Fortunately, the easiest and most economical way to resolve this kind of performance issue is to configure the system with additional memory, which can significantly boost performance and improve reliability.

When you first start the Performance Console in Windows Server 2003, three counters are monitored. One of these counters is an important one related to memory: the Pages/sec counter. The Performance Console’s default setting is illustrated in Figure 35.4. It shows three counters being monitored in real-time. The purpose is to provide a simple and quick way to get a basic idea of system health.

The memory object contains many significant counters that could help determine system memory requirements, but most network environments shouldn’t need to monitor every counter consistently to get an accurate representation of performance. For long-term monitoring, two very important counters give a fairly accurate picture of memory requirements: Page Faults/sec and Pages/sec. These two counters alone can indicate whether the system is configured with the proper amount of memory.

Systems experience page faults when a process requires code or data that it can’t find in its working set, which is the amount of memory committed to that process. The process then has to retrieve the code or data from another part of physical memory (a soft fault) or, in the worst case, from the disk subsystem (a hard fault). Systems today can handle a large number of soft faults without significant performance hits. Hard faults, however, require disk subsystem access, so they can make the process wait significantly and drag performance to a crawl. Even with the fastest drives available, disk access is orders of magnitude slower than memory access.

The Page Faults/sec counter reports both soft and hard faults. It’s not uncommon to see this counter displaying rather large numbers. Depending on the workload placed on the system, this counter can display several hundred faults per second. When it gets beyond several hundred page faults per second for long durations, you should begin checking other memory counters to identify whether a bottleneck exists.

Probably the most important memory counter is Pages/sec. It reveals the number of pages read from or written to disk and is therefore a direct representation of the number of hard page faults the system is experiencing. Microsoft recommends upgrading the amount of memory in systems that are seeing Pages/sec values consistently averaging above 5 pages per second. In actuality, you’ll begin noticing slower performance when this value is consistently higher than 20. So, it’s important to carefully watch this counter as it nudges higher than 10 pages per second.

Note

The Pages/sec counter is also particularly useful in determining whether a system is thrashing. Thrashing is a term used to describe systems experiencing more than 100 pages per second. Thrashing should never be allowed to occur on Windows Server 2003 systems because the reliance on the disk subsystem to resolve memory faults greatly affects how efficiently the system can sustain workloads.
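The Pages/sec guidelines above can be collected into a simple checker. This is a minimal sketch: it averages a series of Pages/sec samples and maps the average onto the 5, 10, 20, and 100 pages-per-second thresholds described in the text. The function name and the wording of the labels are hypothetical.

```python
from statistics import mean


def assess_pages_per_sec(samples):
    """Map the average of Pages/sec samples onto the guidelines in the text."""
    avg = mean(samples)
    if avg > 100:
        return "thrashing"
    if avg > 20:
        return "noticeable slowdown likely"
    if avg > 10:
        return "watch closely"
    if avg > 5:
        return "above Microsoft's guideline; consider more memory"
    return "ok"


print(assess_pages_per_sec([2, 3, 4]))        # ok
print(assess_pages_per_sec([12, 15, 14]))     # watch closely
print(assess_pages_per_sec([150, 120, 110]))  # thrashing
```

Because the text speaks of values "consistently" above a threshold, the samples should span a meaningful period rather than a single spike.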

Most often the processor resource is the first one analyzed when there is a noticeable decrease in system performance. For capacity-analysis purposes, you should monitor two counters: % Processor Time and Interrupts/sec.

The % Processor Time counter indicates the percentage of overall processor utilization. If the system has more than one processor, an instance for each one is included along with a total (combined) value. If this counter averages 50% or greater for long durations, first consult other system counters to identify any processes that may be using the processors improperly, and then consider upgrading the processor or processors. Generally speaking, consistent utilization in the 50% range doesn’t necessarily hurt how the system handles its workloads. When average processor utilization climbs to 65% or higher, performance may become intolerable.
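A similar sketch applies to the processor guidelines. It assumes the samples are % Processor Time values for the total (combined) instance taken over a long duration, and it maps their average onto the 50% and 65% levels from the text; the function name and labels are hypothetical.

```python
from statistics import mean


def assess_processor_time(samples):
    """Classify average % Processor Time (total instance) against the text's ranges."""
    avg = mean(samples)
    if avg >= 65:
        return "performance may become intolerable"
    if avg >= 50:
        return "investigate processes; consider upgrading"
    return "ok"


print(assess_processor_time([40, 45, 42]))  # ok
print(assess_processor_time([55, 60, 52]))  # investigate processes; consider upgrading
print(assess_processor_time([70, 80, 75]))  # performance may become intolerable
```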

The Interrupts/sec counter is also a good guide to processor health. It indicates the number of device interrupts (either hardware or software driven) that the processor is handling per second. Like the Page Faults/sec counter mentioned in the “Memory” section, this counter may display very high numbers (in the thousands) without significantly affecting how the system handles workloads.

Hard disk drives and hard disk controllers are the two main components of the disk subsystem. Windows Server 2003 includes Performance Console objects and counters only for hard disk statistics, although some manufacturers provide add-in counters for monitoring their hard disk controllers. The two objects that gauge hard disk performance are Physical Disk and Logical Disk. Unlike its predecessor (Windows 2000), Windows Server 2003 automatically enables the disk objects by default when the system starts.

Although disk subsystem components are becoming more and more powerful, they are often a bottleneck because they are orders of magnitude slower than other resources. The effects, though, may be minimal or even unnoticeable, depending on the system configuration.

Monitoring with the Physical Disk and Logical Disk objects does come with a small price: each object adds a little resource overhead when you use it for monitoring. As a result, you should keep them disabled unless you are going to use them for monitoring. To deactivate the disk objects, type diskperf -n. To activate them later, use diskperf -y, or diskperf -y \\mycomputer to enable them on remote machines that aren’t running Windows Server 2003. You can also activate or deactivate each object separately by appending d for the Physical Disk object or v for the Logical Disk object (for example, diskperf -yd or diskperf -nv).

To minimize system overhead, disable the disk performance counters if you don’t plan on monitoring them in the near future. For capacity-analysis purposes, though, it’s important to always watch the system and keep informed of changes in usage patterns. The only way to do this is to keep these counters enabled.

So, what specific disk subsystem counters should be monitored? The most informative counters for the disk subsystem are % Disk Time and Avg. Disk Queue Length. The % Disk Time counter monitors the time that the selected physical or logical drive spends servicing read and write requests. The Avg. Disk Queue Length counter monitors the number of requests not yet serviced on the physical or logical drive. Its value is an interval average, so it represents how much delay the drive is introducing over the sample period. If the value is frequently greater than 2, the disks are not equipped to service the workload, and performance delays may occur.
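The “frequently greater than 2” rule can be checked against logged samples. The following Python sketch assumes you have a list of Avg. Disk Queue Length samples for one drive. The text above does not define “frequently”, so the 50% share used here is an illustrative assumption that you can tune.

```python
def disk_queue_verdict(queue_samples, threshold=2.0, frequent_share=0.5):
    """Check whether Avg. Disk Queue Length is frequently above threshold.

    queue_samples:  sampled Avg. Disk Queue Length values for one drive.
    threshold:      the queue length cited above (2).
    frequent_share: fraction of samples that counts as "frequently";
                    0.5 is an illustrative assumption, not a documented value.
    """
    if not queue_samples:
        raise ValueError("need at least one sample")
    over = sum(1 for q in queue_samples if q > threshold)
    share = over / len(queue_samples)
    return {"share_over_threshold": share, "bottleneck": share >= frequent_share}


print(disk_queue_verdict([1.0, 3.0, 2.5, 0.5]))
# {'share_over_threshold': 0.5, 'bottleneck': True}
```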

The network subsystem is by far one of the most difficult subsystems to monitor because of the many different variables. The number of protocols used in the network, the network interface cards, network-based applications, topologies, subnetting, and more play vital roles in the network, but they also add to its complexity when you’re trying to determine bottlenecks. Each network environment has different variables; therefore, the counters that you’ll want to monitor will vary.

The information that you’ll want to gain from monitoring the network pertains to network activity and throughput. You can find this information with the Performance Console alone, but it will be difficult at best. Instead, it’s important to use other tools, such as the Network Monitor, in conjunction with Performance Console to get the best possible representation of network performance. You may also consider using third-party network analysis tools such as sniffers to ease monitoring and analysis efforts. Using these tools simultaneously can broaden the scope of monitoring and more accurately depict what is happening on the wire.

Because the TCP/IP suite is the underlying set of protocols for a Windows Server 2003 network subsystem, this discussion of capacity analysis focuses on it. The TCP/IP counters are added when the protocol is installed, which happens by default.

There are several different network performance objects relating to the TCP/IP protocol, including ICMP, IPv4, IPv6, Network Interface, TCPv4, UDPv6, and more. Other counters such as FTP Server and WINS Server are added after these services are installed. Because entire books are dedicated to optimizing TCP/IP, this section focuses on a few important counters that you should monitor for capacity-analysis purposes.

First, examining error counters, such as Network Interface: Packets Received Errors or Packets Outbound Errors, is extremely useful in determining whether traffic is easily traversing the network. A high number of errors indicates that packets must be resent, which generates more network traffic. If a high number of errors persists on the network, throughput will suffer. This may be caused by a bad NIC, unreliable links, and so on.
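Packets Received Errors and Packets Outbound Errors report accumulated counts rather than per-second rates. For capacity analysis, what matters is how quickly they grow. The following Python sketch converts periodic snapshots of the two counters into errors per second. It reports the errors as persistent only when every interval exceeds a limit. The snapshot structure is hypothetical, and the one-error-per-second default is an assumption chosen for illustration. Counter resets, such as those after a NIC restart, are not handled.

```python
from dataclasses import dataclass


@dataclass
class NicSnapshot:
    seconds: float        # time the sample was taken
    received_errors: int  # Network Interface: Packets Received Errors
    outbound_errors: int  # Network Interface: Packets Outbound Errors


def error_rates(snapshots):
    """Errors per second for each interval between consecutive snapshots."""
    rates = []
    for prev, cur in zip(snapshots, snapshots[1:]):
        elapsed = cur.seconds - prev.seconds
        if elapsed <= 0:
            raise ValueError("snapshots must be in increasing time order")
        new_errors = (cur.received_errors - prev.received_errors) + (
            cur.outbound_errors - prev.outbound_errors
        )
        rates.append(new_errors / elapsed)
    return rates


def errors_persistent(snapshots, max_rate=1.0):
    """True if every interval exceeds max_rate errors/second (an assumed limit)."""
    rates = error_rates(snapshots)
    return bool(rates) and all(r > max_rate for r in rates)


samples = [NicSnapshot(0, 0, 0), NicSnapshot(10, 30, 10), NicSnapshot(20, 60, 20)]
print(error_rates(samples))        # [4.0, 4.0]
print(errors_persistent(samples))  # True
```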

If network throughput appears to be slowing because of excessive traffic, you should keep a close watch on the traffic being generated from network-based services such as the ones described in Table 35.3.

In addition to monitoring the common set of bottlenecks (memory, processor, disk subsystem, and network subsystem), the functional roles of the server influence what other counters you should monitor. The following sections outline some of the most common roles for Windows Server 2003 that also require the use of additional performance counters.

Terminal Server has its own performance objects for the Performance Console, called the Terminal Services Session and Terminal Services objects. They provide resource statistics such as errors, cache activity, network traffic from Terminal Server, and other session-specific activity. Many of these counters are similar to those found in the Process object. Examples include % Privileged Time, % Processor Time, % User Time, Working Set, and Working Set Peak.

Note

You can find more information on Terminal Services in Chapter 27, “Windows Server 2003 Terminal Services.”

Three important areas to always monitor for Terminal Server capacity analysis are the memory, processor, and application processes for each session. Application processes are by far the hardest to monitor and control because of the extreme variances in programmatic behavior. For example, all applications may be 32-bit, but some may not be certified to run on Windows Server 2003. You may also have in-house applications running on Terminal Services that may be poorly designed or too resource intensive for the workloads they are performing.

A Windows Server 2003 domain controller (DC) houses the Active Directory (AD) and may have additional roles such as being responsible for one or more Flexible Single Master Operation (FSMO) roles (schema master, domain naming master, relative ID master, PDC Emulator, or infrastructure master) or a global catalog (GC) server. Also, depending on the size and design of the system, a DC may serve many other functional roles. In this section, AD, replication, and DNS monitoring will be explored.

Active Directory is the heart of Windows Server 2003 systems. It’s used for many different facets, including, but not limited to, authentication, authorization, encryption, and Group Policies. Because AD plays a central role in a Windows Server 2003 network environment, it must perform its responsibilities as efficiently as possible. You can find more information on Windows Server 2003’s AD in Chapter 4, “Active Directory Primer.” Each facet by itself can be optimized, but this section focuses on the NTDS and Database objects.

The NTDS object provides various AD performance indicators and statistics that are useful for determining AD’s workload capacity. Many of these counters can be used to determine current workloads and how these workloads may affect other system resources. There are relatively few counters in this object, so it’s recommended that you monitor each one in addition to the common set of bottleneck objects. With this combination of counters, you can determine whether the system is overloaded.

Another performance object that you should use to monitor AD is the Database object. This object is not installed by default, so you must manually add it to be able to start gathering more information on AD.

To load the Database object, perform the following steps:

Copy the performance DLL (esentprf.dll) located in %SystemRoot%\System32 to any directory (for example, c:\esent).

Launch the Registry Editor (Regedt32.exe).

Create the Registry key HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\ESENT.

Create the Registry key HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\ESENT\Performance.

Select the ESENT\Performance subkey.

Create the value Open using the data type REG_SZ and string equal to OpenPerformanceData.

Create the value Collect using the data type REG_SZ and string equal to CollectPerformanceData.

Create the value Close using the data type REG_SZ and string equal to ClosePerformanceData.

Create the value Library using the data type REG_SZ and string equal to c:\esent\esentprf.dll.

Exit the Registry Editor.

Open a command prompt and change the directory to %SystemRoot%\System32.

Run Lodctr.exe Esentprf.ini at the command prompt.
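If you prepare several domain controllers, you can capture the Registry steps above in a .reg file instead of creating each value by hand. The following Python sketch generates that file using the key, value names, and data listed in the steps. The file name and helper names are illustrative. The sketch does not copy the DLL or run Lodctr.exe, so those steps still apply.

```python
REG_KEY = r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\ESENT\Performance"


def esent_perf_reg(library_path=r"c:\esent\esentprf.dll"):
    """Return .reg file text that creates the ESENT Performance values listed above."""
    values = {
        "Open": "OpenPerformanceData",
        "Collect": "CollectPerformanceData",
        "Close": "ClosePerformanceData",
        "Library": library_path,
    }
    lines = ["Windows Registry Editor Version 5.00", "", f"[{REG_KEY}]"]
    for name, data in values.items():
        # String (REG_SZ) data in .reg files escapes backslashes and double quotes.
        escaped = data.replace("\\", "\\\\").replace('"', '\\"')
        lines.append(f'"{name}"="{escaped}"')
    return "\r\n".join(lines) + "\r\n"


def write_reg_file(path="esent-perf.reg"):
    """Save the .reg text; UTF-16 with CRLF line endings mirrors Regedit's own exports."""
    with open(path, "w", encoding="utf-16", newline="") as handle:
        handle.write(esent_perf_reg())
```

After you write the file, import it with regedit /s esent-perf.reg. Regedit should create the missing parent ESENT key during the import. Verify the values in the Registry Editor before you run Lodctr.exe.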

After you complete the Database object installation, you can execute the Performance Console and use the Database object to monitor AD. Some of the relevant counters contained within the Database object to monitor AD are described in Table 35.4.

Table 35.4. AD Performance Counters

The domain name system (DNS) has been the primary name resolution mechanism in Windows 2000 and continues to be with Windows Server 2003. For more information on DNS, refer to Chapter 9, “The Domain Name System.” There are numerous counters available for monitoring various aspects of DNS in Windows Server 2003. The most important categories in terms of capacity analysis are name resolution response times and workloads as well as replication performance.

The counters listed in Table 35.5 are used to compute name query traffic and the workload that the DNS server is servicing. These counters should be monitored along with the common set of bottlenecks to determine the system’s health under various workload conditions. If users are noticing slower responses, you can compare the query workload usage growth with your performance information from memory, processor, disk subsystem, and network subsystem counters.

Table 35.5. Counters to Monitor DNS

Comparing results with other DNS servers in the environment can also help you to determine whether you should relinquish some of the name query responsibility to other DNS servers that are less busy.

Replication performance is another important aspect of DNS. Windows Server 2003 supports legacy DNS replication, also known as zone transfers, which populate information from the primary DNS to any secondary servers. There are two types of legacy DNS replication: incremental (propagating only changes to save bandwidth) and full (the entire zone file is replicated to secondary servers).

Full zone transfers (AXFR) occur on the initial transfers and then the incremental zone transfers (IXFR) are performed thereafter. The performance counters for both AXFR and IXFR (see Table 35.6) measure both requests and the successful transfers. It is important to note that if your network environment integrates DNS with non-Windows systems, it is recommended to have those systems support IXFR.

Table 35.6. DNS Zone Transfer Counters

| Counter | Description |
|---|---|
|  | Total number of full zone transfer requests received by the DNS Server service when operating as a master server for a zone. |
|  | Total number of full zone transfer requests sent by the DNS Server service when operating as a secondary server for a zone. |
|  | Total number of full zone transfer requests received by the DNS Server service when operating as a secondary server for a zone. |
|  | Total number of full zone transfers received by the DNS Server service when operating as a secondary server for a zone. |
|  | Total number of full zone transfers successfully sent by the DNS Server service when operating as a master server for a zone. |
|  | Total number of incremental zone transfer requests received by the master DNS server. |
|  | Total number of incremental zone transfer requests sent by the secondary DNS server. |
|  | Total number of incremental zone transfer responses received by the secondary DNS server. |
|  | Total number of successful incremental zone transfers received by the secondary DNS server. |
|  | Total number of successful incremental zone transfers sent by the master DNS server. |

If your network environment is fully Active Directory–integrated, the counters listed in Table 35.6 will all be zero.

Measuring AD replication performance is a complex process because of the many variables associated with replication. They include, but aren’t limited to, the following:

Intrasite versus intersite replication

The compression being used (if any)

Available bandwidth

Inbound versus outbound replication traffic

Fortunately, there are performance counters for every possible AD replication scenario. These counters are located within the NTDS object and are prefixed by the primary process that is responsible for AD replication—the Directory Replication Agent (DRA). Therefore, to monitor AD replication, you need to choose those counters beginning with DRA.
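Selecting the DRA counters is a simple prefix filter. The following Python sketch turns a list of NTDS counter names into full counter paths and builds a typeperf command line that collects a fixed number of samples. The sample counter names are illustrative, so confirm the exact list on your own domain controller. The helper names are hypothetical.

```python
# Illustrative NTDS counter names; confirm the exact list on your own DC.
SAMPLE_NTDS_COUNTERS = [
    "DRA Inbound Bytes Total/sec",
    "DRA Outbound Bytes Total/sec",
    "DRA Pending Replication Synchronizations",
    "LDAP Searches/sec",
    "DS Threads in Use",
]


def dra_counter_paths(counter_names, obj="NTDS"):
    """Return counter paths (\\Object\\Counter) for the DRA counters only."""
    return [f"\\{obj}\\{name}" for name in counter_names if name.startswith("DRA")]


def typeperf_args(paths, samples=10):
    """Build a typeperf command line that collects a fixed number of samples."""
    return ["typeperf", *paths, "-sc", str(samples)]


for path in dra_counter_paths(SAMPLE_NTDS_COUNTERS):
    print(path)
```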

Server Performance Advisor 2.0 (SPA 2.0) is a new tool from Microsoft that integrates with Windows Server 2003 and comes preconfigured to analyze common roles for Windows servers. The tool is downloadable from Microsoft.com and must be installed on a server running Windows Server 2003. This tool is invaluable for server sizing, education, and troubleshooting performance issues. It presents data that could otherwise be obtained only through multiple other methods in an easy-to-read, easy-to-understand format. Perhaps the most useful feature is the overview at the beginning of the report, which presents a sunny/cloudy/rainy graphical representation of server health in key areas such as memory utilization, processor use, and disk I/O.

After you download it, double-click the .msi package to install the tool. Be sure to choose locations with adequate storage for data and reports. Click Finish to complete the installation. A reboot is not required. The program will be available at the root level of the program submenu under the Start menu.

Click the left column to expand the configuration menu. Here, you can choose and customize the server roles you would like to analyze for this server. System Overview is chosen by default. It offers only the most basic information and is often included as a subset of other roles.

To add a role, click the File menu and choose Add/Repair Data Collector Groups. You’ll see six options. The most often used is Server Roles. This option will allow you to select basic server roles with preconfigured counters and preset thresholds for performance recommendations. A more advanced method would be to select IT Study Roles, which allow more in-depth monitoring on a larger scope of server roles. You can also create custom roles based on your own needs. Right-click Data Collectors and Reports and choose New/Data Collector Group. You can now customize every portion of the test from trace providers to counters. Basic tests will take 100 seconds to complete, while longer tests may take 5 minutes or more.

To run a test, select the role you would like to evaluate and click the green start arrow. After the test is complete, a report will be generated with the results. The status can be seen in the status bar at the bottom of the application window. Report generation can often take longer than the tests themselves.

SPA 2.0 creates reports in a standard XML format that can be viewed natively within the tool console or with Internet Explorer. To access the current report for any given test, expand the reports item and select Current. Expand the menu until the most recent report is displayed and select it to view the full report. A sample report is shown in Figure 35.5.

The report structure is fairly simple; however, it contains a large amount of data and is interactive. Some sections show, for example, Top 4 of 10, and you can choose how many items, up to the maximum, to display for that section.

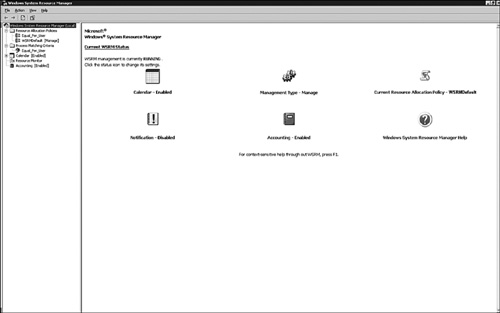

The Windows System Resource Manager (WSRM), a feature of Windows Server 2003 Enterprise or Datacenter Editions, allows administrators to gain additional control over applications and processes. More specifically, WSRM can be used to control an application’s or process’s processor utilization, memory usage, and processor affinity. Policies can be created to set constraints or ceiling values for an application or process. For instance, a policy can be configured to allow ProcessA to use only 10% of the processor during specified times or at all times. Scheduling is managed by the built-in calendar function.

The WSRM utility, illustrated in Figure 35.6, offers an intuitive way to gain control over the system and optimize application and overall system performance. A default policy is automatically installed to manage any system-related services that may be running on the system. You can also create custom policies for specific applications.

A notable advantage of WSRM is its capability to manage applications running under Terminal Services. WSRM can be used to rein in a potentially rogue user session before its applications grab most of a server’s resources or even lock out other sessions. When an application or process begins to exceed its resource allocation (specified in the WSRM policy), the WSRM service attempts to bring the resource usage of the process back within the specified target ranges.
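The policy idea behind WSRM, a processor ceiling for a named process that applies during a scheduled window, can be modeled in a few lines. The following Python sketch is a hypothetical illustration of that concept only. It is not the WSRM API or policy format, and WSRM’s actual enforcement is more involved than computing an overage.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class CpuPolicy:
    """Hypothetical model of a WSRM-style processor cap; not the WSRM API."""
    process: str
    max_cpu_pct: float
    start: time = time(0, 0)      # default window covers the whole day
    end: time = time(23, 59, 59)

    def active_at(self, t):
        if self.start <= self.end:
            return self.start <= t <= self.end
        return t >= self.start or t <= self.end  # window wraps past midnight


def over_allocation(policies, process, cpu_pct, t):
    """Percentage points by which process exceeds its tightest active cap (0 if none)."""
    caps = [p.max_cpu_pct for p in policies if p.process == process and p.active_at(t)]
    if not caps:
        return 0.0
    return max(0.0, cpu_pct - min(caps))


# ProcessA limited to 10% of the processor at all times, as in the example above.
policies = [CpuPolicy("ProcessA", 10.0)]
print(over_allocation(policies, "ProcessA", 25.0, time(12, 0)))  # 15.0
```

A nonzero result corresponds to the point at which, as described above, the WSRM service would start pulling the process back toward its target range.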

Microsoft is taking a proactive approach in maintaining reliability and availability with Windows Server 2003 by establishing measures to help you keep up to date with the latest service packs and updates.

Anyone who has administered a Windows system is probably aware of the importance of keeping up to date with the latest system upgrades, such as bug fixes, performance upgrades, and security updates. Service packs and updates are intended to ensure optimal performance, reliability, and stability. Updates are individual patches, whereas service packs bundle many of these updates into a single upgrade. The service packs themselves don’t contain new features; only core enhancements and bug fixes are part of a service pack. This keeps service packs reliable and robust, so applying one is unlikely to cost you reliability or availability.

Service packs also contain the following benefits:

They can detect the current level of encryption and maintain that version (such as 56-bit or 128-bit).

They usually don’t have to be reapplied after you install a system component or service.

Updates are Microsoft’s answer to quick, reliable fixes. They are convenient because you don’t have to wait for the next service pack to get a problem fixed. Updates often address a single problem. They now contain built-in integrity checks to ensure that the update doesn’t apply an older version of what already exists.
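The integrity check described above amounts to refusing to replace a file with an older version of itself. The following Python sketch illustrates that principle using dotted Windows file version strings. It is not how the update installer actually implements the check, and the function names are hypothetical.

```python
def parse_version(text, parts=4):
    """Turn a dotted file version such as '5.2.3790.0' into a fixed-length tuple."""
    numbers = [int(piece) for piece in text.split(".")]
    if len(numbers) > parts:
        raise ValueError(f"too many version fields: {text!r}")
    return tuple(numbers + [0] * (parts - len(numbers)))


def should_replace(installed, incoming):
    """Allow the update only if its file version is newer than the installed one."""
    return parse_version(incoming) > parse_version(installed)


print(should_replace("5.2.3790.0", "5.2.3790.1830"))  # True
print(should_replace("5.2.3790.1830", "5.2.3790.0"))  # False
```

Padding both versions to four fields before comparing keeps 5.2 and 5.2.0.0 equal, so neither is treated as newer.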

You can run the update from a command line using the hotfix.exe utility.

Various patch automation solutions exist for the Windows Server 2003 network environment. All of them strive to keep you up to date with the latest service packs and updates without large administrative overhead.

Two of these solutions are provided by Microsoft. The first is Windows Update with the Automatic Updates service, and the other is Software Update Services (described next).

Windows Update is a Web-based service using ActiveX controls that scans a system to see whether any patches, critical updates, or product updates are available and should be installed. It presents a convenient and easy way to keep your system up to date. The downside, however, is that you still have to go to the Windows Update Web site (http://windowsupdate.microsoft.com/) and select which components to download and install. Moreover, using this process makes it difficult to control and manage which updates can safely be applied to clients and servers.

As you can probably already tell, the Windows Automatic Update method of keeping up with service packs and updates can be beneficial to Windows network environments, but it’s not true patch automation. Users or administrators still need to tend to each system and download the appropriate components. This method also has a few other limitations; for example, it doesn’t allow you to save the update to disk to install later, there are no mechanisms to distribute updates to other systems, and there isn’t administrative control over which updates are applied to Windows systems throughout the organization’s network infrastructure.

The Windows Software Update Services is a logical extension of Windows Update that helps solve the automation problem while maintaining a greater level of control for the organization’s IT personnel. It is a tool designed specifically for managing and distributing Windows service packs and updates on Windows 2000 and higher systems in an AD environment.

The Software Update Services consists of a Windows Server 2003 server, preferably located within a DMZ, and a client-side agent or service. The update server receives service packs and updates directly from Microsoft’s public Windows Update Web site at scheduled intervals and saves them to disk. Administrators can then test the service packs and updates before approving them to be distributed throughout the network. The path that an update takes from here depends on how the Software Update Services server is configured. After they are approved, service packs and updates can be pushed out at predefined intervals through a Group Policy Object (GPO) to client and server systems; systems can connect to the intranet server and download updates manually; or the updates can be distributed to other servers that in turn push them out to systems throughout the network environment. The latter option is extremely beneficial to larger companies or organizations because it distributes the workload so that a single server isn’t responsible for pushing updates to every system.
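The workflow above has three stages: scheduled synchronization, administrator approval, and distribution through downstream servers. The following Python sketch models that flow in simplified form. It is a hypothetical illustration, not the Software Update Services API, and the update identifiers are placeholders. The key property it captures is that clients are offered only approved content.

```python
from dataclasses import dataclass, field


@dataclass
class UpdateServer:
    """Hypothetical model of the SUS approval flow; not the SUS API."""
    name: str
    synchronized: set = field(default_factory=set)  # content saved to disk
    approved: set = field(default_factory=set)      # content cleared for release
    downstream: list = field(default_factory=list)  # servers that redistribute

    def synchronize(self, available_updates):
        """Scheduled download from the public Windows Update site."""
        self.synchronized |= set(available_updates)

    def approve(self, update_id):
        """Administrator approval after testing; only synchronized content qualifies."""
        if update_id not in self.synchronized:
            raise KeyError(f"{update_id} has not been synchronized")
        self.approved.add(update_id)

    def offered_to_clients(self):
        """Clients see approved content only."""
        return sorted(self.approved)

    def distribute(self):
        """Copy approved content to downstream servers, then let them pass it on."""
        for child in self.downstream:
            child.synchronized |= self.approved
            child.approved |= self.approved
            child.distribute()


root = UpdateServer("hq")
branch = UpdateServer("branch")
root.downstream.append(branch)
root.synchronize(["update-1", "update-2"])
root.approve("update-1")
root.distribute()
print(branch.offered_to_clients())  # ['update-1']
```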

Other benefits to using Software Update Services stem from the following management capabilities:

Software Update Services can be managed from a Web-based interface using Internet Explorer 5.5 or higher.

Synchronization with Software Update Services and the content approval process is audited and logged.

Statistics about update download and installations are kept.

Although you can easily get caught up in daily administration and firefighting, it’s important to step back and begin capacity-analysis and performance-optimization processes and procedures. These processes and procedures can minimize the environment’s complexity, help you gain control over the environment, and assist you in anticipating future resource requirements.

Spend time performing capacity analysis to save time troubleshooting and firefighting.

Use capacity analysis processes to help weed out the unknowns.

Establish systemwide policies and procedures to begin to proactively manage your system.

After establishing systemwide policies and procedures, start characterizing system workloads.

Use performance metrics and other variables such as workload characterization, vendor requirements or recommendations, industry-recognized benchmarks, and the data that you collect to establish a baseline.

Use the benchmark results only as a guideline or starting point.

Use Task Manager to quickly view performance.

Use performance logs and alerts to capture performance data on a regular basis.

Consider using Microsoft Operations Manager or third-party products to assist with performance monitoring, capacity and data analysis, and reporting.

Carefully choose what to monitor so that the information doesn’t become unwieldy.

At a minimum, monitor the most common contributors to performance bottlenecks: memory, processor, disk subsystem, and network subsystem.

Identify and monitor server functions and roles along with the common set of resources.

Examine network-related error counters.

Automate patch management processes and procedures using Software Update Services, Systems Management Server, or a third-party product.