5

Novel Hybrid Optimal Deep Network and Optimization Approach for Human Face Emotion Recognition

J. Seetha1, M. Ayyadurai2⋆ and M. Mary Victoria Florence3

1Department of Computer Science and Business Systems, Panimalar Engineering College, Chennai, India

2Department of CSE, SRM Institute of Science and Technology, Ramapuram, Chennai, India

3 Department of Mathematics, Panimalar Engineering College, Chennai, India

Abstract

Emotion plays a significant role in shaping a person’s ideas, behavior, and feelings. Using the advantages of deep learning, an emotion detection system can be constructed, and applications such as face unlocking and feedback analysis can be executed with high accuracy. The rapid development of artificial intelligence has made a significant contribution to the technological world. However, achieving optimal recognition remains difficult: interpersonal differences, the intricacy of facial emotions, posture, and lighting, among other factors, are significant obstacles. To resolve these issues, a novel Hybrid Deep Convolutional based Golden Eagle Network (HDC-GEN) model is proposed for the effective recognition of human emotions. The main goal of this research is to create hybrid optimal strategies that classify five diverse human facial expressions. Feature extraction is carried out using the Heap Coupled Bat Optimization (HBO) method. The approach is implemented in MATLAB. The simulation outcomes are compared with conventional methods in terms of accuracy, recall, precision, and F-measure, and the comparison shows the effective performance of the proposed approach in facial emotion recognition.

Keywords: Face emotion recognition, deep learning, optimization, hybrid model, golden eagle optimization, feature extraction, and bat optimization

5.1 Introduction

Emotional analytics combines psychology and technology in an intriguing way. The examination of facial gestures is one of the most widely used methods for recognizing emotions. Basic emotions are thought to be physiologically fixed, intrinsic, and universal to all individuals and many animals [1]. Complex emotions are either a combination of fundamental emotions or a collection of unusual feelings. The major issue is determining which emotions are fundamental and which are complex. By analyzing the information acquired through the ears and eyes, people can detect these signals even when they are subtly expressed. Based on psychological research showing that visual data affects speech intelligibility, it is reasonable to infer that human emotion interpretation follows a predictable pattern [2]. The practice of recognizing human emotions from facial gestures is known as facial expression recognition. Facial expression is a universal signal that all humans use to communicate their mood. Facial expression detection systems are now very important because they can record people’s behavior, sentiments, and intentions [3].

Computer systems, software, and networking are rapidly evolving and becoming more widely used. These systems play a vital part in our daily lives and make life much easier. As a result, facial expression detection as a method of image analysis is quickly expanding. Conceivable uses include human–computer interfaces, psychological assessments, driving-enabled businesses, automation, drunk driver identification, healthcare, and, most importantly, lie detection [4]. The human brain perceives emotions instinctively, and technology that can recognize emotions has recently been developed. Furthermore, Artificial Intelligence (AI) can identify emotions by learning the meaning of each facial expression and can adapt to fresh input.

Artificial Neural Networks (ANN) are currently employed in AI systems [5]. Long Short-Term Memory (LSTM) based RNNs [6], Naive Bayes, K-Nearest Neighbors (KNN), and Convolutional Neural Networks (CNN) [7] are used to tackle a variety of challenges such as excessive makeup, pose, and expression [8]. The properties of swarm intelligence optimization methods, which can execute parallel computation and share information, are of interest for making good use of excess computational resources and enhancing optimization effectiveness [9]. Swarm intelligence algorithms include Particle Swarm Optimization (PSO) and Ant Colony Optimization (ACO), as well as their upgraded variants [10]. Traditional approaches are slow and inaccurate, whereas facial expression detection systems based on deep learning have proven to be superior. Furthermore, typical techniques largely focus on face analysis while keeping the background intact, resulting in a large number of irrelevant and inaccurate features that complicate CNN training.

In this framework, this paper proposes a novel approach for facial emotion recognition using a Hybrid Deep Convolutional based Golden Eagle Network (HDC-GEN) model. Moreover, the finest features are extracted using the HBO method. The current article covers five basic facial expression categories: displeasure/anger, sadness/unhappiness, happiness/smile, fear, and surprise/astonishment. Investigations have been carried out, and the findings reveal that the suggested network outperforms the benchmark and state-of-the-art solutions for emotion recognition based on facial expression images. It offers several advantages over manual correction in terms of training efficiency and performance, especially when computational resources are sufficient. When compared to other standard emotion detection approaches, the proposed method produced better results in analyzing and classifying the emotional data gathered from the investigations.

The article is organized as follows. The traditional methods used for emotion classification are explained in Section 5.2. In Section 5.3, the system model along with the problem definition is provided. The proposed method is described in Sections 5.4 and 5.5. Finally, the findings are provided in Section 5.6, and the conclusions are given in Section 5.7.

5.2 Related Work

Some recent works related to this research are as follows. Humans have traditionally had an easy time detecting emotions from facial expressions, but achieving the same performance with a computer program is rather difficult. Mehendale [11] presented a face emotion identification approach based on convolutional neural networks. Using an expressional vector (EV) of length 24, it was feasible to identify the emotion with 96 percent accuracy. Furthermore, prior to the formation of the EV, a background removal approach is used to avoid many issues that may otherwise arise.

Researchers were able to attain cutting-edge performance in emotion identification by employing deeper architectures. For this reason, Mungra, Dhara, et al. [12] developed the PRATIT model which employs certain image preprocessing processes and a CNN design for face emotion identification. To manage differences in the photos, preprocessing methods including gray scaling, resizing, cropping, and normalization are utilized in PRATIT, which obtains an accuracy rate of 78.52%.

A multimodal attention-based Bidirectional Long Short-Term Memory (BLSTM) network architecture for effective emotion identification is described by Li, Chao, et al. [13]. The optimal temporal features are automatically learned using bidirectional LSTM recurrent neural networks (LSTM-RNNs). The learned deep features are then fed into a DNN, which predicts the probability of each emotional response for every stream. Furthermore, the overall emotion is predicted using a decision-level hybrid method.

Estrada, et al. [14] compared numerous sentiment classification models for the categorization of learning attitudes in an Intelligent Learning Environment named ILE-Java, employing three distinct methodologies: deep learning, machine learning, and an evolutionary technique named EvoMSA. Based on the outcomes of these methods, EvoMSA produced the best results, with an accuracy of 93% for the sentiTEXT corpus and 84% for the eduSERE corpus.

In practice, optimizing hyper-parameters continues to be a problem when developing machine learning models such as CNNs. Therefore, Gao, Zhongke, et al. [15] proposed an automatically optimized model that selects the structure using a binary coding scheme and GPSO with gradient penalties. The GPSO-based algorithm effectively explores the optimum solution, enabling CNNs to perform well in classification across the dataset. The findings demonstrate that this strategy, which is centered on a GPSO-optimized classification algorithm, reached a high recognition rate.

5.3 System Model and Problem Statement

Humans identify expressions on a daily basis with ease, yet automated expression identification remains difficult because it is hard to separate the feature sets of different emotions. Face detection and preprocessing, feature extraction from the face image, and classification are the three phases involved in recognition from facial images. There are major differences in how people express themselves. Due to numerous factors such as background, lighting, and pose, photographs of the same subject with the same expression vary. Emotion recognition is also difficult owing to the variety of input modalities that play a vital part in interpreting information. The task is further complicated by the lack of a large collection of training images and by the difficulty of distinguishing emotions depending on whether the input is a static image or a video clip. The main challenge is the real-time identification of facial emotions that vary drastically.

5.4 Proposed Model

Emotion artificial intelligence is a system that can detect, imitate, understand, and respond to human facial gestures and feelings. Artificial intelligence systems have grown in popularity as a result of their extensive capabilities and ease of use. However, traditional methods have certain drawbacks for the accurate recognition of emotions. Thus, a novel HDC-GEN classification method is proposed in this work for human facial emotion identification. First, the input images are preprocessed using a median filter because the raw dataset contains noise and unwanted elements. Next, knowledge-based face detection and image cropping are performed using MATLAB functions. Feature extraction is carried out by the HBO algorithm to improve the classification. Then, the HDC-GEN algorithm improves the facial expression recognition accuracy. The proposed emotion recognition model is illustrated in Figure 5.1.

Figure 5.1 Proposed model of emotion recognition illustration.

5.4.1 Preprocessing Stage

Preprocessing is the initial step in the process of extracting features from images. It is the phase at which noise is removed via filtering; here, a median filter is employed. Preprocessing is necessary because it removes unwanted noise and improves the clarity of the images.
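The median filtering step can be illustrated with a short sketch. The chapter performs this step in MATLAB and does not state the window size, so the 3 x 3 window, the border handling, and the function name `median_filter` below are assumptions made only for illustration.

```python
import numpy as np

def median_filter(image, size=3):
    """Apply a size x size median filter to a 2-D grayscale image.

    The window size and edge replication are illustrative assumptions;
    the chapter does not specify them.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    # Replicate border pixels so the output keeps the input shape.
    padded = np.pad(image, pad, mode="edge")
    # View every size x size neighbourhood, then take its median.
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1)).astype(image.dtype)

# A single bright outlier (salt noise) in an otherwise flat image.
noisy = np.full((5, 5), 10, dtype=np.uint8)
noisy[2, 2] = 255
clean = median_filter(noisy)
```

Because each window contains at most one outlier, the median replaces the isolated bright pixel with the value of its neighbours while leaving the flat region unchanged.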

5.4.2 Knowledge Based Face Detection

The aim of face detection in this research is to determine the position and size of the face in the entire image. The background of the input image may contain a variety of items, such as a building, people, or trees. In terms of detection accuracy and speed, knowledge-based face detection techniques are the most successful, and the framework used here can process images very quickly while attaining good detection rates. Rectangular features can be computed quickly using the cascade object detector in MATLAB. The integral image at any position is the sum of the pixels above and to the left of it. For example, the integral image at point (m, n) is the sum of the pixels above and to the left of (m, n).
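The integral image idea can be sketched in a few lines. This is a minimal illustration, assuming the usual convention that the sum at a point includes the pixel itself together with everything above and to the left of it; the function names `integral_image` and `rect_sum` are hypothetical and are not MATLAB functions.

```python
import numpy as np

def integral_image(image):
    """Return ii where ii[m, n] is the sum of image[:m + 1, :n + 1]."""
    return image.astype(np.int64).cumsum(axis=0).cumsum(axis=1)

def rect_sum(ii, top, left, bottom, right):
    """Sum of pixels in rows top..bottom and columns left..right (inclusive).

    Uses at most four lookups in the integral image, which is why
    rectangular features are cheap to evaluate.
    """
    total = ii[bottom, right]
    if top > 0:
        total -= ii[top - 1, right]
    if left > 0:
        total -= ii[bottom, left - 1]
    if top > 0 and left > 0:
        total += ii[top - 1, left - 1]
    return int(total)

image = np.arange(16).reshape(4, 4)
ii = integral_image(image)
center_sum = rect_sum(ii, 1, 1, 2, 2)  # pixels 5, 6, 9, 10
```

Once the integral image is built, the sum over any rectangle costs the same regardless of its size, which is what makes a cascade of rectangular features fast.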

5.4.3 Image Resizing

Once the face region has been detected and marked with a bounding box in MATLAB, the cropping function is used to crop the face area from the input image. The facial region inside each sample image is clipped at this point.
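As a rough illustration of the cropping step, the sketch below cuts a face region out of an image array. It assumes a zero-indexed bounding box given as (x, y, width, height); both that layout and the helper name `crop_face` are assumptions for illustration, not the chapter's MATLAB code.

```python
import numpy as np

def crop_face(image, bbox):
    """Crop the region described by bbox = (x, y, width, height).

    x is the column and y is the row of the top-left corner
    (zero-indexed, an assumption). The box is clipped to the image.
    """
    x, y, w, h = bbox
    rows, cols = image.shape[:2]
    top, left = max(y, 0), max(x, 0)
    bottom, right = min(y + h, rows), min(x + w, cols)
    return image[top:bottom, left:right]

image = np.arange(100).reshape(10, 10)
face = crop_face(image, (2, 3, 4, 5))      # 5 rows by 4 columns
clipped = crop_face(image, (8, 8, 5, 5))   # box extends past the border
```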

5.4.4 Feature Extraction

The right and left eyes, nose, and mouth are among the features examined in the suggested technique. The images are then rescaled to maintain the same distance between the midpoints of the left and right eyes and rotated such that the line connecting the two is horizontal. Next, all feature parameters are initialized in the HBO method. Feature extraction is done by maximum and minimum point estimation using Equation 5.1:

where di is the feature point, dmin is the minimum distance rate, dmax is the maximum distance rate, and δ is the random path of selection. Furthermore, the priority of feature extraction is estimated by the fitness in Equations 5.2 and 5.3:

where n denotes the time step, X⋆ is the best point, and the remaining term is the optimal fitness speed. The extraction condition for each feature is evaluated by the fitness of the heap using Equation 5.4:

where f is the objective function, t is the current iteration, n is the number of feature vectors, and βn is the nth feature vector. The intensity value and the row and column position scores are computed for each pixel in the fixed regions (nose, right eye, left eye, and mouth). The neutral image as well as the emotion image is used to calculate the feature quality. For training, the feature values of all the images are provided to the proposed hybrid network.

5.5 Proposed HDC-GEN Classification

Classification is the process of predicting specific outcomes based on a set of inputs. The method uses a training set, which consists of a collection of attributes and their related outcomes, sometimes referred to as the target or prediction attribute, to predict the outcome. The algorithm tries to find correlations between the attributes that might help predict the outcome. The HDC-GEN network consists of three layers: the input layer, the hidden layer, and the output layer. The HDC-GEN algorithm is the combination of an improved deep CNN and Golden Eagle Optimization. The learning function in the hidden units is driven by the golden eagle fitness function.

Initialization: Initialize the featured data as (aj, bj, cj). The kernel function is applied to the input using Equation 5.5:

where m is the input image feature, n is the convolution kernel, τ denotes the time delay, and t denotes time. The tangent hyperplane is evaluated for the kernel exploration by Equation 5.6:

where h1, h2, …, ha are the normal vectors and c1, c2, …, ca are the decision vectors of the ith node.
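The kernel step is described in terms of an input m, a kernel n, a delay τ, and time t, which matches the usual form of a convolution. The sketch below shows the discrete analogue of that operation; it is an illustration under that assumption and is not a reproduction of Equation 5.5. The function name `convolve_1d` is hypothetical.

```python
import numpy as np

def convolve_1d(m, n):
    """Discrete convolution: out[t] = sum over tau of m[tau] * n[t - tau]."""
    m = np.asarray(m, dtype=float)
    n = np.asarray(n, dtype=float)
    out = np.zeros(len(m) + len(n) - 1)
    for t in range(len(out)):
        for tau in range(len(m)):
            # Only terms where the shifted kernel index is valid contribute.
            if 0 <= t - tau < len(n):
                out[t] += m[tau] * n[t - tau]
    return out

signal = [1.0, 2.0, 3.0]   # stands in for the input feature m
kernel = [0.0, 1.0, 0.5]   # stands in for the kernel n
response = convolve_1d(signal, kernel)
```

The explicit double loop mirrors the summation over τ; in practice `numpy.convolve` computes the same result.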

Max-Pooling Function: Once the kernel function has been applied, the max-pooling operation is executed. Max pooling extracts the invariant features from the kernel layer and turns the extracted features into a set of feature images. The max-pooling function is applied to all portions of the featured images using Equation 5.7:

where o denotes the section range and p is the window width.
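A minimal max-pooling sketch is given below. The 2 x 2 window and stride of 2 are assumptions chosen for illustration, since the chapter does not state the values used, and the function name `max_pool2d` is hypothetical.

```python
import numpy as np

def max_pool2d(feature_map, window=2, stride=2):
    """Keep the maximum of each window x window patch of a 2-D feature map."""
    rows = (feature_map.shape[0] - window) // stride + 1
    cols = (feature_map.shape[1] - window) // stride + 1
    pooled = np.empty((rows, cols), dtype=feature_map.dtype)
    for i in range(rows):
        for j in range(cols):
            r, c = i * stride, j * stride
            pooled[i, j] = feature_map[r:r + window, c:c + window].max()
    return pooled

feature_map = np.array([
    [1, 3, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
])
pooled = max_pool2d(feature_map)
```

Each output value summarizes one patch, so small shifts of a feature inside a patch leave the pooled value unchanged, which is the sense in which pooled features are invariant.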

Optimized Fully Connected Training Layer: The fully connected layer of HDC-GEN is used to learn non-linear combinations of the data. The images are first flattened into column vectors. For each training iteration, the flattened vector is then fed into a feed-forward deep network trained with backpropagation. In this stage, the training set teaches the network the correct class for each input. The feed-forward propagation is evaluated in Equation 5.8:

The training may be characterized using a vector that starts at the current position of the data and ends at the ideal weight points in the data memory. The training vector for classification is computed using Equation 5.9:

Figure 5.2 Flowchart for proposed human emotion recognition.

where the three terms are, respectively, the training exploitation vector, the best location of data visited for the emotion, and the present position of the feature point. Furthermore, the trained data is normalized by the Softmax operation before classification.

Classification: Based on the probabilities from the optimized training stage, the emotions are classified further using Equation 5.10:

where z denotes the weight vector, y is the label variable, x denotes the features of the sample, and m is the class label. As a result, the emotions are classified using the trained network. The procedure is repeated until the best result has been achieved and stops once the best-matching emotion has been identified. Finally, the suggested approach’s performance is assessed using several metrics. Figure 5.2 shows the flowchart for the suggested approach of human emotion recognition.
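The Softmax and classification steps can be sketched as follows. The raw scores below are made-up numbers, and the label order and function names are assumptions for illustration only; the sketch shows the generic Softmax-then-argmax pattern rather than the exact form of Equation 5.10.

```python
import numpy as np

# The five emotion classes named in the chapter; the order is an assumption.
EMOTIONS = ["anger", "sadness", "fear", "surprise", "happiness"]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to one."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def classify(scores):
    """Return the most probable emotion and the full probability vector."""
    probs = softmax(np.asarray(scores, dtype=float))
    return EMOTIONS[int(np.argmax(probs))], probs

# Hypothetical network outputs for one image.
label, probs = classify([0.1, 0.2, 0.0, 0.3, 2.5])
```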

5.6 Result and Discussion

All experiments were run on a Windows platform with an Intel i5 octa-core processor and 8 GB of RAM, using MATLAB 2018a. Initially, the Extended Cohn–Kanade expression dataset was used to assess the algorithm’s efficiency. As the number of images in the collection increases, so does the accuracy. The dataset was first divided into two parts: a training set with 80% of the data and a testing set with 20% of the data. During validation, the trained network was loaded and supplied with the whole testing set, one image at a time. Each of these was a new image that the model had not seen before, and it was preprocessed before being input to the model. The model therefore did not know the proper output and had to predict it based on its training alone. It proceeded to categorize the emotion depicted in the image based only on what it had previously learned and on the image’s features. As a result, for each image, it generated a list of probabilities for the identified emotions. The number of correct predictions was calculated by comparing the highest-probability emotion for each image with the true emotion associated with that image.
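The evaluation protocol described above can be sketched as an 80/20 split followed by counting top-probability matches. The random shuffling, the seed, and the function names `split_indices` and `count_correct` are assumptions for illustration; the chapter does not say how the split was drawn.

```python
import numpy as np

def split_indices(n_samples, train_fraction=0.8, seed=0):
    """Shuffle sample indices and split them into training and testing sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    cut = int(round(train_fraction * n_samples))
    return order[:cut], order[cut:]

def count_correct(probabilities, true_labels):
    """Count images whose highest-probability class matches the true label."""
    predicted = np.argmax(probabilities, axis=1)
    return int(np.sum(predicted == np.asarray(true_labels)))

train_idx, test_idx = split_indices(100)

# Hypothetical per-image class probabilities for three test images.
probabilities = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
n_correct = count_correct(probabilities, [1, 0, 0])
```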

5.6.1 Performance Metrics Evaluation

The performance of the proposed method is validated using the following metrics: accuracy, precision, F-measure, recall, error rate, and processing time.

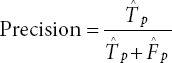

Precision: Precision is one of the significant evaluation measures for the performance analysis of the proposed emotion classification. It is the ratio of correctly predicted positive emotions to all predicted positive emotions, expressed using Equation 5.11:

where the two terms are the numbers of true positives and false positives.

Accuracy: Accuracy is another important measure for evaluating emotion classification. It is the percentage of emotions that are classified correctly; an accuracy of 100% corresponds to perfect emotion classification. It is estimated using Equation 5.12:

Recall: Recall is the fraction of actual positives that are correctly classified as positive, expressed using Equation 5.13:

F-Measure: The F-measure is the harmonic mean of precision and recall, estimated using Equation 5.14:

Error Rate: The proposed emotion classification error rate is evaluated using Equation 5.15 as:
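The five metrics can be computed from confusion-matrix counts as sketched below. The formulas are the standard definitions and are assumed to correspond to Equations 5.11 to 5.15; the counts in the example are made up, and the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive and negative counts.

    Assumes every denominator is non-zero.
    """
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)          # correct positives among predicted positives
    recall = tp / (tp + fn)             # correct positives among actual positives
    accuracy = (tp + tn) / total        # all correct predictions
    f_measure = 2 * precision * recall / (precision + recall)
    error_rate = (fp + fn) / total      # equals 1 - accuracy
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": accuracy,
        "f_measure": f_measure,
        "error_rate": error_rate,
    }

# Hypothetical counts for one emotion class.
metrics = classification_metrics(tp=8, fp=2, tn=8, fn=2)
```

For a multi-class problem such as the five emotions here, these counts would be taken per class in a one-versus-rest fashion.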

5.6.2 Comparative Analysis

The performance measures attained by the proposed approach are compared with those of the existing models GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in terms of precision, accuracy, recall, error rate, F-measure, and processing time. The precision of the proposed approach for anger, sadness, fear, surprise, and happiness is compared with that of the conventional methods in Figure 5.3. The proposed approach achieved its highest precision for the happy emotion (98.9%), compared with GPSO-CNN (98%), BLSTM-RNN (74%), and SSA-DCNN (96.45%).

Figure 5.3 Comparison of precision over existing methods.

The accuracy of the proposed approach for anger, sadness, fear, surprise, and happiness is compared with that of the existing methods GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in Figure 5.4. The proposed approach achieved its highest accuracy for the happy emotion (99.2%), compared with GPSO-CNN (69%), BLSTM-RNN (86%), and SSA-DCNN (95.33%).

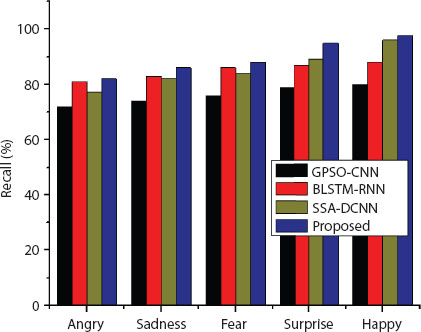

Moreover, the recall of the proposed approach for anger, sadness, fear, surprise, and happiness is compared with that of the existing methods GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in Figure 5.5. The proposed method achieved its highest recall for the happy emotion (97.6%), compared with GPSO-CNN (80%), BLSTM-RNN (88%), and SSA-DCNN (96%).

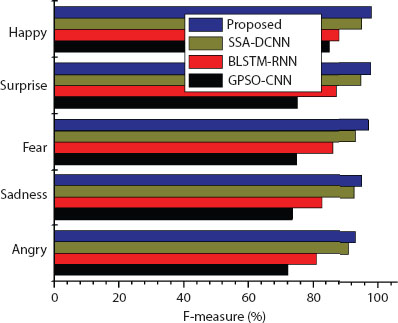

The F-measure for the five emotions is compared with that of the existing methods GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in Figure 5.6. The proposed method achieved its highest F-measure for the happy emotion (97.98%), compared with GPSO-CNN (85%), BLSTM-RNN (88%), and SSA-DCNN (95%).

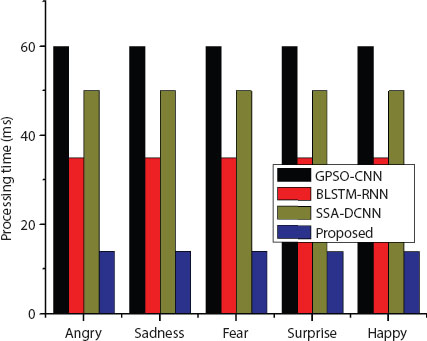

Processing time is also an important measure in this study because a shorter processing time is preferable. The processing time for the five emotions is compared with that of the existing methods GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in Figure 5.7. For the happy emotion, the proposed method required less processing time (14 ms) than GPSO-CNN (60 ms), BLSTM-RNN (35 ms), and SSA-DCNN (50 ms).

Figure 5.4 Comparison of accuracy over existing models.

Figure 5.5 Comparison of recall with existing methods.

Figure 5.6 Comparison of F-measure over conventional models.

Figure 5.7 Comparison of processing time over existing models.

The error rate obtained from the proposed classification model for the five emotions is compared with that of the existing methods GPSO-CNN [15], BLSTM-RNN [13], and SSA-DCNN in Figure 5.8. For the happy emotion, the proposed method achieved a lower error rate (0.01%) than GPSO-CNN (1%), BLSTM-RNN (0.2%), and SSA-DCNN (0.18%).

Figure 5.8 Comparison of error rate over conventional approaches.

5.7 Conclusion

Emotion recognition is a difficult problem that is rising in relevance due to its numerous applications. Facial expressions can be useful in assessing a person’s emotion or mental state. The proposed design solutions were executed effectively, and a computational simulation verified their validity. The major result of this study is the development of a hybrid model and a program for identifying emotions from facial expressions. Each category of emotion has its own set of recognition accuracy metrics. Images with the emotion “happy” have the best recognition accuracy (99.2%), while images with the emotion “sadness” have the worst (97%). Also, the comparative analysis shows that the proposed method achieved superior performance in emotion recognition over the existing models, with higher accuracy, precision, F-measure, and recall, and with lower processing time and error rate. In the future, a new intelligent algorithm can be developed with proper validation on a wider range of human emotions.

References

- 1. Ghanem, Bilal, Paolo Rosso, and Francisco Rangel. “An emotional analysis of false information in social media and news articles.” ACM Transactions on Internet Technology (TOIT) 20.2 (2020): 1-18.

- 2. Dasgupta, Poorna Banerjee. “Detection and analysis of human emotions through voice and speech pattern processing.” arXiv preprint arXiv:1710.10198 (2017).

- 3. Chen, Caihua. “An analysis of Mandarin emotional tendency recognition based on expression spatiotemporal feature recognition.” International Journal of Biometrics 13.2-3 (2021): 211-228.

- 4. Sánchez-Gordón, Mary, and Ricardo Colomo-Palacios. “Taking the emotional pulse of software engineering—A systematic literature review of empirical studies.” Information and Software Technology 115 (2019): 23-43.

- 5. Hemanth, D. Jude, and J. Anitha. “Brain signal based human emotion analysis by circular back propagation and Deep Kohonen Neural Networks.” Computers & Electrical Engineering 68 (2018): 170-180.

- 6. Du, Guanglong, et al. “A convolution bidirectional long short-term memory neural network for driver emotion recognition.” IEEE Transactions on Intelligent Transportation Systems (2020).

- 7. Ashwin, T. S., and Guddeti Ram Mohana Reddy. “Automatic detection of students’ affective states in classroom environment using hybrid convolutional neural networks.” Education and Information Technologies 25.2 (2020): 1387-1415.

- 8. Nonis, Francesca, et al. “Understanding Abstraction in Deep CNN: An Application on Facial Emotion Recognition.” Progresses in Artificial Intelligence and Neural Systems. Springer, Singapore, 2021. 281-290.

- 9. Sarmah, Dipti Kapoor. “A survey on the latest development of machine learning in genetic algorithm and particle swarm optimization.” Optimization in Machine Learning and Applications. Springer, Singapore, 2020. 91-112.

- 10. Liu, Junjie. “Automatic Film Label Acquisition Method Based on Improved Neural Networks Optimized by Mutation Ant Colony Algorithm.” Computational Intelligence and Neuroscience 2021 (2021).

- 11. Mehendale, Ninad. “Facial emotion recognition using convolutional neural networks (FERC).” SN Applied Sciences 2.3 (2020): 1-8.

- 12. Mungra, Dhara, et al. “PRATIT: a CNN-based emotion recognition system using histogram equalization and data augmentation.” Multimedia Tools and Applications 79.3 (2020): 2285-2307.

- 13. Li, Chao, et al. “Exploring temporal representations by leveraging attention-based bidirectional LSTM-RNNs for multi-modal emotion recognition.” Information Processing & Management 57.3 (2020): 102185.

- 14. Estrada, María Lucía Barrón, et al. “Opinion mining and emotion recognition applied to learning environments.” Expert Systems with Applications 150 (2020): 113265.

- 15. Gao, Zhongke, et al. “A GPSO-optimized convolutional neural networks for EEG-based emotion recognition.” Neurocomputing 380 (2020): 225-235.

Note

- ⋆ Corresponding author: [email protected]