Chapter 1

Basics of Computers, Computing, and Programming

In all things it is good to understand some foundational material before going into detail. This helps to ground you and give you some context. Inevitably, you already have some experience with computers and that does give you a bit of context to work in. However, it is quite possible that your experience is limited to rather recent technology. One of the factors that shapes the world of Computer Science is that it is fast moving and ever changing. Knowing how we got to where we are can perhaps help us see where we will be going.

1.1 History

One might think that Computer Science is a field with a rather short history. After all, computers have not existed all that long and have been a standard fixture for even less time. However, the history of Computer Science has deep roots in math that extend far back in time. One could make a strong argument that the majority of Computer Science is not even really about computers. This is perhaps best exemplified in this quote by Edsger Dijkstra, “Computer Science is no more about computers than astronomy is about telescopes.”[8] Instead, Computer Science is about algorithms. An algorithm is a formal specification of a method for solving a problem. The term itself is a distortion of the name al-Khwārizmī. He was a Persian mathematician who lived in the 9th century and wrote The Compendious Book on Calculation by Completion and Balancing, the treatise from whose title the word algebra is derived. He also wrote On the Calculation with Hindu Numerals, which presented systematic methods of applying arithmetic to algebra.

One can go even further back in time depending on how flexibly we use the term computation. Devices for facilitating arithmetic could be considered. That would push things back to around 2400 BCE. Mechanical automata for use in astronomy have also existed for many centuries. However, we will focus our attention on more complete computational devices, those that can be programmed to perform a broad range of different types of computation. In that case, the first real mechanical computer design would have been the Analytical Engine, which was designed by Charles Babbage and first described in 1837. Ada Lovelace is often referred to as the first programmer because her notes on the Analytical Engine included what would have been a program for the machine. For various reasons, this device was never built and, as such, the first complete computers did not come into existence for another 100 years.

It was in the 1940s that computers in a form we would recognize today came into existence. This began with the Zuse Z3, which was built in Germany in 1941. By the end of the 1940s there were quite a few digital computers in operation around the world, including the ENIAC, built in the US in 1946. The construction of these machines was influenced in large part by more theoretical work that had been done a decade earlier.

One could argue that the foundations of the theoretical aspects of Computer Science began in 1931 when Kurt Gödel published his incompleteness theorem. This theorem proved that any formal system of sufficient complexity, including the standard set theory of mathematics, would have statements in it that could not be proved or disproved. The nature of the proof itself brought in elements of computation, as logical expressions were represented as numbers and operations were transformations on those numbers. Five years later, Alan Turing and Alonzo Church created independent models of what we now consider to be computation. In many ways, the work they did in 1936 was the true birth of Computer Science as a field and it enabled that first round of digital computers.

Turing created a model of computation called a Turing machine. The Turing machine is remarkably simple. It has an infinite tape of symbols and a head that can read or write on the tape. The machine keeps track of a current state, which is nothing more than a number. The instructions for the machine are kept in a table. There is one row in the table for each allowed state. There is one column for each allowed symbol. The entries in the table give a symbol to write to the tape, a direction to move the tape, and a new state for the machine to be in. The tape can only be moved one symbol over to the left or right or stay where it is at each step. Cells in the table can also say stop in which case the machine is supposed to stop running and the computation is terminated.

The way the machine works is that you look up the entry in the table for the current state of the machine and symbol on the tape under the head. You then write the symbol from the table onto the tape, replacing what had been there before, move the tape in the specified direction, and change the state to the specified state. This repeats until the stop state is reached.
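To make the table lookup concrete, the following is a minimal sketch of such a machine in Scala. The names `Action`, `run`, and `flipBits` are our own for illustration, as is the choice to treat a missing table entry as the stop instruction and to move the head instead of the tape (the two are equivalent). The `maxSteps` limit is also an assumption, added only so that a table that never stops cannot run forever.

```scala
object TuringMachineSketch {
  // Direction to move the head after writing a symbol.
  sealed trait Move
  case object MoveLeft extends Move
  case object MoveRight extends Move
  case object Stay extends Move

  // One cell of the table: symbol to write, direction to move, next state.
  final case class Action(write: Char, move: Move, next: Int)

  // Symbol found on any part of the tape that has never been written.
  val Blank = '_'

  // Runs the table on the input, starting in state 0 with the head on the
  // first symbol. A (state, symbol) pair with no table entry means "stop".
  def run(table: Map[(Int, Char), Action], input: String, maxSteps: Int = 10000): String = {
    var tape: Map[Int, Char] = input.zipWithIndex.map { case (c, i) => i -> c }.toMap
    var head = 0
    var state = 0
    var steps = 0
    var running = true
    while (running && steps < maxSteps) {
      val symbol = tape.getOrElse(head, Blank)
      table.get((state, symbol)) match {
        case Some(Action(write, move, next)) =>
          tape = tape.updated(head, write) // replace what was under the head
          move match {
            case MoveLeft  => head -= 1
            case MoveRight => head += 1
            case Stay      => ()
          }
          state = next
          steps += 1
        case None =>
          running = false
      }
    }
    if (tape.isEmpty) ""
    else (tape.keys.min to tape.keys.max).map(i => tape.getOrElse(i, Blank)).mkString
  }

  // Hypothetical example table with a single state that flips each bit
  // and stops when it reaches a blank.
  val flipBits: Map[(Int, Char), Action] = Map(
    (0, '0') -> Action('1', MoveRight, 0),
    (0, '1') -> Action('0', MoveRight, 0)
  )

  def main(args: Array[String]): Unit =
    println(run(flipBits, "1011")) // prints 0100
}
```

Even this one-state table shows the full loop: look up the entry for the current state and symbol, write, move, and change state, repeating until no entry applies.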

At roughly the same time that Turing was working on the idea of the Turing machine, Church developed the lambda calculus. This was a formal, math-based way of expressing the ideas of computation. While it looks very different from the Turing machine, it was quickly proved that the two are equivalent. That is to say that any problem you can solve with a Turing machine can be solved with the lambda calculus and the other way around. This led to the so-called Church-Turing thesis stating that anything computable can be computed by a Turing machine or the lambda calculus, or any other system that can be shown to be equivalent to these.
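To give a small taste of how computation can be expressed with nothing but functions, here is a sketch of Church numerals written in Scala. This is only an illustration under simplifying assumptions: the real lambda calculus is untyped, while this version fixes the functions to work on `Int` so that Scala's type checker accepts them. The names `Church`, `succ`, `add`, and `toInt` are our own.

```scala
object ChurchSketch {
  // A Church numeral n is a function that applies another function n times.
  type Church = (Int => Int) => (Int => Int)

  // Zero applies f no times at all.
  val zero: Church = f => x => x

  // The successor of n applies f one more time than n does.
  def succ(n: Church): Church = f => x => f(n(f)(x))

  // Adding a and b applies f b times, then a more times.
  def add(a: Church, b: Church): Church = f => x => a(f)(b(f)(x))

  // Convert back to an ordinary Int by counting applications of "plus one".
  def toInt(n: Church): Int = n(_ + 1)(0)

  val two: Church = succ(succ(zero))
  val three: Church = succ(two)

  def main(args: Array[String]): Unit =
    println(toInt(add(two, three))) // prints 5
}
```

Notice that `zero`, `succ`, and `add` never mention a number directly. Numbers and arithmetic are both built out of function application.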

1.2 Hardware

When we talk about computers it is typical to break the topic into two parts, hardware and software. Indeed, the split goes back as far as the work of Babbage and Lovelace. Babbage designed the hardware and focused on the basic computations that it could perform. Lovelace worked on putting together groups of instructions for the machine to make it do something interesting. Her notes indicate that she saw the further potential of such a device and how it could be used for more than just doing calculations.

This split still exists and is significant because most of this book focuses exclusively on one half of the split, software. To understand the software though, it is helpful to have at least some grasp of the hardware. If you continue to study Computer Science, hopefully you will, at some point, have a full course that focuses on the nature of hardware and the details of how it works. For now, our goal is much simpler. We want you to have a basic mental image of how the tangible elements of a computer work to make the instructions that we type in execute to give us the answers that we want.

Modern computers work by regulating the flow of electricity through wires. Most of those wires are tiny elements that have been etched into silicon and are only tens of nanometers across. The voltage on the wires is used to indicate the state of a bit, a single element of storage with only two possible values, on or off. The wires connect up transistors that are laid out in a way that allows logical processing. While a modern computer processor will include literally hundreds of millions of transistors, into the billions, we can look at things at a much higher level and generally ignore the existence of those individual wires and transistors.

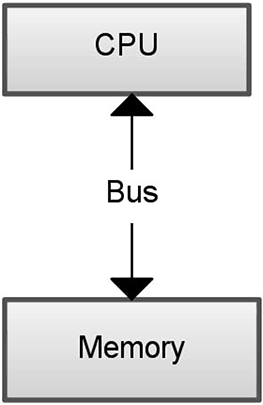

In general, modern computers are built on the von Neumann architecture with minor modifications. John von Neumann was another one of the fathers of computing. One of his ideas was that programs for a computer are nothing more than data and can be stored in the same place as the data. This can be described quite well with the help of the basic diagram in figure 1.1. There is a single memory that stores both the programs and the data used by the program. It is connected to a Central Processing Unit (CPU) by a bus. The CPU, which can be more generally called a processor, has the ability to execute simple instructions, read from memory, and write to memory. When the computer is running, the CPU loads an instruction from memory, executes that instruction, then loads another. This happens repeatedly until the computer stops. This simple combination of a load and execute is called a cycle.

Figure 1.1: This is the most basic view of the von Neumann shared memory architecture. A CPU and the memory are connected by a bus. The memory stores both data and the programs themselves. The CPU can request information from memory or write to memory over the bus.

One of the things the CPU does is to keep track of the location in memory of the next instruction to be executed. We call a location in memory an address. By default this moves forward each time by the appropriate amount to get to the next instruction. Different types of computers can have different instructions. All computers will have instructions to read values from memory, store values to memory, do basic math operations, and change the value of the execution address to jump to a different part of the program.
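The cycle of loading and executing instructions can be sketched as a short Scala program. Everything specific here is an assumption made for illustration: the opcode numbers, the two-number instruction format, and the single accumulator named `acc` do not correspond to any real processor. What the sketch does share with the description above is that one array holds both the program and its data, and that the execution address moves forward by default unless a jump changes it.

```scala
object FetchExecuteSketch {
  // Hypothetical opcodes for a toy machine. Each instruction occupies two
  // memory cells: the opcode followed by an address operand.
  val Halt = 0  // stop the machine
  val Load = 1  // acc = memory(address)
  val Add = 2   // acc = acc + memory(address)
  val Store = 3 // memory(address) = acc
  val Jump = 4  // continue execution at address

  // Runs the program stored in memory and returns the final memory contents.
  def run(initial: Array[Int]): Array[Int] = {
    val memory = initial.clone()
    var pc = 0  // address of the next instruction
    var acc = 0 // the single working value of this toy CPU
    var running = true
    while (running) {
      val opcode = memory(pc)      // load the instruction
      val address = memory(pc + 1)
      pc += 2                      // by default, move to the next instruction
      opcode match {               // execute it
        case Halt  => running = false
        case Load  => acc = memory(address)
        case Add   => acc += memory(address)
        case Store => memory(address) = acc
        case Jump  => pc = address
        case other => sys.error(s"Unknown opcode $other")
      }
    }
    memory
  }

  // Program in cells 0-7, data in cells 8-10: add cells 8 and 9 into cell 10.
  val program: Array[Int] = Array(
    Load, 8,
    Add, 9,
    Store, 10,
    Halt, 0,
    20, 22, 0
  )

  def main(args: Array[String]): Unit =
    println(run(program)(10)) // prints 42
}
```

Because the program is just numbers in memory, nothing but convention separates the instructions in cells 0 through 7 from the data in cells 8 through 10.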

The individual instructions that computers can do are typically very simple. Computers get their speed from the fact that they perform cycles very quickly. Most computers now operate at a few gigahertz. This means that they can run through a few billion instructions every second. There are a lot of complexities to real modern computers that are needed to make that possible which are not encompassed by this simple image of a computer. In many ways, these are details that we do not have to worry about too much at the beginning, but they are important in professional programming because they can have a profound impact on the performance of a program.

We will spend just a few paragraphs talking about two of the complexities in modern processors as they are probably the most significant. One of them will even impact material later in this book. The first is the arrangement of memory. While the simple model shows a single block of memory connected to the CPU, modern processors have an entire memory hierarchy. This hierarchy allows machines to effectively have access to very large amounts of storage while generally giving very fast access to what is really needed.

When you talk about memory on a computer, there are two things that make it into the normal machine specifications that are advertised most of the time. Those are the amount of RAM (Random Access Memory) and the amount of disk space. The latter is generally much larger than the former. Typically, the larger a type of memory is, the slower it is, and this is true of disks and RAM. Disk drives are significantly slower to access than RAM, though what is written to them stays there even when the machine is turned off. RAM is faster than the disk, but it still is not fast enough to respond at the rate that a modern processor can use it. For this reason, processors generally have smaller amounts of memory on them called cache. The cache is significantly faster than the RAM when it comes to how fast the processor can read or write values. Even that is not enough anymore though, and modern processors will include multiple levels of cache referred to as L1, L2, L3, etc. Each level up is generally bigger, but also further from the processor and slower.

Some applications have to concern themselves with these details because the program runs faster if it will fit inside of a certain cache. If a program uses a small enough amount of memory that it will fit inside of the L2 cache, it will run significantly faster than if it does not. We won’t generally worry about this type of issue, but there are many professional developers who do.

The other significant difference is that modern processors have multiple cores. What that means in our simple picture is that the CPU is not doing one instruction at a time, it is doing several. This is what we call parallel processing. When it happens inside of a single program it is referred to as multithreading. This is a huge issue for programmers because programs have not historically included multithreading and new computers require it in order to fully utilize their power. Unfortunately, making a program multithreaded can be difficult. Different languages offer different ways to approach this. This is one of the strengths of Scala and we will discuss it at multiple points in Part II of the book.

1.3 Software

The programs that are run on hardware are typically called software. The software is not a physical entity. However, without some type of software, the hardware is useless. It is the running of a program that makes hardware useful to people. As with the hardware, software can be seen as having multiple parts. It also has a layering or hierarchy to it. At the base is a layer that gives the computer the initial instructions for what to do when it is turned on. This is often called the BIOS (Basic Input/Output System). The BIOS is generally located on a chip in the machine instead of on the more normal forms of memory like the disk or RAM. Instructions stored in this way are often called firmware. This term implies that it is between the software and the hardware. The firmware really is instructions, just like any other software. In a sense it is less soft because it is stored in a way that might be impossible to write to or which is harder to write to.

The BIOS is responsible for getting all the basic functionality started up on the machine. Sitting on top of the BIOS is the Operating System (OS). The Operating System is responsible for controlling the operations of the machine and how it interacts with the user. The OS is also responsible for writing files to disk and reading files from disk. In addition, it has the job of loading other programs into memory and getting them started. Over time, the amount of functionality in operating systems has grown so that they are also expected to present nice interfaces and have all types of other “basic” functionality that really is not so basic.

At the top level are the programs that the user runs. When the user instructs the operating system to run a program, the operating system loads that program into memory and sets the execution address so that the computer will start running the program. The breadth of what programs can do is nearly unlimited.1 Everything that runs on every digital device in the world is a program. You use them to type your papers and do your e-mail. They likely also run the fuel injection system in your car and control the lights at intersections. Programs regulate the flow of electricity through the power grid and the flow of water to your indoor plumbing. Programs do not just give you the apps on your phone, they are running when you talk on the phone to compress your speech into a digital form and send it out to a local tower where another program examines it and sends it on toward the destination. On the way, it likely passes through multiple locations and gets handled by one or more programs at each stop. At some point on the other end, another program takes the digital compressed form and expands it back out to analog that can be sent to a speaker so the person you are talking to can hear it. Someone wrote each of those programs and over time more programs are being written that serve more and more different purposes in our lives.

In the last section we mentioned that newer processors have multiple cores on them. The availability of multiple cores (and perhaps multiple processors) is significant for software as well. First, they give the OS the ability to have multiple things happening at one time. All but the simplest of operating systems perform multitasking. This allows them to have multiple programs or processes running at one time. This can be done on a single core by giving each process a short bit of time and then switching between them. When there are multiple cores present, it allows the programs to truly run all at once.

Each process can also exploit its own parallelism by creating multiple threads. The OS is still generally responsible for scheduling what threads are active at any given time. This allows a single program to utilize more of the resources of a machine than what is present on a single core. While this does not matter for some specialized applications, the use of multiple cores has become more and more commonplace and the core count on large machines as well as smaller devices is currently climbing at an exponential rate. As a result, the need to multithread programs becomes ever more vital.
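As a hedged preview of what splitting work across cores can look like, the sketch below adds up the numbers from 1 to n by dividing the range into pieces and summing each piece in its own `Future` from the Scala standard library. The function name `parallelSum` and the way the range is split are our own choices for illustration. Futures run on a pool of threads managed for you, so this is one possible approach and not the only way to multithread a program.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object ParallelSumSketch {
  // Sums 1 to n by splitting the range into `parts` chunks. Each chunk is
  // summed in its own Future, which may run on a different core.
  def parallelSum(n: Int, parts: Int): Long = {
    val chunkSize = (n + parts - 1) / parts // round up so no number is skipped
    val futures = (0 until parts).map { p =>
      val start = p * chunkSize + 1
      val end = math.min(n, (p + 1) * chunkSize)
      Future { (start to end).foldLeft(0L)(_ + _) }
    }
    // Wait for every partial sum, then combine them.
    Await.result(Future.sequence(futures), 10.seconds).sum
  }

  def main(args: Array[String]): Unit =
    println(parallelSum(1000000, 4)) // prints 500000500000
}
```

The pieces can be computed independently because no piece needs the result of another. Finding work that can be divided this way is a large part of what makes multithreading challenging.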

This increasing number of cores in machines has led to another interesting development in the area of servers. Servers are more powerful computers that are used by companies to store large amounts of data and do lots of processing on that data. Everything you do on the web is pulling data from servers. If you are at a University, odds are that they have at least one room full of servers that act as a control and data storage center for the campus.

The nature of the work that servers do is often quite different from a normal PC. A large fraction of their job is typically just passing information around and the workload for that can be very unevenly distributed. The combination of this and multiple cores has led to an increase in the use of virtualization. A virtual machine is a program that acts like a computer. It has a BIOS and loads an OS. The OS can schedule and run programs. This whole process happens inside of a program running potentially on a different OS on a real machine. Using virtualization, you can start multiple instances of one or more operating systems running on a single machine. As long as the machine has enough cores and memory, it can support the work of all of these virtual machines. Doing this cuts down on the number of real machines that have to be bought and run, reducing costs in both materials and power consumption.

1.4 Nature of Programming

Every piece of software, from the BIOS of each device to the OS and the multitude of applications they run is a program that was written by a programmer. So what is this thing we call programming and how do we do it? How do we give a computer instructions that will make it do things for us? In one sense, programming is just the act of giving the computer instructions in a format that it can work with. At a fundamental level, computers do nothing more than work with numbers. Remember the model in Figure 1.1. Each cycle the computer loads an instruction and executes it. There was a time when programming was done by writing the instructions that the machine executes. We refer to the language of these instructions as machine language. While machine language is really the only language that the computer understands, it is not a very good language for humans to work in. The numbers of machine language do not hold inherent meaning for humans, and it is very easy to make mistakes. For this reason, people have developed better ways to program computers than to write out machine language instructions.

The first step up from machine language is assembly language. Assembly language is basically the same as machine language in that there is an assembly instruction for each machine language instruction. However, the assembly instructions are entered as words that describe what they do. The assembly language also helps to keep track of how things are laid out in memory so that programmers do not have to actively consider such issues the way they do with machine language. To get the computer to understand assembly language, we employ a program that does a translation from assembly language to machine language. This program is called an assembler.
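The translation an assembler performs can be sketched in a few lines of Scala. The mnemonics and the numbers they map to are hypothetical and were chosen only for this example; real assembly languages are specific to each type of processor. This sketch also leaves out the memory layout help mentioned above and handles only the word-to-number translation.

```scala
object AssemblerSketch {
  // Hypothetical instruction words and the numbers the toy machine uses.
  val opcodes: Map[String, Int] = Map(
    "HALT" -> 0,
    "LOAD" -> 1,
    "ADD" -> 2,
    "STORE" -> 3,
    "JUMP" -> 4
  )

  // Translates lines such as "LOAD 8" into pairs of numbers. An instruction
  // written without an address gets an address of 0.
  def assemble(source: String): Vector[Int] =
    source.split("\n").toVector.map(_.trim).filter(_.nonEmpty).flatMap { line =>
      val parts = line.split("\\s+")
      val address = if (parts.length > 1) parts(1).toInt else 0
      Vector(opcodes(parts(0).toUpperCase), address)
    }

  def main(args: Array[String]): Unit =
    println(assemble("LOAD 8\nADD 9\nSTORE 10\nHALT"))
    // prints Vector(1, 8, 2, 9, 3, 10, 0, 0)
}
```

The text form on the left of each mapping is what a person reads and writes. The numbers on the right are what the machine would actually execute.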

Even assembly language is less than ideal for expressing the ideas that we want to put into programs. For this reason, other languages have been created. These higher level languages use more complete words and allow a more complex organization of ideas so that more powerful programs can be written more easily. The computer does not understand these languages either. As such, they either employ compilers that translate the higher level languages into assembly then down to machine language or interpreters that execute the instructions one at a time without ever turning them into machine language.

There are literally hundreds of different programming languages. Each one was created to address some deficiency that was seen in other languages or to address a specific need. This book uses the Scala programming language. It is hard to fully explain the benefits of Scala, or any other programming language, to someone who has not programmed before. We will just say that Scala is a very high level language that allows you to communicate ideas to the computer in a concise way and gives you access to a large number of existing libraries to help you write programs that are fun, interesting, or useful.

Early on in your process of learning how to program you will likely struggle with figuring out how to express your ideas in a programming language instead of the natural language that you are used to. Part of this is because programming languages are fundamentally different than natural languages in that they don’t allow ambiguity. In addition, they typically require you to express ideas at a lower level than you are used to with natural language. Both of these are actually a big part of the reason why everyone should learn how to program. The true benefits of programming are not seen in the ability to tell a computer how to do something. The real benefits come from learning how to break problems down.

At a very fundamental level, the computer is a stupid machine. It does not understand or analyze things.2 The computer just does what it is told. This is both a blessing and a curse for the programmer. Ambiguity is fundamentally bad when you are describing how to do things and is particularly problematic when the receiver of the instructions does not have the ability to evaluate the different possible meanings and pick the one that makes the most sense. That means that programs have to be rigorous and take into account small details. On the other hand, you can tell computers to do things that humans would find incredibly tedious, and the computer will do it repeatedly for as long as you ask it to. This is a big part of what makes computers so useful. They can sift through huge amounts of data and do many, many calculations quickly without fatigue-induced errors.

In the end, you will find that converting your thoughts into a language the computer can understand is the easy part. Yes, it will take time to learn your new language and to get used to the nuances of it, but the real challenge is in figuring out exactly what steps are required to solve a problem. As humans, we tend to overlook many of the details in the processes we go through when we are solving problems. We describe things at a very high level and ignore the lower levels, assuming that they will be implicitly understood. Programming forces us to clarify those implicit steps and, in doing so, forces us to think more clearly about how we solve problems. This skill becomes very important as problems get bigger and the things we might want to have implicitly assumed become sufficiently complex that they really need to be spelled out.

One of the main skills that you will develop when you learn how to program is the ability to break problems down into pieces. All problems can be broken into smaller pieces and it is often helpful to do so until you get down to a level where the solution is truly obvious. This approach to solving problems is called a top-down approach because you start at the top with a large problem and break it down into smaller and smaller pieces until, at the bottom, you have elements that are simple to address. The solutions you get are then put back together to produce the total solution.

Another thing that you will learn from programming is that while there are many ways to break down almost any problem, not all of them are equally good. Some ways of breaking the problem down simply “make more sense”. Granted, that is something of a judgment call and might differ from one person to the next. A more quantifiable metric of the quality of how a problem is broken down is how much the pieces can be reused. If you have solved a particular problem once, you do not want to have to solve it again. You would rather use the solution you came up with before. Some ways of breaking up a problem will result in pieces that are very flexible and are likely to be useful in other contexts. Other ways will give you elements that are very specific to a given problem and will not be useful to you ever again.
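Here is a small sketch of what breaking a problem down can look like in Scala. The problem, reporting the average of a list of numbers typed as text, and the function names are our own invention for illustration. The top-level function is written entirely in terms of smaller pieces, and each of those pieces solves a problem that is likely to come up again.

```scala
object TopDownSketch {
  // Piece 1: turn text such as "90, 80, 70" into numbers.
  def parseNumbers(text: String): Vector[Double] =
    text.split(",").toVector.map(_.trim).filter(_.nonEmpty).map(_.toDouble)

  // Piece 2: average a collection of numbers (0.0 if there are none).
  def average(values: Vector[Double]): Double =
    if (values.isEmpty) 0.0 else values.sum / values.length

  // The original problem, solved by putting the pieces together.
  def averageReport(text: String): String =
    "Average: " + average(parseNumbers(text))

  def main(args: Array[String]): Unit =
    println(averageReport("90, 80, 70")) // prints Average: 80.0
}
```

Neither `parseNumbers` nor `average` knows anything about reports, so both could be reused in a program that does something completely different with the numbers.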

There are many aspects of programming for which there are no hard and fast rules on how things should be done. In this respect, programming is much more an art than a science. Like any art, in order to really get good at it you have to practice. Programming has other similarities to the creative arts. Programming itself is a creative task. When you are programming you are taking an idea that exists in your head and giving it a manifestation that is visible to others. It is not actually a physical manifestation. Programs are not tangible. Indeed, that is one of the philosophically interesting aspects of software. It is a creation that other people can experience, but they can not touch. It is completely virtual. Being virtual has benefits. Physical media have limitations on them imposed by the laws of physics. Whether you are painting, sculpting, or engineering a device, there are limitations to what can be created in physical space. Programming does not suffer from this. The ability of expression in programming is virtually boundless. If you go far enough in Computer Science you will learn where there are bounds, but even there the results are interesting because it is possible that the bounds on computation are bounds on human thinking as well.

This ability for near infinite expression is the root of the power, beauty, and joy of programming. It is also the root of the biggest challenge. Programs can become arbitrarily complex. They can become too complex for humans to understand what they are doing. For this reason, a major part of the field of Computer Science is trying to find ways to tame the complexity and make it so that large and complex ideas can be expressed in ways that are also easy for humans to follow and determine the correctness of.

1.5 Programming Paradigms

The fact that there are many ways to break up problems or work with problems has not only led to many different programming languages, it has led to whole families of different approaches that are called paradigms. There are four main paradigms of programming. It is possible others could come into existence in the future, but what appears to be happening now is that languages are merging the existing paradigms. Scala is one of the languages that is blurring the lines between paradigms. To help you understand this we will run through the different paradigms.

1.5.1 Imperative Programming

The original programming paradigm was the imperative paradigm. That is because this is the paradigm of machine language. So all the initial programs written in machine language were imperative. Imperative programming involves giving the computer a set of instructions that it is to perform. Those actions change the state of the machine. This is exactly what you get when you write machine language programs.

The two keys to imperative programming are that the programmer specifically states how to do things and that the values stored by the computer are readily altered during the computation. The converse of imperative programming would be declarative programming where the programmer states what is to be done, but generally is not specific about how. The Scala language allows imperative style programming and many of the elements of this book will talk about how to use the imperative programming style in solving problems.
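The difference between saying how and saying what can be seen in a short Scala sketch. Both functions below compute the sum of the squares of the numbers from 1 to n. The function names are our own. The first spells out each step and repeatedly alters stored values, while the second describes the result and leaves the stepping to the library.

```scala
object ImperativeSketch {
  // Imperative style: explicit steps that change the values of total and i.
  def sumOfSquaresImperative(n: Int): Int = {
    var total = 0
    var i = 1
    while (i <= n) {
      total += i * i
      i += 1
    }
    total
  }

  // More declarative style: square each number from 1 to n and sum them.
  def sumOfSquaresDeclarative(n: Int): Int =
    (1 to n).map(i => i * i).sum

  def main(args: Array[String]): Unit = {
    println(sumOfSquaresImperative(4))  // prints 30
    println(sumOfSquaresDeclarative(4)) // prints 30
  }
}
```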

1.5.2 Functional Programming

Functional programming was born out of the mathematical underpinnings of Computer Science. The first functional languages were actually based very heavily on Church’s lambda calculus. In a way this is in contrast to imperative programming, which bears a stronger resemblance to the ideas in the Turing machine. “Programming” a Turing machine is only loosely correlated with writing machine language, but the general ideas of mutable state and having commands that are taken one after the other are present on the Turing machine. Like the lambda calculus, functional languages are fundamentally based on the idea of functions in mathematics. We will see a lot more of the significance of mathematical functions on programmatic thinking in chapter 5.

Functional languages are typically more declarative than imperative languages. That is to say that you typically put more effort into describing what is to be done and a lot less into describing how it is to be done. For a language to be considered purely functional the key is that it not have mutable state, at least not at a level that the programmer notices. What does that mean? It means that you have functions that take values and return values, but do not change anything else along the way. The only thing they do is give you back the result. In an imperative language, little traces of what has been done can be dropped all over the place. Certainly, functional programs have to be able to change memory, but they always clean up after themselves so that there is nothing left behind to show what they did other than the final answer. Imperative programs can leave alterations wherever they want, and there is no stipulation that they set things back the way they found them. Supporters of functional programming often like to say that functional programs are cleaner. If you think of a program as being a person, this statement makes for an interesting analogy. Were a functional program to enter your room looking for something, you would never know, because it would leave no trace of its passing. At the end, everything would be as it had started except that the program would have the result it was looking for. An imperative program entering your room might not change anything, but more than likely it would move the books around and leave your pillow in a different location. The imperative program would change the “state” of your room. The functional one would not.

Scala supports a functional style of programming, but does not completely enforce it. A Scala program can come into your room and it is easy to set it up so that it does not leave any trace behind, but if you want to leave traces behind you can. The purpose of this combination is to give you flexibility in how you do things. When a functional implementation is clean and easy you can feel free to use it. However, there are situations where the functional style has drawbacks and in those cases you can use an imperative style.
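The room analogy can be sketched in Scala. Both functions below go looking for the largest value in a collection, and the names are our own for illustration. The imperative version sorts the caller's array in place to find its answer, so the "room" is rearranged afterward. The functional version returns the same answer and leaves its input exactly as it found it.

```scala
object RoomSketch {
  // Imperative style: sorting in place changes the array the caller passed in.
  def largestImperative(items: Array[Int]): Int = {
    java.util.Arrays.sort(items)
    items(items.length - 1)
  }

  // Functional style: the input is untouched and only the result comes back.
  def largestFunctional(items: Vector[Int]): Int = items.max

  def main(args: Array[String]): Unit = {
    val shelf = Array(3, 1, 2)
    println(largestImperative(shelf)) // prints 3
    println(shelf.mkString(", "))     // prints 1, 2, 3 (the order has changed)

    val otherShelf = Vector(3, 1, 2)
    println(largestFunctional(otherShelf)) // prints 3
    println(otherShelf.mkString(", "))     // prints 3, 1, 2 (unchanged)
  }
}
```

Neither version is wrong. The point is only that Scala lets you write either one, and that the functional version gives the caller nothing to be surprised by later.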

1.5.3 Object-Oriented Programming

Object-oriented programming is a relative newcomer to the list of programming paradigms. The basic idea of object-oriented programming first appeared in the SIMULA67 programming language. As the name implies, this dates it back to the 1960s. However, it did not gain much momentum until the 1980s when the Smalltalk language took the ideas further. In the 1990s, object-orientation really hit it big and now virtually any new language that is created and expects to see wide use will include object-oriented features.

The basic idea of object-orientation is quite simple. It is that data and the functions that operate on the data should be bundled together into things called objects. This idea is called encapsulation and we will discuss it a fair bit in Part II of this book. This might seem like a really simple idea, but it enables a lot of significant extras that do not become apparent until you have worked with it awhile.
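As a first glimpse of what bundling data with functions looks like, consider this minimal Scala sketch. The `Account` class and its members are hypothetical and exist only for illustration. The stored amount is marked `private`, so code outside the class can only work with it through the functions the class provides.

```scala
object EncapsulationSketch {
  // An object that bundles a piece of data (the amount in cents) with the
  // functions that are allowed to operate on it.
  class Account(initialCents: Int) {
    private var cents = initialCents // hidden from code outside the class

    def deposit(amount: Int): Unit = {
      require(amount > 0, "Deposits must be positive.")
      cents += amount
    }

    def balance: Int = cents
  }

  def main(args: Array[String]): Unit = {
    val account = new Account(100)
    account.deposit(50)
    println(account.balance) // prints 150
  }
}
```

Because `deposit` is the only way to add to the amount, the rule that deposits must be positive is written in exactly one place and cannot be bypassed by other code.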

Object-orientation is not really independent of the imperative and functional paradigms. Instead, object-oriented programs can be written in either a functional or an imperative style. The early object-oriented languages tended to be imperative and most of the ones in wide use today still are. However, there are a number of functional languages now that include object-oriented features.
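One way to picture an object used in a functional style is an object whose data never changes. In the sketch below, the names `Point` and `movedBy` are assumptions made for this illustration. Asking a point to move does not alter it. Instead, you get back a new point.

```scala
// Hypothetical example; the names are illustrative only.
final case class Point(x: Int, y: Int) {
  // Returns a new Point and leaves the original unchanged.
  def movedBy(dx: Int, dy: Int): Point = Point(x + dx, y + dy)
}

object PointDemo {
  def main(args: Array[String]): Unit = {
    val p = Point(1, 2)
    val q = p.movedBy(3, 4)
    println(p) // Point(1,2)
    println(q) // Point(4,6)
  }
}
```

An imperative version of the same object would instead store `x` and `y` in a way that can be changed and would update them in place.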

Scala is a purely object-oriented language, a statement that is not true of many languages. What this means and the implications of it are discussed in detail in later chapters. For most of the first half of this book, the fact that Scala is object-oriented will not even be that significant to us, but it will always be there under the surface.
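One visible consequence, shown here only as a brief and informal sketch, is that even simple values such as numbers can have methods called on them. The object name `EverythingIsAnObject` is made up for this example.

```scala
// Hypothetical demo object; only the name is invented here.
object EverythingIsAnObject {
  def main(args: Array[String]): Unit = {
    val sum = (1).+(2)     // the same as writing 1 + 2
    val text = 42.toString // calling a method directly on a number
    val bigger = 5.max(3)  // a method call on an integer value

    println(sum)    // 3
    println(text)   // 42
    println(bigger) // 5
  }
}
```

You will almost always write `1 + 2` rather than `(1).+(2)`. The point is only that the familiar form is a method call under the surface.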

1.5.4 Logic Programming

The fourth programming paradigm is logic programming. The prime example language is Prolog. Logic programming is completely declarative. As the name implies, programs are written by writing logical statements. It is then up to the language/computer to figure out a solution. This is the least used of the paradigms. This is in large part because of significant performance problems. The main use is in artificial intelligence applications. There is some indication that logic programming could reappear in languages where it is combined with other paradigms, but these efforts are still in the early stages.

1.5.5 Nature of Scala

As was indicated in the sections above, Scala provides a mix of different paradigms. It is a truly hybrid language. This gives you, as the programmer, the ability to solve problems in the way that makes the most sense to you or that best suits the problem at hand. The Scala language directly includes imperative, functional, and object-oriented elements. The name Scala stands for Scalable Language, so it is not completely out of the question that a library could be added in the future to support logic programming to some extent, if a benefit to doing so were found.

1.6 End of Chapter Material

1.6.1 Summary of Concepts

- Computing has a much longer history than one might expect with roots in mathematics.

- Hardware is the term used for the actual machinery of a computer.

- Software is the term used for the instructions that are run on hardware. Individual pieces of software are often called programs.

- The act of writing instructions for a computer to solve a problem in a language that the computer can understand or that can be translated to a form the computer understands is called programming.

- Programming is very much an art form. There are many different ways to solve any given problem, and they can be arbitrarily complex. Knowing how to design good programs takes practice.

- There are four different broad types or styles of programming called paradigms.

- The imperative programming paradigm is defined by explicit instructions telling the machine what to do and a mutable state where values are changed over time.

- The functional paradigm is based on Church’s lambda calculus and uses functions in a very mathematical sense. Mathematical functions do not involve a mutable state.

- Object-orientation is highlighted by combining data and functionality together into objects.

- Logic programming is extremely declarative, meaning that you say what solution you want, but not how to do it.

- Scala is purely object-oriented with support for both functional and imperative programming styles.

1.6.2 Exercises

- Find out some of the details of your computer hardware and software.

  - (a) Processor

    - Who makes it?

    - What is the clock speed?

    - How many cores does it have?

    - How much cache at different cache levels? (optional)

  - (b) How much RAM does the machine have?

  - (c) How much non-volatile storage (typically disk space) does it have?

  - (d) What operating system is it running?

- If you own a tablet computer, repeat exercise 1 for that device.

- If you own a smartphone, repeat exercise 1 for that device.

- Briefly describe the different programming paradigms and compare them.

- List a few languages that use each of the different programming paradigms.

- Do an Internet search for a genealogy of programming languages. Find and list the key languages that influenced the development of Scala.

- Go to a website where you can configure a computer (like http://www.dell.com). What is the maximum number of cores you can put in a PC? What about a server?

- Go to http://www.top500.org, a website that keeps track of the 500 fastest computers in the world. What are the specifications of the fastest computer in the world? What about the 500th fastest?

1.6.3 Projects

- Search on the web to find a list of programming languages. Pick two or three and write descriptions of the basic properties of each including when they were created and possibly why they were created.

- Compare and contrast the following activities: planning/building a bridge, growing a garden, painting a picture. Given what little you know of programming at this point, how do you see it comparing to these other activities?

- Make a timeline of a specific type of computer technology. This could be processors, memory, or whatever. Go from as far back as you can find to the modern day. What were the most significant jumps in technology and when did they occur?

- One of the more famous predictions in the field of computing hardware is Moore’s law. This is the term used to describe a prediction that the number of components on an integrated circuit will double roughly every 18 months. The name comes from Intel® co-founder Gordon Moore, who noted in 1965 that the number had doubled every year from 1958 to that point and predicted that this would continue for at least a decade. This has now led to exponential growth in computing power and capabilities for over five decades.³ Write a short report on the impact this has had on the field and what it might mean moving forward.

- Investigate and write a short report on some developments in future hardware technology. Make sure to include things that could come into play when the physical limits of silicon are reached.

- Investigate and write a short report on some developments in future software technology. What are some of the significant new application areas that are on the horizon? This should definitely include a look at artificial intelligence and robotics.

- As computers run more and more of our lives, computer security and information assurance become more and more significant. Research some of the ways that computers are compromised and describe how this is significant for programmers writing applications and other pieces of software.

Additional exercises and projects can be found on the website.

¹ There are real limitations to computing that are part of theoretical Computer Science. There are certain problems that are provably not solvable by any program.

² At least they don’t yet. This is the real goal of the field of Artificial Intelligence (AI). If the dreams of AI researchers are realized, the computers of the future will analyze the world around them and understand what is going on.

³ You can see the exponential growth of supercomputer power on plots at Top500.org.