4

Music Systems

Introduction

Music is one of our most powerful tools for affecting how players feel in games. The systems described in this chapter will give you an understanding of some of the key techniques involved in implementing music in games. You will be able to try out your music in these systems and to prototype new ones. It is unlikely that many of these systems would operate in exactly the same way in an actual game engine, because you would typically have a dedicated programmer implementing them at a lower level. However, understanding how the systems and prototypes operate will enable you to describe precisely to the programmer the effect you are after. By looking at the systems you have built in Kismet, your programmer will be able to replicate them far more efficiently. We’ll focus here on techniques to get your music working and sounding good in a game. Although music is typically streamed from disk, it is not immune to the concerns over memory that we discussed in Chapter 2. See the section titled “Streaming” in Appendix B for some specific advice on memory management for music.

As you’re reading this section, you’re obviously interested in writing or implementing music in games, so we’re going to assume some musical knowledge. If you don’t know how to write music, then we’d suggest you put this book down and go and learn about pitch, rhythm, harmony, timbre, texture, instrumentation, and how to structure all these things into a successful musical whole.

You’re back. Well done! Now let’s move on.

If you want to write music for games, you need to learn to write music … for … games. It’s not a shortcut to that film music job you always wanted. You need to play, understand, and love games and, yes, if you skipped straight to this chapter in the hope of a shortcut, you will have to go back and read the earlier chapters properly to understand the implementation ideas ahead. Your music works alongside, and hopefully with, the sound in games, so you need to understand both.

If you take a flick through the following pages and are thinking “but I’m a composer, not a programmer,” then we can understand that initial reaction. However, in games audio you cannot separate the creation from the implementation. If you want your music to sound the best, and be the most effective that it can be, then you need to engage with the systems that govern it. As you will no doubt recognize from the discussions that follow, the methods used to produce and record music for games share a great deal with those used for film, but there are some important differences. As these are beyond the remit of this book, we’ll provide some links to further reading on this topic in the bibliography.

Don’t worry. Writing good music is possibly one of the most complicated things you can do. If you’ve mastered that task, then this part is easy.

Styles and Game Genres

Certain genres of games have become closely associated with particular styles of music. That’s not to say that these traditions can’t be challenged, but you should acknowledge that players may come to your game with preexisting expectations about the style of music they will experience. This is equally true of the producers and designers who may be commissioning the music. The following list of game categories is intended to be a broad overview.

Social/Casual/Puzzle

The music for this type of game is often repetitive. Perhaps there is something in the mesmeric quality of these games that suits the timelessness or suspension of time implied by a repeatedly looping music track. The more pragmatic answer is that many of these games are, by nature, fairly undemanding in terms of processing so that they can run on older platforms or on the web. They will also often be played with the audio switched off (in the office), so music is not thought to be a great consideration and typically receives little investment. This leads to simple repetitive loops, which are the easiest to process (and the cheapest music to make).

Platformer/Arcade

In the public imagination, this is still the genre most closely associated with the idea of “game music.” The synthesized and often repetitive music of early games has a retro appeal that makes music in this genre really a case of its own. What might be considered repetitive level music within other genres is still alive and well here; the iconic feedback sounds of pickups and powerups often blur the line between “music” sounds and “gameplay” sounds; and it is not unusual to find an extremely reactive score that speeds up or suddenly switches depending on game events.

Driving/Simulation/Sports

Driving is closely associated with playing back your own music or listening to the radio, so it is not surprising that the racing genre remains dominated by the licensed popular music soundtrack. In the few cases where an interactive score has been attempted, it has usually been in games that have extended gameplay features such as pursuit. In a straightforward driving game where race position is the only real goal, the repetitive nature of the action and the limited emotional range of the gameplay would perhaps make any interactive score too repetitive. Simulations typically do not have music (apart from in the menu), and sports-based games aim to closely represent the real-life experience of a sporting event, which of course does not tend to have interactive music, apart from perhaps some responsive jingles. On the rare occasions where music is used, it again tends to follow the licensed “radio” model, consisting of a carefully chosen selection of tracks that are representative of the culture or community of the sport.

Strategy (Real Time and Non)/Role-Playing Games/Massively Multiplayer Online Games

The music in this genre is often important in defining both the location and the culture of the player’s immediate surroundings (see “Roles and functions of music in games,” presented later) although it often also responds to gameplay action. Attempting to provide music for an experience that can last an indefinite amount of time, together with the complexities of simultaneously representing multiple characters through music (see the discussions of the “Leitmotif,” below) means that this genre is potentially one of the most challenging for the interactive composer.

Adventure/Action/Shooter

Probably the most cinematic of game genres, these games aim to use music to support the emotions of the game’s narrative. Again, this presents a great challenge to the composer, who is expected to match the expectations of a Hollywood-type score within a nonlinear medium where the situation can change at any time given the choices of the player.

Roles and Functions of Music in Games

There are usually two approaches to including music in a game. The first is to have music that appears to come from inside the reality of the game world. In other words, it is played by, or through, objects or characters in the game. This could be via a radio, a television, a tannoy, or through characters playing actual instruments or singing in the game. This type of music is commonly referred to as “diegetic” music. Non-diegetic music, on the other hand, is the kind with which you’ll be very familiar from the film world. This would be when the sound of a huge symphony orchestra rises up to accompany the heartfelt conversation between two people in an elevator. The orchestra is not in the elevator with them; the music is not heard by the characters in the world but instead sits outside of that “diegesis” or narrative space. (The situation of the game player does confuse these definitions somewhat as the player is simultaneously both the character in the world and sitting outside of it, but we’ll leave that particular debate for another time, perhaps another book.)

Whether you choose to use diegetic or non-diegetic music for your game, and the particular approach to using music you choose, will of course be subject to the specific needs of your game. Here we will discuss a range of roles and functions that music can perform in order to inform your choices.

You’re So Special: Music as a Signifier

Apart from anything else, and irrespective of its musical content, simply having music present is usually an indication that what you are currently experiencing is somehow special or different from other sections of the game that do not have music. If you watch a typical movie, you’ll notice that music is only there during specific, emotionally important sections; it’s not usually wallpapered all over the film (with a few notable exceptions). The fact that these occasions have music marks them as being different, indicating a special emotional significance. In games, music often delineates special events by signifying the start, progress, and completion of tasks or sections of the game. By using music sparsely throughout your game, you can improve its impact and effectiveness in highlighting certain situations. If music is present all the time, then it will have less impact than if it only appears for certain occasions.

This Town Is Coming Like a Ghost Town: Cultural Connotations of Instrumentation and Style

Before you write the first note, it’s worth considering the impact of the palette of instruments you are going to choose. Although the recent trend has been for games to ape the Hollywood propensity for large orchestral forces, it’s also worth thinking about what alternative palettes can bring. The orchestral score has the advantage that it meets, to some extent, the expectations of certain genres. It’s a sound most people are familiar with, so it will probably produce less extreme responses (i.e., not many people are going to hate it). Of course, the melodies, harmonies, and rhythms you use are also essential elements of style, but instrumentation remains the most immediately evocative. By choosing a different set of instruments, you can effectively conjure up a certain time period or geographical area (e.g., a harpsichord for the 17th century, bagpipes for Scotland). If you think of some of the most iconic film music of the previous century (leaving John Williams to one side for a moment), there is often a distinct set of instruments and timbres that has come to influence other composers and define certain genres (think of Ennio Morricone’s scores for the “spaghetti westerns” or of Vangelis’ synthesized score for Blade Runner).

In addition to conjuring up a specific time and place, these instruments—and, of course, the musical language—also bring significant cultural baggage and hence symbolic meaning. Different styles of music are often closely associated with different cultural groups, and by using this music or instrumentation you can evoke the emotions or culture ascribed to these groups. This is particularly effective when using diegetic music (e.g., from a radio), as you are effectively showing the kind of music that these people listen to and therefore defining the kind of people they are.

You Don’t Have to Tell Me: Music as an Informational Device

Sometimes it is appropriate to make the link between the music and the game variables explicit so that the music acts as an informational device. Music can provide information that nothing else does, in which case players need it in order to play the game effectively. The music could act as a warning to the player to indicate when an enemy approaches or when the player has been spotted. The details and consequences of linking music to the artificial intelligence (AI) in this way must be carefully considered. Depending on your intentions, you may not want the music to react simply when an enemy is within a given distance, as this does not take orientation into account. Do you want the music to start even when you can’t see the enemy? Do you want it to start even when the enemy can’t see you? Again, your answer will depend on your particular intentions and what is effective within the context of your game.
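To make those two questions concrete, here is a minimal sketch of how the trigger condition might be expressed. It is not how UDK or any particular engine does it. The `Actor` type, the flat 2D positions, the 90-degree field of view, and the 20-unit radius are all assumptions for illustration, and there is no line-of-sight (occlusion) test, only a field-of-view check.

```python
import math
from dataclasses import dataclass


@dataclass
class Actor:
    x: float
    y: float
    facing_deg: float  # direction the actor faces, in degrees (0 = +x axis)


def can_see(viewer: Actor, target: Actor, fov_deg: float, max_range: float) -> bool:
    """True if target is within max_range and inside the viewer's field of view."""
    dx, dy = target.x - viewer.x, target.y - viewer.y
    distance = math.hypot(dx, dy)
    if distance > max_range:
        return False
    if distance == 0:
        return True
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between the facing direction and the bearing, wrapped to [-180, 180).
    offset = (bearing - viewer.facing_deg + 180) % 360 - 180
    return abs(offset) <= fov_deg / 2


def should_play_warning(player: Actor, enemy: Actor,
                        trigger_radius: float = 20.0,
                        require_player_sees_enemy: bool = False,
                        require_enemy_sees_player: bool = False,
                        fov_deg: float = 90.0) -> bool:
    """Decide whether the warning music should start for this enemy (hypothetical rule set)."""
    # Distance alone: the simplest rule, which ignores orientation entirely.
    if math.hypot(enemy.x - player.x, enemy.y - player.y) > trigger_radius:
        return False
    if require_player_sees_enemy and not can_see(player, enemy, fov_deg, trigger_radius):
        return False
    if require_enemy_sees_player and not can_see(enemy, player, fov_deg, trigger_radius):
        return False
    return True
```

With both flags off, an enemy standing behind the player and facing away still triggers the music. Switching on `require_enemy_sees_player` turns the cue into a "you’ve been spotted" warning instead.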

He’s a Killer: Music to Reveal Character

One of the most powerful devices within film music is the “Leitmotif.” Originally a technique associated with opera, it assigns specific musical motifs to specific characters. For example, the princess may have a fluttery flute melody, and the evil prince’s motif may be a slow dissonant phrase played low on the double bass. The occurrence of the motif can, of course, inform the player of a particular character’s presence, but additionally the manipulation of the musical material of the motif can reveal much about both the nature of the character and what the character is feeling at any given time. You may hear the princess’s theme in a lush romantic string arrangement to tell you she’s in love, in a fast staccato string arrangement to tell you that she’s in a hurry, or with Mexican-type harmonies and instrumentation to tell you that she’s desperately craving a burrito.

You might choose to have explicit themes for particular characters, for broad types (goodies/baddies), or for particular races or social groups. What you do musically with these themes can effectively provide an emotional or psychological subtext without the need for all those clumsy things called “words.”

In Shock: Music for Surprise Effect

A sudden and unexpected musical stab is the staple of many horror movies. One of the most effective ways of playing with people’s expectations is to set up a system that combines the informational and character-based ideas described earlier. In this scenario, the music predictably warns of the approach of a particular dangerous entity by playing that character’s motif. By setting up and continually re-establishing this connection between music and character, you create a powerful expectation. You can then play with this expectation to induce tension or uncertainty in the listener. Perhaps on one occasion you play the music but the character does not actually appear; on another, you override the system to provoke shock or surprise by having the danger suddenly appear, this time without the warning that the player has been conditioned to expect.
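One way to prototype this is a small "director" that scores each approach of the entity. It plays honestly for the first few encounters to build the association, then occasionally breaks it. This is a sketch only. The class name, the three outcomes, the count of three conditioning encounters, and the probabilities are all hypothetical tuning values, not an established method.

```python
import random
from enum import Enum
from typing import Optional


class Encounter(Enum):
    HONEST = "motif plays, then the entity appears"
    FALSE_ALARM = "motif plays, but the entity does not appear"
    AMBUSH = "entity appears with no warning motif"


class MotifWarningDirector:
    """Decides how each approach of a dangerous entity is scored (hypothetical design).

    The first few encounters always play the motif honestly to condition the
    player; after that, a small share of encounters break the pattern.
    """

    def __init__(self, conditioning_encounters: int = 3,
                 false_alarm_chance: float = 0.15,
                 ambush_chance: float = 0.10,
                 rng: Optional[random.Random] = None):
        self.conditioning_encounters = conditioning_encounters
        self.false_alarm_chance = false_alarm_chance
        self.ambush_chance = ambush_chance
        self.rng = rng if rng is not None else random.Random()
        self.encounters = 0

    def next_encounter(self) -> Encounter:
        self.encounters += 1
        # Establish the music-character link before ever subverting it.
        if self.encounters <= self.conditioning_encounters:
            return Encounter.HONEST
        roll = self.rng.random()  # uniform in [0.0, 1.0)
        if roll < self.false_alarm_chance:
            return Encounter.FALSE_ALARM
        if roll < self.false_alarm_chance + self.ambush_chance:
            return Encounter.AMBUSH
        return Encounter.HONEST
```

Passing a seeded `random.Random` makes a play-test session reproducible, which helps when you want to compare how different testers react to the same sequence of surprises.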

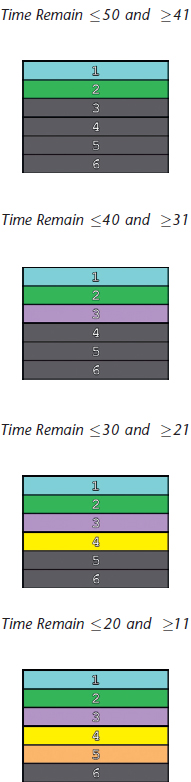

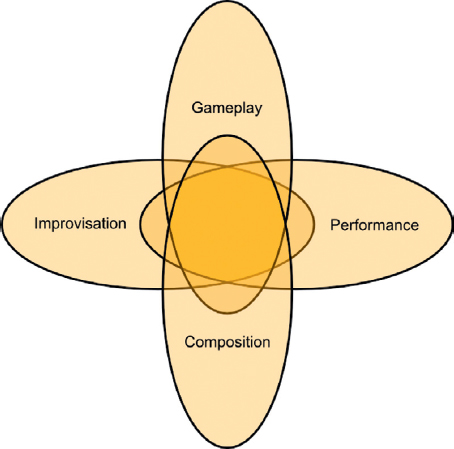

Hey Mickey, You’re So Fine: Playing the Mood, Playing the Action

With a few notable exceptions, music in games tends toward a very literal moment-by-moment playing of the action and mood of the game, rarely using the kind of juxtaposition or counterpoint to the visuals you see in films. When music is used to closely highlight or reinforce the physical action on the screen, it is sometimes referred to disparagingly as “Mickey Mousing.” The term derives, of course, from the style of music in many cartoons, which imitates the actions so precisely that it acts almost as a sound effect, with little in the way of larger-scale musical development. This is possible in a linear cartoon because the action occurs over a fixed and known time period, so you can plan your music carefully and choose a tempo that catches many of the visual hits. Although game music is rarely so closely tied to the physical movement or action taking place (partly because of the difficulty in doing so), it often responds to communicate the game situation by shifting to different levels of intensity or mood depending on gameplay variables such as the presence of enemies, the number of enemies within a given distance of the player, player health, enemy health, weapon condition, amount of ammo, distance from save point or target location, or pickups/powerups. Along with these reactive changes in intensity there might also be “stingers,” short musical motifs that play over the top of the general musical flow and specifically pick out and highlight game events.
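The sketch below shows the shape of such a system. It collapses a couple of gameplay variables into one of a few pre-composed intensity states and collects any stingers to layer on top. The state names, stinger cue names, and thresholds (three enemies, 25% health) are hypothetical. A real design would come out of discussion with the game designer and from play-testing.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical names for four pre-composed intensity states.
INTENSITY_STATES = ["explore", "tension", "combat", "desperate"]

# Hypothetical stinger cues: one-off events mapped to short motifs that play
# over the top of whatever intensity state is current.
STINGERS = {"powerup": "stinger_powerup", "enemy_spotted": "stinger_alert"}


@dataclass
class GameState:
    enemies_in_range: int   # enemies within a given distance of the player
    player_health: float    # 0.0 (dead) to 1.0 (full health)
    events: List[str] = field(default_factory=list)  # events since the last update


def intensity_level(state: GameState) -> int:
    """Collapse continuous gameplay variables into one of a few music states."""
    if state.enemies_in_range == 0:
        return 0            # no threat: stay in "explore" whatever the health
    if state.player_health < 0.25:
        return 3            # in combat and nearly dead
    return 1 if state.enemies_in_range < 3 else 2


def music_update(state: GameState) -> Tuple[str, List[str]]:
    """Return the intensity state to move to and any stingers to layer on top."""
    stingers = [STINGERS[e] for e in state.events if e in STINGERS]
    return INTENSITY_STATES[intensity_level(state)], stingers
```

Keeping the number of states small is a deliberate choice. Each state is a piece of music you have to write, and every extra state multiplies the transitions you have to handle.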

Punish Me with Kisses: Music as Commentary, Punishment, or Reward

Related to playing the action of a game, music can also fulfill an additional function of acting as a punishment or reward to the player. You might use music to comment on actions that the player has just undertaken in a positive or negative way, to encourage the player to do something, or to punish the player for doing the wrong thing.

It’s a Fine Line between Love and Hate

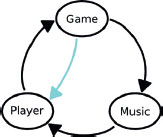

The way in which music and narrative interact could be seen as a continuum between Mickey Mousing at one end and actively playing against the apparent emotion of the scene at the other:


• Hitting the action (Mickey Mousing): synchronizing musical gestures with the physical action on the screen.

• Playing the emotion (Empathetic Music): the music works in empathy with the emotions of the character or characters on the screen.

• Playing the meta-emotion (Narrative Music): the music might not directly reflect the moment-to-moment emotional state of the characters but might instead play a more overarching emotion for the entire scene or indicate some larger-scale narrative point.

• Counterpoint (Anempathetic Music): the music plays against, or is indifferent to, the emotions that appear visually; for example, happy upbeat music juxtaposed with a tragic scene.

Choosing the degree to which music is going to respond to the game action or narrative emotion is a difficult decision. If the music is too reactive, players can feel that they are “playing” the music rather than the game, and this can break their immersion in the game world. If there is too little reaction, then we can miss opportunities to heighten the player’s emotion through music.

Together with the game designer, you need to make emotional sense of the combination of the game’s variables. Music is effective in implying levels of tension and relaxation, conflict and resolution. Choosing whether to score the blow-by-blow action or the larger emotional sweep of a scene will depend on your aims. You can stop the relationship between the game system and the music from becoming too obvious by sometimes choosing not to respond, as this reduces its predictability. However, sometimes you may want a consistent response because the music is serving a more informational role.

Here are some questions to think about when deciding on how to score the action or mood:

• Are you scoring what is happening in terms of highlighting specific actions?

• Are you scoring what is happening in terms of the underlying mood or emotion you want to convey?

• Are you scoring what is happening now, what has happened, or what is about to happen?

Making the Right Choice

Bearing in mind some of the possible functions of music just described, you and your team need to decide what you aim to achieve with the music and then implement an appropriate mechanism to deliver this aim. Let’s be clear: it is perfectly possible to implement music into games effectively using simple playback mechanisms. It depends on what is appropriate for your particular situation. Many people are totally happy with simple linear music that switches clumsily from one piece to another. Look at the time and resources you have available together with what the game actually needs. Some circumstances will be most effective with linear music, and others may need a more interactive approach. Your experience with the prototypes outlined here should help you to make the right choice.

Music Concepting/Spotting/Prototyping/Testing

Most game producers, like most film directors, have little or no understanding of music and how to use it. The difference is that in film this doesn’t matter as much, because the producer hands over the final edit to the composer, who can make the music work with it. The problem for the game composer is that the game producer, who doesn’t really understand music, will not have built a proper system to use it effectively. Either on your own or together with a game designer, you should demonstrate your ideas and get involved in the process as early as possible.

It is not always meaningful to separate this process into distinct stages, because the implementation can (and should) often affect the stylistic considerations, but we will attempt to do so for the purposes of illustration.

Concepting

It’s quite likely that you will have been chosen for a composition role because of music you have written in the past or a particular piece in your show reel. Although this may seem like an established starting point, before you spend lots of time actually writing music, consider putting a set of reference tracks together to establish the kind of aesthetic or tone your producer is after. This may differ from what you thought. Words are an imprecise way of describing music, especially for the many people who lack the appropriate vocabulary, so some actual musical examples can often save a lot of confusion.

Editing together a bunch of music to some graphics or illustrations of the game can be an effective shortcut to establishing the style that the producers are after. You should also discuss other games (including previous games in the series) and movies as reference points. Certain genres of Hollywood film composition have become so established that they are a kind of shorthand for particular emotions (think of John Williams’ mysterious Ark music from Raiders of the Lost Ark, for example). There are many other approaches that could work; so rather than just pastiche these musical archetypes, why don’t you try to bring something new to the table that the producers might not have thought of? (If they hate it, that’s fine, as you will have also prepared another version in the more predictable style.)

Spotting

Spotting is a term taken from the film industry that describes what happens when a director and composer sit down and watch the film together to establish where the music is going to be. During this discussion they not only decide on start points and end points but also discuss the nature of the music in detail, the emotional arc of the scene, and its purpose. Unfortunately, when writing music for games you are often working on an imaginary situation with possibly only a text-based game design document to go on. Along with the producer or designers, you should discuss the roles you want music to play, the desired emotion or goal of the scenario, and how it might reflect the conflict and resolutions within the gameplay or the tempo of the action. You should establish particular events that require music, start points, end points, and how the music will integrate with different gameplay variables such as player health, enemy health, and so on. It’s important to have access to the right data so that you are able to discuss how to translate that data into emotional, and therefore musical, meaning.

During this process you should try to get involved as early as possible to act as a convincing advocate for music. Importantly, this also involves, where appropriate, being an advocate for no music!

Prototyping

As part of the concepting process, it’s important that you try not to be simply reactive, just supplying the assets as requested. To develop game music, you should be an advocate for its use. This means putting your time and effort where your mouth is and demonstrating your ideas.

It’s a continuing irony that decisions on interactive music are often made without the music actually being experienced in an interactive context. Often music will be sent to the designers or producers, who will listen to it as “music” and decide whether or not they like it. However, music for games is not, and should not be, intended to be listened to as concert music. Its primary goal should be to make a better game, so it should be heard in the interactive context within which it will be used. (This is not to say that game music cannot also succeed as concert music, and many pieces do; it is just that the primary purpose of game music should be to be game music.) This problem is exacerbated by the fact that people’s musical tastes are largely formed by their exposure to linear music in films (mostly of the “Hollywood” model).

Some people continue to argue that it is not necessary for composers to write music with the specifics of gameplay in mind, that they should simply write music in the way they always have, and then the game company’s little army of hacks will come along to cut and splice the music for interactive use. In some cases this process can be perfectly adequate, but there should be opportunities for the more intelligent composer to face the challenge more directly.

Although it is possible to prototype and demonstrate your music systems in some of the excellent middleware audio solutions (such as FMOD by Firelight Technologies and Wwise by Audiokinetic), an in-game example is always going to be more meaningful than having to say, “Imagine this fader is the player’s health, this one is the enemy’s proximity, oh, and this button is for when the player picks up an RBFG.”

Either by developing example systems on your own, based on the examples that follow, or by working directly with a game designer, the earlier you can demonstrate how music can contribute to the game, the more integral it will be considered. Remember, the aim is not only to make the use of music better but also, by extension and more importantly, to make the game better.

Testing

The importance of testing and iteration in any game design process cannot be overstated, and music is no exception. As you address these issues, keep in mind that the degree of reactiveness or interactivity the music should have can only be established through thorough testing and feedback. Obviously you will spend a significant amount of time familiarizing yourself with the game to test different approaches yourself, but it is also valuable to get a number of different people’s views. You’d be amazed how many QA departments (the quality assurance departments, whose job it is to test the games) do not supply their testers with decent-quality headphones. Of those actually wearing headphones, you’d also be surprised at the number who are listening to their friend’s latest breakbeat-two-step-gabba-trance-grime-crossover track rather than the actual game.

In addition to determining whether people find that bouzouki intro you love a bit irritating when they have to restart that checkpoint for the 300th time, you may also discover that players approach games in very different ways. Here are a few typical player approaches to “Imaginary Game”: Level 12c:

1. The Creeper. This player uses stealth and shadows to cunningly achieve the level’s objectives without being detected at any point (apart from just before a rather satisfying neck snap).

2. The Blazer. This player kicks open the door, a pistol on each hip, and doesn’t stop running or shooting for the next three minutes, which is how long it will take this player to finish the level compared to the Creeper’s 15 minutes.

3. The Completist. This player is not satisfied until the dimmest crevice of every corner of the level has been fully explored. This player will creep when necessary or dive in shooting for some variation but will also break every crate, covet every pickup, read every self-referential poster and magazine cover, and suck up every drop of satisfaction from level 12c before moving on.

You should also note the positions of any save points and where, and how many times, people restart a level. Do you want your music to repeat exactly the same way each time? By watching these different players with their different approaches, you may find that a one-size-fits-all solution to interactive music does not work, and you may need to consider monitoring the playing style over time and adapting your system to suit it.
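As one small example of not repeating exactly the same way each time, the sketch below counts how often each checkpoint has been started. It rotates through alternative intro cues and, after a few attempts, drops the intro entirely so that the player goes straight into the main loop. The class, the cue names, and the cutoff of three starts are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Optional


class CheckpointMusic:
    """Vary the intro cue each time a player starts from the same checkpoint (hypothetical)."""

    def __init__(self, intro_variations: List[str], skip_intro_after: int = 3):
        self.intro_variations = intro_variations   # hypothetical cue names
        self.skip_intro_after = skip_intro_after   # number of starts that still get an intro
        self.starts: Dict[str, int] = defaultdict(int)

    def on_checkpoint_start(self, checkpoint_id: str) -> Optional[str]:
        """Return the intro cue to play, or None to go straight into the main loop."""
        previous_starts = self.starts[checkpoint_id]
        self.starts[checkpoint_id] += 1
        if previous_starts >= self.skip_intro_after:
            return None
        # Rotate through the variations so consecutive attempts differ.
        return self.intro_variations[previous_starts % len(self.intro_variations)]
```

The same counting approach could feed other play-style measures (time spent undetected, shots fired) into the music system, but which measures matter is something to establish through testing.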

The Key Challenges of Game Music

Once you’ve decided on the main role, or roles, you want the music to play in your game, two fundamental questions remain for implementing music in games, which will be the concerns of the rest of this chapter:

1. The transitions question: If the music is going to react to gameplay, then how are you going to transition between different pieces of music when game events might not coincide with an appropriate point in the music? This does not necessarily mean transitioning from one piece of music to another, but from one musical state to another. Most of the difficulties in using music effectively in games arise from the conflict between a game’s interactive nature, where events can happen at any given moment, and the time-based nature of music, which relies on predetermined lengths in order to remain musically coherent.

2. The variation question: How can we possibly write and store enough music? In a game you typically hear music for a much longer period than in a film. Games may last anything from ten hours up to hundreds of hours. Given huge resources it may be possible to write that amount of music, but how would you store this amount of music within the disk space available?
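A little arithmetic makes both questions concrete. For transitions, one common compromise is to wait for the next bar line before changing state, at the cost of some delay. For variation, you can estimate the storage needed for uncompressed audio. The function names below are our own, and the defaults (4/4, 44.1 kHz, 16-bit stereo PCM) are assumptions for illustration.

```python
import math


def seconds_until_next_bar(position_s: float, bpm: float, beats_per_bar: int = 4) -> float:
    """Time to wait so that a transition lands on the next bar line after position_s."""
    bar_length_s = beats_per_bar * 60.0 / bpm
    next_bar_index = math.floor(position_s / bar_length_s) + 1
    return next_bar_index * bar_length_s - position_s


def uncompressed_bytes(hours: float, sample_rate: int = 44_100,
                       channels: int = 2, bytes_per_sample: int = 2) -> int:
    """Storage for uncompressed PCM audio (defaults: 44.1 kHz, 16-bit stereo)."""
    return int(hours * 3600 * sample_rate * channels * bytes_per_sample)


# A game event arrives 5.0 s into a 120 BPM cue in 4/4 (one bar = 2.0 s).
print(seconds_until_next_bar(5.0, 120))
# Ten hours of uncompressed music at CD quality, in gigabytes.
print(uncompressed_bytes(10) / 1e9)
```

At 120 BPM in 4/4, an event at 5.0 s waits 1.0 s for the bar line at 6.0 s. In the worst case it would wait almost a full 2-second bar, which is exactly the tension between game time and musical time. Ten hours of uncompressed 16-bit stereo at 44.1 kHz comes to 6,350,400,000 bytes (roughly 6.35 GB) before any compression is applied.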

The only way to decide what works is to try the music out in the context to which it belongs—in an actual game.

Source Music/Diegetic Music

Sometimes the music in games comes from a source that is actually in the game world. This is referred to as “diegetic” music in that it belongs to the diegesis, the story world. This is more representative of reality than music that appears to come from nowhere, the “non-diegetic” score you more usually associate with film. The style of diegetic music you choose can carry a strong cultural message about the time, the place, and the people listening to it.

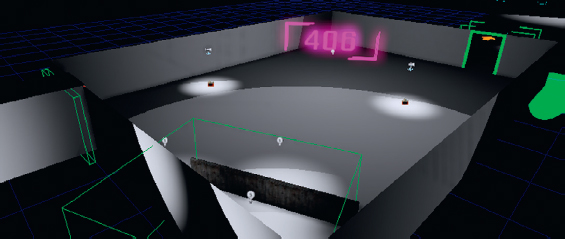

400 Radio Days

Outside of, and on the approach to, Room 400 you can hear two sources of diegetic music—that is, music that originates from “real” objects in the game world, in this case a Tannoy and a Radio. This kind of music can be used to define the time period, location, or culture in which we hear it. For example, if you heard gangsta rap on the radio it would give you the distinct impression that you weren’t walking into a kindergarten classroom!

As well as defining the place where you hear it, you can use sources of music in your games to draw the player toward, or away from, certain areas. The sound of a radio or other source playing would usually indicate that there are some people nearby. Depending on your intentions, this could serve as a warning, or players may also be drawn toward the source of the music out of curiosity.

You can interact with this radio to change channels by pressing E. This plays a short burst of static before settling on the next station. If you so wish, you can also shoot it. You did already, didn’t you?

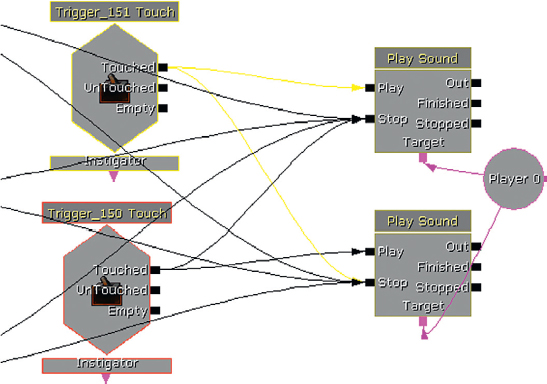

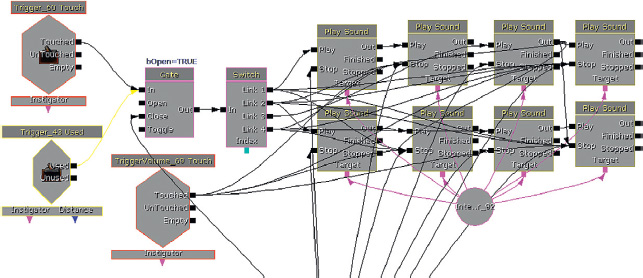

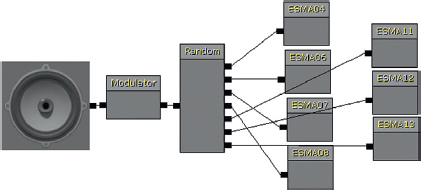

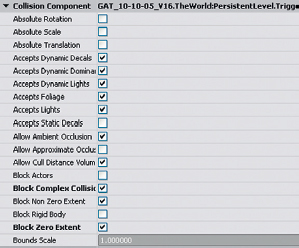

When you enter the outer corridor, a [Trigger] goes through the [Switch] to start the first [PlaySound], which holds our first music [SoundCue]. Notice that the target for the [PlaySound] object is the [StaticMesh] of the radio itself so that the sound appears to come from the radio rather than directly into the player’s ears. This spatialization will only work with mono sound files. As explained in Chapter 3, stereo sound files do not spatialize. (If you really want the impression of a diegetic stereo source that appears spatialized in game, then you’ll have to split your channels and play them back simultaneously through “left” and “right” point sources in the game.)
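In practice you would do this split in your audio editor and import two mono files, one for each point source. The sketch below just shows what the split means for uncompressed PCM data, where stereo samples are stored interleaved (left, right, left, right, and so on). The function name and the list-of-integers representation are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def split_stereo(interleaved: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Split interleaved stereo samples [L0, R0, L1, R1, ...] into two mono channels."""
    if len(interleaved) % 2 != 0:
        raise ValueError("interleaved stereo data needs an even number of samples")
    left = list(interleaved[0::2])    # even positions: left channel
    right = list(interleaved[1::2])   # odd positions: right channel
    return left, right
```

Each resulting mono file can then be attached to its own point source placed on either side of the in-game speaker, so that both channels spatialize.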

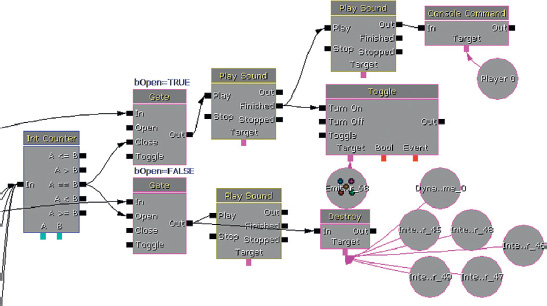

When the [Trigger] surrounding the radio is used, it then switches a new track on (with a [PlaySound] in between for the static crackles) and switches all the others off. In the [Switch]’s properties it is set to loop, and so after switch output 4 has been used it will return to switch output 1. Finally there is a [TriggerVolume] when leaving the room that switches them all off so that this music doesn’t carry over into the next room as well.

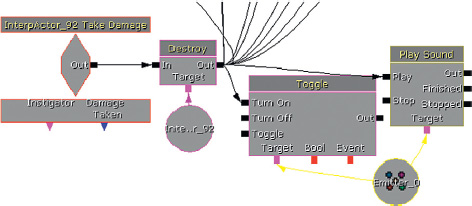

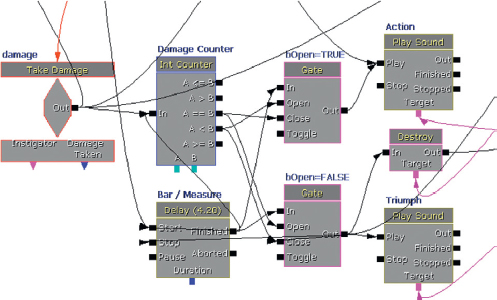

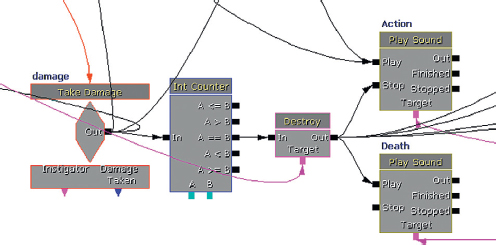

The [Gate] is there so that if or when the player decides to shoot the radio the Touch messages no longer get through. The [Take Damage] event destroys the radio [InterpActor], fires off an explosion [Emitter] and sound, and turns off all the [PlaySound]s. (For more on [Take Damage] events, see Appendix C: UDK Tips).

Exercise 400_00 Diegetic Music

In this room there are several possible sources for music. Add music to the sources. Perhaps include a “use” action to switch them on/off or to change the music that is playing.

Tips

1. In Kismet create a new [PlaySound] object (Right-Click/New Action/Sound/PlaySound, or hold down the S key and click in an empty space in the Kismet window).

2. Add your [SoundCue] to the [PlaySound] as usual by selecting it in the Content Browser and then clicking the green arrow in the [PlaySound]’s properties.

3. To choose where the sound plays from, select a [StaticMesh] in your Perspective view and then right-click on the Target output of the [PlaySound]. Choose “New Object Var Using Static Mesh (***).”

4. Remember from Chapter 3 that any stereo files will be automatically played back to the player’s ears, so if you want your music to sound localized to this object, you should use mono files and adjust the [Attenuation] object’s settings within your [SoundCue].

5. To start your [PlaySound], either use a [Level Start] event (Right-click/New Event/Level Startup), a [Switch] (New Action/Switch/Switch), or a [Trigger].

Linear Music and Looping Music

The music for trailers, cut-scenes, and credits is produced in exactly the same way as you might create music for a film, animation, or advert. Working to picture in your MIDI sequencer or audio editor, you write music to last a specific length of time and hit specific spots of action within the linear visuals.

Composing and implementing music for trailers or cut-scenes is not unique to games audio. Because they last a specific length of time, you can use the same approach you would use to write music for a film or animation. We're interested here in the specific challenges that music for games throws up, so we're not going to dwell on linear music. Instead we're going to work on the presumption that you want your music to react to (and perhaps interact with) gameplay in a smooth, musical way.

Perhaps an exception, in which linear audio starts to become slightly interactive, is the so-called looping "lobby" music that might accompany menu screens. It's "interactive" in the sense that we can stop it to enter or exit the actual game at any time. This is the source of many heinous crimes in games audio. Producers who will lavish time and effort on polishing every other aspect of a game will think nothing of brutally cutting off any music that's playing during these menu scenes (and Pause menus). We understand that if players want to start (or exit) the game, then they want an immediate response. It would be possible to come up with more musical solutions to this, but the least your music deserves is a short fade-out.

Using the Fade Out functionality of the [PlaySound] object in Kismet would at least improve some of the clumsy attempts to deal with this issue.

If you don’t respect the music in your game, it shows that you don’t respect your game. After all, the music is part of your game, isn’t it? Don’t cut off music for menus or do clumsy fades—it’s just not classy!

Avoid the Problem: Timed Event Music

Often the most effective, and least complex, way of using music in games is to use it only for particular scenarios or events that last a set amount of time. This way you can use a linear piece of music that builds naturally and musically to a climax. Although it appears that the player is in control, the scenario is set to last for an exact amount of time, whether the player achieves the objective or not.

Linear music can be very effective if the game sequence is suitably linear as well. An event that lasts a specific amount of time before it is either completed or the game ends allows you to build a linear piece to fit perfectly.

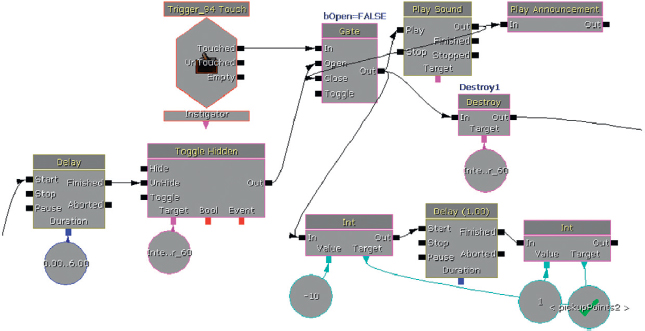

401 Timed Event

On entering this room you are told that the “Flux Capacitor” barring entry to the next room is dangerously unstable. You’ve got to pick up six energy crystals and get them into the Flux Capacitor before she blows. If you manage it, you’ll be able to get to the next room. If you don’t…

Around the room are a number of [InterpActor]s. Each one has a [Trigger] around it so it will sense when it is being "picked up." As you enter the room, a [Trigger] Touch object starts the music track and a series of [Delay]s. The total length of all the [Delay]s is precisely two minutes. After the first delay time, one of the [InterpActor]s is toggled to Unhide by a [Toggle Hidden] object. At the same time, a [Gate] object is opened to allow the [Trigger] that surrounds this [InterpActor] to be used. This can then play the pickup sound and destroy the [InterpActor]. When the player then runs over to deposit the crystal into the Flux Capacitor, the [Trigger] around the Flux Capacitor sends a message through an additional [Gate] to a [Play Announcement], telling the player how many crystals remain.

Each time the pickup is “deposited,” the [Play Announcement] outputs to an [Int Counter]. As we saw earlier, this object increments its value A by a set amount (in this case 1) every time it receives an input. It then compares value A to value B (in this case set to 5). If value A does not equal value B, then the [Gate] that leads to the explosion event is left open. As soon as value A does equal value B (in other words when all five of the energy crystals have been picked up and deposited into the flux capacitor), the A==B output is triggered. This closes the explosion [Gate] and opens the triumph [Gate] so that when the music has finished, its output goes to the appropriate place. This also destroys the [DynamicBlockingVolume] that so far has been blocking our exit.
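The [Int Counter] and the two [Gate]s can be modeled as a small Python sketch. This is a hypothetical illustration of the logic, not UDK code: the class and method names are invented, the counter is assumed to start at zero, and the B value of 5 is taken from the description above.

```python
class IntCounter:
    """Kismet-style [Int Counter]: A increments on each input and is compared with B."""

    def __init__(self, b, increment=1):
        self.a = 0
        self.b = b
        self.increment = increment

    def receive(self):
        self.a += self.increment
        return self.a == self.b  # True corresponds to the A==B output


class TimedEvent:
    """Hypothetical model of Room 401: which [Gate] the music's end is routed to."""

    def __init__(self, crystals_needed):
        self.counter = IntCounter(b=crystals_needed)
        self.explosion_gate_open = True
        self.triumph_gate_open = False
        self.exit_blocked = True  # the [DynamicBlockingVolume]

    def deposit(self):
        if self.counter.receive():
            self.explosion_gate_open = False
            self.triumph_gate_open = True
            self.exit_blocked = False  # blocking volume destroyed

    def music_finished(self):
        # The finished output of the two-minute music cue goes to whichever gate is open.
        if self.triumph_gate_open:
            return "triumph"
        if self.explosion_gate_open:
            return "explosion"
        return None


event = TimedEvent(crystals_needed=5)
for _ in range(4):
    event.deposit()
print(event.music_finished())  # explosion: one crystal short
```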

If you can still read this through your tears of bewilderment, the good news is that in terms of the actual music part of this system, it is probably the simplest you can imagine. When the events are started by the initial [Trigger] touch, this goes to a [PlaySound] object, which plays the music [SoundCue].

The [SoundCue] targets the [Player] so it is played back to the player's ears and does not pan around. This is the most appropriate approach for music that is non-diegetic (i.e., music that does not originate from a source object within the game world).

Avoid the Problem: Non-Time-Based Approaches Using Ambiguous Music

The problems with musical transitions in response to gameplay events discussed earlier (and continued in the following examples) can in certain circumstances be avoided by using music that is ambiguous in tonality and rhythm. People will notice if a rhythm is interrupted, they will notice if a chord sequence never gets to resolve, and they will notice if a melody is left hanging in space. If none of these elements is there in the first place, then you won't have set up these expectations. So should you avoid rhythm, tonality, and melody altogether? You might well ask whether there's any music left. Well, if you're working in the horror genre, it's your lucky day, as this genre typically makes use of the advanced instrumental and atonal techniques developed by 20th-century composers, which can deliver the kind of flexible ambiguity we're after.

402 Fatal Void

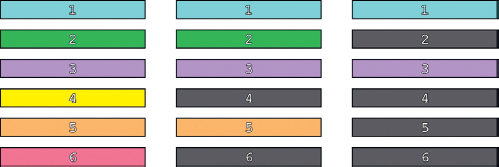

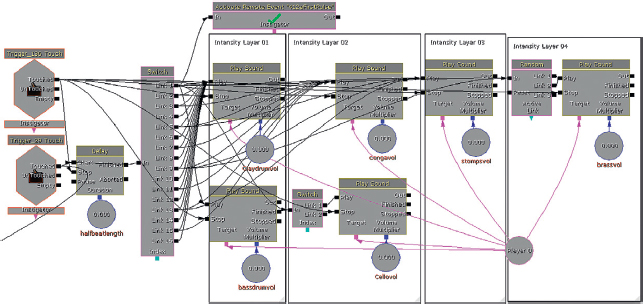

In this room there are three “bad things.” Each of these bad things carries a “scare” rating of 1. So if two overlap where you’re standing, then you have a scare rating of 2. If you are in the area where all three overlap, then things are looking bad with a scare rating of 3.

These scare ratings are associated with music of a low, medium, and high intensity. The music itself is fairly static within its own intensity. As you listen, notice that no real rhythmic pulse has been established, nor is any particular chord or tonality used. This makes it less jarring when the music crossfades between the three levels. (This is our homage to the music of a well-known game. If you haven’t guessed already, see the bibliography.)

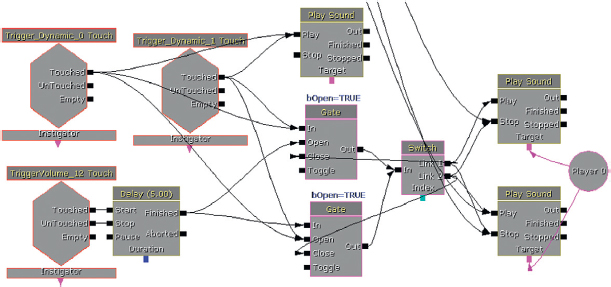

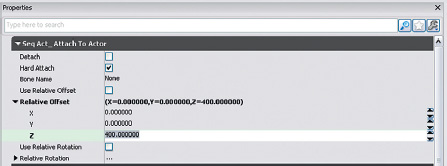

On level start, a [Trigger_Dynamic] (Actor Classes/Trigger/Trigger_Dynamic) and a [Particle Emitter] are attached to each of the two moving lights using an [Attach to Actor] object. A [TriggerVolume] on entry to the room starts the movement of the two colored lights, which is controlled in the usual way by a [Matinee] object. The [Trigger_Dynamic]s are movable triggers, which will now follow the movement of the lights. As the lights are higher up and we want the triggers to be on the floor, we have offset the triggers from the lights by 400 units along the vertical (Z) axis.

Attach to Actor properties.

When any of these triggers (including the central light area, which does not move) is touched, it adds 1 to a central variable; when it is untouched, it subtracts 1 from it. This central variable therefore represents our "scare" factor. Three [Compare] objects compare this variable to the values 3, 2, and 1 and then play the corresponding sound file: if the "scare" variable is the same as their compare value, they play their sound; if it differs, they stop their [PlaySound]. Each [PlaySound] object has a Fade in time of two seconds and a Fade out time of five seconds to smooth the transitions between the different levels of intensity.
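Here is a minimal Python sketch of the "scare" counter and its three comparisons, assuming each touch adds 1 and each untouch subtracts 1. It is a model of the logic only; the names are hypothetical, and unlike Kismet (which may resend Play or Stop to a [PlaySound] that is already in that state) the sketch reports only actual changes, each paired with the fade time from the text.

```python
FADE_IN_S = 2.0   # Fade in time on each [PlaySound]
FADE_OUT_S = 5.0  # Fade out time on each [PlaySound]


class ScareMusic:
    """Hypothetical model of Room 402: one intensity cue per scare level 1-3."""

    def __init__(self):
        self.scare = 0
        self.playing = set()  # scare levels whose [PlaySound] is active

    def _compare_all(self):
        # One comparison per level: play on a match, stop otherwise.
        actions = []
        for level in (1, 2, 3):
            if self.scare == level and level not in self.playing:
                self.playing.add(level)
                actions.append(("play", level, FADE_IN_S))
            elif self.scare != level and level in self.playing:
                self.playing.remove(level)
                actions.append(("stop", level, FADE_OUT_S))
        return actions

    def touched(self):
        self.scare += 1
        return self._compare_all()

    def untouched(self):
        self.scare -= 1
        return self._compare_all()


music = ScareMusic()
music.touched()
print(music.touched())  # [('stop', 1, 5.0), ('play', 2, 2.0)]
```

Because the outgoing cue fades out over five seconds while the new one fades in over two, the two intensity levels overlap briefly, which is what smooths the crossfade.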

The use of music that is ambiguous in its tonality and rhythmic structure can make the transitions necessary in interactive games appear less clumsy.

Exercise 402_00 Ambiguous

In this room there are four switches. The more that are switched on, the greater the danger. See if you can adapt the system and add your own ambiguous music so that the music changes with any combination of switches.

Tips:

1. Add a [Trigger] for each of the switches. Right-click in the Perspective viewport and choose Add Actor/Add Trigger. With the [Trigger] selected, right-click in Kismet and choose New Event using (Trigger***) Used.

2. In the [Trigger] Used event properties in Kismet, uncheck Aim to Interact and change Max Trigger count to zero.

3. In Kismet, duplicate the system from Room 402 so that your triggers add to or subtract from a central variable.

4. By changing value B within the properties of the [Compare Int], you can choose which one triggers which level of music.

5. Experiment with different fade in/fade out lengths for your [PlaySound]s until you achieve a satisfying crossfade.

Avoid the Problem: Stingers

Sometimes you don't want the whole musical score to shift direction, but you do want to acknowledge an action. Perhaps the action is over too quickly to warrant a wholesale change in musical intensity. "Stingers" are useful in this regard, as they should be designed to fit musically over the top of the music that is already playing.

403 Music Stingers

A stinger is a short musical cue that is played over the top of the existing music. This might be useful to highlight a particular game event without instigating a large-scale change in the music. Two bots are patrolling this area. If you manage to shoot either one, then you are rewarded with a musical “stinger.”

When you enter the room, the [TriggerVolume] begins the background music via a [PlaySound] and also triggers the bots to start patrolling.

The bots that are spawned can send out a signal in Kismet when they [Take Damage], and this is used to trigger the musical stinger over the top via another [PlaySound]. (See the Spawning and Controlling Bots section of Appendix C: UDK Tips).

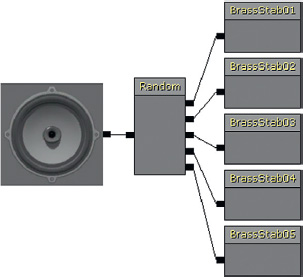

The stinger [SoundCue] is made up of five brass stabs that are selected at random.

This example works pretty well because the background music is purely rhythmic, so whatever melody or harmony the stinger plays over the top will not clash with the music already playing. If you want to put stingers over music with a stronger rhythmic or harmonic structure, then we need to extend the system to take into account where we are in the music when the stinger is played.

Exercise 403_00 Stingers

The player needs to jump across the lily pads to get to the other side of the river. Set up a music background that loops. Now add a stinger so that every time the player successfully jumps on a moving pad there is a rewarding musical confirmation.

Tips

1. Set up your background using a looping [SoundCue] in a [PlaySound] triggered by either Level Start (New Event/Level Startup) or a [Trigger] touch.

2. The [InterpActor]s are already attached to a matinee sequence (created by right-clicking in Kismet and choosing New Event using InterpActor (***) Mover). This has a [Pawn Attached] event, which you can use to trigger another [PlaySound] for your stinger(s).

403a Rhythmically Aware Stingers

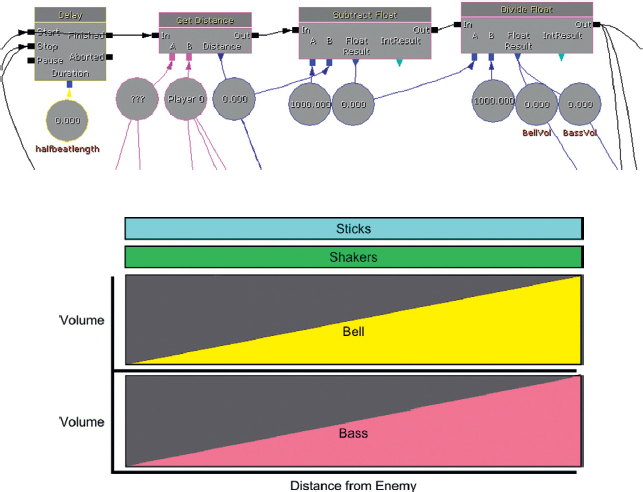

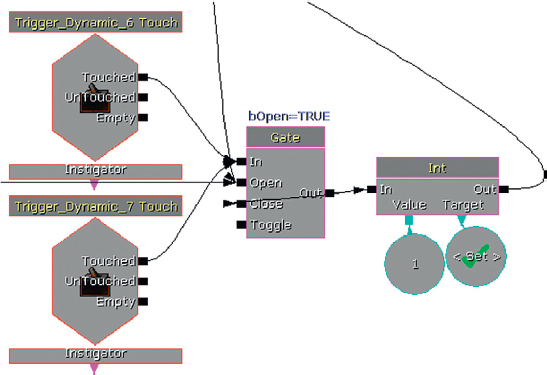

With certain types of musical material, it could be that the stingers would work even better if they waited until an appropriately musical point to play (i.e., until the next available beat). To do this you would need to set up a delay system that is aligned to the speed of the beats and then only allow the stinger message to go through at the musical point.

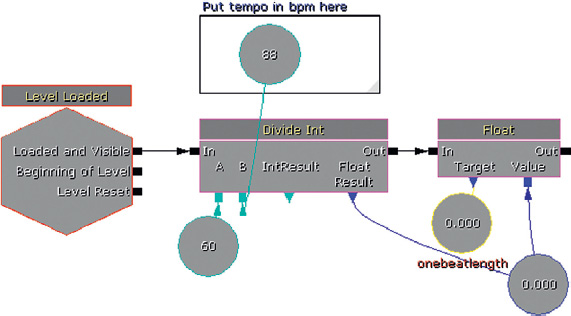

At [Level Loaded] we make a simple calculation to work out the length of one beat in seconds. A minute (60 seconds) is divided by the tempo (the number of beats in a minute) to give us the length of a beat. As the result is unlikely to be a whole number (and as a [Delay] object only accepts floats anyway), we then need the result as a float rather than an integer before passing it as a [Named Variable] to a [Delay] object.
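As a worked example of the beat-length calculation (plain Python, not Kismet), the function below divides 60 seconds by the tempo in floating point. The second line shows why an integer result would be no use: integer division of 60 by any tempo above 60 bpm gives 0.

```python
def beat_length_seconds(bpm):
    """Length of one beat: 60 seconds divided by the tempo, as a float."""
    return 60.0 / bpm


print(beat_length_seconds(120))  # 0.5
print(60 // 140)                 # 0: integer division is useless for a [Delay]
```

At 140 bpm, for instance, one beat lasts 60 / 140, or roughly 0.4286 seconds, which could only be passed to a [Delay] as a float.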

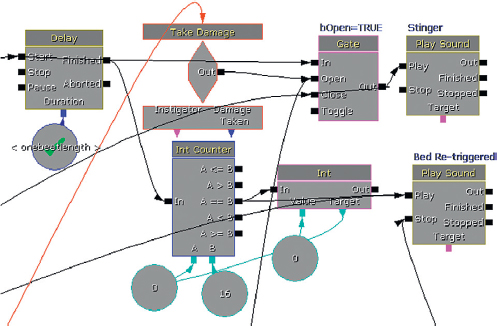

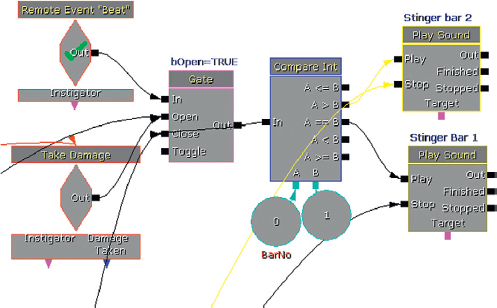

The [Delay] will now send out a pulse for every beat. This goes into the [Gate] to go through and trigger the stinger (via the [PlaySound]) on the beat. It is only allowed to go through and do this, however, when the bot's [Take Damage] event opens the [Gate]. Having taken damage, the [Gate] opens, the stinger plays on the beat (triggered by the [Delay]), and the [Gate] then closes itself.
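The self-closing [Gate] can be sketched in a few lines of Python. This is a hypothetical model (the names are invented): damage only opens the gate, the next beat pulse plays the stinger, and the gate closes again, so several hits between two beats still produce a single stinger on the next beat.

```python
class BeatGatedStinger:
    """Hypothetical model of Room 403a: [Take Damage] opens a [Gate]; beats pass through."""

    def __init__(self):
        self.gate_open = False
        self.played_on_beats = []

    def take_damage(self):
        self.gate_open = True

    def beat(self, beat_index):
        # Pulse from the looping [Delay]; only passes if the gate is open.
        if self.gate_open:
            self.played_on_beats.append(beat_index)  # trigger the stinger [PlaySound]
            self.gate_open = False                   # the gate closes itself


stingers = BeatGatedStinger()
stingers.beat(0)          # nothing: the gate is closed
stingers.take_damage()
stingers.take_damage()    # a second hit before the beat changes nothing
stingers.beat(1)
stingers.beat(2)
print(stingers.played_on_beats)  # [1]
```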

There is also an additional system at work just to make sure that the looping background musical bed remains in time with the beat pulse. Instead of using a looping [SoundCue] for the musical bed, we are retriggering it. An [Int Counter] is set to increment by 1 every time it receives a beat from the [Delay] object. When this reaches 16 (the length in beats of this particular [SoundCue]), the music is retriggered. This helps avoid any slight discrepancies in the timing that may arise from using a looping sound.
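A minimal Python sketch of the manual looping follows. The text does not say how the counter returns to zero, so resetting it each time it reaches the bed length is an assumption here; the class name is hypothetical.

```python
BED_LENGTH_BEATS = 16  # length of the rhythmic bed in this example


class BedRetrigger:
    """Hypothetical model: an [Int Counter] restarts the bed every 16 beat pulses."""

    def __init__(self, length_beats=BED_LENGTH_BEATS):
        self.length_beats = length_beats
        self.count = 0

    def beat(self):
        """Called on every pulse from the beat [Delay]; True means retrigger the bed."""
        self.count += 1
        if self.count == self.length_beats:
            self.count = 0  # assumption: the counter is reset for the next pass
            return True
        return False


bed = BedRetrigger()
retriggers = [bed.beat() for _ in range(32)]
print(retriggers.count(True))  # 2
```

Because the bed restarts from the same pulse that drives the stingers, any drift is corrected at the start of every 16-beat pass instead of accumulating over a long loop.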

Exercise 403a_00 Aligned Stingers

Now adapt your system so that the stingers only play on the next available beat. You’ll notice that this version also has some flowers that act as pickups; give them a different musical stinger.

Tips

1. You will have to calculate the tempo of your track, then add a bit. The timing system within UDK is not accurate, so some trial and error will be necessary. The timing of these systems will also be unreliable within the "Play in editor" window, so you should also preview your systems by running the full Start this level on PC option.

2. The system in Room 403a uses a [Delay] of one beat length to get the stingers in time. Copy and adapt this system for your exercise so that you’re using your [Pawn Attached] event instead of the [Take Damage]. Adjust the delay length and number of beats to match the length of your background piece of music. (You’ll need to use another version of your piece from 403_00, as the original [SoundCue] would have had a loop in it and now you are looping manually.)

403b Harmonically and Rhythmically Aware Stingers

Percussion-based stingers are easiest to use because they will fit with the music irrespective of the musical harmony at the time. Using more melodic or pitch-based stingers can be problematic, as they may not always be appropriate to the musical harmony that's currently playing. For example, a stinger based around the notes C and E would sound fine if it happened to occur over the first two bars of this musical sequence, but it would sound dissonant against the last two bars.

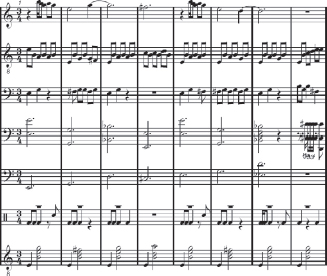

To make this work, we would need to write a specific stinger for each specific harmony that was in the piece. Then if we can track where we are in the music, we can tell the system to play the appropriate stinger for the chord that’s currently playing.

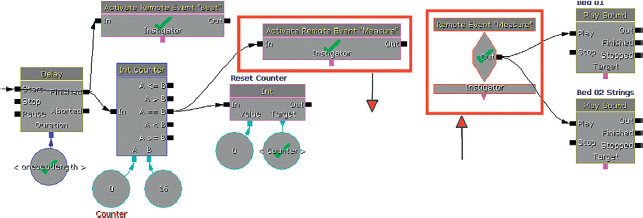

As the system is getting more complex, [Activate Remote Event] actions are used to transmit both the beat pulse and the measure event that retriggers the bed to make it loop. Passing events like this can help to stop your Kismet patches from becoming too cluttered. (See Appendix C: UDK Tips.)

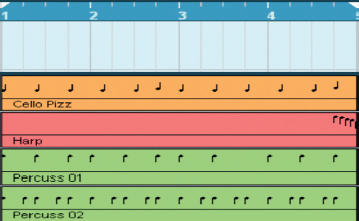

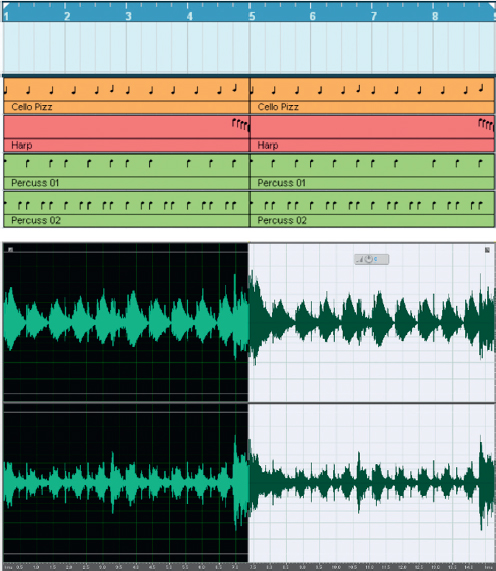

Although our rhythmic bed remains 16 beats long, we now have an additional harmonic element provided by some strings. This is in two halves of 8 beats each. To track which half is playing, and therefore choose the appropriate stinger, another [Int Counter] is used. This counts to 8 and is then reset. Each time it reaches 8, A equals B, so it outputs to the looping [Switch], which sends either a value of 1 or 2 to a named variable "Bar no."

A [Compare Int] object is used to look at these numbers and then play either stinger 1 (if bar 1 is currently playing) or stinger 2 (if bar 1 is currently not playing).
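The half-tracking and stinger choice can be modeled like this. It is a hypothetical Python sketch of the Kismet logic (the names are invented), assuming the strings start on their first half so that "Bar no." begins at 1.

```python
class HarmonicStingers:
    """Hypothetical model of Room 403b: two 8-beat string halves, one stinger per half."""

    def __init__(self, beats_per_half=8):
        self.beats_per_half = beats_per_half
        self.count = 0   # the second [Int Counter]
        self.bar_no = 1  # the named variable "Bar no."

    def beat(self):
        self.count += 1
        if self.count == self.beats_per_half:  # A == B
            self.count = 0                     # the counter is reset
            # Looping [Switch]: alternate "Bar no." between 1 and 2.
            self.bar_no = 2 if self.bar_no == 1 else 1

    def choose_stinger(self):
        # [Compare Int]: stinger 1 over the first half, stinger 2 otherwise.
        return "stinger 1" if self.bar_no == 1 else "stinger 2"


harmony = HarmonicStingers()
for _ in range(8):
    harmony.beat()
print(harmony.choose_stinger())  # stinger 2
```

Extending this to more chords would mean more switch outputs and one stinger per harmony, with the same beat gate deciding when the chosen stinger actually plays.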

You can see how this system could be extended and developed significantly so that we could have both harmonically variable music and stingers that fit with what's currently playing. It would be even better if the system could transpose the stingers to play at a pitch appropriate to the current harmony. This would be trivial to do if we were using MIDI, and in theory it would be possible in UDK if one were to calculate the relationship between the pitch multiplier and "real pitch" (see the earlier Mario example if you really want to know). Of course, the problem would be that the playback speed would also be altered.
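For reference, in equal temperament a shift of n semitones corresponds to a frequency ratio of 2^(n/12). The Python sketch below assumes that a pitch multiplier works like a change in playback rate, as the text implies, so the stinger's duration is divided by the same factor. The function names are hypothetical.

```python
def pitch_multiplier(semitones):
    """Equal-tempered frequency ratio for a shift of `semitones` (negative = down)."""
    return 2.0 ** (semitones / 12.0)


def shifted_duration(original_seconds, semitones):
    """Assumes rate-based pitch shifting: higher pitch means proportionally shorter."""
    return original_seconds / pitch_multiplier(semitones)


print(round(pitch_multiplier(7), 4))  # 1.4983 (up a perfect fifth)
print(shifted_duration(2.0, 12))      # 1.0 (up an octave plays twice as fast)
```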

Stingers have a role to play in highlighting game actions through musical motifs. However, when a significant change in musical state is required, we still need to tackle the issue of how we move from the piece of music that is playing now to the piece of music we want. This is referred to as the Transition Problem.

Transitional Forms/Horizontal Switching

Clunky Switching

Location-Based Switching

If you have different music for the different locations in your game, the issue is how you transition between them. The problem for the music is that the player may move between these locations at any given time and not necessarily at a nice “musical” moment like the end of a bar or even on a beat. This can upset the musical flow when you have to suddenly switch from one track to another. We call this “clunky” switching.

404 Music Switch

The idea of having different pieces of music playing in different game locations is common in genres such as MMOs (massively multiplayer online games). The system in Room 404 represents the simplest (and most musically clumsy) way to implement this feature.

The floor tiles mark out two different locations in your game, and each has a [Trigger] to start the music for its particular location. (Obviously this would be happening over a much larger area in a game, but the principle still applies.) When each one is touched it simply plays the music cue through the [PlaySound] object and switches the other piece of music off.

This fails to take account of where we might be in musical terms within piece A or whether it’s an appropriate place to transition to the beginning of piece B (they may even be in completely different keys). Any of the three scenarios (and more) at the bottom of the image below are possible.

These fairly brutal music transitions to the start of piece B are pretty clunky.

Exercise 404_00 Simple Music Switch

In this exercise room, you have two areas: an outside sunny area and a dark, forbidding cave. Set up a system that switches between two pieces of music depending on where you are.

Tips

1. Use [Trigger]s or [TriggerVolume]s to mark out the transition between the two areas.

2. Use the simple system illustrated earlier so that each [PlaySound] that is triggered switches the other off by sending a signal to the Stop input.

3. Realize that we need to do a little better than this.

Gameplay-Based Switching

Like a change in location, gameplay events can also occur at any given time (not always musically appropriate ones). You want the music to change instantly to reflect the action, but the transition between the piece already playing and the new piece can be clunky.

404a Spotted

As you cross this room you are inevitably spotted by a bot. Fortunately, you can run behind the wall and hide from his attack. By an even happier coincidence, you notice that you are standing next to an electricity switch, and this happens to lead (via a smoldering wire) to the metal plate that the bot is standing on (what are the chances, eh?). Flick the switch (use the E key) to smoke him.

On entrance to the room a [TriggerVolume] is touched and triggers the initial ambient musical piece via a [PlaySound] object. (In case you show mercy and leave the room without killing the bot, there are also a couple of [TriggerVolume]s to switch off the music.)

A bot is spawned in the room, and when the player falls within the bot's line of sight, a [See Enemy] event stops the music and starts the other [PlaySound] object, which contains a more aggressive, intense "combat" piece.

Although the inputs may be different (a game event rather than a change in location), you can see that this is effectively the same system as the location-based switching above and shares the same problem: the musical transition is less than satisfying. In some circumstances this sudden "unmusical" transition can work well, as it contains an element of shock value. In others (most), we need to develop more sophistication.

Smoke and Mirrors

Simple swapping between two pieces of music depending on either the player's location in the game or the current situation can sound clumsy. Masking the transition between the two musical states with a sound effect, so you don't hear the "join," can be surprisingly effective.

404b Smoke and Mirrors

Using a piece of dialogue, an alarm sound (or, as in this case, an explosion) to “mask” the transition between two pieces of music can mean that you can get away with simple switching without drawing attention to the clunky nature of the transition.

When you enter this room you’ll notice that the door locks behind you. You get a message that states, “They’re coming through the door; get ready.” The tension music starts. The other door glows and gets brighter until Bang! The action music kicks in as bots come streaming through the hole.

After the door closes, a [Delay] is set while the particle emitters build in intensity. When the [Delay] ends and the [Matinee] blows the door open, a loud explosion sound is heard, and the second music track is triggered. “Ducking” the currently playing music (lowering its volume temporarily) below a piece of important dialogue can also be useful in masking the transitions between your musical cues. We’ll discuss ducking in more detail in Chapter 6.

Better Switching: Crossfades

Rather than simply switching one track off and another track on, a short crossfade to transition between them can work. This method works best when the music is written in a fairly flexible musical language without strong directional melodies or phrases.

405 Music Crossfade

In this room there are four musical states. Enter the room and wander around before succumbing to temptation and picking up the "jewel" (press E). This launches a trap. Now try to make it to the end of the room without getting killed.

Now restart the level, and this time allow yourself to be killed to hear how the music system reacts in a different way. (We’re using the “Feign death” console command here to “kill” the player because a real death would require the level to be restarted. When you “die,” simply press “fire” [left mouse button] to come back to life.)

Ambient. For when the player is just exploring.

Action. In this case, triggered when you “pick up” (use) the jewel.

Triumph. If you then make it to the end through the spears.

Death. If you allow the spears to kill you.

This works better than the location-based or instant switching examples partly because we are now using a short crossfade between the cues and partly because the musical language here is more flexible. Writing in a musical style that is heavily rhythm based or music that has a strong sense of direction or arc can be problematic in games, as the player has the ability to undermine this at any time.

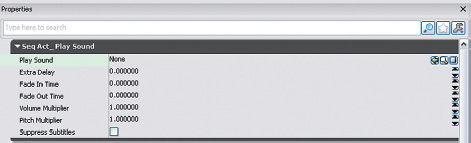

As you enter the room, the first [TriggerVolume] plays the “Ambient” [SoundCue]. When you use the jewel on the plinth to pick it up (okay, we know it’s a HealthPack, but use your imagination), this first [SoundCue] is stopped and the “Action” [SoundCue] is started. In the [PlaySound] object, the fade out time of the “Ambient” [SoundCue] is set to 1.5 seconds, and the fade in time of the “Action” [SoundCue] is set to the same, allowing these to crossfade for the transition between these two cues.
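To visualize the overlap, the Python sketch below computes the gains of the outgoing and incoming cues during the transition. The text gives only the fade times (1.5 seconds here); the linear ramp shape is an assumption, and the function is a hypothetical illustration rather than a description of how UDK implements its fades.

```python
def crossfade_gains(t, start, fade_out_s=1.5, fade_in_s=1.5):
    """(outgoing, incoming) gains at time t for a transition starting at `start`.

    Assumes simple linear fades; the engine's actual fade curve may differ.
    """
    elapsed = max(0.0, t - start)
    out_gain = max(0.0, 1.0 - elapsed / fade_out_s)
    in_gain = min(1.0, elapsed / fade_in_s)
    return out_gain, in_gain


# Jewel picked up at t = 0: "Ambient" fades out while "Action" fades in.
print(crossfade_gains(0.75, 0.0))  # (0.5, 0.5)
```

For the later transitions to "Death" or "Triumph", you would pass fade_out_s=1.0 and fade_in_s=1.0. With linear fades, the combined level dips slightly in the middle of the crossfade, which is one reason to audition different fade lengths by ear.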

If the player is hit by any of the spears flying across the room (the [InterpActor]s' Hit Actor event), then an impact sound is played, the "Action" is stopped (the fade out time is one second), and the "Death" [PlaySound] comes in (the fade in time is one second). Likewise, if the player reaches the end, then the [TriggerVolume] in this area will stop the "Action" (the fade out time is one second) and play the "Triumph" [SoundCue] (the fade in time is one second).

If you play the room through several times, you'll see that the player could spend much longer exploring the room before picking up the jewel (in which case the transition to "Action" would be from a different part of the "Ambient" music) or remain on "Action" for some time while carefully navigating the spears. Or else the player could just run through the whole thing. For example, the music could be required to transition at any of the following points.

Or a different player with a different approach might result in this:

The more impulsive player might even get this:

When combined with the right musical language and the occasional masked transition, the use of simple crossfades can be quite effective.

Exercise 405_00 Music Crossfade on [Trigger]

In the center of this room is a valuable diamond (seem familiar?). Start the level with "Ambient" music, which then switches to "Action" if the player goes near the diamond. If the player defeats the bot that appears, play a "Triumph" track; if the player is killed, play a "Death" track.

Tips

1. In the Kismet setup there are four [PlaySound] objects. Add appropriate [SoundCue]s for “Ambient,” “Action,” “Triumph,” and “PlayerDeath” tracks.

2. For each [PlaySound] object, set an appropriate Fade in/Fade out length.

3. Use the game events as commented in the given Kismet system to play the appropriate [PlaySound] object and to stop the others.

4. After “Triumph” has finished, you may want to go back to the “Ambient” track. Take a message from its finished output to play the “Ambient” [PlaySound].

UDK’s (Legacy) Music System

You may be wondering by now why we have been using the [PlaySound] object within Kismet rather than what appears to be more appropriate, the [Play Music Track]. The reason is that this offers little in terms of functionality and much that is broken or illogical as part of Unreal’s legacy music system.

The [Play Music Track] will allow you to fade your music in up to a given volume level, but this could be set in your [SoundCue] anyway. It then appears to offer the ability to fade back down to a given level, which could be useful for dropping the music down to a background bed, for example. However, the problem is that unlike the Fade Out features of the [PlaySound], which is triggered when the [PlaySound] is stopped, there is no apparent way of triggering a [Play Music Track] to apply this Fade Out feature, nor indeed is there any way of stopping a [Play Music Track] once it has been started. If you want one piece of music to play throughout your level irrespective of where in the level the character is or what situation the character is facing, then this could be a useful object for you. If that is your approach to music for games, then go back to the bookstore right now and try and get your money back for this book. (Once you’ve done that, you can go and crawl back under your rock.)

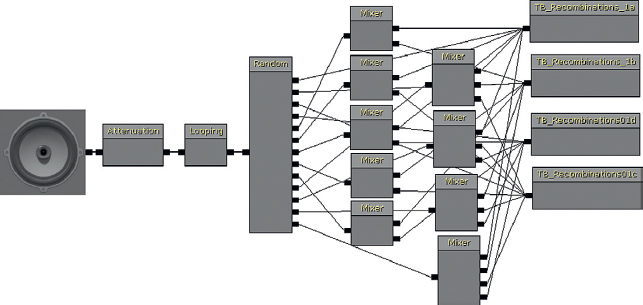

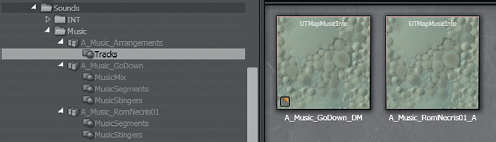

If you've been playing some of the Unreal Tournament 3 maps that come with UDK (C:\UDK\UDK(***)\UDKGame\Content\Maps), then you will have noticed that there appears to be an interactive music system at work. In the UDKGame/Content/Sounds/Music folder you can see these music tracks grouped into Segments and Stingers. You can also see a package called Music Arrangements. Open up the properties of one of these by double-clicking on it.

These arrangements govern how music used to work in UT3. If you open the map VCTF-Necropolis from the UDK maps folder and select World Properties from the top View menu, you can see that it was possible to assign one of these arrangements to your map.

You’ll also note that although you can create a new [UDKMapMusicInfo] item within UDK that appears to be the equivalent of these [Arrangement] objects, you cannot actually add these to a new UDK map because this functionality has been removed.

The music in these items is triggered by game events and allows some musical transitions by letting you specify the tempo and the number of measures/bars over which the music will transition between states. Although this might be potentially effective for certain styles of music, it no longer works, and so we won't dwell on it any more here.

(If you want to try it out, you can replace the map music info in one of the UDK example maps with your own by copying one of the existing [Arrangements] files. Using a new [UDKMapMusicInfo] will not work.)

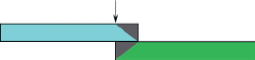

Maintaining Musical Structures: Horizontal Transitions

Write in Chunks

Although musical crossfades can be effective, they are never going to be totally satisfactory from a musical point of view, and if it doesn’t work musically, it’s less effective emotionally. If we write our music in relatively short blocks and only allow the transitions to be triggered at the end of these blocks, then we can react relatively quickly to game events while maintaining a musical continuity.
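The principle of writing in chunks can be sketched in Python: a change of state can be requested at any moment but is only applied when the current chunk ends. The names are hypothetical, and the 2-second chunk (one 4/4 bar at 120 bpm) is just an example value.

```python
import math


def next_boundary(position_s, chunk_s):
    """Time of the next chunk boundary at or after position_s (seconds from cue start)."""
    return math.ceil(position_s / chunk_s) * chunk_s


class ChunkedMusic:
    """Hypothetical model: transitions are requested any time, applied at chunk ends."""

    def __init__(self, state):
        self.current = state
        self.pending = None

    def request(self, state):
        self.pending = state  # remembered, not applied yet

    def chunk_finished(self):
        if self.pending is not None:
            self.current, self.pending = self.pending, None
        return self.current  # the cue to play for the next chunk


# A request made 5.3 s into a cue built from 2-second chunks takes effect at 6.0 s.
print(next_boundary(5.3, 2.0))  # 6.0
```

The shorter the chunks, the faster the music can respond, at the cost of giving each chunk less room to develop musically.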

406 Searchlight Chunks

If the cameras in this room spot you, then you must hide behind the wall. The guards will quickly forget about you and the music will return to its more ambient state.

As in Room 402, a [Trigger_Dynamic] is attached to the searchlight (at a Z offset so that it is on the ground), and when this is touched it changes the music to the "Action" track. When you go behind the wall, the [TriggerVolume] starts a five-second delay before switching back to the "Ambient" track. (The guards have a very short attention span; we blame Facebook.)

Using a simple system, this effect could be achieved with the following steps. As the player enters the room, there is no music. When the [Trigger_Dynamic] (attached to the spotlights) hits the player, the first track is played and its gate is closed so it does not retrigger. At the same time, this action also opens the second gate so that when the player hides behind the wall and comes into contact with the [TriggerVolume], this is allowed to go through and adjust the switch to play the second track.

However, we now want to improve the system by only allowing changes at musical points. Of these tracks, only the “Ambient” track can be interrupted unmusically. We allow this because we want the music to react instantly when the searchlight spots the player.

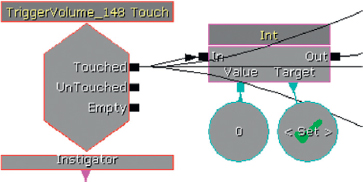

On entrance to the room, a [TriggerVolume] triggers a [Set Variable] Action to set the variable named ‘Set’ to zero.

This action triggers a [Compare Int] object, which compares the number 1 with the variable ‘Set’. In this case, input A is greater than B, so the result comes out of A > B and triggers the [PlaySound] for the “Ambient” track.

The room-entry [TriggerVolume] also opens the [Gate] so that when the [Trigger_Dynamic]s (searchlight triggers) are touched, their signal can go through and change the variable ‘Set’ to 1. The [Gate] then closes itself to avoid retriggering.

When the [Trigger_Dynamic]s (searchlight) have been touched, the variable ‘Set’ is the same as input A of the [Compare Int], so a trigger comes from the == output into the [Switch]. This initially goes through output 1, plays the “Intro,” then plays the “Action” track. When the “Action” track is finished, it sets the switch to go to output 2. That means that when the signal comes through the [Compare Int] again (assuming nothing has changed and we’re still in the “Action” state), the trigger will go straight to play the “Action” cue again instead of playing the “Intro.” Although the [SoundCue] does not have a [Looping] object in it, this system effectively “loops” the “Action” cue until the circumstances change.

When players hide behind the wall, they come into contact with another [TriggerVolume], which now changes the variable ‘Set’ to 2, causing an output from A < B. This plays the “Outro,” resets the ‘Set’ variable to zero, and resets the [Switch] to 1 (so on the next switch to “Action” it will play the “Intro” first). The “Outro” then opens the [Gate] for the [Trigger_Dynamic]s. (This will make more sense once you examine the system in the tutorial level. Honest.)

The key to this system is that triggers only enter the [Compare Int] object when each track has come to its end, via the [PlaySound]’s Finished output (with the exception of the move from “Ambient” to “Action” already discussed). This means that the changes will happen at musical points. You could put any music into this system with chunks of any length and it would work, because it uses the length of the pieces themselves as triggers. A typical use of this sort of system would be to check whether the player was still in combat. The “Action” cue keeps going back through the [Compare Int] object to check that the variable has not changed (i.e., we’re still in combat). If you anticipated being in this state for a significant length of time, you could build variation into the “Action” [SoundCue] so that it sometimes plays alternate versions.
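If it helps to see the 406 logic without the wires, here is a rough Python model of it. This is our own sketch, not anything UDK provides: the class and method names are hypothetical, no audio is played (starting a cue just records its name), and `finished()` stands in for the Finished output of whichever [PlaySound] is currently playing. Two details are assumptions on our part and are marked in the comments: the wall trigger only counts once you have been spotted, and the “Outro”’s Finished output feeds back into the comparison, which returns the music to “Ambient.”

```python
class SearchlightMusic:
    """Rough model of Room 406: cue changes are only checked when a cue ends."""

    def __init__(self):
        self.set_value = 0      # Kismet variable 'Set' (0 ambient, 1 action, 2 hiding)
        self.switch_index = 1   # [Switch]: 1 = play "Intro" first, 2 = straight to "Action"
        self.gate_open = False  # [Gate] in front of the searchlight triggers
        self.current = None     # cue currently playing
        self.log = []           # every cue started, in order

    def play(self, cue):
        self.current = cue
        self.log.append(cue)

    def compare(self):
        """[Compare Int] with A = 1 and B = 'Set'."""
        if 1 > self.set_value:        # A > B
            self.play("Ambient")
        elif 1 == self.set_value:     # A == B
            self.play("Intro" if self.switch_index == 1 else "Action")
        else:                         # A < B
            self.set_value = 0
            self.switch_index = 1     # next time, "Intro" plays before "Action"
            self.play("Outro")

    def enter_room(self):
        self.set_value = 0
        self.gate_open = True
        self.compare()

    def spotted(self):
        """Searchlight touches the player: the one change allowed mid-cue."""
        if self.gate_open:
            self.gate_open = False    # avoid retriggering
            self.set_value = 1
            self.compare()            # cuts "Ambient" off immediately

    def hide(self):
        """Player reaches the wall; takes effect only when the current cue ends."""
        if self.set_value == 1:       # assumption: only matters after being spotted
            self.set_value = 2

    def finished(self):
        """Finished output of whichever [PlaySound] is playing."""
        if self.current == "Intro":
            self.play("Action")       # "Intro" always leads straight into "Action"
            return
        if self.current == "Action":
            self.switch_index = 2     # keep looping "Action" without the "Intro"
        elif self.current == "Outro":
            self.gate_open = True     # searchlights can trigger again
        self.compare()                # assumption for "Outro": re-check, giving "Ambient"


music = SearchlightMusic()
music.enter_room()   # "Ambient"
music.finished()     # still unseen: "Ambient" again
music.spotted()      # immediate: "Intro"
music.finished()     # "Action"
music.finished()     # still spotted: "Action" loops
music.hide()         # nothing audible yet
music.finished()     # end of "Action": "Outro"
music.finished()     # back to "Ambient"
print(music.log)
```

Note that `hide()` never calls `compare()` itself; the change waits for the next `finished()`, which is exactly what keeps the transition on a musical boundary.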

Exercise 406_00 Chunk

Adapt the system you used for 404, but this time instead of crossfading, choose music that would suit switching at the end of the bar. Again there is to be an “Ambient” track on level start, an “Action” track when the diamond is approached, and “Death” or “Triumph” tracks.

Tips

1. The system is illustrated with triggers below, but you should connect up the appropriate game events as described in the comments of the exercise.

2. As the “Ambient” cue finishes, it goes back around through its [Gate] and starts again (as long as the gate remains open). It also goes to all the other [Gate]s, so that if the situation has changed and one of them has opened, the new [SoundCue] will be triggered at this point (i.e., at the end of the ambient cue).

3. The “Action” cue does a similar thing, so that it is relooped while the action is taking place. The “Death” and “Triumph” [PlaySound]s simply play and then stop, as they are a natural end to the music. When “Action” is triggered, it should open the [Gate] to the “Action” cue and close all the others.

4. Each [PlaySound] is triggered by the Finished output of another. In this way, the end and the start of each are correctly synchronized (presuming your tracks are properly edited).
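Here is the same idea applied to the exercise, again as a hypothetical Python sketch rather than anything you would build in Kismet. Each cue has a [Gate]; a game event opens one gate and closes the rest, and nothing changes until the current cue’s Finished output arrives and checks the gates. The names `GatedMusic`, `open_only`, and `LOOPING` are our own inventions.

```python
LOOPING = {"Ambient", "Action"}  # cues that go back round through the gates when they end
# "Death" and "Triumph" are not in LOOPING: they play once and stop the music.


class GatedMusic:
    """Rough model of Exercise 406_00: every cue change waits for a Finished output."""

    def __init__(self):
        # One [Gate] per cue; only "Ambient" is open on level start.
        self.gates = {"Ambient": True, "Action": False, "Death": False, "Triumph": False}
        self.current = "Ambient"
        self.log = ["Ambient"]

    def open_only(self, cue):
        """A game event opens one [Gate] and closes all the others."""
        for name in self.gates:
            self.gates[name] = (name == cue)

    def finished(self):
        """Finished output of the current [PlaySound]: try every gate in turn."""
        if self.current not in LOOPING:
            self.current = None  # "Death"/"Triumph" has ended, or we are already silent
            return
        for name, is_open in self.gates.items():
            if is_open:
                self.current = name
                self.log.append(name)
                return
        self.current = None      # no gate open: the music simply stops


music = GatedMusic()
music.open_only("Action")    # diamond approached mid-cue: nothing changes yet
music.finished()             # end of "Ambient": "Action" starts
music.finished()             # "Action" loops while its gate stays open
music.open_only("Triumph")   # diamond taken
music.finished()             # end of "Action": "Triumph" starts
music.finished()             # "Triumph" ends the music
print(music.log, music.current)
```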

Transitions and Transition Matrices

If you consider the range of musical states you might want for a typical game scenario, you can quickly see the challenges that face the composer in this medium. Let’s take a relatively straightforward example based in one general location at a particular time of day (you may wish to have different sets of pieces dependent on these variables, but we’ll leave this additional complexity to one side for now).

“Stealth 01”, “Stealth 02”:

Two stealth cues of slightly different intensities.

“Action 01”, “Action 02”:

Two action cues, one for the normal guard combat, one for the boss combat.

“Action 02a”:

One additional action intensity for the boss combat (when the boss’s health gets low).

“Death”:

The player dies.

“End”:

The player defeats the guards.

“Triumph”:

The player defeats the boss.

“Bored”:

If the player loiters in a stealth state for too long, switch the music off.

“Stingers”:

The player lands a blow on the boss.

If we discount the “Stingers,” “Death,” and “Bored” (because they don’t transition to any other cue), then we have seven musical cues. If we allow for a game state where it might be necessary to transition from any of these to any other, then there are 42 (7 × 6) different possible transitions. Of course, not all of these would make sense (e.g., “Stealth 01” should not be able to transition straight to “End,” as there would have been some combat “Action” in between), but nevertheless writing music that allows for this kind of flexibility requires a different mindset from composing traditional linear scores. Before beginning, the composer needs to consider which pieces will need to be able to link to which other pieces, and how each of these transitions should be handled. This is sometimes referred to as a branching approach to interactive music because it can be reminiscent of a tree-like structure. At the planning stage, a flow diagram such as the one that follows can be useful.

Next you need to decide on the best way for each of these cues to transition between one another.

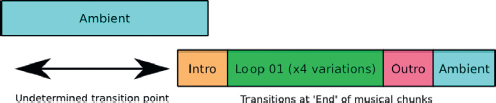

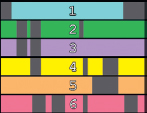

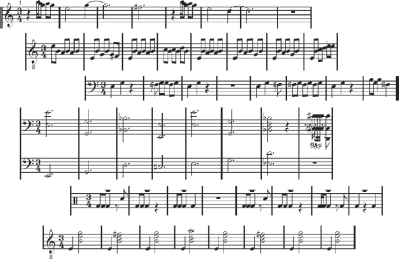

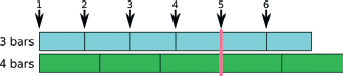
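To get a feel for how quickly the number of transitions grows, you can simply enumerate it. The short Python sketch below counts every ordered pair of different cues from the seven main cues listed earlier (moving from “Stealth 01” to “Action 01” is a different transition from moving back again), which gives 7 × 6 = 42, and then strikes out a couple of illustrative nonsense moves. The `disallowed` set is only an example; your own would come from the flow diagram.

```python
from itertools import permutations

# The seven cues left once "Stingers," "Death," and "Bored" are set aside
cues = ["Stealth 01", "Stealth 02", "Action 01", "Action 02",
        "Action 02a", "End", "Triumph"]

# Every ordered (from, to) pair of different cues is a potential transition
transitions = list(permutations(cues, 2))
print(len(transitions))  # 42

# Prune moves that make no dramatic sense (an illustrative subset only)
disallowed = {("Stealth 01", "End"), ("Stealth 02", "End")}
allowed = [t for t in transitions if t not in disallowed]
print(len(allowed))  # 40
```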

Transition Times/Switch Points

There are, of course, a variety of ways to move from one piece of music to another, some more musical than others. You need to choose a transition that is responsive enough to the gameplay to fulfill its function while still maintaining some musical continuity. Here are some illustrations of the types of transition you may want to consider.

The arrow indicates the time at which the transition is called by the game.

Immediate

This immediately stops the current cue and plays the new cue from its beginning.

Crossfade

This transition fades out the current cue and fades in the new cue.

At End

This transition waits until the end of the currently playing cue to switch to the new cue.


At Measure

This transition starts the new cue (and silences the old one) at the next measure (bar) boundary.

At Beat

This transition plays the new cue at the next musical beat of the currently playing cue.

At Beat Aligned

This transition happens at the next beat, but to the same position in the new cue rather than to its start; that is, we exit cue A at beat 2, and we enter cue B also at its beat 2. More about this later.
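If you find it easier to think in numbers, these switch points boil down to a little arithmetic on the tempo. The Python sketch below assumes a constant tempo and time signature, and that for “At Beat Aligned” both cues share the same tempo and length, so “the same position” simply means the same elapsed time. The function name and the transition labels are ours, not an engine API. At 120 bpm in 4/4, a beat lasts 0.5 seconds and a bar 2 seconds, so a transition called 3.25 seconds into a 16-second cue gives the results shown in the comments.

```python
import math


def switch_point(kind, position, bpm, beats_per_bar, cue_length):
    """Return (seconds until the switch, start offset into the new cue in seconds).

    position: seconds elapsed in the current cue when the game calls the transition.
    """
    beat = 60.0 / bpm
    bar = beat * beats_per_bar
    if kind in ("immediate", "crossfade"):
        return 0.0, 0.0  # both start now; a crossfade's fade length is a separate choice
    if kind == "end":
        return cue_length - position, 0.0
    if kind == "measure":
        next_bar = math.ceil(position / bar) * bar
        return next_bar - position, 0.0
    if kind in ("beat", "beat_aligned"):
        next_beat = math.ceil(position / beat) * beat
        # Aligned: enter the new cue at the same point (assumes equal tempo and length)
        offset = next_beat % cue_length if kind == "beat_aligned" else 0.0
        return next_beat - position, offset
    raise ValueError(f"unknown transition type: {kind}")


for kind in ("immediate", "end", "measure", "beat", "beat_aligned"):
    print(kind, switch_point(kind, 3.25, bpm=120, beats_per_bar=4, cue_length=16.0))
# immediate (0.0, 0.0)
# end (12.75, 0.0)
# measure (0.75, 0.0)
# beat (0.25, 0.0)
# beat_aligned (0.25, 3.5)
```

The trade-off is visible in the numbers: the shorter the wait, the more responsive the music, but the less musical the join is likely to be.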

Transition Cues

Often it is not satisfactory to simply move from one cue to another. You may need a “transition cue” to smooth the path between the two main cues. This is a specific cue whose role is to help the music move from one cue to another; of course, these cues can begin at any of the transition times described earlier.

A transition cue might take the form of an “End” to the current cue before starting the new one, an “Intro” to the new cue, or a combination of both (e.g., Cue 1/EndCue1/IntroCue2/Cue2).

Here is the transition cue leading from “Main Cue 01” to “Main Cue 02”:

![]()

Here are the transition cues leading from “Main Cue 01” to “End” to “Intro” to “Main Cue 02”:

![]()

A transition cue will normally ease the shift in intensities by leading down, building up, or managing a change in tempo between the old cue and the new. It might also act as a “stinger” (marked “!” in the illustration) to highlight an action before leading into the new cue.

![]()

A Transition Matrix

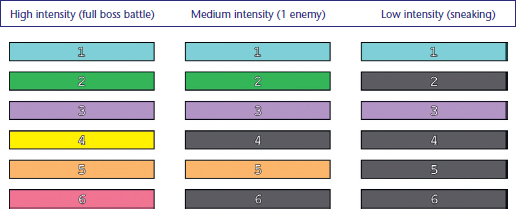

A table like the one shown here is useful for considering these kinds of systems. There is a blank template version for your own music available to download from the website. The table defines which cues can move to which other cues and the transition time at which they can move. In this example, you can see that the “Intro_01”/“Intro_02” and “End_01”/“End_02” cues serve as transition cues between the main pieces.

Reading from left to right:

When moving from “Stealth_01” to “Stealth_02” the system should do it at the next measure.

When moving from “Stealth_01” to “Action_01” you should do it at the next available beat via the transition cue “Intro_01.”

When moving from “Action_01” back to “Stealth_01” or “Stealth_02” do it at the next available bar/measure using the “Action_End” cue to transition.

Although this might seem like a slightly academic exercise when you sit down to compose, the time you spend now to work these things out will save you a lot of pain later.
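A transition matrix translates naturally into a lookup table keyed on (from, to) pairs. The Python sketch below encodes only the three example rows read out above; the cue names come from the text, while `Transition`, `MATRIX`, and `plan()` are hypothetical names for illustration. A missing entry plays the role of a blank cell: that move is simply not allowed.

```python
from typing import NamedTuple, Optional


class Transition(NamedTuple):
    when: str                  # switch point: "beat", "measure", "end", ...
    via: Optional[str] = None  # transition cue played before the destination, if any


# (from cue, to cue) -> how to get there
MATRIX = {
    ("Stealth_01", "Stealth_02"): Transition("measure"),
    ("Stealth_01", "Action_01"): Transition("beat", via="Intro_01"),
    ("Action_01", "Stealth_01"): Transition("measure", via="Action_End"),
    ("Action_01", "Stealth_02"): Transition("measure", via="Action_End"),
}


def plan(current, target):
    """Return (switch point, cues to play in order), or None for a blank cell."""
    rule = MATRIX.get((current, target))
    if rule is None:
        return None
    cues = [rule.via, target] if rule.via else [target]
    return rule.when, cues


print(plan("Stealth_01", "Action_01"))  # ('beat', ['Intro_01', 'Action_01'])
print(plan("Stealth_02", "Triumph"))    # None
```

Keeping the matrix as data rather than wiring means you can change a transition by editing one entry, which is much the same argument as keeping the table on paper before you start composing.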

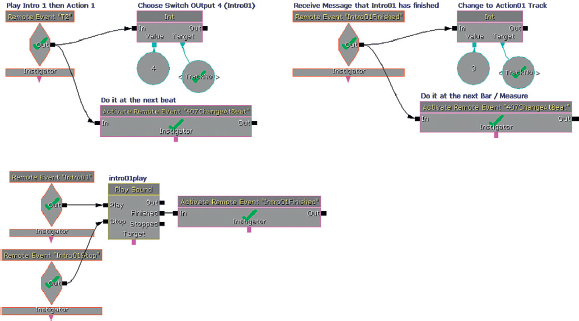

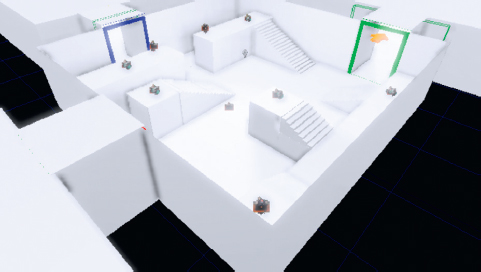

407 Transitions

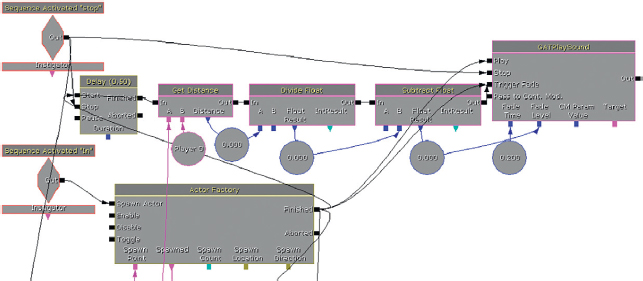

This series of rooms contains a “stealth” type scenario to illustrate a system of music transitions.

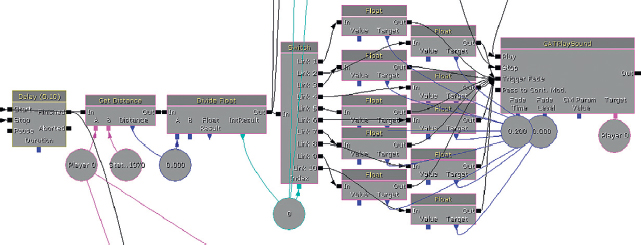

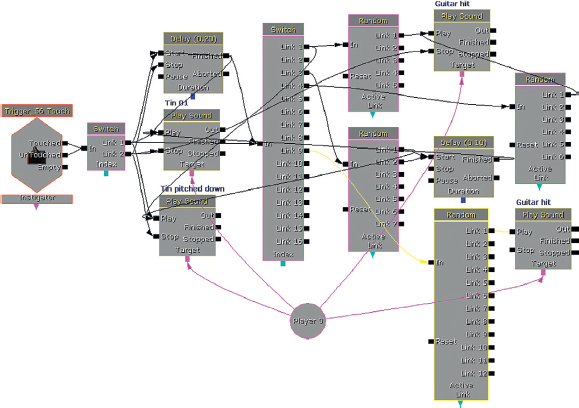

Because the system is reasonably complex, we’ll present it in both narrative and diagrammatic forms.

First, here’s the story of 407. Upon entering the first room, the “Stealth 01” cue begins. The player must avoid the security cameras to get to the next room undetected. If the player is detected, then “Intro 01” leads to “Action 01” as a bot appears and they do battle. When the enemy is defeated, the cue “End 01” leads back into “Stealth 01.”

In Room 02 the situation gets slightly more tense, so we switch to “Stealth 02” (a slight variation of “Stealth 01”). Here the player needs to get the timing right to pass through the lasers. If these are triggered, then again “Intro 01” leads to “Action 01” for a bot battle. This time a different end cue is used, “End 02”, as we’re not going back to “Stealth 01” but returning to “Stealth 02.”

The player needs the documents contained in the safe, so there’s nothing to do but open the safe and be prepared for the boss battle that follows. The music in this room initially stays on “Stealth 02”; when the boss appears, it switches to “Intro 02,” which leads into “Action 02.” During the battle, when the enemy’s health gets low, the music goes up in intensity to “Action 02a,” and there’s also the occasional “Stinger.” Defeating this boss leads to the final “Triumph” cue.

In all the rooms, another two cues are available: “Death” if the player is defeated by the enemy at any time, and “Bored.”

The “Bored” Switch

“Bored” is a version of “Stealth 01” that has a fade to silence. Part of music’s power is to indicate that what is happening now is somehow of special significance or importance. If we have music playing all the time, it can lose its impact (and make your system look stupid). It’s useful to always have a “bored” switch (an idea we owe to Marty O’Donnell), so that if the player remains in one musical state for an unnaturally long period, the music will fade out.
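A “bored” switch is little more than a timer that restarts whenever the musical state changes. Here is a minimal Python sketch of the idea; the class name and the 90-second limit are arbitrary examples of ours, and in the 407 system the outcome would be to play the “Bored” cue (the fade to silence) rather than to return a flag.

```python
class BoredSwitch:
    """Signals a fade to silence when one musical state lasts too long."""

    def __init__(self, limit_seconds):
        self.limit = limit_seconds
        self.state = None
        self.elapsed = 0.0
        self.bored = False

    def set_state(self, state):
        """Call whenever the music system changes cue; a real change resets the clock."""
        if state != self.state:
            self.state = state
            self.elapsed = 0.0
            self.bored = False

    def tick(self, dt):
        """Advance by dt seconds; returns True exactly once, when the limit is reached."""
        self.elapsed += dt
        if not self.bored and self.elapsed >= self.limit:
            self.bored = True
            return True  # time to play "Bored"
        return False


switch = BoredSwitch(limit_seconds=90.0)  # 90 s is an arbitrary example value
switch.set_state("Stealth 01")
fired = [switch.tick(10.0) for _ in range(10)]
print(fired.index(True))  # 8: the ninth tick reaches 90 seconds
```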

The diagram shown here also represents this music system.

(For the gameplay systems themselves, take a look in the room subsequences. These are simple systems based on triggers, dynamic (moving) triggers, bot spawning, bot take damage, and bot death.)

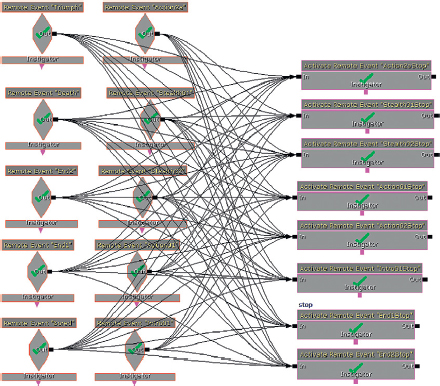

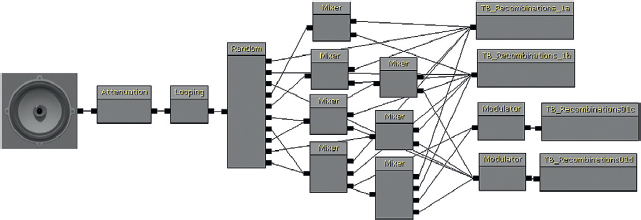

Here is a screenshot of the implementation. Sadly, this is our idea of fun.

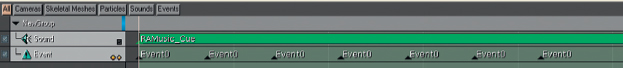

First, a pulse is established that matches the tempo of the music. From this we get two outputs via [Activate Remote Event]s: the beat (407 Beat) and another signal on the first beat of a four-beat bar/measure (407 Measure). These allow us to control transitions so that they occur on either the musical beat or the bar/measure line.

Both these are received via [Remote Events] at our main switching system. (You can double-click on a [Remote Event] to see the [Activate Remote Event] that it relates to.)
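In code terms, the pulse and its measure counter amount to something like the following Python sketch. It is our own approximation, assuming 4/4 by default: it does not schedule anything in real time (`interval` is just the gap you would feed to a delay), and the event names mirror the 407 remote events. One caution if you build this outside Kismet: a timer that isn’t tied to the music’s actual playback position can drift out of sync with it over time.

```python
class Pulse:
    """Stand-in for the tempo-matched pulse and the [Int Counter] that finds bar lines."""

    def __init__(self, bpm, beats_per_measure=4):
        self.interval = 60.0 / bpm        # seconds between pulses
        self.beats_per_measure = beats_per_measure
        self.count = 0                    # position in the bar; 0 means the next pulse is beat 1

    def reset(self):
        """Restart the count so that the next pulse is treated as beat 1."""
        self.count = 0

    def next_pulse(self):
        """Return the remote events fired by the next pulse."""
        events = ["407 Beat"]
        if self.count == 0:
            events.append("407 Measure")  # first beat of the bar/measure
        self.count = (self.count + 1) % self.beats_per_measure
        return events


pulse = Pulse(bpm=120)
events = [pulse.next_pulse() for _ in range(5)]
print(pulse.interval)  # 0.5
print(events[0])       # ['407 Beat', '407 Measure']
print(events[1])       # ['407 Beat']
```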

This switching system receives a message from a game event, which comprises two parts:

Which track should I play?

(Named Variable “Track No”)

When should I switch to it?

([Remote Event]s “407ChangeAtBeat”/“407ChangeAtMeasure”)

On receipt of this message the track number goes straight to the [Switch] and changes its index so that the next message received by the [Switch] will go out of the appropriate link number of the [Switch]. You can see that these then go to further [Remote Event] objects, which then go to actually play the music when received by the [PlaySound] objects.

This message will only be sent out of the [Switch]’s link when the [Switch] receives an input, and this is governed by the second message that determines whether this will be at the next beat or the next bar/measure. Both impulses are connected to the [Switch] via a [Gate], and the choice of transition type (407ChangeAtBeat or 407ChangeAtMeasure) determines which pulse goes through by opening/closing their respective [Gate]s. (When a ChangeAtBeat message goes through to the switch, the [Int Counter] that counts the length of a measure is also reset so that the next measure is counted starting again at beat 1.)
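Here is the switching logic as a hypothetical Python sketch. `request()` stands in for the two-part message (the “Track No” variable plus the ChangeAtBeat/ChangeAtMeasure event) and `on_pulse()` for the beat pulse; the measure gate only lets the downbeat through. We assume each gate closes once a pulse has gone through, so a request fires exactly once; that detail is our guess rather than something stated above, and is marked in the code.

```python
class MusicSwitcher:
    """Rough model of the 407 switching system: a pending track waits for a pulse."""

    def __init__(self, tracks, beats_per_measure=4):
        self.tracks = tracks                # [Switch] link number -> cue name
        self.beats_per_measure = beats_per_measure
        self.beat_in_measure = 0            # [Int Counter]; 0 means the next pulse is beat 1
        self.pending = None                 # "Track No" waiting for its switch point
        self.gates = {"beat": False, "measure": False}
        self.playing = None

    def request(self, track_no, when):
        """Game event: which track to play, and change at the next "beat" or "measure"."""
        self.pending = track_no
        self.gates["beat"] = when == "beat"
        self.gates["measure"] = when == "measure"

    def on_pulse(self):
        """Called on every beat of the tempo-matched pulse."""
        is_measure = self.beat_in_measure == 0
        self.beat_in_measure = (self.beat_in_measure + 1) % self.beats_per_measure
        fire = self.gates["beat"] or (self.gates["measure"] and is_measure)
        if not fire or self.pending is None:
            return
        if self.gates["beat"]:
            # A change at the beat resets the counter: this pulse becomes beat 1
            self.beat_in_measure = 1 % self.beats_per_measure
        self.playing = self.tracks[self.pending]
        self.pending = None
        self.gates = {"beat": False, "measure": False}  # assumption: one pulse per request


switcher = MusicSwitcher({1: "Stealth 01", 2: "Intro 01"})
switcher.request(1, "measure")
switcher.on_pulse()            # beat 1 of a measure: "Stealth 01" starts
switcher.on_pulse()            # beat 2
switcher.request(2, "beat")
switcher.on_pulse()            # next beat: "Intro 01" starts and the count restarts
switcher.request(1, "measure")
for _ in range(3):
    switcher.on_pulse()        # beats 2 to 4 of the new measure: no change
print(switcher.playing)        # Intro 01
switcher.on_pulse()            # next measure line
print(switcher.playing)        # Stealth 01
```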

How these transitions occur is under the composer’s control; all the game designer needs to do is to call one of the remote events named T0 to T9 (Trigger 0 to 9). You can then set these up to call a particular music cue, at a designated time.

At the moment they are set to do the following:

T0: Play “Intro 02” at next beat // Then play “Action 02”

T1: Play “Stealth 01” at next bar/measure

T2: Play “Intro 01” at next beat // Then play “Action 01”

T3: Play “End 01” at next bar/measure // Then play “Stealth 01”

T4: Play “Stealth 02” at next measure

T5: Play “End 02” at next bar/measure // Then play “Stealth 02”

T6: Play “Stinger” (this plays over the top and therefore operates outside of the usual switching system)

T7: Play “Triumph” at next bar/measure (this then stops the system)

T8: Play “Death” at next beat (this then stops the system)

T9: Play “Action 02a” at next bar/measure

You will notice that many of these actually trigger two changes. In these cases, the second part of the system receives a [Remote Event] when the first music cue has finished playing.
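The T0 to T9 setup is, in effect, a small table: which cue, when, and what (if anything) follows it. A sketch of that table in Python might look like this; the dictionary layout, `STOPS`, and `cues_for()` are our own, “now” marks the “Stinger” that plays over the top outside the switch, and the second cue in each chain would be started by the first cue’s Finished event, as described above.

```python
# Hypothetical encoding of the 407 triggers:
# trigger -> (first cue, switch point, cue chained when the first one finishes)
TRIGGERS = {
    "T0": ("Intro 02", "beat", "Action 02"),
    "T1": ("Stealth 01", "measure", None),
    "T2": ("Intro 01", "beat", "Action 01"),
    "T3": ("End 01", "measure", "Stealth 01"),
    "T4": ("Stealth 02", "measure", None),
    "T5": ("End 02", "measure", "Stealth 02"),
    "T6": ("Stinger", "now", None),      # layered over the top, outside the switch
    "T7": ("Triumph", "measure", None),
    "T8": ("Death", "beat", None),
    "T9": ("Action 02a", "measure", None),
}
STOPS = {"T7", "T8"}  # these end the music system once their cue has played


def cues_for(trigger):
    """Return (switch point, cues to play in order) for a trigger."""
    cue, when, then = TRIGGERS[trigger]
    return when, ([cue] if then is None else [cue, then])


print(cues_for("T3"))  # ('measure', ['End 01', 'Stealth 01'])
```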

Finally (you’ll be relieved to hear) we have what’s known in Yorkshire as a “Belt and Braces” system to stop all the cues apart from the one that is currently supposed to be playing—just in case. (We also did this because it makes a pretty pattern.)