Chapter 15

Risk Analysis: Walking Through the Fog

In This Chapter

Playing with odds

Making decisions with uncertain outcomes

Developing decision-making criteria

Determining the value of information

Incorporating risk preferences

Bidding to win

You may remember Mattel’s Magic 8 Ball. It’s a black globe that answers questions about the future. You hold it, ask a question, turn it over, and in a window the answer to your question appears. The answer I always seem to get is “Reply hazy, try again.” Such is the future — hazy. Or consider Yogi Berra’s contribution to planning, “If you don’t know where you’re going, you might wind up someplace else.”

To this point, I’ve examined decision-making in a certain or known environment. However, the consequences or payoffs of a decision or action are frequently uncertain because they depend on factors outside your control. In that setting, you need criteria for evaluating alternative actions.

In an uncertain environment, decision-making criteria don’t guarantee you the highest payoff, because the payoff is influenced by factors outside of your control. These criteria, however, allow you to systematically evaluate alternative actions with variable and uncertain payoffs.

This chapter develops decision-making rules for environments where outcomes aren’t known in advance with certainty. The chapter starts with two simple decision-making criteria — the maxi-min and the mini-max regret rules. A more sophisticated criterion — expected monetary value — bases decisions on the likelihood of any outcome occurring. By using probability and expected value, you can also determine the value of additional information — for example, the value of hiring a consultant. Differences in risk preferences are incorporated in decision-making by using the expected utility criterion. The chapter concludes with strategies for determining prices through auctions.

Differentiating between Risk and Uncertainty

Risk and uncertainty refer to situations where outcomes aren’t known in advance. You don’t know which outcome will occur. Although many individuals use the terms risk and uncertainty interchangeably, economist Frank Knight (1885–1972) argued that these concepts are different. Knight considered a situation risky when objective probabilities can be assigned to the situation’s possible outcomes. In that case, as a decision-maker, you possess information concerning the likelihood of each possible outcome. (Using probabilities to measure risk is examined in the next section.)

On the other hand, Knight regarded a situation as uncertain when objective probabilities can’t be assigned to the possible outcomes. As a consequence, you have no objective information concerning the likelihood of a given outcome.

Determining the Odds with Probability

In a risky environment, you want to play the odds when making a decision. Remember that risk exists when objective probabilities can be assigned to the possible outcomes. Probability is simply the likelihood or chance that an event occurs. Probabilities are expressed as numbers between 0, meaning impossible, and 1.0, meaning certain. A probability of 0.50 represents a 50–50 chance or 50-percent chance that the event occurs. That’s the probability that “heads” comes up when you flip a coin.

Probabilities are further subdivided into objective, subjective, and expected probability.

An objective probability is a probability determined by unbiased evidence, such as observed long-run relative frequencies of occurrence. For example, by collecting information, insurance companies determine objective probabilities to represent the likelihood of a home being robbed.

A subjective probability is determined by an individual based upon the individual’s knowledge, information, and expertise.

An expected probability is a theoretical probability based upon assumptions. For example, the 50-percent probability that a flipped coin comes up “heads” assumes you have a fair, two-sided coin.

Considering Factors In and Out of Your Control

If only you could control everything, decision-making would be easy. But you can’t, so it’s important to differentiate between the things you can and can’t control. The terms actions and states of nature make a distinction between factors that are within or outside your control.

Actions represent alternatives that you can choose. These are the things you control. In the decision-making process, you evaluate the desirability of alternative actions. Examples of actions include your firm’s pricing and advertising policies.

States of nature also affect your outcome. However, states of nature are things outside your control. Examples of states of nature are the advertising and pricing policies of rival firms, or whether or not the economy goes into a recession.

Payoff refers to the consequence or result of the simultaneous occurrence of a particular action and a specific state of nature. A payoff matrix includes all possible payoffs for several actions and several states of nature. Figure 15-1 illustrates a possible payoff matrix. The top of the matrix lists the three possible actions for Global Airlines: reduce fares, charge the same fares, and raise fares. These actions represent the alternatives that Global’s managers can choose.

The four possible states of nature are on the side of the payoff matrix. These states of nature represent what happens to the price of oil. Because Global’s managers can’t determine what happens to the price of oil, they’re uncertain about which state of nature will occur.

Assume that the payoffs in the payoff matrix represent Global Airlines’ annual profit in millions of dollars for various scenarios. For example, if Global decides to reduce fares and oil prices increase by 10 percent, Global’s annual profit is $43 million. Similarly, if Global raises fares and oil prices don’t change, Global’s annual profit is $48 million.

Figure 15-1: Payoff matrix.

Simplifying Decision-Making Criteria

After you identify your possible actions and how the various states of nature affect the outcome of those actions, you need to decide what to do. A variety of criteria enable you to choose among alternative actions after you develop the payoff matrix; each criterion has different advantages and disadvantages.

Determining the biggest guaranteed win with the maxi-min rule

You’ve probably been told to make the best of a bad situation. That in essence is the maxi-min criterion. With the maxi-min criterion, your decision is based upon the minimum or worst payoff associated with each action. For each action, you note the minimum payoff.

1. Determine the worst payoff for reducing fares. For reduced fares, the possible payoffs are $50, $56, $43, and $35. The worst payoff is $35.

2. Determine the worst payoff for charging the same fares. For charging the same fares, the possible payoffs are $52, $51, $49, and $41. The worst payoff is $41.

3. Determine the worst payoff for raising fares. For raised fares, the possible payoffs are $42, $48, $47, and $44. The worst payoff is $42.

4. Choose the action with the best worst payoff. Global Airlines should raise fares because its worst payoff is $42 million, as compared to reduced fares’ worst payoff of $35 million and same fares’ worst payoff of $41 million.

Global Airlines raises fares because its worst payoff is $42 million. However, $42 million isn’t necessarily the profit it makes. The actual profit depends on which state of nature occurs, that is, on what actually happens to the price of oil.

The maxi-min criterion is used when you’re very conservative, and as a result you have a strong aversion to risk. This standard can be a good rule to use with a new business, when the firm’s continued existence necessitates that you avoid losses.
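If you’d like to check the maxi-min arithmetic, here’s a minimal Python sketch. The function name `maximin` and the `PAYOFFS` dictionary are hypothetical names used only for illustration; the payoffs (in millions of dollars) are the Global Airlines figures from the steps above, listed in the order oil price down 10 percent, unchanged, up 10 percent, and up 20 percent.

```python
# Payoffs in millions of dollars, one list per action, ordered by state of
# nature: oil price -10%, no change, +10%, +20%.
PAYOFFS = {
    "reduce fares": [50, 56, 43, 35],
    "same fares": [52, 51, 49, 41],
    "raise fares": [42, 48, 47, 44],
}


def maximin(payoffs):
    """Return (chosen action, its worst payoff) under the maxi-min rule."""
    # Steps 1-3: note the worst (minimum) payoff for each action.
    worst = {action: min(row) for action, row in payoffs.items()}
    # Step 4: choose the action whose worst payoff is the largest.
    choice = max(worst, key=worst.get)
    return choice, worst[choice]


print(maximin(PAYOFFS))  # ('raise fares', 42)
```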

Making the best worst-case by using the mini-max regret rule

Don’t live with regrets. This phrase isn’t just a life philosophy, it’s a way to make business decisions. The mini-max regret criterion bases decisions on the maximum regret associated with each action. Regret measures the difference between each action’s payoff for a given state of nature and the best possible payoff for that state of nature.

1. Determine the regret for a 10-percent decrease in the price of oil.

For a 10-percent decrease in the price of oil, the best payoff is $52 million with same fares. The regret for reducing fares is $2 million, $52 – $50, and the regret for raising fares is $10 million, $52 – $42. The maximum regret is $10 million.

2. Determine the regret for no change in the price of oil.

For no change in the price of oil, the best payoff is $56 million with reduced fares. The regret for keeping the same fares is $5 million, $56 – $51, and the regret for raising fares is $8 million, $56 – $48. The maximum regret is $8 million.

3. Determine the regret for a 10-percent increase in the price of oil.

For a 10-percent increase in the price of oil, the best payoff is $49 million with the same fares. The regret for reducing fares is $6 million, $49 – $43, and the regret for raising fares is $2 million, $49 – $47. The maximum regret is $6 million.

4. Determine the regret for a 20-percent increase in the price of oil.

For a 20-percent increase in the price of oil, the best payoff is $44 million with raising fares. The regret for reducing fares is $9 million, $44 – $35, and the regret for keeping the same fares is $3 million, $44 – $41. The maximum regret is $9 million.

5. Choose the action with the minimum or smallest maximum regret.

Figure 15-2 summarizes the regrets for each action. The maximum regret associated with reducing fares is $9 million. The maximum regret in the same-fares column is $5 million, and the maximum regret you see with raising fares is $10 million. Global Airlines should charge the same fares because its maximum regret of $5 million is smaller than the maximum regret associated with any other action.

Figure 15-2: Regret matrix.
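The regret matrix can be generated the same way. This sketch assumes the same hypothetical `PAYOFFS` layout (one list per action, states ordered from a 10-percent oil price decrease to a 20-percent increase), and `minimax_regret` is an illustrative name. It finds each state’s best payoff, subtracts to get the regrets, and picks the action with the smallest maximum regret.

```python
# Payoffs in millions of dollars, one list per action, ordered by state of
# nature: oil price -10%, no change, +10%, +20%.
PAYOFFS = {
    "reduce fares": [50, 56, 43, 35],
    "same fares": [52, 51, 49, 41],
    "raise fares": [42, 48, 47, 44],
}


def minimax_regret(payoffs):
    """Return (chosen action, maximum regret per action, full regret table)."""
    actions = list(payoffs)
    n_states = len(payoffs[actions[0]])
    # Best payoff available under each state of nature.
    best_by_state = [max(payoffs[a][i] for a in actions) for i in range(n_states)]
    # Regret = best payoff for the state minus this action's payoff.
    regret = {
        a: [best_by_state[i] - payoffs[a][i] for i in range(n_states)]
        for a in actions
    }
    max_regret = {a: max(row) for a, row in regret.items()}
    # Choose the action whose largest regret is smallest.
    choice = min(max_regret, key=max_regret.get)
    return choice, max_regret, regret


choice, max_regret, regret = minimax_regret(PAYOFFS)
print(regret)      # {'reduce fares': [2, 0, 6, 9], 'same fares': [0, 5, 0, 3], 'raise fares': [10, 8, 2, 0]}
print(max_regret)  # {'reduce fares': 9, 'same fares': 5, 'raise fares': 10}
print(choice)      # same fares
```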

Calculating the expected value

Hope for the best but expect the worst. That’s in essence what the maxi-min and mini-max regret criteria are all about. Both of those decision-making criteria focus upon the worst possible outcome, and if you’re like Eeyore, the extremely pessimistic donkey in the Winnie-the-Pooh books, they’re probably the criteria you should use.

But I like at least some of my perspective to be based on a “hope for the best” attitude. I don’t want to ignore the worst-case scenario, but I don’t want it to be the exclusive basis for my decision.

The expected monetary value decision-making criterion overcomes the pessimistic approach by incorporating all possible outcomes in the decision-making process. Each state of nature is assigned a probability of occurrence. This probability can be determined from historical data, subjective criteria, or theory. In determining probabilities, however, the sum of the probabilities for all states of nature must equal 1. In other words, you have to specify all possible situations.

After you determine probabilities, calculate the expected monetary value (EMV) of a specific action, aj, through the following formula:

$$EMV(a_j) = \sum_{i=1}^{n} P(\theta_i)\,\pi_{ij}$$

This formula indicates that action aj’s expected monetary value equals the summation for all states of nature i = 1 through i = n of the probability of θi occurring, P(θi), multiplied by the payoff associated with action aj and state of nature θi, πij.

To determine the expected monetary value for each action, you take the following steps:

1. Calculate the expected monetary value for reducing fares.

Let reducing fares represent action aj. For each state of nature, multiply the probability of that state of nature by the payoff associated with that state of nature and action aj, reduce fares. Add the resulting values to determine the expected monetary value.

2. Calculate the expected monetary value for keeping the same fares.

Let the same fares represent action aj. For each state of nature, multiply the probability of that state of nature by the payoff associated with that state of nature and action aj, same fares. Add the resulting values to determine the expected monetary value.

3. Calculate the expected monetary value for raising fares.

Let raising fares represent action aj. For each state of nature, multiply the probability of that state of nature by the payoff associated with that state of nature and action aj, raise fares. Add the resulting values to determine the expected monetary value.

4. Choose the action with the highest expected monetary value.

Global Airlines should charge the same fares because its expected monetary value is $48.65 million. That’s higher than the expected monetary value of reducing fares — $46.7 million — and the expected monetary value of raising fares — $46.65 million.
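Here’s a sketch of the expected monetary value calculation in Python. One loud assumption: the prior probabilities don’t appear in the text that survives here (they were presumably shown alongside Figure 15-1). The values 0.05, 0.35, 0.45, and 0.15 below were back-solved from the three expected monetary values reported in Step 4; they’re the only probabilities that reproduce all three. The name `expected_monetary_value` is hypothetical.

```python
from math import isclose

# Payoffs in millions of dollars, ordered by state of nature:
# oil price -10%, no change, +10%, +20%.
PAYOFFS = {
    "reduce fares": [50, 56, 43, 35],
    "same fares": [52, 51, 49, 41],
    "raise fares": [42, 48, 47, 44],
}

# ASSUMPTION: these priors are not printed in the text; they were back-solved
# from the reported EMVs ($46.7M, $48.65M, and $46.65M).
PRIORS = [0.05, 0.35, 0.45, 0.15]


def expected_monetary_value(probs, payoffs_row):
    """EMV(a_j) = sum over states i of P(theta_i) * pi_ij."""
    if not isclose(sum(probs), 1.0):
        raise ValueError("probabilities over all states must sum to 1")
    return sum(p * payoff for p, payoff in zip(probs, payoffs_row))


emv = {action: round(expected_monetary_value(PRIORS, row), 4)
       for action, row in PAYOFFS.items()}
best = max(emv, key=emv.get)
print(emv)   # {'reduce fares': 46.7, 'same fares': 48.65, 'raise fares': 46.65}
print(best)  # same fares
```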

Changing the Odds by Using New Information to Revise Probabilities

A common lament is “If only I’d known this earlier.” This lament emphasizes the importance of information. Risk analysis exists because the future can’t be known with certainty. Nevertheless, more information enables you to better anticipate what the future holds, and that’s why additional information has value. The crucial question is whether the value of this additional information exceeds its cost.

Starting with prior probabilities

Prior probabilities are the probabilities of various states of nature associated with the problem’s initial specification. A prior probability is symbolically represented as P(θi), or the probability of state of nature θi occurring. These probabilities are determined prior to the acquisition of additional information. The probabilities in the illustration of the expected monetary value criterion in the previous section are prior probabilities.

Incorporating likelihoods

Prior probabilities can be revised based on new information. Frequently, this new information is the result of a sample. An old show-business saying is, “Will it play in Peoria?” Peoria refers to Peoria, Illinois, and the quote recognizes Peoria’s traditional role as a test market because many individuals believe Peoria represents “Main Street” America. So a business may test-market a new product in Peoria and, based upon the results, decide whether or not to launch the product nationally. Or, in the case of a Broadway play, a producer may do trial performances in Peoria before moving to Broadway.

In order to revise a prior probability, you need to know the probability of obtaining a specific sample outcome S given the various states of nature, θi. This probability is called a likelihood, and symbolically it’s represented as P(S|θi), or the probability of obtaining sample result S given state of nature θi. The vertical line within the parentheses indicates given. Likelihoods are usually based on previous experience.

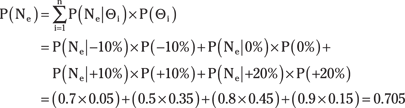

Determining marginal probabilities

A likelihood is the probability of a certain sample result given that a specific state of nature occurs. A marginal probability is the probability of getting a certain sample result irrespective of the state of nature. Thus, the probability of getting sample result S is P(S).

The formula used to determine a marginal probability is

$$P(S) = \sum_{i=1}^{n} P(S \mid \theta_i)\,P(\theta_i)$$

where P(S) is the probability of getting sample result S, P(S|θi) is the likelihood or probability of getting sample result S given state of nature θi has already occurred, and P(θi) is the probability of state of nature θi occurring.

Revising probabilities

After sampling provides additional information, you can revise prior probabilities. For example, an oil company can use the results of an extensive geological survey to revise the probability that it will hit an oil reserve of a given size when it drills. Or a business can use an economic forecast to revise the probability that the economy will enter a recession.

The revised probability is called a posterior probability. Symbolically, it’s represented as P(θi|S), or the probability of state of nature θi occurring given sample result S. Again, the vertical line within the parentheses indicates given.

In order to revise a prior probability, you need to know the likelihoods — the probability of obtaining some sample outcome S given the various states of nature, θi, or the P(S|θi).

Assuming both prior probabilities and likelihoods are known, you can calculate the posterior probability given sample result S. The formula for calculating the posterior probability, known as Bayes’ theorem, is

$$P(\theta_k \mid S) = \frac{P(S \mid \theta_k)\,P(\theta_k)}{\sum_{i=1}^{n} P(S \mid \theta_i)\,P(\theta_i)}$$

where P(θk|S) is the posterior probability of state of nature θk occurring given sample result S, P(S|θk) and P(S|θi) are likelihoods, and P(θk) and P(θi) are prior probabilities.

The denominator in this formula is simply the marginal probability of a given sample result. Thus the formula can be rewritten as

$$P(\theta_k \mid S) = \frac{P(S \mid \theta_k)\,P(\theta_k)}{P(S)}$$

where P(S) is the probability of getting sample result S.
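To see the marginal and posterior formulas working together, here’s a minimal Python sketch. The helper name `revise` is hypothetical. The likelihoods are the consultant’s track record for a positive report from the Global Airlines example that follows, and the priors are an assumption: they’re back-solved from the expected monetary values reported earlier rather than printed in the text.

```python
def revise(priors, likelihoods):
    """Return P(S) and the posterior P(theta_k | S) for every state k."""
    # Bayes' theorem numerators: P(S | theta_i) * P(theta_i).
    joint = [lik * prior for lik, prior in zip(likelihoods, priors)]
    # Marginal probability of the sample result: P(S) = sum of the joint terms.
    p_s = sum(joint)
    if p_s == 0:
        raise ValueError("sample result has zero probability under these priors")
    # Posterior: P(theta_k | S) = P(S | theta_k) * P(theta_k) / P(S).
    return p_s, [j / p_s for j in joint]


# States of nature: oil price -10%, no change, +10%, +20%.
priors = [0.05, 0.35, 0.45, 0.15]           # ASSUMPTION: back-solved, not printed in the text
likelihood_positive = [0.3, 0.5, 0.2, 0.1]  # consultant's P(Po | theta_i)

p_po, posterior_po = revise(priors, likelihood_positive)
print(round(p_po, 4))                       # 0.295
print([round(p, 4) for p in posterior_po])  # [0.0508, 0.5932, 0.3051, 0.0508]
```

The posteriors always sum to 1, because each joint term is divided by their total, P(S).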

Recalculating expected values

Although new information enables you to revise probabilities, obtaining that information is costly. You need to balance the cost of obtaining the information with its expected value. You want to obtain additional information only when its expected value exceeds the cost of obtaining it. The following example illustrates the process you use to determine the value of additional information.

Global is considering hiring a consultant to advise it on oil market conditions. Before hiring the consultant, Global Airlines must determine how the consultant’s forecast affects its decision. Obviously, if Global’s best course of action is the same regardless of the consultant’s forecast, hiring the expert is pointless.

To determine whether or not to hire the consultant, Global first notes the information the consultant provides. In this situation, the consultant will make a positive (Po) or negative (Ne) prediction regarding the price of oil. The consultant’s past track record shows the following:

When the price of oil decreased by 10 percent, the probability the consultant made a positive (Po) prediction was 0.3, P(Po|–10%) = 0.3, and the probability the consultant made a negative (Ne) prediction was 0.7, P(Ne|–10%) = 0.7.

When the price of oil remained the same, the probability of a positive (Po) prediction was 0.5, P(Po|0%) = 0.5, and a negative (Ne) prediction was 0.5, P(Ne|0%) = 0.5.

When the price of oil increased by 10 percent, the probability of a positive (Po) prediction was 0.2, P(Po|+10%) = 0.2, and a negative (Ne) prediction was 0.8, P(Ne|+10%) = 0.8.

When the price of oil increased by 20 percent, the probability of a positive (Po) prediction was 0.1, P(Po|+20%) = 0.1, and a negative (Ne) prediction was 0.9, P(Ne|+20%) = 0.9.

These conditional probabilities are likelihoods, P(S|θi), where the sample result S is the consultant’s prediction.

Based upon the prior probabilities and likelihoods, Global Airlines can determine the value of the consultant’s report.

1. Calculate the probability of a positive (Po) report.

Because you’ve not yet decided whether or not to hire the consultant, you don’t know what report you may get. Therefore, to determine the expected value of the consultant’s report, you need to determine the marginal probability of a positive report.

2. Calculate the probability of a negative (Ne) report.

Determine the marginal probability of a negative report.

3. Calculate the posterior probability for oil prices decreasing by 10 percent given a positive (Po) report.

$$P(-10\% \mid Po) = \frac{P(Po \mid -10\%)\,P(-10\%)}{P(Po)} = \frac{0.3 \times P(-10\%)}{P(Po)}$$

4. Calculate the remaining posterior probabilities given a positive (Po) report.

Posterior probabilities need to be calculated for oil prices that remain the same, oil prices that increase by 10 percent, and oil prices that increase by 20 percent given a positive report.

5. Calculate the posterior probabilities for oil price changes given a negative (Ne) report.

Given a negative report, you need to calculate the probabilities that oil prices decrease by 10 percent, oil prices remain the same, oil prices increase by 10 percent, and oil prices increase by 20 percent.

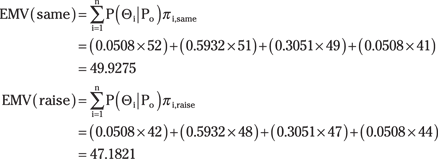

6. Calculate the expected monetary value for reducing fares given a positive (Po) consultant report.

Based upon the posterior probabilities, you can recalculate the expected monetary value of each action. In this calculation, the payoffs associated with each combination of action and state of nature are multiplied by the posterior probability in the place of the prior probability. Reducing fares represents action aj. For each state of nature, multiply the posterior probability of that state of nature given a positive consultant’s report by the payoff associated with that state of nature and action aj. Add the resulting values to determine the expected monetary value.

7. Calculate the expected monetary value for charging the same fares and increasing fares given a positive (Po) consultant’s report.

For each action, multiply the posterior probability of each state of nature given a positive consultant’s report by the payoff associated with that state of nature and the appropriate action — same fares or raised fares.

8. Choose the action with the highest expected monetary value given a positive consultant’s report.

Global Airlines should reduce fares because its expected monetary value is $50.6565 million. That’s higher than the expected monetary value of keeping the same fares — $49.9275 million — and the expected monetary value of raising fares — $47.1821 million.

9. Calculate the expected monetary value for reducing fares, keeping the same fares, and increasing fares given a negative (Ne) consultant’s report.

Expected monetary values based upon posterior probabilities must also be calculated for the other possible forecasts — you need to know what decision you’ll make if the consultant’s report is negative instead of positive. Remember to use the posterior probabilities associated with a negative consultant’s report.

10. Choose the action with the highest expected monetary value given a negative consultant’s report.

Global Airlines should charge the same fares because its expected monetary value is $48.1083 million. That’s higher than the expected monetary value of reducing fares — $45.0375 million — and the expected monetary value of raising fares — $46.421 million.

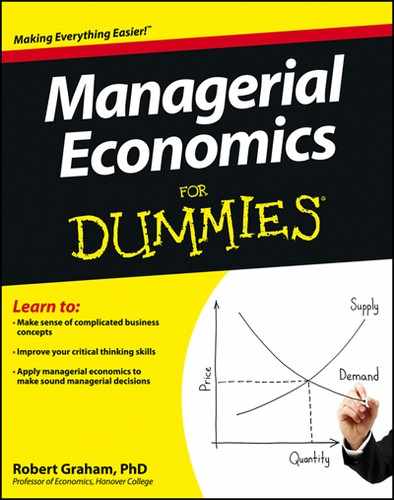

11. Determine the expected monetary value of acting on the consultant’s report.

The consultant’s report affects your decision. If it’s positive, you’ll reduce fares, and if it’s negative, you’ll charge the same fares. Multiply the expected monetary value of each decision by the probability of getting the report that leads to that decision, and add the results.

$$EMV_{\text{consultant}} = P(Po) \times \$50.6565 + P(Ne) \times \$48.1083 = \$48.8601 \text{ million}$$

12. Determine the value of the consultant’s report.

The expected value of the consultant’s report is determined by comparing the EMV of acting on the consultant’s report with the EMV of the best action based on prior probabilities. Based upon the prior probabilities used in the previous example, Global Airlines charged the same fares, with an expected monetary value of $48.65 million. If Global Airlines hires the consultant and acts on the consultant’s report, the expected monetary value increases to $48.8601 million. The difference between the expected monetary value given the consultant’s report and the expected monetary value based on prior probabilities is the maximum amount Global Airlines should be willing to pay the consultant. This difference is $0.2101 million, or $210,100 ($48.8601 million minus $48.65 million).
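The whole procedure fits in one short Python sketch. The names `value_of_report` and `emv` are hypothetical, and the priors 0.05, 0.35, 0.45, and 0.15 are an assumption back-solved from the expected monetary values reported earlier in the chapter. The sketch picks the best action for each possible report, weights each report’s best expected monetary value by that report’s marginal probability, and subtracts the best expected monetary value you’d get without the report.

```python
# Payoffs in millions of dollars, ordered by state of nature:
# oil price -10%, no change, +10%, +20%.
PAYOFFS = {
    "reduce fares": [50, 56, 43, 35],
    "same fares": [52, 51, 49, 41],
    "raise fares": [42, 48, 47, 44],
}
# ASSUMPTION: priors back-solved from the chapter's EMVs; not printed in the text.
PRIORS = [0.05, 0.35, 0.45, 0.15]
# Consultant's track record, P(report | state), from the chapter.
LIKELIHOODS = {
    "Po": [0.3, 0.5, 0.2, 0.1],
    "Ne": [0.7, 0.5, 0.8, 0.9],
}


def emv(probs, row):
    """Expected monetary value: sum of probability * payoff."""
    return sum(p * x for p, x in zip(probs, row))


def value_of_report(payoffs, priors, likelihoods):
    """Return the action chosen for each report, the EMV of acting on the
    report, and the report's value (the most you'd pay for it)."""
    emv_without = max(emv(priors, row) for row in payoffs.values())
    decisions = {}
    emv_with = 0.0
    for report, lik in likelihoods.items():
        joint = [lik_i * p_i for lik_i, p_i in zip(lik, priors)]
        p_report = sum(joint)                      # marginal P(report)
        posterior = [j / p_report for j in joint]  # P(state | report)
        best = max(payoffs, key=lambda a: emv(posterior, payoffs[a]))
        decisions[report] = best
        # Weight this report's best EMV by the probability of the report.
        emv_with += p_report * emv(posterior, payoffs[best])
    return decisions, emv_with, emv_with - emv_without


decisions, emv_with, value = value_of_report(PAYOFFS, PRIORS, LIKELIHOODS)
print(decisions)           # {'Po': 'reduce fares', 'Ne': 'same fares'}
print(round(emv_with, 4))  # 48.865
print(round(value, 4))     # 0.215
```

Without intermediate rounding, the sketch values the report at about $0.215 million ($215,000). The $210,100 in Step 12 reflects posterior probabilities rounded to four decimal places before the expected values were computed; plugging those rounded posteriors into the same arithmetic gives the $48.8601 million used in Step 12.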

Taking Chances with Risk Preferences

Individuals have different risk preferences. Some people buy lottery tickets all the time, while others never buy them. Some people invest millions in the newest innovation, while others stay with the tried and true. Different risk preferences result from differences in individual satisfaction or dissatisfaction arising from risk.

As I note in Chapter 5, utility is a subjective measure of satisfaction that’s unique to an individual. A utility function is an index or scale that measures the relative utility of various outcomes. Economists use utils to measure the amount of an individual’s satisfaction. The concept of utility enables individual risk preferences to be incorporated in decision-making criteria.

Constructing a utility function

Because utility is subjective, one individual’s utility function can’t be compared to another individual’s utility function. You must construct a utility function by determining the individual’s utility associated with each possible outcome.

To construct a utility function, you need to compare two alternatives. One alternative is called the standard lottery. The standard lottery has two possible outcomes, such as a payoff of $A occurring with probability P and a payoff of $B occurring with probability 1 – P. The second alternative is called the certainty equivalent. This alternative has a certain payoff of $C.

You’re indifferent when the utility from the certainty equivalent (second alternative) equals the expected utility from the standard lottery (first alternative). Mathematically, an individual is indifferent if

$$U(\$C) = P \times U(\$A) + (1 - P) \times U(\$B)$$

where U($) represents the utility associated with that payoff and P and (1 – P) represent probabilities.

1. Assign utility values to two outcomes.

For example, receiving $0 may have a utility value of 0, while receiving $100 has a utility value of 200.

2. Define the monetary certainty equivalent.

Assume the two outcomes specified in Step 1 represent a standard lottery, where each outcome has a 50 percent chance of occurring. After you specify the standard lottery, you must determine what amount of money received with certainty would make you indifferent to the standard lottery. For example, you may decide that you’d be indifferent between receiving $45 for certain and taking the gamble represented by the standard lottery.

3. Determine the utility of the certainty equivalent.

$$U(\$45) = 0.5 \times U(\$0) + 0.5 \times U(\$100) = 0.5(0) + 0.5(200) = 100$$

Thus, you get 100 utils of satisfaction from $45.

4. Repeat these calculations for other standard lottery and certainty equivalent combinations to lead to other utilities.

For example, the new standard lottery has two possible outcomes: receive $0 or $45. Each outcome has a 50 percent chance of occurring. You decide that you’d be indifferent between receiving $25 for certain and taking the gamble represented by the standard lottery.

$$U(\$25) = 0.5 \times U(\$0) + 0.5 \times U(\$45) = 0.5(0) + 0.5(100) = 50$$

You get 50 utils of satisfaction from $25.
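Steps 1 through 4 boil down to one formula applied repeatedly. In this Python sketch, `certainty_equivalent_utility` is a hypothetical helper name; the 50-50 lotteries, the utilities of 0 for $0 and 200 for $100, and the certainty equivalents of $45 and $25 all come from the example above.

```python
def certainty_equivalent_utility(p, u_a, u_b):
    """Utility of the certainty equivalent that makes you indifferent to a
    standard lottery paying $A with probability p and $B with probability 1 - p:
    U($C) = p * U($A) + (1 - p) * U($B)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    return p * u_a + (1 - p) * u_b


# Step 1: assign utility values to two outcomes.
utility = {0: 0.0, 100: 200.0}
# Steps 2-3: $45 for certain is equivalent to a 50-50 lottery on $0 or $100.
utility[45] = certainty_equivalent_utility(0.5, utility[0], utility[100])
# Step 4: $25 for certain is equivalent to a 50-50 lottery on $0 or $45.
utility[25] = certainty_equivalent_utility(0.5, utility[0], utility[45])

print(utility)  # {0: 0.0, 100: 200.0, 45: 100.0, 25: 50.0}
```

Each new certainty equivalent adds one more point to the utility function, so repeating Step 4 with other lotteries fills in the curve.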

Risking attitude

Attitudes toward risk vary among individuals. Some individuals gladly take on additional risk, while other individuals willingly pay a substantial premium, such as with health insurance, to reduce their risk of loss. Individual attitudes toward risk are grouped into the following three categories:

You’re risk averse if you have two alternatives with the same expected monetary value and you choose the alternative with less variation in outcomes.

You’re risk neutral if you have two alternatives with different variations in outcomes and the same expected monetary value and you’re indifferent between those alternatives. Risk neutral individuals aren’t influenced positively or negatively by risk. Risk neutral individuals will always choose the alternative with the highest expected value.

You’re a risk taker or risk lover if two alternatives have the same expected monetary value and you choose the one with the highest variability. Lottery players are risk takers.

Figure 15-3 illustrates the relationship between the expected payoff and utility for individuals with different risk preferences. Note that the additional satisfaction risk-averse individuals get from higher payoffs is decreasing, while the additional satisfaction risk takers get from higher payoffs is increasing.

Figure 15-3: Attitudes toward risk.

Using the expected utility criterion

After you determine the utilities, you maximize a decision’s expected utility. Expected utility equals the sum of each possible outcome’s utility multiplied by the probability of the outcome occurring.

$$E(\text{utility}_{a_j}) = \sum_{i=1}^{n} U(\pi_{ij})\,P(\theta_i)$$

where the expected utility of action aj, E(utility aj), equals the summation for all states of nature of the utility associated with the payoff corresponding to action aj and state of nature θi, U(πij), multiplied by the probability of state of nature θi occurring, P(θi).
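Figure 15-4’s payoffs and probabilities aren’t reproduced in the text, so rather than guess them, this Python sketch applies the expected utility criterion to the utilities built in the previous section (0 utils for $0, 50 for $25, 100 for $45, and 200 for $100). The three alternatives compared are illustrative, and `expected_utility` is a hypothetical helper name.

```python
from math import isclose


def expected_utility(probs, utils):
    """E(utility a_j) = sum over states of U(pi_ij) * P(theta_i)."""
    if not isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(u * p for u, p in zip(utils, probs))


# Utilities (in utils) from the utility-function construction in this chapter.
U = {0: 0, 25: 50, 45: 100, 100: 200}

# Illustrative alternatives: (probabilities, dollar payoffs).
alternatives = {
    "standard lottery ($0 or $100)": ([0.5, 0.5], [0, 100]),
    "$45 for certain": ([1.0], [45]),
    "$25 for certain": ([1.0], [25]),
}
scores = {name: expected_utility(p, [U[x] for x in payoffs])
          for name, (p, payoffs) in alternatives.items()}
print(scores)
# {'standard lottery ($0 or $100)': 100.0, '$45 for certain': 100.0, '$25 for certain': 50.0}
```

The standard lottery and the sure $45 tie at 100 utils, which is exactly the indifference used to construct the utility function; the sure $25 ranks below both.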

Figure 15-4: Payoff matrix for business expansion.

To determine which action to take based upon the expected utility criterion, you take the following steps:

1. Calculate the expected utility for not expanding.

For each state of nature, multiply the probability of that state of nature by the payoff’s utility given that state of nature and not expanding. Add the resulting values to determine the expected utility.

2. Calculate the expected utility for expanding.

3. Choose the action with the highest expected utility.

You shouldn’t expand. The expected utility of not expanding is 130, which is higher than the expected utility of expanding, which is only 45.

Using Auctions

There’s nothing like an eBay auction. First, you can find almost anything on eBay — from accordions to z-scale model trains. And there’s nothing like bidding. You hover over the computer as the seconds tick off, waiting to get in that last bid. But be careful — get too excited and you may pay more than the item is worth to you.

Auctions are situations where potential buyers compete for the right to own a good, or anything of value. As in any situation, the seller in an auction wants the highest possible price, while the buyer wants the lowest possible price. What makes an auction different is the competition among buyers, which can lead to a higher price for the seller.

Bidding last wins: The English auction

An English auction is the auction you're probably most familiar with. It starts at a low price set by the seller. Potential buyers, or bidders, then raise the price in increments until no one is willing to bid higher. At that point, the item is sold to the last individual to bid.

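In an English auction, you can see what other bidders are offering at every point, because all bids are public. Your decision on whether to bid is simply determined by whether you're willing to pay more than the current highest bid.

For example, suppose Douglas, Kent, and Lars are bidding on a parcel of land. Douglas is willing to pay up to $4,000 per acre, Kent up to $4,200 per acre, and Lars up to $5,000 per acre. The English auction proceeds as follows: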

1. All three individuals will bid as long as the price is $4,000 or less.

All three individuals are willing to pay at least $4,000 per acre for the land.

2. Only Kent and Lars bid at prices between $4,000 and $4,200.

Douglas drops out of the auction because he’s not willing to pay more than $4,000.

3. Lars purchases the land at the first bid over $4,200.

After the price per acre exceeds $4,200, Kent drops out of the auction, leaving Lars as the only bidder. Thus, the minimum amount Lars must pay to purchase the land is $4,200.01 per acre.
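The English auction above can be simulated in a few lines. This is a minimal sketch under stated assumptions: amounts are in cents so a one-cent increment is exact, each bidder keeps bidding while the price is at or below their valuation, the highest valuation is unique, and the $3,000 opening price is hypothetical. The function name `english_auction` is illustrative only.

```python
def english_auction(valuations: dict[str, int], start: int, increment: int = 1) -> tuple[str, int]:
    """Ascending-price (English) auction; all amounts are in cents.

    Assumes each bidder stays in while the price is at or below their
    valuation, and that the highest valuation is unique.
    """
    price = start
    # Bidding continues while at least two bidders are still willing to pay the current price.
    while sum(v >= price for v in valuations.values()) >= 2:
        price += increment
    # Only the highest-value bidder is still willing to pay this price.
    winner = max(valuations, key=valuations.get)
    return winner, price


# Per-acre valuations from the example, in cents; the $3,000 opening price is hypothetical.
valuations = {"Douglas": 400_000, "Kent": 420_000, "Lars": 500_000}
winner, price = english_auction(valuations, start=300_000)
print(f"{winner} wins at ${price / 100:,.2f} per acre")
```

The loop stops one increment above the second-highest valuation, which is why Lars pays just over Kent's $4,200 rather than his own $5,000.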

Bidding first wins: The Dutch auction

In a Dutch auction, the first bid wins. The bidding starts with the seller asking an extremely high price, one nobody is willing to pay. The price is then gradually lowered until one buyer indicates a willingness to purchase the item. At that point, the auction is over and the item is sold.

In a Dutch auction, no information regarding other bidders’ preferences is available to potential buyers. Because the first bid is the winning bid, potential buyers can’t determine the item’s potential value to anyone else. As a result, to avoid losing the opportunity to purchase the item, buyers tend to bid the maximum amount they’re willing to pay.

1. The auction starts at an extremely high price, perhaps $10,000 per acre.

The auctioneer sets the price so high that nobody is willing to purchase the land.

2. The auctioneer progressively lowers the price.

As long as the price remains higher than what anyone is willing to pay, no one bids. However, because potential buyers don't know anything about other bidders' preferences, they grow nervous about losing the opportunity to buy the land as the price is lowered toward the amount they're willing to pay.

3. The price is lowered to $5,000 per acre, and Lars purchases the land by making the first and only bid.

Because Lars knows nothing about the other bidders' preferences, he bids as soon as the price reaches the amount he's willing to pay. Because the first bid is both the only bid and the winning bid, the auction is over at this point.
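The Dutch auction can be sketched the same way. This is a minimal sketch, assuming amounts in cents, a hypothetical $1 price decrement, and, as the text describes, that each bidder calls out as soon as the asking price falls to their full valuation. The function name `dutch_auction` is illustrative only.

```python
from typing import Optional


def dutch_auction(valuations: dict[str, int], start: int, decrement: int) -> tuple[Optional[str], int]:
    """Descending-price (Dutch) auction; all amounts are in cents.

    Assumes each bidder bids the moment the asking price falls to or
    below their valuation, i.e. bids the full amount they're willing to pay.
    """
    price = start
    while price > 0:
        # Bidders willing to accept the current asking price.
        takers = [name for name, v in valuations.items() if v >= price]
        if takers:
            # The first bid ends the auction; if several qualify at once,
            # assume the highest-value bidder calls out first.
            winner = max(takers, key=lambda name: valuations[name])
            return winner, price
        price -= decrement
    return None, 0  # nobody bid before the price reached zero


# Per-acre valuations from the example, in cents; the $1 decrement is hypothetical.
valuations = {"Douglas": 400_000, "Kent": 420_000, "Lars": 500_000}
winner, price = dutch_auction(valuations, start=1_000_000, decrement=100)
print(f"{winner} wins at ${price / 100:,.2f} per acre")
```

Because no one sees a rival's bid, the winner pays his own valuation; the competing valuations never affect the price.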

Comparing the English auction with the Dutch auction, note that the winning bidder is the same: Lars. However, Lars's winning bid was only $4,200.01 per acre in the English auction, while in the Dutch auction it was $5,000 per acre.

Sealing the deal: The sealed-bid auction

In a first-price, sealed-bid auction, potential buyers submit written bids without knowing what anyone else is bidding. The auctioneer collects the bids and sells the item to the highest bidder.

In both an English auction and a sealed-bid auction, the item is sold to the highest bidder. However, in a sealed-bid auction, potential buyers don't know how much others are bidding, and there's no back-and-forth bidding. As a result, bidders tend to bid the maximum amount they're willing to pay, so the outcome is similar to that of a Dutch auction.
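A first-price, sealed-bid auction is the simplest of the three to express in code. This minimal sketch reuses the per-acre valuations from the land example (in cents) and assumes, following the text, that each bidder submits the full amount they're willing to pay. The function name `first_price_sealed_bid` is illustrative only.

```python
def first_price_sealed_bid(bids: dict[str, int]) -> tuple[str, int]:
    """First-price sealed-bid auction: the highest bid wins and the winner pays that bid."""
    # All bids are opened together; nobody saw anyone else's bid beforehand.
    winner = max(bids, key=bids.get)
    return winner, bids[winner]


# Assumption from the text: each bidder writes down their full per-acre valuation (in cents).
bids = {"Douglas": 400_000, "Kent": 420_000, "Lars": 500_000}
winner, price = first_price_sealed_bid(bids)
print(f"{winner} wins at ${price / 100:,.2f} per acre")
```

Under that assumption, Lars again wins and pays $5,000 per acre, the same result as the Dutch auction.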

Whenever risk is present, even the risk of not buying an item you want at an auction, you can’t be sure of the outcome. This risk can be very stressful. So, if you want to further reduce stress, you can always follow humorist Frank McKinney Hubbard’s advice, “The safe way to double your money is to fold it over once and put it in your pocket.”

Because it doesn’t know what’s going to happen to the price of oil, Global Airlines uses the maxi-min criterion to determine what fare to set. To apply the maxi-min criterion, Global takes the following steps:

Because it doesn’t know what’s going to happen to the price of oil, Global Airlines uses the maxi-min criterion to determine what fare to set. To apply the maxi-min criterion, Global takes the following steps: This section deals extensively with statistical techniques that enable you to manipulate probabilities. If you’re unfamiliar with concepts like conditional probabilities, you should review those concepts and probability theory in general before tackling this section. I call this technical stuff because the warning icon doesn’t use a mushroom cloud; thus, it seems insufficient for this caution.
