CHAPTER 5

Series Solutions of ODEs. Special Functions

In the previous chapters, we have seen that linear ODEs with constant coefficients can be solved by algebraic methods, and that their solutions are elementary functions known from calculus. For ODEs with variable coefficients the situation is more complicated, and their solutions may be nonelementary functions. Legendre's, Bessel's, and the hypergeometric equations are important ODEs of this kind. Since these ODEs and their solutions, the Legendre polynomials, Bessel functions, and hypergeometric functions, play an important role in engineering modeling, we shall consider the two standard methods for solving such ODEs.

The first method is called the power series method because it gives solutions in the form of a power series $a_0 + a_1x + a_2x^2 + a_3x^3 + \cdots$.

The second method is called the Frobenius method and generalizes the first. In cases such as Bessel's equation, where the first method is not general enough, it gives solutions in the form of power series multiplied by a logarithmic term or by a fractional power $x^r$.

All those more advanced solutions and various other functions not appearing in calculus are known as higher functions or special functions, which has become a technical term. Each of these functions is important enough to give it a name and investigate its properties and relations to other functions in great detail (take a look into Refs. [GenRef1], [GenRef10], or [All] in App. 1). Your CAS knows practically all functions you will ever need in industry or research labs, but it is up to you to find your way through this vast terrain of formulas. The present chapter may give you some help in this task.

COMMENT. You can study this chapter directly after Chap. 2 because it needs no material from Chaps. 3 or 4.

Prerequisite: Chap. 2.

Section that may be omitted in a shorter course: 5.5.

References and Answers to Problems: App. 1 Part A, and App. 2.

5.1 Power Series Method

The power series method is the standard method for solving linear ODEs with variable coefficients. It gives solutions in the form of power series. These series can be used for computing values, graphing curves, proving formulas, and exploring properties of solutions, as we shall see. In this section we begin by explaining the idea of the power series method.

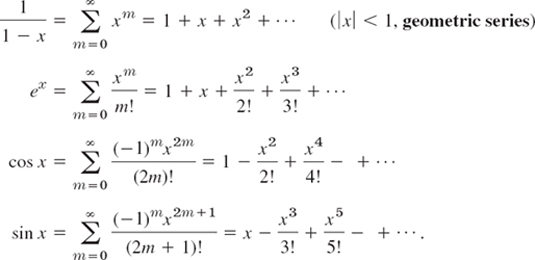

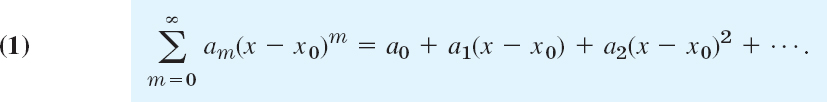

From calculus we remember that a power series (in powers of x − x0) is an infinite series of the form

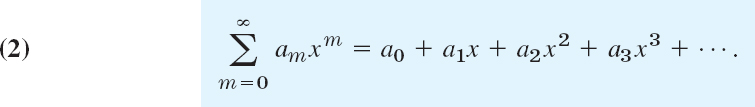

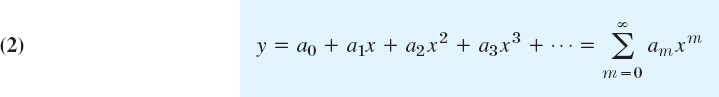

Here, x is a variable; $a_0, a_1, a_2, \ldots$ are constants, called the coefficients of the series; $x_0$ is a constant, called the center of the series. In particular, if $x_0 = 0$, we obtain a power series in powers of x:

$$\sum_{m=0}^{\infty} a_mx^m = a_0 + a_1x + a_2x^2 + a_3x^3 + \cdots \tag{2}$$

We shall assume that all variables and constants are real.

We note that the term “power series” usually refers to a series of the form (1) [or (2)] but does not include series of negative or fractional powers of x. We use m as the summation letter, reserving n as a standard notation in the Legendre and Bessel equations for integer values of the parameter.

Idea and Technique of the Power Series Method

The idea of the power series method for solving linear ODEs seems natural, once we know that the most important ODEs in applied mathematics have solutions of this form. We explain the idea by an ODE that can readily be solved otherwise.

EXAMPLE 2 Power Series Solution. Solve y′ − y = 0.

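Solution. In the first step we insert

$$y = a_0 + a_1x + a_2x^2 + \cdots = \sum_{m=0}^{\infty} a_mx^m$$

and the series obtained by termwise differentiation

$$y' = a_1 + 2a_2x + 3a_3x^2 + \cdots = \sum_{m=1}^{\infty} ma_mx^{m-1} \tag{3}$$

into the ODE: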

$$(a_1 + 2a_2x + 3a_3x^2 + \cdots) - (a_0 + a_1x + a_2x^2 + \cdots) = 0.$$

Then we collect like powers of x, finding

$$(a_1 - a_0) + (2a_2 - a_1)x + (3a_3 - a_2)x^2 + \cdots = 0.$$

Equating the coefficient of each power of x to zero, we have

$$a_1 - a_0 = 0, \qquad 2a_2 - a_1 = 0, \qquad 3a_3 - a_2 = 0, \qquad \ldots$$

Solving these equations, we may express a1, a2, … in terms of a0, which remains arbitrary:

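$$a_1 = a_0, \qquad a_2 = \frac{a_1}{2} = \frac{a_0}{2!}, \qquad a_3 = \frac{a_2}{3} = \frac{a_0}{3!}, \qquad \ldots$$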

With these values of the coefficients, the series solution becomes the familiar general solution

$$y = a_0\left(1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots\right) = a_0e^x.$$

Test your comprehension by solving $y'' + y = 0$ by power series. You should get the result $y = a_0\cos x + a_1\sin x$.
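The coefficient equations above can be solved mechanically. The following Python sketch (the function names are illustrative, not from any library) builds the coefficients of $y' - y = 0$ from $a_{m+1} = a_m/(m + 1)$ and, for the comprehension test, those of $y'' + y = 0$ from $a_{m+2} = -a_m/((m + 2)(m + 1))$, which is what equating the coefficient of $x^m$ in $\sum (m + 2)(m + 1)a_{m+2}x^m + \sum a_mx^m = 0$ to zero gives. It then compares partial sums with $e^x$, $\cos x$, and $\sin x$; the number of terms is an arbitrary choice for this sketch.

```python
import math


def coeffs_first_order(a0: float, n: int) -> list[float]:
    """a_0, ..., a_n for y' - y = 0, from a_{m+1} = a_m / (m + 1)."""
    a = [a0]
    for m in range(n):
        a.append(a[m] / (m + 1))
    return a


def coeffs_second_order(a0: float, a1: float, n: int) -> list[float]:
    """a_0, ..., a_n for y'' + y = 0, from a_{m+2} = -a_m / ((m + 2)(m + 1))."""
    a = [a0, a1]
    for m in range(n - 1):
        a.append(-a[m] / ((m + 2) * (m + 1)))
    return a[: n + 1]


def partial_sum(a: list[float], x: float) -> float:
    """Evaluate a_0 + a_1 x + ... + a_n x^n by Horner's scheme."""
    s = 0.0
    for coeff in reversed(a):
        s = s * x + coeff
    return s


if __name__ == "__main__":
    x = 0.7
    print(partial_sum(coeffs_first_order(1.0, 20), x), math.exp(x))
    print(partial_sum(coeffs_second_order(1.0, 0.0, 20), x), math.cos(x))
    print(partial_sum(coeffs_second_order(0.0, 1.0, 20), x), math.sin(x))
```

With $a_0 = 1$, $a_1 = 0$ the second recursion reproduces the cosine series, and with $a_0 = 0$, $a_1 = 1$ the sine series, in line with $y = a_0\cos x + a_1\sin x$.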

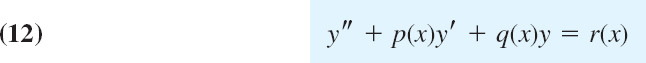

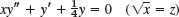

We now describe the method in general and justify it after the next example. For a given ODE

$$y'' + p(x)y' + q(x)y = 0 \tag{4}$$

we first represent p(x) and q(x) by power series in powers of x (or of $x - x_0$ if solutions in powers of $x - x_0$ are wanted). Often p(x) and q(x) are polynomials, and then nothing needs to be done in this first step. Next we assume a solution in the form of a power series (2) with unknown coefficients and insert it as well as (3) and

$$y'' = 2a_2 + 3\cdot 2a_3x + 4\cdot 3a_4x^2 + \cdots = \sum_{m=2}^{\infty} m(m - 1)a_mx^{m-2} \tag{5}$$

into the ODE. Then we collect like powers of x and equate the sum of the coefficients of each occurring power of x to zero, starting with the constant terms, then taking the terms containing x, then the terms in $x^2$, and so on. This gives equations from which we can determine the unknown coefficients of (2) successively.

EXAMPLE 3 A Special Legendre Equation. The ODE

$$(1 - x^2)y'' - 2xy' + 2y = 0$$

occurs in models exhibiting spherical symmetry. Solve it.

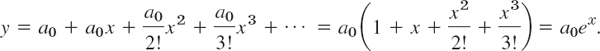

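Solution. Substitute (2), (3), and (5) into the ODE. $(1 - x^2)y''$ gives two series, one for $y''$ and one for $-x^2y''$. In the term $-2xy'$ use (3) and in $2y$ use (2). Write like powers of x vertically aligned. This gives

$$\begin{aligned}
y'' &= 2a_2 + 6a_3x + 12a_4x^2 + 20a_5x^3 + 30a_6x^4 + \cdots \\
-x^2y'' &= -2a_2x^2 - 6a_3x^3 - 12a_4x^4 - \cdots \\
-2xy' &= -2a_1x - 4a_2x^2 - 6a_3x^3 - 8a_4x^4 - \cdots \\
2y &= 2a_0 + 2a_1x + 2a_2x^2 + 2a_3x^3 + 2a_4x^4 + \cdots
\end{aligned}$$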

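Add terms of like powers of x. For each power $x^0, x, x^2, \ldots$ equate the sum obtained to zero. Denote these sums by [0] (constant terms), [1] (first power of x), and so on:

$$\begin{aligned}
&[0]\quad 2a_2 + 2a_0 = 0, && a_2 = -a_0 \\
&[1]\quad 6a_3 - 2a_1 + 2a_1 = 0, && a_3 = 0 \\
&[2]\quad 12a_4 - 2a_2 - 4a_2 + 2a_2 = 0, && a_4 = \tfrac{4}{12}a_2 = -\tfrac13a_0 \\
&[3]\quad 20a_5 - 6a_3 - 6a_3 + 2a_3 = 0, && a_5 = \tfrac{10}{20}a_3 = 0 \\
&[4]\quad 30a_6 - 12a_4 - 8a_4 + 2a_4 = 0, && a_6 = \tfrac{18}{30}a_4 = -\tfrac15a_0
\end{aligned}$$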

This gives the solution

$$y = a_1x + a_0\left(1 - x^2 - \tfrac13x^4 - \tfrac15x^6 - \cdots\right).$$

$a_0$ and $a_1$ remain arbitrary. Hence, this is a general solution that consists of two solutions: $x$ and $1 - x^2 - \frac13x^4 - \frac15x^6 - \cdots$. These two solutions are members of families of functions called Legendre polynomials $P_n(x)$ and Legendre functions $Q_n(x)$; here we have $x = P_1(x)$ and

$$1 - x^2 - \tfrac13x^4 - \tfrac15x^6 - \cdots = 1 - \tfrac12x\ln\frac{1 + x}{1 - x} = -Q_1(x).$$

The minus is by convention. The index 1 is called the order of these two functions. More on Legendre polynomials in the next section.
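Collecting the coefficient of a general power $x^s$ in the aligned series gives $(s + 2)(s + 1)a_{s+2} - s(s - 1)a_s - 2sa_s + 2a_s = 0$, that is, $a_{s+2} = \frac{s - 1}{s + 1}a_s$, which reproduces [0] to [4] above. A minimal Python sketch (the names are illustrative) runs this recursion with $a_0 = 1$, $a_1 = 0$ in exact fractions and compares the partial sum with $-Q_1(x) = 1 - \frac12x\ln\frac{1 + x}{1 - x}$ at a point inside $|x| < 1$.

```python
from fractions import Fraction
import math


def example3_coeffs(n_terms: int) -> list[Fraction]:
    """Coefficients of the even solution of (1 - x^2)y'' - 2xy' + 2y = 0 with a_0 = 1, a_1 = 0."""
    a = [Fraction(0)] * n_terms
    a[0] = Fraction(1)
    for s in range(n_terms - 2):
        # coefficient of x^s: (s + 2)(s + 1)a_{s+2} - (s + 2)(s - 1)a_s = 0
        a[s + 2] = Fraction(s - 1, s + 1) * a[s]
    return a


def minus_q1(x: float) -> float:
    """Closed form 1 - (x/2) ln((1 + x)/(1 - x)) = -Q_1(x), valid for |x| < 1."""
    return 1 - 0.5 * x * math.log((1 + x) / (1 - x))


if __name__ == "__main__":
    a = example3_coeffs(12)
    print(a[:7])  # a_0, ..., a_6
    x = 0.3
    series = sum(float(c) * x**k for k, c in enumerate(a))
    print(series, minus_q1(x))
```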

Theory of the Power Series Method

The nth partial sum of (1) is

$$s_n(x) = a_0 + a_1(x - x_0) + a_2(x - x_0)^2 + \cdots + a_n(x - x_0)^n \tag{6}$$

where n = 0, 1, …. If we omit the terms of sn from (1), the remaining expression is

$$R_n(x) = a_{n+1}(x - x_0)^{n+1} + a_{n+2}(x - x_0)^{n+2} + \cdots \tag{7}$$

This expression is called the remainder of (1) after the term $a_n(x - x_0)^n$.

For example, in the case of the geometric series

$$1 + x + x^2 + \cdots + x^n + \cdots$$

we have

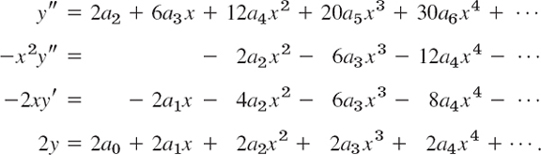

In this way we have now associated with (1) the sequence of the partial sums $s_0(x), s_1(x), s_2(x), \ldots$. If for some $x = x_1$ this sequence converges, say,

$$\lim_{n\to\infty} s_n(x_1) = s(x_1),$$

then the series (1) is called convergent at $x = x_1$, the number $s(x_1)$ is called the value or sum of (1) at $x_1$, and we write

$$s(x_1) = \sum_{m=0}^{\infty} a_m(x_1 - x_0)^m.$$

Then we have for every n,

$$s(x_1) = s_n(x_1) + R_n(x_1). \tag{8}$$

If that sequence diverges at $x = x_1$, the series (1) is called divergent at $x = x_1$.

In the case of convergence, for any positive $\epsilon$ there is an N (depending on $\epsilon$) such that, by (8),

$$|R_n(x_1)| = |s(x_1) - s_n(x_1)| < \epsilon \qquad \text{for all } n > N. \tag{9}$$

Geometrically, this means that all $s_n(x_1)$ with $n > N$ lie between $s(x_1) - \epsilon$ and $s(x_1) + \epsilon$ (Fig. 104). Practically, this means that in the case of convergence we can approximate the sum $s(x_1)$ of (1) at $x_1$ by $s_n(x_1)$ as accurately as we please, by taking n large enough.
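To make (8) and (9) concrete, the Python sketch below takes the geometric series $1 + x + x^2 + \cdots$, whose sum is $1/(1 - x)$ for $|x| < 1$, and finds by brute force the smallest n with $|s(x_1) - s_n(x_1)| < \epsilon$. Since $|R_n(x_1)| = |x_1|^{n+1}/|1 - x_1|$ decreases with n, every later partial sum stays within $\epsilon$ as well, so $N = n - 1$ works in (9). The function name and the `max_terms` guard (against an $\epsilon$ below floating-point rounding) are assumptions of this sketch.

```python
def geometric_first_good_n(x1: float, eps: float, max_terms: int = 100_000) -> int:
    """Smallest n with |s(x1) - s_n(x1)| < eps for the geometric series 1 + x + x^2 + ...

    The sum is s(x1) = 1/(1 - x1) for |x1| < 1; s_n is built term by term as in (6).
    """
    if not abs(x1) < 1:
        raise ValueError("the geometric series converges only for |x1| < 1")
    s = 1.0 / (1.0 - x1)        # the sum s(x1)
    n, s_n, term = 0, 1.0, 1.0  # s_0(x1) = 1
    while abs(s - s_n) >= eps:  # |R_n(x1)| = |s(x1) - s_n(x1)|, by (8)
        if n >= max_terms:
            raise RuntimeError("eps not reached; it may be below rounding error")
        n += 1
        term *= x1
        s_n += term
    return n


if __name__ == "__main__":
    for x1 in (0.5, 0.9, -0.9):
        print(x1, geometric_first_good_n(x1, 1e-6))
```

Closer to the endpoints of $-1 < x < 1$ many more terms are needed for the same accuracy, which is the practical meaning of "taking n large enough".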

Where does a power series converge? If we choose $x = x_0$ in (1), the series reduces to the single term $a_0$ because the other terms are zero. Hence the series converges at $x_0$. In some cases this may be the only value of x for which (1) converges. If there are other values of x for which the series converges, these values form an interval, the convergence interval. This interval may be finite, as in Fig. 105, with midpoint $x_0$. Then the series (1) converges for all x in the interior of the interval, that is, for all x for which

$$|x - x_0| < R \tag{10}$$

and diverges for |x − x0| > R. The interval may also be infinite, that is, the series may converge for all x.

Fig. 105. Convergence interval (10) of a power series with center x0

The quantity R in Fig. 105 is called the radius of convergence (because for a complex power series it is the radius of the disk of convergence). If the series converges for all x, we set $R = \infty$ (and $1/R = 0$).

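The radius of convergence can be determined from the coefficients of the series by means of each of the formulas

$$\text{(a)}\ \ R = \frac{1}{\lim_{m\to\infty}\sqrt[m]{|a_m|}}, \qquad \text{(b)}\ \ R = \frac{1}{\lim_{m\to\infty}\left|\dfrac{a_{m+1}}{a_m}\right|} \tag{11}$$

provided these limits exist and are not zero. [If these limits are infinite, then (1) converges only at the center $x_0$.]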

EXAMPLE 4 Convergence Radius R = ∞, 1, 0

For all three series let m → ∞

Convergence for all x ($R = \infty$) is the best possible case, convergence in some finite interval is the usual case, and convergence only at the center ($R = 0$) is useless.
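The ratio formula (11b) can be explored numerically. In the Python sketch below, the three series are chosen for illustration (standard cases with $R = \infty, 1, 0$; the choice is an assumption of this sketch): $\sum x^m/m!$, $\sum x^m$, and $\sum m!\,x^m$. Exact fractions make the ratios $|a_{m+1}/a_m|$ come out as $1/(m + 1)$, $1$, and $m + 1$, whose limits $0, 1, \infty$ give $R = \infty, 1, 0$ by (11b) with the convention $1/R = 0$.

```python
from fractions import Fraction
import math


def ratio_sequence(coeff, m_values):
    """Exact values |a_{m+1} / a_m| for the indices in m_values.

    By (11b), R = 1 / lim |a_{m+1} / a_m| when the limit exists (1/R = 0 means R = oo).
    """
    return [abs(coeff(m + 1) / coeff(m)) for m in m_values]


# Illustrative coefficient sequences a_m, center x0 = 0 (chosen for this sketch).
SERIES = {
    "sum x^m / m!": lambda m: Fraction(1, math.factorial(m)),  # ratios -> 0, R = oo
    "sum x^m": lambda m: Fraction(1),                          # ratios -> 1, R = 1
    "sum m! x^m": lambda m: Fraction(math.factorial(m)),       # ratios -> oo, R = 0
}

if __name__ == "__main__":
    for name, coeff in SERIES.items():
        print(name, [float(r) for r in ratio_sequence(coeff, [10, 100, 1000])])
```

A finite list of ratios only suggests the limit; the limit itself still has to be found analytically, as in the examples above.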

When do power series solutions exist? Answer: if p, q, r in the ODE

$$y'' + p(x)y' + q(x)y = r(x) \tag{12}$$

have power series representations (Taylor series). More precisely, a function f(x) is called analytic at a point $x = x_0$ if it can be represented by a power series in powers of $x - x_0$ with positive radius of convergence. Using this concept, we can state the following basic theorem, in which the ODE (12) is in standard form, that is, it begins with $y''$. If your ODE begins with, say, $h(x)y''$, divide it first by $h(x)$ and then apply the theorem to the resulting new ODE.

THEOREM 1 Existence of Power Series Solutions

If p, q, and r in (12) are analytic at x = x0, then every solution of (12) is analytic at x = x0 and can thus be represented by a power series in powers of x − x0 with radius of convergence R > 0.

The proof of this theorem requires advanced complex analysis and can be found in Ref. [A11] listed in App. 1.

We mention that the radius of convergence R in Theorem 1 is at least equal to the distance from the point x = x0 to the point (or points) closest to x0 at which one of the functions p, q, r, as functions of a complex variable, is not analytic. (Note that that point may not lie on the x-axis but somewhere in the complex plane.)

Further Theory: Operations on Power Series

In the power series method we differentiate, add, and multiply power series, and we obtain coefficient recursions (as, for instance, in Example 3) by equating the sum of the coefficients of each occurring power of x to zero. These four operations are permissible in the sense explained in what follows. Proofs can be found in Sec. 15.3.

1. Termwise Differentiation. A power series may be differentiated term by term. More precisely: if

$$y(x) = \sum_{m=0}^{\infty} a_m(x - x_0)^m$$

converges for $|x - x_0| < R$, where $R > 0$, then the series obtained by differentiating term by term also converges for those x and represents the derivative $y'$ of y for those x:

$$y'(x) = \sum_{m=1}^{\infty} ma_m(x - x_0)^{m-1} \qquad (|x - x_0| < R).$$

Similarly for the second and further derivatives.

2. Termwise Addition. Two power series may be added term by term. More precisely: if the series

$$\sum_{m=0}^{\infty} a_m(x - x_0)^m \qquad\text{and}\qquad \sum_{m=0}^{\infty} b_m(x - x_0)^m \tag{13}$$

have positive radii of convergence and their sums are f(x) and g(x), then the series

$$\sum_{m=0}^{\infty} (a_m + b_m)(x - x_0)^m$$

converges and represents f(x) + g(x) for each x that lies in the interior of the convergence interval common to each of the two given series.

3. Termwise Multiplication. Two power series may be multiplied term by term. More precisely: Suppose that the series (13) have positive radii of convergence and let f(x) and g(x) be their sums. Then the series obtained by multiplying each term of the first series by each term of the second series and collecting like powers of $x - x_0$, that is,

$$\sum_{m=0}^{\infty} (a_0b_m + a_1b_{m-1} + \cdots + a_mb_0)(x - x_0)^m = a_0b_0 + (a_0b_1 + a_1b_0)(x - x_0) + \cdots$$

converges and represents f(x)g(x) for each x in the interior of the convergence interval of each of the two given series.

4. Vanishing of All Coefficients ("Identity Theorem for Power Series"). If a power series has a positive radius of convergence and a sum that is identically zero throughout its interval of convergence, then each coefficient of the series must be zero.
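Operation 3 is the Cauchy product of the two coefficient sequences. A minimal Python sketch (the function name is illustrative) computes $c_m = a_0b_m + a_1b_{m-1} + \cdots + a_mb_0$ with exact fractions; as a check it multiplies the Maclaurin series of $e^x$ by itself, which should give the coefficients $2^m/m!$ of $e^{2x}$.

```python
from fractions import Fraction
import math


def cauchy_product(a, b):
    """Coefficients c_m = a_0 b_m + a_1 b_{m-1} + ... + a_m b_0 of the product series.

    Only m < min(len(a), len(b)) is computed, since higher c_m need more input terms.
    """
    n = min(len(a), len(b))
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(n)]


if __name__ == "__main__":
    exp_coeffs = [Fraction(1, math.factorial(m)) for m in range(8)]  # e^x
    product = cauchy_product(exp_coeffs, exp_coeffs)
    print(product)
    print(product == [Fraction(2**m, math.factorial(m)) for m in range(8)])  # e^{2x}
```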

- WRITING AND LITERATURE PROJECT. Power Series in Calculus. (a) Write a review (2–3 pages) on power series in calculus. Use your own formulations and examples—do not just copy from textbooks. No proofs. (b) Collect and arrange Maclaurin series in a systematic list that you can use for your work.

2–5 REVIEW: RADIUS OF CONVERGENCE

Determine the radius of convergence. Show the details of your work.

- 2.

- 3.

- 4.

- 5.

6–9 SERIES SOLUTIONS BY HAND

Apply the power series method. Do this by hand, not by a CAS, to get a feel for the method, e.g., why a series may terminate or contain even powers only. Show the details.

- 6. (1 + x)y′ = y

- 7. y′ = −2xy

- 8. xy′ − 3y = k (= const)

- 9. y″ + y = 0

10–14 SERIES SOLUTIONS

Find a power series solution in powers of x. Show the details.

- 10. y″ − y′ + xy = 0

- 11. y″ − y′ + x²y = 0

- 12. (1 − x²)y″ − 2xy′ + 2y = 0

- 13. y″ + (1 + x²)y = 0

- 14. y″ − 4xy′ + (4x² − 2)y = 0

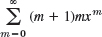

- 15. Shifting summation indices is often convenient or necessary in the power series method. Shift the index so that the power under the summation sign is xᵐ. Check by writing the first few terms explicitly.

16–19 CAS PROBLEMS. IVPs

Solve the initial value problem by a power series. Graph the partial sums of the powers up to and including x⁵. Find the value of the sum s (5 digits) at x1.

- 16. y′ + 4y = 1, y(0) = 1.25, x1 = 0.2

- 17. y″ + 3xy′ + 2y = 0, y(0) = 1, y′(0) = 1, x1 = 0.5

- 18. (1 − x²)y″ − 2xy′ + 30y = 0, y(0) = 0, y′(0) = 1.875, x1 = 0.5

- 19. (x − 2)y′ = xy, y(0) = 4, x1 = 2

- 20. CAS Experiment. Information from Graphs of Partial Sums. In numerics we use partial sums of power series. To get a feel for the accuracy for various x, experiment with sin x. Graph partial sums of the Maclaurin series of an increasing number of terms, describing qualitatively the “breakaway points” of these graphs from the graph of sin x. Consider other Maclaurin series of your choice.

Fig. 106. CAS Experiment 20. sin x and partial sums s3, s5, s7

5.2 Legendre's Equation. Legendre Polynomials Pn(x)

Legendre's differential equation¹

$$(1 - x^2)y'' - 2xy' + n(n + 1)y = 0 \tag{1}$$

is one of the most important ODEs in physics. It arises in numerous problems, particularly in boundary value problems for spheres (take a quick look at Example 1 in Sec. 12.10).

The equation involves a parameter n, whose value depends on the physical or engineering problem. So (1) is actually a whole family of ODEs. For n = 1 we solved it in Example 3 of Sec. 5.1 (look back at it). Any solution of (1) is called a Legendre function. The study of these and other “higher” functions not occurring in calculus is called the theory of special functions. Further special functions will occur in the next sections.

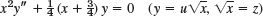

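Dividing (1) by $1 - x^2$, we obtain the standard form needed in Theorem 1 of Sec. 5.1 and we see that the coefficients $-2x/(1 - x^2)$ and $n(n + 1)/(1 - x^2)$ of the new equation are analytic at $x = 0$, so that we may apply the power series method. Substituting

$$y = \sum_{m=0}^{\infty} a_mx^m \tag{2}$$

and its derivatives into (1), and denoting the constant $n(n + 1)$ simply by k, we obtain

$$(1 - x^2)\sum_{m=2}^{\infty} m(m - 1)a_mx^{m-2} - 2x\sum_{m=1}^{\infty} ma_mx^{m-1} + k\sum_{m=0}^{\infty} a_mx^m = 0.$$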

By writing the first expression as two separate series we have the equation

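$$\sum_{m=2}^{\infty} m(m - 1)a_mx^{m-2} - \sum_{m=2}^{\infty} m(m - 1)a_mx^m - \sum_{m=1}^{\infty} 2ma_mx^m + \sum_{m=0}^{\infty} ka_mx^m = 0.$$

It may help you to write out the first few terms of each series explicitly, as in Example 3 of Sec. 5.1; or you may continue as follows. To obtain the same general power $x^s$ in all four series, set $m - 2 = s$ (thus $m = s + 2$) in the first series and simply write s instead of m in the other three series. This gives

$$\sum_{s=0}^{\infty} (s + 2)(s + 1)a_{s+2}x^s - \sum_{s=2}^{\infty} s(s - 1)a_sx^s - \sum_{s=1}^{\infty} 2sa_sx^s + \sum_{s=0}^{\infty} ka_sx^s = 0.$$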

(Note that in the first series the summation begins with s = 0.) Since this equation with the right side 0 must be an identity in x if (2) is to be a solution of (1), the sum of the coefficients of each power of x on the left must be zero. Now $x^0$ occurs in the first and fourth series only, and gives [remember that $k = n(n + 1)$]

$$2\cdot 1\,a_2 + n(n + 1)a_0 = 0. \tag{3a}$$

$x^1$ occurs in the first, third, and fourth series and gives

$$3\cdot 2\,a_3 + [-2 + n(n + 1)]a_1 = 0. \tag{3b}$$

The higher powers $x^2, x^3, \ldots$ occur in all four series and give

$$(s + 2)(s + 1)a_{s+2} + [-s(s - 1) - 2s + n(n + 1)]a_s = 0. \tag{3c}$$

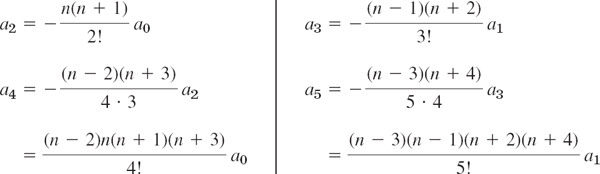

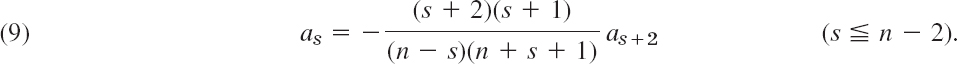

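The expression in the brackets [...] can be written $(n - s)(n + s + 1)$, as you may readily verify. Solving (3a) for $a_2$ and (3b) for $a_3$ as well as (3c) for $a_{s+2}$, we obtain the general formula

$$a_{s+2} = -\frac{(n - s)(n + s + 1)}{(s + 2)(s + 1)}\,a_s \qquad (s = 0, 1, \ldots). \tag{4}$$

This is called a recurrence relation or recursion formula. (Its derivation you may verify with your CAS.) It gives each coefficient in terms of the second one preceding it, except for $a_0$ and $a_1$, which are left as arbitrary constants. We find successively

$$\begin{aligned}
a_2 &= -\frac{n(n + 1)}{2!}\,a_0, & a_3 &= -\frac{(n - 1)(n + 2)}{3!}\,a_1, \\
a_4 &= -\frac{(n - 2)(n + 3)}{4\cdot 3}\,a_2 = \frac{(n - 2)n(n + 1)(n + 3)}{4!}\,a_0, & a_5 &= -\frac{(n - 3)(n + 4)}{5\cdot 4}\,a_3 = \frac{(n - 3)(n - 1)(n + 2)(n + 4)}{5!}\,a_1,
\end{aligned}$$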

and so on. By inserting these expressions for the coefficients into (2) we obtain

$$y(x) = a_0y_1(x) + a_1y_2(x), \tag{5}$$

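where

$$y_1(x) = 1 - \frac{n(n + 1)}{2!}x^2 + \frac{(n - 2)n(n + 1)(n + 3)}{4!}x^4 - + \cdots \tag{6}$$

$$y_2(x) = x - \frac{(n - 1)(n + 2)}{3!}x^3 + \frac{(n - 3)(n - 1)(n + 2)(n + 4)}{5!}x^5 - + \cdots \tag{7}$$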

These series converge for |x| < 1 (see Prob. 4; or they may terminate, see below). Since (6) contains even powers of x only, while (7) contains odd powers of x only, the ratio y1/y2 is not a constant, so that y1 and y2 are not proportional and are thus linearly independent solutions. Hence (5) is a general solution of (1) on the interval −1 < x < 1.
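Recursion (4) is easy to run with exact arithmetic, which is one way to verify it with software. The Python sketch below (function name illustrative) generates the coefficients of (2) from given $a_0$, $a_1$ and a parameter n, assumed to be an integer or a `Fraction` so that the arithmetic stays exact. With n = 1, $a_0 = 1$, $a_1 = 0$ it reproduces $1 - x^2 - \frac13x^4 - \frac15x^6 - \cdots$ from Example 3 of Sec. 5.1, and with n = 2 the even series breaks off after the $x^2$ term.

```python
from fractions import Fraction


def legendre_series_coeffs(n, a0, a1, n_terms):
    """a_0, ..., a_{n_terms - 1} of a solution (2) of Legendre's equation, by recursion (4).

    n should be an int or a Fraction so that the arithmetic stays exact; n_terms >= 1.
    """
    a = [Fraction(0)] * n_terms
    a[0] = Fraction(a0)
    if n_terms > 1:
        a[1] = Fraction(a1)
    for s in range(n_terms - 2):
        # (4): a_{s+2} = -(n - s)(n + s + 1) / ((s + 2)(s + 1)) * a_s
        a[s + 2] = -(n - s) * (n + s + 1) * a[s] / ((s + 2) * (s + 1))
    return a


if __name__ == "__main__":
    print(legendre_series_coeffs(1, 1, 0, 8))              # y1 for n = 1
    print(legendre_series_coeffs(2, 1, 0, 8))              # y1 for n = 2: 1 - 3x^2
    print(legendre_series_coeffs(Fraction(1, 2), 0, 1, 8))  # n = 1/2: no termination
```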

Note that $x = \pm 1$ are the points at which $1 - x^2 = 0$, so that the coefficients of the standardized ODE are no longer analytic. So it should not surprise you that we do not get a longer convergence interval of (6) and (7), unless these series terminate after finitely many powers. In that case, the series become polynomials.

Polynomial Solutions. Legendre Polynomials Pn(x)

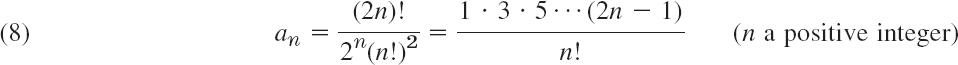

The reduction of power series to polynomials is a great advantage because then we have solutions for all x, without convergence restrictions. For special functions arising as solutions of ODEs this happens quite frequently, leading to various important families of polynomials; see Refs. [GenRef1], [GenRef10] in App. 1. For Legendre's equation this happens when the parameter n is a nonnegative integer because then the right side of (4) is zero for s = n, so that $a_{n+2} = 0$, $a_{n+4} = 0$, $a_{n+6} = 0$, …. Hence if n is even, $y_1(x)$ reduces to a polynomial of degree n. If n is odd, the same is true for $y_2(x)$. These polynomials, multiplied by some constants, are called Legendre polynomials and are denoted by $P_n(x)$. The standard choice of such constants is done as follows. We choose the coefficient $a_n$ of the highest power $x^n$ as

$$a_n = \frac{(2n)!}{2^n(n!)^2} = \frac{1\cdot 3\cdot 5\cdots(2n - 1)}{n!} \qquad (n \text{ a positive integer}) \tag{8}$$

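(and $a_n = 1$ if $n = 0$). Then we calculate the other coefficients from (4), solved for $a_s$ in terms of $a_{s+2}$, that is,

$$a_s = -\frac{(s + 2)(s + 1)}{(n - s)(n + s + 1)}\,a_{s+2} \qquad (s \le n - 2). \tag{9}$$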

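The choice (8) makes $P_n(1) = 1$ for every n (see Fig. 107); this motivates (8). From (9) with $s = n - 2$ and (8) we obtain

$$a_{n-2} = -\frac{n(n - 1)}{2(2n - 1)}\,a_n = -\frac{n(n - 1)}{2(2n - 1)}\cdot\frac{(2n)!}{2^n(n!)^2}.$$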

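Using $(2n)! = 2n(2n - 1)(2n - 2)!$ in the numerator and $n! = n(n - 1)!$ and $n! = n(n - 1)(n - 2)!$ in the denominator, we obtain

$$a_{n-2} = -\frac{n(n - 1)\,2n(2n - 1)(2n - 2)!}{2(2n - 1)\,2^n\,n(n - 1)!\,n(n - 1)(n - 2)!}.$$

$n(n - 1)\,2n(2n - 1)$ cancels, so that we get

$$a_{n-2} = -\frac{(2n - 2)!}{2^n(n - 1)!\,(n - 2)!},$$

and so on, and in general, when $n - 2m \ge 0$,

$$a_{n-2m} = (-1)^m\,\frac{(2n - 2m)!}{2^n\,m!\,(n - m)!\,(n - 2m)!}. \tag{10}$$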

The resulting solution of Legendre's differential equation (1) is called the Legendre polynomial of degree n and is denoted by $P_n(x)$.

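From (10) we obtain

$$P_n(x) = \sum_{m=0}^{M} (-1)^m\,\frac{(2n - 2m)!}{2^n\,m!\,(n - m)!\,(n - 2m)!}\,x^{n-2m} \tag{11}$$

where M = n/2 or (n − 1)/2, whichever is an integer. The first few of these functions are (Fig. 107)

$$\begin{aligned}
P_0(x) &= 1, & P_1(x) &= x, \\
P_2(x) &= \tfrac12(3x^2 - 1), & P_3(x) &= \tfrac12(5x^3 - 3x), \\
P_4(x) &= \tfrac18(35x^4 - 30x^2 + 3), & P_5(x) &= \tfrac18(63x^5 - 70x^3 + 15x)
\end{aligned} \tag{11'}$$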

and so on. You may now program (11) on your CAS and calculate Pn(x) as needed.
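One way to follow the suggestion to program (11) is sketched below in Python with exact fractions (a CAS would do as well; the function names are illustrative). The coefficients can be compared with (11′), and the sketch also confirms the normalization $P_n(1) = 1$ that motivated (8).

```python
from fractions import Fraction
from math import factorial


def legendre_poly_coeffs(n: int) -> dict[int, Fraction]:
    """Coefficients of P_n(x) by formula (11), returned as {power of x: coefficient}."""
    M = n // 2  # n/2 or (n - 1)/2, whichever is an integer
    return {
        n - 2 * m: Fraction(
            (-1) ** m * factorial(2 * n - 2 * m),
            2**n * factorial(m) * factorial(n - m) * factorial(n - 2 * m),
        )
        for m in range(M + 1)
    }


def legendre_eval(n: int, x) -> Fraction:
    """Exact value of P_n(x) for an int, Fraction, or float argument x."""
    xf = Fraction(x)
    return sum(c * xf**p for p, c in legendre_poly_coeffs(n).items())


if __name__ == "__main__":
    for n in range(6):
        print(n, legendre_poly_coeffs(n))
    print([legendre_eval(n, 1) for n in range(10)])  # normalization P_n(1) = 1
```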

Fig. 107. Legendre polynomials

The Legendre polynomials $P_n(x)$ are orthogonal on the interval $-1 \le x \le 1$, a basic property to be defined and used in making up “Fourier–Legendre series” in the chapter on Fourier series (see Secs. 11.5–11.6).

1–5 LEGENDRE POLYNOMIALS AND FUNCTIONS

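- Legendre functions for n = 0. Show that (6) with n = 0 gives $P_0(x) = 1$ and (7) gives (use $\ln(1 + x) = x - \frac12x^2 + \frac13x^3 - + \cdots$)

$$y_2(x) = x + \tfrac13x^3 + \tfrac15x^5 + \cdots = \tfrac12\ln\frac{1 + x}{1 - x}.$$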

Verify this by solving (1) with n = 0, setting z = y′ and separating variables.

- Legendre functions for n = 1. Show that (7) with n = 1 gives $y_2(x) = P_1(x) = x$ and (6) gives

$$y_1 = 1 - x^2 - \tfrac13x^4 - \tfrac15x^6 - \cdots = 1 - \tfrac12x\ln\frac{1 + x}{1 - x}.$$

- Special n. Derive (11′) from (11).

- Legendre's ODE. Verify that the polynomials in (11′) satisfy (1).

- Obtain P6 and P7.

6–9 CAS PROBLEMS

- 6. Graph $P_2(x), \ldots, P_{10}(x)$ on common axes. For what x (approximately) and n = 2, …, 10 is $|P_n(x)| < \frac12$?

- 7. From what n on will your CAS no longer produce faithful graphs of $P_n(x)$? Why?

- 8. Graph Q0(x), Q1(x), and some further Legendre functions.

- 9. Substitute $a_sx^s + a_{s+1}x^{s+1} + a_{s+2}x^{s+2}$ into Legendre's equation and obtain the coefficient recursion (4).

- 10. TEAM PROJECT. Generating Functions. Generating functions play a significant role in modern applied mathematics (see [GenRef5]). The idea is simple. If we want to study a certain sequence $(f_n(x))$ and can find a function

$$G(u, x) = \sum_{n=0}^{\infty} f_n(x)u^n,$$

we may obtain properties of $(f_n(x))$ from those of G, which “generates” this sequence and is called a generating function of the sequence.

(a) Legendre polynomials. Show that

$$G(u, x) = \frac{1}{\sqrt{1 - 2xu + u^2}} = \sum_{n=0}^{\infty} P_n(x)u^n \tag{12}$$

is a generating function of the Legendre polynomials. Hint: Start from the binomial expansion of $1/\sqrt{1 - v}$, then set $v = 2xu - u^2$, multiply the powers of $2xu - u^2$ out, collect all the terms involving $u^n$, and verify that the sum of these terms is $P_n(x)u^n$.

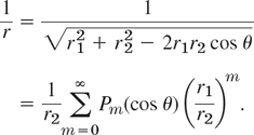

(b) Potential theory. Let $A_1$ and $A_2$ be two points in space (Fig. 108, $r_2 > 0$). Using (12), show that

$$\frac{1}{r} = \frac{1}{\sqrt{r_1^2 + r_2^2 - 2r_1r_2\cos\theta}} = \frac{1}{r_2}\sum_{m=0}^{\infty} P_m(\cos\theta)\left(\frac{r_1}{r_2}\right)^m.$$

This formula has applications in potential theory. (Q/r is the electrostatic potential at A2 due to a charge Q located at A1. And the series expresses 1/r in terms of the distances of A1 and A2 from any origin O and the angle θ between the segments OA1 and OA2.)

(c) Further applications of (12). Show that $P_n(1) = 1$, $P_n(-1) = (-1)^n$, $P_{2n+1}(0) = 0$, and $P_{2n}(0) = (-1)^n\cdot 1\cdot 3\cdots(2n - 1)/[2\cdot 4\cdots(2n)]$.

11–15 FURTHER FORMULAS

- 11. ODE. Find a solution of (a² − x²)y″ − 2xy′ + n(n + 1)y = 0, a ≠ 0, by reduction to the Legendre equation.

- 12. Rodrigues's formula.² Applying the binomial theorem to $(x^2 - 1)^n$, differentiating it n times term by term, and comparing the result with (11), show that

$$P_n(x) = \frac{1}{2^nn!}\,\frac{d^n}{dx^n}\left[(x^2 - 1)^n\right]. \tag{13}$$

- 13. Rodrigues's formula. Obtain (11′) from (13).

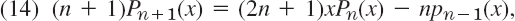

- 14. Bonnet's recursion.³ Differentiating (12) with respect to u, using (12) in the resulting formula, and comparing coefficients of $u^n$, obtain the Bonnet recursion

$$(n + 1)P_{n+1}(x) = (2n + 1)xP_n(x) - nP_{n-1}(x), \tag{14}$$

where n = 1, 2, …. This formula is useful for computations, the loss of significant digits being small (except near zeros). Try (14) out for a few computations of your own choice.

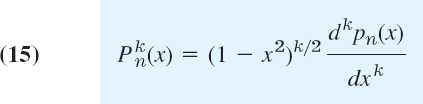

- 15. Associated Legendre functions $P_n^k(x)$ are needed, e.g., in quantum physics. They are defined by

$$P_n^k(x) = (1 - x^2)^{k/2}\,\frac{d^kP_n(x)}{dx^k} \tag{15}$$

and are solutions of the ODE

$$(1 - x^2)y'' - 2xy' + q(x)y = 0 \tag{16}$$

where $q(x) = n(n + 1) - k^2/(1 - x^2)$. Find $P_1^1(x)$, $P_2^1(x)$, $P_2^2(x)$, and $P_4^2(x)$ and verify that they satisfy (16).
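For trying out Bonnet's recursion $(n + 1)P_{n+1}(x) = (2n + 1)xP_n(x) - nP_{n-1}(x)$ of Problem 14, here is a minimal Python sketch (function name illustrative) that starts from $P_0 = 1$, $P_1 = x$ and applies the recursion in floating point. It can be compared with the explicit polynomials (11′); this is a check of the formula, not a claim about the best algorithm for large n.

```python
def legendre_bonnet(n: int, x: float) -> float:
    """P_n(x) from P_0 = 1, P_1 = x and (k + 1)P_{k+1} = (2k + 1)x P_k - k P_{k-1}."""
    if n == 0:
        return 1.0
    p_prev, p = 1.0, x  # P_0(x), P_1(x)
    for k in range(1, n):
        p_prev, p = p, ((2 * k + 1) * x * p - k * p_prev) / (k + 1)
    return p


if __name__ == "__main__":
    print([legendre_bonnet(n, 0.5) for n in range(6)])
```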

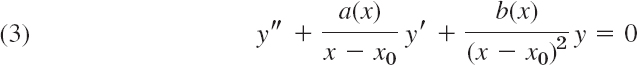

5.3 Extended Power Series Method: Frobenius Method

Several second-order ODEs of considerable practical importance—the famous Bessel equation among them—have coefficients that are not analytic (definition in Sec. 5.1), but are “not too bad,” so that these ODEs can still be solved by series (power series times a logarithm or times a fractional power of x, etc.). Indeed, the following theorem permits an extension of the power series method. The new method is called the Frobenius method.4 Both methods, that is, the power series method and the Frobenius method, have gained in significance due to the use of software in actual calculations.

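THEOREM 1 Frobenius Method

Let b(x) and c(x) be any functions that are analytic at x = 0. Then the ODE

$$y'' + \frac{b(x)}{x}\,y' + \frac{c(x)}{x^2}\,y = 0 \tag{1}$$

has at least one solution that can be represented in the form

$$y(x) = x^r\sum_{m=0}^{\infty} a_mx^m = x^r(a_0 + a_1x + a_2x^2 + \cdots) \qquad (a_0 \ne 0) \tag{2}$$

where the exponent r may be any (real or complex) number (and r is chosen so that $a_0 \ne 0$).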

The ODE (1) also has a second solution (such that these two solutions are linearly independent) that may be similar to (2) (with a different r and different coefficients) or may contain a logarithmic term. (Details in Theorem 2 below.)

For example, Bessel's equation (to be discussed in the next section)

$$y'' + \frac{1}{x}\,y' + \left(\frac{x^2 - \nu^2}{x^2}\right)y = 0 \qquad (\nu \text{ a parameter})$$

is of the form (1) with $b(x) = 1$ and $c(x) = x^2 - \nu^2$ analytic at x = 0, so that the theorem applies. This ODE could not be handled in full generality by the power series method.

Similarly, the so-called hypergeometric differential equation (see Problem Set 5.3) also requires the Frobenius method.

The point is that in (2) we have a power series times a single power of x whose exponent r is not restricted to be a nonnegative integer. (The latter restriction would make the whole expression a power series, by definition; see Sec. 5.1.)

The proof of the theorem requires advanced methods of complex analysis and can be found in Ref. [A11] listed in App. 1.

Regular and Singular Points. The following terms are practical and commonly used. A regular point of the ODE

$$y'' + p(x)y' + q(x)y = 0$$

is a point $x_0$ at which the coefficients p and q are analytic. Similarly, a regular point of the ODE

$$\tilde h(x)y'' + \tilde p(x)y' + \tilde q(x)y = 0$$

is an $x_0$ at which $\tilde h, \tilde p, \tilde q$ are analytic and $\tilde h(x_0) \ne 0$ (so that we can divide by $\tilde h$ and get the previous standard form). Then the power series method can be applied. If $x_0$ is not a regular point, it is called a singular point.

Indicial Equation, Indicating the Form of Solutions

We shall now explain the Frobenius method for solving (1). Multiplication of (1) by $x^2$ gives the more convenient form

$$x^2y'' + xb(x)y' + c(x)y = 0. \tag{1'}$$

We first expand b(x) and c(x) in power series,

$$b(x) = b_0 + b_1x + b_2x^2 + \cdots, \qquad c(x) = c_0 + c_1x + c_2x^2 + \cdots,$$

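or we do nothing if b(x) and c(x) are polynomials. Then we differentiate (2) term by term, finding

$$\begin{aligned}
y'(x) &= \sum_{m=0}^{\infty} (m + r)a_mx^{m+r-1} = x^{r-1}[ra_0 + (r + 1)a_1x + \cdots], \\
y''(x) &= \sum_{m=0}^{\infty} (m + r)(m + r - 1)a_mx^{m+r-2} = x^{r-2}[r(r - 1)a_0 + (r + 1)ra_1x + \cdots].
\end{aligned} \tag{2*}$$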

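By inserting all these series into (1′) we obtain

$$x^r[r(r - 1)a_0 + \cdots] + (b_0 + b_1x + \cdots)\,x^r(ra_0 + \cdots) + (c_0 + c_1x + \cdots)\,x^r(a_0 + a_1x + \cdots) = 0. \tag{3}$$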

We now equate the sum of the coefficients of each power $x^r, x^{r+1}, x^{r+2}, \ldots$ to zero. This yields a system of equations involving the unknown coefficients $a_m$. The smallest power is $x^r$ and the corresponding equation is

$$[r(r - 1) + b_0r + c_0]\,a_0 = 0.$$

Since by assumption $a_0 \ne 0$, the expression in the brackets [...] must be zero. This gives

$$r(r - 1) + b_0r + c_0 = 0. \tag{4}$$

This important quadratic equation is called the indicial equation of the ODE (1). Its role is as follows.

The Frobenius method yields a basis of solutions. One of the two solutions will always be of the form (2), where r is a root of (4). The other solution will be of a form indicated by the indicial equation. There are three cases:

Case 1. Distinct roots not differing by an integer 1, 2, 3, ….

Case 2. A double root.

Case 3. Roots differing by an integer 1, 2, 3, ….

Cases 1 and 2 are not unexpected because of the Euler–Cauchy equation (Sec. 2.5), the simplest ODE of the form (1). Case 1 includes complex conjugate roots $r_1$ and $r_2 = \bar r_1$ because $r_1 - r_2 = r_1 - \bar r_1 = 2i\,\mathrm{Im}\,r_1$ is imaginary, so it cannot be a real integer. The form of a basis will be given in Theorem 2 (which is proved in App. 4), without a general theory of convergence, but convergence of the occurring series can be tested in each individual case as usual. Note that in Case 2 we must have a logarithm, whereas in Case 3 we may or may not.

THEOREM 2 Frobenius Method. Basis of Solutions. Three Cases

Suppose that the ODE (1) satisfies the assumptions in Theorem 1. Let $r_1$ and $r_2$ be the roots of the indicial equation (4). Then we have the following three cases.

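Case 1. Distinct Roots Not Differing by an Integer. A basis is

$$y_1(x) = x^{r_1}(a_0 + a_1x + a_2x^2 + \cdots) \tag{5}$$

and

$$y_2(x) = x^{r_2}(A_0 + A_1x + A_2x^2 + \cdots) \tag{6}$$

with coefficients obtained successively from (3) with $r = r_1$ and $r = r_2$, respectively.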

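Case 2. Double Root $r_1 = r_2 = r$. A basis is

$$y_1(x) = x^r(a_0 + a_1x + a_2x^2 + \cdots) \qquad [r = \tfrac12(1 - b_0)] \tag{7}$$

(of the same general form as before) and

$$y_2(x) = y_1(x)\ln x + x^r(A_1x + A_2x^2 + \cdots) \qquad (x > 0). \tag{8}$$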

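Case 3. Roots Differing by an Integer. A basis is

$$y_1(x) = x^{r_1}(a_0 + a_1x + a_2x^2 + \cdots) \tag{9}$$

(of the same general form as before) and

$$y_2(x) = ky_1(x)\ln x + x^{r_2}(A_0 + A_1x + A_2x^2 + \cdots), \tag{10}$$

where the roots are so denoted that $r_1 - r_2 > 0$ and k may turn out to be zero.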
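Deciding which case of Theorem 2 applies only requires $b_0 = b(0)$ and $c_0 = c(0)$. The Python sketch below (function name and tolerance are illustrative assumptions) solves the indicial equation (4), rewritten as $r^2 + (b_0 - 1)r + c_0 = 0$, and reports Case 1, 2, or 3. Floating-point roots are compared with a tolerance, so differences that are integers up to rounding count as integers. For Bessel's equation, $b(x) = 1$ and $c(x) = x^2 - \nu^2$, so $b_0 = 1$, $c_0 = -\nu^2$ and the roots are $\pm\nu$.

```python
import cmath


def frobenius_case(b0: float, c0: float, tol: float = 1e-12):
    """Roots of the indicial equation (4), r(r - 1) + b0*r + c0 = 0, and the case of Theorem 2.

    Returns (r1, r2, case), labelled so that Re r1 >= Re r2 (as in Case 3).
    """
    # (4) rewritten as r^2 + (b0 - 1) r + c0 = 0
    sq = cmath.sqrt((b0 - 1) ** 2 - 4 * c0)
    r1 = (-(b0 - 1) + sq) / 2
    r2 = (-(b0 - 1) - sq) / 2
    if r1.real < r2.real:
        r1, r2 = r2, r1
    d = r1 - r2
    if abs(d) < tol:
        return r1, r2, 2  # double root
    if abs(d.imag) < tol and abs(d.real - round(d.real)) < tol:
        return r1, r2, 3  # roots differ by a positive integer
    return r1, r2, 1      # distinct, not differing by an integer (includes complex roots)


if __name__ == "__main__":
    # Bessel's equation: b0 = 1, c0 = -nu^2
    for nu in (1 / 3, 0.5, 0.0, 2.0):
        print(nu, frobenius_case(1.0, -nu**2))
```

Case 3 only says that a logarithm may appear; whether k in (10) is actually nonzero still has to be found from the ODE itself.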

Typical Applications

Technically, the Frobenius method is similar to the power series method, once the roots of the indicial equation have been determined. However, (5)–(10) merely indicate the general form of a basis, and a second solution can often be obtained more rapidly by reduction of order (Sec. 2.1).

EXAMPLE 1 Euler–Cauchy Equation, Illustrating Cases 1 and 2 and Case 3 without a Logarithm

For the Euler–Cauchy equation (Sec. 2.5)

$$x^2y'' + b_0xy' + c_0y = 0 \qquad (b_0, c_0 \text{ constant})$$

substitution of $y = x^r$ gives the auxiliary equation

$$r(r - 1) + b_0r + c_0 = 0,$$

which is the indicial equation [and $y = x^r$ is a very special form of (2)!]. For different roots $r_1, r_2$ we get a basis $y_1 = x^{r_1}$, $y_2 = x^{r_2}$, and for a double root r we get a basis $x^r$, $x^r\ln x$. Accordingly, for this simple ODE, Case 3 plays no extra role.

EXAMPLE 2 Illustration of Case 2 (Double Root)

Solve the ODE

$$x(x - 1)y'' + (3x - 1)y' + y = 0. \tag{11}$$

(This is a special hypergeometric equation, as we shall see in the problem set.)

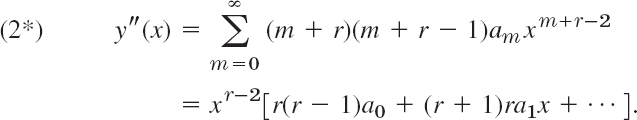

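Solution. Writing (11) in the standard form (1), we see that it satisfies the assumptions in Theorem 1. [What are b(x) and c(x) in (11)?] By inserting (2) and its derivatives (2*) into (11) we obtain

$$\sum_{m=0}^{\infty} (m + r)(m + r - 1)a_mx^{m+r} - \sum_{m=0}^{\infty} (m + r)(m + r - 1)a_mx^{m+r-1} + 3\sum_{m=0}^{\infty} (m + r)a_mx^{m+r} - \sum_{m=0}^{\infty} (m + r)a_mx^{m+r-1} + \sum_{m=0}^{\infty} a_mx^{m+r} = 0. \tag{12}$$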

The smallest power is $x^{r-1}$, occurring in the second and the fourth series; by equating the sum of its coefficients to zero we have

$$[-r(r - 1) - r]\,a_0 = 0, \qquad\text{thus}\qquad r^2 = 0.$$

Hence this indicial equation has the double root r = 0.

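First Solution. We insert this value r = 0 into (12) and equate the sum of the coefficients of the power $x^s$ to zero, obtaining

$$s(s - 1)a_s - (s + 1)sa_{s+1} + 3sa_s - (s + 1)a_{s+1} + a_s = 0,$$

that is, $(s + 1)^2(a_s - a_{s+1}) = 0$, thus $a_{s+1} = a_s$. Hence $a_0 = a_1 = a_2 = \cdots$, and by choosing $a_0 = 1$ we obtain the solution

$$y_1(x) = \sum_{m=0}^{\infty} x^m = \frac{1}{1 - x} \qquad (|x| < 1).$$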

Second Solution. We get a second independent solution $y_2$ by the method of reduction of order (Sec. 2.1), substituting $y_2 = uy_1$ and its derivatives into the equation. This leads to (9), Sec. 2.1, which we shall use in this example, instead of starting reduction of order from scratch (as we shall do in the next example). In (9) of Sec. 2.1 we have $p = (3x - 1)/(x^2 - x)$, the coefficient of $y'$ in (11) in standard form. By partial fractions,

$$-\int p\,dx = -\int\left(\frac{2}{x - 1} + \frac{1}{x}\right)dx = -2\ln|x - 1| - \ln x.$$

Hence (9), Sec. 2.1, becomes

$$u' = U = y_1^{-2}e^{-\int p\,dx} = \frac{(x - 1)^2}{(x - 1)^2x} = \frac{1}{x}, \qquad u = \ln x, \qquad y_2 = uy_1 = \frac{\ln x}{1 - x}.$$

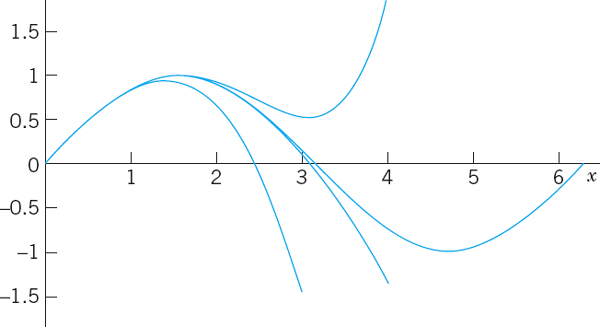

y1 and y2 are shown in Fig. 109. These functions are linearly independent and thus form a basis on the interval 0 < x < 1 (as well as on 1 < x < ∞).

Fig. 109. Solutions in Example 2

EXAMPLE 3 Case 3, Second Solution with Logarithmic Term

Solve the ODE

$$(x^2 - x)y'' - xy' + y = 0. \tag{13}$$

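Solution. Substituting (2) and (2*) into (13), we have

$$(x^2 - x)\sum_{m=0}^{\infty} (m + r)(m + r - 1)a_mx^{m+r-2} - x\sum_{m=0}^{\infty} (m + r)a_mx^{m+r-1} + \sum_{m=0}^{\infty} a_mx^{m+r} = 0.$$

We now take $x^2$, $x$, and $x$ inside the summations and collect all terms with power $x^{m+r}$ and simplify algebraically,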

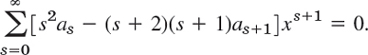

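In the first series we set m = s and in the second m = s + 1, thus s = m − 1. Then

$$\sum_{s=0}^{\infty} (s + r - 1)^2a_sx^{s+r} - \sum_{s=-1}^{\infty} (s + r + 1)(s + r)a_{s+1}x^{s+r} = 0. \tag{14}$$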

The lowest power is $x^{r-1}$ (take s = −1 in the second series) and gives the indicial equation

$$r(r - 1) = 0.$$

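The roots are $r_1 = 1$ and $r_2 = 0$. They differ by an integer. This is Case 3.

First Solution. From (14) with $r = r_1 = 1$ we have

$$\sum_{s=0}^{\infty} s^2a_sx^{s+1} - \sum_{s=-1}^{\infty} (s + 2)(s + 1)a_{s+1}x^{s+1} = 0.$$

This gives the recurrence relation

$$a_{s+1} = \frac{s^2}{(s + 2)(s + 1)}\,a_s \qquad (s = 0, 1, \ldots).$$

Hence $a_1 = 0, a_2 = 0, \ldots$ successively. Taking $a_0 = 1$, we get as a first solution $y_1 = x^{r_1}a_0 = x$.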

Second Solution. Applying reduction of order (Sec. 2.1), we substitute $y_2 = y_1u = xu$, $y_2' = xu' + u$, and $y_2'' = xu'' + 2u'$ into the ODE, obtaining

$$(x^2 - x)(xu'' + 2u') - x(xu' + u) + xu = 0.$$

$xu$ drops out. Division by x and simplification give

$$(x^2 - x)u'' + (x - 2)u' = 0.$$

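From this, using partial fractions and integrating (taking the integration constant zero), we get

$$\frac{u''}{u'} = -\frac{x - 2}{x^2 - x} = -\frac{2}{x} + \frac{1}{x - 1}, \qquad \ln u' = \ln\left|\frac{x - 1}{x^2}\right|.$$

Taking exponents and integrating (again taking the integration constant zero), we obtain

$$u' = \frac{x - 1}{x^2} = \frac{1}{x} - \frac{1}{x^2}, \qquad u = \ln x + \frac{1}{x}, \qquad y_2 = xu = x\ln x + 1.$$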

y1 and y2 are linearly independent, and y2 has a logarithmic term. Hence y1 and y2 constitute a basis of solutions for positive x.
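A quick numerical sanity check of the bases found in Examples 2 and 3 is to substitute them into the ODEs with finite-difference derivatives. The Python sketch below (helper name and step size h are illustrative choices) approximates $p_2y'' + p_1y' + p_0y$ by central differences at a few points of $0 < x < 1$; for exact solutions the result should be close to zero, up to discretization and rounding error. This is a plausibility check, not a proof.

```python
import math


def ode_residual(p2, p1, p0, y, x, h=1e-4):
    """Approximate p2(x) y''(x) + p1(x) y'(x) + p0(x) y(x) with central differences."""
    y_plus, y_mid, y_minus = y(x + h), y(x), y(x - h)
    dy = (y_plus - y_minus) / (2 * h)
    d2y = (y_plus - 2 * y_mid + y_minus) / h**2
    return p2(x) * d2y + p1(x) * dy + p0(x) * y_mid


# Example 2: x(x - 1)y'' + (3x - 1)y' + y = 0, basis on 0 < x < 1
EX2 = (lambda x: x * (x - 1), lambda x: 3 * x - 1, lambda x: 1.0)
EX2_BASIS = {"y1": lambda x: 1 / (1 - x), "y2": lambda x: math.log(x) / (1 - x)}

# Example 3: (x^2 - x)y'' - xy' + y = 0, basis for x > 0
EX3 = (lambda x: x * x - x, lambda x: -x, lambda x: 1.0)
EX3_BASIS = {"y1": lambda x: x, "y2": lambda x: x * math.log(x) + 1}

if __name__ == "__main__":
    for coeffs, basis in ((EX2, EX2_BASIS), (EX3, EX3_BASIS)):
        for name, y in basis.items():
            print(name, [ode_residual(*coeffs, y, x) for x in (0.2, 0.4, 0.6)])
```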

The Frobenius method solves the hypergeometric equation, whose solutions include many known functions as special cases (see the problem set). In the next section we use the method for solving Bessel's equation.

- WRITING PROJECT. Power Series Method and Frobenius Method. Write a report of 2–3 pages explaining the difference between the two methods. No proofs. Give simple examples of your own.

2–13 FROBENIUS METHOD

Find a basis of solutions by the Frobenius method. Try to identify the series as expansions of known functions. Show the details of your work.

- 2. (x + 2)²y″ + (x + 2)y′ − y = 0

- 3. xy″ + 2y′ + xy = 0

- 4. xy″ + y = 0

- 5. xy″ + (2x + 1)y′ + (x + 1)y = 0

- 6. xy″ + 2x³y′ + (x² − 2)y = 0

- 7. y″ + (x − 1)y = 0

- 8. xy″ + y′ − xy = 0

- 9. 2x(x − 1)y″ − (x + 1)y′ + y = 0

- 10. xy″ + 2y′ + 4xy = 0

- 11. xy″ + (2 − 2x)y′ + (x − 2)y = 0

- 12.

- 13. xy″ + (1 − 2x)y′ + (x − 1)y = 0

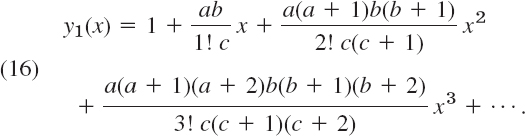

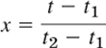

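- 14. TEAM PROJECT. Hypergeometric Equation, Series, and Function. Gauss's hypergeometric ODE⁵ is

$$x(1 - x)y'' + [c - (a + b + 1)x]y' - ab\,y = 0. \qquad (15)$$

Here, a, b, c are constants. This ODE is of the form p2y″ + p1y′ + p0y = 0, where p2, p1, p0 are polynomials of degree 2, 1, 0, respectively. These polynomials are written so that the series solution takes a most practical form, namely,

$$y_1(x) = 1 + \frac{ab}{1!\,c}x + \frac{a(a+1)b(b+1)}{2!\,c(c+1)}x^2 + \frac{a(a+1)(a+2)b(b+1)(b+2)}{3!\,c(c+1)(c+2)}x^3 + \cdots. \qquad (16)$$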

This series is called the hypergeometric series. Its sum y1(x) is called the hypergeometric function and is denoted by F(a, b, c; x). Here, c ≠ 0, −1, −2, …. By choosing specific values of a, b, c we can obtain an incredibly large number of special functions as solutions of (15) [see the small sample of elementary functions in part (c)]. This accounts for the importance of (15).

(a) Hypergeometric series and function. Show that the indicial equation of (15) has the roots r1 = 0 and r2 = 1 − c. Show that for r1 = 0 the Frobenius method gives (16). Motivate the name for (16) by showing that F(1, 1, 1; x) = F(1, b, b; x) = F(a, 1, a; x) = 1/(1 − x).

(b) Convergence. For what a or b will (16) reduce to a polynomial? Show that for any other a, b, c (c ≠ 0, −1, −2, …) the series (16) converges when |x| < 1.

(c) Special cases. Show that

Find more such relations from the literature on special functions, for instance, from [GenRef1] in App. 1.

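(d) Second solution. Show that for r2 = 1 − c the Frobenius method yields the following solution (where c ≠ 2, 3, 4, …):

$$y_2(x) = x^{1-c}\left(1 + \frac{(a-c+1)(b-c+1)}{1!\,(-c+2)}x + \frac{(a-c+1)(a-c+2)(b-c+1)(b-c+2)}{2!\,(-c+2)(-c+3)}x^2 + \cdots\right).$$

Show that

$$y_2(x) = x^{1-c}F(a - c + 1,\; b - c + 1,\; 2 - c;\; x).$$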

(e) On the generality of the hypergeometric equation. Show that

$$(t^2 + At + B)\ddot{y} + (Ct + D)\dot{y} + Ky = 0 \qquad (18)$$

with $\dot{y} = dy/dt$, etc., constant A, B, C, D, K, and t² + At + B = (t − t1)(t − t2), t1 ≠ t2, can be reduced to the hypergeometric equation with independent variable

$$x = \frac{t - t_1}{t_2 - t_1}$$

and parameters related by Ct1 + D = −c(t2 − t1), C = a + b + 1, K = ab. From this you see that (15) is a “normalized form” of the more general (18) and that various cases of (18) can thus be solved in terms of hypergeometric functions.
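For experimenting with this team project, the following Python sketch may help. It is an illustration under our own assumptions, not part of the text. `hyp2f1` (a name we chose) sums (16) by building each term from the previous one with the factor (a + n)(b + n)x/((n + 1)(c + n)). This is valid only for |x| < 1 and c ≠ 0, −1, −2, …. `hypergeometric_parameters` (also our name) solves the relations of part (e) for a, b, c. The checks use F(1, 1, 1; x) = 1/(1 − x) and the standard identity ln(1 + x) = xF(1, 1, 2; −x).

```python
import cmath
import math


def hyp2f1(a, b, c, x, tol=1e-15, max_terms=10_000):
    """Sum the hypergeometric series (16) for |x| < 1 (real a, b, c)."""
    if c <= 0 and float(c).is_integer():
        raise ValueError("c must not be 0, -1, -2, ...")
    term, total = 1.0, 1.0
    for n in range(max_terms):
        # term_{n+1} = term_n * (a + n)(b + n) / ((n + 1)(c + n)) * x
        term *= (a + n) * (b + n) / ((n + 1) * (c + n)) * x
        total += term
        if abs(term) <= tol * abs(total):  # also stops a terminating series
            break
    return total


def hypergeometric_parameters(t1, t2, C, D, K):
    """a, b, c of (15) for ODE (18) with t^2 + At + B = (t - t1)(t - t2).

    Uses C*t1 + D = -c(t2 - t1), C = a + b + 1, K = ab, so a and b are the
    roots of lam^2 - (C - 1) lam + K = 0 (returned as complex numbers)."""
    if t1 == t2:
        raise ValueError("t1 and t2 must be distinct")
    c = -(C * t1 + D) / (t2 - t1)
    root = cmath.sqrt((C - 1) ** 2 - 4 * K)
    return ((C - 1) + root) / 2, ((C - 1) - root) / 2, c


print(hyp2f1(1, 1, 1, 0.5))                          # close to 1/(1 - 0.5) = 2
print(0.3 * hyp2f1(1, 1, 2, -0.3), math.log(1.3))    # ln(1 + x) = x F(1, 1, 2; -x)
print(hypergeometric_parameters(0, 1, 3, 0.5, 1))
```

For Prob. 15, dividing by −2 gives (x² − x)y″ + (3x + ½)y′ + y = 0. This is (18) with t = x, t1 = 0, t2 = 1, C = 3, D = ½, K = 1, and the last line of the sketch then yields a = b = 1 and c = −½.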

15–20 HYPERGEOMETRIC ODE

Find a general solution in terms of hypergeometric functions.

- 15. 2x(1 − x)y″ − (1 + 6x)y′ − 2y = 0

- 16.

- 17. 4x(1 − x)y″ + y′ + 8y = 0

- 18.

- 19.

- 20.

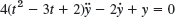

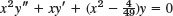

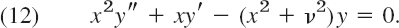

5.4 Bessel's Equation. Bessel Functions Jν(x)

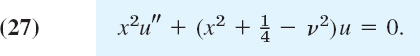

One of the most important ODEs in applied mathematics is Bessel's equation,⁶

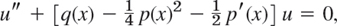

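where the parameter ν (nu) is a given real number which is positive or zero. Bessel's equation often appears if a problem shows cylindrical symmetry, for example, as for the membranes in Sec. 12.9. The equation satisfies the assumptions of Theorem 1 in Sec. 5.3. To see this, divide (1) by x² to get the standard form y″ + y′/x + (1 − ν²/x²)y = 0. Hence, according to the Frobenius theory, it has a solution of the form

$$y(x) = \sum_{m=0}^{\infty}a_m x^{m+r} \qquad (a_0 \neq 0). \qquad (2)$$

Substituting (2) and its first and second derivatives into Bessel's equation, we obtain

$$\sum_{m=0}^{\infty}(m+r)(m+r-1)a_m x^{m+r} + \sum_{m=0}^{\infty}(m+r)a_m x^{m+r} + \sum_{m=0}^{\infty}a_m x^{m+r+2} - \nu^2\sum_{m=0}^{\infty}a_m x^{m+r} = 0.$$

We equate the sum of the coefficients of $x^{s+r}$ to zero. Note that this power $x^{s+r}$ corresponds to m = s in the first, second, and fourth series, and to m = s − 2 in the third series. Hence for s = 0 and s = 1, the third series does not contribute since m ≥ 0. For s = 2, 3, … all four series contribute, so that we get a general formula for all these s. We find

$$\begin{aligned}
&\text{(a)}\quad r(r-1)a_0 + ra_0 - \nu^2 a_0 = 0 && (s = 0)\\
&\text{(b)}\quad (r+1)r\,a_1 + (r+1)a_1 - \nu^2 a_1 = 0 && (s = 1)\\
&\text{(c)}\quad (s+r)(s+r-1)a_s + (s+r)a_s + a_{s-2} - \nu^2 a_s = 0 && (s = 2, 3, \dots)
\end{aligned} \qquad (3)$$

From (3a) we obtain the indicial equation by dropping a0,

$$(r + \nu)(r - \nu) = 0. \qquad (4)$$

The roots are r1 = ν (≥ 0) and r2 = −ν.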

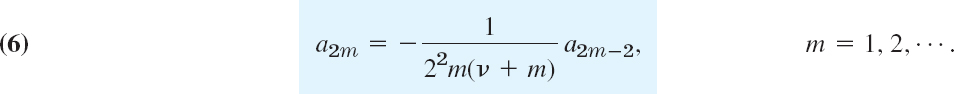

Coefficient Recursion for r = r1 = ν. For r = ν, Eq. (3b) reduces to (2ν + 1)a1 = 0. Hence a1 = 0 since ν ≥ 0. Substituting r = ν in (3c) and combining the three terms containing $a_s$ gives simply

$$(s + 2\nu)s\,a_s + a_{s-2} = 0. \qquad (5)$$

Since a1 = 0 and ν ≥ 0, it follows from (5) that a3 = 0, a5 = 0, …. Hence we have to deal only with even-numbered coefficients $a_s$ with s = 2m. For s = 2m, Eq. (5) becomes

$$(2m + 2\nu)2m\,a_{2m} + a_{2m-2} = 0.$$

Solving for a2m gives the recursion formula

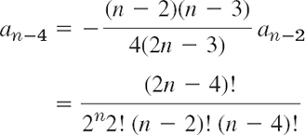

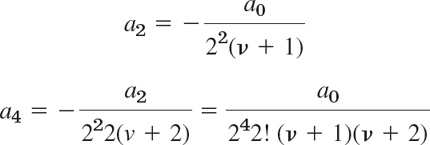

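From (6) we can now determine a2, a4, … successively. This gives

$$a_2 = -\frac{a_0}{2^2(\nu+1)}, \qquad a_4 = -\frac{a_2}{2^2\cdot 2(\nu+2)} = \frac{a_0}{2^4\,2!\,(\nu+1)(\nu+2)}$$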

and so on, and in general

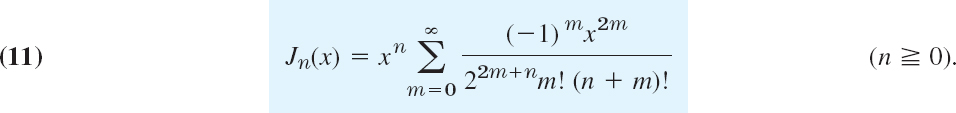

Bessel Functions Jn(x) for Integer ν = n

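Integer values of ν are denoted by n. This is standard. For ν = n the relation (7) becomes

$$a_{2m} = \frac{(-1)^m a_0}{2^{2m}m!\,(n+1)(n+2)\cdots(n+m)}, \qquad m = 1, 2, \dots. \qquad (8)$$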

a0 is still arbitrary, so that the series (2) with these coefficients would contain this arbitrary factor a0. This would be a highly impractical situation for developing formulas or computing values of this new function. Accordingly, we have to make a choice. The choice a0 = 1 would be possible. A simpler series (2) could be obtained if we could absorb the growing product (n + 1)(n + 2) … (n + m) into a factorial function (n + m)!. What should be our choice? Our choice should be

$$a_0 = \frac{1}{2^n n!} \qquad (9)$$

because then n!(n + 1) … (n + m) = (n + m)! in (8), so that (8) simply becomes

$$a_{2m} = \frac{(-1)^m}{2^{2m+n}m!\,(n+m)!}, \qquad m = 1, 2, \dots. \qquad (10)$$

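By inserting these coefficients into (2) and remembering that a1 = 0, a3 = 0, … we obtain a particular solution of Bessel's equation that is denoted by Jn(x):

$$J_n(x) = x^n\sum_{m=0}^{\infty}\frac{(-1)^m x^{2m}}{2^{2m+n}m!\,(n+m)!} \qquad (n \ge 0). \qquad (11)$$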

Jn(x) is called the Bessel function of the first kind of order n. The series (11) converges for all x, as the ratio test shows. Hence Jn(x) is defined for all x. The series converges very rapidly because of the factorials in the denominator.
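Because of the factorials, direct summation of (11) is practical for moderate |x|. Here is a minimal Python sketch under that assumption (plain floating point; `bessel_j_int` is our own name, not a library function). It builds each term from its predecessor with the factor −(x/2)²/(m(n + m)) instead of recomputing powers and factorials. For large |x|, cancellation between the alternating terms would eventually cost accuracy.

```python
import math


def bessel_j_int(n, x, tol=1e-16, max_terms=500):
    """J_n(x) for integer n >= 0 by summing series (11).

    The m-th term is (-1)^m (x/2)^(2m+n) / (m! (n+m)!); it follows from
    term m-1 by the factor -(x/2)^2 / (m (n + m))."""
    term = (x / 2) ** n / math.factorial(n)   # m = 0 term
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (n + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


print(bessel_j_int(0, 1.0), bessel_j_int(1, 1.0))
# Consistency with the relation J_2 = 2 J_1 / x - J_0 (derived later in this section):
x = 3.0
print(bessel_j_int(2, x) - (2 * bessel_j_int(1, x) / x - bessel_j_int(0, x)))
```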

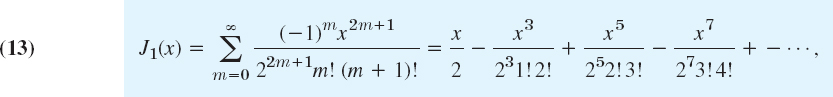

EXAMPLE 1 Bessel Functions J0(x) and J1(x)

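For n = 0 we obtain from (11) the Bessel function of order 0

$$J_0(x) = \sum_{m=0}^{\infty}\frac{(-1)^m x^{2m}}{2^{2m}(m!)^2} = 1 - \frac{x^2}{2^2(1!)^2} + \frac{x^4}{2^4(2!)^2} - \frac{x^6}{2^6(3!)^2} + - \cdots \qquad (12)$$

which looks similar to a cosine (Fig. 110). For n = 1 we obtain the Bessel function of order 1

$$J_1(x) = \sum_{m=0}^{\infty}\frac{(-1)^m x^{2m+1}}{2^{2m+1}m!\,(m+1)!} = \frac{x}{2} - \frac{x^3}{2^3\,1!\,2!} + \frac{x^5}{2^5\,2!\,3!} - \frac{x^7}{2^7\,3!\,4!} + - \cdots \qquad (13)$$

which looks similar to a sine (Fig. 110). But the zeros of these functions are not completely regularly spaced (see also Table A1 in App. 5) and the height of the “waves” decreases with increasing x. Heuristically, n²/x² in (1) in standard form [(1) divided by x²] is zero (if n = 0) or small in absolute value for large x, and so is y′/x, so that then Bessel's equation comes close to y″ + y = 0, the equation of cos x and sin x; also y′/x acts as a “damping term,” in part responsible for the decrease in height. One can show that for large x,

$$J_n(x) \sim \sqrt{\frac{2}{\pi x}}\,\cos\left(x - \frac{n\pi}{2} - \frac{\pi}{4}\right) \qquad (14)$$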

where ∼ is read “asymptotically equal” and means that for fixed n the quotient of the two sides approaches 1 as x → ∞.

Fig. 110. Bessel functions of the first kind J0 and J1

Formula (14) is surprisingly accurate even for smaller x (>0). For instance, it will give you good starting values in a computer program for the basic task of computing zeros. For example, for the first three zeros of J0 you obtain the values 2.356 (2.405 exact to 3 decimals, error 0.049), 5.498 (5.520, error 0.022), 8.639 (8.654, error 0.015), etc.
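The "starting values" idea can be sketched as follows. This is a minimal Python illustration, assuming Newton's method as the refinement step (the text does not prescribe a method) and using J0′ = −J1 (Prob. 19). The zeros of cos(x − π/4) are x = (4k − 1)π/4, which gives 2.356, 5.498, 8.639, …. The function names are ours.

```python
import math


def bessel_j_int(n, x, tol=1e-16, max_terms=500):
    """J_n(x), integer n >= 0, from series (11), terms built recursively."""
    term = (x / 2) ** n / math.factorial(n)
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (n + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


def j0_zero(k, newton_steps=6):
    """k-th positive zero of J_0.

    Start: the k-th zero (4k - 1) pi / 4 of the asymptotic form (14), n = 0.
    Refine: Newton's method x <- x - J_0/J_0' with J_0' = -J_1."""
    x = (4 * k - 1) * math.pi / 4
    for _ in range(newton_steps):
        x += bessel_j_int(0, x) / bessel_j_int(1, x)
    return x


for k in (1, 2, 3):
    start = (4 * k - 1) * math.pi / 4
    print(k, round(start, 3), round(j0_zero(k), 6))
```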

Bessel Functions Jν(x) for any ν ≥ 0. Gamma Function

We now proceed from integer ν = n to any ν ≥ 0. We had a0 = 1/(2ⁿn!) in (9). So we have to extend the factorial function to any ν ≥ 0. For this we choose

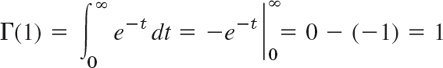

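with the gamma function Γ(ν + 1) defined by

$$\Gamma(\nu + 1) = \int_0^{\infty}e^{-t}t^{\nu}\,dt \qquad (\nu > -1). \qquad (16)$$

(CAUTION! Note the convention ν + 1 on the left but ν in the integral.) Integration by parts gives

$$\Gamma(\nu + 1) = -e^{-t}t^{\nu}\Big|_0^{\infty} + \nu\int_0^{\infty}e^{-t}t^{\nu-1}\,dt = 0 + \nu\,\Gamma(\nu).$$

This is the basic functional relation of the gamma function

$$\Gamma(\nu + 1) = \nu\,\Gamma(\nu). \qquad (17)$$

Now from (16) with ν = 0 and then by (17) we obtain

$$\Gamma(1) = \int_0^{\infty}e^{-t}\,dt = -e^{-t}\Big|_0^{\infty} = 0 - (-1) = 1$$

and then Γ(2) = 1 · Γ(1) = 1!, Γ(3) = 2Γ(2) = 2! and in general

$$\Gamma(n + 1) = n! \qquad (n = 0, 1, \dots). \qquad (18)$$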

Hence the gamma function generalizes the factorial function to arbitrary positive ν. Thus (15) with ν = n agrees with (9).

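Furthermore, from (7) with a0 given by (15) we first have

$$a_{2m} = \frac{(-1)^m}{2^{2m}m!\,(\nu+1)(\nu+2)\cdots(\nu+m)\,2^{\nu}\,\Gamma(\nu+1)}.$$

Now (17) gives (ν + 1)Γ(ν + 1) = Γ(ν + 2), (ν + 2)Γ(ν + 2) = Γ(ν + 3) and so on, so that

$$(\nu+1)(\nu+2)\cdots(\nu+m)\,\Gamma(\nu+1) = \Gamma(\nu+m+1).$$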

Hence because of our (standard!) choice (15) of a0 the coefficients (7) are simply

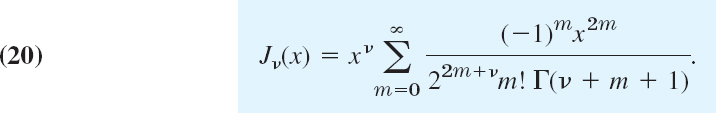

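With these coefficients and r = r1 = ν we get from (2) a particular solution of (1), denoted by Jν(x) and given by

$$J_\nu(x) = x^{\nu}\sum_{m=0}^{\infty}\frac{(-1)^m x^{2m}}{2^{2m+\nu}m!\,\Gamma(\nu+m+1)}. \qquad (20)$$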

Jν(x) is called the Bessel function of the first kind of order ν. The series (20) converges for all x, as one can verify by the ratio test.
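Series (20) can be summed the same way as (11), with the gamma function replacing the factorial in the first term. Here is a minimal Python sketch under our own assumptions: real ν ≥ 0, x > 0, moderate x, and `math.gamma` from the standard library. The name `bessel_j` is hypothetical. Each later term uses the ratio −(x/2)²/(m(ν + m)), which is (17) written as Γ(ν + m + 1)/Γ(ν + m) = ν + m. The check compares with the elementary form of J1/2 proved in Example 3 below.

```python
import math


def bessel_j(nu, x, tol=1e-16, max_terms=500):
    """J_nu(x) for real nu >= 0 and x > 0 from series (20).

    First term: (x/2)^nu / Gamma(nu + 1), i.e. a_0 x^nu with a_0 from (15).
    Later terms: multiply by -(x/2)^2 / (m (nu + m)), using (17)."""
    term = (x / 2) ** nu / math.gamma(nu + 1)
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (nu + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


x = 1.7
print(bessel_j(0.5, x), math.sqrt(2 / (math.pi * x)) * math.sin(x))
```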

Discovery of Properties from Series

Bessel functions are a model case for showing how to discover properties and relations of functions from the series by which they are defined. Bessel functions satisfy an incredibly large number of relationships (look at Ref. [A13] in App. 1; also, find out what your CAS knows). In Theorem 1 we shall discuss four formulas that are backbones in applications and theory.

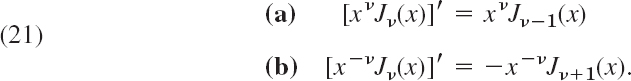

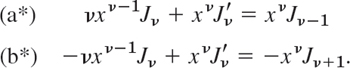

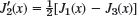

THEOREM 1 Derivatives, Recursions

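The derivative of Jν(x) with respect to x can be expressed by Jν−1(x) or Jν+1(x) by the formulas

$$[x^{\nu}J_\nu(x)]' = x^{\nu}J_{\nu-1}(x) \qquad (21a)$$

$$[x^{-\nu}J_\nu(x)]' = -x^{-\nu}J_{\nu+1}(x). \qquad (21b)$$

Furthermore, Jν(x) and its derivative satisfy the recurrence relations

$$J_{\nu-1}(x) + J_{\nu+1}(x) = \frac{2\nu}{x}J_\nu(x) \qquad (21c)$$

$$J_{\nu-1}(x) - J_{\nu+1}(x) = 2J_\nu'(x). \qquad (21d)$$

PROOF. (a) We multiply (20) by $x^{\nu}$ and take $x^{2\nu}$ under the summation sign. Then we have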

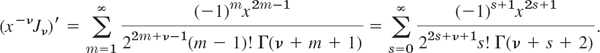

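We now differentiate this, cancel a factor 2, pull $x^{2\nu-1}$ out, and use the functional relationship Γ(ν + m + 1) = (ν + m)Γ(ν + m) [see (17)]. Then (20) with ν − 1 instead of ν shows that we obtain the right side of (21a). Indeed,

$$(x^{\nu}J_\nu)' = \sum_{m=0}^{\infty}\frac{(-1)^m\,2(m+\nu)\,x^{2m+2\nu-1}}{2^{2m+\nu}m!\,\Gamma(\nu+m+1)} = x^{\nu}x^{\nu-1}\sum_{m=0}^{\infty}\frac{(-1)^m x^{2m}}{2^{2m+\nu-1}m!\,\Gamma(\nu+m)} = x^{\nu}J_{\nu-1}(x).$$

(b) Similarly, we multiply (20) by $x^{-\nu}$, so that $x^{\nu}$ in (20) cancels. Then we differentiate, cancel 2m, and use m! = m(m − 1)!. This gives, with m = s + 1,

$$(x^{-\nu}J_\nu)' = \sum_{m=1}^{\infty}\frac{(-1)^m\,2m\,x^{2m-1}}{2^{2m+\nu}m!\,\Gamma(\nu+m+1)} = \sum_{s=0}^{\infty}\frac{(-1)^{s+1}x^{2s+1}}{2^{2s+\nu+1}s!\,\Gamma(\nu+s+2)}.$$

Equation (20) with ν + 1 instead of ν and s instead of m shows that the expression on the right is $-x^{-\nu}J_{\nu+1}(x)$. This proves (21b).

(c), (d) We perform the differentiation in (21a). Then we do the same in (21b) and multiply the result on both sides by $x^{2\nu}$. This gives

$$\text{(a*)}\quad \nu x^{\nu-1}J_\nu + x^{\nu}J_\nu' = x^{\nu}J_{\nu-1}, \qquad \text{(b*)}\quad -\nu x^{\nu-1}J_\nu + x^{\nu}J_\nu' = -x^{\nu}J_{\nu+1}.$$

Subtracting (b*) from (a*) and dividing the result by $x^{\nu}$ gives (21c). Adding (a*) and (b*) and dividing the result by $x^{\nu}$ gives (21d).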
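A numerical spot check of (21c) and (21d) can catch sign or index slips when these formulas are used. The Python sketch below is our own illustration, not the book's method. It evaluates Jν by series (20) and approximates Jν′ by a central difference with step h (an assumption of the sketch). It then returns "left side minus right side" for both relations. It requires ν ≥ 1 so that the series helper is only called with orders ≥ 0.

```python
import math


def bessel_j(nu, x, tol=1e-16, max_terms=500):
    """J_nu(x), real nu >= 0, x > 0, from series (20)."""
    term = (x / 2) ** nu / math.gamma(nu + 1)
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (nu + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


def recursion_defects(nu, x, h=1e-5):
    """(lhs - rhs) of (21c) and of (21d) at (nu, x); needs nu >= 1."""
    jm, j, jp = bessel_j(nu - 1, x), bessel_j(nu, x), bessel_j(nu + 1, x)
    dj = (bessel_j(nu, x + h) - bessel_j(nu, x - h)) / (2 * h)   # central difference
    defect_c = (jm + jp) - 2 * nu / x * j
    defect_d = (jm - jp) - 2 * dj
    return defect_c, defect_d


print(recursion_defects(2.5, 3.1))
```

The defect of (21c) should be at rounding level. The defect of (21d) is limited by the finite-difference error, roughly of order h².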

EXAMPLE 2 Application of Theorem 1 in Evaluation and Integration

Formula (21c) can be used recursively in the form

$$J_{\nu+1}(x) = \frac{2\nu}{x}J_\nu(x) - J_{\nu-1}(x)$$

for calculating Bessel functions of higher order from those of lower order. For instance, J2(x) = 2J1(x)/x − J0(x), so that J2 can be obtained from tables of J0 and J1 (in App. 5 or, more accurately, in Ref. [GenRef1] in App. 1).

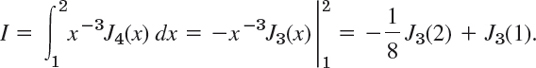

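To illustrate how Theorem 1 helps in integration, we use (21b) with ν = 3 integrated on both sides. This evaluates, for instance, the integral

$$I = \int_1^2 x^{-3}J_4(x)\,dx = -x^{-3}J_3(x)\Big|_1^2 = -\frac{1}{8}J_3(2) + J_3(1).$$

A table of J3 (on p. 398 of Ref. [GenRef1]) or your CAS will give you

$$-\frac{1}{8}\cdot 0.128943 + 0.019563 = 0.003445.$$

Your CAS (or a human computer in precomputer times) obtains J3 from (21), first using (21c) with ν = 2, that is, J3 = 4x⁻¹J2 − J1, then (21c) with ν = 1, that is, J2 = 2x⁻¹J1 − J0. Together,

$$I = x^{-3}\left(4x^{-1}\left(2x^{-1}J_1 - J_0\right) - J_1\right)\Big|_2^1 = -\frac{1}{8}J_1(2) + \frac{1}{4}J_0(2) + 7J_1(1) - 4J_0(1).$$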

This is what you get, for instance, with Maple if you type int(…). And if you type evalf (int(…)), you obtain 0.003445448, in agreement with the result near the beginning of the example.
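The same three routes can be compared without a CAS. This minimal Python sketch makes some assumptions of its own: series (11) for the Bessel values, and composite Simpson's rule as an independent numerical quadrature, which the text does not use. It computes I from J3 directly, from the J0/J1 form above, and by integrating x⁻³J4(x) over [1, 2]. The helper names are hypothetical.

```python
import math


def bessel_j_int(n, x, tol=1e-16, max_terms=500):
    """J_n(x), integer n >= 0, from series (11)."""
    term = (x / 2) ** n / math.factorial(n)
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (n + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


def simpson(f, a, b, n=200):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3


J = bessel_j_int
direct = J(3, 1.0) - J(3, 2.0) / 8                                   # (21b), nu = 3
via_j0_j1 = -J(1, 2.0) / 8 + J(0, 2.0) / 4 + 7 * J(1, 1.0) - 4 * J(0, 1.0)
quadrature = simpson(lambda x: x ** -3 * J(4, x), 1.0, 2.0)
print(direct, via_j0_j1, quadrature)
```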

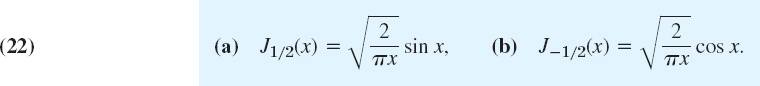

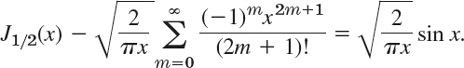

Bessel Functions Jν with Half-Integer ν Are Elementary

We discover this remarkable fact as another property obtained from the series (20) and confirm it in the problem set by using Bessel's ODE.

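EXAMPLE 3 Elementary Bessel Functions Jν with ν = ±1/2, ±3/2, ±5/2, …. The Value Γ(1/2)

We first prove (Fig. 111)

$$\text{(a)}\quad J_{1/2}(x) = \sqrt{\frac{2}{\pi x}}\,\sin x, \qquad \text{(b)}\quad J_{-1/2}(x) = \sqrt{\frac{2}{\pi x}}\,\cos x. \qquad (22)$$

The series (20) with ν = 1/2 is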

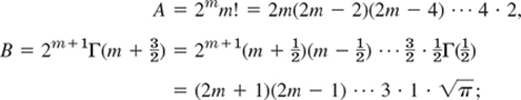

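The denominator can be written as a product AB, where (use (17) in B)

$$A = 2^m m! = 2m(2m-2)(2m-4)\cdots 4\cdot 2,$$

$$B = 2^{m+1}\Gamma\!\left(m + \tfrac{3}{2}\right) = 2^{m+1}\left(m + \tfrac{1}{2}\right)\left(m - \tfrac{1}{2}\right)\cdots\tfrac{3}{2}\cdot\tfrac{1}{2}\,\Gamma\!\left(\tfrac{1}{2}\right) = (2m+1)(2m-1)\cdots 3\cdot 1\cdot\sqrt{\pi};$$

here we used (proof below)

$$\Gamma\!\left(\tfrac{1}{2}\right) = \sqrt{\pi}. \qquad (23)$$

The product of the right sides of A and B can be written

$$AB = (2m+1)\,2m\,(2m-1)\cdots 3\cdot 2\cdot 1\cdot\sqrt{\pi} = (2m+1)!\,\sqrt{\pi}.$$

Hence

$$J_{1/2}(x) = \sqrt{\frac{2}{\pi x}}\sum_{m=0}^{\infty}\frac{(-1)^m x^{2m+1}}{(2m+1)!} = \sqrt{\frac{2}{\pi x}}\,\sin x.$$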

Fig. 111. Bessel functions J1/2 and J−1/2

This proves (22a). Differentiation and the use of (21a) with ν = 1/2 now gives

$$\left[\sqrt{x}\,J_{1/2}(x)\right]' = \sqrt{\frac{2}{\pi}}\,\cos x = x^{1/2}J_{-1/2}(x).$$

This proves (22b). From (22) follow further formulas successively by (21c), used as in Example 2.
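Here is one step of that process, sketched in Python under our own naming (hypothetical helpers, standard library only). Formula (21c) with ν = 1/2 gives J3/2 = x⁻¹J1/2 − J−1/2, that is, J3/2(x) = √(2/(πx))(sin x/x − cos x). As a check, the sketch compares this with a direct summation of series (20) for ν = 3/2.

```python
import math


def bessel_j(nu, x, tol=1e-16, max_terms=500):
    """J_nu(x), real nu >= 0, x > 0, from series (20)."""
    term = (x / 2) ** nu / math.gamma(nu + 1)
    total = term
    for m in range(1, max_terms):
        term *= -(x / 2) ** 2 / (m * (nu + m))
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


def j_half(x):
    return math.sqrt(2 / (math.pi * x)) * math.sin(x)     # (22a)


def j_minus_half(x):
    return math.sqrt(2 / (math.pi * x)) * math.cos(x)     # (22b)


def j_three_halves(x):
    # (21c) with nu = 1/2: J_{-1/2} + J_{3/2} = (1/x) J_{1/2}
    return j_half(x) / x - j_minus_half(x)


x = 2.3
print(j_three_halves(x), bessel_j(1.5, x))
```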

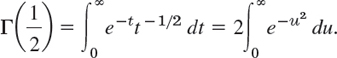

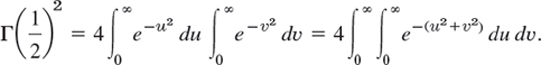

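We finally prove Γ(1/2) = √π by a standard trick worth remembering. In (16) we set t = u². Then dt = 2u du and

$$\Gamma\!\left(\tfrac{1}{2}\right) = \int_0^{\infty}e^{-t}t^{-1/2}\,dt = 2\int_0^{\infty}e^{-u^2}\,du.$$

We square on both sides, write v instead of u in the second integral, and then write the product of the integrals as a double integral:

$$\Gamma\!\left(\tfrac{1}{2}\right)^2 = 4\int_0^{\infty}e^{-u^2}\,du\int_0^{\infty}e^{-v^2}\,dv = 4\int_0^{\infty}\!\!\int_0^{\infty}e^{-(u^2+v^2)}\,du\,dv.$$

We now use polar coordinates r, θ by setting u = r cos θ, v = r sin θ. Then the element of area is du dv = r dr dθ and we have to integrate over r from 0 to ∞ and over θ from 0 to π/2 (that is, over the first quadrant of the uv-plane):

$$\Gamma\!\left(\tfrac{1}{2}\right)^2 = 4\int_0^{\pi/2}\!\!\int_0^{\infty}e^{-r^2}r\,dr\,d\theta = 4\cdot\frac{\pi}{2}\int_0^{\infty}e^{-r^2}r\,dr = 4\cdot\frac{\pi}{2}\cdot\frac{1}{2} = \pi.$$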

By taking the square root on both sides we obtain (23).
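A numerical cross-check of (23), as a minimal Python sketch. The choice of quadrature is our own assumption: Simpson's rule applied to 2∫e^(−u²) du on [0, 8]. The neglected tail beyond u = 8 is smaller than e⁻⁶⁴. The result is compared with √π and with the standard library's `math.gamma(0.5)`. The function name is hypothetical.

```python
import math


def gamma_half_by_simpson(upper=8.0, n=2000):
    """Approximate Gamma(1/2) = 2 * integral_0^inf exp(-u^2) du (after t = u^2)
    by Simpson's rule on [0, upper]; the tail beyond upper is neglected."""
    h = upper / n
    s = 1.0 + math.exp(-upper ** 2)                 # f(0) + f(upper)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * math.exp(-(i * h) ** 2)
    return 2 * s * h / 3


print(gamma_half_by_simpson(), math.gamma(0.5), math.sqrt(math.pi))
```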

General Solution. Linear Dependence

For a general solution of Bessel's equation (1) in addition to Jν we need a second linearly independent solution. For ν not an integer this is easy. Replacing ν by −ν in (20), we have

$$J_{-\nu}(x) = x^{-\nu}\sum_{m=0}^{\infty}\frac{(-1)^m x^{2m}}{2^{2m-\nu}m!\,\Gamma(m-\nu+1)}. \qquad (24)$$

Since Bessel's equation involves ν², the functions Jν and J−ν are solutions of the equation for the same ν. If ν is not an integer, they are linearly independent, because the first terms in (20) and in (24) are finite nonzero multiples of $x^{\nu}$ and $x^{-\nu}$. Thus, if ν is not an integer, a general solution of Bessel's equation for all x ≠ 0 is

$$y(x) = c_1J_\nu(x) + c_2J_{-\nu}(x).$$

This cannot be the general solution for an integer ν = n because in that case Jn and J−n are linearly dependent. For ν = n the first n coefficients in (24) vanish, so (24) no longer begins with a nonzero multiple of $x^{-n}$; in fact,

$$J_{-n}(x) = (-1)^n J_n(x) \qquad (n = 1, 2, \dots). \qquad (25)$$

To prove (25), we use (24) and let ν approach a positive integer n. Then the gamma function in the coefficients of the first n terms becomes infinite (see Fig. 553 in App. A3.1), the coefficients become zero, and the summation starts with m = n. Since in this case Γ(m − n + 1) = (m − n)! by (18), we obtain

$$J_{-n}(x) = \sum_{m=n}^{\infty}\frac{(-1)^m x^{2m-n}}{2^{2m-n}m!\,(m-n)!} = \sum_{s=0}^{\infty}\frac{(-1)^{n+s}x^{2s+n}}{2^{2s+n}(n+s)!\,s!} \qquad (m = n + s).$$

The last series represents (−1)ⁿJn(x), as you can see from (11) with m replaced by s. This completes the proof.
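The mechanism of this proof can be imitated numerically. In the Python sketch below (our own construction), the factor 1/Γ(m + ν + 1) in (20) is computed by a reciprocal-gamma helper. The helper returns 0 at the poles 0, −1, −2, …, mirroring "the coefficients become zero" above. With ν = −n the same function evaluates (24), and the output can be compared with (−1)ⁿJn. Both helper names are hypothetical, and a fixed number of terms is assumed to suffice for moderate x.

```python
import math


def rgamma(z):
    """1 / Gamma(z), with the value 0 at the poles z = 0, -1, -2, ... of Gamma."""
    if z <= 0 and float(z).is_integer():
        return 0.0
    return 1.0 / math.gamma(z)


def bessel_j_series(nu, x, n_terms=60):
    """Series (20) for x > 0 and any real nu; nu -> -nu gives (24)."""
    return sum((-1) ** m * (x / 2) ** (2 * m + nu) / math.factorial(m) * rgamma(nu + m + 1)
               for m in range(n_terms))


x = 2.7
for n in (1, 2, 3):
    print(n, bessel_j_series(-n, x), (-1) ** n * bessel_j_series(n, x))
```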

The difficulty caused by (25) will be overcome in the next section by introducing further Bessel functions, called Bessel functions of the second kind and denoted by Yν.

- Convergence. Show that the series (11) converges for all x. Why is the convergence very rapid?

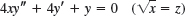

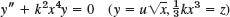

2–10 ODEs REDUCIBLE TO BESSEL'S ODE

This is just a sample of such ODEs; some more follow in the next problem set. Find a general solution in terms of Jν and J−ν or indicate when this is not possible. Use the indicated substitutions. Show the details of your work.

- 2.

- 3.

- 4.

- 5. Two-parameter ODE

x²y″ + xy′ + (λ²x² − ν²)y = 0 (λx = z)

- 6.

- 7.

- 8. (2x + 1)²y″ + 2(2x + 1)y′ + 16x(x + 1)y = 0 (2x + 1 = z)

- 9. xy″ + (2ν + 1)y′ + xy = 0 ($y = x^{-\nu}u$)

- 10. $x^2y'' + (1 - 2\nu)xy' + \nu^2(x^{2\nu} + 1 - \nu^2)y = 0$ ($y = x^{\nu}u$, $x^{\nu} = z$)

- 11. CAS EXPERIMENT. Change of Coefficient. Find and graph (on common axes) the solutions of

for k = 0, 1, 2, …, 10 (or as far as you get useful graphs). For what k do you get elementary functions? Why? Try for noninteger k, particularly between 0 and 2, to see the continuous change of the curve. Describe the change of the location of the zeros and of the extrema as k increases from 0. Can you interpret the ODE as a model in mechanics, thereby explaining your observations?

- 12. CAS EXPERIMENT. Bessel Functions for Large x.

(a) Graph Jn(x) for n = 0, …, 5 on common axes.

(b) Experiment with (14) for integer n. Using graphs, find out from which x = xn on the curves of (11) and (14) practically coincide. How does xn change with n?

(c) What happens in (b) if

? (Our usual notation in this case would be ν.)

(d) How does the error of (14) behave as a function of x for fixed n? [Error = exact value minus approximation (14).]

(e) Show from the graphs that J0(x) has extrema where J1(x) = 0. Which formula proves this? Find further relations between zeros and extrema.

13–15 ZEROS of Bessel functions play a key role in modeling (e.g. of vibrations; see Sec. 12.9).

- 13. Interlacing of zeros. Using (21) and Rolle's theorem, show that between any two consecutive positive zeros of Jn(x) there is precisely one zero of Jn+1(x).

- 14. Zeros. Compute the first four positive zeros of J0(x) and J1(x) from (14). Determine the error and comment.

- 15. Interlacing of zeros. Using (21) and Rolle's theorem, show that between any two consecutive zeros of J0(x) there is precisely one zero of J1(x).

16–18 HALF-INTEGER PARAMETER: APPROACH BY THE ODE

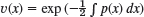

- 16. Elimination of first derivative. Show that y = uv with

$$v(x) = \exp\left(-\frac{1}{2}\int p(x)\,dx\right)$$

gives from the ODE y″ + p(x)y′ + q(x)y = 0 the ODE

$$u'' + \left[q(x) - \tfrac{1}{4}p(x)^2 - \tfrac{1}{2}p'(x)\right]u = 0,$$

not containing the first derivative of u.

- 17. Bessel's equation. Show that for (1) the substitution in Prob. 16 is $y = ux^{-1/2}$ and gives

$$x^2u'' + \left(x^2 + \tfrac{1}{4} - \nu^2\right)u = 0. \qquad (27)$$

- 18. Elementary Bessel functions. Derive (22) in Example 3 from (27).

19–25 APPLICATION OF (21): DERIVATIVES, INTEGRALS

Use the powerful formulas (21) to do Probs. 19–25. Show the details of your work.

- 19. Derivatives. Show that J′0(x) = −J1(x), J′1(x) = J0(x) − J1(x)/x,

- 20. Bessel's equation. Derive (1) from (21).

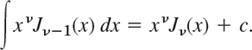

- 21. Basic integral formula. Show that

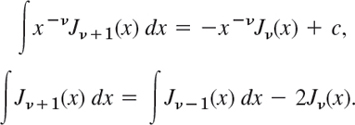

- 22. Basic integral formulas. Show that

- 23. Integration. Show that ∫x²J0(x)dx = x²J1(x) + xJ0(x) − ∫J0(x)dx. (The last integral is nonelementary; tables exist, e.g., in Ref. [A13] in App. 1.)

- 24. Integration. Evaluate ∫x⁻¹J4(x) dx.

- 25. Integration. Evaluate ∫J5(x)dx.

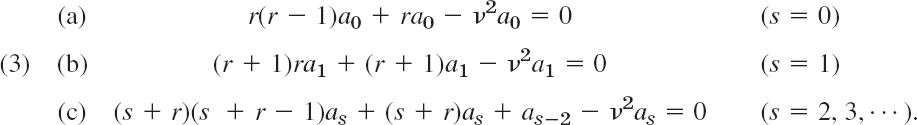

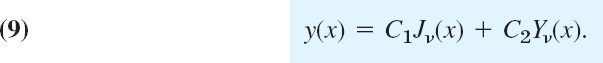

5.5 Bessel Functions Yν(x). General Solution

To obtain a general solution of Bessel's equation (1), Sec. 5.4, for any ν, we now introduce Bessel functions of the second kind Yν(x), beginning with the case ν = n = 0.

When n = 0, Bessel's equation can be written (divide by x)

$$xy'' + y' + xy = 0. \qquad (1)$$

Then the indicial equation (4) in Sec. 5.4 has a double root r = 0. This is Case 2 in Sec. 5.3. In this case we first have only one solution, J0(x). From (8) in Sec. 5.3 we see that the desired second solution must be of the form

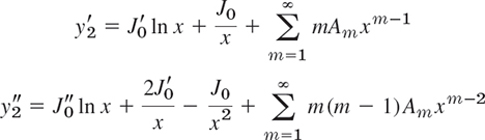

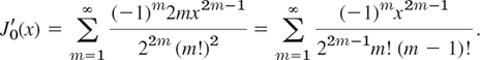

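We substitute y2 and its derivatives

$$y_2' = J_0'\ln x + \frac{J_0}{x} + \sum_{m=1}^{\infty}mA_mx^{m-1}, \qquad y_2'' = J_0''\ln x + \frac{2J_0'}{x} - \frac{J_0}{x^2} + \sum_{m=1}^{\infty}m(m-1)A_mx^{m-2}$$

into (1). Then the sum of the three logarithmic terms xJ0″ ln x, J0′ ln x, and xJ0 ln x is zero because J0 is a solution of (1). The terms −J0/x and J0/x (from xy″ and y′) cancel. Hence we are left with

$$2J_0' + \sum_{m=1}^{\infty}m(m-1)A_mx^{m-1} + \sum_{m=1}^{\infty}mA_mx^{m-1} + \sum_{m=1}^{\infty}A_mx^{m+1} = 0.$$

Addition of the first and second series gives $\sum m^2A_mx^{m-1}$. The power series of J0′(x) is obtained from (12) in Sec. 5.4 and the use of m!/m = (m − 1)! in the form

$$J_0'(x) = \sum_{m=1}^{\infty}\frac{(-1)^m\,2m\,x^{2m-1}}{2^{2m}(m!)^2} = \sum_{m=1}^{\infty}\frac{(-1)^m x^{2m-1}}{2^{2m-1}m!\,(m-1)!}.$$

Together with $\sum m^2A_mx^{m-1}$ and $\sum A_mx^{m+1}$ this gives

$$\sum_{m=1}^{\infty}\frac{(-1)^m x^{2m-1}}{2^{2m-2}m!\,(m-1)!} + \sum_{m=1}^{\infty}m^2A_mx^{m-1} + \sum_{m=1}^{\infty}A_mx^{m+1} = 0. \qquad (3^*)$$

First, we show that the Am with odd subscripts are all zero. The power x⁰ occurs only in the second series, with coefficient A1. Hence A1 = 0. Next, we consider the even powers $x^{2s}$. The first series contains none. In the second series, m − 1 = 2s gives the term $(2s+1)^2A_{2s+1}x^{2s}$. In the third series, m + 1 = 2s. Hence by equating the sum of the coefficients of $x^{2s}$ to zero we have

$$(2s+1)^2A_{2s+1} + A_{2s-1} = 0, \qquad s = 1, 2, \dots.$$

Since A1 = 0, we thus obtain A3 = 0, A5 = 0, …, successively.

We now equate the sum of the coefficients of $x^{2s+1}$ to zero. For s = 0 this gives

$$-1 + 4A_2 = 0, \qquad \text{thus} \qquad A_2 = \frac{1}{4}.$$

For the other values of s we have in the first series in (3*) 2m − 1 = 2s + 1, hence m = s + 1, in the second m − 1 = 2s + 1, and in the third m + 1 = 2s + 1. We thus obtain

$$\frac{(-1)^{s+1}}{2^{2s}(s+1)!\,s!} + (2s+2)^2A_{2s+2} + A_{2s} = 0.$$

For s = 1 this yields

$$\frac{1}{8} + 16A_4 + A_2 = 0, \qquad \text{thus} \qquad A_4 = -\frac{3}{128},$$

and in general

$$A_{2m} = \frac{(-1)^{m-1}}{2^{2m}(m!)^2}\left(1 + \frac{1}{2} + \frac{1}{3} + \cdots + \frac{1}{m}\right), \qquad m = 1, 2, \dots. \qquad (4)$$

Using the short notations

$$h_1 = 1, \qquad h_m = 1 + \frac{1}{2} + \cdots + \frac{1}{m} \qquad (m = 2, 3, \dots)$$

and inserting (4) and A1 = A3 = … = 0 into (2), we obtain the result

$$y_2(x) = J_0(x)\ln x + \sum_{m=1}^{\infty}\frac{(-1)^{m-1}h_m}{2^{2m}(m!)^2}x^{2m} = J_0(x)\ln x + \frac{1}{4}x^2 - \frac{3}{128}x^4 + \frac{11}{13824}x^6 - + \cdots. \qquad (5)$$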
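The step from the recurrence to the general formula (4) is stated without proof. It can at least be checked exactly for the first several coefficients. The Python sketch below does this with rational arithmetic (standard library `fractions`). It runs the recurrence from A2 = 1/4 and compares the result with (4). Both function names are our own.

```python
from fractions import Fraction
from math import factorial


def a_even_by_recursion(m_max):
    """A_2, A_4, ..., A_{2 m_max} from A_2 = 1/4 and, for s >= 1,
    (-1)^(s+1) / (2^(2s) (s+1)! s!) + (2s+2)^2 A_{2s+2} + A_{2s} = 0."""
    A = {2: Fraction(1, 4)}
    for s in range(1, m_max):
        first = Fraction((-1) ** (s + 1), 2 ** (2 * s) * factorial(s + 1) * factorial(s))
        A[2 * s + 2] = -(first + A[2 * s]) / (2 * s + 2) ** 2
    return A


def a_even_closed_form(m):
    """Formula (4): A_{2m} = (-1)^(m-1) h_m / (2^(2m) (m!)^2)."""
    h_m = sum(Fraction(1, k) for k in range(1, m + 1))
    return (-1) ** (m - 1) * h_m / (2 ** (2 * m) * factorial(m) ** 2)


A = a_even_by_recursion(6)
for m in range(1, 7):
    print(2 * m, A[2 * m], a_even_closed_form(m))
```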

Since J0 and y2 are linearly independent functions, they form a basis of solutions of (1) for x > 0. Of course, another basis is obtained if we replace y2 by an independent particular solution of the form a(y2 + bJ0), where a (≠ 0) and b are constants. It is customary to choose a = 2/π and b = γ − ln 2, where the number γ = 0.57721566490 … is the so-called Euler constant, which is defined as the limit of

$$1 + \frac{1}{2} + \cdots + \frac{1}{s} - \ln s$$

as s approaches infinity. The standard particular solution thus obtained is called the Bessel function of the second kind of order zero (Fig. 112) or Neumann's function of order zero and is denoted by Y0(x). Thus [see (4)]

$$Y_0(x) = \frac{2}{\pi}\left[J_0(x)\left(\ln\frac{x}{2} + \gamma\right) + \sum_{m=1}^{\infty}\frac{(-1)^{m-1}h_m}{2^{2m}(m!)^2}x^{2m}\right]. \qquad (6)$$
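A minimal Python sketch of (6), under our own assumptions: floating-point summation of a fixed number of terms for moderate x > 0, and γ hard-coded to double precision. The shared quantity x^(2m)/(2^(2m)(m!)²) feeds both the J0 series (12) and the logarithm-free sum. The slow convergence of the defining limit for γ is also shown. All names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329   # Euler's constant gamma = 0.57721566490...


def euler_gamma_estimate(s):
    """1 + 1/2 + ... + 1/s - ln s; its limit as s -> infinity is gamma."""
    return sum(1.0 / k for k in range(1, s + 1)) - math.log(s)


def bessel_y0(x, n_terms=60):
    """Y_0(x) for x > 0 from series (6).

    term = x^(2m) / (2^(2m) (m!)^2) is shared by J_0 in (12) and by the sum
    in (6); h accumulates h_m = 1 + 1/2 + ... + 1/m (h_0 = 0)."""
    j0, tail, h, term = 0.0, 0.0, 0.0, 1.0
    for m in range(n_terms):
        if m > 0:
            term *= (x / 2) ** 2 / (m * m)
            h += 1.0 / m
        j0 += (-1) ** m * term
        tail -= (-1) ** m * h * term          # adds (-1)^(m-1) h_m term
    return 2 / math.pi * (j0 * (math.log(x / 2) + EULER_GAMMA) + tail)


print(euler_gamma_estimate(100_000), EULER_GAMMA)
print(bessel_y0(1.0), bessel_y0(1e-6))        # the second value is large and negative
```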

For small x > 0 the function Y0(x) behaves about like ln x (see Fig. 112, why?), and Y0(x) → −∞ as x → 0.

Bessel Functions of the Second Kind Yn(x)

For ν = n = 1, 2, … a second solution can be obtained by manipulations similar to those for n = 0, starting from (10), Sec. 5.4. It turns out that in these cases the solution also contains a logarithmic term.

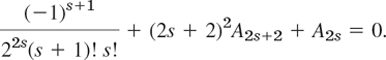

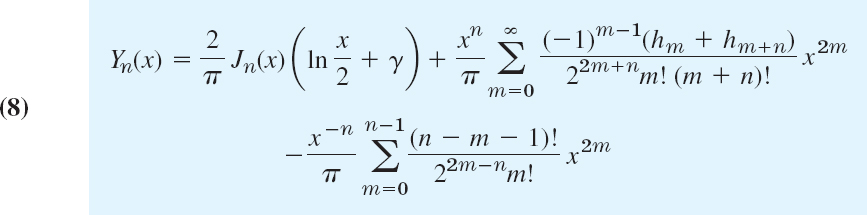

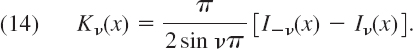

The situation is not yet completely satisfactory, because the second solution is defined differently, depending on whether the order is an integer or not. To provide uniformity of formalism, it is desirable to adopt a form of the second solution that is valid for all values of the order. For this reason we introduce a standard second solution Yν(x) defined for all ν by the formula

$$\text{(a)}\quad Y_\nu(x) = \frac{1}{\sin\nu\pi}\left[J_\nu(x)\cos\nu\pi - J_{-\nu}(x)\right], \qquad \text{(b)}\quad Y_n(x) = \lim_{\nu\to n}Y_\nu(x). \qquad (7)$$

This function is called the Bessel function of the second kind of order ν or Neumann's function⁷ of order ν. Figure 112 shows Y0(x) and Y1(x).

Let us show that Jν and Yν are indeed linearly independent for all ν (and x > 0).

For noninteger order ν, the function Yν(x) is evidently a solution of Bessel's equation because Jν(x) and J−ν(x) are solutions of that equation. Since for those ν the solutions Jν and J−ν are linearly independent and Yν involves J−ν, the functions Jν and Yν are linearly independent. Furthermore, it can be shown that the limit in (7b) exists and Yn is a solution of Bessel's equation for integer order; see Ref. [A13] in App. 1. We shall see that the series development of Yn(x) contains a logarithmic term. Hence Jn(x) and Yn(x) are linearly independent solutions of Bessel's equation. The series development of Yn(x) can be obtained if we insert the series (20) and (24) in Sec. 5.4 for Jν(x) and J−ν(x) into (7a) and then let ν approach n; for details see Ref. [A13]. The result is

$$Y_n(x) = \frac{2}{\pi}J_n(x)\left(\ln\frac{x}{2} + \gamma\right) + \frac{x^n}{\pi}\sum_{m=0}^{\infty}\frac{(-1)^{m-1}(h_m + h_{m+n})}{2^{2m+n}m!\,(m+n)!}x^{2m} - \frac{x^{-n}}{\pi}\sum_{m=0}^{n-1}\frac{(n-m-1)!}{2^{2m-n}m!}x^{2m} \qquad (8)$$

Fig. 112. Bessel functions of the second kind Y0 and Y1. (For a small table, see App. 5.)

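where x > 0, n = 0, 1, …, and [as in (4)] h0 = 0, h1 = 1,

$$h_m = 1 + \frac{1}{2} + \cdots + \frac{1}{m}, \qquad h_{m+n} = 1 + \frac{1}{2} + \cdots + \frac{1}{m+n}.$$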

For n = 0 the last sum in (8) is to be replaced by 0 [giving agreement with (6)].
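Series like (8) are easy to mistype, so a consistency check is useful. The Python sketch below (our own construction, standard library, fixed number of terms for moderate x > 0) evaluates Jn by (11) and Yn by (8). It then tests them against the standard identity J_{n+1}(x)Y_n(x) − J_n(x)Y_{n+1}(x) = 2/(πx). That identity is not derived in this section, and we use it only as an external check. The helper names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329


def harmonic(k):
    """h_k = 1 + 1/2 + ... + 1/k, with h_0 = 0."""
    return sum(1.0 / j for j in range(1, k + 1))


def bessel_j_int(n, x, n_terms=60):
    """J_n(x), integer n >= 0, from series (11)."""
    return sum((-1) ** m * (x / 2) ** (2 * m + n) / (math.factorial(m) * math.factorial(m + n))
               for m in range(n_terms))


def bessel_y_int(n, x, n_terms=60):
    """Y_n(x), integer n >= 0, x > 0, from series (8) written with powers of x/2."""
    log_part = 2 / math.pi * bessel_j_int(n, x) * (math.log(x / 2) + EULER_GAMMA)
    series = sum((-1) ** (m - 1) * (harmonic(m) + harmonic(m + n)) * (x / 2) ** (2 * m + n)
                 / (math.factorial(m) * math.factorial(m + n)) for m in range(n_terms))
    finite = sum(math.factorial(n - m - 1) * (x / 2) ** (2 * m - n) / math.factorial(m)
                 for m in range(n))                      # empty sum for n = 0
    return log_part + series / math.pi - finite / math.pi


x = 1.5
for n in (0, 1, 2):
    w = bessel_j_int(n + 1, x) * bessel_y_int(n, x) - bessel_j_int(n, x) * bessel_y_int(n + 1, x)
    print(n, w, 2 / (math.pi * x))
```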

Furthermore, it can be shown that

$$Y_{-n}(x) = (-1)^n Y_n(x).$$

Our main result may now be formulated as follows.

THEOREM 1 General Solution of Bessel's Equation

A general solution of Bessel's equation for all values of ν (and x > 0) is

$$y(x) = C_1J_\nu(x) + C_2Y_\nu(x).$$

We finally mention that there is a practical need for solutions of Bessel's equation that are complex for real values of x. For this purpose the solutions

$$H_\nu^{(1)}(x) = J_\nu(x) + iY_\nu(x), \qquad H_\nu^{(2)}(x) = J_\nu(x) - iY_\nu(x) \qquad (10)$$

are frequently used. These linearly independent functions are called Bessel functions of the third kind of order ν or first and second Hankel functions⁸ of order ν.

This finishes our discussion on Bessel functions, except for their “orthogonality,” which we explain in Sec. 11.6. Applications to vibrations follow in Sec. 12.10.

1–9 FURTHER ODEs REDUCIBLE TO BESSEL'S ODE

Find a general solution in terms of Jν and Yν. Indicate whether you could also use J−ν instead of Yν. Use the indicated substitution. Show the details of your work.

- x²y″ + xy′ + (x² − 16)y = 0

- xy″ + 5y′ + xy = 0 (y = u/x²)

- 9x²y″ + 9xy′ + (36x⁴ − 16)y = 0 (x² = z)

- xy″ − 5y′ + xy = 0 (y = x³u)

- CAS EXPERIMENT. Bessel Functions for Large x. It can be shown that for large x,

$$Y_n(x) \sim \sqrt{\frac{2}{\pi x}}\,\sin\left(x - \frac{n\pi}{2} - \frac{\pi}{4}\right) \qquad (11)$$

with ∼ defined as in (14) of Sec. 5.4.

(a) Graph Yn(x) for n = 0, …, 5 on common axes. Are there relations between zeros of one function and extrema of another? For what functions?

(b) Find out from graphs from which x = xn on the curves of (8) and (11) (both obtained from your CAS) practically coincide. How does xn change with n?

(c) Calculate the first ten zeros xm, m = 1, …, 10, of Y0(x) from your CAS and from (11). How does the error behave as m increases?

(d) Do (c) for Y1(x) and Y2(x). How do the errors compare to those in (c)?

11–15 HANKEL AND MODIFIED BESSEL FUNCTIONS

- 11. Hankel functions. Show that the Hankel functions (10) form a basis of solutions of Bessel's equation for any ν.

- 12. Modified Bessel functions of the first kind of order ν are defined by $I_\nu(x) = i^{-\nu}J_\nu(ix)$, $i = \sqrt{-1}$. Show that Iν satisfies the ODE

$$x^2y'' + xy' - (x^2 + \nu^2)y = 0. \qquad (12)$$

- 13. Modified Bessel functions. Show that Iν(x) has the representation

$$I_\nu(x) = \sum_{m=0}^{\infty}\frac{x^{2m+\nu}}{2^{2m+\nu}m!\,\Gamma(m+\nu+1)}. \qquad (13)$$

- 14. Reality of Iν. Show that Iν(x) is real for all real x (and real ν), Iν(x) ≠ 0 for all real x ≠ 0, and I−n(x) = In(x), where n is any integer.

- 15. Modified Bessel functions of the third kind (sometimes called of the second kind) are defined by the formula

$$K_\nu(x) = \frac{\pi}{2\sin\nu\pi}\left[I_{-\nu}(x) - I_\nu(x)\right]. \qquad (14)$$

Show that they satisfy the ODE (12).
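For Probs. 12–14, representation (13) can be summed in the same way as (20), but without the alternating sign. All terms are then positive, so there is no cancellation. This is a minimal Python sketch under our own assumptions (real ν ≥ 0, x > 0, hypothetical name `bessel_i`). It is checked against the standard elementary case I1/2(x) = √(2/(πx)) sinh x, the analog of (22a).

```python
import math


def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for real nu >= 0 and x > 0 from representation (13).

    Consecutive terms differ by the positive factor (x/2)^2 / (m (nu + m))."""
    term = (x / 2) ** nu / math.gamma(nu + 1)
    total = term
    for m in range(1, max_terms):
        term *= (x / 2) ** 2 / (m * (nu + m))
        total += term
        if term <= tol * total:
            break
    return total


x = 2.0
print(bessel_i(0.5, x), math.sqrt(2 / (math.pi * x)) * math.sinh(x))
```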

CHAPTER 5 REVIEW QUESTIONS AND PROBLEMS

- Why are we looking for power series solutions of ODEs?

- What is the difference between the two methods in this chapter? Why do we need two methods?

- What is the indicial equation? Why is it needed?

- List the three cases of the Frobenius method, and give examples of your own.

- Write down the most important ODEs in this chapter from memory.

- Can a power series solution reduce to a polynomial? When? Why is this important?

- What is the hypergeometric equation? Where does the name come from?

- List some properties of the Legendre polynomials.

- Why did we introduce two kinds of Bessel functions?

- Can a Bessel function reduce to an elementary function? When?

11–20 POWER SERIES METHOD OR FROBENIUS METHOD

Find a basis of solutions. Try to identify the series as expansions of known functions. Show the details of your work.

- 11. y″ + 4y = 0

- 12. xy″ + (1 − 2x)y′ + (x − 1)y = 0

- 13. (x − 1)²y″ − (x − 1)y′ − 35y = 0

- 14. 16(x + 1)²y″ + 3y = 0

- 15. x²y″ + xy′ + (x² − 5)y = 0

- 16. x²y″ + 2x³y′ + (x² − 2)y = 0

- 17. xy″ − (x + 1)y′ + y = 0

- 18. xy″ + 3y′ + 4x³y = 0

- 19.

- 20. xy″ + y′ − xy = 0

SUMMARY OF CHAPTER 5 Series Solution of ODEs. Special Functions

The power series method gives solutions of linear ODEs

$$y'' + p(x)y' + q(x)y = 0 \qquad (1)$$

with variable coefficients p and q in the form of a power series (with any center x0, e.g., x0 = 0)

$$y(x) = \sum_{m=0}^{\infty}a_m(x - x_0)^m = a_0 + a_1(x - x_0) + a_2(x - x_0)^2 + \cdots. \qquad (2)$$

Such a solution is obtained by substituting (2) and its derivatives into (1). This gives a recurrence formula for the coefficients. You may program this formula (or even obtain and graph the whole solution) on your CAS.

If p and q are analytic at x0 (that is, representable by a power series in powers of x − x0 with positive radius of convergence; Sec. 5.1), then (1) has solutions of this form (2). The same holds if $\tilde h$, $\tilde p$, $\tilde q$ in

$$\tilde h(x)y'' + \tilde p(x)y' + \tilde q(x)y = 0$$

are analytic at x0 and $\tilde h(x_0) \neq 0$, so that we can divide by $\tilde h$ and obtain the standard form (1). Legendre's equation is solved by the power series method in Sec. 5.2.

The Frobenius method (Sec. 5.3) extends the power series method to ODEs

$$y'' + \frac{a(x)}{x - x_0}y' + \frac{b(x)}{(x - x_0)^2}y = 0 \qquad (3)$$

whose coefficients are singular (i.e., not analytic) at x0, but are “not too bad,” namely, such that a and b are analytic at x0. Then (3) has at least one solution of the form

$$y(x) = (x - x_0)^r\sum_{m=0}^{\infty}a_m(x - x_0)^m = (x - x_0)^r\left[a_0 + a_1(x - x_0) + a_2(x - x_0)^2 + \cdots\right] \qquad (4)$$

where the exponent r may be any real (or even complex) number. Substituting (4) into (3) determines r from the indicial equation (Sec. 5.3), along with the coefficients of (4). A second linearly independent solution of (3) may be of a similar form (with different r and am's) or may involve a logarithmic term. Bessel's equation is solved by the Frobenius method in Secs. 5.4 and 5.5.

“Special functions” is a common name for higher functions, as opposed to the usual functions of calculus. Most of them arise either as nonelementary integrals [see (24)–(44) in App. 3.1] or as solutions of (1) or (3). They get a name and notation and are included in the usual CASs if they are important in application or in theory. Of this kind, and particularly useful to the engineer and physicist, are Legendre's equation and polynomials P0, P1, … (Sec. 5.2), Gauss's hypergeometric equation and functions F(a, b, c; x) (Sec. 5.3), and Bessel's equation and functions Jν and Yν (Secs. 5.4, 5.5).

1ADRIEN-MARIE LEGENDRE (1752–1833), French mathematician, who became a professor in Paris in 1775 and made important contributions to special functions, elliptic integrals, number theory, and the calculus of variations. His book Éléments de géométrie (1794) became very famous and had 12 editions in less than 30 years.

Formulas on Legendre functions may be found in Refs. [GenRef1] and [GenRef10].

2OLINDE RODRIGUES (1794–1851), French mathematician and economist.

3OSSIAN BONNET (1819–1892), French mathematician, whose main work was in differential geometry.

4GEORG FROBENIUS (1849–1917), German mathematician, professor at ETH Zurich and University of Berlin, student of Karl Weierstrass (see footnote, Sec. 15.5). He is also known for his work on matrices and in group theory.

In this theorem we may replace x by x − x0 with any number x0. The condition a0 ≠ 0 is no restriction; it simply means that we factor out the highest possible power of x.

The singular point of (1) at x = 0 is often called a regular singular point, a term confusing to the student, which we shall not use.

5CARL FRIEDRICH GAUSS (1777–1855), great German mathematician. He already made the first of his great discoveries as a student at Helmstedt and Göttingen. In 1807 he became a professor and director of the Observatory at Göttingen. His work was of basic importance in algebra, number theory, differential equations, differential geometry, non-Euclidean geometry, complex analysis, numeric analysis, astronomy, geodesy, electromagnetism, and theoretical mechanics. He also paved the way for a general and systematic use of complex numbers.

6FRIEDRICH WILHELM BESSEL (1784–1846), German astronomer and mathematician, studied astronomy on his own in his spare time as an apprentice of a trade company and finally became director of the new Königsberg Observatory.

Formulas on Bessel functions are contained in Ref. [GenRef10] and the standard treatise [A13].

7 CARL NEUMANN (1832–1925), German mathematician and physicist. His work on potential theory using integral equation methods inspired VITO VOLTERRA (1860–1940) of Rome, ERIK IVAR FREDHOLM (1866–1927) of Stockholm, and DAVID HILBERT (1862–1943) of Göttingen (see the footnote in Sec. 7.9) to develop the field of integral equations. For details see Birkhoff, G. and E. Kreyszig, The Establishment of Functional Analysis, Historia Mathematica 11 (1984), pp. 258–321.

The solutions Yν(x) are sometimes denoted by Nν(x); in Ref. [A13] they are called Weber's functions; Euler's constant in (6) is often denoted by C or ln γ.