Chapter 17

Running SQL Server in a Virtual Environment

WHAT’S IN THIS CHAPTER?

- Why virtualize a server?

- Common virtualization products

- Virtualization concepts

- Extended features of virtualization

- Managing contention

- Identifying candidates for virtualization

- Architecting successful virtual database servers

- Monitoring virtualized database servers

WROX.COM CODE DOWNLOADS FOR THIS CHAPTER

The wrox.com code downloads for this chapter are found at www.wrox.com/remtitle.cgi?isbn=1118177657 on the Download Code tab. The code is in the Chapter 17 download and individually named according to the names throughout the chapter.

THE SHIFT TO SERVER VIRTUALIZATION

Of all the innovations in server technology over the last 10 years, in my view virtualization has had the biggest impact, and made the biggest improvements, to server computing. Although 64-bit architectures, multi-core processors, and solid-state drives have revolutionized their niches of the industry, only virtualization has fundamentally changed the way we can choose to deploy, manage, and protect server workloads.

Today, it’s likely that the IT environments you use have virtualized servers in them. While a few years ago these servers might have run only smaller workloads such as domain controllers and print servers, the capability of today’s virtualization technology means you are increasingly likely to find mission-critical servers with high workloads, such as database servers, being virtualized as well.

This chapter’s aim is to review the concepts of virtualization technology, some of the products that run virtualized servers, their features, benefits and some of their limitations.

Finally, we’ll consider how you can deploy SQL Server 2012 successfully in a virtual environment and monitor it post go-live.

Where later parts of the chapter show examples of server virtualization being used, Microsoft’s Hyper-V feature has been used; this is because its terminology and interface will be more familiar to SQL Server professionals who are new to server virtualization, and an evaluation of Windows Server, which provides access to it, is available as a download from Microsoft.

AN OVERVIEW OF VIRTUALIZATION

A typical textbook definition of virtualization describes it as sharing a single physical resource between multiple isolated processes by presenting each with its own virtual version of the physical resource. For example, several virtualized instances of Windows can run concurrently on a single physical server, each believing it has exclusive access to the server’s hardware. One of the many benefits of doing this is to increase the physical server’s overall utilization, therefore increasing the value the physical server delivers.

A simple real-world example of deploying virtualization is to have a single physical server hosting four virtual servers.

Let’s assume that the physical server has eight CPU cores, 16GB of memory, and the necessary virtualization software to run virtual servers installed on it.

In our example, four virtual servers can then be created by the virtualization software and each configured to have four virtual CPUs and 3GB of memory.

By default, none of the virtual servers are aware of each other, let alone that they are sharing the physical server’s hardware between them — nor would they know in our example that each physical CPU core has potentially been allocated twice (8 physical cores but 16 virtual CPUs allocated).

When the four virtual servers are running concurrently, the virtualization software manages access to the physical server’s resources on an “as and when needed” basis.

In a well-configured environment, we could expect the person who configured the virtual servers to know that no more than two of them would ever need to use all of their CPU resources at any one time. Therefore, the physical host should always be able to satisfy requests by the virtual servers to use all of their allocated CPU resources without having to introduce any significant scheduling overhead.

In a badly configured environment, there might be a need for three virtual servers to use all of their allocated CPU resources at the same time. It’s when this happens that performance could begin to degrade for each of the virtual servers, as the virtualization software has to start scheduling access to the physical server’s resources; a quart has to be made out of a pint pot!
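The arithmetic behind this example can be sketched in a few lines of Python. This is only an illustration of the reasoning, not how any hypervisor schedules CPU time; the class and function names are hypothetical, and the figures are those used in the example (8 physical cores, four virtual servers with four virtual CPUs each).

```python
from dataclasses import dataclass


@dataclass
class VirtualServer:
    name: str
    virtual_cpus: int


def overcommit_ratio(physical_cores: int, servers: list[VirtualServer]) -> float:
    """Virtual CPUs allocated per physical core across all virtual servers."""
    return sum(s.virtual_cpus for s in servers) / physical_cores


def is_contended(physical_cores: int, busy_servers: list[VirtualServer]) -> bool:
    """True when the servers that are fully busy want more cores than exist."""
    return sum(s.virtual_cpus for s in busy_servers) > physical_cores


# Figures from the example: 8 physical cores, four 4-vCPU virtual servers.
PHYSICAL_CORES = 8
servers = [VirtualServer(f"vm{i}", virtual_cpus=4) for i in range(1, 5)]

print(overcommit_ratio(PHYSICAL_CORES, servers))     # each core allocated twice
print(is_contended(PHYSICAL_CORES, servers[:2]))     # two busy servers fit
print(is_contended(PHYSICAL_CORES, servers[:3]))     # three busy servers do not
```

With two virtual servers fully busy, the demand of 8 virtual CPUs matches the 8 physical cores; a third busy server pushes demand to 12, which is the point at which the hypervisor has to start scheduling access.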

However, as you can probably already see, if the virtual server workloads in this example were correctly sized and their workloads managed, then a significant amount of data center space, power, cooling, server hardware, CPUs, and memory can be saved by deploying one rather than four physical servers.

This “deploy only what you actually need” approach provided by virtualization explains why the technology moved so quickly from being deployed in the development lab to enterprise data centers. In fact, other than smartphone technology, it’s hard to find another technological innovation in recent years that has been adopted as widely and rapidly as virtualization has.

This rapid adoption is highly justifiable; virtualization brought IT departments an efficient data center with levels of flexibility, manageability, and cost reduction that they desperately needed, especially during the server boom of the mid-2000s and then the recession of the late 2000s. Moreover, once virtualization is deployed and the benefits of replacing old servers with fewer new servers are realized, the technology then goes on to deliver more infrastructure functionality — and interestingly, functionality that wasn’t available with traditional physical servers.

Indeed, it’s rare to find a SQL Server environment now which doesn’t use virtualization technologies in some way. In larger environments, companies might only be deploying it on developer workstations or in the pre-production environment; but increasingly I am finding small, mid-size, and even large infrastructures that are hosting their entire production environment in a virtualized manner.

History of Virtualization

The concepts of the virtualization technology that people are deploying today are nothing new, and you can actually trace them back to IBM’s mainframe hardware from the 1960s! At the time, mainframe hardware was very expensive, and customers wanted every piece of hardware they bought to be working at its highest capacity all of the time in order to justify its huge cost. The architecture IBM used partitioned a physical mainframe into several smaller logical mainframes that could each run an application seemingly concurrently. The cost saving came from each logical mainframe only ever needing to use a portion of the mainframe’s total capacity. While hardware costs would not have decreased, utilization increased, and therefore so did value, pleasing the finance director.

During the 1980s and 1990s, PC-based systems gained in popularity; and as they were considerably cheaper than mainframes and minicomputers, the use of virtualization disappeared from the technology stack for a while. However, in the late 1990s, VMware, a virtualization software vendor, developed an x86-based virtualization solution that enabled a single PC to run several operating system environments installed on it concurrently. I remember the first time I saw this running and was completely baffled! A backup engineer had a laptop running both Windows and Linux on it; from within Windows you could watch the virtual server boot with its own BIOS and then start up another operating system. At the time, very few people knew much about the Linux operating system, especially me, so the idea of running it on a Windows laptop looked even more surreal!

This example was a typical use of VMware’s original software in the late 1990s and early 2000s, and for a few years, this was how their small but growing customer base used their technology. It was only a few years later that a version of their virtualization software hosted on its own Linux-based operating system was released and data center hosted server-based virtualization solutions began appearing.

Fundamentally, this server-based virtualization software is the basis of the platform virtualization solutions we use today in the biggest and smallest server environments.

The Breadth of Virtualization

When we talk about virtualization today, it is mostly in terms of physical servers, virtual servers, and the virtualization software known as a hypervisor, all terms this chapter defines later. However, your data center has probably had virtualization in it in some form for a long time, for the reasons we mentioned earlier — to help increase the utilization of expensive and typically underused physical hardware assets.

Today, most Storage Area Network (SAN) hardware uses virtualization internally to abstract the storage partitions it presents to a server from its physical components, such as the hard drives of different speeds it might use internally to store data.

While a system administrator will see an amount of usable storage on a storage partition the SAN creates for them, the exact configuration of the physical disks that store the data is hidden, or abstracted, from them by a virtualization layer within the SAN.

This can be a benefit for system administrators, allowing them to quickly deploy new storage while the SAN takes care of the underlying technical settings. For example, modern SANs will choose to store the most regularly used data on fast disks and the less frequently used data on slower disks. The data accessed most frequently might change over time, but by using virtualization the SAN can redistribute the data based on historic usage patterns to optimize its performance without the system administrator knowing.

Of course, this may not always be appropriate; a DBA might ask for storage with consistent performance metrics. But as with all virtualization technologies, once the product’s options and limitations are known, an optimized configuration can be used.
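The tiering behavior described above can be illustrated with a toy model. Real SANs use vendor-specific algorithms that I’m not attempting to reproduce; the function name, the block labels, and the idea of measuring the fast tier’s capacity in whole blocks are all assumptions made for this sketch.

```python
def assign_tiers(access_counts: dict[str, int], fast_capacity: int) -> dict[str, str]:
    """Place the most frequently accessed blocks on the fast tier.

    access_counts maps a block label to how often it was read recently;
    fast_capacity is how many blocks the fast disks can hold.
    """
    # Rank by access count (highest first); break ties by label for stability.
    ranked = sorted(access_counts, key=lambda block: (-access_counts[block], block))
    fast_blocks = set(ranked[:fast_capacity])
    return {
        block: ("fast" if block in fast_blocks else "slow")
        for block in access_counts
    }


# Usage pattern last month: blocks A and C are the busiest.
last_month = {"A": 900, "B": 15, "C": 300, "D": 2}
print(assign_tiers(last_month, fast_capacity=2))

# Usage pattern this month: B has become busy and A has gone quiet,
# so the same call now moves B onto the fast tier in place of A.
this_month = {"A": 5, "B": 700, "C": 300, "D": 2}
print(assign_tiers(this_month, fast_capacity=2))
```

The server only ever sees blocks A to D; which physical disks hold them changes underneath it, which is exactly why a DBA who needs predictable latency may want this behavior constrained.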

Cisco and other network vendors also use virtualization in their network hardware. You may wonder how a collection of network cables and switches could benefit from virtualization, but the concept of virtual LANs (VLANs) enables multiple logical networks to be transmitted over a common set of cables, NICs, and switches, removing the potential for duplicated network hardware.

Finally, believe it or not, SQL Server still uses memory virtualization concepts that date back to the Windows 3.1 era! Windows 3.1 introduced the concepts of virtual memory and virtual address spaces, and as discussed in Chapter 3 of this book, they are still core to the Windows memory management architecture that SQL Server uses today. By presenting each Windows application with its own virtual memory address space, Windows (rather than the application) manages the actual assignment of physical memory to applications. This is still a type of virtualization where multiple isolated processes concurrently access a shared physical resource to increase its overall utilization.

Platform Virtualization

Having looked at the background of virtualization and some of the reasons to use it, this section clarifies what the term platform virtualization means, as it’s the focus for the rest of this chapter.

Platform virtualization is a type of hardware virtualization whereby a single physical server can concurrently run multiple virtual servers, each with its own independent operating system, application environment, IP address, applications, and so on.

Each virtual server believes and appears to be running on a traditional physical server, with full access to all of the CPU, memory, and storage resources allocated to it by the system administrator. More importantly, in order for virtualization technology to work, the virtual server’s operating system software can use the same hardware registers and calls, and memory address space, which it would use if it were running on a dedicated physical server. This allows software to run on a virtual, rather than physical, server without being recompiled for a different type of hardware architecture.

Cloud Computing

It’s almost impossible to read technology news these days without seeing references to cloud computing, and more commonly private clouds and public clouds. One of the advantages of cloud computing is that new servers can be deployed very quickly, literally in just minutes, and to do this they use platform virtualization. While this chapter won’t go into how cloud computing works, it’s worth remembering that at the technology layer, virtualization is a key enabler of this technology revolution.

Private Clouds

In summary, private clouds are usually a large and centrally managed virtualization environment deployed on-premise, typically in your data center. The virtualization management software they use often has management features added that allow end users to provision their own new servers through web portals, and for the dynamic allocation of resources between virtual servers. A key benefit for businesses too is the ability to deploy usage-based charging models that allow individual business departments or users to be charged for their actual usage of a virtual server, as well as allowing more self-service administration of server infrastructures.

Public Clouds

Public clouds, more often referred to as just cloud computing, are very similar to private clouds but are hosted in an Internet-connected data center that is owned and managed by a service provider rather than an internal IT department. They allow users from anywhere in the world to deploy servers or services through non-technical interfaces such as a web portal, with no regard for the underlying physical hardware needed to provide them. Microsoft’s Windows Azure service is an example of a cloud computing service.

WHY VIRTUALIZE A SERVER?

“Why would you want to virtualize a server?” is a question I surprisingly still hear, particularly from people with no experience of having used virtualization technology. A typical follow-on comment is often “I’ve heard you can’t virtualize database servers.”

A few years ago, that question and comment were probably worth asking when IT teams were discussing virtualization of servers running SQL Server. SQL Server is a resource hungry application that needs particularly large amounts of memory and fast storage to process big workloads, and a few years ago, virtualization technology sometimes struggled to deliver those resources. As an example, some of the ways virtualization software presented storage to a virtual server meant it was inherently slow, and some virtualization software architecture meant it could only assign relatively low amounts of memory to a virtual server. Because of these issues, it was quite a few years before organizations I worked in considered mixing SQL Server with virtualization.

However, these technical limitations quickly disappeared, so the pace of adoption increased, justified by benefits that business and technical teams couldn’t ignore any longer. The following sections describe the main benefits of using virtual servers:

Business Benefits

Selling the idea of virtualization to a business is easy; in fact, it’s too easy. Even worse, I’ve had finance directors tell me that I can design only virtualized infrastructures for them regardless of what the IT teams want or, more worryingly, need! From a business perspective, the major driver for using virtualization is obviously cost reduction. While the cost of physical servers has dropped over time, the number we need has increased, and increased quite quickly too. Today, even a relatively small business requires several servers to deploy products such as Microsoft’s SharePoint Server or Exchange Server, with each server performing perhaps a compartmentalized role or high-availability function. Therefore, even though servers became more powerful, their “average utilization” dropped, often to very low values. For example, I’m willing to bet that if you checked one of your domain controllers, its average CPU utilization would consistently be under 30%. That means at least 70% of its CPU capacity could be used for something else.

Therefore, it was no surprise when even systems administrators, IT managers, and CIOs started to question why they had 10 servers running at 10% utilization and not 1 running at 100%. The potential cost savings, often described by businesses as the savings from consolidation, can be realized with virtualization by migrating from multiple underutilized servers to a single well-utilized server. In addition to cost savings, other benefits of consolidation can have a big impact on a business too. For example, at one company where I worked, we virtualized a lot of older servers because the facilities department couldn’t get any more power or cooling into a data center.

In reality, the savings aren’t as straightforward as the 10 times 10% utilization example, but it does demonstrate why both business teams and technical teams began taking a big interest in virtualization.
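One reason the real savings are less straightforward is that nobody wants to run a consolidated host at 100%. A rough sizing sketch, under some loud assumptions, might look like this: every server (old and new) is treated as having identical capacity, only average CPU utilization is considered, and the 70% ceiling is an arbitrary illustrative figure rather than a recommendation. The function name is hypothetical.

```python
import math


def hosts_needed(utilization_pct: list[int], target_pct: int = 70) -> int:
    """Estimate how many equally sized hosts are needed after consolidation.

    utilization_pct holds each existing server's average CPU utilization
    as a whole percentage; target_pct is the ceiling allowed on each
    consolidated host, leaving the remainder as headroom for peaks.
    """
    if not 0 < target_pct <= 100:
        raise ValueError("target_pct must be between 1 and 100")
    total_pct = sum(utilization_pct)
    return max(1, math.ceil(total_pct / target_pct))


ten_quiet_servers = [10] * 10

# The naive view: ten servers at 10% fit on one server at 100%.
print(hosts_needed(ten_quiet_servers, target_pct=100))

# Allowing headroom for peaks, the same workloads need a second host.
print(hosts_needed(ten_quiet_servers, target_pct=70))
```

Even with headroom, going from ten physical servers to two is still a substantial saving, which is why the simple example was persuasive to both business and technical teams.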

Technical Benefits

For IT teams, adopting virtualization has also meant needing to learn new skills and technologies while changing the way they’ve always worked to some degree. However, despite these costs, IT teams across the world have embraced and deployed virtualization solutions even though it likely represented the biggest change in their way of working for a generation. This section looks at the benefits that drove this adoption.

One of the main benefits comes from consolidation. Before virtualization was available, data centers had stacks of servers hosting lightweight roles, such as domain controllers, file servers, and small database servers. Each of these functions had to either share a physical server and operating system with another function or have its own dedicated physical server deployed in a rack. Now, using virtualization we can potentially deploy dozens of these low-utilization functions on a single physical server, but still give each its own operating system environment to use. Consequently, server hardware expenditure decreases, but also equally and perhaps more importantly, so do power, cooling, and space costs.

Another technical benefit comes from how virtual servers are allocated resources, such as memory and CPU. In the virtual world, providing sufficient physical server resources are available, creating a new virtual server is purely a software operation. When someone wants a new server deployed, no one would need to install any physical memory, storage, or CPU hardware, let alone a completely new physical server.

Likewise, an existing virtual server can have additional resources such as extra CPUs or memory allocated to it at the click of a mouse, providing the physical host server has the capacity; the next time the virtual server reboots, it will see and be able to use the additional resources.

Both deploying a new virtual server and allocating additional resources can be done in seconds, drastically increasing the flexibility of the server environment to react to planned and unplanned workloads.

Encapsulation

The final technical advantage we’ll discuss is a benefit of something virtualization does called encapsulation. Despite how they appear to the operating system and applications running within the virtual server, when virtual servers are created, their data is stored as a set of flat files held on a file system; therefore, it can be said that the virtual server is “encapsulated” into a small set of files. By storing these flat files on shared storage, such as a SAN, the virtual servers can be “run” by any physical server that has access to the storage. This increases the level of availability in a virtual environment, as the virtual servers in it do not depend on the availability of a specific physical server in order to be used.

This is one of the biggest post-consolidation benefits of virtualization for IT teams because it enables proactive features to protect against server hardware failure, regardless of what level of high availability support the virtual server’s operating system or application has; these features are discussed further in the “Virtualization Concepts” section. This type of feature won’t usually protect against an operating system or database server crashing, but it can react to the physical server the virtual server was running on unexpectedly going offline.

This level of protection does incur some downtime however, as the virtual server needs to be restarted to be brought back online. For those looking for higher levels of protection, VMware’s Fault Tolerance feature lock-steps the CPU activity between a virtual server and a replica of it; every CPU instruction that happens on one virtual server happens on the other.
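The restart-based protection that encapsulation makes possible (as opposed to the lock-step approach) can be modeled in a few lines. This is a simplified sketch and not any vendor’s algorithm: it assumes every host can reach the shared storage holding the virtual servers’ files, it uses memory as the only measure of capacity, and the class, function, and server names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    memory_gb: int
    online: bool = True
    # Virtual server name -> memory in GB. The files themselves live on
    # shared storage, so a host only records which ones it is running.
    running: dict[str, int] = field(default_factory=dict)

    def free_memory_gb(self) -> int:
        return self.memory_gb - sum(self.running.values())


def restart_after_failure(failed: Host, hosts: list[Host]) -> dict[str, Optional[str]]:
    """Restart the failed host's virtual servers on surviving hosts.

    Returns virtual server name -> new host name, or None when no
    surviving host has enough free memory.
    """
    failed.online = False
    placements: dict[str, Optional[str]] = {}
    for vm_name, memory_gb in list(failed.running.items()):
        target = next(
            (h for h in hosts
             if h.online and h is not failed and h.free_memory_gb() >= memory_gb),
            None,
        )
        if target is not None:
            target.running[vm_name] = memory_gb   # restart (reboot) on new host
            del failed.running[vm_name]
        placements[vm_name] = target.name if target else None
    return placements


host_a = Host("host_a", 16, running={"sql01": 8, "dc01": 2})
host_b = Host("host_b", 12, running={"file01": 4})
host_c = Host("host_c", 4)

placements = restart_after_failure(host_a, [host_a, host_b, host_c])
print(placements)
```

Nothing is copied between hosts in this model; because the virtual server is just a set of files on shared storage, “moving” it is only a matter of a different host starting it, which is where the restart downtime comes from.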

The features don’t stop there. Some server virtualization software allows virtual servers to be migrated from one physical server to another without even taking them offline, which is known as online migration and is covered in the “Virtualization Concepts” section of this chapter. This feature can be critical to reducing the impact of planned downtime for a physical server as well, whether it is for relocation, upgrading, etc.

There are, as you’d expect, limitations to how this can be used, but generally it’s a very popular feature with system administrators. The “Extended Features of Virtualization” section of this chapter discusses more about these features.

SQL Server 2012 and Virtualization

Many people ask me how SQL Server behaves when it’s virtualized. The answer is that it should behave no differently to when it runs on a physical server, especially when it’s deployed in a properly resourced virtual environment, just like you would do with a physical server. However, virtualized instances of SQL Server still need adequate, and sometimes large, amounts of CPU, memory, and storage resources in order to perform well. The challenge with virtualization is making sure the resources SQL Server needs to perform adequately are always available to it.

Additionally, virtual servers running SQL Server can benefit from some of the features that encapsulation brings, which we’ve just discussed. However, Microsoft does not support using some virtualization features with SQL Server, such as snapshotting a virtual server, which we’ll discuss later in this chapter.

However, regardless of all the resource allocation activity that happens between the physical server and virtualization software, it’s true to say that SQL Server itself does not change its behavior internally when run in a virtualized environment. That should be reassuring news, as it means that SQL Server will behave the same way whether you run it on a laptop, a physical server, or a virtual server. Nor are any new error messages or options enabled within SQL Server because of it running on a virtual server, with the exception of Dynamic Memory support that’s described in a moment. That’s not to say that you don’t need to change how you configure and use SQL Server once it is virtualized; in fact, some of the server resource configurations are more important in the virtual world, but they are still all configured with the standard SQL Server tools.

The one feature in SQL Server 2012 that does automatically get enabled on start-up as a consequence of being in a virtual environment is hot-add memory support. This feature was released in SQL Server 2005 and originally designed to support physical servers that could have hundreds of gigabytes of memory and large numbers of processors, yet could still have more added without them being powered down or rebooted. Once additional memory had been plugged in and the server hardware had brought it online, Windows and SQL Server would then auto-detect it and begin making use of it by expanding the buffer pool. While this sounds like a clever feature, I suspect very few users ever had both the right hardware and a need to use it, so the feature never gained widespread use.

Fast-forward a few years and Microsoft’s Hyper-V virtualization technology shipped a new feature called Dynamic Memory. By monitoring a virtual server’s Windows operating system, the Dynamic Memory feature detects when a virtual server is running low on memory; and if spare physical memory is available on the host server, it allocates more to the virtual server. When this happens, the hot-add memory support in Windows and SQL Server recognizes this new “physical memory” being added, and both dynamically reconfigure themselves to use it without needing to reboot Windows or restart SQL Server.
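The decision just described (the virtual server is low on memory, the host has some spare, so more is allocated) can be sketched as follows. This is not Hyper-V’s actual algorithm or API; the field names, the 20% buffer, and the single-step rebalance are assumptions made purely to illustrate the idea, and the sketch only models memory being added, which is the hot-add case discussed here.

```python
from dataclasses import dataclass


@dataclass
class VirtualServer:
    name: str
    assigned_mb: int   # memory the guest currently sees
    demand_mb: int     # memory the guest operating system is asking for
    maximum_mb: int    # upper limit set by the administrator


def rebalance(host_free_mb: int, vm: VirtualServer, buffer_pct: int = 20) -> int:
    """Grow a virtual server's memory if it is running low.

    Returns the host's free memory after any allocation. The guest then
    sees the extra memory as hot-added "physical memory".
    """
    wanted_mb = vm.demand_mb * (100 + buffer_pct) // 100
    target_mb = min(wanted_mb, vm.maximum_mb)
    shortfall_mb = target_mb - vm.assigned_mb
    if shortfall_mb <= 0:
        return host_free_mb                     # nothing to do
    grant_mb = min(shortfall_mb, host_free_mb)  # never exceed what the host has
    vm.assigned_mb += grant_mb
    return host_free_mb - grant_mb


vm = VirtualServer("sql01", assigned_mb=4096, demand_mb=6000, maximum_mb=8192)
host_free_mb = rebalance(8192, vm)
print(vm.assigned_mb, host_free_mb)
```

From SQL Server’s point of view nothing virtual has happened: it simply notices more memory and can expand the buffer pool into it, exactly as it would after a physical hot-add.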

This behavior was available in the Enterprise and Data Center Editions of SQL Server 2008, but support for it has expanded in SQL Server 2012 to include the Standard Edition. This expanded support demonstrates how closely Microsoft wants its virtualization software, operating system, and database server software to work together. The expectation by Microsoft is that use of this feature will become routine once it’s made available to the Standard Edition of SQL Server.

Limitations of Virtualization

Like all technologies, virtualization has limits, restrictions, and reasons not to use it in certain situations. Some virtualization vendors would like you to virtualize every server you have, and in fact, some now even claim that today that’s possible. However, this all-virtual utopia is likely to be challenged by your applications, IT team, and budget.

Why might you not virtualize a new or existing server? The original reason people didn’t virtualize has rapidly disappeared in recent years: a perceived lack of support from application vendors. In hindsight, I attribute lack of adoption more to a fear of not knowing what effect virtualization might have on their systems, rather than repeatable technical issues caused by it. The only actual problems I’ve heard of are related to Java-based applications, but fortunately they seem rare and SQL Server doesn’t use Java.

Another rapidly disappearing reason for restricting the reach of virtualization is the resource allocation limitations that hypervisors put on a virtual server. Despite VMware’s technology supporting a virtual server with as many as 8 virtual CPUs and as much as 255GB of memory as far back as 2009, most people weren’t aware of this and assumed virtual servers were still restricted to using far less than their production servers needed. As a result, it was domain controllers, file servers, and other low-memory footprint workloads that were usually virtualized in the early phases of adoption.

Today, the capabilities of virtualization software have increased considerably; VMware’s software and Windows Server 2012 now support 32 virtual CPUs and 1TB of memory per virtual server! This means even the most demanding workloads can be considered for virtualization. The only current exceptions are what are considered to be “real time” workloads, that is, applications that process or control data from an external source that expects reactions or outputs within a specific number of milliseconds rather than a certain number of CPU clock cycles. To do this, the application normally requires constant access to CPU resources, which is something that virtualization software by default removes. You can enable support for real-time workloads in some virtualization software, but doing so removes some of the management flexibility and resource utilization benefits virtualization has.
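The per-virtual-server limits quoted in this section lend themselves to a quick sanity check when deciding whether a workload is a candidate. The sketch below uses only the figures mentioned above (8 virtual CPUs and 255GB in 2009; 32 virtual CPUs and 1TB now); the names are hypothetical, and you should check the limits of the specific product version you use rather than rely on these constants.

```python
from typing import NamedTuple


class VmLimits(NamedTuple):
    max_virtual_cpus: int
    max_memory_gb: int


# Figures quoted in the text; 1TB is expressed as 1024GB.
LIMITS_2009 = VmLimits(max_virtual_cpus=8, max_memory_gb=255)
LIMITS_2012 = VmLimits(max_virtual_cpus=32, max_memory_gb=1024)


def fits(required_cpus: int, required_memory_gb: int, limits: VmLimits) -> bool:
    """True when a workload's needs are within a single virtual server's limits."""
    return (required_cpus <= limits.max_virtual_cpus
            and required_memory_gb <= limits.max_memory_gb)


# A database server needing 16 CPUs and 128GB of memory.
print(fits(16, 128, LIMITS_2009))
print(fits(16, 128, LIMITS_2012))
```

A check like this only covers allocation limits; it says nothing about real-time requirements or whether the host can actually deliver those resources under contention.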

COMMON VIRTUALIZATION PRODUCTS

If you search for virtualization products using your favorite search engine, you’ll get dozens of results for different products, and many opinions about which is best. While it’s true that the virtualization marketplace is crowded, there are still only a handful of vendors that offer production-ready server virtualization products. Developers, testers, and DBAs may already be familiar with a wider range of virtualization products, such as Oracle’s VirtualBox and VMware Workstation, but VMware and increasingly Microsoft have the lion’s share of the virtualized data center marketplace. This section looks at the primary server virtualization products available, and some of the virtualization support that hardware vendors have built into their products in recent years.

VMware

Regardless of what any other virtualization vendor’s marketing department may tell you, in my experience more businesses currently use VMware for their server virtualization platforms than any other. In my view, the main reason for this is that for a long time VMware was almost the only vendor selling production-grade virtualization software, and they also created the features that we now expect every virtualization vendor to provide by default.

VMware’s current server virtualization product set, vSphere, consists of two components: the VMware vSphere Hypervisor, also known as ESXi, and the enterprise virtual environment management platform, vSphere.

VMware’s basic hypervisor software is available free of charge, even for production environments, and it supports running and managing a reasonable number of virtual servers on it — not bad for a free product. However, its feature set and manageability are quite limited when compared to capabilities of the VMware tools designed for the enterprise; for example, it supports only 32GB of memory in the physical host server. Nonetheless, for smaller environments or those new to virtualization, this product is often sufficient and can significantly reduce the deployment costs associated with VMware’s larger vSphere product.

To provide an enterprise-scale and feature-rich virtualization solution, VMware couples its hypervisor with the vSphere management platform. This not only provides significantly more management and reporting functionality, but also increases scalability and availability. The other major difference is that groups of physical host servers running the VMware hypervisor are managed collectively, blurring the boundaries between individual server resources and a cluster of host servers, as VMware refers to it.

While production environments can be deployed using just VMware’s hypervisor, most of the businesses I work with have invested in the vSphere infrastructure to get the fuller feature set not available in the standalone hypervisor. The software is often expensive and it requires a strong commitment to virtualization, but it has been successful enough to make VMware the size of company it is today. That said, however, Microsoft is offering ever-increasing levels of virtualization functionality in the Windows operating system, and VMware will be forced at some point to reconsider the cost models and feature sets of its products.

Microsoft Hyper-V

Until very recently most of us probably didn’t think of Microsoft as a virtualization software vendor, although they have in fact produced desktop virtualization software, such as Virtual PC and Virtual Server, for a number of years now. Sadly for Microsoft, my experience showed they were the kind of products that were loved by those who used them but unknown to everyone else.

First released as a role within Windows Server 2008, Hyper-V was intended to bring Microsoft’s new server virtualization capabilities to the massive Windows Server marketplace. This was an excellent product marketing decision, as anyone new to and curious about server virtualization now had the technology bought and paid for in their server operating system. No longer would they have to research, select, and download a product before installing it — more often than not on a dedicated physical server.

Hyper-V is more than just a software feature that gets installed within Windows, though; it’s a component that sits deep within the operating system itself, and in some areas is closer to the physical hardware than Windows itself is once Hyper-V is enabled. It’s this low-level code that allows Hyper-V to schedule all of the different CPU requests its virtual servers make and allocate them CPU time so they can run.

Not all of the reaction to Hyper-V’s initial release was good for Microsoft though. The first version suffered from the usual inadequacies of v1.0 software we’ve become used to. In fact, it wouldn’t be unfair to say that the version that shipped with Windows Server 2008 was unsuitable for most production workloads. However, progress was made in making people aware that Microsoft was entering the server virtualization market.

Significant improvements were made to Hyper-V in Windows Server 2008 R2 and again with Service Pack 1. Live migration, dynamic storage, Dynamic Memory, and enhanced processor feature support made deploying Hyper-V in a busy production environment a reality. It is likely that many of the people who have chosen to adopt Hyper-V have done so because of Microsoft’s dominance and reputation with other applications, along with the pricing model.

In the same way that VMware offers a hypervisor product and an enterprise management platform, vSphere, so does Microsoft. System Center Virtual Machine Manager is a suite of management tools designed to manage large Hyper-V environments, as well as deploy, orchestrate, and monitor private clouds. Known sometimes as just VMM, it’s not as widely adopted as vSphere, but I suspect that will change as Hyper-V is adopted by more and more enterprise-scale customers. System Center Virtual Machine Manager 2012 has been released with many private cloud management capabilities built into it and will be core to Microsoft’s server products strategy over the next few years.

Windows Server 2012 enhances Hyper-V’s capabilities with a compelling update of the feature. Its virtual servers will support up to 32 virtual CPUs and 1TB of memory each, while support for replication of virtual servers will offer new high availability capabilities.

Xen

Of the three server virtualization products covered in this section, Xen is undoubtedly the rarest and least widely adopted. Xen was the output of a research project by the University of Cambridge in the early 2000s, and its legacy was an open-source hypervisor. Although the open-source version still exists, a number of commercial versions are also available. Citrix Systems now owns and sells a commercial version of it known as XenServer, while the technology has also been adopted by vendors such as Sun and Oracle. Of more interest, however, is its adoption by a number of cloud service providers such as Amazon and Rackspace, demonstrating that cloud technology does not differ fundamentally from on-premise technology.

Hardware Support for Virtualization

While we can very easily see and interact with the virtualization software we install on our servers, what we can’t see is that the CPUs inside our servers now have components built into them to assist with virtualization. In the same way that CPUs had specific logic and components added to them to support floating-point and multimedia operations, they now have similar features built into them to help make virtualization software run faster. For example, Intel’s Extended Page Tables feature provides support for second-level address translation (SLAT). SLAT helps optimize the translation of a virtual server’s memory addresses to physical server memory addresses through the use of cached lookup tables.

Both AMD and Intel provide these features but with different names. AMD’s CPUs have feature sets called AMD-V and Rapid Virtualization Indexing (RVI) now built-in, while Intel’s CPUs have built-in features called VT-x and EPT. Although it isn’t necessary to know the specific roles of these components, a SQL Server professional should understand that the latest generations of virtualization software work only on servers with these CPU features available. However, that shouldn’t be a problem, as I haven’t seen a server for a few years now that doesn’t have them built-in.

VIRTUALIZATION CONCEPTS

Like any technology, virtualization covers a minefield of new terminology, features, and capabilities. To make things even more complicated, different vendors often use different terms for the same item. To help remove that ambiguity, this section covers the main terms and features commonly used by virtualization software currently being deployed.

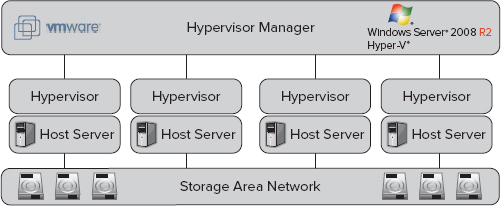

Host Server

The host server, shown in Figure 17-1, is the physical server deployed within the virtual environment. Today people use standard x64-based servers, such as an HP DL360, which are usually configured with a large number of CPU cores, large amounts of memory, some local disks for the hypervisor, and host bus adapters for access to storage area network (SAN) storage. The only difference between a host server and other servers is that its installed operating system’s only function is to manage the physical server’s resources to allow multiple virtual servers to run concurrently on the same physical hardware, rather than directly run application software such as SQL Server.

Hypervisor

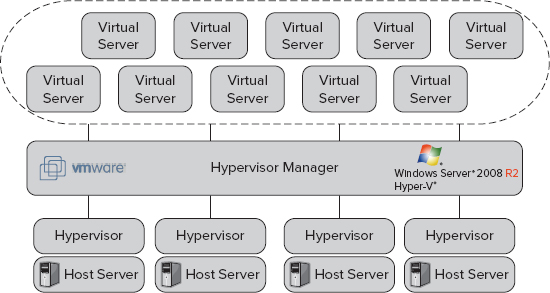

By this point in the chapter, you will be familiar with the term virtualization software which we’ve used, and you will have seen how important that is to providing virtual servers. One of the components of that software is the hypervisor.

The hypervisor’s role is to coordinate the hosting and running of a number of virtual servers and manage the allocation of the host server’s physical resources between them. For example, on a host server with 4 physical CPU cores, the hypervisor enables a number of currently running virtual servers to behave as though each one has access to four physical CPU cores, known as virtual CPUs (see Figure 17-2).

What happens during periods of high workloads when there isn’t enough physical CPU resource to satisfy all of the virtual server requests for CPU time is perhaps one of the most performance sensitive qualities of a hypervisor. The last thing you want is for virtual servers to become slow just because one specific virtual server is busy, although this problem has yet to be eliminated and can still happen with some hypervisors.

How the hypervisor manages these situations varies between vendors. At a high level, they track how much CPU time a virtual server has used recently, and use that data, along with system administrator configured priority information known as shares or weighting, to determine in what order a queue of requests for CPU time should be processed during periods of high demand.
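The ordering idea described above can be sketched in a few lines of Python. This is only an illustration, not how any vendor’s scheduler is implemented: the `VirtualServer` class, the `shares` and `recent_cpu_ms` fields, the server names, and the ranking rule (recent CPU time divided by shares, lowest first) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class VirtualServer:
    name: str
    shares: int          # administrator-configured priority (hypothetical units)
    recent_cpu_ms: int   # CPU time consumed in the recent accounting window


def scheduling_order(waiting):
    """Return waiting virtual servers in the order they would get CPU time.

    Assumed rule: the server that has used the least CPU time relative to
    its shares goes first, so high shares and low recent usage both help.
    """
    return sorted(waiting, key=lambda vs: vs.recent_cpu_ms / vs.shares)


queue = [
    VirtualServer("SQL01", shares=2000, recent_cpu_ms=900),
    VirtualServer("WEB01", shares=1000, recent_cpu_ms=300),
    VirtualServer("DC01", shares=500, recent_cpu_ms=400),
]

for vs in scheduling_order(queue):
    print(vs.name, round(vs.recent_cpu_ms / vs.shares, 2))
```

In this sketch SQL01 has consumed the most CPU time, yet its higher share value still places it ahead of DC01 in the queue, which is the effect an administrator is after when raising a database server’s priority.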

VMware has an extra feature built into their hypervisor’s CPU scheduling algorithms called relaxed co-scheduling. The purpose of this is to identify which particular virtual CPUs in a multi-CPU virtual server are the ones needing to do the work so it can avoid supplying un-required physical CPU time to the virtual server; the principle being that lots of smaller workloads are easier to find CPU resources for than a single large workload.

When installing VMware’s server virtualization software, the hypervisor is installed directly on the host server as its operating system; you don’t, for example, install Windows first. Those who deploy VMware’s hypervisor will actually see a custom Linux installation boot to then run a set of VMware services, but it’s a self-contained environment that doesn’t allow application software to be installed. Meanwhile, users of Hyper-V will install a regular installation of the Windows Server software and then add the Hyper-V role to the server. Installing this role is more than just adding some components to the operating system, though; when the Hyper-V hypervisor gets installed, it actually becomes the server’s operating system. The Windows installation that was installed on the server now gets converted to become a virtual server that is run by the newly installed Hyper-V hypervisor. This all happens transparently, but it is why Microsoft recommends not using the host server’s operating system for anything other than Hyper-V services.

Virtual Server (or Guest Server or Virtual Machine)

The running of virtual servers, also called guest servers or virtual machines, is the sole purpose of a virtual environment. Each virtual server has very similar properties to a traditional physical server in that it will have a number of virtual CPUs, an amount of memory, and a quantity of virtual hard drives assigned to it. “Inside” the guest server, a regular operating system such as Windows Server 2008 will be installed on drive C: — just like a physical server would. Figure 17-3 shows a diagram representing the relationship between the hypervisor and the guest servers.

Inside virtual servers the hypervisor normally has a set of tools installed, often called client, or integration, services. These provide a level of integration between the virtual server and its hypervisor that wouldn’t otherwise be possible, such as sharing files between hypervisor and client or perhaps synchronizing the system time with the host server.

However, also installed is a driver that, on command from the hypervisor, can begin consuming specific quantities of memory within the virtual server. We discuss more about these in the “Demand-Based Memory Allocation” section of this chapter but for now it’s sufficient to say its purpose is to be allocated memory within the virtual server so some of the physical memory the virtual server was previously using can be re-allocated by stealth to another virtual server.

It’s called a balloon driver because it inflates as needed to consume memory within the virtual server’s operating system. Its purpose is not to actually use the memory but to set it aside to ensure that nothing else within the virtual server is using it.
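A toy model may help make the balloon idea concrete. The `GuestMemory` class and its method names are hypothetical, and the sketch makes a simplifying assumption that the balloon only ever takes memory that is currently free inside the virtual server; it is not a description of any real driver.

```python
class GuestMemory:
    """Toy model of memory inside one virtual server (all sizes in MB)."""

    def __init__(self, assigned_mb, used_by_apps_mb):
        self.assigned_mb = assigned_mb
        self.used_by_apps_mb = used_by_apps_mb
        self.balloon_mb = 0  # memory currently set aside by the balloon driver

    def free_mb(self):
        return self.assigned_mb - self.used_by_apps_mb - self.balloon_mb

    def inflate(self, requested_mb):
        """Hypervisor asks the balloon to grow (assumed: free memory only)."""
        granted = min(requested_mb, self.free_mb())
        self.balloon_mb += granted
        return granted  # physical memory the hypervisor can now give elsewhere

    def deflate(self, released_mb):
        """Hypervisor hands memory back, so the balloon shrinks."""
        released = min(released_mb, self.balloon_mb)
        self.balloon_mb -= released
        return released


guest = GuestMemory(assigned_mb=8192, used_by_apps_mb=5000)
reclaimed = guest.inflate(4000)
print(reclaimed, guest.free_mb())
```

The point of the model is that the balloon does nothing with the memory it holds. It simply stops anything else in the virtual server from using it, so the hypervisor can safely hand the matching physical memory to another virtual server.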

In comparison with the virtualization software and technology, there’s very little to say about virtual servers, and that’s a good thing, as the idea of virtualization is to make the fact they’re not running on a physical server invisible to them.

While virtual servers can be configured to “run” on different physical host servers using technologies like online migration that we’ll cover in the next section, at any point in time, a running virtual server is assigned to a specific physical host server. Virtual servers cannot be allocated and use physical server resources, such as memory, from multiple physical host servers.

EXTENDED FEATURES OF VIRTUALIZATION

Now that you are familiar with some of the fundamental concepts of virtualization, this section looks at some of the more advanced features and capabilities the technology offers. This is where the unique magic of the technology begins to appear, as some of these concepts simply weren’t available to traditional physical servers for all the time we were using them. While a hypervisor’s primary function is to “run” a virtual server and grant it the resources it requires as it needs them, the current versions of VMware and many of Microsoft’s server virtualization products also provide many of the features discussed in the following sections.

Snapshotting

Snapshotting a virtual server is very similar to how SQL Server’s own snapshot function works. In principle, the hypervisor suspends the virtual machine, or perhaps requires it to be shut down, and places a point-in-time marker within the virtual machine’s data files. From that point on, as changes are made within the virtual machine’s virtual hard drive files, the hypervisor first writes the original data to a separate physical snapshot file, so a copy of all of the pre-change data gets saved to disk. This can add a slight overhead to the I/O performance of the virtual server and, more important, can require potentially large amounts of disk space, because multiple snapshots can be taken of a virtual server, each having its own snapshot file capturing the “before” version of the data blocks.

Having these snapshot files available to the hypervisor enables it, upon request, to roll back all the changes in the virtual server’s actual data files to the state they were in at the point the snapshot was taken. Once completed, the virtual server will be exactly in the state it was at the point in time the snapshot was taken.
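The copy-before-change and roll-back behavior just described can be sketched as follows. The `VirtualDisk` class is a hypothetical in-memory stand-in for a virtual hard drive file, with each snapshot “file” modeled as a dictionary; real hypervisors use their own on-disk formats.

```python
class VirtualDisk:
    """Toy virtual hard drive: a list of blocks plus a stack of snapshot files."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []  # each snapshot maps block index -> original data

    def take_snapshot(self):
        self.snapshots.append({})  # empty "snapshot file"; fills as blocks change

    def write(self, index, data):
        if self.snapshots:
            latest = self.snapshots[-1]
            # Save the "before" version only the first time a block changes.
            latest.setdefault(index, self.blocks[index])
        self.blocks[index] = data

    def roll_back(self):
        """Restore the disk to its state at the most recent snapshot."""
        saved = self.snapshots.pop()
        for index, original in saved.items():
            self.blocks[index] = original


disk = VirtualDisk(["a", "b", "c"])
disk.take_snapshot()
disk.write(1, "B")
disk.write(1, "BB")   # second change to the same block: nothing new is saved
disk.write(2, "C")
saved_before_rollback = dict(disk.snapshots[-1])
disk.roll_back()
print(disk.blocks)
```

Note that the snapshot file only grows when a block changes for the first time after the snapshot, which is why a busy virtual server with several snapshots can consume a lot of disk space.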

While this sounds like a great feature which can offer a level of rollback functionality, it is un-supported by Microsoft for use with virtual servers running SQL Server. Microsoft gives more information about this in the Knowledge Base article 956893; however, until Microsoft supports its use, snapshotting should not be used with virtual servers running SQL Server.

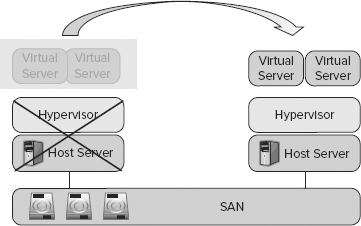

High-Availability Features

You read earlier that encapsulation means that a virtual server is ultimately just a collection of files stored on a file system somewhere. These files can normally be broken down into the virtual hard drive data files, as well as a number of small metadata files that give the hypervisor information it needs to “run” the virtual server, such as the CPU, memory, and virtual hard drive configuration. Keeping these files in a centralized storage location — a SAN, for example — enables several different host servers to access the virtual server files. The trick that the file system and hypervisor have to perform is controlling concurrent read/write access to those files in a way that prevents corruption and two host servers running the same virtual server at once.

Support for this largely comes from the file systems they use; VMware, for instance, has a proprietary VMFS file system that is designed to allow multiple host servers to both read and write files to and from the same logical storage volumes at the same time. Windows Server 2008 R2 has a similar feature called Cluster Shared Volumes that is required in larger Hyper-V environments where multiple physical host servers concurrently run virtual servers from the same file system volume. This is a departure from the traditional NTFS limitation of granting only one read/write connection access to an NTFS volume at a time. Ensuring that a virtual machine is only started in one place at a time is controlled by the hypervisors themselves. A system using traditional file system file locks and metadata database updates is typically used to allow or prevent a virtual server from starting (see Figure 17-4).

By the way, while the Cluster Shared Volumes feature of Windows sounds like a great solution to numerous other requirements you might have, the technology is only supported for use with Hyper-V. Microsoft warns of unintended and unexpected results if you try to use it for anything else and you can find more information in the Microsoft TechNet article at http://technet.microsoft.com/en-us/library/dd630633%28v=ws.10%29.aspx.

Online Migration

After you have all the files needed to run your virtual servers stored on some centralized storage, accessible by multiple physical host servers concurrently, numerous features unique to virtualization become available. The key differentiator here between the physical and virtual worlds is that you are no longer dependent on a specific physical server’s availability in order for your virtual server to be available. As long as a correctly configured physical host server with sufficient CPU and memory resources is available and it can access your virtual server’s files on the shared storage, the virtual server can run.

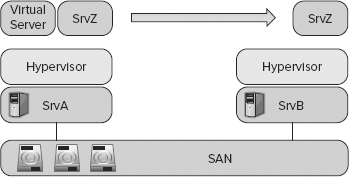

The first of these features unique to virtualization is generically described in this chapter as online migration, although Microsoft calls it Live Migration and VMware calls it vMotion for their implementations. Online migrations enable a virtual server to be moved from one physical host server to another without taking the virtual server offline.

For those unfamiliar with this technology and who can’t believe what they’ve just read, an example should clarify the idea. In Figure 17-5, the virtual server SrvZ is currently running on the physical host server SrvA, while all of its files are stored on the SAN. By performing an online migration, you can move SrvZ to run on SrvB without having to shut it down, as shown in the second half of the diagram.

Why you might want to do this is a legitimate question for someone new to virtualization, especially as in the physical world this kind of server administration was impossible. In fact, server administrators receive many benefits from being able to move running virtual servers off of a specific physical host server. If a specific host requires patching, upgrading, or repairing, or perhaps has too much load, then these issues can be resolved without affecting the availability of the applications and services that the virtual servers support. Some or all of the virtual servers running on a host server can transparently be migrated to another host, freeing up the host server for maintenance.

The basic concept behind online migration is readily understandable, but some complex operations are needed to actually perform it. After the virtualization administrator identifies where the virtual server should move from and to, the hypervisor logically “joins” the two host servers and they start working together — to support not only the running of the virtual server but also its migration. Each host server begins sharing the virtual server’s data files stored on the shared storage; the new host server loads the virtual server’s metadata, allocates it the physical hardware and network resources it needs, such as vCPUs and memory, and, the final clever part, the hypervisor also sends a snapshot of the virtual machine’s memory from the original host server to the new host server over the local area network.

Because changes are constantly being made to the memory, the process can’t finish here, so at this point every memory change made on the original server needs to be copied to the new server. This can’t happen as quickly as the changes are being made, so a combination of virtual server activity and network bandwidth determines how long this “synchronization” takes. As a consequence, you may need to perform online migrations during quiet periods, although server hardware, hypervisor technology, and 10Gb Ethernet mean that these migrations are very quick these days. Before the last few remaining memory changes are copied from the original host server to the new host server, the hypervisor “pauses” the virtual server for literally a couple of milliseconds. In these few milliseconds, the last remaining memory pages are copied, along with the ARP network addresses the virtual server uses and full read/write access to the data files. Next, the virtual server is “un-paused” and it carries on with exactly what it was doing before it was migrated, with the same CPU instructions, memory addresses, and so on.
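To see why virtual server activity and network bandwidth together decide how long the synchronization takes, the memory copy can be simulated. The function below is a minimal sketch under stated assumptions: memory is counted in pages, the rate of change and the link speed are constant, and every name and number is hypothetical rather than taken from Live Migration or vMotion.

```python
def simulate_online_migration(memory_pages, dirty_pages_per_sec,
                              link_pages_per_sec, pause_threshold_pages,
                              max_rounds=30):
    """Simulate the repeated memory copy of an online migration.

    Returns (copy_rounds, final_pause_seconds), or None if the copy never
    catches up with the changes being made on the original host server.
    """
    to_copy = memory_pages  # first pass: the full memory snapshot
    rounds = 0
    while to_copy > pause_threshold_pages:
        if rounds == max_rounds:
            return None  # changes arrive as fast as they can be copied
        # Pages changed while this round was being sent must be sent again.
        changed = dirty_pages_per_sec * to_copy // link_pages_per_sec
        to_copy = min(memory_pages, changed)
        rounds += 1
    # Few enough pages remain: pause the virtual server and send the rest.
    return rounds, to_copy / link_pages_per_sec


quiet = simulate_online_migration(1_000_000, 10_000, 100_000, 100)
busy = simulate_online_migration(1_000_000, 200_000, 100_000, 100)
print(quiet, busy)
```

With these made-up figures, the quiet server converges after four copy rounds and needs only a millisecond-scale pause, while the busy server changes memory faster than the link can carry it and never converges. That is the situation in which moving the migration to a quiet period makes sense.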

If you are thinking that this pause sounds dangerous or even potentially fatal to the virtual server, in reality this technology has been tried and tested successfully, not only by the vendors themselves but also by the industry. Online migrations have been performed routinely in large service provider virtualization environments, and with such confidence that the end customer never needed to be told they were happening. Nor is this technology limited to virtual servers with low resource allocations; Microsoft has written white papers and support articles demonstrating how its Live Migration feature can be used with servers running SQL Server. In fact, the SQLCAT team has even released a white paper downloadable on their website with advice about how to tune SQL Server to make online migrations slicker and more efficient.

However, while the technology is designed to make the migration as invisible to the virtual server being migrated as possible, it is still possible for it to notice. The dropping of a few network packets is typically the most visible effect, so client connections to SQL Server can be lost during the process; or perhaps more critical, if you deploy Windows Failover Clustering on to virtual servers, the cluster can detect a failover situation. Because of this, Windows Failover Clustering is not supported for use with online migration features.

While online migrations may seem like a good solution to virtual and host server availability, keep in mind that they are on-demand services; that is, they have to be manually initiated. Most important, both the original and the new servers involved have to be available and online in order for the process to work. They also have to have the same type of CPU; otherwise, the difference in low-level hardware calls would cause issues. You could script and then schedule an online migration, but for the purpose of this chapter we’ll still consider that a manual migration. In short, while this feature is good for proactive and planned maintenance, it cannot be used to protect against unplanned downtime and host server failures.

Highly Available Virtual Servers

Understanding how online migrations work will help you understand how some of the high-availability features in hypervisors work. When comparing the high-availability features of the two most prevalent server platform hypervisors, you can see a difference in their approach to providing high availability. VMware’s vSphere product has a specific high-availability feature, vSphere HA, built-in; whereas Microsoft’s Hyper-V service utilizes the well-known services of Windows Failover Clustering.

Both of these HA services use the same principle as online migration in that all the files needed to start and run a virtual server have to be kept on shared storage that is always accessible by several physical host servers. This means a virtual server is not dependent on any specific physical server being available in order for it to run — other than the server on which it’s currently running, of course. However, whereas online migrations require user intervention following an administrator’s decision to begin the process, HA services themselves detect the failure conditions that require action.

VMware and Microsoft’s approach is ultimately the same, just implemented differently. Both platforms constantly monitor the availability of a virtual server to ensure that it is currently being hosted by a host server and the host server is running it correctly. However, running according to the hypervisor’s checks doesn’t necessarily mean that anything “inside” the virtual server is working; monitoring that is an option available in VMware’s feature where it can respond to a failure of the virtual server’s operating system by re-starting it.

As an example, the hypervisor would detect a physical host server going offline through unexpected failure, which causes all the virtual servers running on it to also go offline (the virtual equivalent of pulling the power cord out of the server while it’s running). Then, if configured to, it would re-start all of the virtual servers on another host server.

In this situation, whatever processes were running on the virtual server are gone and whatever was in its memory is lost; there is no preemptive memory snapshotting for this particular feature as there is for online migrations. Instead, the best the hypervisor can do is automatically start the virtual server on another physical host server when it notices the virtual server go offline, which is the virtual equivalent of powering up and cold booting the server. If the virtual server is running SQL Server, then, when the virtual server is restarted, there may well be an initial performance degradation while the plan and data caches build up, just like in the physical world.

What makes this feature exciting is the opportunity to bring some form of high availability to virtual servers regardless of what operating system or application software is running inside the virtual server. For example, you could have standalone installations of Windows and SQL Server running on a virtual server, neither of which is configured with any high-availability services, and yet now protect SQL Server against unplanned physical server failure.

This technology isn’t a replacement for the application-level resilience that traditional failover clustering brings; we already saw that while the hypervisor might be successfully running the virtual machine, Windows or SQL Server may have stopped. However, this feature can provide an increased level of availability for servers that may not justify the cost of failover clustering or availability groups.

Host and Guest Clustering

To conclude this discussion of virtualization’s high-availability benefits, this section explains how the traditional Windows failover clustering instances we’re used to using fit in with it. Host clustering is Microsoft’s term for implementing the virtual server high availability covered in the previous section; that is, should a physical host server fail, it will re-start the virtual servers that were running on it on another physical host server. It does this by using the Windows Failover Clustering services running on the physical host servers to detect failure situations and control the re-starting of the virtual servers.

Guest clustering is where Windows Failover Clustering is deployed within a virtual server to protect a resource such as an instance of SQL Server and any resource dependencies it might have like an IP address and host name.

This is deployed in the same way Windows Failover Clustering would be in a physical server environment, but with virtual rather than physical servers.

Support from Microsoft for clustering SQL Server in this manner has been available for some time now, but adoption had been slow as the range of storage options that could be used was small. Today however, there are many more types of storage that are supported, including the SMB file share support in SQL Server 2012 and raw device mappings by VMware, which is making the use of guest clustering much more common.

Deploying SQL Server with Virtualization’s High-Availability Features

When SQL Server is deployed in virtual environments, trying to increase its availability by using some of the features described becomes very tempting. In my experience, every virtualization administrator wants to use online migration features, and quite rightly so. Having the flexibility to move virtual servers between host servers is often an operational necessity, so any concerns you may have about SQL Server’s reaction to being transparently relocated should be tested in order to gain confidence in the process. You might find that you agree to perform the task only at quiet periods, or you might feel safe with the process irrespective of the workload.

Likewise, the virtualization administrator is also likely to want to use the vendor’s high-availability feature so that in the event of a physical host server failure, the virtual servers are automatically restarted elsewhere. This is where you need to carefully consider your approach, if any, to making a specific instance of SQL Server highly available. My advice is not to mix the different high-availability technologies available at each layer of the technology stack. This is because when a failure occurs, you only want a single end-to-end process to react to it; the last thing you want is for two different technologies, such as VMware’s HA feature and Windows Failover Clustering, to respond to the same issue at the same time.

MANAGING CONTENTION

In looking at some of the reasons for virtualization’s popularity, the preceding sections identified the concept of contention, the capability to better use previously underutilized physical resources in a server in order to reduce the total number of physical servers deployed. For the purposes of this discussion, we can split the idea of contention into two parts: good contention and bad contention.

Good Contention

Good contention is straightforward: It enables you to see positive benefits from virtualizing your servers, ultimately resulting in less time and money spent on deploying and maintaining your physical server estate.

For example, if the average CPU utilization of 6 single CPU physical servers was 10% and none of them had concurrent peak CPU usage periods, then I would feel comfortable virtualizing those 6 servers and running them as a single server with a single CPU — the logic being 6× 10% = 60%, and therefore less than the capacity of a single server with a single CPU. I’d want to make sure there was sufficient physical memory and storage system performance available for all 6 virtual servers, but ultimately the benefit would be the ability to retire 5 physical servers.
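The arithmetic behind that decision is simple enough to write down as a small helper. This is a sketch of the back-of-the-envelope estimate only; the function name and parameters are hypothetical, and it assumes that source and host CPUs are of equal speed and that, as stated above, the servers’ peaks do not coincide.

```python
def consolidated_cpu_percent(average_utilizations, source_cpus_each=1, host_cpus=1):
    """Estimate host CPU utilization after consolidating physical servers.

    average_utilizations holds one average CPU percentage per source server.
    """
    total_cpu_work = sum(u * source_cpus_each for u in average_utilizations)
    return total_cpu_work / host_cpus


servers = [10, 10, 10, 10, 10, 10]   # six servers averaging 10% each
estimate = consolidated_cpu_percent(servers)
print(estimate, "fits" if estimate < 100 else "over capacity")
```

Because the estimate uses averages, it says nothing about memory, storage performance, or overlapping peaks, which is why those still need to be checked separately.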

That’s a very simple example but one that most businesses can readily understand. CPU utilization is an absolute number that is usually a good reflection of how busy the server is. Conversely, sizing the server’s memory is something to which you can’t apply such an easy consolidation methodology. Instead, you usually need to determine the total memory requirement of all the virtual servers you want to run on a host server and then ensure you have more than that amount of physical memory in the host. However, VMware’s hypervisor complicates that by offering a memory de-duplication feature that allows duplicate memory pages to be replaced with a link to a single memory page shared by several virtual servers, but over-estimating the benefit this technology could deliver can result in the performance issues you tried to avoid. For SQL Server environments that are dependent on access to large amounts of physical memory, trusting these hypervisor memory consolidation technologies still requires testing, so their use in sizing exercises should be minimized.

Bad Contention

Not all contention is good. In fact, unless you plan well you’re more likely to have bad contention than good contention. To understand bad contention, consider the CPU utilization example from the preceding section: 6 servers with average CPU utilization values of 10% being consolidated onto a single CPU host server. This resulted in an average CPU utilization for the host server of around 60%. Now imagine if the average CPU utilization for two of the virtual servers jumps from 10% to 40%. As a consequence, the total CPU requirement has increased from 60% to 120%. Obviously, the total CPU utilization cannot be 120%, so you have a problem. Fortunately, resolving this scenario is one of the core functions of hypervisor software: How can it look like CPU utilization is 120%, for example, when actually only 100% is available?

Where does the missing resource come from? Behaviors such as resource sharing, scheduling, and time-slicing are used by hypervisors to make each virtual server appear to have full access to the physical resources that it’s allocated all of the time. Under the hood, however, the hypervisor is busy managing resource request queues — for example, “pausing” virtual servers until they get the CPU time they need, or pre-empting a number of requests on physical cores while the hypervisor waits for another resource they need to become available.

How much this contention affects the performance of virtual servers depends on how the hypervisor you’re using works. In a worst-case scenario using VMware, a virtual server with a large number of virtual CPUs can be significantly affected if running alongside a number of virtual servers with small numbers of virtual CPUs; this is due to VMware’s use of their co-scheduling algorithm to handle CPU scheduling. Seeing multi-second pauses of the larger virtual server while it waits for sufficient physical CPU resources is possible in the worst-case scenarios, indicating not only the level of attention that should be paid to deploying virtual servers, but also the type of knowledge you should have if you’re going to be using heavily utilized virtual environments.

Although that example of how VMware can affect performance is an extreme example, it does show how bad contention introduces unpredictable latency. Previously, on a host server with uncontended resources, you could effectively assume that any virtual server’s request for a resource could be fulfilled immediately as the required amounts of resource were always available. However, when the hypervisor has to manage contention, a time penalty for getting access to the resource gets introduced. In effect, “direct” access to the physical resource by the virtual server can no longer be assumed.

“Direct” is in quotes because virtual servers never directly allocate to themselves the physical resources they use. In an uncontended situation, however, the hypervisor has no difficulty finding the CPU time and memory they request, so the DBA can know that any performance penalty caused by virtualization is likely to be small but, most important, consistent. In a contended environment, the resource requirements of one virtual server can affect the performance of the others, and that performance becomes unpredictable.

Demand-Based Memory Allocation

I mentioned earlier that some hypervisors offer features that aim to reduce the amount of physical memory needed in a virtual environment’s host servers. Memory is still one of the most expensive components of a physical server, not so much because of the cost per GB but because of the number of GBs that modern software requires in servers. It’s not surprising therefore that virtualization technologies have tried to ease the cost of servers by making what memory is installed in the server go farther. However, there is no such thing as free memory; and any method used to make memory go farther will affect performance somewhere. The goal is to know where that performance impact can occur with the least noticeable effects.

Demand-based memory allocation works on the assumption that not all the virtual servers running on a host server will need all their assigned memory all the time. For example, my laptop has 4GB of memory but 2.9GB of it is currently free. Therefore, if it were a virtual server, the hypervisor could get away with granting me only 1.1GB, with the potential for up to 4GB when I need it. Scale that out across a host server running 20 virtual servers and the potential to find allocated but unneeded memory could be huge.
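To put a rough number on "huge," the following sketch scales the laptop figures across 20 virtual servers. It assumes, unrealistically, that every virtual server has the same 4GB assignment and the same 1.1GB in use. The function name is hypothetical.

```python
# Illustrative only: how much assigned memory is not currently in use.
# Assumes every virtual server matches the laptop example (4GB assigned,
# 1.1GB in use), which a real estate of servers would not.

def reclaimable_gb(assigned_gb, in_use_gb):
    """Memory assigned to a server but not currently needed by it."""
    return round(assigned_gb - in_use_gb, 1)

per_server = reclaimable_gb(4.0, 1.1)
host_total = round(per_server * 20, 1)

print(f"Per virtual server: {per_server}GB not in use")
print(f"Across 20 virtual servers: {host_total}GB not in use")
```

Real workloads vary over time, so the reclaimable figure on a real host is a moving target, not a constant.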

The preceding scenario is the basis of demand-based memory allocation features in modern hypervisors. While VMware and Hyper-V have different approaches, their ultimate aim is the same: to provide virtual servers with as much memory as they need but no more than they need. That way, unused memory can be allocated to extra virtual servers that wouldn’t otherwise be able to run at all because of memory constraints.

In an ideal situation, if several virtual servers all request additional memory at the same time, the host server would have enough free physical memory to give them each all they need. If there’s not enough, however, then the hypervisor can step in to reclaim and re-distribute memory between virtual servers. It may be, for example, that some have been configured to have a higher priority than others over memory in times of shortages; this is called weighting and is described in the next section. The rules about how much memory you can over-provision vary by hypervisor, but the need to reclaim and re-distribute memory is certainly something VMware’s software and Microsoft’s Hyper-V could have to do.

Reclaiming and re-distributing memory ultimately means taking it away from one virtual server to give to another. The virtual server losing the memory was operating as though all the memory allocated to it was its own, and applications may well have been using it. When this reclamation has to happen, a SQL Server DBA’s worst nightmare occurs, and the balloon driver we mentioned earlier has to inflate.

We briefly mentioned the purpose of a balloon driver in the “Virtualization Concepts” section of this chapter. To summarize, when more memory is required than is available in the host server, the hypervisor has to re-allocate physical memory between virtual servers. It could do this to ensure that any virtual servers that are about to be started have their configured minimum amount of memory allocated to them, or to maintain resource allocation weightings between virtual servers, for example, if a virtual server with a high weighting needs more memory. Resource weightings are described in the next section.

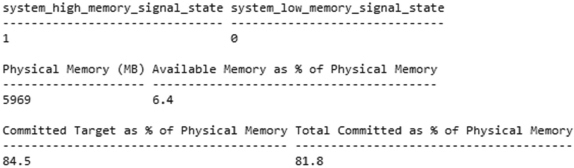

Different hypervisors employ slightly different methods of using a balloon driver, but the key point for DBAs here is that SQL Server always responds to a low value for the Available MBytes counter, which the inflating of a balloon driver can cause. SQL Server’s response to this low-memory condition is to begin reducing the size of the buffer pool and release memory back to Windows, which after a while will have a noticeable effect on database server performance.

The advice from the virtualization vendors about how to configure their demand-based memory allocation technology for SQL Server varies. Hyper-V is designed to be cautious with memory allocations and will not allow the minimum amount of memory a virtual server needs to become unavailable, while VMware allows the memory in a host server to be over-committed. Because of the potential performance issues this can cause, VMware does not recommend running SQL Server on a host that’s had its memory over-committed.

Weighting

Finally, when there is resource contention within a host server, the virtualization administrator can influence the order in which physical resources are protected, reserved, or allocated. This is determined by a weighting value, and it is used in various places throughout a virtualization environment — especially one designed to operate with contention. For example, an environment might host virtual servers for production, development, and occasionally testing. The priority may be for production to always have the resources it needs at the expense of the development servers if need be. However, the test servers, while only occasionally used, might have a higher priority than the development servers, and therefore have a weighting lower than the production servers but higher than the development servers.
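The production, test, and development ordering can be illustrated with a small sketch. It is not how any particular hypervisor implements weighting. It simply serves the highest weight first until a scarce resource runs out, and the weights, demands, and names are all hypothetical.

```python
# Illustrative only: hand out a scarce resource in weight order.
# Real hypervisors use their own, more sophisticated algorithms.

def allocate_by_weight(capacity_gb, servers):
    """servers is a list of (name, weight, demand_gb) tuples.

    Higher weights are served first; each server receives its demand
    or whatever capacity remains, whichever is smaller.
    """
    remaining = capacity_gb
    allocation = {}
    for name, _weight, demand in sorted(servers, key=lambda s: s[1], reverse=True):
        granted = min(demand, remaining)
        allocation[name] = granted
        remaining -= granted
    return allocation

servers = [("dev", 10, 16), ("prod", 100, 32), ("test", 50, 16)]
allocation = allocate_by_weight(48, servers)
print(allocation)
```

With 48GB available against 64GB of demand, production and test receive everything they asked for and development receives nothing, which is the "at the expense of the development servers" outcome described above.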

IDENTIFYING CANDIDATES FOR VIRTUALIZATION

As virtualization’s popularity has grown, so has the debate about which server roles can be virtualized. Some of the very broad generalizations have contained a grain of truth. Certainly, for a long time there was the view that production database servers could never be virtualized, but virtualization technology has developed so significantly that this is not normally a problem anymore. In fact, VMware suggests that virtualizing 100% of an enterprise server estate is now a possibility with the latest versions of their software.

Ultimately, the only way to determine how much of your server estate can be virtualized is to adopt some high-level guiding principles before performing a detailed review of relevant performance data to ensure your virtual environment can handle your anticipated workload.

Guiding Principles

When people ask me which servers they can and can’t virtualize, their question is often “what’s the biggest server you’d virtualize?” My answer is always that far more factors should influence the decision than the server’s size alone. This section outlines some general guiding principles that you can follow when considering virtualization. More detailed information is provided in the next section.

Server Workload

The first area is related to the server’s workload. Although you might assume that this is the same as the server’s size, a small server working consistently at its highest capacity may be harder to virtualize than a larger server that is often relatively idle.

Today, with a well-designed virtualization environment it’s safe to work to the following design assumptions:

- Quiet server workloads can be and are routinely virtualized today. These might well be domain controllers; file servers; or the database servers for your anti-virus software, your expense tracking, or your HR system in a small to medium-size business. If the server is one whose performance doesn’t require monitoring or there is no question about it always meeting its business requirements, then you can consider this a quiet workload.

- Active server workloads also can be and are often routinely virtualized; and as long as capacity checks for the required CPU, memory, and storage throughput are made first, these workloads usually virtualize well. This kind of server might well host your finance system’s database server, where for the majority of the time a constant number of data entry users execute low-cost queries, some utilization spikes occur with ad-hoc reports, while month-end processing creates an infrequent but demanding workload. You may well already be using some performance-monitoring tools to proactively look for slowdowns in the end user experience, as well as perhaps deploying a physical server to which you know you can add more CPU or memory as needed.

- Busy server workloads need planning. The latest hypervisors claim to be able to accommodate them, but you need to design and tune your environment well first, in order to ensure the success promised by your virtualization vendor’s marketing. These workloads reflect those servers that you already have to proactively manage, even on relatively new server hardware. In the database world, these are likely to be transactional systems that have a high throughput of orders or quotes being processed, or perhaps reporting servers that routinely perform CPU-intensive server-side aggregations. These are demanding workloads that require thorough planning and testing before deploying in a virtual environment.

Gathering Sizing Data

So far we’ve approached the identification of “good” virtualization candidates fairly unscientifically, whereas some virtualization specialists I’ve worked with would have immediately started collecting performance data and analyzing it. That step is still crucial for your virtualization planning, but working through the guiding principles just described should only take a couple of moments, and it will help you to quickly identify your potential risks and concerns, or even nonstarters, and save you some time.

More detail is provided in the next section on how to use collected configuration, utilization, or performance data to help design a virtual server running SQL Server successfully, but the following list describes some data you should collect and explains why it will be useful to your planning:

- Memory utilization — This is one of the most important, if not the most important, pieces of performance data to capture. How much memory does your database server currently have installed, how much does SQL Server currently actually use, and does SQL Server even have sufficient memory right now?

- CPU utilization — This data will help you understand the server’s workload patterns and identify how easy it will be for your server to coexist with other virtual servers on the same host server once virtualized. As well as collecting the Average Total CPU utilization, you should also monitor how often periods of high activity occur and how long they last. For example, you might run a recalculation job every hour that takes 10 minutes to run. In addition to collecting CPU utilization data from within Performance Monitor, you should also understand how your instance of SQL Server uses parallelism. While your current physical server might have 16 cores, running a smaller number of parallelized queries on it requires different planning than if you run a much larger number of serial queries.

- Storage utilization — This is an area often overlooked when designing virtual environments, yet it’s an easy area to capture data about. The regular logical disk counters from within Performance Monitor will help you size your host server requirements when you know the average and peak period IOPS and MB/s demands of your current server in order to deliver satisfactory query times. If available, also ensure that you capture the same data from your storage subsystem. Both sets of data should show the same trends even if they show slightly different values, and will be useful in your planning.

- Network utilization — In the same way storage utilization is often overlooked, so is network utilization. The most useful Performance Monitor counter you capture here is the Mb/s throughput of the server’s Network Interface Cards during peak periods, perhaps during an ETL process, backup window, or busy business period.
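As a rough illustration of the CPU point in the list above, the following sketch summarizes a series of utilization samples into an average, a peak, and the length of each busy period. The 80% threshold, the one-minute sample interval, and the sample data are all assumptions made for the example, and the samples would normally come from an exported Performance Monitor log.

```python
# Illustrative only: summarize CPU samples into average, peak and busy periods.
# The threshold and the sample interval are hypothetical values.

def summarize_cpu(samples, busy_threshold=80, interval_minutes=1):
    """Return the average, the peak and the duration of each busy period."""
    average = sum(samples) / len(samples)
    peak = max(samples)
    periods = []
    run = 0  # consecutive samples at or above the threshold
    for value in samples:
        if value >= busy_threshold:
            run += 1
        elif run:
            periods.append(run * interval_minutes)
            run = 0
    if run:
        periods.append(run * interval_minutes)
    return {"average": average, "peak": peak, "busy_periods": periods}

# Two hours of one-minute samples: an hourly job that runs for 10 minutes.
samples = ([20] * 50 + [90] * 10) * 2
summary = summarize_cpu(samples)
print(summary)
```

The average on its own hides the hourly job. The busy periods are what determine how well the server will coexist with others on the same host.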

Sizing Tools

Several tools are available for gathering the data you will need to understand the resourcing requirements of your future virtual servers. The Microsoft Assessment and Planning (MAP) Toolkit can scan a specific server or an entire network to report on the software and hardware resources currently being both deployed and utilized. It even has a built-in feature to specifically advise on physical-to-virtual (P2V) migrations. VMware has a similar tool called the Capacity Planner that also analyzes a workload over a period of time and advises on the best P2V approach.

Other than these tools designed to aid P2V migrations, you may be currently using others that are already storing the kind of information you need. SQL Server’s Management Data Warehouse and Utility Control Point features might be performing such roles in your environment.

Non-Performance Related Requirements

Having collected data about how hard your server may or may not need to work once it is virtualized, you should also collect information about when your server needs to work hard and, more important, be available.

For these requirements I suggest collecting the following information:

- Peak workload periods — If your database server operates according to a structured and planned routine, then knowing when you need your server to be able to work especially hard is important. Being able to plan this in a calendar format will help you visualize all your different server workloads and prevent potentially negative conflicting workloads on the same host server.

- Availability requirements — Currently, your existing server is likely deployed in a way that ensures it meets the business availability standards required of it. For example, it might be standalone or it might be clustered. Knowing what level of availability is expected of the server once it’s virtualized plays a large role in determining the virtualization technologies you adopt.
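The calendar idea for peak workload periods can also be checked mechanically. The sketch below flags pairs of servers whose peak windows overlap, under the assumptions that each window falls within a single day and is expressed as whole hours. The server names and times are hypothetical.

```python
# Illustrative only: find servers whose peak workload windows overlap.
# Windows are (server, start_hour, end_hour) within one day, end exclusive.
from itertools import combinations

def overlapping_peaks(windows):
    """Return pairs of server names whose peak windows overlap."""
    conflicts = []
    for (a, a_start, a_end), (b, b_start, b_end) in combinations(windows, 2):
        if a_start < b_end and b_start < a_end:
            conflicts.append((a, b))
    return conflicts

windows = [
    ("finance-db", 1, 4),    # overnight processing
    ("reporting-db", 3, 6),  # early-morning report generation
    ("hr-db", 8, 9),         # start-of-day logins
]
conflicts = overlapping_peaks(windows)
print(conflicts)
```

Any pair reported here is a candidate for placing on different host servers, or for rescheduling one of the workloads.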

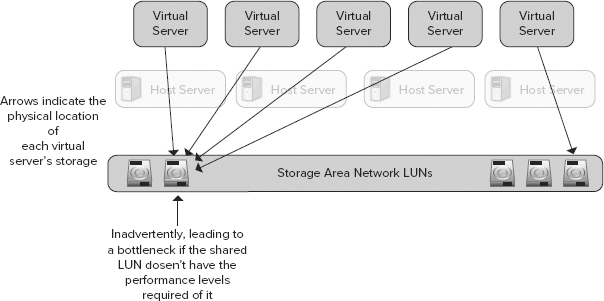

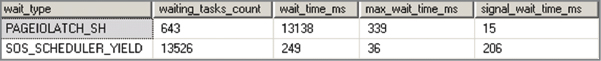

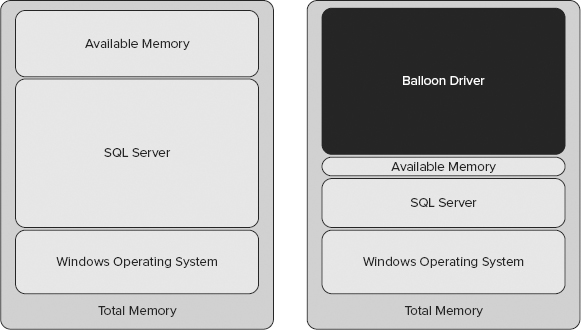

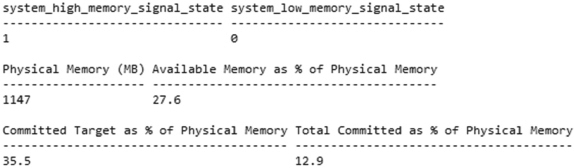

ARCHITECTING SUCCESSFUL VIRTUAL DATABASE SERVERS