Populating a data warehouse involves all of the tasks related to getting the data from the source operational systems, cleansing and transforming the data to the right format and level of detail, loading it into the target data warehouse, and preparing it for analysis purposes.

Figure 5.1 shows the steps making up the extraction, transformation, and load (ETL) process. Data is extracted from the source operational systems and transported to the staging area. The staging area is a temporary holding place used to prepare the data. The staging area may be a set of flat files, temporary staging tables in the Oracle warehouse, or both. The data is integrated with other data, cleansed, and transformed into a common representation. It is then loaded into the target data warehouse tables. Sometimes this process is also referred to as ETT—extraction, transportation, and transformation.

During the initial population of the data warehouse, historical data is loaded that could have accumulated over several years of business operation. The data in the operational systems may often be in multiple formats. If, for instance, the point-of-sales operational system was replaced two years ago, the current two years of history will be in one format, while data older than two years will be in another format.

After the initial historical load, new transaction and event data needs to be loaded on a periodic basis. This is typically done on a regular time schedule, such as at the end of the day, week, or month. During the load, and while the indexes and materialized views are being refreshed, the data is generally unavailable to warehouse users for querying. The period of time allowed for inserting the new data is called the batch window. The batch window is a continuously shrinking amount of time as more businesses are on-line for longer periods of time. Currently, with the ability to refresh the warehouse in real time, or near real time, batch windows are becoming a higher priority and requirement as businesses want and need to react to changes in a more immediate fashion. Higher availability can be achieved by partitioning the fact table. While data is loaded into a new partition, or being updated in an existing partition, the rest of the partitions are still available for use.

A large portion of the work in building a data warehouse will be devoted to the ETL process. Finding the data from the operational systems, creating extraction processes to get it out, transporting, filtering, cleansing, transforming, integrating data from multiple sources, and loading it into the warehouse can take a considerable amount of time.

If you are not already familiar with the company’s data, part of the difficulty in developing the ETL process is gaining an understanding of the data. One object can have different names in different systems. Even worse, two different things could have the same name. It can be a challenge to discover all of this, particularly in a company where the systems are not well documented. Each column in the target data warehouse must be mapped to the corresponding column in the source system. Some of the data will be mapped directly; other data will need to be derived and transformed into a different format.

Once the data is loaded into the warehouse, further processing to integrate it with existing data, update indexes, gather statistics, and refresh materialized views needs to take place prior to it being “published” as ready for users to access. Once the data is published, it should not be updated again until the next batch window, to ensure users do not receive different answers to the same query asked at a different time.

Once you have identified the data you need in the warehouse for analysis purposes, you need to locate the operational systems within the company that contain that data. The data needed for the warehouse is extracted from the source operational systems and written to the staging area, where it will later be transformed. To minimize the performance impact on the source database, data is generally extracted without applying any transformations to it.

Often the owners of the operational systems will not allow the warehouse developers direct access to those systems but will provide periodic extracts. These extracts are generally in the form of flat, sequential operating system files, which will make up the staging area.

In order to extract the fields and records needed for the warehouse, specialized application programs may need to be developed. If the data is stored in a legacy system, then these programs may require special logic—for example, if written in COBOL—in order to handle things such as repeating fields in the “COBOL occurs” clause. The data warehouse designers need to work closely with the application developers for the OLTP systems that are building the extract scripts to provide the necessary columns and formats of the data.

As part of designing the ETL process, you need to determine how frequently data should be extracted from the operational systems. It may be at the end of some time period or business event, such as at the end of the day or week or upon closing of the fiscal quarter. It should be clearly defined what is meant by the “end of the day” or the “last day of the week,” particularly if you have a system used across different time zones. The extraction may be done at different times for different systems and staged to be loaded into the warehouse during an upcoming batch window. Another aspect of the warehouse design process involves deciding which level of aggregation is needed to answer the business queries. This also has an impact on which and how much data is extracted and transported across the network.

Some operational systems may be in relational databases, such as Oracle 8i, 9i, or 10g; Oracle Rdb; DB2/MVS; Microsoft SQL Server; Sybase; or Informix. Others may be in a legacy database format, such as IMS or Oracle DBMS. Others may be in VSAM, RMS indexed files, or some other structured file system.

If extracting and transporting the data from the source systems must be done by writing the data to flat files, then there is also the issue of defining:

The file naming specification.

Which files constitute the batch—for example, if more than one source table is being read from each source system, then data in each table will probably be written to its own flat file. All of the data extracted at the same time from the source system should normally be batched together and transferred and loaded into the data warehouse as a logical unit of work.

The method for transporting the files between the source system and the warehouse—for example, is the data pushed from the source system or pulled by the warehouse system? If FTP is being used, then typically it may require a new operating system account on the warehouse server if the source system is pushing the data, but this new account will normally only be able to write to a very restricted directory area that the warehouse load processes can then read from.

Let’s consider the file naming convention, and, in particular, the situation if multiple different source systems provide their data using flat files. With this situation, it is quite typical for the file naming convention to incorporate the following:

The source system name

The date of extraction

A file batch number—particularly if there can be more than one data extraction in a business day

The source table name

A single character indicator to show whether this is an original extraction or a repeat—for example, if some corruption occurred and the data had to be reextracted and resent

Alternatively, if you are able to access the source systems directly without recourse to using flat files, you can retrieve the data by using a variety of techniques, depending on the type of system it is. For small quantities of data, a gateway such as ODBC can be used. For larger amounts of data, a custom program directly connecting to the source database in the database’s native Application Programming Interface (API) can be written. Many ETL tools simplify the extraction process by providing connectivity to the source.

After the initial load of the warehouse, as the source data changes, the data in the warehouse must be updated or refreshed to reflect those changes on a regular basis. A mechanism needs to be put into place to monitor and capture changes of interest from the operational systems. Rather than rebuilding the entire warehouse periodically, it is preferable to apply only the changes. By isolating changes as part of the extraction process, less data needs to be moved across the network and loaded into the data warehouse.

Changed data includes both new data that has been added to the operational system as well as updates and deletes to existing data. For example, in the EASYDW warehouse, we are interested in all new orders as well as updates to existing product information and customers. If we are no longer selling a product, the product is deleted from the order-entry system, but we still want to retain the history in the warehouse. This is why surrogate keys are recommended for use in the data warehouse. If the product_key is reused in the production system, it does not affect the data warehouse records.

In the data warehouse, it is not uncommon to change the dimension tables, because a column such as a product description may change. Part of the warehouse design involves deciding how changes to the dimensions will be reflected. If you need to keep one version of the old product description, you could have an additional column in the table to store both the current description and the previous description. If you need to keep all the old product descriptions, you would have to create a new row for each change, assigning different key values. In general, you should try to avoid updates to the fact table.

There are various ways to identify the new or changed data. One technique to determine the changes is to include a time stamp to record when each row in the operational system was changed. The data extraction program then selects the source data based on the time stamp of the transaction and extracts all rows that have been updated since the time of the last extraction. For example, when moving orders from the order processing system into the EASYDW warehouse, this technique can be used by selecting rows based on the PURCHASE_DATE column, as illustrated later in this chapter.

However, this technique does have some potential disadvantages:

If multiple updates have occurred to a record since the date and time of the last extraction, then only the current version of the record is read and not all of the interim versions. Typically, this may not have a significant impact on the warehouse unless it is necessary for analysis purposes to track all changes.

The query to select the changed data based on the time stamp can have an impact on the source system.

If records are deleted in the source system, then this mechanism is unsuitable because you cannot select a record that is no longer present. Converting the delete into a “logical delete” by setting a flag is normally not practical and involves considerable application change. This is where the next technique using triggers can help.

If the source is a relational database, triggers can be used to identify the changed rows. Triggers are stored procedures that can be invoked before or after an event, such as when an insert, update, or delete occurs on each record. The trigger can be used to save the changed records into a separate table from where the extract process can later retrieve the changed rows. One advantage of triggers is that the same transaction is used for writing the changed record to another table as is used to alter the source record itself. If this transaction aborts and rolls back for any reason, then our change record is also rolled back. However, be very careful of triggers in high-volume applications, as they can add significant overhead to the operational system.

Sometimes it may not be possible to change the schema to add a timestamp or trigger. The system may already be heavily loaded, and you do not want to degrade the performance in any way. Or the source may be a legacy system, which does not have triggers. Therefore, you may need to use a file comparison to identify changes. This involves keeping before and after images of the extract files to find the changes. For example, you may need to compare the recent extract with the current product or customer list to identify the changes.

Changes to the metadata, or data definitions, must also be identified. Changes to the structure of the operational system, such as adding or dropping a column, impact the extraction and load programs, which may need to be modified to account for the change.

Oracle Database 10g uses a feature called Change Data Capture, often referred to as CDC, to facilitate identifying changes when the source system is also an Oracle 9i or 10g database. CDC was introduced in Oracle 9i with just the synchronous form, where generation of the change records is tied to the original transaction. In Oracle Database 10g the new asynchronous form is introduced; this disassociates the generation of the change records from the original transaction and reduces the impact on the source system for collecting the change data. In this section, we will look at both forms and work through examples of each mechanism.

With CDC, the results of all INSERT, UPDATE, and DELETE operations can be saved in tables called change tables. The data extraction programs can then select the data from the change tables. CDC uses a publish-subscribe interface to capture and distribute the change data, as illustrated in Figure 5.2. The publisher, usually a DBA, determines which user tables in the operational system are used to load the warehouse and sets up the system to capture and publish the change data. A change table is created for each source table with data that needs to be moved to the warehouse.

The extract programs then subscribe to the source tables; therefore, there can be any number of subscribers. Each subscriber is given his or her own view of the change table. This isolates the subscribers from each other while they are simultaneously accessing the same change tables. The subscribers use SQL to select the change data from their subscriber views. They see just the columns that they are interested in and only the rows that they have not yet processed. If the updates of a set of tables are dependent on each other, the change tables can be grouped into a change set. If, for example, you had an order header and an order detail table, these two tables would be grouped together in the same change set to maintain transactional consistency. In order to create a change table, you must first, therefore, create the parent change set. A change source is a logical representation of the source database that contains one or more change sets.

In Oracle 9i only synchronous data capture existed, where changes on the operational system are captured in real time as part of the source transaction. The change data is generated as DML operations are performed on the source tables. When a new row is inserted into the user table, it is also stored in the change table. When a row is updated in a user table, the updated columns are stored in the change table. The old values, new values, or both can be written to the change table. When a row is deleted from a user table, the deleted row is also stored in the change table. The change data records are only visible in the change table when the source transaction is committed.

Synchronous CDC is based on triggers, which fire for each row as the different DML statements are executed. This simplifies the data extraction process; however, it adds an overhead to the transaction performing the DML. In Asynchronous Change Data Capture, which is introduced in Oracle Database 10g, the capture of the changes is not dependent upon the DML transaction on the source system. With asynchronous CDC, the changes are extracted from the source system’s redo logs, which removes the impact on the actual database transaction. The Oracle redo logs are special files used by the database that record all of the changes to the database as they occur. There are two forms of redo logs:

On-line redo logs

Archive redo logs

The on-line redo logs are groups of redo logs that are written to in a round-robin fashion as transactions occur on the database. When a redo log fills up, the database swaps to the next one, and if archiving of the logs is turned on (which is practically a certainty on any production database), then the database writes the completed redo log file to a specified destination where it can subsequently be safely stored. This is the archived redo log. Asynchronous CDC reads redo logs to extract the change data for the tables that we are interested in and therefore it is a noninvasive technique, which does not need to alter the source schema to add triggers to tables. The action of reading the logs in this fashion is often called mining the logs. In addition, the mining of the log files for the change data is disassociated from the source transaction itself, which reduces, but doesn’t remove, the impact on the source system.

Asynchronous CDC needs an additional level of redo logging to be enabled on the source system, which adds its own level of impact on the source database, but this is much less than that of synchronous CDC.

Asynchronous CDC can be used in two ways:

HOTLOG, where the changes are extracted from the on-line redo logs on the source database and then moved (by Oracle Streams processes) into local CDC tables also in the source database. The changed data in the CDC tables will still need to be transported to our warehouse database.

AUTOLOG, where the changed data is captured from the redo logs as they are moved between databases by the Log Transport Services. The changes are then extracted from these logs and made available in change tables on this other database. If this other database is our data warehouse database, then, by using AUTOLOG, we have removed the transportation step to make our changed data available in the staging area of the warehouse.

Log Transport Services are a standard part of the operation of the database—for example, they are used by Oracle Data Guard for moving the log files to other servers in order to maintain standby databases and as such do not add any additional impact to the source database operation.

The time between the change being committed by the transaction and it being detected by CDC and moved into the change table is called the latency of change. This is smaller with the HOTLOG method than with the AUTOLOG method. This is because HOTLOG is reading the on-line redo logs and publishing to tables on the same source system, compared with AUTO LOG, where the logs must first be switched and then transported between databases—in which case the frequency of transporting the logs is the determining factor. For HOTLOG, mining the redo logs occurs on a transaction-by-transaction basis when the source transaction commits.

In the EASYDW warehouse, we are interested in all new orders from the order-entry system. The DBA creates the change tables, using the DBMS_CDC_PUBLISH.CREATE_CHANGE_TABLE procedure, and specifies a list of columns that should be included. A change table contains changes from only one source table.

In many of the examples in this chapter, we will use a schema named OLTP, which represents a part of the operational database. In the following example, the DBA uses the CREATE_CHANGE_TABLE procedure to capture the changes to the columns from the ORDERS table in the OLTP schema.

The next two sections provide an example of the two different methods for setting up synchronous and asynchronous CDC and, following that, the common mechanism for subscribing to the change data. To do this we are going to use three new schemas:

OLTP for the owner of our warehouse source tables

OLTPPUB for the publisher of the change data that has occurred on our OLTP tables

OLTPSUBSCR for the subscriber to the change data

Our source table for both methods is ORDERS, and the other two differences between the methods to note are:

For synchronous, the change set is EASYDW_SCS and the change table is ORDERS_SYNCH_CT

For asynchronous, the change set is EASYDW_ACS and the change table is ORDERS_ASYNCH_CT

In the subscriber section, we will highlight what needs to change in order to subscribe to the change data created by these two methods.

From a DBA account, create the new accounts and create a source table in the OLTP account:

CREATE USER oltp IDENTIFIED BY oltp DEFAULT TABLESPACE users TEMPORARY TABLESPACE temp QUOTA UNLIMITED ON users; GRANT connect, resource TO oltp ; CREATE USER oltpsubscr IDENTIFIED BY oltpsubscr DEFAULT TABLESPACE users TEMPORARY TABLESPACE temp QUOTA UNLIMITED ON users; GRANT connect, resource TO oltpsubscr ; CREATE USER oltppub IDENTIFIED BY oltppub QUOTA UNLIMITED ON SYSTEM QUOTA UNLIMITED ON SYSAUX; GRANT CREATE SESSION TO oltppub; GRANT CREATE TABLE TO oltppub; GRANT CREATE TABLESPACE TO oltppub; GRANT UNLIMITED TABLESPACE TO oltppub; GRANT SELECT_CATALOG_ROLE TO oltppub; GRANT EXECUTE_CATALOG_ROLE TO oltppub; GRANT CREATE SEQUENCE TO oltppub; GRANT CONNECT, RESOURCE, DBA TO oltppub; CREATE TABLE oltp.orders (order_id varchar2(8) NOT NULL, product_id varchar2(8) NOT NULL, customer_id varchar2(10) NOT NULL, purchase_date date NOT NULL, purchase_time number(4,0) NOT NULL, purchase_price number(6,2) NOT NULL, shipping_charge number(5,2) NOT NULL, today_special_offer varchar2(1) NOT NULL, sales_person_id varchar2(20) NOT NULL, payment_method varchar2(10) NOT NULL ) TABLESPACE users ;

For synchronous CDC, creation of the change set must use the predefined change source, SYNC_SOURCE, which represents the source database. The following PL/SQL block performs both of these steps:

BEGIN

DBMS_CDC_PUBLISH.CREATE_CHANGE_SET

(change_set_name =>'EASYDW_SCS',

description => 'Synchronous Change set for EasyDW',

change_source_name => 'SYNC_SOURCE'

);

DBMS_CDC_PUBLISH.CREATE_CHANGE_TABLE

(

owner => 'oltppub',

change_table_name => 'ORDERS_SYNCH_CT',

change_set_name => 'EASYDW_SCS',

source_schema => 'oltp',

source_table => 'ORDERS',

column_type_list =>

'order_id varchar2(8),product_id varchar2(8),'

||'customer_id varchar2(10), purchase_date date,'

||'purchase_time number(4,0),purchase_price number(6,2),'

||'shipping_charge number(5,2), '

||'today_special_offer varchar2(1),'

||'sales_person varchar2(20), '

||'payment_method varchar2(10)',

capture_values => 'both',

rs_id => 'y',

row_id => 'n',

user_id => 'n',

timestamp => 'n',

object_id => 'n',

source_colmap => 'y',

target_colmap => 'y',

options_string => 'TABLESPACE USERS'

);

END;

/This script creates the change set EASYDW_SCS for changes to the table ORDERS owned by account OLTP to publish the changes into the change table ORDERS_SYNCH_CT owned by OLTPPUB. We must now grant select privilege on the change table to the subscriber account, OLTPSUBSCR, so that it can see the contents of the table.

GRANT SELECT ON ORDERS_SYNCH_CT TO OLTPSUBSCR ;

When creating the change table, you must specify the column list, which indicates the columns you are interested in capturing. In addition, there are a number of other parameters that allow you to specify:

Whether you want the change table to contain the old values for the row, the new values, or both

Whether you want a row sequence number, which provides the sequence of operations within a transaction

The rowid of the changed row

The user who changed the row

The time stamp of the change

The object id of the change record

A source column map, which indicates the source columns that have been modified

A target column map to track which columns in the change table have been modified

An options column to append to a CREATE TABLE DDL statement

An application can check either the source column map or the target column map to determine which columns have been modified.

A sample of the output will be seen later in the chapter. To see a list of change tables that have been published, query the CHANGE_TABLES dictionary table.

SQL> SELECT CHANGE_TABLE_NAME FROM CHANGE_TABLES; CHANGE_TABLE_NAME ------------------ ORDERS_SYNCH_CT

The DBA then grants SELECT privileges on the change table to the subscribers.

For the asynchronous CDC example, we will use the HOTLOG method, where the change data is mined from the on-line redo logs of the source system.

First, make sure that your source database is in ARCHIVELOG mode, where the log files are being archived to a separate destination area on your file system. It would be very unusual for a source production system not to be already operating in archive log mode, because this is fundamental to any recovery of the database in the event of failure. To put the database in ARCHIVELOG mode, you will need to shut down the database and restart it, as summarized in the following code segment. These commands are executed from SQL*Plus using a sysdba account—for example, SYS:

shutdown immediate startup mount alter database archivelog ; alter database open ;

Hint

Simply changing the ARCHIVELOG mode like this may be fine on a sandpit, test, or play system, but this operation can invalidate your backups—so on any production or similarly important system, you will want to redo your backups.

The next step requires altering the database in order to create the additional logging information into the log files.

ALTER DATABASE FORCE LOGGING;

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

ALTER TABLE oltp.orders

ADD SUPPLEMENTAL LOG GROUP log_group_orders

(order_id,product_id,customer_id,

purchase_date,purchase_time,purchase_price,

shipping_charge,today_special_offer) ALWAYS;The FORCE LOGGING clause to the alter database statement specifies that the database will always generate redo logs, even when database operations have been used with the NOLOGGING clause. This ensures that asynchronous CDC always has the necessary redo log data to mine for the changes. The SUPPLEMENTAL LOG DATA clause is adding minimal supplemental logging, but this does not add a significant overhead to the database performance and performing this enables the use of the Oracle log mining features. This statement is creating an unconditional log group for the data changes for those source table columns to be captured in a change table. Without the unconditional log group, CDC records unchanged column values in UPDATE operations as NULL, making it ambiguous whether the NULL means the value was unchanged or changed to be NULL. With the unconditional log group, CDC records the actual value in UPDATE operations for unchanged column values, so that a NULL always means the value was changed to NULL.

Asynchronous CDC utilizes Oracle Streams for the propagation of our change data within the database and to use Streams we must be granted certain privileges. These privileges enable the user to use Streams and the underlying Oracle Advanced Queue objects, such as queues, propagations, and rules. Execute the following command from your SYSDBA (e.g., SYS) account:

EXECUTE DBMS_STREAMS_AUTH.GRANT_ADMIN_PRIVILEGE

(GRANTEE=>'oltppub'),Each source table must be instantiated with Oracle Streams in order that Streams can capture certain information that it requires in order to record the source table data changes. This is achieved from a DBA account by calling the PREPARE_TABLE_INSTANTIATION procedure as shown here:

EXECUTE DBMS_CAPTURE_ADM.PREPARE_TABLE_INSTANTIATION

(TABLE_NAME=>'oltp.orders'),Now we must create our change set, which we are going to call EASYDW_ACS, using the predefined change source HOTLOG_SOURCE, which represents the current redo log files of the source database. If we were performing an AUTOLOG type of asynchronous CDC, then there would be no predefined change source to be used in this step. Instead, the DBA on the target staging database must define and create the change source using the CREATE_AUTOLOG_CHANGE_SOURCE procedure in the DBMS_CDC_PACKAGE. The call to this procedure uses:

The global name of the source database

The SCN number of the data dictionary build, which is determined by a call to DBMS_CAPTURE_ADM.BUILD() on the source database

In order to interpret the redo logs—for example, in order to know which internal reference number identifies what table—the log mining functionality needs a version of the source system data dictionary. This SCN number identifies a source system redo log, which contains the Log-Miner dictionary and therefore the correct definition of the tables.

Execute the following from the OLTPPUB publisher account. This command creates the change set and its associated Oracle Streams processes but does not start them.

BEGIN

DBMS_CDC_PUBLISH.CREATE_CHANGE_SET

(

change_set_name => 'EASYDW_ACS',

description => 'Asynchronous Change set for purchases info',

change_source_name => 'HOTLOG_SOURCE',

stop_on_ddl => 'y'),

END;

/Now we can create the change table, ORDERS_CT, in the OLTPPUB publisher account, which will contain the changes that have been mined from the on-line redo log. Execute the following from the OLTPPUB account:

BEGIN

DBMS_CDC_PUBLISH.CREATE_CHANGE_TABLE

(

owner => 'oltppub',

change_table_name => 'ORDERS_ASYNCH_CT',

change_set_name => 'EASYDW_ACS',

source_schema => 'OLTP',

source_table => 'ORDERS',

column_type_list =>

'order_id varchar2(8),product_id varchar2(8),'

||'customer_id varchar2(10), purchase_date date,'

||'purchase_time number(4,0),purchase_price number(6,2),'

||'shipping_charge number(5,2),today_special_offer varchar2(1),'

||'sales_person varchar2(20), payment_method varchar2(10)',

capture_values => 'both',

rs_id => 'y',

row_id => 'n',

user_id => 'n',

timestamp => 'n',

object_id => 'n',

source_colmap => 'n',

target_colmap => 'y',

options_string => 'TABLESPACE USERS'),

END;

/

GRANT SELECT ON ORDERS_ASYNCH_CT TO OLTPSUBSCR ;Finally, we will enable our change set EASYDW_ACS, which starts the underlying Oracle Streams processes for moving our change data:

BEGIN

DBMS_CDC_PUBLISH.ALTER_CHANGE_SET

(

change_set_name => 'EASYDW_ACS',

enable_capture => 'y'

);

END;

/To verify that everything is working, perform this simple test. Insert and commit a record into your OLTP.ORDERS source table. After a few minutes you will be able to see the change record in the publisher table OLTPPUB.ORDERS_CT. We have now created the facility using database asynchronous CDC mechanisms to capture changes on our ORDERS table without needing to invasively alter the source schema to create triggers or amend any application.

The extraction programs create subscriptions to access the change tables. A subscription can contain data from one or more change tables in the same change set.

The ALL_SOURCE_TABLES dictionary view lists the source tables that have already been published by the DBA. In this example, changes for the ORDERS table in the OLTP schema have been published.

SQL> SELECT * FROM ALL_SOURCE_TABLES; SOURCE_SCHEMA_NAME SOURCE_TABLE_NAME ------------------------------ ------------------ OLTP ORDERS

There are several steps to creating a subscription.

Create a subscription.

List all the tables and columns the extract program wants to subscribe to.

Activate the subscription.

The first step is to create a subscription. The following is performed from the subscriber account OLTPSUBSCR. Note that for our example, we are going to access the change data created by the asynchronous CDC method via the EASYDW_ACS change set. If we wanted to access the synchronous change data, then we would simply have to use the change set that we created for synchronous CDC, i.e., EASYDW_SCS.

SQL> BEGIN

DBMS_CDC_SUBSCRIBE.CREATE_SUBSCRIPTION

(SUBSCRIPTION_NAME => 'ORDERS_SUB',

CHANGE_SET_NAME => 'EASYDW_ACS',

DESCRIPTION => 'Changes to orders table'),

END;

/Next, specify the source tables and columns of interest using the SUBSCRIBE procedure. A subscription can contain one or more tables from the same change set. The SUBSCRIBE procedure lists the schema, table, and columns of change data that the extract program will use to load the warehouse. In this example, the subscribe procedure is used to get changes from all the columns in the ORDERS table in the OLTP schema. The subscribe procedure is executed once for each table in the subscription, and in this example we were only interested in changes from one table. However, you could repeat this procedure to subscribe to changes to other tables in the change set.

Instead of accessing the change tables directly, the subscriber creates a subscriber view for each source table of interest. This is also done in the call to the SUBSCRIBE procedure.

SQL> BEGIN

DBMS_CDC_SUBSCRIBE.SUBSCRIBE

(SUBSCRIPTION_NAME => 'ORDERS_SUB',

SOURCE_SCHEMA => 'oltp',

SOURCE_TABLE => 'orders',

COLUMN_LIST => 'order_id,product_id,'

||'customer_id, purchase_date,'

||'purchase_time,purchase_price,'

||'shipping_charge, today_special_offer,'

||'sales_person, payment_method',

SUBSCRIBER_VIEW => 'ORDERS_VIEW'

);

END;

/After subscribing to all the change tables, the subscription is activated using the ACTIVATE_SUBSCRIPTION procedure. Activating a subscription is done to indicate that all tables have been added, and the subscription is now complete.

SQL> EXECUTE DBMS_CDC_SUBSCRIBE.ACTIVATE_SUBSCRIPTION (SUBSCRIPTION_NAME => 'ORDERS_SUB'),

Once a subscription has been activated, as new data gets added to the source tables it is made available for processing via the change tables.

To illustrate how change data is processed, let us assume two rows are inserted into the ORDERS table. As data is inserted into the ORDERS table, the changes are also stored in the change table, ORDERS_ASYNCH_CT.

SQL> INSERT INTO oltp.orders

(order_id,product_id,customer_id,

purchase_date,

purchase_time, purchase_price,shipping_charge,

today_special_offer,

sales_person_id,payment_method)

VALUES ('123','SP1031', 'AB123495',

to_date('01-JAN-2004', 'dd-mon-yyyy'),

1031,156.45,6.95,'N','SMITH','VISA'),

1 row created.

SQL> INSERT INTO oltp.orders

(order_id,product_id,customer_id,

purchase_date,

purchase_time,purchase_price,shipping_charge,

today_special_offer,

sales_person_id,payment_method)

VALUES ('123','SP1031','AB123495',

to_date('01-FEB-2004', 'dd-mon-yyyy'),

1031,156.45,6.95,'N','SMITH','VISA'),

1 row created.

SQL> commit;In order to process the change data, a program loops through the steps described in the following text and illustrated in Figure 5.3. A change table is dynamic; new change data is appended to the change table at the same time the extraction programs are reading from it. In order to present a consistent view of the contents of the change table, change data is viewed for a window of source database transactions. Prior to accessing the data, the window is extended. In Figure 5.3, rows 1–8 are available in the first window. While the program was processing these rows, rows 9–13 were added to the change table. Purging the first window and extending the window again can access rows 9–13.

Rather than accessing the change table directly, the program selects the data from the change table using the subscriber view specified earlier in the SUBSCRIBE procedure.

Change data is only available for a window of time: from the time the EXTEND_WINDOW procedure is invoked until the PURGE_WINDOW procedure is invoked. To see new data added to the change table, the window must be extended using the EXTEND_WINDOW procedure.

SQL> BEGIN

DBMS_CDC_SUBSCRIBE.EXTEND_WINDOW

(SUBSCRIPTION_NAME => 'ORDERS_SUB'),

END;

/In this example, the contents of the subscriber view will be examined.

SQL> describe ORDERS_VIEW Name Null? Type ----------------------- -------- ---------------- OPERATION$ CHAR(2) CSCN$ NUMBER COMMIT_TIMESTAMP$ DATE RSID$ NUMBER SOURCE_COLMAP$ RAW(128) TARGET_COLMAP$ RAW(128) CUSTOMER_ID VARCHAR2(10) ORDER_ID VARCHAR2(8) PAYMENT_METHOD VARCHAR2(10) PRODUCT_ID VARCHAR2(8) PURCHASE_DATE DATE PURCHASE_PRICE NUMBER(6,2) PURCHASE_TIME NUMBER(4) SALES_PERSON VARCHAR2(20) SHIPPING_CHARGE NUMBER(5,2) TODAY_SPECIAL_OFFER VARCHAR2(1)

The first column of the output shows the operation: I for insert. Next, the commit scn and commit time are listed. The new data is listed for each row, and the row source id, indicated by RSID$, shows the order of the statements in the transaction.

SQL> SELECT OPERATION$, CSCN$, COMMIT_TIMESTAMP$, RSID$,

CUSTOMER_ID, ORDER_ID, PAYMENT_METHOD,

PRODUCT_ID, PURCHASE_DATE, PURCHASE_PRICE,

PURCHASE_TIME, SALES_PERSON, SHIPPING_CHARGE,

TODAY_SPECIAL_OFFER

FROM ORDERS_VIEW;

OP CSCN$ COMMIT_TIMESTAMP RSID$ CUSTOMER_ID

-- ---------- ---------------- ---------- -----------

ORDER_ID PAYMENT_METHOD PRODUCT_ID PURCHASE_DATE PURCHASE_PRICE PURCHASE_TIME

-------- -------------- ---------- ------------- -------------- -------------

SALES_PERSON SHIPPING_CHARGE TODAY_SPECIAL_OFFER

-------------------- --------------- -------------------

I 6848693 05-JUN-04 10001 AB123495

123 VISA SP1031 01-JAN-04 156.45 1031

6.95 N

I 6848693 05-JUN-04 10002 AB123495

123 VISA SP1031 01-FEB-04 156.45 1031

6.95 NThe window is purged when the data is no longer needed, using the PURGE_WINDOW procedure. When all subscribers have purged their windows, the data in those windows is automatically deleted.

SQL> EXECUTE DBMS_CDC_SUBSCRIBE.PURGE_WINDOW

(SUBSCRIPTION_NAME => 'ORDERS_SUB'),When an extract program is no longer needed, you can end the subscription, using the DROP_SUBSCRIPTION procedure.

SQL> EXECUTE DBMS_CDC_SUBSCRIBE.DROP_SUBSCRIPTION

(SUBSCRIPTION_NAME => 'ORDERS_SUB'),For synchronous and HOTLOG asynchronous CDC, now that the changes have been captured from the operational system, they need to be transported to the staging area on the warehouse database. The extract program could write them to a data file outside the database, use FTP to copy it, and SQL*Loader or external tables to load the change data into the staging area. Alternatively, the changes could be written to a table and moved to the staging area using transportable tablespaces. Both these techniques are discussed later in this chapter. Of course, if we were using the AUTOLOG form of asynchronous CDC, then we will have already moved our changes to the warehouse database and into the staging area as part of the CDC operation.

Once the data has been extracted from the operational systems, it is ready to be cleansed and transformed into a common representation. Differences between naming conventions, storage formats, data types, and encoding schemes must all be resolved. Duplicates are removed, relationships are validated, and unique key identifiers are added. In this section, various types of transformations will be introduced; later in the chapter, we’ll see specific examples of transformations.

Often the information needed to create a table in the warehouse comes from multiple source systems. If there is a field in common between the systems, the data can be joined via that column.

Integrating data from multiple sources can be very challenging. Different people may have designed the operational systems, at different times, and using different styles, standards, and methodologies. They may use different technology (e.g., hardware platforms, database management systems, and operating system software). If data is coming from an IBM mainframe, the data may need to be converted from EBCDIC to ASCII or from big endian to little endian or vice versa.

To compound the problem, there may not be a common identifier in the source systems. For example, when creating the customer dimension, there may not be a CUSTOMER_ID in each system. You may have to look at customer names and addresses to determine that it is the same customer. These may have different spacing, case, and punctuation. Oracle Warehouse Builder helps address the customer deduplication problem.

The majority of operational systems contain some dirty data, which means that there may be:

Duplicate records

Data missing

Data containing invalid values

Data pointing to primary keys that do not exist

Sometimes, business rules are enforced by the applications; other times, by integrity constraints within the database; and sometimes there may be no enforcement at all.

Data must be standardized. For example, any given street address, such as 1741 Coleman Ave., can be represented in many ways. The word “Avenue” may be stored as “Ave,” “Ave.,” “Avenue,” or “AVE.”. Search & Replace transforms allow you to search for any of these values and replace them with the standard value you’ve chosen for your warehouse.

You may want to check the validity of certain types of data. If a product is sold only in three colors, you can validate that the data conforms to a list of values, such as red, yellow, and green. This list may change over time to include new values, for example in February 2002; the product may also be available in blue. You may want to validate data against a larger list of values stored in a table, such as the states within the United States.

Some types of cleansing involve combining and separating character data. You may need to concatenate two string columns—for example, combining LAST_NAME, comma, and FIRST_NAME into the CUSTOMER_NAME column. Or you may need to use a substring operation to divide a string into separate parts, such as separating the area code from a phone number.

An important data integrity step involves enforcement of one-to-one and one-to-many relationships. Often these are checked as part of the transformation process rather than by using referential integrity constraints in the warehouse.

While loading the data, you may want to perform calculations or derive new data from the existing data. For example, you may want to keep a running total or count of records as they are moved from the source to the target database.

During the design process, the appropriate level of granularity for the warehouse is determined. It is often best to store data at various levels of granularity with different retention and archive periods. The most finegrained transaction data will usually be retained for a much shorter period of time than data aggregated at a higher level. Transaction granular sales data is necessary to analyze which products are purchased together. Daily sales of a product by store are used to analyze regional trends and product performance.

Data may be aggregated as part of the transformation process. If you did not want to store the detailed transactions in your data warehouse, the data can be aggregated prior to moving it to the data warehouse.

Instead of using the keys that were used in the operational system, a common design technique is to make up a new key, called the surrogate or synthetic key, to use in the warehouse. The surrogate key is usually a generated sequence of integers.

Surrogate keys are used for a variety of reasons. The keys used in the operational system may be long character strings, with meaning embedded into the components of the key. Because surrogate keys are integers, the fact tables and B*tree indexes are smaller, with fewer levels, and take less space, improving query response time. Surrogate keys provide a degree of isolation from changes in the operational system.

If the operational system changes the product-code naming conventions, or format, all data in the warehouse does not have to be changed. When one company acquires another, you may need to load products from a newly acquired company into the warehouse. It is highly unlikely that both companies used the same product encoding schemes. If there is a chance that the two companies used the same product key for different products, then the product key in the warehouse may need to be extended to add the company id as well. The use of surrogate keys can greatly help integrate the data in these types of situations.

Both the surrogate keys and operational system keys are stored in the dimension table, as shown in Figure 5.4, where product code SR125 is known in the data warehouse as PRODUCT_ID 1. Therefore, we can see that in the fact table, the product key is stored as 1. However, users can happily query using code SR125, completely unaware of the transformation being done within the data warehouse.

The surrogate key is used in the fact table as the column that joins the fact table to the dimension table. In this example, there are two different formats for product codes. Some are numeric, separated by a dash, “654-123”. Others are a mix of alphanumeric and numeric characters, “SR125”. As part of the ETL process, as each fact record is loaded, the surrogate key is looked up in the dimension tables and stored in the fact table.

Transformations of the data may be done at any step in the ETL process. You need to decide the most efficient place to do each transformation: at the source, in the staging area, during the load operation, or in temporary tables once the data is loaded into the warehouse. Several powerful features are present in 9i and new in 10g to facilitate performing transformations.

Transformations can be done as part of the extraction process. In general, it is best to do filtering types of transformations whenever possible at the source. This allows you to select only the records of interest for loading into the warehouse and consequently this also reduces the impact on the network or other mechanism used to transport the data into the warehouse. Ideally, you want to extract only the data that has been changed since your last extraction. While transformations could be done at the source operational system, an important consideration is to minimize the additional load the extraction process puts on the operational system.

Transformations can be done in a staging area prior to loading the data into the data warehouse. When data needs to be integrated from multiple systems, it cannot be done as part of the extraction process. You can use flat files as your staging area, your Oracle database as your staging area, or a combination of both. If your incoming data is in a flat file, it is probably more efficient to finish your staging processes prior to loading the data into the Oracle warehouse. Transformations that require sorting, sequential processing and row-at-a-time operations can be done efficiently in the flat file staging area.

Transformations can be done during the load process. Some important types of transformations can be done as the data is being loaded using SQL*Loader—for example, converting the “endian-ness” of the data, or, changing the case of a character column to uppercase. This is best done when a small number of rows need to be added—for example, when initially loading a dimension table. Oracle external tables facilitate more complex transformations of the data as part of the load process. Sections 5.4.1 and 5.4.3 discuss some examples of transformations while loading the data into the warehouse.

If your source is an Oracle 8i, 9i, or 10g database, transportable tablespaces make it easy to move data into the warehouse without first extracting the data into an external table. In this case, it makes sense to do the transformations in temporary staging tables once the data is in the warehouse. By doing transformations in Oracle, if the data can be processed in bulk using SQL set operations, they can be done in parallel.

Transformations can be done in the warehouse staging tables. Conversion of the natural key to the surrogate key should be performed in the warehouse where the surrogate key is generated. But, in addition in Oracle Database 10g, a new SQL feature, called REGEXP, is introduced for processing character data using regular expressions. This new, powerful feature operates in addition to the simpler, existing text search and replace functions and operators and enables true regular expression matching, substitution, and manipulation to be performed on character data.

When loading the warehouse, the dimension tables are generally loaded first. The dimension tables contain the surrogate keys or other descriptive information needed by the fact tables. When loading the fact tables, information is looked up from the dimension tables and added to the columns in the fact table.

When loading the dimension table, you need both to add new rows and make changes to existing rows. For example, a customer dimension may contain tens of thousands of customers. Usually, only 10 percent or less of the customer information changes. You will be adding new customers and sometimes modifying the information about existing customers.

When adding new data to the dimension table, you need to determine if the record already exists. If it does not, you can add it to the table. If it does exist, there are various ways to handle the changes, based on whether you need to keep the old information in the warehouse for analysis purposes.

If a customer’s address changes, there is generally no need to retain the old address, so the record can simply be updated. In a rapidly growing company the sales regions will change often. For example, “Canada” rolled up into the “rest of the world” until 1990, and then rolled up into the “Americas,” after reorganization. If you needed to understand both the old geographical hierarchy as well as the new one, you can create a new dimension record containing all the old data plus the new hierarchy, giving the record a new surrogate key. Alternatively, you could create columns in the original record to hold both the previous and current values.

One dimension that will usually be present in any data warehouse is the time dimension, which contains one row for each unit of time that is of interest in the warehouse. In the EASYDW shopping example, purchases can be made on-line 365 days a year, so every day is of interest. For each given date, information about the day is stored, including the day of the week, the week number, the month, the quarter, the year, and if it is a holiday. The time dimension may be loaded on a yearly basis.

When loading the fact table, you typically append new information to the end of the existing fact table. You do not want to alter the existing rows, because you want to preserve that data. For example, the PURCHASES fact table contains three months of data. New data from the source order-entry system is appended to the purchases fact table monthly. Partitioning the data by month facilitates this type of operation.

In the next sections, we will take a look at different ways to load data, including:

SQL*Loader—which inserts data into a new table or appends to an existing table when your data is in a flat file that is external to the database.

Data Pump utilities for export and import.

External Tables—inserts data into a new table or appends to an existing table when your data is in a flat file that is external to the database and you want to transform it while loading.

Transportable Tablespaces—used to move the data from between two Oracle databases, such as the operational system and the warehouse, which may reside on different operating system platforms.

One of the most popular tools for loading data is SQL*Loader, because it has been designed to load records as fast as possible. Its particular strength is that the format of the records that it can load are fully user definable, which can make it an ideal mechanism for loading data from non-Oracle source systems. For example, one possible format is to use comma-separated value (CSV) files. SQL*Loader can be used either from the operating system command line or via its wizard in Oracle Enterprise Manager (OEM), which we will discuss now.

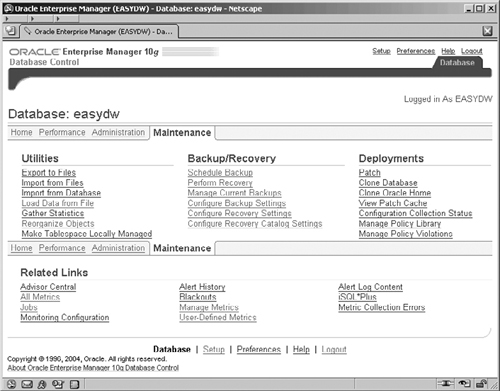

Figure 5.5 shows the Oracle Enterprise Manager Maintenance screen, from where you can launch the load wizard from the Utilities section. Click on the Load Data from File link. The wizard guides you through the process of loading data from an external file into the database according to a set of instructions in a control file and the subsequent submission of a batch job through Enterprise Manager to execute the load.

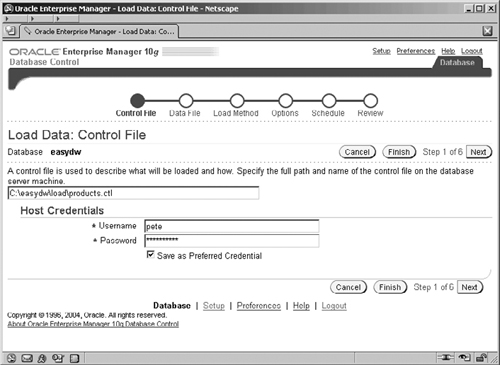

The control file is a text file that describes the load operation. The role of the control file, which is illustrated in Figure 5.6, is to tell SQL*Loader which datafile to load, how to interpret the records and columns, and into which tables to insert the data. At this point you also need to specify a host server account, which Enterprise Manager can use to execute your SQL*Loader job.

Hint

This is a server account and not the database account that you wish to use to access the tables that you’re loading into.

The control file is written in SQL*Loader’s data definition language. The following example shows the control file that would be used to add new product data into the product table in the EASYDW warehouse. The data is stored in the file product.dat. New rows will be appended to the existing table.

- Load product dimension LOAD DATA INFILE 'product.dat' append INTO TABLE product FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY "'" (product_id, product_name, category, cost_price, sell_price, weight, shipping_charge, manufacturer, supplier)

The following example shows a small sample of the datafile product.dat. Each field is separated by a comma and optionally enclosed in a single quote. Each field in the input file is mapped to the corresponding columns in the table. As the data is read, it is converted from the data type in the input file to the data type of the column in the database.

'SP1242', 'CD LX1','MUSC', 8.90, 15.67, 2.5, 2.95, 'RTG', 'CD Inc' 'SP1243', 'CD LX2','MUSC', 8.90, 15.67, 2.5, 2.95, 'RTG', 'CD Inc' 'SP1244', 'CD LX3','MUSC', 8.90, 15.67, 2.5, 2.95, 'RTG', 'CD Inc' 'SP1245', 'CD LX4','MUSC', 8.90, 15.67, 2.5, 2.95, 'RTG', 'CD Inc'

The datafile is an example of data stored in Stream format. A record separator, often a line feed or carriage return/line feed, terminates each record. A delimiter character, often a comma, separates each field. The fields may also be enclosed in single or double quotes.

In addition to the Stream format, SQL*Loader supports fixed-length and variable-length format files. In a fixed-length file, each record is the same length. Normally, each field in the record is also the same length. In the control file, the input record is described by specifying the starting position, length, and data type. In a variable-length file, each record may be a different length. The first field in each record is used to specify the length of that record.

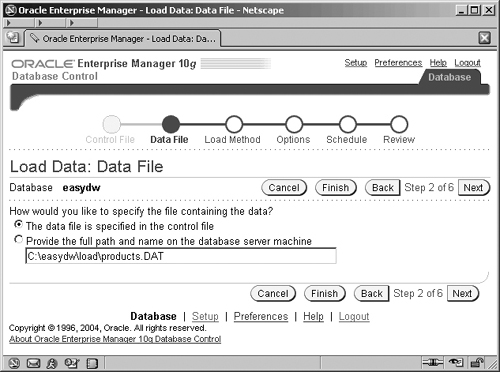

In OEM, the screen shown in Figure 5.7 is where the datafile is specified, and here it is c:easydwloadproducts.dat. The alternative mechanism to specify the data file location is within the control file, as shown previously by the INFILE parameter.

Figure 5.8 shows SQL*Loader’s three modes of operation:

Conventional path

Direct path

Parallel direct path

Conventional path load should only be used to load small amounts of data, such as initially loading a small dimension table or when loading data with data types not supported by direct path load. The conventional path load issues SQL INSERT statements. As each row is inserted, the indexes are updated, triggers are fired, and constraints are evaluated. When loading large amounts of data in a small batch window, direct path load can be used to optimize performance.

Direct path load bypasses the SQL layer. It formats the data blocks directly and writes them to the database files. When running on a system with multiple processors, the load can be executed in parallel, which can result in significant performance gains.

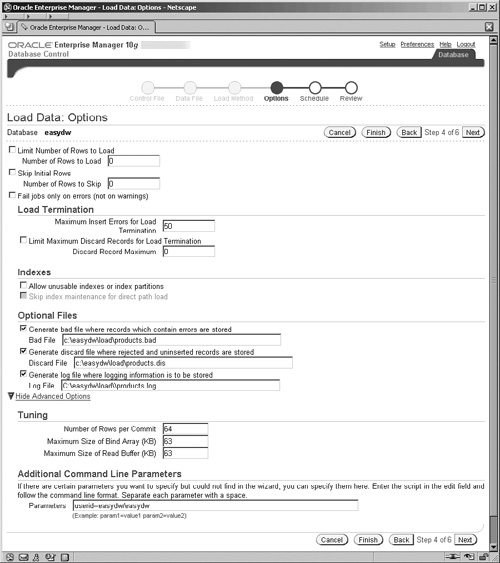

In the next step in the wizard, you are presented with the screen shown in Figure 5.9, which contains various options to control the execution of your SQL*Loader operation.

A common method when extracting datafiles from the source system is to have the first record in the file contain the names of the fields of the subsequent data records. This mechanism helps to self-document the datafile, which can be particularly useful when resolving errors for files with large and complex record formats. However, we do not want to have to manually remove this record prior to loading files of this format every single time this datafile is received. The Skip Initial Rows option will instruct SQL*Loader to do this removal for us automatically.

You can create additional files during the load operation to aid in the diagnosis and correction of any errors that may occur during the load. A log file is created to record the status of the load operation. This should always be reviewed to ensure the load was successful. Copies of the records that could not be loaded into the database because of data integrity violations can be saved in a “bad” file. This file can later be reentered once the data integrity problems have been corrected. If you receive an extract file with more records than you are interested in, you can load a subset of records from the file. The WHEN clause in the control file is used to select the records to load. Any records that are skipped are written to a discard file.

Select which optional files you would like created using the advanced option, as shown in Figure 5.9. In this example, we’ve selected a bad file, a discard file and a log file.

In order to specify the database account that SQL*Loader will log on with, you need to use the advanced options. Clicking on the Show Advanced Options link displays a hidden part of the page, where you can specify the account and password in the Parameters, field using the USERID parameter as shown.

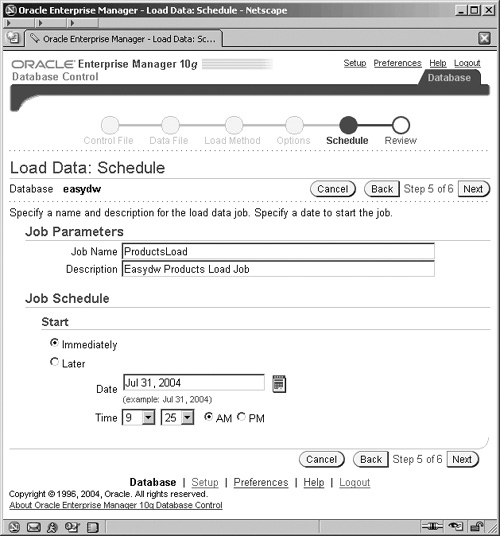

Enterprise Manager’s job scheduling system allows you to create and manage jobs, schedule the jobs to run, and monitor progress. You can run a job once or choose how frequently you would like the job to run. If you will run the job multiple times, you can save the job in Enterprise Manager’s jobs library so that it can be rerun in the future. In Figure 5.10, the job will be scheduled to run immediately. Click on Next and you will see the review screen (not shown) and clicking the Submit Job on this screen will actually submit your job for execution and display a status screen. At this point you will also have the option to monitor your job by clicking the View Job button which displays the screen shown in Figure 5.11 for monitoring your job.

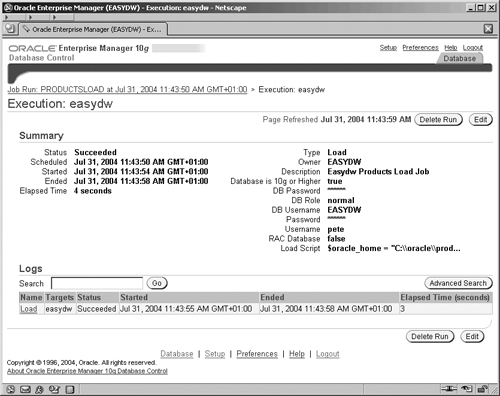

You can also monitor the progress of a job while it is running by going to the bottom of the Administration screen and clicking on Jobs in the Related Links section. The Job Activity page shown in Figure 5.11 lists all jobs that are either running, are scheduled to run, or have completed.

The Results section contains a list of jobs and their status. You can check to see if the job ran successfully after it has completed and look at the output to see any errors when a job has failed. By selecting the job and clicking on the View or Edit buttons you can view information about the job’s state and progress, as shown in Figure 5.12.

When SQL*Loader executes, it creates a log file, which can be inspected. The following example shows a log file from a sample load session that can be accessed by clicking on the Load name on the screen shown in Figure 5.12. The log file is also written to the file system (by default into the directory where the loader control file is held). Four rows were appended to the product table; one record was rejected due to invalid data. Copies of those bad records can be found in the file products.bad.

SQL*Loader: Release 10.1.0.2.0 - Production on Sat Jul 31 11:43:56 2004

Copyright (c) 1982, 2004, Oracle. All rights reserved.

Control File: C:easydwloadproducts.ctl

Data File: c:easydwloadproducts.dat

Bad File: C:easydwloadproducts.bad

Discard File: none specified

(Allow all discards)

Number to load: ALL

Number to skip: 0

Errors allowed: 50

Bind array: 64 rows, maximum of 64512 bytes

Continuation: none specified

Path used: Conventional

Table PRODUCT, loaded from every logical record.

Insert option in effect for this table: APPEND

Column Name Position Len Term Encl Datatype

------------------------------ ---------- ----- ---- ---- --------

PRODUCT_ID FIRST * , O(') CHARACTER

PRODUCT_NAME NEXT * , O(') CHARACTER

CATEGORY NEXT * , O(') CHARACTER

COST_PRICE NEXT * , O(') CHARACTER

SELL_PRICE NEXT * , O(') CHARACTER

WEIGHT NEXT * , O(') CHARACTER

SHIPPING_CHARGE NEXT * , O(') CHARACTER

MANUFACTURER NEXT * , O(') CHARACTER

SUPPLIER NEXT * , O(') CHARACTER

value used for ROWS parameter changed from 64 to 27

Record 5: Rejected - Error on table PRODUCT, column PRODUCT_ID.

Column not found before end of logical record (use TRAILING

NULLCOLS)

Table PRODUCT:

4 Rows successfully loaded.

1 Row not loaded due to data errors.

0 Rows not loaded because all WHEN clauses were failed.

0 Rows not loaded because all fields were null.

Space allocated for bind array: 62694 bytes(27 rows)

Read buffer bytes: 64512

Total logical records skipped: 0

Total logical records read: 5

Total logical records rejected: 1

Total logical records discarded: 0

Run began on Sat Jul 31 11:43:56 2004

Run ended on Sat Jul 31 11:43:56 2004

Elapsed time was: 00:00:00.66

CPU time was: 00:00:00.08When loading large amounts of data in a small batch window, a variety of techniques can be used to optimize performance:

Using direct path load. Formatting the data blocks directly and writing them to the database files eliminate much of the work needed to execute a SQL INSERT statement. Direct path load requires exclusive access to the table or partition being loaded. In addition, triggers are automatically disabled, and constraint evaluation is deferred until the load completes.

Disabling integrity constraint evaluation prior to loading the data. When loading data with direct path, SQL*Loader automatically disables all CHECK and REFERENCES integrity constraints. When using parallel direct path load or loading into a single partition, other types of constraints must be disabled. You can manually disable evaluation of not null, unique, and primary-key constraints during the load process as well. When the load completes, you can have SQL*Loader reenable the constraints, or do it yourself manually.

Loading the data in sorted order. Presorting data minimizes the amount of temporary storage needed during the load, enabling optimizations to minimize the processing during the merge phase to be applied. To tell SQL*Loader which indexes the data is sorted on, use the SORTED INDEXES statement in the control file.

Deferring index maintenance. Indexes are maintained automatically whenever data is inserted or deleted, or the key column is updated. When loading large amounts of data with direct path load, it may be faster to defer index maintenance until after the data is loaded. You can either drop the indexes prior to the beginning of the load or skip index maintenance by setting SKIP_INDEX_MAINTENANCE=TRUE on the SQL*Loader command line. Index partitions that would have been updated are marked “index unusable,” because the index segment is inconsistent with respect to the data it indexes. After the data is loaded, the indexes must be rebuilt separately.

Disabling redo logging by using the UNRECOVERABLE option in the control file. By default, all changes made to the database are also written to the redo log so they can be used to recover the database after failures. Media recovery is the process of recovering after the loss of a database file, often due to a hardware failure such as a disk head crash. By disabling redo logging, the load is faster.

However, if the system fails in the middle of loading the data, you need to restart the load, since you cannot use the redo log for recovery. If you are using Oracle Data Guard to protect your data with a logical or physical standby database, you may not want to disable redo logging. Any data not logged cannot be automatically applied to the standby site.

After the data is loaded, using the UNRECOVERABLE option, it is important to do a backup to make sure you can recover the data in the future if the need arises.

Loading the data into a single partition. While you are loading a partition of a partitioned or subpartitioned table, other users can continue to access the other partitions in the table. Loading the April transactions will not prevent users from querying the existing data for January through March. Thus, overall availability of the warehouse is increased.

Loading the data in parallel. When a table is partitioned, it can be loaded into multiple partitions in parallel. You can also set up multiple, concurrent sessions to perform a load into the same table or into the same partition of a partitioned table.

Increasing the STREAMSIZE parameter can lead to better direct path load times, since larger amounts of data will be passed in the data stream from the SQL*Loader client to the Oracle server.

If the data being loaded contains many duplicate dates, using the DATE_CACHE parameter can lead to better performance of direct path load. Use the date cache statistics (entries, hits, and misses) contained in the SQL*Loader log file to tune the size of the cache for future similar loads.

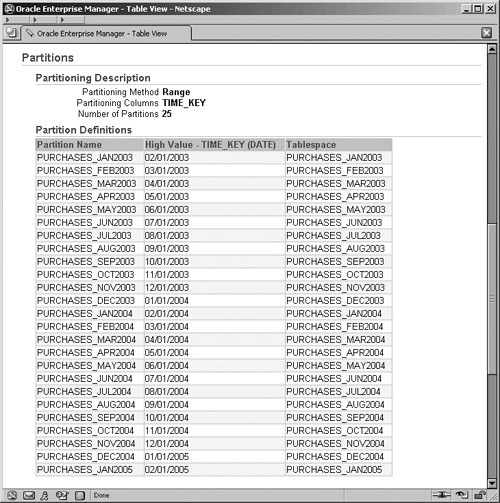

Next, we will look at an example of loading data into a single partition. In the EASYDW warehouse, the fact table is partitioned by date. At the end of April, the April sales transactions are loaded into the EASYDW warehouse.

In the following example, we create a tablespace, add a partition to the purchases table, and then use SQL*Loader direct path load to insert the data into the January 2005 partition.

CREATE TABLESPACE purchases_jan2005 DATAFILE 'C:ORACLEPRODUCT10.1.0ORADATAEASYDWPURCHASESJAN2005.f' SIZE 5M REUSE AUTOEXTEND ON DEFAULT STORAGE (INITIAL 64K NEXT 64K PCTINCREASE 0 MAXEXTENTS UNLIMITED);

If our new partition is higher than the last partition in the table (i.e., based on the boundary clauses), then we can add a partition, as follows:

ALTER TABLE easydw.purchases

ADD PARTITION purchases_jan2005

VALUES LESS THAN (TO_DATE('01-02-2005', 'DD-MM-YYYY'))

PCTFREE 0 PCTUSED 99

STORAGE (INITIAL 64K NEXT 64K PCTINCREASE 0)

TABLESPACE purchases_jan2005;When using direct path load of a single partition, referential and check constraints on the table partition must be disabled, along with any triggers.

SQL> ALTER TABLE purchases DISABLE CONSTRAINT fk_time; SQL> ALTER TABLE purchases DISABLE CONSTRAINT fk_product_id; SQL> ALTER TABLE purchases DISABLE CONSTRAINT fk_customer_id;

The status column in the USER_CONSTRAINTS view can be used to determine if the constraint is currently enabled or disabled. Here we can see that the special_offer constraint is still enabled.

SQL> SELECT TABLE_NAME, CONSTRAINT_NAME, STATUS

FROM USER_CONSTRAINTS

WHERE TABLE_NAME = 'PURCHASES';

TABLE_NAME CONSTRAINT_NAME STATUS

------------- ----------------------- --------

PURCHASES NOT_NULL_PRODUCT_ID DISABLED

PURCHASES NOT_NULL_TIME DISABLED

PURCHASES NOT_NULL_CUSTOMER_ID DISABLED

PURCHASES SPECIAL_OFFER ENABLED

PURCHASES FK_PRODUCT_ID DISABLED

PURCHASES FK_TIME DISABLED

PURCHASES FK_CUSTOMER_ID DISABLED

7 rows selected.The status column in the USER_TRIGGERS view can be used to determine if any triggers must be disabled. There are no triggers on the PURCHASES table.

SQL> SELECT TRIGGER_NAME, STATUS

FROM ALL_TRIGGERS

WHERE TABLE_NAME = 'PURCHASES';

no rows selectedThe following example shows the SQL*Loader control file to load new data into a single partition. Note that the partition clause is used.

OPTIONS (DIRECT=TRUE)

UNRECOVERABLE LOAD DATA

INFILE 'purchases.dat' BADFILE 'purchases.bad'

APPEND

INTO TABLE purchases

PARTITION (purchases_jan2005)

(product_id position (1-6) char,

time_key position (7-17) date "DD-MON-YYYY",

customer_id position (18-25) char,

ship_date position (26-36) date "DD-MON-YYYY",

purchase_price position (37-43) decimal external,

shipping_charge position (44-49) integer external,

today_special_offer position (50) char)The unrecoverable keyword is specified, disabling media recovery for the table being loaded by disabling the redo logging for this operation; this also necessitates that the DIRECT option be used as well. Database changes being made by other users will continue to be logged. After disabling media recovery, it is important to do a backup to make it possible to recover the data in the future if the need arises. If you attempted media recovery before the backup was taken, you would discover that the data blocks that were loaded have been marked as logically corrupt. To recover the data, if you haven’t performed the backup following the load operation, you would have to drop the partition and reload the data.

Any data that cannot be loaded will be written to the file purchases.bad. Data will be loaded into the PURCHASES_JAN2005 partition. This example shows loading a fixed-length file named purchases.dat. Each field in the input record is described by specifying the starting position, its ending position, and its data type. Note: These are SQL*Loader data types representing the data formats in the file, not the data types in an Oracle table. When the data is loaded into the tables, each field is converted to the data type of the Oracle table column, if necessary.

The following example shows a sample of the purchases data file. The PRODUCT_ID starts in column 1 and is six bytes long. “Time_key” starts in column 7 and is 11 bytes long. The data mask “DD-MON-YYYY” is used to describe the input format of the date fields.

12345678901234567890123456789012345678901234567890

| | | | | |

SP100001-jan-2005AB12367501-jan-20050067.23004.50N

SP101001-jan-2005AB12367301-jan-20050047.89004.50NThe alternative method to invoke SQL*Loader direct path mode is from the command line using DIRECT=TRUE. In this example, skip_index_ maintenance is set to true, so the indexes will need to be rebuilt after the load.

sqlldr USERID=easydw/easydw CONTROL=purchases.ctl LOG=purchases.log DIRECT=TRUE SKIP_INDEX_MAINTENANCE=TRUE

The following example shows a portion of the SQL*Loader log file from the load operation. Rather than generating redo to allow recovery, invalidation redo was generated to let Oracle know this table cannot be recovered. The indexes were made unusable. The column starting position and length are described.

SQL*Loader: Release 10.1.0.2.0 - Production on Mon Jun 7 14:59:24 2004 Copyright (c) 1982, 2004, Oracle. All rights reserved. Control File: purchases.ctl Data File: purchases.dat Bad File: purchases.bad Discard File: none specified (Allow all discards) Number to load: ALL Number to skip: 0 Errors allowed: 50 Continuation: none specified Path used: Direct Load is UNRECOVERABLE; invalidation redo is produced. Table PURCHASES, partition PURCHASES_JAN2005, loaded from every logical record. Insert option in effect for this partition: APPEND Column Name Position Len Term Encl Datatype ------------------------------ ---------- ----- ---- ---- --------------------- PRODUCT_ID 1:6 6 CHARACTER TIME_KEY 7:17 11 DATE DD-MON-YYYY CUSTOMER_ID 18:25 8 CHARACTER SHIP_DATE 26:36 11 DATE DD-MON-YYYY PURCHASE_PRICE 37:43 7 CHARACTER SHIPPING_CHARGE 44:49 6 CHARACTER TODAY_SPECIAL_OFFER 50 1 CHARACTER Record 3845: Discarded - all columns null. The following index(es) on table PURCHASES were processed: index EASYDW.PURCHASE_CUSTOMER_INDEX partition PURCHASES_JAN2005 was made unusable due to: SKIP_INDEX_MAINTENANCE option requested index EASYDW.PURCHASE_PRODUCT_INDEX partition PURCHASES_JAN2005 was made unusable due to: SKIP_INDEX_MAINTENANCE option requested index EASYDW.PURCHASE_SPECIAL_INDEX partition PURCHASES_JAN2005 was made unusable due to: SKIP_INDEX_MAINTENANCE option requested index EASYDW.PURCHASE_TIME_INDEX partition PURCHASES_JAN2005 was made unusable due to: SKIP_INDEX_MAINTENANCE option requested

After loading the data into a single partition, all references to constraints and triggers must be reenabled. All local indexes for the partition can be maintained by SQL*Loader. Global indexes are not maintained on single partition or subpartition direct path loads and must be rebuilt. In the previous example, the indexes must be rebuilt since index maintenance was skipped.

These steps are discussed in more detail later in the chapter.

When a table is partitioned, the direct path loader can be used to load multiple partitions in parallel. Each parallel direct path load process should be loaded into a partition of a table stored on a separate disk to minimize I/O contention.

Since data is extracted from multiple operational systems, you will often have several input files that need to be loaded into the warehouse. These files can be loaded in parallel, and the workload distributed among several concurrent SQL*Loader sessions.

Figure 5.13 shows an example of how parallel direct path load can be used to initially load the historical transactions into the purchases table. You need to invoke multiple SQL*Loader sessions. Each SQL*Loader session takes a different datafile as input. In this example, there are three data files, each containing the purchases for one month: January, February, and March. These will be loaded into the purchases table, which is also partitioned by month. Each datafile is loaded in parallel into its own partition.

It is suggested that the following steps be followed to load data in parallel using SQL*Loader.

Constraints cannot be evaluated, and triggers cannot be fired during a parallel direct path load. If you forget, SQL*Loader will issue an error.

Indexes cannot be maintained during a parallel direct path load. However, if we are loading only a few partitions out of many, then it is probably better to skip index maintenance and have them marked as unusable instead.

By invoking multiple SQL*Loader sessions and setting direct and parallel to true, the processes will load concurrently. Depending on your operating system, you may need to put an “&” at the end of each line (i.e., to be able to invoke one sqlldr and immediately progress to invoking the second without waiting for number one to complete).

sqlldr userid=easydw/easydw CONTROL=jan.ctl DIRECT=TRUE PARALLEL=TRUE sqlldr userid=easydw/easydw CONTROL=feb.ctl DIRECT=TRUE PARALLEL=TRUE sqlldr userid=easydw/easydw CONTROL=mar.ctl DIRECT=TRUE PARALLEL=TRUE

A portion of one of the log files follows. Note that the mode is direct with the parallel option.

SQL*Loader: Release 10.1.0.2.0 - Production on Sat Jun 5 18:30:43 2004

Copyright (c) 1982, 2004, Oracle. All rights reserved.

Control File: c:feb.ctl

Data File: c:feb.dat

Bad File: c:feb.bad

Discard File: none specified

(Allow all discards)

Number to load: ALL

Number to skip: 0

Errors allowed: 50

Continuation: none specified

Path used: Direct - with parallel option.

Load is UNRECOVERABLE; invalidation redo is produced.

Table PURCHASES, partition PURCHASES_FEB2004, loaded from every logical record.

Insert option in effect for this partition: APPENDAfter using parallel direct path load, reenable any constraints and triggers that were disabled for the load. Recreate any indexes that were dropped.

If you receive extract files that have data in them that you do not want to load into the warehouse, you can use SQL*Loader to filter the rows of interest. You select the records that meet the load criteria by specifying a WHEN clause to test an equality or inequality condition in the record. If a record does not satisfy the WHEN condition, it is written to the discard file. The discard file contains records that were filtered out of the load, because they did not match any record-selection criteria specified in the control file. Note that these records differ from rejected records written to the BAD file. Discarded records do not necessarily have any bad data. The WHEN clause can be used with either conventional or direct path load.

You can use SQL*Loader to perform simple types of transformations on character data. For example, portions of a string can be inserted using the substring function; two fields can be concatenated together using the CONCAT operator. You can trim leading or trailing characters from a string using the trim operator. The control file in the following example illustrates both the use of the WHEN clause to discard any rows where the PRODUCT_ID is blank and how to uppercase the PRODUCT_ID column.

LOAD DATA INFILE 'product.dat' append INTO TABLE product WHEN product_id != BLANKS FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY "'" (product_id "upper(:product_id)", product_name, category, cost_price, sell_price, weight, shipping_charge, manufacturer, supplier)

The discard file is specified when invoking SQL*Loader from the command line, as shown here, or with the OEM Load wizard, as seen previously.

sqlldr userid=easydw/easydw CONTROL=product.ctl LOG=product.log BAD=product.bad DISCARD=product.dis DIRECT=true

These types of transformations can be done with both direct path and conventional path modes; however, since they are applied to each record individually, they do have an impact on the load performance.

After the data is loaded, you may need to process exceptions, reenable constraints, and rebuild indexes.

Always look at the logs to ensure that the data was loaded successfully. Validate that the correct number of rows have been added.