CHAPTER 1

The Future, So Far

Behind all the great material inventions of the last century and a half was not merely a long internal development of technics: There was also a change of mind.

—LEWIS MUMFORD

There is a point of view—generally called “technological determinism”—that essentially says that each technological breakthrough inexorably leads to the next. Once we have light bulbs, we will inevitably stumble upon vacuum tubes. When we see what they can do, we will rapidly be led to transistors, and integrated circuits and microprocessors will not be far behind. This process—goes the argument—is essentially automatic, with each domino inevitably knocking down the next, as we careen toward some unknown but predetermined future.

We are not sure we would go that far, but it is certainly the case that each technological era sets the stage for the next. The future may or may not be determined, but a discerning observer can do a credible job of paring down the alternatives. All but the shallowest of technological decisions are necessarily made far in advance of their appearance in the market, and by the time we read about an advance on the cover of Time magazine, the die has long since been cast. Indeed, although designers of all stripes take justifiable pride in their role of “inventing the future,” a large part of their day-to-day jobs involves reading the currents and eddies of the flowing river of science and technology in order to help their clients navigate.

Although we are prepared to go out on a limb or two, it won’t be in this chapter. Many foundational aspects of the pervasive-computing future have already been determined, and many others will follow all but inevitably from well-understood technical, economic, and social processes. In this chapter, we will make predictions about the future, some of which may not be immediately obvious. But we will try to limit these predictions to those that most well-informed professionals would agree with. If you are one of these professionals (that is to say, if you find the term pervasive computing and its many synonyms commonplace), you may find this chapter tedious, and you should feel free to skip ahead. But if the sudden appearance of the iPad took you by surprise, or if you have difficulty imagining a future without laptops or web browsers, then please read on.

TRILLIONS IS A DONE DEAL

To begin with, there is this: There are now more computers in the world than there are people. Lots more. In fact, there are now more computers, in the form of microprocessors, manufactured each year than there are living people. If you step down a level and count transistors, the building blocks of computing, you find an even more startling statistic. As early as 2002 the semiconductor industry touted that the world produces more transistors than grains of rice, and at a lower cost apiece. But counting microprocessors is eye-opening enough. Accurate production numbers are hard to come by, but a reasonable estimate is ten billion processors per year. And the number is growing rapidly.

Many people find this number implausible. Where could all these computers be going? Many American families have a few PCs or laptops, and you probably know some geeks who have maybe eight or ten. But many households still have none. Cell phones and iPads count, too. But ten billion a year? Where could they all possibly be going?

The answer is everywhere. Only a tiny percentage of processors find their way into anything that we would recognize as a computer. Every modern microwave oven has at least one; as do washing machines, stoves, vacuum cleaners, wrist watches, and so on. Indeed, it is becoming increasingly difficult to find a recently designed electrical device of any kind that does not employ microprocessor technology.

Why would one put a computer in a washing machine? There are some quite interesting answers to this question that we will get to later. But for present purposes, let’s just stick to the least interesting answer: It saves money. If you own a washer more than ten years old, it most likely has one of those big, clunky knobs that you pull and turn in order to set the cycle. A physical pointer turns with it, showing at a glance which cycle you have chosen and how far into that cycle the machine has progressed. This is actually a pretty good bit of human-centered design. The pointer is clear and intuitive, and the act of physically moving the pointer to where you want it to be is satisfyingly literal. However, if you have a recently designed washer, this knob has probably been replaced with a bunch of buttons and a digital display, which, quite possibly, is not as easy to use.

So why the step backward? Well, let’s think for a second about that knob and pointer. They are the tip of an engineering iceberg. Behind them is a complex and expensive series of cams, clockwork, and switch contacts whose purpose is to turn on and off all the different valves, lights, buzzers, and motors throughout the machine. It even has a motor of its own, needed to keep things moving forward. That knob is the most complex single part in the appliance. A major theme of twentieth-century industrialization involved learning how to build such mechanically complex devices cheaply and reliably. The analogous theme of the early twenty-first century is the replacement of such components with mechanically trivial microprocessor-based controllers. This process is now ubiquitous in the manufacturing world.
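To make the replacement concrete, here is a minimal sketch of how a microprocessor-based controller might stand in for the cams and clockwork: the whole wash cycle becomes a table of steps, and a few lines of code decide which outputs are energized at any moment. The step names, durations, and actuator names are invented for illustration and are not taken from any real appliance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    minutes: int
    outputs: frozenset  # actuators energized for the whole step


# Hypothetical cycle table: every name and duration here is an assumption.
# In the mechanical design, this information is cut into the shape of the cams.
NORMAL_CYCLE = (
    Step("fill", 4, frozenset({"water_valve"})),
    Step("wash", 12, frozenset({"drum_motor"})),
    Step("drain", 3, frozenset({"drain_pump"})),
    Step("rinse", 6, frozenset({"water_valve", "drum_motor"})),
    Step("spin", 5, frozenset({"drum_motor", "drain_pump"})),
)


def total_minutes(cycle):
    """Length of the whole cycle in minutes."""
    return sum(step.minutes for step in cycle)


def active_step(cycle, elapsed_minutes):
    """Return the step in progress at the given elapsed time, or None when the cycle is over."""
    if elapsed_minutes < 0:
        raise ValueError("elapsed_minutes must be non-negative")
    boundary = 0
    for step in cycle:
        boundary += step.minutes
        if elapsed_minutes < boundary:
            return step
    return None
```

Under these assumptions, adding a new cycle means adding another table rather than machining another cam, which is the sense in which the controller is mechanically trivial.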

In essence, the complexity that formerly resided in intricate electromechanical systems has almost completely migrated to the ethereal realm of software. Now, you might think that complexity is complexity and we will pay for it one way or another. There is truth in this statement, as we will see. However, there is a fundamental economic difference between complexity-as-mechanism and complexity-as-software. The former represents a unit cost, and the latter is what is known as a nonrecurring engineering expense (NRE). That is to say, the manufacturing costs of mechanical complexity recur for every unit made, whereas the replication cost of a piece of software—no matter how complex—approaches zero.
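The arithmetic behind this distinction can be sketched in a few lines. The model below spreads a one-time engineering expense over the number of units built and adds it to the recurring cost of each unit. All dollar figures in the usage note are hypothetical, and the mechanical design's own engineering expense is ignored for simplicity.

```python
def cost_per_unit(unit_cost, nre, units):
    """Recurring cost of one unit plus its share of the one-time engineering expense."""
    if units <= 0:
        raise ValueError("units must be positive")
    return unit_cost + nre / units


def break_even_units(mech_unit_cost, sw_unit_cost, sw_nre):
    """Production volume at which the software-based design matches the mechanical one.

    Returns None if the software-based design saves nothing per unit.
    Simplifying assumption: the mechanical design carries no NRE of its own.
    """
    saving_per_unit = mech_unit_cost - sw_unit_cost
    if saving_per_unit <= 0:
        return None
    return sw_nre / saving_per_unit
```

For example, with an assumed $12.00 mechanical timer, an assumed $2.00 microcontroller, and an assumed $500,000 software development effort, `break_even_units(12.0, 2.0, 500_000)` gives 50,000 units. At a million units, `cost_per_unit(2.0, 500_000, 1_000_000)` comes to $2.50, and the software's share of each unit keeps shrinking as volume grows.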

This process of substituting “free” software for expensive mechanism repeats itself in product after product, and industry after industry. It is in itself a powerful driver in our climb towards Trillions. As manufacturing costs increase and computing costs decrease, the process works its way down the scale of complexity. It is long since complete in critical and subtle applications such as automotive engine control and industrial automation. It is nearly done in middling applications such as washing machines and blenders, and has made significant inroads in low-end devices such as light switches and air-freshener dispensers.

Money-saving is a powerful engine for change. As the generalization from these few examples makes clear, even if computerized products had no functional advantage whatsoever over their mechanical forebears, the rapid computerization of the built world would be assured. But this is just the beginning of the story. So far, we have been considering only the use of new technology to do old things. The range of products and services that were not practical before computerization is far larger. For every opportunity to replace some existing mechanism with a processor, there are hundreds of new products that were either impossible or prohibitively expensive in the precomputer era. Some of these are obvious: smartphones, GPS devices, DVD players, and all the other signature products of our age. But many others go essentially unnoticed, often written off as trivialities or gimmicks. Audio birthday cards are old news, even cards that can record the voice of the sender. Sneakers that send runners’ stride data to mobile devices are now commonplace. Electronic tags sewn into hotel towels that guard against pilferage, and capture new forms of revenue from souvenirs, are becoming common. The list is nearly endless.

Automotive applications deserve a category of their own. Every modern automobile contains many dozens of processors. High-end cars contain hundreds. Obvious examples include engine-control computers and GPS screens. Less visible are the controllers inside each door that implement a local network for controlling and monitoring the various motors, actuators, and sensors inside the door—thus saving the expense and weight of running bulky cables throughout the vehicle. Similar networks direct data from accelerometers and speed sensors, not only to the vehicle’s GPS system, but also to advanced braking and stability control units, each with its own suite of processors. Drilling further down into the minutiae of modern vehicle design, one finds intelligent airbag systems that deploy with a force determined by the weight of the occupant of each seat. How do they know that weight? Because the bolts holding the seats in place each contain a strain sensor and a microprocessor. The eight front-seat bolts plus the airbag controller form yet another local area network dedicated to the unlikely event of an airbag deployment.

We will not belabor the point, but such lists of examples could go on indefinitely. Computerization of almost literally everything is a simple economic imperative. Clearly, ten billion processors per year is not the least bit implausible. And that means that a near-future world containing trillions of computers is simply a done deal. Again, we wish to emphasize that the argument so far in no way depends upon a shift to an information economy or a desire for a smarter planet. It depends only on simple economics and basic market forces. We are building the trillion-node network, not because we can but because it makes economic sense. In this light, a world containing a trillion processors is no more surprising than a world containing a trillion nuts and bolts. But, of course, the implications are very different.

CONNECTIVITY WILL BE THE SEED OF CHANGE

In his 1989 book Disappearing through the Skylight, O. B. Hardison draws a distinction between two modes in the introduction of new technologies—what he calls “classic” versus “expressive”:

To review types of computer music is to be reminded of an important fact about the way technology enters culture and influences it. Some computer composers write music that uses synthesized organ pipe sounds, the wave forms of Stradivarius violins, and onstage Bösendorf grands in order to sound like traditional music. In this case the technology is being used to do more easily or efficiently or better what is already being done without it. This can be called “classic” use of the technology. The alternative is to use the capacities of the new technology to do previously impossible things, and this second use can be called “expressive.” . . .

It should be added that the distinction between classic and expressive is provisional because whenever a truly new technology appears, it subverts all efforts to use it in a classic way. . . . For example, although Gutenberg tried to make his famous Bible look as much like a manuscript as possible and even provided for hand-illuminated capitals, it was a printed book. What it demonstrated in spite of Gutenberg—and what alert observers throughout Europe immediately understood—was that the age of manuscripts was over. Within fifty years after Gutenberg’s Bible, printing had spread everywhere in Europe and the making of fancy manuscripts was an anachronism. In twenty more years, the Reformation had brought into existence a new phenomenon—the cheap, mass-produced pamphlet-book.

Adopting Hardison’s terminology, we may state that the substitution of software for physical mechanism, no matter how many billions of times we do it, is an essentially classic use of computer technology. That is to say, it is not particularly disruptive. The new washing machines may be cheaper, quieter, more reliable, and conceivably even easier to use than the old ones, but they are still just washing machines and hold essentially the same position in our homes and lives as their more mechanical predecessors. Cars with computers instead of carburetors are still just cars. At the end of the day, a world in which every piece of clockwork has experienced a one-to-one replacement by an embedded processor is a world that has not undergone fundamental change.

But, this is not the important part of the story. Saving money is the proximal cause of the microprocessor revolution, but its ultimate significance lies elsewhere. A world with billions of isolated processors is a world in a kind of supersaturation—a vapor of potential waiting only for an appropriate seed to suddenly trigger a condensation into something very new. The nature of this seed is clear, and as we write it is in the process of being introduced. That seed is connectivity. All computing is about data-in and data-out. So, in some sense, all computing is connected computing—we shovel raw information in and shovel processed information out. One of the most important things that differentiates classic from expressive uses of computers is who or what is doing the shoveling. In the case of isolated processors such as our washing-machine controller, the shoveler is the human being turning that pointer. Much of the story of early twenty-first century computing is a story of human beings spending their time acquiring information from one electronic venue and re-entering it into another. We read credit card numbers from our cell phone screens, only to immediately speak or type them back into some other computer. So we already have a network. But as long as the dominant transport mechanism of that network involves human attention and effort, the revolution will be deferred.

Things are changing fast, however. Just as the advent of cheap, fast modems very rapidly transformed the PC from a fancy typewriter/calculator into an end node of the modern Internet, so too is a new generation of data-transport technologies rapidly transforming a trillion fancy clockwork-equivalents into the trillion-node network.

An early essay in such expressive networking can be found in a once wildly popular but now largely forgotten product from the 1990s. It was called the Palm Pilot. This device was revolutionary not because it was the first personal digital assistant (PDA)—it was not. It was revolutionary because it was designed from the bottom up with the free flow of information across devices in mind. The very first Palm Pilot came with “HotSync” capabilities. Unlike previous PDAs, the Pilot was designed to seamlessly share data with a PC. It came with a docking station having a single, inviting button. One push, and your contact and calendar data flowed effortlessly to your desktop—no stupid questions or inscrutable fiddling involved. Later versions of the Palm also included infrared beaming capabilities—allowing two Palm owners to exchange contact information almost as easily as they could exchange physical business cards.

In this day of always-connected smartphones, only a decade later, these capabilities seem modest, even quaint. But they deserve our attention. It is one thing to shrink a full-blown PC with all its complexity down to the size of a bar of soap and then put it onto the Internet. It is quite another to do the same for a device no more complex than a fancy pocket calculator. The former is an impressive achievement indeed. But it is an essentially classic application of traditional client-server networking technology. The iPhone truly is magical, but in most ways, it stands in the same relation to the Internet as the PC, which it is rapidly supplanting: namely, it is a terminal for e-mail and web access and a platform for the execution of discrete apps. It is true that some of those apps give the appearance of direct phone-to-phone communications. (Indeed, a few really do work that way, and Apple has begun to introduce new technologies to facilitate such communication.) But it is fair to say that the iPhone as it was originally introduced, the one that swept the world, was essentially a client-server device. Its utility was almost completely dependent upon frequent (and for many purposes, constant) connections to fixed network infrastructure.

The Palm Pilot, in its modest way, was different. It communicated with its associated PC or another Palm Pilot in a true peer-to-peer way, with no centralized “service” intervening. Its significance is that it hinted at a swarm of relatively simple devices directly intercommunicating where no single point of failure can bring down the whole system. It pointed the way toward a new, radically decentralized ecology of computational devices.

The Pilot turned out to be a false start, rapidly overtaken by the vastly greater but essentially classic capabilities of the PC-in-a-pocket. But the true seeds of expressive connectivity are being sown. A design engineer would be hard-pressed to select a current-production microprocessor that did not have some kind of communications capability built in, and thus essentially free. Simple serial ports are trivial, and adequate for many purposes. USB, Ethernet, and even the higher-level protocols for connecting to the Internet are not uncommon. Wireless ports such as Bluetooth, ZigBee, and WiFi currently require extra chips, but they are increasingly trivial to add. Although these capabilities often go unused, they are there, beckoning to be employed. And the demand is growing. It is the rare manufacturer who does not have a connectivity task force. What CEOs are not asking their CTOs when their products will be controllable via a mobile “app”? Much of MAYA’s business in the last decade has involved helping our clients understand their place in this future information ecology. Whether they are manufacturers of kitchen appliances or medical devices or garage-door openers, or whether they are providers of financial services or medical insurance, the assumption of universal connectivity is implicit in their medium-term business planning. We won’t just have trillions of computers; we will have a trillion-node network. Done deal. The unanswered question is how, and how well, we will make it work.

COMPUTING TURNED INSIDE OUT

As consumer products go, the personal computer has had quite a run. From its origins in the 1970s as a slightly silly geek toy with sales in the thousands, PC sales figures sustained a classic exponential growth curve for more than 35 years. Cumulative sales exceeded one billion units quite some time ago. In 2008 it was reported that there were in excess of one billion computers in use worldwide. In comparison, after 100 years of production, there are an estimated 600 million automobiles in use worldwide. For the postindustrial world, the PC is the gift that keeps on giving.

After all these years of consistent growth, it is difficult to imagine a world without PCs. But the phrase post-PC era has entered the lexicon. The precipitous collapse of an entire industry is the kind of thing—like a serious economic recession—that happens only a few times per career. As a result, many midcareer professionals have never actually witnessed one and therefore lack a visceral understanding of what such an event is like.

But, as a consumer product, the PC is dead, as dead as the eight-track tape cartridge. In another decade, a desktop PC will look as anachronistic in a home office as a CRT terminal looks today. Your parents’ Dell tower over in the corner will remind you of your grandparents’ doily-covered console record player. The laptop form-factor will survive longer, maybe even indefinitely. But such machines will increasingly be seen as outliers: ultra-high-power, ultra-flexible machines tuned to the needs of an ever-dwindling number of professionals who think of themselves as computer workers, as opposed to information workers.

We are not saying that keyboards, mice, or large-format displays are going away. This may well be the case, but this chapter is about sure things, not speculations, and our guess is that more or less conventional input/output devices will linger for quite some time. But the Windows-based PC has seen its day. There are many ways to measure such things, and the details vary by methodology, but, generally speaking, PC revenues peaked almost a decade ago. Unit sales in the developed world have recently peaked as well.

The nearly complete transition from desktop to laptop PCs represents a mere evolution of form-factor. The modes of usage remain fundamentally unchanged. The same cannot be said about the transition to the post-PC era. The functions that were once centralized in a single device are increasingly being dispersed into a much broader digital environment. People who write a lot and people who spend their days crunching numbers still reach for their laptops, and they probably will for a while. But surfing the web is no longer a PC thing. People may still like the experience of viewing web pages on a spacious screen using a tangible mouse, but they like getting information when and where it is needed even more, even if it involves poking fat fingers at a pocket-sized screen. E-mail is no longer something kept in a PC—it is something floating around in the sky, to be plucked down using any convenient device. And, of course, in many circles e-mail itself is something of a quaint formalism—rather like a handwritten letter—appropriate for thank-you notes to grandma and mass-mailing party invitations, but a poor, slow-speed substitute for phone-to-phone texting or tweeting for everyday communication.

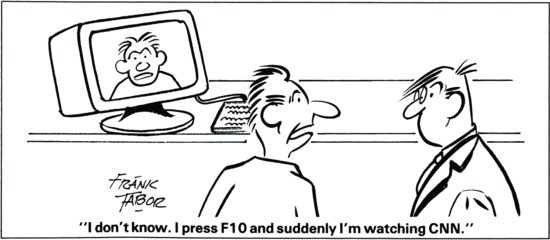

Figure 1.1 Datamation Magazine, March 15, 1991. Just 20 years ago, the very idea of television playing on a computer was fodder for absurdist humor. Today, no one would get the joke.

Source: Courtesy of the artist.

The important point in all of this is not the specific patterns of what has been substituted for what, but rather the larger point that, for the first time, all of these patterns are in play. During the hegemony of the PC, it was difficult for most people to see the distinction between medium and message. If cyberspace was a place, it was a place that was found inside a computer. But, the proliferation of devices has had the effect of bringing about a gradual but pervasive change of perspective: The data are no longer in the computers. We have come to see that the computers are in the data. In essence, the idea of computing is being turned inside out. This is a new game. It is not a game that we are yet playing particularly well, but the game is afoot.

THE POWER OF DIGITAL LITERACY

There is one more topic that belongs in this chapter, one that is rarely discussed. It does not directly relate to evolving technologies per se, but rather to the evolving relationship between those technologies and nonprofessional users. Put simply, people aren’t afraid of computers anymore. Computers today are part of the air we breathe. It is thus difficult to recapture the emotional baggage associated with the word computer during the 1960s and 1970s. This was a generation whose parents watched Walter Cronkite standing in front of a room-sized UNIVAC computer as it “predicted” Eisenhower’s 1952 presidential election victory (Figure 1.2). Phone bills arrived on punched cards, whose printed admonitions not to “spindle, fold, or mutilate” became a metaphor for the mutilation of humanity by these mindless, omnipotent machines. The trend toward uniformity of language and thought that began with the printing press would surely be forced to closure by these power tools of conformity.

Figure 1.2 1952: Walter Cronkite watches UNIVAC predict the electoral victory of Dwight Eisenhower.

Source: U.S. Census Bureau.

In his dark 1976 critique of computer technology and culture, Joseph Weizenbaum reflects this bleak assessment of the effects of technology on the humane:

“The scientific man has above all things to strive at self-elimination in his judgments,” wrote Karl Pearson in 1892. Of the many scientists I know, only a very few would disagree with that statement. Yet it must be acknowledged that it urges man to strive to become a disembodied intelligence, to himself become an instrument, a machine. So far has man’s initially so innocent liaison with prostheses and pointer readings brought him. And upon a culture so fashioned burst the computer.

Moreover, computers were quite correctly seen as huge, expensive, vastly complex devices. They were in the same category as nuclear power plants and spaceships: futuristic and maybe useful, but practical only in the hands of highly skilled professionals under the employ of large corporations or the government. And as with all members of this category, they were frightening and perhaps dangerous.

These were the market conditions faced by the first generation of PC manufacturers as they geared up to put a computer in every home. The 20-year journey from there to the iPhone represents one of history’s greatest market transformations. It was a triumph, and it was no accident. But it was not fundamentally a triumph of marketing. Rather, it was a triumph of human-centered design.

The story might have been very different had it not been for an extraordinarily well-devised but unfortunately named innovation known as the WIMP paradigm. WIMP, which stands for “Windows, Icons, Menus, and Pointers,” was a highly stylized, carefully crafted architecture for human-computer interaction via graphical media. Jim Morris, one of the founders of MAYA, worked on the team that invented the first computing system that used the WIMP paradigm and watched the story unfold firsthand. Its development at the famed Xerox Palo Alto Research Center (Xerox PARC) and its subsequent appropriation by Steve Jobs during his famous and fateful visit are well documented and oft-told. Less often discussed is the pivotal role of this story in paving the way for mass-market computing.

The details aren’t important to our story. What is important to point out is that the WIMP paradigm presented the first generation of nonprofessional users with a single, relatively simple, standardized mode of interaction. Equally important was the fact that this style was essentially identical for all applications. Whether the user was playing a game, sending e-mail, using a spreadsheet, or editing a manuscript, it was always windows and icons. Why is this important? The obvious answer is that simple, logical rules are easier to learn than complex, idiosyncratic ones. Moreover, the transfer of learning that results from a high level of consistency more than makes up for the disadvantages associated with a one-size-fits-all approach to design. And, of course, limiting the “creative” freedom granted to workaday designers was not necessarily a bad thing back in a day when experienced user interface (UI) designers were few and far between.

But the biggest advantage accruing from such a rigorous framework (or from any other widely accepted architectural framework) is that it forms the basis of a community of practice. That is, such frameworks encourage a virtuous cycle in which early adopters (who, generally speaking, can take care of themselves) take on the role of first-tier consulting resources for those who come later. As a whole society struggled together to figure out these strange new machines, having everybody trying to sing the same song was of inestimable value.

But along with this value, there were significant costs. Most notably, rigid UI standards brought with them a deep conservatism. In 1987 when Apple’s Bill Atkinson released the HyperCard multimedia development environment (in our opinion one of the most important innovations of the pre-web era), it was widely criticized for a few small and well-motivated deviations from the Apple WIMP style guide. As the years went by and a new generation of digital-from-birth users entered the marketplace, the costs of this conservatism eventually came to exceed the benefits. A long string of innovations, including interactive multimedia, hypertext systems, touchscreens, multitouch displays, and above all immersive video games, gradually forced developers and platform providers to mellow out and relax the doctrinal grip of WIMP. This has led to a far less consistent but much richer and more generative computing environment. The interfaces aren’t always good, but new ideas are now fair game.

Concomitant with this evolution has emerged a market populated by users who are pretty much up for anything, in a way that just wasn’t the case even a few years ago. Having learned to use keyboards and mice before pencils and pens, they are not fazed by such mysteries as dragging a scrollbar down in order to make text move up. And, if Apple decides to reverse this convention (as it recently did, presumably in order to improve consistency with touch-oriented devices), that’s fine with them. The minor mental rewiring involved is taken in stride. This underappreciated trend is a significant market enabler, which sets the stage for bigger changes to come.