INTERLUDE

Yesterday, Today, Tomorrow: Platforms and User Interfaces

This first interlude deals with the intertwined history of platforms and user interfaces (UIs). It’s not a matter of forcing two issues into one discussion. Standardized user interface paradigms are in fact a kind of platform—platforms used by developers to deliver standardized user experiences to end users.

The computer represents a profound discontinuity in the history of technology, and though the term platform is relatively new to the scene, the idea that it describes goes to the heart of that discontinuity. Computers as such don’t actually do anything useful. Their utility lies in their ability to serve as a medium (read platform) for software. Much of the action in the evolution, business, and politics of computing is intimately related to the evolution of various hardware and software platforms.

YESTERDAY

For a long time, the only platforms were large boxes of hardware. In the 1950s and 1960s, if you were a business with a need for computing,1 you bought or leased a mainframe from IBM, Burroughs, UNIVAC, or RCA, or later, a minicomputer from DEC, Data General, or Prime. Difficult as it is to imagine today, nobody to speak of had yet conceived of the possibility of making money on software. What little software came with these machines from the manufacturer had to do with low-level hardware bookkeeping and was considered a necessary evil. The manufacturers would no more consider charging for it than they would for the pallets and crates in which the machines were shipped. Beyond these most basic programs, you were on your own. If you needed a piece of software, you wrote it yourself or you swapped for it with your colleagues at the annual users group meeting of your particular hardware tribe.

In those days, each brand of computer—indeed, each particular machine—was an almost completely isolated island. There was, of course, no Internet. Nor was there any other practical way to connect even pairs of machines together. If you needed to move information (code or data) from one machine to another, your choices were (a) to retype it; (b) to carry around a stack of punched cards; or (c) if you were at a high-end shop, to move it via magnetic tape. Not that any of these were very useful—there was little chance that the information from one machine would be of use on any other. There existed languages like FORTRAN and COBOL that were, in theory, cross-platform, but in practice all but the most trivial programs were tied to the idiosyncrasies of particular machine architectures. If you owned a Lionel train set, there wasn’t much point in borrowing your friend’s American Flyer engine.

Then, at roughly the same time, two watershed developments occurred. First, transistors evolved into integrated circuits (ICs), setting the stage for the microprocessor. In addition to reducing size and cost, this development had crucial effects on the nature of computing platforms. Before the microprocessor, each computer architecture was more or less the result of handicraft—different machines influenced one another, but each was ultimately unique. The emergence of a small number of industry-standard microprocessor families changed that. Machines built around the Intel 8086 CPU chip, for example, were all pretty much the same, no matter whether the logo on the box said IBM, Digital, or Dell. It was this fact that opened the door for Bill Gates & Co. to redefine the notion of platform for a generation.

Concurrent with the birth of the microprocessor emerged the notion of the graphical user interface (GUI), with the introduction of the experimental Xerox Alto, and its commercial successor the Star office workstation. A little-noted characteristic of these platforms was that they were not organized around applications. In the Xerox Star, if you wanted to compose an e-mail message or a business letter, you did not “run” an e-mail application or a word processor app. Rather, you simply “tore off” a new document of the appropriate type from a “stationery pad” and began typing.

This “document-centric” approach to interface design represented a compelling and important simplification of the computing landscape—one that, regrettably, did not survive the GUI revolution. When Steve Jobs appropriated the GUI concept after his famous visit to Xerox PARC, the notion of document-centricity was lost in the transplant from Alto to Macintosh. The reasons for this were fundamentally economic and organizational. Seamless document-centricity requires a high level of architectural integration that is much easier to achieve by a single development team than in the context of an open, multivendor platform. The required architectural techniques were simply not widely available at the time. In addition, exposing the application as the currency of software provided a convenient unit of commerce—something for third-party developers to put in a box and sell without needing to interact directly with the authors of the operating system. As a result, the Macintosh and its more successful imitator Windows evolved not as platforms for information (even though this is what users actually care about), but as platforms for applications, which are at best viewed by users as a necessary evil.

The consequences of this rarely discussed choice are hard to overstate. Viewed from an information-centric perspective, each application for all practical purposes acts as a data silo: Data created by and for one application are commonly unavailable to other applications. If a document happens to be born as an e-mail message, it is doomed to that fate forever. It cannot, for example, be placed onto a calendar, or entered into a cell of a spreadsheet, or added to a list of personal contacts. It is what it is, and what it is was determined by the application used to create it. Things don’t have to be this way. They weren’t that way for users of the Star in the 1980s, and they will not be that way for users of the successors of the World Wide Web.

TODAY

The Internet, as originally conceptualized, represents possibly the best-conceived piece of de novo engineering design in the history of technology. Starting essentially from scratch, the designers of the Net managed to imbue it with such an ability to scale, such a bias toward liquid data flow, such a propensity toward neutrality, that it has so far withstood every insult to which the vicissitudes of the marketplace have subjected it. The subtlety of this design has been exhaustively examined, and we will not repeat it here, except to say this: Implicit in the ideal of the Internet is the existence of a free, open, distributed, seamless public information space. This ideal is directly at odds with the idea of “applications” as the fundamental building block of the “platform” for worldwide computing. This is not news—the tension has been felt for a long time. This tension has led to several attempts at reform (e.g., CORBA and Java “applets”). The only one that can claim large-scale success is the web browser. The browser is in fact a meta-application, aspiring to be the “last application.” That is to say, it aspires to be The Platform of the Future. This is all well and good.

The problem is, the web browser is not a very good platform.

For starters, it is incredibly impoverished as a UI environment. One of the most important innovations of the GUI revolution was the idea of direct manipulation: that we represent data objects as almost-physical “things” in almost-real “places.” Yes, the “places” are unfortunately behind an inconvenient piece of glass, but we can equip users with various prosthetic devices—mouse, trackpad, touchscreen—that empower them to reach through the glass and directly manipulate these new kinds of things in their new kinds of places. In this way, all the skills that the human species has acquired in a million years of coping with the physical world would be more or less directly applicable to the brave new world of data. It was a compelling, even thrilling, vision, and the so-called desktop metaphor of the 1980s barely scratched the surface of its potential. By the early 1990s, we were on the verge of dramatic breakthroughs in this realm.2

But then came the web browser. This innovation was focused exclusively on another Big Idea, that of the Hypertext Link: a magic wormhole that permitted users to “travel” instantly from one part of the Information Space to any other part with a single click of the mouse button. Here, as we have seen, was a quick-and-dirty answer to some very sticky questions about how to empower users to navigate the huge new “space” of the burgeoning Internet. The 4,800-baud modems of the early 1990s were far too slow for a proper GUI experience, but they supported the click-and-wait pattern of hypertexts quite well. Well enough, in fact, to take the wind out of the sails of direct manipulation. Progress in the realm of designing and interacting with data objects virtually ceased, its potential all but forgotten.

As always, the good became the enemy of the great. Moore’s Law and the advent of ubiquitous broadband have long since removed the technical impediments to direct-manipulation Internet navigation. But the train had left the station. The browser is the End of History. For better or worse, we are stuck with it.

This, of course, is nonsense. We are already moving on. Even within the current paradigm, there are those who won’t take no for an answer. For example, through some truly heroic engineering, Google and the developers of a technique known as Ajax have managed to reintroduce a remarkably refined set of direct-manipulation operations to Google Maps. But one can’t shake the feeling that this (and the rest of the suite of techniques that are often lumped under the label of “HTML-5”) is a stunt—remarkable in the same sense as Dr. Johnson’s dog walking on its hind legs. Direct manipulation bends a web browser in a direction for which it was not designed, and no long-term good can come from this. We know how to do much better, and it is time to do so.

A second problem with the browser paradigm is that it is intrinsically asymmetrical. The line between suppliers of web content and its consumers could hardly be brighter. Most of us get to “browse” web pages. Only the elite (mostly large corporations) get to “serve” those pages. Yes, it is possible for sufficiently determined individuals to own and run their own web servers, but it is an uphill battle. Your Internet Service Provider quite possibly blocks outgoing web traffic through your home Internet connection; they very likely change the address of that connection at unpredictable times; and they most certainly provide a much slower connection for traffic leaving your house than for your inbound connection. It is also true that some websites let users “contribute” information for others to see, but it is perforce under terms and conditions dictated by the owner of the website. End users can take it or leave it. If the power of the press is limited to those who own the presses, the web has not fundamentally improved the situation.

Yet, here again, things don’t have to be this way, and they did not start out that way. The World Wide Web was created at a Swiss physics lab, and its ostensible original purpose was to permit scientists to more easily share drafts of scientific papers without the need for mediation by a central “publisher.” The vision of everyone’s computer communicating directly with everyone else’s presents technical challenges, but these challenges are not obviously more difficult than the ones involved in creating immense central server farms to permit millions of users to browse the same pages at the same time. The difference is less technical than economic. Of the many ways that one could imagine making money via a mass-market Internet, hoarding data is merely the most obvious. But it has proven to be an extremely effective way, so there has—so far—been little incentive to explore further. Thus, the client/server interests are deeply vested, and users remain emasculated.

Thirdly, information on the web is extremely illiquid. Data flow almost exclusively from server to client. Lateral data paths, either client-to-client or server-to-server, are practically nonexistent. For all practical purposes, most data are trapped within a given server’s data silo. This fact has two primary negative consequences: (1) When data flow together and intermingle, their quality and value multiply, and siloed data forgo that gain. Anyone who has ever successfully merged two ostensibly redundant databases knows that all large datasets contain many errors, and that the merged dataset is always of greatly higher quality (since each serves as a check on the other). This is just one example of the many synergies that occur when data intermingle. (2) Data illiquidity tends to prevent reuse and repurposing of existing data. On the web, the person who decides what data are available is the same person who decides how they are displayed. The architects of the web paid lip service to the separation of data from formatting, but never really delivered on this promise. The many half-hearted attempts to address this glaring deficiency—things like RSS, SOAP, and especially the so-called Semantic Web—have made some progress, but it is far too little and far too late.
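The claim that two overlapping datasets check one another can be made concrete with a minimal sketch. Everything in it is an assumption made for illustration: the two toy contact tables, their names (`crm`, `billing`), and the `cross_check` helper are hypothetical and do not describe any particular system.

```python
from typing import Dict, Tuple


def cross_check(a: Dict[str, str], b: Dict[str, str]) -> Tuple[dict, dict, dict]:
    """Merge two keyed datasets and report where they agree or disagree.

    Returns (agreed, conflicts, only_one):
      agreed    - keys present in both with identical values
      conflicts - keys present in both with different values (likely errors)
      only_one  - keys present in just one dataset (gaps filled by the merge)
    """
    agreed, conflicts, only_one = {}, {}, {}
    for key in sorted(a.keys() | b.keys()):
        if key in a and key in b:
            if a[key] == b[key]:
                agreed[key] = a[key]
            else:
                conflicts[key] = (a[key], b[key])
        else:
            only_one[key] = a[key] if key in a else b[key]
    return agreed, conflicts, only_one


# Hypothetical, ostensibly redundant contact lists held in two separate silos.
crm = {
    "ada": "ada@example.com",
    "alan": "alan@exmaple.com",  # typo that neither silo could detect alone
    "grace": "grace@example.com",
}
billing = {
    "ada": "ada@example.com",
    "alan": "alan@example.com",
    "edsger": "edsger@example.com",
}

agreed, conflicts, only_one = cross_check(crm, billing)
```

Neither table can reveal its own mistake. Only when the two meet does the disagreement over one address surface, along with the records that each side was missing.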

Finally, and perhaps most basically, the web is poorly engineered—embarrassingly so. This is something of a taboo topic. The relatively few people who are entitled to an informed opinion on this subject almost all have a vested interest in the status quo. Moreover, most of them are too young ever to have experienced a genuinely well-engineered computing system of any kind. They quite literally don’t know what one looks like. Well, there is the Internet itself. But the Internet is so well engineered, so simple, so properly layered, and so stable that it tends to recede from consciousness. It is like the air we breathe—rarely thought of, much less studied, but a thing of beauty, economical and elegant. In stark contrast, the web and its machinery are a huge, unprincipled mishmash of needlessly complex, poorly layered protocols, ad hoc mechanisms driven by the needs of shallow UI features, held together with Band-Aids pasted upon Band-Aids, and devised for the most part by volunteer amateurs.

How could this be? Judged by the bottom line, the web is miraculous. Everyone uses it, and it very obviously changed the world. If the engineering is so bad, how could we have accomplished so much? The answer is simple and can be stated in two words: “Moore’s Law.” As everyone knows, Intel cofounder Gordon Moore predicted in 1965 that the number of transistors on a commercially viable integrated circuit would double every year, a pace he revised in 1975 to a doubling every two years. The fact that this is more or less a self-fulfilling prophecy does not lessen the mind-numbing implications of what has proven to be an astoundingly precise prognostication. Exponential growth can balance out many deficiencies, even those as egregious as the way we run the web. The fact is that the amount of raw computing power now at our disposal is such that almost anything can be made to work, after a fashion. The question, then, becomes not one of how we manage to make the web creak along, but what we are missing by squandering our wealth on the support of amateurism.

TOMORROW

We find ourselves at a peculiar juncture in the evolution of computing platforms. Some aspects of the status quo are good, and others not so good. This is, of course, always the case. What is unusual about the current juncture is that the parts that are good are extraordinarily good, while the parts that are bad are bad almost beyond belief. Moreover, the bad parts—at least so far—tend to manifest themselves as lost opportunities and retarded progress, rather than as crash-and-burn disasters. As we argue elsewhere in this book, this will soon change. In the meantime, however, the net effect is that everyone—sellers and buyers alike—is having such an overwhelmingly good time with the good parts, that it is difficult to even begin a conversation about the merits of roads not followed. As has often been observed, not even the denizens of Star Trek have devices with the capabilities of the iPhone. As Pangloss would say, “This is the best of all possible worlds and couldn’t possibly be any better.”

But history is not over, and we can already glimpse the future. As has already been noted, direct manipulation of data objects is struggling back onto the user interface scene. Spearheaded by the heroic engineers of Google Maps, even web browsers have been coaxed into supporting a quality of interaction design that threatens to approach that of the early 1990s. More ambitious efforts, most notably Google Earth, have demonstrated that life outside of the browser is still viable when the payoff to the user is sufficient. Apple’s AppleScript scripting language and its Quartz Composer visual programming environment, although platform-specific and limited largely to technically savvy developers, provide a glimpse of the future of software development. Enthusiasm among the digerati for the Semantic Web, although naive in the extreme, is illustrative of a widespread understanding that the future lies in the separation of data from presentation.

These trends, among others, provide a reasonably clear suggestion of the future. None of them, however, represents a viable path forward. The situation is analogous to aviation technology in the 1940s: Propeller-driven airplanes were advancing steadily toward the speed of sound. None, however, ever reached it. Achieving that goal took new thinking and new architectures. The transition to the next stable plateau of computer platform design will be marked by a similar discontinuity.

What will a “platform” look like in such a world? Attempting a detailed answer to such a question is, of course, doomed to failure. But there is much we can say with reasonable certainty about the characteristics of the next platform.

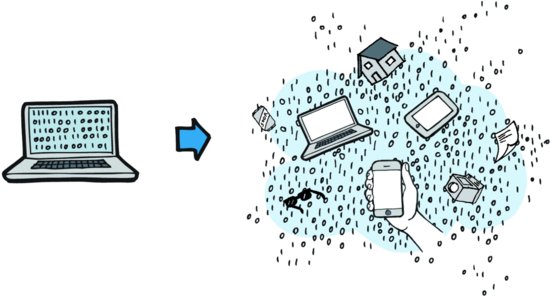

The first and most important such characteristic is, it seems to us, an inevitable consequence of the emergence of a global public information space. As the data are liberated from their long entrapment within our machines, and assuming that we dodge the current efforts to re-entrap them within large, corporate pseudo-cloud silos, the notion of “application” will invert: Rather than being vessels for containing and manipulating specific kinds of information, apps will be applied to a vast sea of diverse information. That is to say, they will become tools for navigating, visualizing, accumulating, publishing, and sharing disembodied information objects (Figure I1.1). They will become information centric.

Figure I1.1 Inverting the notion of computing: From information “in” the machine to applications, people, environments, and devices being “in” the information.

Articulating exactly what this means is something of a challenge. However, we can get some purchase on the topic by extrapolating from the status quo:

Let’s start by pointing a web browser to a familiar web page: say, today’s CNN.com homepage (Figure I1.2).

Figure I1.2 CNN.com homepage interpreted as a collection of information objects

This is a good choice in that it benefits from a carefully thought out internal structure—one inspired by traditional newspaper layouts. More so than in many less-well-designed websites, this structure is clear at a glance: The “currency” of this page is the “story”—made up of headlines, text, pictures and video. There are dozens of them—their headlines immediately available for human browsing, their contents available at a click. Across the top, there are numerous “tabs”—stylized buttons that allow one to change focus to other sets of stories, topically grouped. Each “story box” is carefully rendered to look like a “thing”—separate from all the other things on the page. It is almost as if users could “clip” the stories that interest them and “paste” them into a scrapbook of accumulated knowledge for later reference. Almost.

Actually, in some cases, exactly that can be done. Assuming you are using a modern browser, you can drag any photograph that may catch your fancy and drop it onto your computer’s “desktop.” This will immediately and invisibly copy the picture file onto your local disk drive, where it will stay until you discard it.3

This is rather nice. It works in part because on the web, images (for reasons lost in history) have a weak kind of identity in the form of a URL. They are well-defined, so the drag-an-image feature was an obvious one to implement. Unfortunately, this is not true of most of the other things on the page. If you try the same trick on a video clip, for example, it will look like it worked. But the thing you will get on your desktop will not be a video; it will just be the image used as a thumbnail to represent the video. If you try to drag an entire “story,” you will discover that its nice thingness is only skin-deep. It can’t be dragged at all.

And then there are the little “tabs” along the top of the page. The “tab” metaphor suggests that they represent a collection of today’s stories about a given topic (“sports,” “politics,” etc.). Dragging one of them would be very nice: I could, for example, save all of today’s political stories in a single gesture. Does it work? Of course not. You can drag the tab all right. But you don’t get a collection of stories at all. Instead, you get a URL that points to whatever collection of stories happens to be available on CNN at the moment. This is just a shortcut to a “place” on the web. It doesn’t refer to a collection of specific stories at all.
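The difference between dragging a “place” and dragging a collection of specific stories can be sketched in a few lines. This is a toy model under stated assumptions: `LiveSite`, `Story`, the `/politics` path, and the headlines are all hypothetical stand-ins, not a description of how CNN.com or any browser actually works.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Story:
    """A story treated as a thing with its own identity (hypothetical)."""
    story_id: str
    headline: str


class LiveSite:
    """Toy stand-in for a website whose sections change over time."""

    def __init__(self) -> None:
        self._sections: Dict[str, List[Story]] = {}

    def publish(self, path: str, stories: List[Story]) -> None:
        # Replaces whatever was previously shown at this path.
        self._sections[path] = list(stories)

    def resolve(self, path: str) -> List[Story]:
        # Returns whatever happens to be at this path right now.
        return list(self._sections[path])


site = LiveSite()
site.publish("/politics", [
    Story("p1", "Budget vote delayed"),
    Story("p2", "Election date set"),
])

# What dragging the tab gives you today: a pointer to a place.
dragged_url = "/politics"

# What the tab metaphor promises: the specific stories shown today.
dragged_snapshot: Tuple[Story, ...] = tuple(site.resolve("/politics"))

# The next day the section is republished with different stories.
site.publish("/politics", [Story("p3", "New cabinet named")])

later_via_url = site.resolve(dragged_url)
```

After the section changes, the saved URL yields only the new story, while the snapshot still holds the two stories that were on the page when the drag occurred.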

This little thought experiment illustrates that the web is a long way from information centricity. But that is not the point. The point is that all of this is easily fixed. Let’s imagine a slightly different CNN.com homepage. It looks and works exactly like the current one, except that there is a little “locked” icon in the upper right corner of the page. Clicking on that icon “unglues” all of the “stories”—allowing them to be dragged around (much like holding your finger over the icons on an iPhone for a second permits you to rearrange them). Now you can easily redo the whole page to your needs: moving interesting articles to the top; grouping similar articles according to your taste; deleting silly sports stuff entirely.

Even better, you could create new empty pages to be used as containers for your stuff—dragging things that interest you and making new pages that you can save and share with your friends. And, of course, just as with pictures, you can drag articles, videos, and even entire tabs onto your desktop, into e-mail messages, or anywhere else that strikes your fancy. Suddenly, all of your data have ceased being mere pixels on a screen, and have turned into well-defined, concrete things, and things can be moved, arranged, counted, sorted, shared, and subjected to human creativity. The data have been liberated. They have become liquid. It is hard to overstate the significance of this seemingly small step.

It will come as no surprise that this future scenario plays out using the little boxes of data we discussed in Chapter 2. These boxes have the potential to become the currency of an entire information economy. More than an economy: an ecology. Remember, these same containers can hold more than text and pictures: They can contain numbers. With just a tiny bit of standardization (far, far simpler than currently proposed schemes for standardizing web pages), these numbers (as well as the text and pictures) can become the fodder for apps. For, you see, the very act of making the little boxes easily manipulable by people also makes them easily manipulable by code. These apps—since they will also be stored and delivered in the same little boxes—will themselves be manipulable, sharable (not necessarily for free), and composable.

That last point—composability of apps—is worth some elaboration. The act of moving from a world in which the data are in the apps to one in which the apps are applied to the data has profound effects on the potential for interoperability across apps. When all apps are essentially operating on the same pool of data objects, it becomes far easier for the user to creatively assemble composites of independently developed apps to solve complex problems. This, in turn, will promote the emergence of simple component architectures designed to facilitate the construction of complex, purpose-built “virtual appliances” out of collections of simple, general-purpose tools. This is the essence of what it means to be a “platform.” When a public information space blossoms, it will be the Mother of All Datasets. It will give rise to the Mother of All Platforms.
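A minimal sketch may help make composability concrete. It assumes, purely for illustration, that every tool shares one trivial convention: it takes a collection of information objects and returns a collection of information objects. The `InfoObject` type, the three small tools, and the `compose` helper are all hypothetical names invented here, not part of any existing architecture.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class InfoObject:
    """A hypothetical 'little box' of data living in a shared pool."""
    kind: str
    title: str
    tags: frozenset = field(default_factory=frozenset)


# The only shared convention: a tool maps objects to objects.
Tool = Callable[[Iterable[InfoObject]], List[InfoObject]]


def only_kind(kind: str) -> Tool:
    """General-purpose tool: keep objects of one kind."""
    def tool(objs: Iterable[InfoObject]) -> List[InfoObject]:
        return [o for o in objs if o.kind == kind]
    return tool


def tagged(tag: str) -> Tool:
    """General-purpose tool: keep objects carrying a given tag."""
    def tool(objs: Iterable[InfoObject]) -> List[InfoObject]:
        return [o for o in objs if tag in o.tags]
    return tool


def sort_by_title(objs: Iterable[InfoObject]) -> List[InfoObject]:
    """General-purpose tool: order objects alphabetically by title."""
    return sorted(objs, key=lambda o: o.title)


def compose(*tools: Tool) -> Tool:
    """Chain independently written tools into one purpose-built appliance."""
    def appliance(objs: Iterable[InfoObject]) -> List[InfoObject]:
        result = list(objs)
        for tool in tools:
            result = tool(result)
        return result
    return appliance


pool = [
    InfoObject("story", "Senate passes bill", frozenset({"politics"})),
    InfoObject("photo", "Capitol at dusk", frozenset({"politics"})),
    InfoObject("story", "Local team wins", frozenset({"sports"})),
    InfoObject("story", "Election date set", frozenset({"politics"})),
]

# A "virtual appliance" assembled by the user from three simple tools.
political_digest = compose(only_kind("story"), tagged("politics"), sort_by_title)
digest_titles = [o.title for o in political_digest(pool)]
```

None of the three tools knows about the others, and none owns the data. The composite works only because all of them operate on the same pool of objects, which is the property the paragraph above attributes to the coming platform.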

1 At this stage of the game, the idea of an individual owning a computer was about as practical as an individual owning a bulldozer—and about as useful.

2 Our own “Workscape” effort, done in collaboration with Digital Equipment Corporation, is but one example.

3 Actually, and somewhat ironically, the image was very likely already on your disk drive, stored in a mysterious and invisible place called your cache. Further, the same images have been copied to millions of other users’ caches. But this is for the benefit of your browser, not for you. The pieces of the global information space are already there—they are just not doing anyone much good.