CHAPTER 9

Aspects of Tomorrow

The only simplicity for which I would give a straw is that which is on the other side of the complex—not that which never has divined it.

—OLIVER WENDELL HOLMES, JR.

There is a framed poster that has been around MAYA’s offices from almost the beginning. Its title is YESTERDAY’S TOMORROWS, and it advertised some long-past art exhibit featuring old visions of the future—skyscrapers shaped like art deco spaceships and so forth. The image (Figure 9.1) is a useful reminder that detailed predictions about the future are a fool’s errand and that one’s efforts in this direction are doomed to pathos. History is fraught with chaotic and idiosyncratic processes that determine its salient details, and we are no better than anybody else at reading those kinds of tea leaves. We know enough to steer clear. Nonetheless, we can see no way to make our exit without painting some sort of picture to provide coherence to the story we have been telling. So, we will attempt an impressionist painting—endeavoring to emphasize the essential patterns that we can see clearly, while avoiding dubious detail. When we drift too far into specificity, we trust the reader to smile at our naïveté and just squint.

BEYOND THE INTERNET

The Internet will be with us for the foreseeable future. At its core, it is just too simple and too correct to change much. Indeed, the Internet in the age of Trillions is likely to be even more like the Internet as it was originally conceived than it is today. At present, the vast majority of the Net’s traffic is aggregated into a few very large “pipes” that are operated and controlled by a small handful of commercial entities. Moreover, these systems are highly managed, using semi-manual processes and a great deal of human tweaking. Much of the self-configuring and self-healing nature of the original, far flatter Internet, while still latent in the architecture, has little relevance in its present mode of operation.

We do not predict that the big pipes will go away. Indeed, they will grow in number and size, but not in importance. As bandwidth requirements continue to grow without bound, these trunks will evolve into specialized services, moving vast amounts of audio, video, and other very large and/or time-critical data objects in highly optimized ways. Such optimization will worry advocates of Net neutrality (a community of which we consider ourselves members), but it will hardly matter. By the time this happens there will be so many alternative paths for data to flow that effective neutrality of payload will have long since been assured. Billions upon billions of cooperative, Internet-enabled, peer-to-peer devices will have grown together in an incomprehensible but very effective tangle of self-configuring arteries and veins such that the very concept of an Internet connection will recede from consciousness.

A much larger percentage of data flow will be strictly local. No longer will e-mail messages travel across continents on their way between two cell phones in the same room. Suburban neighborhoods and urban apartment buildings will enjoy immense intramural bandwidth simply by virtue of the devices—wired and wireless—owned by their residents. Such short-haul data will flow as the Internet’s founders imagined—through ever-changing, self-healing, dynamically determined paths, whose robustness to hardware failure, changes in demand, and censorship will go unquestioned.

Returning to the analogy of a circulatory system, the commercial trunk lines will serve as the major arterial pathways, feeding a vastly larger web of peripheral veins and arteries formed by individually owned, locally communicating peer-to-peer devices. All of these devices will speak today’s Internet protocols, with only evolutionary improvements. But vast as this network will be, it will pale in size beside a third, even more local tier—the capillaries of our metaphorical circulatory system. Comprising trillions of single-purpose data paths, many of them trivial in capacity, this layer of the network will lack the generality (and so, the complexity) of the Internet protocols. Installed and configured by blue-collar technicians and consumers, such links will support data flows in very local settings, more often than not between an unchanging pair of devices. Not only is it not necessary for light switches to have the ability to connect directly to any other device in the world (the sine qua non of the Internet), it is harmful. Harmful in two ways: First, as we have seen repeatedly, complexity of any kind has a cost, and so should be avoided when possible. More importantly, it is dangerous. The Internet—like all public places—is potentially dangerous, and always will be. Yes, we can build firewalls, but they will never be perfect and will very often themselves contain more complexity than the devices they are intended to protect.1

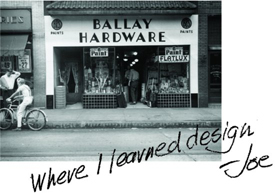

We will eschew the complexity of the Internet at the lowest levels of our infrastructure with good riddance. But something will be lost. The Internet brings with it ready-made answers to many difficult questions concerning communications protocols and standards. In its place will emerge a free market of best practices for dealing with the myriad of special-case requirements that such a scenario implies. We see this in today’s building trades, where a relatively small number of standard component specifications and design patterns have emerged from years of accumulated experience in a marketplace of both products and ideas. There are lots of different plumbing components in the hardware store—more than are strictly necessary. But they represent time-proven, cost-effective designs that emerged from a process that works better than any process involving central planning or attempts at grand unification.

SIMPLIFICATION

That last point extends far beyond network protocols. Throughout this book we have been careful to use phrases like taming complexity rather than simplification. This was no mere affectation. Computers are all about complexity, and we wanted to make clear that our goal is to manage it—to harness its power—not eliminate it. Here, however, we will use the “S word” and mean it. Although the aggregate complexity of the systems we will build on Trillions Mountain will make today’s systems look like toys, this will only be possible by the vast simplification of the building blocks of those systems. Not only is today’s PC the most complex integrated artifact that humanity has ever produced, we suspect that it will prove to be the most complex single artifact it will ever produce. It is on the edge of what is worth building in this way. As we learn how to orchestrate vast numbers of tiny devices, we will come to realize that many of the tasks for which we use PCs2 today can be done with vastly simpler devices.

Here are two examples: Almost every urban parking garage contains one or more machines that dispense gate tickets, compute parking charges, and read credit cards. If you ever get a chance to peek over the shoulder of the service guy when this machine is opened up, you may get a glimpse of how it is built. Very likely, you will see some kind of a PC. Similarly, if you go to your local library branch, the librarian will have an electronic system for scanning barcodes on books and library cards, and a simple database to keep track of who has borrowed what books. It is overwhelmingly likely that this system is built using a PC. In other words, we are using a device that in many ways is more complex than a space shuttle to perform tasks that require less computing power than is found in a typical pocket calculator. Why? Because, unless you are prepared to commit to huge unit volumes, the only economically viable way to deliver a quantum of computing power is to buy and program a PC. There just aren’t enough libraries or parking garages to justify purpose-built hardware. These examples typify why gratuitous complexity proliferates.

How will we get out of this box? The answer, as we have seen, lies in fungible components. Component architectures and open APIs will finally lead us to the packaging of much smaller units of computation in a form that is practical for developers to use without engaging in a science project. The simplification implicit in this agenda will come in three stages: Stage 1 will involve the exposure of the internal capabilities of otherwise conventional products. As the clock-radio example of Chapter 2 illustrates, this will be driven by the needs of interoperability. We will probably never witness the absurdity of ticket dispensers with clock radios inside them (not that this would be much more absurd than using a computer running Windows). But once we get in the habit of exposing the internal power of the devices we sell, we will discover that there are a lot of MacGyvers out there who will find creative uses (and thus create new markets) for the strangest combinations of components.3 Slowly, the market will sort out which of the exposed capabilities of existing products are in high demand, thus leading to Stage 2, which will involve the engineering of components that directly implement those capabilities in more rational packages.

The outsides of these transitional components will tend to be simple (the market will by now value simplicity and reward those who deliver it). But the insides will still be complex. The reason is that, for a while, this will be the only practical way to engineer such modules. The accumulated crud of unavoidable complexity has poisoned the market for simple, low-functionality components, and so designers have little choice but to use overly powerful, overly complex microprocessors and absurdly large memory chips, whether they are needed or not. So, at this stage, we will not so much be reducing complexity as sequestering it. Designers will compete in the open-component marketplace to deliver the most general, stable, and inexpensive implementations of the simplest possible standardized APIs. Market pressures will quickly shape these APIs into simple elegance, but the insides of the boxes will still be needlessly complex.

But this will not last long. Once we get this far, the absurdity of the situation will finally become apparent to all. Because a successful component will find uses in many vertical markets, its volumes will rise. This, in turn, will revive markets for less powerful and simpler components. Old chip designs will be dusted off and brought back into production, and new manufacturing techniques (such as organic semiconductors) that have been kept out of the market due to performance limitations will start to become economically viable. And so, in Stage 3 the insides of the boxes will finally shed their complexity. We emphasize yet again that the complexity won’t go away, but it will migrate from the low levels of our systems, where it is malignant, up to the higher layers where it can be shaped and effectively managed by exposure to market forces. Prices will drop; tractability, volumes, and profits will soar. By this three-step process we will have pulled off a slick trick. The menacing tide of undesigned complexity will be stemmed, and we will finally be able to return to the normal pattern of “complex things built out of simpler things” that characterizes all competent engineering design.

DEVICES

It is interesting to imagine what the hardware store of the future will be like. On a given day, its customers may include a DIY homeowner repairing a “smart room” that can no longer accurately detect its occupants; a contractor preparing a bid on an air-conditioning system that has to play well with a specific building energy management system; and a one-person repair shop sorting out a botched home theater installation that never did work properly. How will all these folks cope with the kinds of complexity we have been talking about? We can assume that the aisles will still be organized by physical function (plumbing, lighting, structural . . .). But then what? Universal standards will be too much to hope for, so by no means will everything be able to communicate with everything else. What we can hope for is the incremental evolution of well-defined groups of loosely interoperable devices. In MAYA’s architectural work, we call such groups realms. A realm is defined as a set of devices, all of which are capable of directly passing at least one kind of message to each other. In practice, this means that they have a common means of communication, and they speak a common language (or at least a common subset of a language). Thus, if I can connect a light switch to a light fixture and have the switch successfully send “on” and “off” messages to the fixture, then the switch and the fixture are in the same realm. Note that objects in different realms might still be used together; it is just that some kind of an adaptor would have to be used to bridge the realms. (As mentioned in Chapter 2, MAYA calls such adaptors transducers.)
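The realm definition can be made concrete with a small sketch. It is only an illustration under our own assumptions, not a description of any real implementation: the `Device` class, the realm labels, and the `can_talk` helper are hypothetical names, and realm membership is reduced to a set of labels carried by each device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """A device and the realms it belongs to (labels are hypothetical)."""
    name: str
    realms: frozenset


def shared_realms(a, b):
    """Realms in which both devices can directly exchange messages."""
    return a.realms & b.realms


def can_talk(a, b, transducers=()):
    """True if a and b share a realm, or if one transducer bridges them."""
    if shared_realms(a, b):
        return True
    # A transducer is modeled as a device that belongs to both realms.
    return any(shared_realms(a, t) and shared_realms(t, b) for t in transducers)


# Illustrative devices; the realm names are made up for this sketch.
switch = Device("wall switch", frozenset({"lighting-realm"}))
fixture = Device("ceiling fixture", frozenset({"lighting-realm"}))
thermostat = Device("thermostat", frozenset({"hvac-realm"}))
bridge = Device("transducer", frozenset({"lighting-realm", "hvac-realm"}))
```

In this sketch the switch and fixture can talk directly, while the switch reaches the thermostat only when the transducer is supplied, which mirrors the adaptor role described above.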

We have long since reached this stage with more mature technologies, such as plumbing. While there are many realms of plumbing, consider just three: water pipes, gas tubing, and a garden hose. Most of the pipe and tubing that constitutes these realms falls in approximately the same range of scale—a fraction of an inch to a couple of inches in diameter. And, while all three do roughly the same thing—conduct a fluid along a controlled path—they are intentionally incompatible. This is accomplished by specifying slightly different diameters and incompatible screw threads at the connections (device interfaces)—a means for facilitating the adherence to various building codes. Of course, some interrealm connections are possible—you can connect a garden hose to a water pipe—but it takes an adaptor.

The notion of realm is deceptively simple. It in no way restricts, or even informs, the development of new communications technologies or protocols, so you might think we were right back where we started. But merely recognizing the existence of such an architectural principle gives designers and manufacturers something to aim for, and purchasers in our hardware store something to shop for. In other words, it helps to organize a marketplace. The notion does not imply any kind of heavy-handed standards process—an approach that we view with considerable skepticism. Rather, it looks to market forces to sort out the wheat from the chaff. Popular realms will attract many products, since they represent a proven market. On the other hand, flawed realms, even if popular, will beg for competition by those who think they can do better. If this works like other markets, we will first see chaos, followed by plateaus of stability punctuated by occasional paradigm shifts.

The payoff happens in the hardware store. Assuming some trade organization emerges to assign unambiguous identifiers to popular realms (a relatively easy task) and perhaps do some compliance testing (a harder but still tractable process), we can envision a world in which every product on the shelves is labeled as to its realm membership. This is not all that different from what happens in today’s hardware stores. In the electrical department, you will find two or three incompatible styles of circuit breaker boxes—with perhaps several different brands of each. If you go in to purchase a replacement circuit breaker, you had best know beforehand which style of box is in your basement. The same is true of basic styles of plumbing pipe, backyard irrigation systems, and so on. The realm idea simply extends this pattern to pervasive computing devices—adding just a little bit more structure, as demanded by the greater complexity of computing devices. Just as there are often adaptors that permit the intermixing of otherwise incompatible products, the aforementioned transducers will be offered by various manufacturers when the market demands them.

Healthy markets always live on the edge of chaos, and our future hardware store will be no exception. But if manufacturers can resist the urge to hide behind closed, proprietary standards and protocols (and those who cannot will in the long run simply fail), and if the design community does its ordained job of defining open, forward-looking architectures to provide a modicum of organization, that store will be the hangout of a vibrant, creative, and generative community.

And, of course, we must not forget that, as always, disruptive new technologies will come onto the stage from time to time. This is a particularly dicey place to attempt predictions, so we will for the most part forbear. But one important area to which we have already alluded involves new circuit and display fabrication technologies. We do not refer to exotic, high-end, ever-faster and denser chip technologies. These are always with us, and so are not particularly disruptive. We mean the opposite: low-end, slower, lower-density, but much cheaper circuits and displays. Our poster child for such technologies is circuits literally printed on paper using something like ink-jet printers and semiconductor inks. Whether this particular technology works out is not of the essence. Throw-away, cheap electronics in one form or another will not be long in coming. This, combined with device fungibility, will be the last nail in the coffin of computing feeling like something we “do” rather than being just part of the milieu. When you can pick up a discarded newspaper on the subway and use it to check your e-mail, any sense of distance between space and cyberspace will have vanished.

THE INFORMATION COMMONS

The next step toward the emergence of the kind of “real cyberspace” that we have already discussed will be the gradual unfolding of an Information Commons. The Commons will be a true public resource—dedicated to the commonweal. We are not describing something like Wikipedia. In fact, the Commons in some ways is the exact opposite. Wikipedia, although free, philanthropic, and dedicated to the public interest, is in fact a single collection of information under the ultimate control of a single organization (some would say a single individual). Users of the service must ultimately trust the reliability and integrity of that organization for its continued availability. In contrast, the Commons will be controlled by no one (although it probably will require some kind of nongovernmental organization to coordinate it). Its purpose will be less the accumulation of information than its organization. It will serve as a kind of a trellis upon which others may hang information (both free and proprietary).

The essence of this trellis will be what amounts to an enumeration of the basic facts of the world, starting with simple assertions of existence. Thus, to start with the most obvious and compelling example, it will maintain a definitive gazetteer of geopolitical and geophysical features. It is a remarkable fact that no single, universally recognized worldwide list exists (recall our discussion of the Hanover problem in Chapter 6). The consolidation of such a list from readily available sources, and the assignment to each entry of a single universally unique identifier, will alone have an immeasurable impact on our ability to coordinate disparate, independent datasets. No longer will search engines need to employ exotic text-processing algorithms just to figure out whether the string “Jersey” is meant to refer to a state, an island, a breed of cow, or an article of clothing. Now, imagine if we also had such definitive lists of corporations and other businesses and of not-for-profit organizations; of cars and appliances and all other manufactured products; of all known chemical compounds; of all known species of plants and animals; and on and on. It is not that we don’t already have such lists. The problem is that we have too many. They are compiled over and over for special purposes, they are often proprietary, rather than freely available, and they come in diverse formats and organizations, with idiosyncratic and mutually incompatible identifier schemes. All of these things conspire to make them ineffective as the foundation for a true Commons, although their existence makes the creation of one very feasible.
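A small sketch may help show why shared identifiers matter for the “Jersey” problem. Everything in it is an assumption made for illustration: the `commons.example` namespace, the `commons_id` and `fuse` helpers, and the placeholder field values are invented, and deriving identifiers with `uuid.uuid5` is merely a convenient stand-in for whatever assignment process a real Commons might use.

```python
import uuid

# Assumption: a stand-in namespace for the Commons; no such registry exists yet.
COMMONS_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_DNS, "commons.example")


def commons_id(description):
    """Derive a stable identifier for one real-world entity (illustrative only)."""
    return uuid.uuid5(COMMONS_NAMESPACE, description)


# Three different things that all answer to the string "Jersey".
JERSEY_ISLAND = commons_id("place: Jersey, Channel Islands")
NEW_JERSEY = commons_id("place: New Jersey, United States")
JERSEY_CATTLE = commons_id("breed: Jersey cattle")

# Two independently maintained datasets, keyed by identifier rather than name.
# The field values are invented placeholders.
travel_guide = {
    JERSEY_ISLAND: {"label": "Jersey", "guide_page": 112},
    NEW_JERSEY: {"label": "Jersey", "guide_page": 348},
}
farm_survey = {
    JERSEY_ISLAND: {"surveyed": True},
    JERSEY_CATTLE: {"label": "Jersey", "surveyed": True},
}


def fuse(*datasets):
    """Merge facts about the same entity; no text matching is needed."""
    merged = {}
    for dataset in datasets:
        for identifier, facts in dataset.items():
            merged.setdefault(identifier, {}).update(facts)
    return merged


fused = fuse(travel_guide, farm_survey)
```

Because both datasets refer to the island by the same identifier, their facts combine into one record, while the cattle breed and the state stay separate even though all three carry the label “Jersey.”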

One thing to note about these basically ontological assertions is that they are, for the most part, noncontroversial. People argue about the age of the Earth, but rarely about its existence. A few basic organizational facts about geopolitics are in dispute (e.g., the status of Taiwan as an independent state), but such disputes are infrequent enough so as to not challenge the basic utility of the agenda. As long as the Commons sticks to basic facts, its wide acceptance as the definitive source of universal identifiers will remain within reach.

It is hard to overstate the improvement that such a regime will make to the information architecture of the Internet. When widespread consistency is attained on the simple matter of reference to real-world entities, search will become vastly more efficient, “data fusion” across independently maintained datasets will move from being a black art to a trivial science, and whole new industries of higher-level data organization and visualization will be enabled. In a sense, the web will be turned inside out. Today, virtually all content is organized by the owner of the information, and we depend on the miracle of the search engines to sort it all out. The existence of the trellis of the Commons will support the evolution of a web organized by topic. This will not eliminate the need for search engines, but it will make their task much easier, and accelerate their evolution toward truly intelligent agents, sharing a common referential framework with the humans they exist to serve.4

THE WORLD WIDE DATAFLOW

Getting past the client-server model will be a long, slow slog. Technological inertia and backward-looking economic interests will conspire to ensure this. But slowly the barriers to progress will yield. The pressure will come from several sources. The already-common disasters associated with too-big-to-fail centralized services will become ever more common and serious. As a result, it will begin to dawn on the public that the vulnerabilities that these events expose are not growing pains but are inherent in the model. Moreover, the potential for political and criminal manipulation of vital information infrastructure will become an increasing concern.5

A second source of pressure will be the growing need for a more nuanced model of data ownership and control. It is an inherent characteristic of today’s database technologies (and the client/server model is simply a thin veneer over these technologies) that whoever controls the database also controls all of its contents. For practical purposes, the database and the entries it contains are one entity. But it doesn’t have to be this way. The kinds of information architectures explored in Chapter 7 are quite capable of separating the ownership and control of an aggregation of data from that of the individual data items themselves. Thus, for example, an aggregator of urban travel information could maintain geographically organized collections of, say, restaurant menus, while the restaurants themselves could maintain ownership and control of their respective menus. (The web accomplishes this today only via the use of lists of links, which assume constant connectivity and cannot achieve uniformity of information presentation.) More importantly, since the restaurateurs own and can edit the “truth copy” of their menus, they need make their updates only once, without having to worry about dozens of obsolete copies floating around in other people’s databases, as is common practice today.

But the most important motivation to abandon client-server lies in the requirement for data liquidity. As we climb toward Trillions, it will become increasingly obvious that the number of mobile devices for which the average consumer will be willing to pay a $29.95/month Internet connectivity fee will be extremely limited. And, as we have seen, for a great many information devices, direct Internet connectivity of any kind is simply not appropriate. Yet, these devices will need to be able to acquire and hold information objects of various and, in general, unpredictable kinds. We cannot build such a world if all access to information is predicated upon real-time connectivity with remote, centralized servers.

Two major changes will have to happen before there is much progress here: Peer-to-peer networking has to cease being thought of as synonymous with music stealing, and end-to-end encryption of consistently identified data objects must become routine. Once both of these milestones are reached, things will start to move quickly. First of all, various kinds of storage cooperatives, some planned and others completely accidental, will start to appear. All users will be in possession of more data storage capacity than they could possibly know what to do with (they won’t be able to help it). Therefore, the cost of backing up your friends’ data objects will be negligible. But, the same will be true of strangers’ data. The data will flow where they will, being cached repeatedly on the way. People will just stop thinking about it. It will be realized that the best way to protect data will be to scatter copies to the wind, trusting to encryption to protect sensitive data. If you lose your last copy, Google or some descendant of it will find a copy somewhere using its UUID as its definitive identity.

The resulting information space will eventually begin to feel less like a network and more like an ocean, with data everywhere, flowing both in waves of our making and also in natural currents. It is not that everything will be everywhere all the time, but what data objects are available, and where, will be a complex function of deliberate actions on our part and the natural results of vast numbers of uncoordinated incidental actions. Increasingly intelligent caching algorithms will speculatively “pre-position” data where they are likely to be needed. For example, the act of using the Net to research a summer vacation road trip from the comfort of your living room will automatically cause all relevant information about points of interest along all of the candidate routes to be pushed to your car down in the garage, where it will be available on the trip, even if you are in the middle of nowhere out of Internet range. If your plans change, your car will be able to fill in missing information along your new route—picking it up from cooperative passing vehicles.

Kids too young to have real cell phones will have toy facsimiles for use on the playground—talking and texting to each other, and their parents, via short-hop no-cost P2P data links. The range will be very short, but messages will hop from toy to toy bucket-brigade style, such that they will tend to work just fine over useful distances. Their parents, having real cell phones, will carry around a good slice of all human knowledge in their pockets. Certainly a recent snapshot of whatever Wikipedia evolves into will be routinely cached for off-line browsing, as will a large library of public-domain reference books, supplemented, as today, by purchased copyrighted materials. Also available for purchase will be tiny bits of very fresh data—reviews of today’s specials in nearby restaurants and so forth—produced on a for-profit basis by bored diners with a few minutes on their hands and paid for via tiny microtransactions, rendered profitable by the lack of any middleman—not even the cell company.
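The bucket-brigade idea can be sketched as a simple search over devices that are within radio range of one another. This is a toy model under our own assumptions: the `relay_path` function, the device names, the coordinates, and the range value are all hypothetical, and a real system would have to cope with moving devices and lost messages.

```python
from collections import deque
from math import dist


def relay_path(positions, source, target, radio_range):
    """Return the devices a message hops through, or None if unreachable.

    positions maps a device name to its (x, y) location; two devices can
    exchange a message directly only if they are within radio_range.
    """
    previous = {source: None}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        if current == target:
            path = []
            while current is not None:
                path.append(current)
                current = previous[current]
            return path[::-1]
        for other, location in positions.items():
            in_range = dist(positions[current], location) <= radio_range
            if other not in previous and in_range:
                previous[other] = current
                queue.append(other)
    return None


# Four toys on a playground; units are arbitrary and the layout is invented.
playground = {"ann": (0, 0), "ben": (8, 0), "cy": (16, 0), "dee": (40, 0)}
```

With a range of 10 units, a message from `ann` reaches `cy` only by hopping through `ben`, and `dee` is simply out of reach until some other toy wanders into the gap.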

But this is not to say that amateurism will reign. Even if we achieve the exceptionally high standard of usability design such that average users would be able to work such magic—a dubious premise amid such vast complexity—self-reliance will be limited by individual motivation. Put another way, most people have better things to do than to futz with their technological environs, no matter how fascinating and powerful. Entire new industries will be born around managing and visualizing people’s personal information spaces. It will be taken for granted that—one way or another—any bit of data can be coaxed to flow to any desired place. But that doesn’t mean that it won’t sometimes be tedious. As today’s technical challenges become routine and trivial, new ones will pop up.

PUBLISHING

In Chapter 8 we explored the idea of publisher as aggregator of trust. As we said, this is the one aspect of the role that publishers play today that is least likely to be supplanted by new technologies. This assumption interacts with the emergence of the Information Commons and the World Wide Dataflow in interesting ways. We are going to need a scheme that allows this important role to survive in the absence of ink and paper and physical bookstores and big central servers. In the case of traditional “large” documents like books and magazines, this is a problem that is nearly solved. Despite appearances, the publishing industry is actually a bit ahead of the curve in several respects, including content delivery in the form of locally-stored data objects, the use of per-item encryption, (mostly) multiformat off-line ebook readers (both hardware and software), and a fairly seamless cross-device reading experience.6

The industry was forced to embrace these forward-looking techniques by the market reality that readers were not about to accept electronic books until they were as portable and untethered as their traditional paper competitors. As a result, the rapidly emerging ebook industry provides a case study of the future of these techniques.

What has not yet happened is the extension of these ideas to smaller and more intimate acts of publication. Blogging is still almost exclusively client-server based and under the administrative thumb of large, dedicated service providers. The same is true of the many Internet discussion groups, which mostly depend on (and are bound to the policies of) services such as Yahoo! Groups. Wikipedia’s success is dependent in part on its (and its users’) willingness to eschew attribution and stable, well-defined releases. The latter example is particularly provocative. How might we evolve the Wikipedia model to support features requiring clear object identity and provenance (and thus make it acceptable for use in mission-critical situations, such as legislation and regulation) without killing the goose that laid the golden egg? We suspect that most members of the Wikipedia community would say that this is impossible—that we just don’t get it. We respectfully disagree. The Wikipedia model as it now exists stands as a spectacular monument to the potential of distributed, community-sourced authorship. But we believe taking it to the next level will require an equally bold experiment in distributed publication.

The key to making this work lies in the Information Commons. Wikipedia already contains much of the same information that will form the core of the Commons. It is probably the world’s best source of “lists” of various sorts: Countries and their administrative subdivisions, feature films over the decades, episodes of The X-Files—they are all there. But they are just lists, useful to humans but awkward and unreliable as organizers. Once they are moved into the Commons and given unique identifiers they will become much more. They will be part of the trellis against which third parties can publish their own information, outside of Wikipedia’s (or anybody else’s) administrative or policy framework. It will work something like this: If I wanted to post, say, a literary analysis of the important X-Files episode “The Erlenmeyer Flask,” I would compose my paper in the form of an information object.

It (and all other objects in this story) would have a unique identifier and would identify me as the author. I would sign this document with a cryptographic signature. Although this would not prevent others from editing my document after I send it out into the wild, it would make it possible for any such modification to be detected by any reader, thus flagging it as unreliable. By signing the document myself, I am essentially self-publishing—nobody is vouching for the veracity of the information except me. On the other hand, if I wanted to publish the review under the auspices (and policies) of some third-party publisher, such as a future Yahoo! Groups, or even Wikipedia, I would instead submit the item to them for editorial review. After approval, they would sign the object on my behalf. I would still be author, but they would be publisher, thus presumably adding a modicum of credibility to my work. Note that in neither case do Yahoo! or Wikipedia assume physical control of my information. It remains a mobile data object, free to flow through the World Wide Dataflow without anybody’s permission. But it can do so without losing provenance. Readers can count on the answer to the question “who says?” Publishers can count on being able to enforce their standards and practices; and authors always retain the ultimate ability to self-publish without anybody’s permission.
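The tamper-detection property described here can be illustrated with a few lines of code. This is a sketch under stated assumptions rather than a real publishing scheme: the `sign` and `verify` helpers, the field names, and the placeholder identifier are hypothetical, and an HMAC with a shared key stands in for a true public-key signature only because it is available in the Python standard library. A real system would use a public-key scheme so that any reader could verify without holding the signer’s secret.

```python
import hashlib
import hmac
import json


def canonical_bytes(value):
    """Serialize deterministically so identical content yields identical bytes."""
    return json.dumps(value, sort_keys=True, separators=(",", ":")).encode("utf-8")


def _tag(obj, publisher, key):
    # The tag covers both the object and the publisher's name.
    payload = canonical_bytes({"object": obj, "publisher": publisher})
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def sign(obj, publisher, key):
    """Wrap an information object with its publisher and a signature tag."""
    return {"object": obj, "publisher": publisher, "signature": _tag(obj, publisher, key)}


def verify(signed, key):
    """True only if neither the object nor the publisher has been altered."""
    expected = _tag(signed["object"], signed["publisher"], key)
    return hmac.compare_digest(expected, signed["signature"])


# A hypothetical information object; the identifier is a placeholder.
review = {
    "id": "00000000-0000-0000-0000-000000000001",
    "author": "author-identifier-placeholder",
    "about": "The Erlenmeyer Flask",
    "body": "A literary analysis of the episode.",
}
AUTHOR_KEY = b"demo-key-for-illustration-only"
self_published = sign(dict(review), "self", AUTHOR_KEY)
```

The signed wrapper travels with the object wherever it is copied. Anyone may edit the body, but the edit changes the bytes being checked, so verification fails and the copy is flagged as unreliable.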

As always, whether anyone will read the author’s work is another matter. For starters, how will anyone find my review? The answer is largely the same as it is today: Indexing and search services will point to it. But the index will be slightly different. Note that in this story, my review has three basic attributes: It is about something (“The Erlenmeyer Flask”); it is a type of document (a literary review); and it has a publisher (myself, Yahoo!, Wikipedia, etc.). Courtesy of the Information Commons, each of these items has a unique identifier. So, the indexing task reduces to associating each information object with three numbers. This is technically trivial and can be easily done using existing search engine technology, although completely decentralized implementations are also possible. So, if one of my readers wishes to follow my literary “blog,” they can subscribe to “Everything published by xxx about any topic of type ‘Literary Review.’”7

Conversely, if I am an aficionado of the X-Files, I can subscribe to “everything published by anybody about ‘The Erlenmeyer Flask’” of any type. Of course, I might then find myself drinking from a pretty indiscriminate firehose. A good compromise might be to subscribe to “everything published by Yahoo! or Wikipedia (or whatever publishers I prefer as my gatekeepers) about ‘The Erlenmeyer Flask.’”
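The three-attribute index and the subscriptions built on it can be illustrated with a small sketch. Everything named here is an assumption made for the example: the string identifiers stand in for the unique IDs the Commons would assign, and a plain list stands in for a real search service. A subscription is simply a filter in which any attribute left unspecified matches everything.

```python
from typing import Iterable, List, NamedTuple, Optional, Set


class Entry(NamedTuple):
    """One index record: an object ID plus its three Commons identifiers."""
    object_id: str
    about: str
    doc_type: str
    publisher: str


def subscribe(
    entries: Iterable[Entry],
    about: Optional[str] = None,
    doc_type: Optional[str] = None,
    publishers: Optional[Set[str]] = None,
) -> List[str]:
    """Return IDs of objects matching the filter; None means 'any'."""
    return [
        e.object_id
        for e in entries
        if (about is None or e.about == about)
        and (doc_type is None or e.doc_type == doc_type)
        and (publishers is None or e.publisher in publishers)
    ]


# Hypothetical identifiers, for illustration only.
FLASK = "episode:the-erlenmeyer-flask"
REVIEW = "type:literary-review"

entries = [
    Entry("obj-1", FLASK, REVIEW, "me"),
    Entry("obj-2", FLASK, "type:trivia", "anon-42"),
    Entry("obj-3", "episode:pilot", REVIEW, "me"),
    Entry("obj-4", FLASK, REVIEW, "wikipedia"),
]

# Follow one author's literary "blog".
blog = subscribe(entries, doc_type=REVIEW, publishers={"me"})

# The indiscriminate firehose: everything about one episode.
firehose = subscribe(entries, about=FLASK)

# The compromise: the same topic, restricted to chosen gatekeepers.
curated = subscribe(entries, about=FLASK, publishers={"yahoo", "wikipedia"})
```

A linear scan is used only to keep the sketch short; a real index would keep a lookup table per attribute, and nothing in the filter logic requires the index to be centralized.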

It can be seen from this rough sketch that it is quite possible to have our cake and eat it too with respect to distributed but trusted publication. It can also be seen that far from putting online publishers such as Yahoo! out of business, such a scheme defines a new and important role for them. Monetizing that role is a different matter, but it is a challenge not fundamentally different from the one faced in today’s client-server world.

SAFETY, SECURITY, AND PRIVACY

Anyone who has ever received an electric shock at a home power outlet knows that we live our lives surrounded by potentially lethal electrical potentials. Every outlet is like a powerful and incredibly taut spring, waiting for a chance to release its destructive power. Yet, we never give it a thought. This is a testament to more than a century of accumulated engineering, design, and administrative wisdom in the management of our electrical infrastructure. As should by now be clear, the new infrastructure of pervasive computing, although embodying power of a different kind, will be no less potent and therefore potentially no less dangerous. For all the wonders of today’s consumer computing milieu, most of us still think of it as a thing apart from the rest of our lives, not a critical part of our environment. As this changes, so must our attitudes concerning safety and security. Belatedly, references to the computational environment will begin to creep into the building codes. Whether they will be new standards, or an extension to the electrical codes, we cannot say. Just as the electrical code requires a minimum number of outlets in each room and a minimum amount of current for each house, so too, certain minimum data services will eventually come to be part of our definition of “suitable for human habitation.” We are not referring to something like “minimum bandwidth Internet connection” (although, like “lifeline” telephone service, something like that may happen, too). Rather, we are talking about the basic infrastructure built into a house that will have to be there in order for everyday objects to operate properly. The time will come when such capabilities will be seen as basic necessities, like heat and light, and they will be subject to similar safety rules.

There is no point in speculating about the details of such rules, beyond getting a feel for their general nature. But, here are a few guesses: We have already said that many devices will have no need to be on the Internet. But, perhaps certain devices won’t be allowed to be on the Internet. Just as today’s electrical codes require a strict separation between 120 volt power circuits and low-voltage wiring such as doorbell circuits, it may be that strict rules about sequestering certain basic functions from the public information space will prove to be the ultimate protection against malicious remote tampering. Similarly, certain combinations of computational and physical power in the same device might be proscribed.

One of the most interesting potential safety rules involves aggregate complexity. We have already seen that the best single way to thwart the bad guys is to give them no place to hide. Microwave ovens have computers, but they do not at present get hacked. The reason they don’t get hacked is that they can’t get hacked. Their computational systems are simple enough that the ability to make this determination is within our cognitive capacity. Of all the bad things that happen when things get too complex, perhaps the worst is that they become too complex to audit. One could never prove that the last bug has been removed from a modern operating system, no matter how important it might be to do so. For this reason, anything that is too complex to understand is inherently dangerous. So, it is reasonable to predict that when we start taking the safety of our computing infrastructure seriously, part of our arsenal of danger-fighting weapons will be restrictions on the presence of unneeded complexity. We can’t wait.

We have already discussed at length the issues around security, both of data and of devices. The only thing to add here is the observation that in a world as distributed as the one that we are describing, security must be everywhere. Just as every outside door of pretty much every building in the industrial world has a lock, so too will every venue of computation have to take appropriate security measures. Most data will flow in the form of digitally signed data objects. It is likely that the descendants of today’s “firewalls” will, as a first order of business, check the validity of those signatures. Any objects that show signs of tampering will simply be turned away at the door. The next step up from this (and it is a big step) will be to discard (or sequester) any object that is signed by an unknown publisher. Such a policy, although very powerful, would be untenable today for most purposes. It would be like refusing all e-mail from anyone but your close friends. It would effectively limit spam, but you would be giving up much of the value of e-mail access. However, once an infrastructure for adequate networks-of-trust is in place, such tactics will begin to become viable. Traffic limited to “n degrees of separation,” with an appropriately small value of “n,” may well prove to be an efficacious approach to the security problem.
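The two-stage gatekeeping described above (first reject anything whose signature fails, then sequester anything from a publisher more than n steps away in the network of trust) might look roughly like the following. This is a sketch under assumptions: the trust graph, the names in it, and the `signature_ok` callback are all hypothetical, and the signature check itself is left abstract.

```python
from collections import deque
from typing import Callable, Dict, List, Optional


def degrees_of_separation(
    trust: Dict[str, List[str]], start: str, target: str, limit: int
) -> Optional[int]:
    """Breadth-first search: hops from start to target, or None if more than limit."""
    if start == target:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if dist == limit:
            continue  # do not look beyond the allowed radius
        for neighbor in trust.get(node, ()):
            if neighbor == target:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


def admit(
    obj: dict,
    trust: Dict[str, List[str]],
    me: str,
    n: int,
    signature_ok: Callable[[dict], bool],
) -> str:
    """Classify an incoming object as 'reject', 'sequester', or 'accept'."""
    if not signature_ok(obj):
        return "reject"      # shows signs of tampering: turned away at the door
    if degrees_of_separation(trust, me, obj["publisher"], n) is None:
        return "sequester"   # validly signed, but by a publisher outside my network
    return "accept"


# Hypothetical network of trust: each party lists whom it vouches for.
trust = {"me": ["alice"], "alice": ["bob"], "bob": ["carol"]}


def check(obj: dict) -> bool:
    """Stand-in for a real signature verification (assumption)."""
    return obj.get("valid", False)


near = admit({"publisher": "bob", "valid": True}, trust, "me", 2, check)
far = admit({"publisher": "carol", "valid": True}, trust, "me", 2, check)
bad = admit({"publisher": "alice", "valid": False}, trust, "me", 2, check)
```

With n set to 2, an object from "bob" is accepted, one from "carol" (three steps away) is sequestered, and a tampered object is rejected no matter who signed it. Raising or lowering n trades openness against exposure.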

Which brings us to privacy. We have already admitted that we don’t have a whole lot of new ideas in this space. As mentioned, we find the analogy with environmental pollution to be useful. In both cases, technologies that we find too valuable to forgo have very serious negative side effects that can be mitigated but not entirely avoided. As in the case of environmental issues, we suspect that we as a society will deal with the destructive effects of information technology on privacy with a pragmatic combination of government regulation, individual activism, public education, better technologies, and a certain amount of toleration. But, if we go into the future with our eyes open, we can perhaps avoid the analogs to the worst consequences of our blindness to the environmental impacts of the industrial revolution. In The Lord of the Rings, Tolkien had his Elves say, “We put the thought of all that we love into all that we make.” If we love privacy, we need to keep its thought in mind during every step of our climb up Trillions Mountain.

1 At the time of this writing, the U.S. Post Office is running an ad campaign listing all the things (refrigerators, cork boards, snail mail) that have never been hacked. The effort is kind of pathetic, but they do have a point: The best way to protect a device from being hacked is to make it too simple to be hackable. In a sufficiently simple system, there is just no place to hide. This, it seems to us, is a desirable trait for the light switches in hospital operating rooms.

2 And in this we include the only marginally less-complex post-PC devices such as tablets and smartphones.

3 One sees this kind of thing today in the hobbyist “maker” community. Unfortunately, it usually involves voiding warranties, skirting safety certifications, and other practices that are unacceptable to professionals. Engineered component architectures will solve these problems.

4 MAYA has worked for many years toward the development of an architecture for the Commons in the context of its “Civium” initiative. Read about it here: http://www.civium.org.

5 On the very day that we write these words (January 18, 2012), many web sites, including Wikipedia, have “gone dark” in a 24-hour protest against two pieces of pending legislation: the Stop Online Piracy Act (SOPA) and the PROTECT IP Act (PIPA). We happen to agree with their sentiment, but the fact that a single organization (or in some cases, a single individual) can unilaterally flip a switch and disable what has become a vital bit of worldwide infrastructure deserves even more attention than a piece of ill-advised legislation.

6 We refer here to such features as the ability to read a single licensed copy of an ebook on multiple platforms; to have bookmarks and “what page am I on” information shared across devices; and such collaborative features as group annotation.

7 The skeptical reader might well ask, who assigned the ID to the document type “Literary Review”? This is an example of the so-called ontology problem. Creating generalized type systems of this sort is a known hard problem. Our answer is “don’t worry about it.” What is important is the framework, not the particulars. Once the information architecture is in place to make this an important problem, the creative pressures of the marketplace will soon carve out a good-enough solution. Markets are better than designers at this kind of thing.