INTERLUDE

Yesterday, Today, Tomorrow: Data Storage

Like the foundations of a building, data storage technologies have little glamour. Yet, they are the base upon which the edifice of modern computing is built. One of the major theses of this book is that many otherwise obvious innovations have been rendered impossible due to inadequate data storage architectures. It is worth a closer look at the evolution of these architectures.

YESTERDAY

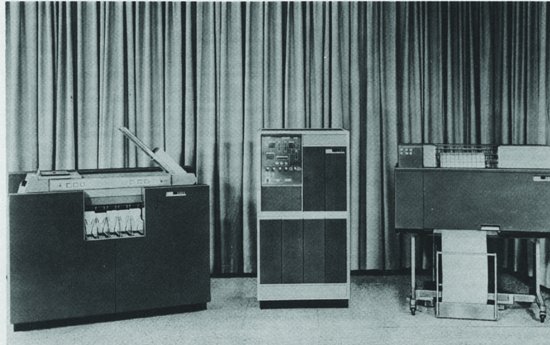

We have long since come to think of computers as containers for information, so it is a bit surprising to note that an entire generation of commercially viable computers operated with essentially no persistent storage at all. Typical of this era was the IBM 1401 (see Figure I2.1). Introduced in 1959, and sold through the early 1970s, this extremely successful low-end machine brought electronic data processing within reach of thousands of small businesses. And yet, it had no disk drive or other digital storage of any kind. Each power cycle was essentially a “factory reset.”

Figure I2.1 IBM 1401 computer circa 1961

Source: Ballistic Research Laboratories, Aberdeen Proving Ground, Maryland

How was such a device useful? The key was a complete reliance on external storage, typically punched cards. Bootstrapping the 1401 involved placing a deck of cards into a bin and pressing a button labeled LOAD. Each of the 80 columns of punches on each card was interpreted as a character of data and copied into main memory and then executed. What happened then was completely dependent on the particular deck of cards that one had chosen. Thus, for example, a programmer might type (on a “keypunch machine”) a program in the Fortran programming language and stack it behind a pre-punched deck containing the Fortran compiler. Finally, additional cards containing the data to be processed would be appended to the end of the deck, which would then be fed into the card reader. When the user hit LOAD, the cards would be read and, if all went well, a few seconds later the massive, noisy 1403 line printer would cheerfully spew out the desired results. Then, the user would step aside, and the next programmer in line would repeat the operation with his or her own deck.1

Even larger machines that did have mass-storage tended to use it only for supervisory purposes, and this general pattern of external storage for user data persisted well into the time-sharing era. A well-equipped college campus of the 1970s, for example, had “satellite” computing stations consisting of a row or two of keypunch machines, a card reader, and a line printer, connected to the campus mainframe via telephone wires. Except for the fact that the Fortran compiler was probably stored centrally rather than in cards, the user experience at these self-service stations was practically unchanged from that of the 1401 days.

During the 1970s and 1980s, this usage pattern was slowly supplanted by timesharing systems, in which the satellite card readers/printers were replaced by “robot typewriters” (and later, CRT terminals) with which users “talked” to the mainframe using arcane “command line” languages. Since such devices had no card readers, this mode of interaction required the development of “file systems:” conventions for persistently storing and editing what amounted to images of card decks on remote disk drives. This card image model of storage was, at first, taken quite literally, with editors limiting each line of text to the same 80 columns of data found on punch cards.2 Often, the storage of binary (i.e., non-human-readable) data was difficult or impossible.

Dealing with this deficiency was key to the development of early online interactive systems, most notably the SABRE airline reservation system. Such systems were barely feasible given the hardware of the era, and so required a great deal of exotic optimization. As a result, their storage systems tended to be different animals from the largely textual file systems familiar to timesharing users. Such efforts took a big step forward with the development of what came to be known as the Relational Model—the first mathematically rigorous approach to data storage. Relational databases promised (and eventually delivered) a dramatic increase in storage efficiency, one that was badly needed given the impoverished hardware of the day.

Such efficiency, however, was purchased at a great cost: The “relations” after which the approach was named are in fact large tables of identically formatted data items. This works very well when we are dealing with “records” of many identical transactions (such as airline ticket sales) whose structures are fully specified in advance and rarely change, and that are under the control of a single agency. It is not so good if the structure of the data changes frequently or if we want to routinely pass around individual records from machine to machine and from user to user as one might in a pervasive computing environment. But such requirements were not even on the radar, so all was well.

As mainframes gave way to minicomputers and then to personal computers, the relationship between users and their data evolved as well. Instead of files being a remote abstraction kept on the user’s behalf on some remote machine, they became more tangible and local—more like personal property. One kept them first on floppy disks, and then on hard drives backed up by floppy disks. This is how we came to think of data as being in the PC. Gradually, “computing” started to seem incidental. Essentially, PCs came to be seen as containers for data: a place to keep my stuff (where “stuff” first meant words, but quickly evolved to include pictures, games, music, and movies). This is where things stood at the dawn of the consumer Internet.

TODAY

Given the above trajectory, one might have expected that adding the Internet to the mix would have had a predictable result: Users’ stuff would begin to flow from machine to machine in the form of files. Services would emerge permitting users to find things on each other’s disks. File systems would become distributed (i.e., they would span many machines). The boundaries among machines would start to blur. Cyberspace would come into focus.

In the event, of course, nothing of the sort happened. Despite a number of large-scale efforts in that direction—most notably CMU’s Andrew Project (headed by MAYA co-founder Jim Morris)—no such public information space has emerged. It has long since become technically easy to move files from machine to machine (e.g., via e-mail attachments), but such transactions, while not uncommon, are peripheral to the workings of the Net, not central to it.

There are a number of reasons for this, but perhaps the most basic one has to do with the management of the identity of information. Strange to say, neither file systems nor database architectures have seriously grappled with this fundamental issue. In the system we have built, our ability to keep different pieces of information straight is dependent on the walls provided by machine boundaries. If we took down those walls, we would have chaos. To see why this is true, imagine if even two computers in your office had their disks merged. Even ignoring privacy and security issues, the experiment would likely produce disaster. Surely there would be instances where the same names were used for different files and folders. What if older and newer versions of the “same” file found themselves on the same disk? Worse, what if versions had diverged? Which one should prevail? And the situation would be no better if we attempted to merge “records” from relational databases. The identity of each row (its so-called primary key) is only unique within a given table. Unless we move the entire table (which is likely to be immense) and then carefully keep it separate from other such tables, chaos will ensue.

It is not that these problems are overly difficult. It is just that they are unaddressed. The industry has found it more convenient to keep the walls and instead to encourage users to stop thinking of their computers as data containers and to return to what amounts to the timesharing model of data storage—cunningly renamed The Cloud. And this has happened at a time in which the cost of local disk storage has become almost ludicrously cheap.

TOMORROW

However much the industry may prefer centralized data storage, such models make little long-term sense and certainly will wane. Nonetheless, the move to network-based storage will likely be successful at driving the last nails into the coffin of the file system as a storage model. What will happen instead, while clear, is a bit difficult to describe.

We are about to witness the emergence of a single public information space. This space does not yet have a name. As we mentioned in Chapter 3, we at MAYA call it GRIS, for “Grand Repository In the Sky.” In articulating what GRIS is, it is helpful to first list some things that it isn’t. It isn’t a network of public computers. In this vision, the computers remain in private hands. It isn’t the Internet. The Internet is “public” in the same sense that GRIS is, but the Internet is a public communications utility. It is made of wires, fiber optic cables, and switches. GRIS is made entirely of data. It isn’t a collection of “free” (in the sense of “without cost”) data. Much of it will be without cost and available to all—just like the web. But, also like the web, much information will be private and/or available only at a price. Finally, it isn’t a huge database or data storage service that gathers together all the world’s information.

Rather, GRIS is a massively replicated, distributed ocean of information objects, brought into existence by shared agreement on a few simple (very simple) conventions for identifying, storing, and sharing small “boxes” of data, and by a consensual, peer-to-peer scheme by which users—institutional and individual—agree to store and share whichever subset of these boxes they find useful—as well as others that just happen to come their way. Popular “boxes” will tend to be replicated in especially large numbers and thus be readily available almost instantly when needed. Obscure and little-used items, in contrast, might take some time and effort to track down. But, as long as there is any interest at all—even if only by archivists or hobbyists—the chances of any given bit of information being lost forever can be arranged to be arbitrarily small.

Once this system is in place, the idea of putting data in particular devices (be they removable media, disk drives, or distant pseudo-“cloud” server farms) will rapidly begin to fade. Taking its place will be something new in the world: definite “things” without definite locations. Because of this newness, we don’t yet quite know how to talk about such things. These things will be quite real and distinct, but they will not depend on spatial locality for this distinctness. Asking where they “are” will be like asking where Moby-Dick is. They will be everywhere and nowhere—existing all around us in something that properly deserves the metaphor of cloud. “Tangible” isn’t quite the right word to describe them, yet we will rapidly come to take for granted the ability to pull them out of GRIS on-demand, using any computing device that may be handy to give them tangible form and human meaning.

Most importantly, all of this will come without the need to cede control of our data (and thus our privacy) to any central authority, either corporate or governmental. We will no longer have to trade control of our own information in order to benefit from the magic of the Net. We will, finally, have a true Information Commons, part of the human commonweal and free to all. The stage will be fully set for the emergence of a true cyberspace. The real Internet revolution will have begun.

1 In MAYA’s offices, we maintain a little museum dedicated to the history of technology, design, and culture. Among its holdings is a card deck that, if fed into a 1401, will churn out a staccato rendition of “Anchors Aweigh” on the line printer. Assuming that there are no remaining operational 1401s in the world, it is an interesting philosophical question whether that deck still counts as a representation of “Anchors Aweigh.”

2 Indeed, the aforementioned “glass teletype” CRT terminals almost universally were designed to display 24 lines of 80 characters each. Old habits die hard.