Network connectivity options for Oracle on Linux on IBM System z

This chapter describes the various options that are available to configure the private interconnect in a shared infrastructure environment for Oracle Real Application Clusters (RAC) with Linux on System z. In addition, the network best practices that are described can be used when application servers are configured to connect to Oracle databases that are running under Linux on System z in a highly available environment.

If a single instance Oracle database without Oracle Clusterware (RAC) is installed, you do not need to configure the private interconnect for RAC. We introduce this topic for planning purposes so you can review your options as you are preparing for your installation.

This chapter focuses primarily on configuring the network on Linux on System z for performance and high availability, both when you are connecting to another System z system for Oracle RAC and when you are connecting to application servers that run on machines that are separate from the database server.

The following High Availability (HA) network connectivity options are described:

•Two Open Systems Adapter (OSA) network cards that are configured to a z/VM VSWITCH that is defined as active/passive.

•Two OSA cards that are configured to a VSWITCH defined by using z/VM link aggregation (active/active).

•Two OSA cards that are configured with Linux Ethernet bonding.

•Two OSA cards that are configured with Oracle’s new Redundant Interconnect feature (Oracle RAC Interconnect only).

The z/VM and Linux configurations for these options are described in detail in 3.6, “Setting up z/VM” and 3.7, “Linux setup for Oracle RAC Interconnect Interfaces”. The decision to incorporate more HA network solutions is based on the business requirements, hardware availability, and the skill sets available. For example, a site that has strong z/VM skills might decide to use the z/VM VSWITCH options. Sites with stronger DBA skill sets might decide to use the Oracle Redundant Interconnect capabilities with Linux on System z.

This chapter includes the following topics:

3.1 Overview

Oracle RAC with Linux on System z was one of the first certified and supported virtualized platforms for running Oracle RAC in a virtual environment with z/VM. Table 3-1 shows the current certification matrix for running Oracle RAC with Linux on System z.

Table 3-1 Supported Virtualization Technologies for Oracle database and RAC product releases¹

| Platform | Virtualization Technology | Operating System | Certified Oracle Single Instance Database Releases | Certified Oracle RAC Databases Releases |
|---|---|---|---|---|
| IBM System z | z/VM and System z LPARs | Red Hat Enterprise Linux<br>SUSE Linux Enterprise Server | 10gR2¹<br>11gR2¹ | 10gR2¹<br>11gR2¹ |

¹ Oracle RAC is certified to run virtualized with z/VM and by using native logical partitions.

A key component for a successfully running Oracle RAC database is the network interconnect between nodes. The default for Oracle RAC in 11gR2 allows up to 30 seconds of network interruption between nodes before the cluster evicts an unresponsive node.

The performance of the cluster interconnect is an important consideration for reducing latency and avoiding lost fragments and lost Oracle data blocks between nodes. Table 3-2 shows the certified Oracle RAC interconnect configurations for running RAC on System z.

Table 3-2 RAC Technologies Matrix for Linux Platforms

| Platform | Technology categories | Technology | Notes |
|---|---|---|---|
| IBM System z Linux | Server Processor Architecture | IBM System z | Certified and supported on certified distributions of Linux running natively in LPARs or as a guest OS on z/VM virtual machines, deployed on IBM System z 64-bit servers |
| | Network Interconnect Technologies | VLAN with one System z Ethernet over Gigabit OSA card for two System z<br>HiperSockets | |

IBM System z is an ideal platform for consolidating Oracle RAC workloads. One example of this is the System Assist Processors (SAPs) that are unique to System z. SAPs are internal System z processors that offload network and I/O processing cycles from the main processors that an Oracle RAC node might be using.

CPU offload helps prevent Oracle RAC interconnect CPU starvation wait events. CPU usage still should be monitored to avoid interconnect waits that are related to CPU starvation.

Consolidating databases in a shared System z RAC environment is supported, if the network traffic for the private interconnect is restricted to networks with similar performance and availability characteristics. If the environment requires increased security between RAC clusters, VLAN tagging can be used between distinct Oracle cluster nodes.

3.2 Network considerations for running Oracle RAC with Linux on System z

This section describes some of the architectural requirements for designing an Oracle RAC Interconnect environment on a System z Linux system.

The minimum recommendation for an online transaction processing (OLTP) database is a 1 Gb network. Decision Support/Data Warehouse databases can require greater than a 1 Gb network interface for the Oracle Interconnect. It is important to note that this is the minimum recommendation and monitoring of the network interfaces is needed to ensure that the performance is running at acceptable levels.

For example, 1000 Mbits (1 Gb) is equivalent to 125 MBps. When you exclude header and pause frames, this can equate to a maximum of 118 MBps per 1 Gb network interface. When sharing a network card across multiple databases, the interface must support the peak workload of all the databases. It is recommended that 10 Gb network interfaces are used for shared network workloads.
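The arithmetic above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and the per-database peak rates are hypothetical, and applying the 118/125 usable fraction to a 10 Gb interface is an assumption rather than a measured value.

```python
def line_rate_mbytes_per_s(gigabits: float) -> float:
    """Convert a nominal link speed in Gb/s to MB/s (1 Gb = 1000 Mbit, 8 bits per byte)."""
    return gigabits * 1000 / 8


# Usable fraction from the text: about 118 of 125 MBps on a 1 Gb interface.
# Assumption: the same fraction is applied to faster interfaces.
USABLE_FRACTION = 118 / 125


def fits_on_link(peak_mbytes_per_s: list[float], gigabits: float) -> bool:
    """Return True if the combined peak workload fits the usable bandwidth."""
    usable = line_rate_mbytes_per_s(gigabits) * USABLE_FRACTION
    return sum(peak_mbytes_per_s) <= usable


# Hypothetical peak interconnect rates (MBps) of three databases sharing one card.
peaks = [60.0, 45.0, 30.0]

print(line_rate_mbytes_per_s(1))   # nominal MBps of a 1 Gb interface
print(fits_on_link(peaks, 1))      # combined peak of 135 MBps on 1 Gb
print(fits_on_link(peaks, 10))     # the same workload on 10 Gb
```

With these sample values, the combined peak of 135 MBps exceeds what a single 1 Gb interface can carry, which is the situation in which a 10 Gb interface is recommended.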

The network interconnect for Oracle RAC must be on a private network. If you are configuring the private interconnect between System z physical machines, there must be a physical switch configured ideally with one network hop between systems. The private interconnect should also be a private IP address in the range that is shown in the following example:

Class A: 10.X.X.X

Class B: 172.(16-31).X.X

Class C: 192.168.X.X where (0 <= X <= 255)

For example, if the Oracle private interconnect is configured with a publicly routable IP address, other systems might affect the interconnect traffic of the Oracle RAC database. Although it is not mandatory, using private IP address ranges for the interconnect configuration is a recommended best practice.
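A planned interconnect address can be checked against the three private ranges that are listed above. The following sketch uses the Python standard library `ipaddress` module. The function name is hypothetical, and the CIDR blocks are the usual notation for the three ranges shown.

```python
import ipaddress

# The three private ranges listed above, written as CIDR blocks.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),       # 10.X.X.X
    ipaddress.ip_network("172.16.0.0/12"),    # 172.(16-31).X.X
    ipaddress.ip_network("192.168.0.0/16"),   # 192.168.X.X
]


def is_private_interconnect_address(address: str) -> bool:
    """Return True if the address falls in one of the three private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_RANGES)


for candidate in ("10.1.28.1", "172.31.255.254", "172.32.0.1", "192.168.5.7"):
    print(candidate, is_private_interconnect_address(candidate))
```

The explicit list is used instead of the `is_private` attribute because that attribute also accepts other reserved ranges, such as loopback and link-local addresses.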

An Oracle RAC workload sends a mixture of short messages of 256 bytes and long messages that carry database blocks of the database block size. Another important consideration is to set the Maximum Transmission Unit (MTU) size to be a little larger than the database block size. To support a larger MTU size, the network infrastructure (switches) should be configured to support jumbo frames for the Oracle Interconnect network traffic.

Table 3-3 shows the extra number of network reassemblies that are required when the MTU size is not set to a value that is larger than database block size (8K).

Table 3-3 Example of setting the MTU Size to a size that is greater than the DB Block Size

| ‘netstat -s’ of interconnect | MTU Size of 1492 (default) | MTU Size of 8992 (with 8K DB block size) |
|---|---|---|
| Before reassemblies | 43,530,572 | 1,563,179 |
| After reassemblies | 54,281,987 | 1,565,071 |
| Delta reassemblies | 10,751,415 | 1,892 |

The smaller MTU results in a much higher number of network reassemblies. Fragmenting packets on the transmit side and reassembling them on the receive side consumes extra CPU, so a high reassembly count can increase CPU usage.
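The effect of the MTU size on a single database block can be estimated with a short calculation. The sketch below is illustrative and rests on stated assumptions: the block travels in one UDP datagram over IPv4, the IP header has no options, and any extra header that Oracle adds to the block is ignored. The function name is hypothetical.

```python
import math

IP_HEADER = 20   # bytes, IPv4 header without options (assumption)
UDP_HEADER = 8   # bytes


def fragments_per_block(block_size: int, mtu: int) -> int:
    """Estimate how many IP packets carry one database block sent as a UDP datagram."""
    datagram = block_size + UDP_HEADER
    if datagram + IP_HEADER <= mtu:
        return 1  # fits in one packet, so no reassembly is needed
    # Every fragment except the last carries a payload that is a multiple of 8 bytes.
    per_fragment = (mtu - IP_HEADER) // 8 * 8
    return math.ceil(datagram / per_fragment)


print(fragments_per_block(8192, 1492))   # 8K block with the default MTU
print(fragments_per_block(8192, 8992))   # 8K block with the larger MTU

# Delta values from Table 3-3 (after minus before).
print(54_281_987 - 43_530_572)
print(1_565_071 - 1_563_179)
```

Under these assumptions, an 8K block is split into six fragments with an MTU of 1492 and is sent as a single packet with an MTU of 8992, which is consistent with the large difference in reassembly counts in Table 3-3.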

In the physical switch configuration that is uplinked from the System z machine, it is recommended to prune out the private Oracle Interconnect traffic from the rest of the network.

3.3 Virtual local area network tagging

Larger network environments with many systems are sometimes configured into virtual local area networks (VLANs). VLAN tagging helps address issues such as scalability, security, and network management.

The use of a separate interconnect VLAN for each Oracle RAC cluster helps minimize the impact of network spanning tree events that affect other RAC clusters in the network.

VLAN tagging can be used to segregate the Oracle Cluster interconnect network traffic between Linux guests that are configured for different RAC Clusters. VLAN tagging is fully supported for Oracle Clusterware Interconnect deployments, if the interconnect traffic is segregated.

VLAN tagging is also useful for sharing network interfaces between clusters. Shared network interfaces should be monitored, and peak usage should stay below 60% - 70% of the bandwidth of the interfaces to avoid performance problems.

Other interfaces can be aggregated to help provide more bandwidth to a saturated network interface.
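One way to apply the 60% - 70% guideline is to sample the byte counters of an interface twice and convert the difference into a utilization percentage. The following sketch is illustrative only. The function names, the sample counter values, and the choice of 60% as the alert threshold are assumptions.

```python
def utilization_percent(bytes_start: int, bytes_end: int,
                        seconds: float, link_gbits: float) -> float:
    """Average utilization of a link over an interval, from two byte-counter samples."""
    bits_sent = (bytes_end - bytes_start) * 8
    capacity_bits = link_gbits * 1_000_000_000 * seconds
    return 100.0 * bits_sent / capacity_bits


def needs_more_bandwidth(percent: float, threshold: float = 60.0) -> bool:
    """Flag an interface whose usage reaches the lower end of the 60% - 70% guideline."""
    return percent >= threshold


# Hypothetical counter samples taken 60 seconds apart on a 1 Gb interface.
quiet = utilization_percent(0, 3_000_000_000, 60, 1)
busy = utilization_percent(0, 5_250_000_000, 60, 1)

print(quiet, needs_more_bandwidth(quiet))
print(busy, needs_more_bandwidth(busy))
```

An interface that is flagged in this way is a candidate for aggregation with another interface or for a move to a faster card.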

VLAN tagging is configured by setting up the Linux network interface files, as described in 3.7, “Linux setup for Oracle RAC Interconnect Interfaces”. Depending on the type of network switch that is used, configuration of the switch ports might be required.

3.4 Designing the network configuration for HA with Oracle on Linux on System z

Another important consideration when you are designing the network for running Oracle on System z is to review the high availability (HA) requirements for the applications.

The following methods can be used to configure an HA solution for a System z environment that uses a multi-node configuration:

•Virtual Switch (Active/Passive): When one Open Systems Adapter (OSA) network port fails, z/VM moves the workload to another OSA card port; z/VM handles the failover.

•Link Aggregation (Active/Active): Allows up to eight OSA-Express adapters to be aggregated per virtual switch. Each OSA-Express port must be exclusive to the virtual switch (that is, it cannot be shared); z/VM handles the load balancing of the network traffic.

•Linux Bonding: Create two Linux interfaces (for example, eth1 and eth2) and a bonded interface, bond0, that is made up of eth1 and eth2 and is used by the application. Linux bonding can be configured in various ways to handle different failover scenarios.

•Oracle HAIP: New in 11.2.0.2 and later, Oracle can use up to four private interconnect interfaces to load balance Oracle RAC Interconnect traffic. Oracle handles the load balancing, and the feature is exclusive to Oracle Clusterware implementations.

Figure 3-1 shows a shared Active/Passive VSWITCH configuration. An Active/Passive VSWITCH configuration provides HA if an OSA card fails. The failover time to the redundant OSA card must be considered in an Oracle RAC configuration so that the failover does not affect the performance of the cluster.

Figure 3-1 Shared Private Virtual Switch across Multiple System z systems (CPCs)

Similar to the Active/Passive VSWITCH configuration is z/VM Link Aggregation. z/VM Link Aggregation allows for up to eight OSA cards to be aggregated to provide failover and extra bandwidth capabilities. One restriction with link aggregation is that any OSA ports that are defined with Link Aggregation must be exclusive to the VSWITCH in the LPAR and cannot be shared with other LPARs or VSWITCHES in that LPAR.

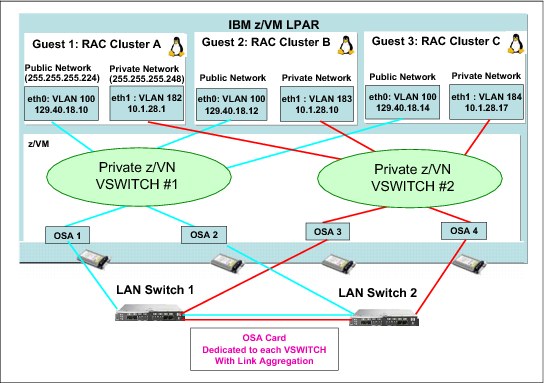

Figure 3-2 shows a VSWITCH active/active Link Aggregation configuration. An entire OSA-Express card is placed in a state of exclusivity by the virtual switch for Link Aggregation operations. The VSWITCH attempts to load balance network traffic across all the available ports automatically in the Link Aggregation port group.

Figure 3-2 Active/Active z/VM Virtual Switch Link Aggregation configuration

Another HA option is to use Linux bonding across multiple network OSA cards, as shown in Figure 3-3. If one network interface fails, the other network interface, which uses a separate network/OSA card and switch, provides the failover capability for the Oracle Interconnect.

Figure 3-3 Linux Bonding Oracle Interconnect across Multiple System z systems (CPCs)

The final HA option to consider is Oracle’s new 11gR2 HAIP capabilities. Similar to Linux bonding, two separate Linux OSA channels that are configured on separate cards and switches are presented to the Linux guest (for example, as eth1 and eth2). Oracle HAIP provides the failover and load balances the Oracle Interconnect traffic across both interconnect connections (eth1 and eth2), as shown in Figure 3-4.

Figure 3-4 Oracle HAIP Interconnect configuration

3.5 Oracle RAC recommended configurations for Linux on System z

Oracle RAC nodes can be configured in many ways in a System z environment. System z’s mainframe heritage of high redundancy and availability allows for greater flexibility in these design considerations.

In Chapter 2, “Getting started on a proof of concept project for Oracle Database on Linux on System z”, various network architectures were introduced that were based on availability.

Table 3-4 provides the recommended network configurations that are based on the architectures available for System z.

Table 3-4 Recommended Oracle RAC network configurations for Linux on System z

| Architecture | Oracle Private Network (interconnect) | Oracle Public Network |
|---|---|---|
| All z/VM Linux guests in one LPAR | • Private Layer2 VSwitch Guest LAN OSA recommended<br>• Real layer 2 HiperSocket possible<br>• Guest LAN HiperSocket not supported | • Shared Public VSwitch recommended<br>• Shared or dedicated OSA card is possible |
| z/VM Linux guests on different LPARs | • Real Layer 2 HiperSocket recommended<br>• Private Layer 2 Gigabit OSA card possible | • Shared Public VSwitch recommended<br>• Shared or dedicated OSA card |
| z/VM Linux guests on different physical machines | Private Layer 2 Gigabit OSA card recommended with physical switch in between (one hop) | Dedicated OSA card Possible |

If business requirements allow, HiperSocket across multiple LPARs on the same System z machine (CPC) is recommended for production environments because this provides protection from any LPAR or z/VM maintenance and key performance benefits with HiperSockets. HiperSockets can provide low latencies in the range of 50 microseconds for a 250 byte packet compared to 300 microseconds for an OSA network card.

3.5.1 Other considerations: Using Oracle Server Pools

Another method of sharing a network interface between systems is to use an Oracle feature called Server Pools.

Server Pools create a logical division of the cluster into pools of servers with many Linux guests using the same cluster interconnect, as shown in Figure 3-5.

Figure 3-5 Using Server Pools

Cluster management that uses Oracle Server Pools can be used for planned or unexpected outages. For example, if one of the systems that is shown in Figure 3-5 needs maintenance in LPAR 1, Oracle Clusterware migrates the instance to a free available node in LPAR 3. The relocation occurs while the database is fully operational to users.

Oracle Server Pools work well in a System z environment because resources such as network interfaces, IFL (CPU) capacity, and disk storage are shared. When the database moves to another node, the resources can be used by the other nodes.

3.6 Setting up z/VM

The following section describes the z/VM setup steps when you are configuring a private VSWITCH with VLAN tagging for Oracle RAC systems.

To make use of VLAN tagging and failover, the following commands are used to dynamically define and grant access to a separately created Layer 2 VSWITCH:

DEFINE VSWITCH ORACHPR ETHERNET RDEV 1100 3870 VLAN 100 PORTTYPE TRUNK

DEFINE NIC 1420 QDIO DEV 3

SET VSWITCH ORACHPR GRANT MAINT

COUPLE 1420 TO SYSTEM ORACHPR

SET VSWITCH ORACHPR GRANT ORARACA2 VLAN 182

SET VSWITCH ORACHPR GRANT ORARACB2 VLAN 183

SET VSWITCH ORACHPR GRANT ORARACC2 VLAN 184
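When many RAC guests share one VSWITCH, the GRANT statements follow a regular pattern and can be generated from a mapping of guest names to VLAN tags. The following sketch only formats command text in the form that is shown above; it does not issue any command to z/VM. The function name is hypothetical, and the range check reflects the standard 802.1Q VLAN ID range of 1 - 4094.

```python
def grant_commands(vswitch: str, guest_vlans: dict[str, int]) -> list[str]:
    """Format one SET VSWITCH ... GRANT line per guest, in the style shown above."""
    commands = []
    for guest, vlan in guest_vlans.items():
        if not 1 <= vlan <= 4094:
            raise ValueError(f"VLAN ID out of range for {guest}: {vlan}")
        commands.append(f"SET VSWITCH {vswitch} GRANT {guest} VLAN {vlan}")
    return commands


# Guest names and VLAN tags taken from the example in this section.
guests = {"ORARACA2": 182, "ORARACB2": 183, "ORARACC2": 184}

for line in grant_commands("ORACHPR", guests):
    print(line)
```

Keeping the guest-to-VLAN mapping in one place makes it easier to keep the dynamic commands and the SYSTEM CONFIG statements consistent.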

To change the MTU size for the interconnect interfaces to a value that is slightly larger than the 8K database block size, we modified the VSWITCH MTU size to 8992 from the default 1492 size, as shown in the following example:

SET VSWITCH ORACHPR PATHMTUD VAL 8992

To make the change permanent, the SYSTEM CONFIG file that is shown in Example 3-1 requires updating.

Example 3-1 Sample SYSTEM CONFIG for VLAN Tagging

/*-------------------------------------------------------------------------------*/

/* Perf VSWITCH Definition */

/*-------------------------------------------------------------------------------*/

DEFINE VSWITCH ORACHPR ETHERNET VLAN 100 RDEV 1100 3870 PORTTYPE TRUNK

MODIFY VSWITCH ORACHPR GRANT MAINT

MODIFY VSWITCH ORACHPR GRANT ORARACA2 VLAN 182

MODIFY VSWITCH ORACHPR GRANT ORARACB2 VLAN 183

MODIFY VSWITCH ORACHPR GRANT ORARACC2 VLAN 184

VMLAN MACPREFIX 021112 USERPREFIX 020000

For Ethernet vswitches, a unique MACPREFIX must be defined for each switch if the switches must communicate with each other, even if they exist on different CPCs. Example 3-2 shows the access list of a vswitch with VLAN tagging.

Example 3-2 VLAN Tagging access list

q vswitch orachpr acc

VSWITCH SYSTEM ORACHPR Type: QDIO Connected: 4 Maxconn: INFINITE

PERSISTENT RESTRICTED ETHERNET Accounting: OFF

USERBASED

VLAN Aware Default VLAN: 0100 Default Porttype: Trunk GVRP: Enabled

Native VLAN: 0001 VLAN Counters: OFF

MAC address: 02-11-12-00-00-1E MAC Protection: Unspecified

IPTimeout: 5 QueueStorage: 8

Isolation Status: OFF

Authorized userids:

MAINT Porttype: Trunk VLAN: 0100

ORARACA2 Porttype: Trunk VLAN: 0182

ORARACB2 Porttype: Trunk VLAN: 0183

ORARACC2 Porttype: Trunk VLAN: 0184

Uplink Port:

State: Ready

PMTUD setting: EXTERNAL PMTUD value: 8992

RDEV: 1100.P00 VDEV: 0600 Controller: DTCVSW1

RDEV: 3870.P00 VDEV: 0600 Controller: DTCVSW2 BACKUP

Ready; T=0.01/0.01 14:10:43

Example 3-3 shows a detailed configuration for the Private Interconnect VSWITCH with VLAN tagging.

Example 3-3 Detailed VLAN Tagging access list

q vswitch orachpr det

VSWITCH SYSTEM ORACHPR Type: QDIO Connected: 4 Maxconn: INFINITE

PERSISTENT RESTRICTED ETHERNET Accounting: OFF

USERBASED

VLAN Aware Default VLAN: 0100 Default Porttype: Trunk GVRP: Enabled

Native VLAN: 0001 VLAN Counters: OFF

MAC address: 02-11-12-00-00-1E MAC Protection: Unspecified

IPTimeout: 5 QueueStorage: 8

Isolation Status: OFF

Uplink Port:

State: Ready

PMTUD setting: EXTERNAL PMTUD value: 8992

RDEV: 1100.P00 VDEV: 0600 Controller: DTCVSW1

Uplink Port Connection:

RX packets: 39638266 Discarded: 515 Errors: 0

TX packets: 42728123 Discarded: 0 Errors: 0

RX Bytes: 28111347573 TX Bytes: 32500322442

Device: 0600 Unit: 000 Role: DATA Port: 2049

Unicast IP Addresses:

169.254.88.208 MAC: 02-11-13-00-00-0C Remote

169.254.126.130 MAC: 02-11-13-00-00-09 Remote

169.254.204.100 MAC: 02-11-13-00-0A-28 Remote

RDEV: 3870.P00 VDEV: 0600 Controller: DTCVSW2 BACKUP

Adapter Connections: Connected: 4

Adapter Owner: MAINT NIC: 1420.P00 Name: UNASSIGNED Type: QDIO

Porttype: Trunk

Port: 0001

Adapter Owner: ORARACA2 NIC: 1420.P00 Name: UNASSIGNED Type: QDIO

Porttype: Trunk

RX packets: 20220767 Discarded: 515 Errors: 0

TX packets: 15132101 Discarded: 0 Errors: 34

RX Bytes: 15524758705 TX Bytes: 8166344863

Device: 1422 Unit: 002 Role: DATA Port: 0010

VLAN: 0182

Options: Ethernet Broadcast

Unicast MAC Addresses:

02-11-12-00-00-38 IP: 169.254.28.198

Multicast MAC Addresses:

01-00-5E-00-00-01

01-00-5E-00-00-FB

01-00-5E-00-01-00

When you are switching from this setup to use Link Aggregation, it is important to remember that the entire OSA card is used for a port group, not just a subset of three addresses.

If you try to use a range of addresses on this card for another function, then you see “exclusive use errors.”

The network administrator also must change the physical switch to enable port groups. Otherwise, you see a message stating: “LACP (Link Aggregation Control Protocol) was not enabled on partner.”

The advantages of a Link Aggregation setup include increased throughput, resiliency, and bandwidth because I/O that is sent from one of the interfaces (in this case, 1100 interface) can be returned via the other interface, which in our case was 3870.

3.7 Linux setup for Oracle RAC Interconnect Interfaces

Four private interconnect scenarios were tested. Scenarios 1 and 2, which are based on a VSWITCH, are transparent to Linux on System z: the Linux guests see the VSWITCH at the same device address whether it is configured as active/passive or with link aggregation. The following scenarios were tested:

•Two OSA cards configured to a VSWITCH defined as active/passive.

•Two OSA cards configured to a VSWITCH defined by using link aggregation.

•Two physical OSA cards that Linux on System z used to perform Ethernet Bonding.

•Two physical OSA cards given to Oracle to implement the redundant interconnect (HAIP) feature.

The following steps were used to define the various VLAN tagged network interfaces (VSWITCH, Ethernet Bonding, and HAIP). After the z/VM definitions are in place, we assume the following points:

•The virtual NIC device address of 0.0.1410-0.0.1412 was used for our public interface and was always named eth0.

•The private z/VM VSWITCH device address 0.0.1420-0.0.1422 was used for the Oracle Interconnect and was always called eth1.

•The dedicated OSA card device addresses varied between clusters and physical CPCs. There were always two physical OSA cards defined to each of our Linux guests and they were named eth2 and eth3. Table 3-5 shows how these interfaces were used in our study.

Table 3-5 Consolidated table of all Linux Interfaces Used

| Usage | Interface Name | Description |
|---|---|---|
| Public Interface | eth0 | z/VM VSWITCH (separate from interconnect) |
| Private interconnect | eth1 | z/VM VSWITCH |
| Private interconnect | eth2 | Ethernet bonding / HAIP |
| Private interconnect | eth3 | Ethernet bonding / HAIP |

Table 3-5 also shows the multiple Linux interfaces that were configured. If you are using Linux bonding, eth0 and eth1 can be bonded for the Public Interface. We configured two Private Interfaces (eth1 with VSWITCH Aggregation) and used eth2 and eth3 for the Bonded private interconnect solutions.

For the private interconnect, we used two physical OSA cards on each CPC. To segregate different networks (per RAC cluster) that use the same NIC (such as a VSWITCH), we configured VLAN tagging.

This process required the following tasks:

•The system router that the OSA cards of the CPC were connected to needed to be trunked into separate subnets. Each subnet was given a VLAN tag. The VLAN tag identifiers that were used in this study were 182, 183 and 184. Each tag was for a different Oracle RAC cluster.

•The z/VM VSWITCH had to be defined as VLAN aware.

•The z/VM VSWITCH must be defined as Layer 2 for an Oracle Interconnect to function properly.

•Linux configuration files also set the physical OSA cards used for Ethernet bonding and HAIP as Layer 2.

•The MTU size had to match throughout the environment, from system router to z/VM to Linux configuration.

3.7.1 Setting up the private VSWITCH

It is assumed that the VSWITCH device address is online to the Linux system. We use device 0.0.1420-0.0.1422 as an example.

SUSE Linux Enterprise Server 11

Complete the following steps to set up a VSWITCH in SUSE Linux Enterprise Server 11:

1. Add the VSWITCH in /etc/udev/rules.d

2. Ensure that Layer2 is enabled for the Oracle RAC interconnect. Configure the VSWITCH by using the ifcfg scripts. Both a standard script and a VLAN tagged script are shown. The ifcfg scripts can be found in the /etc/sysconfig/network/ directory.

3. Reboot the system and issue the ifconfig command.

4. Add the VSWITCH in /etc/udev/rules.d/. Each network device is placed under the following directory:

-rw-r--r-- 1 root root 1661 Jul 17 10:52 51-qeth-0.0.1410.rules

-rw-r--r-- 1 root root 1661 Sep 14 01:19 51-qeth-0.0.1420.rules

-rw-r--r-- 1 root root 1661 Sep 12 09:14 51-qeth-0.0.1423.rules

The value of ATTR{layer2}="1" is required for the Oracle Interconnect, as shown when running the following command (note the second-to-last line):

$ cat 51-qeth-0.0.1420.rules

#Configure qeth device at 0.0.1420/0.0.1421/0.0.1422

ACTION=="add", SUBSYSTEM=="drivers", KERNEL=="qeth", IMPORT{program}="collect 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1420", IMPORT{program}="collect 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1421", IMPORT{program}="collect 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1422", IMPORT{program}="collect 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="remove", SUBSYSTEM=="drivers", KERNEL=="qeth", IMPORT{program}="collect --remove 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1420", IMPORT{program}="collect --remove 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1421", IMPORT{program}="collect --remove 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1422", IMPORT{program}="collect --remove 0.0.1420 %k 0.0.1420 0.0.1421 0.0.1422 qeth"

TEST=="[ccwgroup/0.0.1420]", GOTO="qeth-0.0.1420-end"

ACTION=="add", SUBSYSTEM=="ccw", ENV{COLLECT_0.0.1420}=="0", ATTR{[drivers/ccwgroup:qeth]group}="0.0.1420,0.0.1421,0.0.1422"

ACTION=="add", SUBSYSTEM=="drivers", KERNEL=="qeth", ENV{COLLECT_0.0.1420}=="0", ATTR{[drivers/ccwgroup:qeth]group}="0.0.1420,0.0.1421,0.0.1422"

LABEL="qeth-0.0.1420-end"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{portno}="0"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{layer2}="1"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{online}="1"
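The Layer 2 setting in a rules file can also be checked with a short script rather than by eye. The following sketch searches the text of a rules file for the ATTR{layer2} assignment that is shown above. The function name is hypothetical, and the sample text is an excerpt of the rules file in this section.

```python
import re

# Matches the layer2 attribute assignment in a qeth udev rules file.
LAYER2_RULE = re.compile(r'ATTR\{layer2\}="(\d)"')


def layer2_enabled(rules_text: str) -> bool:
    """Return True if the rules text sets the qeth layer2 attribute to 1."""
    match = LAYER2_RULE.search(rules_text)
    return match is not None and match.group(1) == "1"


# Excerpt of 51-qeth-0.0.1420.rules as shown above.
sample = '''
ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{portno}="0"
ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{layer2}="1"
ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1420", ATTR{online}="1"
'''

print(layer2_enabled(sample))
```

To check a real file, read its contents with `open(path).read()` and pass the text to the function.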

5. Configure the VSWITCH by using the ifcfg scripts. The following example is a standard ifcfg script (settings such as netmask and broadcast differ based on your network). An MTU size of 8992 was used throughout the network (VSWITCH, OSA cards and network switch):

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth1

BOOTPROTO='static'

IPADDR='10.1.28.1/29'

NETMASK='255.255.255.248'

BROADCAST='10.1.28.7'

STARTMODE='onboot'

MTU='8992'

NAME='OSA Express Network card (0.0.1420)'
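The NETMASK and BROADCAST values in the script must agree with the prefix length in IPADDR. The following sketch derives both values from the address with the Python standard library `ipaddress` module, which can be used to cross-check a script before a reboot. The function name is hypothetical.

```python
import ipaddress


def derive_netmask_and_broadcast(ipaddr_with_prefix: str) -> tuple[str, str]:
    """Derive the netmask and broadcast address from an address in CIDR notation."""
    interface = ipaddress.ip_interface(ipaddr_with_prefix)
    return str(interface.netmask), str(interface.network.broadcast_address)


# IPADDR value from the ifcfg-eth1 script above.
netmask, broadcast = derive_netmask_and_broadcast("10.1.28.1/29")
print(netmask)
print(broadcast)
```

For 10.1.28.1/29, the derived values match the NETMASK and BROADCAST entries in the script.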

6. Configure the VSWITCH by using the ifcfg scripts for VLAN tagging. The following examples are the two ifcfg scripts that were used. All IP information was removed from ifcfg-eth1 and placed into ifcfg-eth1_182 (182 is our VLAN tag). The VLAN tag is given to you by your network administrator. Separate IP information can be placed into the ifcfg-eth1 script for non-VLAN tagging use. No additions are needed under /etc/udev/rules.d:

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth1

BOOTPROTO='static'

STARTMODE='onboot'

MTU='8992'

NAME='OSA Express Network card (0.0.1420)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth1_182

BOOTPROTO='static'

ETHERDEVICE='eth1'

IPADDR='10.1.28.1/29'

NETMASK='255.255.255.248'

BROADCAST='10.1.28.7'

STARTMODE='auto'

MTU='8992'

NAME='OSA Express Network card (0.0.1420)'

Important: The ETHERDEVICE statement is required on SUSE Linux Enterprise Server for VLAN tagging and is the link back to the eth1 device.

7. Reboot the system and run ifconfig to verify (only the eth1 interfaces are shown here):

ora-raca-1:~ # ifconfig

eth1 Link encap:Ethernet HWaddr 02:00:00:6F:77:CF

inet6 addr: fe80::ff:fe6f:77cf/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:15 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:1254 (1.2 Kb)

eth1_182 Link encap:Ethernet HWaddr 02:00:00:6F:77:CF

inet addr:10.1.28.1 Bcast:10.1.28.7 Mask:255.255.255.248

inet6 addr: fe80::ff:fe6f:77cf/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:7 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:578 (578.0 b)

Red Hat Enterprise Linux 6

Complete the following steps to set up a VSWITCH in Red Hat Enterprise Linux 6 (RHEL6):

1. Configure the VSWITCH and ensure Layer 2 in the ifcfg scripts in the /etc/sysconfig/network-scripts/ directory. Both a standard script and a VLAN tagged script are shown in steps 3 and 4 of this procedure.

2. Reboot the system and run the ifconfig command to verify.

3. Configure the VSWITCH by using the ifcfg scripts. The following example is a standard ifcfg script (settings such as netmask and broadcast differ based on your network). An MTU size of 8992 was used throughout the network (VSWITCH, OSA cards and network switch):

[root@ora-racb-1 network-scripts]# cat ifcfg-eth1

DEVICE="eth1"

BOOTPROTO="static"

IPADDR="10.1.28.9"

MTU="8992"

NETMASK="255.255.255.248"

NETTYPE="qeth"

NM_CONTROLLED="yes"

ONBOOT="yes"

OPTIONS="layer2=1"

SUBCHANNELS="0.0.1420,0.0.1421,0.0.1422"

4. Configure the VSWITCH by using the ifcfg scripts for VLAN tagging. The following examples are the two ifcfg scripts used. All IP information was removed from ifcfg-eth1 and placed into ifcfg-eth1_183 (183 is our VLAN tag for our RHEL6 RAC cluster). The VLAN tag is given to you by your network administrator. Separate IP information can be placed into the ifcfg-eth1 script for non-VLAN tagging use:

[root@ora-racb-1 network-scripts]# cat ifcfg-eth1

DEVICE="eth1"

BOOTPROTO="static"

NETTYPE="qeth"

NM_CONTROLLED="yes"

ONBOOT="yes"

OPTIONS="layer2=1"

MTU="8992"

SUBCHANNELS="0.0.1420,0.0.1421,0.0.1422"

[root@ora-racb-1 network-scripts]# cat ifcfg-eth1_183

DEVICE="eth1_183"

BOOTPROTO="static"

IPADDR="10.1.28.9"

MTU="8992"

NETMASK="255.255.255.248"

VLAN="yes"

Important: The VLAN=yes statement is required on RHEL6 for VLAN tagging. Also, the DEVICE statement is the link back to the eth1 device. The VLAN tag is appended to the name.
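The naming convention in these examples, in which the parent device name is followed by an underscore and the VLAN tag, can be validated with a few lines of code. The following sketch splits a name such as eth1_183 into its parent device and VLAN ID. The function name is hypothetical, and the sketch covers only the underscore convention that is used in this chapter; other naming schemes exist and are not handled.

```python
def split_vlan_device(name: str) -> tuple[str, int]:
    """Split a name such as 'eth1_183' into the parent device and the VLAN ID."""
    parent, separator, tag = name.rpartition("_")
    if not separator or not parent or not tag.isdigit():
        raise ValueError(f"not a <device>_<vlan> name: {name!r}")
    vlan = int(tag)
    if not 1 <= vlan <= 4094:  # valid 802.1Q VLAN ID range
        raise ValueError(f"VLAN ID out of range: {vlan}")
    return parent, vlan


print(split_vlan_device("eth1_183"))
print(split_vlan_device("eth1_182"))
```

A name without a tag, such as eth1, raises a ValueError, which helps catch a script that was copied without the VLAN suffix.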

5. Reboot the system and run the ifconfig command to verify (only the eth1 interfaces are shown here):

[root@ora-racb-1 network-scripts]# ifconfig

eth1 Link encap:Ethernet HWaddr 02:11:13:00:13:85

inet6 addr: fe80::11:13ff:fe00:1385/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:35 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:4867 (4.7 KiB)

eth1_183 Link encap:Ethernet HWaddr 02:11:13:00:13:85

inet addr:10.1.28.9 Bcast:10.1.28.15 Mask:255.255.255.248

inet6 addr: fe80::11:13ff:fe00:1385/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:28 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:4289 (4.1 KiB)

3.7.2 Setting up Ethernet bonding

Ethernet bonding is an effective way to increase bandwidth and reliability. In our example, two separate OSA cards were bonded. For our purposes, the device addresses for the two OSA cards are 0.0.1303-0.0.1305 and 0.0.1423-0.0.1425.

SUSE Linux Enterprise Server 11

Complete the following steps to set up Ethernet bonding in SUSE Linux Enterprise Server 11:

1. Add the two OSA card devices to the /etc/udev/rules.d directory.

2. Ensure that Layer2 is enabled for the Oracle RAC Interconnect.

3. Configure each OSA card by using the ifcfg scripts. The VLAN tag example is used. The ifcfg scripts can be found in the /etc/sysconfig/network/ directory.

4. Reboot the system and issue the ifconfig command.

5. Add the following OSA device addresses to the /etc/udev/rules.d/ directory (each network device is placed under this directory):

ora-raca-1:/etc/udev/rules.d # ls -la 51-qeth*

-rw-r--r-- 1 root root 1661 Jul 30 16:12 51-qeth-0.0.1303.rules

-rw-r--r-- 1 root root 1661 Jul 17 10:52 51-qeth-0.0.1410.rules

-rw-r--r-- 1 root root 1661 Sep 14 01:19 51-qeth-0.0.1420.rules

-rw-r--r-- 1 root root 1661 Sep 12 09:14 51-qeth-0.0.1423.rules

The contents of the file are the same as for a VSWITCH device except for the device addresses. Ensure that the layer2 attribute is set to 1. The contents for the 1303 OSA device are shown in the following example (note the second-to-last line):

ora-raca-1:/etc/udev/rules.d # cat 51-qeth-0.0.1303.rules

# Configure qeth device at 0.0.1303/0.0.1304/0.0.1305

ACTION=="add", SUBSYSTEM=="drivers", KERNEL=="qeth", IMPORT{program}="collect 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1303", IMPORT{program}="collect 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1304", IMPORT{program}="collect 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="add", SUBSYSTEM=="ccw", KERNEL=="0.0.1305", IMPORT{program}="collect 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="remove", SUBSYSTEM=="drivers", KERNEL=="qeth", IMPORT{program}="collect --remove 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1303", IMPORT{program}="collect --remove 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1304", IMPORT{program}="collect --remove 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

ACTION=="remove", SUBSYSTEM=="ccw", KERNEL=="0.0.1305", IMPORT{program}="collect --remove 0.0.1303 %k 0.0.1303 0.0.1304 0.0.1305 qeth"

TEST=="[ccwgroup/0.0.1303]", GOTO="qeth-0.0.1303-end"

ACTION=="add", SUBSYSTEM=="ccw", ENV{COLLECT_0.0.1303}=="0", ATTR{[drivers/ccwgroup:qeth]group}="0.0.1303,0.0.1304,0.0.1305"

ACTION=="add", SUBSYSTEM=="drivers", KERNEL=="qeth", ENV{COLLECT_0.0.1303}=="0", ATTR{[drivers/ccwgroup:qeth]group}="0.0.1303,0.0.1304,0.0.1305"

LABEL="qeth-0.0.1303-end"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1303", ATTR{portno}="0"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1303", ATTR{layer2}="1"

ACTION=="add", SUBSYSTEM=="ccwgroup", KERNEL=="0.0.1303", ATTR{online}="1"
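After the rules are applied, the attributes that they set can be read back from sysfs. The following sketch assumes that the qeth driver exposes the group device under /sys/bus/ccwgroup/drivers/qeth, which is the usual location on Linux on System z. The function name qeth_attrs and its optional second argument are our own additions for illustration.

```shell
# Print the layer2 and online attributes of a qeth group device.
# $1: bus ID of the first subchannel, for example 0.0.1303
# $2: sysfs driver directory (optional; defaults to the usual qeth location)
qeth_attrs() {
    dev_dir="${2:-/sys/bus/ccwgroup/drivers/qeth}/$1"
    if [ ! -d "$dev_dir" ]; then
        echo "$1: not grouped as a qeth device" >&2
        return 1
    fi
    echo "$1 layer2=$(cat "$dev_dir/layer2") online=$(cat "$dev_dir/online")"
}
```

For the Oracle RAC Interconnect, qeth_attrs 0.0.1303 is expected to report layer2=1 and online=1. If the s390-tools package is installed, the lsqeth command reports the same attributes.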

6. Configure both OSA devices by using the ifcfg scripts. The following examples are ifcfg scripts for two OSA qeth devices that are bonded to a third interface named bond0. The interface names eth2 and eth3 were used. All IP information was removed from the ifcfg-eth2, ifcfg-eth3, and ifcfg-bond0 files. VLAN tagging is added and a bond0_182 interface is created. Separate IP information can be placed into the ifcfg-bond0 script for non-VLAN tagging use. An MTU size of 8992 was used throughout the network (VSWITCH, OSA cards, and network switch):

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth2

BOOTPROTO='static'

STARTMODE='onboot'

ETHTOOL_OPTIONS=''

INTERFACETYPE='qeth'

USERCONTROL='no'

MTU='8992'

NAME='OSA Express Network card (0.0.1303)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth3

BOOTPROTO='static'

STARTMODE='onboot'

ETHTOOL_OPTIONS=''

INTERFACETYPE='qeth'

USERCONTROL='no'

MTU='8992'

NAME='OSA Express Network card (0.0.1423)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-bond0

BONDING_MASTER='yes'

BONDING_MODULE_OPTS='mode=0 miimon=100'

BONDING_SLAVE0='eth2'

BONDING_SLAVE1='eth3'

BOOTPROTO='static'

STARTMODE='auto'

MTU='8992'

NAME='OSA Express Network card (0.0.1423 and 1303 bond)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-bond0_182

BOOTPROTO='static'

ETHERDEVICE='bond0'

IPADDR='10.1.28.3/29'

NETMASK='255.255.255.248'

BROADCAST='10.1.28.7'

STARTMODE='auto'

MTU='8992'

NAME='OSA Express Network card (0.0.1423 and 1303 bond)'

|

Important: The ETHERDEVICE statement is required on SUSE Linux Enterprise Server for VLAN tagging and is the link back to the bond0 device.

For bonding options, the mode is the bonding policy, such as round robin, active/backup, and so on. The default is 0 (round robin). The miimon option specifies how often, in milliseconds, each slave is monitored for link failures.

Also, in the ifcfg-bond0 file the BONDING_SLAVE0 and BONDING_SLAVE1 statements link the bond back to the eth2 and eth3 devices.

|

7. Reboot the system and run the ifconfig command to verify (only the eth2, eth3, bond0, and bond0_182 interfaces are shown here):

ora-raca-1:~ # ifconfig

bond0 Link encap:Ethernet HWaddr 02:00:00:8F:36:87

inet6 addr: fe80::ff:fe8f:3687/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:8992 Metric:1

RX packets:2 errors:0 dropped:0 overruns:0 frame:0

TX packets:19 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:136 (136.0 b) TX bytes:1582 (1.5 Kb)

bond0_182 Link encap:Ethernet HWaddr 02:00:00:8F:36:87

inet addr:10.1.28.3 Bcast:10.1.28.7 Mask:255.255.255.248

inet6 addr: fe80::ff:fe8f:3687/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:2 errors:0 dropped:0 overruns:0 frame:0

TX packets:5 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:128 (128.0 b) TX bytes:438 (438.0 b)

eth2 Link encap:Ethernet HWaddr 02:00:00:8F:36:87

UP BROADCAST RUNNING SLAVE MULTICAST MTU:8992 Metric:1

RX packets:2 errors:0 dropped:0 overruns:0 frame:0

TX packets:10 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:136 (136.0 b) TX bytes:844 (844.0 b)

eth3 Link encap:Ethernet HWaddr 02:00:00:8F:36:87

UP BROADCAST RUNNING SLAVE MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:9 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:738 (738.0 b)
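In addition to ifconfig, the bonding driver reports the active policy and the link state of each slave in /proc/net/bonding/bond0. The following sketch condenses that file to one line for the mode and one line per slave. The function name bond_summary is hypothetical, and the optional file argument exists only so that the function can be tried against a saved copy of the status file.

```shell
# Summarize a bonding status file: the bonding mode, then "slave: MII status".
# $1: status file (optional; defaults to /proc/net/bonding/bond0)
bond_summary() {
    awk -F': ' '
        /^Bonding Mode:/    { print "mode: " $2 }
        # Remember the slave name; its MII Status line follows it.
        /^Slave Interface:/ { slave = $2 }
        # The first MII Status line belongs to the bond itself and is skipped.
        /^MII Status:/      { if (slave != "") { print slave ": " $2; slave = "" } }
    ' "${1:-/proc/net/bonding/bond0}"
}
```

With the configuration shown in this section, both eth2 and eth3 should be listed with a status of up.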

Red Hat Enterprise Linux 6

Complete the following steps to set up Ethernet bonding in RHEL 6:

1. Configure two OSA card devices and ensure that Layer 2 is enabled in the ifcfg scripts in the /etc/sysconfig/network-scripts/ directory. The VLAN tag example is used.

2. Reboot the system and run the ifconfig command.

3. Configure the OSA devices by using the ifcfg scripts. The following examples are ifcfg scripts for two OSA qeth devices that are bonded to a third interface named bond0. The interface names eth2 and eth3 were used. All IP information was removed from the ifcfg-eth2, ifcfg-eth3, and ifcfg-bond0 files. VLAN tagging is added and a bond0_183 interface is created. Separate IP information can be placed into the ifcfg-bond0 script for non-VLAN tagging use. An MTU size of 8992 was used throughout the network (VSWITCH, OSA cards, and network switch):

[root@ora-racb-1 network-scripts]# cat ifcfg-eth2

DEVICE="eth2"

BOOTPROTO="static"

NETTYPE="qeth"

NM_CONTROLLED="no"

MASTER="bond0"

SLAVE="yes"

ONBOOT="yes"

OPTIONS="layer2=1"

MTU="8992"

SUBCHANNELS="0.0.1429,0.0.142a,0.0.142b"

[root@ora-racb-1 network-scripts]# cat ifcfg-eth3

DEVICE=eth3

BOOTPROTO="static"

NETTYPE="qeth"

NM_CONTROLLED="no"

MASTER="bond0"

SLAVE="yes"

ONBOOT="yes"

OPTIONS="layer2=1"

MTU="8992"

SUBCHANNELS="0.0.1309,0.0.130a,0.0.130b"

[root@ora-racb-1 network-scripts]# cat ifcfg-bond0

DEVICE=bond0

ONBOOT=yes

BOOTPROTO=none

USERCTL=no

MTU=8992

BONDING_OPTS='mode=0 miimon=100'

[root@ora-racb-1 network-scripts]# cat ifcfg-bond0_183

DEVICE=bond0_183

BOOTPROTO=static

IPADDR=10.1.28.11

MTU="8992"

NETMASK=255.255.255.248

VLAN=yes

|

Tips: The VLAN=yes statement is required on RHEL6 for VLAN tagging.

Unlike SUSE Linux Enterprise Server, on RHEL the ifcfg script of each bonded device states that the device is a slave and names its master (in our case, bond0).

For bonding options, the mode is the bonding policy, such as round robin, active/backup, and so on. The default is 0 (round robin). The miimon option specifies how often, in milliseconds, each slave is monitored for link failures.

|

4. Reboot the system and issue ifconfig to verify (only the eth2, eth3, bond0, and bond0_183 interfaces are shown here):

[root@ora-racb-1 ~]# ifconfig

bond0 Link encap:Ethernet HWaddr 02:00:00:3F:FD:F3

inet6 addr: fe80::ff:fe3f:fdf3/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:8992 Metric:1

RX packets:79 errors:0 dropped:0 overruns:0 frame:0

TX packets:31 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:16217 (15.8 KiB) TX bytes:4657 (4.5 KiB)

bond0_183 Link encap:Ethernet HWaddr 02:00:00:3F:FD:F3

inet addr:10.1.28.11 Bcast:10.1.28.15 Mask:255.255.255.248

inet6 addr: fe80::ff:fe3f:fdf3/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:8992 Metric:1

RX packets:79 errors:0 dropped:0 overruns:0 frame:0

TX packets:23 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:16217 (15.8 KiB) TX bytes:3917 (3.8 KiB)

eth2 Link encap:Ethernet HWaddr 02:00:00:3F:FD:F3

UP BROADCAST RUNNING SLAVE MULTICAST MTU:8992 Metric:1

RX packets:34 errors:0 dropped:0 overruns:0 frame:0

TX packets:17 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:6899 (6.7 KiB) TX bytes:2276 (2.2 KiB)

eth3 Link encap:Ethernet HWaddr 02:00:00:3F:FD:F3

UP BROADCAST RUNNING SLAVE MULTICAST MTU:8992 Metric:1

RX packets:45 errors:0 dropped:0 overruns:0 frame:0

TX packets:14 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:9318 (9.0 KiB) TX bytes:2381 (2.3 KiB)
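The mode=0 value in the bonding options of this section is one of seven numeric policies that the Linux bonding driver accepts. The following helper lists the driver's name for each number as a quick reference. The function name bond_mode_name is our own.

```shell
# Translate a numeric bonding mode into the name used by the bonding driver.
bond_mode_name() {
    case $1 in
        0) echo balance-rr ;;      # round robin (the default)
        1) echo active-backup ;;   # one active slave, the others on standby
        2) echo balance-xor ;;
        3) echo broadcast ;;
        4) echo 802.3ad ;;         # IEEE 802.3ad link aggregation
        5) echo balance-tlb ;;
        6) echo balance-alb ;;
        *) echo "unknown bonding mode: $1" >&2; return 1 ;;
    esac
}
```

For example, a site that wants active/backup behavior rather than round robin would specify mode=1 in BONDING_MODULE_OPTS (SUSE Linux Enterprise Server) or BONDING_OPTS (RHEL). Which modes suit a particular OSA and switch configuration was not evaluated here.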

3.7.3 Setting up Oracle HAIP

Oracle introduced a feature called Highly Available IP (HAIP). This feature allows the private interconnect to be set up on RAC without the need for the user to create bonded interfaces. HAIP provides load balancing and HA across multiple network devices (up to four). You can enable HAIP during the Oracle installation process. For redundancy, HAIP needs two network interfaces that are configured in the operating system on each node. In our example, these interfaces use VLAN tagging.

SUSE Linux Enterprise Server 11

Complete the following steps to set up HAIP in SUSE Linux Enterprise Server 11:

1. Add the two OSA card devices in the /etc/udev/rules.d/ directory. The udev rules files are not shown. For more information, see 3.7.2, “Setting up Ethernet bonding”.

2. Ensure that Layer2 is enabled for the Oracle RAC Interconnect.

3. Configure the two OSA card devices for VLAN tagging by using the ifcfg scripts. The ifcfg scripts can be found in the /etc/sysconfig/network/ directory.

4. Reboot the system and run the ifconfig command.

5. Configure both OSA devices by using the ifcfg scripts. The following examples are ifcfg scripts for two OSA qeth devices that each carry a VLAN tagged interface. No bonded interface is created because HAIP provides the redundancy. The interface names eth1 and eth2 were used. We reused the eth1 interface name; therefore, we did not need an eth3. All IP information was removed from the ifcfg-eth1 and ifcfg-eth2 files. VLAN tagging is added and the eth1_182 and eth2_182 interfaces are created. Separate IP information can be placed into the ifcfg-eth1 and ifcfg-eth2 scripts for non-VLAN tagging use. An MTU size of 8992 was used throughout the network (VSWITCH, OSA cards, and network switch):

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth1

BOOTPROTO='static'

STARTMODE='onboot'

ETHTOOL_OPTIONS=''

INTERFACETYPE='qeth'

USERCONTROL='no'

MTU='8992'

NAME='OSA Express Network card (0.0.1303)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth1_182

BOOTPROTO='static'

ETHERDEVICE='eth1'

IPADDR='10.1.28.1/29'

NETMASK='255.255.255.248'

BROADCAST='10.1.28.7'

STARTMODE='auto'

MTU='8992'

NAME='OSA Express Network card (0.0.1303)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth2

BOOTPROTO='static'

STARTMODE='onboot'

ETHTOOL_OPTIONS=''

INTERFACETYPE='qeth'

USERCONTROL='no'

MTU='8992'

NAME='OSA Express Network card (0.0.1423)'

ora-raca-1:/etc/sysconfig/network # cat ifcfg-eth2_182

BOOTPROTO='static'

ETHERDEVICE='eth2'

IPADDR='10.1.28.3/29'

NETMASK='255.255.255.248'

BROADCAST='10.1.28.7'

STARTMODE='auto'

MTU='8992'

NAME='OSA Express Network card (0.0.1423)'

6. Reboot the system and run the ifconfig command to verify (only the eth1, eth2, eth1_182, and eth2_182 interfaces are shown here):

ora-raca-1:~ # ifconfig

eth1 Link encap:Ethernet HWaddr 02:00:00:09:EC:87

inet6 addr: fe80::ff:fe09:ec87/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:14 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:1184 (1.1 Kb)

eth1_182 Link encap:Ethernet HWaddr 02:00:00:09:EC:87

inet addr:10.1.28.1 Bcast:10.1.28.7 Mask:255.255.255.248

inet6 addr: fe80::ff:fe09:ec87/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:7 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:578 (578.0 b)

eth2 Link encap:Ethernet HWaddr 02:00:00:2A:C8:F4

inet6 addr: fe80::ff:fe2a:c8f4/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:15 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:1254 (1.2 Kb)

eth2_182 Link encap:Ethernet HWaddr 02:00:00:2A:C8:F4

inet addr:10.1.28.3 Bcast:10.1.28.7 Mask:255.255.255.248

inet6 addr: fe80::ff:fe2a:c8f4/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8992 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:7 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:578 (578.0 b)

3.8 Notes and observations

For VLAN tagging, we found that with RHEL6, we had to temporarily disable the Network Manager service to configure the VLAN tagging. Use the following chkconfig command to see if Network Manager is enabled:

[root@ora-racc-1 network-scripts]# chkconfig --list | grep NetworkManager

NetworkManager 0:off 1:off 2:on 3:on 4:on 5:on 6:off

To disable Network Manager, use the following command (specify on instead of off to re-enable it):

[root@ora-racc-1 network-scripts]# chkconfig NetworkManager off

[root@ora-racc-1 network-scripts]# chkconfig --list | grep NetworkManager

NetworkManager 0:off 1:off 2:off 3:off 4:off 5:off 6:off
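The chkconfig listing can also be reduced to the runlevels in which a service starts, which is convenient when several nodes are checked. The following sketch reads chkconfig --list output from standard input. The function name enabled_runlevels is hypothetical.

```shell
# Read "chkconfig --list" output on stdin and print the runlevels in which
# the service named in $1 is on (an empty line means it is off everywhere).
enabled_runlevels() {
    awk -v svc="$1" '$1 == svc {
        out = ""
        for (i = 2; i <= NF; i++) {
            # Each field has the form runlevel:state, for example 3:on
            split($i, kv, ":")
            if (kv[2] == "on") out = out (out == "" ? "" : " ") kv[1]
        }
        print out
    }'
}
```

A typical call is chkconfig --list | enabled_runlevels NetworkManager. Note that chkconfig changes only what is started at boot; on RHEL6, a service that is already running can be stopped immediately with service NetworkManager stop.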

When we changed the MTU size, we needed several attempts to increase the MTU for eth1_182 because the ifup command failed with the following message:

ora-raca-1:/etc/sysconfig/network # ifup eth1_182

eth1_182 name: OSA Express Network card (0.0.1420)

RTNETLINK answers: Numerical result out of range

RTNETLINK answers: Numerical result out of range

Cannot set mtu of 8992 to interface eth1_182.

The following problems existed:

•The network router also had to be changed to accommodate the larger MTU size and jumbo frames. All components in your network should have matching MTU sizes. This configuration prevents one component from attempting to auto-negotiate down to a lower MTU size.

•After this issue is addressed, ensure that you change the MTU size of the base ethx device before the VLAN tagged device is changed. A VLAN tagged interface cannot have a larger MTU than its base device, which is why the ifup command reported Numerical result out of range.

After the network router was set to an MTU of 8992, the interface eth1 also can be set to MTU=8992. Also, if you are using VLAN tagging, change the VLAN tagged interface eth1_xxx to the appropriate MTU size.
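The ordering requirement can be checked before the VLAN tagged interface is changed. The following sketch reads the current MTU of the base device from /sys/class/net and reports whether the wanted MTU fits. The function name vlan_mtu_fits and its optional third argument are our own additions for illustration.

```shell
# Succeed when the MTU wanted on a VLAN tagged interface fits within the
# current MTU of its base device.
# $1: base interface (for example eth1)
# $2: MTU wanted on the VLAN tagged interface
# $3: sysfs network directory (optional; defaults to /sys/class/net)
vlan_mtu_fits() {
    base_mtu=$(cat "${3:-/sys/class/net}/$1/mtu") || return 2
    if [ "$2" -le "$base_mtu" ]; then
        echo "ok: base $1 has MTU $base_mtu"
    else
        echo "raise $1 from $base_mtu to at least $2 first"
        return 1
    fi
}
```

One way to use it is vlan_mtu_fits eth1 8992 && ip link set dev eth1_182 mtu 8992, which changes the VLAN tagged interface only when the base device is already large enough. A change that is made with the ip command does not persist across reboots, so the MTU statements in the ifcfg scripts are still needed.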

3.9 Summary

The performance of these HA solutions is similar, and this result also holds across the operating system versions that were selected.

With all of these solutions, careful monitoring is needed to ensure that network bandwidths are not exceeded. Useful sources include the Oracle AWR reports, the Linux netstat output, and the z/VM network or HMC network usage reports.

Figure 3-6 shows the Interconnect Ping latencies section of an Oracle AWR report, which can be used to monitor the performance of the Oracle RAC Interconnect.

Figure 3-6 Oracle AWR Interconnect bandwidth
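On the Linux side, a rough view of interconnect throughput can be taken from the byte counters that the kernel keeps for each interface under /sys/class/net. The following sketch samples the received-byte counter twice and converts the difference to Mbit/s. The function names throughput_mbit and rx_rate are hypothetical, and the 10-second default interval is an arbitrary choice.

```shell
# Average throughput in Mbit/s (rounded down) between two byte-counter samples.
# $1: first sample, $2: second sample, $3: seconds between the samples
throughput_mbit() {
    echo $(( ($2 - $1) * 8 / $3 / 1000000 ))
}

# Sample the received-byte counter of an interface twice and report the rate.
# $1: interface name, $2: interval in seconds (optional; defaults to 10)
rx_rate() {
    counter="/sys/class/net/$1/statistics/rx_bytes"
    first=$(cat "$counter") || return 1
    sleep "${2:-10}"
    second=$(cat "$counter") || return 1
    echo "$1 rx $(throughput_mbit "$first" "$second" "${2:-10}") Mbit/s"
}
```

For example, rx_rate bond0 30 reports the average receive rate of the bonded interface over 30 seconds. This complements, and does not replace, the AWR and z/VM reports.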

|

Important: The decision to add more HA into your network IT solutions is based on your business requirements, hardware availability (OSA features), and the skill sets that are available.

|

VSWITCH aggregation, Linux bonding, and Oracle’s Redundant Interconnect each provide more HA for Oracle databases that run on Linux on System z, and help workloads continue through planned or unplanned network outages.
