6

How Fog Computing Can Support Latency/Reliability-sensitive IoT Applications: An Overview and a Taxonomy of State-of-the-art Solutions

Paolo Bellavista1, Javier Berrocal2, Antonio Corradi1, Sajal K. Das3, Luca Foschini1, Isam Mashhour Al Jawarneh1, and Alessandro Zanni1

1Department of Computer Science and Engineering, University of Bologna, 40136 Bologna, Italy

2Department of Computer and Telematics Systems Engineering, University of Extremadura, 10003, Cáceres, Spain

3Department of Computer Science, Missouri University of Science and Technology, Rolla, MO, 65409, USA

6.1 Introduction

The widespread ubiquitous adoption of resource-constrained Internet of Things (IoT) devices has led to the collection of massive amounts of heterogeneous data on a continual basis, that when coupled with data coming from highly trafficked websites forms a challenge that exceeds the capacities of today's most powerful computational resources. Those avalanches of data coming from sensor-enabled and alike devices hides a great value that normally incorporates actionable insights if extracted in a timely fashion.

IoT is loosely defined as any network of connected devices spanning from home electronic appliances, connected vehicles, and sensor-enabled devices and actuators that interact and exchange data in a nonstationary fashion. It has been predicted that by 2020 the number of IoT devices could reach 50 billion connected to the Internet, and twice that number are anticipated within the next decade.

IoT devices are normally resource-constrained, limiting their ability to contribute toward advanced data analytics. However, nowadays with cloud infrastructures, the computations required to perform costly operations are hardly ever an issue. Cloud computing environments have gained an unprecedented spread and adoption in the last decade or so, aiming at deriving (near) real-time actionable insights from fully loaded ingestion pipelines. This is in part attributed to the fact that those environments are normally elastic in their offering for dynamic provisioning of data management resources on a per-need basis. Amazon Web Services (AWS) remains the most widely accepted competitor in the market and looks set to remain that way for some time. For those aims to be achieved, production-grade continuous applications must be launched, which typically raises several obstacles. Most important, however, is the ability to guarantee the end-to-end reliability of the overall structure, which is achieved by being resilient to failures such as those most common in upstream components, which ensures delivering highly dependable and available actionable insights in real time. Furthermore, the architecture should guarantee the correctness in handling late and out-of-order data, which is a fact in real-life scenarios.

Cloud computing environments offer a great ability to process highly trafficked loads of data. However, IoT applications have binding prerequisites. The vast majority of them are time-sensitive and require practically instant responsiveness while, in the meantime, quality of service (QoS), security, privacy, and location-awareness are well preserved [1]. With an expansive number of IoT devices continuously sending huge amounts of data, two-tier architectures fall short in meeting desired requirements [2].

There are several challenges that render solo-cloud deployments insufficient. From those, we focus on the case where there is an oscillation in data arrival rates, which is sometimes characterized as ephemeral, whereas in other circumstances it can be persistent and severe. Current cloud-based solutions highly depend either on elastic provisioning of resources on-the-fly or aggressively trading-off accuracy for latency by early discarding of some data or (worse) some processing stages. While those ad-hoc and glue-code solutions constitute conceptually appealing approaches in specific scenarios, they are undesirable in systems that are resistant to approximations and anticipate exact answers that, if not provided, can cause the system to become untrustworthy, thus requiring extra care when analysis of such data is essential. This does not detract from the values obtained by the two-layered cloud-edge architectures but rather complementing them in a manner that ensures reliability for scenarios that seek exact results on-the-fly. Scenarios where this applies are innumerable, e.g. smart traffic lights (STL), smart connected vehicles (SCV), and smart buildings to mention just a few. However, throughout our discussions we place due importance on both cloud-only and cloud-fog architectures. All that said, we next present an alternative in this chapter.

In the relevant literature, several works are proposed for facing up to the challenges introduced by the two-tiered architecture. Some of them merely depend on integrating lightweight sensors with the cloud, to counter common cloud issues such as latency, the capacity to support intermittent recurrent events, and the absence of flexibility when various remote sensors transmit information at the same time [3, 4]. Another solution focuses on increasing the number of layers in the architecture to push part of the processing load uphill to intermediate layers [5], subsequently reducing information traffic, reaction time, and the response location-awareness.

There is significant overload caused by spikes in network traffic, when data arrival rates grow at an unprecedented and mostly unpredictable pace. To meet this challenge, fog computing comes into play, which is simply treated as a programming and communication paradigm that conveys the cloud assets closer to the IoT devices. Stated another way, it fills in as an interface that associates cloud with IoT devices in a manner that improves their cooperation fundamentally by keeping the advantages of the two universes by broadening the application field of cloud computing and expanding the asset accessibility in IoT settings.

Fog importance stems from the fact that there are situations in which a little computation is performed just-near the edge, thus lightening the burden on the shoulders of network hops and the cloud. Fog computing is somewhat novel but is receiving elevated attention among researches and becoming a widely discussed topic. For example, fog nodes can handle some nonsubstantial computing loads to act as an intermediate caching system that stores some intermediate computational results (e.g. preserving the state of an online aggregation framework), thus preventing cold-start scenarios (those scenarios that mandates recomputation in case of state loss in stateful operations), so that a stream processing engine can resume from where it left off before the (non-)intentional continuous query restarts. However, one hindering challenge could be the fact that fog is still in its infancy. Nevertheless, there are a considerable number of works that focus on different aspects for fog optimization, ranging from communication requirements to security to privacy and to responsiveness, to mention just a few. Current efforts are mostly following layer stack-up trends. Older systems of the technology are maintained while a person or group is trying to adapt to new technologies. This serves as a new jumping-off point for incorporating newer approaches. As such, we here posit the importance of incorporating fog computing as a core player in the current two-tiered architecture in order to reap the benefits of fog. To better corroborate our conclusions, we justify the incorporation of six service layers that span all three worlds (fog, cloud, and edge). With this setting in mind, some may be misled by intuitively concluding that sudden data arrival spikes are no longer a problem when seeking reliable answers. More rigorously, by this division we aim at breaking the architecture into its granular constituent parts to simplify their comprehension.

In this chapter, we are flipping the switch on significant architectural changes that, most importantly, incorporate fog as a central player in a novel three-tiered architecture. We demystify the explanation of the three-tiered architecture that we dub as cloud-fog-edge by dividing into major sections the pattern defined herein for the stacked-up architecture. First, we establish some basic definitions before getting under the hood. Thereafter, we start detailing mechanisms required for fully building a convenient self-organized fog between cloud and edge devices that hides management details so to relieve the user from reasoning about the underlying technical details and focuses on analytics instead of the heavy lifting of resource management. Most this chapter is devoted to summarizing the underlying components that constitute our three-layered architecture and how each contributes toward a fast fault-tolerant low-latency IoT application's operation. We herein point out that several alternative designs and proposals for related architectural models are found in the relevant literature that differ significantly in the way they present the interplay between the three worlds. We specifically refer the interested reader to a recent survey by [6]. The downside, however, is that the authors focus mainly on comparing existing models without introducing a novel counterpart. A similar trend also follows in [7].

This chapter is organized as follows. We first start by defining major aspects of core players in the architecture, such as fog, IoT, and cloud. In what follows, we discuss the challenging requirements of fog when applied to IoT application domains. In a later section, we draw a taxonomy of fog computing for IoT. In the last section, we discuss challenges and recommended research frontiers. Finally, we close the chapter with some concluding remarks.

6.2 Fog Computing for IoT: Definition and Requirements

In this section, first, we give a depiction of what fog computing is, what it performs, and the upgrades it conceivably presents in IoT application areas and deployment environments. Then, we clarify the inspirations that lead to the presentation of the fog computing layer atop the stack and the inappropriateness of a two-tier architecture composed only by cloud computing and IoT. Finally, we propose an original reference architecture model with the aim of clarifying its structure and the interactions among all the elements, providing also a description of its components.

6.2.1 Definitions

Fog computing alludes to a distributed computing paradigm that off-loads calculation, for some parts, close to the edge nodes of the network system with the purpose of lightening their computational burden and thus speed up their responses. We stress the fact that fog is becoming increasingly focal in enriching the responsiveness of computations by intermediating the stacked-up architecture between the two IoT and cloud universes, bringing substantial computing power near the edge and helping time-intensive applications in their front-stage loads that may need instant reactions, which normally cannot wait for the whole cycle, from sending data upstream to cloud to getting results back. Fog can be considered as a significant extension of the cloud computing concept, capable of providing virtualized computation and storage resources and services with the essential difference of the distance from utilizing end-points. While cloud exploits virtualization to provide a global view of all available resources and consists of mostly homogeneous physical resources, far from users and devices, fog tends to exploit heterogeneous resources that are geographically distributed (often with the addition of mobility support) and situated in proximity of data sources and targeted devices.

Moreover, fog is based on a large-scale sensor network to monitor the environment and is composed of extremely heterogeneous nodes that can be deployed in different locations and must cooperate and combine services across domains. In fact, fog communicates, at the same time, with a wide range of nodes at different level of the stack, from constrained devices (with very restricted resources) to the cloud (which has virtually infinite resources). Many applications require, at the same time, both fog localization and cloud centralization: fog to perform real-time processes and actions, and cloud to store long-haul information, and thereby perform long-haul analytics.

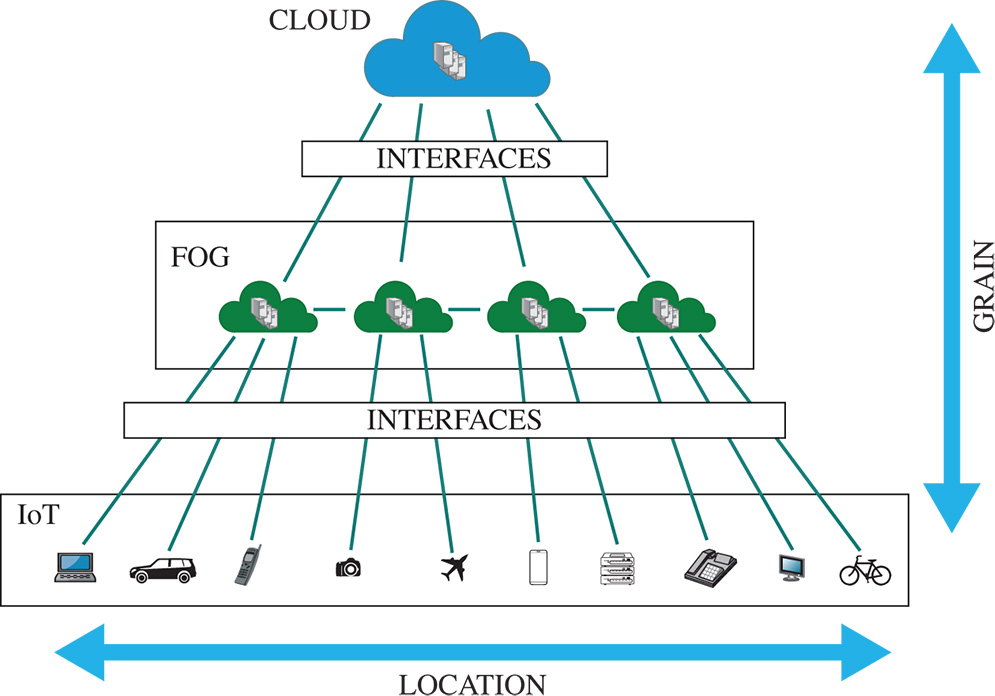

A primary idea emerging from existing fog solutions in the literature is to deploy a common platform supporting a wide range of different applications, and the same support platform with multitenancy features, can be used also by a multiplicity of client organizations that anyway should perceive their resources as dedicated, without mutual interference [8]. Figure 6.1 shows a high-level architecture that summarizes the above vision by positioning the IoT, cloud, and fog computing layers.

Figure 6.1 Cloud-fog-IoT architecture. (See color plate section for the color representation of this figure)

Fog interacts with the other layers through interfaces with different communication specifications, simplifying the connection among the different technologies. Due to cloud technology maturity and well-defined standardizations, cloud-side interfaces are more defined, and it is currently easier to make cloud service platforms interact with outside users. Cloud interfaces allow connecting the cloud with any device, anywhere, as a virtually unique huge component, and independently of where cloud services are located. On the contrary, IoT-side interfaces and, even more, fog ones are more various and heterogeneous, and much work should be done to homogenize the different approaches and implementations that are emerging.

6.2.2 Motivations

The incorporation of cloud in IoT applications is twofold, bringing significant preferences to the suppliers and end clients on one hand, yet bringing new unsuitableness in the integration with ubiquitous services on the other hand. In spite of its generous offering when it comes to the resource-rich assets that cloud may bring to an IoT setting, excessive misuse of cloud assets by ubiquitous IoT devices may present a few technical difficulties, such as network latency, traffic, and communication overhead, to mention a few. In particular, “dumbly” connecting a bunch of sensors legitimately to the cloud computing framework is resource-demanding, which are not designed, implemented, and deployed for high-frequency remote interactions, e.g. in the extreme case of one cloud invocation per each sensor duty cycle. Ubiquitous devices gather enormous quantity of data during normal operations, a condition that is worsened in crowded places during peak-load conditions or in future applications, because the purpose of IoT systems is to sense as much as possible and, thus, increasingly collecting more data, with the end result of exceeding the bandwidth capacity of the networks. In addition, IoT sensors usually use a high sampling rate, in particular for critical applications, to better monitor and act instantly, which typically generates a huge amount of data that need to be managed.

Interfacing a horde of sensors straightforwardly with cloud is incredibly demanding and potentially challenges the capacity of cloud resources. The result is a continuous iteration of cloud, which stays occupied per every sensor duty cycle, rendering cloud's “scale per sensor” property inefficient. To this end, we argue that an architecture that is based on a direct communication between cloud and IoT devices is infeasible. A direct rationale for this judgment is that a network's bandwidth is no longer able to support excessive data loads. In addition, the planned optimizations of networking and intercommunication capabilities are not promising for keeping up with the unprecedented avalanches of data, which are growing at a continuous rate and coming downstream at a fast pace. Such huge amounts of real-time data challenges the processing capabilities of any cloud deployment and potentially causes performance to take a severe dive in case the data are fed directly to the cloud layer, thus rendering the whole processing power a single point of failure and counteracting the benefits of parallelization.

Future web applications, which are arising from the advancement of IoT, are large-scale, latency-sensitive, and are never again meant to work in seclusion, but instead will potentially share foundation and inter-networking assets. These applications have stringent new requirements, such as mobility support, large-scale geographic distribution, location awareness, and low [9]. As a general idea, it begins to be broadly perceived that an architectural model that is just founded based on a direct interconnection between IoT devices and the cloud is unseemly for some IoT application situations [8, 9]. Cloud environments are too globally available and “far” from IoTs devices, which hinders meeting the IoT application's requirements and critical issues.

A distributed intelligent intermediate layer that adds extra functionalities to the system is required by, e.g. pushing some processing workloads into the data sources themselves, thus off-loading some heavy lifting that otherwise may cause congestion if sent abroad to the cloud. For this to happen, a support infrastructure is needed for the system to work properly and efficiently, thus providing QoS and capitalizing on the great potential of cloud. For a comprehensive survey that covers relevant literature methods that can be employed for off-loading time-sensitive tasks just-near the edge, in fog, we refer the interested reader to [10]. Authors basically discuss various methods and algorithms for off-loading, mostly by taking a utilitarian perspective that depicts the relevance of each method in achieving a streamlined off-loading conduct.

In the relevant literature, some works propose moving part of resources toward network edge to overcome limitations of cloud computing. In this chapter, we recapitulate main fog-related research directions including cloudlet, edge computing, and follow-me cloud (FMC).

The term cloudlet was first coined by [11] and describes small clouds. In simple terms, it is composed of a cluster of multicore computers with gigabit internal connectivity near endpoints that aim at bringing the computing power of cloud data centers closer to end devices in order to satisfy real-time and location-awareness requirements. An important distinction with what existed in traditional data centers is that a cloudlet has only soft state. In simpler terms, this means that management burden is kept considerably low and, once configured, a cloudlet can dynamically self-provision from remote data center [12]. Satyanarayanan et al. [13] highlights that usually cloudlet depends on a three-level stacking (mobile devices, cloudlet, and cloud) and is totally transparent under typical conditions, giving portable clients the deception that they are directly communicating with the cloud. Cloudlet stands as a possible realization of the resource-rich nodes, while those components deployed in the cloudlet are able to respond to requests coming from the resource-poor nodes in a timely manner [11].

Edge computing aims to move applications, data and services from cloud toward the edge of the network. Firdhous et al. [14] has summarized different advantages of edge computing, including a significant reduction in data movement across the network resulting in reduced congestion, cost and latency, elimination of bottlenecks, improved security of encrypted data (as it stays closer to the end-user, reducing exposure to hostile elements) and improved scalability arising from virtualized systems. Davy et al. [15] presents the idea of edge-as-a-service (EaaS), an idea that decouples the strict ownership relationship between network operators and their access network infrastructure that, through the development of a new novel network interface, allows virtual networks and functions to be requested on demand to support the delivery of more adaptive services.

FMC [16] is a technology developed to help novel mobile cloud processing applications, by giving both the capacity to move network end-points and adaptively relocating network services relying upon client's location, so as to ensure sufficient execution throughput and to have a fine-grained control over network resources. [16] analyses the scalability properties of an FMC-based system and proposes a role separation strategy based on distribution of control plane functions, which enable system's scale-out. [17] proposes a framework that aims at smoothing migration of an ongoing IP service between a data center and user equipment of a 3GPP mobile network to another optimal data center with no service disruption. [18] proposes and evaluates an implementation of FMC based on OpenFlow and underlines that services follow the user throughout his movements, always provided from data center locations that are optimal for the current locations of the users and the current conditions of the network. In a similar vein, [16] introduces an analytical model for FMC that provides the performance related to the user experience and to the cloud/mobile operator, stressing the importance of attention when triggering service migration.

In addition to these activities, some standardization efforts are geared toward improving interoperability and thus fostering fog and edge computing ecosystems. Recent initiatives include open edge computing [19], open fog computing [20], and mobile edge computing (MEC) [21].

The Open Edge Computing Consortium is a joint activity among industries and the scholarly community that calls for driving the advancement of an ecosystem around edge computing by giving open and internationally acknowledged standardized instruments, reference implementations, and live demonstrations. To that end, this community leverages cloudlets and provides a testbed environment for the deployment of cloudlet applications.

The OpenFog Computing Consortium aims mainly at defining standards in order to improve the interoperability of IoT applications. They indicate that cloud-only architectural approaches is not able to keep up with the fast data arrival rates and volume requirements of the IoT applications. Given this observation, efforts are geared toward a novel architectural view that emphasizes information processing and intelligence at logical edge. The upcoming reference architecture is a first step in creating standards for fog computing [22]. Being a multilayer architecture, where some are vertical while others are horizontal, it thus covers all aspects and requirements for achieving a multivendor interoperable fog computing ecosystem. The vertical layers, considering the cross-cutting properties, are as follows: (1) performance, including time critical computing, time-sensitive networking, network time protocols, etc.; (2) security, covering end-to-end security, and data integrity; (3) manageability, with remote access services (RAS), DevOps, Orchestration, etc.; (4) data analytics and control, containing machine learning, rules engines, and so on; and (5) IT business and cross fog applications, providing characteristic to properly operate applications at any level of the fog. The horizontal view, which aims at satisfying different stakeholder requirements is composed of (1) node view, including the protocol abstraction layer and sensors, actuators and control; (2) system view, providing support for the infrastructure and hardware virtualization; and (3) software view, providing services and supporting the deployment of applications.

MEC is a reference design and a standardization exertion by the European Telecommunication Standards Institute (ETSI). MEC gives a service environment and cloud-computing capabilities at the edge of the portable system, within the radio access network (RAN). Therefore, MEC environment is characterized by low latency, proximity, highly efficient network operation and service delivery, real-time insight into radio network information, and location awareness. Its key element is the MEC server, which is integrated at the RAN element and provides computing resources, storage capacity, connectivity, and access to user traffic and radio and network information. The MEC server's architecture comprises a facilitating framework and an application platform. The application platform gives the ability to facilitate applications and is composed of an application's virtualization administrator and application-platform services. MEC applications from third parties are deployed and executed within virtual machines (VMs) and managed by their related application manager. The application-platform services provide a set of middleware application services and infrastructure services to the hosted applications. Thus, ETSI is working on a standardized environment to enable the efficient and seamless integration of such applications across multivendor MEC platforms. This also guarantees ensure serving vast majority of the mobile operator's customers.

Finally, the fog vision was conceived to address applications and services that do not fit well the paradigm of the cloud [8]. Fog computing is pushed between IoT and cloud, leveraging the best from both worlds in enabling IoTs applications to become established as future enabling technologies. Along the same lines, [23] emphasizes that, as indicated by IoT developing paradigm, everything will be seamlessly associated to structure a virtual continuum of interconnected and addressable items in a global networking system. The outcome will be a strong hidden structure on which clients may create novel applications helpful for the whole community. Fog computing is considered a driver for enterprise/industrial-based IoTs that brings connections to the real world in an unprecedented way and aims at interfacing with new business models introduced by IoTs, rethinking about how to create and capture value.

6.2.3 Fog Computing Requirements When Applied to Challenging IoTs Application Domains

IoTs systems raise requirements that burden fog and cloud computing to accomplish the right activity and fulfill the client's expectations. The following subsections discuss these highlights and elucidate their definitions.

6.2.3.1 Scalability

Scalability is a core requirement, not only for big data management, but also a proper geo-distribution of devices. To state the obvious, [8] proposes to add geo-distributed property as a further data dimension in big data analysis, in order to manage the information distributed nature as a coherent whole. In this scenario, fog plays an important role, thanks to its proximity to the edge and, thus provides information's location-awareness.

By considering scalability referred to big data processes, we feature the characteristic of the framework to scale, depending on the amount of data and, if necessary, being able to manage great amount of data. On the other side, regarding “scalability” of device geo-distribution, we underline the ability of fog computing to manage a large number of nodes in a highly distributed system.

Big data scalability is a fundamental necessity for IoT applications, where a developing number of devices must be interconnected. Geo-distributed scalability is a demand that underlines the paradigm of fog computing to have a capacity of overseeing distributed services and applications, even profoundly distributed frameworks, which falls in stark contrast with the more centralized cloud settings. In exceptionally conveyed systems, fog is dealing with a huge number of nodes across the board in geographic zones, and nodes can likewise be spread out with different degrees of density on the ground. Fog computing is thus dealing with various sorts of topology and distributed configurations systems and have the capacity to scale and adjust so as to meet the demands for every scenario.

6.2.3.2 Interoperability

The IoT is a very heterogeneous setting that is normally found in real-life situations, in light of a wide scope of various devices that gather heterogeneous data from the encompassing geography. Sensors differs when it comes to range coverage, from short- to long-distance coverage. Bonomi et al. [8] lists various heterogeneity inside the fog: (1) fog nodes extend from high-end servers, edge switches, access points, set-top boxes and, even, end devices such as vehicles and cell phones; (2) the different hardware platform has varying levels of RAM memory, secondary storage, and real estate to support new functionalities; (3) the platforms run various kinds of operating systems and software applications resulting in a wide variety of hardware and software capabilities; (4) the fog network infrastructure is also heterogeneous, ranging from high-speed links, connecting enterprise data centers and the core, to multiple wireless access technologies, toward the edge. In addition, inside fog computing, services must be unified on the grounds that they require the participation of various suppliers [9]. Fog computing is a very virtualized setting that needs heterogeneous devices and their running services to be unified under one umbrella in a homogeneous way.

In complex settings, heterogeneity can influence technical interoperability, in addition to semantic interoperability. Technical interoperability concerns communication norms, components executions, or parts interfaces with various information formats or diverse media sorts of data streams. Whereas semantic interoperability is more concerned with the information inside data interleaved and the likelihood that two components comprehend and share similar data in an unexpected way. A standard method to portray and exchange data, together with an abstraction layer that anonymizes physical diversities among components are thus required to make interoperability possible. Diallo et al. [24] explains under which conditions systems are interoperable, proving definitions and classifications and many approaches to address interoperability at different levels.

Fog computing is there for empowering interoperability, so as to make an exceptional information stream to be later handled by sensing and information analytics parts or to host conventional application programming interfaces (APIs) that can be utilized by various applications, without the costly need to move calculations to the cloud layer.

6.2.3.3 Real-Time Responsiveness

Real-time responsiveness is a principle empowering agent for IoT applications and their organization in real-life situations. Fog computing is vital to accomplish low-latency prerequisite in cases where cloud-IoT collaborations are not able to achieve the target latency for several reasons including the distance. (1) IoT and cloud are, in practice, geometrically distant and information requires considerable time in the loop, arriving as an input to cloud and thereafter returning as results (final or intermediate) to IoT devices. Fog is a promising field that alleviates the overhead costs caused by long-distance traveling of information; instead it is clear that performing computations near the edge costs less than sending data all the way downstream to cloud nodes. For some jobs, which do not require high computational power or are less demanding, fog promotes instant computation. (2) Real-time interactions loosely mean processing the unbounded streams of fresh data that arrive continuously in the cloud. Moments where data arrival rates exceed the processing capacities of cloud resources, in addition to Internetwork communication overheads, are not unheard of. Such conditions normally challenge the capacities of fully resource-loaded cloud deployments, where neither reactive nor proactive solutions make a difference. The promising (near) real-time operation of cloud environments is hindered by such facts. To top that off, in a highly dynamic and real-time scenario, such as those in Industry 4.0 (I4.0) or smart cities, data from IoT is fed very quickly to cloud, and because different chunks follow different networking routes, so that it arrives, sometimes, out of order, thus negatively affecting the overall accuracy. Fog diminishes this by sensing information, processing it, and acting in real-time using data that reflect instantly the situation. (3) Sensors accumulate a tremendous amount of continuously arriving data that if sent to the cloud potentially causes system congestion and consequently causing system's performance to hit a wall. On the contrary, fog acts as a front-stage that locates data traffic in a defined space surrounding sensors and preprocesses information before uploading data to the cloud, with lower focused loads and reduced core network load.

6.2.3.4 Data Quality

Data quality is a pertinent demand in real-life scenarios, making it essential for high-performing systems' operations. Also, this element essentially performs initial data filtering so as to early discard unnecessary duplicate and noisy loads, thus improving significantly the overall system quality and performance.

Data quality support is provided by the fog layer with the aim of early discarding useless data, aiming at relieving the burden on subsequent computational stages and consequently decreasing network data traffic by confining traffic near the edge, and reducing the amount of data pushed toward the cloud. Data quality depends on the association of various strategies. The mix of data filtering, aggregation, standardization and analytics, big data and small data analysis is fundamental to understand the surrounding environment, thus performing proactive maintenance and anomaly detection in real time, among a huge amount of data gathered from IoT sensors. In a ubiquitous environment, finding noisy data is challenging, especially in nonstationary settings where data is arriving fast and may thus challenge a front-stage system's resources.

6.2.3.5 Security and Privacy

An essential challenge in ubiquitous settings and fog computing is to harmonize security and reliability of systems, citizens' privacy concerns and personal data control, with the possibility to access data to provide better services. Particularly, with multitenancy support, fog offers policies to specify security, isolation, and privacy management that are required for different applications.

Fog computing is utilized in real applications that appear in critical settings, so reliability and safety are basic requirements. Moreover, it must consider that actuator's operations may be irreversible. Subsequently, the presence of unforeseen conduct, even due to bugs in applications, must be minimized with precautionary measures. Security is a key issue that must be solved to help industrial organizations and it concerns the entire system's architecture, from IoT devices reaching down to cloud. We need to provide important features such as (1) confidentiality, ensuring that the data arrives to the target spot, thus counteracting divulgence of data to unapproved objects with access limitations; (2) integrity, detecting, and preventing unauthorized alteration of the system by steps that control the preserving of consistency, accuracy, and trustworthiness of data over its entire life-cycle; (3) availability, ensuring that services are available when requested by authorized users, and performing repairs, if necessary, to maintain a correct functioning of the system.

A rich arrangement of security features that empowers essential security for every condition for the entire framework is thus required to avoid implementing security mechanisms on a node-by-node basis. Many controls are needed at all levels, including network and communications, from the physical and computational points of view. In fact, intelligence, data processing, analysis, and other computing workloads move toward the edge but, on the downside, many devices will be located in low-security locations, thus protecting devices, and their data becomes a big challenge.

Distributed and internetworked security arrangements are required to ensure complete and superior intelligence and for responsiveness reactions with automated decisions based on M2M communications and M2M security control without human interventions.

What is more, privacy is an undeniably critical issue that is growing in importance with ubiquitous and pervasive settings, where clients are aware concerning the privacy of their private information. Storing encrypted sensitive data in traditional clouds for privacy is not a suitable option as it causes many processing problems when applications have to access these data. In fog computing, personal information is kept in the system for better protection of privacy. It is imperative to characterize the responsibility for information inside the fog, since applications must utilize o information that they have access to [1]. In particular, we must consider the geographic diversity of information and certain data. For example, sensitive military or government data cannot be sent outside of certain geometrical areas. Fog can anonymize and aggregate user data and is thus useful for localizing intelligence and preventing the discovery of protected data. Anyway, it is necessary to introduce additional ways to protect data privacy and thereby to incentive the utilization of fog computing in privacy-critical contexts.

6.2.3.6 Location-Awareness

In dynamic real-world scenario, such as IoT applications, the location-awareness is the property of fog computing, due to its proximity to the edge, to own a widespread knowledge of its subnetwork and to comprehend the outer setting in which it is submerged. Fog improves adaptability because of its behavior adjustability in response to different events, where it adapts itself to better suit certain circumstances, assisted by the awareness of the context.

6.2.3.7 Mobility

Extending the concept of availability and trying to satisfy novel IoT application requirements, fog adapts itself in accordance with geographical distribution of its devices, thus providing mobility support. MIoT (Mobile Internet of Things) is proving itself to be a challenge for distributed supports [25, 26]. The ubiquity of mobile devices raises the need to present mobility support in fog computing, which allows sensing information and reacting while they are moving around the environment. Fog computing, in order to be effective, even with systems that have mobility as a peculiarity, must adjust to oversee high mobility devices. In addition, fog computing supports the likelihood that devices can move between fog nodes without causing issues that may bring the system into halts.

6.2.4 IoT Case Studies

At present, there are diverse IoT scenarios that are broadly promoted in the relevant literature to inspire the distinctive prerequisites of these frameworks and fog computing settings. Likewise, numerous researches are dealing with those situations so as to propose novel arrangements hybridizing the three worlds – IoT, fog and cloud – to address the demands [8, 27]. We herein provide a brief description of most important case studies. Those scenarios are used throughout the rest of this chapter to present how different approaches and solutions are applied in fog-enabled environments.

- Smart traffic light (STL). STLs focus on better handling of traffic congestion in metropolitan cities. These frameworks depend on camcorders and sensors distributed along the streets to detect vehicles and components in streets, distinguishing the nearness of bikers, vehicles, or ambulances. To decrease errors, these frameworks can be fine-tuned to sense when traffic lights must be turned green or red depending on vehicles congestion in a single direction. Similarly, when an ambulance with blazing lights is distinguished, the STLs change road lights to open paths for the emergency vehicle. In this situation, traffic lights are fog devices.

- Smart connected vehicle (SCV). SCVs are systems located inside a vehicle controlling every sensor and actuator in the vehicle, such as tires pressure, temperature inside the car, and the street lane in which the car is located. All the information collected by different sensors and sent to the closest fog node, which is usually located in the vehicle, so that all information can be quickly processed, and a real-time response can be given to any dangerous situation, e.g. stopping the car if a puncture is detected in a tire. In addition, different information can be exchanged between vehicles (vehicle-to-vehicle [V2V] communication), with the road infrastructure (through the roadside units [RSUs]) or with the Internet at large (through Wi-Fi, 3G, etc.).

- Wind farm. These are systems that aim at improving the capture of wind power as well as preserving the wind tower structure under adverse condition. Diverse sensors to distinguish the turbine speed, the produced power, or the weather conditions are essential. These data can be given to a nearby fog node situated in every turbine to tune it in order to build the effectiveness and to decrease the probabilities of harm because of wind conditions. Furthermore, wind farms may comprise several individual turbines that must be facilitated to achieve the highest possible efficiency. The optimization of a single turbine can likewise lessen the effectiveness of different turbines in a row at the back of a farm.

- Smart grid frameworks are promoted to counteract the waste of electrical energy. Those frameworks analyze energy requests and evaluate the accessibility and the cost to adaptively switch to green powers such as sun and wind. For this to occur, distinctive fog nodes are deployed on system edge devices hosting software responsible for balancing the equations.

- Smart building systems are one of the most demanding IoT applications. In this scenario, different sensors are deployed throughout a house or a building to get information about different parameters, such as temperature, humidity, light, or levels of various gases. In addition, a fog node could be deployed in-house for collecting and combining all that information in order to react to different situations (e.g. turning the air conditioning on if the temperature is too high or activating a fan depending on gas level). With fog computing applied in those systems, they can better control the waste of energy and water in order to execute actions to better conserve them.

For an overwhelming review that better sheds light on more case studies, we refer the interested reader to a recent survey by [28], which focuses mainly on smart city scenarios and compares more than 30 related research efforts. For another six more scenarios, we point to a recent survey by [29].

6.3 Fog Computing: Architectural Model

This section explains an architectural framework dubbed as cloud-fog-IoT for interwoven application scenarios (see Figure 6.2). It depicts a high-level view of the composing essential elements and their associations. These elements form the ground for our taxonomy of IoT fog computing that we present hereafter. They have been identified considering both the different solutions and approaches surveyed, and the requirements presented in Section 6.2.3. First, the architecture is divided into three areas: cloud, fog, and IoT. These areas mirror the diverse types of nodes that could normally execute activities and tasks of components comprised within those areas. A component can be completely performed by a specific kind of nodes or by diverse nodes relying on the granularity of tasks (for instance, the IoT layer may contain some activities done by fog nodes and others performed by IoT devices). Second, the architecture consists of six layers that span the three worlds (fog, cloud, and IoT). For example, the communication layer must act on improving the interconnection between IoT devices and fog nodes, among fog nodes themselves, and between fog nodes and cloud environments. We expand the explanation of those layers as follows.

6.3.1 Communication

The communication layer is in charge of the communication among the constituent nodes of the network. It contains different techniques for a proper communication between those nodes, including standardization mechanisms for facilitating the exchange of information between different nodes of the network or between different subsystems of the IoT application. These techniques directly address the infrastructure interoperability requirement (see Section 6.2.3.2 for more details). Furthermore, IoT applications are typically described by a high mobility of a portion of its IoT devices. To this end, this layer should contain methods permitting the relocation of a device from a subnetwork to a different one without corrupting a system's normal operation. Meanwhile, this layer is significant for accomplishing real-time responsiveness, which can be obstructed by the inefficiency of communication protocols. In this manner, it additionally incorporates diverse procedures for decreasing communications latency. As a final perspective to consider, this layer needs to guarantee the dependability of communications, ensuring that data will not be lost in the system and that each node or subsystem expecting a particular information ingestion is getting it properly, subsequently improving information quality.

Figure 6.2 Our proposed architecture for cloud-fog-IoT integration.

6.3.2 Security and Privacy

The security and privacy layer influences the entire design, since all interconnections, information, and activities must be done in ways that guarantee the safety of the system and its clients. This layer achieves security on three unique dimensions: security, privacy, and safety. In the first place, security focuses on various methods to guarantee the dependability, confidentiality and integrity of the interconnections between diverse nodes of the setting. Second, unreliable privacy-awareness strategies normally render the whole framework trustworthy. Hence, this layer incorporates access control component to submit data to just-approved clients. As a final consideration, IoT frameworks act in critical environments where safety matters a lot. Fog computing settings encourage the promotion of such approaches just near the edge.

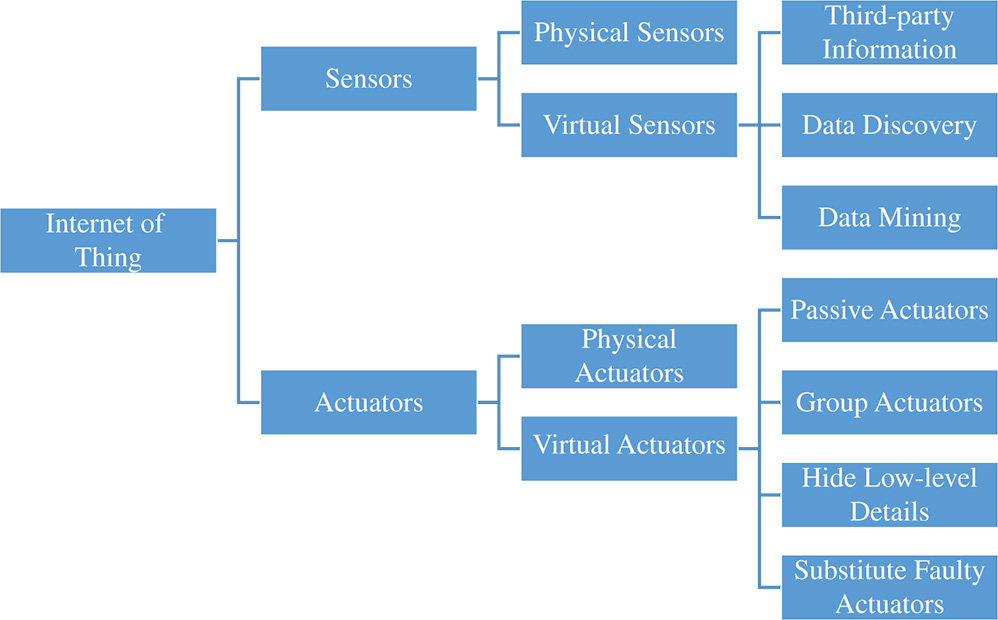

6.3.3 Internet of Things

IoT applications consist of interconnected objects, embedded with sensors, gathering information from the surrounding world, and actuators, and acting upon the environment. The IoT layer is included by each one of those devices responsible for sensing the surroundings and adaptively acting in specific circumstances. This is implanted in IoT and fog worlds. Sensing straightforwardly influences the quality of generated data. Actuation is mostly important in IoT settings, as these are naturally required to interactively respond to flaws so as to avoid disasters. Fog computing can improve the actuation with convenient responses to data.

6.3.4 Data Quality

The data quality layer oversees the processing of all sensed and gathered data so as to build their quality and to diminish the size of data to be stored in the fog nodes or to be transmitted to the cloud environments. This layer comprises three different phases that are successively executed: data normalization, filtering, and aggregation. To start with, data normalization techniques get raw data sensed by heterogeneous devices in order to unify it in a common homogeneous language. Thereafter, since many of the data collected are useless (in the sense that they are not contributing to the final result) and only a part of them are valuable, different data-filtering techniques are employed to get just the contributing subsets, relevantly discarding worthless information, aiming at better exploiting scarce computational resources during subsequent steps. Finally, data aggregation is a process through which fog nodes take filtered data to construct a unique information stream and thereby improve its analysis. Fog should be able to follow aggregation rules in order to identify homogeneous information and, thus, produces a uniform data flow. Architectural components have long been utilized by various settings; they are rather essential for connecting heterogeneous sensors' data with computational assets spread out within the premises of the architecture remainder, thus improving significantly the quality, the scalability, and the general responsiveness of the framework.

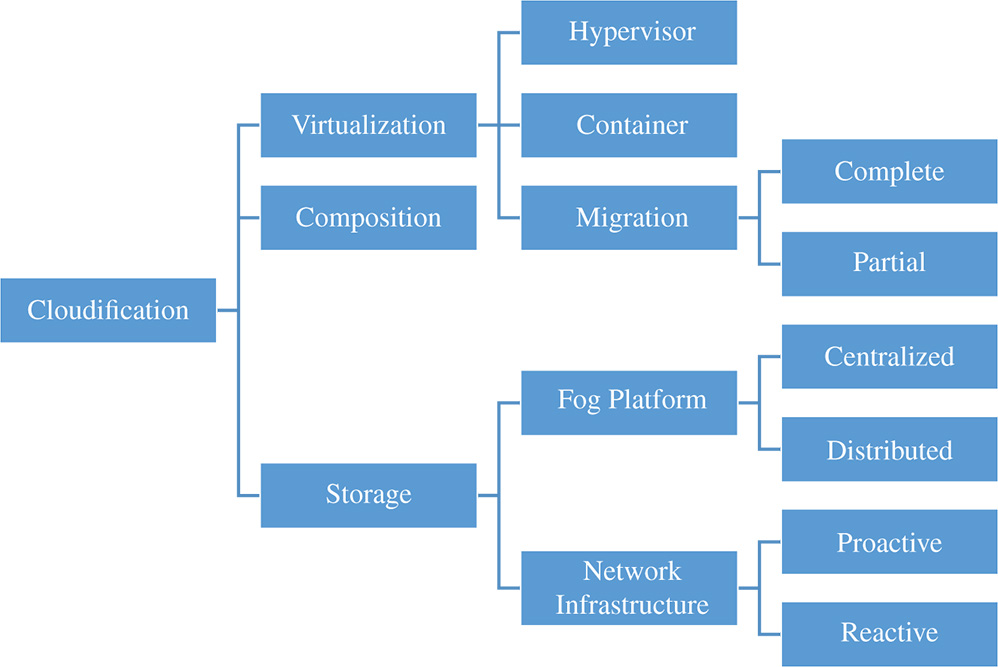

6.3.5 Cloudification

The cloudification layer forms tiny cloud-analogy inside a fog. It helps to close the gap by bringing a defined set of cloud services just-near the edge of the targeted deployment environment or temporarily storing a portion of data and recurrently uploading them to a remote cloud setting, thus diminishing overwhelmed remote communications with the cloud. In order to achieve distributed clouds in the fog nodes, virtualization techniques must be provided so that different applications can be deployed in the same node. In addition, diverse services deployed on fog nodes should be composed and coordinated with the target of orchestrating challenging services for supporting higher-level business processes. Finally, the storage subcomponent is in charge of orchestrating distributed data, which are normally stored in diverse fog nodes, thus managing their processing when applications have to access these data and controlling their privacy. Different and non-irrelevant advantages are normal with that framework in light of the fact that each task is normally achieved in a location-aware setting with better analytics, responsiveness, and results. Moreover, it is not unheard of to confine traffic near IoT devices, without overwhelming traffic load on the shoulders of the network interconnections, improving also privacy, since users have their data in-proximity and can control them. Therefore, this layer provides important benefits in the parlance of responsiveness and user experience quality.

6.3.6 Analytics and Decision-Making

The analytics and decision-making segment are accountable for getting insights from the stored information to create distinctive analytics and to identify various situations. These analytics can lead to making specific decisions that systems should perform. In analogy with previously explained layers, this layer spans the three components of the architecture. In ubiquitous settings, where an immense number of sensors always accumulate data and forward it to fog, the mix of short and long-haul analytics, in fog and cloud respectively, normally accomplishes reactive and proactive decision-making, in a row.

The multifaceted nature of IoT environments prompts the need of a precise introductory conduct of the surroundings to characterize a substantial model to use in system. In fog computing, the prediction of input/output is favored by the proximity to end-users that allows a greater location-awareness of the environments where they execute. This enables further processes, performed by the system, to the external context and, thus improve every future task.

While short-lived analytics and lightweight processing with constrained data amounts are recommended to be handled by fog, cloud are normally performing long-haul and overwhelming asset-demanding activities in Big Data as underpinned in [30] for, specifically, health-care applications, these capabilities transfer over to any similar IoT scenario. Often within a Big Data analysis and processing cycle, significant resource assets are utilized for supporting data-intensive resource-demanding operations, which mostly cannot be satisfied by constrained devices with few available resources near IoT environment. However, big data processing algorithms that perform efficiently even with constrained-resources devices is not a situation that is unheard of. This specifically rationale our decision to expand this layer so that it covers the edge component. Ultimately, in case of resource incapability near the edge, cloud computing is taking the lead and offering great amount of computing power, relieving scalability, cost and performance issues. Moreover, those long-lived analysis cycles are normally utilized for performing orchestrated and proactive decisions processes.

6.4 Fog Computing for IoT: A Taxonomy

In this section, we propose a novel taxonomy for elucidating the principal constituent parts found normally in fog for IoT computing application scenarios. We specifically aim at explaining our categorization, which flows naturally from our previously sketched architectural model. Aiming at facilitating a comprehension of our taxonomy, we introduce a breed of over-the-shelf proposals that support specific elements or traits similar to those appearing in our architectural model. Thus, the proposed classification is populated with different approaches.

Instead of analyzing a complete list of solutions proposed for each characteristic of the taxonomy, we also detail different approaches, applying parts of them to different scenarios, as introduced in Section 6.2.4. We argue that this conceptualization serves as a comprehensive guideline, assisting IoT application designers in deploying effective cloud-fog-edge computing environments.

The presentation order of our taxonomy follows the same pattern as proposed in our logical architecture. Therefore, six parts of our taxonomy are drawn as one for each architectural element in a row. Section 6.4.1 presents the various interconnection aspects that should be considered for the interaction between IoT devices and fog and cloud nodes. Section 6.4.2 elucidates the various security and privacy measures required by IoT scenarios. Section 6.4.3 details interactions between fog nodes and IoT devices. Section 6.4.4 classifies how gathered data is processed. Section 6.4.5 spots the light on various aspects that need to be managed to enable off-loading some cloud loads to fog nodes. Finally, Section 6.4.6 sums up by explaining the taxonomy for data analytics and decision-making perspectives.

6.4.1 Communication

The communication layer offers four distinctive parts, providing help to the diverse attributes and prerequisites of IoT applications with respect to the communication between edge devices and fog and cloud nodes (see Figure 6.3). First, the standardization component, Section 6.4.1.1, explains various protocols that are used in standardizing the intercommunication aspects among the IoT devices ands fog and cloud nodes. Second, not only the communication between network elements should be standardized; rather, in critical IoT applications, the reliability of the transmitted information is essential for correct operation of the system. Section 6.4.1.2 details some of the main techniques to achieve the communication reliability. Likewise, the latency of transmitted information must also be considered in those applications requiring real-time communication. Section 6.4.1.3 analyzes some protocols and techniques focused on reducing communication latency. Finally, the mobility component is discussed in Section 6.4.1.4, which reviews some of the most important mechanism to reduce the mobility issues in IoT applications. For simplicity, Figure 6.3 sums up the proposed taxonomy elements. Some communication protocols actualize diverse methods to support the above parts. However, each method has its pros and cons, making it appropriate for various conditions.

Figure 6.3 Taxonomy for the classification of the communication layer.

6.4.1.1 Standardization

A standout amongst the most basic focuses for the right coordination and intercommunication between IoT devices and IoT applications is the networking protocol utilized. Those protocols standardize the communication among sensors, actuators, and fog and cloud nodes, allowing programmers to accomplish proper levels of infrastructure interoperability in IoT settings. Various authors [31, 32] partition the infrastructure interoperability into two diverse arrangements of protocols: application protocols and infrastructure protocols. Application protocols are utilized to guarantee communicating messages among applications and their devices (Constrained Application Protocol [CoAP] [33], Message Queuing Telemetry Transport [MQTT] [34], Advanced Message Queuing Protocol (AMQP) [35], HTTP, Data Distribution Service [DDS] [36], ZigBee [37], Universal Plug and Play (UPnP) [38]). The latter are required to launch the underlying interconnection among various networks (RPL [39], 6LoWPAN [IPv6 low-power wireless personal area network] [40], IEEE 802.15.4, BLE [Bluetooth Low Energy] [41], LTE-A [Long-Term Evolution – Advanced] [42], Locator/ID Separation Protocol [LISP] [43]). Each system is to utilize a layered-up stack of protocols relevant to a set of requirements and the sensate traits of every application.

For instance, IEEE 802.15.4 offers a generally very secure wireless personal area network (WPAN), which focuses on low-cost, low-power usage, low-speed ubiquitous communication among devices, and with support to facilitate very large-scale management with a dense number of fixed endpoints [44]. On the top of the IEEE 802.15.4 standard, the ZigBee protocol takes advantage of the lower layers in order to facilitate the interoperability between wireless low-power devices, the optimization for time-critical environment, and the discoverability support for mobile devices. In addition, it is possible to extend the IEEE 802.15.4 standard, with 6LoWPAN network protocol that creates an adaptation layer that fits IPv6 packets, improving header compression with up to 80% compression rate, packet fragmentation, and thus direct end-to-end Internet integration [44].

The smart grid is composed of frameworks made out of an enormous number of distributed and heterogeneous devices spread out in various networks. Wang and Lu [45] represents Smart Grid communication network onto a hierarchical network composed by backbone network and millions of different local-area networks with ad-hoc nodes. Infrastructure interoperability is needed to allow devices and networks to cooperate in order to create a unique vision of the system state or to execute a common task. In smart building applications, in addition to the devices, the integration and communication among buildings allow managers to share the infrastructure and management costs, thus reducing the capital and operational expenses [46]. ZigBee is specifically utilized in smart building and smart grid applications because of its short-range and robustness under noise traits [47]. In [48], ZigBee is utilized in smart grid applications for associating sensors with smart meters, considering the overarching characteristic of its low bandwidth prerequisites and minimal-effort deployment.

ZigBee can also be used in vehicular applications, especially to perform short intra-vehicles communications among all the devices inside a vehicle and, for certain specific applications, to communicate outside the vehicle, as shown in [49], where it is used to improve the safety short-range system requirements. SCV acts in a heterogeneous scenario, with the involvement of a swarm of in-built sensors inside the vehicle that communicate, as well as many types of vehicles, externally seen as macro-endpoints, and access point stations that must cooperate among themselves. Several wireless technologies are used to communicate in the network environment [50]. Hence, infrastructure interoperability must be provided in order to allow V2V communications, access-point-to-vehicle and access-point to access-point.

Another well-known kind of WPAN connection is that of Bluetooth tech, characterized by an exceptionally low transmission range and a poor transmission rate, but on the upside with low power consumption. In IoT situations, BLE has gained momentum. This version extends the Bluetooth technology to face and support connections among constrained devices, optimizing lightweight coverage (about 100 m), latency (about 15 times lower than that of a classic Bluetooth), and energy requirements, with a transmission power range of 0.01–10 mW. BLE provides a good trade-off between energy requirements, communication range and flexibility, and a lower bit rate, combined with a low latency and reduced transmitting power, allows developers to transmit beacons beyond 100 m. For these reasons, BLE has several advantages that make it suitable for V2V applications, and thus could be successfully adopted in SCV systems [51].

Moreover, in heterogeneous environments, OSGi framework is widely adopted as a lightweight application container that defines dynamic managements of software components, allowing deployment of bundles that expose services' discoverability and accessibility. As examples, [52, 53] present platforms for SCV where the middleware is based on OSGi services and modules in order to improve the interaction between devices. In [54, 55] the proposal architecture, utilized in Smart Grid and Smart Building scenarios, capitalizes OSGi as a component platform, which enables to activate, deactivate, or deploy system modules easily, to provide a platform on which new components can be integrated in a plug-and-play fashion, keeping the design as flexible and technology independent as possible.

6.4.1.2 Reliability

Another imperative property of the communication protocols is that of the intercommunication reliability. This property guarantees the gathering of the information transmitted by diverse nodes of the setting. Presently, various systems can be utilized to guarantee the quality of the communications, e.g.: retransmission, handshake, and multicasting.

First, retransmission requires the acknowledgment of every packet and thereby retransmitting each lost packet. Several application protocols, like CoAP, MQTT, AMQP, DDS, concentrate on communication's dependability and are founded on retransmission schemes that are designed to handle the packet loss in lower layers. For example, the scheme per-hop retransmission (often called automatic repeat request [ARQ] at the mandatory access control [MAC] layer) tries to retransmit a packet several times before reaching a defined level before the packet is declared lost. Losses are on-the-spot realized and corrected, and even a few per-link retransmissions can substantially enhance end-to-end dependability [56, 57]. CoAP is based on UDP, an unreliable transport layer protocol, but it promotes the utilization of confirmable messages, that require an acknowledgment message, and non-confirmable messages, that does not need an acknowledgment [58].

Second, the handshake mechanism is designed so that two nodes or devices attempting to communicate can agree on parameters of the interconnection before data transmission. MQ Telemetry Transport (MQTT) and Advanced Message Queuing Protocol (AMQP) support three different layers of reliability that are used based on the domain-specific needs: (1) level 0, where a message is delivered at most once, with no acknowledgment of reception; (2) level 1, where every message is delivered at least once with a confirmation message; and (3) level 2, where the message is delivered exactly once and uses a four-way handshake mechanism.

Third, the publish/subscribe technique permits a device to publish some specific information. Other devices or nodes can be subscribed to that information. To state the obvious, each time the publisher posts new information, it is forwarded to the subscribers. Multiple nodes can subscribe to the same information; therefore, the information would be multicast to all of them. DDS uses multicasting for bringing excellent QoS and high reliability to its applications, with the support of various QoS approaches in connection with a wide scope of adjustable communication paradigms [59]: network scheduling policies (e.g. end-to-end network latency), timeliness policies (e.g. time-based filters to control data delivery rate), temporal aspects for specifying a rate at which recurrent data is refreshed (stated another way, timelines between data sampling), network transport priority policies, and other policies that influence how data is processed alongside the communication in relative to its reliability, urgency, importance, and durability [60].

In SVC and STL case studies, with huge increase in the number of connected vehicles, the number of sensors they incorporate, and with their unprecedented mobility, the support for low latency and unobstructed communication among sensors and fog nodes is crucial to guarantee a correct flow of applications [61]. DDS has been used as basis for building an intra-vehicle communication network. To that end, the vehicle was divided into six modules constituting the intra-vehicle network; the vehicle controller, inverter/motor controller, transmission, battery, brakes, and the driver interface and control panel. Fifty-three signals were shared among the different modules, some of them periodic and some sporadic. The test showed that this protocol improves the reliability and the QoS [60].

6.4.1.3 Low-Latency

As fog computing is implemented at the edge of the network, it is easier to provide low latency response, but it is also necessary to use the right protocol. Distinctive protocols can be utilized to improve the interplay between fog or cloud nodes or among devices and nodes. For instance, [59] utilizes MQTT publish/subscribe protocol to acknowledge continuous and low-latency streams of data, in a real-time setting dependent on fog computing capabilities toward cloud and IoT, utilizing fog layer in the meantime as broker and message interpreter: MQTT conveys data stream among fog and cloud and MQTT-SN, while the lightweight variant transports information from edge devices to the fog layer. CoAP [33] is yet another application protocol specifically utilized in IoT scenarios to provide low-latency cycles. In addition, [58] explains performance differences between MQTT and CoAP, focusing on the response delay variation in association with reliability and the QoS provided for communication: lower packet loss or bigger message size implies that MQTT outperforms CoAP; the opposite holds, reversing the conditions. So, deciding the protocol to use is essential depending on the type of application. In other terms, this means employing MQTT for reliable communications or for communication of large packets, and CoAP elsewhere for decreasing latency and thereby increasing the system's performance accordingly.

Finally, DDS [36] is a brokerless publish/subscribe protocol, recursively used for real-time M2M communication scenarios among resource-constrained devices [59]. Amel et al. [60] consider DDS as a favorable solution for real-time distributed industrial deployments and applies the protocol for improving the performance of vehicular application scenarios, evaluating the performance with tests that encapsulate hard real-time applications benchmark. Hakiri et al. [62] suggests utilizing DDS for enterprise-distributed real-time scenarios and embedded systems, those common in smart grid and smart building applications, for the efficient and predictable circulation of time-critical data.

In smart grid and smart building scenarios, most control capacities have strict latency prerequisites and need instant responses. Low-latency activities are fundamental to improve the framework's adaptability on the two sides of the electricity market. In smart grid scenarios, electricity markets expect to utilize demand-response instance pricing and charge clients for time-fluctuating costs that mirror the time-changing savings for power obtained at the discount level [63]. Wang and Lu [45] highlights the importance of low-latency actions in order to collect correlated data samples from local-area systems to enable a global power signal quality at a particular time instant. All samples must be collected by the phasor measurement unit (PMU) in a timely fashion to estimate the power signal quality for a certain instant and, depending on applications, the frequency of synchronization is usually 15–60 Hz, leading to delay requirements of tens of milliseconds for PMU data delivery. For modern power distribution automation, the IEDs (intelligent electronic devices) that are implanted in substations are sending their measurements to data collectors within 4 ms ranges in row, while intercommunications between data collectors and utility control centers need a network latency that falls roughly within the 8–12 ms range [32]; and for the standard communication protocol IEC 61850, maximum acceptable delay requirements vary from 3 ms; for fault isolation and protection purposes messages, to 500 ms; for less time-critical information exchange, such as monitoring and readings [45].

6.4.1.4 Mobility

One of the characteristics of IoT applications is the high mobility of some of their devices [9]. Currently, there are different protocols applying different techniques to support such mobility, such as routing and resource discovery mechanism.

Routing mechanisms oversee constructing and maintaining paths among remote nodes. Few protocols are responsible for building such routes, despite the need of some nodes for mobility peculiarities. For example, the LISP [43] indicates a design for decoupling host identity from its locational data in the present address scheme. This division is accomplished by supplanting the addresses utilized in the Internet with two separate name spaces: endpoint identifiers (EIDs), and routing locators (RLOCs). Isolating the host identity from its locations allows good improvements to its mobility, by enabling the applications to tie to a perpetual address, which is dubbed as the host's EID. Host location changes commonly amid an active connection. RPL is yet another routing protocol for constrained communications, utilizing insignificant routing necessities through structuring a strong topology over light connections and supporting straightforward and complex traffic models such as multipoint-to-point, point-to-multipoint, and point-to-point [31].

In STL and SCV case studies, mobility and routing support are essential needs for creating dependable applications ready for high rates of mobility of vehicles edges. Vehicles must be managed as macro-endpoints, allowing them to switch from one fog subnetwork to another. SCV must incorporate a routing mechanism for supporting vehicles' mobility externally but it does not require these mechanisms internally, since the swarm of sensors normally act statically inside the vehicle. RPL is adaptable for a multi-hop-to-infrastructure design, as a protocol that enables huge area coverage in real geometries, hosting connected vehicles with minimal deployment of infrastructure [64]. This protocol has practical applications in SCV and STL systems, and it is emerging as the reference Internet-related routing protocol for advanced metering infrastructure applications, since it can meet the requirements of a wide range of monitoring and control applications, such as building automation, smart grid, and environmental monitoring [65].

Resource discovery techniques strategies concentrate on recognizing adjacent nodes when a device relocates, so as to build up new communications. For instance, CoAP is able to discover nodes resources in a subnetwork, through URI that host a rundown of assets given by the server. On the other hand, MQTT does not offer out-of-the-box support resources discovery, and thus clients must understand the message design and associated topics to enable the communication. UPnP is a discovery protocol widely used in many application contexts that enables automatic devices' discovery in distributed environments. Fog solutions use UPnP+ [66], which is complementary to IoT applications. This version encapsulates light-tailed protocols and architectural parts (e.g. REST interface, JSON data format instead of XML) aimed at enhancing communication levels with resource-constrained devices. Moreover, Kim et al. [54] proposes an architectural view encompassing both smart building and smart grid utilizing UPnP for further detecting and adding new devices dynamically with no user intervention, unless the system wants additional information about the user's environment. Likewise, Seo et al. [67] propose using UPnP in vehicular applications, to allow external smart devices to communicate with an in-vehicle network, sharing data over a single network with the services provided by each device.

In other scenarios, like wind farm, smart grid, and smart building, the mobility of devices is not a priority in building the application, due to the static nature of the systems.

6.4.2 Security and Privacy Layer

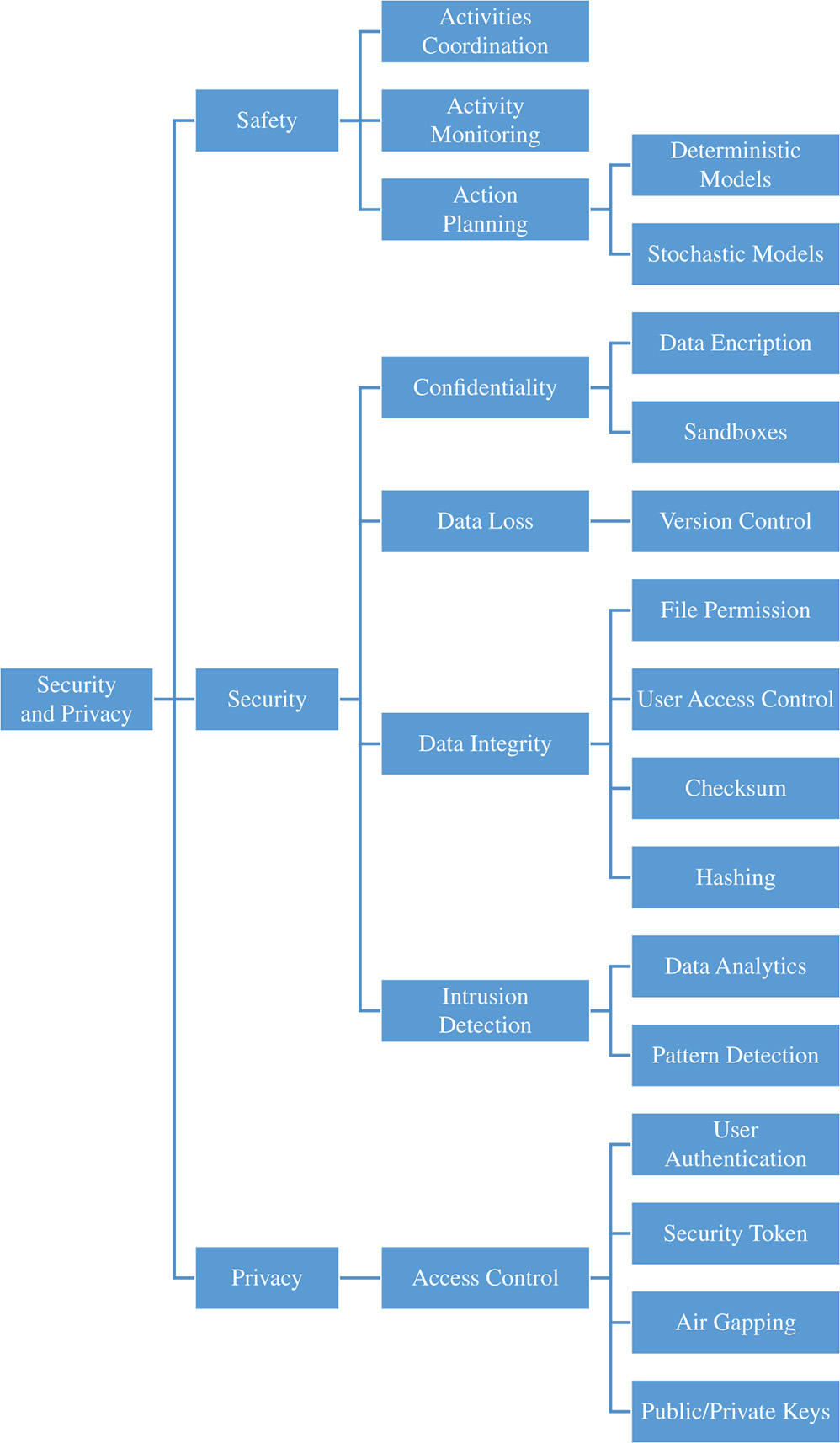

The Security and Privacy layer encompasses three essential elements constituting parts of the IoT settings: those are safety, security, and privacy (see Figure 6.4). In the shown use cases, security and privacy essentially span the whole cycle from computational all the way down to physical aspects. First, few IoT systems are providing minimal safety policies for their users. Section 6.4.2.1 analyses few of the most significant safety mechanisms used in different fog computing–IoT applications. Second, security is a key element for every industrial development. Section 6.4.2.2 details the most important techniques and approaches in IoT systems. Finally, Section 6.4.2.3 presents the most important mechanism to preserve the privacy of the data. As detailed above, this is a vertical layer in the architecture because different kind security and privacy policies can be implemented throughout the data life cycle, from the gathering of the data to their storage in the fog nodes or the cloud environments. For the sake of clarity, Figure 6.4 sums up our taxonomy with all alternatives of modular constituting components.

6.4.2.1 Safety

Safety is fundamental for critical IoT systems. Safety is most often found in a lawful society and the corresponding business logic of IoT systems. That is, these systems must be designed to maximize the safety of any element, entity, good, or user of the system.

The most widely adopted safety practices are activity coordination for orchestrating actions with a concentration on maximizing the users' safety or even those of goods; activity monitoring for ensuring a streamlined and correct execution of actions; and, action planning for controlling the actions required in hazardous situations by either one of deterministic or stochastic designs [68].

Evaluating the application of these techniques in the different use cases, for instance, in STL, different applications can execute coordinated activities to construct green waves for assisting emergency vehicles in avoiding traffic jams or to reduce noise and fuel consumption [69]. SCV systems also rely on action control techniques for monitoring each and every operation, through acquisition of images or vehicles' movement patterns. More often, all users' actions are traced utilizing targeted surveillances.

As a final consideration, in wind farm scenarios, the framework must face fluctuating weather conditions, compare them with a predefined set of thresholds, and apply a lot of arranged activities, e.g. stopping the turbines in the event of a strong wind, for safety reasons. In [70], the authors survey diverse ways to deal with location vulnerability in wind control generation in the unit commitment issue, with fascinating results that demonstrate the presence of models that can adequately adjust expenses, revenue, and safety in a balanced fashion. What's more, as it is demonstrated in [68], the use of stochastic models for unit commitment, instead of deterministic models, can expand the penetration of wind power without any trade-off of safety.

6.4.2.2 Security

Security is a fundamental perspective that must be confronted by industrial settings. The security of IoT systems is usually supported by at least four basic pillars: the confidentiality of the information, ensuring the arrival of data to safe locations, thus preventing their circulation among unauthorized parties. Data encryption and the use of sandboxes to isolate executions, data, and communications are standard methods of ensuring confidentiality [71, 72]. Data loss, can occur; information can be lost throughout circulation or in the different nodes of the fog environment. Normally, these situations are controlled by various protocols utilized for sending data to fog nodes or to cloud settings, through the channels of version control, and configuration management [73]. The integrity of the data must be ensured, discovering and disallowing nonauthorized dissemination of information throughout the whole cycle. Common ways to ensure integrity are file permissions, user-access controls, checksum, and hashing [74] methods. Intrusion detection allows a system to identify if an nonauthorized client is intending to access protected data [27]. Data analytics techniques are used to detect intrusions (observing the behavior of the system), to check for anomalies, and to discover fault recognitions. Finally, pattern detection techniques can be used to compare the users' behaviors with already known patterns, or with the prediction of the system's behavior. Notwithstanding, adapting intrusion detection mechanisms for each IoT setting is challenging as it requires domain-specific knowledge in addition to the technical aspects.

Figure 6.4 Taxonomy for the classification of the security and privacy layer.

Some techniques are utilized for the shown case studies. For example, in smart grid and smart building, security levels needs are obviously high, because any potential breach could result in a blackout. In [45], the authors highlight that these attacks commonly come through intercommunication networks, from intruders with the motive and ability to perform malicious attacks, such as the following. (1) Denial-of-service (DoS) attacks can be targeted toward a variety of communication layers (applications, network/transport, MAC, physical) to degrade intercommunication performance and thus obstruct the normal operation of associated electronic devices. (2) Attacks can target integrity and confidentiality of data, trying to acquire/manipulate information. The great number of devices and providers connected to these systems require the implementation of different security policies to prevent and face any possible cyberattack. Those systems have to ensure that devices are protected against physical attacks, utilizing user-access control policies, and also that sensitive data will never be altered throughout their transmission life cycles, using data encryption and sandboxes techniques [32]. Additionally, fault-tolerant and integrity-check mechanisms are normally operated hand-in-hand with power systems for protecting data integrity and for thereby defending and anonymizing user's actions and their associated localizations.