4

Caching, Security, and Mobility in Content-centric Networking

Osman Khalid¹, Imran Ali Khan¹, Rao Naveed Bin Rais², and Assad Abbas¹

¹Department of Computer Sciences, COMSATS University Islamabad, Abbottabad Campus, Pakistan, 22010

²College of Engineering and Information Technology, Ajman University, Ajman, UAE

4.1 Introduction

Over the years, the number of mobile devices, such as laptops, smartphones, and tablets with sensing capabilities and multiple wireless technologies, has grown significantly. With the evolution of 4G/5G technologies, previously resource-constrained devices such as sensors and smart objects can connect to the outside world on a large scale. Many of the devices involved in wireless networking are mobile, so the network topology changes over time. Traditional end-to-end Internet communication protocols have limitations in coping with such mobility and dynamic network conditions. A user requesting an item needs the IP address of the information provider to reach the content. This makes traditional Internet protocols host centric, i.e. they need to reach the content host. However, the end user is usually not interested in the location of a content item; the user is interested only in the content, regardless of where it is retrieved from. There is therefore a need for a communication mechanism that is data centric rather than host centric, which calls for new architectures and paradigms. Content-centric networking (CCN) has emerged as a candidate for such a data-centric Internet architecture.

CCN has gained considerable popularity as a promising architecture for the future Internet, and several related projects are active worldwide [1]. In CCN, content is accessed using its designated name rather than the IP address of the host storing it. Because content is addressed by name rather than by IP address, both communication cost and bandwidth usage are reduced. Kim et al. proposed a technology that substantially reduces congestion caused by network traffic, speeds up the distribution of information, and secures content [2]. Mobile devices have been transformed into multimedia computers with embedded features (such as messaging, browsing, cameras, and media players) [3], and they can create and use their own content easily and rapidly. Usually, media servers and web portals are used to host content, and an end-to-end connection must be established to retrieve it. CCN, in contrast, is a self-organized approach that pushes only the relevant data when desired.

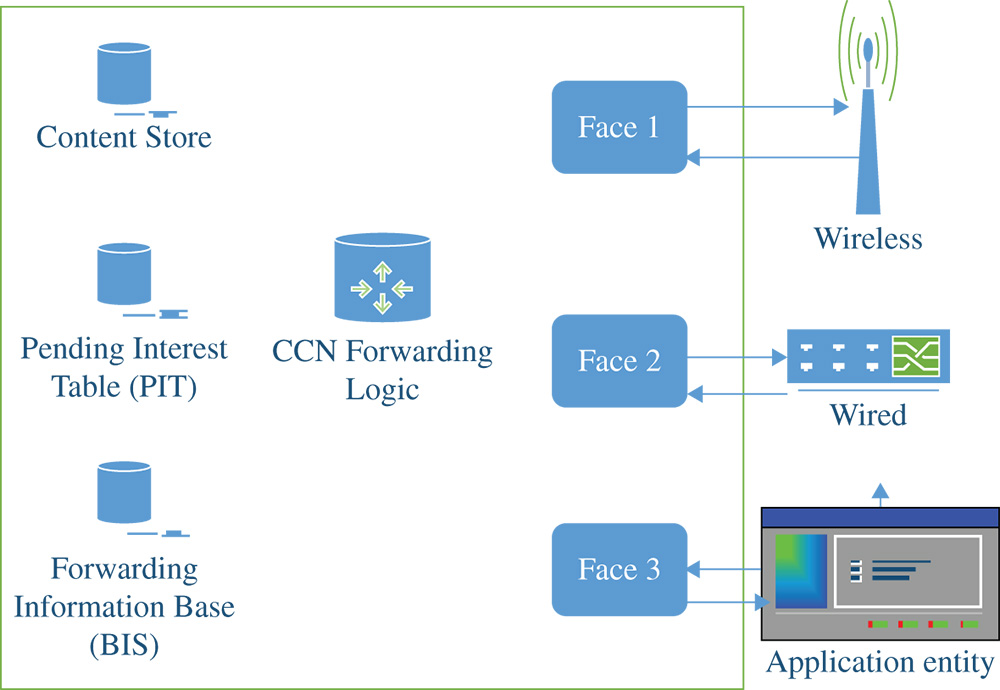

CCN differs from a traditional TCP/IP network in many respects. CCN is an extension of the information-centric networking (ICN) research projects and comprises four main entities: producers, consumers, publishers, and routers. Currently, CCN 1.0 is the latest protocol design for these networks. A CCN router contains a forwarding information base (FIB), a pending interest table (PIT), and a content store (CS), as shown in Figure 4.1. The FIB replaces the traditional routing table. The PIT records requests (also known as interests) that have been forwarded but not yet satisfied. The CS is an optional element that acts as a cache in which content objects passing through the router may be stored. A CCN deals with three types of messages: manifests, interests, and content objects. Data names are independent of location, and content search and retrieval is based directly on these names, i.e. content is identified and accessed solely by its name. Communication begins with a request from the consumer, who sends an interest packet that asks for content “by name.” The node that holds the requested data, which can be either the owner or any temporary holder, responds with the data and additional authentication, ownership, and integrity information. Despite these advantages, CCN still faces challenges such as naming, mobility management, and security.

Figure 4.1 CCN router components.
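The interplay of the three router tables can be illustrated with a minimal sketch. It assumes the commonly described lookup order (content store first, then PIT, then FIB with longest-prefix matching); the class name `CcnRouter`, its method names, and the use of integers as face identifiers are illustrative assumptions, not part of any CCN specification.

```python
from dataclasses import dataclass, field


@dataclass
class CcnRouter:
    """Toy forwarder with the three tables of Figure 4.1 (illustrative only)."""
    fib: dict = field(default_factory=dict)  # name prefix -> outgoing face
    pit: dict = field(default_factory=dict)  # content name -> set of requesting faces
    cs: dict = field(default_factory=dict)   # content name -> cached content object

    def on_interest(self, name, in_face):
        # 1. Content store hit: answer directly from the local cache.
        if name in self.cs:
            return ("data", in_face, self.cs[name])
        # 2. Interest already pending: record the extra face, do not forward again.
        if name in self.pit:
            self.pit[name].add(in_face)
            return ("aggregated", None, None)
        # 3. Otherwise forward according to the longest matching FIB prefix.
        out_face = self._longest_prefix_match(name)
        if out_face is None:
            return ("dropped", None, None)
        self.pit[name] = {in_face}
        return ("forwarded", out_face, None)

    def on_data(self, name, content):
        # Consume the PIT entry; cache only content that was actually requested.
        faces = self.pit.pop(name, set())
        if faces:
            self.cs[name] = content
        return sorted(faces)

    def _longest_prefix_match(self, name):
        parts = name.strip("/").split("/")
        for end in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:end])
            if prefix in self.fib:
                return self.fib[prefix]
        return None
```

In this sketch a second interest for the same name is absorbed by the PIT, and once the content object returns, later interests are served from the content store without touching the FIB.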

The major research directions within CCN include architecture, naming, mobility, security, caching, and routing [4]. Some literature surveys on content-centric networks have addressed these areas [4]. However, those surveys discuss the research directions in general terms without going into the details of any specific field. This chapter focuses on three major areas of CCN research: caching, security, and mobility management. The discussion encompasses content management, i.e. where to place content (caching), how to secure it from threats (security), and how network mobility affects cached content (mobility).

The rest of the chapter is organized as follows. Section 4.2 discusses caching and fog computing. Section 4.3 analyzes recent approaches proposed for mobility management. Section 4.4 describes security aspects and the approaches proposed for securing the network. Section 4.5 discusses caching in CCN, and Section 4.6 concludes the chapter.

4.2 Caching and Fog Computing

In recent years, fog computing has emerged as a technology for addressing the latency-related issues of traditional cloud computing paradigms [5]. Fog computing aims to bring computing and storage capabilities from the cloud closer to the end user. Fog servers can be placed at multiple layers between the network edge and the cloud. Placing resources near end users saves bandwidth at the network core and reduces latency, because a user's request can be processed locally instead of being forwarded to remote cloud servers. This helps meet the demands of applications that require real-time response, e.g. augmented reality, real-time health monitoring, and localized traffic management apps.

Data caching plays a pivotal role in fog computing: an efficient caching design on a fog node exploits the temporal locality of data and the broader coverage of requests to save network bandwidth and reduce delay [5]. CCNs can be integrated with fog networks, together with efficient caching mechanisms, to improve content availability for end users. Caching in such scenarios can be classified as reactive (transparent) or proactive. In transparent caching, data is cached at the fog server without the end user being fully aware of it. In proactive caching, data is precached on the nodes before end-user devices actually request it. For example, software updates can be cached at fog nodes before users request them, which saves the network from high bandwidth utilization. Another example is caching proximity-related data: geo-social networks (GSNs) such as Google Latitude and Yelp store region-related content, and mobile users often use these services to request information about geographically nearby places (restaurants, etc.). Proactive caching is closely related to content distribution networks and is expected to lead to further bandwidth reductions in the core network.
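The difference between the two caching modes can be sketched as follows. The `FogCache` class, the least-recently-used eviction policy, and the dictionary standing in for the remote origin are all assumptions made for illustration; the chapter does not prescribe a particular policy.

```python
from collections import OrderedDict


class FogCache:
    """Toy fog-node cache supporting reactive and proactive population."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()   # name -> content, ordered by recency
        self.origin_fetches = 0      # number of trips to the remote origin

    def _store(self, name, value):
        self.items[name] = value
        self.items.move_to_end(name)
        while len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict least recently used (assumed policy)

    def _fetch_from_origin(self, name, origin):
        self.origin_fetches += 1
        return origin[name]

    def get(self, name, origin):
        """Reactive path: content is cached only after the first miss."""
        if name in self.items:
            self.items.move_to_end(name)
            return self.items[name], "hit"
        value = self._fetch_from_origin(name, origin)
        self._store(name, value)
        return value, "miss"

    def prefetch(self, names, origin):
        """Proactive path: content is loaded before any user asks for it."""
        for name in names:
            if name not in self.items:
                self._store(name, self._fetch_from_origin(name, origin))
```

With reactive caching the first requester pays the full round trip to the origin, whereas a prefetched item (for example a software update) is a hit on its very first request.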

4.3 Mobility Management in CCN

With the growing number of mobile devices, global mobile data traffic increased up to 18-fold from 2011 to 2016, and the number of mobile-connected devices using mobile video services rose to 1.6 billion. The current architecture of the Internet was designed for a fixed network environment. To support mobile nodes, the existing Internet requires additional operations such as multi-staged address resolution and frequent location updates. In IP networks, the addresses of the sender and receiver are used to deliver content over a pre-established connection, so the movement of mobile users results in disconnections. CCN, as an alternative to IP-based content access, offers enhanced mobility support: because content is accessed through its name instead of an IP address, an end-to-end connection is no longer required to retrieve content. Consequently, a mobile user can achieve a seamless hand-off while continuing to access content from a new location. Mobility in CCN can be classified as client mobility and content mobility. Client mobility deals with client movements during requests for data objects. Content mobility refers to handling location changes of an object or a set of objects. Network mobility is a further kind of mobility in which a complete network changes location (e.g. a body area network or a train network). In CCNs, when a complete collection of objects changes its location, the new path needs to be broadcast while the old routing records are kept. In addition, the movement of some chunks of a collection of objects to a new location (e.g. a company employee takes a laptop on a trip) also needs to be handled. Moving an entire network, for instance a network comprising a heterogeneous set of publishers, results in many routing updates. Too many updates cause bottlenecks unless the network allows relative route broadcasting.

4.3.1 Classification of CCN Contents and their Mobility

With respect to stability and mobility, CCN distinguishes two types of content: data content and user content. Data content refers to access to the content itself. Persistent content providers serve data content and disseminate replicas of it for ease of access, so locations are comparatively stable for applications such as web pages, streaming services, and files. User content, on the other hand, has real-time characteristics, e.g. Internet telephony, chat, and e-gaming, and is created and managed by individual mobile devices. Content service components such as rendezvous points and control messages are used to serve both data content and user content. A rendezvous point is a location manager that keeps track of the location information of all providers and peers. A rendezvous point can also act as a mobility handler by relaying all interest and response packets, in which case it is known as an indirection point. Control messages address changes to a content provider's routable prefix; source mobility, however, requires a sender-driven control message.

4.3.2 User Mobility

On the user side, mobility for buffered content in CCN is supported by retransmitting the request and response packets that first arrive at the Internet service provider (ISP). When the caller hands off, the sequence number of the contents is received from the caller, and chunks lost during communication can then be recovered by simple retransmission of the data packets. User-side mobility also deals with persistent interests. Kim et al. divided CCN services into three types based on service characteristics: real-time documents, channels, and on-demand documents [6]. A persistent interest differs from a regular interest. With a persistent interest, the route remains bound to the old location after hand-off and responses are multicast to the old location, which wastes network resources. To address this, a signaling message can be used when the edge router cannot deliver the content corresponding to a persistent interest. When a threshold is reached, the edge router generates a “host unreachable” message under the specific name, for example (persistent interest name)/Host unreachable. This signaling message is delivered to the content server, and the gateways that relay it can remove the obsolete persistent interests and responses from their PITs. When the hand-off is caused by the mobile client, persistent interests are retransmitted along the new path. The retransmitted interest carries a dedicated bit that eliminates the outdated PIT entry. When the content server receives such an interest, it sets the bit to “on” in its subsequent responses. This approach requires clients to send additional interests in order to preserve a valid path to them.
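The “host unreachable” signaling can be sketched as follows. The failure counter, the default threshold of three, and the helper names `EdgeRouter` and `purge_stale_entry` are hypothetical; only the naming pattern (persistent interest name)/Host unreachable is taken from the description above.

```python
class EdgeRouter:
    """Counts failed deliveries per persistent interest (illustrative sketch)."""

    def __init__(self, threshold=3):
        self.threshold = threshold   # assumed: number of consecutive failures tolerated
        self.failures = {}           # persistent interest name -> failure count

    def report_delivery(self, interest_name, delivered):
        """Return a signaling name once the failure threshold is reached, else None."""
        if delivered:
            self.failures.pop(interest_name, None)
            return None
        count = self.failures.get(interest_name, 0) + 1
        self.failures[interest_name] = count
        if count >= self.threshold:
            del self.failures[interest_name]
            return f"{interest_name}/Host unreachable"
        return None


def purge_stale_entry(pit, signal):
    """Gateway-side handling: drop the PIT entry named by the signaling message."""
    suffix = "/Host unreachable"
    if signal.endswith(suffix):
        return pit.pop(signal[: -len(suffix)], None) is not None
    return False
```

Each gateway on the path toward the content server would apply `purge_stale_entry` to its own PIT as the signaling message passes through, so the stale route to the old location stops attracting responses.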

4.3.3 Server-side Mobility

To keep receiving interests smoothly after hand-off, the name of the mobile node must be updated in the routing table for each individual content item. This requires a binding service between the changing names at both the old and the new location. An application server, such as a rendezvous point or a proxy agent, can provide this binding service when the content name changes. Rendezvous-based approaches are used for changing names and for binding services; they are discussed in the following subsections.

4.3.4 Direct Exchange for Location Update

A hand-off during the direct exchange of location updates indicates data source mobility. The callee is informed of the caller's new hierarchical pathname so that the ongoing service runs smoothly, and it can continue to receive replies even during the hand-off. The lost portion of data cannot be recovered by simple retransmission; instead, the application regenerates the interests with the new hierarchical pathname. Although this scheme is simple and effective, problems arise when both peers perform hand-offs simultaneously.

4.3.5 Query to the Rendezvous for Location Update

When a caller initiates a service with a mobile callee, it queries a rendezvous server to obtain the callee's location record. The rendezvous server maintains the location information of all clients, so whenever a hand-off occurs the client's new pathname is communicated to the rendezvous server. Using the rendezvous service, a peer can therefore obtain the routable prefix even after simultaneous hand-offs.
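A rendezvous server of this kind is essentially a mapping from a stable peer name to its current routable prefix. The sketch below is a minimal illustration; the class and method names, as well as the example prefixes, are assumptions.

```python
class RendezvousServer:
    """Keeps the current routable prefix of every registered peer (toy model)."""

    def __init__(self):
        self._locations = {}  # stable peer name -> current routable prefix

    def update(self, peer, routable_prefix):
        """Called by a peer after each hand-off to report its new pathname."""
        self._locations[peer] = routable_prefix

    def query(self, peer):
        """Called by a caller to resolve the callee's current location."""
        return self._locations.get(peer)


# Both peers hand off at the same time; each can still find the other,
# because neither depends on a direct notification from its counterpart.
rv = RendezvousServer()
rv.update("alice", "/domainA/alice")
rv.update("bob", "/domainB/bob")
rv.update("alice", "/domainC/alice")   # Alice moves
rv.update("bob", "/domainD/bob")       # Bob moves simultaneously
alice_now = rv.query("alice")
bob_now = rv.query("bob")
```

The design choice is that location state lives at a fixed third party, which is what makes simultaneous hand-offs tractable compared with the direct exchange of the previous subsection.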

4.3.6 Mobility with Indirection Point

Another technique for reducing hand-off delay is the indirection scheme. To initiate a request, the caller issues a packet carrying the callee's name. On receiving this packet, the indirection server issues another interest to the callee using the callee's current routable name. When the callee responds, the indirection server issues a corresponding response packet toward the caller. When the caller hands off, it notifies the indirection server of the hand-off event. While the hand-off is in progress, the indirection server buffers any interest it receives for the caller and delays generating the corresponding interest toward the caller. Once the caller conveys its new hierarchical pathname, the indirection server resumes generating the corresponding interests and serving the caller.
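The buffering behavior of the indirection server during a hand-off can be sketched as a small state machine. The class `IndirectionPoint` and its method names are hypothetical, and interests are represented by plain strings for brevity.

```python
from collections import deque


class IndirectionPoint:
    """Relays interests to peers and buffers them while a peer is in hand-off."""

    def __init__(self):
        self.routes = {}          # stable name -> current routable name
        self.in_handoff = set()   # stable names whose owner is currently moving
        self.buffered = {}        # stable name -> deque of held interests

    def register(self, name, routable_name):
        self.routes[name] = routable_name

    def notify_handoff(self, name):
        """The peer announces that it is about to change its attachment point."""
        self.in_handoff.add(name)

    def on_interest(self, name, interest):
        """Relay the interest, or hold it if the target is in hand-off."""
        if name in self.in_handoff:
            self.buffered.setdefault(name, deque()).append(interest)
            return None
        return (self.routes[name], interest)

    def complete_handoff(self, name, new_routable_name):
        """The peer reports its new pathname; release everything that was held."""
        self.routes[name] = new_routable_name
        self.in_handoff.discard(name)
        pending = self.buffered.pop(name, deque())
        return [(new_routable_name, interest) for interest in pending]
```

Because held interests are released in arrival order toward the new routable name, the peer sees a delay rather than a loss, which is the source of the reduced hand-off disruption.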

4.3.7 Interest Forwarding

To overcome the drawbacks of the previous schemes, a layered approach known as “interest forwarding” has been proposed. Unlike the previous schemes, this approach does not require a new name for seamless service. It does require modified CCN routers, because interests arriving for a client in hand-off are buffered in the routers. The overhead caused by adding new entries in routers on the way between the access routers involved in the hand-off is somewhat reduced. In addition, the hand-off process does not require a new routable prefix, and hand-off latency is reduced as well.

4.3.8 Proxy-based Mobility Management

This scheme is based on the idea that user proxies with a CCN environment are deployed on top of the overall IP network architecture. Content is made available to users through a proxy node when required. A user sends a query packet to the proxy node instead of connecting to every device that holds the required content. On receiving the query packet (interest), the CCN proxy tries to obtain the content, which reduces the overhead of network configuration. The scheme consists of the following steps:

- Handover detection. Using physical link information or router advertisements, the mobile node detects a change in network status.

- Handover indication. While handover detection is in progress and before the actual handover occurs, the mobile node sends a hold request message to the proxy node. On receiving the hold message, the proxy node stops sending content data to the mobile node's old location and stores the content data in its local repository for later retransmission.

- Handover completion. After acquiring a new IP address, the mobile node notifies the proxy node of it by piggybacking the address on a handover message. The CCN proxy node transmits the stored data to the new location, so interest packets need not be sent again. On receiving the content packet at the new location, the mobile node can request the next content data segment using a normal CCN interest packet.
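The hold, store, and flush behavior of the proxy across the handover steps above can be sketched as follows. The `CcnProxy` class, its method names, and the addresses (taken from the IP documentation ranges) are illustrative assumptions.

```python
class CcnProxy:
    """Toy proxy that withholds content while its mobile node is handing over."""

    def __init__(self, client_ip):
        self.client_ip = client_ip
        self.on_hold = False
        self.repository = []   # segments stored during the handover
        self.sent = []         # (destination IP, segment) pairs actually transmitted

    def hold(self):
        """Handover indication: stop sending to the old location."""
        self.on_hold = True

    def deliver(self, segment):
        """Content arriving from the CCN side for the mobile node."""
        if self.on_hold:
            self.repository.append(segment)
        else:
            self.sent.append((self.client_ip, segment))

    def handover(self, new_ip):
        """Handover completion: learn the new address and flush stored segments."""
        self.client_ip = new_ip
        self.on_hold = False
        flushed = [(new_ip, segment) for segment in self.repository]
        self.sent.extend(flushed)
        self.repository.clear()
        return flushed


proxy = CcnProxy("192.0.2.10")
proxy.deliver("seg1")                   # sent to the old location as usual
proxy.hold()                            # mobile node signals an imminent handover
proxy.deliver("seg2")                   # stored, not sent
proxy.deliver("seg3")
flushed = proxy.handover("198.51.100.7")
```

After the flush, the mobile node already holds every segment produced during the handover and can continue with a normal interest for the next segment.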

4.3.9 Tunnel-based Redirection (TBR)

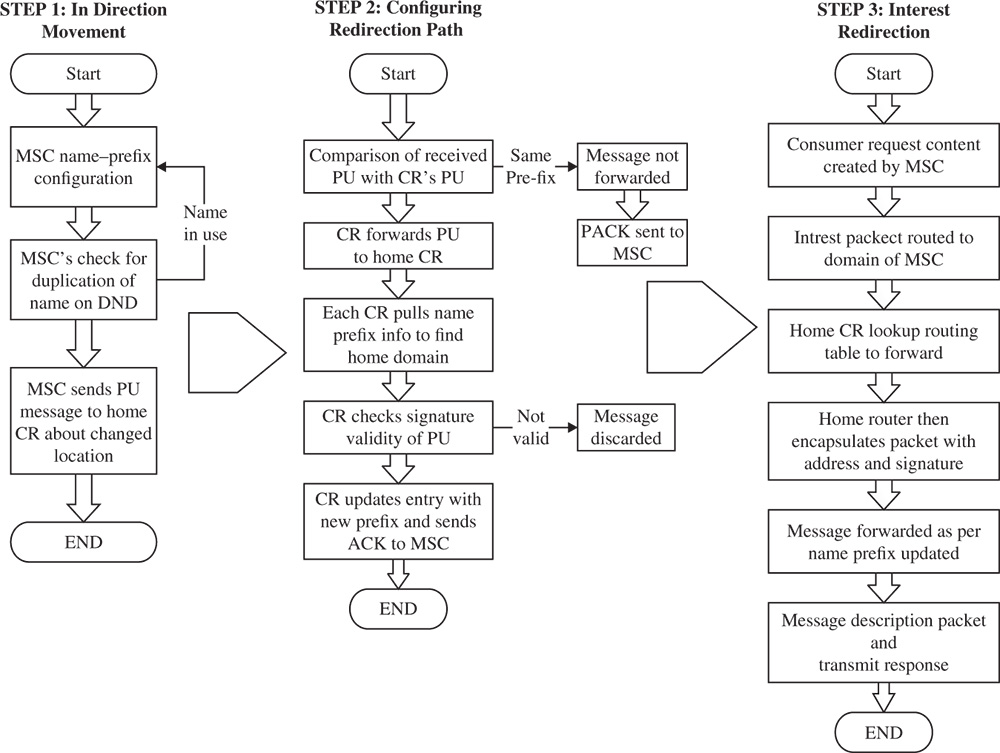

This scheme redirects incoming interest packets between the content router (CR) of the MSC's home domain and the content router of the domain the MSC has moved to, using tunneling. To make its presence known, each router periodically broadcasts its prefix names. The point of attachment in the CCN is located by comparing this broadcast information, which is also used to decide when to initiate a prefix update (PU) round toward the home domain. Initially, the home content router announces the name prefix of the MSC to inform the other CRs. After moving, the MSC broadcasts a PU message about its current movement and announces its active state to the local routers of the domain. The CR of the new domain then sends the PU message to the home domain. The redirection path is established by the exchange of the prefix update and its acknowledgment message (P-ACK). Data packets and interests are then exchanged between the MSC and the home CR through this name prefix. The scheme works in the following three steps (Figure 4.2):

- Step 1. Movement indication. The MSC identifies whether it has changed networks from network address information or from underlying physical link information. Normally, the wireless access point (AP) provides the MSC with data on all CRs in the access domain. When the MSC changes domain, it first forms a tentative name prefix, based on the new domain router's prefix, in order to tunnel the interest packets. The tentative name prefix is composed of the MSC's original name prefix and the name of the domain it has moved to. To check the validity of the tentative name prefix, the MSC performs duplicate name prefix detection (DND): it broadcasts interest packets to all in-range devices on the physical link within the new CR's domain to check whether the tentative name prefix is already used by someone else. If it is, the MSC repeats the DND process with a new tentative name. Repeating DND thus guarantees a tentative name prefix that is unique among adjacent neighbors. After a successful DND process, the MSC forwards the PU message indicating its new location to the CR of its home domain. The tentative name prefix and the MSC's signature in the PU message are used to check its validity.

- Step 2. Redirect path configuration. A CR that receives a PU message compares the domain name prefix in the PU message with its own. If the two differ, the CR forwards the PU message to the next content router according to its FIB. Otherwise, the PU message is not forwarded; instead, a P-ACK message is sent to the MSC. On receiving a PU message, each content router immediately extracts the name prefix information to find the message's home domain router, and then creates a PIT entry with the name prefix of the MSC's home domain CR. On receiving the PU message, the home domain CR checks whether it is valid. If the signature is valid, the CR sends an acknowledgment message to indicate a successful PU. At that point, the home router's entry for the MSC is changed to the new one set by the PU message. On receiving the P-ACK message, the intermediate CRs look up their routing tables again, consume the relevant PIT entry, and then forward the P-ACK message.

- Step 3. Interest redirection. Consumer requests are directed toward the MSC. The home CR looks up its routing table to send the interest packet. The home router encapsulates the newly created interest packet and forwards it toward the tentative name prefix extracted from the routing table. The intermediate CRs between the home content router and the MSC deliver this message through regular CCN forwarding. The MSC receives and decapsulates the interest packet and transmits the data response.

Figure 4.2 Tunnel-based redirection (TBR) scheme.
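The tentative name prefix, the DND retry loop, and the encapsulation at the home router can be sketched as follows. The ordering of the prefix components, the `~n` retry suffix, the retry limit, and all function names are assumptions made for illustration; the scheme itself only states that the tentative prefix combines the original name prefix with the moved domain name and must be unique among neighbors.

```python
def tentative_prefix(moved_domain, original_prefix, attempt=0):
    """Combine the moved domain name with the original prefix (assumed layout)."""
    base = f"/{moved_domain.strip('/')}/{original_prefix.strip('/')}"
    return base if attempt == 0 else f"{base}~{attempt}"


def duplicate_name_detection(moved_domain, original_prefix, names_in_use, max_attempts=8):
    """Repeat DND until a tentative prefix is found that no neighbor already uses."""
    for attempt in range(max_attempts):
        candidate = tentative_prefix(moved_domain, original_prefix, attempt)
        if candidate not in names_in_use:
            return candidate
    raise RuntimeError("no unique tentative name prefix found")


def encapsulate(home_table, interest_name):
    """Home router: wrap an interest for a moved source toward its tentative prefix."""
    for prefix, tentative in home_table.items():
        if interest_name == prefix or interest_name.startswith(prefix + "/"):
            return {"outer_name": tentative, "inner_interest": interest_name}
    return None  # the name does not belong to a moved source


# A neighbor in the new domain already uses the first candidate name.
in_use = {"/domainB/alice/cam"}
tentative = duplicate_name_detection("domainB", "/alice/cam", in_use)

# After a successful prefix update, the home router maps the original
# prefix to the tentative one and tunnels matching interests.
home_table = {"/alice/cam": tentative}
tunneled = encapsulate(home_table, "/alice/cam/frame9")
```

Here `names_in_use` stands in for the replies that the broadcast DND interests would elicit on the physical link, and signature checking of the PU message is omitted.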

4.4 Security in Content-centric Networks

In this section, we highlight the architectural weaknesses of generic content-centric networks. Implementation weaknesses due to faulty cryptographic schemes or algorithm selection are not covered; the discussion concentrates on loopholes inherent in the system [7]. One of the major research areas in CCN security is the naming convention. Most currently proposed naming schemes provide content integrity using public/private key pairs, and real-world objects are linked to their keys through a public-key infrastructure (PKI). The security risks of naming schemes and PKIs are still under-researched and need more attention [8]. Signatures are also included in packets to provide authentication [4]. Certification chains are hierarchical and require an inheritance-based system for their application. The security of CCN is closely tied to the naming schemes and architectures [9]. For human-readable names, a third party must be trusted to verify the data and the name. The third party can be replaced if the network develops a trusted relationship with the system performing name resolution. Flat names support self-certification, which is extremely useful, but they are not human readable. In this case a third party becomes mandatory and must be trusted, because human-readable names have to be mapped onto the flat names. The name of the data requested by a user is visible to all CCN nodes that take part in processing the request [10].

Name resolution involves two steps. The first step resolves the content name to a single IP address or a set of IP addresses; these location-defining addresses are called locators. The second step routes the request once its locators are known: the request message is routed to one of the resolved locators using IS-IS, OSPF, or any other shortest-path routing mechanism. In name-based routing, the content propagates directly back to the requesting party. This is possible because names are used instead of addresses for routing and route-tracking information is maintained along the route.

Naming schemes are broadly classified into flat names and hierarchical (human-friendly) names. Flat names hide the original meaning and context of the object because they are hashed, and their drawback is that they are difficult to remember. Hierarchical names, on the other hand, are human friendly because their structure resembles that of a URL, describing the content and context of the requested object. Human friendliness makes it easier for users to find the objects they are interested in, but it also poses challenges such as authenticity, security binding, and ensuring the global uniqueness of objects [11]. A few naming architectures have been designed recently to overcome the security issues caused by naming schemes, as described below.

CCNs are host independent and data dependent. Security therefore has to be associated with the naming scheme or with the data itself, rather than with the channel or the machine. In [1], the authors present a naming scheme that enables verification of data integrity, owner authentication, and owner identification along with naming the content. The paper analyzes the information-centric network architecture, derives its requirements for naming policies and schemes, and embeds these requirements into an overall architecture. The scheme provides name persistence, self-certification, owner authentication, and owner identification all at once. Name persistence is ensured even if content properties such as owner or location change. Self-certification lets the user verify data integrity without having to trust a third party or the producer of the content; it is usually provided by joining the message/content digest with the original message. Owner authentication and owner identification bind an ID to an entity/content, which enables verification at the consumer end. This is achieved by keeping the encryption key pairs of the owner separate from the self-certification key pairs (which authenticate the content of the data).
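Self-certification through a content digest can be illustrated with a minimal sketch. It assumes the simplest variant, in which the flat name is just the SHA-256 digest of the content; this is not the full NetInf ID format, and the function names are hypothetical.

```python
import hashlib
import hmac


def flat_name(content: bytes) -> str:
    """Derive a flat, self-certifying name as the SHA-256 digest of the content."""
    return hashlib.sha256(content).hexdigest()


def verify_content(name: str, content: bytes) -> bool:
    """Check received content against its name without trusting the sender."""
    return hmac.compare_digest(flat_name(content), name)


name = flat_name(b"chapter 4 draft")
genuine = verify_content(name, b"chapter 4 draft")
tampered = verify_content(name, b"chapter 4 draft (modified)")
```

Any cache or temporary holder can serve the object, since the consumer recomputes the digest and rejects content that does not match the requested name. The price, as noted above, is that such names are not human readable.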

In NetInf, the naming scheme proposed in [1], any entity/content is represented by a globally unique ID. Together with the entity's own data and metadata, the resulting object provides the features mentioned above. The metadata contains the information needed for the security functions of the NetInf naming scheme, e.g. public keys, content hashes, certificates, and a data signature authenticating the content. It also includes non-security-related information, i.e. any attributes associated with the object. According to this naming scheme, metadata is a mandatory part of a generic content-centric network data object and can be stored independently. The prototype of the naming scheme is built in Java and has been tested on Windows, Linux, and Android platforms. It has proven easy to implement because the security mechanisms used for hashing, certificates, and encryption are library based and readily available. The naming scheme can easily be implemented as a plug-in in various web browsers and clients.

Wong and Nikander propose a secure naming scheme for locating resources in information-centric networks, specifically generic content-centric networks [12]. It extends the existing URL naming scheme. One of its primary goals is to allow secure content retrieval from unknown and untrusted sources. Its features include backward compatibility with URL naming schemes and independent content identification: the storage methods and the routing and forwarding mechanisms play no role in identifying the content that is transferred. To provide this independence, the source and the rules defining a location in the authority field of a URL/URI are separated. Besides backward compatibility, which is the main feature of the scheme, it provides secure content retrieval from varying sources, content authentication and validation with reference to the source, and uninterrupted mobility over the network.

A name-based trust and security approach is proposed in [13]. It is built on identity-based cryptography, in which users are allotted identification tokens and only authorized users can access data. The identity token, derived from any feature of the user's or owner's identity, from the name of the content, or from its prefix, is also used as the public key. Because the signed identity creates a direct link between the name (identity) and the content, it can easily be accessed by a malicious user, who can then launch an attack [14]. An attacker can be anyone from the ISP to the end users of the system. The technique presented in [14] enhances the scheme of [13] by replacing identity-based cryptography with hierarchical identity-based cryptography to overcome the identified drawbacks.

Besides the naming-related security issues, we briefly discuss the effect of caching on the security and privacy of generic content-centric networks, and denial of service (DOS) attacks.

4.4.1 Risks Due to Caching

Caching distinguishes generic content-centric networks from traditional TCP/IP networks, because data is stored in the router caches. Data leaves traces even after it is removed or forwarded, and an attacker can retrieve it by probing and similar techniques. Hence caching puts system security at risk despite being a defining feature of CCNs. Attacks that exploit cached data can be reduced by keeping data strictly up to date and restricting the replay of old data.

4.4.2 DOS Attack Risk

DOS attacks, although difficult to carry out in these networks, are still possible. They are carried out through interests. Because request and response packets always follow the same path, this property can be exploited to flood a destination with irrelevant data packets. The problem is complicated by the fact that the location from which a packet originated cannot be identified. Owing to the architecture of the Internet and of generic CCNs, DOS attackers can easily cover their tracks. To cope with these attacks, a few security patches (to be added to the existing system) and trust mechanisms such as firewalls and spam filtering have been developed. New security protocols that complement the existing networking protocols have also been introduced to solve this problem [4].

4.4.3 Security Model

Establishing security of data in such a system, which is largely content-oriented, is mandatory before it can be implemented on a large scale. This has been a relatively unexplored area as compared to the caching or mobility of generic CCNs and needs further investigation to determine a better security model.

4.5 Caching

A key feature of CCN is in-network caching of content [15]. CCN is based on expressing interest in content and serving the actual content in response to the interest. This is achieved by allocating a globally unique identifier to each content item in the network, which all network nodes, including routers, can interpret. Routers can therefore cache data packets and serve future interests from their own cache instead of forwarding them to the server. With the growth in the number and variety of content items, caching has become the key factor in the efficient utilization of CCNs. The caching problems in CCN include: (a) which content to cache, (b) when to cache it, (c) how to cache it (storing and eviction), and (d) on which network path to cache it. A further problem is cache allocation, i.e. how much cache space to allocate to each router. There are two approaches: (1) homogeneous and (2) heterogeneous [15]. The homogeneous approach allocates an equal cache size to every router, while the heterogeneous approach allocates more space to some routers and less to others according to some optimality criterion. Recent research on these caching problems is analyzed and compared in the following subsections.

4.5.1 Cache Allocation Approaches

The authors in [15] presented an algorithm to compute the optimal cache allocation among routers. The algorithm focuses on maximizing the aggregate benefit, where benefit means the reduction in hops taken by an interest packet from client to server. The interest packet is assumed to follow the shortest path from client to server. Therefore, a shortest path tree is formed, rooted at the server that holds the data desired by the clients. The shortest path tree structure allows the cache allocation problem to be divided into two subproblems: (1) maximizing the benefit of the data packet that has the highest probability of being requested in a shortest path tree and (2) maximizing the overall benefit of all data packets in the whole network, which is the sum of all maximizations from the previous step. The former problem is solved with the help of the k-means clustering algorithm, while a greedy approach is adopted for the latter. The proposed greedy algorithm produces a binary matrix with nodes as rows and content packets as columns, depicting the allocation of content to nodes. The complexity of the proposed optimization algorithm is $\max\left(O(sn^3),\, O(c_{total} \log N)\right)$, where $s$ is the number of servers, $n$ is the total number of nodes, $N$ is the number of contents, and $c_{total}$ is the total cache space of the network. Experimental evaluation showed that cache allocation should be heterogeneous and related to topology and content popularity.

In [16], the authors modeled the network topology as an arbitrary graph and computed several centrality metrics of the graph (betweenness, closeness, stress, graph, eccentricity, and degree centralities). The total cache capacity of the network is distributed heterogeneously among the nodes of the graph based on their centrality values. One homogeneous and 12 heterogeneous cache allocations were simulated using ccnSim, an open-source simulator. Simulation results showed that cache allocation based on degree centrality performs best among all centrality metrics. Moreover, heterogeneous cache allocation yielded only a small (2.5%) performance gain over homogeneous cache allocation.
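As a rough illustration of the two allocation styles compared in [16], the following sketch splits a fixed cache budget either equally or in proportion to node degree. The function names, the adjacency-list input, and the rule for handing out leftover slots are assumptions made for this example; they are not taken from [16] or from ccnSim.

```python
def allocate_homogeneous(adjacency, total_cache):
    """Give every node the same number of cache slots (any remainder is unused)."""
    share = total_cache // len(adjacency)
    return {node: share for node in adjacency}


def allocate_by_degree(adjacency, total_cache):
    """Split total_cache slots across nodes in proportion to node degree.

    Assumes an undirected graph with at least one edge, given as
    {node: [neighbours]}.
    """
    degrees = {node: len(neigh) for node, neigh in adjacency.items()}
    total_degree = sum(degrees.values())
    # Floor of the proportional share for each node.
    alloc = {n: total_cache * d // total_degree for n, d in degrees.items()}
    # Hypothetical tie-break: leftover slots go, one each, to the
    # highest-degree nodes (names break ties for determinism).
    leftover = total_cache - sum(alloc.values())
    ranked = sorted(degrees, key=lambda n: (-degrees[n], n))
    for n in ranked[:leftover]:
        alloc[n] += 1
    return alloc


# Star topology: hub "a" has degree 3, each leaf has degree 1.
star = {"a": ["b", "c", "d"], "b": ["a"], "c": ["a"], "d": ["a"]}
homogeneous = allocate_homogeneous(star, 12)
heterogeneous = allocate_by_degree(star, 10)
```

In this toy star topology the hub receives most of the budget under the degree-based rule, whereas the homogeneous rule ignores topology entirely.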

In [17], the cache allocation problem is described as part of a content-aware network planning problem in a budget-constrained scenario. Content-aware network planning derives an optimal strategy for migrating from an IP network to a content-aware network. The algorithm proposed for that purpose applies a linear programming approach. It finds the optimal placement of content on network routers based on minimization of flow and price per unit of traffic. The probability of caching each content item on each router is calculated. If the cost of adding storage on a router to cache a content item with a certain probability is less than the budget, then cache is allocated to that router. Content popularity distribution has a major impact on the cache allocation approach proposed in [17]. For highly skewed content popularity, about 87% less storage is needed than is deployed for uniformly distributed content popularity.

In [18], a centralized, global, cooperative cache placement strategy named generalized dominating set-based caching (GDSC) has been proposed for content-centric ad hoc networks. GDSC minimizes network power consumption by selecting proper cache nodes and also achieves a better trade-off between round-trip hops and the cost of caching. The selection of nodes depends on node degree and number of hops; a node with a high degree will cache the content. Changing the number of hops results in different nodes being selected for caching. The number of hops that yields the lowest power consumption for the whole network is selected.

The trade-off between in-network storage provisioning cost and network performance has been addressed in [19]. The authors first proposed a holistic model for intra-domain networks that captures the network performance of routing contents to clients and the network cost incurred by globally coordinating in-network storage capability. Furthermore, an optimal strategy for provisioning storage capability has been derived that optimizes overall network performance and cost. The authors also demonstrated that the optimal strategy can significantly improve both load reduction at origin servers and routing performance. Moreover, given an optimal coordination level, a routing-aware content placement algorithm has been designed in [19] that runs on a centralized server. The algorithm computes and assigns contents to every router for caching, which minimizes the overall routing cost in terms of transmission delay or hop count.

4.5.2 Data Allocation Approaches

Cache management and content request routing policies have been proposed in [20]. The cache management policy decides whether a piece of data that passes through a router must be cached. If so, the policy determines which portion of the data residing in the cache must be removed to make room for the data to be cached. In the caching and removal process, link congestion along the data retrieval path and data popularity are considered. A utility function is used to decide whether to cache a piece of content on a node. The utility function takes the minimum bandwidth of the content retrieval path and the content popularity as parameters. If a piece of content has a higher utility value than the lowest utility value of any content at a node, it is cached in place of the content with the lowest utility value. The main idea of the cache management policy is that caches retain content forwarded over congested links while evicting content forwarded over uncongested links. The content request routing policy utilizes a scoped-flooding protocol. Because multiple caches may hold a piece of content, the protocol locates the cached copy with the minimum download delay. The scope is the boundary at which the protocol stops searching for cached content and is expressed as the number of hops that a packet traverses. An issue with this caching policy is that content residing in a cache with a high utility value may, after some time, no longer be of interest to any node. Such content will never be removed from the cache because of its high utility value.
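The replacement rule described above can be sketched as follows. The exact utility function of [20] is not reproduced here; the ratio of popularity to minimum path bandwidth is a hypothetical stand-in chosen only because it grows with popularity and with congestion. The class and method names are likewise illustrative.

```python
def utility(popularity, min_path_bandwidth):
    """Hypothetical utility: popular content fetched over a congested
    (low-bandwidth) path scores higher."""
    return popularity / min_path_bandwidth


class UtilityCache:
    """Cache that replaces the lowest-utility item when a better one arrives."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.store = {}  # content name -> utility value

    def offer(self, name, popularity, min_path_bandwidth):
        """Return True if the content ends up cached on this node."""
        u = utility(popularity, min_path_bandwidth)
        if name in self.store or len(self.store) < self.capacity:
            self.store[name] = u
            return True
        # Cache is full: compare against the current lowest-utility item.
        victim = min(self.store, key=self.store.get)
        if u > self.store[victim]:
            del self.store[victim]
            self.store[name] = u
            return True
        return False


cache = UtilityCache(capacity=2)
results = [
    cache.offer("a", popularity=10, min_path_bandwidth=5.0),  # utility 2.0
    cache.offer("b", popularity=4, min_path_bandwidth=1.0),   # utility 4.0
    cache.offer("c", popularity=3, min_path_bandwidth=3.0),   # utility 1.0, rejected
    cache.offer("d", popularity=9, min_path_bandwidth=1.0),   # utility 9.0, evicts "a"
]
```

Note that nothing in this sketch ever lowers a stored utility, which is exactly the weakness mentioned above: an item with a high utility value stays cached even after interest in it has faded.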

The caching approach presented in [21] focuses on fair sharing of the available cache capacity on a path among various content flows. Several clients connected to a server with different hop counts may share a network path. A probability factor called ProbCache is proposed in [21] to resolve which content must be cached among all the contents of several users passing through a router. ProbCache is the product of two factors called TimesIn and CacheWeight. TimesIn determines the number of times a path can afford to cache a packet; it takes into consideration the remaining hop count to the client and the cache size of the path. CacheWeight resolves contention for caching among the contents of several users on a router and decides where to cache the number of copies indicated by TimesIn. The content with the highest CacheWeight on a router gets cached on that router. The content with the higher CacheWeight is that of the client nearest to the cache. CacheWeight is calculated as the ratio of the number of hops from cache to server to the number of hops from client to server. The ultimate objective of ProbCache is to utilize caches efficiently in order to reduce caching redundancy and, in turn, network traffic redundancy. In simulation, the caching approach achieved 20% fewer server hits and an 8% reduction in hop count. However, only a binary tree topology was considered in the evaluation.
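A minimal sketch of the product described above is given below. Only the CacheWeight ratio follows the text; TimesIn is treated as a precomputed input because its formula is not given here, and clamping the product to 1 is an assumption of this example. The function names and the injectable random source are illustrative.

```python
import random


def cache_weight(hops_cache_to_server, hops_client_to_server):
    """Ratio of hops (cache to server) over hops (client to server)."""
    return hops_cache_to_server / hops_client_to_server


def prob_cache(times_in, hops_cache_to_server, hops_client_to_server):
    """ProbCache as the product TimesIn x CacheWeight.

    times_in is supplied by the caller (its computation is outside this
    sketch); the result is clamped to 1.0 so it can be used as a probability.
    """
    p = times_in * cache_weight(hops_cache_to_server, hops_client_to_server)
    return min(1.0, p)


def should_cache(times_in, hops_cache_to_server, hops_client_to_server,
                 rng=random.random):
    """Draw a caching decision; rng can be replaced for reproducible runs."""
    return rng() < prob_cache(times_in, hops_cache_to_server,
                              hops_client_to_server)


# A router 3 hops from the server on a 4-hop client-server path.
p_near_client = prob_cache(0.5, 3, 4)
# A router 1 hop from the server on the same path.
p_near_server = prob_cache(0.5, 1, 4)
```

For the same TimesIn value, the router closer to the client obtains the larger caching probability, which is how the scheme favors copies near the requester.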

In [22], the authors proposed a latency-aware cache management mechanism for CCN. The mechanism is based on the principle that whenever content is retrieved, it is stored in the cache with a probability proportional to its recently observed retrieval latency. There is a trade-off between cache size and delivery time, as reducing the delivery time of every content item requires a cache large enough to store every content item at every node [22]. The proposed mechanism mitigates this trade-off by assigning caching priority to contents that are more distant. It is a fully distributed mechanism since the network caches do not exchange caching information. The two main benefits of the proposed mechanism are: (1) faster delivery time compared with latency-insensitive approaches, and (2) fast convergence to the optimal caching situation. However, dynamic network conditions have not been considered while calculating optimal data caching.

A betweenness centrality-based caching strategy has been presented in [23]. The authors argued that a ubiquitous caching strategy results in data redundancy. Furthermore, a random caching strategy is useful where a high number of overlapping paths exist in the network. The proposed caching strategy assumes that each node's betweenness centrality has already been calculated. A content request message records the centrality value of every node on its path to the server. The content message generated by the server in response to a request message is cached at the node with the highest centrality value on the path to the client. There is no collaboration between network caches; therefore, it is a distributed caching strategy. The proposed caching strategy also considers dynamic network environments where the topology tends to change with time. In dynamic networks, an ego network betweenness measure is calculated instead of betweenness centrality. An ego network consists of a node together with only its immediate neighbors.
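The request and response handling described above might be sketched as follows, assuming that centrality values are precomputed and available in a dictionary. The function names and the choice to carry only the running maximum in the request are assumptions of this example, not details confirmed by [23].

```python
def forward_request(path_to_server, centrality):
    """Walk the request from client to server, tracking the highest
    centrality value seen on the way (carried in the request)."""
    max_centrality = 0.0
    for node in path_to_server:
        max_centrality = max(max_centrality, centrality[node])
    return max_centrality


def deliver_content(path_to_client, centrality, max_centrality):
    """Walk the content back toward the client and return the nodes that
    cache it: those whose centrality matches the recorded maximum."""
    return [node for node in path_to_client
            if centrality[node] == max_centrality]


# Precomputed (hypothetical) centrality values for three routers.
centrality = {"r1": 0.1, "r2": 0.6, "r3": 0.3}
request_path = ["r1", "r2", "r3"]          # client side to server side
recorded_max = forward_request(request_path, centrality)
caching_nodes = deliver_content(list(reversed(request_path)),
                                centrality, recorded_max)
```

Each node decides locally by comparing its own value with the one carried in the packet, so no exchange between caches is needed.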

In [24], the authors propose an age-based cache replacement policy that specifies which content objects are to be cached in routers. An age is associated with each content object and determines the lifetime of a content copy in a router. The age of a duplicate content object is calculated when it is added to a cache. The age value depends on two factors: (1) the distance, in terms of node count, of the duplicate content object from the server, and (2) the popularity of the duplicate content object. The age is directly proportional to both. This scheme allows content chunks to be pushed to the network edge and releases redundant content object storage at intermediate nodes. Moreover, the scheme gives popular contents greater priority for being cached by routers. The duplicate content object is removed when its age expires. Routers cooperate to modify the age; hence it is a collaborative approach. The approach assumes that content popularity and network topology are already known.
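The proportionality described above can be illustrated with a simple product. The `base_age` constant, the function names, and the expiry check are hypothetical; [24] may define the age differently, and the cooperative age updates between routers are not modeled.

```python
def content_age(hops_from_server, popularity, base_age=1.0):
    """Lifetime of a cached copy, proportional to both its distance from
    the server and its popularity (base_age is a hypothetical scale)."""
    return base_age * hops_from_server * popularity


def is_expired(inserted_at, age, now):
    """A copy is removed once its age has elapsed since insertion."""
    return now - inserted_at >= age


# Same content (popularity 0.5) cached near the server and at the edge.
age_core = content_age(hops_from_server=1, popularity=0.5, base_age=10.0)
age_edge = content_age(hops_from_server=3, popularity=0.5, base_age=10.0)
```

Because the edge copy receives the longer lifetime, copies at intermediate nodes expire first and the content effectively migrates toward the network edge.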

It has been shown in [25] that jointly considering forwarding and meta-caching decisions yields performance gains in terms of the average distance traveled by content. The potential gains offered by smart forwarding policies such as ideal nearest replica routing (iNRR) are enabled by meta-caching policies such as leave a copy down (LCD). Otherwise, cache pollution dynamics completely offset these potential gains. The authors in [26] argued that iNRR with LCD achieves a significant performance improvement over iNRR with leave a copy everywhere (LCE), given a best-case CCN topology. LCD has been designed for hierarchical topologies, so in the general case (alternative meta-caching policy), least recently used (LRU) may be desirable.

In [26], the authors argued against the necessity of an indiscriminate in-path caching strategy in ICN and investigated the possibility of achieving higher performance gain by caching less. It was shown that a simple random caching strategy can outperform the current pervasive caching paradigm under certain network topology conditions. The authors proposed a caching policy based on betweenness centrality that caches content at the nodes with the highest probability of getting a cache hit along the content delivery path. The main goal of this policy is to ensure that content always spreads toward users, thereby reducing content access latency. The authors also proposed an approximation of the caching policy for scalable and distributed realization in dynamic network environments where the full topology is not known a priori. This approximation is based on the betweenness centrality of ego networks. The research in [26] is an extension of the work in [23], discussed previously. The authors found that the effectiveness of the betweenness policy depends on the betweenness distribution. It was concluded that topologies exhibiting a power law distribution, such as WWW networks, ensure the effectiveness of the betweenness policy.

In [27], the authors proposed a collaborative caching policy unlike any of the caching policies mentioned above. The nodes collaborate by serving each other's requests instead of independently determining whether a specific item should be cached or which item must be evicted to make room for a new item. In this way, the policy allows nodes to eliminate redundancy among their cached items. The policy is based on a simple rule: an originally cached item should either be kept in place or be found at one of the neighbor nodes. Thus, the performance of the distributed caching policy is preserved while the number of available free caching slots increases.

In [28], a low-complexity content placement scheme combined with dynamic request routing in a CCN infrastructure has been proposed. The objective of the scheme is to reduce caching redundancy and make more efficient use of available cache resources. That way, the scheme increases overall cache utilization and potentially increases user-perceived quality. The authors in [28] designed a self-assembly caching (SAC) scheme of collaborative content placement along a path of caches, based on an analysis of the feasible cache size distribution. Hot content is pulled by the SAC scheme toward the edge of the network, while cold content is pushed back to the core of the network, based on popularity. Therefore, low caching redundancy and low download delay are achieved, because SAC automatically distributes content to the proper cache location according to its popularity. A trail of content cache locations is created at some special routers along the content delivery path. The trail is updated during content chunk caching or eviction, enabling subsequent request searches to be directed dynamically and precisely. The trail information makes the cached chunk detectable locally and so improves in-network caching utilization.

In [29], a cross-layer cooperative caching strategy for CCN is proposed. Three parameters, namely user preference, betweenness centrality, and cache replacement rate, are introduced as application layer, network layer, and physical layer metrics, respectively. A caching probability function is derived based on Grey relational analysis (GRA) among all nodes along the content delivery path. Multiple requests for the same content are aggregated in the pending interest table (PIT), which keeps track of interest packets forwarded upstream so that returned data packets can follow the path back to the content requester. Nodes that aggregate requests are termed aggregate nodes. An aggregate node computes the caching probabilities and adds them to the PIT. When a data packet arrives at the aggregate node, its caching probability field is updated with the caching probability stored in the PIT.

A novel caching method to deliver content over CCN has been proposed in [30]. The authors analyze the distribution of cached content and of the related interests by considering the mechanisms of the content store and the pending interest table of CCNs. Based on this analysis, a caching algorithm has been proposed to use the capacity of the content store and pending interest table efficiently. Caching is done according to a cost model derived in the paper, which is based on the total delay to retrieve a piece of content. The content store calculates the cost of removing a cached content item in order to cache a newly requested piece of content. If the cost is below a threshold, the new content is cached. The proposed scheme is a distributed caching mechanism and does not account for dynamic network conditions.

In [31], the authors studied user-behavior-driven CCN caching and jointly investigated video popularity and video drop ratio. It is claimed that this is the first paper to take the video drop ratio into consideration in CCN caching policy design. The video drop ratio reflects the fact that a video consumer does not always watch a video to the end. An intra-domain CCN caching system composed of servers/repositories, routers, and users has been considered. The content store (CS) of each router acts as a buffer memory. The routers are divided into a hierarchy of levels, and videos are ranked according to popularity. The main idea of the proposed scheme is that the ith chunk is cached in the jth router if, and only if, the chunks ranked higher than the ith chunk can be stored in routers at lower levels than the jth router, and those lower-level routers have no room left to cache the ith chunk as well. The proposed algorithm achieves optimal values in terms of average transmission hops needed and server hit rate, given a cascade network topology.
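Under one reading of the placement rule above, chunks are simply packed into router levels in rank order, lowest level first. The sketch below follows that reading; it takes the popularity ranking as given (the role of the drop ratio in producing that ranking is not modeled), and the function name, the per-level capacities, and the use of `None` for chunks left at the server are assumptions of this example.

```python
def place_chunks(ranked_chunks, level_capacities):
    """Assign chunks to router levels in rank order.

    ranked_chunks: chunk names, most popular first.
    level_capacities: cache slots per level, level 1 (the lowest level,
    assumed here to be nearest the users) first.
    Returns {chunk: level}, with None for chunks that fit nowhere and
    are therefore served by the origin server only.
    """
    placement = {}
    index = 0
    for level, capacity in enumerate(level_capacities, start=1):
        # Fill this level with the next-highest-ranked chunks.
        for chunk in ranked_chunks[index:index + capacity]:
            placement[chunk] = level
        index += capacity
    for chunk in ranked_chunks[index:]:
        placement[chunk] = None
    return placement


placement = place_chunks(["c1", "c2", "c3", "c4"], level_capacities=[2, 1])
```

Here the third-ranked chunk lands at level 2 precisely because the two higher-ranked chunks fit at level 1 and leave no room for it there.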

A caching policy similar to the one in [31] has been proposed in [32]; the similarity lies in the classification of routers into levels. The proposed strategy maximizes cache utilization and improves content diversity in the network by means of a caching probability based on matching content popularity to node level. Three parameters, namely hop count to the requester, betweenness centrality, and cache space replacement rate, are considered when evaluating the caching property of nodes along the delivery path. The proposed strategy classifies nodes into levels on the basis of their caching property. Nodes with a high caching property are defined as first-level nodes, while less capable nodes are termed second-level nodes. Moreover, in this approach, the popularity of content is matched to the affiliated node using probabilistic methods. Content is cached in nodes in order of popularity, with the most popular content placed in the first level, less popular content in the second level, and so on. This decreases the replication of less popular content and reduces access delay. Compared with the leave copy everywhere (LCE) policy, the proposed policy improved the cache hit ratio by up to 23%, reduced content access delay by up to 13%, and accommodated up to 14% more contents.

The research work in [33] emphasizes the need for an algorithm that selects a subset of the available nodes so that new incoming requests can be served for a longer time. The authors proposed a cost-effective caching (CEC) algorithm that selects an eligible node based on the node's remaining capacity to cache more content. In CEC, the node with the most space left in its content store is designated to cache the content. The term cost in CEC refers to the computation carried out on each node in the network while sending interest packets and receiving data packets. CEC tries to minimize this cost. The motivation behind CEC is that the cost increases if new content replaces already cached content. The cost is minimized by caching content on some other node that has sufficient free space to store it without replacement. In this way, CEC maximizes cache hits and minimizes both cache misses and content replacements.
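The node selection step of CEC, as described above, can be sketched in a few lines. The function name and the dictionary of free slots are illustrative assumptions; the cost accounting of [33] is not modeled.

```python
def select_cache_node(path, free_slots):
    """Pick the node on the delivery path with the most free cache space.

    Returns None when no node has free space, i.e. when caching would
    force a replacement somewhere.
    """
    best = max(path, key=lambda node: free_slots[node])
    return best if free_slots[best] > 0 else None


chosen = select_cache_node(["r1", "r2", "r3"], {"r1": 0, "r2": 5, "r3": 2})
none_free = select_cache_node(["r1", "r2"], {"r1": 0, "r2": 0})
```

Returning `None` rather than a full node keeps the "no replacement" intent explicit; a caller could then fall back to an ordinary replacement policy.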

Table 4.1 Caching schemes comparison.

| Reference | Topology used | Caching objective | Cache allocation type (in case of cache allocation) | Cache allocation criteria (in case of cache allocation) | Data allocation criteria (in case of data allocation) | Performance metric type | Performance metrics used |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Wang et al. [15] | Barabási-Albert, Watts-Strogatz | Cache allocation | Heterogeneous and homogeneous | Topology and interest dependent (heterogeneous in BA while homogeneous in WS) | — | Network-centric | Remaining traffic |
| Rossi and Rossini [16] | Arbitrary topologies | Cache allocation | Heterogeneous | Nodes centrality dependent | — | Network-centric and user-centric | Cache hit probability and path stretch |
| Badov et al. [20] | Grid, scale-free, Rocketfuel, hybrid | Data allocation | — | — | Links congestion and interest dependent | User-centric | Content download delay |
| Psaras et al. [21] | Binary tree | Data allocation | — | — | Hop count from client to cache | Network-centric | Server hits and hop reduction |
| Mangili et al. [17] | Netrail, Abilene, Claranet, Airtel, Geant | Data and cache allocation | Heterogeneous | Data caching probability and budget | Traffic cost and flow minimization | Network and user-centric | Budget and traffic flow |
| Carofiglio et al. [22] | Line, binary tree | Data allocation | — | — | Content popularity and latency | Network-centric | Data retrieval latency |
| Chai et al. [23] | k-ary tree, scale-free | Data allocation | — | — | Betweenness centrality of node | Network-centric | Hop reduction ratio, server hits reduction ratio |
| Ming et al. [24] | CERNET2 | Data allocation | — | — | Content popularity | User-centric | Network delay |
| Rossini and Rossi [25] | 10 × 10 grid, 6-level binary tree | Data allocation | — | — | Ubiquitous | Network-centric | Content traveling average distance |
| Chai et al. [26] | k-ary tree, scale-free | Data allocation | — | — | Betweenness centrality of node | Network and user-centric | Latency and congestion |
| Wang et al. [27] | AS 1755, AS 3967, Brite1, Brite2 | Data allocation | — | — | Benefit of eviction of a content | Network-centric | Content redundancy and cache hit rate |
| Li et al. [28] | 5-level binary tree | Data allocation | — | — | Content popularity | Network and user-centric | Content redundancy and content download delay |
| Wu et al. [29] | Scale-free | Data allocation | — | — | User preference, betweenness centrality, cache replacement rate | Network-centric | Cache hit ratio, average hops |
| Zhou et al. [30] | Unspecified | Data allocation | — | — | Network delay cost | User-centric | Network delay |
| Liu et al. [31] | Cascade topology | Data allocation | — | — | Video popularity and drop ratio | Network and user-centric | Average transmission hops and server hit rate |
| Li et al. [32] | Scale-free | Data allocation | — | — | Probability based on nodes level | Network and user-centric | Cache hit ratio, content number, and content access delay |
| Mishra and Dave [33] | Unspecified | Data allocation | — | — | Cache free space | Network and user-centric | Cache hit, cache miss, and total number of replacements |
| Zhou et al. [18] | Arbitrary | Cache allocation | Heterogeneous | Power consumption | — | Network and user-centric | Average round trip hops, power consumption, number of caching nodes |
| Li et al. [19] | Abilene, CERNET, GEANT, US-A | Cache allocation | Heterogeneous | Network performance and cache provisioning cost | — | Network and user-centric | Hop count, latency |

Due to the dynamic change in bandwidth in VANETs, dynamic adaptive streaming (DAS) technology is used to deliver video content at different bit rates according to the available bandwidth. DAS requires several versions of the same video content, resulting in reduced cache utilization. Hence, a cache management scheme for adaptive scalable video streaming in vehicular CCNs has been proposed. The scheme aims to provide video-streaming services with a high quality of experience (QoE) by caching content of the appropriate bit rate near consumers. A video content item is divided into layers, where each layer is the video content at a specific bit rate. Chunks of layers are pushed to neighbors hop by hop through broadcast, according to their available bandwidth.

Table 4.1 compares the CCN caching schemes mentioned so far on various critical parameters. Table 4.2 shows the other objectives (if any), namely routing and forwarding, that the aforementioned schemes couple with caching.

Table 4.2 Objectives-based comparison.

| References | Caching | Routing | Forwarding |
| --- | --- | --- | --- |
| Wang et al. [15] | ✓ | ✗ | |
| Rossi and Rossini [16] | ✓ | ✗ | ✗ |
| Badov et al. [20] | ✓ | ✓ | ✗ |
| Psaras et al. [21] | ✓ | ✗ | ✗ |
| Mangili et al. [17] | ✓ | ✗ | ✗ |
| Li et al. [19] | ✓ | ✓ | ✗ |
| Carofiglio et al. [22] | ✓ | ✗ | ✗ |
| Chai et al. [23] | ✓ | ✗ | ✗ |
| Ming et al. [24] | ✓ | ✓ | ✗ |
| Rossini and Rossi [25] | ✓ | ✗ | ✓ |
| Chai et al. [26] | ✓ | ✗ | ✗ |
| Wang et al. [27] | ✓ | ✗ | ✗ |
| Li et al. [28] | ✓ | ✓ | ✗ |
| Wu et al. [29] | ✓ | ✗ | ✗ |
| Zhou et al. [30] | ✓ | ✗ | ✗ |
| Liu et al. [31] | ✓ | ✗ | ✗ |
| Li et al. [32] | ✓ | ✗ | ✗ |
| Mishra and Dave [33] | ✓ | ✗ | ✗ |
| Zhou et al. [18] | ✓ | ✗ | ✗ |

4.6 Conclusions

This chapter has explored the features of ICN, looking at how the architecture could support mobility, caching, and security elements. Different management techniques for each of these challenges have been discussed and analyzed in detail. Due to the caching of contents at the local level, replication is challenging for CCN architectures. There are two caching issues in CCN: (1) data allocation and (2) cache allocation. The data allocation issue has been addressed by simpler techniques, such as centrality-based ones, or by complex probabilistic techniques. Moreover, some of the studied approaches couple routing and forwarding techniques with caching schemes to enhance network performance. The cache allocation problem is further classified into homogeneous and heterogeneous cache allocation to the routers. Most researchers prefer heterogeneous allocation, while some argue that heterogeneous policies offer very little gain over homogeneous allocation. Based on the analysis of caching schemes, we can conclude that many parameters, such as network topology, affect caching scheme performance. Every scheme achieves some gains based on its prior assumptions about those parameters. Hybrid caching schemes could be designed, but at the cost of added complexity on network routers. Of the three aspects, security is the one that most needs to be explored for the future of CCN.

References

- 1 C. Dannewitz, J. Golic, B. Ohlman, and B. Ahlgren, Secure naming for a network of information, INFOCOM IEEE Conference on Computer Communications Workshops, 2010.

- 2 Kim, D., Kim, J., Kim, Y. et al. (2012). Mobility support in content-centric networks. In: Proceedings of the Second Edition of the ICN Workshop on Information-centric Networking. ACM.

- 3 Lee, J., Cho, S., and Kim, D. (2012). Device mobility management in content-centric networking. IEEE Communications Magazine 50 (12): 28–34.

- 4 Xylomenos, G., Ververidis, C.N., and Siris, V.A. (2014). A survey of information-centric networking research. IEEE Communications Surveys & Tutorials 16 (2): 1024–1049.

- 5 Bilal, K., Khalid, O., Erbad, E., and Khan, S.U. (2018). Potentials, trends, and prospects in edge technologies: fog, cloudlets, mobile edge, and micro data centers. Computer Networks 130: 94–120.

- 6 Kim, D., Kim, J., Kim, Y. et al. (2015). End-to-end mobility support in content-centric networks. International Journal of Communication Systems 28 (6): 1151–1167.

- 7 Kurihara, J., Uzun, E., and Wood, C.A. (2015). An encryption-based access control framework for content-centric networking. In: IFIP Networking Conference (IFIP Networking). IEEE.

- 8 Ellison, C. and Schneier, B. (2000). Ten risks of PKI: what you're not being told about public key infrastructure. Computer Security Journal 16 (1).

- 9 A. Ghodsi, T. Koponen, J. Rajahalme et al., Naming in content-oriented architectures, ACM Workshop on Information-Centric Networking (ICN), 2011.

- 10 A. Ghodsi, S. Shenker, T. Koponen et al., Information-centric networking: seeing the forest for the trees, ACM Workshop on Hot Topics in Networks (HotNets), 2011.

- 11 A. Ghodsi, Naming in content-oriented architectures, ACM SIGCOMM Workshop on Information Centric Networking, 2011.

- 12 W. Wong and P. Nikander, Secure naming in information-centric networks, Proceedings of the Re-Architecting the Internet Workshop, Philadelphia, Pennsylvania, November 30–31, 2010.

- 13 Hamdane, B., Serhrouchni, A., Fadlallah, A., and El Fatmi, S.G. (2012). Named-data security scheme for named data networking. In: Network of the Future (NOF). IEEE.

- 14 B. Hamdane, R. Boussada, M. E. Elhdhili, and S. G. El Fatmi, Towards a secure access to content in named data networking, IEEE 26th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises (WETICE), Poznan, Poland, 2017.

- 15 Y. Wang, Z. Li, G. Tyson, S. Uhlig, and G. Xie, “Optimal cache allocation for content-centric networking,” 21st IEEE International Conference on Network Protocols (ICNP), October 2013.

- 16 D. Rossi and G. Rossini, On sizing CCN content stores by exploiting topological information, IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), March 2012.

- 17 M. Mangili, F. Martignon, A. Capone, and F. Malucelli, “Content-aware planning models for information-centric networking,” Global Telecommunications (GLOBECOM), IEEE Conference and Exhibition, 2014, DOI: 10.1109/GLOCOM.2014.7037078.

- 18 L. Zhou, T. Zhang, X. Xu et al., Generalized dominating set-based cooperative caching for content-centric ad hoc networks, IEEE/CIC ICCC Symposium on Next Generation Networking, 2015.

- 19 Li, Y., Xie, H., Wen, Y. et al. (2015). How much to coordinate? IEEE Transactions on Network and Service Management 12 (3): 420–434.

- 20 M. Badov, A. Seetharam, J. Kurose, and A. Firoiu, “Congestion-aware caching and search in information-centric networks,” ACM-ICN '14: Proceedings of the 1st ACM Conference on Information-Centric Networking, September 2014, pp. 37–46, https://doi.org/10.1145/2660129.2660145.

- 21 I. Psaras, W. K. Chai, and G. Pavlou, “Probabilistic In-Network Caching for Information-Centric Networks,” In Proceedings of ACM Sigcomm Conference, pp. 50–60, 2012.

- 22 G. Carofiglio, L. Mekinda, and L. Muscariello, “LAC: Introducing latency-aware caching in Information-Centric Networks,” 2015 IEEE 40th Conference on Local Computer Networks (LCN), DOI: 10.1109/LCN.2015.7366343, 2015.

- 23 Chai, W.K., He, D., Psaras, I., and Pavlou, G. (2012). Cache “less for more” in information-centric networks. In: Part I, LNCS 7289 (ed. R. Bestak), 27–40. IFIP International Federation for Information Processing.

- 24 Z. Ming, M. Xu, and Dan Wang, “Age-based cooperative caching in information-centric networking,” 23rd International Conference on Computer Communication and Networks (ICCCN), September 2014.

- 25 G. Rossini and D. Rossi, Coupling caching and forwarding: benefits, analysis, and implementation, ICN'14, September 2014.

- 26 G. Rossini and D. Rossi, Proceedings of the 1st ACM Conference on Information-Centric Networking, September 2014, pp. 127–136, https://doi.org/10.1145/2660129.2660153.

- 27 J. M. Wang, J. Zhang, and B. Bensaou, Self assembly caching with dynamic request routing for information-centric networking, ICN'13, August 2013, pp. 61–66.

- 28 Li, Y. Xu, T. Lin, G. Zhang, Y. Liu, and S. Ci, “Self assembly caching with dynamic request routing for Information-Centric Networking,” 2013 IEEE Global Communications Conference (GLOBECOM), Atlanta, GA, USA, DOI: 10.1109/GLOCOM.2013.6831394, (2013).

- 29 Wu, L., Zhang, T., Xu, X. et al. (2015). Grey relational analysis based cross-layer caching for content-centric networking. In: Symposium on Next Generation Networking IEEE/CIC. ICCC.

- 30 Zhou, S., Dongfeng, F., and Bo, H. (2015). Caching algorithm with a novel cost model to deliver content and its interest over content centric networks. China Communications 12 (7): 23–30.

- 31 Z. Liu, Y. Ji, X. Jiang, and Y. Tanaka, “User-behavior Driven Video Caching in Content Centric Network,” ICN'16 September 26–28, 2016, Kyoto, Japan.

- 32 Y. Li, T. Zhang, X. Xu et al., Content popularity and node level matched–based probability caching for content-centric networks, IEEE/CIC International Conference on Communications in China (CCC), July 2016.

- 33 G. P. Mishra and M. Dave, “Cost Effective Caching in Content-Centric Networking,” 2015 1st International Conference on Next Generation Computing Technologies (NGCT), DOI: 10.1109/NGCT.2015.7375111, (2015).