CHAPTER 4

How Nature Does It

The proliferation of microprocessors and the growth of distributed communications networks hold mysteries as deep as the origins of life [and] the source of our own intelligence. . . .

—GEORGE DYSON

You arrive in Utah and set out on a hike in early October. You fill your backpack with some fruits and veggies, a bottle of water, and a snack bar or two. You put on your hiking boots and set off into a glorious stand of trees that are glowing in the still morning light. A few hours later, having worked up a good sweat, you shed your jacket and stop by a stream that bubbles through the rocks below. You lean against one of the trees and just listen to the sound of the forest. It is fall, and the leaves are turning. As you look around, you notice something odd. It seems as if the entire forest has gotten the idea to change colors all at the same time. As far as you can see, all the leaves are a particular shade of yellow. You are surrounded by Nature with a capital N. In fact, you are in the midst of one of the world’s most massive living organisms.

Say hello to Pando.1 It is a male quaking aspen and it has been your companion since you set out this morning. It has been thriving here for over 80,000 years, just waiting for you to stop by. Pando is an example of what Nature can do when she feels like showing off. This stand of trees is called a monoclonal colony. Pando propagates using something called a rhizome, which is an underground horizontal stem of a plant. Beneath the surface of the forest floor is an extensive network of these rootstocks spreading out for miles and miles. What look like individual trees are shoots sent up by Pando’s rhizal network.

It is believed that in times of great fires, when other types of trees were destroyed, Pando’s rhizal network survived under the soil. Destroying a quaking aspen turns out to be very difficult. Experiments that employed a rototiller dragged three feet beneath the soil to chop up the roots failed to kill an aspen clone.

Quaking aspens aren’t the only plants to benefit from rhizal networks beneath the soil. It turns out that an estimated 80 percent of land plant species employ a related mechanism known as a mycorrhizal network. The word mycorrhizal comes from the Greek words for fungus and roots. These networks transfer nutrients from the soil into nearby trees that would suffer if left to their own devices. Their fungal threads also burrow into the earth to reach trapped water and free up nitrogen.

THE INTERNET OF PLANTS

Mycorrhizal networks have been shown to move water to areas of drought, confer resistance against toxic surroundings or disease, and even support interplant communication. The fungi often benefit by getting access to carbohydrates, while the plants are supplied with a greater store of water and minerals such as phosphorus that the fungi free up from the soil. Carbon has been shown to migrate, via mycorrhizal networks, from paper birch to Douglas fir trees. There is evidence that inoculating degraded soil with a mixture of mycorrhizal fungi leads to more robust long-term growth in self-sustaining ecosystems. Finally, scientists have discovered that some species of fungus in certain mycorrhizal networks act as hubs interconnecting various species. While we often hear of parasitism (good for one, bad for the other) or commensalism (good for one, does no harm to the other) in symbiotic relationships, these networks are classified as examples of mutualism (good for one, good for the other, too), where each member in the community benefits from the relationship.

These patterns represent one of Nature’s grand experiments in building a massively connected, diverse, sustainable, resilient, and mutually beneficial social network.

NATURE HAS BEEN THERE BEFORE

When contemplating the trillion-node network, it’s easy to become overwhelmed by the sheer scale of the undertaking. We haven’t solved the problem of Trillions, but Nature has gotten a head start. The human body has many trillions of cells. And yet we can expect to live for the better part of a century without a catastrophic system failure. Unlike the current crop of computing devices, humans don’t have to be rebooted every few weeks. Nature is the most advanced and resilient system for managing information that we can find.

If you want to accomplish something particularly difficult, a good first step is to find people who have already succeeded at something analogous, and to study how they did it. We are not claiming that Nature has all the answers to the design of our computational future, but we are claiming that Nature is a very good place to begin looking for clues.

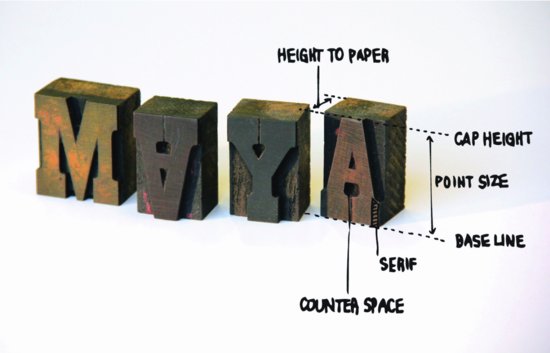

Humans are really just beginners when it comes to understanding information systems. It was only in 1948 that Claude Shannon first provided a rigorous definition of the term information. It was around 1440 that Gutenberg first provided us with the technology for reproducing an unbounded spectrum of information using a limited set of parts: movable type (Figure 4.1). Counting even primitive writing systems, humans have been encoding, storing, displaying, and manipulating information for, at most, tens of thousands of years.

Figure 4.1 Movable type

But Nature has been running mature, ultracomplex, resilient information systems for billions of years.2 And, as far as we can tell, all of this magnificent work has been based on a single, incredibly simple but vastly expressive information architecture.

In this chapter we will explore design patterns found in Nature that we believe are necessary to the building of a resilient, successful, and sustainable trillion-node world.

Atoms Get Identity for Free

In Chapter 2, we introduced the idea that every digital object we create should be imbued with a unique identity. While we’d like to claim credit for this idea, the Universe beat us to it. Nature offers an example of this sort of identity in a principle of physics called Pauli’s Exclusion Principle.

Down near the foundations of the Universe, we find protons, neutrons, and electrons combining to form atoms. In 1925, Wolfgang Pauli formulated the principle that no two fermions (protons, neutrons, and electrons are examples of the class of particles called fermions) may occupy the same quantum state simultaneously. While it is not our intention to pursue a diversion into quantum mechanics, suffice it to say that one of the consequences of this principle is that no two atoms can occupy the same place at the same time. As a result, each particle has a unique path through space-time. In effect, that unique path is the particle’s identity.

This turns out to be very convenient if you are trying to build things, or refer to things, or express relations among things. For instance, if you and I are discussing a can of cola that is on the table in front of us, we generally have confidence that we are talking about the same can of cola. Two different containers can’t be sitting there in the same place and time. How each of us experiences the object, however, may be very different. I may be sitting farther from the table than you. From my distant perspective the soft drink may look like a little rectangular blob of color. From your perspective, sitting right at the table, it may look like a cylinder with a logo on it and some text. But we both know that we are talking about the same thing. Everything made out of atoms has a unique identity, and this “contract” with the Universe is rigorously enforced. No two atoms can exist in the same place at the same time, period.

Thus, in the world of atoms we get identity for free. In the world of bits, however, things are different. Consider an “information object” like, say, Moby-Dick. What exactly are we talking about when we refer to Moby-Dick? Do we mean the story encoded in the book in my hand, my copy? Or do we mean the (not necessarily identical) one stored over there in your library, your copy? Or the copy from the 1960s that had those amazing illustrations? Or do we mean all copies of Moby-Dick everywhere? Or, do we mean the “idea” that there is such a book? Or, do we mean the words themselves?

These are serious questions if we are to have a trillion devices capturing and sending out uncounted numbers of messages about every aspect of our world and our lives. If we take Trillions Mountain seriously as a place where information will not be stored “in” the computers, but rather where computers, people, and devices will be embedded “in” the information, we need to have a plan for how we are going to consistently identify those free-flowing information containers, for determining whether we have the current version (or even what counts as “current”), for resolving conflicts, for maintaining security and privacy, and for fostering collaboration.
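To make the distinction concrete, here is a minimal Python sketch of an information container that keeps its identity separate from its content. Everything in it is our own illustrative assumption, not a design proposed elsewhere in this book: the hypothetical `InfoObject` class, a random UUID standing in for the identity an atom gets for free, a SHA-256 digest standing in for "the words themselves," and a simple version counter for deciding what counts as "current."

```python
import hashlib
import uuid
from dataclasses import dataclass, field


@dataclass
class InfoObject:
    """Hypothetical container: identity is assigned once; content may change."""
    content: str
    # A random UUID plays the role of the unique "path through space-time" atoms get for free.
    identity: uuid.UUID = field(default_factory=uuid.uuid4)
    version: int = 1

    def digest(self) -> str:
        # A content hash answers "same words?", a different question from "same object?".
        return hashlib.sha256(self.content.encode("utf-8")).hexdigest()

    def revise(self, new_content: str) -> None:
        # Editing keeps the identity and bumps the version, so "current" is well defined.
        self.content = new_content
        self.version += 1


my_copy = InfoObject("Call me Ishmael.")
your_copy = InfoObject("Call me Ishmael.")

print(my_copy.identity == your_copy.identity)  # False: two distinct copies
print(my_copy.digest() == your_copy.digest())  # True: identical words

your_copy.revise("Call me Ishmael. Some years ago...")
print(your_copy.version)                       # 2
print(my_copy.digest() == your_copy.digest())  # False: the copies have diverged
```

The sketch does not decide which notion deserves the name "Moby-Dick"; it only keeps "this copy," "these words," and "this revision" from being conflated.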

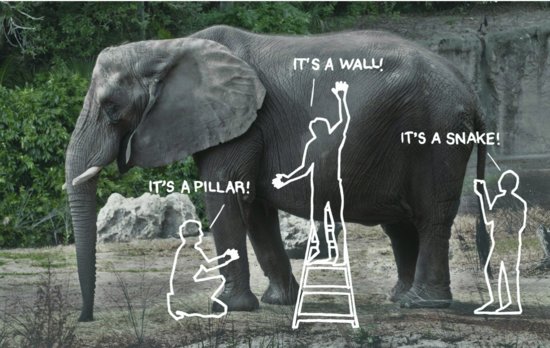

Consider the familiar fable of the blind men and the elephant (Figure 4.2). A group of blind men are asked to describe something sitting in the king’s court. The first one grabs the tail and shouts, “Ah, it’s a snake!” The second holds one of the legs and says, “No, it’s not. It’s a pillar.” “What?” exclaims the third, pushing on the elephant’s belly. “This is surely a wall before us.”

Figure 4.2 Exploring the elephant

While the story is well-worn, it highlights deep questions. How do you collaborate when you’re not even sure you’re talking about the same thing? How do you find important patterns in a sea of information when a global, top-down view is impossible? Many arguments that people have turn out to be confusions about identity. They think they are talking about the same thing. But they’re not.

The Architecture of Chemistry

Pauli’s Exclusion Principle underpins another pattern found in Nature that has widespread consequences for pervasive computing. Those uniquely identified atoms form the basis for a higher-level architectural framework called chemistry. If you think of an architecture as the blueprint for how to define all the constants and variables in a system, you can find no better example of the power of architecture than the Periodic Table of the Elements. Dmitri Mendeleev is credited with drawing one of the first versions of this “map” of chemistry’s territory in 1869.

He inferred that the elements fall into a repeating pattern and that, by arranging the pattern as a table, he could predict the existence of undiscovered elements, sometimes decades before they were found in Nature. Unlike the charts of his peers, his left some places blank in anticipation of future discoveries: when the next known element did not fit a square, rather than forcing it in, he left the square empty. He went further, predicting the weights and properties of these missing elements and giving them placeholder names. At times he also set aside the most obvious way to order the atoms (by weight) and instead grouped them by similar properties. As scientists learned more about atomic structure, they found that Mendeleev had, in effect, ordered his elements by atomic number (the number of protons in an atom’s nucleus), a concept that didn’t even exist when he did his work. One of the powerful things about architectural frameworks like this is that they confer predictive power and help us future-proof our endeavors.

For example, in 1871 he predicted the existence of a then-unknown element, which he called “eka-silicium,” and said it would have a high melting point, be gray in color, and have an atomic mass of 72 and a density of 5.5 grams per cubic centimeter. When the element, now called germanium, was isolated in 1886, it proved to have an atomic mass of 72.61, a high melting point, a gray color, and a density of 5.32.
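One simple way to see how a table can "predict" is to interpolate a gap from its neighbors. The Python sketch below averages the four elements surrounding the gap under silicon (silicon above, tin below, gallium and arsenic to either side). Two loud assumptions: the numbers are approximate modern reference values, not the data Mendeleev worked from, and plain four-neighbor averaging is our illustration, not a claim about his exact procedure. It lands near 72.9 for atomic mass and about 5.31 for density.

```python
# Approximate modern values (an assumption; NOT the data Mendeleev had).
# Each neighbor of the gap below silicon: (atomic mass, density in g/cm^3).
NEIGHBORS = {
    "Si (above)": (28.09, 2.33),
    "Sn (below)": (118.71, 7.27),
    "Ga (left)": (69.72, 5.91),
    "As (right)": (74.92, 5.73),
}


def interpolate(neighbors: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Estimate a missing element's properties as the mean of its table neighbors."""
    masses = [mass for mass, _ in neighbors.values()]
    densities = [density for _, density in neighbors.values()]
    return sum(masses) / len(masses), sum(densities) / len(densities)


pred_mass, pred_density = interpolate(NEIGHBORS)
print(f"interpolated: mass ~ {pred_mass:.1f}, density ~ {pred_density:.2f}")
print("measured germanium: mass 72.61, density 5.32")
```

The point is not the arithmetic but the architecture: only because the table fixes each element's neighbors does "the average of the neighbors" mean anything at all.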

The periodic table gave us a way to think about the basic building blocks of chemistry. More importantly it enabled us to understand the physical world and use that knowledge to figure out how to combine atoms in new ways to discover, understand, and build all sorts of amazing new composite materials. In essence it gave us generativity, which is an example of what we call beautiful complexity.

Life’s Currency

Of course, the real payoff of all this architecture is this: Implicit in the structure of the periodic table is the potential (actually, the inevitability) for the formation of molecules. Were it not for molecules, we would live in a world built exclusively out of 92 discrete elemental chemicals, a situation reminiscent of those 51 noninteracting application-level protocols we find in today’s Internet.

Instead, the real world contains an unbounded number of chemically distinct molecules, setting the stage for the emergence of one epochal macromolecule called DNA. DNA is found inside just about every cell in your body.3 The information about what makes you you is largely stored in that DNA. The building blocks of life are encoded in this molecule in the form of genes on chromosomes. It is Life’s way not only of encoding critical information, but also of copying it, moving it around, and experimenting with new ways to use that information. A chromosome is a “container” for genetic code. In fact, it is not just the container for what makes you and me what we are as humans; it is the standard information container for all life on Earth.

That container has some remarkable properties. Its contents are not immutable: information can be changed, added, and removed, a process called mutation. DNA can also be copied through a process that nearly all cells have the machinery to perform. Consider: An information container that is standardized across literally all life forms; that can be mutated and extended; and that can be copied and stored by simple (for a cell) mechanisms. Sound familiar?

The evolution of a universal container for storing information—one that could be readily replicated and extended—was a very big deal. In Nature, this drove vast experimentation within huge spaces of possibilities. Nature did get locked-in, eventually, on certain powerful patterns, such as bilateral symmetry, but only after life went through the Cambrian Explosion where all kinds of alternatives were explored.

Consider the parallels between genetics and a liquid economic system. Two members of a species combine their genes and create an entirely new unit of “value” in the form of a unique offspring. DNA is Nature’s information container, but it is also Life’s idea of liquid currency. We don’t call it the Gene Pool for nothing. This is information liquidity writ large. Nature’s “blueprints” are stored in lots of these little, recombinable boxes of information, replicated in unimaginable numbers, recomposable in unpredictable permutations, flowing freely across space, time, and generational boundaries. Moreover, even the inevitable errors inherent in this process prove to be essential: errors in copying DNA are the primary engine of evolution.
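The recombine-then-copy-with-errors cycle can be caricatured in a few lines of Python. This is a toy under stated assumptions: equal-length strings over the alphabet ACGT, a single crossover point, and a hypothetical per-base error rate. Real meiosis, crossover, and DNA repair are far more intricate.

```python
import random

BASES = "ACGT"


def recombine(mother: str, father: str, rng: random.Random) -> str:
    """Single-point crossover: a prefix from one parent, the remainder from the other."""
    assert len(mother) == len(father) >= 2, "toy model assumes equal-length sequences"
    cut = rng.randrange(1, len(mother))  # at least one base from each parent
    return mother[:cut] + father[cut:]


def copy_with_errors(seq: str, error_rate: float, rng: random.Random) -> str:
    """Copy a sequence; at each position, with probability error_rate,
    write a randomly chosen base instead (possibly the same one)."""
    return "".join(
        rng.choice(BASES) if rng.random() < error_rate else base
        for base in seq
    )


rng = random.Random(42)  # fixed seed so the run is repeatable
mother, father = "AAAAAAAAAA", "CCCCCCCCCC"
child = copy_with_errors(recombine(mother, father, rng), error_rate=0.1, rng=rng)
print(child)  # a new combination, possibly with a base neither parent had
```

Setting `error_rate` to zero yields a child built only from its parents' material; any nonzero rate occasionally introduces something genuinely new, which is the role the text assigns to copying errors.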

Today we are reaping the benefits of this liquidity using a technique called transgenesis. Scientists now routinely combine genetic materials from various species to create new variants, such as goats that produce human drugs in their milk. Recently, students at MIT created transgenic E. coli (a bacterium found in our digestive system) that carried genes from plants. When the E. coli was growing, it smelled like mint, and when it was mature it smelled like bananas. Vaccines for hepatitis B have been built this way, and a number of trials are in progress looking at ways to use these techniques to cure debilitating diseases. If every kind of organism stored its information in some unique format, we wouldn’t be here, and none of those advances in science would be possible.

Resilience

As we build the trillion-node network, resilience will be ever more important. A number of patterns can be found in Nature to handle the challenges of resilience. We will discuss two of the most valuable ones.

First of all, Nature employs massive redundancy as a universal principle. A newt can regrow an amputated toe because the structural pattern for newt toes resides in the DNA of each of its cells.

With this information (and a bit of biological processing magic that humans lack) a lost toe can be regenerated. Memory is cheap in Nature, so the structural patterns that describe what we are can be stored in every cell. Each of our cells stores the information equivalent of 200 volumes of the proverbial Manhattan phone book.
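A back-of-the-envelope calculation gives a feel for how much each cell carries. The sketch assumes the commonly cited figure of roughly 3.1 billion base pairs for one copy of the human genome, two copies in most body cells, and a raw encoding of 2 bits per base (four possible bases). It ignores compression, epigenetic marks, and everything else a cell stores, so treat the result as an order of magnitude only.

```python
BITS_PER_BASE = 2  # four possible bases (A, C, G, T): log2(4) = 2 bits


def genome_bytes(base_pairs: int) -> int:
    """Raw storage for a DNA sequence at 2 bits per base, with no compression."""
    return base_pairs * BITS_PER_BASE // 8


haploid = 3_100_000_000  # approximate length of one copy of the human genome
diploid = 2 * haploid    # most body cells carry two copies

print(genome_bytes(haploid) / 1e6, "MB per copy")       # 775.0 MB per copy
print(genome_bytes(diploid) / 1e9, "GB per typical cell")  # 1.55 GB per typical cell
```

On these assumptions, every one of your trillions of cells holds on the order of a gigabyte and a half of raw sequence: redundancy on a scale no data center attempts.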

Secondly, Nature uses peer-to-peer (P2P) networking. We’ve already discussed the prevalence of mycorrhizal networks in plant life. Obviously, there is no one central “server” in these networks. Imagine how brittle and short-lived life on Earth would have been if there were single points of failure in such massive networked systems.

When cells communicate, they mostly communicate with their peers. When they replicate, they carry a copy of all their information with them. That information turns out to be very handy when things go wrong. Consider what happens when you cut your skin. Almost immediately a cascade of events begins at the site of the wound. First, blood platelets aggregate there to form a clot and help set off inflammation. As healing progresses, new blood vessels grow into place, collagen forms a scaffolding, and finally new skin cells proliferate and mend the wound. The skin cells on your toe don’t talk to the skin cells on your ear.4 The cells in your body don’t ask some central server for permission to repair, replicate, process, or exchange information.

That isn’t to say that at higher levels of complexity there aren’t also more centralized control mechanisms. Beyond cells and organs, there are components that do overall coordination. These include the nervous system for fast-paced responses and the endocrine system for more long-term modulation of activities. Even those more centralized functions benefit from this pattern of massive redundancy. There are many cases of patients with some form of brain damage going through rehabilitation and regaining some level of function via another part of the brain.

Before the advent of the Internet, society had already learned how to use peer-to-peer networks. As we have already mentioned, good old-fashioned libraries are a nice example. The information stored in libraries is massively replicated in the form of books. Literary citations don’t point to individual copies—any copy of Moby-Dick will resolve a link request. Copies are stored in a vast number of independent places. And any library can request a copy of some missing volume through the interlibrary loan network, and some other library will almost certainly be able to serve it up. Books have been a common currency of information for many hundreds of years, and they, like DNA, have been promiscuously replicated and distributed throughout the world. We couldn’t get rid of Moby-Dick if we tried.
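Read as a protocol, the library system resolves a citation against whichever copy happens to be at hand, with no central server involved. Below is a minimal Python sketch under our own assumptions: a citation is the SHA-256 digest of the text, and a library asks only the peers it is directly connected to. `Library`, `citation_for`, and `resolve` are hypothetical names for illustration.

```python
import hashlib
from typing import Optional


def citation_for(text: str) -> str:
    """Hypothetical citation scheme: name the content itself by its SHA-256 digest."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class Library:
    """A peer that holds copies and can borrow from its neighbors; no central catalog."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.shelf: dict[str, str] = {}   # citation -> text
        self.peers: list["Library"] = []  # directly connected libraries

    def acquire(self, text: str) -> None:
        self.shelf[citation_for(text)] = text

    def resolve(self, citation: str) -> Optional[str]:
        """Return any copy matching the citation: local shelf first, then one hop of peers."""
        if citation in self.shelf:
            return self.shelf[citation]
        for peer in self.peers:  # the "interlibrary loan"
            text = peer.shelf.get(citation)
            # Verify the borrowed copy really is what was cited.
            if text is not None and citation_for(text) == citation:
                self.shelf[citation] = text  # keep a copy: replication spreads
                return text
        return None


boston, denver, provo = Library("Boston"), Library("Denver"), Library("Provo")
provo.peers = [denver, boston]
boston.acquire("Call me Ishmael.")
ref = citation_for("Call me Ishmael.")

print(provo.resolve(ref))  # Call me Ishmael.  (Denver lacks it; Boston lends it)
print(ref in provo.shelf)  # True: Provo now holds its own copy
```

A content hash is stricter than a literary citation: two editions that differ by a single comma get different citations. Real systems that resolve by content have to decide how much difference still counts as "the same book."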

Biological systems and world-spanning ecologies have much to offer the student of Trillions. Our efforts at biomimicry for information systems have only really just begun. We’d like to highlight four patterns that recur in different guises throughout the remainder of this book, all found in Nature, all valuable to our future.

THE QUALITIES OF BEAUTIFUL COMPLEXITY

Earlier we referred to beautiful complexity—a made-up term to distinguish good complexity, the kind of complexity we want to foster, the complexity that provides power, efficiency, and adaptability without standing in the way of usability or comprehensibility. We can recognize this beautiful complexity because it is built on a few powerful structural (or “architectural”) principles. These include hierarchy, modularity, redundancy, and generativity.

Hierarchy

We are inspired by the lessons of atoms and chemistry, but the devil is in the details. The power lies in how atoms are put together to make molecules, how those molecules make cells, how cells make organs, how organs make systems, how those systems make you, and how you and I and others make us. Each level can be described and modeled without reference to its use in any higher level. This is what we mean by the term layered semantics. The levels below don’t need to “know” how they will be used by the layers above or what those higher layers do.

Hierarchical composition is the most fundamental quality of beautiful complexity and the one we mention most often and with the most variations. In a hierarchical design, parts are assembled into components, which become the parts of larger wholes, and so on. It is a revelation to discover the extent to which hierarchical organization pervades the natural world and complex systems in general, but not in itself a particularly useful revelation. The natural advantages do not result from simply dividing things into parts. The boundaries between parts and wholes must be arranged in particular ways or the hierarchy doesn’t achieve beautiful complexity.

Consider a bucket of fried chicken. Choosing pieces from the bucket—looking for your favored white meat—casually chewing on the bones in a moment of reflection—you become aware that the “chicken” represented by this bucket of pieces makes no obvious sense. Instead of wings, legs, breasts, and so on, the pieces look as if some insensible hand had randomly cleaved the bird into roughly equal-sized pieces. And it is difficult to understand how these pieces could be reassembled into a whole chicken (Figure 4.3).

Figure 4.3 Randomized chicken

The joints between bones are the boundaries that define the architecture of a chicken—in life and on the butcher block. Similarly, in the design of complex systems, one of the important, and often difficult, tasks is to identify the best boundaries between the layers of the hierarchy—to find the natural joints. In naturally evolving systems, the boundaries emerge over a long time in response to functional needs, such as the requirements of replication or growth. Military units and ranks evolved in response to the pressures that war places on command. The assembly we call an automobile engine (which is simultaneously both a component and a complex system) evolved, of course, in response to the needs of its mechanical function, but also to the constraints of material properties, manufacturing limitations, and the geometry of assembling and servicing all those many, many parts.

The important lesson from these systems is that, over time, hierarchical layers and their boundaries become well established when they support the system’s survival—its creation, its modification or adaptation, its performance. Whether we are talking about squads, battalions, and armies—or crankcases, valve trains, and fuel systems—the stability of the boundaries is important to the evolution of better subsystems. If every time we wanted to develop a better fuel delivery system (e.g., fuel injector versus carburetor) we had to redesign all the way down to the level of pistons and bearing journals, evolutionary progress would be stymied.

Simon’s tale suggests that hierarchical composition, and the systematic decomposition that it permits, is important not only for understanding, describing, and working with complex systems but also, in a fundamental way, for their very viability. Through hierarchical means, complex systems can evolve, part by part and level by level, to adapt to changing conditions and shifting needs. And in designing to this advantage it is important to consider not just the hierarchy itself but also the number and size of the layers, and to get the boundaries between layers in the right places.

As a cautionary note, Simon also highlighted something interesting that he found in exploring complex systems. Not only were they often hierarchical in nature but they tended to be “nearly decomposable.” That word, nearly, is an important one. He specifically didn’t say “completely” decomposable. There are times when no matter how hard you try to turn things into little black boxes, they show some effects from other components in the system. Not everything is hierarchical, and you can’t assume that systems can be completely decomposed without losing some critical essence. Effects can leak through hierarchical containment. For instance, if you’re building a physical system, you can make a box, but the box isn’t going to hide the mass of the stuff inside, or prevent it from radiating or absorbing heat. These unmodeled effects often give rise to unexpected, hard-to-predict, emergent properties. In the world of software systems, there are many examples of unmodeled effects like CPU usage, memory requirements, consumption of stack space, and shared mutable state.

Software designers often build centralized services to mitigate these side effects. Such services allow them to “cheat” their way around the inconvenient aspects of strict modularity. Yet if we are to build the trillion-node network, the demands of arbitrary scalability will require more sophisticated solutions. Unlike Mendeleev’s peers, who just put things together without leaving places for future discoveries, we have to plan for unbounded complexity.

Hierarchy gives us a very powerful tool for understanding and building complex systems, but emergent properties and unintended consequences remain a challenge for the design of the trillion-node network.
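Both halves of Simon's observation, clean composition and leaky boxes, fit in a small Python sketch of a part-whole hierarchy. The `Part` class and the masses are hypothetical. Each level is assembled without knowing what will contain it, and a box can hide the names of its contents from the outside, yet its mass is necessarily the sum of everything inside.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """A node in a part-whole hierarchy: a leaf part or a box of sub-parts."""
    name: str
    own_mass: float = 0.0  # kilograms for the part itself (e.g., the housing)
    children: list["Part"] = field(default_factory=list)

    def interface(self) -> str:
        # Encapsulation: outsiders see only the name, never the parts inside.
        return self.name

    def mass(self) -> float:
        # ...but mass "leaks" through the box: the whole sums everything it contains.
        return self.own_mass + sum(child.mass() for child in self.children)


engine = Part("engine", own_mass=40.0, children=[
    Part("crankcase", own_mass=30.0),
    Part("valve train", own_mass=12.5),
    Part("fuel system", own_mass=7.5),
])
car = Part("car", own_mass=900.0, children=[engine])

print(car.interface())  # car
print(car.mass())       # 990.0
```

Swapping the fuel system for a lighter one changes nothing in the engine's interface, but it still changes the car's mass: the system is decomposable, but only nearly.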

Modularity

Modularity is sometimes confused with hierarchy—they’re not the same thing. Modules may be arranged in hierarchies, but not necessarily. And hierarchic layers may be modular, but again, not necessarily.

Generally, a module is a standardized part or unit that can be used to construct a more complex structure. But in the field of design, the word also carries an implication that a unit can be added, deleted, or swapped out with minimal impact on the overall structure or assembly. That is, the designer need not modify the underlying structure in order to add a module or as a consequence of removing a module. Thus, you can plug a coffeemaker into your kitchen outlet, or unplug it, or exchange it for a toaster without making any modifications to either appliance or to your kitchen wiring. This example is so simple and common that we don’t think of it as an example of modularity, but it does capture the feature of modularity that makes it significant to achieving the beautiful complexity that is our goal.

Note that for all this local modularity, you wouldn’t be able to take your toaster from your kitchen in the United States to a kitchen in Sweden and plug it in so easily; you’d have to find an adapter. Adapters are, of course, possible as a means of correcting for failures in modularity. But we never want to resort to them. They ruin the beauty of the complexity. Eventually, it becomes a question of how far to push the boundaries of local modularity. Context matters in this regard. This question is one that will become increasingly important with the spread of pervasive computing. What makes modules so significant to beautiful complexity is not just the standardization of independent units but, in particular, the standardization of the interfaces where one module meets another or adds on to the underlying structure.
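The kitchen outlet makes a convenient toy model of interface standardization. In the Python sketch below, the hypothetical `Appliance` protocol is the standardized interface; anything that satisfies it can be plugged in, unplugged, or swapped without touching the outlet. We model only voltage (120 V in the United States, 230 V in Sweden) and ignore plug shapes. The `VoltageAdapter` shows how an adapter patches over a mismatch rather than removing it.

```python
from typing import Protocol


class Appliance(Protocol):
    """The standardized interface: declare a voltage and be able to run."""
    volts: int

    def run(self) -> str: ...


class CoffeeMaker:
    volts = 120

    def run(self) -> str:
        return "brewing"


class Toaster:
    volts = 120

    def run(self) -> str:
        return "toasting"


class Outlet:
    def __init__(self, volts: int) -> None:
        self.volts = volts

    def plug_in(self, appliance: Appliance) -> str:
        # The outlet checks only the interface, never what kind of appliance it is.
        if appliance.volts != self.volts:
            raise ValueError(
                f"{type(appliance).__name__} expects {appliance.volts} V, "
                f"outlet supplies {self.volts} V"
            )
        return appliance.run()


class VoltageAdapter:
    """Wraps an appliance so it fits a foreign outlet: a patch over a modularity failure."""

    def __init__(self, inner: Appliance, volts: int) -> None:
        self.inner = inner
        self.volts = volts

    def run(self) -> str:
        return self.inner.run()


us_kitchen, swedish_kitchen = Outlet(120), Outlet(230)
print(us_kitchen.plug_in(CoffeeMaker()))  # brewing
print(us_kitchen.plug_in(Toaster()))      # toasting: swapped with no rewiring
print(swedish_kitchen.plug_in(VoltageAdapter(Toaster(), volts=230)))  # toasting
```

Neither `Outlet` nor either appliance had to change to make the swap; the only extra piece of design, the adapter, exists purely because two interfaces disagree.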

Recall that in Chapter 2 we referred to Legos as an everyday example of a system with fungibility. Get out your Legos again and look at the interface aspects of the toy. It’s all about the bumps. It works because of the particular size, shape, and spacing of the bumps on the tops of the blocks, and the shape of the hollow on the bottoms of the blocks. Pretty simple. Works great with little brick-like shapes. But can it do more than that, more than what the originators of Lego had in mind?

A look in any toy store confirms that the answer is yes. Beyond the general-purpose Lego blocks, which maintain the basic integrity of the system, kits are available, including specialized parts of limited (not general) utility—like rolling wheels with tires, spacecraft fins, miniature doors and windows, and people. It all works because the designers of the system have been absolutely scrupulous about the details of the interface—where part meets part, or module meets module. Examine one of the people closely: Their hands and feet are shaped to grip the bumps. The top of the body ends in another standard bump that fits into a variety of heads. And all the heads are the same shape, so that they can be printed with a variety of faces. Finally, all the heads are topped with a bump that fits a variety of hats. A system with few distinctly shaped parts enables a vast variety of distinct creations.5

Redundancy

The core meaning of redundancy has to do with the inclusion, in an object or system, of information or components that are not strictly necessary to its meaning or function. Complex hierarchical systems generally have a great deal of redundancy.6 Yet redundancy is often dismissed, superficially, as a symptom of inefficiency. But in a real, entropic world, redundancy is the safety net of life. It keeps things going when some of the details fail or are ignored. In engineering, redundancy provides backup capability in case components fail or conditions unexpectedly exceed environmental assumptions. In languages, redundancy is the extra information that protects meaning when part of an expression is vague, corrupted, or simply missing.
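The simplest engineered version of "extra information that protects meaning" is a repetition code: send every symbol several times and let the receiver take a majority vote. The Python sketch below triples each character. It is a deliberately crude illustration; practical systems use far more efficient error-correcting codes, but the principle of trading extra symbols for resilience is the same.

```python
from collections import Counter


def encode(message: str, copies: int = 3) -> str:
    """Add redundancy: repeat every character `copies` times."""
    return "".join(ch * copies for ch in message)


def decode(received: str, copies: int = 3) -> str:
    """Recover each character by majority vote over its group of copies."""
    groups = [received[i:i + copies] for i in range(0, len(received), copies)]
    return "".join(Counter(group).most_common(1)[0][0] for group in groups)


sent = encode("bar")   # "bbbaaarrr"
noisy = "bxbaaqrrr"    # two characters corrupted in transit, in different groups
print(decode(noisy))   # bar
```

With three copies, the code survives one corrupted character per group; it costs three times the bandwidth, which is precisely the "inefficiency" that buys the resilience.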

Human communication via natural language uses far more words than are strictly necessary to convey the meaning of a sentence or utterance. We repeat ourselves, but we learn through repeated hearing, and by repeating we can carry on a semblance of a conversation even in a noisy bar. In the printed word, our letterforms themselves are full of redundancy. A common demonstration in basic typography courses shows students the importance of letter shapes and word shape by covering the bottom half of printed words. In most cases, especially with lowercase letterforms, the text is still nearly perfectly readable7 (Figure 4.4).

Figure 4.4 What is this word? How much of the word is actually there?

Symmetry is a kind of redundancy that deserves special attention because of its conceptual origins in the physical and perceptual dimensions of our world. Symmetry provides additional information about the form, structure, or action of some physical object, whether natural or artificial. If you have seen one side of a car, you can be pretty sure what the other side is going to look like; all that’s needed is your memory of the side you see, plus a spatial “mirror” transformation that our brains perform quite easily, as long as the image isn’t too complicated. We aren’t generally conscious of performing such symmetry operations in everyday life, but they are going on at a deeper level, and we notice them in a jarring way when our mental predictions are not met, as in a car that is different on each side or in the Batman character Two-Face.

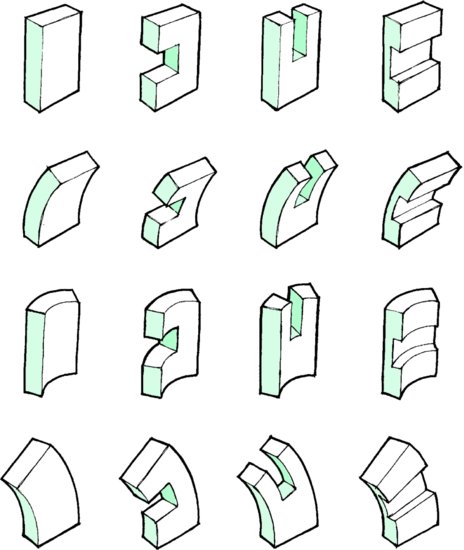

Though the bilateral or mirror sort of symmetry is the one that first comes to mind, there are other symmetries that are common to physical/perceptual experience. The terminology varies, but three other fundamental symmetries are generally known as rotational, translational, and dilatational symmetry. Sometimes repetition is included as a fifth type (Figure 4.5).

Figure 4.5 A Studio exercise about the effect of symmetry on perceived complexity

At their roots, all these symmetries do the same thing. They reduce the information that we need to process in order to recognize, describe, or construct a complex physical object. But because we are discussing the design of a world where physical objects and digital objects exist in concert (information architectures, connected experiences, relationships among people and information devices), we need to be aware of symmetries and other kinds of redundancies in these circumstances as well.
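To put a number on "reducing the information," here is a minimal Python sketch that assumes a figure exactly mirror-symmetric about a center column. We store only the left half of each row (including the center) and let a reflection supply the rest, much as a viewer does with the unseen side of a car. The tiny figure is made up for illustration.

```python
def mirror(half_rows: list[str]) -> list[str]:
    """Rebuild a left-right symmetric figure from its left halves.

    Each stored row ends with the center column, so the reflection
    appends the row reversed without repeating that column.
    """
    return [row + row[-2::-1] for row in half_rows]


half = ["  *", " **", "***", "  *"]  # the left half of a small tree, center included
full = mirror(half)
print("\n".join(full))

stored = sum(len(row) for row in half)  # characters we had to keep
shown = sum(len(row) for row in full)   # characters the reader sees
print(f"stored {stored} characters to describe {shown}")  # stored 12 characters to describe 20
```

The reflection rule itself is the redundancy: once both parties share it, roughly half the description can be left out and recovered on demand.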

We are already familiar with a sort of intermodal redundancy in user interfaces that pair icons with descriptive words. It’s repetition with a difference, where one mode supports the other. In a world of ubiquitous information devices, how will we accomplish similar enhancements of usability—perhaps a word coupled to a gesture, or a vibration coupled to a feeling of heat, or a texture coupled to an odor?

In Chapter 3 we discussed the current instantiation of “the cloud” and how it often excludes the sorts of redundancy found in Nature. We noted that this lack of redundancy could be not only damaging but catastrophic. Redundancy may seem inefficient at some level, but it turns out to be a critical optimization if your goal is long-term resilience. In the pervasive computing community, one sometimes encounters the phrase “creative waste.” We are not fond of the term: redundancy is not waste at all.

In the world of Trillions Mountain, redundancy will become an even more important path to beautiful complexity than it is today. In a world that is international and intercultural; in which our possessions are informational as well as physical; and where we interact with one another across widening gaps of space, time, and intention; designers of connected systems will need, more than ever, to pay conscious attention to this aspect of beautiful complexity.

Generativity

Generativity is qualitatively different from the other aspects of beautiful complexity. Hierarchy, modularity, and redundancy are concepts that describe and qualify the states of things. By contrast, generativity is fundamentally about processes. Of course, we can speak of a process as being hierarchical, but it is more likely that we are actually talking about a structure (or organization, or its state) that gives rise to the process we describe as hierarchical.

The notion of generativity entered the design profession by way of linguistics. Generative grammar, developed and promoted in the 1950s by Noam Chomsky and others, describes language in terms of a set of rules, called transformations, which operate within a hierarchy of cognitive levels. These rules generate an unbounded number of possible sentences from a limited number of semantic primitives, word parts, and possible sounds.

A prior approach, known as phrase structure grammar, tried to account for human language by proposing what amounted to sentence templates, with the various parts of speech at their appointed places, waiting for the speaker to fill them in with actual words. It was a view of language that owed more to the methods by which scholars analyzed speech than to an understanding of how ordinary people speak.8 Phrase structure grammar was ultimately inadequate because it seemed to require far too much preplanning on the part of the speaker.

The importance of generative grammar was that it accounted, in a reasonable way, for our human ability to concoct an apparently endless stream of complex sentences out of a very limited range of semantic, syntactic, and phonological means. That is, through generativity, it doesn’t take much to say a lot.

Soon after the notion of generative grammar was in the wind, generative systems were proposed in other realms of creative production as well.

Architectural theorists proposed generative “form grammars,” characterizing practicing architects as generating building forms through a set of hierarchical rules. While a working architect may not admit to consciously invoking generative rules, the notion of an architectural form-generation process remains viable. After all, no speaker would claim to invoke generative rules while speaking either, but the evidence that it’s happening, unconsciously, is too strong to be disregarded.

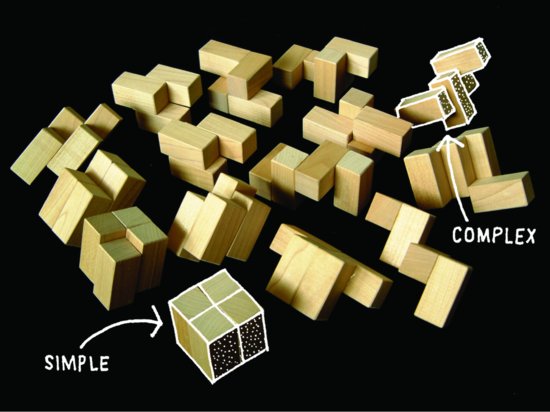

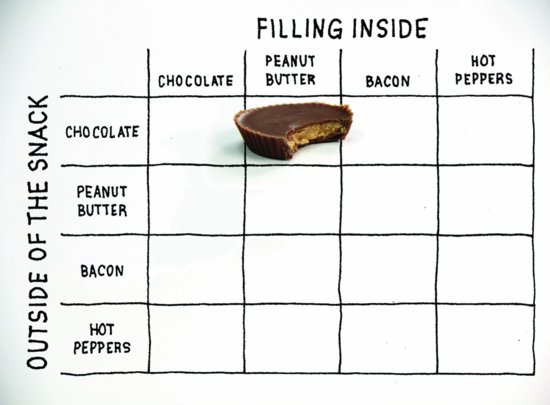

A simple generative system, much simpler than the grammars proposed for natural language, can be illustrated using a set of possible combinations expressed in a matrix. Imagine a matrix for generating snacks: four rows by four columns (Figure 4.6). The rows represent the “outside of the snack” and are labeled “chocolate,” “peanut butter,” “bacon,” and “hot peppers.” The columns are labeled the same way but represent the “filling inside.”

Figure 4.6 A matrix for comparing snack foods

Now, if the rule is “choose the intersection of one row and one column, and describe the snack at that intersection,” you find you can generate 16 different snacks (4 × 4). Granted, some may not be very interesting, like chocolate/chocolate (unless you’re a chocoholic), and some may not be very appealing. But you do generate a Reese’s Cup, which is good but already exists. You also generate peanut butter outside with a chocolate filling inside: a sort of Inverse Reese’s Cup, which doesn’t exist and might be pretty good if you could find some way to structurally stabilize peanut butter. Fortunes have been made from these kinds of experiments in generativity. The concept of generativity is very broad, found even in the elementary processes of visual design (Figure 4.7).

Figure 4.7 Generative Sketching: Combinations and permutations are used to generate a large number of unique shapes from a few simple rules.
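The snack matrix is small enough to write out directly. The following Python sketch, offered as an illustration rather than anything from the original exercise, treats the single rule “pick one row and one column” as a Cartesian product of the four flavor labels. The function name `generate_snacks` and its `layers` parameter are hypothetical; `layers` in particular is an extension beyond the two-layer matrix of Figure 4.6, included only to show how one more choice multiplies the output rather than adding to it.

```python
from itertools import product

# The four labels shared by the rows (outside) and the columns (filling).
FLAVORS = ["chocolate", "peanut butter", "bacon", "hot peppers"]


def generate_snacks(flavors: list[str], layers: int = 2) -> list[tuple[str, ...]]:
    """Apply the generative rule: choose one flavor for each layer.

    Hypothetical helper. With layers=2 this is the 4 x 4 matrix of
    (outside, filling) pairs; larger values are an illustrative extension.
    """
    return list(product(flavors, repeat=layers))


if __name__ == "__main__":
    snacks = generate_snacks(FLAVORS)
    print(len(snacks))  # 16 = 4 ** 2
    reeses = ("chocolate", "peanut butter")          # already exists
    inverse_reeses = ("peanut butter", "chocolate")  # does not (yet)
    print(reeses in snacks, inverse_reeses in snacks)  # True True
    print(len(generate_snacks(FLAVORS, layers=3)))     # 64 = 4 ** 3
```

Four labels and one rule yield sixteen candidates, and a hypothetical third layer would yield sixty-four. The rule only enumerates possibilities; judging which ones are worth making, such as the Inverse Reese’s Cup, is still the designer’s job.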

Generativity makes it onto the list of qualities for beautiful complexity specifically because of the needs we see arising in the land of Trillions. Not only will there be trillions of nodes—trillions of devices—but similarly large numbers of opportunities for satisfying human needs and desires—the needs and desires of billions of individual and subtly different people who may each claim their fair—and individualized—share of the digital resources of the planet.

The well-worn practice of designing just states (products, devices, messages) will not be enough to satisfy such demand. We will also have to get good at designing processes by which people author and tune the digital environment in which they live. Imagine a world in which purchasing a product, an app, or a service becomes more like the process by which you buy a home or gather your circle of friends around you. It is not just about price or features; it has a lot to do with personal identity, lifestyle preferences, comfort, and familiarity. Maybe it sounds complex, but if it works, it will be rich and beautiful. There is no better route to beautiful complexity than to seek generativity in the things we design.

AT THE INTERSECTION OF PEOPLE AND INFORMATION

The reader may note that to this point we haven’t mentioned many of the actual physical things that humankind has copied from Nature. We haven’t mentioned the rise of biomimicry that has driven new inventions in artificial limbs, airfoils, adhesives, or living quarters. We didn’t talk about geodesic domes or seashells.

While we think these efforts are all well and good, our focus is not on the design of things per se, but on discovering design patterns and processes that can be applied at the intersection of information systems and people. As Tolkien taught us, we are not merely copiers but subcreators, and so we need to understand the rules that give rise to the systems we create.

In the next chapter, design itself will take center stage as we explore ways in which we can use the building blocks of the natural world along with practices honed over decades—in some cases centuries—of deliberate creativity to drive thoughtful systematic change.

1 Pando is Latin for “to spread out, stretch out, extend.”

2 The fossil record for living organisms has recently been pushed back to almost 3.5 billion years ago.

3 Mature human red blood cells do not have a cell nucleus or DNA.

4 In the popular conception of the Internet of Things, many people believe that everything will talk to everything else. If Nature is our guide, we would beg to disagree.

5 And the story doesn’t end there. A product line called Lego Technic extends the architecture to support active electrical and mechanical components, including bumps containing electrical contacts.

6 This is often where we encounter the nearly decomposable quality of complex systems. But while the “nearly” can frustrate decomposability, by relying on redundancy across layer boundaries we can arrange to separate the layers without losing information. Looked at another way, this redundancy places a limit on the semantic isolation in semantic layering.

7 Interestingly, the trick doesn’t work nearly as well if you cover the top half of the words. This demonstrates that most of the information is located in the top halves of the letterform shapes.

8 Those “sentence diagrams” they made you do in fourth grade were essentially exercises in phrase structure grammar.