Damage Repair

You’ve smoothed your scenes, and your dialogue tracks, although still unmixed, sound quieter and more pleasant. Your control over perspective has added new depth to the scenes, and it’s possible to hear nuance in your massaged tracks. What you probably don’t notice are the endless noises populating the recordings. Your brain wants to hear content, so it filters out most noises and leaves you with the relatively “low-baud” information: the words. So what’s the problem? After all, you understand everything.

You can hold a conversation at a loud dinner party or even in a dance club. Under such taxing conditions you lose nuance and perhaps a bit of information, but you usually figure out the missing words thanks to context. Still, you’ll inevitably find such a conversation exhausting and at some point give up and zone out. It’s just too much work. So, too, with motion picture dialogue. Any dialogue that’s “polluted” with even small noises wears down the viewer and diminishes the movie experience. Background noises lessen dialogue’s punch and give a false impression of greater overall room tone level. And since most background noises afflicting dialogue tracks come not from something within the story but rather from sounds on the set or in the environment, the viewer is constantly reminded, “This is a movie!” The magic is broken, even if most viewers are unaware of it.

What Are Noises?

The world is a noisy place. Even the most vigilant location mixer can’t do much about airplanes, traffic, and other exterior sounds beyond begging for additional takes.

Usually, you solve such steady-state noise problems (severe traffic, air conditioning and compressors, birds) and momentary background noises (car-bys, horns, neighbors yelling) by replacing words, sentences, or entire scenes with alternate takes or wild sound or looping. There are also many borderline cases, where noise is a nuisance but doesn’t interfere with the dialogue. A scene shot next to a busy street, for example, can be justifiably noisy. The fact that you have to labor to hear a few words might help the viewer to sympathize with the character, who also must strain to understand and be understood. Remember, though, that a noisy dialogue track saddles you with a noisy scene. The scene will never be quieter than the dialogue premix, and you won’t be able to isolate the dialogue.

Whereas a reasonably noisy dialogue track might be acceptable in such a logically loud setting, even the slightest amount of inappropriate background noise can kill a quiet, intimate scene. A scene where a couple sits in their living room in the middle of the night discussing their troubled relationship loses its intimacy and edge if we hear traffic, airplanes, the neighbor’s TV, or a crewmember walking around the set. It has to begin with dead quiet dialogue. The supervising sound editor may choose to color the scene with quiet spot effects, an interesting and mood-evoking background, or music. But the dialogue editor must be able to deliver a track with no disturbances to create a world of two people in a very quiet room—alone with all their problems.

When a scene is downright noisy, you’ll probably have to call the entire scene for ADR, forcing the supervising sound editor to create a sound space from scratch. On the other hand, there are steady-state noises that the rerecording mixer can clean in the mix. In either case, you should consult the rerecording mixer or the supervising sound editor.

There are, however, many more tiny noises that you won’t repair by ADR line replacement or through equalization or electronic noise control. Transient noises, the not-so-silent warriors in the conspiracy to screw up your tracks, have several sources for which you must be on the lookout. For example:

Actors

• Unusual or inappropriate vocal sounds: unsavory, off-camera, or loud lip smacks
• Dentures, bridges, and other dental work (always a delicate subject)
• Stomach gurgling (you can always tell when a take or ADR line was recorded just before lunch)
• Footsteps that interfere with the dialogue or introduce an unwanted accent
• Body mic clothing noise or other rustle interference
• Other clothing interference (jangling earrings, clothing “swooshes”)
• Unusual diction that results in clicks or pops
• Brief but loud clicks caused by electrical disturbances or static discharge
• Clicks caused by bad mic cable connections (usually louder and longer than static discharge clicks)
• Radio mic breakup (usually a long-duration problem that most often requires replacement of the shot)

Crew

• Dolly pings
• Camera pedestal hydraulic/pneumatics
• Crew footsteps
• Crew talking(!)
• Continuity stopwatch(!)
• Grip tool rattles

Another common source of damaged dialogue is sounds that don’t come from the set, but we’ll deal with them later.

Finding Noises

Before you can fix the noises in your dialogue, you have to find them. In most cases, eliminating noises isn’t difficult, but noticing them is daunting. It sounds easy, but it’s a skill that separates experienced dialogue editors from novices. Even if you pride yourself on your superhuman listening skills and canine hearing, you have to learn how to listen for the annoying ticks, pops, and screeches that besiege every dialogue track. They’re in there, coyly taunting you to find them.

First, you must discover as many noises as you have time, patience, and budget for. Then, before blindly eliminating every one, you have to ask yourself, “What is this noise? Does it help or hurt the story?” Period. It’s actually as simple as that; you just have to stay awake and aware.

Focusing on Noises

Every step in the process of filmmaking is sorcery. It’s all about getting the viewer to believe that this story really happened as shown in the final print. As a film professional you like to think that you’re immune to this seduction. You know it’s not real. Yet when you screen the offline picture cut or watch a scene over and over, the most obnoxious noises might slip right past you. Just like the average movie fan, you’re sucked in.

How do you learn to spot noises before someone else does? After all, it’s pretty embarrassing to screen your seemingly perfect tracks—that you lovingly massaged for weeks—only to have a friend of the assistant picture editor comment, “What about those dolly sounds?” Sure enough, three scenes with flagrant dolly noises. No professional heard them because they were captivated by the story, but someone who walked in off the street noticed immediately. It’s your job to hear and correct these sounds, so you must find ways not to fall victim to the story’s siren song.

Evaluating Noises

Question every noise you hear. Don’t fall into the trap of “Well, it was part of the location recording, so it must be legitimate.” Obviously, if the gaffer dropped a wrench during a take, you replace the damaged word. However, when an actor’s footstep falls on a delicate phrase, you might be reluctant to make the repair, thinking it’s a “natural” sound.

Remember, there’s nothing natural in the movies. To see if it’s a good decision to lose the errant footstep, fix it. Either you’ll miss it, finding the rhythm of the scene suddenly damaged and unnatural, or you’ll see a new clarity and focus. If removing the footstep results in a rhythmic hole but greatly improves articulation, you can call for Foley at that point so that the necessary footstep is in place but controllable. Better yet, find another, quieter production footstep to replace the offender.

The most rewarding part of careful listening is that once you’ve heard a noise or had it pointed out to you, you’ll never again not hear it. It’s just like the “Young Maiden/Old Woman” illusion, where if you look at it one way you see an old woman but when you look at it another way, you see a beautiful maiden. For some it takes a long time to see the maiden as well as the hag, but once your brain has identified both, you will always be able to quickly separate the two. Just so, you can listen to a track many times and never hear the truth. But once your brain wakes up to it, you’ll hear that click in the middle of a word and wonder how you missed it the first few times around. Ignoring the meaning of the dialogue and focusing on the sounds is a useful tool when searching for unwanted noises. Listening at a reduced monitor level can help you hear beyond the words.

The following sections describe the most common origins of the noises you’ll encounter. Use them to learn how to locate unexpected infiltrators.

Dolly noise is easy to spot since dollies and cranes don’t make noise when they’re not moving. It’s simple: When the camera moves, listen for weird sounds—for example:

• Gentle rolling, often accompanied by light creaking and groaning.
• One or more pairs of feet pushing the dolly.
• Quiet metallic popping or ringing, indicating flexing dolly track rails as the heavy dolly/camera/camera operator combination crosses over them. The tracks are made of metal, so this flexing resembles the sound of an aluminum baseball bat striking a ball. Occasionally you’ll hear this bat sound even when the dolly isn’t moving. It might come from the track settling after the dolly passes over it or from a crew member lightly bumping the track.

Too Many Feet

Unnecessary footsteps are easy to hear but hard to notice. When you first listen to a scene involving two characters walking on a gravel driveway, all seems normal. You hear dialogue and some footsteps. But something inside tells you to study this shot more closely and check for problems. Ask yourself how many pairs of feet you hear. If it’s more than two (which is likely), you have a problem. Picture how the shot was made and you’ll understand where all the noise comes from. How many people were involved? Let’s see: two actors, one camera operator, one assistant camera operator, one boom operator, one location mixer (probably), one cable runner (probably), one continuity person, one director. That’s a lot of feet. But because you expect to hear some feet in the moving shot, you initially overlook the problem.

As with dolly noise, be on the lookout for a moving camera—in this case handheld; that’s where so many noise problems breed. Find out how the footsteps interfere with the scene by replacing a section of dialogue from alternate takes or wild sound (discussed in later sections), noting any improvement. It’s likely that the scene will be more intimate and have a greater impact after you remove the rest of the crew’s feet from the track.

Fortunately, a good location recordist will spot the trouble in the field and provide you with workable wild lines, and perhaps even wild footsteps, to fill in the gaps. Otherwise, you’ll have to loop the shot.

Remember, there are a lot of people on a set, all of whom move, breathe, and make noise, so be on the lookout for these noises:

• Footsteps that have nothing to do with the actors.
• “Quiet” background whispers. (There’s no such thing while sound is rolling.)
• Pings from tools inadvertently touching light stands, the dolly, the track, and so on.
• The continuity person’s stopwatch when she times the take. It’s got to be done, but unfortunately most modern stopwatches beep. Dumb. You’ll rarely hear beeps during the action part of a take (although they can sneak in), but they’ll make you crazy while searching for room tone. And they can really wreck a period scene.

Sound Recording

Sadly, many unwelcome noises can and will get into the tracks as by-products of the recording process. The boom operator, sound recordist, and cable puller are all very busy capturing manageable dialogue, and sometimes bad things happen.

Actors

With so much attention paid to getting the best sound from actors’ voices, it’s no surprise that you’re occasionally faced with all sorts of sounds coming from an actor that you’d just as soon not hear. We all make noises that aren’t directly part of speech. Someone you’re talking to may be producing an array of snorts, clicks, pops, and gurgles, yet you’ll rarely notice.

Comparatively normal human noises often sneak under the radar when we’re in the heat of a conversation because our brains simply dismiss them as non-information of no consequence. Yet when you record this conversation, the body sounds lose their second-class status and take their rightful place as equal partners with the dialogue.

What sorts of vocal and body noises should you be on the lookout for?

Dental Work. Dentures, plates, bridges, and the like can make loud clicking noises. They’re easy to spot because the clicks almost always coincide with speech. Unfortunately, denture clicks usually get louder in the dialogue premix, where dialogue tends to pick up some brightness. At that point, they become impossible to ignore.

Most people with fake teeth aren’t thrilled about advertising the fact, so a serious round of click removal is usually welcome. Also, relentless dental noise is almost certain to get in the way of story and character development. There’s a chance that the character’s persona calls for such unenviable dental problems. If that’s the case, the supervising sound editor may elect to remove all dental details from the dialogue and have the effects or Foley department provide something more stylized, personalized, and controllable.

Mouth Smacks and Clicks. People make lots of nonverbal sounds with their mouths. Sometimes these sounds have meaning that would be difficult to express in words: a sigh or a long, dramatic breath can say worlds; a contemplative wetting of the lips can imply a moment of reflection before words of wisdom; a clicking of the teeth or tongue may suggest thought or nervousness. An actor’s clicking, chomping, snorting, and sighing may be just what the scene calls for, or it may be just more commotion that comes between the scene and the audience.

Your job is to spot each nonverbal sound and decide whether it conveys the mood and information you want for the scene or whether you need to thin it out, replace it, or eliminate it. Things to think about when listening for smacks:

• Is it off-screen? Probably lose it. Here taste and style are more important than rules of thumb, but in general if you don’t see the lip smack there’s probably no real reason to keep it. All those unseen noises are just that: more noise to get in the way of the story and mood.
• Is it appropriate? If not, replace it. Just because the actor made a vulgar, slippery onscreen smack immediately before tenderly saying “I love you” doesn’t mean you have to use it. Yes, it’s on-camera, so you must have something in order to avoid lip flap, but need it be so ugly? Did that phlegm-soaked snort really make the scene more effective? What does it do for your emotional reaction to the lines? Wouldn’t a nice, moist lip opening sound better than the original slurp? Also, if any mouth noise attacks sharply, sounding more like a click, replace it.
• Is it missing? Sometimes the problem is a missing mouth noise. When you see a character open her lips, you may need to reinforce the action with some sort of smack, small though it may be. Otherwise the viewer senses lip flap, the annoying movie sensation when you see mouth action but don’t hear any corresponding vocalization to make it real.

The sounds a character makes between sentences or words can be as important as the information contained in the text. Get them right and you’ll greatly heighten the drama and emotion of the scene.

Clothing. We’ve seen how clothes rustling against a body mic can be a nuisance. Many other common clothing noises are just as bad and require a sharp mind and a keen ear:

• Inappropriate or annoying shoe sounds, whether footsteps or squeaks
• Corduroy pants or jackets, which often make a rustle when moving
• Anything made of plastic
• Coins and keys
• Large earrings and bangles

Actors and Microphones. You’ll inevitably encounter places in the track where the actor sounds a plosive consonant, such as a P or B or the equivalent, in whatever language you’re working in. There’s no point getting into why this happens; your job is to fix it. Usually, you’ll have to replace that section of the contaminated word, but there are some filtering tricks that may work.

Fixing Noises

Once you’ve trained yourself to listen for the countless rattles, pops, clicks, and snorts squatting in your tracks, the next step is to decide what to do with them. There are two basic editorial tools for removing unwanted noises: room tone fill and replacement. Noises falling between words or action can almost always be removed by filling with appropriate room tone, whereas noises falling on top of words or actions, or even just before or after dialogue, require searching through alternate material to find appropriate replacements.

Let’s look at these two techniques, remembering that there are many ways of fixing noises and as many opinions as there are editors. With time you’ll settle into your own way of working, synthesizing all of the techniques and creating your own private stockpile.

Room Tone Fill

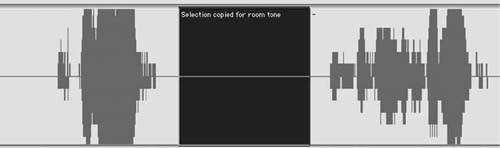

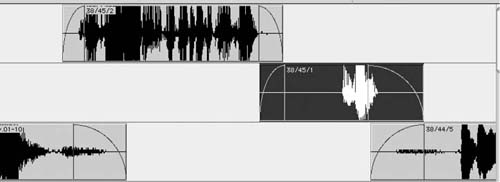

Small electrical clicks, individual clothing–mic collisions, lip smacks, and the like, are easily removed with room tone, but only between words, not within them (or only rarely within them). (See Figure 12-1.) Here’s what you do to remove these tiny noises:

• |

When you hear the unwanted noise, stop and note its whereabouts on the timeline. |

• |

Use a scrub tool to localize the noise. Set your workstation preferences so that the insertion point will “drop” wherever you stop scrubbing, so that you can easily zoom in to the click. On Pro Tools this is called “Edit Insertion Follows Scrub/Shuttle.” You’ll be surprised how difficult it can be to see a click. Common sense would say that a relatively loud one would proudly display itself in the waveform, but the nastiest of clicks can hide amid ambient noise and are found only with a combination of scrubbing and enough experience to know the telltale signs. |

• |

Zoom in closely enough to identify exactly what you’re removing. There’s no point fixing an area wider than needed. If the click is hard to spot, you might want to place a comment or a temporary sync mark at the location so you don’t lose it. |

• |

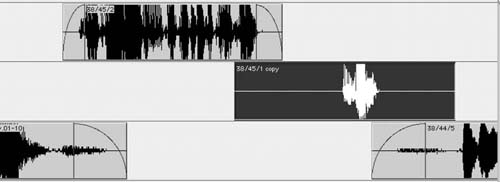

Find the room tone you’ll use to eliminate the noise. If the noise occurs in a relatively boring stretch of tone, without rapid changes in pitch, level, or texture, you can usually copy a small piece of tone immediately adjacent to it and blast over the problem area. Make the patch just a bit wider than the noise you’re covering to leave room for crossfades and adjustments. If you’re copying a section of room tone between two noises, leave a bit of extra, unused tone outside your selection to provide a handle area and some “fudge space” after you make the edit. (See Figure 12-2.) |

• |

If removing more than one closely spaced noise, don’t use the same piece of room tone to repair both problems, as it may create a looplike sound. |

• |

If you can’t copy a short piece of room tone adjacent to the offending noise, find a piece from another portion of the take. (See the section on room tone in Chapter 10.) |

• |

Usually, you’ll make a small crossfade at the head and tail of the insert to avoid a click and to help smooth the transition. This is especially true when the underlying room tone is very quiet or if it contains substantial low-frequency information. |

Figure 12-1 Small clicks typical of radio microphone trouble.

Figure 12-2 Good room tone can usually be found nearby. Note that the selection is smaller than it could be. Leaving a bit of usable room tone outside the selection creates a handle that gives you maneuvering room when removing the noise. The handles also allow more flexibility with crossfades.
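To make the geometry of a room tone patch concrete, here is a minimal sketch in Python. It assumes the track is simply a list of float samples and that you already know where the noise sits and where clean tone can be copied from; `patch_with_room_tone` and its parameters are hypothetical names, not any workstation’s API. The patch is wider than the noise by one fade length on each side, both crossfades live only in that margin, and the original list is left untouched. Equal-power fades are used on the assumption that the copied tone and the original are uncorrelated noise, where linear fades would dip in level.

```python
import math


def equal_power_curves(n):
    """Fade-out and fade-in gain curves of length n (n >= 2), equal-power shape."""
    fade_out = [math.cos(0.5 * math.pi * i / (n - 1)) for i in range(n)]
    fade_in = [math.sin(0.5 * math.pi * i / (n - 1)) for i in range(n)]
    return fade_out, fade_in


def patch_with_room_tone(track, noise_start, noise_end, tone_start, fade_len):
    """
    Return a copy of `track` in which samples [noise_start, noise_end) are
    covered by room tone copied from `tone_start`. The patch extends
    `fade_len` samples beyond the noise on each side, and both crossfades
    sit entirely in that margin, so no fade reaches into the noise itself.
    """
    if fade_len < 2:
        raise ValueError("fade_len must be at least 2 samples")
    patch_start = noise_start - fade_len
    patch_end = noise_end + fade_len
    length = patch_end - patch_start
    if patch_start < 0 or patch_end > len(track):
        raise ValueError("no room for the crossfade margins")
    if tone_start < 0 or tone_start + length > len(track):
        raise ValueError("room tone source runs off the track")
    if tone_start < patch_end and tone_start + length > patch_start:
        raise ValueError("room tone source overlaps the area being patched")

    tone = track[tone_start:tone_start + length]
    fade_out, fade_in = equal_power_curves(fade_len)
    out = list(track)  # work on a copy; the original list is not modified
    for i in range(length):
        pos = patch_start + i
        if i < fade_len:  # head: original fades out, tone fades in
            out[pos] = track[pos] * fade_out[i] + tone[i] * fade_in[i]
        elif i >= length - fade_len:  # tail: tone fades out, original returns
            j = i - (length - fade_len)
            out[pos] = tone[i] * fade_out[j] + track[pos] * fade_in[j]
        else:  # body: nothing but room tone over the noise
            out[pos] = tone[i]
    return out
```

For example, `patch_with_room_tone(track, 50, 51, 10, 4)` covers a one-sample click at index 50 with tone copied from index 10, using 4-sample fades that begin and end outside the click.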

A couple additional tips and tricks will come in handy:

• If you smooth your edit with a crossfade, don’t allow your fades to enter the area of the noise you’re covering. If you do, you’ll hear vestiges of the noise and you won’t be any better off than when you started. As a rule, listen separately to all fades you create—fade-ins, fade-outs, and crossfades—to ensure that you haven’t left behind a little gift.
• Pay attention to the waveform cycle when inserting room tone. Most workstations can perform a decent, click-free edit despite wave cycle interruptions, but if there are a lot of low-frequency components in the room tone it’s hard to avoid a click. Help your workstation do its job by lining up the waveforms as best you can, then make crossfades to smooth the leftover bump.

Removing Short Clicks with the Pro Tools Pencil

Very short, nonacoustic sounds such as static discharges and very brief cable clicks can be removed with the Pro Tools Pencil. (Most workstations have similar tools that enable you to redraw the soundfile’s waveform.) When used on an appropriate noise, the pencil and its counterparts can be miraculous. However, there are a couple of very important things to remember when using them. First, unlike almost every other process, the pencil modifies the parent soundfile. Second, it’s inappropriate for all but the shortest problems. If there’s a significant acoustic element, such as a long ringout, it just won’t work, and you’ll be stuck with a low-frequency “thud” where once you had only a tiny click.

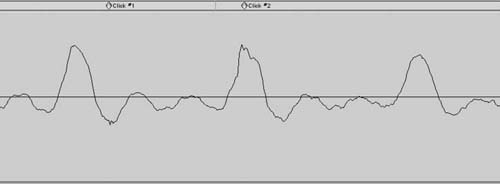

Using the Pencil. With the scrub tool and waveform display, find the click in question. (See Figure 12-3.) The waveform usually isn’t very helpful until you’ve zoomed in close. More often than not, the click will appear as a jagged edge along an otherwise smooth curve. It could also appear as a very small sawtooth pattern along the line of the curve. Although small, a glitch like this can cause a lot of trouble.

Remember that the pencil is a destructive process, which is rare in nonlinear editing. Any change you make with it will affect the original file as well as every occurrence of this part of the soundfile. A mixed blessing. If the click occurs in the middle of a line repeated many times in the film, the modification will present itself in every recurrence of that line.

Say the film begins on the deathbed of a family patriarch, who at the moment of his demise manages to murmur, “Don’t forget the peanut butter!” On several later occasions we hear Dad’s ghost say, “Don’t forget the peanut butter.” It’s the same clip. The film ends and we still don’t know what it means. Bad film, what can I say?

Figure 12-3 Compare the three troughs and note the small irregularity on the left (click 1). Note the rough edge on the center peak (click 2). These tiny irregularities caused a crackling noise.

If there’s a short electrical click in the middle of the word “peanut,” you fix it once with the pencil and you’ve fixed every occurrence. This is an unusual case, however. Normally, you want to protect the soundfile from any stupidities you might cause with the pencil, so remember this rule of thumb: Before using the pencil tool, make a copy of the section you intend to repair. To make a safety copy of the region you’re about to operate on:

• Highlight a section of the region, a bit wider than the damaged area.
• Make a copy of the sound in the region. It’s not enough to copy the region itself, as this doesn’t create a new soundfile. Instead, consolidate or bounce the selected region, creating a new soundfile and leaving the original audio safe from the destructive pencil. (See Figure 12-4.)
• Zoom in very close to the click. Filling the screen with one sound cycle is a good view. Select the pencil tool and draw over the damaged area. Take care to create a curved line that mimics the trajectory of the underlying (damaged) curve, or what that curve “should have been.”
• Redraw the smallest possible portion of the waveform. Otherwise, you run the risk of creating a low-frequency artifact.
• Move the boundaries of the now fixed consolidation until there’s no click on the edit. Fade if necessary to avoid clicks (it’s generally better to move the edit location than to fade).

Figure 12-4 Top: The segment from Figure 12-3 with two clicks (labeled with markers). Bottom: The area to be repaired was consolidated (highlighted in black) to create a new, tiny soundfile. The pencil was used to redraw the waveform. Compare the smooth curves below with the jagged originals.
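Drawing with the pencil is a manual act, but the idea of continuing “what the curve should have been” across a few damaged samples can be sketched numerically. The example below swaps in a technique the text doesn’t name: cubic (Lagrange) interpolation through the two good samples on each side of the damage. `redraw_segment` is a hypothetical helper; like the consolidate-first rule above, it returns a new list and leaves the original untouched, and it only rewrites the damaged span.

```python
def redraw_segment(samples, start, end):
    """
    Return a copy of `samples` in which samples[start:end] are replaced by
    a cubic curve through the good samples at start-2, start-1, end, and
    end+1. Only the damaged span is rewritten; the input list is untouched,
    much like working on a consolidated copy instead of the parent file.
    """
    if end <= start or start < 2 or end + 1 >= len(samples):
        raise ValueError("need a non-empty span with two good samples on each side")
    xs = [start - 2, start - 1, end, end + 1]
    ys = [samples[x] for x in xs]
    out = list(samples)
    for t in range(start, end):
        # Lagrange interpolation through the four anchor points
        value = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            term = yj
            for k, xk in enumerate(xs):
                if k != j:
                    term *= (t - xk) / (xj - xk)
            value += term
        out[t] = value
    return out
```

As with the real pencil, keep the span as short as possible: the longer the redrawn stretch, the more the interpolated curve departs from the true waveform.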

Reducing Clothing Rustle and Body Mic Crackle

When clothing rubs against a lavaliere microphone you hear a nasty grinding. This can often be avoided with careful mic placement, but by the time the problem gets to you, elegant mic positioning isn’t really the first thing on your mind. You can’t filter out the noise, as it covers a very broad, mostly high, frequency range. Normally, the only way to rid yourself of this sound is to collect the alternate takes of the shot and piece together an alternate assembly (see the upcoming section on alternate takes). You should also add this line to your ADR wish list.

However, if you’ve exhausted the alternate lines and the actor is no longer on speaking terms with the director and refuses to be looped, you can try one cheap trick that occasionally works. Many workstations offer a plug-in designed to reduce surface noise on 78-rpm recordings. Called “de-clickers” and “de-cracklers,” they’re usually found in restoration suites used in cleaning and remastering old recordings. Two examples among many are Waves’ X-Crackle and X-Click and Sonic Solutions’ DeCrackle and DeClick offered as part of its NoNOISE® suite.1

When closely compared, the waveform of a transcription from an old vinyl record and that of a dialogue recording contaminated with mild clothing rustle have many similarities. In each case, what should be a smooth curve is instead serrated stubble. De-cracklers and de-clickers use interpolation to smooth out local irregularities. Maybe, just maybe, you can use them to smooth out your curve, reducing the clothing rustle to a manageable distortion. Before you start, make a copy of the region so that you have a listening reference and can return to normal should this noise-removal plan prove ill conceived.

As with all interpolation processes, you’re better off making several small, low-power passes than one powerful pass. Compare this to the window-painting analogy I use in Chapter 11: You’ll get far better results with several finishing coats than with one thickly slathered soaking. All in all, don’t develop great expectations for this method of cleaning up clothing rustle. Its results are often mediocre. Still, when you have no other choice, a bit of de-crackle may be an acceptable fix. Besides, what are your options?

Repairing Distortion with De-Crackling

Distortion can’t be removed. Really, it can’t, and your only recourse is to replace the distorted words with alternate material. However, when your back is against the wall and there’s no choice, de-click and de-crackle may help. Why? Look closely at the waveform of a distorted track and you’ll see two main problems. First, the waveform is truncated, like sawed-off pyramids. That gives you the ugly compression of a distorted sound. Second, the plateaus are jagged and rough, not unlike the waveform of a 78-rpm record. As with removing clothing rustle, repeated passes of a de-click utility followed by de-crackling may smooth the rough edges and even rebuild some of the waveform’s natural contours.

• Make a copy of the section of the waveform you’re repairing so that you can get back to the original soundfile if necessary. Processes like de-click and de-crackle often add a bit of level to a region, so make sure that there’s at least 3 dB of headroom in the original soundfile when you start this operation.2 It’s not good enough to lower the level through volume automation. You have to lower the level of the original file before making the copy. Or you can make a copy with the “Gain” AudioSuite processor, which will result in a new soundfile with enough headroom to accommodate the peaks that will likely result from the de-click and de-crackle processes.
• Start with the de-clicker on a very high threshold setting. One of the few good things to say for distortion is that most of the damage is confined to the loudest material. That’s why you start with a very high threshold, leaving the undamaged majority of the signal unaffected. In essence, what you’re doing is aggressive processing on the topmost part of the waveform.
• After one very powerful pass, apply five or six additional passes, each one less aggressive than its predecessor. Remember to keep the threshold high to avoid harming the undamaged, lower-level material. Repeat the same process with the de-crackler. Begin very aggressively and then progressively back off.

Many restoration tools allow you to monitor the removed noises, switching between the dregs you’re removing and the cleaned results. This is handy for determining if you’re overprocessing. If you can hear components of the program material (that is, the dialogue) while monitoring the removed noises, you’re damaging the original track and should back off. If you don’t have this monitoring option, you can listen to what you’ve removed by placing the de-clicked/de-crackled soundfile on a track adjacent to the original region, syncing the two, and listening with one of the regions out of phase. If your sync and level are precisely aligned, you’ll hear only the removed sounds or distortion harmonics.
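The polarity-inversion check described above is easy to model. Adding the processed file to an inverted copy of the original is the same as subtracting one from the other, so when sync and level match, everything common to both cancels and only the removed material (with its polarity flipped, which sounds the same) remains. In this sketch `null_residual` and `rms` are hypothetical helpers; `offset` and `gain` stand in for the sync and level alignment the text warns about.

```python
import math


def null_residual(original, processed, offset=0, gain=1.0):
    """
    Simulate playing `processed` against a polarity-inverted `original` on an
    adjacent track: residual[i] = processed[i] - gain * original[i + offset].
    `offset` (samples, >= 0) and `gain` model sync and level alignment.
    """
    if offset < 0:
        raise ValueError("offset must be >= 0")
    n = min(len(processed), len(original) - offset)
    return [processed[i] - gain * original[i + offset] for i in range(n)]


def rms(x):
    """Root-mean-square level of a list of samples (0.0 for an empty list)."""
    return math.sqrt(sum(v * v for v in x) / len(x)) if x else 0.0
```

If the residual contains recognizable dialogue, the processing is eating program material. If everything is audible, including the dialogue, check the sync first: a single-sample offset is enough to spoil the null.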

De-crackling isn’t the first line of defense against distortion or removing clothing rustle. You stand a much better chance of making a proper fix if you go back to the original recordings and find an alternate take, or just give up and loop the line. Even so, it’s good to have a bag of tricks to help you out of impossible situations. The result may not be glorious, but at times mediocre is better than nothing.

Solving Dolly and Crane Noise Problems

Dollies and cranes are a particularly ugly source of noises, which tend to go on much longer than run-of-the-mill ticks, clicks, and pops. A moving dolly can spread its evil across several lines of dialogue, so doing something to fix such noises is much more complicated. Before giving up and calling the scene for ADR, try to reconstruct the damaged part of the scene from alternate takes, hoping that the noises don’t always fall on the same lines.

Fixing a line ruined by dolly noise is no different from other fixes that call on alternate takes. First find valid alternates, then line them up under the damaged shot, and finally piece together the outtakes to create a viable line. You have to know how to quickly and painlessly locate the other takes from that shot in order to find alternate lines, more room tone options, and the comfort of knowing you’ve checked all possibilities.

Alternate Takes

Because life isn’t always fair, sooner or later you’ll run into noises within words. Then you’ll have to look through the dailies for alternate takes that convey the same energy and character as the original but without the unfortunate noise. At first, going back for alternate takes seems a monumental task, so you invent all sorts of excuses not to do it. Once you realize that it’s not a lot of work, though, you’ll discover a huge new world of possibilities that make your editing not only more professional and effective but much more fun and rewarding.

Before you begin the quest for alternate takes, check your list of wild dialogue cues to see whether you have wild coverage for the scene. You never know, and it could save you some grief.

Finding Alternate Takes

The process of finding alternate takes starts at the beginning of the project. It hangs on getting from the production the items you absolutely must have to safely start any dialogue-editing project. (See Chapter 5.) Let’s review:

• All original recordings. These may consist of a box of DAT or Nagra tapes, a Firewire drive with all the disk-originated BWF files, a DVD with the same files, or even a DA-88 tape or a hard disk from an MX-2424 if the original recordings were made in a multitrack format. However the sound was recorded, you have to have all of the original sound elements.
• Safety copies. You don’t need to have the safety copies with you. In fact, it’s not a good idea for the master and the safety copy of anything to be stored in the same space. Just make sure that the production went to the trouble (and expense) of making safety copies before beginning postproduction. They’re comparatively inexpensive, and the potential consequences of not having them are appalling. Few things cause that heart-sinking feeling like the sight of a master cassette coming out of your studio DAT player followed by a trail of crinkled, ruined tape. If there’s no copy there’s no hope.
• Sound reports. It’s pretty hard to hold a DAT tape to the light, squint, and divine what sounds reside there. You need help to find the scene and take you’re looking for. When the location mixer records sound, she makes logs of the scenes, shots, and takes on each tape, as well as room tone, wild lines, and wild SFX. Her logs are essential for you to determine the options you have for solving problems on your tracks.
• Lined script. If possible, get the lined script; it contains notes on each scene’s coverage. You can never have too much information.
• EDLs. You need one video EDL for each reel of film, as well as one audio EDL for each set of four audio tracks in the Avid or FCP edit for each reel. (See Chapter 5 for an explanation of EDLs.) The EDL is one of your most important tools. You can’t get by without it, especially if your original sound is on tape.
• List of scenes and their shooting dates, if the location sounds originated on hard disk. It’s much easier to find alternate takes on disk-originated recordings because you don’t have to wade through tapes. However, you need to know in which folder the scene is hiding.

It’s useful to have the continuity and camera reports and whatever relevant notes you can get your hands on. Some dialogue editors think this is too much information, a waste of space, and an indulgence. Let them. When it’s three in the morning and you must find an alternate take and all of your normal paper trails have failed you, the script continuity or camera reports may be just what it takes to fix the shot and get home to bed.

Finding Alternate Takes on Tape-Recorded Projects

If the original location sound was recorded on tape, whether DAT, TC Nagra, or DA-88, for the most part you can use the procedure in the following sections to find all the alternate takes. However, experience and desperation may lead you to explore other routes too.

Highlight the Damaged Region. If you’re using Pro Tools, the start and end timecodes of the highlighted region are displayed at the top of the screen as Start, End, and Length. (See Figure 12-5.) Other workstations have similar displays. At this point, the length of the segment isn’t interesting, so ignore that cell. Use the Start and End timecode values to find this take in the EDL.

Figure 12-5 If the original recordings are on tape, begin your hunt for alternate takes by selecting the region and noting the start and end times. Find these times in the appropriate EDL to determine the source DAT and timecode.

Find the Correct EDL. When you printed the EDLs for each reel, you added the header “Title, Reel, Audio CH 1-4 [or 5-8, or 9-12, and so on], page .” Perhaps this seemed unnecessary when you made the printouts, but now it pays off. The secret of success at this point is to find the material as quickly and painlessly as possible so you don’t lose your excitement and creative spirit. When you made the printouts, you were still clueless about the film and were hardly in the heat of creative passion, so you could afford the time. Now you don’t want to slow down for anything.

If your EDLs cover 8 or 12 tracks, you don’t know in which list you’re going to find your shot. However, most film editors edit with the production sound on the first four tracks, using the rest for temporary sound effects, music, temporary mixes, and the like. Odds are good that you’ll find your problematic production sound in the list “CH 1-4.”

Find the Event. Always follow the same sequence of EDL columns to track down your alternates:

• Record In and Record Out, the two rightmost columns. Unless something is terribly wrong, your EDL will be printed “A-mode,” sorted in ascending Record In time. The easiest way to locate an event is to look through the Record In times until you find a timecode that matches or is near the region you’re looking for. Next, look at the Record Out time for the same event and make sure that your highlighted region falls between Record In and Record Out. Be careful—it’s not unusual to find two events with the same Record In time, so make sure that the event you’ve marked in the EDL is the most likely candidate.
• Confirm scene and take. To make certain you’ve found the EDL event describing the region you’re trying to replace, look at the Comment field immediately under the event line. A decent picture department will insert Comment fields that contain scene and take information. Confirm that the event listed in the Comment field is indeed the same as the name in your session region.
• Determine the source tape. Look on the source tape column to see which DAT or other source the recording came from.
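The three-step lookup above is mechanical enough to sketch in code. This is a minimal Python sketch, not a tool from the text. It assumes a CMX3600-style audio EDL in which each event line holds the event number, source reel, track, and transition, followed by source in, source out, record in, and record out, with `*` comment lines beneath. It also assumes non-drop-frame timecode at 24 fps. The function names (`parse_edl`, `find_events`, `source_tc`) and the sample EDL are hypothetical. `find_events` returns every matching event rather than a single one, since two events can share a Record In time.

```python
import re
from dataclasses import dataclass, field

FPS = 24  # assumption: non-drop-frame timecode at 24 fps
TC_RE = re.compile(r"\d{2}:\d{2}:\d{2}:\d{2}")


def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert HH:MM:SS:FF to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_tc(frames: int, fps: int = FPS) -> str:
    seconds, ff = divmod(frames, fps)
    return f"{seconds // 3600:02d}:{seconds // 60 % 60:02d}:{seconds % 60:02d}:{ff:02d}"


@dataclass
class Event:
    number: int
    source_reel: str  # the DAT (or other tape) the sound came from
    src_in: str
    src_out: str
    rec_in: str
    rec_out: str
    comments: list = field(default_factory=list)


def parse_edl(text: str) -> list:
    events = []
    for line in text.splitlines():
        tokens = line.split()
        if tokens and tokens[0].isdigit():
            tcs = [t for t in tokens if TC_RE.fullmatch(t)]
            if len(tcs) == 4:  # source in/out, record in/out
                events.append(Event(int(tokens[0]), tokens[1], *tcs))
        elif line.startswith("*") and events:
            # A comment line belongs to the event directly above it.
            events[-1].comments.append(line.lstrip("* ").strip())
    return events


def find_events(events, region_start: str, region_end: str) -> list:
    """Every event whose Record In/Out brackets the highlighted region."""
    start, end = tc_to_frames(region_start), tc_to_frames(region_end)
    return [e for e in events
            if tc_to_frames(e.rec_in) <= start and end <= tc_to_frames(e.rec_out)]


def source_tc(event: Event, region_start: str) -> str:
    """Where the highlighted region starts on the source tape."""
    offset = tc_to_frames(region_start) - tc_to_frames(event.rec_in)
    return frames_to_tc(tc_to_frames(event.src_in) + offset)


SAMPLE_EDL = """TITLE: REEL 3 AUDIO CH 1-4
FCM: NON-DROP FRAME
001  DAT12    A     C        05:10:00:00 05:10:04:00 01:00:00:00 01:00:04:00
* FROM CLIP NAME: 88A-4
002  DAT13    A     C        06:20:10:00 06:20:15:12 01:00:04:00 01:00:09:12
* FROM CLIP NAME: 88C-2
"""

events = parse_edl(SAMPLE_EDL)
for e in find_events(events, "01:00:05:00", "01:00:06:00"):
    print(e.source_reel, e.comments, source_tc(e, "01:00:05:00"))
# prints: DAT13 ['FROM CLIP NAME: 88C-2'] 06:20:11:00
```

Because the comment lines travel with each event, you can confirm scene and take before going to the tape shelf. EDL layouts differ between picture systems, so check the columns of your own lists before trusting a parser like this.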

Find the Correct Tape. Go to the pile and find the tape in question.

Check the Sound Reports. In the sound report that comes with each sound roll, you’ll find a list of the tape’s contents. Usually it won’t include timecode, but this is no real loss. There are many useful items in a sound report, but at this point the most interesting are the sequence of shots and the presence of any wild sound. Location recordists commonly use abbreviations to describe takes. (See Table 12-1.)

Table 12-1 Common Abbreviations for Shot Types

Code | Meaning | Explanation
FT | Full take | Complete take of scene from beginning to end, or the complete text blocked for this shot.
FS | False start | Scene was stopped immediately after beginning because of an acting mistake or technical problem. Action can restart within the same take after a false start.
INC | Incomplete | Scene was stopped midway because of a problem or because the director needed only a portion of it.
PU | Pickup | Take doesn’t begin at the top of the scene but at a later point because of a midscene mistake or because the director wants just one section of the shot.
WT | Wild track (wild sound) | Sound was recorded without picture because there was no practicable way to record sound or because the location mixer found sound problems.
MOS | Picture without sound | Scene shot without sound. This could be a silent cutaway or an action shot. All slow-motion or speeded-up shots are filmed MOS.

How Many Takes Are Available? If your damaged take is 12C/4, you can be reasonably sure that there are at least three other attempts at a good take. Of course, takes 1, 2, and 3 could be false starts or simply no good, but they’ll probably still give you a chance for replacement sound. There could easily be takes later than 4, so check the sound reports before winding to the location on the DAT just to see what you’re up against.
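If you transcribe the sound report, counting and ranking the alternates becomes a quick lookup. This sketch assumes a hypothetical report stored as (slate, take, code) tuples using the codes from Table 12-1. The ranking, which puts full takes ahead of pickups, wild tracks, incompletes, and false starts, is an assumption for illustration. MOS takes are dropped because they carry no sound.

```python
# Hypothetical transcription of a sound report: (slate, take, code),
# using the codes from Table 12-1.
SOUND_REPORT = [
    ("12C", 1, "FS"),
    ("12C", 2, "INC"),
    ("12C", 3, "FT"),
    ("12C", 4, "FT"),   # the damaged take
    ("12C", 5, "PU"),
    ("12C", 6, "FT"),
    ("12C", 7, "MOS"),  # no sound, so never a candidate
]

# Assumed ranking: lower number = audition first. MOS is absent on purpose.
PREFERENCE = {"FT": 0, "PU": 1, "WT": 2, "INC": 3, "FS": 4}


def candidate_alternates(report, slate, damaged_take):
    """Other takes of the same slate that have sound, best bets first."""
    takes = [(take, code) for s, take, code in report
             if s == slate and take != damaged_take and code in PREFERENCE]
    return sorted(takes, key=lambda item: (PREFERENCE[item[1]], item[0]))


print(candidate_alternates(SOUND_REPORT, "12C", 4))
# [(3, 'FT'), (6, 'FT'), (5, 'PU'), (2, 'INC'), (1, 'FS')]
```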

Cue the DAT to the Correct Take. If your sounds were recorded on a DAT, you’ll most likely have a start ID at the beginning of each take. Most useful. To find your initial cue, fast-wind the tape to the approximate source timecode of your damaged take. Once safely within the original take, press “Backwards Index” on the DAT player to find where it begins. Listen to the voice slate to confirm that you’re within the correct take. If your damaged take is take 4, press Backwards Index four times from within it (the first press only returns you to the head of take 4, so you have to count that take, too). You’ll be cued to the beginning of take 1. Use the sound report as your map through the sound roll and use the index function to navigate to the available takes.
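The Backwards Index count is easy to get wrong at the end of a long day. Here is a tiny sketch of the arithmetic. It assumes every take on the roll has its own start ID and that no other material, such as room tone or wild lines, sits between the takes you are skipping. `backward_index_presses` is a hypothetical helper name.

```python
def backward_index_presses(current_take: int, target_take: int = 1) -> int:
    """Backwards Index presses needed when starting somewhere inside current_take.

    The first press only returns to the head of the current take, so that take
    counts too. Assumes one start ID per take and nothing else between takes.
    """
    if not 1 <= target_take <= current_take:
        raise ValueError("target take must be the current take or an earlier one")
    return current_take - target_take + 1


print(backward_index_presses(4))     # 4: heads of takes 4, 3, 2, then 1
print(backward_index_presses(4, 3))  # 2
```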

Find Applicable Takes and Record Them. Cue to the applicable sections of each alternate take and copy them into the workstation. Check each one.

Plan B: Check Other Angles. Obviously, you want to find an alternate take from the same shot (or angle) as the original to increase your chances of a decent sound match. However, sometimes you can’t find usable alternate takes from the same angle. When that happens, first make a note in your ADR spotting calls but don’t give up on the sound rolls just yet. Perhaps you can find the replacement lines within a compatible shot. This is where the sound reports really pay off.

An Example of Locating an Alternate Take from Another Angle

Say you’re working on scene 88, an interior scene with two characters, Alfred and Elizabeth. The scene is made up of these shots:

• 88—master shot (wide) with both characters
• 88A—single shot of Alfred
• 88B—single shot of Elizabeth
• 88C—medium two-shot of both characters
• 88D—dolly shot for part of the scene

You need to fix Alfred’s close-up lines, but you’ve already exhausted all takes for shot 88A, the angle used in the film. Where else should you look for material that will save Alfred from looping?

• Shot 88B isn’t likely to help, as Alfred will either be off-mic or nonexistent, with the continuity person or director reading his lines. Still, it’s worth checking the sound reports to see if your actor is present and was given a mic. Unlikely, but possible.
• You probably won’t find joy in the master shot (shot 88) either. Because it’s a wide shot, the sound will be either very wide or on radio mics, both of which can make for a difficult match. However, desperate dialogue editors do desperate things, so if all else fails give it a try.
• The dolly shot (shot 88D), depending on what it is, could be of help, but don’t make it your first choice as it may introduce new problems. Since this is a pickup (a take including only a part of the scene), you can use the shooting script to find out if it includes the parts of the shot you want. Check this before you start searching, to save yourself some unnecessary wading through takes.
• Head straight for 88C, the medium two-shot. It’s the most likely place to find Alfred with a microphone in the right place.

Once you find some qualifying alternate takes, record them into your workstation, making sure that the filenames reflect the scene and take numbers. A collection of files named “Audio 1” isn’t particularly helpful, especially when it’s time to import the files into a session.

Finding Alternate Takes on Hard-Disk-Recorded Projects

More and more location mixers are recording field tracks on portable hard-disk recorders. These recorders run the gamut in price and quality, but in general they deliver good tracks, naturally better suited to the needs of postproduction. There are many advantages to recording on hard disk versus on a DAT.

Editing sounds originating on hard disk rather than tape makes life easier in two ways. First, you have every take available on your workstation all the time. Second, you can organize your database any way you choose, making it conform to your way of thinking or even your mood.

For a dialogue editor, the true beauty of jobs recorded on a nonlinear system isn’t the technical considerations or even the vastly improved sound. It’s that you’ll edit better because you’ll lose any excuse not to check the alternate takes. When you work with DAT-based projects, there’s always a tiny (or not so tiny) voice in your head telling you all the perfectly good reasons not to go back to the dailies. You eventually convince yourself that there probably aren’t any decent alternates or at least nothing that compares to the original. “Besides,” you tell yourself, “this noise isn’t really all that bad.” Human nature maybe, but not a formula for great dialogue editing. Having everything at your fingertips strips you of most excuses for laziness.

There are any number of ways to organize the database of your original recordings. Some editors prefer Excel-style spreadsheets. Others like to create a database in a program such as FileMaker Pro, which enables complex searches and endless report formats. You can view in creation order (like a DAT) or by scene, shot, comment, or whatever else you like. I find it useful to make comments in the database as it helps me find other takes. At the very least, my notes warn me that I’ve already visited a take only to discover it was no good. You feel pretty stupid checking out the same take more than twice.
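As one concrete example of such a database, this sketch uses Python’s built-in `sqlite3` module as a stand-in for FileMaker Pro or a spreadsheet. The table layout, the sample files, and the habit of tagging rejected takes with the phrase “no good” are all assumptions. The point is that a notes column lets a query skip takes you’ve already auditioned and rejected.

```python
import sqlite3

# Stand-in for a FileMaker Pro or spreadsheet database; the schema is an assumption.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE takes (file TEXT, scene TEXT, shot TEXT, take INTEGER, "
    "shoot_day INTEGER, notes TEXT DEFAULT '')"
)
db.executemany(
    "INSERT INTO takes (file, scene, shot, take, shoot_day) VALUES (?, ?, ?, ?, ?)",
    [("88A-1.wav", "88", "88A", 1, 12), ("88A-2.wav", "88", "88A", 2, 12),
     ("88C-1.wav", "88", "88C", 1, 13), ("88C-2.wav", "88", "88C", 2, 13)],
)


def add_note(file, note):
    """Append a note so you don't audition the same dead take twice."""
    db.execute("UPDATE takes SET notes = notes || ? WHERE file = ?", (note + "; ", file))


def unvisited_takes(scene):
    """Takes of a scene not yet marked 'no good', in shot and take order."""
    rows = db.execute(
        "SELECT file FROM takes WHERE scene = ? AND notes NOT LIKE '%no good%' "
        "ORDER BY shot, take",
        (scene,),
    )
    return [file for (file,) in rows]


add_note("88A-2.wav", "checked: no good, dolly squeak under Alfred")
print(unvisited_takes("88"))  # ['88A-1.wav', '88C-1.wav', '88C-2.wav']
```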

Choose the Right Parts

You’ve imported the likely alternates into your session. Now you have to find out which one will make the best fix and then cut it into your track. I find it easiest to move my work tracks directly beneath the track with the damaged region. (See Figure 12-6.)

To select the best alternate take, try the following procedure. You’ll develop your own technique with time, but this isn’t a bad way to start.

Figure 12-6 Move several work tracks directly beneath the track with the damaged line. This way you can easily and safely compare and edit your alternate takes. In this example, the original line is on Dial A (top) and three alternates are lined up on the work tracks.

• Place a marker at the location of the noise you want to eliminate so you can navigate back to your target location after scrolling through long soundfiles.
• Import the alternate take soundfiles into your session.
• Drag alternate take regions onto work tracks, more or less lined up with your original region (refer to Figure 12-6).
• On each alternate take region, remove all but the desired material.
• Line up the beginning of each alternate take region with the beginning of the original damaged region.
• Listen to the original take and then each of the alternates. Pay attention to cadence, tone, and attitude and how they match up with the original.
• Put aside the takes that patently differ from the original, but don’t remove them from the session as they might hold hidden secrets. Just get them out of the way. Now you’ll have only the best takes, lined up roughly in sync under the original line.
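The rough lineup in the steps above is normally done by eye and ear. If you want a mechanical first guess at the offset, one swapped-in technique, not something the text prescribes, is to cross-correlate the level envelopes of the original and the alternate. This sketch assumes 48 kHz mono samples held in Python lists and a 10 ms analysis block. `envelope` and `best_lag` are hypothetical names. It finds only one overall offset, so it can’t account for an alternate that is paced differently from the original, and you still have to listen.

```python
def envelope(samples, block=480):
    """Mean absolute level per block (480 samples = 10 ms at 48 kHz)."""
    return [sum(abs(x) for x in samples[i:i + block]) / block
            for i in range(0, len(samples) - block + 1, block)]


def best_lag(reference, candidate, max_lag):
    """Blocks by which the candidate's material arrives later than the reference.

    Uses mean-removed cross-correlation of the two envelopes; a positive result
    means the alternate must slide earlier by that many blocks.
    """
    ref_mean = sum(reference) / len(reference)
    cand_mean = sum(candidate) / len(candidate)

    def score(lag):
        return sum((reference[i] - ref_mean) * (candidate[i + lag] - cand_mean)
                   for i in range(len(reference)) if 0 <= i + lag < len(candidate))

    return max(range(-max_lag, max_lag + 1), key=score)


BLOCK = 480
# Synthetic "original": silence, a loud syllable, a pause, a softer word, silence.
ref = [0] * 4800 + [1000, -1000] * 1200 + [0] * 2400 + [500, -500] * 2400 + [0] * 4800
alt = [0] * 1440 + ref  # same performance, starting 30 ms later
lag = best_lag(envelope(ref, BLOCK), envelope(alt, BLOCK), max_lag=20)
print(lag * BLOCK)  # 1440: slide the alternate 1440 samples earlier
```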

On rare occasions, an alternate take will have all the right attributes—the speed, mood, and linguistic “music” (cadence, timbre, energy, spirit) of the original. You need only sync it to the original and edit it into the track. However, you usually have to work a bit harder. Often, one part of the line will work well but another will be wrong. There are a number of things you can do to create the perfect replacement.

Combine Parts of Several Takes. Listen to the original line—beginning, middle, and end. Describe to yourself its spirit. I often invent a nonsense rhyme to describe the music and energy of each part of a line. Then I play back the nonsense tune in my head as I listen to parts of each potential replacement. By taking the language out of the dialogue, I can better focus on its music. It’s not uncommon to combine pieces of two or three or more takes to make a good alternate line.

Get the Sync Right. Tricks and tips for syncing are akin to fishing hints—everyone has the perfect secret, certain to give you great results in the shortest time. In truth, it’s a matter of time, experience, and a knack for pattern recognition. Try a few of these pointers and develop them into a technique of your own.

After you’ve completed these steps, it’s time to listen again. It’s easy to get caught up in the graphics and begin slipping here, nipping and tucking there, with little regard for content. Remember, we’re performing a very delicate operation here, replacing words while respecting the character, mood, focus, and drama of the original line and at the same time worrying about sync. Listen to the original. Close your eyes so that you can visualize the flow of the phrase. Sometimes I see a phrase as colors with varying intensities, modulating with the line. This lava lamp of transposed information helps me categorize the line’s technical as well as emotional attributes.

If you’re allergic to touchy-feely notions like “visualize the phrase,” please indulge me. First, I find closing my eyes very valuable. It removes the stimulus of the computer monitor so I’m not influenced by visual cues. Second, I find it useful to assign shapes and/or colors to the elements of a phrase or word, as this rich shorthand is often easier to code and remember than the raw sound. As I said, sometimes I reduce the phrase to nonsense sayings to provide a sort of mental MIDI map for interpreting it. Finally, imagining the phrase as colors or shapes is very visceral and helps me quantify its real workings. Of course, you’re free to think of all of this as hogwash and use your own tricks.

Saving the Original Line. Once you find the replacements for each section of the line, you’re ready to construct the fake. First, however, you need to copy the original line to a safety track. I use my junk tracks for this.

There are two kinds of lines you never want to throw away: those you replace with alternates and those you replace with ADR. The reasons are pretty obvious.

All of the preceding is true for ADR as well as alternate take replacements.

Changing the Speed

Most workstations have plug-ins for “fitting” replacement lines, whether ADR or alternates, to match your original, but you need to know how they operate before you can make them work for you. It’s not uncommon to hear the telltale artifacts that these voice fitters create when used irresponsibly. The trick is to prepare the track before you use the fitter, never to ask the processor to do more than is reasonable, and to honestly listen after its every use. If it sounds weird, it will never get better.

Time Expansion/Compression Tools. Time-stretch tools (“word-fitting” tools like VocAlign fall into this category) change the duration of an event without changing its pitch. Unlike pitch-shift tools, which behave like variable-speed analogue tape machines by changing the sample rates and then resampling, time stretchers add or subtract samples as needed. If a phrase is too long, they’ll remove enough samples to get to the right length. If the phrase is too short, they’ll duplicate samples to lengthen the selection.

The tool has to know where to make its splices. If you tell it that you can’t tolerate any glitches, the time-stretch tool will make all of its splices in the pauses between words or in other very safe places. After all, who’s going to hear the splice where nothing is said? Or sung? Or played? What you end up with are essentially unchanged words with dramatically shortened pauses as well as truncated vowels and sibilants. Thus, if you order a 10 percent length reduction, the line will indeed be 10 percent shorter but the speed change won’t be consistent. This is especially noticeable with music, where time compression/expansion can result in a “rambling” rhythm section.

If you want better local sync, choose a more “accurate” time shift. You’re now telling the tool that local sync is more important, even at the risk of glitching. In extreme cases, you’ll have perfect local rhythm because the tool is splicing at mathematically ideal locations, ignoring content. But the glitches resulting from this “damn the torpedoes” approach are often unacceptable.

Here you have to make informed compromises. All time expansion/compression tools provide a way to control the delicate balance between “perfect audio” and “perfect rhythmic consistency.” You just have to figure out what it’s called. Usually there’s a slider called something like “Quality” that indicates how glitch-tolerant the tool should be. The less glitch tolerance (that is, higher “quality”), the worse the rhythmic consistency. The more you force the rhythm to be right (lower “quality”), the greater your chances of running into a glitch. As expected, the default Average setting will generally serve you well.
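The balance between “perfect audio” and “perfect rhythmic consistency” can be pictured with a toy model. This sketch processes no audio. It only decides where the time comes from. A line is described as hypothetical word and pause segments in milliseconds. A `quality` value between 0 and 1, which is an assumption and not any particular product’s control, sets how much of the length change is taken from the pauses and how much is spread evenly across every segment, words included.

```python
def fit_line(segments, target_ms, quality):
    """Toy model of a time-stretch 'quality' control (no audio is processed).

    segments: list of (kind, ms) where kind is "word" or "pause".
    quality=1.0 takes the entire change out of the pauses (words untouched,
    uneven rhythm); quality=0.0 scales every segment by the same ratio
    (even rhythm, but the tool must splice inside words).
    """
    total = sum(ms for _, ms in segments)
    pause_total = sum(ms for kind, ms in segments if kind == "pause")
    change = target_ms - total
    pause_change = quality * change if pause_total else 0.0
    spread_change = change - pause_change
    result = []
    for kind, ms in segments:
        new_ms = ms + spread_change * ms / total
        if kind == "pause" and pause_total:
            new_ms += pause_change * ms / pause_total
        if new_ms < 0:
            raise ValueError("not enough pause time for this quality setting")
        result.append((kind, round(new_ms, 1)))
    return result


LINE = [("word", 400), ("pause", 200), ("word", 300), ("pause", 100), ("word", 500)]
print(fit_line(LINE, 1350, 1.0))  # pauses cut to 100 and 50 ms, words unchanged
print(fit_line(LINE, 1350, 0.0))  # every segment 10 percent shorter
```

At `quality=1.0` the words survive intact but the pauses are halved, which is the uneven result described above. At `quality=0.0` every segment shrinks by the same 10 percent, which keeps the rhythm but forces splices inside words.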

Before you process a region with a time expansion/compression algorithm (see Figure 12-7), make an in-sync copy of it. Here’s how this will help you:

• If you need different time expansion/compression ratios for separate parts of the line, you’ll find it helpful to have the original version handy. Given that time expansion/compression routines are far from transparent, the last thing you want is to process an already altered file.
• As you construct the phrase, you’ll find sections that need time flex and others that don’t. With a copy of the unprocessed region standing by, it’s easy to access the original for editing.
• If you process the original without making a copy first, and then decide your entire syncing logic was wrong, you’ll have to re-edit the line.

Word-Fitting Tools. As you’ll see in Chapter 14, many tools are available for locally time-stretching a line; that is, comparing the waveform of the original with that of the alternate and manipulating the speed of the alternate to match the reference. Word fitters use, more or less, the technology of time expansion/compression, but they’re largely automatic—able to look at small units of time and make very tight adjustments. Still, they have the same real-world limitations that time expansion/compression has: quality versus sync. All of these tools offer some sort of control to enable you to make that choice. Play with them and get used to how they work.

Time expansion/compression and word-fitting tools create new files. You’ll have to name these. Do it, and be smart about it. I’ll name a section of a shot that I stretched something like “79/04/03 part 1, +6.7%.” A word-fit cue I might name “79/04/03 part 1, VOC” (for VocAlign). If you don’t sensibly name your new files, you’ll eventually regret it.
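Working out the percentage for such a name is simple arithmetic: the ratio of target length to source length, minus one. This sketch assumes lengths measured in frames. It reads “79/04/03” as scene/shot/take, which is an interpretation rather than something the text states. The helper names are hypothetical.

```python
def stretch_percent(source_frames, target_frames):
    """Length change, in percent, that turns the source into the target (+ = longer)."""
    return (target_frames / source_frames - 1) * 100


def stretched_name(scene, shot, take, part, percent):
    """Name a time-stretched section, e.g. '79/04/03 part 1, +6.7%'."""
    return f"{scene}/{shot}/{take} part {part}, {percent:+.1f}%"


def word_fit_name(scene, shot, take, part, tool="VOC"):
    """Name a word-fit section, e.g. '79/04/03 part 1, VOC' for VocAlign."""
    return f"{scene}/{shot}/{take} part {part}, {tool}"


pct = stretch_percent(45, 48)                    # 45 frames must fill 48
print(stretched_name("79", "04", "03", 1, pct))  # 79/04/03 part 1, +6.7%
print(word_fit_name("79", "04", "03", 1))        # 79/04/03 part 1, VOC
```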

Figure 12-7 Digidesign’s Time Compression/Expansion AudioSuite processor. Many manufacturers offer such products, and they all work in more or less the same way.

Syncing the Alternate Line

You’ll find a full treatment of syncing and editing alternate lines and ADR in Chapter 15. Here I’ll just briefly outline the steps.

Overlaps

People interrupt each other all the time. Sometimes out of excitement, sometimes out of anger or arrogance, actors are always stepping on each other’s lines, and such “overlaps” cause ceaseless headaches. Let’s return to our friends Alfred and Elizabeth and see what can happen to movie sound when people step on each other. Here, again, is the list of shots for scene 88:

• 88—master shot (wide) with both characters
• 88A—single shot of Alfred
• 88B—single shot of Elizabeth
• 88C—medium two-shot of both characters
• 88D—dolly shot for part of the scene

Odds are pretty good that the master shot has both characters either on a boom or on two radio mics. Same goes for 88D, the dolly shot. 88C was probably recorded with a boom; the two single shots, 88A and 88B, almost certainly. During shot 88A the boom was focused on Alfred; anything Elizabeth said was hopelessly off-mic.

In an otherwise outstanding take of 88A, in which Alfred is complaining to Elizabeth about the cost of hockey pucks in Brazil, Elizabeth interrupts the end of Alfred’s sentence to tell him that he’s an idiot. Back in the picture editing room, the editor and director piece together a back-slapping row between our two characters. The picture editor includes Elizabeth’s interruption on Alfred’s track, cutting to Elizabeth at the first rhythmic pause. No one but you notices that Elizabeth’s first four words are off-mic, having come from Alfred’s track. What do you do? You announce that it must be fixed, either with ADR or alternate lines.

Overlaps put you in a bad position. Often the director and the editor won’t notice them while editing because they’re so used to hearing the cut. You’re the only one who notices, so you’ll be stuck trying to justify the extra ADR lines or the time spent rooting around in the originals to find the replacement material. Still, if you ever want to show your face at the sound editors’ sports bar, you can’t let it go. Overlaps with off-mic dialogue aren’t acceptable.

When Elizabeth (off-mic) interrupted Alfred (on-mic), she ruined both of their lines. The end of Alfred’s line is now corrupted by an ill-defined mess, so it must be replaced from alternates. Let’s hope that Elizabeth won’t jump the gun in other 88A takes. We also have to replace the head of Elizabeth’s line (88B) so that she’ll have a clean, steady attack. Again, we have to rub our lucky rabbit’s foot in the hope that there’ll be a well-acted alternate 88B from which we can steal Lizzy’s first few words. If alternates don’t help, you’ll have to call both characters for ADR on the lines in question. But since you’ll face the problem of matching the ADR into the production lines, it’s in everyone’s interest to use alternates to fix the problem.

When shooting a fast-paced comedy in which the characters regularly step on each other’s lines, a location mixer may use a single boom plus a radio mic on each actor. If this is recorded on a multichannel hard-disk recorder, you stand a better chance of sorting out the overlap transitions. However, even if you have nice, tight radio mic tracks of each character, you’ll still have to watch out for each actor’s off-mic voice leaking into the other’s track. There’s no free lunch.

Fixing the Acting

It’s not unusual for actors to slip in their diction, slur a word, or swallow a syllable. Often you can fix these problems the same way you remove noises—go back to the alternate takes and find a better word or phrase. Of course, you’ll copy and put aside the original since that little “slip” may turn out to be the reason the director chose the shot. Also, when replacing a line because of an actor’s problems, you’ll keep it to the bare minimum so that the spirit of the line is unaltered.

The hardest part of “actor cleaning” is remembering that you have the resources to improve the line and then overcoming your natural laziness, which keeps telling you, “What’s the big deal? That’s the way she said it.”

Removing Wind Distortion

Location recordists go to great lengths to avoid wind distortion. They protect the mic from the wind using shock mounts and screens with all sorts of lovely names (including “zeppelin,” “wind jammer,” “wooly,” and “dead cat”). Regardless, it’s a certainty that sooner or later you’ll curse the location mixer for “not noticing” the wind buffeting the mic on the Siberian blizzard wide shot.

You can often remove this very low-frequency distortion in the mix with a high-pass filter set to something like 60 Hz. As with all filtering issues, you should talk with the rerecording mixer or the supervising sound editor about how to proceed. If you’re really lucky, the mix room will be available and you can listen to the scene in the proper environment. You can also do a poor man’s test by running the track through a high-pass filter in your editing room and playing with cut-off frequencies between 60 and 100 Hz. Keep in mind that wind distortion will always sound less severe in your cutting room than on the dubbing stage, so don’t get too excited by the results.
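For the poor man’s test, it helps to know what a high-pass filter actually does to the signal. Below is a minimal sketch of a second-order (12 dB per octave) high-pass filter using the widely published biquad coefficients from Robert Bristow-Johnson’s Audio EQ Cookbook. It assumes mono float samples at 48 kHz. It is meant for auditioning cut-off frequencies between 60 and 100 Hz, not as a substitute for the mixer’s tools, and the function name is hypothetical.

```python
import math


def highpass(samples, cutoff_hz, sample_rate=48000, q=0.7071):
    """Second-order (12 dB/octave) high-pass filter, Audio EQ Cookbook coefficients."""
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    # Coefficients normalized by a0.
    b0 = b2 = (1 + cos_w0) / 2 / a0
    b1 = -(1 + cos_w0) / a0
    a1 = -2 * cos_w0 / a0
    a2 = (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


# Audition a few cut-offs on one second of a 20 Hz "rumble".
rumble = [math.sin(2 * math.pi * 20 * n / 48000) for n in range(48000)]
for cutoff in (60, 80, 100):
    filtered = highpass(rumble, cutoff)
    peak = max(abs(s) for s in filtered[24000:])  # skip the filter's settling time
    print(cutoff, round(peak, 3))
```

Any wind energy above the cut-off passes straight through, which is one reason the buffeting can still be evident on the dubbing stage.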

If, God forbid, you decide to filter the tracks yourself in the cutting room, you must make a copy of the fully edited track before filtering and put the original on a junk track. Many a time I thought I was doing my mixer a favor by “helping” the track a bit with a high-pass filter, only to have the mixer stop, turn to me, and ask, “What were you thinking?” What sounded like a vast improvement in my little room was now thin and cheap. Plus, the energy from the wind noise was still evident. The mixer gently reminded me of the division of labor. “You cut,” he said, “I make it sound good.” Thankfully, I had stashed the original (completely cleaned and edited) onto a junk track and with no effort the Empire was saved. Still, who needs the humiliation?

So what should you do about wind distortion? I suggest you build two parallel tracks: the original—fully edited and faded and cleaned of nonwind noises, but still containing the wind buffeting; and an alternate version assembled from other takes, free of the wind noises. Mute your least favorite version. This way you’re prepared for anything that might happen in the mix. If the mixer can remove the wind noise from the original take without causing undue damage to the natural low frequency, great. If not, you’re prepared with a wind-free alternate. Either way you don’t get yelled at.

Removing Shock Mount Noises

Like wind noises, shock mount noises appear as unwanted low-frequency sounds. But unlike wind, which usually lasts a long time, they’re almost always very brief. Like dolly noise, which occurs with camera motion, shock mount noise is usually tied to a moving fishpole. This makes it easier to spot.

You can often succeed in removing shock mount noise with very localized high-pass filtering, usually up to 80 Hz or so. As with any filtering you perform in the cutting room, save a copy of the original. You usually won’t want to filter the entire region but just the small sections corrupted by the low-frequency noise. If possible, listen to the results of your filtering in the mix room, with the mixer. Here you’ll learn if you under- or overfiltered, and you’ll hear any artifacts you couldn’t hear in the edit room.
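Localized filtering means the filtered audio should appear only where the thump is, with short crossfades so the switch itself isn’t audible. This sketch assumes mono float samples at 48 kHz. It uses a deliberately simple first-order high-pass (6 dB per octave; your workstation’s filters will likely offer steeper slopes) and blends it in only over a chosen sample range. The function names, the 80 Hz default, and the 10 ms fade length are assumptions for illustration. The whole region is filtered first so the filter has settled before the section begins.

```python
import math


def one_pole_highpass(samples, cutoff_hz, sample_rate=48000):
    """First-order (6 dB/octave) RC-style high-pass filter."""
    rc = 1 / (2 * math.pi * cutoff_hz)
    a = rc / (rc + 1 / sample_rate)
    out, prev_x, prev_y = [], 0.0, 0.0
    for x in samples:
        prev_y = a * (prev_y + x - prev_x)
        prev_x = x
        out.append(prev_y)
    return out


def filter_section(samples, start, end, cutoff_hz=80, fade=480, sample_rate=48000):
    """High-pass only samples[start:end], with linear crossfades of `fade` samples."""
    wet = one_pole_highpass(samples, cutoff_hz, sample_rate)
    out = []
    for n, (dry, filtered) in enumerate(zip(samples, wet)):
        if start <= n < end:
            w = 1.0
        elif start - fade <= n < start:
            w = (n - (start - fade)) / fade  # fade into the filtered version
        elif end <= n < end + fade:
            w = 1.0 - (n - end) / fade       # fade back out to the original
        else:
            w = 0.0
        out.append((1 - w) * dry + w * filtered)
    return out


x = [0.5] * 48000  # a DC offset standing in for low-frequency energy
y = filter_section(x, 20000, 30000)
print(y[10000], round(y[25000], 6))  # 0.5 0.0
```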

Of course, the right way to fix shock mount noise is, yes, to find replacements for the damaged word in the outtakes. This way you don’t risk any surprises in the mix.

Getting Rid of Dolly Noises

It was dolly noise over dialogue that started the discussion on using alternate takes to repair damaged lines. By now it should be clear how to piece together a new sentence from fragments of other lines. What makes dolly-related damage interesting is the fact that the noise source is always changing, so you usually must line up all reasonable alternates and hope that the annoying cry of the dolly occurs at slightly different places on each one. You end up constructing an entirely new line from the best moments of all the takes. If this doesn’t work, you’ll have to rerecord the line.
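Choosing “the best moments of all the takes” can be framed as picking one take for each segment of the line. This sketch assumes the alternates are already lined up and cut into the same segments (words or syllables), and that you’ve given each segment in each take a noise score, for example a level in dB above room tone. Both the scoring and the `switch_penalty`, which charges a cost for every edit so the result doesn’t hop between takes needlessly, are assumptions. It uses dynamic programming to find the cheapest combination.

```python
def assemble_line(noise, switch_penalty=3.0):
    """Pick one take per segment, minimizing total noise plus a cost per edit.

    noise[take][seg] is a noise score for each segment of the line in each
    lined-up take (for example, dB above room tone). Returns one take index
    per segment.
    """
    takes, segs = len(noise), len(noise[0])
    cost = [noise[t][0] for t in range(takes)]  # cheapest path ending on take t
    back = []                                   # back[s - 1][t]: best previous take
    for s in range(1, segs):
        prev, new_cost = [], []
        for t in range(takes):
            best = min(range(takes),
                       key=lambda p: cost[p] + (switch_penalty if p != t else 0))
            prev.append(best)
            new_cost.append(cost[best] + (switch_penalty if best != t else 0) + noise[t][s])
        back.append(prev)
        cost = new_cost
    t = min(range(takes), key=lambda k: cost[k])
    path = [t]
    for prev in reversed(back):
        t = prev[t]
        path.append(t)
    return path[::-1]


# Take 0 has the dolly squeak in its third segment, take 2 in its fourth.
NOISE = [
    [0, 0, 12, 0, 0],   # take 0
    [1, 9, 1, 8, 1],    # take 1
    [0, 0, 0, 10, 10],  # take 2
]
print(assemble_line(NOISE))  # [2, 2, 2, 0, 0]: one edit, after the third segment
```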

Noise Reduction

Background noise is the bane of dialogue tracks. Location recordings are noisy, suffering from traffic, air conditioning, generator and camera noises, and assorted rumbles. You’ve smoothed each scene so that shot transitions are bearable, but there’s still an overall unacceptable noise level. What can be done?

Traditionally, noise reduction is done in the dialogue premix. You edit, the mixer mixes. Simple. However, as technology improves, plug-ins get cheaper and better, budgets degenerate, and mixes get shorter, you may find yourself doing some noise reduction in your cutting room. It’s not necessarily a positive trend, but you should know how to deal with it.

Noise reduction can miraculously save a scene. Or it can make your tracks sound like a cell phone. Here are the secrets to nursing your tracks through noise reduction:

• Talk to your supervising sound editor and mixer to come up with a plan. Stick to noise reduction processing, leaving normal EQ and dynamics for the dialogue premix.
• Make fully edited safety copies of all regions you’re going to process.
• Understand what each processor does.
• Get to know your noise problem so that you can intelligently attack it.
• Know when to stop.

Getting Answers from the Supervising Sound Editor and Mixer

Select representative samples of the noises throughout the film. String them together so that your presentation will be as efficient as possible, and note the timecodes of the original scenes in case you need to return to them for context. Discuss each noise problem with the mixer and supervising sound editor, and remember to ask the following questions:

• Can this problem be fixed, or will the lines be rerecorded (ADR)?
• Who’ll fix each line? For each problem, determine whether the editor or the mixer will do the processing.
• What sorts of processing will be used?
• If you plan to use inserted plug-ins, which ones are available in the mix room, and what DSP limitations and latencies will you encounter?

Even an abbreviated version of a meeting like this will make for an enormously more productive mix. And you’ll avoid those damning looks from the mixer during the dialogue premix that say, “I can’t believe you did that!”

Preparing for Noise Reduction

Broadband noise reduction plug-ins often have enormous latencies, so you usually can’t use them as real-time processors amid your other tracks. If you did, your processed tracks would be miserably out of sync. Instead, you have to process the tracks and create new soundfiles. However, before processing you have to make a copy of the original region. I place this safety copy on one of the junk tracks, in sync, of course. Some people put it on the X, Y, Z tracks, but I like to reserve those for ADR-related regions. Truthfully, this is between you and the mixer.

Since any AudioSuite operation will create a new soundfile, you may want to open up the beginning and ending handles of the region first. (See Figures 12-8 and 12-9.) Just because the edit works well before noise reduction doesn’t mean that the transition will be effective once the tracks are cleaned. Previously unheard background sounds (such as “Cut!”) may emerge after cleaning. Similarly, track cleaning may alter the balance between adjacent shots, necessitating a longer handle. Pulling out an extra bit of handle—if available—means you’ll have to redo the fade after noise reduction is complete, but you’ll be left with a better set of options to play with.

Noise Reduction Tools

One reason noise reduction so often turns out miserably is that editors don’t understand the tools. This is one of the key arguments mixers make against editors reducing noise in the editing room rather than in the mix. You may have an impressive selection of tools at your disposal, but if you use them incorrectly you’ll inflict damage. Typically, there are three types of tools for managing background noise:

Figure 12-8 The highlighted region of this soundfile needs to be processed. Any AudioSuite operation will create a new region without handles.

Figure 12-9 Before processing, delete the fades and open the handles. This will give you greater editing and fade options when working with the soon-to-be-created region.

• Filters. These equalizers, notch filters, buzz eliminators, and so on are best suited for harmonic noises.
• Interpolation processors. These tools were designed to remove clicks and pops from vinyl records, but they can also be used for lavaliere microphone rustle and even mild distortion. (The de-crackle and de-click plug-ins for reducing radio microphone rustle and mitigating distortion, described earlier, are examples.)
• Broadband processors. Originally designed to eliminate hiss on archival recordings, these multiband expanders have become more and more common for general-purpose noise reduction.

Most botched, artifact-laden noise reduction jobs happen during broadband processing. Because processors that remove broadband noises (de-noisers) often have names like “Z-Noise”3 or “DeNoise”4 or “BNR”5 (broadband noise reduction), it’s not irrational to think that this is the place to head with all your noise problems. The result: Having failed to use filters or interpolation processors to remove harmonic noises or unwanted transients, you end up asking too much of the broadband processor, which repays you with a signature “I’ve been noise reduced” sound. The trick is to identify your particular noise problem and then apply the correct processors in the right sequence and in the proper amount.

Remember, you’re not mixing the dialogue at this point. Save that for later. Right now you’re just doing cleanups that would be too time-consuming or expensive in the dialogue premix. Don’t bother with the shelving and “shaping” that naturally apply to all of the dialogue elements. Almost all of your tracks will have some low-frequency rumble, which the mixer will roll off in the premix. Don’t attack these problems in your cutting room; address only the occasional exceptional noise problem there.

A Typical Noise Reduction Plan

The following paragraphs describe a typical multipass noise reduction sequence. Usually, you will work in this order:

1. Use filters to remove rumble, buzz, and hum.
2. Use interpolation processors to remove crackle that occurs when words are being spoken.
3. Use broadband processors to remove random noise.
4. Use filters again to remove remaining harmonic problems.

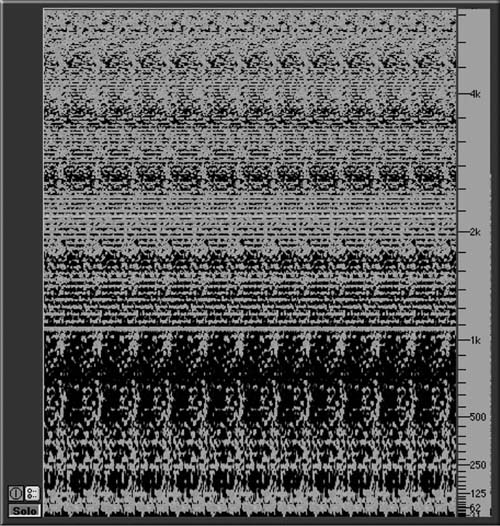

Rumble, Hum, and Buzz Removal Create an FFT or spectrogram of your noise,6 and look at the low-frequency information (below 500 Hz), as shown in Figure 12-10. If you’re chasing a harmonic problem like a rumble, hum, or buzz, you’ll notice a distinct pattern. Look for the lowest-frequency peak in the FFT—that’s the fundamental frequency of the noise. You should easily see harmonics occurring at multiples of the fundamental frequency. You can also analyze harmonic patterns using a spectrogram, as shown in Figure 12-11.
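To make the “find the lowest peak, then look for its multiples” step concrete, here is a minimal Python sketch. It assumes the clip is available as a list of float samples and uses a naive DFT (stdlib only) in place of an analyzer’s FFT. The function names, the 5% peak threshold, and the 3% harmonic tolerance are hypothetical, illustrative choices, not settings from any particular tool.

```python
import cmath
import math


def magnitude_spectrum(samples, sample_rate, max_freq):
    """Naive DFT magnitudes from 0 Hz up to max_freq (slow; fine for short clips)."""
    n = len(samples)
    bin_hz = sample_rate / n
    spectrum = []
    k = 0
    while k <= n // 2 and k * bin_hz <= max_freq:
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        spectrum.append((k * bin_hz, abs(acc) / n))
        k += 1
    return spectrum


def find_harmonics(spectrum, rel_threshold=0.05, tolerance=0.03):
    """Pick local peaks, treat the lowest as the fundamental, label its integer multiples."""
    loudest = max(level for _, level in spectrum)
    peaks = [
        (freq, level)
        for idx, (freq, level) in enumerate(spectrum[1:-1], start=1)  # skip DC and last bin
        if level > spectrum[idx - 1][1]
        and level >= spectrum[idx + 1][1]
        and level > rel_threshold * loudest
    ]
    if not peaks:
        return None, []
    fundamental = peaks[0][0]  # the lowest-frequency peak
    harmonics = []
    for freq, level in peaks:
        number = round(freq / fundamental)
        if number >= 1 and abs(freq - number * fundamental) <= tolerance * fundamental:
            harmonics.append((number, freq, level))
    return fundamental, harmonics


# Synthetic one-second "hum": 60 Hz plus weaker 2nd and 3rd harmonics.
RATE = 400
hum = [
    1.0 * math.sin(2 * math.pi * 60 * t / RATE)
    + 0.5 * math.sin(2 * math.pi * 120 * t / RATE)
    + 0.25 * math.sin(2 * math.pi * 180 * t / RATE)
    for t in range(RATE)
]
fundamental, harmonics = find_harmonics(magnitude_spectrum(hum, RATE, max_freq=200))
print(fundamental, [(n, f) for n, f, _ in harmonics])
```

On real location audio, the noise floor is never this clean, so you would still confirm each candidate peak by eye, as the text describes.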

Write down the center frequencies of the fundamental and its harmonics, up to the tenth harmonic (or until you can’t stand it any longer). Note the approximate “height” that each harmonic rises above the noise floor as well as its “width.” You’ll use the width to calculate the Q for each filter and the height to determine the cut value. (See Figures 12-12 and 12-13.) Write all of this down or enter it into a spreadsheet (Figure 12-14), which has the advantage of doing the math for you.

Figure 12-10 An FFT display created with soundBlade.7 This sample shows a classic North American hum, with peaks at approximately 30, 60, 120, 240 Hz, and so on. The frequency callout (left) reveals that the 60 Hz fundamental measures 58.91 Hz, indicating that the original analogue recording was at some point transferred off-speed.

Use a multiband EQ to create a deep-cut filter for the fundamental and for each harmonic (see Figure 12-15). For each filter, enter the center frequency and calculate Q (center frequency ÷ bandwidth). If your processor allows you to control the amount of attenuation, set it to a couple of dB less than the height by which that harmonic rose above the visual noise floor on the FFT. You’ll end up with several deep, narrow filters. These aren’t notch filters because they’re not infinitely deep; rather, they remove only what’s necessary to bring the noise back down to the level of the existing noise floor. This should effectively eliminate hum, buzz, and rumble. If not, extend the filters further to the right to eliminate harmonics at higher frequencies, and recheck the FFT to make sure you accurately measured the components of the noise.
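The spreadsheet arithmetic is small enough to sketch in Python. This is a minimal, hypothetical helper, not any plug-in’s API. Q is center frequency divided by bandwidth, and each cut stops a couple of dB short of the harmonic’s height above the noise floor. Apart from the 58.91 Hz fundamental borrowed from Figure 12-10, the readings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class CutBand:
    center_hz: float
    q: float
    gain_db: float  # negative values are cuts


def plan_cuts(measurements, margin_db=2.0):
    """Turn FFT readings into EQ bands.

    measurements: (center_hz, bandwidth_hz, height_db) per harmonic, where
    height_db is how far the peak rises above the visible noise floor.
    """
    bands = []
    for center_hz, bandwidth_hz, height_db in measurements:
        q = center_hz / bandwidth_hz               # Q = center frequency / bandwidth
        cut_db = -max(height_db - margin_db, 0.0)  # stop a couple of dB short of the floor
        bands.append(CutBand(center_hz, q, cut_db))
    return bands


def predict_harmonics(fundamental_hz, count):
    """Expected harmonic centers (fundamental x 1, 2, ... count) to check against the FFT."""
    return [fundamental_hz * n for n in range(1, count + 1)]


# Hypothetical readings for an off-speed hum with a 58.91 Hz fundamental.
readings = [(58.91, 4.0, 30.0), (117.82, 5.0, 24.0), (176.73, 6.0, 12.0)]
for band in plan_cuts(readings):
    print(band)
print(predict_harmonics(58.91, 10))
```

You would then enter each band’s center, Q, and gain into the multiband EQ. Use the predicted centers only as a guide to where to look on the FFT.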

Click and Crackle Removal Interpolation noise removal tools work in two steps: identification and interpolation. They first identify telltale events within the signal that have an unnaturally short attack and decay and are therefore likely to be surface noise. These events show little or no acoustic characteristics, so they’re pretty easy to spot. Once the processor identifies a click, it removes it and then “looks” to the left and right of the event to interpolate appropriate audio to fill the hole. As a dialogue editor, you can exploit this technology to fix sharp, short irregularities such as static clicks, microphone cable noises, or even clothing rustle. Use relatively high thresholds to “protect” the unaffected portions of the soundfile, then repeatedly apply aggressive processing to the distorted/clicky/rustly sections.
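The identify-then-interpolate idea can be illustrated with a deliberately crude Python sketch. It assumes mono float samples. Any sample-to-sample jump larger than a threshold is flagged as a click, and the flagged run is bridged with a straight line. Commercial de-clickers use far more sophisticated detection and interpolation, so treat `declick`, `threshold`, and `pad` as hypothetical illustrations only.

```python
import math


def declick(samples, threshold, pad=2):
    """Two-step de-click: flag abrupt jumps, then bridge them by linear interpolation."""
    n = len(samples)
    flagged = [False] * n
    # Step 1: identification. A jump larger than `threshold` is treated as a click.
    for i in range(1, n):
        if abs(samples[i] - samples[i - 1]) > threshold:
            for j in range(max(0, i - pad), min(n, i + pad + 1)):
                flagged[j] = True
    # Step 2: interpolation across each flagged run, using its good neighbors.
    repaired = list(samples)
    i = 0
    while i < n:
        if not flagged[i]:
            i += 1
            continue
        start = i
        while i < n and flagged[i]:
            i += 1
        left, right = start - 1, i  # last good sample before, first good sample after
        if left < 0 and right >= n:
            break  # everything flagged: nothing trustworthy to interpolate from
        left_val = samples[left] if left >= 0 else samples[right]
        right_val = samples[right] if right < n else samples[left]
        for k in range(start, right):
            t = (k - left) / (right - left)
            repaired[k] = left_val + t * (right_val - left_val)
    return repaired


clean = [0.5 * math.sin(2 * math.pi * i / 100) for i in range(200)]
clicky = list(clean)
clicky[50] += 1.0  # a single-sample "static click"
fixed = declick(clicky, threshold=0.2)
print(max(abs(a - b) for a, b in zip(fixed, clean)))  # small residual error
```

Raising `threshold` leaves more of the clean audio untouched. This mirrors the advice above: apply gentle settings to the whole file and harsher passes only to the damaged sections.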

Figure 12-11 A display created with SpectraFoo8 from Metric Halo. The pronounced horizontal line at 1081 Hz indicates a strong harmonic element.

Figure 12-12 A Waves PAZ Frequency Analyzer displaying center frequency and amplitude of a harmonic.

Figure 12-13 A frequency versus amplitude display of the same buzz shown in Figure 12-10; note the strong harmonic at 1081 Hz.

Figure 12-14 A spreadsheet for logging harmonics and calculating the Q. If the frequency pattern is predictable, you can use a spreadsheet to predict the higher harmonics.

Figure 12-15 Once you determine the center frequency, gain, and Q for the principal harmonics, you can use a multiband EQ to remove the harmonic noise. In this case, a Waves Q10 is set to the parameters shown in the spreadsheet in Figure 12-14.

Broadband Noise Removal Many manufacturers sell broadband noise reduction processors. Perhaps because they require relatively little user interaction, or maybe because they’re very sexy, they’re best-sellers. The key to using them successfully is understanding what they do and don’t do.

Broadband noise reduction devices work by first taking a sample of “pure noise,” ideally from a pause free of any valid signal. This serves as the blueprint of what’s wrong with the sound. Next they move the noise sample and signal from the time domain to the frequency domain by computing continually updated FFTs. The FFT of the noise sample is divided into 2000 (or so) narrow frequency bins, in which the noise is reduced to a formula.9

When the signal is played through the processor, it, too, is assessed in the frequency domain. At each of the 2000-ish bins, the formula for the signal is compared to that of the noise sample. If the match is close enough, the sound within that bin is attenuated by a user-controlled amount. If there’s no correlation between the noise formula and the incoming signal, no attenuation occurs in that bin, since the dissimilar signal is likely valid audio rather than noise. This process is repeated for all of the frequency bins.

At this point there are usually control parameters for threshold and reduction. As you would expect, threshold determines the sound level at which processing begins, and attenuation (or “reduction”) dictates how much each bin flagged as “noise” is turned down. If these settings are too aggressive, you’ll hear very obvious artifacts. Back off first on the attenuation and then on the threshold.
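The bin-by-bin comparison can be illustrated with a simplified Python sketch that works directly on per-bin levels in dB and skips the FFT and resynthesis stages. It assumes the threshold is a dB margin above the noise profile in each bin, which is only one plausible reading of “threshold”. Real processors use more elaborate statistics, and the function names here are hypothetical. Four bins stand in for the 2000-ish of a real unit.

```python
def noise_profile(noise_frames_db):
    """Average each bin's level (dB) across frames taken from a noise-only pause."""
    n_frames = len(noise_frames_db)
    return [sum(frame[k] for frame in noise_frames_db) / n_frames
            for k in range(len(noise_frames_db[0]))]


def bin_gains_db(signal_db, profile_db, threshold_db=6.0, reduction_db=12.0):
    """Per-bin gain: attenuate bins that stay close to the noise profile, pass the rest."""
    gains = []
    for level, noise in zip(signal_db, profile_db):
        looks_like_noise = level <= noise + threshold_db
        gains.append(-reduction_db if looks_like_noise else 0.0)
    return gains


profile = noise_profile([[-60, -55, -70, -65], [-62, -57, -70, -63]])
gains = bin_gains_db([-60, -30, -68, -40], profile)
print(profile, gains)
```

Raising `threshold_db` flags more bins as noise, and raising `reduction_db` cuts them harder. Those are the two knobs the text suggests backing off when artifacts appear.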

After noise reduction, the signal must be recorrelated into a “normal” time domain sound. You usually have some control over this. “Sharpness” controls the slope between adjacent bins. The steeper the slope during the recombination process, the more effective the noise reduction but the “edgier” the sound. If you set the sharpness too high, you’ll hear digital “swimming” artifacts, often called “bird chirping.” There’s usually a control called “bandwidth” that determines how much sharing occurs between adjacent bins during the recorrelation. The higher the bandwidth, the warmer (but perhaps less articulate) the sound.
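A “bandwidth” control can be approximated by sharing gain between neighboring bins. In this hypothetical sketch, a moving average over `bandwidth_bins` neighbors on each side softens the hard steps of a noise/no-noise gain mask. With `bandwidth_bins=0`, the mask keeps its abrupt edges, which corresponds to the “sharp” extreme associated with chirping. Actual products implement these controls in their own, usually undocumented, ways.

```python
def smooth_gains(gains_db, bandwidth_bins=1):
    """Share gain between neighboring bins with a moving average across the mask."""
    n = len(gains_db)
    smoothed = []
    for k in range(n):
        lo = max(0, k - bandwidth_bins)
        hi = min(n, k + bandwidth_bins + 1)
        window = gains_db[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


hard_mask = [0.0, 0.0, -12.0, 0.0, 0.0]
print(smooth_gains(hard_mask, bandwidth_bins=1))  # cut spread over three bins
```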

Low-frequency (LF) cutoff, if available on your processor, isn’t what you might think (a high-pass filter). Rather, it sets the frequency below which no processing occurs. If you’re attacking traffic, set the LF cutoff to zero. If all you’re fighting is hiss, set it to 2000 Hz or higher and you won’t risk damaging anything in your audio below that frequency. Many processors also have a high-frequency cutoff, which normally defaults to the Nyquist frequency. Change this setting if you’re processing only low frequencies and want to leave higher frequencies unaffected.
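Here is a minimal sketch of what the LF and HF cutoffs do. It assumes they simply exempt bins outside a frequency range from processing (gain 0 dB) rather than filtering those bins. Bin `k` sits at `k * sample_rate / fft_size` Hz. The function name and its arguments are hypothetical.

```python
def apply_band_limits(gains_db, sample_rate, fft_size, lf_cutoff_hz=0.0, hf_cutoff_hz=None):
    """Leave bins outside [lf_cutoff_hz, hf_cutoff_hz] unprocessed (gain 0 dB)."""
    if hf_cutoff_hz is None:
        hf_cutoff_hz = sample_rate / 2  # default: the Nyquist frequency
    bin_hz = sample_rate / fft_size
    limited = []
    for k, gain in enumerate(gains_db):
        freq = k * bin_hz
        limited.append(gain if lf_cutoff_hz <= freq <= hf_cutoff_hz else 0.0)
    return limited


# Toy example: 8 kHz audio, 8-point FFT, so bins sit at 0, 1000, ... 4000 Hz.
print(apply_band_limits([-12.0] * 5, 8000, 8, lf_cutoff_hz=2000))
```

With the LF cutoff at 2000 Hz, as suggested above for hiss-only problems, the lower bins pass through untouched no matter what the noise analysis decided.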

All broadband noise removers are shackled by the fact that as they aim for greater resolution in the frequency domain, resolution in the time domain suffers. There’s no way around it. Clever manufacturers let you choose the balance between frequency resolution and time resolution, giving you some control over this dilemma.
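The trade-off comes down to simple arithmetic. For an FFT of N samples at sample rate fs, each bin is fs/N Hz wide and each analysis window lasts N/fs seconds, so their product is always 1. A short Python sketch (the `resolution` helper is hypothetical) shows the numbers at 48 kHz:

```python
def resolution(sample_rate, fft_size):
    """Return (bin width in Hz, analysis window length in ms) for one FFT frame."""
    return sample_rate / fft_size, 1000.0 * fft_size / sample_rate


for size in (512, 2048, 4096, 8192):
    bin_hz, window_ms = resolution(48000, size)
    print(f"{size:5d}-point FFT: {bin_hz:7.2f} Hz bins, {window_ms:6.1f} ms window")
```

A 4096-point frame yields 2049 bins from 0 Hz to Nyquist, roughly the 2000 bins mentioned earlier, spaced about 11.7 Hz apart. However, each frame spans about 85 ms, which can blur short events such as consonant attacks.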

Ambient Noise Complexity

Very few background noises have but one component. A typical noise floor will have harmonic elements from air conditioners or other machinery; ticking noises from microphones or cables, if not from the speech itself; and broadband, random elements. Even calling a noise like air conditioning “simple” is misleading. In addition to the obvious hissing air (solution = broadband de-noise), there’ll be harmonic sounds from the motor (solution = notch filters) and perhaps clicking from ineffective isolation springs or other causes (solution = de-click interpolation). The answer is simple: Don’t run straight for the broadband processor. Think about the source of the noise and plan your processing accordingly.

Knowing When to Stop

Aside from picking the wrong noise reduction tool for the job, the most common way to bungle the process is not knowing when to quit. When you repeatedly listen to a noise, trying to get an even cleaner signal, you inevitably lose touch with the audio you’re processing. Almost certainly you’ll over-process the tracks.

The antidote is annoyingly obvious: Process less. You can always do more in the mix. Also, when you’re happy with the noise reduction on a file, leave it. Do something else. Take a walk and rest your ears. Listen to it later with a fresh ear to see what you think. If it passes this delayed listening test, it’s probably acceptable.

To Process or Not to Process