Management of Cache Consistency

In any memory system of at least moderate complexity, maintaining cache consistency is a non-trivial matter. Cache consistency is loosely defined as follows:

In the presence of a cache, reads and writes behave (to a first order) no differently than if the cache were not there.

The choice of the word consistency in this book is deliberate, despite the confusion that may result within the architecture community (a memory-consistency model is a contract between programmer and memory system, and a cache-coherence scheme is an implementation of a given memory-consistency model; by cache consistency we mean something different). The reasons for the choice include the following:

• We would rather not invent a new term unless it is unavoidable.

• The term “cache consistency” is no longer used in computer architecture. Rather, as mentioned, the terms “cache coherence” and “consistency model” are used.

• The web cache community already uses the term cache consistency, and their definition mirrors ours.

There are three things that lead to cache consistency, all of which are reflections of the fact that a datum must have one value and only one value. If two requests to the same datum at the same time return different values, then the correctness of the memory system is in question. Put another way, the presence of the cache must not alter the correctness of the memory system’s handling of requests. The use of the term correctness is not an accident. There are many situations in which it is contractually correct, though perhaps not intuitively logical, for the memory system to provide different values for the same datum.

To return to the point, the three aspects of cache consistency are (i) that the cache remain consistent with the backing store, (ii) that the cache remain consistent with itself, and (iii) that the cache remain consistent in the presence of multiple requestors of the datum in question—other clients of the same backing store.

• Consistency with Backing Store: A cache’s data must reflect what is in the backing store, and the backing store must reflect what is in the cache to the extent that no request should get the “wrong” data if the two are out of sync.

• Consistency with Self: If a datum is allowed to exist in multiple locations within the cache, no request must be allowed to get the wrong value.

• Consistency with Other Clients: If multiple requestors are in the system (e.g., multiple processors, multiple caches), the presence of the cache must not enable incorrect data to be propagated to anyone.

The remaining sections discuss these three behaviors in more detail.

4.1 Consistency with Backing Store

Whenever a value is written into a cache, there is immediately a synchronization problem: the cache and its backing store have different values for the same datum. If the backing store serves no other caches, the problem is not particularly severe, as the only way that an incorrect value can be propagated is for the cache to lose the written value or forget that it has it. (Note that such a scenario is possible in virtually indexed caches; see Section 4.2.)

In a more general scenario, the backing store can serve multiple caches and requestors, and the synchronization problem must be solved. There are several obvious solutions to the problem:

• Write-through

• Delayed write, driven by the cache

• Delayed write, driven by the backing store or external client

4.1.1 Write-Through

In a write-through policy, every value written to the cache is written to the backing store immediately, thereby minimizing the window of vulnerability. Though it is far from a complete solution to the cache-consistency problem (it does not provide consistency between multiple clients), it provides a reasonable degree of consistency with the backing store. The scheme is simple and yields a system with, at least potentially, the fewest number of “gotchas.”

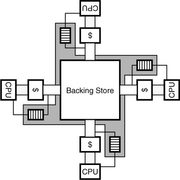

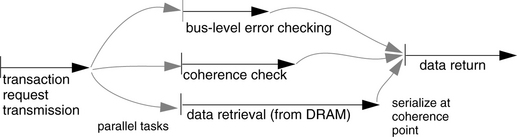

However, the speed differential between the cache and its backing store is likely to be non-trivial; otherwise, why have the cache in the first place? If the differential is large, then the cost of sending every write to the backing store can overwhelm the system, bringing performance to a halt. The typical solution is to use the mechanisms of caching and pipelining to solve the problem, as shown in Figure 4.1. The speed differential is equalized by inserting a new memory structure into the system: one that is physically part of the cache (i.e., built of the same technology) and thus can handle frequent writes without slowing down the system, but one that is logically considered part of the backing store.

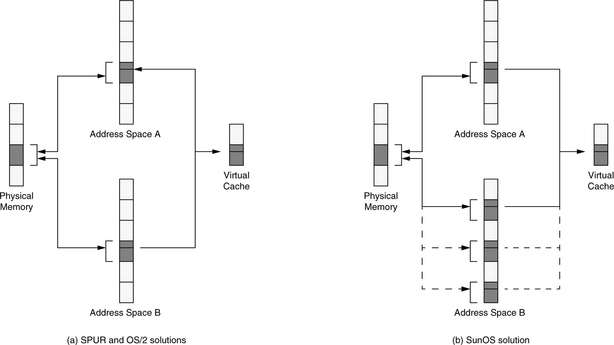

FIGURE 4.1 The use of write buffers and write caches in a write-through policy. (a) The write buffer or write cache is physically part of the cache, but logically part of the backing store. (b) Shows the implication as more caches become clients of the backing store.

The new memory structure is called a write buffer if it is a tagless FIFO organization or a write cache if it has tags and can be probed like a cache. Data in the write buffer or write cache is “newer” than the data in the backing store, and therefore it takes precedence over data in the backing store. Data is written to the write buffer/cache immediately, and it is written to the backing store from the write buffer/cache as a background task whenever the channel to the backing store is unused for demand data. Thus, the size of the write buffer/cache is an important consideration; it must be large enough to handle spikes in traffic without overfilling. Overfilling typically requires the structure to be emptied to the backing store, whether it is a convenient time or not.

When handling a request to the backing store, note that all associated write buffers/caches are logically part of the backing store, and therefore they must all be checked for the requested data. In the case of tagless structures (write buffers, as opposed to write caches), there is no way to probe the structure, and therefore its contents must be emptied to memory before the request is handled. Thus, any cache miss results in a write buffer dumping its entire contents to the backing store. Needless to say, this is expensive, and so many systems use tagged buffers, or write caches, which must be emptied to the backing store only when they become overfull.

However, back to the point: on any request to the backing store, all associated write caches must be considered logically part of the backing store, and thus all must be probed for the requested data. This can be avoided to some extent by the use of directories (see the cache-coherence protocols in Section 4.3.2). But, at the very least, the requesting processor usually checks its associated write cache for requested data before sending the request to the backing store.
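As an illustration, the following Python sketch models a write-through cache whose backing store is fronted by a small write cache. It is a minimal sketch under stated assumptions, not a description of any particular design: the class names `BackingStore` and `WriteThroughCache`, the `slow_writes` counter, and the choice to drain the entire structure on overflow are all hypothetical. It shows the two properties described above: pending writes take precedence over older data in the backing store, and every request to the backing store probes the write cache first.

```python
from collections import OrderedDict


class BackingStore:
    """Slow store fronted by a small tagged write cache (hypothetical model)."""

    def __init__(self, write_cache_capacity=4):
        self.data = {}                      # contents of the slow store
        self.write_cache = OrderedDict()    # addr -> newest value, oldest first
        self.capacity = write_cache_capacity
        self.slow_writes = 0                # writes that reached the slow store

    def write(self, addr, value):
        # Writes land in the write cache immediately; a repeated address
        # simply overwrites the pending entry.
        self.write_cache[addr] = value
        if len(self.write_cache) > self.capacity:
            self.drain()                    # overfull: empty it, convenient or not

    def read(self, addr):
        # The write cache is logically part of the backing store and holds
        # newer data, so it is probed first and takes precedence.
        if addr in self.write_cache:
            return self.write_cache[addr]
        return self.data.get(addr)

    def drain(self):
        # Retire all pending writes to the slow store, oldest first.
        while self.write_cache:
            addr, value = self.write_cache.popitem(last=False)
            self.data[addr] = value
            self.slow_writes += 1


class WriteThroughCache:
    def __init__(self, store):
        self.store = store
        self.lines = {}

    def write(self, addr, value):
        self.lines[addr] = value
        self.store.write(addr, value)       # every write is forwarded at once

    def read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = self.store.read(addr)
        return self.lines[addr]


store = BackingStore(write_cache_capacity=2)
cache_a = WriteThroughCache(store)
cache_b = WriteThroughCache(store)      # a second client of the same store
cache_a.write(0x10, 1)
cache_a.write(0x10, 2)                  # overwrites the pending entry
print(cache_b.read(0x10))               # 2, served from the write cache
print(store.slow_writes)                # 0, nothing has reached the slow store
```

Note that `cache_b` now holds its own copy of the datum and would not observe a later write by `cache_a`; the sketch deliberately leaves that multi-client problem unsolved, as write-through alone does.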

4.1.2 Delayed Write, Driven By the Cache

This policy delays writing the data to the backing store until later, where “later” is determined by the cache. There are some obvious triggers.

Conflict-Driven Update

In this policy, data written into the cache is written to the backing store when there is a cache conflict with that block; that is, data from a written (“dirty”) block is written to the backing store when another block of data is brought into the cache, displacing the dirty block. This is called the write-back policy.

There are some obvious benefits to using a write-back policy. The main ones are data coalescing and the reduction of write traffic. Oftentimes an entire block of data will be overwritten, which requires multiple write operations because a cache block is usually much larger than the granularity of data that a load/store instruction handles. Coalescing the write data into a single transfer to the backing store is very beneficial. In addition, studies have found that application behavior is such that writes to one location are frequently followed by more writes to the same location. So, if a location is going to be overwritten multiple times, one should not bother sending anything but the final version to the backing store.

Nonetheless, write-back causes problems in a multi-user scenario (e.g., multiprocessors). Sometimes you will want all of those little writes to the same location to be propagated to the rest of the system so that the other processors can see your activity. One can either return to a write-through policy, or one can create additional update scenarios driven by the backing store, i.e., in the case that the data is needed by someone else. This is discussed briefly in the next section and in more detail in Section 4.3.
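The coalescing effect can be made concrete with a small Python sketch of a direct-mapped, conflict-driven write-back cache. The class name `WriteBackCache`, the `block_transfers` counter, and the sizes chosen are assumptions made for illustration only. The point is simply that many stores to one block produce a single transfer to the backing store, and that the transfer happens only when a conflicting block displaces the dirty one.

```python
class WriteBackCache:
    """Direct-mapped write-back cache of whole blocks (hypothetical model)."""

    def __init__(self, backing, num_sets=4, block_size=4):
        self.backing = backing              # dict: block number -> list of words
        self.num_sets = num_sets
        self.block_size = block_size
        self.sets = [None] * num_sets       # each entry: [block_no, words, dirty]
        self.block_transfers = 0            # blocks written to the backing store

    def _lookup(self, addr):
        block_no, offset = divmod(addr, self.block_size)
        index = block_no % self.num_sets
        line = self.sets[index]
        if line is None or line[0] != block_no:
            if line is not None and line[2]:
                # Conflict: the displaced dirty block goes back in one transfer.
                self.backing[line[0]] = list(line[1])
                self.block_transfers += 1
            words = list(self.backing.get(block_no, [0] * self.block_size))
            line = [block_no, words, False]
            self.sets[index] = line
        return line, offset

    def read(self, addr):
        line, offset = self._lookup(addr)
        return line[1][offset]

    def write(self, addr, value):
        line, offset = self._lookup(addr)
        line[1][offset] = value
        line[2] = True                      # mark dirty; no traffic yet


backing = {}
cache = WriteBackCache(backing)
for addr in range(4):
    cache.write(addr, addr + 10)            # four stores fill block 0
cache.write(0, 99)                          # overwrite; still no traffic
cache.read(16)                              # block 4 conflicts with block 0
print(cache.block_transfers, backing[0])    # 1 [99, 11, 12, 13]
```

Five stores result in one block transfer, and only the final value of word 0 ever reaches the backing store. Until the conflict occurs, no other client of the backing store can see any of those writes, which is the multi-user problem noted above.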

Capacity-Driven Update

Note that there exist caches in which the concept of cache conflicts is hazy at best. Many software caches do not implement any organizational structure analogous to cache sets, and waiting to write data to the backing store until the cache is totally full (an event that would be analogous to a cache conflict) may be waiting too late. Such a cache might instead use a capacity-driven update. In this sort of scenario, for example, data could be written to the backing store after a certain threshold amount of data has passed through the cache.

Timer-Driven Update

It should be obvious that cache conflicts or capacity thresholds are not the only trigger events that one might want to have drive data from the cache into the backing store. Another event might be a countdown timer reaching zero: if the goal is to propagate the “final” version of a written datum, the cache could keep track of the time since its last update and write to the backing store once write activity has dropped below a threshold.
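Both the capacity-driven and the timer-driven triggers are easy to express in a software cache. The Python sketch below is one possible arrangement, not a prescribed one: the class name `SoftwareCache`, the use of a count of dirty entries as the capacity threshold, the `quiet_period` parameter, and the explicit `now` argument (which stands in for a real clock so the behavior is deterministic) are all assumptions.

```python
class SoftwareCache:
    """Unstructured software cache with two write-back triggers (a sketch)."""

    def __init__(self, backing, dirty_limit=3, quiet_period=5.0):
        self.backing = backing              # dict standing in for the backing store
        self.entries = {}                   # key -> value
        self.dirty = set()                  # keys written since the last flush
        self.dirty_limit = dirty_limit      # capacity trigger: max dirty entries
        self.quiet_period = quiet_period    # timer trigger: seconds without writes
        self.last_write = None

    def write(self, key, value, now):
        self.entries[key] = value
        self.dirty.add(key)
        self.last_write = now
        if len(self.dirty) >= self.dirty_limit:
            self.flush()                    # capacity-driven update

    def tick(self, now):
        # Called periodically; flushes once write activity has gone quiet.
        if self.dirty and now - self.last_write >= self.quiet_period:
            self.flush()                    # timer-driven update

    def flush(self):
        for key in self.dirty:
            self.backing[key] = self.entries[key]
        self.dirty.clear()


backing = {}
cache = SoftwareCache(backing)
cache.write("a", 1, now=0.0)
cache.write("a", 2, now=1.0)                # restarts the quiet period
cache.tick(now=3.0)                         # only 2 s of quiet: nothing happens
cache.tick(now=6.0)                         # 5 s of quiet: "final" value goes out
print(backing)                              # {'a': 2}
```

A real implementation would more likely measure the threshold in bytes and drive `tick` from a system timer; the structure of the decision is the same.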

Power-Driven Update

An obvious policy is to write data to the backing store when the cost of keeping it in the cache exceeds some threshold. A popular cost metric is power dissipation: if an item is sitting in a solid-state cache, the very act of storing it dissipates power. The transistors dissipate leakage power, and the fact that the data is active means that its tag is checked when the cache is probed (something that applies equally well to software caches).

4.1.3 Delayed Write, Driven by Backing Store

The next obvious policy is for the backing store to decide when the data should be written back. This corresponds to software upcalls, hardware interrupts, coherence messages, etc. The cache holds onto the written data until it can no longer store the data (e.g., for capacity or power dissipation reasons or for other limitations of resources) or until the backing store asks for it.

This is an obvious extension of the write-buffer-write-cache-management policy described earlier, but it is more general than that. In this policy, the backing store serves as the synchronization point for multiple users of data, and it can demand that its client caches update it with the most recent versions of cached, written blocks. The most intuitive use for this is to implement coherence-driven updates (e.g., an incoming request triggers a broadcast or directory-driven request to client caches for the most recent copy of the requested data), but one can also envision other triggers, such as capacity (i.e., the backing store passes a threshold of available free space, particularly applicable to exclusive and non-inclusive caches), timers, or power dissipation.
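A coherence-driven update of this kind can be sketched in a few lines of Python. The sketch assumes a broadcast recall rather than a directory, assumes that at most one client holds a written copy of any given datum, and uses hypothetical names throughout (`SyncStore`, `ClientCache`, `recall`). It is meant only to show the backing store acting as the synchronization point and demanding the most recent copy when a request arrives.

```python
_MISSING = object()     # sentinel: the client holds no written copy


class ClientCache:
    def __init__(self, store):
        self.store = store
        self.dirty = {}                     # addr -> value written, not yet sent
        store.clients.append(self)

    def write(self, addr, value):
        self.dirty[addr] = value            # held locally until asked for

    def recall(self, addr):
        # Upcall from the backing store: surrender the newest copy, if held.
        return self.dirty.pop(addr, _MISSING)

    def read(self, addr):
        if addr in self.dirty:
            return self.dirty[addr]
        return self.store.read(addr, requester=self)


class SyncStore:
    """Backing store acting as the synchronization point (broadcast recall)."""

    def __init__(self):
        self.data = {}
        self.clients = []

    def read(self, addr, requester=None):
        for client in self.clients:
            if client is requester:
                continue
            value = client.recall(addr)
            if value is not _MISSING:
                self.data[addr] = value     # client-held copy is the most recent
        return self.data.get(addr)


store = SyncStore()
a = ClientCache(store)
b = ClientCache(store)
a.write(0x40, 7)
a.write(0x40, 8)            # only the final version will ever be sent
print(store.data)           # {} : nothing has been written back yet
print(b.read(0x40))         # 8, recalled from a on demand
print(store.data)           # {64: 8}
```

Replacing the loop over all clients with a lookup in a per-address owner table would turn the broadcast into a directory-driven request.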

4.2 Consistency with Self

A cache can run into problems if it allows a particular datum to reside at multiple locations within its structure. This is true of multiprocessor caches, wherein the “cache” can be thought of as comprising all processor caches at the same level combined together. It is also true of monolithic caches. We will deal with the multiprocessor cache scenario in the next section; this section focuses on the problem in monolithic caches.

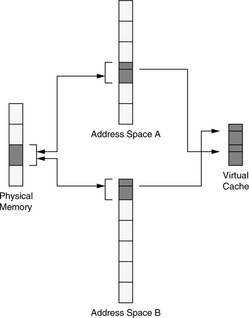

The primary enabler of monolithic caches containing multiple copies of a single datum is naming: to wit, if a datum can have more than one name, and the cache stores data within its extent according to their names, then it is certainly possible for a datum to reside at multiple places within the same cache. Moreover, the primary naming mechanism causing exactly these types of headaches is the virtual memory system and its implementation of shared memory. When these mix with virtual caches, headaches abound. Virtual cache organizations are discussed in “Virtual Addressing and Protection,” Chapter 2, Section 2.4.

4.2.1 Virtual Cache Management

Shared memory causes many headaches for systems designers and developers porting operating systems across microarchitectures. It is beneficial in that it allows two otherwise independent address spaces to overlap at a well-defined intersection, thereby allowing two independent processes to communicate with little to no overhead. However, it also introduces the possibility for a single datum to be placed at multiple locations in a cache, requiring careful cache management to keep data inconsistencies from occurring.

It becomes clear that this feature—shared memory—breaks the cache model of virtual memory. If a single datum is allowed to have several equivalent names, then it is possible for the datum to reside in a cache at multiple locations. This can easily cause inconsistencies, for example, when one writes values to two different locations that map to the same datum. It is for this reason that virtual memory is described as a mapping between two namespaces; one must remember this when dealing with virtual caches. As long as there is a one-to-one mapping between data and names, no inconsistencies can occur, and the entire virtual memory mechanism behaves no differently than a traditional cache hierarchy. Thus, virtual caches can be used without fear of data inconsistencies. As soon as shared memory is introduced, the simple cache model becomes difficult to maintain, because it is very convenient for an operating system to allow one-to-many namespace mappings. However, as we will see in later chapters, there are many tricks one can play to keep the cache model and still support shared memory.

The Consistency Problem of Virtual Caches

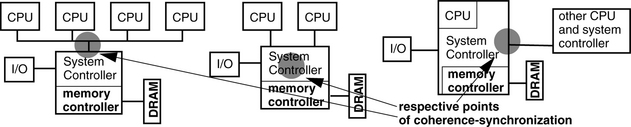

A virtually indexed cache allows the processor to use the untranslated virtual address as an index. This removes the TLB from the critical path, allowing shorter cycle times and/or a reduced number of pipeline stages. However, it introduces the possibility of data-consistency problems occurring when two processes write to the same physical location through different virtual addresses; if the pages align differently in the cache, erroneous results can occur. This is called the virtual cache synonym problem [Goodman 1987]. The problem is illustrated in Figure 4.2; a shared physical page maps to different locations in two different process-address spaces. The virtual cache is larger than a page, so the pages map to different locations in the virtual cache. As far as the cache is concerned, these are two different pages, not two different views of the same page. Thus, if the two processes write to the same page at the same time, using two different names, then two different values will be found in the cache.

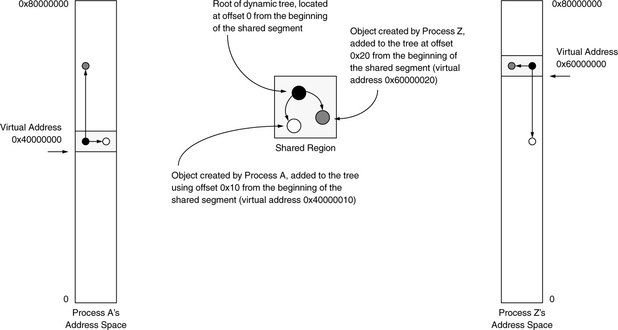

FIGURE 4.2 The synonym problem of virtual caches. If two processes are allowed to map physical pages at arbitrary locations in their virtual-address spaces, inconsistencies can occur in a virtually indexed cache.
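The arithmetic behind the synonym problem can be checked with a short Python sketch. The page size, cache size, and block size below are assumed values chosen for illustration, the cache is simplified to hold one value per block, and address-space identifiers are ignored; `VirtualCache` and `cache_index` are hypothetical names. Two virtual addresses that name the same physical location select different blocks, so the cache ends up holding two values for one datum.

```python
PAGE_SIZE = 4096      # assumed 4 KB pages
CACHE_SIZE = 16384    # assumed 16 KB direct-mapped, virtually indexed cache
BLOCK_SIZE = 32       # assumed 32-byte blocks


def cache_index(vaddr):
    """Block selected by a virtual address in the direct-mapped cache."""
    return (vaddr % CACHE_SIZE) // BLOCK_SIZE


class VirtualCache:
    """Virtually indexed, virtually tagged cache; one value per block."""

    def __init__(self):
        self.blocks = {}                    # index -> (virtual tag, value)

    def write(self, vaddr, value):
        self.blocks[cache_index(vaddr)] = (vaddr // CACHE_SIZE, value)

    def read(self, vaddr):
        entry = self.blocks.get(cache_index(vaddr))
        if entry is not None and entry[0] == vaddr // CACHE_SIZE:
            return entry[1]
        return None                         # miss


# The same physical location, at offset 0x120 within a shared page, is
# mapped at virtual page 4 in one process and virtual page 9 in the other.
va_a = 4 * PAGE_SIZE + 0x120
va_z = 9 * PAGE_SIZE + 0x120

cache = VirtualCache()
cache.write(va_a, "written by A")
cache.write(va_z, "written by Z")
print(cache_index(va_a), cache_index(va_z))     # 9 137
print(cache.read(va_a), "|", cache.read(va_z))  # written by A | written by Z
```

Because the cache is four pages in size, the two low-order bits of the virtual page number take part in the index; virtual pages 4 and 9 differ in those bits, and the two names land in different blocks.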

Hardware Solutions

The synonym problem has been solved in hardware using schemes such as dual tag sets [Goodman 1987] or back-pointers [Wang et al. 1989], but these require complex hardware and control logic that can impede high clock rates. One can also restrict the size of the cache to the page size or, in the case of set-associative caches, similarly restrict the size of each cache bin (the size of the cache divided by its associativity [Kessler & Hill 1992]) to the size of one page. This is illustrated in Figure 4.3; it is the solution used in many desktop processors such as various PowerPC and Pentium designs. The disadvantages are the limitation in cache size and the increased access time of a set-associative cache. For example, the Pentium and PowerPC architectures must increase associativity to increase the size of their on-chip caches, and both architectures have used 8-way set-associative cache designs. Physically tagged caches guarantee consistency within a single cache set, but this only applies when the virtual synonyms map to the same set.

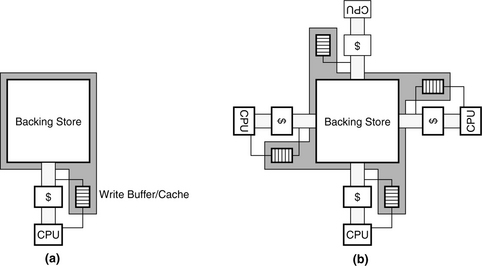

Software Solutions

Wheeler and Bershad describe a state-machine approach to reduce the number of cache flushes required to guarantee consistency [1992]. The mechanism allows a page to be mapped anywhere in an address space, and the operating system maintains correct behavior with respect to cache aliasing. The aliasing problem can also be solved through policy, as shown in Figure 4.4. For example, the SPUR project disallowed virtual aliases altogether [Hill et al. 1986]. Similarly, OS/2 locates all shared segments at the same address in all processes [Deitel 1990]. This reduces the amount of virtual memory available to each process, whether the process uses the shared segments or not. However, it eliminates the aliasing problem entirely and allows pointers to be shared between address spaces. SunOS requires shared pages to be aligned on cache-size boundaries [Hennessy & Patterson 1990], allowing physical pages to be mapped into address spaces at almost any location, but ensuring that virtual aliases align in the cache. Note that the SunOS scheme only solves the problem for direct-mapped virtual caches or set-associative virtual caches with physical tags; shared data can still exist in two different blocks of the same set in an associative, virtually indexed, virtually tagged cache. Single address space operating systems such as Opal [Chase et al. 1992a, 1992b] or Psyche [Scott et al. 1988] solve the problem by eliminating the concept of individual per-process address spaces entirely. Like OS/2, they define a one-to-one correspondence of virtual to physical addresses and in doing so allow pointers to be freely shared across process boundaries.

FIGURE 4.4 Synonym problem solved by operating system policy. OS/2 and the operating system for the SPUR processor guarantee the consistency of shared data by mandating that shared segments map into every process at the same virtual location. SunOS guarantees data consistency by aligning shared pages on cache-size boundaries. The bottom few bits of all virtual page numbers mapped to any given physical page will be identical, and the pages will map to the same location in the cache. Note that this works best with a direct-mapped cache.
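Both the hardware restriction on bin size and the SunOS alignment rule work by making every alias select the same set, which a little index arithmetic confirms. The Python sketch below uses assumed sizes (4 KB pages, 32-byte blocks, a 16 KB direct-mapped cache, and a 32 KB 8-way cache); `set_index` and `aligned_alias` are hypothetical helpers. Selecting the same set is sufficient only when the tags are physical or the cache is direct-mapped, as noted above.

```python
PAGE_SIZE = 4096      # assumed 4 KB pages


def set_index(vaddr, cache_size, block_size=32, ways=1):
    """Set selected by a virtual address; the bin size is cache_size / ways."""
    bin_size = cache_size // ways
    return (vaddr % bin_size) // block_size


def aligned_alias(vaddr, cache_size, slot):
    """Another virtual address for the same data, displaced by a multiple
    of the cache size (alignment on cache-size boundaries)."""
    return vaddr + slot * cache_size


# Unconstrained aliases: the same page offset at virtual pages 4 and 9.
va_a = 4 * PAGE_SIZE + 0x120
va_z = 9 * PAGE_SIZE + 0x120

# 16 KB direct-mapped cache: the bin is larger than a page, aliases diverge.
print(set_index(va_a, 16384), set_index(va_z, 16384))                  # 9 137

# 32 KB, 8-way cache: the bin equals the page size, aliases always agree.
print(set_index(va_a, 32768, ways=8), set_index(va_z, 32768, ways=8))  # 9 9

# Alignment policy: aliases differ by a multiple of the cache size.
va_s = aligned_alias(va_a, 16384, slot=3)
print(set_index(va_a, 16384), set_index(va_s, 16384))                  # 9 9
```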

Combined Solutions

Note that it is possible, using a segmented hardware architecture and an appropriate software organization, to solve the aliasing problem. The discussion is relatively long, so we have placed it in Chapter 31, Section 31.1.7, “Perspective: Segmented Addressing Solves the Synonym Problem.”

An important item to note regarding aliasing and set-associative caches is that set associativity is usually a transparent mechanism (the client is not usually aware of it), and the cache is expected to guarantee that the implementation of set associativity does not break any models. Thus, a set-associative cache cannot use virtual tags unless the set associativity is exposed to the client. If virtual tags are used by the cache, the cache has no way of identifying aliases to the same physical block, and so the cache cannot guarantee that a block will be unique within a set—two different references to the same block, using different virtual addresses, may result in the block being homed in two different blocks within the same set.

Perspective on Aliasing

Virtual-address aliasing is a necessary evil. It is useful, yet it breaks many simple models. Its usefulness outweighs its problems. Therefore, future memory-management systems must continue to support it.

Virtual-Address Aliasing Is Necessary

Most of the software solutions for the virtual cache synonym problem address the consistency problem by limiting the choices where a process can map a physical page in its virtual space. In some cases, the number of choices is reduced to one; the page is mapped at one globally unique location or it is not mapped at all. While disallowing virtual aliases would seem to be a simple and elegant way to solve the virtual-cache-consistency problem, it creates another headache for operating systems—virtual fragmentation.

When a global shared region is garbage-collected, the region cannot help but become fragmented. This is a problem because whereas de-fragmentation (compaction) of disk space or physically addressed memory is as simple as relocating pages or blocks, virtually addressed regions cannot be easily relocated. They are location-dependent; all pointers referencing the locations must also be changed. This is not a trivial task, and it is not clear that it can be done at all. Thus, a system that forces all processes to use the same virtual address for the same physical data will have a fragmented shared region that cannot be de-fragmented without enormous effort. Depending on the amount of sharing, this could mean a monotonically increasing shared region, which would be inimical to a 24 × 7 environment, i.e., one that is intended to be operative 24 hours a day, 7 days a week. Large address SASOS implementations on 64-bit machines avoid this problem by using a global shared region that is so enormous it would take a very long time to become overrun by fragmentation. Other systems [Druschel & Peterson 1993, Garrett et al. 1993] avoid the problem by dividing a fixed-size shared region into uniform sections and/or turning down requests for more shared memory if all sections are in use.

Virtual-Address Aliasing Is Detrimental

There are two issues associated with global addresses. One is that they eliminate virtual synonyms, and the other is that they allow shared pointers. If a system requires global addressing, then shared regions run the risk of fragmentation, but applications are allowed to place self-referential pointers in the shared regions without having to swizzle [Moss 1992] between address spaces. However, as suggested above, this requirement is too rigid; shared memory should be linked into address spaces at any (page-aligned) address, even though allowing virtual aliasing can reduce the ability to store pointers in the shared regions.

Figure 4.5 illustrates the problem: processes A and Z use different names for the shared data, and using each other’s pointers leads to confusion. This problem arises because the operating system was allowed or even instructed by the processes to place the shared region at different virtual addresses within each of the two address spaces. Using different addresses is not problematic until processes attempt to share pointers that reference data within the shared region. In this example, the shared region contains a binary tree that uses self-referential pointers that are not consistent because the shared region is located at different virtual addresses in each address space.

It is clear that unless processes use the same virtual address for the same data, there is little the operating system can do besides swizzle the pointers or force applications to use base+offset addressing schemes in shared regions. Nonetheless, we have come to expect support for virtual aliasing. Therefore, it is a requirement that a system support it.
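The base+offset alternative can be illustrated with a small Python sketch in which a `bytearray` stands in for the shared region and the tree nodes hold offsets from the start of the region rather than absolute addresses. The node layout, the `NIL` marker, and the two mapping addresses are assumptions made for the example. Because an offset means the same thing wherever the region is mapped, the tree can be walked from either address space, whereas an absolute pointer produced by one process is meaningless to the other.

```python
import struct

NODE = struct.Struct("<iII")    # value, left-child offset, right-child offset
NIL = 0xFFFFFFFF                # offset value meaning "no child"


def put_node(region, offset, value, left=NIL, right=NIL):
    """Store one tree node at the given offset within the shared region."""
    NODE.pack_into(region, offset, value, left, right)


def in_order(region, offset):
    """Walk the tree using offsets relative to the start of the region."""
    if offset == NIL:
        return []
    value, left, right = NODE.unpack_from(region, offset)
    return in_order(region, left) + [value] + in_order(region, right)


region = bytearray(64)                      # stands in for the shared segment
put_node(region, 0, 20, left=12, right=24)  # root, with two children
put_node(region, 12, 10)
put_node(region, 24, 30)

base_a, base_z = 0x40000000, 0x70000000     # assumed mapping addresses
print(in_order(region, 0))                  # [10, 20, 30] in either process

abs_ptr_from_a = base_a + 12                # A's absolute pointer to a child
print(0 <= abs_ptr_from_a - base_z < len(region))   # False: outside Z's view
```

The cost is that every dereference inside the shared region requires an addition of the local base address, which is the price of avoiding swizzling.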

Virtual Caches and the Protection Problem

A virtual cache allows the TLB to be probed in parallel with the cache access or to be probed only on a cache miss. The TLB traditionally contains page protection information. However, if the TLB probe occurs only on a cache miss, protection bits must be stored in the cache on a per-block basis, or else protection is effectively being ignored. When the protection bits for a page are replicated across several cache lines, changing the page’s protection is non-trivial. Obvious mechanisms include flushing the entire cache on protection modification or sweeping through the cache and modifying the appropriate lines. The operating system changes page protections to implement such features as copy-on-write, to reduce copy overhead when a process forks, or simply to share memory safely between itself and user processes.

A similar problem happens when a process terminates. If the cache is write-back, it is possible for a stale portion of a process’s address space to remain in the cache while the physical page is remapped into a new address space. When the stale portion is written back, it overwrites the data in the new address space. Obvious solutions include invalidating the entire cache or selected portions. These types of problems are often discovered when porting operating systems to architectures with virtual caches, such as putting Mach or Chorus on the PA-RISC.

4.2.2 ASID Management

As described earlier, ASIDs are a non-trivial hardware resource that the operating system must manage on several different levels. To begin with, an operating system can maintain hundreds or thousands of active processes simultaneously, and in the course of an afternoon, it can sweep through many times that number. Each one of these processes will likely be given a unique identifier, because operating systems typically use 32-bit or 64-bit numbers to identify processes. In contrast, many hardware architectures only recognize tens to hundreds of different processes [Jacob & Mudge 1998b]: the MIPS R2000/3000 has a 6-bit ASID, the MIPS R10000 has an 8-bit ASID, and the Alpha 21164 has a 7-bit ASID. The implication is that there cannot be a one-to-one mapping of process IDs to ASIDs, and therefore an operating system must manage a many-to-many environment. In other words, the operating system will be forced to perform frequent remapping of address-space IDs to process IDs, with TLB and cache flushes required on every remap (though, depending on implementation, these flushes could be specific to the ASID involved and not a wholesale flush of the entire TLB and/or cache). Architectures that use IBM 801-style segmentation [Chang & Mergen 1988] and/or larger ASIDs tend to have an easier time of this.
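The remapping burden can be seen in a toy allocator, sketched here in Python. The round-robin recycling policy, the class name `AsidAllocator`, and the `flushes` log are assumptions made for illustration and are not taken from any particular operating system. The sketch records an ASID-specific flush each time an ASID is taken from one process and handed to another.

```python
class AsidAllocator:
    """Maps many process IDs onto a small pool of hardware ASIDs (a sketch)."""

    def __init__(self, asid_bits=6):
        self.num_asids = 1 << asid_bits     # e.g., 64 for a 6-bit ASID
        self.pid_of = {}                    # asid -> pid currently bound
        self.asid_of = {}                   # pid -> asid
        self.next_victim = 0                # round-robin recycling pointer
        self.flushes = []                   # ASIDs whose entries were flushed

    def asid_for(self, pid):
        if pid in self.asid_of:
            return self.asid_of[pid]        # still bound: no flush needed
        if len(self.pid_of) < self.num_asids:
            asid = len(self.pid_of)         # a never-used ASID is available
        else:
            asid = self.next_victim         # recycle one, round-robin
            self.next_victim = (asid + 1) % self.num_asids
            del self.asid_of[self.pid_of[asid]]
            self.flushes.append(asid)       # stale TLB/cache entries must go
        self.pid_of[asid] = pid
        self.asid_of[pid] = asid
        return asid


alloc = AsidAllocator(asid_bits=2)          # only four ASIDs, to force reuse
print([alloc.asid_for(pid) for pid in (100, 101, 102, 103)])   # [0, 1, 2, 3]
print(alloc.asid_for(104), alloc.flushes)   # 0 [0] : process 100 lost its ASID
print(alloc.asid_for(100), alloc.flushes)   # 1 [0, 1] : and takes 101's in turn
```

With only four ASIDs and five runnable processes, every return to a displaced process costs a flush, which is why a wider ASID field eases the problem.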

ASIDs also complicate shared memory. The use of ASIDs for address space protection makes sharing difficult, requiring multiple page table and TLB entries for different aliases to the same physical page. Khalidi and Talluri describe the problem:

Each alias traditionally requires separate page table and translation lookaside buffer (TLB) entries that contain identical translation information. In systems with many aliases, this results in significant memory demand for storing page tables and unnecessary TLB misses on context switches. [Addressing these problems] reduces the number of user TLB misses by up to 50% in a 256-entry fully-associative TLB and a 4096-entry level-two TLB. The memory used to store hashed page tables is dramatically reduced by requiring a single page table entry instead of separate page table entries for hundreds of aliases to a physical page, [using] 97% less memory. [Khalidi & Talluri 1995]

Since ASIDs identify virtual pages with the processes that own them, mapping information necessarily includes an ASID. However, this ensures that for every shared page there are multiple entries in the page tables, since each differs by at least the ASID. This redundant mapping information requires more space in the page tables, and it floods the TLB with superfluous entries. For instance, if the average number of mappings per page were two, the effective size of the TLB would be cut in half. In fact, Khalidi and Talluri [1995] report the average number of mappings per page on an idle system to be 2.3, and they report a decrease by 50% of TLB misses when the superfluous-PTE problem is eliminated. A scheme that addresses this problem can reduce TLB contention as well as physical memory requirements.

The problem can be solved by a global bit in the TLB entry, which identifies a virtual page as belonging to no ASID in particular; therefore, every ASID will successfully match. This is the MIPS solution to the problem; it reduces the number of TLB entries required to map a shared page to exactly one, but the scheme introduces additional problems. The use of a global bit essentially circumvents the protection mechanism and thus requires flushing the TLB of shared entries on context switch, as the shared regions are otherwise left unprotected. Moreover, it does not allow a shared page to be mapped at different virtual addresses or with different protections. Using a global-bit mechanism is clearly unsuitable for supporting sharing if shared memory is to be used often.
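The matching rule, and the protection hole it opens, can be shown with a brief Python sketch. The `TlbEntry` fields and the `tlb_lookup` function are hypothetical and model only the ASID comparison, not protection bits or replacement. A single global entry serves every process that shares the page, but it equally serves processes that were never meant to see it.

```python
from dataclasses import dataclass


@dataclass
class TlbEntry:
    vpn: int                    # virtual page number
    asid: int                   # owner; ignored when global_bit is set
    pfn: int                    # physical frame number
    global_bit: bool = False


def tlb_lookup(tlb, vpn, current_asid):
    """Return the PFN on a hit, or None on a miss."""
    for entry in tlb:
        if entry.vpn == vpn and (entry.global_bit or entry.asid == current_asid):
            return entry.pfn
    return None


tlb = [
    TlbEntry(vpn=0x10, asid=3, pfn=0x80),                   # private to ASID 3
    TlbEntry(vpn=0x20, asid=0, pfn=0x90, global_bit=True),  # shared page
]

print(tlb_lookup(tlb, 0x10, current_asid=3))    # 128 : owner hits
print(tlb_lookup(tlb, 0x10, current_asid=5))    # None : other processes miss
print(tlb_lookup(tlb, 0x20, current_asid=3))    # 144 : one entry serves sharers
print(tlb_lookup(tlb, 0x20, current_asid=7))    # 144 : and everyone else too
```

The last lookup is the reason the shared entries must be flushed on a context switch: nothing in the entry distinguishes a legitimate sharer from any other process.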

If we eliminate the TLB, then the ASID, or something equivalent to distinguish between different contexts, will be required in the cache line. The use of ASIDs for protection causes the same problem, but in a new setting. Now, if two processes share the same region of data, the data will be tagged by one ASID, and if the wrong process tries to access the data that is in the cache, it will see an apparent cache miss simply because the data is tagged by the ASID of the other process. Again, using a global bit to mark shared cache lines leaves them unprotected against other processes, and so the cache lines must be flushed on context switch. This is potentially much more expensive than flushing mappings from the TLB, because the granularity for flushing the cache is usually a cache line, requiring many operations to flush an entire page.

4.3 Consistency with Other Clients

When the concept of caching is introduced into a multi-client or multiprocessor system, the consistency of the memory system should not change—the mere existence of caches should not change the value returned by a client’s request.

Or, at least, that is the way things started. However, while it is certainly possible to build mechanisms that ensure this happens (to wit, to ensure that a distributed system of caches and memories behaves like a monolithic memory system), and while systems are quite capable of doing it, the performance cost of doing so can be prohibitively expensive as compared to the performance one can get were one to relax the constraints a little. Consequently, different memory-consistency models have been proffered wherein the constraints are progressively relaxed—these are contracts with the memory system that provide looser guarantees on ordering but higher potential performance. In such scenarios, just as in a lossy data network, for example, the programmer can always ensure the desired behavior by using higher level synchronization protocols. For example, in a lossy network (i.e., any realistic network), one can implement a reliable transmission service by creating a handshaking protocol that notifies a sender of a packet’s delivery and generates a retransmission in the event that no acknowledgment arrives within a given time period. Similar mechanisms can be used to make a relaxed consistency model behave like a tighter model for when the programmer needs such guarantees.

4.3.1 Motivation, Explanation, Intuition

To make the concepts more clear, we present the types of problems that can occur, give some intuition for the range of solutions, and then discuss specific implementation details.

Scenario One

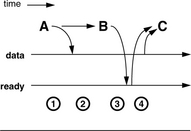

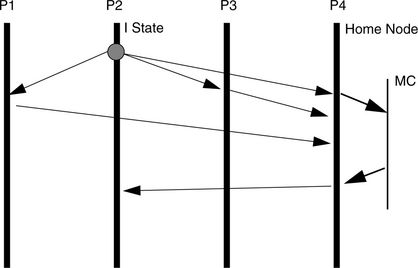

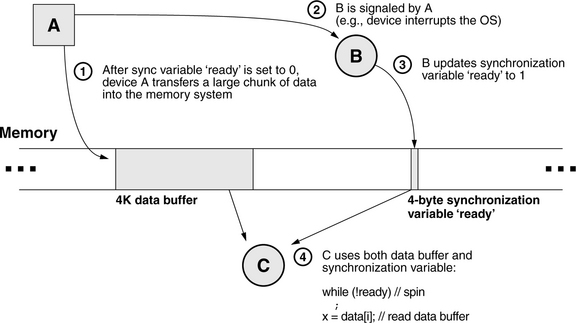

Figure 4.6 illustrates an example scenario.

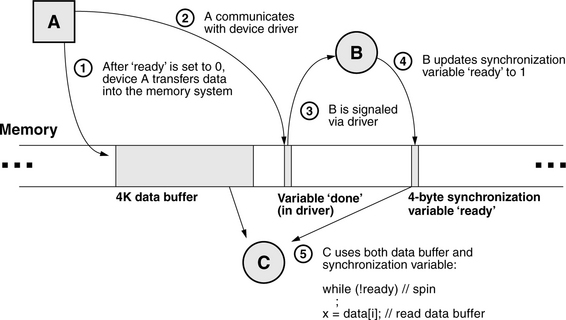

FIGURE 4.6 Race condition example. At time 1, the synchronization variable “ready” is initialized to 0. Meanwhile, hardware device A begins to transfer data into the memory system. When this transfer is complete, A sends B a message, whereupon B updates the synchronization variable. Meanwhile, another software process, C, has been spinning on the synchronization variable ready waiting for it to become non-zero. When it sees the update, it reads the data buffer.

• Hardware device A transfers a block of data into memory (perhaps via a DMA [direct memory access] controller).

• Software process B is A’s controller. It is a user-level process communicating with device A via a control link such as a serial port.

• Software process C is a consumer of the data and waits for B’s signal that the data is ready.

Though the scenario is contrived to have a (potential) race condition buried within it, this general structure is representative of many embedded systems in which software processes interact with hardware devices. The specifics of the scenario are as follows:

1. B communicates to client processes via a synchronization variable called “ready” that indicates when a new block of data is valid in the memory system. At time 1, B sets this variable to the value 0 and initiates the transfer of data from hardware device A to the memory system.

2. When the data transfer is complete, the hardware device signals the controlling software process via some channel such as a device interrupt through the operating system, or perhaps the controlling software must continuously poll the hardware device. Whatever the implementation, process B is aware that the data transfer has finished at time 2.

3. At time 3, B updates the synchronization variable to reflect the state of the data buffer. It sets the variable to the value 1.

4. Meanwhile, process C has been spinning on the synchronization variable, waiting for the data to become available. A simple code block for the client process C could be the following:
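A minimal sketch of such a client loop, written in Python for concreteness. The names `shared`, `process_c`, and `process_b` are illustrative assumptions, and `process_b` stands in for both B and A's transfer so that the example runs end to end. The sketch relies on the interpreter applying each thread's writes in program order, which is exactly the assumption that becomes questionable in a distributed memory system.

```python
import threading
import time

shared = {"ready": 0, "data": None}   # stands in for the memory system
consumed = []

def process_c(rounds):
    """Client C: spin on ready, read the buffer, then reset ready to 0."""
    for _ in range(rounds):
        while not shared["ready"]:    # the spin loop: while (!ready)
            time.sleep(0)             # yield so the other thread can run
        consumed.append(shared["data"])
        shared["ready"] = 0           # C owns the 1 -> 0 transition

def process_b(rounds):
    """Server B: wait for ready == 0, refill the buffer, then set ready to 1."""
    for n in range(rounds):
        while shared["ready"]:
            time.sleep(0)
        shared["data"] = "block-%d" % n   # stands in for A's transfer
        shared["ready"] = 1               # B owns the 0 -> 1 transition

c = threading.Thread(target=process_c, args=(3,))
b = threading.Thread(target=process_b, args=(3,))
c.start(); b.start()
c.join(); b.join()
print(consumed)   # ['block-0', 'block-1', 'block-2']
```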

When the synchronization variable indicates that the data is in memory, the client process starts reading it. When it finishes, the process begins again. For example, the server process B could be responsible for initializing the variable ready at the outset, starting up C, and, from then on, B spins on ready to become 0, initiates a new data transfer, sets ready to 1, and spins again—in the steady state, B is responsible only for the 0 -> 1 transition, and C is responsible only for the 1 ->0 transition.

The timing of events is shown in Figure 4.7. In a simple uniprocessor system, the example has very straightforward behavior; the memory system enforces sequentiality of memory references by definition; the single processor enforces sequentiality of task execution by definition; and the only thing that could cause a problem is if the software process that does the transferring of data from A into the memory system (e.g., perhaps a device driver within the operating system) takes too long to transfer the data from its own protected buffer space into the user-readable target buffer.

In a simple uniprocessor system, causality is preserved because the system can only do one thing at a time: execute one instruction, handle one memory request. However, once this no longer holds, e.g., if this example is run on a multiprocessor or networked multicomputer, then all bets are off. Say, for example, that the memory system is distributed across many subsystems and that each of the processes (B, C, and the data transfer for A) is running on a different subsystem. The question arises in such a scenario: does C get the correct data? This is a perfectly reasonable question to ask. Which happens first, the data buffer being available or the synchronization variable being available? In a complex system, the answer is not clear.

An Analogy: Distributed Systems Design

Let’s first explore why arbitrary ordering of memory requests might be a good thing. We have put forth a scenario in which arbitrary ordering in the memory system could create a race condition. This happens because our scenario exhibits a causal relationship between different, seemingly unrelated, memory locations, but note that not all applications do. Moreover, while consistency models exist that make a multiprocessor behave like a uniprocessor, their implementations usually come at the price of lower achievable performance. A better scheme may be to relax one’s expectations of the memory system and instead enforce ordering between events explicitly, when and where one knows causal relationships to exist.

The same concepts apply in network-systems design, and the issues can be more intuitive when couched in network terms. In particular, the following analogy gives implementation details that apply not just to distributed systems, but also to cache-coherence implementations, both hardware and software. Those already familiar with the issues might want to skip ahead, as the analogy is long and intended for readers who have not really thought about the issues of timing and causality in a networked system.

Imagine the development of a distributed system, a set of software processes on a network that provide some function and that use the network for communication to coordinate their behavior. The details of the high-level algorithm (the function that the set of processes provides in their cooperative fashion) do not matter for this discussion. At the outset of the system design, the designer has a wide range of choices to make regarding his assumptions of the network. For instance,

• Is delivery of a message guaranteed? In particular, if a process places a message packet onto the network, does the network guarantee that the intended recipient will receive that packet? Can the recipient receive the packet more than once? Can the network lose the packet? If yes, is any process on the network alerted to the loss?

• Is the delivery time of a message bounded? A network, even if it does not guarantee delivery of a packet, may be able to guarantee that, if the packet is delivered, it will be delivered within a certain window of time; otherwise, the packet will not be delivered even if not lost.

• Is the ordering of messages on the network preserved? If a process places a series of packets on the network, will they be delivered in that same serial order?

Depending on the assumptions of how the network behaves, the developer will choose to code the program very differently.

• If a message is guaranteed to be delivered exactly once (not 0 times, not 2 or more times), the delivery time is bounded, and the network is guaranteed to preserve the ordering of messages, then the code can look very much like that of a functional programming model written for a sequential processor. In such a scenario, processes can send messages as if they are executing functions, and, in many cases, this is exactly how distributed systems are built from originally monolithic software implementations: by dividing work between processes at the function-call boundary. This is the nature of the remote procedure call (RPC) paradigm. It makes for a very simple programming model, because neither the designer nor the programmer need concern himself with details of the underlying medium.

• What if it is possible for the network to deliver a given message multiple times? For example, what if a packet placed on the network can be delivered to the recipient twice? If such an event would make no difference whatsoever to the functional correctness of the program, then this is not a big deal. However, if it can cause problems (e.g., imagine banking software receiving a credit/debit message for a particular account more than once), then the software must expect such a situation to occur and provide a mechanism to handle it. For example, the software could add to every outgoing packet a message number that is unique² within the system, and a recipient discards any packet containing a message number that it has already seen.

• What if message ordering is not guaranteed? For example, what if a series of packets sent in a particular order arrive out of order at the destination? If such an event can cause problems, then the software must expect such a situation to occur and provide a mechanism to handle it. For example, the software could build into its message-handling facility a buffering/sequencing mechanism that examines incoming messages, delivering them up to the higher level if the sequence number is consecutive from the last packet received from that sender or buffering (and rearranging) the message until the packet/s with the intervening sequence number/s arrive. If the delivery time of a message is bounded, then the handler need only wait for that threshold of time. If the intervening sequence numbers are not seen by then, the handling software can assume that the sender sent packets with those sequence numbers to different recipients, and thus, there is no gap in the sequence as far as this recipient is concerned (the gap is not an indication of functional incorrectness).

• But what if delivery time is not bounded? Well, then things get a bit more interesting. Note that this is functionally (realistically) equivalent to the case that delivery is not guaranteed, i.e., a packet sent to a destination may or may not reach the destination. As before, if this poses any problem, the software must expect it and handle it. For instance, the recipient, upon detecting a gap in the sequence, could initiate a message to the sender asking for clarification (i.e., did I miss something?), resulting in a retransmission of the intervening message/s if appropriate. However, the receiver in a distributed system is often a server, and, in general, it is a bad idea to allow servers to get into situations where they are blocking on something else, so this particular problem might best be handled by a sender-/client-side retry mechanism. To wit, build into the message-passing facility an explicit send-acknowledge protocol: every message sent expects a simple acknowledgment message in return from the recipient. If a sender fails to receive an acknowledgment in a timely fashion, the sender assumes that the message failed to reach the destination, and the sender initiates a retry. Separate sequence numbers can be maintained per recipient so that gaps seen by a recipient are an indication of a problem.
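The receiver-side workarounds in the list above (discarding duplicates and re-sequencing out-of-order arrivals) can be sketched together. This is a minimal illustration under stated assumptions: the `Receiver` class and its fields are hypothetical, and each sender is assumed to number its packets to this recipient consecutively from 0.

```python
class Receiver:
    """Delivers each sender's messages exactly once and in sequence order."""

    def __init__(self):
        self.next_seq = {}    # sender -> next sequence number expected
        self.pending = {}     # sender -> {seq: payload} held out of order
        self.delivered = []   # what the higher level actually sees

    def on_packet(self, sender, seq, payload):
        expected = self.next_seq.get(sender, 0)
        held = self.pending.setdefault(sender, {})
        if seq < expected or seq in held:
            return                      # duplicate: already seen, discard
        held[seq] = payload             # buffer until the gap is filled
        while expected in held:         # release any consecutive run
            self.delivered.append((sender, held.pop(expected)))
            expected += 1
        self.next_seq[sender] = expected


rx = Receiver()
for seq, payload in [(1, "b"), (0, "a"), (1, "b"), (3, "d"), (2, "c"), (0, "a")]:
    rx.on_packet("S", seq, payload)
print([p for _, p in rx.delivered])   # ['a', 'b', 'c', 'd']
```

The sketch omits the timeout that a real handler would need when delivery time is bounded; it simply holds out-of-order packets until the gap is filled.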

A designer always builds into a system explicit mechanisms to handle or counter any nonideal characteristics of the medium.

A designer that assumes the medium to be ideal needs no such mechanisms, and his code can look virtually identical to its form written for a single processor (no network). The less ideal a designer assumes the medium, the more workarounds his program must include.

In Particular, Cache Systems

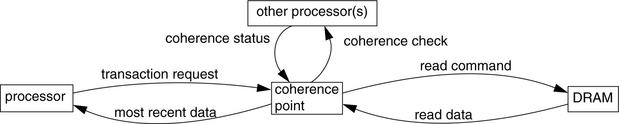

How does the analogy apply here? It turns out that writing software to deal with a distributed cache system (e.g., a multiprocessor cache system, a web-document proxy cache, etc.) is very much like writing for a network. In particular, the memory-consistency model describes and codifies one’s assumptions of the medium; it describes a designer’s understanding of how the cache and memory systems behave, both independently of each other and interactively. The memory-consistency model represents an understanding of how the memory system will behave, on top of which a designer creates algorithms; it is a contract between programmer and memory system.

It is reasonable to ask why, in the network case, anyone would ever choose to write the complicated software that makes very few assumptions about the behavior of the network. The answer is that such systems always represent trade-offs. In general, there is no free lunch, and complexity will be found somewhere, either in the infrastructure (in the network), in the user of the infrastructure (the distributed-systems software), or somehow spread across the two. The level of guarantee that an infrastructure chooses to provide to its clientele is often a case of diminishing returns: an infrastructure stops providing guarantees once its implementation reaches a level of complexity where the next improvement in service would come at a prohibitive cost. Thus, as an example, the Internet Protocol (IP) provides message delivery, but not reliable message delivery, and in cases where a designer wants or needs reliable message delivery, the facility is built on top of the base infrastructure (e.g., TCP/IP, reliable datagrams, etc.).

The same is true in cache systems. It is very simple to write programs for a uniprocessor that has a single cache, and it is enticing to think that it should be no harder to write programs for multiprocessors that have many caches. In fact, many modern multiprocessor cache systems provide exactly that illusion: an extremely simple memory-consistency model and associated cache-coherence mechanism that support the illusion of simplicity by forcing a large number of distributed hardware caches to behave as one.

As one can imagine, such a system, lying as it does at one end of the complexity trade-off continuum, might not give the best performance. Thus, there are many different consistency models from which to choose, each representing a level of service guarantee less stringent than the preceding one, a hardware system that is less costly to build, and the potential for greater performance during those windows of time during which the software does not need the illusion of a single memory port.

The following are some example issues to note about the implementation, once the cache system becomes complex and/or distributed about a network:

• Suppose it is possible for writes to be buffered (not propagated directly to the backing store) and for reads to be satisfied from nearby caches. Such is the case with web proxies or multiprocessor caches. Such a system can have multiple cached copies of a given item, each with a different value, and if no effort is made to reconcile the various different versions with one another, problems can arise.

• Suppose that, in an implementation, the cache’s backing store is not a monolithic entity, but rather a collection of storage structures, each with its own port. This is the case for NUMA multiprocessor organizations, a popular design choice today. If data migration is possible, i.e., if it is possible for a given datum to reside in two places in the distributed backing store, then one can envision scenarios in which two writes to the same datum occur in different orders, depending on which segment of the backing store handles the requests.

• Whatever mechanism is used to ensure consistency between different caches and different users/processors must also be aware of the I/O system, so that I/O requests behave just like “normal” memory requests. The reason for this will become clear in a moment.

Note that this is by no means a complete list of issues. It is just given to put the problem into perspective.
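The first issue in the list, multiple cached copies with different values, is easy to reproduce in a toy model. The sketch below assumes a hypothetical `WriteBackCache` class with no coherence mechanism at all; it is meant only to show how buffered writes and local read hits let copies diverge.

```python
class WriteBackCache:
    """Toy cache: reads hit locally when possible; writes stay local until flushed."""

    def __init__(self, backing):
        self.backing = backing
        self.lines = {}       # address -> locally cached value
        self.dirty = set()

    def read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = self.backing[addr]   # miss: fetch a copy
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value    # buffered: backing store not updated
        self.dirty.add(addr)

    def flush(self):
        for addr in self.dirty:
            self.backing[addr] = self.lines[addr]
        self.dirty.clear()


backing = {"x": 0}
c1, c2 = WriteBackCache(backing), WriteBackCache(backing)
c2.read("x")            # c2 now holds a copy of x
c1.write("x", 42)       # c1 updates only its own copy
before = (c1.read("x"), c2.read("x"), backing["x"])
c1.flush()
after = (c1.read("x"), c2.read("x"), backing["x"])
print(before, after)    # (42, 0, 0) (42, 0, 42)
```

Even after the flush, the second cache keeps returning its stale copy, because nothing in this model invalidates or updates it.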

4.3.2 Coherence vs. Consistency

At this point we should formally discuss memory coherence and memory consistency, terms that speak to the ordering behavior of operations on the memory system. We illustrate their definitions within the scope of the race condition example and then discuss them from the perspective of their use in modern multiprocessor systems design.

Memory Coherence: The principle of memory coherence indicates that the memory system behaves rationally. For instance, a value written does not disappear (fail to be read at a later point) unless that value is explicitly overwritten. Write data cannot be buffered indefinitely; any write data must eventually become visible to subsequent reads unless overwritten. Finally, the system must pick an order. If it is decided that write X comes before write Y, then at a later point, the system may not act as if Y came before X.

So, in the race condition example, coherence means that when B updates the variable ready, C will eventually see it. Similarly, C will eventually see the data block written by A. When C updates the variable to 0 at the end of its outer loop, the spin loop immediately following that update [while(!ready)] will read the value 0 until and unless B updates the variable in the meantime.

Memory Consistency: Whereas coherence defines rational behavior, the consistency model indicates how long and in what way/s the system is allowed to behave irrationally with respect to a given set of references. A memory-consistency model indicates how the memory system interleaves read and write accesses, whether they are to the same memory location or not. If two references refer to the same address, their ordering is obviously important. On the other hand, if two references refer to two different addresses, there can be no data dependencies between the two, and it should, in theory, be possible to reorder them as one sees fit. This is how modern DRAM memory controllers operate, for instance. However, as the example in Figure 4.6 shows, though a data dependence may not exist between two variables, a causal dependence may nonetheless exist, and thus it may or may not be safe to reorder the accesses. Therefore, it makes sense to define allowable orderings of references to different addresses.

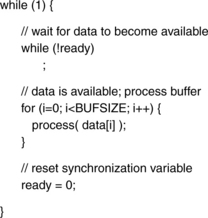

In the race condition example, depending on one’s consistency model, the simple code fragment in which C spins on the variable ready [while(!ready)] and then reads the data buffer may or may not work as one expects. If the model allows arbitrary reordering, then the value of the variable ready may be seen by outside observers (i.e., by process C) before the values written to the data buffer become available, in which case C would get the wrong results.
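The effect of arbitrary reordering can be shown with a small simulation. This is a sketch under stated assumptions: `consumer_view` is a hypothetical helper, and C is assumed (pessimistically) to sample memory immediately after each write becomes visible.

```python
from itertools import permutations

def consumer_view(visible_order):
    """Apply writes in the order they become visible; C samples after each."""
    memory = {"data": "old", "ready": 0}
    for var, value in visible_order:
        memory[var] = value
        if memory["ready"]:           # C's spin loop exits here ...
            return memory["data"]     # ... and C reads the buffer at once
    return None

writes = [("data", "new"), ("ready", 1)]   # issued in this order by A and B
outcomes = {order: consumer_view(order) for order in permutations(writes)}
for order, seen in outcomes.items():
    print([v for v, _ in order], "->", seen)
# ['data', 'ready'] -> new
# ['ready', 'data'] -> old
```

When the write to ready becomes visible first, C leaves its spin loop and reads the old contents of the buffer.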

To the newcomer, these terms have arguably unsatisfying definitions. Despite the clear distinction between the two, there is significant overlap when one goes a bit deeper. Many little items demonstrate a significant grey area at the intersection of the concepts of coherence and consistency. For instance,

• The popular definitions of consistency and coherence differentiate the two with the claim that one (coherence) defines the behavior of reads and writes to the same address, while the other (consistency) defines the behavior of reads and writes to different addresses. However, nearly every such discussion follows its definition of consistency with examples illustrating the behavior of reads and writes to the same memory location.

• Most memory-consistency models do not explicitly mention memory coherence. It is simply assumed to be part of the package. However, one cannot assume this to be the case generally, as several memory-consistency models violate³ implicitly or explicitly the principle of memory coherence.

• The stated primary function of a cache-coherence scheme is to ensure memory coherence, but any caching mechanism also implicitly adheres to a particular consistency model.

As the editors at Microprocessor Report said long ago, and we paraphrase here, “superpipelined is a term that means, as far as we can tell, pipelined.” Similarly, memory consistency is a term that means, as far as we can tell, memory coherence, … within a multi-client context. In many ways, memory consistency behaves like a superset of memory coherence, subsuming the latter concept entirely, and, as far as we can tell, the decision to give the two concepts different names⁴ only serves to confuse newcomers to the field. Consequently, outside of this section of the book, we will avoid using both terms. We will use the term memory consistency to indicate the behavior of the memory system, including the degree to which the memory system ensures memory coherence.

That is the way the terms, arguably, should be used. In particular, in an ideal world, one could argue that the cache-coherence engine (or perhaps rename it to the “cache-consistency engine”) should be responsible for implementing the given consistency model. However, the terms as used today within the computer-architecture community have very specific implementation-related connotations that differ from the ideal and must be explained to the uninitiated (as they were to us). In modern multiprocessor systems the cache-coherence mechanism is responsible for implementing memory coherence within a multi-client, multi-cache organization. Modern microprocessors execute their instructions out of order and can reorder memory requests internally to a significant degree [Jaleel & Jacob 2005]. This creates problems for the cache-coherence mechanism. In particular, when writes may be buffered and/or bypassed locally by other memory operations, it becomes difficult, to the point of impossibility, for the coherence engine to provide tight consistency guarantees (we will discuss this in more detail in Section 4.3.3). As a result, it would seem that all of the high-performance reordering and buffering mechanisms used in modern out-of-order cores—mechanisms that are necessary for good performance—must be thrown away to ensure correct operation.

To address this problem, researchers and developers propose relaxed consistency models that tolerate certain classes of irrational memory-system behavior for brief periods of time and thus define different programming models. Most significantly, these models allow the local reordering and buffering that modern processors implement by off-loading onto the shoulders of the programmer the responsibility of ensuring correct behavior when ordering is important. It is important to note that these models are implemented on top of the existing cache-coherence engines, an arrangement implying that the cache-coherence engines provide guarantees that are, perhaps, unnecessarily tight relative to the specific consistency model running on top. The relatively tight guarantees in the coherence engine are really only needed and used for synchronization variables so that fences and such⁵ can be implemented through the memory system and not as message-passing primitives or explicit hooks into the coherence engine. Rather, the processors intentionally disable the reordering and buffering of memory operations in the neighborhood of synchronization activity (releases, acquires) so that memory requests are sent immediately and in process order to the coherence engine, which then provides tight consistency and coherence guarantees for those variables. Once the synchronization activity is over, the reordering and buffering are turned back on, and the processors return to high-performance operation.
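A toy model can illustrate the idea of suspending buffering around a synchronizing write. The sketch below is an assumption-laden simplification, not a description of any real processor: the `Core` class, its `release_store` method, and the "newest first" drain order are all hypothetical, chosen to show the worst case for ordinary buffered writes.

```python
class Core:
    """Toy core whose buffered writes may reach memory in any order."""

    def __init__(self, memory):
        self.memory = memory
        self.buffer = []              # pending (address, value) writes

    def store(self, addr, value):
        self.buffer.append((addr, value))     # ordinary write: buffered

    def drain(self, newest_first=False):
        """Write buffered stores to memory; return a snapshot after each one."""
        pending = self.buffer[::-1] if newest_first else self.buffer[:]
        self.buffer.clear()
        snapshots = []
        for addr, value in pending:
            self.memory[addr] = value
            snapshots.append(dict(self.memory))
        return snapshots

    def release_store(self, addr, value):
        """Synchronizing write: flush earlier writes first, then write through."""
        self.drain()
        self.memory[addr] = value


def run(use_release):
    memory = {"data": "old", "ready": 0}
    core = Core(memory)
    core.store("data", "new")
    if use_release:
        core.release_store("ready", 1)
        snapshots = [dict(memory)]
    else:
        core.store("ready", 1)
        snapshots = core.drain(newest_first=True)   # worst-case reordering
    # An observer that sees ready == 1 must also see the new data.
    return all(s["data"] == "new" for s in snapshots if s["ready"])

print(run(use_release=False), run(use_release=True))   # False True
```

Only the write to the synchronization variable pays the cost of draining the buffer; ordinary writes before and after it remain free to be buffered.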

4.3.3 Memory-Consistency Models

The memory-consistency model defines the ordering of externally visible events (i.e., reads and writes to the memory system: when a read is satisfied and when a write’s data becomes visible to a subsequent request⁶), even if their corresponding requests are satisfied out of the local cache. Within the scope of a memory-consistency model, the cache is considered part of the memory system. In particular, read and write requests are allowed to be reordered to various degrees, as defined by the model in question. The following are some of the most commonly used models for the behavior of the memory system (tenets stolen from Tanenbaum [1995]):

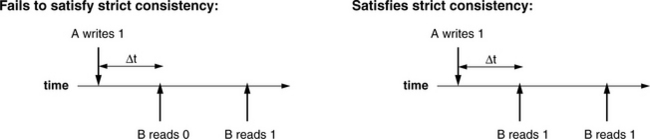

Strict Consistency: A read operation shall return the value written by the most recent store operation.

Sequential Consistency: The result of an execution is the same as a single interleaving of sequential, program-order accesses from different processors.

Processor Consistency: Writes from a process are observed by other clients to be in program order; all clients observe a single interleaving of writes from different processors.

The following sections describe these in more detail.

Strict Consistency

Strict consistency is the model traditionally provided by uniprocessors, and it is the model for which most programs are written. The basic tenet is

A read operation shall return the value written by the most recent store operation.

This is illustrated in Figure 4.8, which demonstrates the inherent problem with the model: it is not realistic in a multiprocessor sense. Nonetheless, the model is intuitively appealing. If any process has written a value to a variable, the next read to that variable will return the value written. The model is unrealistic for multiprocessors in that it fails to account for any communication latency: if A and B are on different processors and must communicate via a bus or network or some realistic channel with non-zero latency, then how does one support such a model? The “most recent store” definition causes non-trivial problems. Suppose B performs a read to the same location a fraction of a second (“Δt” in the figure) after A’s write operation. If the time-of-flight between two processors is longer than the “fraction of a second” timing between the write and following read, then there is no means for B even to know of the write event at the moment its read request is issued. Such a system will not be strictly consistent unless it has hardware or software support⁷ to prevent such race conditions, and such support will most likely degrade the performance of the common case.

FIGURE 4.8 Strict consistency. Each timeline shows a sequence of read/write events to a particular location. For strict consistency to hold, B must read what A wrote to a given memory location, regardless of how little time passes between the events.

As mentioned, a strictly consistent memory system behaves like a uniprocessor memory system, and note that it would, indeed, solve the problem demonstrated in Figure 4.6. Because C’s read to the data buffer cannot happen before A has finished writing the data buffer, a cache-coherence scheme implementing a strict consistency model will ensure that C cannot get old data.

Sequential Consistency

Despite its appeal of simplicity, the strict consistency model is viewed by many as being far too expensive an implementation: one must give up significant performance to obtain the convenient synchronization benefits. The good news is that, with appropriate use of explicit synchronization techniques, one can write perfectly functional code on multiple processors without having to resort to using strict consistency. A slightly relaxed model that still provides many of the guarantees of the strict model is the sequential consistency model [Lamport 1979], which has the following basic tenet:

The result of an execution is the same as a single interleaving of sequential, program-order accesses from different processors.

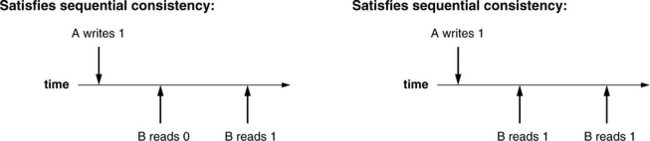

What this says is that accesses on each processor must be in program order (no reordering of memory requests is allowed); accesses from different processors can be interleaved freely, but all processors must see the same interleaving. Note that the interleaving may change from run to run of the program. The model is illustrated in Figure 4.9, which can be compared with Figure 4.8. Here, both sequences are considered valid by the model.

FIGURE 4.9 Sequential consistency. Unlike Figure 4.8, both scenarios are considered valid. Sequential consistency allows an arbitrary delay after which write data becomes available to subsequent reads.

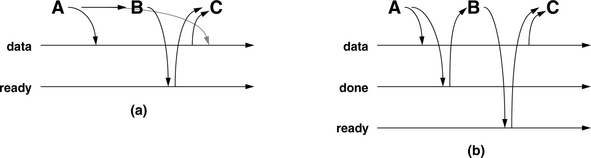

How does the model support the problem in Figure 4.6? At first glance, it would not handle the scenario correctly, which would be a little distressing, but there are more details to consider. In particular, the only realistic way for device A to propagate information to process B is through a device driver, whose invocation would involve a write to the memory system (see Figures 4.10 and 4.11). Figure 4.10 shows the previous example in more detail, including the device driver activity by which A propagates information to B. Figure 4.11 then compares the two scenarios in terms of timelines. Figure 4.11(a) shows the timeline of events as suggested by the original scenario, which would fail to behave as expected in a sequentially consistent memory system: a sequentially consistent model does not require that A’s write to the variable data and B’s write to the variable ready occur in any specific order. In particular, A’s write to data may be seen by the system after B’s write to ready.

FIGURE 4.10 Race condition example, more detail. The previous model ignored (intentionally) the method by which device A communicates to process B: the device driver. Communication with the device driver is through the memory system—memory locations and/or memory-mapped I/O registers.

FIGURE 4.11 Realistic picture of data movement and causality. In (a), information propagates directly from A to B without going through the memory system. Because the memory system does not observe the information, it cannot deduce causality, and thus it is possible for A’s write to data to be delayed until after C’s read of data. In reality, A and B are most likely to communicate through a device driver. Assume that the driver has a variable called “done”; (b) shows the picture of data movement and causality that makes sequential consistency work for this scenario.

However, as mentioned, the picture in Figure 4.6 and its corresponding timeline in Figure 4.11(a) are not realistic. The scenario implies that information propagates from A to B without going through the memory system. In contrast, Figure 4.11(b) paints a more realistic picture of what happens, including the movement of data through the device driver, in particular, through a variable in the driver’s space called done. This scenario will behave as expected under a sequentially consistent memory system. In contrast with the simpler picture, in which the transmission of information from A to B is unobserved by the memory system (and thus a coherence mechanism would be hard pressed to deduce causality between the events), in this picture the memory system observes all of the relevant events. In particular, the following event orderings are seen in a sequentially consistent system:

1. A writes data, followed by A writing done (seen by A and thus seen by all)

2. A writes done, followed by B reading done or some other variable written by the device driver (seen by device driver and B and thus seen by all)

3. B reads done … C reads data (seen by B and C and thus by all)

Because sequential consistency requires a global ordering of events that is seen by all clients of the memory system, any ordering seen by any process becomes the ordering that must be guaranteed by the memory system. Thus, C is guaranteed to read the correct version of the data buffer. Note again that the main reason this occurs is because the communication between A and B is actually through the memory system (either a memory location or memory-mapped I/O), and thus, the communication becomes part of the consistency model’s view of causality.
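The argument can be checked exhaustively with a small model of a sequentially consistent memory: every interleaving that keeps each process in program order is explored, and the values C can read are collected. This is a sketch under stated assumptions. The `outcomes` helper and the op encoding are hypothetical, and a spin loop is modeled as an "await" that can only complete once the variable holds the awaited value.

```python
def outcomes(threads, memory):
    """Explore every interleaving that keeps each thread in program order.

    Ops: ("write", var, value); ("await", var, value), which blocks until the
    variable holds that value (a spin loop); and ("read", var).
    Returns the set of values returned by all "read" ops.
    """
    seen = set()

    def step(pcs, mem):
        for name, ops in threads.items():
            pc = pcs[name]
            if pc == len(ops):
                continue                      # this thread has finished
            op = ops[pc]
            if op[0] == "await" and mem[op[1]] != op[2]:
                continue                      # still spinning
            new_mem = dict(mem)
            if op[0] == "write":
                new_mem[op[1]] = op[2]
            elif op[0] == "read":
                seen.add(mem[op[1]])
            step({**pcs, name: pc + 1}, new_mem)

    step({name: 0 for name in threads}, memory)
    return seen


start = {"data": "old", "done": 0, "ready": 0}
consumer = [("await", "ready", 1), ("read", "data")]

# (a) A tells B out of band, so memory sees no link between data and ready.
unobserved = {"A": [("write", "data", "new")],
              "B": [("write", "ready", 1)],
              "C": consumer}
# (b) A signals B through the driver variable done, inside the memory system.
observed = {"A": [("write", "data", "new"), ("write", "done", 1)],
            "B": [("await", "done", 1), ("write", "ready", 1)],
            "C": consumer}

print(sorted(outcomes(unobserved, start)))   # ['new', 'old']
print(sorted(outcomes(observed, start)))     # ['new']
```

In scenario (a) some legal interleavings let C read the old buffer; in scenario (b) none do, because every step in the causal chain is a memory operation that the model orders.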

Some example issues to note about the implementation of a sequentially consistent memory system are the following:

• All I/O must go through the coherence scheme. Otherwise, the coherence mechanism would miss A’s write to the location done. Either I/O is memory-mapped, and the I/O locations are within the scope of the coherence mechanism, or, if I/O is not memory-mapped and instead uses special I/O primitives in the instruction set, the coherence scheme must be made aware of these special operations.

• The example assumes that A’s write to the buffer data and A’s write to the variable done are either handled by the same thread of control or at least handled on the same processor core. While this is a reasonable assumption given a uniprocessor implementation, it is not a reasonable assumption for a CMP (chip multiprocessor). If the assumption is not true, then event ordering #1 above (A writes data, followed by A writing done) does not hold true. As far as the system is concerned, if these operations are performed on different processor cores, then they are considered to be “simultaneous” and thus unrelated. In this event, they may be placed in arbitrary order by a sequentially consistent memory system.

• Another implication arises from the definition of sequential consistency: for all clients in the system to agree on an ordering, all clients will have to see external events in a timely manner, a fact that impacts the buffering of operations. In particular, write buffering, something long considered absolutely essential for good performance, is typically disallowed altogether in a sequentially consistent system. Modern processor cores have load/store queues into which write instructions deposit their store data. This store data is not propagated to the memory system (i.e., the local caches, which are visible to the coherence engine) until the write instruction commits, often long after the write is initiated. Thus, a write operation can be buffered for a relatively long period of time relative to local read operations, which are usually sent to the local caches as soon as their target addresses are known.

Sequential consistency requires that local interleavings of events be reflected globally. This is unlikely to happen if the rest of the system finds out about a local write operation much later, at which point all other clients will be forced to back out of operations that conflict with the local ordering of events. Consider the following operations on a given client: a write to location A, followed by reads that cause read misses to locations B, C, and D. If the reads to B, C, and D are seen by the rest of the system before the write to A is propagated out (i.e., the write is buffered until just after the read requests go out), an implementation will be hard-pressed to make all other clients in the system behave as if the write preceded the reads, as it did from the perspective of the initial client. One alternative is to make a node’s write buffer visible to the coherence engine, as suggested in the write-through cache illustration (Figure 4.1). Note that this would require opening up a processor core’s load/store queue to the coherence engine, a cost considered prohibitive if the cores are on separate chips. However, the current industry trend toward multiple cores on a single chip may make this scheme viable, at least in providing a locally consistent (chip-wide consistent) cache system.

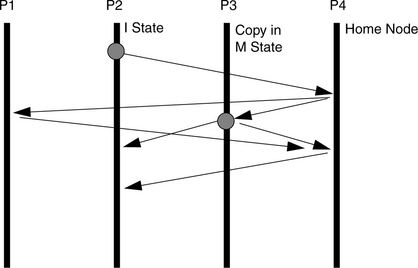

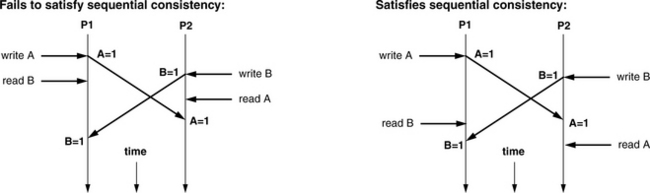

• A similar implication arises from studying Hennessy and Patterson’s example, originally proposed by Goodman [1989], in which a symmetric race condition occurs between two simultaneously executing processes, P1 and P2:

A sequentially consistent memory system will allow 0 or 1 processes to be killed, but not both. For instance, P1 will only try to kill P2 if P1’s read to B occurs before P2’s write to B. By the definition of sequential consistency (which stipulates the in-order execution of memory events), this would imply that P1’s write to A must come before P2’s read of A. The symmetric argument holds equally well.
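The claim that at most one process is killed can be checked by brute force. The following is a minimal sketch, assuming a hypothetical encoding of the example in which each process first writes 1 to its own flag and then reads the other's flag, killing the other process if it still reads 0. The names `P1`, `P2`, `interleavings`, `run`, and `sc_outcomes` are illustrative, not taken from the original listing. Sequential consistency is modeled as a single global order that preserves each program order, executed against one shared memory.

```python
from itertools import combinations

# Hypothetical encoding of the racing-threads example (an assumption):
# each process sets its own flag, then reads the other's flag and
# "kills" the other process if the flag is still 0.
P1 = [("write", "A"), ("read", "B")]
P2 = [("write", "B"), ("read", "A")]


def interleavings(p1, p2):
    """Yield every merge of p1 and p2 that preserves each program order."""
    n = len(p1) + len(p2)
    for slots in combinations(range(n), len(p1)):
        it1, it2 = iter(p1), iter(p2)
        yield [(1, next(it1)) if i in slots else (2, next(it2))
               for i in range(n)]


def run(schedule):
    """Execute one global order against a single shared memory."""
    mem = {"A": 0, "B": 0}
    killed = set()
    for pid, (op, loc) in schedule:
        if op == "write":
            mem[loc] = 1
        elif mem[loc] == 0:
            # The read saw the initial value, so the reader kills its peer.
            killed.add(2 if pid == 1 else 1)
    return frozenset(killed)


def sc_outcomes():
    """Return the set of 'who was killed' results over all interleavings."""
    return {run(schedule) for schedule in interleavings(P1, P2)}
```

Calling `sc_outcomes()` enumerates all six interleavings. Under this encoding the result contains the empty set, `{1}`, and `{2}`, but never `{1, 2}`, which matches the argument above.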

The implication for an implementation of this model is illustrated in Figure 4.12: not only must writes not be buffered (as mentioned in the previous bullet), but reads must be delayed long enough for write information to propagate to the rest of the system. To ensure that two processes synchronize their memory events in a way that ensures sequential consistency, an implementation must do one of two things: either (i) block all subsequent memory operations following a write until all processor cores have observed the write event or (ii) allow bypassing and/or early execution of memory instructions subsequent to the write operation, but hold their commitment until long enough after the write operation to ensure that all processor cores can observe the write. The implication of speculative bypassing is that if in the meantime a write is observed originating from another core that conflicts with an early executing memory operation, that instruction’s commitment must be halted, its results must be discarded, and the instruction must be reexecuted in light of the new data.

FIGURE 4.12 Sequential consistency and racing threads. A memory system that satisfies sequential consistency must delay all memory operations following a write until the write is observed by all other clients. Otherwise, it would be possible to have both P1 and P2 try to kill each other (as in the scenario on the left), which is disallowed by the sequential model. For example, the earliest that a subsequent read can follow a write is the message-propagation time within the system. Alternatively, a processor can speculate, allowing reads to execute early and patching up if problems are later detected.

As the last two bullets attest, in a sequentially consistent memory system, write performance will be abysmal and read performance will suffer, or the implementation will embody significant complexity to avoid the performance limitations of the model.

Processor Consistency

So how does one avoid the limitations of a sequentially consistent memory system? A further relaxation of memory consistency is called processor consistency [Goodman 1989], also called total store order. Its basic tenet is

Writes from a process are observed by other clients to be in program order; all clients observe a single interleaving of writes from different processors.

This simply removes the read-ordering restriction imposed by sequential consistency: a processor is free to reorder reads ahead of writes without waiting for write data to be propagated to other clients in the system. The racing threads example, if executed on an implementation of processor consistency, can result in both processes killing each other: reads need not block on preceding writes; they may even execute ahead of preceding writes (see Figure 4.13). Similarly, the example illustrated in Figures 4.10 and 4.11 could easily result in unexpected behavior: processor consistency allows reads to go as early as desired, which would allow C’s read of the data buffer to proceed before C’s read of the variable ready finishes (consider, for example, the scenario in which the conditional branch on the value of ready is predicted early and correctly):

FIGURE 4.13 Processor consistency and racing threads. Processor consistency allows each processor or client within the system to freely reorder reads with respect to writes. As a result, the racing threads example can easily result in both processes trying to kill each other (both diagrams illustrate that outcome).


Ensuring correct behavior in such a consistency model requires the use of explicit release/acquire mechanisms (e.g., see Hennessy and Patterson [1996] for example code) in the update of either the device driver variable done or the variable ready.
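The racing-threads outcome under processor consistency can be reproduced with a toy model. The sketch below assumes one common way of realizing this ordering: each processor has a FIFO store buffer, stores drain to shared memory in program order at arbitrary later times, and reads bypass buffered stores. The function name `tso_outcomes` and the encoding of the two programs are hypothetical. The search explores every schedule of instruction execution and buffer draining.

```python
def tso_outcomes():
    """Explore every schedule of a toy two-processor store-buffer machine."""
    # Assumed encoding: write own flag, then read the other's flag and
    # kill the other process if the flag still reads 0.
    programs = {1: [("write", "A"), ("read", "B")],
                2: [("write", "B"), ("read", "A")]}
    outcomes = set()

    def step(mem, bufs, pcs, killed):
        progressed = False
        for pid in (1, 2):
            # Option 1: drain the oldest buffered store to shared memory.
            if bufs[pid]:
                progressed = True
                loc = bufs[pid][0]
                step({**mem, loc: 1},
                     {**bufs, pid: bufs[pid][1:]}, pcs, killed)
            # Option 2: execute the next instruction in program order.
            if pcs[pid] < len(programs[pid]):
                progressed = True
                op, loc = programs[pid][pcs[pid]]
                new_pcs = {**pcs, pid: pcs[pid] + 1}
                if op == "write":
                    # The store enters the local FIFO buffer only.
                    step(mem, {**bufs, pid: bufs[pid] + (loc,)},
                         new_pcs, killed)
                else:
                    # A read checks the local buffer first, then memory.
                    value = 1 if loc in bufs[pid] else mem[loc]
                    victim = 2 if pid == 1 else 1
                    new_killed = (killed | {victim}) if value == 0 else killed
                    step(mem, bufs, new_pcs, new_killed)
        if not progressed:
            # Both programs finished and both buffers are empty.
            outcomes.add(frozenset(killed))

    step({"A": 0, "B": 0}, {1: (), 2: ()}, {1: 0, 2: 0}, frozenset())
    return outcomes
```

In this model the outcome `{1, 2}` becomes reachable: both stores sit in their buffers while both reads see the initial value 0. Forcing each buffer to drain before the following read (a stand-in for an explicit synchronization operation) would remove that outcome again.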

Other Consistency Models

This might seem all that is necessary, but there are many further relaxations. For instance,

• Partial store order allows a processor to freely reorder local writes with respect to other local writes.

• Weak consistency allows a processor to freely reorder local writes ahead of local reads.

All of the consistency models up to this point preserve the ordering of synchronization accesses: locks, barriers, and the like may not be reordered and thus serve as synchronization points. Further relaxations of consistency exploit this guarantee.

• Release consistency distinguishes different classes of synchronization accesses (i.e., acquire of a lock versus release of the lock) and enforces synchronization only with respect to the acquire/release operations. When software does an acquire, the memory system updates all protected variables before continuing, and when software does a release, the memory system propagates any changes to the protected variables out to the rest of the system.

Other forms such as entry consistency and lazy release consistency are discussed in Section 4.3.5.
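The acquire/release behavior described for release consistency can be sketched in a few lines. This is a toy model under stated assumptions, not a description of any real implementation: the class `Node`, its methods, and the plain dictionary standing in for "the rest of the system" are all hypothetical, and locking itself is omitted so that only the data movement is shown.

```python
class Node:
    """A client holding local copies of protected variables (toy model)."""

    def __init__(self, shared):
        self.shared = shared      # dict standing in for the rest of the system
        self.local = {}           # this node's possibly stale copies
        self.dirty = set()        # names written locally since the last release

    def acquire(self):
        # On acquire, refresh local copies of the protected variables,
        # keeping any local writes that have not been released yet.
        fresh = dict(self.shared)
        fresh.update({name: self.local[name] for name in self.dirty})
        self.local = fresh

    def read(self, name):
        return self.local.get(name)

    def write(self, name, value):
        # Writes stay local until the next release.
        self.local[name] = value
        self.dirty.add(name)

    def release(self):
        # On release, propagate local changes out to the rest of the system.
        for name in self.dirty:
            self.shared[name] = self.local[name]
        self.dirty.clear()
```

For example, if node `a` acquires, writes `x`, and has not yet released, a second node `b` that acquires still does not see the new value. It sees the value only after `a` releases and `b` performs another acquire.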

4.3.4 Hardware Cache-Coherence Mechanisms

So, given the various memory-consistency models to choose from, how does one go about supporting one? There are two general implementations:

• Software solutions that build cache coherence into the virtual memory system and add sharing information to the page tables, thereby providing shared memory across a general network

• Hardware solutions that run across dedicated processor busses and come in several flavors, including snoopy schemes and directory-based schemes.

This section will describe hardware solutions. Section 4.3.5 will describe software solutions.

Cache Block States

To begin with, a cache must track the state of its contents, and the states in which a block may find itself can be varied, especially when one considers the information required when multiple clients all want access to the same data. Some of the requirements facing a cache include the following:

• The cache must be able to enforce exclusive updating of lines, to preserve the illusion that a single writer exists in the system at any given moment. This is perhaps the easiest way to enforce a global sequence of write events to a particular address. Caches typically track this with Modified and/or Exclusive states (M/E states).

• The cache must be able to tell if a cache block is out of sync with the rest of the system due to a local write event. Caches typically track this with Modified and/or Owned states (M/O states).

• An implementation can be more efficient if it knows when a client only needs a readable copy of a particular datum and does not intend to write to it. Caches typically track this with a Shared state (S).

(I)nvalid: Invalid means that the cache block is in an uninitialized state.

(M)odified: read-writable, forwardable, dirty: Modified means that the state of the cache block has been changed by the processor; the block is dirty. This usually happens when a processor overwrites (part of) the block via a store instruction. The client with a block in this state has the only cached copy of that block in the system and responds to snoops for this block by forwarding the block (returning the data in a response message) and also writing the block back to the backing store.

(S)hared: read-only (can be clean or dirty): Shared means that the cache line in question may have multiple copies in the caches of multiple clients. Being in a Shared state does not imply that the cache block is actively being shared among processes. Rather, it implies that another copy of the data is held in a remote processor’s cache.

(E)xclusive: read-writable, clean: Exclusive means that a processor can modify a given cache block without informing any other clients. To write a cache block, the processor must first acquire the block in an exclusive state. Thus, even if the processor holds a copy of the data in the data cache, it first requires a transaction to the backing store (or wherever the coherence mechanism is homed) to request the data in Exclusive state. The client with a block in this state has the only cached copy of that block in the system, and it may forward that block in response to a snoop request. Once written by the client, the block is transitioned to the Modified state.

(O)wned: read-only, forwardable, dirty: Owned means that a cache can send other clients a copy of the (dirty) data without needing to write back, but the Owner is the only one who can write the block back to the backing store. The block is read-only: the client holding the block may forward it, but may not write it.

Note that this is just a sample of common states. Protocols exist that make use of many other states as well. This is enough to get an idea of what has been done.
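The attributes attached to each state above can be collected into a small lookup table. This is a sketch for reference only: the names `StateInfo`, `BLOCK_STATES`, and `states_where` are hypothetical, the Invalid row is an assumption (an uninitialized block is treated as unusable in every respect), and `None` marks an attribute that the descriptions leave open.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StateInfo:
    readable: bool
    writable: bool
    dirty: Optional[bool]        # None: may be clean or dirty
    forwardable: Optional[bool]  # None: not specified in the descriptions


# Attributes as listed in the state descriptions above.
# The "I" row is an assumption, not stated in the text.
BLOCK_STATES = {
    "I": StateInfo(False, False, False, False),
    "M": StateInfo(True, True, True, True),
    "S": StateInfo(True, False, None, None),
    "E": StateInfo(True, True, False, True),
    "O": StateInfo(True, False, True, True),
}


def states_where(**attrs):
    """Return the state letters whose attributes match all given values."""
    return sorted(
        state for state, info in BLOCK_STATES.items()
        if all(getattr(info, name) == value for name, value in attrs.items())
    )
```

With this table, `states_where(writable=True)` selects the M/E pair used to enforce exclusive updating, and `states_where(dirty=True)` selects the M/O pair used to detect blocks that are out of sync with the backing store.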

Using Write-Through Caches (SI)

We start with a simple example: a system of write-through caches, as shown in Figure 4.14. In such a scheme, all writes are transmitted directly through to memory, even when write buffers or write caches are present. Because the backing store is known to be consistent with each cache at all times, a cache may “silently” evict any cache block at any time, even if that block was recently written by the processor.

In such a scheme, cache blocks need only track SI states: a block is either present in a cache or not. Writes are propagated to the backing store immediately by definition of the scheme, and thus, in a sense, the problem of handling multiple writers to the same datum is transferred to the shoulders of the backing store.

Write-update: Typically, write events will cause one of two responses: write-update or write-invalidate. A write-update policy will transparently update the various cached copies of a block: if processor A writes to a block, the write data will find its way into all cached copies of that block. This clearly requires significant bandwidth: every local write immediately becomes a global broadcast of data. The mechanism, though expensive, is extremely beneficial in handling applications that make use of widely shared data. It is non-trivial to implement because it requires shoving unsolicited data into another client’s data cache.

Write-invalidate: The alternative to write-update is a write-invalidate policy, in which all cached copies of the written block are set to the Invalid state. All clients actively sharing the block will suddenly find their cached copies unavailable, and each must re-request the block to continue using it. This can be less bandwidth-intensive than a write-update scheme, but the general implementation mechanism is similar: an address must be sent to remote caches, each of which must check in its tag store to see if the affected datum is present.

The primary difference between the two is that in a write-invalidate policy, there can be only one writable copy of a datum in the system at any given point in time. In contrast, a write-update policy can easily support multiple writers, and, in fact, this is precisely what software implementations of cache coherence do. In addition, note that, by its nature, a write-update scheme makes sequential consistency extremely hard to implement: to guarantee that all clients see all operations in the same order, an implementation must guarantee that all update messages are interleaved with all other system-wide coherence messages in the same order for all nodes. While systems exist that do this (e.g., ISIS [Birman & Joseph 1987]), they tend to be relatively slow.
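The bandwidth trade-off between the two policies can be illustrated with a toy counter. The sketch below tracks a single block across several caches and counts misses, update messages, and invalidation messages. It assumes write-allocate behavior (a write to an absent block first fetches it), and the function name `simulate` and the trace format are hypothetical.

```python
def simulate(policy, ops, n_caches):
    """Count coherence traffic for one block under a write policy.

    policy: "update" or "invalidate"
    ops:    list of (cache_id, "r" or "w") accesses, in global order
    """
    valid = [False] * n_caches
    stats = {"misses": 0, "updates": 0, "invalidations": 0}
    for cid, op in ops:
        if not valid[cid]:
            # Fetch the block from the backing store (write-allocate assumed).
            stats["misses"] += 1
            valid[cid] = True
        if op == "w":
            sharers = [i for i in range(n_caches) if i != cid and valid[i]]
            if policy == "update":
                # The write data is pushed into every other cached copy.
                stats["updates"] += len(sharers)
            else:
                # Every other cached copy is set to Invalid.
                stats["invalidations"] += len(sharers)
                for i in sharers:
                    valid[i] = False
    return stats


# Three caches read the block, cache 0 writes it three times,
# then caches 1 and 2 read it again.
TRACE = [(0, "r"), (1, "r"), (2, "r"),
         (0, "w"), (0, "w"), (0, "w"),
         (1, "r"), (2, "r")]
```

On this trace, `simulate("update", TRACE, 3)` reports 3 misses and 6 update messages, while `simulate("invalidate", TRACE, 3)` reports 5 misses and 2 invalidations: repeated writes by one client are cheap under write-invalidate, but the sharers pay with re-fetches.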

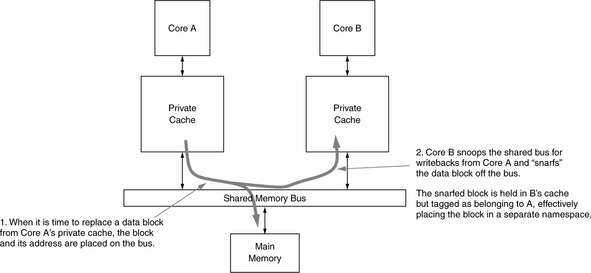

Using Write-Back Caches (MSI)

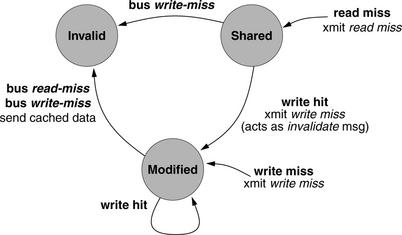

To reduce bandwidth requirements, one can change the scheme slightly (use write-back caches instead of write-through caches) and not propagate write data immediately. The scheme exploits write-coalescing in the data caches: multiple writes to the same cache block will not necessarily generate multiple coherence broadcasts to the system; rather, coherence messages will only be sent out when the written block is removed from the cache. Unlike the write-through scenario, in this scheme, data in a particular cache may be out of sync with the backing store and the other clients in the system. The implementation must ensure that this allows no situations that would be deemed incorrect by the chosen consistency model. Figure 4.15 illustrates a possible state machine for the implementation.

FIGURE 4.15 State machine for an MSI protocol [based on Archibald and Baer 1986]. Boldface font indicates observed action (e.g., local write miss to block in question, or a bus transaction from another cache on a block address matching in the local tags). Regular font indicates response action taken by local cache.
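An MSI protocol of this general kind can be sketched as a transition table. The table below is not a transcription of Figure 4.15; it assumes the conventional textbook MSI transitions, and the event names, action strings, and the helper `access` are hypothetical. Each entry maps a (state, observed event) pair to a (next state, response action) pair.

```python
# (current state, observed event) -> (next state, response action or None)
TRANSITIONS = {
    ("I", "local_read"):  ("S", "issue bus read"),
    ("I", "local_write"): ("M", "issue bus write"),
    ("S", "local_read"):  ("S", None),
    ("S", "local_write"): ("M", "issue bus write"),
    ("M", "local_read"):  ("M", None),
    ("M", "local_write"): ("M", None),
    ("I", "bus_read"):    ("I", None),
    ("I", "bus_write"):   ("I", None),
    ("S", "bus_read"):    ("S", None),
    ("S", "bus_write"):   ("I", None),
    ("M", "bus_read"):    ("S", "write back"),
    ("M", "bus_write"):   ("I", "write back"),
}

# Bus transaction that other caches observe for each issued action.
BUS_EVENT = {"issue bus read": "bus_read", "issue bus write": "bus_write"}


def access(states, cid, op):
    """Apply a local access ("read" or "write") by cache `cid`.

    `states` is a list holding one state letter per cache for a single block;
    it is updated in place. Returns the (cache, response) pairs produced by
    the other caches while snooping the resulting bus transaction.
    """
    new_state, action = TRANSITIONS[(states[cid], "local_" + op)]
    responses = []
    if action in BUS_EVENT:
        for other in range(len(states)):
            if other == cid:
                continue
            states[other], response = TRANSITIONS[(states[other],
                                                   BUS_EVENT[action])]
            if response:
                responses.append((other, response))
    states[cid] = new_state
    return responses
```

Starting from `["I", "I"]`, a write by cache 0 yields `["M", "I"]`. A subsequent read by cache 1 forces cache 0 to write the block back and leaves both caches in S. A second write by cache 0 then invalidates cache 1's copy without any data transfer from it.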