OpenSL ES is an application-level audio library in C. The Android NDK native audio APIs are based on the OpenSL ES 1.0.1 standard, with Android-specific extensions. The API is available on Android 2.3 (API level 9) or higher, and some features are supported only on Android 4.0 (API level 14) or higher. The API functions in this library are not frozen yet and are still evolving, so future versions of the library may require us to update our code. This recipe introduces the OpenSL ES APIs in the context of Android.

Before we start coding with OpenSL ES, it is essential to understand some basics of the library. OpenSL ES stands for Open Sound Library for Embedded Systems. It is a cross-platform, royalty-free, C-language application-level API that gives developers access to the audio functionality of embedded systems. The library specification defines features such as audio playback and recording, audio effects and controls, 2D and 3D audio, advanced MIDI, and so on. Based on the features supported, OpenSL ES defines three profiles: phone, music, and game.

However, the Android native audio API does not conform to any of the three profiles, because it does not implement all the features of any one profile. In addition, Android implements some Android-specific features, such as the Android buffer queue. For a detailed description of what is supported on Android, refer to the OpenSL ES for Android documentation in the docs/opensles/ folder of the Android NDK.

Although the OpenSL ES API is implemented in C, it adopts an object-oriented approach, building the library on objects and interfaces:

- Object: An object is an abstraction of a set of resources and their states. Every object has a type assigned at its creation, and the type determines the set of tasks the object can perform. It is similar to the class concept in C++.

- Interface: An interface is an abstraction of a set of features an object can provide. These features are exposed to us as a set of methods and the type of each interface determines the exact set of features exposed. In the code, the type of an interface is identified by the interface ID.

It is important to note that an object has no actual representation in code. We change an object's state and access its features through its interfaces. An object can have one or more interface instances, but no two interface instances of a single object can be of the same type. In addition, a given interface instance can belong to only one object. This relationship is illustrated in the following diagram:

As shown in the diagram, Object 1 and Object 2 have different types and therefore expose different interfaces. Object 1 has three interface instances, all of different types, while Object 2 has two further interface instances, also of different types. Note that Interface 2 of Object 1 and Interface 4 of Object 2 have the same type. This means that both Object 1 and Object 2 support the features exposed through interfaces of Interface Type B.
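Every call in the code that follows passes the interface twice, as in `(*engineObject)->Realize(engineObject, ...)`. The reason is the way OpenSL ES declares each interface handle: as a pointer to a pointer to a table of function pointers (for example, `typedef const struct SLObjectItf_ * const * SLObjectItf;` in OpenSLES.h). The following is a minimal, self-contained sketch of that pattern in plain C. The `CounterItf` interface, the `CounterObject` type, and the `Counter_*` functions are hypothetical illustrations, not part of OpenSL ES. A real implementation is free to lay out its objects differently.

```c
/* Hypothetical interface, declared the way OpenSL ES declares its
   interfaces: the handle is a pointer to a pointer to a method table. */
struct CounterItf_;
typedef const struct CounterItf_ * const * CounterItf;

/* Method table: every method receives the interface handle as "self". */
struct CounterItf_ {
    int (*Increment)(CounterItf self, int amount);
    int (*GetValue)(CounterItf self);
};

/* Hypothetical object: its hidden state plus the interface it exposes.
   The method-table pointer is the first member, so a handle to it can be
   converted back to a pointer to the whole object. */
typedef struct {
    const struct CounterItf_ *itf;
    int value;
} CounterObject;

static int Counter_Increment(CounterItf self, int amount) {
    CounterObject *obj = (CounterObject *) self;
    obj->value += amount;
    return obj->value;
}

static int Counter_GetValue(CounterItf self) {
    const CounterObject *obj = (const CounterObject *) self;
    return obj->value;
}

/* One shared method table for all objects of this type. */
static const struct CounterItf_ counterMethods = {
    Counter_Increment,
    Counter_GetValue
};

/* Initializes an object and returns its interface handle. */
CounterItf Counter_Create(CounterObject *obj) {
    obj->itf = &counterMethods;
    obj->value = 0;
    return &obj->itf;
}
```

Calling a method then mirrors the OpenSL ES style: `(*itf)->Increment(itf, 5)`. The first `itf` selects the method table. The second tells the method which object to act on, because C has no implicit `this` pointer.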

The following steps describe how to create a simple Android application using the native audio library to record and play audio:

- Create an Android application named OpenSLESDemo. Set the package name as cookbook.chapter7.opensles. Refer to the Loading native libraries and registering native methods recipe of Chapter 2, Java Native Interface, for more detailed instructions.

- Right-click on the project OpenSLESDemo and select Android Tools | Add Native Support.

- Add a Java file named MainActivity.java in the cookbook.chapter7.opensles package. This Java file simply loads the native library OpenSLESDemo and calls the native methods to record and play audio.

- Add the mylog.h, common.h, play.c, record.c, and OpenSLESDemo.cpp files in the jni folder. Part of the code in play.c, record.c, and OpenSLESDemo.cpp is shown in the following code snippets. The global variables and constants they use (engineEngine, recorderObject, recorderBuffer, RECORDER_FRAMES, RECORD_TIME, and so on) are defined elsewhere in the project and are not shown.

record.c contains the code to create an audio recorder object and record audio. createAudioRecorder creates and realizes an audio recorder object and obtains the record and buffer queue interfaces:

```c
jboolean createAudioRecorder() {
    SLresult result;
    // Data source: the default audio input device (microphone)
    SLDataLocator_IODevice loc_dev = {SL_DATALOCATOR_IODEVICE, SL_IODEVICE_AUDIOINPUT,
            SL_DEFAULTDEVICEID_AUDIOINPUT, NULL};
    SLDataSource audioSrc = {&loc_dev, NULL};
    // Data sink: an Android simple buffer queue holding one buffer of
    // 16 kHz, mono, 16-bit little-endian PCM
    SLDataLocator_AndroidSimpleBufferQueue loc_bq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 1};
    SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, 1, SL_SAMPLINGRATE_16,
            SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
            SL_SPEAKER_FRONT_CENTER, SL_BYTEORDER_LITTLEENDIAN};
    SLDataSink audioSnk = {&loc_bq, &format_pcm};
    // The buffer queue interface is explicit, so it must be requested
    const SLInterfaceID id[1] = {SL_IID_ANDROIDSIMPLEBUFFERQUEUE};
    const SLboolean req[1] = {SL_BOOLEAN_TRUE};
    result = (*engineEngine)->CreateAudioRecorder(engineEngine, &recorderObject,
            &audioSrc, &audioSnk, 1, id, req);
    if (SL_RESULT_SUCCESS != result) {
        return JNI_FALSE;  // for example, the RECORD_AUDIO permission is missing
    }
    result = (*recorderObject)->Realize(recorderObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return JNI_FALSE;
    }
    result = (*recorderObject)->GetInterface(recorderObject, SL_IID_RECORD, &recorderRecord);
    result = (*recorderObject)->GetInterface(recorderObject,
            SL_IID_ANDROIDSIMPLEBUFFERQUEUE, &recorderBufferQueue);
    // bqRecorderCallback is invoked each time the buffer has been filled
    result = (*recorderBufferQueue)->RegisterCallback(recorderBufferQueue,
            bqRecorderCallback, NULL);
    return JNI_TRUE;
}
```

startRecording enqueues the buffer that stores the recorded audio and sets the audio recorder state to recording:

```c
void startRecording() {
    SLresult result;
    recordF = fopen("/sdcard/test.pcm", "wb");
    if (NULL == recordF) {
        return;  // cannot open the output file
    }
    // Stop any recording in progress and discard queued buffers
    result = (*recorderRecord)->SetRecordState(recorderRecord, SL_RECORDSTATE_STOPPED);
    result = (*recorderBufferQueue)->Clear(recorderBufferQueue);
    recordCnt = 0;
    // Hand an empty buffer to the recorder, then start recording
    result = (*recorderBufferQueue)->Enqueue(recorderBufferQueue, recorderBuffer,
            RECORDER_FRAMES * sizeof(short));
    result = (*recorderRecord)->SetRecordState(recorderRecord, SL_RECORDSTATE_RECORDING);
}
```

The bqRecorderCallback callback method is invoked every time the buffer queue is ready to accept a new data block, that is, whenever a buffer has been filled with audio data:

```c
void bqRecorderCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    // Save the filled buffer to the file
    fwrite(recorderBuffer, sizeof(short), RECORDER_FRAMES, recordF);
    fflush(recordF);
    recordCnt++;
    SLresult result;
    if (recordCnt * 5 < RECORD_TIME) {
        // Not done yet: give the buffer back to the recorder
        result = (*recorderBufferQueue)->Enqueue(recorderBufferQueue, recorderBuffer,
                RECORDER_FRAMES * sizeof(short));
    } else {
        result = (*recorderRecord)->SetRecordState(recorderRecord, SL_RECORDSTATE_STOPPED);
        if (SL_RESULT_SUCCESS == result) {
            fclose(recordF);
        }
    }
}
```

play.c contains the code to create an audio player object and play the audio. createBufferQueueAudioPlayer creates and realizes an audio player object that plays audio from the buffer queue:

```c
void createBufferQueueAudioPlayer() {
    SLresult result;
    // Data source: an Android simple buffer queue in the recording's PCM format
    SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 1};
    SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, 1, SL_SAMPLINGRATE_16,
            SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
            SL_SPEAKER_FRONT_CENTER, SL_BYTEORDER_LITTLEENDIAN};
    SLDataSource audioSrc = {&loc_bufq, &format_pcm};
    // Data sink: the output mix created in naCreateEngine
    SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
    SLDataSink audioSnk = {&loc_outmix, NULL};
    const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};
    const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
    result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject,
            &audioSrc, &audioSnk, 3, ids, req);
    result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);
    // SL_IID_PLAY is not in ids[]: it is an implicit interface of the player
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerPlay);
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE,
            &bqPlayerBufferQueue);
    // bqPlayerCallback is invoked each time a buffer has finished playing
    result = (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue,
            bqPlayerCallback, NULL);
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_EFFECTSEND,
            &bqPlayerEffectSend);
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME, &bqPlayerVolume);
}
```

startPlaying fills the buffer with data from the test.pcm file and starts playing:

```c
jboolean startPlaying() {
    SLresult result;
    recordF = fopen("/sdcard/test.pcm", "rb");
    if (NULL == recordF) {
        return JNI_FALSE;  // nothing has been recorded yet
    }
    noMoreData = 0;
    int numOfRecords = fread(recorderBuffer, sizeof(short), RECORDER_FRAMES, recordF);
    if (RECORDER_FRAMES != numOfRecords) {
        if (numOfRecords <= 0) {
            fclose(recordF);  // empty file: nothing to play
            return JNI_TRUE;
        }
        noMoreData = 1;  // the whole file fitted into the first buffer
    }
    // Enqueue only the samples actually read
    result = (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, recorderBuffer,
            numOfRecords * sizeof(short));
    result = (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_PLAYING);
    return JNI_TRUE;
}
```

bqPlayerCallback is invoked every time the buffer queue is ready to accept a new buffer, that is, whenever a buffer has finished playing:

```c
void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    if (!noMoreData) {
        SLresult result;
        // Refill the buffer with the next block of samples from the file
        int numOfRecords = fread(recorderBuffer, sizeof(short), RECORDER_FRAMES, recordF);
        if (RECORDER_FRAMES != numOfRecords) {
            if (numOfRecords <= 0) {
                // End of file: stop playback
                noMoreData = 1;
                (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_STOPPED);
                fclose(recordF);
                return;
            }
            noMoreData = 1;  // this partial buffer is the last one
        }
        // Enqueue only the samples actually read
        result = (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, recorderBuffer,
                numOfRecords * sizeof(short));
    } else {
        // The last buffer has finished playing
        (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_STOPPED);
        fclose(recordF);
    }
}
```

OpenSLESDemo.cpp contains the code to create the OpenSL ES engine object, free the objects, and register the native methods. naCreateEngine creates the engine object and the output mix object:

```cpp
void naCreateEngine(JNIEnv* env, jclass clazz) {
    SLresult result;
    // Create and realize the engine, then get its SLEngineItf interface
    result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);
    // Create the output mix; environmental reverb is requested as optional
    const SLInterfaceID ids[1] = {SL_IID_ENVIRONMENTALREVERB};
    const SLboolean req[1] = {SL_BOOLEAN_FALSE};
    result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 1, ids, req);
    result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
    // Apply the reverb settings only if the optional interface is available
    result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,
            &outputMixEnvironmentalReverb);
    if (SL_RESULT_SUCCESS == result) {
        result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(
                outputMixEnvironmentalReverb, &reverbSettings);
    }
}
```

- Add the following permissions to the AndroidManifest.xml file:

```xml
<uses-permission android:name="android.permission.RECORD_AUDIO"/>
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>
```

- Add an Android.mk file in the jni folder with the following content:

```makefile
LOCAL_PATH := $(call my-dir)
include $(CLEAR_VARS)
LOCAL_MODULE    := OpenSLESDemo
LOCAL_SRC_FILES := OpenSLESDemo.cpp record.c play.c
LOCAL_LDLIBS    := -llog
LOCAL_LDLIBS    += -lOpenSLES
include $(BUILD_SHARED_LIBRARY)
```

- Build and run the Android project, and use the following command to monitor the logcat output:

```
$ adb logcat -v time OpenSLESDemo:I *:S
```

- The application GUI is shown in the following screenshot:

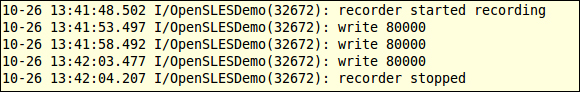

- We can start audio recording by clicking on the Record button. The recording lasts for 15 seconds. The logcat output will be as shown in the following screenshot:

- Once the recording is finished, a /sdcard/test.pcm file is created on the Android device. We can click on the Play button to play the audio file. The logcat output will be as shown in the following screenshot:


This sample project demonstrates how to use the OpenSL ES audio library. We will first explain some key concepts and then describe how we used the recording and playback APIs.

An object has no actual representation in code, and objects are created through interfaces. Every method that creates an object returns an SLObjectItf interface, which can be used to perform basic operations on the object and to access the object's other interfaces. The steps for object creation are as follows:

- Create an engine object. The engine object is the entry point of the OpenSL ES API. An engine object is created with the global function slCreateEngine(), which returns an SLObjectItf interface.

- Realize the engine object. An object cannot be used until it is realized. We will discuss this in detail in the following section.

- Obtain the SLEngineItf interface of the engine object through the GetInterface() method of the SLObjectItf interface.

- Call the object creation method provided by the SLEngineItf interface. The SLObjectItf interface of the newly created object is returned upon success.

- Realize the newly created object.

- Manipulate the created object or access its other interfaces through its SLObjectItf interface.

- After you are done with the object, call the Destroy() method of the SLObjectItf interface to free the object and its resources.

In our sample project, we created and realized the engine object and obtained the SLEngineItf interface in the naCreateEngine function of OpenSLESDemo.cpp. We then called the CreateAudioRecorder() method, exposed by the SLEngineItf interface, to create an audio recorder object in the createAudioRecorder function of record.c. In the same function, we also realized the audio recorder object and accessed a few other interfaces of the object through the SLObjectItf interface returned at object creation. After we finished with the recorder object, we called the Destroy() method to free the object and its resources, as shown in the naShutdown function of OpenSLESDemo.cpp.

One more thing to note about object creation is the interface request. An object creation method normally accepts three interface-related parameters, as shown in the CreateAudioPlayer method of the SLEngineItf interface:

```c
SLresult (*CreateAudioPlayer) (
    SLEngineItf self,
    SLObjectItf * pPlayer,
    SLDataSource *pAudioSrc,
    SLDataSink *pAudioSnk,
    SLuint32 numInterfaces,
    const SLInterfaceID * pInterfaceIds,
    const SLboolean * pInterfaceRequired
);
```

The last three input arguments are related to interfaces. The numInterfaces argument indicates the number of interfaces we request. pInterfaceIds is an array of numInterfaces interface IDs, which indicates the interface types the object should support. pInterfaceRequired is an array of SLboolean values specifying whether each requested interface is required or optional. In our audio player example, we called the CreateAudioPlayer method to request three types of interfaces (SLAndroidSimpleBufferQueueItf, SLEffectSendItf, and SLVolumeItf, indicated by SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, and SL_IID_VOLUME respectively). Since all elements of the req array are SL_BOOLEAN_TRUE, all three interfaces are required. If the object cannot provide any one of them, object creation fails:

```c
const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};
const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject, &audioSrc, &audioSnk, 3, ids, req);
```

Note that an object can have implicit and explicit interfaces. Implicit interfaces are available on every object of the type. For example, SLObjectItf is an implicit interface of all objects of all types. Implicit interfaces do not need to be requested in the object creation method. However, if we want to access an explicit interface, we must request it in the creation method.
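The required/optional rule can be summarized with a small model. The sketch below is hypothetical plain C, not OpenSL ES code. Interface IDs are modeled as plain integers, and `supported` stands for the explicit interfaces an object type can provide. `resolve_interfaces` rejects the request as soon as a *required* interface is missing, while a missing *optional* interface is simply not granted. In real OpenSL ES, a rejection shows up as the creation method returning something other than SL_RESULT_SUCCESS.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical interface IDs; real OpenSL ES uses SLInterfaceID values. */
typedef int InterfaceId;

static bool contains(const InterfaceId *ids, size_t n, InterfaceId id) {
    for (size_t i = 0; i < n; i++) {
        if (ids[i] == id) {
            return true;
        }
    }
    return false;
}

/* Returns true if an object supporting `supported` can be created for this
   request. granted[i] records whether interfaceIds[i] is available; a
   missing interface fails the whole request only if it is required. */
bool resolve_interfaces(const InterfaceId *supported, size_t numSupported,
                        size_t numInterfaces,
                        const InterfaceId *interfaceIds,
                        const bool *interfaceRequired,
                        bool *granted) {
    for (size_t i = 0; i < numInterfaces; i++) {
        granted[i] = contains(supported, numSupported, interfaceIds[i]);
        if (!granted[i] && interfaceRequired[i]) {
            return false; /* a required interface is unavailable */
        }
    }
    return true;
}
```

The optional case corresponds to the output mix in naCreateEngine. There, SL_IID_ENVIRONMENTALREVERB is requested with SL_BOOLEAN_FALSE, so the code checks the result of GetInterface() before using the reverb interface.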

For more information on interfaces, refer to Section 3.1.6, The Relationship Between Objects and Interfaces, in the OpenSL ES 1.0.1 Specification.

The object creation method creates an object and puts it in the unrealized state. In this state, the object's resources have not been allocated, so the object is not usable.

We need to call the Realize() method of the object's SLObjectItf interface to make the object transition to the realized state. In this state, its resources are allocated and its interfaces can be accessed. The second argument of Realize() selects asynchronous realization. Our sample always passes SL_BOOLEAN_FALSE, so each Realize() call returns only after realization has completed.

Once we are done with the object, we call the Destroy() method to free the object and its resources. This call internally moves the object back through the unrealized state, where the resources are freed. Therefore, the resources are freed before the object itself.
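The lifecycle described above can be modeled as a small state machine. The sketch below is hypothetical plain C that mimics these rules rather than calling OpenSL ES. `ModelObject`, the `Model_*` functions, and the result codes are invented for illustration; the codes are only loosely named after SLresult values. Interfaces can be obtained only from a realized object. Destruction passes back through the unrealized state, releasing the resources before the object itself is marked destroyed.

```c
#include <stdlib.h>

typedef enum { STATE_UNREALIZED, STATE_REALIZED, STATE_DESTROYED } ObjectState;

/* Hypothetical result codes, loosely named after OpenSL ES SLresult values. */
typedef enum {
    RESULT_SUCCESS,
    RESULT_PRECONDITIONS_VIOLATED,
    RESULT_MEMORY_FAILURE
} Result;

typedef struct {
    ObjectState state;
    short *resources;  /* stands in for buffers, codecs, device handles */
    size_t numSamples;
} ModelObject;

/* Creation only records the request; nothing is allocated yet. */
void Model_Create(ModelObject *obj, size_t numSamples) {
    obj->state = STATE_UNREALIZED;
    obj->resources = NULL;
    obj->numSamples = numSamples;
}

/* Realizing allocates the resources and makes the object usable. */
Result Model_Realize(ModelObject *obj) {
    if (obj->state != STATE_UNREALIZED) {
        return RESULT_PRECONDITIONS_VIOLATED;
    }
    obj->resources = calloc(obj->numSamples, sizeof(short));
    if (obj->resources == NULL) {
        return RESULT_MEMORY_FAILURE;
    }
    obj->state = STATE_REALIZED;
    return RESULT_SUCCESS;
}

/* Interfaces (here, just the resource pointer) require a realized object. */
Result Model_GetInterface(const ModelObject *obj, short **out) {
    if (obj->state != STATE_REALIZED) {
        return RESULT_PRECONDITIONS_VIOLATED;
    }
    *out = obj->resources;
    return RESULT_SUCCESS;
}

/* Destroying first returns to the unrealized state, freeing the resources,
   and only then marks the object itself as destroyed. */
void Model_Destroy(ModelObject *obj) {
    if (obj->state == STATE_REALIZED) {
        free(obj->resources);
        obj->resources = NULL;
        obj->state = STATE_UNREALIZED;
    }
    obj->state = STATE_DESTROYED;
}
```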

In this recipe, we illustrate the recording and playback APIs with our sample project.

In order to call the API functions, we must include the following header in our code:

```c
#include <SLES/OpenSLES.h>
```

If we use Android-specific features as well, we should include another header:

```c
#include <SLES/OpenSLES_Android.h>
```

In the Android.mk file, we must add the following line to link against the native OpenSL ES audio library:

```makefile
LOCAL_LDLIBS += -lOpenSLES
```

Because the MIME data format and the SLAudioEncoderItf interface are not available for the audio recorder on Android, we can only record audio in PCM format. Our example demonstrates how to record audio in PCM format and save the data to a file. This is illustrated in the following diagram:

In the createAudioRecorder function of record.c, we create and realize an audio recorder object. We set the audio input device as the data source and an Android buffer queue as the data sink. Note that we registered the bqRecorderCallback function as the callback for the buffer queue. Whenever the buffer queue is ready for a new buffer, bqRecorderCallback is called. It saves the buffer data to the test.pcm file and enqueues the buffer again to record new audio data. In the startRecording function, we start the recording.
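Because the recorder delivers raw PCM, the size of each buffer and of the resulting file follows directly from the format passed in SLDataFormat_PCM. That format is 16 kHz (SL_SAMPLINGRATE_16, which OpenSL ES expresses in milliHertz as 16000000), one channel, and 16-bit samples. The sketch below does that arithmetic in plain C. The function names are hypothetical. The buffer length is a parameter because the actual values of RECORDER_FRAMES and RECORD_TIME are not shown in this recipe.

```c
#include <stdint.h>

/* Bytes of PCM produced per second: rate x channels x bytes per sample.
   The rate is given in milliHertz, as in SLDataFormat_PCM. */
uint32_t pcm_bytes_per_second(uint32_t sampleRateMilliHz, uint32_t channels,
                              uint32_t bitsPerSample) {
    uint32_t sampleRateHz = sampleRateMilliHz / 1000;
    return sampleRateHz * channels * (bitsPerSample / 8);
}

/* Duration, in milliseconds, of one buffer holding `frames` frames:
   frames / Hz seconds == frames * 1,000,000 / mHz milliseconds. */
uint32_t pcm_buffer_duration_ms(uint32_t frames, uint32_t sampleRateMilliHz) {
    return (uint32_t) ((uint64_t) frames * 1000000u / sampleRateMilliHz);
}

/* Number of full buffers needed to capture at least `seconds` of audio. */
uint32_t pcm_buffers_for_duration(uint32_t seconds, uint32_t frames,
                                  uint32_t sampleRateMilliHz) {
    uint64_t totalFrames = (uint64_t) seconds * (sampleRateMilliHz / 1000);
    return (uint32_t) ((totalFrames + frames - 1) / frames); /* round up */
}
```

For example, the format yields 32,000 bytes per second, so a 15-second recording amounts to about 480,000 bytes in test.pcm. A hypothetical RECORDER_FRAMES of 1600 would fill a buffer roughly every 100 ms, which would take 150 callbacks for 15 seconds.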

Note

The callback functions in OpenSL ES are executed from internal, non-application threads. These threads are not managed by the Dalvik VM, and therefore they cannot access JNI. They are critical to the integrity of the OpenSL ES implementation, so callback functions should not block or perform heavy processing.

If we need to perform heavy tasks when a callback is triggered, we should post an event for another thread to process them.

This also applies to the OpenMAX AL library, which we cover in the next recipe. More detailed information is available in the NDK OpenSL ES documentation in the docs/opensles/ folder.
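One common way to post such events without blocking is a lock-free single-producer, single-consumer (SPSC) ring buffer. The OpenSL ES callback only pushes a small event and returns. An application thread pops events and does the heavy work, such as file I/O, encoding, or calls into Java through JNI from a thread attached to the VM. The sketch below uses C11 atomics. The `EventQueue` type and the `queue_*` functions are hypothetical names. The capacity must be a power of two, exactly one thread may push, and exactly one other thread may pop.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_CAPACITY 64 /* must be a power of two */

typedef struct {
    int events[QUEUE_CAPACITY];
    atomic_size_t head; /* next slot to read; written only by the consumer */
    atomic_size_t tail; /* next slot to write; written only by the producer */
} EventQueue;

void queue_init(EventQueue *q) {
    atomic_init(&q->head, 0);
    atomic_init(&q->tail, 0);
}

/* Called from the audio callback: never blocks, returns false if full. */
bool queue_push(EventQueue *q, int event) {
    size_t tail = atomic_load_explicit(&q->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&q->head, memory_order_acquire);
    if (tail - head == QUEUE_CAPACITY) {
        return false; /* full: drop the event rather than wait */
    }
    q->events[tail & (QUEUE_CAPACITY - 1)] = event;
    /* Publish the slot only after the event has been written. */
    atomic_store_explicit(&q->tail, tail + 1, memory_order_release);
    return true;
}

/* Called from the worker thread: returns false if there is nothing to do. */
bool queue_pop(EventQueue *q, int *event) {
    size_t head = atomic_load_explicit(&q->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&q->tail, memory_order_acquire);
    if (head == tail) {
        return false;
    }
    *event = q->events[head & (QUEUE_CAPACITY - 1)];
    /* Release the slot only after the event has been read. */
    atomic_store_explicit(&q->head, head + 1, memory_order_release);
    return true;
}
```

The worker thread can poll queue_pop and sleep briefly when it returns false. This way, the callback side never takes a lock or waits.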

The Android OpenSL ES library provides many features for audio playback. We can play encoded audio files, including MP3, AAC, and so on. Our example shows how to play PCM audio. This is illustrated in the following diagram:

We created and realized the engine object and the output mix object in the naCreateEngine function of OpenSLESDemo.cpp. The audio player object is created in the createBufferQueueAudioPlayer function of play.c, with an Android buffer queue as the data source and the output mix object as the data sink. The bqPlayerCallback function is registered as the callback through an SLAndroidSimpleBufferQueueItf interface. Whenever the player finishes playing a buffer, the buffer queue is ready for new data and bqPlayerCallback is invoked. The callback reads the next block of data from the test.pcm file into the buffer and enqueues it.

In the startPlaying function, we read the initial data into the buffer and set the player state to SL_PLAYSTATE_PLAYING.
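The refill logic in bqPlayerCallback reads the file in fixed-size blocks and enqueues only the bytes actually read. This way, the last, partial block does not replay stale samples left over from the previous block. The sketch below isolates that logic from OpenSL ES so it can be tested on any platform. `next_block_bytes` and `simulate_playback` are hypothetical helpers. In the real callback, the value returned by `next_block_bytes` would be passed as the size argument of Enqueue(), and 0 would mean that the player should be stopped.

```c
#include <stddef.h>
#include <stdio.h>

/* Reads up to `frames` 16-bit samples from `f` into `buffer` and returns
   the number of bytes to enqueue; 0 means there is no more data. */
size_t next_block_bytes(FILE *f, short *buffer, size_t frames) {
    size_t samplesRead = fread(buffer, sizeof(short), frames, f);
    return samplesRead * sizeof(short);
}

/* Simulates the playback loop: returns how many buffers would be enqueued
   and stores the total number of bytes played in *totalBytes. */
size_t simulate_playback(FILE *f, short *buffer, size_t frames,
                         size_t *totalBytes) {
    size_t buffers = 0;
    size_t bytes;
    *totalBytes = 0;
    while ((bytes = next_block_bytes(f, buffer, frames)) > 0) {
        buffers++;            /* Enqueue(buffer, bytes) would go here */
        *totalBytes += bytes;
        if (bytes < frames * sizeof(short)) {
            break;            /* partial block: it was the last one */
        }
    }
    return buffers;
}
```

For a file of 250 samples read in blocks of 100, this loop enqueues three buffers of 200, 200, and 100 bytes. The original code would have enqueued a full 200 bytes for the last one.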

OpenSL ES is a complex library with a specification more than 500 pages long. The specification is a good reference when developing applications with OpenSL ES, and it is available with the Android NDK.

The Android NDK also comes with a native-audio example, which demonstrates the use of many more OpenSL ES functions.