Chapter 12. Video Hardware

Display Adapters and Monitors

Although the monitor (or video display) is as vital to a PC’s user interface as the mouse and keyboard, it is actually a latecomer to computing. Before CRT (cathode ray tube) monitors came into general use, the teletypewriter was the standard computer interface—a large, loud device that printed the input and output characters on a roll of paper. Early personal computers often used nothing more than a panel of blinking LEDs for a display.

The first monitors used on computers displayed only text in a single color (usually green), but to users at the time they were a great improvement, allowing real-time display of input and output data. Over time, color displays were introduced, screen sizes increased, and LCD technologies moved from the portable computer to the desktop. The latest trends reflect the increasing convergence of entertainment and computer technologies.

Although modern video hardware is much more sophisticated than that of the past, you should still be careful when selecting video hardware for your computer. A poor display can cause eyestrain or otherwise significantly diminish the experience of using your PC.

The video subsystem of a PC consists of two main components:

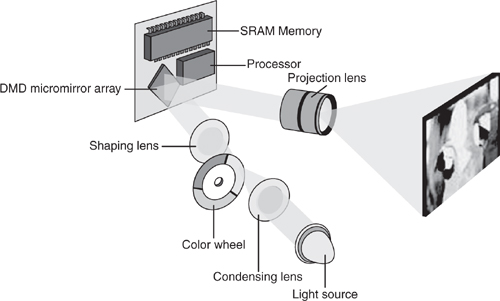

• Monitor (or video display)—The monitor is a display device usually based on an LCD panel, but may also use CRT, plasma, or DLP technology.

• Display adapter (also called the video card, graphics adapter, or graphics processing unit)—Although this usually refers to an adapter card plugged into a slot, the video adapter circuitry might also be built into the motherboard or included as part of the motherboard’s chipset. Although it sounds strange, the circuitry is still called an adapter or card even if it is fully integrated into the motherboard or chipset.

This chapter explores the range of PC video display adapters on the market today and the monitors that work with them.

Note

The term video, as it is used in this context, does not necessarily imply the existence of a moving image, such as on a television screen. Any circuitry that feeds signals to a monitor or display is a video display adapter, regardless of whether it is used with applications that display moving images, such as multimedia or videoconferencing software.

For this reason, video cards are sometimes referred to as graphics cards or display adapters.

Video Display Adapters

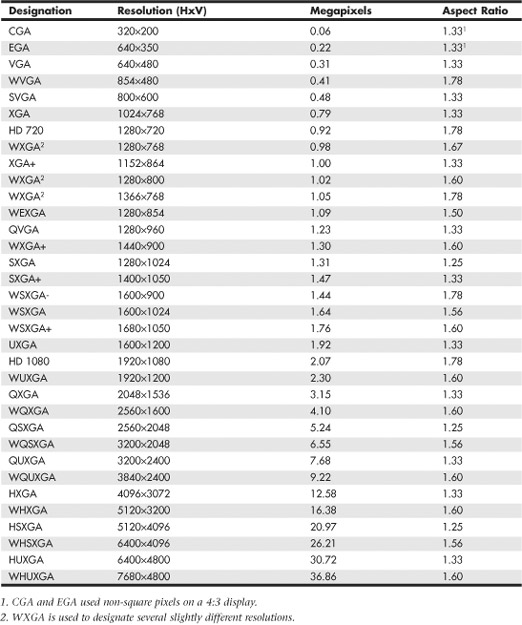

A video display adapter (aka video card) provides the interface between your computer and your monitor and transmits the signals that appear as images on the display. Throughout the history of the PC, there has been a succession of standards for video hardware that represents a steady increase in screen resolution, color depth, and performance. The following list of standards can serve as an abbreviated history of PC video-display technology:

• MDA (Monochrome Display Adapter)

• HGC (Hercules Graphics Card)

• CGA (Color Graphics Adapter)

• EGA (Enhanced Graphics Adapter)

• VGA (Video Graphics Array)

• SVGA (Super VGA)

• XGA (Extended Graphics Array) and beyond

IBM pioneered most of these standards, but other manufacturers of compatible PCs adopted and enhanced them as well. Today, IBM no longer sets standards for the PC business (it even sold its PC business to Lenovo in 2005), and many of these standards are obsolete.

Today’s VGA and later video adapters can also handle most older software written for CGA, EGA, and other obsolete graphics standards. This enables you to use many, if not most, older graphics software (such as games and educational programs) on your current system.

Video Adapter Types

A monitor requires a source of input. The signals that run to your monitor come from one or more video display adapters in the system.

There are three basic types of video display adapters:

• Discrete plug-in video cards—These cards require the use of an expansion slot, but provide the highest possible level of features and performance.

• Discrete video on the motherboard—The same discrete circuitry that can be found on a video card can also be directly built in or mounted on the motherboard. This is how high-end video is installed in modern laptops and some older desktop systems; however, modern desktops normally use either discrete video on a plug-in card or motherboard chipset integrated video instead.

• Motherboard chipset integrated video—Integrated video shares the system RAM and other components. This has the lowest cost of any video solution, but performance can also be very low, especially for 3D gaming or other graphics-intensive applications. Resolution and color-depth options are also more limited than those available with add-on video cards. Because it is very economical on power, integrated video is used in many laptops for improved battery life. Many desktop systems with integrated video allow the installation of a discrete video plug-in card as an upgrade.

The term video adapter applies to either discrete or integrated video circuitry. The term graphics adapter is essentially interchangeable with video adapter because every video adapter except the original IBM Monochrome Display Adapter (MDA) can display graphics as well as text.

Integrated Video/Motherboard Chipsets

Although built-in video has been a staple of low-cost computing for a number of years, until the late 1990s most motherboard-based video simply mounted discrete video components on the motherboard. The performance and features of discrete video are essentially the same whether it is soldered into the motherboard or plugged in via an expansion card. In most cases the built-in discrete video could be upgraded by adding a video card. Some motherboard-based discrete video implementations also had provisions for memory upgrades.

However, in recent years the move toward increasing integration on the motherboard has led to the development of motherboard chipsets that include video support as part of the chipset design. In effect, the motherboard chipset takes the place of most of the discrete video card components and uses a portion of main system memory as video memory. The use of main system memory for video memory is often referred to as unified memory architecture (UMA), and although this memory-sharing method was also used by some built-in video that used its own chipset, it has become much more common with the rise of integrated motherboard chipsets.

Silicon Integrated Systems (SiS) pioneered chipsets with integrated video in 1996 and 1997 with its SiS5510 and SiS5596 chipsets for laptop and desktop systems, respectively. In 1997, Cyrix Semiconductor (now owned by VIA Technologies) introduced the MediaGX, which was the first to build both graphics and chipset functions into a PC-compatible CPU. National Semiconductor and later AMD developed improved versions of the MediaGX known as the Geode GX series.

Intel introduced motherboard chipsets with integrated graphics in 1999, starting with its 810 chipset for the Pentium III and Celeron processors. The 810 (codenamed Whitney) heralded the beginning of widespread industry support for this design, and the beginning of Intel’s dominance in the graphics market. Intel later followed the release of the 810 series (810 and 810E) with the 815 series for the Pentium III and Celeron, most of which also feature integrated video.

Since then, Intel has offered versions of both desktop and mobile chipsets with integrated graphics, and it has been the world’s largest supplier of graphics chips virtually every year since. This may sound strange because most people think of NVIDIA and ATI when it comes to graphics. Although NVIDIA and ATI may dominate the high-end discrete graphics chip market, the market for lower-cost desktop and laptop systems with integrated graphics is larger than that for discrete graphics. Table 12.1 shows graphics chip market share data from JPR (Jon Peddie Research).

Table 12.1 Graphics Chip Market Share

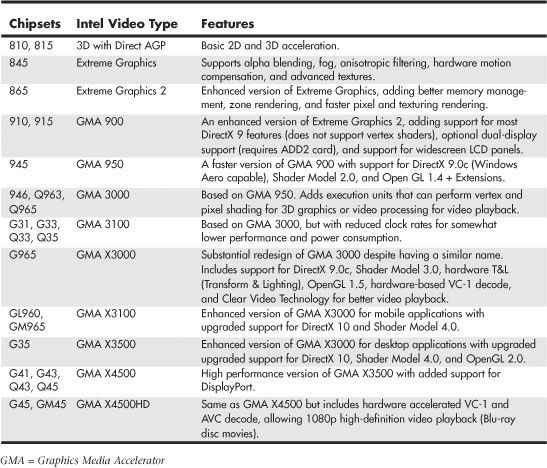

Table 12.2 shows the types and features for the integrated graphics available in Intel motherboard chipsets over the years.

Table 12.2 Intel Motherboard Chipset Integrated Video

Besides Intel, other major vendors of chipsets with integrated graphics include AMD/ATI, NVIDIA, SiS, and VIA/S3. Because there have been so many different chipsets with integrated video from these manufacturers over the years, I recommend consulting the specific manufacturer websites for more detailed information on specific chipset models and capabilities.

Newer integrated chipsets support digital outputs (such as DVI, HDMI, or DisplayPort) for use with digital LCD panels and home theater components. Figure 12.2, later in this chapter, illustrates how you can differentiate these ports.

Although a serious 3D gamer will not be satisfied with the performance of integrated graphics, business, home/office, and casual gamers will find that integrated chipset-based video on recent platforms is satisfactory in performance and provides significant cost savings compared with a separate video card. If you decide to buy a motherboard with an integrated chipset, I recommend that you select one that also includes a PCI Express x16 video expansion slot. This enables you to add a faster video card in the future if you decide you need it.

Video Adapter Components

Video display adapters contain certain basic components, usually including the following:

• Video BIOS.

• Video processor/video accelerator.

• Video memory.

• Digital-to-analog converter (DAC). Formerly a separate chip, the DAC is usually incorporated into the video processor/accelerator chip. The DAC is not necessary on a purely digital subsystem (digital video card and display); however, most display subsystems still include analog VGA support.

• Bus connector.

• Video driver.

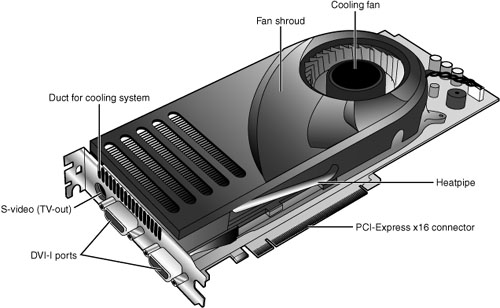

On high-performance video cards, such as the card shown in Figure 12.1, most of the components are underneath the cooling system. This card uses a combination of a fan and heatpipe to cool its graphics processing unit (GPU).

Figure 12.1 A typical example of a high-performance video card optimized for gaming and dual-display support.

Virtually all video adapters on the market today use chipsets that include 3D acceleration features. The following sections examine the video BIOS and processor in greater detail.

The Video BIOS

Video adapters include a BIOS that is separate from the main system BIOS. If you turn on your monitor first and look quickly, you might see an identification banner for your adapter’s video BIOS at the very beginning of the system startup process.

Similar to the system BIOS, the video adapter’s BIOS takes the form of a ROM (read-only memory) chip containing basic instructions that provide an interface between the video adapter hardware and the software running on your system. The software that makes calls to the video BIOS can be a standalone application, an operating system, or the main system BIOS. The programming in the BIOS chip enables your system to display information on the monitor during the system POST and boot sequences, before any other software drivers have been loaded from disk.

See “BIOS Basics,” p. 313 (Chapter 5, “BIOS”).

In some cases the video BIOS also can be upgraded, just like a system BIOS. The video BIOS normally uses a rewritable chip called an EEPROM (electrically erasable programmable read-only memory). On very old cards, you might be able to physically replace the BIOS chip with a new one, provided the manufacturer supplies one and did not solder the chip to the printed circuit board. Most video cards use a surface-mounted BIOS chip rather than a socketed chip. A BIOS you can upgrade using software is referred to as a flash BIOS, and most video cards that offer BIOS upgrades use this method. However, because the video BIOS is used only during startup for VGA emulation, such upgrades are rarely necessary, and most vendors fix problems by issuing updated drivers rather than BIOS updates.

Note

Video BIOS upgrades are sometimes referred to as firmware upgrades.

The Video Processor

The video processor (also known as the video chipset, video graphics processor, or GPU) is the heart of any video adapter and essentially defines the card’s functions and performance levels. Two video adapters built using the same chipset will have the same basic capabilities. However, cards built using the same chipset can vary in the clock speeds at which they run the chipset, memory, and other components, as well as in the amount and type of memory installed. Therefore, performance can vary. The software drivers that operating systems and applications use to address the video adapter hardware are written primarily with the chipset in mind. You can normally use a driver intended for an adapter with a particular chipset on any other adapter that uses the same chipset or a chipset from the same family.

Identifying the Video and System Chipsets

Before you purchase a system or a video card, you should find out which chipset the video card or video circuit uses. For systems with integrated chipset video, you need to find out which integrated chipset the system uses. This allows you to have the following:

• A better comparison of the card or system to others

• Access to technical specifications

• Access to reviews and opinions

• The ability to make a better buying decision

• The choice of card manufacturer or chipset manufacturer support and drivers

Because video card performance and features are critical to enjoyment and productivity, find out as much as you can before you buy the system or video card by using the chipset or video card manufacturer’s website and third-party reviews. Poorly written or buggy drivers can cause several types of problems, so be sure to check periodically for video driver updates and install any that become available. With video cards, support after the sale can be important. Therefore, you should check the manufacturer’s website to see whether it offers updated drivers and whether the product seems to be well supported.

Note that although NVIDIA and AMD/ATI are the leading suppliers of discrete graphics processors, they do not normally make video cards. Instead, they create video card reference designs, which the various card manufacturers use to develop their own specific cards. Because each card manufacturer can customize or modify the designs as it chooses, two cards that use the same graphics chipset may differ in features as well as in actual performance. This means a wide variety of video cards use the same chipset; it also means you are likely to find variations in card performance, software bundles, warranties, and other features between cards using the same chipset.

Video RAM

Most discrete video adapters rely on their own onboard memory, which they use to store video images while processing them. Systems with integrated video use the unified memory architecture (UMA) feature to share the main system memory. In either case, the memory on the video card or the memory borrowed from the system performs the same tasks.

The amount of video memory determines the maximum screen resolution and color depth the device can support, among other features. You often can select how much memory you want on a particular video adapter; for example, 256MB, 512MB, and 1GB are common choices today. Although having more video memory is not guaranteed to speed up your video adapter, it can increase the speed if it enables a wider bus (for example, from 128 bits wide to 256 bits wide) or provides nondisplay memory as a cache for commonly displayed objects. It also enables the card to generate more colors and higher resolutions and allows 3D textures to be stored and processed on the card, rather than in slower main memory.

Many types of memory have been used with video adapters. These memory types are summarized in Table 12.3.

Table 12.3 Memory Types Used in Video Display Adapters

Some of these, including FPM DRAM, EDO DRAM, and SDRAM, were also used for main memory in PCs. All of the others were specifically designed for use in graphics subsystems.

For more information about FPM DRAM, EDO DRAM, and SDRAM, see Chapter 6, “Memory,” p. 375.

VRAM and WRAM

VRAM and WRAM are dual-ported memory types that can read from one port and write data through the other port. This improves performance by reducing wait times for accessing the video RAM compared to FPM DRAM and EDO DRAM.

SGRAM

Synchronous Graphics RAM (SGRAM) was designed to be a high-end solution for very fast video adapter designs. SGRAM is similar to SDRAM in its capability to be synchronized to high-speed buses up to 200MHz, but it differs from SDRAM by including circuitry to perform block-writes to increase the speed of graphics fill and 3D Z-buffer operations.

DDR SGRAM

Double Data Rate SGRAM is designed to transfer data at twice the rate of conventional SGRAM by transferring data on both the rising and falling edges of each clock cycle.

GDDR2 SGRAM

There have been several variations of what has been called GDDR2. The first was based on standard 2.5V DDR SDRAM with some enhancements, whereas the second was based on 1.8V DDR2 SDRAM and offered much higher performance and cooler operation.

GDDR3 SGRAM

GDDR3 SGRAM is based on DDR2 memory, but with two major differences:

• GDDR3 separates reads and writes with a single-ended unidirectional strobe, whereas DDR2 uses differential bidirectional strobes. This method enables higher data transfer rates.

• GDDR3 uses an interface technique known as pseudo-open drain, which uses voltage instead of current. This method makes GDDR3 memory compatible with GPUs designed to use DDR, GDDR2, or DDR2 memory. To determine the type of memory used on a particular video card, check the video card manufacturer’s specification sheet.

GDDR4 SGRAM

GDDR4 SGRAM is used by some of the newer cards. Compared to GDDR3, GDDR4 memory has the following features:

• Higher bandwidth per clock cycle. GDDR4 running at half the clock speed of GDDR3 provides bandwidth comparable to that of its predecessor.

• Greater memory density, so fewer chips are needed to reach a particular memory size.

GDDR5 SGRAM

GDDR5 SGRAM is based on the previous GDDR standards with several modifications to allow increased performance. The main differences include the following:

• Signal optimization using data/address bit inversion, adjustable driver strength, adjustable voltage, and adjustable termination

• Adaptive interface timing using data training that is scalable per bit or byte

• Error compensation, including real-time error detection on both reads and writes, with fast retransmission when an error is detected

GDDR5 is also designed for aggressive power management, so that power is used only when necessary. This allows higher clock speeds with cooler operation. Current GDDR5 parts are rated at up to 7Gbps per data pin; across the 32 data lines of a typical GDDR5 chip, that works out to 28GBps of bandwidth per chip.

Video RAM Speed

Video RAM speed is typically measured in MHz, GHz, or by bandwidth in Mbits/Gbits or MBytes/GBytes per second. Faster memory and faster GPUs produce better gaming performance, but at a higher cost. However, if you are primarily concerned about business or productivity application performance, you can save money by using a video card with a slower GPU and slower memory.

Unless you dig deeply into the technical details of a particular graphics card, determining what type of memory a particular card uses can be difficult. Because none of today’s video cards feature user-upgradeable memory, I recommend that you look at the performance of a given card and choose the card with the performance, features, and price that’s right for you.

RAM Calculations

The amount of memory a video adapter needs to display a particular resolution and color depth is based on a mathematical equation. A location must be present in the adapter’s memory array to display every pixel on the screen, and the resolution determines the number of total pixels. For example, a screen resolution of 1024×768 requires a total of 786,432 pixels.

If you were to display that resolution with only two colors, you would need only 1 bit of memory space to represent each pixel. If the bit has a value of 0, the dot is black, and if its value is 1, the dot is white. If you use 32 bits of memory space to control each pixel, you can display more than 4 billion colors because 4,294,967,296 combinations are possible with a 32-digit binary number (2^32 = 4,294,967,296). If you multiply the number of pixels necessary for the screen resolution by the number of bits required to represent each pixel, you have the amount of memory the adapter needs to display that resolution. Here is how the calculation works for 1024×768 resolution with 32-bit color:

1024 × 768 = 786,432 pixels
786,432 pixels × 32 bits per pixel = 25,165,824 bits
25,165,824 bits ÷ 8 bits per byte = 3,145,728 bytes
3,145,728 bytes ÷ 1,048,576 bytes per MiB = 3MiB

As you can see, displaying 32-bit color (4,294,967,296 colors) at 1024×768 resolution requires exactly 3MiB of RAM on the video adapter. Because video adapter memory is normally installed in power-of-2 amounts (1MiB, 2MiB, 4MiB, and so on), you would need a video adapter with at least 4MiB of RAM onboard to run your system at that resolution and color depth.

To use the higher-resolution modes and greater numbers of colors common today, you would need much more memory on your video adapter than the 256KB found on the original IBM VGA. Using the same calculation, even a relatively high resolution of 1920×1080 (HDTV) with 32-bit color requires only about 7.91MiB, so 8MiB on the card would be enough. Because most modern video cards have 128MiB or more, you can see that two-dimensional images don’t require much memory.
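The frame buffer calculation above can be sketched in a few lines of Python. This is a minimal illustration, assuming a single 2D frame buffer with no padding or alignment requirements; the function names are hypothetical helpers, not part of any driver or library API.

```python
import math


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one full-screen image (a single 2D frame buffer)."""
    total_bits = width * height * bits_per_pixel
    return total_bits // 8  # 8 bits per byte


def mib(num_bytes: int) -> float:
    """Convert bytes to mebibytes (1 MiB = 1,048,576 bytes)."""
    return num_bytes / (1024 * 1024)


def smallest_power_of_two_mib(num_bytes: int) -> int:
    """Smallest power-of-2 memory size, in MiB, that can hold num_bytes."""
    needed = math.ceil(mib(num_bytes))
    size = 1
    while size < needed:
        size *= 2
    return size


for w, h in [(1024, 768), (1920, 1080)]:
    b = framebuffer_bytes(w, h, 32)
    print(f"{w}x{h} @ 32-bit: {b:,} bytes = {mib(b):.2f} MiB, "
          f"needs a {smallest_power_of_two_mib(b)} MiB card")
```

Rounding up to a power of 2 mirrors the way video memory was traditionally installed, which is why 3MiB of required memory translates into a 4MiB card.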

3D video cards require more memory for a given resolution and color depth because the video memory must hold three buffers: the front buffer, the back buffer, and the Z-buffer. The amount of video memory required for a particular operation varies according to the settings used for the color depth and Z-buffer. Triple buffering allocates an additional back buffer compared with double buffering, which leaves less memory for 3D textures and can slow down the performance of some games. The buffering mode used by a given 3D video card usually can be adjusted through its properties sheet.
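A rough estimate of the buffer memory a 3D scene needs can be sketched as follows. This assumes, purely for illustration, that every buffer covers the full screen and that the Z-buffer uses 32 bits per pixel; real cards vary in depth-buffer format, may compress buffers, and also need memory for textures, which this sketch does not count.

```python
def buffer_memory_bytes(width: int, height: int, color_bits: int = 32,
                        z_bits: int = 32, back_buffers: int = 1) -> int:
    """Estimate memory for the front buffer, back buffer(s), and Z-buffer.

    back_buffers=1 models double buffering; back_buffers=2 models triple
    buffering. Assumes full-screen buffers and ignores textures and compression.
    """
    pixels = width * height
    color_buffer = pixels * color_bits // 8
    z_buffer = pixels * z_bits // 8
    # One front buffer plus the requested number of back buffers, plus the Z-buffer.
    return color_buffer * (1 + back_buffers) + z_buffer


MIB = 1024 * 1024
double = buffer_memory_bytes(1920, 1080, back_buffers=1)
triple = buffer_memory_bytes(1920, 1080, back_buffers=2)
print(f"double buffering: {double / MIB:.2f} MiB")
print(f"triple buffering: {triple / MIB:.2f} MiB")
```

The difference between the two results is one extra full-screen color buffer, which is memory that is no longer available for textures.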

Although current integrated graphics solutions feature 3D support, the performance they offer is limited by being based on older, less powerful 3D GPUs and by the narrow data bus they use to access memory. Because integrated graphics solutions share video memory with the processor, they use the same data bus as the processor. In a single-channel-based system, this restricts the data bus to 64 bits. A dual-channel system has a 128-bit data bus, but today’s fastest 3D video cards feature a 512-bit or wider data bus. The wider the data bus, the more quickly graphics data can be transferred.

For these reasons, you are likely to be disappointed (and lose a lot of games!) if you play 3D games using integrated graphics. To enjoy 3D games, opt for a mid-range to high-end 3D video card based on a current ATI or NVIDIA chipset with 256MB of RAM or more. If your budget permits, you might also consider using a multicard solution from ATI or NVIDIA that allows you to use two or more PCI Express video cards to increase your graphics processing performance.

See “Dual-GPU Scene Rendering,” p. 706 (this chapter).

Note

If your system uses integrated graphics and you have less than 256MB of RAM, you might be able to increase your available graphics memory by upgrading system memory (system memory is used by the integrated chipset). Some Intel chipsets with integrated graphics automatically detect additional system memory and adjust the size of graphics memory automatically.

Video Memory Bus Width

Another issue with respect to the memory on the video adapter is the width of the bus connecting the graphics chipset and memory on the adapter. The chipset is usually a single large chip on the card that contains virtually all the adapter’s functions. It is wired directly to the memory on the adapter through a local bus on the card. Most of the high-end adapters use an internal memory bus that is up to 512 bits wide (or more in some cases). This jargon can be confusing because video adapters that take the form of separate expansion cards also plug into the main system bus, which has its own speed rating. When you read about a 256-bit or 512-bit video adapter, you must understand that this refers to the memory connection on the card, not the connection to the motherboard. Between two cards with otherwise similar GPU, memory type, and memory size specifications, the card with the wider memory bus is preferable because a wider bus moves more data per transfer, which increases memory bandwidth and therefore performance.
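The effect of memory bus width comes down to simple arithmetic: peak bandwidth equals the bytes moved per transfer multiplied by the number of transfers per second. The sketch below assumes a hypothetical effective data rate of 4 GT/s on every data line; real rates depend on the memory type and clock, and sustained bandwidth is always lower than this theoretical peak.

```python
def memory_bandwidth_gbps(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Peak memory bandwidth in gigabytes per second.

    bus_width_bits: width of the GPU-to-memory bus (for example, 128, 256, 512)
    data_rate_gtps: effective transfers per second on each data line, in GT/s
                    (for DDR-type memory this is twice the clock frequency)
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gtps


# The same hypothetical 4 GT/s memory on buses of different widths.
for width in (64, 128, 256, 512):
    print(f"{width}-bit bus: {memory_bandwidth_gbps(width, 4.0):.0f} GBps")
```

Doubling the width doubles the peak bandwidth at the same memory speed. As a cross-check, the same formula gives 28GBps for a single 32-bit-wide GDDR5 chip running at 7Gbps per pin.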

See “System Bus Types, Functions, and Features,” p. 269 (Chapter 4, “Motherboards and Buses”).

The Digital-to-Analog Converter (DAC)

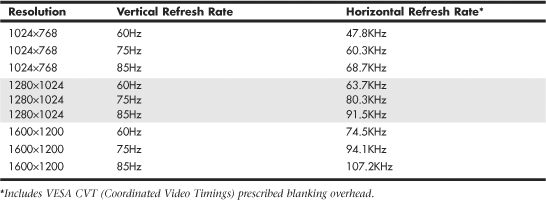

The digital-to-analog converter on a video adapter (commonly called a DAC or RAMDAC) does exactly what its name describes. The RAMDAC is responsible for converting the RAM-based digital images your computer generates into signals for analog monitor connections. The speed of the RAMDAC is measured in MHz; the faster the conversion process, the higher the adapter’s vertical refresh rate. The speeds of the RAMDACs used in today’s high-performance video adapters range from 300MHz to 500MHz. Most of today’s video card chipsets include the RAMDAC function inside the 3D accelerator chip, but some dual-display-capable video cards use a separate RAMDAC chip to allow the second display to work at different refresh rates than the primary display. Systems that use integrated graphics include the RAMDAC function in the North Bridge or GMCH chip portion of the motherboard chipset.

The benefits of increasing the RAMDAC speed include higher vertical refresh rates, which allow higher resolutions with flicker-free refresh rates (72Hz–85Hz or above). Typically, cards with RAMDAC speeds of 300MHz or above display flicker-free (75Hz or above) at all resolutions up to 1920×1200. Of course, as discussed earlier in this chapter, you must ensure that any resolution you want to use is supported by both your monitor and video card.
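Why a faster RAMDAC permits higher refresh rates can be illustrated by estimating the pixel clock a display mode demands: the RAMDAC must convert every pixel of every frame, including the blanking intervals during which a CRT beam retraces. This sketch uses assumed blanking overheads of 30% horizontally and 5% vertically; these are illustrative values, not figures taken from VESA or any other timing standard.

```python
def pixel_clock_mhz(h_active: int, v_active: int, refresh_hz: float,
                    h_blank: float = 0.30, v_blank: float = 0.05) -> float:
    """Rough pixel clock, in MHz, that a RAMDAC must sustain for a display mode.

    h_blank and v_blank are assumed blanking overheads expressed as fractions
    of the visible (active) width and height.
    """
    h_total = h_active * (1 + h_blank)   # visible pixels plus horizontal blanking
    v_total = v_active * (1 + v_blank)   # visible lines plus vertical blanking
    return h_total * v_total * refresh_hz / 1_000_000


clock = pixel_clock_mhz(1920, 1200, 75)
print(f"1920x1200 at 75Hz needs roughly {clock:.0f}MHz")
print("within a 300MHz RAMDAC" if clock <= 300 else "beyond a 300MHz RAMDAC")
```

Under these assumptions, 1920×1200 at 75Hz stays comfortably below 300MHz, which is consistent with the statement that 300MHz RAMDACs handle flicker-free refresh rates up to that resolution.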

Video Display Interfaces

Video display adapters connect a PC to a display and therefore must work through two main interfaces. The first is the system interface, meaning the connection between the video adapter and the PC, and the second is the display interface, meaning the connection between the video adapter and the display. By using standardized versions of these interfaces, we end up having video adapters and displays that are both compatible and easily interchangeable. This section discusses the available system and display interfaces as well as the differences between them.

The System Interface

Older video adapters were designed for use with earlier bus standards, such as the IBM MCA, ISA, EISA, and VL-Bus. Because of their relatively slow performance by today’s standards, all are now obsolete. Current video display adapters use the PCI, AGP, or PCI Express interface standards to connect to a system.

In current systems, PCI Express (in its 16-lane, or x16, form) is the most popular video card slot, having replaced the long-time standard AGP 8x. Older PCI video cards are more limited in performance and are sold primarily as add-ons or upgrades for older systems. For example, one common upgrade is to add a second video card to run two or more monitors, which often requires a PCI-based video card, even if the primary card is AGP-based.

See “The PCI Bus,” p. 286 (Chapter 4).

See “PCI Express,” p. 290 (Chapter 4).

See “Accelerated Graphics Port,” p. 292 (Chapter 4).

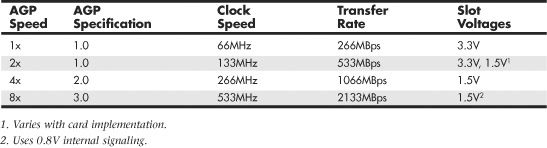

Accelerated Graphics Port (AGP)

The Accelerated Graphics Port (AGP), an Intel-designed dedicated video bus introduced in 1997, delivers a maximum bandwidth up to 16 times greater than that of a comparable PCI bus. AGP was the mainstream high-speed video-to-system interface for several years but has been replaced by the more versatile and faster PCI Express standard.
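The 16-fold figure follows from the bus clocks and transfer modes. Both AGP and conventional PCI are 32 bits (4 bytes) wide, but AGP runs at a 66.67MHz base clock versus 33.33MHz for PCI, and AGP 2x, 4x, and 8x move two, four, or eight transfers per clock. The sketch below uses exact fractions so that the ratios come out as whole numbers; it computes theoretical peaks only.

```python
from fractions import Fraction

AGP_CLOCK_MHZ = Fraction(200, 3)   # 66.67MHz AGP base clock
PCI_CLOCK_MHZ = Fraction(100, 3)   # 33.33MHz conventional 32-bit PCI clock
BUS_WIDTH_BYTES = 4                # both buses are 32 bits wide


def agp_mbps(multiplier: int) -> Fraction:
    """Peak AGP bandwidth in MBps for the 1x, 2x, 4x, or 8x transfer mode."""
    return AGP_CLOCK_MHZ * BUS_WIDTH_BYTES * multiplier


pci_mbps = PCI_CLOCK_MHZ * BUS_WIDTH_BYTES  # one transfer per clock

for mode in (1, 2, 4, 8):
    bw = agp_mbps(mode)
    print(f"AGP {mode}x: {float(bw):7.1f} MBps ({bw / pci_mbps}x PCI)")
```

Even AGP 1x doubles PCI bandwidth because of its doubled clock, and each later mode doubles it again, reaching 16 times PCI at 8x.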

The AGP slot is essentially an enhancement to the existing PCI bus; however, it’s intended for use only with video adapters and provides them with high-speed access to the main system memory array. This enables the adapter to process certain 3D video elements, such as texture maps, directly from system memory rather than having to copy the data to the adapter memory before the processing can begin. This saves time and eliminates the need to upgrade the video adapter memory to better support 3D functions. Although AGP version 3.0 provides for two AGP slots, this feature has never been implemented in practice. Systems with AGP have only one AGP slot.

Note

Although the earliest AGP cards had relatively small amounts of onboard RAM, most later implementations use large amounts of on-card memory and use a memory aperture (a dedicated memory address space above the area used for physical memory) to transfer data more quickly to and from the video card’s own memory. Integrated chipsets featuring built-in AGP use system memory for all operations, including texture maps.

Windows 98 and later versions support AGP’s Direct Memory Execute (DIME) feature. DIME uses main memory instead of the video adapter’s memory for certain tasks to lessen the traffic to and from the adapter. However, with the large amounts of memory found on current AGP video cards, this feature is seldom implemented.

Four speeds of AGP are available: 1x, 2x, 4x, and 8x (see Table 12.4 for details). Later AGP video cards support AGP 8x and can fall back to AGP 4x or 2x on systems that don’t support AGP 8x.

Table 12.4 AGP Speeds and Technical Specifications

AGP 3.0 was announced in 2000, but support for the standard required the development of motherboard chipsets that were not introduced until mid-2002. Almost all motherboard chipsets with AGP support released after that time featured AGP 8x support.

Although some systems with AGP 4x or 8x slots use a universal slot design that can handle 3.3V or 1.5V AGP cards, others do not. If a card designed for 3.3V (2x mode) is plugged into a motherboard that supports only 1.5V (4x mode) signaling, the motherboard may be damaged.

See “Accelerated Graphics Port,” p. 292 (Chapter 4).

Caution

Be sure to check AGP compatibility before you insert an older (AGP 1x/2x) card into a recent or current system. Even if you can physically insert the card, a mismatch between the card’s required voltage and the AGP slot’s voltage output can damage the motherboard. Check the motherboard manual for the card types and voltage levels supported by the AGP slot.

Some AGP cards can use either 3.3V or 1.5V voltage levels, adjusted via an onboard jumper. These cards typically use an AGP connector that is notched for use with either AGP 2x or AGP 4x slots, as pictured in Chapter 4. Be sure to set these cards to use 1.5V before using them in motherboards that support only 1.5V signaling.

PCI Express (PCIe)

PCI Express began to show up in systems in mid-2004 and has filtered down to almost all systems that use discrete video cards or have integrated video that can be upgraded. Despite the name, PCI Express uses a high-speed bidirectional serial data transfer method, and PCI Express channels (also known as lanes) can be combined to create wider and faster expansion slots. Each lane provides a data rate of 250MBps, 500MBps, or about 1,000MBps in each direction for PCI Express 1.x, 2.x, and 3.0, respectively. Because PCI Express uses dedicated point-to-point links rather than a shared bus, its slots, unlike PCI slots, do not compete with each other for bandwidth. PCI Express graphics cards use up to 16 lanes (x16) to enable speeds of 4GBps, 8GBps, or 16GBps in each direction, as seen in Table 12.5.

Table 12.5 PCI Express Video Card Bandwidth

Most PCI Express implementations include one x16 slot for video and two or more x1 slots for other add-on cards, as well as legacy PCI slots. Systems that support NVIDIA's SLI or ATI's CrossFire multiple-card PCI Express video technologies have two to four PCI Express video slots running at x8 or x16 speed.

The Display Interface

The display interface is used to connect the video display adapter to the monitor or display. Over the years, several different methods of connecting monitors have been available. Some of these interfaces have been analog, and others have been digital.

The very early PC video standards used from 1981 through the late 1980s were based on crude (by today's standards) digital interface designs. These included the original MDA (Monochrome Display Adapter), CGA (Color Graphics Adapter), and EGA (Enhanced Graphics Adapter) standards. The CGA and EGA in particular generated different colors by sending digital color signals down three wires, which allowed for the display of up to eight colors (2³). Another signal doubled the number of color combinations from eight to 16 by allowing each color to display at two intensity levels. This type of digital display was easy to manufacture and offered simplicity, with consistent color combinations from system to system. The main drawback of the early digital display standards was the limited number of possible colors.
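With three color lines plus an intensity line, every color is one 4-bit combination, which is why the count tops out at 16. The minimal Python sketch below enumerates those combinations. The mapping to 8-bit RGB values (0xAA per active color line, plus 0x55 on every channel when intensity is on) is an assumed approximation for illustration; real monitors differed in the details.

```python
from itertools import product


def channel_level(color_bit: int, intensity_bit: int) -> int:
    # Assumed 8-bit approximation: an active color line contributes 0xAA,
    # and the intensity line adds 0x55 to every channel.
    return color_bit * 0xAA + intensity_bit * 0x55


def rgbi_palette() -> list[tuple[int, int, int]]:
    """Enumerate every combination of the R, G, B, and I signal lines."""
    palette = []
    # Looping in I, R, G, B order makes each list index equal the 4-bit
    # IRGB value carried on the four wires.
    for i, r, g, b in product((0, 1), repeat=4):
        palette.append(
            (channel_level(r, i), channel_level(g, i), channel_level(b, i))
        )
    return palette


if __name__ == "__main__":
    colors = rgbi_palette()
    print(len(colors[:8]), "colors from the three color lines alone")
    print(len(colors), "colors once the intensity line is added")
```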

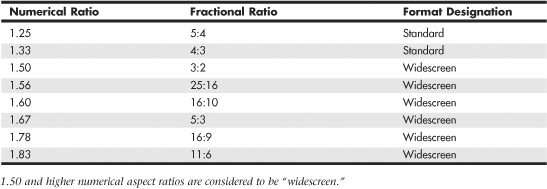

Unlike earlier digital video standards, VGA (Video Graphics Array) is an analog system. VGA came out in 1987 and began a shift from digital to analog that lasted for more than 20 years. Only recently has there been a shift back to digital. Why go from digital to analog and then back to digital? The simple answer is that analog was the least expensive way at the time to design a CRT-based system that supported a reasonable resolution with a reasonable number of colors. Now that technology has advanced and LCD panels have largely replaced CRTs, going back to digital interfaces makes sense.

The video interfaces (and connectors) you are likely to encounter in PCs dating from the late ’80s to the present include the following:

• VGA (Video Graphics Array)

• DVI (Digital Visual Interface)

• HDMI (High-Definition Multimedia Interface)

• DisplayPort

VGA is an analog connection, while the others are digital. The connectors for these interfaces are shown in Figure 12.2.

Figure 12.2 Video interface connectors used in PCs from the late ’80s to the present.

The following sections focus on these display interfaces.

Video Graphics Array (VGA)

IBM introduced the Video Graphics Array (VGA) interface and display standard on April 2, 1987, along with a family of systems it called PS/2. VGA originally included the display adapter, the monitor, and the connection between them. Since that time the display adapters and monitors have evolved, but the VGA 15-pin analog connection went on to become the most popular video interface in history, and is still used today in PC video adapters and displays.

VGA is an analog design. Analog uses a separate signal for each CRT color gun, but each signal can be sent at varying levels of intensity: 64 levels, in the case of the original VGA standard. This provides 262,144 possible colors (64³), of which 256 could be simultaneously displayed in the original design. For realistic computer graphics, color depth is often more important than high resolution because the human eye perceives a picture that has more colors as being more realistic.
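In the original VGA design, the 256 on-screen colors were chosen through a palette (a color lookup table) whose entries each hold a 6-bit level for every gun; an 8-bit pixel value selects an entry, and the DAC converts that entry's three levels to analog voltages. The Python sketch below models that lookup. The grayscale palette is a hypothetical example, not a standard VGA default.

```python
LEVELS_PER_GUN = 64   # 6-bit level for each of red, green, and blue
PALETTE_SIZE = 256    # colors that can be on screen at once

TOTAL_COLORS = LEVELS_PER_GUN ** 3  # 262,144 possible colors


def grayscale_palette() -> list[tuple[int, int, int]]:
    """Hypothetical example: spread 256 entries across 64 gray levels."""
    return [(i >> 2,) * 3 for i in range(PALETTE_SIZE)]


def lookup(pixel: int, palette: list[tuple[int, int, int]]) -> tuple[int, int, int]:
    """Translate an 8-bit pixel value into the (r, g, b) levels sent to the DAC."""
    if not 0 <= pixel < PALETTE_SIZE:
        raise ValueError("pixel value must fit in 8 bits")
    r, g, b = palette[pixel]
    if not all(0 <= level < LEVELS_PER_GUN for level in (r, g, b)):
        raise ValueError("palette entries must be 6-bit levels")
    return r, g, b


if __name__ == "__main__":
    palette = grayscale_palette()
    print(TOTAL_COLORS, "possible colors;", len(palette), "at a time")
    print("pixel 255 ->", lookup(255, palette))
```

Changing a palette entry recolors every pixel that references it, which is how programs drew from the full 262,144-color range while storing only 8 bits per pixel.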

VGA was designed to be addressed through the VGA BIOS interface, a software interface that forced programs to talk to the BIOS-based driver rather than directly to the hardware. This allowed programs to call a consistent set of commands and functions that would work on different hardware, as long as a compatible VGA BIOS interface was present. The original VGA cards had the BIOS on the video card directly, in the form of a ROM chip containing from 16KB to 32KB worth of code. Modern video cards and laptop graphics processors still have this 32KB onboard BIOS. Typically, the only time the ROM-based drivers are used is during boot, when running legacy DOS-based applications or games, or when you run Windows in Safe Mode.

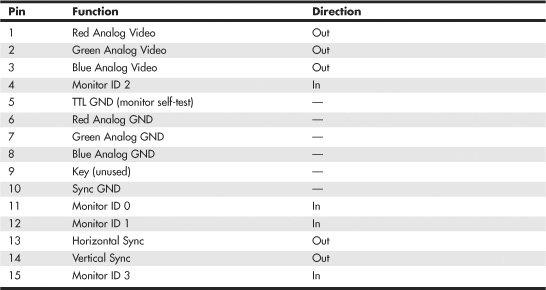

VGA also describes a 15-pin analog interface connection that can support a wide variety of modes. The connection is analog because VGA was primarily designed to drive CRT displays, which are analog by nature. When a display is connected via VGA, the digital signals inside the PC are converted to analog signals by the DAC (Digital-to-Analog Converter) chip in the display adapter and are then sent to the display via the analog VGA connection. The VGA connector is shown in Figure 12.3; the pinouts are shown in Table 12.6.

Figure 12.3 The standard 15-pin analog VGA connector.

Table 12.6 15-Pin Analog VGA Connector Pinout

The mating VGA cable connector that plugs into this connector normally has pin 9 missing. The design intended for the corresponding hole in the video card's connector to be plugged, but it is usually left open (and merely unused) instead. The connector is keyed by virtue of the D-shaped shell and pin alignment, so it is difficult to plug in backward even without the key pin. Pin 5 is used only for testing purposes, and pin 15 is rarely used; they are often missing as well. To identify the type of monitor connected to the system, some early VGA cards used the presence or absence of the monitor ID pins in various combinations.

In addition to the connector and electrical interface, the original VGA standard also defined a number of text and graphics display modes with various resolutions and colors. The original VGA modes allowed for a maximum graphics resolution of 640×480 in only 16 (4-bit) colors. This was the maximum that could be supported by the original 256KB of RAM included on the card.

IBM introduced higher-resolution versions of VGA called XGA and XGA-2 in the early 1990s, but most of the development of VGA standards has come from the third-party video card industry and its trade group, the Video Electronics Standards Association (VESA).

When VGA originated in 1987, it had very low resolution and color capability by today’s standards. Since then, VGA has evolved to support higher resolution modes with many more colors. Even the least-expensive video adapters on the market today can work with modes well beyond the original VGA standard.

SVGA and XGA

The original IBM VGA card was quickly cloned by other video card manufacturers. To distinguish their products from the IBM original, many provided additional modes and capabilities, generically calling them “Super” VGA or SVGA cards.

By 1989, competing video card and display manufacturers wanted to cooperate to make the new SVGA capabilities an industry standard and to keep them compatible with existing software and hardware designed for VGA.

In February 1989, an international nonprofit group called Video Electronics Standards Association (VESA) was formed to create industrywide interface standards for the PC and other computing environments. VESA was designed to create and promote open standards for the display and display interface industry, which would ensure interoperability and yet also allow for innovation. VESA is led by a board of directors that represents a voting membership of more than 100 corporate members worldwide. The members are PC hardware, software, display, and component manufacturers, as well as cable and telephone companies, service providers, and more. VESA essentially took the role of defining PC video interface standards away from IBM, giving it instead to the VESA members.

In August 1989, VESA introduced its first standard, an 800×600 4-bit (16-color) BIOS interface standard called Super VGA (SVGA) mode 6Ah, which was the maximum that could be supported by the original 256KB of RAM included on early VGA cards. This allowed companies to independently develop video hardware having a common software interface, thus allowing for higher resolution functionality while maintaining interchangeability and backward compatibility with existing VGA. Shortly thereafter, VESA extended the SVGA standard with other modes and resolutions, and it developed or contributed to many successive standards in PC video.
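Whether a mode fits in a card's memory comes down to simple arithmetic: width × height × bits per pixel ÷ 8 bytes for one full screen. The Python sketch below applies it to 256KB. It treats memory as one packed buffer; real VGA hardware stored 16-color modes in four bit planes, but the total is the same. It ignores memory reserved for fonts or extra pages, so the results are lower bounds.

```python
VGA_MEMORY_BYTES = 256 * 1024  # 256KB on the original VGA card


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed to hold one full screen at the given color depth."""
    return width * height * bits_per_pixel // 8


def fits(width: int, height: int, bits_per_pixel: int,
         memory: int = VGA_MEMORY_BYTES) -> bool:
    return framebuffer_bytes(width, height, bits_per_pixel) <= memory


if __name__ == "__main__":
    modes = [(640, 480, 4), (800, 600, 4), (640, 480, 8), (1024, 768, 4)]
    for w, h, bpp in modes:
        size = framebuffer_bytes(w, h, bpp)
        verdict = "fits" if fits(w, h, bpp) else "too large"
        print(f"{w}x{h} at {2 ** bpp} colors: {size:,} bytes ({verdict})")
```

Among these modes, 800×600 in 16 colors (240,000 bytes) is the largest that fits in 256KB; 640×480 in 256 colors and 1024×768 in 16 colors both exceed it.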

Note

Although SVGA technically defines a set of VESA standards that includes modes from 800×600 and beyond, typically we use the term SVGA to describe only the 800×600 mode. Other higher-resolution modes have been given different names (XGA, SXGA, and so on), even though they are technically part of the VESA SVGA specifications.

IBM further increased the RAM as well as the available resolutions and colors when it introduced the XGA (eXtended Graphics Array) standard in 1990. XGA was basically an enhanced version of VGA, with more memory (1MB), enhanced resolution, color content, and increased hardware functionality. XGA was also optimized for Windows and other graphical user interfaces. The most exciting feature XGA added to VGA was support for two new graphics modes:

• 1024×768 256-color mode

• 640×480 256-color mode

Notably missing from IBM’s original XGA interface was the VESA-defined SVGA 800×600 16-color mode, which had debuted just over a year earlier. That was important because not many monitors at the time could handle 1024×768, but many could handle 800×600. With IBM’s card you had to jump from 640×480 directly to 1024×768, which required a very expensive monitor back then. That oversight was finally corrected when IBM released XGA-2 in 1992. XGA-2 added more performance and additional color depth, as well as support for the mid-range SVGA 800×600 VESA modes:

• 640×480 256- and 65,536-color modes

• 800×600 16-, 256-, and 65,536-color modes

• 1024×768 16- and 256-color modes
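The same frame-buffer arithmetic suggests why the XGA-2 list stops at 256 colors for 1024×768. The Python sketch below checks each listed mode against the 1MB of memory mentioned for XGA. It is a lower-bound estimate that ignores any memory the hardware reserved for other purposes.

```python
XGA_MEMORY_BYTES = 1024 * 1024  # 1MB on the card

# XGA-2 graphics modes from the list above: resolution -> color counts.
XGA2_MODES = {
    (640, 480): (256, 65_536),
    (800, 600): (16, 256, 65_536),
    (1024, 768): (16, 256),
}


def bytes_needed(width: int, height: int, colors: int) -> int:
    """Frame-buffer size for one screen; colors must be a power of two."""
    bits_per_pixel = colors.bit_length() - 1  # 16 -> 4, 256 -> 8, 65,536 -> 16
    if 1 << bits_per_pixel != colors:
        raise ValueError("color count must be a power of two")
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    for (w, h), color_counts in XGA2_MODES.items():
        for colors in color_counts:
            print(f"{w}x{h}, {colors:,} colors: {bytes_needed(w, h, colors):,} bytes")
    # The combination missing from the list:
    print(f"1024x768, 65,536 colors: {bytes_needed(1024, 768, 65_536):,} bytes")
```

Every listed mode needs at most 960,000 bytes, while 1024×768 in 65,536 colors would need 1,572,864 bytes, more than the card held.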

Since then, VESA and other industry groups have defined all the newer video interface and display standards. IBM became a member of VESA and many of the other groups as well.

Digital Display Interfaces

The analog VGA interface works well for CRTs, which are inherently analog devices, but VGA does not work well for LCD, plasma, or other types of flat-panel displays that are inherently digital. Video data starts out digitally inside the PC and is converted to analog when the VGA interface is used. When you are running a digital display such as an LCD over an analog interface such as VGA, the signal must then be converted back to digital before it can be displayed, resulting in a double conversion that causes screen artifacts, blurred text, color shifting, and other kinds of problems.

Using a digital interface eliminates the double conversion, allowing the video information to remain as digital data from the PC all the way to the screen. Therefore, a trend back to using digital video interfaces has occurred, especially for inherently digital displays such as LCD flat panels.

Laptop computers have avoided this problem by using an internal digital connection called FPD-Link (Flat Panel Display-Link), which was originally developed by National Semiconductor in 1995. Unfortunately, this standard was not designed for external connections requiring longer cable lengths or extremely high resolutions. What was needed was an industry standard digital connection for external displays.

In order to facilitate a digital video connection between PCs and external displays, several digital video signal standards and specifications have been available:

• Plug and Display (P&D)

• Digital Flat Panel (DFP)

• Digital Visual Interface (DVI)

• High Definition Multimedia Interface (HDMI)

• DisplayPort

The Plug and Display (P&D) and Digital Flat Panel (DFP) standards were released by the Video Electronics Standards Association (VESA) in June 1997 and February 1999, respectively. Both were based on the PanelLink TMDS (Transition Minimized Differential Signaling) protocol developed by Silicon Image. Unfortunately, both of these interfaces had relatively low-resolution support (1280×1024 maximum) and were only implemented in a handful of video cards and monitors. As such, they never really caught on in the mass market and were both overshadowed by the Digital Visual Interface (DVI), which became the first truly popular digital display interface standard.

DVI

The Digital Visual Interface (DVI) was introduced on April 2, 1999 by the Digital Display Working Group (DDWG). The DDWG was formed in 1998 by Intel, Silicon Image, Compaq, Fujitsu, Hewlett-Packard, IBM, and NEC to address the need for a universal digital interface standard between a host system and a display. Unlike the P&D and DFP interfaces that came before it, DVI gained immediate widespread industry support, with 150 DVI products being shown at the Intel Developer Forum in August 1999, only four months after DVI was released. Since then, DVI has become the most popular interface for digital video connections. DVI also allows for both digital and VGA analog connections using the same basic connector.

DVI uses Transition Minimized Differential Signaling (TMDS), which was developed by Silicon Image (www.siliconimage.com) and trademarked under the name PanelLink. TMDS takes 24-bit parallel digital data from the video controller and transmits it serially over balanced lines at a high speed to a receiver. A single-link TMDS connection uses four separate differential data pairs, with three for color data (one each for red, green, and blue data) and the fourth pair for clock and control data. Each twisted pair uses differential signaling with a very low 0.5V swing over balanced lines for reliable, low-power, high-speed data transmission. A low-speed VESA Display Data Channel (DDC) pair is also used to transmit identification and configuration information, such as supported resolution and color-depth information, between the graphics controller and display.

TMDS is designed to support cables up to 10 meters (32.8 feet) in length, although the limits may be shorter or longer depending on cable quality. Several companies make products that can amplify or re-drive the signals, allowing for greater lengths. Figure 12.4 shows a block diagram of a single-link TMDS connection.

Figure 12.4 A single-link TMDS connection.

Using TMDS, each color channel (red/green/blue) transmits 8 bits of data (encoded as a 10-bit character) serially at up to 165MHz. This results in a raw throughput of 1.65Gbps per channel. There are three color channels per link, resulting in a maximum raw bandwidth of 4.95Gbps per link. Because the data is sent using 8b/10b encoding, only 8 bits of every 10 are actual data, resulting in a maximum true video data throughput of 3.96Gbps. This enables a single-link DVI connection to easily handle computer video resolutions as high as WUXGA (1920×1200) as well as 1080p HDTV (1920×1080 with progressive scan).

If more bandwidth is necessary, the DVI standard supports a second TMDS link in the same cable and connector. This uses three additional TMDS signal pairs (one for each color) and shares the same clock and DDC signals as the primary link. This is called dual-link DVI, and it increases the maximum raw bandwidth to 9.9Gbps and the true data bandwidth to 7.92Gbps, which will handle computer resolutions as high as WQUXGA (3840×2400) at reduced refresh rates. Normally only 30″ or larger flat-panel displays use resolutions high enough to require dual-link DVI. Even higher resolution displays can be supported with dual DVI ports, each with a dual-link connection.
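The TMDS figures above follow from multiplying the clock rate by 10 bits per character and by the number of color channels, then keeping 8 of every 10 bits as data. The Python sketch below reproduces that arithmetic and compares it with the bit rate of the visible pixels of a mode. The 24-bit color depth and 60Hz refresh rate in the example are assumptions, and the pixel-rate helper ignores blanking intervals, so it gives only a lower bound on what a real display timing needs.

```python
SINGLE_LINK_MAX_CLOCK_HZ = 165_000_000
BITS_PER_CHARACTER = 10      # each 8-bit value travels as a 10-bit character
DATA_BITS_PER_CHARACTER = 8
COLOR_CHANNELS_PER_LINK = 3  # red, green, blue


def tmds_bandwidth_bps(clock_hz: int = SINGLE_LINK_MAX_CLOCK_HZ,
                       links: int = 1) -> tuple[int, int]:
    """Return (raw, data) bit rates for a TMDS connection."""
    raw = clock_hz * BITS_PER_CHARACTER * COLOR_CHANNELS_PER_LINK * links
    data = raw * DATA_BITS_PER_CHARACTER // BITS_PER_CHARACTER
    return raw, data


def active_pixel_bps(width: int, height: int, refresh_hz: int,
                     bits_per_pixel: int = 24) -> int:
    """Bit rate of the visible pixels only; blanking intervals add more."""
    return width * height * refresh_hz * bits_per_pixel


if __name__ == "__main__":
    for links in (1, 2):
        raw, data = tmds_bandwidth_bps(links=links)
        print(f"{links} link(s): {raw / 1e9:.2f}Gbps raw, {data / 1e9:.2f}Gbps data")
    need = active_pixel_bps(1920, 1200, 60)
    print(f"WUXGA at 60Hz needs at least {need / 1e9:.2f}Gbps")
```

Passing `clock_hz=340_000_000` reproduces the HDMI 1.3 figures of 10.2Gbps raw and 8.16Gbps of data, since HDMI uses the same TMDS signaling.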

TMDS links include support for Display Data Channel (DDC), a low-speed, bidirectional standard for communication between PCs and monitors, created by VESA. DDC defines the physical connection and signaling method, whereas the communications and data protocol is defined under the VESA Extended Display Identification Data (EDID) standard. DDC and EDID allow the graphics controller to identify the capabilities of the display so the controller can automatically configure itself to match the display's capabilities.
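EDID data is a structured block of bytes that the graphics controller reads from the display over DDC. As a minimal sketch, the Python below validates the fixed 8-byte header and the checksum of the 128-byte base block and decodes the three-letter manufacturer ID, product code, and EDID version. It covers only a few fields of the VESA format, and the sample block is hypothetical data built for illustration, not captured from a real monitor.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def parse_edid_base_block(block: bytes) -> dict:
    """Validate a 128-byte EDID base block and decode a few identity fields."""
    if len(block) != 128:
        raise ValueError("EDID base block must be 128 bytes")
    if block[:8] != EDID_HEADER:
        raise ValueError("missing fixed EDID header")
    # The last byte is chosen so that all 128 bytes sum to 0 modulo 256.
    if sum(block) % 256 != 0:
        raise ValueError("EDID checksum mismatch")
    # Bytes 8-9 (big-endian) pack three letters as 5-bit codes, 'A' = 1.
    packed = (block[8] << 8) | block[9]
    codes = [(packed >> shift) & 0x1F for shift in (10, 5, 0)]
    manufacturer = "".join(chr(ord("A") + code - 1) for code in codes)
    # Bytes 10-11 hold the product code, least significant byte first.
    product_code = block[10] | (block[11] << 8)
    # Bytes 18-19 hold the EDID version and revision (for example, 1.3).
    return {
        "manufacturer": manufacturer,
        "product_code": product_code,
        "edid_version": (block[18], block[19]),
    }


def build_sample_block() -> bytes:
    """Hypothetical sample data, not read from a real monitor."""
    block = bytearray(128)
    block[:8] = EDID_HEADER
    block[8:10] = bytes([0x10, 0xAC])   # packs the letters "DEL"
    block[10:12] = bytes([0x34, 0x12])  # product code 0x1234
    block[18:20] = bytes([1, 3])        # EDID 1.3
    block[127] = (-sum(block[:127])) % 256
    return bytes(block)


if __name__ == "__main__":
    print(parse_edid_base_block(build_sample_block()))
```

The checksum lets the graphics controller reject a block corrupted in transit over the low-speed DDC link before trusting the capabilities it describes.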

DVI uses Molex MicroCross connectors with several variations. The DVI standard was primarily designed to support digital devices; however, for backward compatibility, it can also support analog devices. The DVI-D (digital) connector supports only digital devices, whereas the DVI-I (integrated) connector supports both digital and analog devices via the addition of extra pins. Figure 12.5 and Table 12.7 show the DVI-I (integrated) connector and pinout.

Figure 12.5 The DVI-I connector.

Table 12.7 DVI-I Connector Pinout

The DVI-D connector is the same as the DVI-I connector, except that it lacks the analog connections. By virtue of the unique MicroCross connector design, a digital-only device can connect only to receptacles with digital support, and an analog-only device can plug in only to receptacles with analog support. This design feature ensures that an analog-only device cannot be connected to a digital-only receptacle, and vice versa. Figure 12.6 shows the DVI-D connector. The pinout is the same as the DVI-I connector, except for the missing analog signals. The DVI-D connector is widely used on laptop port replicators and docking stations that provide DVI support.

Figure 12.6 The DVI-D connector.

The DVI-I connector shown in Figure 12.5 can be converted into a VGA port for use with CRTs or with analog LCD panels via a simple adapter. Often new graphics cards purchased at retail that support only DVI come with just such an adapter that allows you to connect a traditional VGA connector from the display to the adapter.

Unfortunately, the Digital Display Working Group (DDWG) that created DVI has disbanded, leaving DVI frozen in time at the DVI 1.0 specification level. This means that DVI will not be updated in the future. Although it has enjoyed tremendous popularity as the first widely used digital display interface, the PC industry as a whole is moving to DisplayPort as the replacement for DVI.

HDMI

The High Definition Multimedia Interface (HDMI) was designed by a group of multimedia companies (Hitachi, Panasonic, Philips, Silicon Image, Sony, Thomson, and Toshiba) as a way to provide a single-cable connection for transporting digital video and audio signals between consumer electronics hardware such as big-screen TVs, video games, DVD players, and home theater systems. HDMI was introduced in December 2002, and version 1.3a was introduced in November 2006.

HDMI is basically a superset of DVI and uses the same TMDS (Transition Minimized Differential Signaling) as does DVI. Unlike DVI, however, each color channel also carries multiplexed audio data. HDMI 1.2a and earlier supports a maximum data clock rate of 165MHz, sending 10 bits per cycle, or 1.65Gbps per channel. There are three channels per link, resulting in a maximum raw bandwidth of 4.95Gbps. Because the data is sent using 8b/10b encoding, only 8 bits of every 10 are actual data, resulting in a true data throughput of 3.96Gbps. This enables a single-link HDMI 1.2a or earlier connection to easily handle computer video resolutions as high as WUXGA (1920×1200) as well as 1080p HDTV (1920×1080 with progressive scan) plus audio data.

HDMI 1.3 increases the maximum clock rate to 340MHz, resulting in 10.2Gbps raw bandwidth, or a true data throughput of 8.16Gbps. This increase gives a single-link HDMI connection slightly more throughput than a dual-link DVI connection, enough to handle computer resolutions as high as WQUXGA (3840×2400) at reduced refresh rates, plus audio data.

HDMI can also carry up to eight channels of uncompressed digital audio at 24-bit/192kHz along with Dolby Digital, DTS, Dolby TrueHD, and DTS-HD Master Audio compressed audio formats. Because it uses a single cable for both audio and video signals, HDMI provides an excellent way to reduce the cabling tangle present in home theater systems that use conventional analog audio and video cables. For home theater users who subscribe to HDTV satellite or cable services, HDMI is ideal because it supports high-bandwidth digital content protection (HDCP), which these services use to protect content from piracy while still assuring high-quality viewing and listening. To avoid reduced-quality playback of protected content, all devices (including the DVD player or set-top box, AV receiver, and display) must support HDCP.

In addition to transmitting high-quality audio and video between devices, HDMI carries additional signals. HDMI uses the display data channel (DDC) to identify the capabilities of an HDMI display, such as resolutions, color depth, and audio. DDC enables optimal playback quality on different devices. HDMI also supports the optional consumer electronic control (CEC) feature, which enables one-button control of all CEC-enabled devices for one-touch play or record or other features.

Table 12.8 compares the HDMI versions.

Because HDMI is essentially a superset of DVI, it is backward-compatible with DVI as well. This means that using a simple and inexpensive adapter, you can connect an HDMI source to a DVI display as well as connect a DVI source to an HDMI display. However, unless both the source and the monitor support HDCP, you might not be able to play premium HDTV content, or the resolution might be reduced. Although some graphics cards claimed HDCP support as early as the first part of 2006, changes in the HDCP standard may prevent early cards from working properly. You should contact your graphics card and monitor vendor to determine whether a particular device supports HDCP.

Current HDMI cables correspond to HDMI Type A or Type C. Type A is a 19-pin connector. Type C is a smaller version of Type A, designed for use in DV camcorders or other portable devices. It uses the same pinout, and Type A–to–Type C adapters are available from various vendors. HDMI version 1.0 also defined a 29-pin Type B dual-link cable that has not been used in any products.

Figure 12.7 illustrates a typical HDMI Type A cable and the location of pin 1 on the cable and connector.

Figure 12.7 HDMI Type A cable and socket use a two-row 19-pin interface.

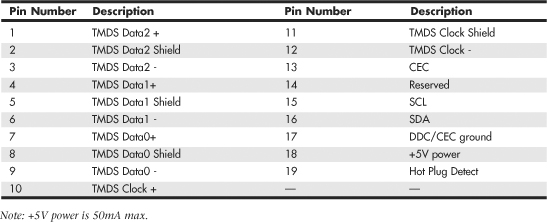

The pinout for HDMI Type A and Type C cables is shown in Table 12.9.

Table 12.9 HDMI Type A/Type C Connector Pinout

Figure 12.8 illustrates a typical HDMI-DVI adapter cable.

Figure 12.8 HDMI–DVI adapter cable.

Note

The adapter cable shown in Figure 12.8 is not designed to work with graphics cards and drivers that do not support HDTV resolutions and timings. You may need to upgrade your graphics card driver before using an HDMI-DVI cable. Although some set-top boxes include DVI ports, this type of adapter cable is only intended for PC-HDTV connections.

Starting in late 2006, some vendors began to release PCI Express cards with HDMI ports. Some provide HDMI input and output for use with HDV camcorders, while others using ATI or NVIDIA chipsets are graphics cards that also include HDMI output. Unfortunately, HDMI is a royalty-based interface, requiring an annual license fee of $10,000 plus a payment of 4 cents per device. This, plus the requirement for additional circuitry in both graphics cards and displays, has helped to keep HDMI more of a consumer electronics (i.e., home entertainment) interface, while DVI and DisplayPort are far more popular in PCs as a video display interface.

For more information about HDMI, see the HDMI Founders website at www.hdmi.org.

DisplayPort

DisplayPort is the latest digital display interface standard. It is designed to replace VGA, DVI, and HDMI for use in PCs and to coexist with HDMI in consumer electronics devices. Dell originated the design in 2003 and then turned it over to the Video Electronics Standards Association (VESA) in August 2005. In May 2006, VESA published it as an open industry standard.

Unlike HDMI and DVI, DisplayPort is a royalty-free interface, so it does not incur the licensing fees of HDMI or the implementation patent fees of DVI. DisplayPort is also designed as both an internal and an external interface, meaning it can replace the FPD-Link (Flat Panel Display-Link) interface used internally in most laptops. In short, DisplayPort is designed to be the ultimate universal display interface for PCs now as well as in the future.

Previous digital display interfaces such as DVI and HDMI use TMDS (Transition Minimized Differential Signaling), which requires extra logic on both the source and display ends, logic that must usually be licensed from Silicon Image. DisplayPort instead uses a packetized (network-like) interface that can easily be implemented in chipsets without the extra-cost logic required for DVI or HDMI. DisplayPort is kind of like a high-speed Ethernet for video, and the network-like design allows for features such as multiple video streams over a single connection, which means you can connect multiple displays to a single port.

Because it is a license-free, royalty-free design, DisplayPort has seen rapid adoption throughout the industry. In fact, all new chipsets and GPUs since 2008 from Intel, NVIDIA, and AMD/ATI already have integrated DisplayPort support. In 2008, major manufacturers including Dell, HP/Compaq, Lenovo, and Apple introduced products with DisplayPort and endorsed DisplayPort as the successor to DVI and HDMI for most digital display connections.

On the technical side, DisplayPort is a high-speed serial interface with up to four main data lanes (differential signal pairs) carrying multiplexed video and audio data, each of which supports a raw data rate of 1.62Gbps, 2.7Gbps, or 5.4Gbps (DisplayPort 1.2 or later only). Using all four lanes results in a maximum raw bandwidth of 6.48Gbps, 10.8Gbps, or 21.6Gbps, respectively. Because 8b/10b encoding is used, only 8 bits of every 10 are data, resulting in maximum true data throughputs of 5.184Gbps, 8.64Gbps, or 17.28Gbps, respectively.
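The DisplayPort figures follow from the per-lane rate times the lane count, with 8b/10b encoding leaving 80 percent of the raw rate for data. The Python sketch below reproduces them using whole-number Mbps to avoid floating-point rounding. The lane check reflects the standard's 1-, 2-, and 4-lane main-link configurations.

```python
# Per-lane raw rates from the text, in Mbps; 5,400 requires DisplayPort 1.2+.
LANE_RATES_MBPS = (1_620, 2_700, 5_400)


def displayport_bandwidth_mbps(lane_rate_mbps: int, lanes: int = 4) -> tuple[int, int]:
    """Return (raw, data) bandwidth of a main link, in Mbps."""
    # The main link is configured with one, two, or four lanes.
    if lanes not in (1, 2, 4):
        raise ValueError("main link uses 1, 2, or 4 lanes")
    raw = lane_rate_mbps * lanes
    # 8b/10b encoding: 8 of every 10 transmitted bits are data.
    data = raw * 8 // 10
    return raw, data


if __name__ == "__main__":
    for rate in LANE_RATES_MBPS:
        raw, data = displayport_bandwidth_mbps(rate)
        print(f"{rate / 1000:.2f}Gbps/lane x4: "
              f"{raw / 1000:g}Gbps raw, {data / 1000:g}Gbps data")
```

Dropping to one or two lanes scales the result down proportionally, which is how a connection can use only as many lanes as a display needs.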

Audio is optional, with support for up to eight channels of 16- or 24-bit linear PCM data at a 48kHz, 96kHz, or 192kHz sampling rate, with an uncompressed maximum audio bandwidth of 6.144Mbps.

DisplayPort 1.1 includes the following features:

• Small external connectors (slightly larger than USB size) with optional latching. Four display connectors can fit on a single PCIe expansion card bracket and fit easily in laptops.

• Cable lengths of up to 15 meters (49′), which allows for remote displays or projectors.

• Micro-packet network architecture over one to four lanes. Connections can use only as many lanes as necessary for reduced wire counts.

• High performance. A true data bandwidth of 8.64Gbps (four lanes at 2.16Gbps per lane) allows WQXGA 2560×1600 resolution.

• Support for internal (embedded) as well as external LCD connections. This allows a universal interface for both desktop and laptop systems.

• Optional audio that supports displays with built-in speakers.

• Optional HDCP (High-bandwidth Digital Content Protection) to allow playing protected media.

• Interoperability with DVI and HDMI over a DisplayPort connector. You can connect to DVI or HDMI with simple and inexpensive adapters.

• An auxiliary 1Mbps channel, which allows for two-way communication for integrated cameras, microphones, and so on.

• A powered connector, which powers some LCD displays directly.

• An optional latching connector that uses a simple thumb-press release design with no bolts or jackscrews.

DisplayPort 1.2 is fully backward compatible with 1.1 and adds the following features:

• Double the performance. DisplayPort 1.2 offers 21.6Gbps raw (17.28Gbps true) bandwidth, which is more than twice that of HDMI 1.3a or dual-link DVI.

• Multiple data streams, which allow two WQXGA 2560×1600 or four WUXGA 1920×1200 monitors to be daisy-chained over a single cable.

• An auxiliary channel speed increase to 480Mbps. This allows USB 2.0 speed connections for cameras, microphones, or other devices.

• The Mini DisplayPort connector. This connector is approximately half the size yet provides full functionality for laptops or other devices where space is at a premium.
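As a rough check on the multiple-stream figures above, the combined visible-pixel bit rate of the daisy-chained monitors can be compared with DisplayPort 1.2's 17.28Gbps of true data bandwidth. The Python sketch below does this under assumed 60Hz refresh and 24-bit color (neither is stated in the text). It ignores blanking and packet overhead, so it is optimistic: a setup it rejects cannot work, but one it accepts still needs headroom for real display timings.

```python
DP12_DATA_BPS = 17_280_000_000  # DisplayPort 1.2 true data bandwidth


def stream_bps(width: int, height: int, refresh_hz: int = 60,
               bits_per_pixel: int = 24) -> int:
    """Visible-pixel bit rate; blanking and packet overhead are ignored."""
    return width * height * refresh_hz * bits_per_pixel


def fits_on_one_link(displays: list[tuple[int, int]],
                     budget_bps: int = DP12_DATA_BPS) -> bool:
    """Rough check that the combined streams fit within one link's data rate."""
    return sum(stream_bps(w, h) for w, h in displays) <= budget_bps


if __name__ == "__main__":
    wqxga, wuxga = (2560, 1600), (1920, 1200)
    for setup in ([wqxga] * 2, [wuxga] * 4, [wqxga] * 3):
        print(len(setup), "x", setup[0], "->", fits_on_one_link(setup))
```

Two WQXGA or four WUXGA streams fit within the budget, while a third WQXGA display would exceed it even before blanking is counted.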

Table 12.10 compares the versions of DisplayPort.

Table 12.10 DisplayPort Versions

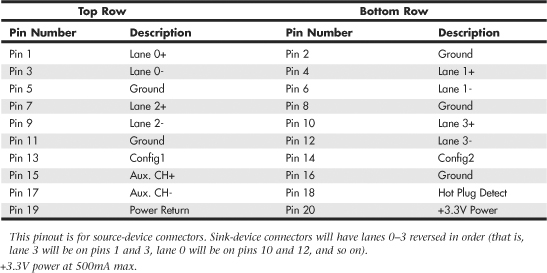

The DisplayPort connector has 20 pins and is only slightly larger than USB size (15.9mm vs. 12mm wide). The pins consist of four data lanes (differential pairs), an auxiliary channel (differential pair), plus configuration and power pins. Figures 12.9 and 12.10 show the DisplayPort cable/plug and socket.

Figure 12.9 DisplayPort cable with latching plug connector (Belkin).

Figure 12.10 DisplayPort socket and pin configuration.

In October 2008, Apple introduced the Mini DisplayPort connector, which was subsequently included as part of the official DisplayPort standard in version 1.2 and later releases. The Mini DisplayPort connector has the same full complement of 20 pins as the standard DisplayPort connector, but it's about half the size (at only 7.4mm wide). Figures 12.11 and 12.12 show the Mini DisplayPort cable/plug and socket. Table 12.11 shows the DisplayPort socket connector pinout.

Figure 12.11 Mini DisplayPort cable and plug (Apple).

Figure 12.12 Mini DisplayPort socket and pin configuration.

Table 12.11 DisplayPort Socket Connector Pinout

VESA has created several icons and logos associated with DisplayPort. The basic DisplayPort icon is used to label products incorporating DisplayPort technology, whereas the DisplayPort Certification Compliance logo is used on product marketing material to indicate devices that have been tested to ensure they are fully interoperable with other DisplayPort devices. Figure 12.13 shows the DisplayPort Certification Compliance logo. VESA maintains a list of certified devices on the www.displayport.org website.

Figure 12.13 DisplayPort Certification Compliance logo (left).

The DisplayPort Multimode icon adds two “+” symbols to indicate a port or device that is fully backward compatible with both DVI and HDMI technology (via inexpensive cable adapters). Figure 12.14 shows the DisplayPort Multimode icon. Figure 12.15 shows an inexpensive DisplayPort to DVI adapter.

Figure 12.14 Icon indicating a DisplayPort with Multimode (DVI and HDMI) support (right).

Figure 12.15 DisplayPort to DVI adapter, which works on Multimode DisplayPort connectors.

When DisplayPort was first released, many people wondered why we needed another digital display interface when we already had DVI and HDMI. Those interfaces, unfortunately, carried both technical and licensing limitations that prevented their universal adoption. DisplayPort is designed to overcome not only the technical limitations, but especially the licensing constraints and fees that the other interfaces brought along as baggage. The advanced technical capabilities of DisplayPort, combined with the elimination of licensing and its backward compatibility with existing interfaces, are likely to ensure its rapid adoption throughout the PC marketplace.

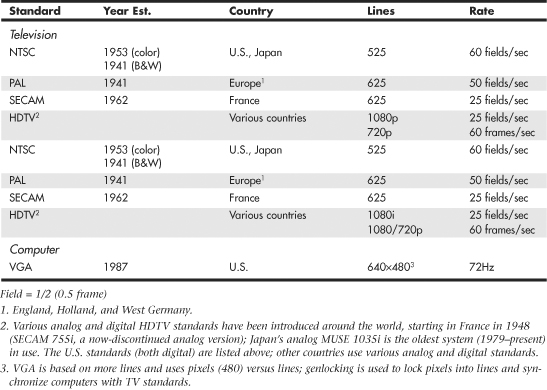

TV Display Interfaces

When video technology first was introduced, it was based on television. However, a difference exists between the signals used by a television and those used by a computer monitor. In the United States, the National Television System Committee (NTSC) established color TV standards in 1953. Some other countries, such as Japan, followed this standard. Many countries in Europe, though, developed more sophisticated standards, including Phase Alternating Line (PAL) and Séquentiel Couleur à Mémoire (SECAM). Table 12.12 shows the differences among these standards.

Table 12.12 Television Versus Computer Monitors

A video-output adapter enables you to display computer screens on a TV set or record them onto videotape for easy distribution. These products fall into two categories: those with genlocking (which enables the board to synchronize signals from multiple video sources or video with PC graphics) and those without. Genlocking provides the signal stability necessary to obtain adequate results when recording to tape, but it isn’t necessary for using a television as a video display.

Video converters are available as internal expansion boards; as external boxes portable enough to use with a laptop for presentations on the road; and, most commonly today, as TV-out ports on the rear of most video cards that use chipsets from NVIDIA, ATI, and others. Most converters support the standard NTSC television format and might also support the European PAL format. The resolution these devices display on a TV set or record on videotape is often limited to VGA (640×480) or SVGA (800×600).

To connect your PC to an HDTV monitor, it is preferable to use a digital signal via a DVI, HDMI, or DisplayPort connection. If your current video adapter has only an analog VGA output, you’ll want to upgrade to one with a DVI, HDMI, or DisplayPort digital output. Because most HDTVs use HDMI, you can use a DVI-to-HDMI or DisplayPort-to-HDMI adapter if your video card has DVI or DisplayPort. If you want to watch premium HD content protected by HDCP (High-bandwidth Digital Content Protection), make sure both your display and your video card support HDCP; otherwise, you might not be able to watch the program, or it might be displayed at reduced resolution.

3D Graphics Accelerators

Since the late 1990s, 3D acceleration, once limited to exotic add-on cards designed for hardcore game players, has become commonplace in the PC world. With the Aero desktop introduced in Windows Vista, the user interface itself now uses 3D imaging, joining full-motion 3D uses in sports, first-person shooter, team combat, driving, and many other types of PC games. Because even low-cost integrated chipsets offer some 3D support, virtually any user of a recent-model computer can enjoy 3D lighting, perspective, texture, and shading effects.

Note

Enabling the Windows Aero interface in Vista and later requires graphics hardware that supports DirectX 9 3D graphics (including Pixel Shader 2.0) along with a WDDM (Windows Display Driver Model) driver. Games are now being released that require DirectX 10, which is not available for Windows XP and earlier versions.

How 3D Accelerators Work

To construct an animated 3D sequence, a computer can mathematically calculate the frames between keyframes. A keyframe defines an object's position (or another property) at a specific point in the sequence. A bouncing ball, for example, can have three keyframes: up, down, and up. Using these frames as reference points, the computer can create all the interim images between the top and bottom, producing the effect of a smoothly bouncing ball.
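The in-between calculation for the bouncing ball can be sketched in Python. This is a minimal sketch, assuming a hypothetical keyframe format of (time, height) pairs and simple linear interpolation; real animation software typically uses easing or spline curves so the ball appears to accelerate as it falls.

```python
def interpolate(keyframes, t):
    """Return the ball's height at time t by linearly interpolating between keyframes.

    keyframes is a hypothetical list of (time, height) pairs sorted by time.
    """
    if t <= keyframes[0][0]:
        return keyframes[0][1]
    for (t0, y0), (t1, y1) in zip(keyframes, keyframes[1:]):
        if t0 <= t <= t1:
            s = (t - t0) / (t1 - t0)  # fraction of the way from keyframe t0 to t1
            return y0 + s * (y1 - y0)
    return keyframes[-1][1]  # past the last keyframe: hold the final pose


# Bouncing ball: up (height 10) at t=0, down (height 0) at t=1, up again at t=2.
keys = [(0.0, 10.0), (1.0, 0.0), (2.0, 10.0)]
# Generate the interim frames at a rate of 4 frames per time unit.
frames = [interpolate(keys, i / 4) for i in range(9)]
print(frames)  # [10.0, 7.5, 5.0, 2.5, 0.0, 2.5, 5.0, 7.5, 10.0]
```

Only the three keyframes are stored; every other frame is computed on demand.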

After it has created the basic sequence, the system can then refine the appearance of the images by filling them in with color. The most primitive and least effective fill method is called flat shading, in which a shape is simply filled with a solid color. Gouraud shading, a slightly more effective technique, assigns colors to specific points on a shape (typically its vertices) and then joins those points with a smooth gradient between the colors.
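The difference between the two fill methods can be shown on a single triangle. This sketch assumes per-vertex RGB colors and uses barycentric weights to produce the Gouraud gradient; using the average of the vertex colors as the flat color is an illustrative assumption (a renderer might instead use one vertex's color or a per-face lighting value).

```python
def barycentric(p, a, b, c):
    """Weights (wa, wb, wc) that express 2D point p as a blend of triangle vertices."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    area = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)  # twice the signed area
    wb = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / area
    wc = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / area
    return 1.0 - wb - wc, wb, wc


def flat_shade(colors):
    """Flat shading: one solid color for every pixel (here, the vertex-color average)."""
    return tuple(sum(channel) / 3 for channel in zip(*colors))


def gouraud_shade(p, tri, colors):
    """Gouraud shading: blend the vertex colors according to p's position."""
    wa, wb, wc = barycentric(p, *tri)
    return tuple(wa * ca + wb * cb + wc * cc for ca, cb, cc in zip(*colors))


tri = [(0, 0), (4, 0), (0, 4)]
colors = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]  # red, green, blue corners
print(flat_shade(colors))                # (85.0, 85.0, 85.0) everywhere
print(gouraud_shade((1, 1), tri, colors))  # (127.5, 63.75, 63.75): mostly red near that corner
```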

A more processor-intensive (and much more effective) type of fill is called texture mapping. The 3D application includes patterns—or textures—in the form of small bitmaps that it tiles onto the shapes in the image, just as you can tile a small bitmap to form the wallpaper for your Windows desktop. The primary difference is that the 3D application can modify the appearance of each tile by applying perspective and shading to achieve 3D effects. When lighting effects that simulate fog, glare, directional shadows, and others are added, the 3D animation comes very close indeed to matching reality.
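Tiling can be sketched as a texture lookup with "wrap" (repeat) addressing: texture coordinates that run past the edge of the bitmap simply start over at the other side. This minimal sketch assumes normalized (u, v) coordinates and nearest-texel sampling, and it omits the perspective and shading adjustments that a 3D application would also apply.

```python
import math


def sample_tiled(texture, u, v):
    """Nearest-texel lookup with wrap addressing, so the texture repeats (tiles)."""
    h, w = len(texture), len(texture[0])
    x = math.floor(u * w) % w  # floor + modulo also wraps negative coordinates
    y = math.floor(v * h) % h
    return texture[y][x]


checker = [[0, 1],
           [1, 0]]  # a tiny 2x2 checkerboard "bitmap"

# Map the 2x2 texture onto an 8x8-pixel surface, repeating it 4 times each way.
repeats = 4
surface = [[sample_tiled(checker, (px + 0.5) / 8 * repeats, (py + 0.5) / 8 * repeats)
            for px in range(8)]
           for py in range(8)]
print(surface[0])  # [0, 1, 0, 1, 0, 1, 0, 1]
```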

Until the late 1990s, 3D applications had to rely on software routines to convert these abstractions into live images. This placed a heavy burden on the system processor, which significantly affected the performance not only of the visual display but also of any other applications the computer was running. Starting in 1996–1997, chipsets on most video adapters began to take on many of the tasks involved in rendering 3D images, greatly lessening the load on the system processor and boosting overall system performance.

There have been roughly 10 generations of 3D graphics hardware on PCs, a process that has lasted over a decade, as detailed in Table 12.13.

Table 12.13 Brief History of 3D Acceleration

With virtually every recent graphics card on the market featuring DirectX 9 or greater capabilities, you don’t need to spend a fortune to achieve a reasonable level of 3D graphics. Many cards in the $50–$150 range use lower-performance variants of current high-end GPUs, or they might use the previous year’s leading GPU. These cards typically provide more-than-adequate performance for 2D business applications. Most current 3D accelerators also support dual-display and TV-out capabilities, enabling you to work and play at the same time.

However, keep in mind that the more you spend on a 3D accelerator card, the more onboard memory and the faster the accelerator chip you get. If money is no object and you are a hardcore gamer, you can buy a graphics card featuring NVIDIA's or ATI's fastest GPU for more than $500. Fortunately, plenty of cards using either NVIDIA or ATI GPUs in the under-$500 price range still offer strong 3D gaming performance, including support for dual-GPU operation (NVIDIA SLI or ATI CrossFire), which splits rendering chores across the GPUs in both video cards for faster game display than a single card provides. GPUs that support DirectX 10 are the preferred choice for a serious gamer who wants to play the newest games.

Mid-range cards costing $100–$300 are often based on GPUs that use designs similar to the high-end products but might have slower memory and core clock speeds or a smaller number of rendering pipelines. These cards provide a good middle ground for users who play games fairly often but can’t cost-justify high-end cards.

Before purchasing a 3D accelerator adapter, you should familiarize yourself with some of the terms and concepts involved in the 3D image generation process.

The basic function of 3D software is to convert image abstractions into the fully realized images that are then displayed on the monitor. The image abstractions typically consist of the following elements:

• Vertices—Locations of objects in three-dimensional space, described in terms of their x, y, and z coordinates on three axes representing width, height, and depth.

• Primitives—The simple geometric objects the application uses to create more complex constructions, described in terms of the relative locations of their vertices. This serves not only to specify the location of the object in the 2D image, but also to provide perspective because the three axes can define any location in three-dimensional space.

• Textures—Two-dimensional bitmap images or surfaces designed to be mapped onto primitives. The software enhances the 3D effect by modifying the appearance of the textures, depending on the location and attitude of the primitive. This process is called perspective correction. Some applications use another process, called MIP mapping, which uses different versions of the same texture that contain varying amounts of detail, depending on how close the object is to the viewer in the three-dimensional space. Another technique, called depth cueing, reduces the color and intensity of an object’s fill as the object moves farther away from the viewer.
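The following sketch shows how vertex coordinates can yield perspective and how depth cueing might dim distant objects. It assumes a simple pinhole projection (screen x = f * x / z, screen y = f * y / z) with the viewer at the origin looking down the +z axis, and a linear fade to black between hypothetical near and far distances; real pipelines use 4×4 projection matrices and homogeneous coordinates.

```python
from dataclasses import dataclass


@dataclass
class Vertex:
    x: float  # width axis
    y: float  # height axis
    z: float  # depth axis: distance in front of the viewer (assumed > 0)


def project(v, focal_length=1.0):
    """Pinhole perspective projection: farther vertices land closer to the center."""
    return (focal_length * v.x / v.z, focal_length * v.y / v.z)


def depth_cue(color, z, near, far):
    """Fade an RGB color linearly from full intensity at `near` to black at `far`."""
    t = min(max((z - near) / (far - near), 0.0), 1.0)
    return tuple(c * (1.0 - t) for c in color)


# A primitive (triangle) described by its three vertices; the apex is farther away.
triangle = [Vertex(-1.0, 0.0, 2.0), Vertex(1.0, 0.0, 2.0), Vertex(0.0, 1.0, 4.0)]
screen = [project(v) for v in triangle]
print(screen)  # [(-0.5, 0.0), (0.5, 0.0), (0.0, 0.25)]
print(depth_cue((200, 100, 50), z=6.0, near=2.0, far=10.0))  # (100.0, 50.0, 25.0)
```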

The abstract image descriptions built from these elements must then be rendered, meaning they are converted to visible form. Rendering depends on two standardized functions that convert the abstractions into the completed image displayed onscreen:

• Geometry—The sizing, orienting, and moving of primitives in space and the calculation of the effects produced by the virtual light sources that illuminate the image

• Rasterization—The converting of primitives into pixels on the video display by filling the shapes with properly illuminated shading, textures, or a combination of the two
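Both parts of the geometry function can be sketched briefly: transforming a vertex (sizing, orienting, and moving it) and computing how brightly a virtual light illuminates a surface. The lighting here is the simple Lambertian (diffuse) model with a single directional light, chosen purely as an illustration; the chapter does not specify which lighting model any particular hardware uses.

```python
import math


def transform(p, scale, angle_deg, offset):
    """Geometry step: scale a 3D point, rotate it about the z axis, then move it."""
    x, y, z = (c * scale for c in p)
    a = math.radians(angle_deg)
    x, y = x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a)
    return (x + offset[0], y + offset[1], z + offset[2])


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, light_dir, light_intensity=1.0):
    """Diffuse lighting: brightness follows the cosine of the angle between the
    surface normal and the direction toward the light (0 when facing away)."""
    n, l = normalize(normal), normalize(light_dir)
    return light_intensity * max(0.0, sum(a * b for a, b in zip(n, l)))


print(transform((1.0, 0.0, 0.0), 2.0, 90.0, (0.0, 0.0, 5.0)))  # x ~0 (rounding), y 2.0, z 5.0
print(lambert((0, 0, 1), (0, 0, 1)))  # light straight on: 1.0
print(lambert((0, 0, 1), (0, 1, 1)))  # light at 45 degrees: about 0.707
```

The rasterization stage would then use values like these when filling pixels with "properly illuminated" shading.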

A modern video adapter that includes a chipset capable of 3D video acceleration has special built-in hardware that can perform the rasterization process much more quickly than if it were done by software (using the system processor) alone. Most chipsets with 3D acceleration perform the following rasterization functions right on the adapter:

• Scan conversion—The determination of which onscreen pixels fall into the space delineated by each primitive

• Shading—The process of filling pixels with smoothly flowing color using the flat or Gouraud shading technique

• Texture mapping—The process of filling pixels with images derived from a 2D sample picture or surface image

• Visible surface determination—The identification of which pixels in a scene are obscured by other objects closer to the viewer in three-dimensional space

• Animation—The process of switching rapidly and cleanly to successive frames of motion sequences

• Antialiasing—The process of adjusting color boundaries to smooth edges on rendered objects
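Scan conversion, the first item in the list above, can be illustrated with edge functions, one common approach: a pixel is covered if its center lies on the inner side of all three edges of the triangle. This is a sketch under simplifying assumptions: it loops over every pixel by brute force, and it has no tie-breaking rule for pixels exactly on a shared edge (real hardware applies a fill convention such as the top-left rule so that adjacent triangles never both claim a pixel).

```python
def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); its sign tells which side
    of the directed edge a -> b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def scan_convert(tri, width, height):
    """Return the set of (x, y) pixels whose centers fall inside triangle tri."""
    a, b, c = tri
    if edge(a, b, c) < 0:  # flip winding if needed so "inside" means all values >= 0
        b, c = c, b
    covered = set()
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            if edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0:
                covered.add((x, y))
    return covered


pixels = scan_convert([(0, 0), (4, 0), (0, 4)], width=4, height=4)
print(len(pixels))  # 10
```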

Typical 3D Techniques

Typical 3D techniques include the following:

• Fogging—Fogging simulates haze or fog in the background of a game screen and helps conceal the sudden appearance of newly rendered objects (buildings, enemies, and so on).

• Gouraud shading—Interpolates colors to make circles and spheres look more rounded and smooth.

• Alpha blending—One of the first 3D techniques, alpha blending creates translucent objects onscreen, making it a perfect choice for rendering explosions, smoke, water, and glass. Alpha blending also can be used to simulate textures, but it is less realistic than environment-based bump mapping.

• Stencil buffering—Stencil buffering is a technique useful for games such as flight simulators in which a static graphic element, such as a cockpit windshield frame or a heads-up display (HUD) like those used by real-life fighter pilots, is placed in front of dynamically changing graphics (such as scenery, other aircraft, sky detail, and so on). In this example, the area of the screen occupied by the cockpit windshield frame is not re-rendered. Only the area seen through the “glass” is re-rendered, saving time and improving frame rates for animation.

• Z-buffering—The Z-buffer portion of video memory holds depth information about the pixels in a scene. As the scene is rendered, the Z-values (depth information) for new pixels are compared to the values stored in the Z-buffer to determine which pixels are in “front” of others and should be rendered. Pixels that are “behind” other pixels are not rendered. This method increases speed and can be used along with stencil buffering to create volumetric shadows and other complex 3D objects. Z-buffering was originally developed for computer-aided drafting (CAD) applications.

• Environment-based bump mapping—Environment-based bump mapping (standard starting in DirectX 6) introduces special lighting and texturing effects to simulate the rough texture of rippling water, bricks, and other complex surfaces. It combines three separate texture maps (for colors; for height and depth; and for environment, including lighting, fog, and cloud effects). This creates enhanced realism for scenery in games and can also be used to enhance terrain and planetary mapping, architecture, and landscape-design applications. This represents a significant step beyond alpha blending.

• Displacement mapping—Special grayscale maps called displacement maps have long been used for producing accurate maps of the globe. Microsoft DirectX 9 and 10 support the use of grayscale hardware displacement maps as a source for accurate 3D rendering. GPUs that fully support DirectX 9 and 10 in hardware support displacement mapping.
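Z-buffering, alpha blending, and fogging all come down to simple per-pixel arithmetic. This sketch assumes that a smaller z means closer to the viewer, that translucent fragments blend with the color already stored but do not update the Z-buffer (so they are usually drawn after opaque geometry, back to front), and that fog uses the common linear formula between hypothetical fog_start and fog_end distances.

```python
INF = float("inf")


def make_buffers(width, height, clear_color=(0.0, 0.0, 0.0)):
    """Create a color buffer and a Z-buffer; infinite depth means nothing drawn yet."""
    color = [[clear_color] * width for _ in range(height)]
    depth = [[INF] * width for _ in range(height)]
    return color, depth


def draw_fragment(color, depth, x, y, z, rgb, alpha=1.0):
    """Depth-test one fragment (smaller z = closer) and write or blend its color.
    Returns False if the fragment is hidden behind a closer pixel."""
    if z >= depth[y][x]:
        return False
    if alpha >= 1.0:
        color[y][x] = rgb
        depth[y][x] = z  # opaque surfaces record their depth
    else:
        # Alpha blending: result = alpha * source + (1 - alpha) * destination.
        dst = color[y][x]
        color[y][x] = tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(rgb, dst))
    return True


def apply_fog(rgb, z, fog_start, fog_end, fog_rgb):
    """Linear fog: full object color before fog_start, pure fog color past fog_end."""
    f = min(max((fog_end - z) / (fog_end - fog_start), 0.0), 1.0)
    return tuple(f * c + (1.0 - f) * g for c, g in zip(rgb, fog_rgb))


color, depth = make_buffers(1, 1)
draw_fragment(color, depth, 0, 0, 5.0, (1.0, 0.0, 0.0))             # opaque red at z=5
draw_fragment(color, depth, 0, 0, 8.0, (0.0, 1.0, 0.0))             # green behind it: rejected
draw_fragment(color, depth, 0, 0, 2.0, (0.0, 0.0, 1.0), alpha=0.5)  # translucent blue in front
print(color[0][0])  # (0.5, 0.0, 0.5)
```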

Advanced 3D Filtering and Rendering

To improve the quality of texture maps, several filtering techniques have been developed, including MIP mapping, bilinear filtering, trilinear filtering, and anisotropic filtering. These techniques and several other advanced techniques found in recent 3D GPUs are explained here:

• Bilinear filtering—Improves the image quality of small textures placed on large polygons. Stretching the texture can create blockiness; bilinear filtering conceals this defect by computing each pixel's color as a weighted blend of the four nearest texels, which slightly blurs the result.

• MIP mapping—Improves the image quality of polygons that appear to recede into the distance by using progressively lower-resolution, prefiltered versions of the same texture as the surface gets farther from the viewer. This is a form of antialiasing.

• Trilinear filtering—Combines bilinear filtering and MIP mapping, calculating the most realistic colors for the pixels in each polygon by blending bilinear samples taken from the two nearest MIP map levels. This method is superior to either MIP mapping or bilinear filtering alone.

Note

Bilinear and trilinear filtering work well for surfaces viewed straight on, but might not work so well for oblique angles (such as a wall receding into the distance).

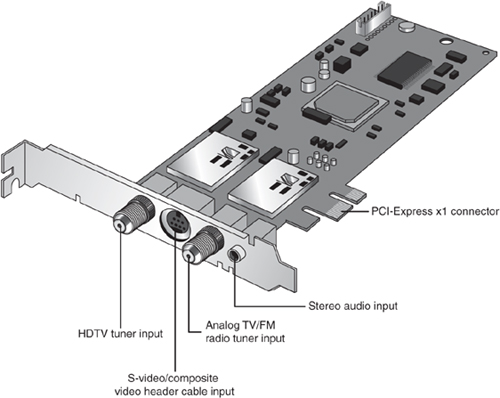

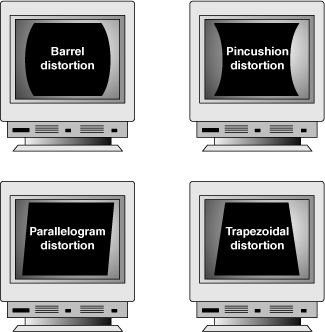

• Anisotropic filtering—Some video card makers use another method, called anisotropic filtering, to render oblique-angle surfaces, such as those containing text, more realistically. This technique is used when a texture is mapped to a surface that recedes at an angle from the viewer, such as text on a wall alongside a roadway (for example, advertising banners at a raceway). The extra calculations take time, so anisotropic filtering can be disabled. To balance display quality and performance, you can also adjust the sampling size: increase it to improve display quality or reduce it to improve performance.
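The bilinear, MIP mapping, and trilinear techniques above can be sketched for a grayscale texture stored as a list of rows. The sketch assumes square, power-of-two textures, clamp-to-edge addressing, and a level of detail (lod) supplied by the caller; real GPUs derive the level of detail from how many texels fall under each screen pixel, and anisotropic filtering (not shown) takes additional samples along the direction in which the texture is stretched.

```python
import math


def texel(tex, x, y):
    """Fetch one texel, clamping coordinates to the texture edge."""
    h, w = len(tex), len(tex[0])
    return tex[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def bilinear(tex, u, v):
    """Weighted blend of the four texels nearest to (u, v), with u and v in [0, 1]."""
    h, w = len(tex), len(tex[0])
    x, y = u * w - 0.5, v * h - 0.5  # texel centers sit at half-integer positions
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    top = texel(tex, x0, y0) * (1 - fx) + texel(tex, x0 + 1, y0) * fx
    bottom = texel(tex, x0, y0 + 1) * (1 - fx) + texel(tex, x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy


def build_mips(tex):
    """MIP chain: each level averages 2x2 blocks of the previous one, down to 1x1."""
    levels = [tex]
    while len(levels[-1]) > 1:
        t, n = levels[-1], len(levels[-1]) // 2
        levels.append([[(t[2*y][2*x] + t[2*y][2*x+1] + t[2*y+1][2*x] + t[2*y+1][2*x+1]) / 4
                        for x in range(n)] for y in range(n)])
    return levels


def trilinear(mips, u, v, lod):
    """Bilinear samples from the two nearest MIP levels, blended by the fractional lod."""
    lod = min(max(lod, 0.0), len(mips) - 1)
    lo = math.floor(lod)
    hi = min(lo + 1, len(mips) - 1)
    f = lod - lo
    return bilinear(mips[lo], u, v) * (1 - f) + bilinear(mips[hi], u, v) * f


tex = [[0.0, 10.0], [20.0, 30.0]]
mips = build_mips(tex)
print(bilinear(tex, 0.5, 0.5))           # 15.0: midway between all four texels
print(mips[1])                           # [[15.0]]
print(trilinear(mips, 0.25, 0.25, 0.5))  # 7.5: halfway between levels 0 and 1
```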