Chapter 22. OpenGL ES: OpenGL on the Small

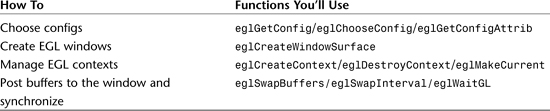

WHAT YOU’LL LEARN IN THIS CHAPTER:

This chapter is a peek into the world of OpenGL ES rendering. This set of APIs is intended for use in embedded environments where traditionally resources are much more limited. OpenGL ES dares to go where other rendering APIs can only dream of.

There is a lot of ground to cover, but we will go over much of the basics. We’ll take a look at the different versions of OpenGL ES and what the differences are. We will also go over the windowing interfaces designed for use with OpenGL ES. Also, we will touch on some issues specific to dealing with embedded environments.

OpenGL on a Diet

You will find that OpenGL ES is very similar to regular OpenGL. This isn’t accidental; the OpenGL ES specifications were developed from different versions of OpenGL. As you have seen up until now, OpenGL provides a great interface for rendering. It is very flexible and can be used in many applications, from gaming to workstations to medical imaging.

What’s the ES For?

Over time, the OpenGL API has been expanded and added to in order to support new features. This has caused the OpenGL application interface to become bloated, providing many different methods of doing the same thing. Take, for instance, drawing a single point. The first available method was drawing in immediate mode, in which you use glBegin/glEnd with the vertex information defined in between. Also, display lists were available, allowing immediate mode commands to be captured into a list that can be replayed again and again. Using the newer glDrawArrays method allows you to put your points in a prespecified array for rendering convenience. And Buffer Objects allow you to do something similar, but from local GPU memory.

The simple action of drawing a point can be done four different ways, each having different advantages. Although it is nice to have many choices when implementing your own application, all of this flexibility has produced a very large API. This in turn requires a very large and complex driver to support it. In addition, special hardware is often required to make each path efficient and fast.

Because of the public nature of OpenGL, it has been a great candidate for use in many different applications outside of the personal computer. But implementers had a hard time conforming to the entire OpenGL spec for these limited hardware applications. For this reason, a new version was necessary; one with Embedded Systems specifically in mind, hence the ES moniker.

A Brief History

As hardware costs have come down and more functionality can be fit into smaller areas on semiconductors, the user interfaces have become more and more complex for embedded devices. A common example is the automobile. In the 1980s the first visual feedback from car computers was provided in the form of single- and multiline text. These interfaces provided warnings about seatbelt usage, current gas mileage, and so on. After that, two-dimensional displays became prevalent. These often used bitmap-like rendering to present 2D graphics. Most recently, 3D-capable systems have been integrated to help support GPS navigation and other graphic-intensive features. A similar technological history exists for aeronautical instrumentation and cellphones.

The early embedded 3D interfaces were often proprietary and tied closely to the specific hardware features present. This was often the case because the supported feature set was small and varied greatly from device to device. But as 3D engine complexity increased and was used in more and more devices, a standard interface became more important and useful. It was very difficult to port an application from device to device when 3D APIs were so different.

With this in mind, a consortium was formed to help define an interface that would be flexible and portable, yet tailored to embedded environments and conscious of their limitations. This body would be called the Khronos Group.

Khronos

The Khronos Group was originally founded in 2000 by members of the OpenGL ARB, the OpenGL governing body. Many capable media APIs existed for the PC space, but the goal of Khronos was to help define interfaces that were more applicable to devices beyond the personal computer. The first embedded API it developed was OpenGL ES.

Khronos consists of many industry leaders in both hardware and software. Some of the current members are Motorola, Texas Instruments, AMD, Sun Microsystems, Intel, NVIDIA, and Nokia. The complete list is long and distinguished. You can visit the Khronos Web site for more information (www.khronos.org).

Version Development

The first version of OpenGL ES released, cleverly called ES 1.0, was an attempt to drastically reduce the API footprint of a full-featured PC API. This release used the OpenGL 1.3 specification as a basis. Although very capable, OpenGL ES 1.0 removed many less frequently used or very complex portions of the full OpenGL specification. Just like its big brother, OpenGL ES 1.0 defines a fixed functionality pipe for vertex transform and fragment processing.

Being the first release, it was targeted at implementations that supported hardware-accelerated paths for some portions of the pipeline and possibly software implementations for others. In limited devices it is very common to have both software and hardware work together to enable the entire rendering path.

ES 1.1 was completed soon after the first specification had been released. Although very similar to OpenGL ES 1.0, the 1.1 specification is written from the OpenGL 1.5 specification. In addition, a more advanced texture path is supported. A buffer object and draw texture interface has also been added. All in all, the ES 1.1 release was very similar to ES 1.0 but added a few new interesting features.

ES 2.0 was a complete break from the pack. It is not backward compatible with the ES 1.x versions. The biggest difference is that the fixed functionality portions of the pipeline have been removed. Instead, programmable shaders are used to perform the vertex and fragment processing steps. The ES 2.0 specification is based on the OpenGL 2.0 specification.

To fully support programmable shaders, ES 2.0 employs the OpenGL ES Shading Language. This is a high-level shading language that is very similar to the OpenGL Shading Language that is defined for use with OpenGL 2.0+. The reason ES 2.0 is such a large improvement is that all the fixed functionality no longer encumbers the API. This means applications can then implement and use only the features they need in their own shaders. The driver and hardware are relieved from tracking state that may never be used. Of course, the other side of the coin is that applications that want to use portions of the old fixed-function pipeline will need to implement these in app-defined shaders.

There is one more ES version worth mentioning, OpenGL ES SC 1.0. This special version is designed for execution environments with extreme reliability constraints. These applications are considered “Safety Critical,” hence the SC designator. Typical applications are in avionics, automotive, and military environments. In these areas 3D applications are often used for instrumentation, mapping, and representing terrain.

The ES SC 1.0 specification uses the OpenGL ES 1.0 specification as a base, which was derived from OpenGL 1.3. Some things are removed from the core ES 1.0 version to reduce implementation costs, and many features from core OpenGL are added back. The most important re-additions are display lists, immediate mode rendering (glBegin/glEnd), draw pixels, and bitmap rendering. These cumbersome features are included to minimize the complexity of porting older safety critical systems that may already use these features.

So, to recap, the OpenGL ES versions currently defined and the related OpenGL version are listed in Table 22.1.

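Table 22.1. Base OpenGL versions for ES

| OpenGL ES version | Base OpenGL version |
| --- | --- |
| ES 1.0 | OpenGL 1.3 |
| ES 1.1 | OpenGL 1.5 |
| ES 2.0 | OpenGL 2.0 |
| ES SC 1.0 | OpenGL 1.3 |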

Which Version Is Right for You?

Often hardware is created with a specific API in mind. These platforms usually will support only a single accelerated version of ES. It is sometimes helpful to think of the different versions of ES as profiles that represent the functionality of the underlying hardware. For this reason, if you are developing for a specific platform, you may not have a choice as to which version of ES to use.

For traditional GL, new hardware is typically designed to support the latest version available. ES is a little different. The features targeted for new hardware are chosen based on several factors: targeted production cost, typical uses, and system support are a few. For instance, adding hardware functionality for supporting ES 2.0 on an entry-level cellphone may not make sense if it is not intended to be used as a game platform.

The following sections define each specification in much more detail. The Khronos Group has chosen to define the OpenGL ES specifications relative to their OpenGL counterparts. This provides a convenient way to define the entire specification without having to fully describe each interface. Most developers are already familiar with the parent OpenGL specification, making the consumption of the ES version very efficient. For those who are not, cross-referencing the relevant full OpenGL specification is a great way to get the rest of the picture.

Before we get started, it is important to note that the ES 1.x specifications support multiple profiles. The Common profile is designed as the usual interface for heavier-weight implementations. The Common-Lite profile is designed for thinner, leaner applications.

The Common profile is a superset of the Common-Lite profile. The Common-Lite profile does not support floating-point interfaces, whereas the Common profile does. Instead, the Common-Lite profile relies on a fixed-point data type, defined by an extension, for interfaces where whole numbers are not suitable.

To get the most out of this chapter, you should be very comfortable with most of the OpenGL feature set. This chapter is more about showing you what the major differences are between regular OpenGL and OpenGL ES and less about describing each feature again in detail.

ES 1.0

OpenGL ES 1.0 is written as a difference specification to OpenGL 1.3, which means that the new specification is defined by the differences between it and the reference. We will review OpenGL ES 1.0 relative to the OpenGL 1.3 specification and highlight the important differences.

Vertex Specification

The first major change for ES 1.0 is the removal of the glBegin/glEnd entrypoints and rendering mechanism. With this, edge flag support is also removed. Although the use of glBegin/glEnd provides a simple mechanism for rendering, the required driver-side support is usually complex. Vertex arrays can be used for vertex specification just as effectively, although the more complex glInterleavedArrays and glDrawRangeElements are not supported.

Also, the primitive types GL_QUADS, GL_QUAD_STRIP, and GL_POLYGON are no longer supported. Other primitive types can be used just as effectively. By the same token, the glRect commands have been removed. Color index mode is removed. For vertex specification, only the types float, short, and byte are accepted for vertex data. For color components ubyte can also be used.
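As a rough illustration of how other primitive types can stand in for quads, the sketch below expands a list of quad indices into triangle indices on the CPU. The helper name quads_to_triangles is hypothetical and not part of any OpenGL ES API; it assumes each quad is given as four 16-bit indices in a consistent winding order.

```c
#include <stddef.h>

/* Hypothetical helper (not an OpenGL ES entrypoint): expands quad indices
 * into triangle indices. Each quad (v0, v1, v2, v3) becomes the triangles
 * (v0, v1, v2) and (v0, v2, v3), which preserves the winding order.
 * `out` must have room for quad_count * 6 entries.
 * Returns the number of indices written. */
size_t quads_to_triangles(const unsigned short *quads, size_t quad_count,
                          unsigned short *out)
{
    size_t n = 0;
    for (size_t q = 0; q < quad_count; ++q) {
        const unsigned short *v = quads + q * 4;
        /* First triangle of the quad. */
        out[n++] = v[0];
        out[n++] = v[1];
        out[n++] = v[2];
        /* Second triangle shares the v0-v2 diagonal. */
        out[n++] = v[0];
        out[n++] = v[2];
        out[n++] = v[3];
    }
    return n;
}
```

The resulting index array could then be drawn as GL_TRIANGLES with glDrawElements and GL_UNSIGNED_SHORT indices.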

Even though immediate mode rendering has been removed, ES 1.0 still supports several entrypoints for setting the current render state. This can help reduce the amount of data overhead required per-vertex for state that may not change frequently when drawing with arrays. glNormal3f, glMultiTexCoord4f, and glColor4f are all still available.

glNormal3f(GLfloat nx, GLfloat ny, GLfloat nz);

glMultiTexCoord4f(GLenum target, GLfloat s, GLfloat t, GLfloat r, GLfloat q);

glColor4f(GLfloat red, GLfloat green, GLfloat blue, GLfloat alpha);

The Common-Lite profile supports these entrypoints as well, but replaces the floating-point parameters with fixed-point.

Transforms

The full transform pipeline still exists, but some changes have been made to simplify complex operations. No texture generation exists, and support for the color matrix has been removed. Because of data type limitations, all double-precision matrix specification is removed. Also, the transpose versions of matrix specification entrypoints have been removed.

OpenGL usually requires an implementation to support a matrix stack depth of at least 32. To ease memory requirements for OpenGL ES 1.0 implementations, a stack depth of only 16 is required. Also, user-specified clip planes are not supported.

Coloring

The full lighting model is supported with a few exceptions. Local viewer has been removed, as has support for different front and back materials. The only supported color material mode is GL_AMBIENT_AND_DIFFUSE. Secondary color is also removed.

Rasterization

There are also a few important changes to the rasterization process. Point and line antialiasing is supported because it is a frequently used feature. However, line stipple and polygon stipple have been removed. These features tend to be very difficult to implement and are not as commonly used except by a few CAD apps. Polygon smooth has also been removed. Polygon offset is still available, but only for filled triangles, not for lines or points.

All support for directly drawing pixel rectangles has been removed, because these paths tend to be complex in hardware. This means glDrawPixels, glPixelTransfer, glPixelZoom, and all related functionality are not supported. Therefore the imaging subset is also not supported. glBitmap rendering is removed as well. glPixelStorei is still supported to allow for glReadPixels pack alignment. It is still possible to emulate glDrawPixels by creating a texture with the color buffer data that would have been used for a glDrawPixels call and then drawing a screen-aligned textured polygon.

Texturing

Texturing is another complex feature that can be simplified for a limited API. For starters, 1D textures are redundant since they can be emulated with a 2D texture of height 1. Also, 3D and cube map textures are removed because of their added complexity and less frequent use. Borders are not supported. To simplify 2D textures, only a few image formats are supported: GL_RGB, GL_RGBA, GL_LUMINANCE, GL_ALPHA, and GL_LUMINANCE_ALPHA. glCopyTexImage2D and glCopyTexSubImage2D are supported, as well as compressed textures. However, glGetCompressedTexImage is not supported and compressed formats are illegal as internal formats for glTexImage2D calls, so compressed textures have to be compressed offline using vendor-provided tools.

The texture environment remains intact except for combine mode, which is not supported. Both GL_CLAMP_TO_EDGE and GL_REPEAT wrap modes are supported. GL_CLAMP and GL_CLAMP_TO_BORDER are not supported. Controls over mipmap image levels and LOD range are also removed.

Per-Fragment Operations

Most per-fragment operations, such as scissoring, stenciling, and depth test, remain intact since most of them provide unique and commonly used functionality. Blending is included, but blend equations other than GL_FUNC_ADD are not supported. So glBlendEquation and glBlendColor are no longer necessary.

Framebuffer Operations

Because color index mode is not supported, no color index operations are available for whole framebuffer operations. In addition, accumulation buffers are not supported. Also, drawing to multiple color buffers is not supported, so glDrawBuffer is not available. Similarly, glReadPixels is supported but glReadBuffer is not since the only valid rendering target is the front buffer. Along with the rest of the pixel rectangle path, glCopyPixels is also gone.

Other Functionality

Evaluators are not supported. Selection and feedback are not supported.

State

OpenGL ES 1.0 limits access to internal state. This helps reduce duplication of state storage for implementations and can allow for a more optimal implementation. Generally, dynamic state is not accessible, whereas static state is available. Only the following functions are allowed for accessing state:

glGetIntegerv(GLenum pname, GLint *params);

glGetString(GLenum pname);

Hints are queryable. Also, implementation-dependent hardware limits can be queried, such as GL_MAX_MODELVIEW_STACK_DEPTH, GL_MAX_TEXTURE_SIZE, and GL_ALIASED_POINT_SIZE_RANGE.

Core Additions

For the most part, OpenGL ES is a subset of OpenGL functionality. But there are also a few additions. These take the form of extensions that are accepted as core additions to the ES specification. That means they are required to be supported by any implementation that is conformant, unless the extension is optional (OES_query_matrix).

Byte Coordinates—OES_byte_coordinates

This extension and the next one are two of the biggest enablers for limited embedded systems. This extension allows byte data usage for vertex and texture coordinates.

Fixed Point—OES_fixed_point

This extension introduces a new integer-based fixed-point data type for use in defining vertex data. The new interfaces mirror the floating-point versions with the new data type. The new commands are glNormal3x, glMultiTexCoord4x, glVertexPointer, glColorPointer, glNormalPointer, glTexCoordPointer, glDepthRangex, glLoadMatrixx, glMultMatrixx, glRotatex, glScalex, glTranslatex, glFrustumx, glOrthox, glMaterialx[v], glLightx[v], glLightModelx[v], glPointSizex, glLineWidthx, glPolygonOffsetx, glTexParameterx, glTexEnvx[v], glFogx[v], glSampleCoveragex, glAlphaFuncx, glClearColorx, and glClearDepthx.
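To make the fixed-point type more concrete, here is a minimal sketch of the conversions an application might use. It assumes the signed 16.16 layout (16 integer bits, 16 fractional bits) that the fixed-point type uses; the names fixed_t, fixed_from_float, fixed_to_float, and fixed_mul are hypothetical helpers, not part of the extension.

```c
#include <stdint.h>

/* Stand-in for the fixed-point type; assumed to be signed 16.16. */
typedef int32_t fixed_t;

/* Converts a float to 16.16 fixed point (truncates toward zero). */
fixed_t fixed_from_float(float f)
{
    return (fixed_t)(f * 65536.0f);
}

/* Converts a 16.16 fixed-point value back to a float. */
float fixed_to_float(fixed_t x)
{
    return (float)x / 65536.0f;
}

/* Multiplies two 16.16 values. The 64-bit intermediate avoids overflow
 * before the result is scaled back down by 2^16. */
fixed_t fixed_mul(fixed_t a, fixed_t b)
{
    return (fixed_t)(((int64_t)a * (int64_t)b) / 65536);
}
```

With helpers like these, a value such as 1.5 becomes the integer 98304, which could then be passed to one of the x-suffixed entrypoints.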

Single-Precision Commands—OES_single_precision

This extension adds a few new single-precision entrypoints as alternatives to the original double-precision versions. The supported functions are glDepthRangef, glFrustumf, glOrthof, and glClearDepthf.

Compressed Paletted Textures—OES_compressed_paletted_texture

This extension provides for specifying compressed texture images in color index formats, along with a color palette. It also adds ten new internal texture formats to allow for texture specification.

Read Format—OES_read_format

Read Format is a required extension. With this extension, the optimal type and format combinations for use with glReadPixels can be queried. The format and type have to be within the set of supported texture image values. These are stored as state variables with the names GL_IMPLEMENTATION_COLOR_READ_FORMAT_OES and GL_IMPLEMENTATION_COLOR_READ_TYPE_OES. This prevents the ES implementation from having to do a software conversion of the pixel buffer.

Query Matrix—OES_query_matrix

This is an optional extension that allows access to certain matrix states. If this extension is supported, the modelview, texture, and projection matrices can be queried. The extension allows for retrieval in a fixed-point format for the profiles that require it (Common-Lite).

ES 1.1

The ES 1.1 specification is similar to the 1.0 specification. The biggest change is that OpenGL 1.5 is used for the base of this revision, instead of OpenGL 1.3. So most of the new features in OpenGL 1.5 are also available in ES 1.1. In addition to the OpenGL 1.5 features, there are a few new core extensions. In this section we will cover the major changes to ES 1.1 with reference to ES 1.0 instead of beginning from scratch.

Vertex Processing and Coloring

Most of the vertex specification path is the same as the ES 1.0 path. There are a few additions to commands that can be used to define vertex information. Color information can be defined using unsigned bytes:

glColor4ub[v](GLubyte red, GLubyte green, GLubyte blue, GLubyte alpha);

Also, buffer objects were added to the OpenGL 1.5 specification and are included in the OpenGL ES 1.1 specification. In OpenGL 1.5, buffer objects allow for flexible usage. For instance, after a buffer object is specified, OpenGL 1.5 allows that buffer to be mapped back to system memory so that the application can update it, in addition to being updated through GL commands. To support these access methods, different usage indicators are given when glBufferData is called on a buffer.

For the ES version, most of the usage profiles for buffer objects are removed. The primary supported usage is GL_STATIC_DRAW, which means that the buffer object data is intended to be specified once and then repeatedly rendered from; ES 1.1 also accepts GL_DYNAMIC_DRAW for data that is respecified more often. When ES can expect this behavior, it can optimize the handling and efficiency of the buffer object. In addition, system limitations in an embedded environment may not allow for the video memory holding the buffer object to be mapped to the application directly. Because the other usage methods are not supported, the GL_STREAM_DRAW, GL_STREAM_COPY, GL_STREAM_READ, GL_STATIC_READ, GL_STATIC_COPY, GL_DYNAMIC_COPY, and GL_DYNAMIC_READ tokens are not accepted. Also, the glMapBuffer and glUnmapBuffer commands are not supported.

Clip planes were not supported in ES 1.0, but have been added to ES 1.1 in a limited fashion. The new minimum number of supported clip planes is one instead of six. Also, the commands for setting clip planes take lower precision plane equations. The precision is dependent on the profile.

Query functions were generally not supported in the previous version of ES. For lighting state, several functions were added to permit queries. These are glGetMaterialfv and glGetLightfv.

Rasterization

Point parameters are also added. The interface is more limited than the standard OpenGL 1.5 interface. Only the glPointParameterf[v] interface is supported.

Texturing

The level of texturing support in ES 1.1 has been expanded. One of the major changes is the addition of automatic mipmap generation. This relieves applications from having to store all the mipmap data or calculate it at runtime. Also, glIsTexture is added back to the interface to help determine which textures have been instantiated. As part of the mipmap generation support, the GL_GENERATE_MIPMAP_HINT hint is supported.

Only GL_TEX_ENV_COLOR and GL_TEX_ENV_MODE were previously supported, and in a limited capacity at that. But OpenGL ES 1.1 adds all of the texture environment back in.

State

One of the fundamental changes in level of support for ES 1.1 is state queries. A premise for the ES 1.0 spec was to limit the amount of interaction between the application and GL. This helps to simplify the interface to allow for a leaner implementation. As part of this effort, all dynamic state queries were removed from the GL interface.

Now, many of the dynamic GL state queries are available again. This is helpful for application development, since querying state can be an important debug tool. For the most part, any state that is accepted in ES 1.1 can be queried. But the same limitations that exist in the state command interface exist in the query interface. For instance, glGetMaterialiv is not supported while glGetMaterialfv is, because the state interface supports only the “fv” form. So the query interfaces parallel the state interfaces. In the same respect, only query interfaces for the supported data types for a given profile are valid.

Texture data queries are still limited. The only valid state queries are the following: GL_TEXTURE_2D, GL_TEXTURE_BINDING_2D, GL_TEXTURE_MIN_FILTER, GL_TEXTURE_MAG_FILTER, GL_TEXTURE_WRAP_S, GL_TEXTURE_WRAP_T, and GL_GENERATE_MIPMAP.

Core Additions

Most of the same extensions are included in the OpenGL ES 1.1 specification as are in 1.0. The optional OES_query_matrix extension is now accompanied by a new required extension that also allows matrices to be queried. Several additional extensions are added to the 1.0 set to further extend ES functionality. The OpenGL ES 1.0 extensions that are also part of the ES 1.1 specification are OES_byte_coordinates, OES_fixed_point, OES_single_precision, OES_read_format, OES_query_matrix, and OES_compressed_paletted_texture. These are already described in the preceding section.

Matrix Palette—OES_matrix_palette

Most embedded systems have to keep object models simple due to limited resources. This can be a problem when we’re trying to model people, animals, or other complex objects. As body parts move, when a bone modeling method is used, there can be gaps between different parts. Imagine standing one cylinder on top of another, and then tilting the top one off-axis. The result is a developing gap between the two cylinders, which in a game might represent an upper arm and a lower arm of a human character.

This is a hard problem to solve without complex meshes connecting each piece that need to be recalculated on every frame. That sort of solution is usually well out of the reach of most embedded systems.

Another solution is to use a new OpenGL ES extension that enables the technique of vertex skinning. This stitches together the ends of each “bone,” eliminating the gap. The final result is a smooth, texturable surface connecting each bone.

When this extension is enabled, a palette of matrices can be supported. These are not part of the matrix stack, but can be enabled by setting the GL_MATRIX_MODE to GL_MATRIX_PALETTE_OES. Each implementation can support a different number of matrices and vertex units. The application can then define a set of indices, one for each bone. There is also an associated weight for each index. The final vertex is then the sum, over all indices, of each weight times its respective palette matrix times the vertex. The normal is calculated in a similar way.
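The weighted sum described above can be sketched on the CPU as follows. This is only an illustration of the arithmetic, not how an implementation performs it; the function names are hypothetical, and the sketch assumes column-major 4x4 matrices stored back to back in one flat array.

```c
/* Transforms v (x, y, z, w) by a column-major 4x4 matrix m. */
static void mat4_mul_vec4(const float *m, const float *v, float *out)
{
    for (int row = 0; row < 4; ++row) {
        out[row] = m[row] * v[0] + m[4 + row] * v[1]
                 + m[8 + row] * v[2] + m[12 + row] * v[3];
    }
}

/* Hypothetical helper: blends a vertex across several palette matrices.
 * palette: flat array of column-major 4x4 matrices (16 floats each).
 * indices: which palette matrix each vertex unit uses.
 * weights: the weight associated with each index.
 * count:   number of index/weight pairs for this vertex.
 * out = sum over i of weights[i] * palette[indices[i]] * v */
void skin_vertex(const float *palette, const unsigned char *indices,
                 const float *weights, int count,
                 const float *v, float *out)
{
    out[0] = out[1] = out[2] = out[3] = 0.0f;
    for (int i = 0; i < count; ++i) {
        float transformed[4];
        mat4_mul_vec4(palette + 16 * indices[i], v, transformed);
        for (int k = 0; k < 4; ++k) {
            out[k] += weights[i] * transformed[k];
        }
    }
}
```

For example, a vertex weighted equally between an identity matrix and a translation of two units along x ends up translated by one unit, which is the behavior that closes the gap between two bones.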

To select the current matrix, use the glCurrentPaletteMatrixOES command, passing in an index for the specific palette matrix to modify. The matrix can then be set using the normal load matrix commands. Alternatively, the current palette matrix can be loaded from the modelview matrix by using the glLoadPaletteFromModelViewMatrixOES command. You will have to enable two new vertex arrays, GL_MATRIX_INDEX_ARRAY_OES and GL_WEIGHT_ARRAY_OES. Also, the vertex array pointers will need to be set using the glWeightPointerOES and glMatrixIndexPointerOES commands:

glCurrentPaletteMatrixOES(GLuint index);

glLoadPaletteFromModelViewMatrixOES();

glMatrixIndexPointerOES(GLint size, GLenum type, GLsizei stride, void *pointer);

glWeightPointerOES(GLint size, GLenum type, GLsizei stride, void *pointer);

Point Sprites—OES_point_sprite

Point sprites do not exist as core functionality in OpenGL 1.5. Instead, they are supported as the ARB_point_sprite extension and then later in OpenGL 2.0. The OES_point_sprite core extension is very similar to the ARB version that was written for OpenGL 1.5, but takes into account the embedded system environment. Mainly this means that instead of using token names that end in “ARB,” token names end in “OES.”

Point Size Array—OES_point_size_array

To support quick and efficient rendering of particle systems, the OES_point_size_array extension was added. This allows a vertex array to be defined that will contain point sizes. This allows the application to render an entire series of points with varying sizes in one glDrawArrays call. Without this extension the GL point size state would have to be changed between rendering each point that had a different size.

Matrix Get—OES_matrix_get

Some applications need to read matrix state back, which is particularly useful after a series of matrix transforms or multiplications. The new required OES_matrix_get extension was added to provide a query path suited to ES. The Common profile is permitted to query for float values whereas the Common-Lite profile must use a fixed-point representation. The commands are glGetFloatv and glGetFixedv, respectively; they return matrix data as a single array. This extension is in addition to OES_query_matrix.

Draw Texture—OES_draw_texture

In certain environments, ES may be the only API for rendering, or it may be inconvenient for an application to switch between two APIs. Although 2D-like rendering can be done with OpenGL, it can be cumbersome. This extension is intended to help resolve this problem as well as provide a method for quickly drawing font glyphs and backgrounds.

With this extension, a screen-aligned texture can be drawn to a rectangle region on the screen. This may be done using the glDrawTex commands:

glDrawTex{sifx}OES(T Xs, T Ys, T Zs, T Ws, T Hs);

glDrawTex{sifx}vOES(T *coords);

In addition, a specific region of the texture can be selected for use, so the entire texture does not need to be used for a glDrawTex call. This may be done by calling glTexParameter with the GL_TEXTURE_CROP_RECT_OES token and the four texture coordinates to use as the texture crop rectangle. By default, the crop rectangle is 0,0,0,0. The texture crop rectangle will not affect any other GL commands besides glDrawTex.

ES 2.0

The first two major OpenGL ES specifications were largely complexity reductions from existing OpenGL specifications. ES 2.0 extends this trend by wholesale removal of large parts of core OpenGL 2.0, making even greater strides in rendering path consolidation.

At the same time, ES 2.0 provides more control over the graphics pipeline than was previously available. Instead of supporting a slimmed-down version of the fixed-function pipeline, the fixed-function pipeline has been completely removed. In its place is a programmable shader path that allows applications to decide individually which vertex and fragment processing steps are important.

One prevalent change in ES 2.0 is the support of floating-point data types in commands. Previously, floating-point data needed to be emulated using fixed-point types, which are still available in ES 2.0. Also, the data types byte, unsigned byte, short, and unsigned short are not used for OpenGL commands.

Vertex Processing and Coloring

As with the preceding versions, display lists and immediate mode rendering are not supported. Vertex arrays or vertex buffer objects must be used for vertex specification. The vertex buffer object interface now supports mapping and unmapping buffers just as OpenGL 2.0 does. Predetermined array types are no longer supported (glVertexPointer, glNormalPointer, etc.). The only remaining method for specifying vertex data is the use of generic attributes through the following entrypoint:

glVertexAttribPointer(GLuint index, GLint size, GLenum type,
                      GLboolean normalized, GLsizei stride, const void *ptr);

In addition, glInterleavedArrays and glArrayElement are no longer supported. Also, normal rescale, normalization, and texture coordinate generation are not supported. Because the fixed-function pipeline has been removed, these features are no longer relevant. If desirable, similar functionality can be implemented in programmable shaders.

Because the fixed-function pipeline has been removed, all lighting state is also removed. Lighting models can be represented in programmable shaders as well.

Programmable Pipeline

OpenGL ES 2.0 has replaced the fixed-function pipeline with support for programmable shaders. In OpenGL 2.0, which also supports programmable GLSL shaders, the implementation model allows applications to compile source at runtime using shader source strings. OpenGL ES 2.0 uses a shading language similar to the GLSL language specification, called the OpenGL ES Shading Language. This version has changes that are specific to embedded environments and hardware they contain.

Although a built-in compiler is very easy to use, a compiler included in the OpenGL driver can be large (several megabytes), and the compile process can be very CPU intensive. These costs do not work well with smaller handheld embedded systems, which have much more stringent limitations for both memory and processing power.

For this reason, OpenGL ES has provided two different paths for the compilation of shaders. The first is similar to OpenGL 2.0, allowing applications to compile and link shaders using shader source strings at runtime. The second is a method for compiling shaders offline and then loading the compiled result at runtime. Neither method individually is required, but an OpenGL ES 2.0 implementation must support at least one.

Many of the original OpenGL 2.0 commands are still part of ES. The same semantics of program and shader management are still in play. The first step in using the programmable pipeline is to create the necessary shader and program objects. This is done with the following commands:

glCreateShader(GLenum type);

glCreateProgram(void);

After that, shader objects can be attached to program objects:

glAttachShader(GLuint program, GLuint shader);

Shaders can be compiled before or after attachment if the compile method is supported. But the shader source needs to be specified first. These methods are covered in the following extension sections: “Shader Source Loading and Compiling” and “Loading Shaders.” Also, generic attribute channels can be bound to names during this time:

glBindAttribLocation(GLuint program, GLuint index, const char *name);

The program can then be linked. If the shader binary interface is supported, the shader binaries for the compiled shaders need to be loaded before the link method is called. A single binary can be loaded for a fragment-vertex pair if they were compiled together offline.

glLinkProgram(GLuint program);

After the program has been successfully linked, it can be set as the currently executing program by calling glUseProgram. Also, at this point uniforms can be set as needed. All the normal OpenGL 2.0 attribute and uniform interfaces are supported. However, the transpose bit for setting uniform matrices must be GL_FALSE. This feature is not essential to the functioning of the programmable pipeline. Trying to draw without a valid program bound will generate undefined results.

glUseProgram(GLuint program);

glUniform{1234}{if}(GLint location, T values);

glUniform{1234}{if}v(GLint location, sizei count, T value);

glUniformMatrix{234}fv(GLint location, sizei count,

GLboolean transpose, T value);

Using the programmable pipeline in OpenGL ES 2.0 is pretty straightforward if you are familiar with using GLSL. If you don’t have much GLSL experience, it may be helpful to do some work with programmable shaders in OpenGL 2.0 first, since programming for a PC is usually more user-friendly than programming for most embedded environments. For more information and the semantics of using shaders and programs, see Chapters 15 through 17. To get a better idea of how the two OpenGL ES shader compilation models are used, see the related extensions at the end of this section.

Rasterization

Handling of points has also changed. Only aliased points are supported. Also, point sprites are always enabled for point rendering. Several aspects of point sprite handling have also changed. Vertex shaders are responsible for outputting point size; there is no other way for point size to be specified. GL_COORD_REPLACE can be used to generate point texture coordinates from 0 to 1 for s and t coordinates. Also, the point coordinate origin is set to GL_UPPER_LEFT and cannot be changed.

Antialiased lines are not supported. OpenGL ES 2.0 also has the same limitations as ES 1.1 for polygon support.

Texturing

Texture support has been expanded for ES 2.0. In addition to 2D textures, cubemaps are supported. Depth textures still are not supported and 3D textures remain optional. Non-power-of-two texture support was promoted to OpenGL 2.0 and is included as part of the ES 2.0 specification as well. But for ES, non-power-of-two textures are valid only for 2D textures when mipmapping is not in use and the texture wrap mode is set to clamp to edge.
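
The non-power-of-two rule is easy to get wrong when textures come from an art pipeline, so it can help to check it before uploading anything. The following is a minimal sketch of such a check in plain C. The function name and the two boolean flags are hypothetical; they stand in for whatever your application knows about the texture it is about to create.

```c
#include <stdbool.h>

/* True when n is a positive power of two (exactly one bit set). */
static bool is_power_of_two(unsigned n)
{
    return n != 0 && (n & (n - 1)) == 0;
}

/* Returns true when a 2D texture of this size satisfies the core ES 2.0
 * rule described in the text. Both flags are hypothetical inputs that the
 * application tracks for itself. */
bool texture_2d_allowed(unsigned width, unsigned height,
                        bool uses_mipmaps, bool wrap_is_clamp_to_edge)
{
    if (is_power_of_two(width) && is_power_of_two(height))
        return true;    /* power-of-two sizes carry no extra restrictions */

    /* Non-power-of-two: no mipmapping, and wrap must be clamp to edge. */
    return !uses_mipmaps && wrap_is_clamp_to_edge;
}
```

For example, a 320×240 texture passes only when it is not mipmapped and uses clamp to edge, whereas a 256×256 texture passes in every case.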

Fragment Operations

There are also a few changes to the per-fragment operations allowed in ES 2.0. It is required that there be at least one config available that supports both a depth buffer and a stencil buffer. This will guarantee that an application depending on the use of depth information and stencil compares will function on any implementation that supports OpenGL ES 2.0.

A few things have also been removed from the OpenGL 2.0 spec. First, the alpha test stage has been removed since an application can implement this stage in a fragment shader. The glLogicOp interface is no longer supported. And occlusion queries are also not part of OpenGL ES.

Blending works as it does in OpenGL 2.0, but the scope is more limited. glBlendEquation and glBlendEquationSeparate support only the following modes: GL_FUNC_ADD, GL_FUNC_SUBTRACT, and GL_FUNC_REVERSE_SUBTRACT.
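
To make the three equations concrete, here is a small software model of what each mode computes for a single color channel. This is only a sketch for illustration; the enum and function names are hypothetical, and a real implementation does this work in hardware. The source and destination values are assumed to be already in the 0 to 1 range, and the blend factors are passed in directly.

```c
typedef enum {
    BLEND_ADD,               /* models GL_FUNC_ADD */
    BLEND_SUBTRACT,          /* models GL_FUNC_SUBTRACT */
    BLEND_REVERSE_SUBTRACT   /* models GL_FUNC_REVERSE_SUBTRACT */
} BlendMode;

/* Clamp a value to the 0..1 range. */
static float clamp01(float v)
{
    if (v < 0.0f) return 0.0f;
    if (v > 1.0f) return 1.0f;
    return v;
}

/* Blend one channel: weight source and destination by their factors,
 * combine them according to the mode, and clamp the result. */
float blend_channel(BlendMode mode, float src, float src_factor,
                    float dst, float dst_factor)
{
    float s = src * src_factor;
    float d = dst * dst_factor;

    switch (mode) {
    case BLEND_SUBTRACT:         return clamp01(s - d);
    case BLEND_REVERSE_SUBTRACT: return clamp01(d - s);
    case BLEND_ADD:
    default:                     return clamp01(s + d);
    }
}
```

Note that the only difference between the subtract modes is the order of the operands, which is why a result that clamps to zero in one mode can be nonzero in the other.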

State

OpenGL ES 2.0 supports the same state and state queries as OpenGL ES 1.1. But the state that is not part of ES 2.0 cannot be queried, for instance, GL_CURRENT_COLOR and GL_CURRENT_NORMAL. Vertex array data state is also not queryable since ES 2.0 does not support named arrays. Queries have been added for shader and program state and these are the same as in OpenGL 2.0.

Core Additions

The number of core additions and extensions has dramatically increased to support the more flexible nature of ES 2.0. Some of these are required but most are optional. You may notice there are many layered extensions for things like texturing. With the use of this model, an optional core extension definition is created for compatibility purposes, while still allowing implementations to decide exactly what components should be implemented and to what level.

Two required extensions are promoted along from ES 1.1 and ES 1.0: OES_read_format and OES_compressed_paletted_texture.

Framebuffer Objects—OES_framebuffer_object

The framebuffer object extension was originally written against OpenGL 2.0, and is required to be supported in OpenGL ES 2.0. This extension creates the concept of a “frame-buffer-attachable image.” This image is similar to the window render surfaces. The main intention is to allow other surfaces to be bound to the GL framebuffer. This allows direct rendering to arbitrary surfaces that can later be used as texture images, among other things. Because this extension details many intricate interactions, only the broad strokes will be represented here. Refer to the ES 2.0 specification and the EXT_framebuffer_object description for more information on usage, and to Chapter 18, “Advanced Buffers,” for the OpenGL 2.0 explanation of framebuffer objects.

Framebuffer Texture Mipmap Rendering—OES_fbo_render_mipmap

When rendering to a framebuffer object that is used as a mipmapped texture, this optional extension allows for rendering into any of the mipmap levels of the attached framebuffer object. This can be done using the glFramebufferTexture2DOES and glFramebufferTexture3DOES commands.

Render Buffer Storage Formats

To increase the data type options for render buffer storage formats, the following extensions have been added: OES_rgb8_rgba8, OES_depth24, OES_depth32, OES_stencil1, OES_stencil4, and OES_stencil8. Of these, only OES_stencil8 is required. These new formats are relevant only for use with framebuffer objects and are designed to extend framebuffer object compatibility.

Half-Float Vertex Format—OES_vertex_half_float

With this optional extension it is possible to specify vertex data with 16-bit floating-point values. When this is done, the required storage for vertex data can be significantly reduced from the size of larger data types. Also, the smaller data type can have a positive effect on the efficiency of the vertex transform portions of the pipeline. Use of half-floats for data like colors often does not have any adverse effects, especially for limited display color depth.
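
If your source data is stored as 32-bit floats, it has to be packed into 16 bits before it can be handed to the implementation. The sketch below shows one simplified way to do that conversion on the CPU, assuming the usual layout of 1 sign bit, 5 exponent bits, and 10 mantissa bits. It is not a complete converter: it rounds toward zero, flushes values too small for a normalized half-float to zero, and turns anything too large (including NaN) into infinity. The function name is hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Convert a 32-bit float to a 16-bit half-float bit pattern.
 * Simplified: rounds toward zero, flushes tiny values to signed zero,
 * and maps overflow, infinity, and NaN to signed infinity. */
uint16_t float_to_half(float value)
{
    uint32_t bits;
    memcpy(&bits, &value, sizeof bits);   /* read the raw float bits */

    uint16_t sign     = (uint16_t)((bits >> 16) & 0x8000u);
    int32_t  exponent = (int32_t)((bits >> 23) & 0xFFu) - 127 + 15;
    uint32_t mantissa = bits & 0x007FFFFFu;

    if (exponent <= 0)
        return sign;                          /* too small: signed zero */
    if (exponent >= 31)
        return (uint16_t)(sign | 0x7C00u);    /* too large: infinity */

    /* Rebias the exponent and keep the top 10 mantissa bits. */
    return (uint16_t)(sign | ((uint32_t)exponent << 10) | (mantissa >> 13));
}
```

A conversion like this is best run offline or at load time, so the application ships or caches the 16-bit data and pays the conversion cost only once.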

Floating-Point Textures

Two new optional extensions, OES_texture_half_float and OES_texture_float, define new texture formats using floating-point components. OES_texture_float uses a 32-bit floating-point format, whereas OES_texture_half_float uses a 16-bit format. Both extensions support GL_NEAREST magnification as well as the GL_NEAREST and GL_NEAREST_MIPMAP_NEAREST minification filters. To use the other minification and magnification filters defined in OpenGL ES, support for the OES_texture_half_float_linear and OES_texture_float_linear extensions is required.

Unsigned Integer Element Indices—OES_element_index_uint

Element index use in OpenGL ES is inherently limited by the maximum size of the index data types. The use of unsigned bytes and unsigned shorts allows for only 65,536 elements to be used. This optional extension allows for the use of element indexing with unsigned integers, extending the maximum reference index to beyond what current hardware could store.

Mapping Buffers—OES_mapbuffer

For vertex buffer object support in previous OpenGL ES versions, the capability to specify and use anything other than a static buffer was removed. When this optional extension is available, the tokens GL_STREAM_DRAW, GL_STREAM_COPY, GL_STREAM_READ, GL_STATIC_READ, GL_DYNAMIC_COPY, and GL_DYNAMIC_READ are valid, as are the glMapBuffer and glUnmapBuffer entrypoints. This permits applications to map and edit VBOs that already have been defined.

3D Textures—OES_texture_3D

Generally, most ES applications do not require support for 3D textures. This extension was kept as optional to allow implementations to decide whether support could be accelerated and would be useful on an individual basis. Also, texture wrap modes and mipmapping are supported for 3D textures that have power-of-two dimensions. Non-power-of-two 3D textures only support GL_CLAMP_TO_EDGE for mipmapping and texture wrap.

Non-Power-of-Two Extended Support—OES_texture_npot

For non-power-of-two textures, the optional OES_texture_npot extension provides two additional wrap modes. When this extension is supported, GL_REPEAT and GL_MIRRORED_REPEAT are allowed as texture wrap modes, and the mipmapped minification filters are allowed as well.

High-Precision Floats and Integers in Fragment Shaders—OES_fragment_precision_high

This optional extension allows for support of the high-precision qualifier for integers and floats defined in fragment shaders.

Ericsson Compressed Texture Format—OES_compressed_ETC1_RGB8_texture

The need for compressed texture support in OpenGL ES has long been understood, but format specification and implementation has been left to each individual implementer. This optional extension formalizes one of these formats for use on multiple platforms.

To load a compressed texture using the ETC1_RGB8 format, call glCompressedTexImage2D with an internal format of GL_ETC1_RGB8_OES. This format defines a scheme by which each 4×4 texel block is grouped. A base color is then derived, and modifiers for each texel are selected from a table. The modifiers are then added to the base color and clamped to 0–255 to determine the final texel color. The full OES_compressed_ETC1_RGB8_texture description has more details on this process.
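
The final step of that process, adding a modifier to the base color and clamping, can be sketched in a few lines of C. This is only an illustration of the add-and-clamp arithmetic; how the base color and the modifier are extracted from the compressed block is defined by the extension and is not shown here. The function names and the modifier values in the usage note are hypothetical.

```c
#include <stdint.h>

/* Clamp an intermediate result to the 0..255 range of an 8-bit channel. */
static uint8_t clamp_to_byte(int value)
{
    if (value < 0)   return 0;
    if (value > 255) return 255;
    return (uint8_t)value;
}

/* Add one signed modifier to each channel of an RGB base color and
 * clamp, producing the final texel color in out[]. */
void apply_modifier(const uint8_t base[3], int modifier, uint8_t out[3])
{
    for (int channel = 0; channel < 3; ++channel)
        out[channel] = clamp_to_byte((int)base[channel] + modifier);
}
```

With a base color of (250, 128, 4), a modifier of 17 gives (255, 145, 21) and a modifier of -17 gives (233, 111, 0). The same offset is applied to all three channels, so a modifier changes the brightness of a texel rather than its hue.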

Shader Source Loading and Compiling—OES_shader_source

This extension is one of the two methods for loading shaders. If this extension is not supported, OES_shader_binary must be. This version is the most like OpenGL 2.0. There are several entrypoints that are valid only for this extension and are used for loading uncompiled shader source.

After the creation of a shader, the source must be set for the shader using the glShaderSource function. This can be done before or after the shader is attached to a program, but must be done before glCompileShader is called. After the source has been set, but before glLinkProgram is called if the shader is attached to a program, the shader must be compiled with a call to glCompileShader.

glShaderSource(GLuint shader, sizei count, const char **string,

const int *length);

glCompileShader(GLuint shader);

Because the shader source path has been added back to the programmable pipeline, several shader-specific queries are also available. glGetShaderInfoLog can be used to query information specific to a shader. Compile info is usually the most important information stored in the log. glGetShaderSource can be used to query the shader strings.

glGetShaderInfoLog(GLuint shader, sizei bufsize, sizei *length, char *infolog);

glGetShaderSource(GLuint shader, sizei bufsize, sizei *length, char *source);

Different implementations of OpenGL ES 2.0 may have different levels of internal precision when executing linked programs. Both the precision and the range can be checked for both shader types, vertex and fragment, with the glGetShaderPrecisionFormatOES function. Each precision-specific data type, GL_LOW_FLOAT, GL_MEDIUM_FLOAT, GL_HIGH_FLOAT, GL_LOW_INT, GL_MEDIUM_INT, and GL_HIGH_INT, can be queried individually. The results of the queries are log base 2 numbers.

glGetShaderPrecisionFormatOES(GLenum shadertype, GLenum precisiontype,

GLint *range, GLint *precision);

The last function added with this extension is glReleaseShaderCompilerOES. The resources that need to be initialized to successfully compile a shader can be extensive. Generally, an application will compile and link all shaders/programs it will use before executing any draw calls. This new command signals to the implementation that the compiler will not be used for a while and any allocated resources can be freed. The call does not mean that shaders are no longer allowed to be compiled, though.

Loading Shaders—OES_shader_binary

This extension is the other method for loading shaders. If this extension is not supported, OES_shader_source must be. This extension is intended to address the resource issues related to including a compiler in the OpenGL ES implementation. A compiler can require large amounts of storage, and the execution of an optimizing compiler on shaders during execution can steal large amounts of CPU time.

Using this method allows applications to compile shaders offline for execution on a specific system. These compiled shaders can then be loaded at execution time. This solves the storage problems related to including a compiler and eliminates any compile-time stalls.

This extension also supports use of the command glGetShaderPrecisionFormatOES. See the earlier description under “OES_shader_source” to get a detailed explanation.

One new command has been added to load compiled shader binaries, glShaderBinaryOES. This command can be used to load a single binary for multiple shaders that were all compiled offline together. These shaders are all listed together on the glShaderBinaryOES call, with “n” being the count of shader handles. For OpenGL ES 2.0, the binary format is always GL_PLATFORM_BINARY.

glShaderBinaryOES(GLint n, GLuint *shaders, GLenum binaryformat,

const void *binary, GLint length);

Shaders are compiled offline using an implementation-specific interface that is defined by individual vendors. These compiles will return a shader binary that will be used at execution time. It is best to compile both the vertex and the fragment shaders for an intended program at the same time, together. This gives the compiler the most opportunity to optimize the compiled shader code, eliminating any unnecessary steps such as outputting interpolants in vertex shaders that are never read in the fragment shader.

There is an additional link-time caveat. glLinkProgram is allowed to fail if optimized vertex and fragment shader source pairs are not linked together. This is because it is possible for the vertex shader to need a recompile based on the needs of the fragment shader.

ES SC

The last version of OpenGL ES we will present is OpenGL ES SC. This is the safety-critical specification. At this time there is only one release of SC, version 1.0. The SC specification is important for execution in places where an application or driver crash can have serious implications, such as for aircraft instrumentation or on an automobile’s main computer. Also, many of the removed features for SC were pulled out to make testing easier, since safety-critical components must go through a much more extensive testing and certification process than a normal PC component.

Because the industry in many of these safety-critical areas tends to progress slowly, many embedded applications use older features of OpenGL that are removed from the newer versions of OpenGL ES. You will find that many of these features are still present in OpenGL ES SC. SC has been written against the OpenGL 1.3 specification. Interface versions specific to the byte, short, and unsigned short data types have been removed to help reduce the number of entrypoints.

Vertex Processing and Coloring

In SC, immediate mode rendering has been added back in for all primitive types except GL_QUADS, GL_QUAD_STRIP, and GL_POLYGON. These can be simulated using other primitive types. Edge flags are not supported. Also, the vertex data entry routines have been reduced to include only the following:

glBegin(GLenum mode);

glEnd();

glVertex{2,3}f[v](T coords);

glNormal3f[v](GLfloat coords);

glMultiTexCoord2f(GLenum texture, GLfloat coords);

glColor4{f,fv,ub}(GLfloat components);

Rendering with vertex arrays is also supported in the same capacity as OpenGL ES 1.0, but generally supports only GL_FLOAT as the array data type (color arrays can also be GL_UNSIGNED_BYTE). Also, all the float versions of matrix specification and manipulation functions are supported, whereas the double versions are not since doubles are generally not supported in OpenGL ES. But the transpose versions of the commands are also not supported. Texture coordinate generation is not available.

Many SC systems rely on OpenGL ES to do all graphic rendering, including 2D, menus, and such. So, bitmaps are an important part of menus and 2D rendering and are available on SC. Because bitmaps are supported in SC, a method for setting the current raster position is also necessary. The glRasterPos entrypoint has been included to fulfill this need:

glRasterPos3f(GLfloat coords);

glBitmap(sizei width, sizei height, GLfloat xorig, GLfloat yorig,

GLfloat xmove, GLfloat ymove, const GLubyte *bitmap);

Most of the lighting model stays intact and at least two lights must be supported. But two-sided lighting has been removed, and with it go differing front and back materials. Also, local viewer is not available and the only color material mode is GL_AMBIENT_AND_DIFFUSE.

Rasterization

The rasterization path is very similar to the OpenGL 1.3 path. Point rendering is fully supported. Also, line and polygon stippling is supported. But as with the other ES versions, point and line polygon modes are not supported. Neither is GL_POLYGON_SMOOTH or multisample. As in OpenGL ES 1.0, only 2D textures are supported.

Fragment Operations

Fragment operations will seem familiar. Depth test is included as well as alpha test, scissoring, and blending. This specification also still allows use of color mask and depth mask.

State

Most states that are available for rendering are also available for querying. Most entrypoints are also supported unless they are duplicates or are for a data type that is not supported.

Core Additions

Even the SC version of OpenGL ES has several core additions. These are OES_single_precision, EXT_paletted_texture, and EXT_shared_texture_palette. Single precision has already been covered in previous versions of ES. Paletted texture support is very similar to the compressed paletted texture support offered in other versions of ES and is not described in detail here. Shared paletted textures expand on paletted textures by allowing for a common, shared palette.

The ES Environment

Now that we have seen what the specs actually look like, we are almost ready to take a peek at an example. Figure 22.1 shows an example of OpenGL ES running on a cell phone. To see a color version, flip to the Color Insert section of the book. But before that, there are a few issues unique to embedded systems that you should keep in mind while working with OpenGL ES and targeting embedded environments.

Figure 22.1. OpenGL ES rendering on a cellphone.

Application Design Considerations

For first-timers to the embedded world, things are a bit different here than when working on a PC. The ES world spans a wide variety of hardware profiles. The most capable of these might be multicore systems with extensive dedicated graphics resources, such as the Sony PlayStation 3. Alternatively, and probably more often, you may be developing for or porting to an entry-level cellphone with a 50MHz processor and 16MB of storage.

On limited systems, special attention must be paid to instruction count because every cycle counts if you are looking to maintain reasonable performance. Certain operations can be very slow. An example might be finding the sine of an angle. Instead of calling sin() in a math library, it would be much faster to do a lookup in a precalculated table if a close approximation would do the job. In general, the types of calculations and algorithms that might be part of a PC application should be updated for use in an embedded system. One example might be physics calculations, which are often very expensive. These can usually be simplified and approximated for use on embedded systems like cellphones.
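
As a rough sketch of the lookup-table idea, the following C code stores sine values for a quarter of a circle in 15-degree steps and uses symmetry to cover the rest. The table size, the function name, and the choice of whole degrees as input are all assumptions made for illustration; a real application would pick a resolution that matches the accuracy it needs.

```c
/* Precalculated sin() values for 0, 15, 30, ... 90 degrees. */
static const float quarter_sine[7] = {
    0.0f, 0.258819f, 0.5f, 0.707107f, 0.866025f, 0.965926f, 1.0f
};

/* Approximate sin() for an angle in whole degrees by snapping to the
 * nearest 15-degree table entry. No math library call is made. */
float table_sin(int degrees)
{
    int d = degrees % 360;
    if (d < 0)
        d += 360;                 /* wrap into 0..359 */

    float sign = 1.0f;
    if (d >= 180) {               /* sin(x + 180) = -sin(x) */
        d -= 180;
        sign = -1.0f;
    }
    if (d > 90)
        d = 180 - d;              /* sin(180 - x) = sin(x) */

    return sign * quarter_sine[(d + 7) / 15];   /* nearest entry */
}
```

The trade-off is memory and accuracy for speed: a finer table costs more storage but gives a closer approximation, and interpolating between entries is a common middle ground.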

On systems that support only ES 1.x, it’s also important to be aware of native floating-point support. Many of these systems do not have the capability to perform floating-point operations directly. This means all floating-point operations will be emulated in software. These operations are generally very slow and should be avoided at all costs. This is the reason that ES has provided for an interface that does not require floating-point data usage.

Dealing with a Limited Environment

Not only can the environment be limiting when working on embedded systems, but the graphics processing power itself is unlikely to be on par with the bleeding edge of PC graphics. This limitation also creates specific areas that need special attention when you’re looking to optimize the performance of your app, or just to get it to load and run at all!

It may be helpful to create a budget for storage space. In this way you can break up the maximum graphics/system memory available into pieces for each memory-intensive category. This will help to provide a perspective on how much data each unique piece of your app can use and when you are starting to run low.
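
One simple way to keep such a budget honest is to track it in code. The sketch below is one possible shape for that bookkeeping, not a prescribed design; the category names, the structure, and the function names are all hypothetical. Each category gets a byte limit up front, and every allocation is charged against its category before it is made.

```c
#include <stdbool.h>
#include <stddef.h>

typedef enum {
    BUDGET_TEXTURES,
    BUDGET_VERTICES,
    BUDGET_OTHER,
    BUDGET_COUNT
} BudgetCategory;

typedef struct {
    size_t limit[BUDGET_COUNT];   /* bytes allowed per category */
    size_t used[BUDGET_COUNT];    /* bytes charged so far */
} MemoryBudget;

/* Bytes still available in a category. */
size_t budget_remaining(const MemoryBudget *budget, BudgetCategory category)
{
    return budget->limit[category] - budget->used[category];
}

/* Charge an allocation against a category. Returns false, and charges
 * nothing, when the request would exceed that category's limit. */
bool budget_charge(MemoryBudget *budget, BudgetCategory category, size_t bytes)
{
    if (bytes > budget_remaining(budget, category))
        return false;
    budget->used[category] += bytes;
    return true;
}
```

Calling budget_charge before each texture or vertex buffer allocation makes it obvious during development which category is running low, long before the device itself runs out of memory.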

One of the most obvious areas is texturing. Large detailed textures can help make a PC targeted application provide a rich and detailed environment. This is great for the user experience. But in most embedded systems textures can be a huge resource hog. Many of the older platforms may not have full hardware support for texturing. OpenGL ES 1.x implementations may also be limited in the texture environment that can be hardware accelerated. You’ll want to refer to the documentation for your platform for this information. But these issues can cause large performance drops when many fragments are textured, especially if each piece of overlapping geometry is textured and drawn in the wrong order.

In addition to core hardware texturing performance, texture sizes can also be a major limitation. Both 3D and cube map textures can quickly add up to a large memory footprint. This is one reason why only 2D textures are supported in ES 1.x and 3D textures are optional for ES 2.0. Usually when the amount of graphics and system memory is limited, the screen size is also small. This means that a much smaller texture can be used with similar visual results. Also, it may be worth avoiding multitexture because it requires multiple texture passes as well as more texture memory.

Vertex count can also have an adverse effect on performance. Earlier ES platforms often performed vertex transform on the CPU instead of on dedicated graphics resources. This can be especially slow when using lighting on ES 1.x. To reduce vertex counts, difficult decisions have to be made about which parts of object models are important and require higher tessellation, and which are less important or may not suffer if rendered with less tessellation.

Vertex storage can also impact memory, similar to textures. In addition to setting a cap for the total memory used for vertices, it may also be helpful to decide which parts of a scene are important and divide up the vertex allotment along those lines.

One trick to keeping rendering smooth while many objects are on the screen is to change the vertex counts for objects relative to their distance from the viewer. This is a level-of-detail approach to geometry management. For instance, if you would like to generate a forest scene, three different models of trees could be used. One level would have a very small vertex count and would be used to render the farthest of the trees. A medium vertex count could be used for trees of intermediate distance, and a larger count would be used on the closest. This would allow many trees to be rendered much quicker than if they were all at a high detail level. Because the least detailed trees are the farthest away, and may also be partially occluded, it is unlikely the lower detail would be noticed. But there may be significant savings in vertex processing as a result.
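
The selection step of this level-of-detail approach might look something like the following. This is a minimal sketch; the enum, the function name, and the two distance limits are hypothetical, and the limits would need tuning for a real scene. Squared distances are compared so that no square root is needed.

```c
typedef enum { LOD_HIGH, LOD_MEDIUM, LOD_LOW } LodLevel;

/* Pick a detail level for an object based on its distance from the
 * viewer. Objects closer than near_limit get the high-detail model,
 * objects closer than far_limit get the medium one, and everything
 * else gets the low-detail model. */
LodLevel select_lod(const float viewer[3], const float object[3],
                    float near_limit, float far_limit)
{
    float dx = object[0] - viewer[0];
    float dy = object[1] - viewer[1];
    float dz = object[2] - viewer[2];
    float dist_sq = dx * dx + dy * dy + dz * dz;

    /* Compare squared values to avoid an expensive square root. */
    if (dist_sq < near_limit * near_limit)
        return LOD_HIGH;
    if (dist_sq < far_limit * far_limit)
        return LOD_MEDIUM;
    return LOD_LOW;
}
```

In the forest example, each tree would call this once per frame (or less often) and then draw whichever of its three models the result selects.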

Fixed-Point Math

You may ask yourself, “What is fixed-point math and why should I care?” The truth is that you may not care if your hardware supports floating-point numbers and the version of OpenGL ES you are using does as well. But there are many platforms that do not natively support floating point. Floating-point calculations in CPU emulation are very slow and should be avoided. In those instances, a representation of a floating-point number can be used to communicate nonwhole numbers. I definitely am not going to turn this into a math class! But instead a few basic things about fixed-point math will be covered to give you an idea of what’s involved. If you need to know more, there are many great resources available that go to great lengths in discussing fixed-point math.

First, let’s review how floating-point numbers work. There are basically two components to a floating-point number: The mantissa describes the fractional value, and the exponent is the scale or power. In this way large numbers are represented with the same number of significant digits as small numbers. They are related by m * 2^e, where m is the mantissa and e is the exponent.

Fixed-point representation is different. It looks more like a normal integer. The bits are divided into two parts, with one part being the integer portion and the other part being the fractional. The position between the integer and fractional components is the “imaginary point.” There also may be a sign bit. Putting these pieces together, a fixed-point format of s15.16 means that there is 1 sign bit, 15 bits represent the integer, and 16 bits represent the fraction. This is the format used natively by OpenGL ES to represent fixed-point numbers.
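
In s15.16, the value 1.0 is stored as the integer 65536 (2 to the 16th power), so converting to and from the format is just a matter of scaling by that constant. Here is a minimal sketch in C. The type name fixed_t and the helper names are hypothetical, chosen for this illustration. The float conversions are meant for tools or for platforms that do have floating point; on a device without it you would convert your data ahead of time.

```c
#include <stdint.h>

typedef int32_t fixed_t;      /* hypothetical name for an s15.16 value */

#define FIXED_ONE 65536       /* 1.0 in s15.16: 2 to the 16th power */

/* Whole number to s15.16. Multiplying (rather than shifting) keeps the
 * result well defined for negative inputs. */
fixed_t fixed_from_int(int value)
{
    return (fixed_t)(value * FIXED_ONE);
}

/* Float to s15.16, discarding any fraction finer than 1/65536. */
fixed_t fixed_from_float(float value)
{
    return (fixed_t)(value * (float)FIXED_ONE);
}

/* s15.16 back to float, useful for debugging and printing. */
float fixed_to_float(fixed_t value)
{
    return (float)value / (float)FIXED_ONE;
}
```

For instance, 1.5 becomes 98304 (0x00018000): the upper 16 bits hold the integer 1 and the lower 16 bits hold 0x8000, which is one half.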

Addition of two fixed-point numbers is simple. Because a fixed-point number is basically an integer with an arbitrary “point,” the two numbers can be added together with a common scalar addition operation. The same is true for subtraction. There is one requirement for performing these operations. The fixed-point numbers must be in the same format. If they are not, one must be converted to the format of the other first. So to add or subtract a number with format s23.8 and one with s15.16, one format has to be picked and both numbers converted to that format.
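
As a small worked example of that conversion, the sketch below adds an s23.8 value to an s15.16 value by first bringing the s23.8 operand up to 16 fractional bits. The function names are hypothetical. Note that widening the fraction by 8 bits also removes 8 bits of integer range, so the s23.8 input must be small enough to fit in s15.16.

```c
#include <stdint.h>

/* Convert s23.8 to s15.16 by adding 8 fractional bits. Multiplying by
 * 256 is the same as shifting left by 8, and stays well defined for
 * negative values. The input must fit in the smaller integer range. */
int32_t s23_8_to_s15_16(int32_t value)
{
    return value * 256;
}

/* Add an s23.8 number to an s15.16 number; the result is s15.16. */
int32_t add_mixed(int32_t a_s23_8, int32_t b_s15_16)
{
    return s23_8_to_s15_16(a_s23_8) + b_s15_16;
}
```

Here 2.5 in s23.8 is 640, and 1.25 in s15.16 is 81920. After conversion the first operand is 163840, and the sum is 245760, which is 3.75 in s15.16.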

Multiplication and division are a bit more complex. When two fixed-point numbers are multiplied together, the imaginary point of the result will be the sum of that in the two operands. For instance, if you were multiplying two numbers with formats of s23.8 together, the result would be in the format of s15.16. So it is often helpful to first convert the operands into a format that will allow for a reasonably accurate result format. You probably don’t want to multiply two s15.16 formats together if they are greater than 1.0—the result format would have no integer portion! Division is very similar, except the size of the fractional component of the second number is subtracted from the first.
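
One common alternative to converting the operands first is to do the operation in a wider integer and then scale the result back to s15.16. The sketch below takes that approach, and it assumes the target compiler provides a 64-bit integer type, which may be slow or unavailable on very small processors. The type and function names are hypothetical.

```c
#include <stdint.h>

typedef int32_t fixed_t;      /* hypothetical name for an s15.16 value */

#define FIXED_ONE 65536       /* 1.0 in s15.16 */

/* Multiply two s15.16 numbers. The raw 64-bit product carries 32
 * fractional bits, so dividing by 65536 brings it back to 16. The
 * result must still fit in s15.16 or it will overflow. */
fixed_t fixed_mul(fixed_t a, fixed_t b)
{
    return (fixed_t)(((int64_t)a * (int64_t)b) / FIXED_ONE);
}

/* Divide two s15.16 numbers. Dividing raw values would cancel all the
 * fractional bits, so the numerator is scaled up by 65536 first.
 * The divisor b must not be zero. */
fixed_t fixed_div(fixed_t a, fixed_t b)
{
    return (fixed_t)(((int64_t)a * FIXED_ONE) / (int64_t)b);
}
```

With these helpers, 1.5 times 2.0 (98304 and 131072) gives 196608, which is 3.0, and 1.0 divided by 4.0 gives 16384, which is 0.25.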

When using fixed-point numbers, you have to be especially careful about overflow issues. With normal floating point, when the fractional component would overflow, the exponent portion is modified to preserve accuracy and prevent the overflow. This is not the case for fixed point. To avoid overflowing fixed-point numbers when performing operations that might cause problems, the format can be altered. The numbers can be converted to a format that has a larger integer component, and then converted back before calling into OpenGL ES. With multiplication similar issues result in precision loss of the fractional component when the result is converted back to one of the operand formats. There are also math packages available to help you convert to and from fixed-point formats, as well as perform math functions. This is probably the easiest way to handle fixed-point math if you need to use it for an entire application.

That’s it! Now you have an idea how to do basic math operations using fixed-point formats. This will help get you started if you find yourself stuck having to use fixed-point values when working with embedded systems. There are many great references for learning more about fixed-point math. One is Essential Mathematics for Games and Interactive Applications by James Van Verth and Lars Bishop (Elsevier, Inc. 2004).

EGL: A New Windowing Environment

You have already heard about glx, agl, and wgl. These are the OpenGL-related system interfaces for OSs like Linux, Apple’s Mac OS, and Microsoft Windows. These interfaces are necessary to do the setup and management for system-side resources that OpenGL will use. The EGL implementation often is also provided by the graphics hardware vendor. Unlike the other windowing interfaces, EGL is not OS specific. It has been designed to run under Windows, Linux, or embedded OSs such as Brew and Symbian. A block diagram of how EGL and OpenGL ES fit into an embedded system is shown in Figure 22.2.

Figure 22.2. A typical embedded system diagram.

EGL has its own native types just like OpenGL does. EGLBoolean has two values that are named similarly to their OpenGL counterparts: EGL_TRUE and EGL_FALSE. EGL also defines the type EGLint. This is an integer that is sized the same as the native platform integer type. The most current version of EGL as of this writing is EGL 1.2.

EGL Displays

Most EGL entrypoints take a parameter called EGLDisplay. This is a reference to the rendering target where drawing can take place. It might be easiest to think of this as corresponding to a physical monitor. The first step in setting up EGL will be to get the default display. This can be done through the following function:

EGLDisplay eglGetDisplay(NativeDisplayType display_id);

The native display id that is taken as a parameter is dependent on the system. For instance, if you were working with an EGL implementation on Windows, the display_id parameter you pass would be the device context. You can also pass EGL_DEFAULT_DISPLAY if you don’t have the display id and just want to render on the default device. If EGL_NO_DISPLAY is returned, an error occurred.

Now that you have a display handle, you can use it to initialize EGL. If you try to use other EGL interfaces without initializing EGL first, you will get an EGL_NOT_INITIALIZED error.

EGLBoolean eglInitialize(EGLDisplay dpy, EGLint *major, EGLint *minor);

The other two parameters returned are the major and minor EGL version numbers. By calling the initialize command, you tell EGL you are getting ready to do rendering, which will allow it to allocate and set up any necessary resources.

The main addition to EGL 1.2 is the eglBindAPI interface. This allows an application to select from different rendering APIs, such as OpenGL ES and OpenVG. Only one context can be current for each API per thread. Use this interface to tell EGL which interface it should use for subsequent calls to eglMakeCurrent in a thread. Pass in one of two tokens to signify the correct API: EGL_OPENVG_API or EGL_OPENGL_ES_API. The call will fail if an invalid enum is passed in. The default value is EGL_OPENGL_ES_API. So unless you plan to switch between multiple APIs, you don’t need to set EGL_OPENGL_ES_API to get OpenGL ES.

EGLBoolean eglBindAPI(EGLenum api);

EGL also provides a method to query the current API, eglQueryAPI. This interface returns one of the two enums previously listed.

EGLenum eglQueryAPI(void);
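Because eglBindAPI first appeared in EGL 1.2, an application that may run on older implementations can check the version numbers returned by eglInitialize before calling it. The following is a minimal sketch of that check; the helper names egl_version_at_least and can_use_bind_api are hypothetical and not part of EGL, and plain int is used in place of EGLint so the fragment compiles without EGL headers.

```c
#include <stdbool.h>

/* Hypothetical helper: true when the reported version is at least
 * want_major.want_minor. major and minor are the values that
 * eglInitialize writes through its two output pointers. */
static bool egl_version_at_least(int major, int minor,
                                 int want_major, int want_minor)
{
    if (major != want_major)
        return major > want_major;
    return minor >= want_minor;
}

/* eglBindAPI was introduced in EGL 1.2, so only call it on 1.2 or later. */
static bool can_use_bind_api(int major, int minor)
{
    return egl_version_at_least(major, minor, 1, 2);
}
```

On an implementation that reports a version below 1.2, skipping the call is harmless for OpenGL ES, since that is the only API such an implementation exposes.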

On exit of your application, or after you are done rendering, a call must be made to EGL again to clean up all allocated resources. After this call is made, further references to EGL resources with the current display will be invalid until eglInitialize is called on it again.

EGLBoolean eglTerminate(EGLDisplay dpy);

Also on exit and when finished rendering from a thread, call eglReleaseThread. This allows EGL to release any resources it has allocated in that thread. If a context is still bound, eglReleaseThread will release it as well. It is still valid to make EGL calls after calling eglReleaseThread, but that will cause EGL to reallocate any state it just released.

EGLBoolean eglReleaseThread(void);

Creating a Window

As on most platforms, creating a window to render in can be a complex task. Windows are created in the native operating system. Later we will look at how to tell EGL about native windows. Thankfully the process is similar enough to that for Windows and Linux.

Display Configs

An EGL config is analogous to a pixel format on Windows or visuals on Linux. Each config represents a group of attributes or properties for a set of render surfaces. In this case the render surface will be a window on a display. It is typical for an implementation to support multiple configs. Each config is identified by a unique number. Different constants are defined that correlate to attributes of a config. They are defined in Table 22.2.

Table 22.2. EGL Config Attribute List

It is necessary to choose a config before creating a render surface. But with all the possible combinations of attributes, the process may seem difficult. EGL has provided several tools to help you decide which config will best support your needs. If you have an idea of the kind of options you need for a window, you can use the eglChooseConfig interface to let EGL choose the best config for your requirements.

EGLBoolean eglChooseConfig(EGLDisplay dpy, const EGLint *attrib_list,
                           EGLConfig *configs, EGLint config_size,
                           EGLint *num_configs);

First decide how many matches you are willing to look through. Then allocate memory to hold the returned config handles. The matching config handles will be returned through the configs pointer. The number of configs will be returned through the num_configs pointer. Next comes the tricky part. You have to decide which parameters are important to you in a functional config. Then, you create a list of each attribute followed by the corresponding value. For simple applications, some important attributes might be the bit depths of the color and depth buffers, and the surface type. The list must be terminated with EGL_NONE. An example of an attribute list is shown here:

EGLint attributes[] = {EGL_BUFFER_SIZE, 24,
                       EGL_RED_SIZE, 6,
                       EGL_GREEN_SIZE, 6,
                       EGL_BLUE_SIZE, 6,
                       EGL_DEPTH_SIZE, 12,
                       EGL_SURFACE_TYPE, EGL_WINDOW_BIT,
                       EGL_NONE};

For attributes that are not specified in the array, the default values will be used. During the search for a matching config, some of the attributes you list are required to make an exact match whereas others are not. Table 22.3 lists the default values and the compare method for each attribute.
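To make the layout of an attribute list concrete, the sketch below walks an EGL_NONE-terminated list of name/value pairs and looks up one attribute, falling back to a caller-supplied default when the attribute is absent. The helper names attrib_pair_count and attrib_or_default are hypothetical. The token values are stand-ins copied from the Khronos headers so the fragment compiles on its own; in a real program you would include the EGL header and use EGLint instead of int.

```c
#include <stddef.h>

/* Stand-in token values; a real program gets these from <EGL/egl.h>. */
#ifndef EGL_NONE
#define EGL_BUFFER_SIZE  0x3020
#define EGL_ALPHA_SIZE   0x3021
#define EGL_BLUE_SIZE    0x3022
#define EGL_GREEN_SIZE   0x3023
#define EGL_RED_SIZE     0x3024
#define EGL_DEPTH_SIZE   0x3025
#define EGL_SURFACE_TYPE 0x3033
#define EGL_NONE         0x3038
#define EGL_WINDOW_BIT   0x0004
#endif

/* The attribute list from the text, as name/value pairs. */
static const int example_attribs[] = {
    EGL_BUFFER_SIZE, 24,
    EGL_RED_SIZE, 6,
    EGL_GREEN_SIZE, 6,
    EGL_BLUE_SIZE, 6,
    EGL_DEPTH_SIZE, 12,
    EGL_SURFACE_TYPE, EGL_WINDOW_BIT,
    EGL_NONE
};

/* Hypothetical helper: number of name/value pairs before EGL_NONE. */
static size_t attrib_pair_count(const int *list)
{
    size_t n = 0;
    if (list == NULL)
        return 0;
    while (list[2 * n] != EGL_NONE)
        n++;
    return n;
}

/* Hypothetical helper: value requested for 'name', or 'fallback' when the
 * list does not mention it. Only name slots (even indices) are compared
 * against EGL_NONE, so a value that happens to equal it is not a terminator. */
static int attrib_or_default(const int *list, int name, int fallback)
{
    size_t i;
    if (list == NULL)
        return fallback;
    for (i = 0; list[i] != EGL_NONE; i += 2) {
        if (list[i] == name)
            return list[i + 1];
    }
    return fallback;
}
```

For example, attrib_or_default(example_attribs, EGL_ALPHA_SIZE, 0) yields the fallback of 0 because the list does not request an alpha size.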

Table 22.3. EGL Config Attribute List

EGL uses a set of rules to sort the matching results before they are returned to you. Basically, the caveat field is matched first, followed by the color buffer channel depths, then the total buffer size, and next the sample buffer information. So the config that is the best match should be first. After you have received the matching configs, you can peruse the results to find the best option for you. The first one will often be sufficient.

To analyze the attributes for each config, you can use eglGetConfigAttrib. This will allow you to query the attributes for a config, one at a time:

EGLBoolean eglGetConfigAttrib(EGLDisplay dpy, EGLConfig config,
                              EGLint attribute, EGLint *value);

If you prefer a more “hands-on” approach to choosing a config, a more direct method for accessing supported configs is also provided. You can use eglGetConfigs to get all the configs supported by EGL:

EGLBoolean eglGetConfigs(EGLDisplay dpy, EGLConfig *configs,
                         EGLint config_size, EGLint *num_configs);

This function is very similar to eglChooseConfig except that it will return a list that is not dependent on some search criteria. The number of configs returned will be either the maximum available or the number passed in by config_size, whichever is smaller. Here also a buffer needs to be preallocated based on the expected number of formats. After you have the list, it is up to you to pick the best option, examining each with eglGetConfigAttrib. It is unlikely that multiple different platforms will have the same configs or list configs in the same order. So it is important to properly select a config instead of blindly using the config handle.
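One way to pick a config by hand is to copy the attributes you care about into a small record per config and then scan the records. The sketch below assumes you have already filled such records using eglGetConfigAttrib; the ConfigInfo structure, the sample data, and the pick_config function are hypothetical illustrations, not part of EGL, and the selection rule (meet the minimums, prefer configs without the slow caveat, otherwise keep the earliest) is only one reasonable policy.

```c
#include <stddef.h>

/* Hypothetical record holding attributes queried for one config. */
typedef struct {
    int red, green, blue; /* color channel bit depths */
    int depth;            /* depth buffer bit depth */
    int slow;             /* nonzero when the config caveat marks it as slow */
} ConfigInfo;

/* Made-up sample data standing in for the results of real queries. */
static const ConfigInfo sample_configs[] = {
    {5, 6, 5,  0, 0},
    {8, 8, 8, 16, 1},
    {8, 8, 8, 24, 0},
    {6, 6, 6, 16, 0}
};

/* Returns the index of the chosen config, or -1 when none qualifies. */
static int pick_config(const ConfigInfo *configs, size_t count,
                       int min_color_bits, int min_depth_bits)
{
    int best = -1;
    size_t i;

    for (i = 0; i < count; i++) {
        const ConfigInfo *c = &configs[i];

        /* Reject configs that miss any minimum requirement. */
        if (c->red < min_color_bits || c->green < min_color_bits ||
            c->blue < min_color_bits || c->depth < min_depth_bits)
            continue;

        /* Take the first match, then upgrade only from slow to non-slow. */
        if (best < 0 || (configs[best].slow && !c->slow))
            best = (int)i;
    }
    return best;
}
```

The returned index refers to your own array, so keep the EGLConfig handles in a parallel array and use the index to fetch the handle, rather than hard-coding a handle value that may differ on another platform.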

Creating Rendering Surfaces

Now that we know how to pick a config that will support our needs, it’s time to look at creating an actual render surface. The focus will be window surfaces, although it is also possible to create nondisplayable surfaces such as pBuffers and pixmaps. The first step will be to create a native window that has the same attributes as those in the config you chose. Then you can use the window handle to create a window surface. The window handle type will be related to the platform or OS you are using. In this way the same interface will support many different OSs without having to define a new method for each.

EGLSurface eglCreateWindowSurface(EGLDisplay dpy, EGLConfig config,
                                  NativeWindowType win, const EGLint *attrib_list);

The handle for the onscreen surface is returned if the call succeeds. The attrib_list parameter is intended to specify window attributes, but currently none is defined. After you are done rendering, you’ll have to destroy your surface using the eglDestroySurface function:

EGLBoolean eglDestroySurface(EGLDisplay dpy, EGLSurface surface);

After a window render surface has been created and the hardware resources have been configured, you are almost ready to go!

Context Management

The last step is to create a render context to use. The rendering context is a set of state used for rendering. At least one context must be supported.

EGLContext eglCreateContext(EGLDisplay dpy, EGLConfig config,
                            EGLContext share_context, const EGLint *attrib_list);

To create a context, call the eglCreateContext function with the display handle you have been using all along. Also pass in the config used to create the render surface. The config used to create the context must be compatible with the config used to create the window. The share_context parameter is used to share objects like textures and shaders between contexts. Pass in the context you would like to share with. Normally you will pass EGL_NO_CONTEXT here since sharing is not necessary. The context handle is passed back if the context was successfully created; otherwise, EGL_NO_CONTEXT is returned.

Now that you have a rendering surface and a context, you’re ready to go! The last thing to do is to tell EGL which context you’d like to use, since you can use multiple contexts for rendering. Use eglMakeCurrent to set a context as current. You can use the surface you just created as both the read and the draw surfaces.

EGLBoolean eglMakeCurrent(EGLDisplay dpy, EGLSurface draw,
                          EGLSurface read, EGLContext ctx);

You will get an error if the draw or read surfaces are invalid or if they are not compatible with the context. To release a bound context, you can call eglMakeCurrent with EGL_NO_CONTEXT as the context. You must use EGL_NO_SURFACE as the read and draw surfaces when releasing a context. To delete a context you are finished with, call eglDestroyContext:

EGLBoolean eglDestroyContext(EGLDisplay dpy, EGLContext ctx);

Presenting Buffers and Rendering Synchronization

For rendering, there are certain EGL functions you may need in order to help keep things running smoothly. The first is eglSwapBuffers. This interface allows you to present a color buffer to a window. Just pass in the window surface you would like to post to:

EGLBoolean eglSwapBuffers(EGLDisplay dpy, EGLSurface surface);

Just because eglSwapBuffers is called doesn’t mean it’s the best time to actually post the buffer to the monitor. It’s possible that the display is in the middle of displaying a frame when eglSwapBuffers is called. This case causes an artifact called tearing that looks like the frame is slightly skewed on a horizontal line. EGL provides a way to decide if it should wait until the current drawing is complete before posting the swapped buffer to the monitor:

EGLBoolean eglSwapInterval(EGLDisplay dpy, EGLint interval);

By setting the swap interval to 0, you are telling EGL to not synchronize swaps and that an eglSwapBuffers call should be posted immediately. The default value is 1, which means each swap will be synchronized with the next post to the monitor. The interval is clamped to the values of EGL_MIN_SWAP_INTERVAL and EGL_MAX_SWAP_INTERVAL.
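EGL performs this clamping itself, so the interval you request is not necessarily the one that takes effect. If your application needs to know the effective value (for frame pacing, say), it can apply the same rule. The sketch below assumes you have already read EGL_MIN_SWAP_INTERVAL and EGL_MAX_SWAP_INTERVAL for your config with eglGetConfigAttrib; the function name clamp_swap_interval is hypothetical.

```c
/* Hypothetical helper: predicts the swap interval EGL will actually use,
 * given the requested value and the config's minimum and maximum. */
static int clamp_swap_interval(int requested, int min_interval, int max_interval)
{
    if (requested < min_interval)
        return min_interval;
    if (requested > max_interval)
        return max_interval;
    return requested;
}
```

A config whose minimum and maximum are both 1 therefore always synchronizes swaps, no matter what interval is requested.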

If you plan to render to your window using other APIs besides OpenGL ES/EGL, there are some things you can do to ensure that rendering is posted in the right order:

EGLBoolean eglWaitGL(void);

EGLBoolean eglWaitNative(EGLint engine);

Use eglWaitGL to prevent other API rendering from operating on a window surface before OpenGL ES rendering completes. Use eglWaitNative to prevent OpenGL ES from executing before native API rendering completes. The engine parameter can be defined in EGL extensions specific to an implementation, but EGL_CORE_NATIVE_ENGINE can also be used and will refer to the most common native rendering engine besides OpenGL ES. This is implementation/system specific.

More EGL Stuff

We have covered the most important and commonly used EGL interfaces. There are a few more EGL functions left to talk about that are more peripheral to the common execution path.

EGL Errors

EGL provides a method for getting EGL-specific errors that may be thrown during EGL execution. Most functions return EGL_TRUE or EGL_FALSE to indicate whether they were successful, but in the event of a failure, a Boolean provides very little information on what went wrong. In this case, eglGetError may be called to get more information:

EGLint eglGetError(void);

The last thrown error is returned. This will be one of the following self-explanatory errors: EGL_SUCCESS, EGL_NOT_INITIALIZED, EGL_BAD_ACCESS, EGL_BAD_ALLOC, EGL_BAD_ATTRIBUTE, EGL_BAD_CONTEXT, EGL_BAD_CONFIG, EGL_BAD_CURRENT_SURFACE, EGL_BAD_DISPLAY, EGL_BAD_SURFACE, EGL_BAD_MATCH, EGL_BAD_PARAMETER, EGL_BAD_NATIVE_PIXMAP, EGL_BAD_NATIVE_WINDOW, or EGL_CONTEXT_LOST.
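When logging failures, it is convenient to turn the numeric code into its token name. The sketch below is one way to do that; the function name egl_error_name is hypothetical. The token values are stand-ins copied from the Khronos headers so the fragment compiles by itself; in a real program you would include the EGL header and pass the result of eglGetError directly.

```c
/* Stand-in token values; a real program gets these from <EGL/egl.h>. */
#ifndef EGL_SUCCESS
#define EGL_SUCCESS             0x3000
#define EGL_NOT_INITIALIZED     0x3001
#define EGL_BAD_ACCESS          0x3002
#define EGL_BAD_ALLOC           0x3003
#define EGL_BAD_ATTRIBUTE       0x3004
#define EGL_BAD_CONFIG          0x3005
#define EGL_BAD_CONTEXT         0x3006
#define EGL_BAD_CURRENT_SURFACE 0x3007
#define EGL_BAD_DISPLAY         0x3008
#define EGL_BAD_MATCH           0x3009
#define EGL_BAD_NATIVE_PIXMAP   0x300A
#define EGL_BAD_NATIVE_WINDOW   0x300B
#define EGL_BAD_PARAMETER       0x300C
#define EGL_BAD_SURFACE         0x300D
#define EGL_CONTEXT_LOST        0x300E
#endif

/* Expands to a case label that returns the token's own name as a string. */
#define EGL_ERROR_CASE(token) case token: return #token

/* Hypothetical helper: maps an EGL error code to its token name. */
static const char *egl_error_name(int code)
{
    switch (code) {
    EGL_ERROR_CASE(EGL_SUCCESS);
    EGL_ERROR_CASE(EGL_NOT_INITIALIZED);
    EGL_ERROR_CASE(EGL_BAD_ACCESS);
    EGL_ERROR_CASE(EGL_BAD_ALLOC);
    EGL_ERROR_CASE(EGL_BAD_ATTRIBUTE);
    EGL_ERROR_CASE(EGL_BAD_CONFIG);
    EGL_ERROR_CASE(EGL_BAD_CONTEXT);
    EGL_ERROR_CASE(EGL_BAD_CURRENT_SURFACE);
    EGL_ERROR_CASE(EGL_BAD_DISPLAY);
    EGL_ERROR_CASE(EGL_BAD_MATCH);
    EGL_ERROR_CASE(EGL_BAD_NATIVE_PIXMAP);
    EGL_ERROR_CASE(EGL_BAD_NATIVE_WINDOW);
    EGL_ERROR_CASE(EGL_BAD_PARAMETER);
    EGL_ERROR_CASE(EGL_BAD_SURFACE);
    EGL_ERROR_CASE(EGL_CONTEXT_LOST);
    default:
        return "unknown EGL error";
    }
}
```

A typical use is to call eglGetError immediately after a function returns EGL_FALSE and print egl_error_name of the result alongside the name of the call that failed.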

Getting EGL Strings

There are a few EGL state strings that may be of interest. These include the EGL version string and extension string. To get these, use the eglQueryString interface with the EGL_VERSION and EGL_EXTENSIONS enums:

const char *eglQueryString(EGLDisplay dpy, EGLint name);

Extending EGL

Like OpenGL, EGL provides support for various extensions. These are often extensions specific to the current platform and can provide for extended functionality beyond that of the core specification. To find out what extensions are available on your system, you can use the eglQueryString function previously discussed. To get more information on specific extensions, you can visit the Khronos Web site listed in the reference section. Some of these extensions may require additional entrypoints. To get the entrypoint address for these extensions, pass the name of the new entrypoint into the following function:

void (*eglGetProcAddress(const char *procname))();

Use of this entrypoint is very similar to wglGetProcAddress. A NULL return means the entrypoint does not exist. But just because a non-NULL address is returned does not mean the function is actually supported: the related extension must also appear in the EGL extension string or the OpenGL ES extension string. Always check both that the extension is listed and that the pointer returned from eglGetProcAddress is non-NULL before calling through it.
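Checking the extension string takes a little care, because one extension name can be a prefix of another, so a plain substring search can report a false match. The sketch below compares whole space-separated tokens. The function name has_extension is hypothetical; the string you pass in would come from eglQueryString with EGL_EXTENSIONS (or from the OpenGL ES extension string).

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns 1 when 'name' appears as a complete,
 * space-delimited token in 'ext_list', and 0 otherwise.
 * 'name' is assumed to contain no spaces. */
static int has_extension(const char *ext_list, const char *name)
{
    const char *p;
    size_t len;

    if (ext_list == NULL || name == NULL || *name == '\0')
        return 0;

    len = strlen(name);
    p = ext_list;
    while ((p = strstr(p, name)) != NULL) {
        /* A real match starts the string or follows a space... */
        int starts_token = (p == ext_list) || (p[-1] == ' ');
        /* ...and is followed by a space or the end of the string. */
        int ends_token = (p[len] == ' ') || (p[len] == '\0');

        if (starts_token && ends_token)
            return 1;
        p += len; /* skip this partial match and keep searching */
    }
    return 0;
}
```

For instance, a list containing only EGL_KHR_image_base does not satisfy a query for EGL_KHR_image, even though the shorter name occurs inside the longer one.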

Negotiating Embedded Environments

After examining all the different versions of OpenGL ES and EGL, it’s time to look closer at the environment of an embedded system and how it will affect an OpenGL ES application. The environment will play an important role in how you approach creating ES applications.

Popular Operating Systems

Because OpenGL ES is not limited to certain platforms as many 3D APIs are, a wide variety of OSs have been used. This decision is often already made for you, because most embedded systems are designed for use with certain OSs, and certain OSs are intended for use on specific hardware.

One of the most visible platforms is Microsoft Windows CE/Windows Pocket PC/Windows Mobile. The Microsoft OSs are currently most prevalent on PDA-type systems. Slimmed-down versions of Linux are also very popular for their flexibility and extensibility. Brew and Symbian are common in the cellphone arena. Each of these options often has its own SDK for developing, compiling, loading, and debugging applications. For our example, we will target PC-based systems running Windows, although this code can be compiled for any target.

Embedded Hardware

The number of hardware implementations supporting OpenGL ES is rapidly growing. Many hardware vendors create their own proprietary implementations for inclusion in their products. Some of these are Ericsson, Nokia, and Motorola.

Other companies provide standalone support for integration into embedded solutions, like the AMD Imageon processors. And some provide licensing for IP-enabling OpenGL ES support, such as PowerVR (www.imgtec.com/PowerVR/Products/index.asp). There are many ways OpenGL ES hardware support can find its way into an embedded system near you!

Vendor-Specific Extensions