Chapter 19. Wiggle: OpenGL on Windows

WHAT YOU’LL LEARN IN THIS CHAPTER:

OpenGL is purely a low-level graphics API, with user interaction and the screen or window handled by the host environment. To facilitate this partnership, each environment usually has some extensions that “glue” OpenGL to its own window management and user interface functions. This glue is code that associates OpenGL drawing commands with a particular window. It is also necessary to provide functions for setting buffer modes, color depths, and other drawing characteristics.

For Microsoft Windows, this glue code is embodied in a set of functions added to the Windows API. They are called the wiggle functions because they are prefixed with wgl rather than gl. These gluing functions are explained in this chapter, where we dispense with using the GLUT library for our OpenGL framework and build full-fledged Windows applications that can take advantage of all the operating system’s features. You will see what characteristics a Windows window must have to support OpenGL graphics. You will learn which messages a well-behaved OpenGL window should handle and how. The concepts of this chapter are introduced gradually, as we build a model OpenGL program that provides a framework for Windows-specific OpenGL support.

So far in this book, you’ve needed no prior knowledge of 3D graphics and only a rudimentary knowledge of C programming. For this chapter, however, we assume you have at least an entry-level knowledge of Windows programming. Otherwise, we would have wound up writing a book twice the size of this one, and we would have spent more time on the details of Windows programming and less on OpenGL programming.

OpenGL Implementations on Windows

OpenGL became available for the Win32 platform with the release of Windows NT version 3.5. It was later released as an add-on for Windows 95 and then became a shipping part of the Windows 95 operating system (OSR2). OpenGL is now a native API on any Win32 platform (Windows 95/98/ME, Windows NT/2000/XP, and Vista), with its functions exported from opengl32.dll. You need to be aware of four flavors of OpenGL on Windows: generic, ICD, MCD, and extended. Each has its pros and cons from both the user and the developer point of view. You should at least have a high-level understanding of how these implementations work and what their drawbacks might be.

Generic OpenGL

A generic implementation of OpenGL is simply a software implementation that does not use specific 3D hardware. The Microsoft implementation bundled with all versions of Windows is a generic implementation. The Silicon Graphics Incorporated (SGI) OpenGL for Windows implementation (no longer widely available) optionally made use of MMX instructions, but because it was not considered dedicated 3D hardware, it was still considered a generic software implementation. Another implementation called MESA (www.mesa3d.org) is not strictly a “real” OpenGL implementation—it’s a “work-a-like”—but for most purposes, you can consider it to be so. MESA can also be hooked to hardware, but this should be considered a special case of the mini-driver (discussed shortly).

Although the MESA implementation has kept up with OpenGL’s advancing feature set over the years, the Microsoft generic implementation has not been updated since OpenGL version 1.1. Not to worry, we will soon show you how to get to all the OpenGL functionality your graphics card supports.

Installable Client Driver

The Installable Client Driver (ICD) was the original hardware driver interface provided for Windows NT. The ICD must implement the entire OpenGL pipeline using a combination of software and the specific hardware for which it was written. Creating an ICD from scratch is a considerable amount of work for a vendor to undertake.

The ICD drops in and works with Microsoft’s OpenGL implementation. Applications linked to opengl32.dll are automatically dispatched to the ICD driver code for OpenGL calls. This mechanism is ideal because applications do not have to be recompiled to take advantage of OpenGL hardware should it become available. The ICD is actually a part of the display driver and does not affect the existing opengl32.dll system DLL. This driver model provides the vendor with the most opportunities to optimize its driver and hardware combination.

Mini-Client Driver

The Mini-Client Driver (MCD) was a compromise between a software and a hardware implementation. Most early PC 3D hardware provided hardware-accelerated rasterization only. (See “The Pipeline” section in Chapter 2, “Using OpenGL.”) The MCD driver model allowed applications to use Microsoft’s generic implementation for features that were not available in hardware. For example, transform and lighting could come from Microsoft’s OpenGL software, but the actual rasterizing of lit shaded triangles would be handled by the hardware.

The MCD driver implementation made it easy for hardware vendors to create OpenGL drivers for their hardware. Most of the work was done by Microsoft, and whatever features the vendors did not implement in hardware were handed back to the Microsoft generic implementation.

The MCD driver model showed great promise for bringing OpenGL to the PC mass market. The model was initially available for Windows NT; a software development kit (SDK) was also provided to hardware vendors to create MCD drivers for Windows 98, and Microsoft encouraged hardware vendors to use it for their OpenGL support. After many hardware vendors had completed their MCD drivers, Microsoft decided not to license the code for public release. This gave Microsoft’s own proprietary 3D API a temporary advantage in the consumer marketplace.

The MCD driver model today is largely obsolete, but a few implementations are still in use in legacy NT-based systems. One reason for its demise is that the MCD driver model cannot support Intel’s Accelerated Graphics Port (AGP) texturing efficiently. Another is that SGI began providing an optimized ICD driver kit to vendors that made writing ICDs almost as easy as writing MCDs. (This move was a response to Microsoft’s withdrawal of support for OpenGL MCDs on Windows 98.)

Mini-Driver

A mini-driver is not a real display driver. Instead, it is a drop-in replacement for opengl32.dll that makes calls to a hardware vendor’s proprietary 3D hardware driver. Typically, these mini-drivers convert OpenGL calls to roughly equivalent calls in a vendor’s proprietary 3D API. The first mini-driver was written by 3dfx for its Voodoo graphics card. This DLL drop-in converted OpenGL calls into the Voodoo’s native Glide (the 3dfx 3D API) programming interface.

Although mini-drivers popularized OpenGL for games, they often had missing OpenGL functions or features. Not every application that used OpenGL worked with a mini-driver. Typically, these drivers provided only the barest functionality needed to run a popular game. Though not widely documented, Microsoft even made an OpenGL-to-D3D translation layer that was used on Windows XP to accelerate some games when an ICD was not present. Fortunately, the widespread popularity of OpenGL has made the mini-driver obsolete on newer commodity PCs.

OpenGL on Vista

A variation of this mini-driver still exists on Windows Vista, but is not exposed to developers. Microsoft has implemented an OpenGL-to-D3D emulator that supports OpenGL version 1.4. This implementation looks like an ICD, but shows up only if a real ICD is not installed. As of the initial release of Vista, there is no way to turn on this implementation manually. Only a few games (selected by Microsoft) are “tricked” into seeing this implementation. Vista, like XP, does not ship with ICD drivers on the distribution media. After a user downloads a new display driver from their vendor’s Web site, however, they will get a true ICD-based driver, and full OpenGL support in both windowed and full-screen games.

Extended OpenGL

If you are developing software for any version of Microsoft Windows, you are most likely making use of header files and an import library that work with Microsoft’s opengl32.dll. This DLL is designed to provide a generic (software-rendered) fallback if 3D hardware is not installed, and has a dispatch mechanism that works with the official ICD OpenGL driver model for hardware-based OpenGL implementations. Using these headers and this import library alone gives you access only to functions and capabilities present in OpenGL 1.1.

As of this edition, most desktop drivers support OpenGL version 2.1. Take note, however, that OpenGL 1.1 is still a very capable and full-featured graphics API and is suitable for a wide range of graphical applications, including games and business graphics. Even without the additional features of OpenGL 1.2 and beyond, graphics hardware performance has increased exponentially, and most PC graphics cards have the entire OpenGL pipeline implemented in special-purpose hardware. OpenGL 1.1 can still produce screaming-fast and highly complex 3D renderings!

Many applications still will require, or at least be significantly enhanced by, use of the newer OpenGL innovations. To get to the newer OpenGL features (which are widely supported), you need to use the same OpenGL extension mechanism that you use to get to vendor-specific OpenGL enhancements. OpenGL extensions were introduced in Chapter 2, and the specifics of using this extension mechanism on Windows are covered later in this chapter in the section “OpenGL and WGL Extensions.”

This may sound like a bewildering environment in which to develop 3D graphics—especially if you plan to port your applications to, say, the Macintosh platform, where OpenGL features are updated more consistently with each OS release. Some strategies, however, can make such development more manageable. First, you can call the following function so that your application can tell at runtime which OpenGL version the hardware driver supports:

glGetString(GL_VERSION);

This way, you can gracefully decide whether the application is going to be able to run at all on the user’s system. Because OpenGL and its extensions are dynamically loaded, there is no reason your programs should not at least start and present the user with a friendly and informative error or diagnostic message.
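As a rough illustration of such a startup check, the sketch below pulls the major and minor numbers out of the text that glGetString(GL_VERSION) returns. It assumes C++14 or later and relies on the version string beginning with major.minor, optionally followed by a release number and vendor-specific text. The names GLVersion, ParseGLVersion, and AtLeast are illustrative only and are not part of OpenGL.

```cpp
// Major and minor numbers taken from the front of a GL_VERSION string.
struct GLVersion {
    int majorVer;
    int minorVer;
};

// Parses text such as "2.1.2 NVIDIA 169.21" into {2, 1}.
// Returns {0, 0} if the text is null or does not start with "digits.digits".
constexpr GLVersion ParseGLVersion(const char* text)
{
    GLVersion v = {0, 0};
    if (text == nullptr)
        return v;

    const char* p = text;
    if (*p < '0' || *p > '9')
        return v;
    int majorVer = 0;
    while (*p >= '0' && *p <= '9')
        majorVer = majorVer * 10 + (*p++ - '0');

    if (*p != '.')
        return v;
    ++p;

    if (*p < '0' || *p > '9')
        return v;
    int minorVer = 0;
    while (*p >= '0' && *p <= '9')
        minorVer = minorVer * 10 + (*p++ - '0');

    v.majorVer = majorVer;
    v.minorVer = minorVer;
    return v;
}

// True if v is at least the requested major.minor version.
constexpr bool AtLeast(GLVersion v, int majorVer, int minorVer)
{
    return v.majorVer > majorVer ||
           (v.majorVer == majorVer && v.minorVer >= minorVer);
}
```

With a rendering context current (glGetString returns NULL otherwise), the input would be reinterpret_cast<const char*>(glGetString(GL_VERSION)), and a test such as AtLeast(version, 1, 2) could decide whether an optional rendering path is enabled or a diagnostic message is shown instead.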

You also need to think carefully about what OpenGL features your application must have. Can the application be written to use only OpenGL 1.1 features? Will the application be usable at all if no hardware is present and the user must use the built-in software renderer? If the answer to either of these questions is yes, you should first write your application’s rendering code using only the import library for OpenGL 1.1. This gives you the widest possible audience for your application.

When you have the basic rendering code in place, you can go back and consider performance optimizations or special visual effects available with newer OpenGL features that you want to make available in your program. By checking the OpenGL version early in your program, you can introduce different rendering paths or functions that will optionally perform better or provide additional visual effects to your rendering. For example, static texture maps could be replaced with fragment programs, or standard fog replaced with volumetric fog made possible through vertex programs. Using the latest and greatest features allows you to really show off your program, but if you rely on them exclusively, you may be severely limiting your audience...and sales.

Bear in mind that the preceding advice should be weighed heavily against the type of application you are developing. If you are making an immersive and fast-paced 3D game, worrying about users with OpenGL 1.1 does not make much sense. On the other hand, a program that, say, generates interactive 3D weather maps can certainly afford to be more conservative.

Many, if not most, modern applications really must have some newer OpenGL feature; for example, a medical visualization package may require that 3D texturing or the imaging subset be available. In these types of more specialized or vertical markets, your application will simply have to require some minimal OpenGL support to run. The OpenGL version required in these cases will be listed among any other minimum system requirements that you specify are needed for your software. Again, your application can check for these details at startup.

Basic Windows Rendering

The GLUT library provided only one window, and OpenGL function calls always produced output in that window. (Where else would they go?) Your own real-world Windows applications, however, will often have more than one window. In fact, dialog boxes, controls, and even menus are actually windows at a fundamental level; having a useful program that contains only one window is nearly impossible (well, okay, maybe games are an important exception!). How does OpenGL know where to draw when you execute your rendering code? Before we answer this question, let’s first review how we normally draw in a window without using OpenGL.

GDI Device Contexts

There are many technology options for drawing into a Windows window. The oldest and most widely supported is the Windows GDI (graphics device interface). GDI is strictly a 2D drawing interface, and was widely hardware accelerated before Windows Vista. Although GDI is still available on Vista, it is no longer hardware accelerated; the preferred high-level drawing technology is based on the .NET framework and is called the Windows Presentation Foundation (WPF). WPF is also available via a download for Windows XP. Over the years some minor 2D API variations have come and gone, as well as several incarnations of Direct3D. On Vista, the new low-level rendering interface is called Windows Graphics Foundation (WGF) and is essentially just Direct3D version 10.

The one native rendering API common to all versions of Windows (even Windows Mobile) is GDI. This is fortunate because GDI is how we initialize OpenGL and interact with OpenGL on all versions of Windows (except Windows Mobile, where OpenGL is not natively supported by Microsoft). On Vista, GDI is no longer hardware accelerated, but this is irrelevant because we will never (at least when using OpenGL) use GDI for any drawing operations anyway.

When you’re using GDI, each window has a device context that actually receives the graphics output, and each GDI function takes a device context as an argument to indicate which window you want the function to affect. You can have multiple device contexts, but only one for each window.

Before you jump to the conclusion that OpenGL should work in a similar way, remember that the GDI is Windows specific. Other environments do not have device contexts, window handles, and the like. Although the ideas may be similar, they are certainly not called the same thing and might work and behave differently. OpenGL, on the other hand, was designed to be completely portable among environments and hardware platforms (and it didn’t start on Windows anyway!). Adding a device context parameter to the OpenGL functions would render your OpenGL code useless in any environment other than Windows.

OpenGL does have a context identifier, however, and it is called the rendering context. The rendering context is similar in many respects to the GDI device context because it is the rendering context that remembers current colors, state settings, and so on, much like the device context holds onto the current brush or pen color for Windows.

Pixel Formats

The Windows concept of the device context is limited for 3D graphics because it was designed for 2D graphics applications. In Windows, you request a device context identifier for a given window. The nature of the device context depends on the nature of the device. If your desktop is set to 16-bit color, the device context Windows gives you knows about and understands 16-bit color only. You cannot tell Windows, for example, that one window is to be a 16-bit color window and another is to be a 32-bit color window.

Although Windows lets you create a memory device context, you still have to give it an existing window device context to emulate. Even if you pass in NULL for the window parameter, Windows uses the device context of your desktop. You, the programmer, have no control over the intrinsic characteristics of a window’s device context.

Any window or device that will be rendering 3D graphics has far more characteristics to it than simply color depth, especially if you are using a hardware rendering device (3D graphics card). Up until now, GLUT has taken care of these details for you. When you initialized GLUT, you told it what buffers you needed (double or single color buffer, depth buffer, stencil, and alpha).

Before OpenGL can render into a window, you must first configure that window according to your rendering needs. Do you want hardware or software rendering? Will the rendering be single or double buffered? Do you need a depth buffer? How about stencil, destination alpha, or an accumulation buffer? After you set these parameters for a window, you cannot change them later. To switch from a window with only a depth and color buffer to a window with only a stencil and color buffer, you have to destroy the first window and re-create a new window with the characteristics you need.

Describing a Pixel Format

The 3D characteristics of the window are set one time, usually just after window creation. The collective name for these settings is the pixel format. Windows provides a structure named PIXELFORMATDESCRIPTOR that describes the pixel format. This structure is defined as follows:

    typedef struct tagPIXELFORMATDESCRIPTOR {
        WORD  nSize;           // Size of this structure
        WORD  nVersion;        // Version of structure (should be 1)
        DWORD dwFlags;         // Pixel buffer properties
        BYTE  iPixelType;      // Type of pixel data (RGBA or Color Index)
        BYTE  cColorBits;      // Number of color bit planes in color buffer
        BYTE  cRedBits;        // How many bits for red
        BYTE  cRedShift;       // Shift count for red bits
        BYTE  cGreenBits;      // How many bits for green
        BYTE  cGreenShift;     // Shift count for green bits
        BYTE  cBlueBits;       // How many bits for blue
        BYTE  cBlueShift;      // Shift count for blue bits
        BYTE  cAlphaBits;      // How many bits for destination alpha
        BYTE  cAlphaShift;     // Shift count for destination alpha
        BYTE  cAccumBits;      // How many bits for accumulation buffer
        BYTE  cAccumRedBits;   // How many red bits for accumulation buffer
        BYTE  cAccumGreenBits; // How many green bits for accumulation buffer
        BYTE  cAccumBlueBits;  // How many blue bits for accumulation buffer
        BYTE  cAccumAlphaBits; // How many alpha bits for accumulation buffer
        BYTE  cDepthBits;      // How many bits for depth buffer
        BYTE  cStencilBits;    // How many bits for stencil buffer
        BYTE  cAuxBuffers;     // How many auxiliary buffers
        BYTE  iLayerType;      // Obsolete - ignored
        BYTE  bReserved;       // Number of overlay and underlay planes
        DWORD dwLayerMask;     // Obsolete - ignored
        DWORD dwVisibleMask;   // Transparent color of underlay plane
        DWORD dwDamageMask;    // Obsolete - ignored
    } PIXELFORMATDESCRIPTOR;

For a given OpenGL device (hardware or software), the values of these members are not arbitrary. Only a limited number of pixel formats is available for a given window. Pixel formats are said to be exported by the OpenGL driver or software renderer. Most of these structure members are self-explanatory, but a few require some additional explanation, as listed in Table 19.1.

Table 19.1. PIXELFORMATDESCRIPTOR Fields

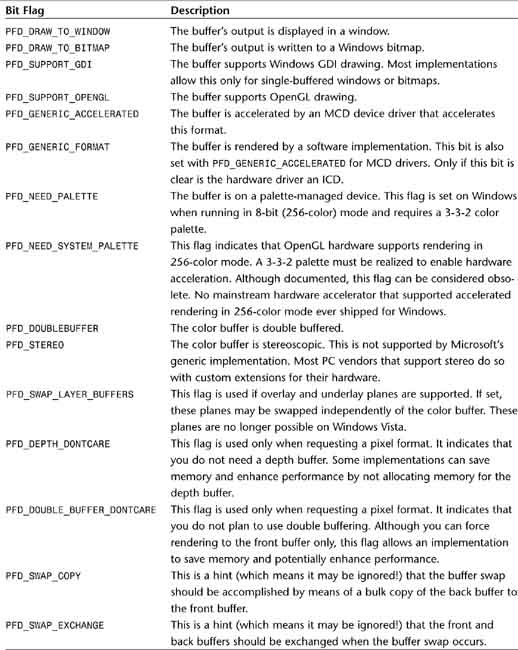

Table 19.2. Valid Flags to Describe the Pixel Rendering Buffer

Enumerating Pixel Formats

The pixel format for a window is identified by a one-based integer index number. An implementation exports a number of pixel formats from which to choose. To set a pixel format for a window, you must select one of the available formats exported by the driver. You can use the DescribePixelFormat function to determine the characteristics of a given pixel format. You can also use this function to find out how many pixel formats are exported by the driver. The following code shows how to enumerate all the pixel formats available for a window:

    PIXELFORMATDESCRIPTOR pfd;   // Pixel format descriptor
    int nFormatCount;            // How many pixel formats exported
    . . .

    // Get the number of pixel formats
    // Will need a device context
    pfd.nSize = sizeof(PIXELFORMATDESCRIPTOR);
    nFormatCount = DescribePixelFormat(hDC, 1, 0, NULL);

    // Retrieve each pixel format
    for(int i = 1; i <= nFormatCount; i++)
    {
        // Get description of pixel format
        DescribePixelFormat(hDC, i, pfd.nSize, &pfd);
        . . .
        . . .
    }

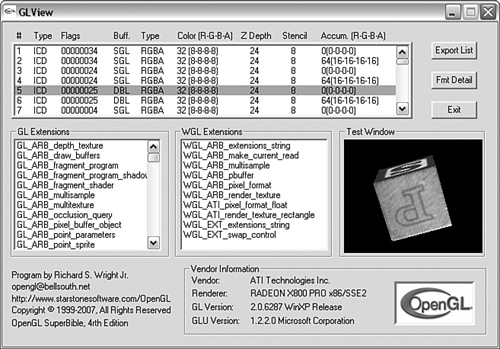

The DescribePixelFormat function returns the maximum pixel format index. You can use an initial call to this function as shown to determine how many are available. An interesting utility program called GLView is included in the source distribution for this chapter. This program enumerates all pixel formats available for your display driver for the given resolution and color depths. Figure 19.1 shows the output from this program when a double-buffered pixel format is selected. (A single-buffered pixel format would contain a flickering block animation.)

Figure 19.1. The GLView program shows all pixel formats for a given device.

The Microsoft Foundation Classes (MFC) source code is included for this program. This is a bit more complex than your typical sample program, and GLView is provided more as a tool for your use than as a programming example. The important code for enumerating pixel formats was presented earlier and is less than a dozen lines long. If you are familiar with MFC already, examination of this source code will show you how to integrate OpenGL rendering into any CWnd derived window class.

The list box lists all the available pixel formats and displays their characteristics (driver type, color depth, and so on). A sample window in the lower-right corner displays a rotating cube using a window created with the highlighted pixel format. The glGetString function enables you to find out the name of the vendor for the OpenGL driver, as well as other version information. Finally, a list box displays all the OpenGL and WGL extensions exported by the driver (WGL extensions are covered later in this chapter).

If you experiment with this program, you’ll discover that not all pixel formats can be used to create an OpenGL window, as shown in Figure 19.2. Even though the driver exports these pixel formats, it does not mean that you can create an OpenGL-enabled window with one of them. The most important criterion is that the pixel format color depth must match the color depth of your desktop. That is, you can’t create a 16-bit color pixel format for a 32-bit color desktop, or vice versa.

Figure 19.2. The GLView program showing an invalid pixel format.

Make special note of the fact that at least 24 pixel formats are always enumerated, sometimes more. If you are running without an OpenGL hardware driver, you will see exactly 24 pixel formats listed (all belonging to the Microsoft Generic Implementation). If you have a hardware accelerator (either an MCD or an ICD), you’ll note that the accelerated pixel formats are listed first, followed by the 24 generic pixel formats belonging to Microsoft. This means that when hardware acceleration is present, you actually can choose from two implementations of OpenGL. The first is the hardware accelerator’s implementation, with its hardware-accelerated pixel formats. The second is Microsoft’s software implementation, with its generic pixel formats.

Knowing this bit of information can be useful. For one thing, it means that a software implementation is always available for rendering to bitmaps or printer devices. It also means that if you so desire (for debugging purposes, perhaps), you can force software rendering, even when an application might typically select hardware acceleration.
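One way to tell the two implementations apart while enumerating is to look at two bits in the dwFlags member. The sketch below follows the commonly used convention that PFD_GENERIC_FORMAT on its own marks Microsoft’s software renderer, both bits together mark an MCD, and neither bit marks an ICD. FormatSource and ClassifyFormat are hypothetical names, C++14 or later is assumed, and the fallback definitions simply repeat the wingdi.h values so the code also compiles away from Windows.

```cpp
#include <cstdint>

#ifdef _WIN32
#include <windows.h>   // defines PFD_GENERIC_FORMAT and PFD_GENERIC_ACCELERATED
#else
// Same values as in wingdi.h, repeated only so the sketch compiles elsewhere.
#define PFD_GENERIC_FORMAT      0x00000040
#define PFD_GENERIC_ACCELERATED 0x00001000
#endif

// Which kind of implementation exported a pixel format.
enum class FormatSource { Generic, MCD, ICD };

// Classifies a pixel format from the dwFlags member of its descriptor.
constexpr FormatSource ClassifyFormat(std::uint32_t dwFlags)
{
    const bool generic     = (dwFlags & PFD_GENERIC_FORMAT) != 0;
    const bool accelerated = (dwFlags & PFD_GENERIC_ACCELERATED) != 0;

    if (generic && !accelerated)
        return FormatSource::Generic;   // Microsoft software implementation
    if (generic && accelerated)
        return FormatSource::MCD;       // generic front end, accelerated rasterization
    return FormatSource::ICD;           // full hardware driver
}
```

Inside the enumeration loop, calling ClassifyFormat(pfd.dwFlags) after each DescribePixelFormat call and keeping only the indexes that come back as FormatSource::Generic would be one way to force software rendering for debugging.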

One final thing you may notice is that many pixel formats look the same. In these cases, the pixel formats are supporting multisampled buffers. This feature came along after the PIXELFORMATDESCRIPTOR was cast in stone, and we’ll have more to say about this later in the chapter.

Selecting and Setting a Pixel Format

Enumerating all the available pixel formats and examining each one to find one that meets your needs could turn out to be quite tedious. Windows provides a shortcut function that makes this process somewhat simpler. The ChoosePixelFormat function allows you to create a pixel format structure containing the desired attributes of your 3D window. The ChoosePixelFormat function then finds the closest match possible (with preference for hardware-accelerated pixel formats) and returns the most appropriate index. The pixel format is then set with a call to another new Windows function, SetPixelFormat. The following code segment shows the use of these two functions:
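A minimal sketch of such a segment follows, assuming hDC is the device context of a window that has not yet had a pixel format set. The helper name ChooseAndSetFormat is illustrative, and the preprocessor guard only keeps the code inert on platforms other than Windows.

```cpp
#ifdef _WIN32               // Win32-only sketch; link with gdi32
#include <windows.h>

// Requests a double-buffered RGBA window format with 32-bit color and a
// 16-bit depth buffer, then applies the closest match to hDC.
// Returns the chosen one-based index, or 0 on failure.
int ChooseAndSetFormat(HDC hDC)
{
    PIXELFORMATDESCRIPTOR pfd = {};           // unused members stay zero
    pfd.nSize      = sizeof(PIXELFORMATDESCRIPTOR);
    pfd.nVersion   = 1;
    pfd.dwFlags    = PFD_DRAW_TO_WINDOW |     // render into a window, not a bitmap
                     PFD_SUPPORT_OPENGL |     // window must accept OpenGL calls
                     PFD_DOUBLEBUFFER;        // double-buffered
    pfd.iPixelType = PFD_TYPE_RGBA;           // RGBA rather than color index
    pfd.cColorBits = 32;                      // requested color depth
    pfd.cDepthBits = 16;                      // requested depth buffer size

    // Ask Windows for the closest available match
    const int nPixelFormat = ChoosePixelFormat(hDC, &pfd);
    if (nPixelFormat == 0)
        return 0;                             // no suitable format found

    // Apply it; this can be done only once per window
    if (!SetPixelFormat(hDC, nPixelFormat, &pfd))
        return 0;                             // format could not be applied

    return nPixelFormat;
}
#endif
```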

Initially, the PIXELFORMATDESCRIPTOR structure is filled with the desired characteristics of the 3D-enabled window. In this case, you want a double-buffered pixel format that renders into a window, so you request 32-bit color and a 16-bit depth buffer. If the current implementation supports 24-bit color at best, the returned pixel format will be a valid 24-bit color format. Depth buffer resolution is also subject to change. An implementation might support only a 24-bit or 32-bit depth buffer. In any case, ChoosePixelFormat always tries to return a valid pixel format, and if at all possible, it returns one that is hardware-accelerated.

Some programmers and programming needs might require more sophisticated selection of a pixel format. In these cases, you need to enumerate and inspect all available pixel formats or use the WGL extension presented later in this chapter. For most uses, however, the preceding code is sufficient to prime your window to receive OpenGL rendering commands.
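For the enumerate-and-inspect route, the selection logic itself can be kept separate from the Windows calls. The following sketch, which assumes C++14 or later, works on a simplified record rather than on PIXELFORMATDESCRIPTOR directly. FormatInfo and PickFormat are hypothetical names, and the rule of rejecting any format whose color depth differs from the desktop’s assumes the driver reports color depth in the same way as the desktop.

```cpp
#include <cstddef>

// The handful of properties this sketch cares about. On Windows they would be
// copied out of the PIXELFORMATDESCRIPTOR filled in by DescribePixelFormat.
struct FormatInfo {
    int  index;           // one-based pixel format index
    bool doubleBuffered;
    bool accelerated;
    int  colorBits;
    int  depthBits;
    int  stencilBits;
};

// Returns the index of the first accelerated format that meets every
// requirement, or failing that the first software format that does.
// Returns 0 if no format qualifies.
constexpr int PickFormat(const FormatInfo* formats, std::size_t count,
                         int desktopColorBits, int minDepthBits,
                         int minStencilBits)
{
    int fallback = 0;
    for (std::size_t i = 0; i < count; ++i) {
        const FormatInfo& f = formats[i];
        if (!f.doubleBuffered)                continue;
        if (f.colorBits != desktopColorBits)  continue;  // must match the desktop
        if (f.depthBits < minDepthBits)       continue;
        if (f.stencilBits < minStencilBits)   continue;

        if (f.accelerated)
            return f.index;                   // best case: hardware format
        if (fallback == 0)
            fallback = f.index;               // remember first software match
    }
    return fallback;
}
```

Unlike ChoosePixelFormat, this approach treats each requirement as a hard limit, so a return value of 0 tells the application plainly that nothing suitable exists and an error message is in order.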

The OpenGL Rendering Context

A typical Windows application can consist of many windows. You can even set a pixel format for each one (using that window’s device context) if you want! But SetPixelFormat can be called only once per window. When you call an OpenGL command, how does it know which window to send its output to? In the previous chapters, we used the GLUT framework, which provided a single window to display OpenGL output. Recall that with normal Windows GDI-based drawing, each window has its own device context.

To accomplish the portability of the core OpenGL functions, each environment must implement some means of specifying a current rendering window before executing any OpenGL commands. Just as the Windows GDI functions use a window’s device context, the OpenGL environment is embodied in what is known as the rendering context. Just as a device context remembers settings about drawing modes and commands for the GDI, the rendering context remembers OpenGL settings and commands.

You create an OpenGL rendering context by calling the wglCreateContext function. This function takes one parameter: the device context of a window with a valid pixel format. The data type of an OpenGL rendering context is HGLRC. The following code shows the creation of an OpenGL rendering context:

    HGLRC hRC;   // OpenGL rendering context
    HDC hDC;     // Windows device context
    . . .
    // Select and set a pixel format
    . . .
    hRC = wglCreateContext(hDC);

The new rendering context is compatible with the window whose device context was passed to wglCreateContext. You can have more than one rendering context in your application (for instance, for two windows that are using different drawing modes, perspectives, and so on). However, for OpenGL commands to know which window they are operating on, only one rendering context can be active at any one time per thread. When a rendering context is made active, it is said to be current.

When made current, a rendering context is also associated with a device context and thus with a particular window. Now OpenGL knows which window to render into. You can even move an OpenGL rendering context from window to window, but each window must have the same pixel format. To make a rendering context current and associate it with a particular window, you call the wglMakeCurrent function. This function takes two parameters, the device context of the window and the OpenGL rendering context:

BOOL wglMakeCurrent(HDC hDC, HGLRC hRC);
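Creation and activation are usually paired, and both calls can fail, so it can help to wrap them together. The sketch below assumes a pixel format has already been set on hDC. CreateAndActivateContext and DestroyContext are hypothetical helper names, wglDeleteContext is the WGL call that frees a context, and the preprocessor guard only keeps the code inert away from Windows.

```cpp
#ifdef _WIN32               // Win32-only sketch; link with opengl32 and gdi32
#include <windows.h>

// Creates a rendering context for hDC and makes it current on the calling
// thread. Returns NULL if either step fails.
HGLRC CreateAndActivateContext(HDC hDC)
{
    HGLRC hRC = wglCreateContext(hDC);
    if (hRC == NULL)
        return NULL;                    // typically no pixel format was set

    if (!wglMakeCurrent(hDC, hRC)) {
        wglDeleteContext(hRC);          // do not leak the unused context
        return NULL;
    }
    return hRC;
}

// Detaches whatever context is current on this thread, then deletes hRC.
void DestroyContext(HGLRC hRC)
{
    wglMakeCurrent(NULL, NULL);
    wglDeleteContext(hRC);
}
#endif
```

Because only one rendering context can be current per thread, an application with several OpenGL windows would call wglMakeCurrent again with the appropriate pair of handles before drawing into each one.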

Putting It All Together

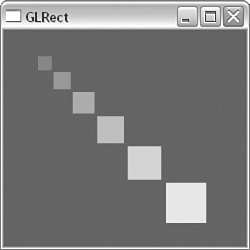

We’ve covered a lot of ground over the past several pages. We’ve described each piece of the puzzle individually, but now let’s look at all the pieces put together. In addition to seeing all the OpenGL-related code, we should examine some of the minimum requirements for any Windows program to support OpenGL. Our sample program for this section is GLRECT. It should look somewhat familiar because it is also the first GLUT-based sample program from Chapter 2. Now, however, the program is a full-fledged Windows program written with nothing but C++ and the Win32 API. Figure 19.3 shows the output of the new program, complete with a bouncing square.

Figure 19.3. Output from the GLRECT program with a bouncing square.

Creating the Window

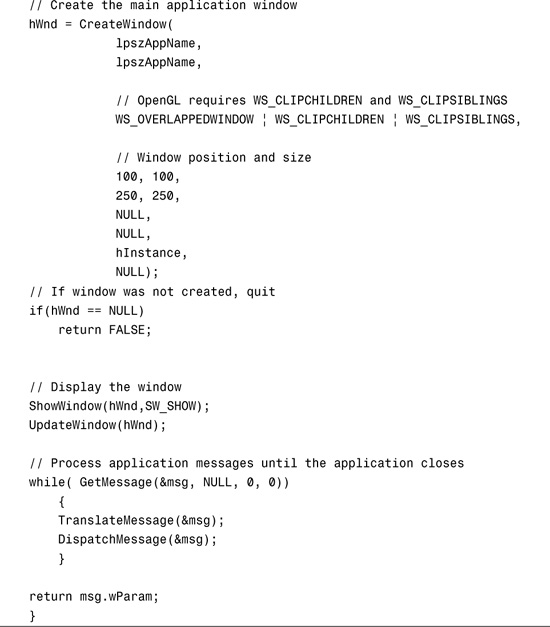

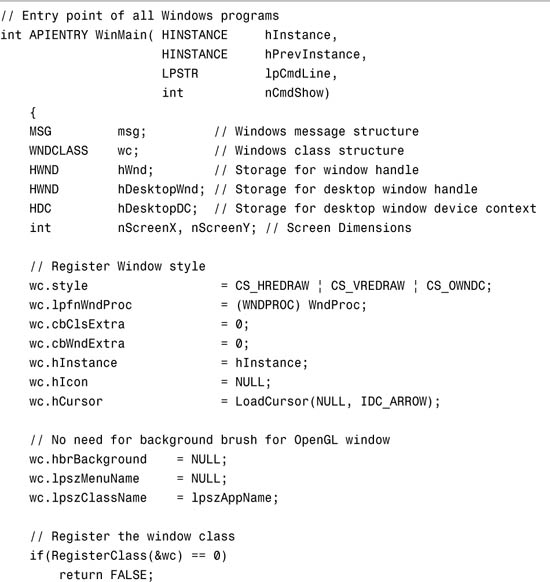

The starting place for any low-level Windows-based GUI program is the WinMain function. In this function, you register the window type, create the window, and start the message pump. Listing 19.1 shows the WinMain function for the first sample.

Listing 19.1. The WinMain Function of the GLRECT Sample Program

This listing pretty much contains your standard Windows GUI startup code. Only two points really bear mentioning here. The first is the choice of window styles set in CreateWindow. You can generally use whatever window styles you like, but you should set the WS_CLIPCHILDREN and WS_CLIPSIBLINGS styles. These styles were required in earlier versions of Windows, but later versions have dropped them as a strict requirement. The purpose of these styles is to keep the OpenGL rendering context from rendering into other windows, which can happen in GDI. An OpenGL rendering context, by contrast, must be associated with only one window at a time.

The second note you should make about this startup code is the use of CS_OWNDC for the window class style. Why you need this innocent-looking flag requires a bit more explanation. You need a device context for both GDI rendering and for OpenGL double-buffered page flipping. To understand what CS_OWNDC has to do with this, you first need to take a step back and review the purpose and use of a window’s device context.
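Both points can be seen together in a minimal sketch of the registration and creation calls. This is not the GLRECT listing; the function name CreateOpenGLWindow, the class name, the title, and the window size are placeholders, wndProc stands for the application’s own window procedure, and the preprocessor guard only keeps the code inert away from Windows.

```cpp
#ifdef _WIN32               // Win32-only sketch; link with user32
#include <windows.h>

// Registers a window class that owns its device context, then creates a
// window of that class with the clipping styles set.
// Returns NULL if registration or creation fails.
HWND CreateOpenGLWindow(HINSTANCE hInstance, WNDPROC wndProc)
{
    WNDCLASSA wc = {};
    wc.style         = CS_HREDRAW | CS_VREDRAW | CS_OWNDC;   // class styles
    wc.lpfnWndProc   = wndProc;
    wc.hInstance     = hInstance;
    wc.hCursor       = LoadCursor(NULL, IDC_ARROW);
    wc.hbrBackground = NULL;            // OpenGL paints the whole client area
    wc.lpszClassName = "GLSketchWindow";

    if (RegisterClassA(&wc) == 0)
        return NULL;

    return CreateWindowA(
        wc.lpszClassName, "OpenGL Window",
        WS_OVERLAPPEDWINDOW | WS_CLIPCHILDREN | WS_CLIPSIBLINGS,  // window styles
        CW_USEDEFAULT, CW_USEDEFAULT, 640, 480,
        NULL, NULL, hInstance, NULL);
}
#endif
```

Note that CS_OWNDC goes in the class structure passed to RegisterClass, whereas the two clipping styles are passed to CreateWindow.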

First, You Need a Device Context

Before you can draw anything in a window with GDI, you first need the window’s device context. You need it whether you’re doing OpenGL, GDI, or even Direct3D programming. Any drawing or painting operation in Windows (even if you’re drawing on a bitmap in memory) requires a device context that identifies the specific object being drawn on. You retrieve the device context for a window with a simple function call:

HDC hDC = GetDC(hWnd);

The hDC variable is your handle to the device context of the window identified by the window handle hWnd. You use the device context for all GDI functions that draw in the window. You also need the device context for creating an OpenGL rendering context, making it current, and performing the buffer swap. You tell Windows that you don’t need the device context for the window any longer with another simple function call, using the same two values:

ReleaseDC(hWnd, hDC);

The standard Windows programming wisdom is that you retrieve a device context, use it for drawing, and then release it again as soon as possible. This advice dates back to the pre-Win32 days; under Windows 3.1 and earlier, you had a small pool of memory allocated for system resources, such as a window’s device context. What happened when Windows ran out of system resources? If you were lucky, you got an error message. If you were working on something really important, the operating system could somehow tell, and it would instead crash and take all your work with it. Well, at least it seemed that way!

The best way to spare your users this catastrophe was to make sure that the GetDC function succeeded. If you did get a device context, you did all your work as quickly as possible (typically within one message handler) and then released the device context so that other programs could use it. The same advice applied to other system resources such as pens, fonts, and brushes.

Enter Win32

Windows NT and the subsequent Win32-based operating systems were a tremendous blessing for Windows programmers, in more ways than can be recounted here. Among their many benefits was that you could have all the system resources you needed until you exhausted available memory or your application crashed. (At least it wouldn’t crash the OS!) It turns out that the GetDC function is, in computer time, quite an expensive function call to make. If you got the device context when the window was created and hung on to it until the window was destroyed, you could speed up your window painting considerably. You could hang on to brushes, fonts, and other resources that would have to be created or retrieved and potentially reinitialized each time the window was invalidated.

An old popular example of this Win32 benefit was a program that created random rectangles and put them in random locations in the window. (This was a GDI sample.) The difference between code written the old way and code written the new way was astonishingly obvious. Wow—Win32 was great!

Three Steps Forward, Two Steps Back

Windows 95, 98, and ME brought Win32 programming to the mainstream, but still had a few of the old 16-bit limitations deep down in the plumbing. The situation with losing system resources was considerably improved, but it was not eliminated entirely. The operating system could still run out of resources, but (according to Microsoft) it was unlikely. Alas, life is not so simple. Under Windows NT, when an application terminates, all allocated system resources are automatically returned to the operating system. Under Windows 95, 98, or ME, you have a resource leak if the program crashes or the application fails to release the resources it allocated. Eventually, you will start to stress the system, and you can run out of system resources (or device contexts).

What happens when Windows doesn’t have enough device contexts to go around? Well, it just takes one from someone who is being a hog with them. This means that if you call GetDC and don’t call ReleaseDC, Windows 95, 98, or ME might just appropriate your device context when it becomes stressed. The next time you call wglMakeCurrent or SwapBuffers, your device context handle might not be valid. Your application might crash or mysteriously stop rendering. Ask someone in customer support how well it goes over when you try to explain to a customer that his or her problem with your application is really Microsoft’s fault!

All Is Not Lost

You actually have a way to tell Windows to create a device context just for your window’s use. This feature is useful because every time you call GetDC, you have to reselect your fonts, the mapping mode, and so on. If you have your own device context, you can do this sort of initialization only once. Plus, you don’t have to worry about your device context handle being yanked out from under you. Doing this is simple: You specify CS_OWNDC as one of your class styles when you register the window class. A common error is to use CS_OWNDC as a window style when you call CreateWindow. There are window styles and there are class styles, but you can’t mix and match.

Code to register your window class generally looks something like this:

WNDCLASS wc; // Window class structure

...

...

// Set the class styles, including CS_OWNDC

wc.style = CS_HREDRAW | CS_VREDRAW | CS_OWNDC;

wc.lpfnWndProc = (WNDPROC) WndProc;

...

...

wc.lpszClassName = lpszAppName;

// Register the window class

if(RegisterClass(&wc) == 0)

return FALSE;

You then specify the class name when you create the window:

hWnd = CreateWindow( wc.lpszClassName, szWindowName, ...

Graphics programmers should always use CS_OWNDC in the window class registration. This ensures that you have the most robust code possible on any Windows platform. Another consideration is that many older OpenGL hardware drivers have bugs because they expect CS_OWNDC to be specified. They might have been originally written for NT, so the drivers do not account for the possibility that the device context might become invalid. The driver might also trip up if the device context does not retain its configuration (as is the case in the GetDC/ReleaseDC scenario).

Regardless of the specifics, some older drivers are not very stable unless you specify the CS_OWNDC flag. Today’s drivers rarely have this issue anymore, but one thing you learn as an application developer is that it’s amazing where your code may end up sometimes! Still, the other reasons outlined here provide plenty of incentive to make what is basically a minor code adjustment.

Using the OpenGL Rendering Context

The real meat of the GLRECT sample program is in the window procedure, WndProc. The window procedure receives window messages from the operating system and responds appropriately. This model of programming, called message or event-driven programming, is the foundation of the modern Windows GUI.

When a window is created, it first receives a WM_CREATE message from the operating system. This is the ideal location to create and set up the OpenGL rendering context. A window also receives a WM_DESTROY message when it is being destroyed. Naturally, this is the ideal place to put cleanup code. Listing 19.2 shows the SetDCPixelFormat function, which is used to select and set the pixel format, along with the window procedure for the application. The window procedure contains the same basic functionality that we have been using with the GLUT framework.

Listing 19.2. Setting the Pixel Format and Handling the Creation and Deletion of the OpenGL Rendering Context

Initializing the Rendering Context

The first thing you do when the window is being created is retrieve the device context (remember, you hang on to it) and set the pixel format:

// Store the device context

hDC = GetDC(hWnd);

// Select the pixel format

SetDCPixelFormat(hDC);

Then you create the OpenGL rendering context and make it current:

// Create the rendering context and make it current

hRC = wglCreateContext(hDC);

wglMakeCurrent(hDC, hRC);

The last task you handle while processing the WM_CREATE message is creating a Windows timer for the window. You will use this shortly to effect the animation loop:

// Create a timer that fires about 30 times a second

// (arguments: window, timer ID, interval in milliseconds, callback)

SetTimer(hWnd,33,33,NULL);

break;

Note that WM_TIMER is not the best way to achieve high frame rates. We’ll revisit this issue later, but for now, it serves our purposes.
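The relationship between a target frame rate and the interval passed to SetTimer can be sketched as a small helper. In SetTimer, the second argument is the timer ID and the third is the interval in milliseconds. The function name timer_interval_ms and the TIMER_MINIMUM_MS constant are hypothetical names for this sketch; the 10 ms floor mirrors the documented USER_TIMER_MINIMUM value, below which Windows raises the interval anyway.

```c
/* Lower bound Windows applies to timer intervals (USER_TIMER_MINIMUM), in ms.
   The constant name here is a stand-in so the sketch needs no windows.h. */
#define TIMER_MINIMUM_MS 10u

/* Convert a target frame rate into a timer interval in milliseconds.
   Returns 0 for a frame rate of 0, which the caller should treat as
   "do not create a timer". */
unsigned int timer_interval_ms(unsigned int frames_per_second)
{
    unsigned int interval;

    if (frames_per_second == 0)
        return 0;

    interval = 1000u / frames_per_second;   /* integer milliseconds per frame */
    return interval < TIMER_MINIMUM_MS ? TIMER_MINIMUM_MS : interval;
}
```

For 30 frames per second this yields 33 ms. Requests faster than 100 frames per second are clamped to 10 ms, which is one reason WM_TIMER is a poor fit for high frame rates.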

At this point, the OpenGL rendering context has been created and associated with a window with a valid pixel format. From this point forward, all OpenGL rendering commands will be routed to this context and window.

Shutting Down the Rendering Context

When the window procedure receives the WM_DESTROY message, the OpenGL rendering context must be deleted. Before you delete the rendering context with the wglDeleteContext function, you must first call wglMakeCurrent again, but this time with NULL as the parameter for the OpenGL rendering context:

// Deselect the current rendering context and delete it

wglMakeCurrent(hDC,NULL);

wglDeleteContext(hRC);

Before deleting the rendering context, you should delete any display lists, texture objects, or other OpenGL-allocated memory.

Other Windows Messages

All that is required to enable OpenGL to render into a window is creating and destroying the OpenGL rendering context. However, to make your application well behaved, you need to follow some conventions with respect to message handling. For example, you need to set the viewport when the window changes size, by handling the WM_SIZE message:

// Window is resized.

case WM_SIZE:

// Call our function which modifies the clipping

// volume and viewport

ChangeSize(LOWORD(lParam), HIWORD(lParam));

break;

The processing that happens in response to the WM_SIZE message is the same as in the function you handed off to glutReshapeFunc in GLUT-based programs. The window procedure also receives two parameters: lParam and wParam. For WM_SIZE, the low word of lParam is the new width of the window’s client area, and the high word is the new height.
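The unpacking that LOWORD and HIWORD perform can be sketched in portable C. The names low_word, high_word, ClientSize, and client_size_from_lparam are hypothetical stand-ins so that the fragment compiles without windows.h; in a real window procedure you would use the macros from the Windows headers directly. The sketch also clamps a zero height to 1. That is an assumption on our part rather than something taken from the listing, but it is a common precaution against dividing by zero when an aspect ratio is computed.

```c
#include <stdint.h>

/* Hypothetical stand-ins for the LOWORD/HIWORD macros. */
uint16_t low_word(uintptr_t value)  { return (uint16_t)(value & 0xFFFFu); }
uint16_t high_word(uintptr_t value) { return (uint16_t)((value >> 16) & 0xFFFFu); }

typedef struct {
    int width;
    int height;
} ClientSize;

/* Unpack the new client-area size from a WM_SIZE lParam value. */
ClientSize client_size_from_lparam(uintptr_t lParam)
{
    ClientSize size;

    size.width  = low_word(lParam);    /* low 16 bits: width   */
    size.height = high_word(lParam);   /* high 16 bits: height */

    if (size.height == 0)              /* e.g. a minimized window */
        size.height = 1;

    return size;
}
```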

This example uses the WM_TIMER message handler to do the idle processing. The process is not really idle, but the previous call to SetTimer causes the WM_TIMER message to be received on a fairly regular basis (fairly because the exact interval is not guaranteed).

Other Windows messages handle things such as keyboard activity (WM_CHAR, WM_KEYDOWN) and mouse movements (WM_MOUSEMOVE).

The WM_PAINT message bears closer examination. This message is sent to a window whenever Windows needs to draw or redraw its contents. To tell Windows to redraw a window anyway, you invalidate the window with one function call in the WM_TIMER message handler:

IdleFunction();

InvalidateRect(hWnd,NULL,FALSE);

Here, IdleFunction updates the position of the square, and InvalidateRect tells Windows to redraw the window (now that the square has moved).

Most Windows programming books show you a WM_PAINT message handler with the well-known BeginPaint/EndPaint function pairing. BeginPaint retrieves the device context so it can be used for GDI drawing, and EndPaint releases the context and validates the window. In our previous discussion of why you need the CS_OWNDC class style, we pointed out that using this function pairing is generally a bad idea for high-performance graphics applications. The following code shows roughly the equivalent functionality, without any GDI overhead:

// The painting function. This message is sent by Windows

// whenever the screen needs updating.

case WM_PAINT:

{

// Call OpenGL drawing code

RenderScene();

// Call function to swap the buffers

SwapBuffers(hDC);

// Validate the newly painted client area

ValidateRect(hWnd,NULL);

}

break;

Because this example has a device context (hDC), you don’t need to continually get and release it. We’ve mentioned the SwapBuffers function previously but not fully explained it. This function takes the device context as an argument and performs the buffer swap for double-buffered rendering. This is why you need the device context readily available when rendering.

Notice that you must manually validate the window with the call to ValidateRect after rendering. Without the BeginPaint/EndPaint functionality in place, there is no way to tell Windows that you have finished drawing the window contents. One alternative to using WM_TIMER to invalidate the window (thus forcing a redraw) is to simply not validate the window. If the window procedure returns from a WM_PAINT message and the window is not validated, the operating system generates another WM_PAINT message. This chain reaction causes an endless stream of repaint messages. One problem with this approach to animation is that it can leave little opportunity for other window messages to be processed. Although rendering might occur very quickly, the user might find it difficult or impossible to resize the window or use the menu, for example.

OpenGL and Windows Fonts

One nice feature of Windows is its support for TrueType fonts. These fonts have been native to Windows since before Windows became a 32-bit operating system. TrueType fonts enhance text appearance because they are device independent and can be easily scaled while still keeping a smooth shape. TrueType fonts are vector fonts, not bitmap fonts. What this means is that the character definitions consist of a series of point and curve definitions. When a character is scaled, the overall shape and appearance remain smooth.

Textual output is a part of nearly any Windows application, and 3D applications are no exception. Microsoft provided support for TrueType fonts in OpenGL with two new wiggle functions. You can use the first, wglUseFontOutlines, to create 3D font models that can be used to create 3D text effects. The second, wglUseFontBitmaps, creates a series of font character bitmaps that can be used for 2D text output in a double-buffered OpenGL window.

3D Fonts and Text

The wglUseFontOutlines function takes a handle to a device context. It uses the TrueType font currently selected into that device context to create a set of display lists for that font. Each display list renders just one character from the font. Listing 19.3 shows the SetupRC function from the sample program TEXT3D, where you can see the entire process of creating a font, selecting it into the device context, creating the display lists, and, finally, deleting the (Windows) font.

Listing 19.3. Creating a Set of 3D Characters

The function call to wglUseFontOutlines is the key function call to create your 3D character set:

wglUseFontOutlines(hDC, 0, 128, nFontList, 0.0f, 0.5f,

WGL_FONT_POLYGONS, agmf);

The first parameter is the handle to the device context where the desired font has been selected. The next two parameters specify the range of characters (called glyphs) in the font to use: the index of the first glyph and the number of glyphs to create. In this case, you start at glyph 0 and create 128 glyphs, which covers character codes 0 through 127. The fourth parameter, nFontList, is the beginning of the range of display lists created previously. It is important to allocate your display list space before using either of the WGL font functions. The next parameter is the chordal deviation. Think of it as specifying how smooth you want the font to appear, with 0.0 being the most smooth.

The 0.5f is the extrusion of the character set. The 3D characters are defined to lie in the xy plane. The extrusion determines how far along the z-axis the characters extend. WGL_FONT_POLYGONS tells OpenGL to create the characters out of triangles and quads so that they are solid. When this information is specified, normals are also calculated and supplied for each letter. Only one other value is valid for this parameter: WGL_FONT_LINES. It produces a wireframe version of the character set and does not generate normals.

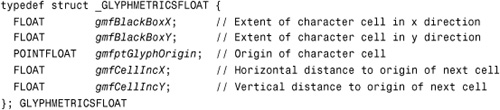

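The last argument is an array of type GLYPHMETRICSFLOAT, which is defined in this way:

typedef struct _GLYPHMETRICSFLOAT {

FLOAT gmfBlackBoxX; // Width of the smallest box enclosing the glyph

FLOAT gmfBlackBoxY; // Height of the smallest box enclosing the glyph

POINTFLOAT gmfptGlyphOrigin; // Upper-left corner of that box

FLOAT gmfCellIncX; // Horizontal distance to the next character cell

FLOAT gmfCellIncY; // Vertical distance to the next character cell

} GLYPHMETRICSFLOAT;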

Windows fills in this array according to the selected font’s characteristics. These values can be useful when you want to determine the size of a string rendered with 3D characters.
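As a rough illustration of how these metrics can be used, the following sketch sums the horizontal cell increment of each character to estimate the width of a string. PointFloat and GlyphMetricsFloat are stand-ins that mirror the layout of the Windows POINTFLOAT and GLYPHMETRICSFLOAT types so that the code compiles without windows.h, and text_width is a hypothetical helper, not part of any library. The sketch assumes the metrics array was filled starting at glyph 0, so that a character code can be used directly as an index.

```c
#include <stddef.h>

/* Stand-ins mirroring the Windows POINTFLOAT and GLYPHMETRICSFLOAT layouts. */
typedef struct {
    float x;
    float y;
} PointFloat;

typedef struct {
    float      gmfBlackBoxX;
    float      gmfBlackBoxY;
    PointFloat gmfptGlyphOrigin;
    float      gmfCellIncX;
    float      gmfCellIncY;
} GlyphMetricsFloat;

/* Sum the horizontal advance of every character in text.
   metrics holds glyph_count entries indexed by character code;
   characters outside that range are skipped. */
float text_width(const GlyphMetricsFloat *metrics, size_t glyph_count,
                 const char *text)
{
    float width = 0.0f;

    for (; *text != '\0'; ++text) {
        unsigned char code = (unsigned char)*text;
        if (code < glyph_count)
            width += metrics[code].gmfCellIncX;
    }
    return width;
}
```

One possible use is centering: translating by half the returned width in the negative x direction before drawing the string places its midpoint at the current origin.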

When the display list for each character is called, it renders the character and advances the current position to the right (positive x direction) by the width of the character cell. This is like calling glTranslate after each character, with the translation in the positive x direction. You can use the glCallLists function in conjunction with glListBase to treat a character array (a string) as an array of offsets from the first display list in the font. A simple text output method is shown in Listing 19.4. The output from the TEXT3D program appears in Figure 19.4.

Listing 19.4. Rendering a 3D Text String

void RenderScene(void)

{

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

// Blue 3D text

glColor3ub(0, 0, 255);

glPushMatrix();

glListBase(nFontList);

glCallLists (6, GL_UNSIGNED_BYTE, "OpenGL");

glPopMatrix();

}

Figure 19.4. Sample 3D text in OpenGL.

2D Fonts and Text

The wglUseFontBitmaps function is similar to its 3D counterpart. This function does not extrude the bitmaps into 3D, however, but instead creates a set of bitmap images of the glyphs in the font. You output images to the screen using the bitmap functions discussed in Chapter 7, “Imaging with OpenGL.” Each character rendered advances the raster position to the right in a similar manner to the 3D text.

Listing 19.5 shows the code to set up the coordinate system for the window (ChangeSize function), create the bitmap font (SetupRC function), and finally render some text (RenderScene function). The output from the TEXT2D sample program is shown in Figure 19.5.

Listing 19.5. Creating and Using a 2D Font

Figure 19.5. Output from the TEXT2D sample program.

Note that wglUseFontBitmaps is a much simpler function. It requires only the device context handle, the first character, the number of characters, and the first display list name to be used:

wglUseFontBitmaps(hDC, 0, 128, nFontList);

Because bitmap fonts are created based on the actual font and map directly to pixels on the screen, the lfHeight member of the LOGFONT structure is used exactly in the same way it is for GDI font rasterization.
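For reference, the usual GDI recipe for turning a point size into an lfHeight value is -MulDiv(pointSize, GetDeviceCaps(hDC, LOGPIXELSY), 72), where the negative sign asks the font mapper to match character height rather than cell height. The following portable sketch reproduces that arithmetic for non-negative inputs; lf_height_for_point_size is a hypothetical helper name, and the vertical resolution is passed in rather than queried from a device context.

```c
/* Portable equivalent of -MulDiv(point_size, log_pixels_y, 72) for
   non-negative inputs. A point is 1/72 inch, so the result is the height
   in pixels, rounded to the nearest integer and negated. */
int lf_height_for_point_size(int point_size, int log_pixels_y)
{
    long scaled = (long)point_size * (long)log_pixels_y;

    return -(int)((scaled + 36) / 72);   /* +36 rounds to nearest */
}
```

At 96 pixels per logical inch, a 12-point font gives an lfHeight of -16.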

Full-Screen Rendering

With OpenGL becoming popular among PC game developers, a common question is “How do I do full-screen rendering with OpenGL?” The truth is, if you’ve read this chapter, you already know how to do full-screen rendering with OpenGL—it’s just like rendering into any other window! The real question is “How do I create a window that takes up the entire screen and has no borders?” After you do this, rendering into this window is no different from rendering into any other window in any other sample in this book.

Even though this issue isn’t strictly related to OpenGL, it is of enough interest to a wide number of our readers that we give this topic some coverage here.

Creating a Frameless Window

The first task is to create a window that has no border or caption. This procedure is quite simple. Following is the window creation code from the GLRECT sample program. We’ve made one small change by making the window style WS_POPUP instead of WS_OVERLAPPEDWINDOW:

// Create the main application window

hWnd = CreateWindow(lpszAppName,

lpszAppName,

// OpenGL requires WS_CLIPCHILDREN and WS_CLIPSIBLINGS

WS_POPUP | WS_CLIPCHILDREN | WS_CLIPSIBLINGS,

// Window position and size

100, 100,

250, 250,

NULL,

NULL,

hInstance,

NULL);

The result of this change is shown in Figure 19.6.

Figure 19.6. A window with no caption or border.

As you can see, without the proper style settings, the window has neither a caption nor a border of any kind. Don’t forget to take into account that now the window no longer has a close button on it. The user will have to press Alt+F4 to close the window and exit the program. Most user-friendly programs watch for a keystroke such as the Esc key or Q to terminate the program.

Creating a Full-Screen Window

Creating a window the size of the screen is almost as trivial as creating a window with no caption or border. The parameters of the CreateWindow function allow you to specify where onscreen the upper-left corner of the window will be positioned and the width and height of the window. To create a full-screen window, you always use (0,0) as the upper-left corner. The only trick would be determining what size the desktop is so you know how wide and high to make the window. You can easily determine this information by using the Windows function GetDeviceCaps.

Listing 19.6 shows the new WinMain function from GLRECT, which is now the new sample FSCREEN. To use GetDeviceCaps, you need a device context handle. Because you are in the process of creating the main window, you need to use the device context from the desktop window.

Listing 19.6. Creating a Full-Screen Window

The key code here is the lines that get the desktop window handle and device context. The device context can then be used to obtain the screen’s horizontal and vertical resolution:

hDesktopWnd = GetDesktopWindow();

hDesktopDC = GetDC(hDesktopWnd);

// Get the screen size

nScreenX = GetDeviceCaps(hDesktopDC, HORZRES);

nScreenY = GetDeviceCaps(hDesktopDC, VERTRES);

// Release the desktop device context

ReleaseDC(hDesktopWnd, hDesktopDC);

If your system has multiple monitors, you should note that the values returned here would be for the primary display device. You might also be tempted to force the window to be a topmost window (using the WS_EX_TOPMOST extended window style). However, doing so makes it possible for your window to lose focus but remain on top of other active windows. This may confuse the user when the program stops responding to keystrokes.

You may also want to take a look at the Win32 function ChangeDisplaySettings in your Windows SDK documentation. This function allows you to dynamically change the desktop size at runtime and restore it when your application terminates. This capability may be desirable if you want to have a full-screen window but at a lower or higher display resolution than the default. If you do change the desktop settings, you must not create the rendering window or set the pixel format until after the desktop settings have changed. OpenGL rendering contexts created under one environment (desktop settings) are not likely to be valid in another.

Multithreaded Rendering

A powerful feature of the Win32 API is multithreading. The topic of threading is beyond the scope of a book on computer graphics. Basically, a thread is the unit of execution for an application. Most programs execute instructions sequentially from the start of the program until the program terminates. A thread of execution is the path through the machine code that the CPU traverses as it fetches and executes instructions. By creating multiple threads using the Win32 API, you can create multiple paths through your source code that are followed simultaneously.

Think of multithreading as being able to call two functions at the same time and then have them executed simultaneously. Of course, a single-core CPU cannot actually execute two code paths simultaneously, so it switches between threads during normal program flow much the same way a multitasking operating system switches between tasks. If you have more than one processor, or a multicore CPU, multithreaded programs can experience quite substantial performance gains as the work required is split between two or more execution units.

Even on a single CPU system, a program carefully designed for multithreaded execution can outperform a single-threaded application in many circumstances. On a single processor machine, one thread can service I/O requests, for example, while another handles the GUI.

Some OpenGL implementations take advantage of a multiprocessor system. If, for example, the transformation and lighting units of the OpenGL pipeline are not hardware accelerated, a driver can create another thread so that these calculations are performed by one CPU while another CPU feeds the transformed data to the rasterizer. Threads within a driver can also be used to offload command assembly and dispatch, decreasing the latency of many OpenGL calls.

You might think that using two threads to do your OpenGL rendering would speed up your rendering as well. You could perhaps have one thread draw the background objects in a scene while another thread draws the more dynamic elements. This approach is almost always a bad idea. Although you can create two OpenGL rendering contexts for two different threads, most drivers fail if you try to render with both of them in the same window. Technically, this multithreading should be possible, and the Microsoft generic implementation will succeed if you try it, as might many hardware implementations. In the real world, the extra work you place on the driver with two contexts trying to share the same framebuffer will most likely outweigh any performance benefit you hope to gain from using multiple threads.

Multithreading can benefit your OpenGL rendering on a multiprocessor system or even on a single processor system in at least two ways. In the first scenario, you have two different windows, each with its own rendering context and thread of execution. This case could still stress some drivers (some of the low-end game boards are stressed just by two applications using OpenGL simultaneously!), but many professional OpenGL implementations can handle it quite well.

The second example is if you are writing a game or a real-time simulation. You can have a worker thread perform physics calculations or artificial intelligence or handle player interaction while another thread does the OpenGL rendering. This scenario requires careful sharing of data between threads but can provide a substantial performance boost on a dual-processor machine, and even a single-processor machine can improve the responsiveness of your program. Although we’ve made the disclaimer that multithreaded programming is outside the scope of this book, we present for your examination the sample program RTHREAD in the source distribution for this chapter. This program creates and uses a rendering thread. This program also demonstrates the use of the OpenGL WGL extensions.

OpenGL and WGL Extensions

On the Windows platform, you do not have direct access to the OpenGL driver. All OpenGL function calls are routed through the opengl32.dll system file. Because this DLL understands only OpenGL 1.1 entrypoints (function names), you must have a mechanism to get a pointer to an OpenGL function supported directly by the driver. Fortunately, the Windows OpenGL implementation has a function named wglGetProcAddress that allows you to retrieve a pointer to an OpenGL function supported by the driver, but not necessarily natively supported by opengl32.dll:

PROC wglGetProcAddress(LPCSTR lpszProc);

This function takes the name of an OpenGL function or extension and returns a function pointer that you can use to call that function directly. For this to work, you must know the function prototype for the function so you can create a pointer to it and subsequently call the function.

OpenGL extensions (and post-version 1.1 features) come in two flavors. Some are simply new constants and enumerants recognized by a vendor’s hardware driver. Others require that you call new functions added to the API. The number of extensions is extensive, especially when you add in the newer OpenGL core functionality and vendor-specific extensions. Complete coverage of all OpenGL extensions would require an entire book in itself (if not an encyclopedia!). You can find a registry of extensions on the Internet and among the Web sites listed in Appendix A, “Further Reading/References.”

Fortunately, the following two header files give you programmatic access to most OpenGL extensions:

#include <wglext.h>

#include <glext.h>

These files can be found at the OpenGL extension registry Web site. They are also maintained by most graphics card vendors (see their developer support Web sites), and the latest version as of this book’s printing is included in the source code distribution on our Web site. The wglext.h header contains a number of extensions that are Windows specific, and the glext.h header contains both standard OpenGL extensions and many vendor-specific OpenGL extensions.

Simple Extensions

Because this book covers known OpenGL features up to version 2.1, you may have already discovered that many of the sample programs in this book use these extensions for Windows builds of the sample code found in previous chapters. For example, in Chapter 9, “Texture Mapping: Beyond the Basics,” we showed you how to add specular highlights to textured geometry using OpenGL’s separate specular color with the following function call:

glLightModeli(GL_LIGHT_MODEL_COLOR_CONTROL, GL_SEPARATE_SPECULAR_COLOR);

However, this capability is not present in OpenGL 1.1, and the GL_LIGHT_MODEL_COLOR_CONTROL and GL_SEPARATE_SPECULAR_COLOR constants are not defined in the Windows version of gl.h. They are, however, found in glext.h, and this file is already included automatically in all the samples in this book via the gltools.h header file. The glLightModeli function, on the other hand, has been around since OpenGL 1.0. These kinds of simple extensions simply pass new tokens to existing entrypoints (functions) and require only that you have the constants defined and know that the extension or feature is supported by the hardware.

Even if the OpenGL version is still reported as 1.1, this capability may still be included in the driver. This feature was originally an extension that was later promoted to the OpenGL core functionality. You can check for this and other easy-to-access extensions (no function pointers needed) quickly by using the following GlTools function:

bool gltIsExtSupported(const char *szExtension);

In the case of separate specular color, you might just code something like this:

if(gltIsExtSupported("GL_EXT_separate_specular_color"))

RenderOnce();

else

UseMultiPassTechnique();

Here, you call the RenderOnce function if the extension (or feature) is supported. Otherwise, you call the UseMultiPassTechnique function, which achieves the same effect in an alternate, slower way (the scene is drawn twice and blended together).
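The source of gltIsExtSupported is not shown here, but the core of any such check is a search of the space-separated extension string for a complete token. The following is a minimal sketch of that idea, not the GlTools implementation; has_extension is a hypothetical name, and in a real program the list would come from glGetString(GL_EXTENSIONS) with a rendering context current. Matching whole tokens matters because a plain substring search would report a name that is merely the prefix of a longer extension name.

```c
#include <stddef.h>
#include <string.h>

/* Return 1 if name appears as a complete, space-delimited token in
   extension_list, 0 otherwise. name is assumed to contain no spaces. */
int has_extension(const char *extension_list, const char *name)
{
    size_t name_len;
    const char *cursor;

    if (extension_list == NULL || name == NULL || *name == '\0')
        return 0;

    name_len = strlen(name);
    cursor = extension_list;

    while ((cursor = strstr(cursor, name)) != NULL) {
        const char *end = cursor + name_len;
        int starts_token = (cursor == extension_list) || (cursor[-1] == ' ');
        int ends_token   = (*end == ' ') || (*end == '\0');

        if (starts_token && ends_token)
            return 1;

        cursor = end;   /* partial match: keep searching after it */
    }
    return 0;
}
```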

Using New Entrypoints

A more complex extension example comes from the IMAGING sample program in Chapter 7. In this case, the optional imaging subset not only is missing from the Windows version of gl.h, but is optional in all subsequent versions of OpenGL as well. This is an example of the type of feature that has to be there, or there is no point in continuing. Thus, you first check for the presence of the imaging subset by checking for its extension string:

// Check for imaging subset, must be done after window

// is created or there won't be an OpenGL context to query

if(gltIsExtSupported("GL_ARB_imaging") == 0)

{

printf("Imaging subset not supported\n");

return 0;

}

The function prototype typedefs for the functions used are found in glext.h, and you use them to create function pointers to each of the functions you want to call. On the Macintosh platform, the standard system headers already contain these functions:

#ifndef __APPLE__

// These typedefs are found in glext.h

PFNGLHISTOGRAMPROC glHistogram = NULL;

PFNGLGETHISTOGRAMPROC glGetHistogram = NULL;

PFNGLCOLORTABLEPROC glColorTable = NULL;

PFNGLCONVOLUTIONFILTER2DPROC glConvolutionFilter2D = NULL;

#endif

Now you use the GlTools function gltGetExtensionPointer to retrieve the function pointer to the function in question. This function is simply a portability wrapper for wglGetProcAddress on Windows and an admittedly more complex method of getting the function pointers on the Mac:

#ifndef __APPLE__

glHistogram = (PFNGLHISTOGRAMPROC)gltGetExtensionPointer("glHistogram");

glGetHistogram = (PFNGLGETHISTOGRAMPROC)gltGetExtensionPointer("glGetHistogram");

glColorTable = (PFNGLCOLORTABLEPROC)gltGetExtensionPointer("glColorTable");

glConvolutionFilter2D = (PFNGLCONVOLUTIONFILTER2DPROC)gltGetExtensionPointer("glConvolutionFilter2D");

#endif

Then you simply use the extension as if it were a normally supported part of the API:

// Start collecting histogram data, 256 luminance values

glHistogram(GL_HISTOGRAM, 256, GL_LUMINANCE, GL_FALSE);

glEnable(GL_HISTOGRAM);

Auto-Magic Extensions

Most normal developers would fairly quickly grow weary of always having to query for new function pointers at the beginning of the program. There is a faster way, and in fact, we used this shortcut for all the samples in this book. The GLEE library (GL Easy Extension library) is included in the shared directory with the source distribution for the book on our Web site. Automatically gaining access to all the function pointers supported by the driver is a simple matter of adding glee.c to your project, and glee.h to the top of your header list.

GLEE is quite clever; the new functions initialize themselves the first time they are called. This removes the need to perform any specialized initialization to gain access to all the OpenGL functionality available from a particular driver on Windows. This does not, however, remove the need to check which version of OpenGL is currently supported by the driver. If an entrypoint does not exist in the driver, the GLEE library will simply return from the function call and do nothing.
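The self-initializing behavior described here can be pictured as a function pointer that starts out aimed at a small stub. The stub looks up the real entrypoint on the first call, rebinds the pointer, and forwards the call. The following sketch illustrates that general technique only; it is not GLEE’s source. Every name in it (resolve_proc, driver_add, add_values, missing_add, resolve_count) is hypothetical, with resolve_proc standing in for wglGetProcAddress and driver_add standing in for a function exported by the driver.

```c
#include <stddef.h>
#include <string.h>

typedef void (*GenericProc)(void);
typedef int  (*AddProc)(int, int);

/* Hypothetical "driver" function and a resolver that stands in for
   wglGetProcAddress. resolve_count records how often a lookup happens. */
static int driver_add(int a, int b) { return a + b; }
int resolve_count = 0;

static GenericProc resolve_proc(const char *name)
{
    ++resolve_count;
    if (strcmp(name, "driverAdd") == 0)
        return (GenericProc)driver_add;
    return NULL;
}

/* Used when the entrypoint is missing: return without doing anything. */
static int missing_add(int a, int b) { (void)a; (void)b; return 0; }

static int lazy_add(int a, int b);   /* first-call stub, defined below */

/* Callers always go through this pointer. It starts at the stub. */
AddProc add_values = lazy_add;

static int lazy_add(int a, int b)
{
    GenericProc proc = resolve_proc("driverAdd");

    /* Rebind so that later calls skip the lookup entirely. */
    add_values = (proc != NULL) ? (AddProc)proc : missing_add;
    return add_values(a, b);
}
```

After the first call to add_values, the pointer refers directly to the resolved function, so the lookup cost is paid only once.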

WGL Extensions

Several Windows-specific WGL extensions are also available. You access the WGL extensions’ entrypoints in the same manner as the other extensions—using the wglGetProcAddress function. There is, however, an important exception. Typically, among the many WGL extensions, only two are advertised by using glGetString(GL_EXTENSIONS). One is the swap interval extension (which allows you to synchronize buffer swaps with the vertical retrace), and the other is the WGL_ARB_extensions_string extension. This extension provides yet another entrypoint that is used exclusively to query for the WGL extensions. The ARB extensions string function is prototyped like this:

const char *wglGetExtensionsStringARB(HDC hdc);

This function retrieves the list of WGL extensions in the same manner in which you previously would have used glGetString. Using the wglext.h header file, you can retrieve a pointer to this function like this:

PFNWGLGETEXTENSIONSSTRINGARBPROC wglGetExtensionsStringARB;

wglGetExtensionsStringARB = (PFNWGLGETEXTENSIONSSTRINGARBPROC)

wglGetProcAddress("wglGetExtensionsStringARB");

The string returned by glGetString includes the WGL_ARB_extensions_string identifier, but often developers skip this check and simply look for the entrypoint, as shown in the preceding code fragment. This approach usually works with most OpenGL extensions, but you should realize that this is, strictly speaking, “coloring outside the lines.” Some vendors export extensions on an “experimental” basis, and these extensions may not be officially supported, or the functions may not work properly if you skip the extension string check. Also, more than one extension may use the same function or functions. Testing only for function availability provides no information on the availability or the reliability of the specific extension or extensions that are supported.
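If you do want to perform the extension string check, the search needs a little care, because one extension name can be a prefix of another (WGL_ARB_pixel_format and WGL_ARB_pixel_format_float, for example). The following is a minimal sketch of a whole-token search; HasExtension is a hypothetical helper name, and the string you pass in is assumed to be the space-separated list returned by glGetString(GL_EXTENSIONS) or wglGetExtensionsStringARB.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Returns 1 if name appears as a complete, space-delimited token in
   extensions, and 0 otherwise. A NULL extension string counts as empty. */
static int HasExtension(const char *extensions, const char *name)
{
    size_t nameLen;
    const char *p;

    assert(name != NULL);
    nameLen = strlen(name);
    if (extensions == NULL || nameLen == 0 || strchr(name, ' ') != NULL)
        return 0;

    p = extensions;
    while ((p = strstr(p, name)) != NULL) {
        const char *end = p + nameLen;
        int startsToken = (p == extensions) || (p[-1] == ' ');
        int endsToken   = (*end == ' ') || (*end == '\0');

        if (startsToken && endsToken)
            return 1;      /* exact match, not just a prefix or suffix */
        p = end;           /* keep searching after this partial match */
    }
    return 0;
}
```

A plain strstr call would report WGL_ARB_pixel_format as present whenever only WGL_ARB_pixel_format_float is listed; checking the characters on both sides of the match avoids that false positive.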

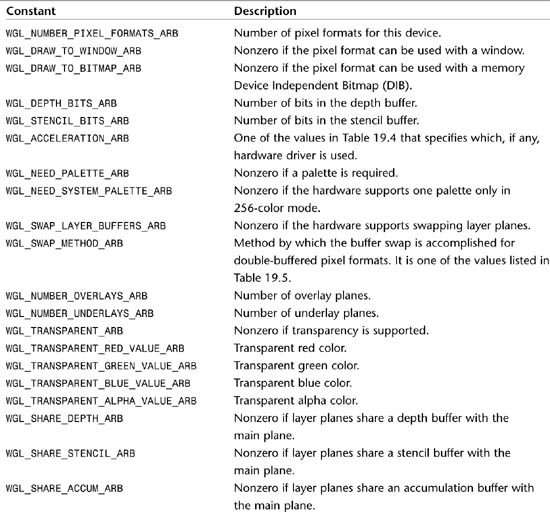

Extended Pixel Formats

Perhaps one of the most important WGL extensions available for Windows is the WGL_ARB_pixel_format extension. This extension provides a mechanism that allows you to check for and select pixel format features that did not exist when PIXELFORMATDESCRIPTOR was first created. For example, if your driver supports multisampled rendering (for full-scene antialiasing, for example), there is no way to select a pixel format with this support using the old PIXELFORMATDESCRIPTOR fields. If this extension is supported, the driver exports the following functions:

BOOL wglGetPixelFormatAttribivARB(HDC hdc, GLint iPixelFormat,

GLint iLayerPlane, GLuint nAttributes,

const GLint *piAttributes, GLint *piValues);

BOOL wglGetPixelFormatAttribfvARB(HDC hdc, GLint iPixelFormat,

GLint iLayerPlane, GLuint nAttributes,

const GLint *piAttributes, GLfloat *pfValues);

These two variations of the same function allow you to query a particular pixel format index and retrieve an array containing the attribute data for that pixel format. The first argument, hdc, is the device context of the window that the pixel format will be used for, followed by the pixel format index. The iLayerPlane argument specifies which layer plane to query (0 on Vista, or if your implementation does not support layer planes). Next, nAttributes specifies how many attributes are being queried for this pixel format, and the array piAttributes contains the list of attribute names to be queried. The attributes that can be specified are listed in Table 19.3. The final argument is an array that will be filled with the corresponding pixel format attributes.

Table 19.3. Pixelformat Attributes

Table 19.4. Acceleration Flags for WGL_ACCELERATION_ARB

Table 19.5. Buffer Swap Values for WGL_SWAP_METHOD_ARB

There is, however, a Catch-22 to these and all other OpenGL extensions. You must have a valid OpenGL rendering context before you can call either glGetString or wglGetProcAddress. This means that you must first create a temporary window, set a pixel format (we can actually cheat and just specify 1, which will be the first hardware-accelerated format), and then obtain a pointer to one of the wglGetPixelFormatAttribARB functions. A convenient place to do this might be the splash screen or perhaps an initial options dialog box that is presented to the user. You should not, however, try to use the Windows desktop because your application does not own it!

The following simple example queries for a single attribute—the number of pixel formats supported—so that you know how many you may need to look at:

int attrib[] = { WGL_NUMBER_PIXEL_FORMATS_ARB };

int nResults[1];

wglGetPixelFormatAttribivARB(hDC, 1, 0, 1, attrib, nResults);

// nResults[0] now contains the number of exported pixel formats
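Once you know how many formats exist, a typical next step is to walk the list and test each format's attributes. The following is a rough sketch of such a loop, not the code from the sample program. To keep it buildable without the Windows headers, the query is passed in as a hypothetical callback (QueryAttribsFn) that takes the same arguments as wglGetPixelFormatAttribivARB minus the HDC, and FakeQuery is a made-up four-entry table standing in for a driver. The token values are those published in wglext.h.

```c
#include <assert.h>
#include <stddef.h>

/* Token values from wglext.h, repeated so the sketch is self-contained. */
#define WGL_NUMBER_PIXEL_FORMATS_ARB 0x2000
#define WGL_ACCELERATION_ARB         0x2003
#define WGL_NO_ACCELERATION_ARB      0x2025
#define WGL_FULL_ACCELERATION_ARB    0x2027
#define WGL_SAMPLE_BUFFERS_ARB       0x2041
#define WGL_SAMPLES_ARB              0x2042

/* Hypothetical callback; returns nonzero on success. */
typedef int (*QueryAttribsFn)(int format, int layer, unsigned count,
                              const int *attribs, int *values);

/* Returns the index of the first fully accelerated format offering at
   least minSamples samples per pixel, or 0 if there is none. */
static int FindMultisampleFormat(QueryAttribsFn query, int minSamples)
{
    static const int countAttrib[] = { WGL_NUMBER_PIXEL_FORMATS_ARB };
    static const int wanted[] = { WGL_ACCELERATION_ARB,
                                  WGL_SAMPLE_BUFFERS_ARB,
                                  WGL_SAMPLES_ARB };
    int count = 0;
    int values[3];
    int i;

    assert(query != NULL);
    if (!query(1, 0, 1, countAttrib, &count))
        return 0;

    for (i = 1; i <= count; ++i) {      /* format indices start at 1 */
        if (!query(i, 0, 3, wanted, values))
            continue;                   /* skip formats we cannot query */
        if (values[0] == WGL_FULL_ACCELERATION_ARB &&
            values[1] >= 1 && values[2] >= minSamples)
            return i;
    }
    return 0;
}

/* Made-up format table used only to exercise the loop without a driver. */
static int FakeQuery(int format, int layer, unsigned count,
                     const int *attribs, int *values)
{
    static const int table[4][3] = {
        { WGL_NO_ACCELERATION_ARB,   0, 0 },
        { WGL_FULL_ACCELERATION_ARB, 0, 0 },
        { WGL_FULL_ACCELERATION_ARB, 1, 2 },
        { WGL_FULL_ACCELERATION_ARB, 1, 4 }
    };
    unsigned a;

    (void)layer;
    if (format < 1 || format > 4)
        return 0;
    for (a = 0; a < count; ++a) {
        switch (attribs[a]) {
        case WGL_NUMBER_PIXEL_FORMATS_ARB: values[a] = 4; break;
        case WGL_ACCELERATION_ARB:   values[a] = table[format - 1][0]; break;
        case WGL_SAMPLE_BUFFERS_ARB: values[a] = table[format - 1][1]; break;
        case WGL_SAMPLES_ARB:        values[a] = table[format - 1][2]; break;
        default: return 0;              /* attribute unknown to the fake */
        }
    }
    return 1;
}
```

In a real program the callback would be a thin wrapper that supplies the device context and forwards to the function pointer obtained from wglGetProcAddress. With the fake table above, FindMultisampleFormat(FakeQuery, 4) selects format 4, and a request for 8 samples returns 0.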

For a more detailed example showing how to look for a specific pixel format (including a multisampled pixel format), see the SPHEREWORLD32 sample program coming up next.

Win32 to the Max

SPHEREWORLD32 is a Win32-specific version of the Sphere World example we have returned to again and again throughout this book. SPHEREWORLD32 allows you to select windowed or full-screen mode, changes the display settings if necessary, and detects and allows you to select a multisampled pixel format. Finally, you use the Windows-specific font features to display the frame rate and other information onscreen. When in full-screen mode, you can even Alt+Tab away from the program, and the window will be minimized until reselected.

The complete source to this “ultimate” Win32 sample program contains extensive comments to explain every aspect of the program. In the initial dialog box that is displayed (see Figure 19.7), you can select full-screen or windowed mode, multisampled rendering (if available), and whether you want to enable the swap interval extension. A sample screen of the running program is shown in Figure 19.8.

Figure 19.7. The initial Options dialog box for SPHEREWORLD32.

Figure 19.8. Output from the SPHEREWORLD32 sample program.

Summary

This chapter introduced you to using OpenGL on the Win32 platform. You read about the different driver models and implementations available for Windows and what to watch for. You also learned how to enumerate and select a pixel format to get the kind of hardware-accelerated or software rendering support you want. You’ve now seen the basic framework for a Win32 program that replaces the GLUT framework, so you can write true native Win32 (actually, all this works under Win64 too!) application code. We also showed you how to create a full-screen window for games or simulation-type applications. Additionally, we discussed some of the Windows-specific features of OpenGL on Windows, such as support for TrueType fonts and multiple rendering threads.

Finally, we offer in the source code the ultimate OpenGL on Win32 sample program, SPHEREWORLD32. This program demonstrated how to use a number of Windows-specific features and WGL extensions if they were available. It also demonstrated how to construct a well-behaved program that will run on everything from an old 8-bit color display to the latest 32-bit full-color mega-3D game accelerator.