CHAPTER 6

Introduction to Queuing and Simulation

The process models analyzed in Chapters 4 and 5 have one thing in common: They assume that activity times and demand are deterministic and constant; that is, that they are known with certainty. In reality, an element of variability will always exist in the time it takes to perform a certain task and in the demand for service. In situations where the variability is small, deterministic models of the type studied in Chapters 4 and 5 might be an adequate way of describing a process. However, in situations with more accentuated variability, these models do not suffice. In fact, the variability itself is often one of the most important characteristics to capture in the model of a process.

Consider, for example, the checkout process at a grocery store where one of the main design issues is to avoid long lines. Customers arrive at the checkout stations with their items so they can pay and leave. Each cashier scans the items, bags them, and collects payment from the customer. The time it takes to service a customer depends on the amount and type of groceries and the form of payment used; therefore, it varies from customer to customer. In addition, the number of customers per time unit that arrive at the cashier’s station, meaning the demand for service, is highly uncertain and variable. Applying a deterministic model to describe this process, using only the average service time and the average number of customer arrivals per time unit, fails to capture the variability and to explain why queues form. This is because the deterministic model assumes that it always takes exactly the same amount of time for a cashier to serve a customer, and that customers arrive at constant intervals. Under these assumptions, it is easy to determine the number of cashiers needed to avoid queues. However, the fact that everyone has waited at a cashier’s station indicates that this is too simplistic a way to describe the complex reality. Variability makes it difficult to match demand and capacity in such a way that queues are avoided.

The focus of this chapter is the incorporation of variability into models of business processes. These types of models, known in the literature as stochastic models, enable us to evaluate how the process design decisions affect waiting times, queue lengths, and service levels. An important difference compared to the deterministic models that have been considered so far is that in the stochastic models the waiting time is no longer an input parameter; it is the result of the specified process time and the demand pattern. Because eliminating non-value-adding waiting time is an important objective when designing a new business process (see, for example, Section 1.1), the usefulness of stochastic models in process design is obvious.

The modeling approaches that will be explored in this chapter belong to two distinct groups: analytical queuing models stemming from the field of mathematical queuing theory, and simulation models, which are in their nature experimental and in this case computer based. Chapters 7 through 10 also address the simulation modeling approach. The reason for considering these two approaches is that they offer different advantages to the process designer. The analytical queuing models express important performance characteristics in mathematical formulae and are convenient to use when the necessary conditions are in place. The drawback is that these conditions can be restrictive with regard to the process structure and the representation of variability in the models. The simulation models are more flexible, but they usually take more time to set up and run, in addition to requiring a computer with the appropriate software. Because the simulation models are experimental, they also require statistical analysis of input and output data before obtaining the appropriate information on performance characteristics sought by the process designer. This chapter investigates the technical aspects of these two modeling approaches. However, before getting into the modeling details, it is important to understand why variability in process parameters is such an important issue from an operational and an economic perspective.

From an operational perspective, the main problem with variability in processing times, demands, and capacity is that it leads to an unbalanced use of resources over time, causing the formation of waiting lines. This will be referred to loosely as a capacity-planning problem. The core of this problem is that queues (or waiting lines) can arise at any given time when the demand for service exceeds the resource capacity for providing the service. This means that even if the average demand falls well below the average capacity, high variability will lead to instances when the demand for service exceeds the capacity and a queue starts to form. This explains the formation of waiting lines in the grocery store example. On the other hand, in some instances the capacity to provide service will greatly exceed the demand for service. This causes the queue to decrease. Moreover, if no queue exists at all, the resource providing the service will be idle. At a grocery store, even if a cashier has nothing to do for long periods during the day, the arrival of a customer with a full cart could initiate a queue.
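The effect described above can be illustrated with a small experiment. The following is a minimal sketch, not a model from this chapter: it assumes a single first-come-first-served server, and the function names, the exponential distributions, and the parameter values (average interarrival time 1.0, average service time 0.8) are illustrative assumptions. It computes each customer's wait from the previous customer's wait, once with constant times and once with random times that have the same averages.

```python
import random

def average_wait(interarrival_times, service_times):
    """Average time in queue at a single first-come-first-served server."""
    wait = 0.0          # the first customer finds the server idle
    total_wait = 0.0
    for i in range(len(service_times)):
        if i > 0:
            # Work left over from the previous customer, minus the gap
            # until this arrival; a wait can never be negative.
            wait = max(0.0, wait + service_times[i - 1] - interarrival_times[i])
        total_wait += wait
    return total_wait / len(service_times)

def compare(n=100_000, mean_interarrival=1.0, mean_service=0.8, seed=1):
    """Return (deterministic, stochastic) average waits with equal averages."""
    rng = random.Random(seed)
    deterministic = average_wait([mean_interarrival] * n, [mean_service] * n)
    stochastic = average_wait(
        [rng.expovariate(1.0 / mean_interarrival) for _ in range(n)],
        [rng.expovariate(1.0 / mean_service) for _ in range(n)],
    )
    return deterministic, stochastic

if __name__ == "__main__":
    det, sto = compare()
    print(f"average wait, constant times: {det:.2f}")
    print(f"average wait, variable times: {sto:.2f}")
```

With constant times, every customer is finished before the next one arrives, so the average wait is zero. With variable times, the same average demand and capacity produce a clearly positive average wait.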

At this point, it is important to recognize that queues concern individuals as well as documents, products, or intangible jobs such as blocks of information sent over the Internet. However, the issues with waiting in line are particularly important in service industries where the queue consists of people waiting for service. The reason is that, as opposed to objects or pieces of information, people usually find waiting frustrating, and they often give an immediate response regarding their dissatisfaction.

From an economic perspective, the capacity-planning problem caused by high variability in demand and process times comes down to balancing the cost of having too much capacity on certain occasions against the cost associated with long waiting lines at other times. The cost of delays and waiting can take on many different forms including the following.

- The social costs of not providing fast enough care at a hospital.

- The cost of lost customers who go elsewhere because of inadequate service.

- The cost of discounts because of late deliveries.

- The cost of goodwill loss and bad reputation affecting future sales.

- The cost of idle employees who have to wait for some task to be completed before they can continue their work.

Ultimately, these costs will affect the organization, even though the impact sometimes might be hard to quantify. The cost of excessive capacity is usually easier to identify. Primarily, it consists of the fixed and variable costs of additional and unused capacity, including increased staffing levels. Returning to the grocery store example, the store must balance the cost of hiring additional cashiers and installing more checkout stations against the cost of lost customers and lower sales revenues due to long queues and waiting times at the checkout stations.

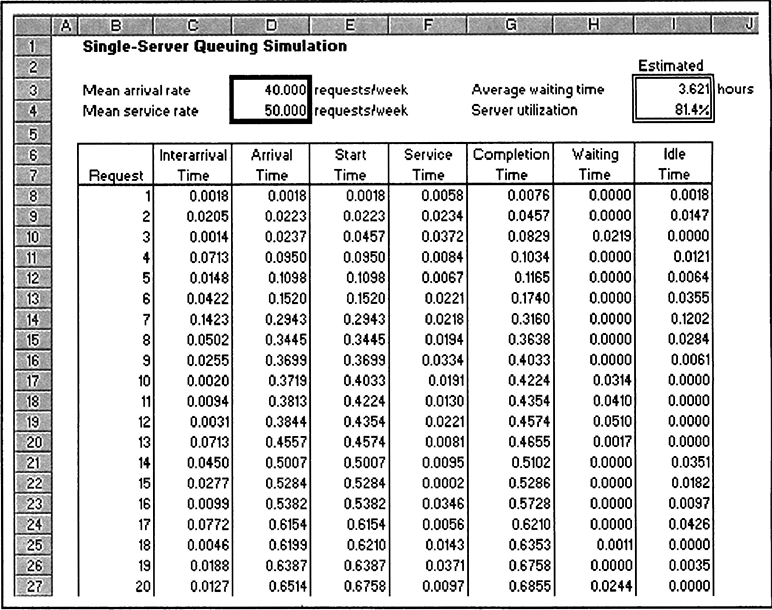

Figure 6.1 depicts the economic tradeoff associated with the capacity-planning problem. The x-axis represents the service capacity expressed as the number of jobs per unit of time the system can complete on average. The y-axis represents the total costs associated with waiting and providing service. The waiting cost reflects the cost of having too little service capacity, and the service cost reflects the cost of acquiring and maintaining a certain service capacity.

It follows that in order to arrive at design decisions that will minimize the total costs, the first step is to quantify the delay associated with a certain capacity decision. Second, this delay needs to be translated into monetary terms to compare this to the cost of providing a certain service capacity. This chapter investigates models that deal with both of these issues.
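The two steps can be sketched in a few lines of code. This is an illustration under strong assumptions that the text has not yet introduced: a single server with exponentially distributed interarrival and service times, for which the average number of jobs in the system is the arrival rate divided by the difference between the service rate and the arrival rate. The cost parameters and function names are hypothetical.

```python
def total_cost(capacity, arrival_rate, cost_per_capacity, cost_per_waiting_job):
    """Service cost plus waiting cost per time unit for a given capacity."""
    if capacity <= arrival_rate:
        return float("inf")  # the queue would grow without bound
    # Step 1: quantify the delay (assumed single-server exponential model).
    jobs_in_system = arrival_rate / (capacity - arrival_rate)
    # Step 2: translate the delay into money and add the cost of capacity.
    return cost_per_capacity * capacity + cost_per_waiting_job * jobs_in_system

def cheapest_capacity(candidates, arrival_rate, cost_per_capacity,
                      cost_per_waiting_job):
    """Pick the candidate capacity with the lowest total cost."""
    return min(
        candidates,
        key=lambda capacity: total_cost(
            capacity, arrival_rate, cost_per_capacity, cost_per_waiting_job
        ),
    )

if __name__ == "__main__":
    options = [10 + 0.5 * k for k in range(1, 41)]  # capacities 10.5 to 30.0
    best = cheapest_capacity(options, arrival_rate=10,
                             cost_per_capacity=1, cost_per_waiting_job=9)
    print(best)
```

Too little capacity makes the waiting term dominate and too much makes the service term dominate, which is the U-shaped total cost curve of Figure 6.1.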

Section 6.1 specifies a conceptual model of the basic queuing process and defines what a queuing system is. This section also discusses strategies that are often used in service industries for mitigating the negative effects of making people wait in line. The conceptual model for the basic queuing process serves as a basis for the analytical queuing models investigated in Section 6.2 and for the general understanding of the simulation models in Section 6.3. Finally, a summary and some concluding remarks are provided in Section 6.4.

FIGURE 6.1 Economic Tradeoff Between Service Capacity and Waiting Times

6.1 Queuing Systems, the Basic Queuing Process, and Queuing Strategies

Queuing processes, queuing systems, and queuing models might appear to be abstract concepts with limited practical applicability, but nothing could be further from the truth. Wherever people go in their daily lives, they encounter simple queuing systems and queuing processes (e.g., at the bank, in the grocery store, or when calling for a taxi, going to the doctor, eating at a restaurant, buying tickets to the theater, or taking the bus). In simple terms, an elementary queuing system consists of a service mechanism with servers providing service and one or more queues of customers or jobs waiting to receive service. The queuing process, on the other hand, describes the operations of the queuing system; that is, how customers arrive at the queuing system and how they proceed through it. The queuing system is an integral part of the queuing process. Noting the subtle distinction between a queuing process and a queuing system will prove useful when discussing detailed modeling issues. However, in practical situations and in higher-level discussions, these two terms are often interchangeable. Going back to the definition of a business process as a network of connected activities and buffers (see Section 1.1), a business process can be interpreted as a network of queuing processes or elementary queuing systems.

To expand the frame of reference regarding queuing systems and to make this concept more concrete, one can look at a number of examples of real-world queuing systems that can be broadly classified as commercial, transportation, business-internal, and social service systems.

Many of the queuing systems we encounter daily are commercial service systems, whereby a commercial organization serves external customers. Often the service is a personal interaction between the customer and an employee, but in some cases, the service provider might be a machine. Typically, customers go to a fixed location to seek service. Examples include a dentist’s office (where the server is the dentist), banks, checkout stations at supermarkets or other stores, gas stations, and automated teller machines. However, the server also can travel to the customer. Examples include a plumber or cable company technician visiting the customer’s home to perform a service.

The class of transportation service systems represents situations where vehicles are either customers or servers. Examples where vehicles are customers include cars and trucks waiting at tollbooths, railroad crossings, or traffic lights; trucks or ships waiting to be loaded; and airplanes waiting to access a runway. Situations where vehicles constitute the servers in a queuing system include taxicabs, fire trucks, elevators, buses, trains, and airplanes transporting customers between locations.

In business-internal service systems, the customers receiving service are internal to the organization providing the service. This definition includes inspection stations (the server), materials handling systems like conveyor belts, maintenance systems where a technician (the server) is dispatched to repair a broken machine (the customer), and internal service departments like computer support (the server) servicing requests from employees (the customers). It also includes situations where machines in a production facility or a supercomputer in a computing center process jobs.

Finally, many social service systems are queuing systems. In the judicial process, for example, the cases awaiting trial are the customers and the judge and jury are the servers. Other examples include an emergency room at a hospital, the family doctor making house calls, and waiting lists for organ transplants or student dorm rooms.

This broad classification of queuing systems is not exhaustive and it does have some overlap. Its purpose is just to give a flavor of the wide variety of queuing systems facing the process designer.

A conceptual model of the basic queuing process describes the operation of the queuing systems just mentioned and further explains the subtle distinction between a queuing process and a queuing system. It also will provide the basis for exploring the mathematical queuing models in Section 6.2 and help conceptualize the simulation models in Section 6.3. Following the discussion of the basic queuing process, Section 6.1.2 looks at some pragmatic strategies used to mitigate the negative economic impact of long waiting lines.

6.1.1 THE BASIC QUEUING PROCESS

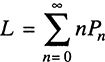

The basic queuing process describes how customers arrive at and proceed through the queuing system. This means that the basic queuing process describes the operations of a queuing system. The following major elements define a basic queuing process: the calling population, the arrival process, the queue configuration, the queue discipline, and the service mechanism. The elements of the basic queuing process are illustrated in Figure 6.2, which also clarifies the distinction between the basic queuing process and the queuing system.

Customers or jobs from a calling population arrive at one or several queues (buffers) with a certain configuration. The arrival process specifies the rate at which customers arrive and the particular arrival pattern. Service is provided immediately when the designated queue is empty and a server (resource) is available. Otherwise, the customer or job remains in the queue waiting for service.

In service systems in which jobs are actual customers (i.e., people), some may choose not to join the queue when confronted with a potentially long wait. This behavior is referred to as balking. Other customers may consider, after joining the queue, that the wait is intolerable and renege (i.e., they leave the queue before being served). From a process performance perspective, an important distinction between balking and reneging is that the reneging customers take up space in the queue and the balking customers do not. For example, in a call center, the reneging customers will accrue connection costs as long as they remain in the queue.

FIGURE 6.2 The Basic Queuing Process and the Queuing System

When servers (resources) become available, a job is selected from the queue and the corresponding service (activity) is performed. The policy governing the selection of a job from the queue is known as the queue discipline. Finally, the service mechanism can be viewed as a network of service stations where activities are performed. These stations may need one or more resources to perform the activities, and the availability of these resources will result in queues of different lengths.

This description of a queuing process is closely related to the definition of a general business process given in Section 1.1. In fact, as previously mentioned, a general business process can be interpreted as a network of basic queuing processes (or queuing systems).

Due to the importance of the basic queuing process, the remainder of this section is devoted to a further examination of its components.

The Calling Population

The calling population can be characterized as homogeneous (i.e., consisting of only one type of job), or heterogeneous, consisting of different types of jobs. In fact, most queuing processes will have heterogeneous calling populations. For example, the admissions process at a state university has in-state and out-of-state applicants. Because these applications are treated differently, the calling population is not homogeneous. In this case, the calling population is divided into two subpopulations.

The calling population also can be characterized as infinite or finite. In reality, few calling populations are truly infinite. However, in many situations, the calling population can be considered infinite from a modeling perspective. The criterion is that if the population is so large that the arrival process is unaffected by how many customers or jobs are currently in the queuing system (in the queue or being served), the calling population is considered infinite. An example of this is a medium-sized branch office of a bank. All potential customers who might want to visit the bank define the calling population. It is unlikely that the number of customers in the bank would affect the current rate of arrivals. Consequently, the calling population could be considered infinite from a modeling perspective.

In other situations, the population cannot be considered infinite. For example, the mechanics at a motorized army unit are responsible for maintenance and repair of the unit’s vehicles. Because the number of vehicles is limited, a large number of broken down vehicles in need of repair implies that fewer functioning vehicles remain that can break down. The arrival process—vehicles breaking down and requiring the mechanic’s attention—is most likely dependent on the number of vehicles currently awaiting repair (i.e., in the queuing system). In this case, the model must capture the effect of a finite calling population.
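One simple way to express this dependence is to let the arrival rate shrink with the number of jobs already in the system. The sketch below assumes that each functioning vehicle breaks down independently at the same rate; that assumption and the names used are illustrative and are not stated in the text.

```python
def arrival_rate(jobs_in_system, population_size, rate_per_job):
    """Arrival rate to the queuing system for a finite calling population.

    Only the jobs that are not already in the system can still arrive, so
    the rate falls as the system fills up (assumed independent and
    identical breakdown behavior for every vehicle).
    """
    if not 0 <= jobs_in_system <= population_size:
        raise ValueError("jobs_in_system must be between 0 and population_size")
    return (population_size - jobs_in_system) * rate_per_job

if __name__ == "__main__":
    # Hypothetical unit with 10 vehicles, each failing 0.1 times per day.
    for broken in (0, 5, 10):
        print(broken, arrival_rate(broken, population_size=10, rate_per_job=0.1))
```

For an infinite calling population the corresponding rate would be a constant that does not depend on the number of jobs in the system.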

The Arrival Process

The arrival process refers to the temporal and spatial distribution of the demand facing the queuing system. If the queuing process is part of a more general business process, there may be several entry points for which the arrival processes must be studied. The demand for resources in a queuing process that is not directly connected to the entry points to the overall business process is contingent on how the jobs are routed through this process. That is, the path that each type of job follows determines which activities are performed most often and therefore which resources are most needed.

The arrival process is characterized by the distribution of interarrival times, meaning the probability distribution of the times between consecutive arrivals. To determine these distributions in practice, data need to be collected from the real-world system and then statistical methods can be used to analyze the data and estimate the distributions. The approach can be characterized in terms of the following three-step procedure.

- Collect data by recording the actual arrival times into the process.

- Calculate the interarrival times for each job type.

- Perform statistical analysis of the interarrival times to fit a probability distribution.

Specific tools to perform these steps are described in Chapter 9.
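As a rough illustration of the three steps, the sketch below takes a list of recorded arrival times for one job type, computes the interarrival times, and fits an exponential distribution by setting the rate to one over the sample mean (the maximum-likelihood estimate for that distribution). Choosing the exponential distribution is an assumption made here for simplicity, and the function names and sample data are hypothetical.

```python
import statistics

def interarrival_times(arrival_times):
    """Step 2: gaps between consecutive arrivals (input need not be sorted)."""
    ordered = sorted(arrival_times)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]

def fit_exponential(gaps):
    """Step 3: estimated arrival rate under an assumed exponential model."""
    return 1.0 / statistics.mean(gaps)

def coefficient_of_variation(gaps):
    """Sample standard deviation over mean; equals 1 for an exponential."""
    return statistics.stdev(gaps) / statistics.mean(gaps)

if __name__ == "__main__":
    # Step 1: recorded arrival times in minutes (made-up data).
    recorded = [0.0, 2.0, 3.0, 7.0, 7.5, 11.0]
    gaps = interarrival_times(recorded)
    print("interarrival times:", gaps)
    print("estimated arrivals per minute:", fit_exponential(gaps))
    print("coefficient of variation:", coefficient_of_variation(gaps))
```

A coefficient of variation far from 1 is a quick warning that the exponential assumption may not fit the data; a proper analysis would rely on the statistical tools referred to above.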

The Queue Configuration

The queue configuration refers to the number of queues, their location, their spatial requirements, and their effect on customer behavior. Figure 6.3 shows two commonly used queue configurations: the single-line versus the multiple-line configuration.

For the multiple-line alternative (Figure 6.3a), the arriving job must decide which queue to join. Sometimes the customer, like in the case of a supermarket, makes the decision. However, in other cases this decision is made as a matter of policy or operational rule. For example, requests for technical support in a call center may be routed to the agent with the fewest requests at the time of arrival. In the single-line configuration (Figure 6.3b), all the jobs join the same line. The next job to be processed is selected from the single line.

Some advantages are associated with each of these configurations. These advantages are particularly relevant in settings where the jobs flowing through the process are people. The multiple-line configuration, for instance, has the following advantages.

FIGURE 6.3 Alternative Queue Configurations

- The service provided can be differentiated. The use of express lanes in supermarkets is an example. Shoppers who require a small amount of service can be isolated and processed quickly, thereby avoiding long waits for little service.

- Labor specialization is possible. For example, drive-in banks assign the more experienced tellers to the commercial lane.

- The customer has more flexibility. For example, the customer in a grocery store with several checkout stations has the option of selecting a particular cashier of preference.

- Balking behavior may be deterred. When arriving customers see a long, single queue snaked in front of a service, they often interpret this as evidence of a long wait and decide not to join the line.

The following are advantages of the single-line configuration.

- The arrangement guarantees “fairness” by ensuring that a first-come-first-served rule is applied to all arrivals.

- Because only a single queue is available, no anxiety is associated with waiting to see if one selected the fastest line.

- With only one entrance at the rear of the queue, the problem of cutting in is resolved. Often the single line is roped into a snaking pattern, which makes it physically more difficult for customers to leave the queue, thereby discouraging reneging. Reneging occurs when a customer in line leaves the queue before being served, typically because of frustration over the long wait.

- Privacy is enhanced, because the transaction is conducted with no one standing immediately behind the person being served.

- This arrangement is more efficient in terms of reducing the average time that customers spend waiting in line.

- Jockeying is avoided. Jockeying refers to the behavior of switching lines. This occurs when a customer attempts to reduce his or her waiting time by switching lines as the lines become shorter.
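The efficiency advantage of the single line can be explored with a small simulation. The sketch below is an illustration under assumptions not made in the text: exponential interarrival and service times, identical servers, and, in the multiple-line case, customers who pick a line at random and never jockey. Real customers usually choose more cleverly, so the gap shown here is likely an upper bound. All names and parameter values are hypothetical.

```python
import random

def average_wait(n_customers, n_servers, arrival_rate, service_rate,
                 single_line, seed=0):
    """Average time in queue for a single-line or multiple-line layout."""
    rng = random.Random(seed)
    free_at = [0.0] * n_servers  # when each server clears its current backlog
    clock = 0.0
    total_wait = 0.0
    for _ in range(n_customers):
        clock += rng.expovariate(arrival_rate)   # next arrival time
        service = rng.expovariate(service_rate)  # this customer's service time
        chosen_line = rng.randrange(n_servers)   # drawn in both layouts so the
                                                 # random numbers stay aligned
        if single_line:
            # Head of the shared line goes to whichever server frees up first.
            server = min(range(n_servers), key=free_at.__getitem__)
        else:
            # Customer is committed to the randomly chosen line.
            server = chosen_line
        start = max(clock, free_at[server])
        total_wait += start - clock
        free_at[server] = start + service
    return total_wait / n_customers

if __name__ == "__main__":
    for layout in (True, False):
        label = "single line" if layout else "multiple lines"
        print(label, round(average_wait(50_000, 2, 1.6, 1.0, layout), 2))
```

In the multiple-line layout a server can sit idle while customers wait in another line, which is what drives the longer average wait.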

The Queue Discipline

The queue discipline is the policy used to select the next job to be served. The most common queue discipline is first-come-first-served (FCFS), also known as first-in-first-out (FIFO). This discipline, however, is not the only possible policy for selecting jobs from a queue. In some cases, it is possible to estimate the processing time of a job in advance. This estimate can then be used to implement a policy whereby the fastest jobs are processed first. This queue discipline is known as the shortest processing time first (SPT) rule, as discussed in Chapter 4. Other well-known disciplines are last-in-first-out (LIFO) and longest processing time first (LPT).
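The four disciplines just mentioned differ only in the attribute used to rank the waiting jobs. The following sketch makes that explicit; the `Job` class, the rule table, and the sample jobs are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    arrival_time: float
    processing_time: float  # estimated in advance for SPT and LPT

# Each rule maps a job to a sort key; the job with the smallest key is next.
RULES = {
    "FCFS": lambda job: job.arrival_time,      # earliest arrival first
    "LIFO": lambda job: -job.arrival_time,     # latest arrival first
    "SPT": lambda job: job.processing_time,    # shortest job first
    "LPT": lambda job: -job.processing_time,   # longest job first
}

def select_next(queue, discipline):
    """Remove and return the next job to serve under the given discipline."""
    job = min(queue, key=RULES[discipline])
    queue.remove(job)
    return job

if __name__ == "__main__":
    waiting = [Job("a", 0.0, 5.0), Job("b", 1.0, 2.0), Job("c", 2.0, 9.0)]
    for rule in RULES:
        print(rule, select_next(list(waiting), rule).name)
```

Ties are broken here by position in the list, which is an arbitrary choice; a real system would need an explicit tie-breaking rule.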

In addition to these rules, queue disciplines based on priority are sometimes implemented. In a medical setting, for example, the procedure known as triage is used to give priority to those patients who would benefit the most from immediate treatment. An important distinction to be made in terms of priority disciplines is that between nonpreemptive and preemptive priorities. Under a nonpreemptive priority discipline, a customer or job that is currently being served is never sent back to the queue in order to make room for an arriving customer or job with higher priority. In a preemptive discipline, on the other hand, a job that is being served will be thrown back into the queue immediately to make room for an arriving job with higher priority.

The preemptive strategy makes sense, for example, in an emergency room where the treatment of a sprained ankle is interrupted when an ambulance brings in a cardiac arrest patient and the physician must choose between the two. The drawback with the preemptive discipline is that customers with low priorities can experience extremely long waiting times.

The nonpreemptive strategy makes sense in situations where interrupting the service before it is finished means that all the work put in is wasted and the service needs to be started from scratch when the job eventually rises in importance again. For example, if a limousine service is driving people to and from an airport, it makes little sense to turn the limousine around in order to pick up a VIP client at the airport just before it reaches the destination of its current passenger.

In many cases in which the customers are individuals, a preemptive strategy can cause severe frustration for low-priority customers. A nonpreemptive approach is often more easily accepted by all parties. Note that queuing disciplines in queuing theory correspond to dispatching rules in sequencing and scheduling (see Section 4.2.6).
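The difference between the two priority disciplines comes down to what happens at the moment a higher-priority job arrives while the server is busy. The sketch below shows only that decision. It represents a job as a hypothetical `(priority, name)` tuple in which a lower number means higher priority, and it keeps the waiting jobs in a heap; both are illustrative choices.

```python
import heapq

def on_arrival(in_service, arriving, queue, preemptive):
    """Return the job in service after `arriving` shows up.

    Jobs are (priority, name) tuples; a lower number means higher priority.
    `queue` is a heap of waiting jobs and is updated in place.
    """
    if in_service is None:
        return arriving                      # idle server: start immediately
    if preemptive and arriving[0] < in_service[0]:
        heapq.heappush(queue, in_service)    # interrupted job rejoins the queue
        return arriving
    heapq.heappush(queue, arriving)          # otherwise the arrival waits
    return in_service

if __name__ == "__main__":
    for preemptive in (True, False):
        queue = []
        serving = on_arrival((2, "sprained ankle"), (1, "cardiac arrest"),
                             queue, preemptive)
        print(preemptive, serving, queue)
```

Under the nonpreemptive rule the high-priority arrival still moves to the front of the queue, but it must wait until the current service is completed.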

The Service Mechanism

The service mechanism consists of one or more service facilities, each containing one or more parallel service channels referred to as servers. A job or a customer enters a service facility, where one server provides complete service. In cases where multiple service facilities are set up in series, the job or customer might be served by a sequence of servers. The queuing model must specify the exact number of service facilities, the number of servers in each facility, and possibly the sequence of service facilities a job must pass through before leaving the queuing system. The time spent in a service facility, meaning the time at one particular server or service station, is referred to as the service time. The service process refers to the probability distribution of service times associated with a certain server (possibly different across different types of customers or jobs). Often it is assumed that the parallel servers in a given service facility have the same service time distribution. For example, in modeling the parallel tellers in a bank, it is usually reasonable to assume that the service process is the same for all tellers. Statistically, service times can be estimated in much the same way as interarrival times (see Chapter 9).

The design of the service mechanism and queues, together with the choice of queuing disciplines, results in a certain service capacity associated with the queuing system. For example, the service mechanism may consist of one or more service facilities with one or more servers. How many is a staffing decision that directly affects the ability of the system to meet the demand for service. Adding capacity to the process typically results in decreasing the probability of long queues and, therefore, decreases the average waiting time of jobs in the queue. Often, the arrival process is considered outside the decision maker’s control. However, sometimes implementing so-called demand management strategies is an option. This can involve working with promotional campaigns, such as “everyday low prices” at Wal-Mart, to encourage more stable demand patterns over time. These types of activities also go by the name of revenue management.

6.1.2 STRATEGIES FOR MITIGATING THE EFFECTS OF LONG QUEUES

In some situations, it might be unavoidable to have long waiting lines occasionally. Consider, for example, a ski resort. Most people arrive in the morning to buy tickets so they can spend the whole day on the slopes. As a result, lines will form at the ticket offices early in the day, no matter how many ticket offices the resort opens.

A relevant question is how the process designer or process owner might mitigate the negative economic effects of queues or waiting lines that have formed. This is of particular interest in service systems that want to avoid balking and reneging. Commonly used strategies are based on the ideas of concealing the queue, using the customer as a resource, making the wait comfortable, distracting the customer’s attention, explaining the reasons for the wait, providing pessimistic estimates of remaining waiting time, and being fair and open about the queuing discipline.

An often-used strategy for minimizing or avoiding lost sales due to long lines is to conceal the queue from arriving customers (Fitzsimmons and Fitzsimmons, 1998). Restaurants achieve this by diverting people to the bar, which in turn has the potential to increase revenues. Amusement parks such as Disneyland require people to pay for their tickets outside the park, where they are unable to observe the waiting lines inside. Casinos “snake” the waiting line for nightclub acts through the slot machine area, in part to hide its true length and in part to foster impulsive gambling.

In some cases, a fruitful approach is to consider the customer as a resource with the potential to be part of the service delivery. For example, a patient might complete a medical history record while waiting for a doctor, thereby saving the physician valuable time. This strategy increases the capacity of the process while minimizing the psychological effects of waiting.

Regardless of other strategies, making the customer’s wait comfortable is necessary to avoid reneging and loss of future sales due to displeased and annoyed customers. This can be done in numerous ways, such as by providing complimentary drinks at a restaurant or by having a nicely decorated waiting room with interesting reading material in a doctor’s office.

Closely related to this idea is distracting the waiting customers’ attention, thereby making the wait seem shorter than it is. Airport terminals, for instance, have added public TV monitors, food courts, and shops.

If an unexpected delay occurs, it is important to inform the customer about this as soon as possible, but also to explain the reason for the extra wait. The passengers seated on a jumbo jet will be more understanding if they know that the reason for the delay is faulty radio equipment. Who wants to fly on a plane without a radio? However, if no explanation is given, attitudes tend to be much less forgiving because the customers feel neglected and that they have not been treated with the respect they deserve. Keeping the customers informed is also a key concept in all industries that deliver goods. Consider, for example, the tracking systems that FedEx and other shipping companies offer their customers.

Providing pessimistic estimates of the remaining waiting time is often also a good strategy. If the wait is shorter than predicted, the customer might even leave happy. On the other hand, if the wait is longer than predicted, customers tend to lose trust in the information and in the service.

Finally, it is important to be fair and open about the queuing discipline used. Ambiguous VIP treatment of some customers tends to agitate those left out; for example, think about waiting lines at popular restaurants or nightclubs.

6.2 Analytical Queuing Models

This section will examine how analytical queuing models can be used to describe and analyze the basic queuing process and the performance characteristics of the corresponding queuing system. The mathematical study of queues is referred to as queuing theory.

The distributions of interarrival and service times largely determine the operational characteristics of queuing systems. After all, considering these distributions instead of just their averages is what distinguishes the stochastic queuing models from the deterministic models in Chapters 4 and 5. In real-world queuing systems, these distributions can take on virtually any form, but they are seldom thought of until the data is analyzed. However, when modeling the queuing system mathematically, one must be specific about the type of distributions being used. When setting up the model, it is important to use distributions that are realistic enough to capture the system’s behavior. At the same time, they must be simple enough to produce a tractable mathematical model. Based on these two criteria, the exponential distribution plays an important role in queuing theory. In fact, most results in elementary queuing theory assume exponentially distributed interarrival and service times. Section 6.2.1 discusses the importance and relevance of the exponential distribution, as well as its relation to the Poisson process.

The wide variety of specialized queuing models calls for a way to classify these according to their distinct features. A popular system for classifying queuing models with a single service facility with parallel and identical servers, a single queue and a FIFO queuing discipline, uses a notational framework with the structure A1/A2/A3/A4/A5. Attributes A1 and A2 represent the probability distribution of the interarrival and service times, respectively. Attribute A3 denotes the number of parallel servers. Attribute A4 indicates the maximum number of jobs allowed in the system at the same time. If there is no limitation, A4 = ∞. Finally, attribute A5 represents the size of the calling population. If the calling population is infinite, then A5 = ∞. Examples of symbols used to represent probability distributions for interarrival and service times — that is, attributes A1 and A2 — include the following.

M = Markovian, meaning the interarrival and service times follow exponential distributions

D = Deterministic, meaning the interarrival and service times are deterministic and constant

G = General, meaning the interarrival and service times may follow any distribution

Consequently, M/M/c refers to a queuing model with c parallel servers for which the interarrival times and the service times are both exponentially distributed. Omitting attributes A4 and A5 means that the queue length is unrestricted and the calling population is infinite. In the same fashion, M/M/c/K refers to a model with a limitation of at most K customers or jobs in the system at any given time. M/M/c/∞/N, on the other hand, indicates that the calling population is finite and consists of N jobs or customers.
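The classification scheme can be made concrete with a small sketch that splits a label such as M/M/c/K into its five attributes and fills in the defaults for omitted attributes. The names `QueueModel` and `parse_queue_notation` are hypothetical, and the string "inf" is an assumed stand-in for the symbol ∞.

```python
from typing import NamedTuple

# Symbols for attributes A1 and A2, as listed in the text.
DISTRIBUTIONS = {"M": "exponential", "D": "deterministic", "G": "general"}


class QueueModel(NamedTuple):
    interarrival: str  # A1: distribution of interarrival times
    service: str       # A2: distribution of service times
    servers: str       # A3: number of parallel servers (may be symbolic, e.g. "c")
    capacity: str      # A4: maximum number of jobs in the system
    population: str    # A5: size of the calling population


def parse_queue_notation(label: str) -> QueueModel:
    """Split a label of the form A1/A2/A3[/A4[/A5]] into its attributes."""
    parts = label.split("/")
    if not 3 <= len(parts) <= 5:
        raise ValueError(f"expected 3 to 5 attributes, got {len(parts)}")
    for symbol in parts[:2]:
        if symbol not in DISTRIBUTIONS:
            raise ValueError(f"unknown distribution symbol: {symbol!r}")
    # Omitted A4 and A5 mean unrestricted queue length and infinite population.
    parts += ["inf"] * (5 - len(parts))
    return QueueModel(DISTRIBUTIONS[parts[0]], DISTRIBUTIONS[parts[1]], *parts[2:])


if __name__ == "__main__":
    for label in ("M/M/c", "M/M/c/K", "M/M/c/inf/N"):
        print(label, "->", parse_queue_notation(label))
```

The attributes are kept as text because the labels in this chapter are often symbolic (c, K, N) rather than numeric.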

Exponentially distributed interarrival and service times are assumed for all the queuing models in this chapter. In addition, all the models will have a single service facility with one or more parallel servers, a single queue, and a FIFO queuing discipline. It is important to emphasize that the analysis can be extended to preemptive and non-preemptive priority disciplines; see, for example, Hillier and Lieberman (2001). Moreover, results exist for many other queuing models that do not assume exponentially distributed service and interarrival times. The analysis of these models tends to be much more complex, however, and is beyond the scope of this book.

This exploration of analytical queuing models is organized as follows. Section 6.2.1 discusses the exponential distribution, its relevance, important properties, and its connection to the Poisson process. Section 6.2.2 introduces some notation and terminology used in Sections 6.2.3 through 6.2.8. Important concepts include steady-state analysis and Little’s law. Based on the fundamental properties of the exponential distribution and the basic notation introduced in Section 6.2.2, Section 6.2.3 focuses on general birth-and-death processes and their wide applicability in modeling queuing processes. Although a powerful approach, the method for analyzing general birth-and-death processes can be tedious for large models. Therefore, Sections 6.2.4 through 6.2.7 explore some important specialized birth-and-death models with standardized expressions for determining important characteristics of these queuing systems. Finally, Section 6.2.8 illustrates the usefulness of analytical queuing models for making process design-related decisions, particularly by translating important operational characteristics into monetary terms.

6.2.1 THE EXPONENTIAL DISTRIBUTION AND ITS ROLE IN QUEUING THEORY

The exponential distribution has a central role in queuing theory for two reasons. First, empirical studies show that many real-world queuing systems have arrival and service processes that follow exponential distributions. Second, the exponential distribution has some mathematical properties that make it relatively easy to manipulate.

In this section, the exponential distribution is defined and some of its important properties and their implications when used in queuing modeling are discussed. The relationship between the exponential distribution, the Poisson distribution, and the Poisson process is also investigated. This discussion assumes some prior knowledge of basic statistical concepts such as random variables, probability density functions, cumulative distribution functions, mean, variance, and standard deviation. Basic books on statistics contain material related to these topics, some of which are reviewed in Chapter 9.

To provide a formal definition of the exponential distribution, let T be a random (or stochastic) variable representing either interarrival times or service times in a queuing process. T is said to follow an exponential distribution with parameter α if its probability density function (or frequency function), fT(t), is:

$$f_T(t) = \begin{cases} \alpha e^{-\alpha t} & \text{for } t \ge 0 \\ 0 & \text{for } t < 0 \end{cases}$$

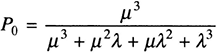

The shape of the density function fT(t) is depicted in Figure 6.4, where t represents the realized interarrival or service time.

FIGURE 6.4 The Probability Density Function for an Exponentially Distributed Random Variable T

The expression for the corresponding cumulative distribution function, FT(t), is then

$$F_T(t) = \int_0^t \alpha e^{-\alpha x}\,dx = 1 - e^{-\alpha t} \quad \text{for } t \ge 0$$

To better understand this expression, recall that per definition FT(t) = P(T ≤ t), where P(T ≤ t) is the probability that the random variable T is less than or equal to t. Consequently, P(T ≤ t) = 1 − e−αt. This also implies that the probability that T is greater than t, P(T > t), can be obtained as P(T > t) = 1 − P(T ≤ t) = e−αt. Finally, it can be concluded that the mean, variance, and standard deviation of the exponentially distributed random variable T, denoted E(T), Var(T), and σT are, respectively:

$$E(T) = \frac{1}{\alpha}, \qquad \mathrm{Var}(T) = \frac{1}{\alpha^2}, \qquad \sigma_T = \frac{1}{\alpha}$$

Note that the standard deviation is equal to the mean, which implies that the relative variability is high.

Next, the implications of assuming exponentially distributed interarrival and service times will be examined. Does it make sense to use this distribution? To provide an answer, it is necessary to look at a number of important properties characterizing the exponential distribution, some of which are intuitive and some of which have a more mathematical flavor.

First, from looking at the shape of the density function fT(t) in Figure 6.4, it can be concluded that it is decreasing in t. (Mathematically, it can be proved that it is strictly decreasing in t, which means that P(0 ≤ T ≤ δ) > P(t ≤ T ≤ t + δ) for all positive values of t and δ).

Property 1: The Density Function fT(t) Is Strictly Decreasing in t. The implication is that exponentially distributed interarrival and service times are more likely to take on small values. For example, there is always a 63.2 percent chance that the time (service or interarrival) is less than or equal to the mean value E(T) = 1/α. At the same time, the long, right-hand tail of the density function fT(t) indicates that large time values occasionally occur. This means that the exponential distribution also encompasses situations when the time between customer arrivals, or the time it takes to serve a customer, can be very long. Is this a reasonable way of describing interarrival and service times?
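The 63.2 percent figure follows directly from the cumulative distribution function: P(T ≤ 1/α) = 1 − e−1, regardless of the value of α. A minimal sketch that checks this numerically is given below; the function names and the sample values of α are illustrative assumptions.

```python
import math


def exp_cdf(t: float, alpha: float) -> float:
    """P(T <= t) for an exponentially distributed T with parameter alpha."""
    return 1.0 - math.exp(-alpha * t) if t >= 0 else 0.0


def prob_within_mean(alpha: float) -> float:
    """P(T <= E(T)), where E(T) = 1/alpha."""
    return exp_cdf(1.0 / alpha, alpha)


if __name__ == "__main__":
    # The probability is the same for every choice of alpha.
    for alpha in (0.2, 1.0, 5.0):
        print(f"alpha = {alpha}: P(T <= 1/alpha) = {prob_within_mean(alpha):.3f}")
```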

For interarrival times, plenty of empirical evidence indicates that the exponential distribution in many situations is a reasonable way of modeling arrival processes of external customers to a service system. For service times, the applicability might sometimes be more questionable, particularly in cases of standardized service operations where all the service times are centered around the mean.

Consider, for example, a machining process of a specific product in a manufacturing setting. Because the machine does exactly the same work on every product, the time spent in the machine is going to deviate only slightly from the mean. In these situations, the exponential distribution does not offer a close approximation of the actual service time distribution. Still, in other situations the specific work performed in servicing different customers is often similar but occasionally deviates dramatically. In these cases, the exponential distribution may be a reasonable choice. Examples of where this might be the case could be a bank teller or a checkout station in a supermarket. Most customers demand the same type of service—a simple deposit or withdrawal, or the scanning of fewer than 15 purchased items. Occasionally, however, a customer requests a service that takes a lot of time to complete. For example, in a bank, a customer’s transaction might involve contacting the head office for clearance; in a supermarket, a shopper might bring two full carts to the cashier.

To summarize, the exponential distribution is often a reasonable approximation for interarrival times and of service times in situations where the required work differs across customers. However, its use for describing processing (or service) times in situations with standardized operations performed on similar products or jobs might be questionable.

Property 2: Lack of Memory. Another interesting property of the exponential distribution, which is less intuitive, is its lack of memory. This means that the probability distribution of the remaining service time, or time until the next customer arrives, is always the same. It does not matter how long a time has passed since the service started or since the last customer arrived. Mathematically, this means that P(T > t + δ| T > δ) = P(T > t) for all positive values of t and δ.

MATHEMATICAL DERIVATION
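One way to verify the property, sketched here using only the definition of conditional probability and the expression P(T > t) = e−αt, is the following. Because the event T > t + δ implies the event T > δ, the joint probability reduces to P(T > t + δ):

$$\begin{aligned}
P(T > t + \delta \mid T > \delta) &= \frac{P(T > t + \delta \text{ and } T > \delta)}{P(T > \delta)} = \frac{P(T > t + \delta)}{P(T > \delta)} \\
&= \frac{e^{-\alpha (t + \delta)}}{e^{-\alpha \delta}} = e^{-\alpha t} = P(T > t)
\end{aligned}$$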

This property is important for the mathematical tractability of the queuing models that will be considered later. However, the main question here is whether this feature seems reasonable for modeling service and interarrival times.

In the context of interarrival times, the implication is that the next customer arrival is completely independent of when the last arrival occurred. In case of external customers arriving to the queuing system from a large calling population, this is a reasonable assumption in most cases. When the queuing system represents an internal subprocess of a larger business process so that the jobs arrive on some kind of schedule, this property is less appropriate. Consider, for example, trucks shipping goods between a central warehouse and a regional distribution center. The trucks leave the central warehouse at approximately 10 A.M. and 1 P.M. and arrive at the regional distribution center around noon and 3 P.M., respectively. In this case, it is not reasonable to assume that the time remaining until the next truck arrives is independent of when the last arrival occurred.

For service times, the lack of memory property implies that the time remaining until the service is completed is independent of the elapsed time since the service began. This may be a realistic assumption if the required service operations differ among customers (or jobs). However, if the service consists of the same collection of standardized operations across all jobs or customers, it is expected that the time elapsed since the service started will help one predict how much time remains before the service is completed. In these cases, the exponential distribution is not an appropriate choice.

The exponential distribution’s lack of memory implies another result. Assume as before that T is an exponentially distributed random variable with parameter α, and that it represents the time between two events; that is, the time between two customer arrivals or the duration of a service operation. It can be asserted that no matter how much time has elapsed since the last event, the probability that the next event will occur in the following time increment δ is approximately αδ. Mathematically, this means that P(T ≤ t + δ | T > t) ≈ αδ for all positive values of t and small positive values of δ, or more precisely

$$\lim_{\delta \to 0} \frac{P(T \le t + \delta \mid T > t)}{\delta} = \alpha$$

The result implies that α can be interpreted as the mean rate at which new events occur. This observation will be useful in the analysis of birth-and-death processes in Section 6.2.3.
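The interpretation of α as a rate can be checked numerically. The sketch below evaluates P(T ≤ t + δ | T > t) from the survival function e−αt and divides by δ; the function name and the sample values of α, t, and δ are illustrative assumptions.

```python
import math


def prob_event_in_next(delta: float, alpha: float, t: float = 0.0) -> float:
    """P(T <= t + delta | T > t) for an exponential T with parameter alpha."""
    survival_t = math.exp(-alpha * t)                  # P(T > t)
    survival_t_delta = math.exp(-alpha * (t + delta))  # P(T > t + delta)
    return (survival_t - survival_t_delta) / survival_t


if __name__ == "__main__":
    alpha = 2.0
    for delta in (0.1, 0.01, 0.001):
        # The ratio approaches alpha as delta shrinks, for any elapsed time t.
        for t in (0.0, 3.0):
            ratio = prob_event_in_next(delta, alpha, t) / delta
            print(f"delta = {delta}, t = {t}: ratio = {ratio:.4f}")
```

The printed ratios do not depend on t, which is the lack-of-memory property at work.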

Property 3: The Minimum of Independent Exponentially Distributed Random Variables Is Exponentially Distributed. A third property characterizing the exponential distribution is that the minimum of independent exponentially distributed random variables is exponentially distributed. To explain this in more detail, assume that T1, T2, … , Tn are n independent, exponentially distributed random variables with parameters α1, α2, … , αn, respectively. (Here independent means that the distribution of each of these variables is independent of the distributions of all the others.) Furthermore, let Tmin be the random variable representing the minimum of T1, T2, … , Tn; that is, Tmin = min{T1, T2, … , Tn}. It can be asserted that Tmin follows an exponential distribution with parameter

$$\alpha = \sum_{i=1}^{n} \alpha_i = \alpha_1 + \alpha_2 + \cdots + \alpha_n$$

MATHEMATICAL DERIVATION
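A short sketch of why this holds: the minimum exceeds t exactly when every one of the variables exceeds t, and independence allows the joint probability to be written as a product.

$$\begin{aligned}
P(T_{\min} > t) &= P(T_1 > t,\ T_2 > t,\ \ldots,\ T_n > t) = \prod_{i=1}^{n} P(T_i > t) \\
&= \prod_{i=1}^{n} e^{-\alpha_i t} = e^{-(\alpha_1 + \alpha_2 + \cdots + \alpha_n)\,t}
\end{aligned}$$

This is the probability P(T > t) of an exponentially distributed random variable with parameter α1 + α2 + … + αn.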

To interpret the result, assume that T1, T2, … , Tn represent the remaining service times for n jobs currently being served in n parallel servers operating independently of each other. Tmin then represents the time remaining until the first of these n jobs has been fully serviced and can leave the service facility. Because Tmin is exponentially distributed with parameter α, the implication is that currently, when all n servers are occupied, this multiple-server queuing system performs in the same way as a single-server system with service time Tmin. The result becomes even more transparent if one assumes that the parallel servers are identical. This means that the remaining service times T1, T2, … , Tn are exponential with parameter μ, meaning μ = α1 = α2 = … = αn, and consequently Tmin is exponential with parameter α = nμ. This result is useful in the analysis of multiple-server systems.

In the context of interarrival times, this property implies that with a calling population consisting of n customer types, all displaying exponentially distributed interarrival times but with different parameters α1, α2, … , αn, the time between two arrivals is Tmin. Consequently, the arrival process of undifferentiated customers has interarrival times that are exponentially distributed with parameter

$$\alpha = \sum_{i=1}^{n} \alpha_i$$

Consider, for example, a highway where connecting traffic arrives via an on-ramp. Assume that just before the on-ramp, the time between consecutive vehicles passing a given point on the highway is exponentially distributed with a parameter α1 = 0.5. Furthermore, assume the time between consecutive vehicles on the ramp is exponentially distributed with parameter α2 = 1. After the two traffic flows have merged (i.e., after the on-ramp), the time between consecutive vehicles passing a point on the highway is exponentially distributed with a parameter α = α1 + α2 = 1.5.
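The highway example can be explored with a small simulation. The sketch below assumes, by the lack-of-memory property, that after each passing vehicle the time until the next vehicle in each stream is again exponential, so each merged gap is the minimum of two fresh exponential draws. The function name, sample size, and seed are illustrative choices.

```python
import random
import statistics


def simulate_merged_gaps(alpha1: float, alpha2: float, n: int, seed: int = 0) -> list:
    """Draw n gaps between consecutive vehicles after two streams merge."""
    rng = random.Random(seed)
    # Each gap is the smaller of the remaining times in the two streams.
    return [min(rng.expovariate(alpha1), rng.expovariate(alpha2)) for _ in range(n)]


if __name__ == "__main__":
    gaps = simulate_merged_gaps(alpha1=0.5, alpha2=1.0, n=100_000)
    print("sample mean of merged gaps:", round(statistics.mean(gaps), 3))
    print("sample std of merged gaps: ", round(statistics.stdev(gaps), 3))
    print("theoretical mean 1/(alpha1 + alpha2):", round(1 / 1.5, 3))
```

A sample mean and standard deviation that are both close to 1/1.5 are consistent with an exponential distribution with parameter 1.5, although a simulation of this kind is an illustration rather than a proof.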

The Exponential Distribution, the Poisson Distribution, and the Poisson Process. Consider a simple queuing system, say a bank, and assume that the time T between consecutive customer arrivals is exponentially distributed with parameter λ. An important issue for the bank is to estimate how many customers might arrive during a certain time interval t. As it turns out, this number will be Poisson distributed with a mean value of λt. More precisely, let X(t) represent the number of customers that have arrived by time t (t ≥ 0). If one starts counting arrivals at time 0, then X(t) is Poisson distributed with mean λt; this is often denoted X(t) ∈ Po(λt). Moreover, the probability that exactly n customers have arrived by time t is:

$$P(X(t) = n) = \frac{(\lambda t)^n e^{-\lambda t}}{n!} \quad \text{for } n = 0, 1, 2, \ldots$$

Note that if n = 0, the probability that no customers have arrived by time t is P(X(t) = 0) = e−λt, which is equivalent to the probability that the arrival time of the first customer is greater than t, i.e., P(T > t) = e−λt.
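The Poisson probabilities are easy to evaluate directly. The following sketch computes P(X(t) = n) and confirms that the n = 0 case equals e−λt; the function name and the sample values of λ and t are illustrative assumptions.

```python
import math


def poisson_pmf(n: int, lam: float, t: float) -> float:
    """P(X(t) = n) when arrivals form a Poisson process with rate lam."""
    mean = lam * t
    return mean ** n * math.exp(-mean) / math.factorial(n)


if __name__ == "__main__":
    lam, t = 0.2, 10.0  # assumed: 0.2 arrivals per minute, observed for 10 minutes
    for n in range(5):
        print(f"P(X({t}) = {n}) = {poisson_pmf(n, lam, t):.4f}")
    # With n = 0 the expression reduces to P(T > t) = exp(-lam * t).
    print("exp(-lam * t) =", round(math.exp(-lam * t), 4))
```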

Every value of t has a corresponding random variable X(t) that represents the cumulative number of customers that have arrived at the bank by time t. Consequently, the arrival process to the bank can be described in terms of this family of random variables {X(t); t ≥ 0}. In general terms, such a family of random variables that evolves over time is referred to as a stochastic or random process. If, as in this case, the times between arrivals are independent, identically distributed, and exponential, {X(t); t ≥ 0} defines a Poisson process. It follows that for every value of t, X(t) is Poisson distributed with mean λt. An important observation is that because λt is the mean number of arrivals during t time units, the average number of arrivals per time unit, or equivalently, the mean arrival rate, for this Poisson process is λ. This implies that the average time between arrivals is 1/λ. This should come as no surprise because we started with the assumption that the interarrival time T is exponentially distributed with parameter λ, or equivalently with mean 1/λ. To illustrate this simple but sometimes confusing relationship between mean rates and mean times, consider an arrival (or counting) process with interarrival times that are exponentially distributed with a mean of 5 minutes. This means that the arrival process is Poisson and on average, one arrival occurs every 5 minutes. More precisely, the cumulative number of arrivals describes a Poisson process with the arrival rate λ equal to 1/5 jobs per minute, or 12 jobs per hour.

An interesting feature of the Poisson process is that aggregation or disaggregation results in new Poisson processes. Consider a simple queuing system, for example the bank discussed previously, with a calling population consisting of n different customer types, where each customer group displays a Poisson arrival process with arrival rate λ1, λ2, … , λn, respectively. Assuming that all these Poisson processes are independent, the aggregated arrival process, where no distinction is made between customer types, is also a Poisson process but with mean arrival rate λ = λ1 + λ2 + … + λn.

To illustrate the usefulness of Poisson process disaggregation, imagine a situation where the aforementioned bank has n different branch offices in the same area and the arrival process to this cluster of branch offices is Poisson with mean arrival rate λ. If every customer has the same probability pi of choosing branch i, for i = 1, 2, … , n, and every arriving customer must go somewhere (i.e., p1 + p2 + … + pn = 1), it can be shown that the arrival process to branch office j is Poisson with arrival rate λj = pjλ. Consequently, the total Poisson arrival process has been disaggregated into branch office-specific Poisson arrival processes.
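The disaggregation result can be illustrated with a simulation sketch: arrivals are generated with exponential interarrival times, and each arrival is routed to a branch at random. The function name, rate, probabilities, horizon, and seed below are illustrative assumptions. The sketch checks only that the observed branch rates are close to pjλ; it does not test whether each branch stream is itself Poisson.

```python
import random


def split_arrivals(lam: float, probs: list, horizon: float, seed: int = 0) -> list:
    """Count arrivals per branch when a rate-lam Poisson stream is split at random."""
    rng = random.Random(seed)
    counts = [0] * len(probs)
    t = rng.expovariate(lam)  # time of the first arrival
    while t <= horizon:
        # Each customer independently picks branch i with probability probs[i].
        branch = rng.choices(range(len(probs)), weights=probs)[0]
        counts[branch] += 1
        t += rng.expovariate(lam)  # exponential gap until the next arrival
    return counts


if __name__ == "__main__":
    lam, probs, horizon = 10.0, [0.5, 0.3, 0.2], 10_000.0
    counts = split_arrivals(lam, probs, horizon)
    for i, (count, p) in enumerate(zip(counts, probs), start=1):
        print(f"branch {i}: observed rate {count / horizon:.3f}, p * lam = {p * lam:.3f}")
```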

To conclude the discussion thus far, the exponential distribution is a reasonable way of describing interarrival times and service times in many situations. However, as with all modeling assumptions, it is extremely important to verify their validity before using them to model and analyze a particular process design. To investigate the assumption’s validity, data on interarrival and service times must be collected and analyzed statistically. (See Chapter 9.) For the remainder of this section on analytical queuing models, it is assumed that the interarrival and service times are exponentially distributed.

6.2.2 TERMINOLOGY, NOTATION, AND LITTLE’S LAW REVISITED

This section introduces some basic notation and terminology that will be used in setting up and analyzing the queuing models to be investigated in Sections 6.2.3 through 6.2.8. A particularly important concept is that of steady-state analysis as opposed to transient analysis. Little’s law (introduced in Chapter 5) also will be revisited, and its applicability for obtaining average performance measures will be discussed.

When modeling the operation of a queuing system, the challenge is to capture the variability built into the queuing process. This variability means that some way is needed to describe the different situations or states that the system will face. By comparison, in the deterministic models discussed in Chapters 4 and 5, the demand, activity times, and capacity were constant and known with certainty, resulting in only one specific situation or state that needed to be analyzed. As it turns out, a mathematically efficient way of describing the different situations facing a queuing system is to focus on the number of customers or jobs currently in the system (i.e., in the queue or in the service facility). Therefore, the state of a queuing system is defined as the number of customers in the system. This is intuitively appealing, because when describing the current status of a queuing system, such as the checkout station in a supermarket, the first thing that comes to mind is how many customers are in that system, both in line and being served.

It is important to distinguish between the queue length (number of customers or jobs in the queue) and the number of customers in the queuing system. The former represents the number of customers or jobs waiting to be served. It excludes the customers or jobs currently in the service facility. Defining the state of the system as the total number of customers or jobs in it enables one to model situations where the mean arrival and service rates, and the corresponding exponential distributions, depend on the number of customers currently in the system. For example, tellers might work faster when they see a long line of impatient customers, or balking and reneging behavior might be contingent on the number of customers in the system.

General Notation:

N(t) = The number of customers or jobs in the queuing system (in the queue and service facility) at time t (t ≥ 0), or equivalently the state of the system at time t

Pn(t) = The probability that exactly n customers are in the queuing system at time t = P(N(t) = n)

c = The number of parallel servers in the service facility

λn = The mean arrival rate (i.e., the expected number of arrivals per time unit) of customers or jobs when n customers are present in the system (i.e., at state n)

μn = The mean service rate (i.e., the expected number of customers or jobs leaving the service facility per time unit) when n customers or jobs are in the system

In cases where λn is constant for all n, this constant arrival rate will be denoted with λ. Similarly, in cases where the service facility consists of c identical servers in parallel, each with the same constant service rate, this constant rate will be denoted by μ. This service rate is valid only when the server is busy. Furthermore, note that from property 3 of the exponential distribution discussed in Section 6.2.1, it is known that if k (k ≤ c) servers are busy, the service rate for the entire service facility at this instant is kμ. Consequently, the maximum service rate for the facility when all servers are working is cμ. It follows that under constant arrival and service rates, 1/λ is the mean interarrival time between customers, and 1/μ is the mean service time at each of the individual servers.

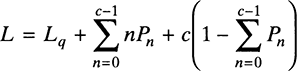

Another queuing characteristic related to λ and μ is the utilization factor, ρ, which describes the expected fraction of time that the service facility is busy. If the arrival rate is λ and the service facility consists of one server with constant service rate μ, then the utilization factor for that server is ρ = λ/μ. If c identical servers are in the service facility, the utilization factor for the entire service facility is ρ = λ/(cμ). The utilization factor can be interpreted as the ratio between the mean demand for capacity per time unit (λ) and the mean service capacity available per time unit (cμ). To illustrate, consider a switchboard operator at a law firm. On average, 20 calls per hour come into the switchboard, and it takes the operator on average 2 minutes to figure out what the customer wants and forward the call to the right person in the firm. This means that the arrival rate is λ = 20 calls per hour, the service rate is μ = 60/2 = 30 calls per hour, and the utilization factor for the switchboard operator is ρ = 20/30 ≈ 67 percent. In other words, on average, the operator is on the phone 67 percent of the time.
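The switchboard calculation can be written as a short sketch. The function name is an illustrative assumption; the two-operator case at the end is a hypothetical variation that is not part of the example in the text.

```python
def utilization(arrival_rate: float, service_rate: float, servers: int = 1) -> float:
    """Utilization factor rho = lambda / (c * mu) for c identical servers."""
    return arrival_rate / (servers * service_rate)


if __name__ == "__main__":
    calls_per_hour = 20.0        # lambda
    minutes_per_call = 2.0       # mean service time 1/mu, in minutes
    service_rate = 60.0 / minutes_per_call  # mu = 30 calls per hour
    print(f"one operator:  rho = {utilization(calls_per_hour, service_rate):.2%}")
    # Hypothetical variation: the same load shared by two identical operators.
    print(f"two operators: rho = {utilization(calls_per_hour, service_rate, 2):.2%}")
```

Note that the arrival rate and the service rate must be expressed in the same time unit before the ratio is formed.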

As reflected by the general notation, the probabilistic characteristics of the queuing system are in general dependent on the initial state and change over time. More precisely, the probability of finding n customers or jobs in the system (or equivalently, that the system is in state n) changes over time and depends on how many customers or jobs were in the system initially. When the queuing system displays this behavior, it is said to be in a transient condition or transient state. Fortunately, as time goes by, the probability of finding the system in state n will in most cases stabilize and become independent of the initial state and the time elapsed (i.e., Pn(t) → Pn as t → ∞). When this happens, it is said that the system has reached a steady-state condition (or often just that it has reached steady state). Furthermore, the probability distribution {Pn; n = 0, 1, 2, … } is referred to as the steady-state or stationary distribution. Most results in queuing theory are based on analyzing the system when it has reached steady state. This so-called steady-state analysis is also the exclusive focus in this investigation of analytical queuing models. For a given queuing system, Figure 6.5 illustrates the transient and steady-state behavior of the number of customers in the system at time t, N(t). It also depicts the expected number of customers in the system,

$$E[N(t)] = \sum_{n=0}^{\infty} n P_n(t)$$

(Recall that Pn(t) is the probability of n customers in the system at time t, i.e., Pn(t) = P(N(t) = n))

Both N(t) and E[N(t)] change dramatically with the time t for small t values; that is, while the system is in a transient condition. However, as time goes by, their behavior stabilizes and a steady-state condition is reached. Note that per definition, in steady state Pn(t) = Pn for every state n = 0, 1, 2, … . Consequently,

$$\lim_{t \to \infty} E[N(t)] = \sum_{n=0}^{\infty} n P_n$$

This implies that as a steady-state condition is approached, the expected number of customers in the system, E[N(t)], should approach a constant value independent of time t. Figure 6.5 shows that this is indeed the case: as t grows large, E[N(t)] approaches 21.5 customers. N(t), on the other hand, will not converge to a constant value; it is a random variable for which a steady-state behavior is characterized by a stationary probability distribution, {Pn; n = 0, 1, 2, … }, independent of the time t.

FIGURE 6.5 Illustration of Transient and Steady-State Conditions for a Given Queuing Process. N(t) Is the Number of Customers in the System at Time t, and E(N(t)) Represents the Expected Number of Customers in the System

Due to the focus on steady-state analysis, a relevant question is whether all queuing systems are guaranteed to reach a steady-state condition. Furthermore, if this is not the case, how can one know which systems eventually will reach steady state? The answer to the first question is no; there could well be systems where the queue explodes and grows to an infinite size, implying that a steady-state condition is never reached. As for the second question, a sufficient criterion for the system to eventually reach a steady-state condition is that ρ < 1. In other words, if the mean capacity demand is less than the mean available service capacity, it is guaranteed that the queue will not explode in size. However, in situations where restrictions are placed on the queue length (M/M/c/K in Section 6.2.6) or the calling population is finite (M/M/c/∞/N in Section 6.2.7), the queue can never grow to infinity. Consequently, these systems will always reach a steady-state condition even if ρ > 1.

For the steady-state analysis that will follow in Sections 6.2.3 through 6.2.7, the following notation will be used.

Pn = The probability of finding exactly n customers or jobs in the system, or equivalently the probability that the system is in state n

L = The expected number of customers or jobs in the system, including the queue and the service facility

Lq = The expected number of customers or jobs in the queue

W = The expected time customers or jobs spend in the system, including waiting time in the queue and time spent in the service facility

Wq = The expected time customers or jobs spend waiting in the queue before they get served

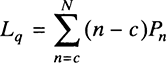

Using the definition of expected value, the expected number of customers or jobs in the system can be determined as follows.

$$L = \sum_{n=0}^{\infty} n P_n$$

Furthermore, by recognizing that in a queuing system with c parallel servers, the queue will be empty as long as c or fewer customers are in the system, the expected number of customers in the queue can be obtained as follows.

$$L_q = \sum_{n=c}^{\infty} (n - c) P_n$$

To determine W and Wq, one can turn to an important relationship that is used extensively in steady-state analysis of queuing systems—namely, Little’s law, which also was discussed in Chapter 5. In the following, this basic relationship will be recapitulated and it will be shown how it can be extended to a more general situation with state-dependent arrival rates.

Assuming first that the mean arrival rate is constant and independent of the system state, that is, λn = λ for all n, Little’s law states that:

$$L = \lambda W \qquad \text{and} \qquad L_q = \lambda W_q$$

For an intuitive explanation, think of a queuing system as a pipeline where customers arrive at one end, enter the pipeline (the system), work their way through it, and then emerge at the other end and leave. Now consider a customer just before he or she leaves the pipeline (the system), which is such that customers cannot pass one another. Assume that 2 hours have gone by since the customer entered it. All the customers currently in the pipeline must have entered it during these 2 hours, so the current number of customers in the pipeline can be determined by counting how many have arrived during these 2 hours. Little’s law builds on the same logic but states the relationships in terms of expected values: The mean number of customers in the system (or the queue) can be obtained as the mean number of arrivals per time unit multiplied by the mean time a customer spends in the system (or the queue).

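In case the arrival rate is state dependent, that is, λn is not the same for all n, Little’s law still applies, but the average arrival rate λ̅ has to be used instead of λ. The general expressions are then:

$$L = \bar{\lambda} W, \qquad L_q = \bar{\lambda} W_q, \qquad \text{where } \bar{\lambda} = \sum_{n=0}^{\infty} \lambda_n P_n$$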

Note that if λn = λ for all n, it means that λ̅ = λ.

Another useful relationship in cases where the service rate at each server in the service facility is state independent and equal to μ is:

$$W = W_q + \frac{1}{\mu}$$

This relationship follows because Wq is the expected time spent in the queue, 1/μ is the expected service time, and W is the expected time spent in the queue and service facility together. An important observation is that by using this expression together with Little’s law, it suffices to know λ̅ and one of the mean performance measures L, Lq, W, or Wq, and all the others can be easily obtained.
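The observation that one performance measure determines the others can be sketched in a few lines. The function below starts from L and applies Little’s law and the relationship W = Wq + 1/μ in turn. The function name is an illustrative assumption, and the value L = 2 used in the example is an assumed input rather than a result derived in this section.

```python
def measures_from_L(L: float, arrival_rate: float, service_rate: float) -> dict:
    """Derive Lq, W, and Wq from L, the average arrival rate, and mu."""
    W = L / arrival_rate          # Little's law: L = lambda * W
    Wq = W - 1.0 / service_rate   # W = Wq + 1/mu
    Lq = arrival_rate * Wq        # Little's law: Lq = lambda * Wq
    return {"L": L, "Lq": Lq, "W": W, "Wq": Wq}


if __name__ == "__main__":
    # Switchboard rates from Section 6.2.2: 20 calls per hour arriving,
    # 30 calls per hour served. L = 2 calls in the system is assumed.
    result = measures_from_L(L=2.0, arrival_rate=20.0, service_rate=30.0)
    for name, value in result.items():
        print(f"{name} = {value:.4f}")
```

Times come out in the time unit of the rates (hours here), so W = 0.1 corresponds to 6 minutes in the system.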

6.2.3 BIRTH-AND-DEATH PROCESSES

All the queuing models considered in this chapter are based on the general birth-and-death process. Although the name sounds ominous, in queuing, a birth simply represents the arrival of a customer or job to the queuing system, and a death represents the departure of a fully serviced customer or job from that same system. Still, queuing is just one of many applications for the general birth-and-death process, with one of the first being models of population growth, hence the name.

A birth-and-death process describes probabilistically how the number of customers in the queuing system, N(t), evolves over time. Remember from Section 6.2.2 that N(t) is the state of the system at time t. Focusing on the steady-state behavior, analyzing the birth-and-death process enables one to determine the stationary probability distribution for finding 0, 1, 2, … customers in the queuing system. By using the stationary distribution, determining mean performance measures such as the expected number of customers in the system, L, or in the queue, Lq, is straightforward. With Little’s law, it is then easy to determine the corresponding average waiting times W and Wq. The stationary distribution also allows for an evaluation of the likelihood that extreme situations occur; for example, the probability of finding the queuing system empty or the probability that it contains more than a certain number of customers.

In simple terms, the birth-and-death process assumes that births (arrivals) and deaths (departures) occur randomly and independently of each other. However, the average number of births and deaths per time unit might depend on the state of the system (number of customers or jobs in the queuing system). A more precise definition of a birth-and-death process is based on the following four properties.

- For each state, N(t) = n; n = 0, 1, 2, … , the time remaining until the next birth (arrival), TB, is exponentially distributed with parameter λn. (Remember that the state of a queuing system is defined by the number of customers or jobs in that system.)

- For each state, N(t) = n; n = 0, 1, 2, … , the time remaining until the next death (service completion), TD, is exponentially distributed with parameter μn.

- The time remaining until the next birth, TB, and the time remaining until the next death, TD, are mutually independent.

- For each state, N(t) = n; n = 1, 2, … , the next event to occur is either a single birth, n → n + 1, or a single death, n → n − 1.
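The four properties translate almost directly into a simulation. The following is a minimal sketch, not a definitive implementation: the function name, the rates, the horizon, and the seed are all assumptions made for illustration.

```python
import random


def simulate_birth_death(birth_rates, death_rates, horizon, seed=0):
    """Simulate N(t) and return the fraction of time spent in each state.

    birth_rates[n] and death_rates[n] are the exponential parameters
    (lambda_n and mu_n) in state n; a rate of 0 means the event cannot occur.
    """
    rng = random.Random(seed)
    time_in_state = [0.0] * len(birth_rates)
    state, clock = 0, 0.0
    while True:
        lam, mu = birth_rates[state], death_rates[state]
        # Independent exponential clocks for the next birth and the next death.
        t_birth = rng.expovariate(lam) if lam > 0 else float("inf")
        t_death = rng.expovariate(mu) if mu > 0 else float("inf")
        dwell = min(t_birth, t_death)
        if clock + dwell >= horizon:
            # The next event falls beyond the horizon: stop here.
            time_in_state[state] += horizon - clock
            break
        time_in_state[state] += dwell
        clock += dwell
        # Only single steps are possible: n -> n + 1 or n -> n - 1.
        state += 1 if t_birth < t_death else -1
    return [t / horizon for t in time_in_state]


# Assumed rates for a system with at most three customers.
fractions = simulate_birth_death([4, 3, 2, 0], [0, 5, 5, 5], horizon=20000, seed=1)
print([round(f, 3) for f in fractions])
```

Over a long horizon, the time fractions approximate the stationary probabilities that the rest of this section derives analytically.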

The birth-and-death process can be illustrated graphically in a so-called rate diagram (also referred to as a state diagram). See Figure 6.6. In this diagram, the states (the number of customers in the system) are depicted as numbered circles or nodes, and the transitions between these states are indicated by arrows or directed arcs. For example, the state when zero customers are in the system is depicted by a node labeled 0. The rate at which the system moves from one state to the next is indicated in connection to the arc in question. Consider, for example, state n. The interarrival times and the service times are exponential with parameters λn and μn, respectively, so it is known from the properties of the exponential distribution that the mean arrival rate (or birth rate) must be λn and the service rate (or death rate) is μn. This means that the process moves from state n to state n − 1 at rate μn and from state n to state n + 1 at rate λn. The rate diagram also illustrates the fact that the birth-and-death process by definition allows for transitions involving only one birth or death at a time (n → n + 1 or n → n − 1).

FIGURE 6.6 Rate Diagram Describing a General Birth-and-Death Process

The rate diagram is an excellent tool for describing the queuing process conceptually. However, it is also useful for determining the steady-state probability distribution {Pn; n = 0, 1, 2, …} of finding 0, 1, 2, … customers in the system. A key observation in this respect is the so-called Rate In = Rate Out Principle, which states that when the birth-and-death process is in a steady-state condition, the expected rate of entering any given state n (n = 0, 1, 2, …) is equal to the expected rate of leaving the state. To conceptualize this principle, think of each state n as a water reservoir, where the water level represents the probability Pn. In order for the water level to remain constant (stationary), the average rate of water flowing into the reservoir must equal the average outflow rate.

Rate In = Rate Out Principle. For every state n = 0, 1, 2, … the expected rate of entering the state = the expected rate of leaving the state.

MATHEMATICAL DERIVATION OF THE RATE IN = RATE OUT PRINCIPLE

Consider an arbitrary state n and count the number of times the process enters and leaves this state, starting at time 0.

Let

In(t) = The number of times the process has entered state n by time t ≥ 0

On(t) = The number of times the process has left state n by time t ≥ 0

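It follows that for any t, the absolute difference between In(t) and On(t) is 0 or 1:

$$|I_n(t) - O_n(t)| \le 1$$

Dividing this expression by t and letting t → ∞ (that is, considering what happens to the average number of arrivals and departures at state n in steady state), the following is obtained:

$$\left| \frac{I_n(t)}{t} - \frac{O_n(t)}{t} \right| \le \frac{1}{t} \quad \text{and} \quad \lim_{t \to \infty} \left| \frac{I_n(t)}{t} - \frac{O_n(t)}{t} \right| = 0$$

which implies that:

$$\lim_{t \to \infty} \frac{I_n(t)}{t} = \lim_{t \to \infty} \frac{O_n(t)}{t}$$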

The Rate In = Rate Out Principle follows, because by definition:

$$\lim_{t \to \infty} \frac{I_n(t)}{t} = \text{mean rate (average number of times per time unit) at which the process enters state } n = \text{Expected Rate In}$$

$$\lim_{t \to \infty} \frac{O_n(t)}{t} = \text{mean rate at which the process leaves state } n = \text{Expected Rate Out}$$

The following example illustrates how the rate diagram and the Rate In = Rate Out Principle can be used to determine the stationary distribution for a queuing process.

Example 6.1: Queuing Analysis at Travel Call Inc.

Travel Call Inc. is a small travel agency that only services customers over the phone. Currently, only one travel agent is available to answer incoming customer calls. The switchboard can accommodate two calls on hold in addition to the one currently being answered by the travel agent. Customers who call when the switchboard is full get a busy signal and are turned away. Consequently, at most three customers can be found in the queuing system at any time. The calls arrive to the travel agent according to a Poisson process with a mean rate of nine calls per hour. The calls will be answered in the order they arrive; that is, the queuing discipline is FIFO. On average, it takes the travel agent 6 minutes to service a customer, and the service time follows an exponential distribution. The management of TravelCall wants to determine what the probability is of finding zero, one, two, or three customers in the system. If the probability of finding two or three customers in the system is high, it indicates that maybe the capacity should be increased.

The first observation is that the queuing system represented by Travel Call fits the four aforementioned properties that define a birth-and-death process. (Using the previously defined framework for classifying queuing models, it also represents an M/M/1/3 process.) Second, it should be recognized that the objective is to determine the stationary probability distribution {Pn, n = 0, 1, 2, 3} describing the probability of finding zero, one, two, or three customers in the system. Now that the system and the objective of the analysis are clear, it is time for some detailed calculations.

The first step in the numerical analysis is to decide on a time unit, and to determine the mean arrival and service rates for each of the states of the system. The arrival rate and the service rate are constant and independent of the number of customers in the system. They are hereafter denoted by λ and μ, respectively. All rates are expressed in number of occurrences per hour. However, it does not matter which time unit is used as long as it is used consistently. From the aforementioned data, it follows that λ = 9 calls per hour and μ = 60/6 = 10 calls per hour.

Because the number of states, the mean arrival rate, and the service rate for each state are known, it is possible to construct the specific rate diagram describing the queuing process. See Figure 6.7. For state 0, the mean rate at which the system moves to state 1 must be λ, because this is the mean rate at which customers arrive to the system. On the other hand, the service rate for state 0 must be zero, because at this state the system is empty and there are no customers who can leave. For state 1, the mean rate at which the system moves to state 2 is still λ, because the mean arrival rate is unchanged. The service rate is now μ, because this is the mean rate at which the one customer in the system will leave. For state 2, the mean arrival and service rates are the same as in state 1. At state 3, the system is full and no new customers are allowed to enter. Consequently, the arrival rate for state 3 is zero. The service rate, on the other hand, is still μ, because three customers are in the system and the travel agent serves them with a mean service rate of μ customers per time unit.
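The state-by-state reasoning above can be written down as two lists of rates, one entry per state. This is only a sketch of one possible representation; the variable names are assumptions introduced here.

```python
ARRIVAL_RATE = 9    # lambda, calls per hour
SERVICE_RATE = 10   # mu, calls per hour
MAX_IN_SYSTEM = 3   # one call in service plus two on hold

states = range(MAX_IN_SYSTEM + 1)

# birth_rates[n] and death_rates[n] are the rates out of state n.
# No arrivals are admitted when the system is full, and nobody can
# leave when the system is empty.
birth_rates = [ARRIVAL_RATE if n < MAX_IN_SYSTEM else 0 for n in states]
death_rates = [SERVICE_RATE if n > 0 else 0 for n in states]

# List the arcs of the rate diagram as (from_state, to_state, rate).
arcs = []
for n in states:
    if birth_rates[n] > 0:
        arcs.append((n, n + 1, birth_rates[n]))
    if death_rates[n] > 0:
        arcs.append((n, n - 1, death_rates[n]))

for arc in arcs:
    print("state %d -> state %d at rate %d per hour" % arc)
```

Each printed line corresponds to one directed arc in the rate diagram.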

FIGURE 6.7 Rate Diagram for the Single Server Queuing Process at Travel Call

To determine the probabilities P0, P1, P2, and P3 for finding zero, one, two, and three customers in the system, use the Rate In = Rate Out Principle applied to each state together with the necessary condition that all probabilities must sum to 1 (P0 + P1 + P2 + P3 = 1).

The equation expressing the Rate In = Rate Out Principle for a given state n is often called the balance equation for state n. Starting with state 0, the balance equation is obtained by recognizing that the expected rate into state 0 is μ if the system is in state 1 and zero otherwise. See Figure 6.7. Because the probability that the system is in state 1 is P1, the expected rate into state 0 must be μ times P1, or μP1. Note that P1 can be interpreted as the fraction of the total time that the system spends in state 1. Similarly, the rate out of state 0 is λ if the system is in state 0, and zero otherwise. (See Figure 6.7.) Because the probability for the system to be in state 0 is P0, the expected rate out of state 0 is λP0. Consequently, we have:

$$\mu P_1 = \lambda P_0$$

Using the same logic for state 1, the rate into state 1 is λ if the system is in state 0 and μ if the system is in state 2, but zero otherwise. (See Figure 6.7.) The expected rate into state 1 can then be expressed as λP0 + μP2. On the other hand, the rate out of state 1 is λ, going to state 2, and μ, going to state 0, if the system is in state 1, and zero otherwise. Consequently, the expected rate out of state 1 is λP1 + μP1, and the corresponding balance equation can be expressed as follows:

$$\lambda P_0 + \mu P_2 = \lambda P_1 + \mu P_1$$

By proceeding in the same fashion for states 2 and 3, the complete set of balance equations summarized in Table 6.1 is obtained.

The balance equations represent a linear equation system that can be used to express the probabilities P1, P2, and P3 as functions of P0. (The reason the system of equations does not have a unique solution is that it includes four variables but only three linearly independent equations.) To find the exact values of P0, P1, P2, and P3, it is necessary to use the condition that their sum must equal 1.
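As a cross-check on the hand calculation, the same linear system can be solved mechanically. The sketch below replaces the redundant balance equation for state 3 with the normalization condition and uses exact fractions. The helper `solve_linear_system` is a hypothetical name introduced here, and Gauss-Jordan elimination is simply one convenient way to solve the system, not the method used in the text.

```python
from fractions import Fraction


def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with exact fractions."""
    n = len(b)
    rows = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Find a row with a nonzero pivot and move it into place.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [v / pivot_value for v in rows[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [v - factor * p for v, p in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


lam, mu = 9, 10  # calls per hour, from the example
coefficients = [
    [-lam, mu, 0, 0],            # state 0: mu*P1 = lam*P0
    [lam, -(lam + mu), mu, 0],   # state 1: lam*P0 + mu*P2 = (lam + mu)*P1
    [0, lam, -(lam + mu), mu],   # state 2: lam*P1 + mu*P3 = (lam + mu)*P2
    [1, 1, 1, 1],                # normalization: P0 + P1 + P2 + P3 = 1
]
right_hand_side = [0, 0, 0, 1]
probabilities = solve_linear_system(coefficients, right_hand_side)
print([round(float(p), 4) for p in probabilities])
```

The exact fractions can be converted to decimals for comparison with the values derived by hand.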

Starting with the balance equation for state 0, one finds that P1 = (λ/μ)P0. Adding the equation for state 0 to that of state 1 results in λP0 + μP2 + μP1 = λP1 + μP1 + λP0, which simplifies to μP2 = λP1. This means that P2 = (λ/μ)P1, and using the previous result that P1 = (λ/μ)P0, one finds that P2 = (λ/μ)²P0. In the same manner, by adding the simplified equation μP2 = λP1 to the balance equation for state 2, one finds that λP1 + μP3 + μP2 = λP2 + μP2 + λP1, which simplifies to μP3 = λP2. Note that this equation is identical to the balance equation for state 3, which then becomes redundant. P3 can now be expressed in terms of P0 as follows: P3 = (λ/μ)P2 = (λ/μ)³P0. Using the expressions P1 = (λ/μ)P0, P2 = (λ/μ)²P0, and P3 = (λ/μ)³P0, together with the condition that P0 + P1 + P2 + P3 = 1, the following is obtained:

$$P_0 \left( 1 + \frac{\lambda}{\mu} + \left( \frac{\lambda}{\mu} \right)^2 + \left( \frac{\lambda}{\mu} \right)^3 \right) = 1$$

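TABLE 6.1 Balance Equations for the Queuing Process at TravelCall

| State | Balance Equation: Expected Rate In = Expected Rate Out |
|---|---|
| 0 | μP1 = λP0 |
| 1 | λP0 + μP2 = λP1 + μP1 |
| 2 | λP1 + μP3 = λP2 + μP2 |
| 3 | λP2 = μP3 |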

watch result in

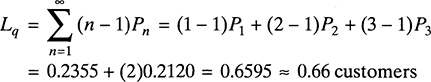

Because it is known that λ = 9 and μ = 10, one can obtain P0 ≈ 0.2908. Because P1 = (λ/μ)P0, P2 = (λ/μ)²P0, and P3 = (λ/μ)³P0, one also has P1 = (9/10)P0 ≈ 0.2617, P2 = (9/10)²P0 ≈ 0.2355, and P3 = (9/10)³P0 ≈ 0.2120.
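For readers who want to follow the arithmetic, the substitution λ/μ = 9/10 = 0.9 can be written out step by step. The last line is a check that the rounded probabilities sum to 1.

$$
\begin{aligned}
P_0 &= \frac{1}{1 + 0.9 + 0.9^2 + 0.9^3} = \frac{1}{1 + 0.9 + 0.81 + 0.729} = \frac{1}{3.439} \approx 0.2908 \\
P_1 &= 0.9 \, P_0 \approx 0.2617 \\
P_2 &= 0.81 \, P_0 \approx 0.2355 \\
P_3 &= 0.729 \, P_0 \approx 0.2120 \\
P_0 + P_1 + P_2 + P_3 &\approx 0.2908 + 0.2617 + 0.2355 + 0.2120 = 1.0000
\end{aligned}
$$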

These calculations predict that in steady state, the travel agent will be idle 29 percent of the time (P0). This also represents the probability that an arbitrary caller does not have to spend time in the queue before talking to an agent. At the same time, it is now known that 21 percent of the time the system is expected to be full (P3). During that time, all new callers get a busy signal, resulting in losses for the agency. Moreover, the probability that a customer who calls in will be put on hold is P1 + P2 ≈ 0.2617 + 0.2355 ≈ 0.50.
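The interpretation above can be reproduced in a few lines. The final quantity, the expected number of blocked calls per hour, is an illustrative extension that is not computed in the text; it assumes that callers who get a busy signal do not call back.

```python
lam, mu = 9, 10  # calls per hour, from the example
ratio = lam / mu

# Stationary probabilities P0..P3, proportional to (lam/mu)**n.
weights = [ratio ** n for n in range(4)]
p = [w / sum(weights) for w in weights]

idle_fraction = p[0]           # agent idle; a caller is served immediately
blocked_fraction = p[3]        # system full; a caller gets a busy signal
hold_fraction = p[1] + p[2]    # a caller is admitted but must wait on hold

# Assumption: blocked callers are lost and do not retry.
lost_calls_per_hour = lam * blocked_fraction

print(round(idle_fraction, 2), round(blocked_fraction, 2), round(hold_fraction, 2))
print(round(lost_calls_per_hour, 2))
```

Under that assumption, roughly two of the nine calls arriving per hour are turned away on average.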

Although the explicit costs involved have not been considered, it seems reasonable that TravelCall would like to explore the possibilities of allowing more calls to be put on hold; that is, to allow a longer queue. This way, they can increase the utilization of the travel agent and catch some customers who now take their business elsewhere. Another more drastic option to reduce the number of lost sales might be to hire one more travel agent.

The procedure for determining the stationary distribution of a general birth-and-death process with state-dependent arrival and service rates is analogous to the approach explained in Example 6.1. The balance equations are constructed in the same way, but their appearance is slightly different, as shown in Table 6.2. (See also the corresponding rate diagram in Figure 6.6.)
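For a finite state space, the same elimination used in Example 6.1 gives Pn = Pn−1 · λn−1/μn for each state in turn, followed by normalization. The following is a minimal sketch of that recursion. The function name is hypothetical, and the two-agent variant in the usage lines is an assumed scenario, not one analyzed in the text.

```python
def stationary_distribution(birth_rates, death_rates):
    """Stationary probabilities P0..PK of a finite birth-and-death process.

    birth_rates[n] is lambda_n and death_rates[n] is mu_n. Solving the balance
    equations state by state gives P_n = P_(n-1) * lambda_(n-1) / mu_n.
    """
    weights = [1.0]  # unnormalized P0
    for n in range(1, len(birth_rates)):
        weights.append(weights[-1] * birth_rates[n - 1] / death_rates[n])
    total = sum(weights)  # enforces that the probabilities sum to 1
    return [w / total for w in weights]


# Example 6.1: one agent, at most three customers in the system.
single_agent = stationary_distribution([9, 9, 9, 0], [0, 10, 10, 10])

# Assumed variant: two agents share the same three-line switchboard, so the
# service rate doubles whenever at least two customers are present.
two_agents = stationary_distribution([9, 9, 9, 0], [0, 10, 20, 20])

print([round(x, 4) for x in single_agent])
print([round(x, 4) for x in two_agents])
```

In the assumed two-agent variant, the probability of a full system drops to about 7 percent, which illustrates how state-dependent service rates enter the calculation.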

A complicating factor is that a large state space — one with many states to consider — means that it would be tedious to find a solution following the approach in Example 6.1. For example, to handle the cases of infinite queue lengths, some additional algebraic techniques are needed to obtain the necessary expressions. This is the motivation for studying the specialized models in Sections 6.4 through 6.7, where expressions are available for the steady-state probabilities and for certain important performance measures such as average number of customers in the system and queue, as well as the corresponding waiting times. Still, for smaller systems, the general approach offers more flexibility.

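TABLE 6.2 Balance Equations for the General Birth-and-Death Process

| State | Balance Equation: Expected Rate In = Expected Rate Out |
|---|---|
| 0 | $\mu_1 P_1 = \lambda_0 P_0$ |
| 1 | $\lambda_0 P_0 + \mu_2 P_2 = (\lambda_1 + \mu_1) P_1$ |
| 2 | $\lambda_1 P_1 + \mu_3 P_3 = (\lambda_2 + \mu_2) P_2$ |
| ⋮ | ⋮ |
| n | $\lambda_{n-1} P_{n-1} + \mu_{n+1} P_{n+1} = (\lambda_n + \mu_n) P_n$ |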

As previously mentioned, interesting performance measures for queuing processes are the average number of customers or jobs in the system (L) and in the queue (Lq), as well as the corresponding average waiting times (W and Wq). With the stationary probability distribution {Pn; n = 0, 1, 2, …} available, it is straightforward to obtain all these measures from the definition of expected value and Little’s law. (See Section 6.2.2 for the general expressions.) Next is an explanation of how to do this in the context of Travel Call Inc.

Example 6.2: Determining Average Performance Measures at TravelCall