Chapter 10. Agile Technology

Software developers have struggled with the complexity of software projects since the first program was written.1 Even in the infancy of computing, programming with primitive tools pushed the limits of what people could accomplish. Seminal books on software project management, such as The Mythical Man-Month (first published in 1975), address challenges that we still see today.

1 http://en.wikipedia.org/wiki/Bernoulli_number

Complexity Is Not New

The complex Apollo program was almost undone by unanticipated glitches in the Lunar Module’s navigation and guidance computer in the final minutes before the historic landing. That computer had only 4,000 words of memory2 (one-millionth of the memory in a typical desktop computer).

This chapter begins with a description of some of the major problems encountered when creating technology for games. The remainder of the chapter discusses some of the agile practices used to address these problems, including those of the XP methodology.

I’ve avoided low-level technical discussion and code examples in this chapter on purpose. The goal is to communicate the issues and solutions that people in every discipline can understand.

The Problems

Technology creation is the greatest area of risk for many video game projects. Video games compete on the basis of technical prowess as much as gameplay and visual quality. Consumers want to experience the latest graphics, physics, audio effects, artificial intelligence, and so on. Even if a game isn’t using the next-generation hardware, it’s usually pushing the current generation to new limits.

This section describes typical technical problems that affect development. These problems affect every discipline and can lead projects down dead-end paths.

Uncertainty

In 2002 I joined Sammy Studios as the lead tools programmer. What intrigued me about Sammy was the vision for the studio’s technology. It was to give the artists and designers the best possible control over the game through customized tools, while engine technology was to be largely middleware-based. This was in stark contrast to my previous job where engine technology was the main focus and tools meant to help artists and designers were considered far less important.

The first tool I was tasked with developing was meant to tune character movement and animation. It would integrate animation and physics behavior and allow animators to construct and tune character motion directly. The tool effort was launched with an extensive 80-page requirements document authored by the animators. This document had mock-ups of the user interface and detailed descriptions of every control they needed to fully manipulate the system. I had never seen this level of detail in a tool design before, least of all one created by artists. At my last company, the programmers developed what they thought the artists and designers needed, which resulted in tools that didn’t produce the best results.

Another programmer and I worked on this tool for several months and delivered what was defined in the design document the animators wrote. I looked forward to seeing the amazing character motion that resulted from this tool.

Unfortunately, the effort was a failure. The tool did what it was supposed to do, but the animators couldn’t foresee what they really needed, and none of us truly understood what the underlying technology would eventually do. This was shocking.

We reflected on what else could have been done to create a better tool. What we decided was that we should have evolved the tool. We should have started by releasing a version with a single control—perhaps a slider that blended two animations (such as walking and running). Had this tool evolved with the animators’ understanding of their needs and the capabilities of the emerging animation and physics technology, we would have created a far better tool.

We had developed the wrong tool on schedule. This suggested to us that a more incremental and iterative approach was necessary, even for technology with minimal technical and schedule risk.

Change Causes Problems

At the core of any game’s requirements is the need to “find the fun.” Fun cannot be found solely on the basis of what we predict in a game design document. Likewise, technical design and architecture driven by a game design document are unlikely to reflect what we may ultimately need in the game. If we want flexibility in the design of the game, then the technology we create needs to exhibit equal flexibility, but often it does not.

Cost of Late Change

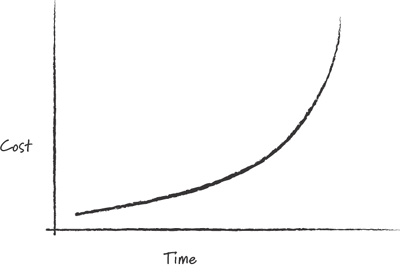

The curve in Figure 10.1 shows how the cost of changing something in a project grows over time (Boehm 1981). A change made late in a project can cost an order of magnitude or more than the same change made early. There are many reasons for this:

• Design details are forgotten by the programmer who wrote the code. It takes time to recall the details and be as effective as when the code was written.

• The original author of the code may not be around to make the change. Someone else has to learn the design and architecture.

• Changing assets that were created based on the original code’s behavior can take a lot of time and effort.

• A lot of code may have been built on top of the original design and code. Not only does the original code have to be changed, but all the other code built upon the expected behavior of the original code has to be changed as well.

Figure 10.1. The cost of change

So, it’s important to identify and make changes as early as possible.

Both uncertainty and the cost of change point to the benefits of short iterations of development. Short iterations test features quickly, which reduces uncertainty for those features. Uncertainty implies the potential for change. Since the cost of change increases as time passes, carrying uncertainty forward also carries the potential for increased cost. For example, some projects implement online functionality late in development and discover that much of their core technology (such as animation and physics) does not work across a network. Had they discovered this earlier in the project, the cost of fixing the problem could have been far less.

Note

This potential cost of change being carried forward is also referred to as technical debt. The concept of debt is used for many elements of game development in this book.

Too Much Architecture Up Front

One approach to the problem of creating technology for changing requirements is to “overarchitect” a solution. This means implementing a solution that encompasses all the potential solutions. An example is a camera system for a game initially designed to be a first-person shooter. A programmer might architect a camera system that includes general-purpose tools and camera management able to handle a variety of other potential cameras, such as third-person cameras. The goal is that if and when the designers change their minds about the game (such as going from a first-person view to a third-person view), then the changes to the camera can be accommodated through the architected system.

There are two problems with this approach:

• It slows the introduction of new features up front: At the start of the game, the designer wants a simple first-person camera to begin prototyping gameplay. They have to wait for the baseline architecture to be created (or brought in from an existing codebase) before the camera is introduced.

• Architectures designed up front often need to be changed: The assumptions built into the architecture are often proven wrong during development, so the architecture needs to be changed anyway. Changing a larger system takes more time than changing a smaller one.

An Agile Approach

This section describes agile solutions to these problems. These solutions focus on an iterative and incremental approach to delivering value and knowledge early.

Extreme Programming

Scrum, by design, has no engineering practices. Many teams using Scrum soon find that their original engineering practices can’t keep up with the changing requirements. These teams often turn to the practices of Extreme Programming for help.

XP was a methodology developed after Scrum that adopted many of the Scrum practices. XP iterations and customer reviews, though slightly different, aren’t hard to integrate into Scrum teams used to these concepts. XP introduced new practices for programmers. Among them are the practices of test-driven development (TDD) and pair programming.

It’s outside the scope of this book to cover the concepts and practices of XP in great detail. There are great books that already do that (see the “Additional Reading” section at the end of this chapter). Numerous studies have shown that XP increases the velocity of code creation and its quality (Jeffries and Melnik 2007).

Programmers pair up to work on tasks. They apply the TDD practices, described next, to implement technology in small, functional increments. This lets functionality emerge incrementally while remaining stable in the face of change. The goal is to create higher-quality code that can be changed at minimum cost.

Test-Driven Development

TDD practices include writing a number of unit tests for every function introduced. Each unit test exercises the function in a single way, by passing in data and checking what the function returns or changes. An example is a function that sets the health of the player in a game. If your game design defines 100 as full health and 0 as dead, some unit tests would set valid values, including the minimum and maximum, and check that the function assigned them. Other unit tests would try to assign invalid values (greater than 100 or less than 0) and check that the function handles them correctly.

If you follow the strict TDD practices, the tests are written before you write the logic of the function that will allow those tests to pass, so the tests actually fail at first.
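Here is a minimal Python sketch of the player-health tests described above, using the standard `unittest` module. The `Player` class, `set_health`, and the `MIN_HEALTH`/`MAX_HEALTH` constants are hypothetical names. The decision to clamp out-of-range values rather than reject them is also an assumption about what "handled correctly" means, not a prescribed design. Under strict TDD, the test class would be written first and would fail until `set_health` was implemented.

```python
import unittest

# Hypothetical design values: 100 is full health, 0 is dead.
MIN_HEALTH = 0
MAX_HEALTH = 100


class Player:
    def __init__(self) -> None:
        self.health = MAX_HEALTH

    def set_health(self, value: int) -> None:
        # Assumed behavior: out-of-range values are clamped to the valid range.
        self.health = max(MIN_HEALTH, min(MAX_HEALTH, value))


class SetHealthTests(unittest.TestCase):
    """Each test exercises set_health in exactly one way."""

    def setUp(self) -> None:
        self.player = Player()

    def test_sets_valid_value(self) -> None:
        self.player.set_health(42)
        self.assertEqual(self.player.health, 42)

    def test_sets_minimum(self) -> None:
        self.player.set_health(MIN_HEALTH)
        self.assertEqual(self.player.health, MIN_HEALTH)

    def test_sets_maximum(self) -> None:
        self.player.set_health(MAX_HEALTH)
        self.assertEqual(self.player.health, MAX_HEALTH)

    def test_clamps_value_above_maximum(self) -> None:
        self.player.set_health(150)
        self.assertEqual(self.player.health, MAX_HEALTH)

    def test_clamps_value_below_minimum(self) -> None:
        self.player.set_health(-20)
        self.assertEqual(self.player.health, MIN_HEALTH)
```

If saved to a file such as `test_health.py` (a hypothetical name), the tests run with `python -m unittest test_health`.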

Unit tests and their associated code are built in parallel. When all the tests pass, the code is checked in. On a team using TDD, this happens frequently, sometimes every hour or two. It requires a server that picks up each change as it is checked in and runs all the unit tests to ensure that none of them fail. This server is called a continuous integration server (CIS). Running every unit test catches most of the problems that commits typically introduce. When a build passes all the unit tests, the CIS informs all the developers that it is safe to synchronize with the changes. When a submission breaks a unit test, the CIS lets everyone know that the build is broken. Fixing it then becomes the team’s job. Since the culprit who checked in the error is easily identified, they are usually the one who fixes the problem.

This provides big benefits to the team and project. As a project grows, the number of unit tests expands, often into the thousands. These tests continue to automatically catch many errors that QA would otherwise have to catch much later. They also create a safety net for refactoring and for the other large changes that an emerging design requires.
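The core loop of a continuous integration server can be sketched in a few lines. This is a toy Python model, not how any particular CI product works. The codebase is a hypothetical dictionary of named values, each unit test is a predicate over it, and `Commit`, `integrate`, and `notify` are invented names. It shows the idea from the text: every commit triggers a run of all the unit tests, and the result goes to everyone, including whose commit broke the build.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for the codebase: named values that commits change.
Codebase = Dict[str, int]
UnitTest = Callable[[Codebase], bool]


@dataclass
class Commit:
    author: str
    changes: Codebase


@dataclass
class BuildResult:
    author: str
    failed_tests: List[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.failed_tests


def integrate(codebase: Codebase, commit: Commit,
              tests: Dict[str, UnitTest]) -> BuildResult:
    """Build the codebase with one commit applied and run *every* unit test."""
    candidate = {**codebase, **commit.changes}
    failed = [name for name, test in tests.items() if not test(candidate)]
    return BuildResult(commit.author, failed)


def notify(result: BuildResult) -> str:
    # A real server would e-mail the team or post to chat; here we only
    # build the message that everyone would receive.
    if result.passed:
        return f"Build OK after {result.author}'s commit: safe to sync."
    failing = ", ".join(result.failed_tests)
    return f"Build BROKEN by {result.author}'s commit ({failing} failing)."


# Hypothetical unit tests and commits for the demo.
TESTS: Dict[str, UnitTest] = {
    "max_health_is_100": lambda cb: cb["max_health"] == 100,
    "spawn_limit_positive": lambda cb: cb["spawn_limit"] > 0,
}

codebase: Codebase = {"max_health": 100, "spawn_limit": 24}
for commit in [Commit("ana", {"spawn_limit": 32}), Commit("ben", {"max_health": 0})]:
    result = integrate(codebase, commit, TESTS)
    print(notify(result))
    # The commit is already checked in, so the shared codebase includes it
    # whether or not the build passed; a broken build stays broken until fixed.
    codebase = {**codebase, **commit.changes}
```

Running it prints `Build OK after ana's commit: safe to sync.` followed by `Build BROKEN by ben's commit (max_health_is_100 failing).`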

One of the philosophical foundations of XP is that the programmers create the absolute minimal amount of functionality to satisfy customer requests every iteration. For example, suppose a customer wants to see one AI character walking around the environment. Many programmers want to architect an AI management system that handles dozens of AI characters because they “know that the game will need it sometime in the future.” With XP, you don’t do this. You write the code as if the user story needs only one AI character. When a story asks for more than one AI character in a future sprint, you then introduce a simple AI manager and refactor the original code. This leads to an AI manager that is a better fit for the emerging requirements.
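Here is a minimal Python sketch of that progression, using hypothetical names throughout. `AICharacter` is what the first sprint's story needs: one character walking between waypoints. `AIManager` is the simple manager introduced later, when a story asks for several characters. Because it only owns and updates characters, it can be added without changing `AICharacter`.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec2 = Tuple[float, float]


@dataclass
class AICharacter:
    """Iteration 1: the story needs one character walking around."""
    position: Vec2
    waypoints: List[Vec2]
    speed: float = 1.0
    target_index: int = 0

    def update(self, dt: float) -> None:
        # Move toward the current waypoint; advance to the next one on arrival.
        tx, ty = self.waypoints[self.target_index]
        x, y = self.position
        dx, dy = tx - x, ty - y
        dist = (dx * dx + dy * dy) ** 0.5
        step = self.speed * dt
        if dist <= step:
            self.position = (tx, ty)
            self.target_index = (self.target_index + 1) % len(self.waypoints)
        else:
            # dist > step >= 0 here, so the division is safe.
            self.position = (x + dx / dist * step, y + dy / dist * step)


class AIManager:
    """A later sprint: a story needs several characters, so a simple manager
    is introduced and the game loop is refactored to update it instead."""

    def __init__(self) -> None:
        self.characters: List[AICharacter] = []

    def spawn(self, character: AICharacter) -> None:
        self.characters.append(character)

    def update(self, dt: float) -> None:
        for character in self.characters:
            character.update(dt)
```

The manager deliberately has no speculative features, such as pooling or prioritization, until a story actually calls for them.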

A major benefit of TDD is that it requires “constant refactoring” of the codebase to support this minimal approach. Refactoring pays off for a number of reasons; here are two of them:

• Systems created from refactoring, coupled with implementing the absolute minimum needed, often match final requirements more closely and quickly.

• Refactored code has much higher quality. Each refactoring pass creates the opportunity to improve it.

The barriers to TDD are as follows:

• There is an immediate slowdown in the rate at which new features are introduced into the game. Writing tests takes time, and it can be hard to demonstrate that the time saved in debugging outweighs it.

• Programmers take their practices very personally. Rolling out a practice like TDD has to be done slowly and in a way that clearly demonstrates its value (see Chapter 16, “Launching Scrum”).

Note

Personally, I don’t fully agree with the purist approach that XP programmers should always do the absolute minimum. I believe that knowledge and experience factor into how much architecture should be preplanned. It’s very easy to plan too far ahead and “overarchitect,” but I believe there is a sweet spot between the two extremes.

TDD is very useful and is not a difficult practice for programmers to adopt. In my experience, if a programmer tries TDD for a while, writing unit tests becomes second nature. Refactoring takes longer to adjust to. Programmers resist refactoring unless it is necessary, and that resistance reinforces the mind-set of writing code “the right way, the first time.” This mind-set leads to a more brittle codebase that cannot support iteration as easily. Lead programmers should ensure that refactoring is a constant part of their work.

Pair Programming

Pair programming is a simple practice in principle. Two programmers sit at a workstation. One types in code while the other watches and provides input on the problem they are both tasked with solving.

This practice often creates a great deal of concern:

• “Our programmers will get half the work done.”

• “I do my best work when I am focused and not interrupted.”

• “Code ownership will be destroyed, which is bad.”

Changing personal workspaces and habits can generate a lot of fear, uncertainty, and doubt. This section will examine how the benefits of pair programming outweigh or invalidate these concerns.

Benefits of Pair Programming

Let’s look at the benefits of pair programming:

• Spreads knowledge: Pair programming isn’t about one person typing and the other watching. It’s an ongoing conversation about the problem the pair is trying to solve and the best way to solve it.

Two programmers working separately solve problems differently. If you were to compare their results, you’d find that each solution had strengths and weaknesses, because each programmer’s knowledge only partly overlaps with the other’s. The dialogue that occurs with pair programming shares knowledge and experience widely and quickly. It results in solutions that contain the “best of both worlds.”

Although this is good for experienced programmers, it is especially valuable for bringing new programmers up to speed and mentoring entry-level programmers. Pairing brings a new programmer up to speed much more quickly and rapidly improves their coding practices.

• Ensures that you get the best out of TDD: TDD requires that comprehensive tests be written for every function. Pairing makes the discipline this requires easier. First, it’s in our nature to occasionally slack off on writing the tests. From time to time, the partner reminds you to write the proper test or takes over the keyboard if you are not fully motivated. Second, it’s common to have one programmer write the tests and the other write the function that causes the tests to pass. Although this doesn’t need to become a competition between the two, it usually ensures better test coverage. When the same programmer writes both the test and the function, they may make the same oversight in both. The saying “two heads are better than one” definitely applies to pair programming!

• Eliminates many bottlenecks caused by code ownership: How many times have you been concerned about a key programmer leaving the company in midproject or getting hit by the proverbial bus that drives around hunting down good programmers? Pairing solves some of this by having two programmers on every problem. Even if you are lucky enough not to lose key programmers, they are often too busy with other tasks to quickly solve a critical problem that arises. The shared knowledge that comes from pair programming solves these problems.

• Creates good standards and practices automatically: Have you ever been faced with the problem, late in a project, that one of your programmers has written thousands of lines of poor-quality code that you depend on and it is causing major problems? Management often tries to solve this problem by defining “coding standards” and conducting peer reviews.

The problem with coding standards is that they are often hard to enforce and are usually ignored over time. Peer reviews of code are a great practice, but they usually suffer from not being applied consistently and often occur too late to head off the problem.

Pair programming can be thought of as a continuous peer review. It catches many bad coding practices early. As pairs mix, a company coding standard emerges and is improved daily. It doesn’t need to be written down because it is documented in the code and embedded in the heads of every programmer.

• Focuses programmers on programming: When programmers start pairing, it takes several days to adjust to the unrelenting pace. The reason is that they do nothing but focus on the problem the entire day. Mail isn’t read at the pair station. The Web isn’t surfed. Shared e-mail stations can be set up for when a programmer wants to take a break and catch up on mail. E-mail clients and web browsers are a major distraction from the focus that needs to occur for programming.

Experience

At High Moon Studios, we didn’t enforce pair programming 100% of the time. For example, programmers didn’t always pair up to solve simple bugs. However, it was done long enough to become second nature. Had we abandoned pair programming, I would have wanted to make sure that we retained the same benefits with whatever practices replaced it.

Problems with Pairing

There are some problems to watch out for with pair programming:

• Poor pair chemistry: Some pair combinations should be avoided, namely, those where the chemistry does not work and cannot be forced. Pairings work out better when they are self-selected. In rare cases, some programmers cannot be paired with anyone. Any large team switching to pair programming will have some programmers who refuse to pair. It’s OK to make exceptions for these people to program outside of pairs, but they still need a peer review of their work before they commit. As time passes, they will often do some pairing and may even switch to it. You just need to give them time and not force it.

• Pairing very junior people with very senior people: Pairing between the most experienced programmers and junior programmers is not ideal. The senior programmer ends up doing all the work at a pace the junior programmer cannot learn from. Matching junior-level programmers with mid-level programmers is better.

• Hiring issues: Make sure that every programming candidate for hire knows they are interviewing for a job that includes XP practices and what that entails. Consider a one-hour pair programming exercise with each candidate in the later stages of a hiring process. This does two things: First, it’s a great tool for evaluating how well the candidate does in a pair situation where communication is critical. Second, it gives the candidate exposure to what they are in for if they accept an offer. A small percentage of candidates admit that pairing is not for them and opt out of consideration for the job. This is best for them and for you.

Are XP Practices Better Than Non-XP Practices?

It’s difficult to measure the benefit of XP practices when the team first starts using them because the pace of new features immediately slows down. This is consistent with studies (Jeffries and Melnik 2007) showing that a pair of programmers using TDD introduces new features about 1.5 times as fast as one programmer working alone. In other words, two programmers who pair deliver new features more slowly than the same two would working separately. The additional benefits we’ve seen with XP/TDD more than offset this initial loss of productivity:

• Very high stability in builds at all times: It adds to the productivity of designers and artists as well as programmers when the build is not constantly crashing or behaving incorrectly.

• Post-production debugging demands vastly reduced: More time is spent tuning and improving gameplay rather than fixing bugs that have been postponed.

• Practices that suit Scrum: Scrum’s iterative approach brings a higher level of change. TDD helps retain stability through this change.

• Less wasted effort: A project wastes less time reworking large systems by avoiding large architectures written before the requirements are fully known.

Debugging

One of the biggest differences between an agile game development project and a traditional game development project has to do with how bugs are addressed. In many projects, finding and fixing bugs does not happen until the end when QA focuses on the game. The resulting rush to fix defects usually leads to crunch.

One of the ideals of agile game development is to eliminate the “post-alpha bug-fixing crunch.” By adding QA early in the project and addressing bugs as we find them, we significantly reduce the amount of time and risk during the alpha and beta portions of a project.

Debugging in Agile

An agile project approaches bugs differently. Fixing bugs is part of the work that needs to happen before a feature is completed each sprint. In an agile project, we are trying to minimize debt, especially the debt of defects, since the cost of fixing those bugs increases over time. Although QA is part of an agile team, this doesn’t relieve the other developers from the responsibility of testing their own work.

When a bug is identified, we either add a task to fix it in the sprint backlog or a story to fix it in the product backlog.

Adding a Task to Fix a Bug to the Sprint Backlog

When a bug is found that relates to a sprint goal and it’s small enough to fix, a task to do so is added to the sprint backlog. Fixing bugs is part of development velocity. Adopting better practices to avoid defects increases velocity.

If enough bugs arise during a sprint, the team may not complete all of its user stories.

Adding a Bug to the Product Backlog

Sometimes a problem is uncovered that does not impact a sprint goal, cannot be solved by the team, or is too large to fix in the remaining time of the sprint. For example, if a level production team that contains no programmers uncovers a flaw with AI pathfinding on a new level, a user story to fix this is created, added to the product backlog, and prioritized by the product owner. Often such bugs are addressed in a hardening sprint if the product owner decides to call for one (see Chapter 6, “Agile Planning”).

If the team is uncertain about which backlog the bug belongs on, they should discuss it with the product owner.
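The routing rules in this section can be summarized as a small decision function. This Python sketch is only illustrative. The `Bug` fields, the use of hours to measure remaining sprint time, and all the names are assumptions. Real triage remains a team judgment call, and it includes a conversation with the product owner when the team is unsure.

```python
from dataclasses import dataclass
from enum import Enum


class Destination(Enum):
    SPRINT_BACKLOG_TASK = "task on the sprint backlog"
    PRODUCT_BACKLOG_STORY = "story on the product backlog"


@dataclass
class Bug:
    summary: str
    relates_to_sprint_goal: bool
    team_can_fix: bool        # e.g., False for a team with no programmers
    estimated_hours: float    # hypothetical unit; teams may estimate differently


def triage(bug: Bug, hours_left_in_sprint: float) -> Destination:
    """Route a bug to the sprint backlog or the product backlog."""
    fits_in_sprint = bug.estimated_hours <= hours_left_in_sprint
    if bug.relates_to_sprint_goal and bug.team_can_fix and fits_in_sprint:
        return Destination.SPRINT_BACKLOG_TASK
    # Anything else becomes a story that the product owner prioritizes.
    return Destination.PRODUCT_BACKLOG_STORY


# The pathfinding example: a level production team with no programmers.
pathfinding_bug = Bug("AI pathfinding fails on new level",
                      relates_to_sprint_goal=False, team_can_fix=False,
                      estimated_hours=16)
print(triage(pathfinding_bug, hours_left_in_sprint=40).value)
```

For the pathfinding example, this prints `story on the product backlog`.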

A Word About Bug Databases

One rule I strongly encourage on every agile team is to avoid bug-tracking tools and databases before the “feature complete” goal (often called alpha). The tools aren’t bad, but they encourage the attitude that identifying a bug and entering it in a database is “good enough for now.” It’s not. In many cases, that attitude is a root cause of the crunch at the end of a project.

When a team enters alpha and the publisher ramps up their off-site QA staff, then a bug database may become necessary.

Optimization

Like debugging, optimization is often left for the end of a project. Agile game development projects spread optimization across the project as much as possible. Unfortunately, for projects that have large production phases and a single true release, much of the optimization must be left until the end of the project, when the entire game is playable.

Knowledge is the key element that helps decide between what is optimized early in the project and what is optimized in post-production. Projects optimize early to gain knowledge about the following:

• Is the game (feature, mechanic, and so on) fun? It’s difficult to know what is fun when the game is playing at 10 fps. There must be an ongoing effort to avoid a debt of badly written code or bloated test assets that need to be redone in post-production.

• What are the technical and asset budgets for production? Projects shouldn’t enter production until the team is certain about the limitations of the engine and tool sets. Knowing these limitations greatly reduces wasted rework. The following are examples:

• How many AI characters can be in the scene at any one time? Often this variable depends on other parameters. For example, a game may afford more AI characters in a simpler scene that frees up some of the rendering budget for AI.

• What is the static geometry budget? This should be established early, and it should be very conservative. As the game experience is polished with special effects and improved textures, the budget often shrinks. In post-production, it’s easier to add static geometry detail than to remove it from scenes.

• What work will be necessary to get the game working on the weakest platform? Sometimes the weakest platform is the most difficult to iterate on. It’s better to know as early as possible if separate assets need to be created for another platform!

• Does the graphics-partitioning technology work? Don’t count on some “future technical miracle” to occur that enables levels to fit in memory or render at an acceptable frame rate. How much work needs to be done to make a culling system work? Make sure the level artists and designers know how a culling system works before they lay out the levels.
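A back-of-the-envelope calculation can illustrate the dependency between AI and rendering budgets. This Python sketch assumes that rendering, AI, and other systems share a single per-frame time budget at 30 frames per second. That is a simplification, since engines often run these systems on separate threads or processors. The function name and every measurement in it are made up for illustration.

```python
import math

FRAME_BUDGET_MS = 1000.0 / 30  # 30 frames per second: about 33.3 ms per frame


def max_ai_characters(render_ms: float, other_systems_ms: float,
                      cost_per_ai_ms: float,
                      frame_budget_ms: float = FRAME_BUDGET_MS) -> int:
    """Estimate how many AI characters fit in the time left in a frame.

    Assumes one shared per-frame time budget; the inputs would come from
    profiling the actual game.
    """
    remaining_ms = frame_budget_ms - render_ms - other_systems_ms
    if remaining_ms <= 0:
        return 0
    return math.floor(remaining_ms / cost_per_ai_ms)


# Hypothetical measurements: the simpler scene costs less to render,
# so more of the frame is left for AI.
busy_scene = max_ai_characters(render_ms=22.0, other_systems_ms=6.0, cost_per_ai_ms=0.5)
simple_scene = max_ai_characters(render_ms=16.0, other_systems_ms=6.0, cost_per_ai_ms=0.5)
print(busy_scene, simple_scene)  # 10 22
```

Memory is a separate budget that would need the same treatment.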

So, what optimizations are left until post-production? These include lower-risk optimizations such as those made to the assets created in production to reduce their resource footprint. Such optimizations are best made after the entire game is fully playable. Here are some examples of post-production optimization:

• Disc-streaming optimization: Organize the data on the hard drive and disc so that it streams in efficiently. This is always prototyped to some degree in pre-production.

• AI spawn optimization: Spread out the loading and density of AI characters to balance their use of resources.

• Audio mixing: Simplify the audio streams, and premix multiple streams when possible.

A project can’t discount the benefits of engine improvements made during production. At the same time, it can’t count on them. It’s a judgment call about where to draw the line. As a technical customer, I set a measurable performance bar that the project must meet throughout development, as part of the definition of done for releases. An example of a release definition of done is as follows:

• 30 frames per second (or better) for 50% of the frames

• 15 to 30 frames per second for 48% of the frames

• Less than 15 frames per second for 2% of the frames

• Loading time on the development station of less than 45 seconds

These standards are measurable, and violations are caught quickly by test automation. They might not be stringent enough to ship with, but they are acceptable for “magazine demo” quality releases.
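To illustrate how such a bar can be checked automatically, here is a minimal Python sketch. It buckets frame times captured during an automated playthrough and tests them against the example thresholds. The capture format (a list of frame times in milliseconds), the names, and the sample data are all hypothetical. The sketch reads the list as requiring at least 50% of frames at 30 fps or better and no more than 2% below 15 fps.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PerformanceReport:
    pct_30_fps_or_better: float
    pct_15_to_30_fps: float
    pct_below_15_fps: float
    load_time_s: float

    def meets_release_bar(self) -> bool:
        # Example release definition of done: at least 50% of frames at
        # 30 fps or better, no more than 2% below 15 fps, load under 45 s.
        return (self.pct_30_fps_or_better >= 50.0
                and self.pct_below_15_fps <= 2.0
                and self.load_time_s < 45.0)


def analyze(frame_times_ms: Sequence[float], load_time_s: float) -> PerformanceReport:
    """Bucket captured frame times (assumed positive) by the frame rate each implies."""
    if not frame_times_ms:
        raise ValueError("no frames captured")
    n = len(frame_times_ms)
    fps = [1000.0 / t for t in frame_times_ms]
    fast = sum(1 for f in fps if f >= 30.0)
    slow = sum(1 for f in fps if f < 15.0)
    return PerformanceReport(
        pct_30_fps_or_better=100.0 * fast / n,
        pct_15_to_30_fps=100.0 * (n - fast - slow) / n,
        pct_below_15_fps=100.0 * slow / n,
        load_time_s=load_time_s,
    )


# Hypothetical capture from an automated playthrough: 100 frame times.
capture = [20.0] * 60 + [40.0] * 39 + [80.0] * 1
report = analyze(capture, load_time_s=38.0)
print(report.meets_release_bar())  # True
```

A nightly automated test could run a capture like this and flag the build as soon as `meets_release_bar()` returns `False`.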

Staying Within Technical and Asset Budgets Throughout the Project

The benefits of having the game run at shippable frame rates throughout development are vast. We get a much truer experience of the game as it emerges when it runs “within its means” at all times. However, there is an ongoing give-and-take between iterative discovery and incremental value. For example, developers may want to experiment with having 24 AI characters rush the player in an “AI wave” to find out whether it’s fun. Do they have to optimize the entire AI system to handle 24 characters in the experiment? Of course not. But even if the experiment shows that the feature would add a lot of value to the game, we have only improved our understanding, not the game. We have iterated to improve our knowledge, but we haven’t yet incremented the value of the game.

Too often we’ll add such a scene to the game without enough optimization. When we do, we create debt that we have to pay back later. This payback cost could be large, so large that we can’t afford it and have to eliminate the mechanic altogether. This could occur for many reasons; here are some examples:

• The AI character models are too complex to afford 24 in the scene.

• Spawning a wave of 24 AI characters causes a one-second pause, which violates a first-party technical requirement (for example, TCR/TRC).

The list could go on. What can we do to reduce this debt and truly increment the value of the game? We need to do some spikes to determine the optimization debt and influence the product backlog to account for it. If a spike reveals these two problems, we could address them in the following ways:

• Plan for simple models with a smaller number of bones to populate characters in the wave.

• Implement an interleaved spawning system (like a round-robin) to spawn the characters one per frame over a second.

Both of these backlog items enable the product owner to weigh the cost of the AI wave against the value we learned in the test. This makes cost and value the deciding factors, rather than the need to ship the game on time.
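The interleaved spawning item might look something like the following minimal Python sketch. Spawn requests go into a queue that is drained at a fixed number per frame, so the cost of a 24-character wave is spread across frames instead of landing in one. The class and method names are hypothetical. A real system would also have to budget model loading and AI initialization.

```python
from collections import deque
from typing import Deque, Iterable, List


class InterleavedSpawner:
    """Spread a wave of spawns over several frames instead of one.

    Hypothetical sketch: a queue drained at a fixed number of spawns per
    frame, so no single frame pays the whole cost of the wave.
    """

    def __init__(self, spawns_per_frame: int = 1) -> None:
        self.spawns_per_frame = spawns_per_frame
        self.pending: Deque[str] = deque()

    def request_wave(self, character_ids: Iterable[str]) -> None:
        self.pending.extend(character_ids)

    def update(self) -> List[str]:
        """Call once per frame; returns the characters spawned this frame."""
        spawned: List[str] = []
        while self.pending and len(spawned) < self.spawns_per_frame:
            character_id = self.pending.popleft()
            # A real game would create the entity and its model here.
            spawned.append(character_id)
        return spawned


spawner = InterleavedSpawner(spawns_per_frame=1)
spawner.request_wave(f"ai_{i}" for i in range(24))
frames = 0
while spawner.pending:
    spawner.update()
    frames += 1
print(frames, "frames")  # 24 frames
```

At one spawn per frame, the 24-character wave takes 24 frames, or 0.8 second at 30 frames per second.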

Many decisions of this type need to be made early. When they are not made, the optimization debt often becomes overwhelming. Watching for it requires an objective eye. Too many times we view these features with the “developer’s eye” and overlook the flaws that frustrate our stakeholders.

Summary

This chapter explored the XP practices of test-driven development and pair programming and the principles behind them. These practices support agility because they move programmers away from separate design, code, and test phases of development into iterative daily practices where these activities are mixed. They create code that matches emergent requirements, is more maintainable, and is of higher quality.

The next several chapters will explore the other game team disciplines and how their practices can be adapted for more agility as well.

Additional Reading

Beck, K. 2004. Extreme Programming Explained, Second Edition. Boston: Addison-Wesley.

Brooks, F. 1995. The Mythical Man-Month, Second Edition. Boston: Addison-Wesley.

McConnell, S. 2004. Code Complete, Second Edition. Redmond, WA: Microsoft Press.