Chapter 1. The Crisis Facing Game Development

The pioneer days of video game development have all but disappeared. The sole programmer—who designed, programmed, and rendered the art on their own—has been replaced by armies of specialists. An industry that sold its goods in Ziploc bags now rakes in more cash than the Hollywood box office. As an industry, we’ve matured a bit.

However, in our rush to grow up, we’ve made some mistakes. We’ve inherited some discredited methodologies for making games from other industries. Like children wearing their parents’ old clothes, we’ve frocked ourselves in ill-fitting practices. We’ve met the uncertainty and complexity of our projects with planning tools and prescriptive practices that are more likely to leave a “pretty corpse” at the end of the project than a hit game on the shelves. We’ve created a monster that has removed much of the fun from making fun products. This monster eats the enthusiasm of extremely talented people who enter the game development industry with hopes of entertaining millions. Projects capped with months of overtime (aka crunch) feed it. A high proportion of developers are leaving the industry and taking years of experience with them. It doesn’t need to be this way.

In this chapter, we’ll look at the history of game development and how it has evolved from individuals making games every few months to multiyear projects that require more than 100 developers. We will see how the business model is headed down the wrong path. We will set the stage for why agile development methods are a way of changing the course that game development has taken over the past decade. The goals are to ensure that game development remains a viable business and to ensure that the creation of games is as fun as it should be.

Note

This chapter will use “AAA” arcade or console games as the main examples of cost, because they’ve been around the longest.

A Brief History of Game Development

In the beginning, video game development didn’t require artists, designers, or even programmers. In the early seventies, games were dedicated boxes of components that were hardwired together by electrical engineers for a specific game. These games first showed up in arcades and later in home television consoles that played only one game, such as Pong.

As the technology progressed, game manufacturers discovered that new low-cost microprocessors offered a way to create more sophisticated games; programmable hardware platforms could run a variety of games rather than being hardwired for just one. This led to common motherboards for arcade machines and eventually to popular home consoles with cartridges.1 The specific logic of each game moved from hardware to software. With this change, the game developers turned to programmers to implement games. Back then, a single programmer could create a game in a few months.

1 Circa 1977 with the release of the Atari 2600 console

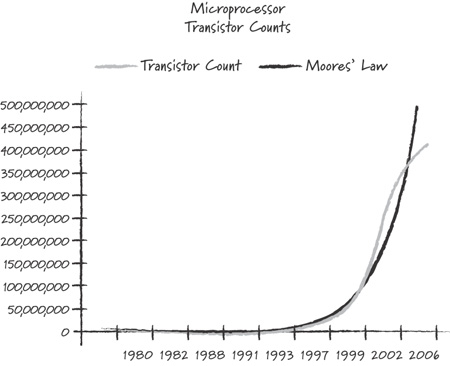

In 1965, Gordon Moore, who later cofounded Intel, observed that the number of transistors that could fit on a chip was doubling at a regular pace; in the form usually quoted today, his law predicts a doubling about every two years. The law has held for the past four decades (see Figure 1.1).

Figure 1.1. The number of transistors in PC microprocessors

Moore’s law: www.intel.com/technology/mooreslaw/index.htm

The home computer and console markets have been driven by this law. Every few years a new generation of processors rolls off the fabrication lines, the performance of which dwarfs that of the previous generation. Consumers have an insatiable thirst for the features2 this power provides, while developers rush to quench that thirst with power-hungry applications. To game developers, the power and capability of home game consoles were doubling every two years (processor speeds increased, graphics power increased, and memory size increased), all at the pace predicted by Moore.

2 Realistic physics, graphics, audio, and so on
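As a rough illustration of what "doubling every two years" compounds to, the following minimal sketch computes the growth multiple over a given span. The function name and the sample time spans are assumptions chosen for illustration; they are not figures from this chapter.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return the growth multiple after `years` if capacity doubles
    once every `doubling_period_years` (two years by default)."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Sample spans are illustrative only.
    for years in (2, 10, 20, 30):
        print(f"{years:>2} years -> x{growth_factor(years):,.0f}")
```

Because the growth is exponential, each additional decade multiplies capacity by another factor of 32 under this idealized model.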

Each generation of hardware brought new capabilities and capacities. 3D rendering, CD-quality sound, and high-definition graphics bring greater realism and cost to each game. Memory and storage have increased just as fast. Thirty years ago, the Atari 2600 had fewer than 1,000 bytes of memory and 4,000 bytes of cartridge space. Today a PlayStation 3 has 500,000 times the memory and 10,000,000 times the storage! Processor speeds and capabilities have grown just as dramatically.

Iterating on Arcade Games

The model first used to develop games was a good match for the hardware’s capabilities and the market. In the golden age of the video arcade, during the late seventies and early eighties, games like Pac-Man, Asteroids, Space Invaders, and Defender were gold mines. A single $3,000 arcade machine could collect more than $1,000 in quarters per weekend. This new gold rush attracted quite a few prospectors. Many of these “wanna-be” arcade game creators went bankrupt in their rush to release games. A manufacturing run of 1,000 arcade machines required a considerable investment—an investment that was easily destroyed if the machines shipped with a poor game.

With millions of dollars of investment at stake, arcade game developers sought the best possible game software. Developing the game software was a tiny fraction of the overall cost, so it was highly effective to throw bad games out and try again—and again—before committing to manufacturing hardware dedicated to a game. As a result, game software development was highly iterative. Executives funded a game idea for a month of development. At the end of the month, they played the game and decided whether to fund another month, move to field-testing, or simply cancel the game.

Companies such as Atari field-tested a game idea by placing a mocked-up production machine in an arcade alongside other games. Several days later Atari would count the quarters in the machine and decide whether to mass-produce it, tweak it, or cancel it outright. Some early prototypes, such as Pong, were so successful that their coin collection boxes overflowed and led to failure of the hardware even before the end of the field-test (Kent 2001)!

This iterative approach helped fuel the release of consistently high-quality games from companies like Atari. The market decline in the mid-eighties was caused by the increased proportion of inferior games released because of falling hardware costs. The cartridge-based home consoles allowed almost anyone to create and mass-produce games cheaply. The financial barrier of high distribution costs disappeared, as did much of the disciplined iteration that had previously ensured only better games were released. When the market became flooded with poor-quality games, consumers spent their money elsewhere.

Early Methodologies

In the dawn of video game development, a single person working on a game didn’t need much in the way of a “development methodology.” A game could be quickly developed in mere months. As the video game hardware became more complex, the cost to create games rose. A lone programmer could no longer write a game that leveraged the full power of evolving consoles. Those lone programmers needed help. This help came increasingly from bigger project teams and specialists. For example, the increase in power in the graphics hardware allowed more detailed and colorful images on the screen; it created a canvas that needed true artists to exploit. Software and art production became the greater part of the cost of releasing a game to market.

Within a decade, instead of taking three or four people-months to create a game, a game might take thirty or forty people-months.

To reduce the increasing risk, many companies adopted waterfall-style methodologies used by other industries. Waterfall is forever associated with a famous 1970 paper by Winston Royce.3 The waterfall methodology employed the idea of developing a large software project through a series of phases. Each phase led to a subsequent phase more expensive than the previous. The initial phases consisted of writing plans about how to build the software. The software was written in the middle phase. The final phase was integrating all the software components and testing the software. Each phase was intended to reduce risk before moving on to more expensive phases.

3 http://en.wikipedia.org/wiki/Waterfall_model#CITEREFRoyce1970

Many game development projects use a waterfall approach to development. Figure 1.2 shows typical waterfall phases for a game project.

Figure 1.2. Waterfall game development

Waterfall describes a flow of phases; once design is done, a project moves to the analysis phase and so on. Royce described an iterative behavior in waterfall development, which allowed earlier phases to be revisited. Game development projects also employ this behavior, often returning to redesign a feature later in development when testing shows a problem. However, on a waterfall project, a majority of design is performed early in the project, and a majority of testing is performed late.

Ironically, Royce’s famous paper illustrated how this process leads to project failure. In fact, he never used the term waterfall; unfortunately, the association stuck.

The Death of the Hit-or-Miss Model

In the early days of the game industry, a hit game could pull in tens of millions of dollars for a game maker. This was a fantastic return on investment for a few months of effort. Profits like these created a gold rush. Many people tried their hand at creating games with dreams of making millions. Unfortunately, a very small percentage of games made such profits. With the minimal cost of making games, however, game developers could afford to gamble on many new innovative titles in hopes of hitting the big time. One hit could pay for many failures. This is called the hit-or-miss publishing model.

Sales have continued to grow steadily over the 30 years of the industry’s existence.4 Figure 1.3 shows the sales growth for the total video game market from 1996 to 2008. This represents a steady growth of about 10% a year. Few markets can boast such consistent and steady growth.

4 Except for the occasional market crashes every decade!

Figure 1.3. Market sales for video games

Source: Multiple, M2R, NPD, CEA, DFC
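To get a feel for what steady growth of about 10% a year adds up to over the 1996 to 2008 period, the rate can be compounded. This is a minimal sketch that treats the 10% figure as exact and constant, which is a simplifying assumption; the function name is hypothetical.

```python
def compound_growth_factor(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    # Assumes a constant 10% annual rate across the whole period.
    factor = compound_growth_factor(0.10, 2008 - 1996)
    print(f"Market size multiple, 1996 to 2008: about x{factor:.2f}")
```

Under that assumption, the market a little more than triples over the twelve years.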

Although hardware capabilities followed Moore’s law, the tools and processes employed to create the games did not. By the nineties, small teams of people were required to create games, and the games often took longer than several months to finish. This raised the cost of creating games proportionally, and costs have continued to rise, roughly following Moore’s law. The effort required (measured in people-years) has kept growing to this day (see Figure 1.4).

Figure 1.4. People-years to make “AAA” games

Source: Electronic Entertainment Design and Research

The growth in effort to create a game has been much greater than the market’s growth. The number of games released each year hasn’t diminished significantly, and the price of a game for the consumer has risen only 25% (adjusted for inflation).5 This has greatly reduced the margin of the hit-or-miss model. Now a hit pays for fewer misses because the misses cost hundreds of times more than they did 30 years ago. If the trend continues, soon every major title released will have to be a hit just for a publisher to break even.

5 Electronic Entertainment Design and Research

Note

According to Laramee (2005), of the games released to the market, only 20% will produce a significant profit.

People-Years

It’s almost impossible to compare the cost of making games through the decades. I use the phrases people-years and people-months to compare effort across time. “Ten people-years” equals the effort of five people for two years or ten people for one year.
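The unit is simple arithmetic: head count multiplied by duration. The sketch below is only an illustration of that definition; the function names are hypothetical, and the 100-person, two-year project is an assumed example rather than a figure from this chapter.

```python
def people_years(people: int, years: float) -> float:
    """Effort in people-years: head count multiplied by duration in years."""
    return people * years


def people_months_to_years(people_months: float) -> float:
    """Convert an effort expressed in people-months to people-years."""
    return people_months / 12.0


if __name__ == "__main__":
    # Both staffing plans from the text represent the same effort.
    print(people_years(5, 2), people_years(10, 1))
    # Assumed example: 100 developers working for two years.
    print(people_years(100, 2))
    # A game that took four people-months, expressed in people-years.
    print(people_months_to_years(4))
```

Note that the unit measures effort only. It says nothing about how well the work divides among people, so equal people-years do not imply equal schedules.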

The Crisis

Projects with more than 100 developers, with costs exceeding tens of millions of dollars to develop, are now common. Many of these projects go over budget and/or fail to stay on schedule. Most games developed are not profitable. The rising cost of game development and the impending death of the hit-or-miss model have created a crisis for game development in three main areas: less innovation, less game value, and a deteriorating work environment for developers.

Less Innovation

We will never create hit games every time, so we need to find ways to reduce the cost of making games and to catch big “misses” long before they hit the market. One unfortunate trend today is to attempt to avoid failure by taking less risk. Taking less risk means pursuing less innovation. A larger proportion of games are now sequels and license-based “safe bets” that attempt to ride on the success of previous titles or popular movies.

Innovation is the engine of the game industry. We cannot afford to “throw out the baby with the bath water.”

Less Value

Reducing cost has also led to games that provide less content. This shows up as a reduction in the average gameplay time that today’s games provide. In the eighties, a typical game often provided more than forty hours of gameplay. These days, games can often be completed in less than ten hours.

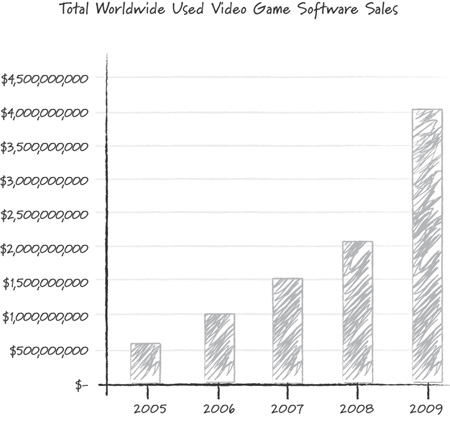

This reduction in value has had a significant impact on the market. Consumers are far less willing to pay $60 for a game that provides only ten hours of entertainment. As a result, the game rental and secondhand sales markets have blossomed (see Figure 1.5). Each rental represents the potential loss of a sale.

Figure 1.5. The growth of the used-games market

Source: M2Research

Deteriorating Work Environment

With predictability in schedules slipping and development costs skyrocketing, developers are bearing a greater burden. They are asked to work extended overtime hours in an effort to offset poor game development methods. Developers often work twelve hours a day, seven days a week, for months at a time to hit a critical date; lawsuits concerning excessive overtime are not uncommon (for example, see http://en.wikipedia.org/wiki/Ea_Spouse).

Talented developers are leaving the industry because they are faced with choosing between making games and having a life outside of work. The average developer leaves the industry before their ten-year anniversary.6 This prevents the industry from building the experience and leadership necessary to provide innovative new methods to manage game development.

6 www.igda.org/quality-life-white-paper-info

A Silver Lining

There is a silver lining. The market is forcing us to face reality. Other industries have faced a similar crisis and improved themselves.

We need to transition as well. The game market is healthy. New gaming platforms such as the iPhone and online content distribution models, to name a few, offer new markets for smaller projects. The industry is still in its infancy and looks to change itself completely in the next ten years. It makes sense that we explore new ways for people to work together to overcome this growing crisis.

This book is about different ways to develop games. It’s about ways people work together in environments that focus talent, creativity, and commitment in small teams. It’s about “finding the fun” in our games every month—throwing out what isn’t fun and doubling down on what is. It’s not about avoiding plans but about creating flexible plans that react to what is on the screen.

This book applies agile methodologies, mainly Scrum but also Extreme Programming (XP) and lean, to game development. It shows how to apply agile practices to the unique environment of game development; these are practices that have been proven in numerous game studios. In doing this, we are setting the clock back to a time when making a game was more a passionate hobby than a job. We are also setting the clock forward to be ready for the new markets we are starting to see now, such as the iPhone and more downloadable content.

Additional Reading

Bagnall, B. 2005. On the Edge: The Spectacular Rise and Fall of Commodore. Winnipeg, Manitoba: Variant Press.

Cohen, S. 1984. Zap: The Rise and Fall of Atari. New York: McGraw-Hill.