DIGITAL ELEMENT CREATION

DIGITAL MODELING

Kevin Hudson

Overview: The Importance of Modeling

The pipeline for digital asset creation begins with digital modeling. It is the birthplace for a digital asset, and it is here that the basic aesthetic form and technology are laid. If they are not done well, this is the place where most problems for the future pipeline begin. Modeling is as important to the creation of an asset as the foundation is to the construction of a house. If the model is solid, all of the other crafts from rigging to painting to color and lighting can be done with far greater ease.

Types of Modeling

Digital modeling can be broken up into four types: character, prop, hard surface/mechanical, and environmental.

Character Modeling

Character modeling assets are assets that will need to move in some fashion. For the most part, they can be classified as actors. These would easily include such models as a cuddly Stuart, from Stuart Little (1999), the hyperrealistic Dr. Manhattan from Watchmen (2009), the stylized characters from The ChubbChubbs (2002), or digital stand-ins for background people such as panicking or stampeding crowds from any number of action films.

Character modeling calls on the true sculptural talent of the modeler. In recent years, software that more and more resembles a sculpting experience has evolved. This type of software includes ZBrush, Freeform 3D, 3D-Coat, and Mudbox.

Figure 7.1 Stuart Little (1999) is just one example of a digital character asset. (STUART LITTLE © 1999 Global Entertainment Productions GmbH & Co. Medien KG and SPE German Finance Co. Inc. All rights reserved. Courtesy of Columbia Pictures.)

Prop Modeling

Prop models tend to be items that do not necessarily move of their own accord. They are handled or acted on by characters. This does not mean that they will not need to be rigged for movement to some degree, but they are often rigged to a much lesser degree than a character model.

Props are still incredibly important assets and can be either soft or mechanical. Often digital props are re-creations of real-world counterparts. In that case, gathering quality reference is vital to the success of re-creating these assets. Whenever possible, the real-world item should be made available to the modeler. Because this is often not practical, getting good photo reference with measurements is the next best thing.

Hard Surface/Mechanical Modeling

Hard surface modeling has been broken out as its own distinct type of modeling. Cars, trains, helicopters, and highly designed technical items fall into this category.

Environmental Modeling

At a certain point, the line blurs between what separates a prop from an environment. In the case of the train in The Polar Express (2004), it functions as a very complicated prop or hard surface model. But it is also a model that characters walk through and perform within. This is where it enters the realm of environmental modeling.

Figure 7.2 A motorcycle is a classic example of a hard surface/mechanical model. (Image © 2007 Sony Pictures Imageworks Inc. All rights reserved.)

Believable versus Real

In motion picture storytelling, it is more important to be believable than it is to be real. Since the software used for modeling has its roots in mechanical engineering, it is all too seductive to get caught up in attempting to make something mechanically real. Production budgets are built around what it will take for modelers to assist in the creation of something that is believable and helps to tell the story. Many people who come out of an industrial design background tend to overengineer their models yet learn that the requirements, though certainly high, are not as precise as might be needed in something that would actually be built.

Model Data Types

The three main data types for models are polygons, NURBS, and subdivision surfaces. Another emerging type is voxel technology.

Polygons: Part 1

The first data type used for entertainment purposes was the polygon data type. A polygon is a basic piece of geometry defined by three points or vertices—a triangular face. A model is made by assembling many of these triangles until a more complicated form is created. Originally, polygons were textured using a series of texture projection techniques.

Figure 7.3 Meeper is an example of a multi-patch NURBS Model. (Image © 2002 Sony Pictures Imageworks Inc. All rights reserved.)

If multiple planar projections were to be used, an alpha channel would be created to fade off one projection and fade in another. This technique had tremendous inherent limitations and obstacles. Chief among them was stretching of the texture as it approached areas where the normal of the surface would move toward being parallel with the projection plane.
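The stretching problem can be illustrated with a minimal sketch. It assumes an orthographic planar projection along a unit direction and a unit surface normal; the function names `planar_uv` and `stretch_factor` are hypothetical and not taken from any particular package.

```python
import math


def planar_uv(point, u_axis=(1.0, 0.0, 0.0), v_axis=(0.0, 1.0, 0.0)):
    """Project a 3D point onto the plane spanned by u_axis and v_axis."""
    u = sum(p * a for p, a in zip(point, u_axis))
    v = sum(p * a for p, a in zip(point, v_axis))
    return (u, v)


def stretch_factor(normal, projection_dir=(0.0, 0.0, 1.0)):
    """Approximate texture stretch for a surface with the given unit normal.

    As the normal tilts toward the projection plane, one texel has to
    cover 1/|cos(theta)| times more surface, where theta is the angle
    between the normal and the projection direction.
    """
    cos_theta = abs(sum(n * d for n, d in zip(normal, projection_dir)))
    if cos_theta < 1e-9:
        return math.inf  # surface is edge-on to the projection
    return 1.0 / cos_theta
```

A surface facing the projector has a factor of 1, a surface tilted 60 degrees away has a factor of about 2, and the factor grows without bound as the surface turns edge-on.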

NURBS

The second data type emerged out of the design industry and it solved many of the texture projection issues. NURBS (Non-Uniform Rational B-Splines) have texture coordinates (UVs) already associated with the geometry. A NURBS plane is essentially a mathematically defined surface that uses a B-spline algorithm to generate a patch. A model built in NURBS is a series of these patches quilted together. An average character model may be made up of hundreds of these NURBS patches.

Although using NURBS patches solved many texturing issues, it introduced considerable file management overhead. For each NURBS patch, a unique texture map was created. This meant that for color alone there were hundreds of texture maps to paint and manage throughout a production. Adding bump and specular texture maps would multiply the count by 3.

Polygons: Part 2

Polygons became popular again when techniques emerged to manipulate the UV structure of polygons. Modelers would unwrap an object in UV space and modify the UVs to avoid the stretching pitfalls of the traditional texture projection techniques. This technique of creating a UV layout allowed for superior texturing.

At this time, polygons also began using a tessellation technique whereby the polygonal geometry would be subdivided and smoothed at render time. This allowed the polygonal geometry to function more like NURBS with a control “cage” model, and the tessellated, or subdivided “limit,” surface derived from it. Though different from a true subdivision surface, it is often referred to as such.
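The relationship between a coarse cage and its smoothed result can be sketched in two dimensions. The example below uses Chaikin corner cutting on a closed polygon, which is only a curve analog chosen for brevity; it is an assumption for illustration, not the scheme any particular renderer applies to surfaces.

```python
def chaikin_subdivide(cage, iterations=1):
    """Subdivide and smooth a closed 2D polygon by corner cutting.

    Each pass replaces every edge with two points at 1/4 and 3/4 of its
    length, doubling the point count and rounding off the corners.
    """
    points = list(cage)
    for _ in range(iterations):
        refined = []
        count = len(points)
        for i in range(count):
            (x0, y0), (x1, y1) = points[i], points[(i + 1) % count]
            refined.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))
            refined.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))
        points = refined
    return points
```

The modeler edits only the four-point cage; the denser, smoother outline is derived from it on demand, which mirrors the cage and limit-surface split described above.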

Subdivision Surfaces

A true subdivision surface is different from tessellated polygons, though it is common to hear tessellated polygons called a subdivision surface. This is a data type that is probably going to be retired in the near future because it never found acceptance and tools were never developed to support it.

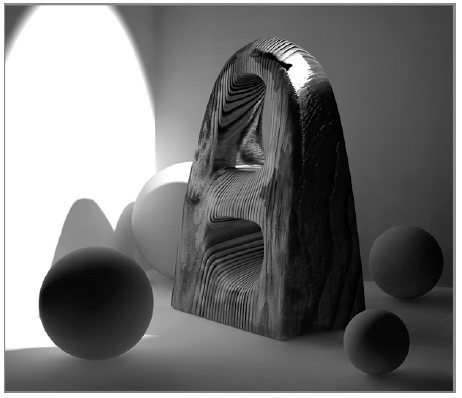

Voxels

In the not too distant future, modeling may be done using volumetric pixels (voxels). This is most similar to clay in that the model is made up of actual volumetric material. All of the models described above are merely exterior shells. Since voxels are geometry independent, they will provide the most freedom to the modeler. They will allow modelers to easily cut and paste portions of a model, without having to think about how to tie the faces into the model geometrically. It is very exciting technology and has made its way into visualization and medical communities.
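Why cut and paste is simple with voxels can be shown with a small sketch. It assumes a model is stored as a set of integer voxel coordinates; the helper names `box`, `cut`, and `paste` are hypothetical.

```python
def box(min_corner, max_corner):
    """Return the set of integer voxel coordinates inside an axis-aligned box."""
    (x0, y0, z0), (x1, y1, z1) = min_corner, max_corner
    return {(x, y, z)
            for x in range(x0, x1)
            for y in range(y0, y1)
            for z in range(z0, z1)}


def cut(model, region):
    """Split a model into (remaining voxels, removed voxels)."""
    return model - region, model & region


def paste(model, piece, offset=(0, 0, 0)):
    """Merge a piece into a model, optionally translated by whole voxels."""
    dx, dy, dz = offset
    moved = {(x + dx, y + dy, z + dz) for x, y, z in piece}
    return model | moved
```

Because the operations are plain set arithmetic on filled cells, there are no faces or edges to re-stitch after a cut or a paste.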

Development of Models

The three basic routes for the development of digital models are free-form digital sculpting, 2D reference material, and scanned dimensional information.

Free-Form Digital Sculpting

In free-form sculpting, the digital modeler functions as a concept artist/designer and interacts directly with the Director, Production Designer, and/or VFX Supervisor. There has been a recent rise of the independent digital artist as celebrity designer who can deliver a design sculpt. In addition, several software tools have come into use lately for doing digital sculpting: Freeform 3D, ZBrush, Mudbox, and 3D-Coat.

Freeform 3D is the oldest and most expensive. It relies on the use of a haptic arm stylus and attempts to emulate a sculpting experience by use of a force-feedback interface. This system uses voxels, and topologically frees up the artist. The completed voxel model will need to be sampled and tessellated into polygons in order to export it to another package for further use.

ZBrush is probably the most common digital sculpting tool in use today. It works with polygonal data, so it does have some topological limitations, but it requires no special interface or graphics hardware, making it a very cost-effective digital sculpting solution.

Mudbox is very similar to ZBrush, but provides some very cool OpenGL preview technology. As a result, however, a higher-end workstation with an OpenGL graphics card is required.

3D-Coat is a new software package that may emerge as the first voxel modeler accessible to the masses.

With each of these technologies, the design phase should be viewed as linked but still separate from the creation of the production model. In particular, since voxels are not supported in a production pipeline, they need to be resurfaced into some form of polygonal or NURBS data. Many people build a polygonal cage on top of the design model using GSI, Topogun, or CySlice software, but most of the common modeling software packages (Maya, 3ds Max, Softimage, or Modo) will work for remeshing the design model into a more production-friendly polygonal layout.

From 2D Imagery

This is very similar to free-form, but it involves the creation of orthographic imagery (either photographic or illustrative), which is then loaded into the sculpting or modeling software as background image planes over which the model is built. Even when using the Freeform approach, it can be advantageous to do some work in 2D before proceeding to 3D.

When imagery contains perspective, as in photographic reference, it is important to record lens/location information so that matchmoved cameras may be replicated inside the modeling software to approximate the real-life camera. The more the camera approximates an orthographic view of the subject, the more the modeling process can be streamlined.
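As a minimal sketch of why the lens data matters, the horizontal field of view of a simple pinhole camera follows from the focal length and the film back (sensor) width. The function and parameter names are hypothetical, and real matchmove workflows record more than these two values.

```python
import math


def field_of_view_deg(focal_length_mm, film_back_mm):
    """Horizontal field of view, in degrees, of an ideal pinhole camera."""
    return math.degrees(2.0 * math.atan(film_back_mm / (2.0 * focal_length_mm)))
```

With a 36 mm film back, an 18 mm lens gives roughly a 90 degree view, while a 100 mm lens gives about 20 degrees. The longer lens flattens perspective and so comes closer to the orthographic view that streamlines modeling.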

From Scanned 3D Information

Scanning requires the preexistence of a 3D object. It can be anything from a digital maquette to a skyscraper. It is still common to employ a traditional sculptor to build a maquette for a character prior to going into 3D. In this case, the maquette is scanned using a laser or photographic system.

Laser scanners are the standard of scanning systems. They use a laser light projected onto the surface of an object or actor. The 3D contours of the subject are recorded and sampled into polygons. The most common laser scanning systems come from a company called Cyberware. There are several companies in the Los Angeles area, most with portable laser scanning systems: Gentle Giant, Icon Studios, Cyber F/X, XYZ/RGB, and Nexus Digital Studios, to name a few.

With systems becoming smaller and less expensive, it isn’t outside the realm of possibility for artists to purchase a scanner. Next Engine makes an affordable desktop scanner that sells for around $2,500.

Photographic-based scanning is less intrusive, requiring less equipment, time, and setup than the larger laser-based systems. Though the data is lower in resolution, the simplicity and speed of acquisition can be advantageous. Most photographic-based scanning uses a structured light approach whereby a grid of light is projected onto a subject from the camera. It is recorded and a 3D impression of the object is created. The limitation of this system is the resolution of the camera. Some photographic-based scanning systems are now using feature recognition to eliminate the need for the structured light pass. As digital resolution becomes finer, they may one day eliminate the need for laser-based scanners altogether. Realscan 3D, Eyetronics, XYZ/RGB, and Mimic Studios are examples of companies that do photographic-based scanning.

Lidar scanning is generally used for large-scale scanning such as entire buildings. The only limitation in these situations is accessibility to scanning locations. Lidar scanning is done from a nodal point, with laser beams cast outward from that location. The more locations from which the scanner can cast beams, the more complete the acquisition of the subject will be. In the case of capturing a building, the ability to cast beams from every side of the building and capture the roof may not be practical. In this case, the data will have holes, and modeling will need to extrapolate. As a result, it is important to capture as much photographic reference of the location as possible to use in addition to the lidar scanning.

Processing the scan data in these situations is best performed by the company that performed the acquisition because the quantity of data can be enormous. A company that has pioneered the use of lidar data for entertainment purposes is Lidar Services Inc. out of Seattle. Gentle Giant is also now providing larger scale lidar scanning.

Figure 7.4 Lidar, survey, and photogrammetric data are often used to build accurate building and cityscape models for Spider-Man™ 3. (Image © 2007 Sony Pictures Imageworks Inc. All rights reserved.)

Photogrammetric Data

Photogrammetry is not a replacement for lidar data, which generates a tremendous amount of information, but for some simpler objects photogrammetry may be an option. It requires less complicated equipment and is less time consuming on location. It does, however, require more post-processing and the use of specific software. PhotoModeler is probably the most common photogrammetric modeling package.

Modeling for a Production Pipeline

When creating or building a model, it is very important to take into account the needs of the major downstream partners: texture painting, character setup, cloth simulation, and color and lighting.

Texture Painting Needs

With modeling and texturing tools becoming more and more artist friendly, it is not uncommon these days for a modeler to also do texture painting. Modelers and texture painters communicate through the UV layout. If it is done well, the texture painters will be able to paint on the model with little distortion. It is not uncommon for texture painters to request alternate poses of a model to better facilitate painting.

Character Setup Needs

The Character Setup team will want the least amount of resolution possible to define a surface while still providing enough points to manipulate and define the structure of the model. While specific point counts are arbitrary and difficult to nail down, control meshes, which are delivered to Character Setup, are often in the range of 8,000 to 16,000 faces. It is not uncommon for modeling to support two meshes: the lower resolution control mesh, which in turn drives the higher resolution render mesh.
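To give a rough sense of the gap between the two meshes, the sketch below assumes an all-quad control mesh and uniform subdivision, where every level splits each quad into four. Both assumptions and the function name are illustrative only; actual render meshes are not necessarily derived this way.

```python
def render_face_count(control_faces, levels):
    """Face count after uniformly subdividing an all-quad mesh."""
    return control_faces * 4 ** levels
```

Under these assumptions, an 8,000-face control mesh reaches 128,000 faces after two levels, which is why Character Setup prefers to work on the control mesh.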

Cloth Simulation Needs

The Cloth Simulation team has needs similar to those of Character Setup. However, they have a few additional requirements. Simulated cloth needs data that can be easily converted into spring meshes. This requires a more grid-like (almost NURBS-like) layout. This is not to say that the hero cloth model needs to be completely built in this fashion, but at the very least, the basic layout of the clothing model needs to be more grid-like.
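The reason a grid-like layout converts easily can be seen in a short sketch. It assumes a common mass-spring arrangement with structural springs along rows and columns and shear springs across each quad's diagonals; the function name and the choice of spring types are assumptions, not a description of any specific cloth solver.

```python
def grid_springs(rows, cols):
    """Return (structural, shear) springs for a rows x cols vertex grid.

    Each spring is a pair of vertex indices, with vertices numbered
    row by row.
    """
    def index(r, c):
        return r * cols + c

    structural, shear = [], []
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:  # spring to the right-hand neighbor
                structural.append((index(r, c), index(r, c + 1)))
            if r + 1 < rows:  # spring to the neighbor in the next row
                structural.append((index(r, c), index(r + 1, c)))
            if r + 1 < rows and c + 1 < cols:  # both diagonals of the quad
                shear.append((index(r, c), index(r + 1, c + 1)))
                shear.append((index(r, c + 1), index(r + 1, c)))
    return structural, shear
```

On a regular grid the springs fall out of simple index arithmetic. An irregular polygonal layout offers no such rows and columns, so the spring directions would no longer align with the weave of the cloth.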

It is not uncommon for modeling to provide two products for clothing items as well: the basic cloth simulation model, which is the model discussed above, and a more detailed final hero cloth model, which is the model to be rendered. This version would contain additional details such as pockets, buttons, zippers, etc. It will also have the more subtle sculpted details such as seams and buttonholes. It may even contain an inner as well as outer surface to provide thickness. It is important that the hero cloth model not deviate too greatly in shape from the simulation model that is used to drive it. Close collaboration between modeling and the Cloth Simulation team is essential. It is very common to make adjustments to both models throughout production.

Color and Lighting

The Color and Lighting team will want enough resolution to make sure that the main details are clear and built out—relying too much on displacement and a variety of bump mapping techniques only makes their job more time consuming.

Client Servicing Approach

Modelers need to take a client servicing approach in meeting the needs of these different and often opposing groups. Collaboration and cooperation are essential to fostering this sort of team synergy and can be just as challenging as creating a great digital sculpture.

Engineering Aspects for Polygons

The reintroduction of polygons as the main data type of choice for motion picture production use has freed up the modeler from the NURBS-centric UV structure. It has cleared the way for more organic polygonal layouts and allowed artists to more accurately register the use of space in both 3D and UV.

When modeling objects, one of the most important things to keep in mind is edge flow. This is true for both NURBS and polygons, but because polygons can be so free-form, some sense of organization needs to be in place. One of the first recognized strategies uses a technique called edge loops. Edge loops simply attempt to describe the shape of the object using a series of concentric rings. What edge looping did was instill a basic modeling strategy and order on otherwise free-form meshes. It creates a mesh that is visibly ordered and would allow Character Setup to better set up their skin weights and falloffs. Edge looping is similar to NURBS, while still allowing some of the free-form advantages of working in polygons.

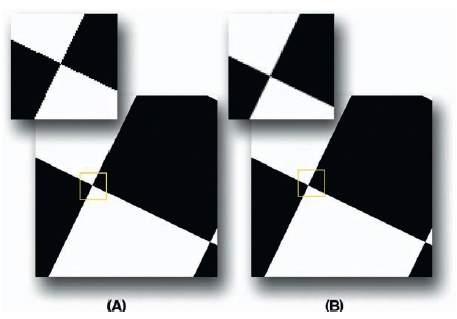

UV editing opened the door for texturing to have customized UV layouts. These layouts could be made any number of ways, and efficiencies could be introduced into the UV layout that might make the texture artist’s job easier.

Engineering Aspects for NURBS

Although NURBS are not as common as they once were, they are still used exclusively in some facilities around the globe. Sometimes they are still the best way to build certain objects. Some basic guidelines for working with NURBS include proper NURBS parameterization and proper maintenance of continuity between the different NURBS patches.

Proper NURBS parameterization entails having uniform parameterization between spans. This doesn’t necessarily mean that the points are all equidistant from each other, but rather that the tension between the points is fairly even. A general guideline would be to strive toward a patch whose spans are, for the most part, square. The best mesh is one with even distribution of point resolution that approaches being square.

Achieving proper continuity between NURBS patches is still an art in itself. Most packages that support NURBS have some form of patch stitching. There are several types of continuity: C0 (positional) continuity means that points on the edge of a NURBS patch are merely coincident with those of its neighbor, whereas G2 (curvature) continuity means that the patches not only line up but also that there is no visible seam or discontinuity between them.
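The difference between edges that merely meet and edges that join smoothly can be sketched for a pair of curves. The example assumes clamped curves given as control-point lists, so the end tangent runs along the last leg of the control polygon. It checks only positional and tangent continuity, not curvature, and the function name is hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _length(v):
    return math.sqrt(sum(x * x for x in v))


def boundary_continuity(curve_a, curve_b, tol=1e-6):
    """Classify the join between the end of curve_a and the start of curve_b.

    Returns "none" if the end points do not meet, "positional" if they
    only coincide, and "tangent" if the end tangents also point the same way.
    """
    if _length(_sub(curve_a[-1], curve_b[0])) > tol:
        return "none"
    tangent_a = _sub(curve_a[-1], curve_a[-2])
    tangent_b = _sub(curve_b[1], curve_b[0])
    len_a, len_b = _length(tangent_a), _length(tangent_b)
    if len_a < tol or len_b < tol:
        return "positional"  # degenerate tangent, cannot claim more
    cos_angle = sum(x * y for x, y in zip(tangent_a, tangent_b)) / (len_a * len_b)
    return "tangent" if cos_angle > 1.0 - tol else "positional"
```

A join that reports only "positional" will usually show a crease when shaded, which is the visible seam that patch stitching tools try to remove.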

RIGGING AND ANIMATION RIGGING

Steve Preeg

Rigging: What Is It?

Rigging can be simply expressed as a process that allows motion to be added to static meshes or objects. This is not to be confused with actually moving an object because that is left to animation. Rather, rigging is what allows animators to do their job. These rigs can be made up of many aspects such as bones, deformers, expressions, and processes external to the application in which the rig is built. Rigging is considered a relatively technical process, and riggers are often required to have some scripting and/or programming skills. Python, Perl, C++, and MEL are some of the more desirable languages for a rigger to know.

In general, rigging can be broken into two distinct types: animation rigging and deformation rigging. Each of those types may be further broken down into subcategories such as body rigging, facial rigging, and noncharacter rigging.

Animation Rigging

Animation or puppet rigging is creating a rig specifically to be animated interactively. An animation rig is rarely intended to be renderable but is instead a means to get animation onto a full deformation rig, which will be covered later.

It is not uncommon to have multiple controls for the same object in an animation rig, allowing animators to decide the best way to animate a particular object. The additional control structures do, however, need to be balanced with the need for speed and interactivity. Since these rigs may never be rendered and speed is critical, the quality of deformations on these rigs can be quite crude. In fact, geometry is oftentimes simply parented to nodes giving rigid deformations instead of heavier skinning or clustering.

A character rig for animation may come in many flavors and the control structures vary depending on the workflow of the animators themselves. However, most character rigs have certain standard parts.

Figure 7.5 Example of an animation rig. (Image courtesy of Digital Domain Productions, Inc.)

The ability to switch between inverse kinematics (IK) and forward kinematics (FK) is probably the most common need in a character animation rig. Inverse kinematics is used when a target position for the end of a joint chain is animated. For example, the animator may want the foot to be in a particular place and does not care as much how the leg has to bend to get there. Forward kinematics is where the rotation value on each joint is controlled discretely. For example, first the hip is rotated and then the knee and ankle to get a new foot position. Switching between these two types of controls, and matching the resulting positions when switching, are common practices. Another example: As a character is walking, IK may be desirable to lock the feet down at each step. Then, if the character jumps and does a flip, it may be desirable to switch to FK. This way the foot targets do not need to be animated during the flip; only the hips and knees need to be rotated. Making sure that the position of the legs and feet matches at the point of transition is critical.
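The two control styles, and the matching step when switching between them, can be sketched for a planar two-bone leg. This is a textbook analytic solution under simplifying assumptions (2D, two bones, hip at the origin); the function names are hypothetical and production rigs rely on the solvers built into their animation package.

```python
import math


def fk(upper_len, lower_len, hip_angle, knee_angle):
    """Forward kinematics: joint angles in, foot position out."""
    knee_x = upper_len * math.cos(hip_angle)
    knee_y = upper_len * math.sin(hip_angle)
    total = hip_angle + knee_angle
    return (knee_x + lower_len * math.cos(total),
            knee_y + lower_len * math.sin(total))


def ik(upper_len, lower_len, target):
    """Inverse kinematics: foot target in, (hip_angle, knee_angle) out."""
    x, y = target
    dist = math.hypot(x, y)
    # Clamp to the reachable range so unreachable targets fully extend the leg.
    dist = max(abs(upper_len - lower_len), min(upper_len + lower_len, dist))
    cos_knee = (dist * dist - upper_len ** 2 - lower_len ** 2) / (
        2.0 * upper_len * lower_len)
    knee_angle = math.acos(max(-1.0, min(1.0, cos_knee)))
    hip_angle = math.atan2(y, x) - math.atan2(
        lower_len * math.sin(knee_angle),
        upper_len + lower_len * math.cos(knee_angle))
    return hip_angle, knee_angle
```

Matching on a switch follows directly. When going from IK to FK, the angles returned by `ik` are written onto the FK controls; when going from FK to IK, the foot position returned by `fk` becomes the new IK target, so the leg does not pop at the transition frame.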

Motion capture integration is another common need for an animation rig to accommodate. Motion capture is, by its nature, an FK set of motion. It also tends to have data for every joint on every frame. This can be very difficult for an animator to modify, both on a single frame and temporally. In addition, it is difficult to animate “on top of” motion capture; in other words, adding to the motion rather than replacing it. For these reasons it is often desirable to convert the motion capture from its initial FK form onto the control structure of the rig itself. The process itself is application specific and therefore not covered here but should be part of the planning if motion capture is to be used.

Local versus global control, and the ability to switch between them, is another commonly requested feature of a character animation rig. To better understand this concept, imagine animating a character with IK that needs to walk up to, and go up, an escalator. While the character is walking up to the escalator, the feet may need to be in global space. In other words, it does not matter what the torso of the character does as the feet stay locked in world space wherever the foot is placed. Once the character gets on the escalator, some ambient motion needs to be added. But having to keyframe the feet to always stay on the escalator may be difficult, especially if the animation of the escalator changes. At this point it may be easier to have the IK controls in the space of the pelvis. This way, the artist can not only move the body as a whole with the escalator, but can also have the character move around on the step that he is riding on by moving the IK controls relative to, or as a child of, the pelvis motion.
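The space switch in the escalator example amounts to re-expressing the same foot position relative to a different parent. The sketch below assumes a 2D pelvis with a position and a rotation in radians; the function names are hypothetical.

```python
import math


def world_to_local(point, parent_pos, parent_rot):
    """Express a world-space point in the space of a parent (e.g. the pelvis)."""
    dx, dy = point[0] - parent_pos[0], point[1] - parent_pos[1]
    c, s = math.cos(-parent_rot), math.sin(-parent_rot)
    return (c * dx - s * dy, s * dx + c * dy)


def local_to_world(point, parent_pos, parent_rot):
    """Convert a point in the parent's space back to world space."""
    c, s = math.cos(parent_rot), math.sin(parent_rot)
    return (parent_pos[0] + c * point[0] - s * point[1],
            parent_pos[1] + s * point[0] + c * point[1])
```

At the frame where the character steps onto the escalator, the world-space foot control is converted once with `world_to_local`. From then on the stored local value rides along with the pelvis, and the animator only keys small offsets on top of it.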

Control structures for character rigging are as varied as the characters themselves. One may need bones to be able to squash and stretch cartoony characters, while another may need to be anatomically correct for realistic body motion. Regardless of the specifics, there are some basic rules to follow.

The controls should be easily seen and accessed by the animators. Color coding controls can be an effective way to distinguish parts, or even sides, of a character. More control is not necessarily better; it can cause confusion when animators are trying to refine animation if there are too many nodes with animation data on them. Speed is critical. If an animator can scrub through a scene in real time, the artist can make many creative animation revisions in short order before rendering the scene out. A sense of timing can be determined without having to play back images or a movie file. Animators should feel that the rig is allowing them to use their talents to animate; anything that slows down that creative process should be avoided.

Facial rigging for animation could be counted as character rigging for animation, but it has specific needs that warrant separate discussion. Motion capture for the face is quite different from motion capture for the body. Various methods are used for facial capture ranging from frame-by-frame dense reconstruction, to marker points on the face, to video analysis. Whichever method is chosen, the integration to the rig is tricky to say the least.

In contrast to body motion capture, where one can explicitly measure, for example, the rotation of an elbow joint, nothing is quite as straightforward in the face. The face may be approached as phonemes, muscles, expressions, etc. A common starting point is Dr. Paul Ekman’s system, which is described in his book Facial Action Coding System (FACS) (Ekman and Friesen, 1978). This system essentially breaks facial motions down into component movements based on individual muscles in the face. Although it is a common starting point, it is generally understood that it needs to be expanded from its academic version. Once a system like FACS has been chosen, the process of mapping motion capture data to corresponding FACS shapes is a substantial problem.

As with body animation rigs, control structures that are easy to understand, and not overly abundant, can increase the speed as well as the quality of the animation. Unlike body animation rigs, facial rigs can become slow, because it is imperative to see final quality deformations in the animation rig. It is common to see final mesh resolutions and render-ready data in a facial animation rig. The reason is simple: That level of detail is needed to make quality facial animation. Seeing exactly when the lips touch, for example, is critical for lip sync. Dynamic simulations run after animation can add believability. However, care must be taken to leave the critical parts of the face that animation has spent time fine-tuning, such as the lips and eyes, unchanged.

Noncharacter rigging, or elements of characters that are mechanical, represents another area of rigging. Examples of this type of rigging would be vehicle suspensions, tank treads, etc.

Control structures for noncharacter rigging change dramatically depending on the level of detail and importance of the action in the shot. For example, there may be a close-up of a complicated linkage in some sort of transformation where animators would need control over the speed and movement of a large number of pieces. Alternatively, there could be a panel opening on a tank far from the camera that merely requires a simple rotation. Clearly the control structure would be different in these two cases. Keeping the control as simple as possible for the needs of the shot can save a lot of time and effort.

Part of keeping the control structures simple for noncharacter rigging is automating ancillary motion. For instance, an opening hatch may need dozens of screws that are turning. The turning of these screws can be automatically driven by a single parameter that opens the hatch. Many mechanical systems are designed to move in only one way. Taking advantage of systems that only work one way can be a huge benefit to animation. Pistons, flaps, and springs are all good candidates for automated rigging. Often it is desirable to have overrides on these types of controls, which is relatively easy to integrate most of the time.
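The hatch example can be sketched as a single driving parameter with optional per-screw overrides. The function name, the default angles, and the dictionary layout are all assumptions made for illustration.

```python
def drive_hatch(open_amount, screw_count, max_hatch_deg=90.0,
                screw_turns=4.0, overrides=None):
    """Drive a hatch and its screws from one 0-to-1 animation parameter.

    overrides maps a screw index to an explicit rotation in degrees,
    letting an animator take manual control of individual screws.
    """
    open_amount = max(0.0, min(1.0, open_amount))
    overrides = overrides or {}
    automatic = open_amount * screw_turns * 360.0
    screws = [overrides.get(i, automatic) for i in range(screw_count)]
    return {"hatch_deg": open_amount * max_hatch_deg, "screw_deg": screws}
```

For example, `drive_hatch(0.5, 3, overrides={1: 0.0})` opens the hatch halfway and turns two screws automatically while the second screw stays where the animator pinned it.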

Animation rigs are often designed not only to deliver animation to a renderable rig but also to prepare for simulations. A tank tread, for example, may have animation controls to move the individual tread pieces around the base of the tank, but a simulation may be run to add vibration and undulating motion, suggesting slack in the treads. Extra values or attributes can be passed to the simulation software based on the animation rig and its motion. Velocity, direction of travel, etc., are values that may enhance the quality of the simulation. Whether these simulations are run by the rigging department or passed off to a dynamics group, preparation for simulation can be a valuable tool supplied by rigging.
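Values such as velocity and direction of travel can be derived from the animated positions before they are handed to a simulation. The sketch below assumes one sampled position per frame and a simple forward difference; the function names and the 24 frames per second default are assumptions.

```python
import math


def velocities(positions, fps=24.0):
    """Per-frame velocity, in units per second, from per-frame positions."""
    if len(positions) < 2:
        return [(0.0, 0.0, 0.0) for _ in positions]
    result = []
    for current, following in zip(positions, positions[1:]):
        result.append(tuple((b - a) * fps for a, b in zip(current, following)))
    result.append(result[-1])  # hold the last velocity on the final frame
    return result


def speed_and_direction(velocity):
    """Split a velocity vector into its speed and unit direction of travel."""
    speed = math.sqrt(sum(v * v for v in velocity))
    if speed == 0.0:
        return 0.0, (0.0, 0.0, 0.0)
    return speed, tuple(v / speed for v in velocity)
```

A tread simulation could, for instance, scale its vibration by the speed and bias its slack against the direction of travel.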

Deformation Rigging

Riggers often spend the majority of their time on deformation rigging. The goal of the deformation rig is to take the animation data from the animation rig and deform the final renderable mesh as dictated by the needs of the show. The quality here can vary greatly from far-away digital doubles to hero characters that need to have the feeling of skin sliding over muscles or animated displacement maps.

Figure 7.6 Example of a deformation rig. (Image courtesy of Digital Domain Productions, Inc.)

The first step is to accept data from the animation rig. This is done in many ways including constraining the deformation rig to the animation rig, or having an equivalent set of nodes or joints in both the animation rig and deformation rig. The second example allows the animation to be exported off the animation rig and directly applied to a matching set of objects, or nodes, in the deformation rig. Facial animation may be converted directly, vertex by vertex, or through matching nodes. There are many ways to convert animation data to deformation rigs. These are frequently application specific, so no detailed descriptions are provided here.

There are many different types of deformers and, of course, their names and exact capabilities are application specific. In general they may be grouped into a few different types. One type is linear deformers. These are the simplest and most predictable of all the deformers. However, they are often blamed for deformations looking CG. In general, that is more a misuse of them than a problem with linear deformations themselves. They also offer some real advantages. For instance, because they are so predictable, simple mathematical calculations can reverse them and break them down into individual components when multiple linear deformers are affecting a single point. In addition, linear deformers tend to be very fast. In deformation rigs, which can become slow, this can be a huge advantage.
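One widely used linear deformation is weighted (linear blend) skinning, taken here as an assumed example of what the text calls a linear deformer. The sketch works in 2D, models each influence as a rotation about a pivot, and returns the separate contributions to show how the result decomposes; all names are hypothetical.

```python
import math


def rotate_about(point, pivot, angle):
    """Rotate a 2D point about a pivot by an angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + c * dx - s * dy, pivot[1] + s * dx + c * dy)


def linear_blend(point, influences):
    """Deform a point by a weighted sum of rigidly transformed copies.

    influences is a list of (weight, pivot, angle); weights should sum to 1.
    Returns (deformed_point, per_influence_contributions).
    """
    contributions = []
    for weight, pivot, angle in influences:
        moved = rotate_about(point, pivot, angle)
        contributions.append((weight * moved[0], weight * moved[1]))
    deformed = (sum(c[0] for c in contributions),
                sum(c[1] for c in contributions))
    return deformed, contributions
```

Because the final position is just the sum of the contributions, any one influence can be subtracted out or inspected on its own. The same averaging is also why misapplied weights produce the collapsing look that is often blamed on the deformer itself.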

Nonlinear deformers are another common type of deformer used in character rigging. These deformers may be tricky to implement and often have somewhat unpredictable results. For example, a deformer that bulges a surface based on a spherically shaped object coming into contact with vertices may end up distorting the mesh significantly by allowing the vertices to slide over the spherical shape rather than push them out along their original normals. Nonlinear deformers often lack the control level to change the behavior to a directable level.

Other typically nonlinear deformers that deserve mention are muscle systems, which come in many varieties. They can be dynamic or nondynamic. They may have volume preservation, with stretch and slide attributes, etc. In general one can imagine implementing a muscle system on a character by imitating real-life muscle shapes and attachments and then binding the surface mesh to them. In practice, however, that rarely works. The algorithms used in most muscle systems do not behave the way a real muscle affects real skin. Therefore, the effort to accurately simulate an entire character’s muscle structure will not give accurate skin motion. Instead, most muscle systems are implemented using anatomically correct muscle placement as a guide for placement and behavior. Then, contrary to real muscle systems, extra muscles are placed or attachments adjusted until the skin moves in a desirable way. Some muscle systems also allow post-deformation adjustments at the vertex level. This can save huge amounts of time when trying to fix a muscle’s contribution to a skin.

Muscle systems, when used correctly, supply certain advantages such as simulated skin sliding or bulging, based on compression of a muscle and its associated volume conservation. In general, the amount of time it takes to rig a character with a full muscle system, compared to standard skinning methods, is much longer. Therefore, the quality of deformation and range of motion that a character is required to do in the project may determine whether or not to build a full muscle rig. As computers get faster and new algorithms are implemented for muscle systems, they could become a more standard rigging tool for the future. However, at this time there is still a need for the simpler rigging solutions in many cases.

Regardless of the method, or methods, finally chosen to rig a character, there is often a need to clean up what the rig generated. Dynamic simulations can be run and smoothing algorithms can help remove pinches or spikes in the deformations. In cases where vertex-by-vertex accuracy is required, as is often the case in facial animation, shot modeling may be considered.

Shot modeling is a phase of production in which a rigging artist adjusts a model specifically for a shot instead of rolling global changes back into a rig to have it affect other shots as well. Shot modeling, though powerful in terms of the level of control per vertex it allows, does have its problems. Shot modeling is dependent on the animation, so if the animation changes, the shot modeling needs to be readdressed. Shot modeling is also time consuming and, as the name suggests, is only useful for that one shot. Post-deformation cleanup, in any form, can be a huge time-saver over refining a rig for months to make sure it stands up in any and all shots.

Noncharacter deformation rigging is usually less time consuming and may have more of an impact on rendering and lighting optimization than on the deformations themselves. It is often more efficient to distinguish between deforming and nondeforming geometry and represent nondeforming geometry in static caches on disk, which can then simply have a transform applied at render time. Many current renderers support this functionality, so the deformation rigger should be aware of when it may be used. As with character deformation rigs, passing animation data can be done in the same ways, and ancillary motion can be driven automatically as in the animation rigs.

Last, there is often the need to prepare deformation rigs to go through a postprocess such as simulation. Again, this can be done on geometry caches, live rigs, etc. Sometimes these simulations are multiple rigs that are dependent on an earlier piece being simulated first; for example, a cloth simulation for clothes hanging on a drying line may need a simulation of the clothesline first. That is a simple case, and it is not uncommon to have many levels of dependencies.
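
A dependency chain like the clothesline example can be resolved with a topological sort. The sketch below uses Python's standard graphlib module (Python 3.9 or later); the simulation names are hypothetical labels, not part of any real pipeline.

```python
from graphlib import TopologicalSorter


def simulation_order(dependencies):
    """Return simulations in an order that respects their dependencies.

    `dependencies` maps each simulation name to the set of simulations
    that must be finished before it can run.
    """
    return list(TopologicalSorter(dependencies).static_order())


# The clothes can only be simulated after the clothesline has been.
order = simulation_order({
    "clothes": {"clothesline"},
    "clothesline": set(),
})
```

With many levels of dependencies the same call applies; a circular dependency raises graphlib.CycleError instead of silently producing a bad order.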

Reference

Ekman, P., & Friesen, W. (1978). Facial action coding system: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

TEXTURING AND SURFACING

Ron Woodall

The Importance of Texture Painting

The texture artist, along with the modeler, is at the head of the production line. Being able to successfully render and bring a shot to completion depends a great deal on the texture work being complete.

In the world of computer graphics, the texture artist can serve many roles: makeup artist, scenic painter, costume designer, model painter, or all of the above on any given project. These mind-sets can be quite different depending on the type of model that is being painted. If a machine is being painted versus a creature, or a set versus a human, the surfacing approach and methodology are different for each.

Hard Surface Models

When painting a vehicle the texture artist has to think about its direction of travel and how that might bias dirt toward one side versus another. Where is the model most likely going to have scratches or damage due to wear and tear? Generally, ground and air transport have the most damage to their finish coats on their leading edges because those are the surfaces most often hit by bugs, rocks, dirt, or any other thing that may be in the vehicle’s path of travel.

Thought also must be given to individual parts of a machine. Where would it get hot and discolored or show cracked paint from the exhaust? Where are the joints that may leak oil? Where would dirt most likely collect? Where, then, would rainwater flow over the surface and wash dirt away? There are many things to consider when making a very unreal object look real. One of the most important things a texture artist provides to CG work is imperfection. By nature, computer-generated models tend to be too perfect looking and symmetrical. Generally, the human eye is quick at splitting masses in half and spotting symmetry, so providing asymmetry to a model is a good thing.

If the model to be textured exists as a real vehicle or a practical on-set model, the job is somewhat easier. These real things can be photographed to provide reference and, in some cases, texture source. These practical set pieces, therefore, need to be thoroughly documented. If the texture artist cannot be on set to document these objects, a list should be prepared of every view that is needed. Evenly lit, shadow-free photos that may only need some minor touch-ups to remove highlights can be applied directly to a model to move very quickly toward a finished paint job. However, if a spaceship, for instance, only exists as a piece of artwork, then much thought needs to be given to the previously mentioned aging factors in order to create a paint job that looks believable.

For a model that is entirely fictitious with no on-set counterpart, a photographic source is still the best reference. Photographs of oil stains, dirty cars, the back of a garbage truck, etc., can provide the artist with believable detail to add to the work. There are also many free websites or sites that for a small annual or monthly fee provide a cornucopia of high-resolution texture sources. Painting every detail from scratch is commendable, but very foolish, because it will most certainly take more time. Wherever possible, the use of some combination of painting and using source material is highly recommended.

With the constantly shrinking schedules of today’s production world, finding every possible way to get to a great end look quickly should be the goal of every texture artist.

Scale is another major factor in believability, especially with large-scale models. Having a human model in the same scale as the subject model is useful. Use the human model placed in various positions next to the subject model to create a clear sense of scale for paintwork. This practice helps immediately show existing scale problems. For instance, if surface scratches on an object need to be one-eighth of an inch wide in reality, and the scratches that have been painted are much larger, it will be readily apparent.

It is also important to do test rendering with bump and displacement maps. They are first set to whatever height the texture artist judges appropriate for the scale of the model. As an example measurement ratio, 1 unit equals 1 foot. Keeping this in mind, initial bump and displacement heights for normal-sized models can be set to approximately 0.0208 and 0.083, respectively (0.25 inch and 1 inch expressed in feet). Knowing that details are being created with a maximum depth or height of 0.25 inch in bump and 1 inch in displacement makes creating these maps easier.
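
The height values follow from a simple unit conversion. As a small sketch, assuming the 1 unit = 1 foot ratio used above (the function and parameter names are illustrative only):

```python
INCHES_PER_FOOT = 12.0


def inches_to_scene_units(inches, feet_per_unit=1.0):
    """Convert a real-world height in inches to scene units."""
    return inches / INCHES_PER_FOOT / feet_per_unit


bump_height = inches_to_scene_units(0.25)         # roughly 0.0208 units
displacement_height = inches_to_scene_units(1.0)  # roughly 0.0833 units
```

If a facility works at a different scale, only feet_per_unit changes; the real-world limits of one-quarter inch and one inch stay the same.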

Certain camps believe that modeling every little detail is the only way to achieve realistic results. The majority of texture artists argue that this methodology is not only foolish, but also wrong. For example, if a modeler chooses to create a row of rivets on a surface, he or she will use algorithms that instance and place the rivets. This will create a perfect row of details, which normally is not desired. Creating imperfect fine details via bump or displacement is a much faster process. It’s also much easier to achieve synchronicity of maps for dirt, oil, and other effects with these details. If all of the small detail is modeled, the only way to get dirt in around it is to paint it in 3D. Faux details by nature are 2D and, therefore, can be painted in the various effects without having to interact with the model at all. Moreover, an incredible amount of time is also saved; creating UV layouts and texture assignments for tons of little details is not necessary.

Creature Models

Painting creatures provides an artist with a whole new list of concerns. Creatures can require thought about their age, their skin condition, how they move, and many other factors. Where is the skin most likely to have wrinkles from repetitive expansion and contraction? Where is the creature most likely to have dry skin or calluses from contact with the ground? What does the creature eat and how might that stain skin or fur if it has any? What is the creature’s native environment? Is it dirty? What color is the dirt? Where is the creature going to collect dirt? On its belly? Does it sleep on one side? Maybe one side is dirtier than another. This all may sound absurd, but small details such as these must be considered in order to foster believability in something entirely fanciful.

Like the hard surface model, if a texture artist is matching a real animal or a puppet from on set, the importance of a complete, thorough, and properly lit set of reference photographs of these practical creatures cannot be overestimated. The minimal time and money spent on gathering great photographic reference pales in comparison to the money that may be spent chasing and correcting textures painted from horrible reference. If an artist starts with improper reference photography, it can take forever to reach a point where the creature looks correct. If the artist has no other choice than to start with improperly gathered reference, additional relevant imagery must be gathered from online sources. The artist could also take a field trip to the zoo or local pet store to take reference photography.

Types of Geometry: Their Problems and Benefits

Polygons

Polygonal modeling was once viewed as the dusty forgotten forefather geometry type but has come back around to being the preferred geometry type for productions. A whole host of robust UV layout software packages and options are available within modeling packages that really allow the user to achieve great texture setup with polygons.

Being able to easily texture polygons makes them very appealing to use since polygons are less data intensive and give way to faster render and interaction times. Visually complex parts composed of a single object can be made using polygons. This is a huge plus for most rendering packages. Being able to combine 50 objects into one and still get good texture layout makes polygonal modeling the way of the foreseeable future. The only major downside to polygons is that they shade too perfectly and therefore do not look real. More texture work has to be put into the flat areas and edges of polygons to give them a real level of imperfection.

Catmull-Clark Surfaces

The Catmull-Clark surface is the city cousin of the polygonal surface. Created by Edwin Catmull and Jim Clark, Catmull-Clark surfaces are primarily used for creature modeling or hard surface objects that require compound curved shapes. Catmull-Clark surfaces are used to render complex organic objects from light polygonal proxy objects. A Catmull-Clark surface is automatically made when a subdividing scheme is applied to a polygonal mesh. A six-sided cube, after one level of subdivision, becomes a mesh of 24 quadrilateral faces and appears more like a sphere.
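
The face count in the cube example can be checked with a little arithmetic. In Catmull-Clark subdivision the first level turns every n-sided face into n quadrilaterals, and each later level splits every quadrilateral into four. The sketch below only counts faces; it does not compute the smoothed vertex positions, and its names are illustrative.

```python
def quad_count_after_subdivision(face_vertex_counts, levels):
    """Count the faces of a mesh after Catmull-Clark subdivision.

    `face_vertex_counts` lists the number of vertices of each face,
    for example [4] * 6 for a cube.
    """
    if levels == 0:
        return len(face_vertex_counts)
    # Level 1 produces one quad per face corner.
    quads = sum(face_vertex_counts)
    # Every further level splits each quad into four.
    return quads * 4 ** (levels - 1)


cube = [4] * 6
faces_level_1 = quad_count_after_subdivision(cube, 1)  # 24
faces_level_3 = quad_count_after_subdivision(cube, 3)  # 384
```

The rapid growth in face count is the reason the lightweight proxy, rather than the subdivided result, is what the artist interacts with.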

While staying light in interaction preview mode, subdivision surfaces can become very visually complex with a quick keystroke that applies the subdivision scheme. Most Catmull-Clark meshes are rendered at subdivision level 3. Because this geometry type is ever in flux, so is the UV layout for it. The layout for a Catmull-Clark object looks different in preview mode versus subdivision level 3. It’s important to make sure that any part designated to render as subdivision be painted as such. If a surface is painted in polygonal mode and rendered as subdivision, all of the corners where the UV layout has been cut will show gaps in the paint over the entire model.

It may be difficult to make UV layout cuts in areas with only a few control vertices. Often when cutting in these light areas, the paint gets weird and stretchy. Adding another edge to the left and right of the cut edge usually fixes the stretching problem. More often than not, UV layout work is far more difficult on subdivision models than it is when working on polygons.

Splines and NURBS

B-splines and NURBS are somewhat extinct in production today. The one place they are still used is modeling. The painfully missed benefit of spline-based geometry is ready-made UV coordinates. By nature, spline surfaces have a UV layout the instant they are created. Sometimes the layout needs modification, or multiple surfaces need to be stitched together and assigned to a single texture but at least there is a starting point. Splines are easy to set up for painting, but very data intensive. Spline models almost always take much more information to describe a surface than a polygonal or subdivision model of the same subject.

Prepping the Model to Be Painted

UV Layout

Before painting can begin the model has to be prepped to receive paint. This is done most often by creating UV layouts. A UV layout is a 2D representation of points and edges defining texture allocation that correspond to the 3D control vertices and face edges of a model. The UV layout determines how much texture resolution each polygonal face will receive once textures are applied. Generally, texture artists prefer to create their own UV layouts. Most automatic layouts tend to be highly inefficient with regard to optimal usage of texture space. They make for poor memory usage during real-time interactions.

Longer render times are also an unwanted side effect of poor layout and map assignment. Automatic layouts also tend to be extremely visually incoherent. Often in these layouts, parts in proximity on a model tend to have no relationship to one another in 2D layout space. Painting in 2D is easier if parts are laid out in a thoughtful way with UV orientation closely matching that of the geometry and with neighboring model parts near each other in UV space. Painting in 2D is more efficient because the resolution is one to one and interaction with the model is not a factor. Using automatic layouts and painting solely in 3D can create models that have far too many giant resolution textures with overly soft paint mapped into them.

Projection Mapping

Projection mapping is a tried-and-true method of applying texture to a model. Because of the brute force nature of applying textures via projection mapping, this method only works well for hard surface models.

Projection mapping has many merits. Because each pixel of the texture is being projected on an infinite line through any geometry associated with the projector, the information stored in the texture file to describe this mapping is far lighter than a model mapped entirely via UV layout. With UV layout, every single control vertex in the model has multiple coordinate numbers associated with it to describe the vertex location within the UV layout. This can result in many thousands of lines of information just to map one piece of geometry. Most projection coordinate information equates out to less than a dozen lines of information in the texture file. Using projection maps wherever possible results in lighter files. Lighter files equal faster loading, interaction, and render times. Using projectors also eliminates the time needed to create UV layout for the parts being projected.

Because the paint is being blasted through the model from a single axis, any surfaces perpendicular to the projection plane will have streaked paint. If a surface tilts away from, or toward, the projector at a shallow enough angle, a projection map will usually still work well. Once surface pitch out of parallel with the projector approaches around 80 degrees, the paint starts to stretch too much. In cases where this occurs, those surfaces should be removed from the projector and placed in a projector that is closer to parallel with the problem area. The other option is to UV map the parts that do not work well with projections. Because of its strengths and limitations, projection mapping is best suited for models that don’t need to stand up to close scrutiny or models that need to go into the rendering pipeline quickly.
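
A rough way to flag surfaces that tilt too far from a projector is to measure the angle between the surface normal and the projection axis. The sketch below assumes an orthographic projector and treats the 80-degree figure as an adjustable limit; the function names are hypothetical. Because the paint passes through the model, front-facing and back-facing surfaces are treated alike.

```python
import math


def tilt_degrees(normal, projection_dir):
    """Angle in degrees (0 to 90) between a surface and the projection plane.

    0 means the surface squarely faces the projector; 90 means it is edge-on.
    """
    dot = sum(n * p for n, p in zip(normal, projection_dir))
    n_len = math.sqrt(sum(n * n for n in normal))
    p_len = math.sqrt(sum(p * p for p in projection_dir))
    cos_angle = min(1.0, abs(dot) / (n_len * p_len))
    return math.degrees(math.acos(cos_angle))


def stretch_factor(tilt):
    """How much longer a projected texel becomes on a tilted surface."""
    return 1.0 / math.cos(math.radians(tilt))


def needs_another_projector(normal, projection_dir, limit_degrees=80.0):
    """True when the surface tilts past the limit and will show streaking."""
    return tilt_degrees(normal, projection_dir) >= limit_degrees
```

At a tilt of 60 degrees each texel is already stretched to twice its length, and near 80 degrees the stretch is more than fivefold, which is consistent with the paint breaking down around that angle.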

Camera Mapping

Camera mapping is very similar to projection mapping with the exception that the paint can be mapped from a camera having any field of view up to, and beyond, 180 degrees. Because the paint can be mapped from a nonorthographic view, camera mapping is ideally suited for adding detail to paint on a shot-specific basis. Models that have limited close-up screen time can be painted solely via camera mapping. For instance, a model that is seen closely in only three shots could be painted from the three shot cameras and most certainly take less time to complete than painting a model that has to stand up to scrutiny from every possible angle.
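
As a simplified sketch of the idea, the function below maps a point in camera space to texture coordinates using a basic pinhole model. It assumes the camera sits at the origin looking down the negative Z axis and that the field of view is below 180 degrees (wider lenses need a different projection model); all names are illustrative.

```python
import math


def camera_map_uv(point, fov_degrees, aspect=1.0):
    """Project a camera-space point to (u, v) in the 0..1 image square.

    `fov_degrees` is the horizontal field of view and `aspect` is the
    image width divided by its height.
    """
    x, y, z = point
    if z >= 0.0:
        raise ValueError("point is behind the camera")
    half_width = math.tan(math.radians(fov_degrees) / 2.0)
    half_height = half_width / aspect
    u = 0.5 + (x / -z) / (2.0 * half_width)
    v = 0.5 + (y / -z) / (2.0 * half_height)
    return (u, v)
```

A point on the optical axis lands at the center of the image, and points farther from the camera converge toward that center, which is what distinguishes this mapping from an orthographic projector.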

Volumetric Mapping

With volumetric mapping the texture is described in 3D, instead of 2D, with images being applied to the surface. Because this method of mapping requires no assigning of images or creation of UVs it may very well be the fastest way to get going on a paint job.

In its simplest form, volume mapping only requires the artist to select the model or parts of the model and assign a pixel density value to the selected volume. In a matter of seconds, a model can be ready to paint from any angle without fear of pixel stretching or resolution variation from surface to surface. However, because there are no 2D images, all changes great and small have to be done solely in 3D. With UV mapping or projection mapping, paint versions or changes can be made by simply referencing an alternate version of an original texture. With volume mapping this is impossible since any one given part of the model is part of the whole texture volume. There is no easy way to remove only a portion of the model from the volume and modify the paint of just that portion.

Texture Creation

Texture from Source

When matching to an existing prop, set, or costume, the reference photos should be lit as perfectly and evenly as possible so that they can be used as source texture with a small amount of cleanup. It is also very helpful to ask for painted swatches from the set painters, as well as fabric swatches from the costume department. If any fabrics have a pattern, be sure to ask for a swatch large enough to cover the repeat of the pattern. Actual painted card and fabric swatches are great because they can be easily turned into textures via a flatbed scanner.

Pure Painting

Purely painting textures doesn’t happen all that often (perhaps more often in digital animated features than for live-action films). Even then, using source texture where possible is wise because painting every last little detail is time consuming and may in the end not look as real as some combination of painting and source material.

Finding or Making Source Texture

The best weapon for a texture artist is an extensive, organized source library. In addition to whatever source or reference may come from location, gathering personal source texture is a great practice. Keep a camera on hand for the great textures that pop up in everyday life. If a certain texture is needed for a project, but can’t be found, make it! Just a half hour outside with India ink and printer paper can result in a wealth of scannable splatters, drips, and stains for use on models.

Diffuse Maps

When creating diffuse maps from scratch, the paint values should be created as the surface would appear in shadowless light. In some rare situations a bit of stage painting can help model details read better. Generally though, diffuse color maps should be free of highlight and shadow.

Small, faux-painted details, such as holes in a surface that do not require an accompanying opacity map, can be painted as zero black to help cheat them as an area that light is entering into but not returning from. These details should also be painted zero black in the specularity map.

The extreme values of light and dark in color maps should be no lighter than 80% and no darker than 20%. This helps to prevent the paint from looking too illustrative and provides some range for a technical director (lighting artist) to push the values up or down in the materials where needed.
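
One way to bring an existing map into this range is a linear remap of its values. The sketch below works on normalized values between 0 and 1 and is only an illustration; deliberately zero-black details such as faux holes would need to be masked out before applying it.

```python
def remap_to_paint_range(value, low=0.2, high=0.8):
    """Linearly remap a 0..1 value so black lands at `low` and white at `high`."""
    return low + value * (high - low)


darkest = remap_to_paint_range(0.0)   # 0.2
middle = remap_to_paint_range(0.5)    # about 0.5
lightest = remap_to_paint_range(1.0)  # about 0.8
```

A remap preserves the relationships between values, whereas simply clipping at 20% and 80% would flatten detail at both ends.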

Bump Maps

Bump maps are most easily equated with topographical maps in real life. With topographical maps certain colors represent a range of altitudes. Red might represent sea level, orange 1000 feet above sea level, and so on. With bump maps instead of colors, the height change on the model surface is described in values of gray. Most often middle or 50% gray is the pivot point. Any value less than middle gray will read as an indentation, and any value above middle gray will read as a protrusion.
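
The relationship between gray value and height can be written as a one-line function. This sketch assumes normalized gray values between 0 and 1 and a convention in which pure white reaches the full height setting when the pivot is middle gray; renderers differ on the exact convention, so the scaling here is an assumption.

```python
def gray_to_offset(gray, max_height, pivot=0.5):
    """Convert a gray value to a signed height offset.

    Values above the pivot give a positive offset (protrusion) and
    values below it give a negative offset (indentation).
    """
    return (gray - pivot) * 2.0 * max_height


flat = gray_to_offset(0.5, 0.25)      # 0.0, no change
raised = gray_to_offset(1.0, 0.25)    # +0.25
recessed = gray_to_offset(0.0, 0.25)  # -0.25
```

Knowing the pivot matters in practice: a map painted around middle gray but read with a pivot of zero would push the entire surface outward.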

Bump mapping is merely a method of making a simple surface look more complex by modifying the surface normal. The height of a bump map in the material should be rather shallow. Since bump maps don’t really change the surface geometry, if the height value is much more than one-quarter of an inch, the trick gives itself away. Looking at the middle of the model, the bump map may provide great believable detail, but around the edges of the model there is a perfectly smooth profile. This instantly reveals that the surface detail is merely a cheat. Details that need to have more than a quarter-inch of relief off the surface should either be modeled or added via displacement maps.

Displacement Maps

Like bump maps, displacement maps are described with a range of gray values. Displacement maps are used to actually change the surface detail. If the pivot point in the material is middle gray, anywhere the paint is lighter or darker than middle gray, the paint will get converted to micropolygons pushing out of, or into, the surface of the model.

The result of paint displacement is an actual geometry change. Unlike bump, displaced details will read when the surfaces are in profile or edge on. Displacement mapping takes far longer to calculate at render time; therefore prudent use of displacement is advised. A model with every square inch of surface area displaced will certainly take far longer to render than one with efficient use of bump and displacement.

Specularity Maps

Specularity maps are used to control the specular reflectivity of a model. Typically these maps should have a full range of values from white to black all over, with white representing the most specularity and black representing none.

More complicated uses of specularity maps involve setting up the material to behave like two or more materials based on a range of values in the map. For instance, 0% to 33% gray has a spec model of tire rubber, 33% to 66% like brushed aluminum, and 66% to 100% has the specularity of chrome. This way of painting helps create more believable faux details on the surface of a single mesh object because of the obvious material changes. On a single mesh object one could paint a rubber gasket surrounded by chromed fastener heads in the displacement and diffuse pass. By painting the same details in the specularity map, each within the appropriate range of gray, the details will have very divergent material looks from the base material of brushed aluminum.
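
The range-based switching described above amounts to a simple lookup. The sketch below uses the three example ranges from the text with normalized gray values; how a real material network would implement the switch is shader specific, so this is only an illustration.

```python
def material_for_spec_value(gray):
    """Pick a specular model from a gray value in the range 0..1."""
    if not 0.0 <= gray <= 1.0:
        raise ValueError("gray must be between 0 and 1")
    if gray < 0.33:
        return "tire rubber"
    if gray < 0.66:
        return "brushed aluminum"
    return "chrome"


gasket = material_for_spec_value(0.10)    # "tire rubber"
base = material_for_spec_value(0.50)      # "brushed aluminum"
fastener = material_for_spec_value(0.90)  # "chrome"
```

Painting well inside each band, rather than near 33% or 66%, avoids details flipping to the wrong material when a map is filtered or resized.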

Material Designation Maps

Material designation maps or area maps are another way to create the look of many material types within a single mesh object. Area maps are usually solid white on black maps, with no range of grays in between. Black represents the base material, and white represents the areas where it is desired to switch to an alternate material. By using area maps one can reserve the full range of the specularity map solely for attenuating the specularity and use one or more area maps to switch to various other material types.

The benefit of using area maps is that multiple material switches can be separated cleanly into individual area maps. This separation makes it easy to change how much of any one given material is being used without accidentally changing any others.
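
A minimal sketch of how separate area maps might be resolved per pixel follows. The masks are tiny hand-written grids of 0 (black) and 1 (white), the material names are hypothetical, and the rule that later maps override earlier ones is an assumption made for the example.

```python
def resolve_material(base_material, area_maps, pixel):
    """Return the material at a pixel given a list of (name, mask) area maps.

    Each mask is a 2D list holding 0 (keep current material) or
    1 (switch to this map's material). Later maps take priority.
    """
    row, col = pixel
    material = base_material
    for name, mask in area_maps:
        if mask[row][col] == 1:
            material = name
    return material


rubber_mask = [[0, 1],
               [0, 0]]
chrome_mask = [[0, 0],
               [1, 0]]
area_maps = [("rubber", rubber_mask), ("chrome", chrome_mask)]
```

Editing chrome_mask changes only where chrome appears; the rubber areas and the base material are untouched.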

Luminance Maps

Luminance maps can be used to create faux lighting such as many pools of light on a surface, backlit windows, or hundreds of point lights. Where it may be too render intensive to have many actual lights being calculated for every frame, luminance maps can be used. These maps can be painted or more effectively rendered with real lights and baked into texture maps. Luminance maps should be solid black everywhere except where the surface is being lit or is supposed to be a faux light source.

Various Other Map-Driven Effects

Many other separated maps can be used to add interest to a model; dirt, oil, and rust maps, just to name a few. These maps are essentially the same as area maps since dirt, oil, and rust all have their own material properties.

These maps should be painted pure white on black. White represents dirt or whatever effect is being created, and black is the base material. These additional effects should be set up in the material to use a procedural color map or a single color. Since the percentage of surface area typically covered by dirt, oil, or rust is so small, it doesn’t warrant the efficiency hit of an additional full-color map for each effect on every part of the model.

Help from Procedural Textures

Procedural textures are computer-generated images that are based on user-created code. They use mathematical algorithms to create real-looking materials like wood, stone, metal, or any surface that has a random pattern of texture. The true strength of procedural textures is infinite resolution. Use of procedural maps can allow the camera to travel from far away to within a sixteenth of an inch above the surface of a model without losing textural detail. On the whole, procedural color maps still tend to look fake, but procedural bump and displacement can be quite convincing.

Another strength of procedural maps is their efficiency during interactivity and during render time. Because no images are being stored on disk to create the look of the procedural texture, none is called up at render time or when a model is loaded and worked on. Procedural maps are a great way to add an extra high level of detail to surfaces at a relatively very low cost.

Film Look

Most visual effects facilities receive lookup tables (LUTs) from a facility that is color timing the entire film. LUTs can be used to preview on a monitor or digital projector how an image will be reproduced on the final film print. It is important to periodically check how rendered paint jobs look with a film look applied. This helps ensure that the film look is not shifting values or colors so much that things appear to be broken.

Always painting with the film look on is a bad idea, however, unless the reference images for the model being painted have been pre-processed to have the film look baked in. The other exception is when the model being painted has no real-life counterpart on set. In this case, there is no harm in painting with the film look on, since there is nothing real to match.

Texture Painting in Production

It is very important to keep a production on schedule. With that goal in mind, creating a cursory paint job in the course of a day or two that can be handed off to the look development artist gives that artist something to start building material work on. Most average paint jobs take around 2 to 6 weeks to complete. Making look development artists wait this long is foolish, since they can make paint work quite a bit easier with great material work.

It is also very important to keep in regular contact with supervisors and art directors multiple times a day. Any time a personal benchmark is reached, a test render of the paint should be sent to the VFX Supervisor, the Paint Supervisor, and the Art Director for input. Making a habit of touching base often will ensure that the work stays on the right track.

Other important points of focus include understanding real-world material behavior and understanding how textures affect materials. Understanding the physics of how and why solid plastic responds to light differently from cast iron will help in the creation of better textures. Also understanding expected gamma settings and pivot point defaults for the various texture map types will help make work go faster and easier. A good solid understanding of how all the various painted maps affect the materials to which they are assigned is imperative to prevent making more work than is necessary.

Making self-created backups is another important mode of working during production. Mistakes happen to everyone. Creating iterated master files, like Photoshop files that contain all of the layers unflattened, makes it easier to regain lost paint or make corrections and resave from the master file later in the production schedule.

Some facilities have an automated nightly backup system, but relying solely on this type of system is unwise. While a restore can take hours, it takes mere minutes to find one’s own organized master files. Naming the master files with the same naming convention as the textures they represent, with the exception of extension and iteration number, makes for a quick workflow. For example, finding /car/images/master/Rlite_C.3.psd and saving a copy of it to /car/images/color/Rlite_C.tif is easy because the two locations are close together, and the names differ only by the iteration number and the file extension.
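
A naming convention like this is easy to automate. The sketch below derives the texture path from a master file path using the example layout above; the helper name and its parameters are hypothetical, and it assumes the iteration number is always the last dot-separated field before the extension.

```python
from pathlib import PurePosixPath


def texture_path_for_master(master_path, map_dir, extension=".tif"):
    """Derive the published texture path from an iterated master file path."""
    master = PurePosixPath(master_path)
    # "Rlite_C.3.psd" has the stem "Rlite_C.3"; drop the iteration number.
    base_name = master.stem.rsplit(".", 1)[0]
    # The master folder and the map folders share the same parent.
    images_dir = master.parent.parent
    return str(images_dir / map_dir / (base_name + extension))


color_path = texture_path_for_master("/car/images/master/Rlite_C.3.psd", "color")
```

Because the iteration number lives only in the master file name, saving a new iteration never changes the path that the render references.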

Model Editing

It is important for painters to understand what modelers do to foster better communication between the two disciplines. Learning at least the basics of modeling skills helps a painter to better convey any needs to a modeler more easily. At the bare minimum learn enough about modeling to be able to converse intelligently with a modeler about problems.

Conclusion

Texture mapping is every bit as important as any other part of the pipeline. It is often overlooked in the end, yet without texture maps from a skilled artist, most CG would inevitably fall far short of looking believable. Imagine a world covered in glossy smooth gray plastic and one will begin to understand the importance of good textures.

DIGITAL HAIR/FUR

Armin Bruderlin, Francois Chardavoine

Generating convincing digital hair/fur requires dedicated solutions to a number of problems. First, it is not feasible to independently model and animate all of the huge number of hair fibers. Real humans have between 100,000 and 150,000 hair strands, and a full fur coat on an animal can have millions of individual strands, often consisting of a dense undercoat and a layer of longer hair called guard hair.

The solution in computer graphics is to only model and animate a relatively small number of control hairs (often also called guide or key hairs), and interpolate the final hair strands from these control hairs. The actual number of control hairs varies slightly depending on the system used but typically amounts to hundreds for a human head or thousands for a fully furred creature. Figure 7.7 shows the hair of two characters from The Polar Express (2004), a girl with approximately 120,000 hair strands and a wolf with more than 2.1 million hairs.
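
The interpolation step can be sketched as a weighted average of nearby control hairs. This is only one simple scheme, assumed here for illustration; production systems differ in how they choose the control hairs and weights, and every hair in the example must have the same number of points.

```python
def interpolate_hair(control_hairs, weights):
    """Blend control hairs into one final hair.

    Each control hair is a list of (x, y, z) points from root to tip.
    The weights are normalized, so they do not need to sum to 1.
    """
    total = sum(weights)
    point_count = len(control_hairs[0])
    hair = []
    for i in range(point_count):
        point = tuple(
            sum(w * h[i][axis] for h, w in zip(control_hairs, weights)) / total
            for axis in range(3)
        )
        hair.append(point)
    return hair


# Two hypothetical control hairs, each with a root and a tip.
left = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
right = [(2.0, 0.0, 0.0), (2.0, 1.0, 0.0)]
middle_hair = interpolate_hair([left, right], [0.5, 0.5])
```

Animating or simulating only the control hairs then moves every interpolated strand with them, which is what makes hair counts in the hundreds of thousands or millions manageable.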

A second problem is that real hair interacts with light in many intricate ways. Special hair shaders or rendering methods utilizing opacity maps, deep shadow maps, or even ray tracing are therefore necessary to account for effects such as reflection, opacity, self-shadowing, or radiosity. Visual aliasing can also be an issue due to the thin structure of the hair.6

Another problem that can arise is the intersection of hairs with other objects, usually between hairs and the underlying surface, such as clothes or the skin. For practical purposes, hair/hair intersections between neighboring hairs are usually ignored, because they do not result in visual artifacts.

In almost all cases other than for very short animal fur, hair is not static during a shot but moves and breaks up as a result of the motion of the underlying skin and muscles, as well as due to external influences, such as wind and water. Often, dynamic simulation techniques are applied to the control hairs in order to obtain a realistic and natural motion of the hair. In the case of fully furred creatures with more than 1000 control hairs, this approach can be computationally expensive, especially if collisions are also handled as an integral part of the simulation.

Figure 7.7 Examples of digital human hair and animal fur. (Image © 2004 Sony Pictures Imageworks Inc. All rights reserved.)

Finally, for short hair or fur, some special purely rendering techniques have been introduced that create the illusion of hair while sidestepping the definition and calculation of explicit control and final hair geometry. These techniques address the anisotropic7 surface characteristics of a fur coat and can result in convincing medium to distant short hair or fur shots but can lack close-up hair detail and don’t provide a means for animating hairs.8

The next subsection introduces a generic hair/fur pipeline and explains how a specific hairstyle for a character is typically achieved during look development and then applied during shots.

Figure 7.8 Pipeline diagram. (Image © 2009 Sony Pictures Imageworks Inc. All rights reserved.)

Hair Generation Process

Figure 7.8 shows a diagram of a basic hair generation pipeline. The input is the geometry of a static or animated character, and the output is the rendered hair for that character. The hair or fur is generated in a series of stages, from combing (modeling) and animating of the control hairs to interpolating (generating) and rendering of the final hairs or fur coat.

In a production environment, this process is often divided into look development and shot work. During look development, the hair is styled, and geometric and rendering parameters are dialed in to match the appearance the client has in mind as closely as possible. During shots, the main focus is hair animation.

Look Development

The Hair Team starts out with the static geometry of a character in a reference pose provided by the modeling department. One potential problem to resolve immediately is whether the hair system used can handle the format of the geometric model, which is most likely a polygonal mesh, a subdivision surface, or a set of connected NURBS9 patches.

With the character geometry available, placing and combing control hairs are the next steps. Control hairs are usually modeled as parametric curves such as NURBS curves. Some systems require a control hair on every vertex of the mesh defining the character, whereas others allow the user to freely place them anywhere on the character. The latter can reduce the overall number required since it makes the number of control hairs independent of the model: Larger numbers of hairs need only be placed in areas of the body where detailed control and shaping are needed (such as the face). Keeping the number of control hairs low makes the whole hair generation process more efficient and interactive, because fewer hairs need to be combed and used in the calculations of the final hairs.

Figure 7.9 Left: Uncombed/combed control hairs. Right: Rendered final hairs. (Image © 1999 Sony Pictures Imageworks Inc. All rights reserved.)

Figure 7.9 illustrates a set of uncombed and combed control hairs from Stuart Little (1999). One such control hair with four control points is enlarged in the lower right corners. Similar to the number of control hairs, the number of control points per hair also has an effect on performance.

When control hairs are initially placed on a character, they point in a default direction, usually along the normal of the surface at the hair follicle locations. Combing is the process of shaping the control hairs to match a desired hairstyle. This can be one of the most time-consuming stages in the whole hair generation process. Having a set of powerful combing tools is essential here.10 For short hair or fur, painting tools are often utilized to interactively brush certain hair shape parameters, such as hair direction or bend over the character’s surface.

Interpolation is the process of generating the final hairs from the control hairs. One or more control hairs influence each final hair. The exact interpolation algorithm depends on the hair system used, but the two most popular approaches are barycentric coordinates, where the shape of a final hair is determined by the control hairs surrounding it (requiring either a triangulation of those control hairs or one control hair per vertex of the mesh), and weighted fall-off based on the distance of a final hair to its closest control hairs.
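The two interpolation approaches can be illustrated with a minimal Python sketch. It is not taken from any particular hair system: the function names, the inverse-distance fall-off, and the choice to blend each control hair's shape as offsets from its own root are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Hair = List[Point]  # control points ordered from root to tip


def barycentric_weights(p, a, b, c):
    """Weights of 2D point p (e.g. in UV space) inside triangle a, b, c."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    w_a = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w_b = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return w_a, w_b, 1.0 - w_a - w_b


def falloff_weights(follicle: Point, roots: Sequence[Point],
                    power: float = 2.0, eps: float = 1e-9) -> List[float]:
    """Assumed fall-off: weight shrinks with distance to each control root."""
    return [1.0 / (math.dist(follicle, r) ** power + eps) for r in roots]


def interpolate_hair(follicle: Point, control_hairs: Sequence[Hair],
                     weights: Sequence[float]) -> Hair:
    """Blend control-hair shapes (as offsets from their roots) at a follicle.

    Assumes every control hair has the same number of control points.
    """
    total = sum(weights)
    final = []
    for i in range(len(control_hairs[0])):
        offset = [0.0, 0.0, 0.0]
        for hair, w in zip(control_hairs, weights):
            root = hair[0]
            for axis in range(3):
                offset[axis] += (w / total) * (hair[i][axis] - root[axis])
        final.append(tuple(follicle[axis] + offset[axis] for axis in range(3)))
    return final
```

Either weighting function can feed `interpolate_hair`: the barycentric weights use the three control hairs at the corners of the enclosing triangle, while the fall-off weights use however many nearby control hairs the system chooses to consider.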

The interpolation step requires an overall density value to be specified that translates into the actual number of final hairs generated. Frequently, feature maps (as textures) are also painted over the body to more precisely specify what the hair will look like on various parts of the body. For example, a density map can provide fine control over how much hair is placed in different parts of the body. In the extreme case, where the density map is black, no final hairs will appear; where it is white, the full user-defined density value applies. Maps can be applied to any other hair parameter as well, such as length or width.
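As a rough illustration of how an overall density value and a painted density map might combine, the following Python sketch scatters candidate follicles and keeps each one with a probability equal to the map value at its location. The rejection-sampling scheme, the function name, and the representation of the map as a callable over UV coordinates are assumptions; a production system may distribute follicles quite differently.

```python
import random
from typing import Callable, List, Tuple

UV = Tuple[float, float]


def scatter_follicles(density: float, area: float,
                      density_map: Callable[[UV], float],
                      seed: int = 0) -> List[UV]:
    """Place follicles in UV space, thinned by a density map in [0, 1].

    density     -- user-defined hairs per unit of surface area
    area        -- surface area covered by the UV square (assumed uniform)
    density_map -- hypothetical lookup: 0.0 is black (no hair), 1.0 is white
    """
    rng = random.Random(seed)  # fixed seed keeps follicles stable per frame
    candidate_count = round(density * area)
    follicles = []
    for _ in range(candidate_count):
        uv = (rng.random(), rng.random())
        # Keep the candidate with probability equal to the map value.
        if rng.random() < density_map(uv):
            follicles.append(uv)
    return follicles
```

With a map that returns 1.0 everywhere, all `density * area` candidates survive; with a map that returns 0.0, none do. The same lookup pattern could scale any other per-hair parameter, such as length or width.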

It is also helpful to see the final hairs while combing the control hairs, and most systems provide a lower quality render of the final hairs at interactive rates. This is shown in Figure 7.10, an early comb of Doc Ock in Spider-Man 2 (2004), where the control hairs are in green, and only 15% of the final hairs are drawn to maintain real-time combing.

The hair/fur is then rendered for client feedback or approval, usually on a turntable, with a traditional three-point lighting setup to bring out realistic shadows and depth. During this phase, a Lighter typically tweaks many of the parameters of a dedicated hair shader like color, opacity, etc., until the hair looks right.

Look development is an iterative process in which the hairstyle and appearance are refined step by step until they are satisfactory and match what the client wants. Once approved, shot work can start and a whole new set of challenges arises with respect to hair when the character is animated.

Figure 7.10 Control and final hairs during combing. (Image © 2004 Sony Pictures Imageworks Inc. All rights reserved.)

Shot Work

Animators don’t usually directly interact with the hair. When they start working on a shot that includes a furry or haired character, it doesn’t have any hair yet. It is therefore helpful to have some sort of visual reference as to where the hair would be to help them animate as if the hair were present. This can be in the form of a general outline of how far out the hair would be compared to the body surface, such as a transparent hair volume, or it might be a fast preview of what the final hair look will be. This is important because it limits the number of corrections that need to be done once hair is applied.

As described earlier, the hair appearance is decided in the look development phase. This includes where all the hairs are on the body and their shape and orientation. When an animator creates a performance for a character in a shot, the hair follows along with the body, and remains stuck to the body surface in the same positions it had in the reference pose. This provides what is known as static hair; even though the hair follows along with the body, the hair itself is not animated. If all that is needed is motionless hair on an animated body, then hair work for the shot is done.

Usually, hair motion and dynamics are an important part of making a shot look realistic and can contribute to the character’s performance. Hair motion can be:

• Caused by the character’s animation: If the character folds an arm, the hair should squash together instead of going through the arm.

• Caused by interaction with other objects: If the character is holding something, walking on the ground, or hitting an object, in all cases the hair should be properly pushed away by the object instead of going through it.

• Full dynamics simulation: Short hair may have a bouncy motion (and take a few frames to settle), whereas long hair will flow with the movement and trail behind.

These situations are usually handled by applying some sort of dynamics solver to the control hairs, so that they react in a physically realistic way, while moving through space or colliding with objects. The final rendered hairs then inherit all of the motion applied to the control hairs.

Third-party hair software packages provide dynamics systems, but some facilities also have proprietary solvers. There are many published methods of simulating hair dynamics (superhelix, articulated rigid-body chains), but the most widely used are mass spring systems, much like in cloth simulations. Whereas these methods provide solutions for physically correct motion, animating hair for motion pictures also has a creative or artistic component: The hair needs to move according to the vision of the movie director. Therefore, the results of dynamic hair simulations are often blended with other solutions (hand animated, hair rigs, static hair) to achieve the final motion.
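To make the mass spring idea concrete, here is a minimal Python sketch of one control hair treated as a chain of unit point masses joined by springs, with the root pinned to the skin. The integrator (semi-implicit Euler), the constants, and the function names are illustrative assumptions, not the method of any particular solver; collisions and bending stiffness are omitted. A second helper shows one simple way the simulated result might be blended with the static comb.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def step_hair(points: Sequence[Vec], velocities: Sequence[Vec],
              rest_lengths: Sequence[float], dt: float = 0.01,
              stiffness: float = 500.0, damping: float = 0.98,
              gravity: Vec = (0.0, -9.8, 0.0)):
    """Advance one control hair by one time step; points[0] is the pinned root."""
    n = len(points)
    forces = [list(gravity) for _ in range(n)]  # unit mass per point
    for i in range(n - 1):
        delta = [points[i + 1][a] - points[i][a] for a in range(3)]
        length = math.sqrt(sum(d * d for d in delta))
        if length == 0.0:
            continue
        # Hooke's law: a stretched segment pulls its two end points together.
        magnitude = stiffness * (length - rest_lengths[i])
        for a in range(3):
            f = magnitude * delta[a] / length
            forces[i][a] += f
            forces[i + 1][a] -= f
    new_points = [points[0]]              # root follows the skin, not the solver
    new_velocities = [(0.0, 0.0, 0.0)]
    for i in range(1, n):
        v = tuple(damping * (velocities[i][a] + dt * forces[i][a]) for a in range(3))
        new_velocities.append(v)
        new_points.append(tuple(points[i][a] + dt * v[a] for a in range(3)))
    return new_points, new_velocities


def blend_with_static(simulated: Sequence[Vec], static: Sequence[Vec],
                      sim_weight: float) -> List[Vec]:
    """Linear blend: 0.0 gives the static comb, 1.0 the full simulation."""
    return [tuple(s[a] + sim_weight * (d[a] - s[a]) for a in range(3))
            for d, s in zip(simulated, static)]
```

Calling `step_hair` once per time step on a hair that starts out horizontal lets the free points swing downward under gravity while the root stays fixed. Dialing `sim_weight` down in `blend_with_static` is one way to tone the motion toward the approved look when the physically correct result does not match the director's vision.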

General Issues and Solutions

Combing

As mentioned earlier, combing can be an elaborate task during look development, especially for long human hair and complex hairstyles. It is therefore crucial for a hair artist to have access to a set of flexible combing tools. Even for a commercially available system like Maya Hair, additional custom tools can be developed to facilitate the shaping of control hairs. For example, a clumping tool can make it easy to create curls or form clumps between neighboring hairs. As shown in Figure 7.11, left, the user selects a group of control hairs and then precisely shapes the desired clump profile to specify how those hairs should bunch together.

A fill-volume tool can quickly fill an enclosed surface with randomly placed control hairs. The hair volumes created by modelers to describe the rough hair look of a character can be used to actually generate the control hairs needed by the hair artist. An example is shown in Figure 7.11, right, where braids were simply modeled as cylindrical surfaces by a modeler and then filled with hair. Figure 7.12 illustrates the intricate human hairstyles of two characters from Beowulf (2007).

Figure 7.11 Left: Clumping tool with a user-customizable profile. Right: Combing braids (from left-to-right: three braided cylinders, control hairs generated by tool, final rendered hairs). (Image © 2007 Sony Pictures Imageworks Inc. All rights reserved.)

Figure 7.12 Final comb examples, illustrating the intricate human hairstyles of two digital characters from Beowulf (2007). (Image courtesy of Sony Pictures Imageworks Inc. and Paramount Pictures. BEOWULF © Shangri-La Entertainment, LLC, and Paramount Pictures Corporation. Licensed by Warner Bros. Entertainment Inc. All rights reserved.)

Render Times and Optimizations

One difference between short hair/animal fur and long humanlike hair lies in their combing needs. Another lies in render times and memory requirements. Whereas a frame of high-quality human head hair can be rendered in anywhere from a few minutes to under an hour, depending on hair length and image resolution, rendering the millions of individual hair strands of a fully furred creature can take several hours per frame and use several gigabytes of computer memory, especially with motion blur and when the creature is close to the camera.

Optimizations applied at render time can therefore be very effective. One example is view frustum culling, in which hair outside the current view is neither generated nor rendered, which is very useful if most of the character is off-screen. If the hair follicle location at the base of the hair on the skin is used to decide whether to cull the hair or not, a safety margin needs to be added, because (long) hair with its follicle outside the view frustum may still have its tip visible in the frame. The same holds true for backface culling methods, which do not render hair on parts of the character facing away from the camera.
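The safety margin can be sketched in Python as a conservative sphere test: a hair can reach no farther from its follicle than its own length, so the follicle is culled only when it lies more than that length outside some frustum plane. The plane representation (unit inward-pointing normal plus offset) and the function name are assumptions for illustration.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]
Plane = Tuple[Vec, float]  # (unit normal pointing into the frustum, offset d)


def hair_may_be_visible(follicle: Vec, hair_length: float,
                        frustum_planes: Sequence[Plane]) -> bool:
    """Conservative frustum test using the hair length as safety margin.

    A point p is inside a plane when dot(normal, p) + d >= 0. The hair is
    kept unless its follicle is farther than hair_length outside a plane,
    in which case no part of the hair can reach into the view.
    """
    for normal, d in frustum_planes:
        signed_distance = sum(n * p for n, p in zip(normal, follicle)) + d
        if signed_distance < -hair_length:
            return False
    return True
```

For example, with a single plane that keeps the half-space x >= 0, a follicle at x = -0.5 is retained when the hair is 1.0 units long but culled when it is only 0.2 units long. The test can keep some hairs that turn out to be invisible, which costs render time but never drops a visible tip.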

Another optimization technique is level of detail (LOD) applied to the hair, where different preset densities of hairs are generated and rendered for a character depending on the distance from camera. Varying hair opacity to fade out hair strands as they move between levels helps to avoid flickering. Some studios have also developed more continuous techniques, which smoothly cull individual hair strands based on their size and speed.
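One way to picture distance-based LOD with an opacity fade is the Python sketch below. It assumes each final hair carries a fixed random priority in [0, 1), that the preset levels are given as (distance, fraction of hairs kept) pairs, and that the fraction is linearly interpolated between presets; none of these details come from a specific studio system.

```python
from typing import Sequence, Tuple


def keep_fraction(distance: float,
                  levels: Sequence[Tuple[float, float]]) -> float:
    """Fraction of hairs to generate at a camera distance.

    levels -- (distance, fraction) presets sorted by increasing distance.
    """
    if distance <= levels[0][0]:
        return levels[0][1]
    for (d0, f0), (d1, f1) in zip(levels, levels[1:]):
        if distance <= d1:
            t = (distance - d0) / (d1 - d0)
            return f0 + t * (f1 - f0)
    return levels[-1][1]


def hair_opacity(priority: float, fraction: float,
                 fade_width: float = 0.1) -> float:
    """Opacity multiplier for one hair with a fixed priority in [0, 1).

    Hairs with priority below the kept fraction are fully opaque; those
    just above it fade out over fade_width instead of popping off.
    """
    return max(0.0, min(1.0, 1.0 - (priority - fraction) / fade_width))
```

Because each hair's priority never changes, the same hairs disappear first as the character recedes, and the fade band keeps individual strands from flickering on and off when the kept fraction hovers near their priority.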

A feature of many hair systems is the ability to have multiple hair layers. Examples are an undercoat of dense, short hair and an overcoat of longer sparse hair for an animal fur coat or more general base, stray, or fuzz layers. Each layer can have its own set of control hairs, or share them, but apply different stylistic parameters. Using layers can ease and speed up the development of a complex hair or fur style by breaking it up into simpler components, which are then combined at render time.

Procedural Hair Effects