Chapter Topics

• Databases and Python RDBMSs, ORMs, and Python

• Database Application Programmer’s Interface (DB-API)

• Relational Databases (RDBMSs)

In this chapter, we discuss how to communicate with databases from Python. Earlier, we discussed simplistic persistent storage, but in many cases, a full-fledged relational database management system (RDBMS) is required for your application.

In any application, there is a need for persistent storage. Generally, there are three basic storage mechanisms: files, a relational database system (RDBMS), or some sort of hybrid, i.e., an API (application programmer interface) that “sits on top of” one of those existing systems, an object relational mapper (ORM), file manager, spreadsheet, configuration file, etc.

In an earlier chapter, we discussed persistent storage using both plain file access as well as a Python and DBM overlay on top of files, i.e., *dbm, dbhash/bsddb files, shelve (combination of pickle and DBM), and using their dictionary-like object interface. This chapter will focus on using RDBMSs for the times when files or writing your own system does not suffice for larger projects.

Before we dig into databases and how to use them with Python, we want to present a quick introduction (or review if you have some experience) to some elementary database concepts and the Structured Query Language (SQL).

Databases usually have a fundamental persistent storage using the file system, i.e., normal operating system files, special operating system files, and even raw disk partitions.

Most database systems provide a command-line tool with which to issue SQL commands or queries. There are also some GUI tools that use the command-line clients or the database client library, giving users a much nicer interface.

An RDBMS can usually manage multiple databases, e.g., sales, marketing, customer support, etc., all on the same server (if the RDBMS is server-based; simpler systems are usually not). In the examples we will look at in this chapter, MySQL is an example of a server-based RDBMS because there is a server process running continuously waiting for commands while neither SQLite nor Gadfly have running servers.

The table is the storage abstraction for databases. Each row of data will have fields that correspond to database columns. The set of table definitions of columns and data types per table all put together define the database schema.

Databases are created and dropped. The same is true for tables. Adding new rows to a database is called inserting, changing existing rows in a table is called updating, and removing existing rows in a table is called deleting. These actions are usually referred to as database commands or operations. Requesting rows from a database with optional criteria is called querying.

When you query a database, you can fetch all of the results (rows) at once, or just iterate slowly over each resulting row. Some databases use the concept of a cursor for issuing SQL commands, queries, and grabbing results, either all at once or one row at a time.

Database commands and queries are given to a database by SQL. Not all databases use SQL, but the majority of relational databases do. Here are some examples of SQL commands. Most databases are configured to be case-insensitive, especially database commands. The accepted style is to use CAPS for database keywords. Most command-line programs require a trailing semicolon ( ; ) to terminate a SQL statement.

Creating a Database

CREATE DATABASE test;

GRANT ALL ON test.* to user(s);

The first line creates a database named “test,” and assuming that you are a database administrator, the second line can be used to grant permissions to specific users (or all of them) so that they can perform the database operations below.

Using a Database

USE test;

If you logged into a database system without choosing which database you want to use, this simple statement allows you to specify one with which to perform database operations.

Dropping a Database

DROP DATABASE test;

This simple statement removes all the tables and data from the database and deletes it from the system.

Creating a Table

CREATE TABLE users (login VARCHAR(8), uid INT, prid INT);

This statement creates a new table with a string column login and a pair of integer fields uid and prid.

Dropping a Table

DROP TABLE users;

This simple statement drops a database table along with all its data.

Inserting a Row

INSERT INTO users VALUES('leanna', 311, 1);

You can insert a new row in a database with the INSERT statement. Specify the table and the values that go into each field. For our example, the string 'leanna' goes into the login field, and 311 and 1 to uid and prid, respectively.

Updating a Row

UPDATE users SET prid=4 WHERE prid=2;

UPDATE users SET prid=1 WHERE uid=311;

To change existing table rows, you use the UPDATE statement. Use SET for the columns that are changing and provide any criteria for determining which rows should change. In the first example, all users with a “project ID” or prid of 2 will be moved to project #4. In the second example, we take one user (with a UID of 311) and move them to project #1.

Deleting a Row

DELETE FROM users WHERE prid=%d;

DELETE FROM users;

To delete a table row, use the DELETE FROM command, give the table you want to delete rows from, and any optional criteria. Without it, as in the second example, all rows will be deleted.

Now that you are up to speed on basic database concepts, it should make following the rest of the chapter and its examples much easier. If you need additional help, there are plenty of database books out in the market that you can check out.

We are going to cover the Python database API and look at how to access relational databases from Python, either directly through a database interface, or via an ORM, and how you can accomplish the same task but without necessarily having to give explicitly commands in SQL.

Topics such as database principles, concurrency, schema, atomicity, integrity, recovery, proper complex left JOINs, triggers, query optimization, transactions, stored procedures, etc., are all outside the scope of this text, and we will not be discussing these in this chapter other than direct use from a Python application. There are plenty of resources you can refer to for general information. Rather, we will present how to store and retrieve data to/from RDBMSs while playing within a Python framework. You can then decide which is best for your current project or application and be able to study sample code that can get you started instantly. The goal is to get you up to speed as quickly as possible if you need to integrate your Python application with some sort of database system.

We are also breaking out of our mode of covering only the “batteries included” features of the Python standard library. While our original goal was to play only in that arena, it has become clear that being able to work with databases is really a core component of everyday application development in the Python world.

As a software engineer, you can probably only make it so far in your career without having to learn something about databases: how to use one (command-line and/or GUI interfaces), how to pull data out of one using the Structured Query Language (SQL), perhaps how to add or update information in a database, etc. If Python is your programming tool, then a lot of the hard work has already been done for you as you add database access to your Python universe. We first describe what the Python “DB-API” is, then give examples of database interfaces that conform to this standard.

We will give some examples using popular open source relational database management systems (RDBMSs). However, we will not include discussions of open source vs. commercial products, etc. Adapting to those other RDBMS systems should be fairly straightforward. A special mention will be given to Aaron Watters’s Gadfly database, a simple RDBMS written completely in Python.

The way to access a database from Python is via an adapter. An adapter is basically a Python module that allows you to interface to a relational database’s client library, usually in C. It is recommended that all Python adapters conform to the Python DB-SIG’s Application Programmer Interface (API). This is the first major topic of this chapter.

Figure 21.1 illustrates the layers involved in writing a Python database application, with and without an ORM. As you can see, the DB-API is your interface to the C libraries of the database client.

Where can one find the interfaces necessary to talk to a database? Simple. Just go to the database topics section at the main Python Web site. There you will find links to the full and current DB-API (version 2.0), existing database modules, documentation, the special interest group, etc. Since its inception, the DB-API has been moved into PEP 249. (This PEP obsoletes the old DB-API 1.0 specification which is PEP 248.) What is the DB-API?

The API is a specification that states a set of required objects and database access mechanisms to provide consistent access across the various database adapters and underlying database systems. Like most community-based efforts, the API was driven by strong need.

In the “old days,” we had a scenario of many databases and many people implementing their own database adapters. It was a wheel that was being reinvented over and over again. These databases and adapters were implemented at different times by different people without any consistency of functionality. Unfortunately, this meant that application code using such interfaces also had to be customized to which database module they chose to use, and any changes to that interface also meant updates were needed in the application code.

A special interest group (SIG) for Python database connectivity was formed, and eventually, an API was born ... the DB-API version 1.0. The API provides for a consistent interface to a variety of relational databases, and porting code between different databases is much simpler, usually only requiring tweaking several lines of code. You will see an example of this later on in this chapter.

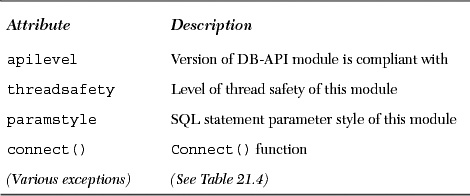

The DB-API specification mandates that the features and attributes listed below must be supplied. A DB-API-compliant module must define the global attributes as shown in Table 21.1.

apilevel

This string (not float) indicates the highest version of the DB-API the module is compliant with, i.e., “1.0”, “2.0”, etc. If absent, “1.0” should be assumed as the default value.

This an integer with these possible values:

• 0: Not threadsafe, so threads should not share the module at all

• 1: Minimally threadsafe: threads can share the module but not connections

• 2: Moderately threadsafe: threads can share the module and connections but not cursors

• 3: Fully threadsafe: threads can share the module, connections, and cursors

If a resource is shared, a synchronization primitive such as a spin lock or semaphore is required for atomic-locking purposes. Disk files and global variables are not reliable for this purpose and may interfere with standard mutex operation. See the threading module or the chapter on multithreaded programming (Chapter 16) on how to use a lock.

paramstyle

The API supports a variety of ways to indicate how parameters should be integrated into an SQL statement that is eventually sent to the server for execution. This argument is just a string that specifies the form of string substitution you will use when building rows for a query or command (see Table 21.2).

connect() Function access to the database is made available through Connection objects. A compliant module has to implement a connect() function, which creates and returns a Connection object. Table 21.3 shows the arguments to connect().

You can pass in database connection information as a string with multiple parameters (DSN) or individual parameters passed as positional arguments (if you know the exact order), or more likely, keyworded arguments. Here is an example of using connect() from PEP 249:

connect(dsn='myhost:MYDB',user='guido',password='234$')

The use of DSN versus individual parameters is based primarily on the system you are connecting to. For example, if you are using an API like ODBC or JDBC, you would likely be using a DSN, whereas if you are working directly with a database, then you are more likely to issue separate login parameters. Another reason for this is that most database adapters have not implemented support for DSN. Below are some examples of non-DSN connect() calls. Note that not all adapters have implemented the specification exactly, e.g., MySQLdb uses db instead of database.

• MySQLdb.connect(host='dbserv', db='inv', user='smith')

• PgSQL.connect(database='sales')

• psycopg.connect(database='template1', user='pgsql')

• gadfly.dbapi20.connect('csrDB', '/usr/local/database')

• sqlite3.connect('marketing/test')

Exceptions that should also be included in the compliant module as globals are shown in Table 21.4.

Connections are how your application gets to talk to the database. They represent the fundamental communication mechanism by which commands are sent to the server and results returned. Once a connection has been established (or a pool of connections), you create cursors to send requests to and receive replies from the database.

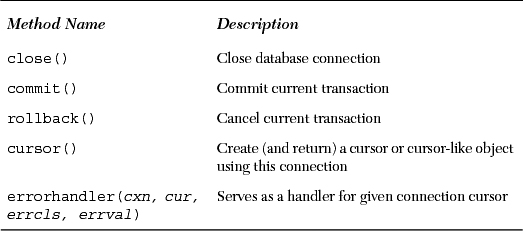

Connection objects are not required to have any data attributes but should define the methods shown in Table 21.5.

When close() is used, the same connection cannot be used again without running into an exception.

The commit() method is irrelevant if the database does not support transactions or if it has an auto-commit feature that has been enabled. You can implement separate methods to turn auto-commit off or on if you wish. Since this method is required as part of the API, databases that do not have the concept of transactions should just implement “pass” for this method.

Like commit(), rollback() only makes sense if transactions are supported in the database. After execution, rollback() should leave the database in the same state as it was when the transaction began. According to PEP 249, “Closing a connection without committing the changes first will cause an implicit rollback to be performed.”

If the RDBMS does not support cursors, cursor() should still return an object that faithfully emulates or imitates a real cursor object. These are just the minimum requirements. Each individual adapter developer can always add special attributes specifically for their interface or database.

It is also recommended but not required for adapter writers to make all database module exceptions (see above) available via a connection. If not, then it is assumed that Connection objects will throw the corresponding module-level exception. Once you have completed using your connection and cursors closed, you should commit() any operations and close() your connection.

Once you have a connection, you can start talking to the database. As we mentioned above in the introductory section, a cursor lets a user issue database commands and retrieve rows resulting from queries. A Python DB-API cursor object functions as a cursor for you, even if cursors are not supported in the database. In this case, the database adapter creator must implement CURSOR objects so that they act like cursors. This keeps your Python code consistent when you switch between database systems that have or do not have cursor support.

Once you have created a cursor, you can execute a query or command (or multiple queries and commands) and retrieve one or more rows from the results set. Table 21.6 shows data attributes and methods that cursor objects have.

The most critical attributes of cursor objects are the execute*() and the fetch*() methods ... all the service requests to the database are performed by these. The arraysize data attribute is useful in setting a default size for fetchmany(). Of course, closing the cursor is a good thing, and if your database supports stored procedures, then you will be using callproc().

Oftentimes, the interface between two different systems are the most fragile. This is seen when converting Python objects to C types and vice versa. Similarly, there is also a fine line between Python objects and native database objects. As a programmer writing to Python’s DB-API, the parameters you send to a database are given as strings, but the database may need to convert it to a variety of different, supported data types that are correct for any particular query.

For example, should the Python string be converted to a VARCHAR, a TEXT, a BLOB, or a raw BINARY object, or perhaps a DATE or TIME object if that is what the string is supposed to be? Care must be taken to provide database input in the expected format, so because of this another requirement of the DB-API is to create constructors that build special objects that can easily be converted to the appropriate database objects. Table 21.7 describes classes that can be used for this purpose. SQL NULL values are mapped to and from Python’s NULL object, None.

Several important changes were made when the DB-API was revised from version 1.0 (1996) to 2.0 (1999):

• Required dbi module removed from API

• Type objects were updated

• New attributes added to provide better database bindings

• callproc() semantics and return value of execute() redefined

• Conversion to class-based exceptions

Since version 2.0 was published, some of the additional optional DB-API extensions that you read about above were added in 2002. There have been no other significant changes to the API since it was published. Continuing discussions of the API occur on the DB-SIG mailing list. Among the topics brought up over the last 5 years include the possibilities for the next version of the DB-API, tentatively named DB-API 3.0. These include the following:

• Better return value for nextset() when there is a new result set

• Switch from float to Decimal

• Improved flexibility and support for parameter styles

• Prepared statements or statement caching

• Refine the transaction model

• State the role of API with respect to portability

• Add unit testing

If you have strong feelings about the API, feel free to participate and join in the discussion. Here are some references you may find handy.

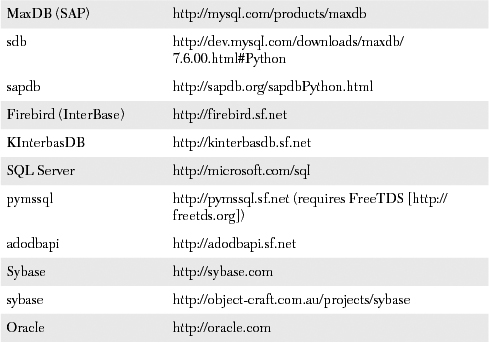

So, you are now ready to go. A burning question must be, “Interfaces to which database systems are available to me in Python?” That inquiry is similar to, “Which platforms is Python available for?” The answer is, “Pretty much all of them.” Following is a list that is comprehensive but not exhaustive:

• Informix

• Sybase

• Oracle

• MS SQL Server

• DB/2

• SAP

• Interbase

• Ingres

Open Source RDBMSs

• MySQL

• PostgreSQL

• SQLite

• Gadfly

Database APIs

• JDBC

• ODBC

To find a current list of what databases are supported, check out:

For each of the databases supported, there exists one or more adapters that let you connect to the target database system from Python. Some databases, such as Sybase, SAP, Oracle, and SQLServer, have more than one adapter available. The best thing to do is to find out which ones fit your needs best. Your questions for each candidate may include: how good its performance is, how useful is its documentation and/or Web site, whether it has an active community or not, what the overall quality and stability of the driver is, etc. You have to keep in mind that most adapters provide just the basic necessities to get you connected to the database. It is the extras that you may be looking for. Keep in mind that you are responsible for higher-level code like threading and thread management as well as management of database connection pools, etc.

If you are squeamish and want less hands-on—for example, if you wish to do as little SQL or database administration as much as possible—then you may wish to consider object-relational mappers, covered later on in this chapter.

Let us now look at some examples of how to use an adapter module to talk to a relational database. The real secret is in setting up the connection. Once you have this and use the DB-API objects, attributes, and object methods, your core code should be pretty much the same regardless of which adapter and RDBMS you use.

First, let us look at a some sample code, from creating a database to creating a table and using it. We present examples using MySQL, PostgreSQL, and SQLite.

We will use MySQL as the example here, along with the only MySQL Python adapter: MySQLdb, aka MySQL-python. In the various bits of code, we will also show you (deliberately) examples of error situations so that you have an idea of what to expect, and what you may wish to create handlers for.

We first log in as an administrator to create a database and grant permissions, then log back in as a normal client.

In the code above, we did not use a cursor. Some adapters have Connection objects, which can execute SQL queries with the query() method, but not all. We recommend you either not use it or check your adapter to make sure it is available.

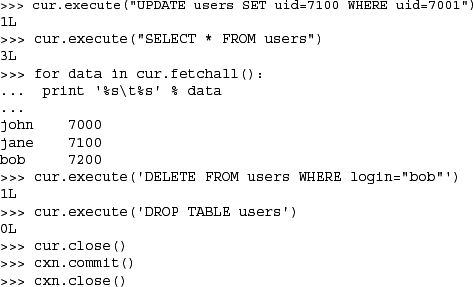

The commit() was optional for us as auto-commit is turned on by default in MySQL. We then connect back to the new database as a regular user, create a table, and perform the usual queries and commands using SQL to get our job done via Python. This time we use cursors and their execute() method.

The next set of interactions shows us creating a table. An attempt to create it again (without first dropping it) results in an error.

Now we will insert a few rows into the database and query them out.

The last bit features updating the table, either updating or deleting rows.

MySQL is one of the most popular open source databases in the world, and it is no surprise that a Python adapter is available for it.

Another popular open source database is PostgreSQL. Unlike MySQL, there are no less than three current Python adapters available for Postgres: psycopg, PyPgSQL, and PyGreSQL. A fourth, PoPy, is now defunct, having contributed its project to combine with that of PyGreSQL back in 2003. Each of the three remaining adapters has its own characteristics, strengths, and weaknesses, so it would be a good idea to practice due diligence to determine which is right for you.

The good news is that the interfaces are similar enough that you can create an application that, say, measures the performance between all three (if that is a metric that is important to you). Here we show you the setup code to get a Connection object for each:

psycopg

>>> import psycopg

>>> cxn = psycopg.connect(user='pgsql')

PyPgSQL

>>> from pyPgSQL import PgSQL

>>> cxn = PgSQL.connect(user='pgsql')

PyGreSQL

>>> import pgdb

>>> cxn = pgdb.connect(user='pgsql')

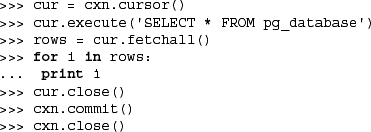

Now comes some generic code that will work for all three adapters.

Finally, you can see how their outputs are slightly different from one another.

sales

template1

template0

psycopg

PyGreSQL

For extremely simple applications, using files for persistent storage usually suffices, but the most complex and data-driven applications demand a full relational database. SQLite targets the intermediate systems and indeed is a hybrid of the two. It is extremely lightweight and fast, plus it is serverless and requires little or no administration.

SQLite has seen a rapid growth in popularity, and it is available on many platforms. With the introduction of the pysqlite database adapter in Python 2.5 as the sqlite3 module, this marks the first time that the Python standard library has featured a database adapter in any release.

It was bundled with Python not because it was favored over other databases and adapters, but because it is simple, uses files (or memory) as its backend store like the DBM modules do, does not require a server, and does not have licensing issues. It is simply an alternative to other similar persistent storage solutions included with Python but which happens to have a SQL interface.

Having a module like this in the standard library allows users to develop rapidly in Python using SQLite, then migrate to a more powerful RDBMS such as MySQL, PostgreSQL, Oracle, or SQL Server for production purposes if this is their intention. Otherwise, it makes a great solution to stay with for those who do not need all that horsepower.

Although the database adapter is now provided in the standard library, you still have to download the actual database software yourself. However, once you have installed it, all you need to do is start up Python (and import the adapter) to gain immediate access:

Okay, enough of the small examples. Next, we look at an application similar to our earlier example with MySQL, but which does a few more things:

• Creates a database (if necessary)

• Creates a table

• Inserts rows into the table

• Updates rows in the table

• Deletes rows from the table

• Drops the table

For this example, we will use two other open source databases. SQLite has become quite popular of late. It is very small, lightweight, and extremely fast for all the most common database functions. Another database involved in this example is Gadfly, a mostly SQL-compliant RDBMS written entirely in Python. (Some of the key data structures have a C module available, but Gadfly can run without it [slower, of course].)

Some notes before we get to the code. Both SQLite and Gadfly require the user to give the location to store database files (while MySQL has a default area and does not require this information from the use). The most current incarnation of Gadfly is not yet fully DB-API 2.0 compliant, and as a result, is missing some functionality, most notably the cursor attribute rowcount in our example.

In the example below, we want to demonstrate how to use Python to access a database. In fact, for variety, we added support for three different database systems: Gadfly, SQLite, and MySQL. We are going to create a database (if one does not already exist), then run through various database operations such as creating and dropping tables, and inserting, updating, and deleting rows. Example 21.1 will be duplicated for the upcoming section on ORMs as well.

The first part of this script imports the necessary modules, creates some global “constants” (the column size for display and the set of databases we are supporting), and features the setup() function, which prompts the user to select the RDBMS to use for any particular execution of this script.

The most notable constant here is DB_EXC, which stands for DataBase EXCeption. This variable will eventually be assigned the database exception module for the specific database system that the users chooses to use to run this application with. In other words, if users choose MySQL, DB_EXC will be _mysql_exceptions, etc. If we developed this application in more of an object-oriented fashion, this would simply be an instance attribute, i.e., self.db_exc_module or something like that.

The guts of consistent database access happens here in the connect() function. At the beginning of each section, we attempt to load the requested database modules. If a suitable one is not found, None is returned to indicate that the database system is not supported.

Once a connection is made, then all other code is database and adapter independent and should work across all connections. (The only exception in our script is insert().) In all three subsections of this set of code, you will notice that a valid connection should be passed back as cxn.

If SQLite is chosen (lines 24-36), we attempt to load a database adapter. We first try to load the standard library’s sqlite3 module (Python 2.5+). If that fails, we look for the third-party pysqlite2 package. This is to support 2.4.x and older systems with the pysqlite adapter installed. If a suitable adapter is found, we then check to ensure that the directory exists because the database is file based. (You may also choose to create an in-memory database.) When the connect() call is made to SQLite, it will either use one that already exists or make a new one using that path if it does not.

MySQL (lines 38-57) uses a default area for its database files and does not require this to come from the user. Our code attempts to connect to the specified database. If an error occurs, it could mean either that the database does not exist or that it does exist but we do not have permission to see it. Since this is just a test application, we elect to drop the database altogether (ignoring any error if the database does not exist), and re-create it, granting all permissions after that.

The last database supported by our application is Gadfly (lines 59-75). (At the time of writing, this database is mostly but not fully DB-API-compliant, and you will see this in this application.) It uses a startup mechanism similar to that of SQLite: it starts up with the directory where the database files should be. If it is there, fine, but if not, you have to take a roundabout way to start up a new database. (Why this is, we are not sure. We believe that the startup() functionality should be merged into that of the constructor gadfly.gadfly().)

The create() function creates a new users table in our database. If there is an error, that is almost always because the table already exists. If this is the case, drop the table and re-create it by recursively calling this function again. This code is dangerous in that if the recreation of the table still fails, you will have infinite recursion until your application runs out of memory. You will fix this problem in one of the exercises at the end of the chapter.

The table is dropped from the database with the one-liner drop().

This is probably the most interesting part of the code outside of database activity. It consists of a constant set of names and user IDs followed by the generator randName() whose code can be found in Chapter 11 (Functions) in Section 11.10. The NAMES constant is a tuple that must be converted to a list for use with randName() because we alter it in the generator, randomly removing one name at a time until the list is exhausted. Well, if NAMES was a list, we would only use it once. Instead, we make it a tuple and copy it to a list to be destroyed each time the generator is used.

The insert() function is the only other place where database-dependent code lives, and the reason is that each database is slightly different in one way or another. For example, both the adapters for SQLite and MySQL are DB-API-compliant, so both of their cursor objects have an executemany() function, whereas Gadfly does not, so rows have to be inserted one at a time.

Another quirk is that both SQLite and Gadfly use the qmark parameter style while MySQL uses format. Because of this, the format strings are different. If you look carefully, however, you will see that the arguments themselves are created in a very similar fashion.

What the code does is this: for each name-userID pair, it assigns that individual to a project group (given by its project ID or prid). The project ID is chosen randomly out of four different groups (randrange(1,5)).

This single line represents a conditional expression (read as: Python ternary operator) that returns the rowcount of the last operation (in terms of rows altered), or if the cursor object does not support this attribute (meaning it is not DB-API-compliant), it returns -1.

Conditional expressions were added in Python 2.5, so if you are using 2.4.x or older, you will need to convert it back to the “old-style” way of doing it:

![]()

If you are confused by this line of code, don’t worry about it. Check the FAQ to see why this is, and get a taste of why conditional expressions were finally added to Python in 2.5. If you are able to figure it out, then you have developed a solid understanding of Python objects and their Boolean values.

The update() and delete() functions randomly choose folks from one group. If the operation is update, move them from their current group to another (also randomly chosen); if it is delete, remove them altogether.

The dbDump() function pulls all rows from the database, formats them for printing, and displays them to the user. The print statement to display each user is the most obfuscated, so let us take it apart.

First, you should see that the data were extracted after the SELECT by the fetchall() method. So as we iterate each user, take the three columns (login, uid, prid), convert them to strings (if they are not already), titlecase it, and format the complete string to be COLSIZ columns left-justified (right-hand space padding). Since the code to generate these three strings is a list (via the list comprehension), we need to convert it to a tuple for the format operator ( % ).

The director of this movie is main(). It makes the individual functions to each function described above that defines how this script works (assuming that it does not exit due to either not finding a database adapter or not being able to obtain a connection [lines 143-145]). The bulk of it should be fairly self-explanatory given the proximity of the print statements. The last bits of main() close the cursor, and commit and close the connection. The final lines of the script are the usual to start the script.

As seen in the previous section, a variety of different database systems are available today, and most of them have Python interfaces to allow you to harness their power. The only drawback to those systems is the need to know SQL. If you are a programmer who feels more comfortable with manipulating Python objects instead of SQL queries, yet still want to use a relational database as your data backend, then you are a great candidate to be a user of ORMs.

Creators of these systems have abstracted away much of the pure SQL layer and implemented objects in Python that you can manipulate to accomplish the same tasks without having to generate the required lines of SQL. Some systems allow for more flexibility if you do have to slip in a few lines of SQL, but for the most part, you can avoid almost all the general SQL required.

Database tables are magically converted to Python classes with columns and features as attributes and methods responsible for database operations. Setting up your application to an ORM is somewhat similar to that of a standard database adapter. Because of the amount of work that ORMs perform on your behalf, some things are actually more complex or require more lines of code than using an adapter directly. Hopefully, the gains you achieve in productivity make up for a little bit of extra work.

The most well-known Python ORMs today are SQLAlchemy and SQLObject. We will give you examples of SQLAlchemy and SQLObject because the systems are somewhat disparate due to different philosophies, but once you figure these out, moving on to other ORMs is much simpler.

Some other Python ORMs include PyDO/PyDO2, PDO, Dejavu, PDO, Durus, QLime, and ForgetSQL. Larger Web-based systems can also have their own ORM component, i.e., WebWare MiddleKit and Django’s Database API. Note that “well-known” does not mean “best for your application.” Although these others were not included in our discussion, that does not mean that they would not be right for your application.

We will port our user shuffle application ushuffle_db.py to both SQLAlchemy and SQLObject below. MySQL will be the backend database server for both. You will note that we implement these as classes because there is more of an object “feel” to using ORMs as opposed to using raw SQL in a database adapter. Both examples import the set of NAMES and the random name chooser from ushuffle_db.py. This is to avoid copying-and-pasting the same code everywhere as code reuse is a good thing.

We start with SQLAlchemy because its interface is somewhat closer to SQL than SQLObject’s interface. SQLAlchemy abstracts really well to the object world but does give you more flexibility in issuing SQL if you have to. You will find both of these ORMs (Examples 21.2 and 21.3) very similar in terms of setup and access, as well as being of similar size, and both shorter than ushuffle_db.py (including the sharing of the names list and generator used to randomly iterate through that list).

Example 21.2. SQLAlchemy ORM Example (ushuffle_sa.py)

This "user shuffle" application features SQLAlchemy paired up with the MySQL database as its backend.

As expected, we begin with module imports and constants. We follow the suggested style guideline of importing Python Standard Library modules first, followed by third-party or external modules, and finally, local modules to our application. The constants should be fairly self-explanatory.

The constructor for our class, like ushuffle_db.connect(), does everything it can to make sure that there is a database available and returns a connection to it (lines 18-31). This is the only place you will see real SQL, as such activity is typically an operational task, not application-oriented.

The try-except clause (lines 33-40) is used to reload an existing table or make a new one if it does not exist yet. Finally, we attach the relevant objects to our instance.

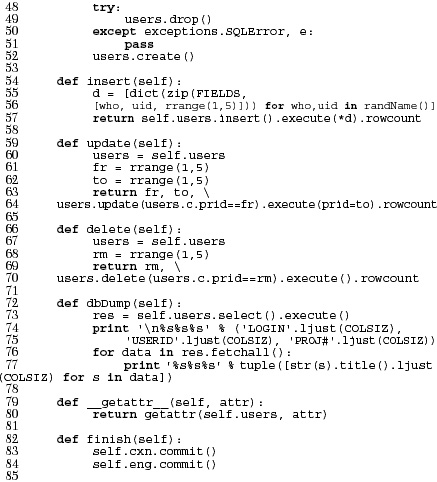

These next four methods represent the core database functionality of table creation (lines 46-52), insertion (lines 54-57), update (lines 59-64), and deletion (lines 66-70). We should also have a method for dropping the table:

def drop(self):

self.users.drop()

or

drop = lambda self: self.users.drop()

However, we made a decision to give another demonstration of delegation (as introduced in Chapter 13, Object-Oriented Programming). Delegation is where missing functionality (method call) is passed to another object in our instance which has it. See the explanation of lines 79-80.

The responsibility of displaying proper output to the screen belongs to the dbDump() method. It extracts the rows from the database and pretty-prints the data just like its equivalent in ushuffle_db.py. In fact, they are nearly identical.

We deliberately avoided creating a drop() method for the table since it would just call the table’s drop method anyway. Also, there is no added functionality, so why create yet another function to have to maintain? The __getattr__() special method is called whenever an attribute lookup fails.

If our object calls orm.drop() and finds no such method, getattr (orm, 'drop') is invoked. When that happens, __getattr__() is called and delegates the attribute name to self.users. The interpreter will find that self.users has a drop attribute and pass that method call to it: self. users.drop()!

The main() function drives our application. It creates a MySQLAlchemy object and uses that for all database operations. The script is the same as for our original application, ushuffle_db.py. You will notice that the database parameter db is optional and does not serve any purpose here in ushuffle_sa.py or the upcoming SQLobject version ushuffle_so.py. This is a placeholder for you to add support for other RDBMSs in these applications (see Exercises at the end of the chapter).

Upon running this script, you may get output that looks like this:

This modules imports and constant declarations are practically identical to those of ushuffle_sa.py except that we are using SQLObject instead of SQLAlchemy.

The constructor for our class does everything it can to make sure that there is a database available and returns a connection to it, just like our SQLAlchemy example. Similarly, this is the only place you will see real SQL. Our application, as coded here, will result in an infinite loop if for some reason a Users table cannot be created in SQLObject.

We are trying to be clever in handling errors by fixing the problem and retrying the table (re)create. Since SQLobject uses metaclasses, we know that special magic is happening under the covers, so we have to define two different classes—one for if the table already exists and another if it does not.

The code works something like this:

- Try and establish a connection to an existing table; if it works, we are done (lines 23-29)

- Otherwise, create the class from scratch for the table; if so, we are done (lines 31-36)

- Otherwise, we have a database issue, so try and make a new database (lines 37-40)

- Loop back up and try all this again

Hopefully it (eventually) succeeds in one of the first two places. When the loop is terminated, we attach the relevant objects to our instance as we did in ushuffle_sa.py.

The database operations happen in these lines. We have table create (lines 44-47) and drop (line 77), insert (lines 49-52), update (lines 54-60), and delete (lines 62-67). The finish() method on line 78 is to close the connection. We could not use delegation for table drop like we did for the SQLAlchemy example because the would-be delegated method for it is called dropTable() not drop().

This is the same and expected dbDump() method, which pulls the rows from the database and displays things nicely to the screen.

This is the main() function again. It works just like the one in ushuffle_sa.py. Also, the db argument to the constructor is a placeholder for you to add support for other RDBMSs in these applications (see Exercises at the end of the chapter).

Here is what your output may look like if you run this script:

We hope that we have provided you with a good introduction to using relational databases with Python. When your application’s needs go beyond those offered by plain files, or specialized files like DBM, pickled, etc., you have many options. There are a good number of RDBMSs out there, not to mention one completely implemented in Python, freeing one from having to install, maintain, or administer a real database system. Below, you will find information on many of the Python adapters plus database and ORM systems out there. We also suggest checking out the DB-SIG pages as well as the Web pages and mailing lists of all systems of interest. Like all other areas of software development, Python makes things easy to learn and simple to experiment with.

Table 21.8 lists most of the common databases out there along with working Python modules and packages that serve as adapters to those database systems. Note that not all adapters are DB-API-compliant.

21-1. Database API. What is the Python DB-API? Is it a good thing? Why (or why not)?

21-2. Database API. Describe the differences between the database module parameter styles (see the paramstyle module attribute).

21-3. Cursor Objects. What are the differences between the cursor execute*() methods?

21-4. Cursor Objects. What are the differences between the cursor fetch*() methods?

21-5. Database Adapters. Research your RDBMS and its Python module. Is it DB-API compliant? What additional features are available for that module that are extras not required by the API?

21-6. Type Objects. Study using Type objects for your database and DB-API adapter and write a small script that uses at least one of those objects.

21-7. Refactoring. In the create() function of Example 21.1 (ushuffle_db.py), a table that already exists is dropped and re-created by recursively calling create() again. This is dangerous in case the re-creation of the table fails (again) because you will then have infinite recursion. Fix this problem by creating a more practical solution that does not involve copying the create query (cur.execute()) again in the exception handler. Extra Credit: Try to recreate the table a maximum of three times before returning failure back to the caller.

21-8. Database and HTML. Take any existing database table, and use the knowledge you developed from Chapter 20 and output the contents of a database table into an HTML table.

21-9. Web Programming and Databases. Take our “user shuffle” example (ushuffle_db.py), and create a Web interface for it.

21-10. GUI Programming and Databases. Take our “user shuffle” example (ushuffle_db.py), and throw a GUI for it.

21-11. Stock Portfolio Class. Update the stock database example from Chapter 13 to use a relational database.

21-12. Switching ORMs to a Different RDBMS. Take either the SQLAlchemy (ushuffle_sa.py) or SQLObject (ushuffle_so.py) application and swap out MySQL as the back-end RDBMS for another one of your choice.