System Identification Based on Mutual Information Criteria

As a central concept in communication theory, mutual information measures the amount of information that one random variable contains about another. The larger the mutual information between two random variables is, the more information they share, and the better the estimation algorithm can be. Typically, there are two mutual information-based identification criteria: the minimum mutual information (MinMI) and the maximum mutual information (MaxMI) criteria. The MinMI criterion tries to minimize the mutual information between the identification error and the input signal such that the error signal contains as little as possible information about the input,1 while the MaxMI criterion aims to maximize the mutual information between the system output and the model output such that the model contains as much as possible information about the system in their outputs. Although the MinMI criterion is essentially equivalent to the minimum error entropy (MEE) criterion, their physical meanings are different. The MaxMI criterion is somewhat similar to the Infomax principle, an optimization principle for neural networks and other information processing systems. They are, however, different in their concepts. The Infomax states that a function that maps a set of input values I to a set of output values O should be chosen (or learned) so as to maximize the mutual information between I and O, subject to a set of specified constraints and/or noise processes. In the following, we first discuss the MinMI criterion.

6.1 System Identification Under the MinMI Criterion

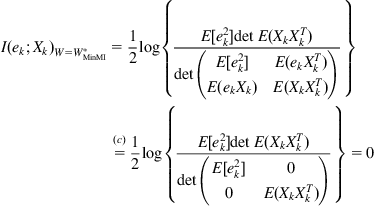

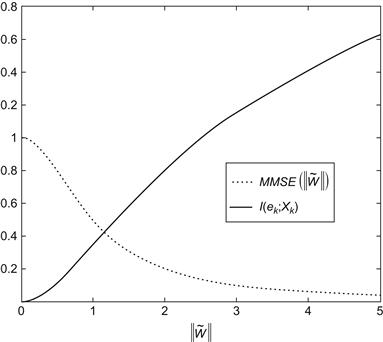

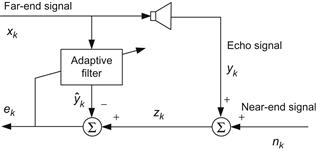

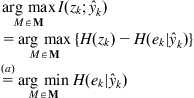

The basic idea behind the MinMI criterion is that the model parameters should be determined such that the identification error contains as little as possible information about the input signal. The scheme of this identification method is shown in Figure 6.1. The objective function is the mutual information between the error and the input, and the optimal parameter is solved as

![]() (6.1)

(6.1)

where ![]() is the identification error at

is the identification error at ![]() time (the difference between the measurement

time (the difference between the measurement ![]() and the model output

and the model output ![]() ),

), ![]() is a vector consisting of all the inputs that have influence on the model output

is a vector consisting of all the inputs that have influence on the model output ![]() (possibly an infinite dimensional vector),

(possibly an infinite dimensional vector), ![]() is the set of all possible

is the set of all possible ![]() -dimensional parameter vectors.

-dimensional parameter vectors.

For a general causal system, ![]() will be

will be

![]() (6.2)

(6.2)

If the model output depends on finite input (e.g., the finite impulse response (FIR) filter), then

![]() (6.3)

(6.3)

Assume the initial state of the model is known, the output ![]() will be a function of

will be a function of ![]() , i.e.,

, i.e., ![]() . In this case, the MinMI criterion is equivalent to the MEE criterion. In fact, we can derive

. In this case, the MinMI criterion is equivalent to the MEE criterion. In fact, we can derive

(6.4)

(6.4)

where (a) is because that the conditional entropy ![]() is not related to the parameter vector

is not related to the parameter vector ![]() . In Chapter 3, we have proved a similar property when discussing the MEE Bayesian estimation. That is, minimizing the estimation error entropy is equivalent to minimizing the mutual information between the error and the observation.

. In Chapter 3, we have proved a similar property when discussing the MEE Bayesian estimation. That is, minimizing the estimation error entropy is equivalent to minimizing the mutual information between the error and the observation.

Although both are equivalent, the MinMI criterion and the MEE criterion are much different in meaning. The former aims to decrease the statistical dependence while the latter tries to reduce the uncertainty (scatter or dispersion).

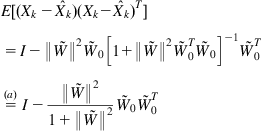

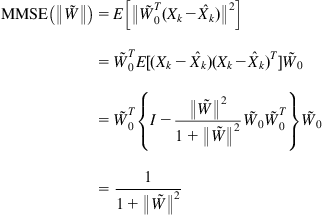

6.1.1 Properties of MinMI Criterion

Let the model be an FIR filter. We discuss in the following the optimal solution of the MinMI criterion and investigate the connection to the mean square error (MSE) criterion [234].

6.1.2 Relationship with Independent Component Analysis

The parameter identification under MinMI criterion is actually a special case of independent component analysis (ICA) [133]. A brief scheme of the ICA problem is shown in Figure 6.3, where ![]() is the

is the ![]() -dimensional source vector,

-dimensional source vector, ![]() is the

is the ![]() -dimensional observation vector that is related to the source vector through

-dimensional observation vector that is related to the source vector through ![]() , where

, where ![]() is the

is the ![]() mixing matrix [236]. Assume that each component of the source signal

mixing matrix [236]. Assume that each component of the source signal ![]() is mutually independent. There is no other prior knowledge about

is mutually independent. There is no other prior knowledge about ![]() and the mixing matrix

and the mixing matrix ![]() . The aim of the ICA is to search a

. The aim of the ICA is to search a ![]() matrix

matrix ![]() (i.e., the demixing matrix) such that

(i.e., the demixing matrix) such that ![]() approaches as closely as possible

approaches as closely as possible ![]() up to scaling and permutation ambiguities.

up to scaling and permutation ambiguities.

The ICA can be formulated as an optimization problem. To make each component of ![]() as mutually independent as possible, one can solve the matrix

as mutually independent as possible, one can solve the matrix ![]() under a certain objective function that measures the degree of dependence (or independence). Since the mutual information measures the statistical dependence between random variables, we may use the mutual information between components of

under a certain objective function that measures the degree of dependence (or independence). Since the mutual information measures the statistical dependence between random variables, we may use the mutual information between components of ![]() as the optimization criterion,2 i.e.,

as the optimization criterion,2 i.e.,

(6.14)

(6.14)

To some extent, the system parameter identification can be regarded as an ICA problem. Consider the FIR system identification:

(6.15)

(6.15)

where ![]() ,

, ![]() and

and ![]() are

are ![]() -dimensional weight vectors of the unknown system and the model. If regarding the vectors

-dimensional weight vectors of the unknown system and the model. If regarding the vectors ![]() and

and ![]() as, respectively, the source signal and the observation in ICA, we have

as, respectively, the source signal and the observation in ICA, we have

![]() (6.16)

(6.16)

where ![]() is the mixing matrix and

is the mixing matrix and ![]() is the

is the ![]() identity matrix. The goal of the parameter identification is to make the model weight vector

identity matrix. The goal of the parameter identification is to make the model weight vector ![]() approximate the unknown weight vector

approximate the unknown weight vector ![]() , and hence make the identification error

, and hence make the identification error ![]() (

(![]() ) approach the additive noise

) approach the additive noise ![]() , or in other words, make the vector

, or in other words, make the vector ![]() approach the ICA source vector

approach the ICA source vector ![]() . Therefore, the vector

. Therefore, the vector ![]() can be regarded as the demixing output vector, where the demixing matrix is

can be regarded as the demixing output vector, where the demixing matrix is

![]() (6.17)

(6.17)

Due to the scaling ambiguity of the demixing output, it is reasonable to introduce a more general demixing matrix:

![]() (6.18)

(6.18)

where ![]() ,

, ![]() . In this case, the demixed output

. In this case, the demixed output ![]() will be related to the identification error via a proportional factor

will be related to the identification error via a proportional factor ![]() .

.

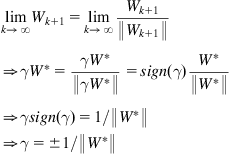

According to (6.14), the optimal demixing matrix will be

(6.19)

(6.19)

After obtaining the optimal matrix ![]() , one may get the optimal weight vector [133]

, one may get the optimal weight vector [133]

![]() (6.20)

(6.20)

Clearly, the above ICA formulation is actually the MinMI criterion-based parameter identification.

6.1.3 ICA-Based Stochastic Gradient Identification Algorithm

The MinMI criterion is in essence equivalent to the MEE criterion. Thus, one can utilize the various information gradient algorithms in Chapter 4 to implement the MinMI criterion-based identification. In the following, we introduce an ICA-based stochastic gradient identification algorithm [133].

According to the previous discussion, the MinMI criterion-based identification can be regarded as an ICA problem, i.e.,

![]() (6.21)

(6.21)

Since

![]() (6.22)

(6.22)

by (2.8), we have

![]() (6.23)

(6.23)

And hence

(6.24)

(6.24)

where (a) is due to the fact that the term ![]() is not related to the matrix

is not related to the matrix ![]() . Denote the objective function

. Denote the objective function ![]() . The instantaneous value of

. The instantaneous value of ![]() is

is

![]() (6.25)

(6.25)

in which ![]() is the PDF of

is the PDF of ![]() (

(![]() ).

).

In order to solve the demixing matrix ![]() , one can resort to the natural gradient (or relative gradient)-based method [133,237]:

, one can resort to the natural gradient (or relative gradient)-based method [133,237]:

(6.26)

(6.26)

where ![]() . As the PDF

. As the PDF ![]() is usually unknown, a certain nonlinear function (e.g., the tanh function) will be used to approximate the

is usually unknown, a certain nonlinear function (e.g., the tanh function) will be used to approximate the ![]() function.3

function.3

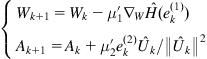

If adopting different step-sizes for learning the parameters ![]() and

and ![]() , we have

, we have

(6.27)

(6.27)

The above algorithm is referred to as the ICA-based stochastic gradient identification algorithm (or simply the ICA algorithm). The model weight vector learned by this method is

![]() (6.28)

(6.28)

If the parameter ![]() is set to constant

is set to constant ![]() , the algorithm will reduce to

, the algorithm will reduce to

![]() (6.29)

(6.29)

6.1.4 Numerical Simulation Example

Figure 6.4 illustrates a general configuration of an acoustic echo canceller (AEC) [133]. ![]() is the far-end signal going to the loudspeaker, and

is the far-end signal going to the loudspeaker, and ![]() is the echo signal entering into the microphone that is produced by an undesirable acoustic coupling between the loudspeaker and the microphone.

is the echo signal entering into the microphone that is produced by an undesirable acoustic coupling between the loudspeaker and the microphone. ![]() is the near-end signal which is usually independent of the far-end signal and the echo signal.

is the near-end signal which is usually independent of the far-end signal and the echo signal. ![]() is the signal received by the microphone (

is the signal received by the microphone (![]() ). The aim of the echo cancelation is to remove the echo part in

). The aim of the echo cancelation is to remove the echo part in ![]() by subtracting the output of an adaptive filter that is driven by the far-end signal. As shown in Figure 6.4, the filter output

by subtracting the output of an adaptive filter that is driven by the far-end signal. As shown in Figure 6.4, the filter output ![]() is the synthetic echo signal, and the error signal

is the synthetic echo signal, and the error signal ![]() is the echo-canceled signal (or the estimate of the near-end signal). The key technique in AEC is to build an accurate model for the echo channel (or accurately identifying the parameters of the synthetic filter).

is the echo-canceled signal (or the estimate of the near-end signal). The key technique in AEC is to build an accurate model for the echo channel (or accurately identifying the parameters of the synthetic filter).

One may use the previously discussed ICA algorithm to implement the adaptive echo cancelation [133]. Suppose the echo channel is a 100 tap FIR filter, and assume that the input (far-end) signal ![]() is uniformly distributed over the interval [–4, 4], and the noise (near-end) signal

is uniformly distributed over the interval [–4, 4], and the noise (near-end) signal ![]() is Cauchy distributed, i.e.,

is Cauchy distributed, i.e., ![]() . The performance of the algorithms is measured by the echo return loss enhancement (ERLE) in dB:

. The performance of the algorithms is measured by the echo return loss enhancement (ERLE) in dB:

![]() (6.30)

(6.30)

Simulation results are shown in Figures 6.5 and 6.6. In Figure 6.5, the performances of the ICA algorithm, the normalized least mean square (NLMS), and the recursive least squares (RLS) are compared, while in Figure 6.6, the performances of the ICA algorithm and the algorithm (6.29) with ![]() are compared. During the simulation, the

are compared. During the simulation, the ![]() function in the ICA algorithm is chosen as

function in the ICA algorithm is chosen as

![]() (6.31)

(6.31)

Figure 6.5 Plots of the performance of three algorithms (ICA, NLMS, RLS) in Cauchy noise environment. Source: Adopted from [133].

Figure 6.6 Plots of the performance of the ICA algorithm and the algorithm (6.29) with ![]() (

(![]() ). Source: Adopted from [133].

). Source: Adopted from [133].

It can be clearly seen that the ICA-based algorithm shows excellent performance in echo cancelation.

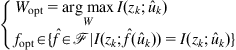

6.2 System Identification Under the MaxMI Criterion

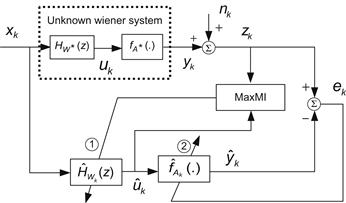

Consider the system identification scheme shown in Figure 6.7, in which ![]() is the common input to the unknown system and the model,

is the common input to the unknown system and the model, ![]() is the intrinsic (noiseless) output of the unknown system,

is the intrinsic (noiseless) output of the unknown system, ![]() is the additive noise,

is the additive noise, ![]() is the noisy output measurement, and

is the noisy output measurement, and ![]() stands for the output of the model. Under the MaxMI criterion, the identification procedure is to determine a model

stands for the output of the model. Under the MaxMI criterion, the identification procedure is to determine a model ![]() such that the mutual information between the noisy system output

such that the mutual information between the noisy system output ![]() and the model output

and the model output ![]() is maximized. Thus the optimal model

is maximized. Thus the optimal model ![]() is given by

is given by

(6.32)

(6.32)

where ![]() denotes the model set (collection of all candidate models),

denotes the model set (collection of all candidate models), ![]() ,

, ![]() , and

, and ![]() denote, respectively, the PDFs of

denote, respectively, the PDFs of ![]() ,

, ![]() , and

, and ![]() .

.

The MaxMI criterion provides a fresh insight into system identification. Roughly speaking, the noisy measurement ![]() represents the output of an information source and is transmitted over an information channel, i.e., the identifier (including the model set and search algorithm), and the model output

represents the output of an information source and is transmitted over an information channel, i.e., the identifier (including the model set and search algorithm), and the model output ![]() represents the channel output. Then the identification problem can be regarded as the information transmitting problem, and the goal of identification is to maximize the channel capacity (measured by

represents the channel output. Then the identification problem can be regarded as the information transmitting problem, and the goal of identification is to maximize the channel capacity (measured by ![]() ) over all possible identifiers.

) over all possible identifiers.

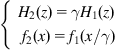

6.2.1 Properties of the MaxMI Criterion

In the following, we present some important properties of the MaxMI criterion [135,136].

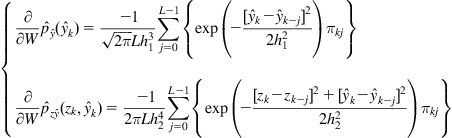

6.2.2 Stochastic Mutual Information Gradient Identification Algorithm

The stochastic gradient identification algorithm under the MaxMI criterion can be expressed as

![]() (6.55)

(6.55)

where ![]() denotes the instantaneous estimate of the gradient of mutual information

denotes the instantaneous estimate of the gradient of mutual information ![]() evaluated at the current value of the weight vector and

evaluated at the current value of the weight vector and ![]() is the step-size. The key problem of the update equation (6.55) is how to calculate the instantaneous gradient

is the step-size. The key problem of the update equation (6.55) is how to calculate the instantaneous gradient ![]() .

.

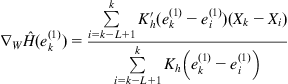

Let us start with the calculation of the gradient (not the instantaneous gradient) of ![]() :

:

(6.56)

(6.56)

where ![]() ,

, ![]() , and

, and ![]() denote the related PDFs at the instant

denote the related PDFs at the instant ![]() . Then the instantaneous value of

. Then the instantaneous value of ![]() can be obtained by dropping the expectation operator and plugging in the estimates of the PDFs, i.e.,

can be obtained by dropping the expectation operator and plugging in the estimates of the PDFs, i.e.,

(6.57)

(6.57)

where ![]() and

and ![]() are, respectively, the estimates of

are, respectively, the estimates of ![]() and

and ![]() . To estimate the density functions, one usually adopts the kernel density estimation (KDE) method and uses the following Gaussian functions as the kernels [135]

. To estimate the density functions, one usually adopts the kernel density estimation (KDE) method and uses the following Gaussian functions as the kernels [135]

(6.58)

(6.58)

where ![]() and

and ![]() denote the kernel widths.

denote the kernel widths.

Based on the above Gaussian kernels, the estimates of the PDFs and their gradients can be calculated as follows:

(6.59)

(6.59)

(6.60)

(6.60)

where ![]() is the sliding data length and

is the sliding data length and ![]() . For FIR filter, we have

. For FIR filter, we have

![]() (6.61)

(6.61)

Combining (6.55), (6.57), (6.59), and (6.60), we obtain a stochastic gradient identification algorithm under the MaxMI criterion, which is referred to as the stochastic mutual information gradient (SMIG) algorithm [135].

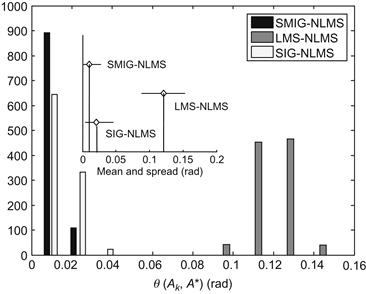

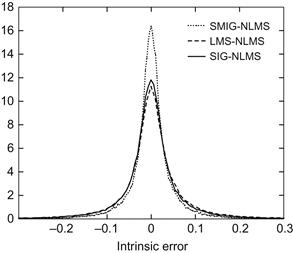

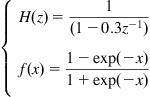

The performances of the SMIG algorithm compared with the least mean square (LMS) algorithm are demonstrated in the following by Monte Carlo simulations. Consider the FIR system identification [135]:

(6.62)

(6.62)

where ![]() and

and ![]() are, respectively, the transfer functions of the unknown system and the model. Suppose the input signal

are, respectively, the transfer functions of the unknown system and the model. Suppose the input signal ![]() and the additive noise

and the additive noise ![]() are both unit-power white Gaussian processes. To uniquely determine an optimal solution under the MaxMI criterion, it is assumed that the first component of the unknown weight vector is a priori known (which is assumed to be 0.8). Thus the goal is to search the optimal solution of the other five weights. The initial weights (except

are both unit-power white Gaussian processes. To uniquely determine an optimal solution under the MaxMI criterion, it is assumed that the first component of the unknown weight vector is a priori known (which is assumed to be 0.8). Thus the goal is to search the optimal solution of the other five weights. The initial weights (except ![]() ) for the adaptive FIR filter are zero-mean Gaussian distributed with variance 0.01. Further, the following distortion functions are considered [135]:

) for the adaptive FIR filter are zero-mean Gaussian distributed with variance 0.01. Further, the following distortion functions are considered [135]:

Figure 6.8 plots the distortion functions of the saturation and dead zone. Figure 6.9 shows the desired response signal with data loss (the probability of data loss is 0.3). In the simulation below, the Gaussian kernels are used and the kernel sizes are kept fixed at ![]() .

.

Figure 6.8 Distortion functions of saturation and dead zone. Source: Adopted from [135].

Figure 6.9 Desired response signal with data loss. Source: Adopted from [135].

Figure 6.10 illustrates the average convergence curves over 50 Monte Carlo simulation runs. One can see that, without measurement distortions, the conventional LMS algorithm has a better performance. However, in the case of measurement distortions, it is evident the deterioration of the LMS algorithm whereas the SMIG algorithm is little affected and achieves a much better performance. Simulation results confirm that the MaxMI criterion is more robust to the measurement distortion than traditional MSE criterion.

Figure 6.10 Average convergence curves of SMIG and LMS algorithms: (A) undistorted, (B) saturation, (C) dead zone, and (D) data loss. Source: Adopted from [135].

6.2.3 Double-Criterion Identification Method

The system identification scheme of Figure 6.7 does not, in general, satisfy the condition of parameter identifiability (i.e., the uniqueness of the optimal solution). In order to uniquely determine an optimal solution, some a priori information about the parameters is required. However, such a priori information is not available for many practical applications. To address this problem, we introduce in the following the double-criterion identification method [136].

Consider the Wiener system shown in Figure 6.11, where the system has the cascade structure and consists of a discrete-time linear filter ![]() followed by a zero-memory nonlinearity

followed by a zero-memory nonlinearity ![]() . Wiener systems are typical nonlinear systems and are widely used for nonlinear modeling [238]. The double-criterion method mainly aims at the Wiener system identification, but it also applies to many other systems. In fact, any system can be regarded as a cascade system consisting of itself followed by

. Wiener systems are typical nonlinear systems and are widely used for nonlinear modeling [238]. The double-criterion method mainly aims at the Wiener system identification, but it also applies to many other systems. In fact, any system can be regarded as a cascade system consisting of itself followed by ![]() .

.

First, we define the equivalence between two Wiener systems.

Appendix I MinMI Rate Criterion

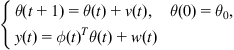

The authors in [124] propose the MinMI rate criterion. Consider the linear Gaussian system

(I.1)

(I.1)

where ![]() ,

, ![]() ,

, ![]() . One can adopt the following linear recursive algorithm to estimate the parameters

. One can adopt the following linear recursive algorithm to estimate the parameters

![]() (I.2)

(I.2)

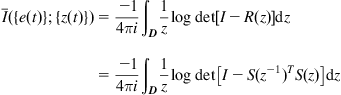

The MinMI rate criterion is to search an optimal gain matrix ![]() such that the mutual information rate

such that the mutual information rate ![]() between the error signal

between the error signal ![]() and a unity-power white Gaussian noise

and a unity-power white Gaussian noise ![]() is minimized, where

is minimized, where ![]() ,

, ![]() is a certain Gaussian process independent of

is a certain Gaussian process independent of ![]() . Clearly, the MinMI rate criterion requires that the asymptotic power spectral

. Clearly, the MinMI rate criterion requires that the asymptotic power spectral ![]() of the error process

of the error process ![]() satisfies

satisfies ![]() (otherwise

(otherwise ![]() does not exist). It can be calculated that

does not exist). It can be calculated that

(I.3)

(I.3)

where ![]() is a spectral factor of

is a spectral factor of ![]() . Hence, under the MinMI rate criterion the optimal gain matrix

. Hence, under the MinMI rate criterion the optimal gain matrix ![]() will be

will be

![]() (I.4)

(I.4)

1The minimum mutual information rate criterion was also proposed in [124], which minimizes the mutual information rate between the error signal and a certain white Gaussian process (see Appendix I).

2The mutual information minimization is a basic optimality criterion in ICA. Other ICA criteria, such as the negentropy maximization, Infomax, likelihood maximization, and the higher order statistics, in general conform with the mutual information minimization.

3One can also apply the kernel density estimation method to estimate ![]() , and then use the estimated PDF to compute the

, and then use the estimated PDF to compute the ![]() function.

function.

4It is worth noting that the identifiability problem under the MaxMI criterion has been studied in [134], wherein the “identifiability” does not means the uniqueness of the solution, but just means that the mutual information between the system output and the model output is nonzero.

5Data loss means that there exists accidental loss of the measurement data due to certain failures in the sensors or communication channels.

6For any random variables ![]() and

and ![]() , the mutual information

, the mutual information ![]() satisfies

satisfies ![]() ,

, ![]() ,

, ![]() .

.

7In practice, the IEP is evaluated using the sample mean instead of the expectation value.