System Identification Under Minimum Error Entropy Criteria

In previous chapter, we give an overview of information theoretic parameter estimation. These estimation methods are, however, devoted to cases where a large amount of statistical information on the unknown parameter is assumed to be available. For example, the minimum divergence estimation needs to know the likelihood function of the parameter. Also, in Bayes estimation with minimum error entropy (MEE) criterion, the joint distribution of unknown parameter and observation is assumed to be known. In this and later chapters, we will further investigate information theoretic system identification. Our focus is mainly on system parameter estimation (identification) where no statistical information on parameters exists (i.e., only data samples are available). To develop the identification algorithms under information theoretic criteria, one should evaluate the related information measures. This requires the knowledge of the data distributions, which are, in general, unknown to us. To address this issue, we can use the estimated (empirical) information measures as the identification criteria.

4.1 Brief Sketch of System Parameter Identification

System identification involves fitting the experimental input–output data (training data) into empirical model. In general, system identification includes the following key steps:

• Experiment design: To obtain good experimental data. Usually, the input signals should be designed such that it provides enough process excitation.

• Selection of model structure: To choose a suitable model structure based on the training data or prior knowledge.

• Selection of the criterion: To choose a suitable criterion (cost) function that reflects how well the model fits the experimental data.

• Parameter identification: To obtain the model parameters1 by optimizing (minimizing or maximizing) the above criterion function.

• Model validation: To test the model so as to reveal any inadequacies.

In this book, we focus mainly on the parameter identification part. Figure 4.1 shows a general scheme of discrete-time system identification, where ![]() and

and ![]() denote the system input and output (clean output) at time

denote the system input and output (clean output) at time ![]() ,

, ![]() is an additive noise that accounts for the system uncertainty or measurement error, and

is an additive noise that accounts for the system uncertainty or measurement error, and ![]() is the measured output. Further,

is the measured output. Further, ![]() is the model output and

is the model output and ![]() denotes the identification error, which is defined as the difference between the measured output and the model output, i.e.,

denotes the identification error, which is defined as the difference between the measured output and the model output, i.e., ![]() . The goal of parameter identification is then to search the model parameter vector (or weight vector) so as to minimize (or maximize) a certain criterion function (usually the model structure is predefined).

. The goal of parameter identification is then to search the model parameter vector (or weight vector) so as to minimize (or maximize) a certain criterion function (usually the model structure is predefined).

The implementation of system parameter identification involves model structure, criterion function, and parameter search (identification) algorithm. In the following, we will briefly discuss these three aspects.

4.1.1 Model Structure

Generally speaking, the model structure is a parameterized mapping from inputs2 to outputs. There are various mathematical descriptions of system model (linear or nonlinear, static or dynamic, deterministic or stochastic, etc.). Many of them can be expressed as the following linear-in-parameter model:

(4.1)

(4.1)

where ![]() denotes the regression input vector and

denotes the regression input vector and ![]() denotes the weight vector (i.e., parameter vector). The simplest linear-in-parameter model is the adaptive linear neuron (ADALINE). Let the input be an

denotes the weight vector (i.e., parameter vector). The simplest linear-in-parameter model is the adaptive linear neuron (ADALINE). Let the input be an ![]() -dimensional vector

-dimensional vector ![]() . The output of ADALINE model will be

. The output of ADALINE model will be

![]() (4.2)

(4.2)

where ![]() is a bias (some constant). In this case, we have

is a bias (some constant). In this case, we have

(4.3)

(4.3)

If the bias ![]() is zero, and the input vector is

is zero, and the input vector is ![]() , which is formed by feeding the input signal to a tapped delay line, then the ADALINE becomes a finite impulse response (FIR) filter.

, which is formed by feeding the input signal to a tapped delay line, then the ADALINE becomes a finite impulse response (FIR) filter.

The ARX (autoregressive with external input) dynamic model is another important linear-in-parameter model:

![]() (4.4)

(4.4)

One can write Eq. (4.4) as ![]() if let

if let

(4.5)

(4.5)

The linear-in-parameter model also includes many nonlinear models as special cases. For example, the ![]() -order polynomial model can be expressed as

-order polynomial model can be expressed as

![]() (4.6)

(4.6)

where

(4.7)

(4.7)

Other examples include: the discrete-time Volterra series with finite memory and order, Hammerstein model, radial basis function (RBF) neural networks with fixed centers, and so on.

In most cases, the system model is a nonlinear-in-parameter model, whose output is not linearly related to the parameters. A typical example of nonlinear-in-parameter model is the multilayer perceptron (MLP) [53]. The MLP, with one hidden layer, can be generally expressed as follows:

![]() (4.8)

(4.8)

where ![]() is the

is the ![]() input vector,

input vector, ![]() is the

is the ![]() weight matrix connecting the input layer with the hidden layer,

weight matrix connecting the input layer with the hidden layer, ![]() is the activation function (usually a sigmoid function),

is the activation function (usually a sigmoid function), ![]() is the

is the ![]() bias vector for the hidden neurons,

bias vector for the hidden neurons, ![]() is the

is the ![]() weight vector connecting the hidden layer to the output neuron, and

weight vector connecting the hidden layer to the output neuron, and ![]() is the bias for the output neuron.

is the bias for the output neuron.

The model can also be created in kernel space. In kernel machine learning (e.g. support vector machine, SVM), one often uses a reproducing kernel Hilbert space (RKHS) ![]() associated with a Mercer kernel

associated with a Mercer kernel ![]() as the hypothesis space [161, 162]. According to Moore-Aronszajn theorem [174, 175], every Mercer kernel

as the hypothesis space [161, 162]. According to Moore-Aronszajn theorem [174, 175], every Mercer kernel ![]() induces a unique function space

induces a unique function space ![]() , namely the RKHS, whose reproducing kernel is

, namely the RKHS, whose reproducing kernel is ![]() , satisfying: 1)

, satisfying: 1) ![]() , the function

, the function ![]() , and 2)

, and 2) ![]() , and for every

, and for every ![]() ,

, ![]() , where

, where ![]() denotes the inner product in

denotes the inner product in ![]() . If Mercer kernel

. If Mercer kernel ![]() is strictly positive-definite, the induced RKHS

is strictly positive-definite, the induced RKHS ![]() will be universal (dense in the space of continuous functions over

will be universal (dense in the space of continuous functions over ![]() ). Assuming the input signal

). Assuming the input signal ![]() , the model in RKHS

, the model in RKHS ![]() can be expressed as

can be expressed as

![]() (4.9)

(4.9)

where ![]() is the unknown input–output mapping that needs to be estimated. This model is a nonparametric function over input space

is the unknown input–output mapping that needs to be estimated. This model is a nonparametric function over input space ![]() . However, one can regard it as a “parameterized” model, where the parameter space is the RKHS

. However, one can regard it as a “parameterized” model, where the parameter space is the RKHS ![]() .

.

The model (4.9) can alternatively be expressed in a feature space (a vector space in which the training data are embedded). According to Mercer’s theorem, any Mercer kernel ![]() induces a mapping

induces a mapping ![]() from the input space

from the input space ![]() to a feature space

to a feature space ![]() .3 In the feature space, the inner products can be calculated using the kernel evaluation:

.3 In the feature space, the inner products can be calculated using the kernel evaluation:

![]() (4.10)

(4.10)

The feature space ![]() is isometric-isomorphic to the RKHS

is isometric-isomorphic to the RKHS ![]() . This can be easily understood by identifying

. This can be easily understood by identifying ![]() and

and ![]() , where

, where ![]() denotes a vector in feature space

denotes a vector in feature space ![]() , satisfying

, satisfying ![]() ,

, ![]() . Therefore, in feature space the model (4.9) becomes

. Therefore, in feature space the model (4.9) becomes

![]() (4.11)

(4.11)

This is a linear model in feature space, with ![]() as the input, and

as the input, and ![]() as the weight vector. It is worth noting that the model (4.11) is actually a nonlinear model in input space, since the mapping

as the weight vector. It is worth noting that the model (4.11) is actually a nonlinear model in input space, since the mapping ![]() is in general a nonlinear mapping. The key principle behind kernel method is that, as long as a linear model (or algorithm) in high-dimensional feature space can be formulated in terms of inner products, a nonlinear model (or algorithm) can be obtained by simply replacing the inner product with a Mercer kernel. The model (4.11) can also be regarded as a “parameterized” model in feature space, where the parameter is the weight vector

is in general a nonlinear mapping. The key principle behind kernel method is that, as long as a linear model (or algorithm) in high-dimensional feature space can be formulated in terms of inner products, a nonlinear model (or algorithm) can be obtained by simply replacing the inner product with a Mercer kernel. The model (4.11) can also be regarded as a “parameterized” model in feature space, where the parameter is the weight vector ![]() .

.

4.1.2 Criterion Function

The criterion (risk or cost) function in system identification reflects how well the model fits the experimental data. In most cases, the criterion is a functional of the identification error ![]() , with the form

, with the form

![]() (4.12)

(4.12)

where ![]() is a loss function, which usually satisfies

is a loss function, which usually satisfies

Typical examples of criterion (4.12) include the mean square error (MSE), mean absolute deviation (MAD), mean ![]() -power error (MPE), and so on. In practice, the error distribution is in general unknown, and hence, we have to estimate the expectation value in Eq. (4.12) using sample data. The estimated criterion function is called the empirical criterion function (empirical risk). Given a loss function

-power error (MPE), and so on. In practice, the error distribution is in general unknown, and hence, we have to estimate the expectation value in Eq. (4.12) using sample data. The estimated criterion function is called the empirical criterion function (empirical risk). Given a loss function ![]() , the empirical criterion function

, the empirical criterion function ![]() can be computed as follows:

can be computed as follows:

Note that for MSE criterion (![]() ), the average criterion function is the well-known least-squares criterion function (sum of the squared errors).

), the average criterion function is the well-known least-squares criterion function (sum of the squared errors).

Besides the criterion functions of form (4.12), there are many other criterion functions for system identification. In this chapter, we will discuss system identification under MEE criterion.

4.1.3 Identification Algorithm

Given a parameterized model, the identification error ![]() can be expressed as a function of the parameters. For example, for the linear-in-parameter model (4.1), we have

can be expressed as a function of the parameters. For example, for the linear-in-parameter model (4.1), we have

![]() (4.13)

(4.13)

which is a linear function of ![]() (assuming

(assuming ![]() and

and ![]() are known). Similarly, the criterion function

are known). Similarly, the criterion function ![]() (or the empirical criterion function

(or the empirical criterion function ![]() ) can also be expressed as a function of the parameters, denoted by

) can also be expressed as a function of the parameters, denoted by ![]() (or

(or ![]() ). Therefore, the identification criterion represents a hyper-surface in the parameter space, which is called the performance surface.

). Therefore, the identification criterion represents a hyper-surface in the parameter space, which is called the performance surface.

The parameter ![]() can be identified through searching the optima (minima or maxima) of the performance surface. There are two major ways to do this. One is the batch mode and the other is the online (sequential) mode.

can be identified through searching the optima (minima or maxima) of the performance surface. There are two major ways to do this. One is the batch mode and the other is the online (sequential) mode.

4.1.3.1 Batch Identification

In batch mode, the identification of parameters is done only after collecting a number of samples or even possibly the whole training data. When these data are available, one can calculate the empirical criterion function ![]() based on the model structure. And then, the parameter

based on the model structure. And then, the parameter ![]() can be estimated by solving the following optimization problem:

can be estimated by solving the following optimization problem:

![]() (4.14)

(4.14)

where ![]() denotes the set of all possible values of

denotes the set of all possible values of ![]() . Sometimes, one can achieve an analytical solution by setting the gradient4 of

. Sometimes, one can achieve an analytical solution by setting the gradient4 of ![]() to zero, i.e.,

to zero, i.e.,

![]() (4.15)

(4.15)

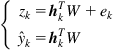

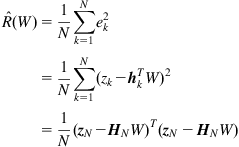

For example, with the linear-in-parameter model (4.1) and under the least-squares criterion (empirical MSE criterion), we have

(4.16)

(4.16)

where ![]() and

and ![]() . And hence,

. And hence,

(4.17)

(4.17)

If ![]() is a nonsingular matrix, we have

is a nonsingular matrix, we have

![]() (4.18)

(4.18)

In many situations, however, there is no analytical solution for ![]() , and we have to rely on nonlinear optimization techniques, such as gradient descent methods, simulated annealing methods, and genetic algorithms (GAs).

, and we have to rely on nonlinear optimization techniques, such as gradient descent methods, simulated annealing methods, and genetic algorithms (GAs).

The batch mode approach has some shortcomings: (i) it is not suitable for online applications, since the identification is performed only after a number of data are available and (ii) the memory and computational requirements will increase dramatically with the increasing amount of data.

4.1.3.2 Online Identification

The online mode identification is also referred to as the sequential or incremental identification, or adaptive filtering. Compared with the batch mode identification, the sequential identification has some desirable features: (i) the training data (examples or observations) are sequentially (one by one) presented to the identification procedure; (ii) at any time, only few (usually one) training data are used; (iii) a training observation can be discarded as long as the identification procedure for that particular observation is completed; and (iv) it is not necessary to know how many total training observations will be presented. In this book, our focus is primarily on the sequential identification.

The sequential identification is usually performed by means of iterative schemes of the type

![]() (4.19)

(4.19)

where ![]() denotes the estimated parameter at

denotes the estimated parameter at ![]() instant (iteration) and

instant (iteration) and ![]() denotes the adjustment (correction) term. In the following, we present several simple online identification (adaptive filtering) algorithms.

denotes the adjustment (correction) term. In the following, we present several simple online identification (adaptive filtering) algorithms.

4.1.3.3 Recursive Least Squares Algorithm

Given a linear-in-parameter model, the Recursive Least Squares (RLS) algorithm recursively finds the least-squares solution of Eq. (4.18). With a sequence of observations ![]() up to and including time

up to and including time ![]() , the least-squares solution is

, the least-squares solution is

![]() (4.20)

(4.20)

When a new observation ![]() becomes available, the parameter estimate

becomes available, the parameter estimate ![]() is

is

![]() (4.21)

(4.21)

One can derive the following relation between ![]() and

and ![]() :

:

![]() (4.22)

(4.22)

where ![]() is the prediction error,

is the prediction error,

![]() (4.23)

(4.23)

and ![]() is the gain vector, computed as

is the gain vector, computed as

![]() (4.24)

(4.24)

where the matrix ![]() can be calculated recursively as follows:

can be calculated recursively as follows:

![]() (4.25)

(4.25)

Equations (4.22)–(4.25) constitute the RLS algorithm.

Compared to most of its competitors, the RLS exhibits very fast convergence. However, this benefit is achieved at the cost of high computational complexity. If the dimension of ![]() is

is ![]() , then the time and memory complexities of RLS are both

, then the time and memory complexities of RLS are both ![]() .

.

4.1.3.4 Least Mean Square Algorithm

The Least Mean Square (LMS) algorithm is much simpler than RLS, which is a stochastic gradient descent algorithm under the instantaneous MSE cost ![]() . The weight update equation for LMS can be simply derived as follows:

. The weight update equation for LMS can be simply derived as follows:

(4.26)

(4.26)

where ![]() is the step-size (adaptation gain, learning rate, etc.),5 and the term

is the step-size (adaptation gain, learning rate, etc.),5 and the term ![]() is the instantaneous gradient of the model output with respect to the weight vector, whose form depends on the model structure. For a FIR filter (or ADALINE), the instantaneous gradient will simply be the input vector

is the instantaneous gradient of the model output with respect to the weight vector, whose form depends on the model structure. For a FIR filter (or ADALINE), the instantaneous gradient will simply be the input vector ![]() . In this case, the LMS algorithm becomes6

. In this case, the LMS algorithm becomes6

![]() (4.27)

(4.27)

The computational complexity of the LMS (4.27) is just ![]() , where

, where ![]() is the input dimension.

is the input dimension.

If the model is an MLP network, the term ![]() can be computed by back propagation (BP), which is a common method of training artificial neural networks so as to minimize the objective function [53].

can be computed by back propagation (BP), which is a common method of training artificial neural networks so as to minimize the objective function [53].

There are many other stochastic gradient descent algorithms that are similar to the LMS. Typical examples include the least absolute deviation (LAD) algorithm [31] and the least mean fourth (LMF) algorithm [26]. The LMS, LAD, and LMF algorithms are all special cases of the least mean ![]() -power (LMP) algorithm [30]. The LMP algorithm aims to minimize the

-power (LMP) algorithm [30]. The LMP algorithm aims to minimize the ![]() -power of the error, which can be derived as

-power of the error, which can be derived as

(4.28)

(4.28)

For the cases ![]() , the above algorithm corresponds to the LAD, LMS, and LMF algorithms, respectively.

, the above algorithm corresponds to the LAD, LMS, and LMF algorithms, respectively.

4.1.3.5 Kernel Adaptive Filtering Algorithms

The kernel adaptive filtering (KAF) algorithms are a family of nonlinear adaptive filtering algorithms developed in kernel (or feature) space [12], by using the linear structure and inner product of this space to implement the well-established linear adaptive filtering algorithms (e.g., LMS, RLS, etc.) and to obtain nonlinear filters in the original input space. They have several desirable features: (i) if choosing a universal kernel (e.g., Gaussian kernel), they are universal approximators; (ii) under MSE criterion, the performance surface is quadratic in feature space so gradient descent learning does not suffer from local minima; and (iii) if pruning the redundant features, they have moderate complexity in terms of computation and memory. Typical KAF algorithms include the kernel recursive least squares (KRLS) [176], kernel least mean square (KLMS) [177], kernel affine projection algorithms (KAPA) [178], and so on. When the kernel is radial (such as the Gaussian kernel), they naturally build a growing RBF network, where the weights are directly related to the errors at each sample. In the following, we only discuss the KLMS algorithm. Interesting readers can refer to Ref. [12] for further information about KAF algorithms.

Let ![]() be an

be an ![]() -dimensional input vector. We can transform

-dimensional input vector. We can transform ![]() into a high-dimensional feature space

into a high-dimensional feature space ![]() (induced by kernel

(induced by kernel ![]() ) through a nonlinear mapping

) through a nonlinear mapping ![]() , i.e.,

, i.e., ![]() . Suppose the model in feature space is given by Eq. (4.11). Then using the LMS algorithm on the transformed observation sequence

. Suppose the model in feature space is given by Eq. (4.11). Then using the LMS algorithm on the transformed observation sequence ![]() yields [177]

yields [177]

(4.29)

(4.29)

where ![]() denotes the estimated weight vector (at iteration

denotes the estimated weight vector (at iteration ![]() ) in feature space. The KLMS (4.29) is very similar to the LMS algorithm, except for the dimensionality (or richness) of the projection space. The learned mapping (model) at iteration

) in feature space. The KLMS (4.29) is very similar to the LMS algorithm, except for the dimensionality (or richness) of the projection space. The learned mapping (model) at iteration ![]() is the composition of

is the composition of ![]() and

and ![]() , i.e.,

, i.e., ![]() . If identifying

. If identifying ![]() , we obtain the sequential learning rule in the original input space:

, we obtain the sequential learning rule in the original input space:

(4.30)

(4.30)

At iteration ![]() , given an input

, given an input ![]() , the output of the filter is

, the output of the filter is

![]() (4.31)

(4.31)

From Eq. (4.31) we see that, if choosing a radial kernel, the KLMS produces a growing RBF network by allocating a new kernel unit for every new example with input ![]() as the center and

as the center and ![]() as the coefficient. The algorithm of KLMS is summarized in Table 4.1, and the corresponding network topology is illustrated in Figure 4.2.

as the coefficient. The algorithm of KLMS is summarized in Table 4.1, and the corresponding network topology is illustrated in Figure 4.2.

Selecting a proper Mercer kernel is crucial for all kernel methods. In KLMS, the kernel is usually chosen to be a normalized Gaussian kernel:

![]() (4.32)

(4.32)

where ![]() is the kernel size (kernel width) and

is the kernel size (kernel width) and ![]() is called the kernel parameter. The kernel size in Gaussian kernel is an important parameter that controls the degree of smoothing and consequently has significant influence on the learning performance. Usually, the kernel size can be set manually or estimated in advance by Silverman’s rule [97]. The role of the step-size in KLMS remains in principle the same as the step-size in traditional LMS algorithm. Specifically, it controls the compromise between convergence speed and misadjustment. It has also been shown in Ref. [177] that the step-size in KLMS plays a similar role as the regularization parameter.

is called the kernel parameter. The kernel size in Gaussian kernel is an important parameter that controls the degree of smoothing and consequently has significant influence on the learning performance. Usually, the kernel size can be set manually or estimated in advance by Silverman’s rule [97]. The role of the step-size in KLMS remains in principle the same as the step-size in traditional LMS algorithm. Specifically, it controls the compromise between convergence speed and misadjustment. It has also been shown in Ref. [177] that the step-size in KLMS plays a similar role as the regularization parameter.

The main bottleneck of KLMS (as well as other KAF algorithms) is the linear growing network with each new sample, which poses both computational and memory issues especially for continuous adaptation scenarios. In order to curb the network growth and to obtain a compact representation, a variety of sparsification techniques can be applied, where only the important input data are accepted as the centers. Typical sparsification criteria include the novelty criterion [179], approximate linear dependency (ALD) criterion [176], coherence criterion [180], surprise criterion [181], and so on. The idea of quantization can also be used to yield a compact network with desirable accuracy [182].

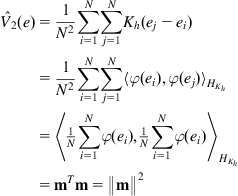

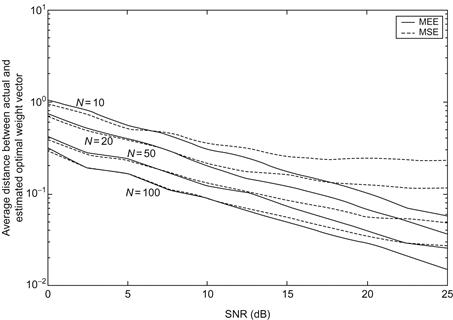

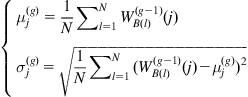

4.2 MEE Identification Criterion

Most of the existing approaches to parameter identification utilized the MSE (or equivalently, Gaussian likelihood) as the identification criterion function. The MSE is mathematically tractable and under Gaussian assumption is an optimal criterion for linear system. However, it is well known that MSE may be a poor descriptor of optimality for nonlinear and non-Gaussian (e.g., multimodal, heavy-tail, or finite range distributions) situations, since it constrains only the second-order statistics. To address this issue, one can select some criterion beyond second-order statistics that does not suffer from the limitation of Gaussian assumption and can improve performance in many realistic scenarios. Information theoretic quantities (entropy, divergence, mutual information, etc.) as identification criteria attract ever-increasing attention to this end, since they can capture higher order statistics and information content of signals rather than simply their energy [64]. In the following, we discuss the MEE criterion for system identification.

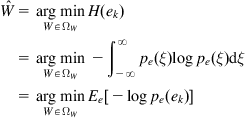

Under MEE criterion, the parameter vector (weight vector) ![]() can be identified by solving the following optimization problem:

can be identified by solving the following optimization problem:

(4.33)

(4.33)

where ![]() denotes the probability density function (PDF) of error

denotes the probability density function (PDF) of error ![]() . If using the order-

. If using the order-![]() Renyi entropy (

Renyi entropy (![]() ,

, ![]() ) of the error as the criterion function, the estimated parameter will be

) of the error as the criterion function, the estimated parameter will be

(4.34)

(4.34)

where ![]() follows from the monotonicity of logarithm function,

follows from the monotonicity of logarithm function, ![]() is the order-

is the order-![]() information potential (IP) of the error

information potential (IP) of the error ![]() . If

. If ![]() , minimizing the order-

, minimizing the order-![]() Renyi entropy is equivalent to minimizing the order-

Renyi entropy is equivalent to minimizing the order-![]() IP; while if

IP; while if ![]() , minimizing the order-

, minimizing the order-![]() Renyi entropy is equivalent to maximizing the order-

Renyi entropy is equivalent to maximizing the order-![]() IP. In practical application, we often use the order-

IP. In practical application, we often use the order-![]() IP instead of the order-

IP instead of the order-![]() Renyi entropy as the criterion function for identification.

Renyi entropy as the criterion function for identification.

Further, if using the ![]() -entropy of the error as the criterion function, we have

-entropy of the error as the criterion function, we have

(4.35)

(4.35)

where ![]() . Note that the

. Note that the ![]() -entropy criterion includes the Shannon entropy (

-entropy criterion includes the Shannon entropy (![]() ) and order-

) and order-![]() information potential (

information potential (![]() ) as special cases.

) as special cases.

The error entropy is a functional of error distribution. In practice, the error distribution is usually unknown to us, and so is the error entropy. And hence, we have to estimate the error entropy from error samples, and use the estimated error entropy (called the empirical error entropy) as a criterion to identify the system parameter. In the following, we present several common approaches to estimating the entropy from sample data.

4.2.1 Common Approaches to Entropy Estimation

A straight way to estimate the entropy is to estimate the underlying distribution based on available samples, and plug the estimated distributions into the entropy expression to obtain the entropy estimate (the so-called “plug-in approach”) [183]. Several plug-in estimates of the Shannon entropy (extension to other entropy definitions is straightforward) are presented as follows.

4.2.1.1 Integral Estimate

Denote ![]() the estimated PDF based on sample

the estimated PDF based on sample ![]() . Then the integral estimate of entropy is of the form

. Then the integral estimate of entropy is of the form

![]() (4.36)

(4.36)

where ![]() is a set typically used to exclude the small or tail values of

is a set typically used to exclude the small or tail values of ![]() . The evaluation of Eq. (4.36) requires numerical integration and is not an easy task in general.

. The evaluation of Eq. (4.36) requires numerical integration and is not an easy task in general.

4.2.1.2 Resubstitution Estimate

The resubstitution estimate substitutes the estimated PDF into the sample mean approximation of the entropy measure (approximating the expectation value by its sample mean), which is of the form

![]() (4.37)

(4.37)

This estimation method is considerably simpler than the integral estimate, since it involves no numerical integration.

4.2.1.3 Splitting Data Estimate

Here, we decompose the sample ![]() into two sub samples:

into two sub samples: ![]() ,

, ![]() ,

, ![]() . Based on subsample

. Based on subsample ![]() , we obtain a density estimate

, we obtain a density estimate ![]() , and then, using this density estimate and the second subsample

, and then, using this density estimate and the second subsample ![]() , we estimate the entropy by

, we estimate the entropy by

![]() (4.38)

(4.38)

where ![]() is the indicator function and the set

is the indicator function and the set ![]() (

(![]() ). The splitting data estimate is different from the resubstitution estimate in that it uses different samples to estimate the density and to calculate the sample mean.

). The splitting data estimate is different from the resubstitution estimate in that it uses different samples to estimate the density and to calculate the sample mean.

4.2.1.4 Cross-validation Estimate

If ![]() denotes a density estimate based on sample

denotes a density estimate based on sample ![]() (i.e., leaving

(i.e., leaving ![]() out), then the cross-validation estimate of entropy is

out), then the cross-validation estimate of entropy is

![]() (4.39)

(4.39)

A key step in plug-in estimation is to estimate the PDF from sample data. In the literature, there are mainly two approaches for estimating the PDF of a random variable based on its sample data: parametric and nonparametric. Accordingly, there are also parametric and nonparametric entropy estimations. The parametric density estimation assumes a parametric model of the density and estimates the involved parameters using classical estimation methods like the maximum likelihood (ML) estimation. This approach needs to select a suitable parametric model of the density, which depends upon some prior knowledge. The nonparametric density estimation, however, does not need to select a parametric model, and can estimate the PDF of any distribution.

The histogram density estimation (HDE) and kernel density estimation (KDE) are two popular nonparametric density estimation methods. Here we only discuss the KDE method (also referred to as Parzen window method), which has been widely used in nonparametric regression and pattern recognition. Given a set of independent and identically distributed (i.i.d.) samples7 ![]() drawn from

drawn from ![]() , the KDE for

, the KDE for ![]() is given by [97]

is given by [97]

![]() (4.40)

(4.40)

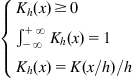

where ![]() denotes a kernel function8 with width

denotes a kernel function8 with width ![]() , satisfying the following conditions:

, satisfying the following conditions:

(4.41)

(4.41)

where ![]() is the kernel function with width 1. To make the estimated PDF smooth, the kernel function is usually selected to be a continuous and differentiable (and preferably symmetric and unimodal) function. The most widely used kernel function in KDE is the Gaussian function:

is the kernel function with width 1. To make the estimated PDF smooth, the kernel function is usually selected to be a continuous and differentiable (and preferably symmetric and unimodal) function. The most widely used kernel function in KDE is the Gaussian function:

![]() (4.42)

(4.42)

The kernel width of the Gaussian kernel can be optimized by the ML principle, or selected according to rules-of-thumb, such as Silverman’s rule [97].

With a fixed kernel width ![]() , we have

, we have

![]() (4.43)

(4.43)

where ![]() denotes the convolution operator. Using a suitable annealing rate for the kernel width, the KDE can be asymptotically unbiased and consistent. Specifically, if

denotes the convolution operator. Using a suitable annealing rate for the kernel width, the KDE can be asymptotically unbiased and consistent. Specifically, if ![]() and

and ![]() , then

, then ![]() in probability [98].

in probability [98].

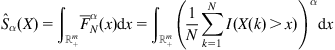

In addition to the plug-in methods described previously, there are many other methods for entropy estimation, such as the sample-spacing method and the nearest neighbor method. In the following, we derive the sample-spacing estimate. First, let us express the Shannon entropy as [184]

![]() (4.44)

(4.44)

where ![]() . Using the slope of the curve

. Using the slope of the curve ![]() to approximate the derivative, we have

to approximate the derivative, we have

![]() (4.45)

(4.45)

where ![]() is the order statistics of the sample

is the order statistics of the sample ![]() , and

, and ![]() is the

is the ![]() -order sample spacing (

-order sample spacing (![]() ). Hence, the sample-spacing estimate of the entropy is

). Hence, the sample-spacing estimate of the entropy is

![]() (4.46)

(4.46)

If adding a correction term to compensate the asymptotic bias, we get

![]() (4.47)

(4.47)

where ![]() is the Digamma function (

is the Digamma function (![]() is the Gamma function).

is the Gamma function).

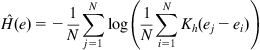

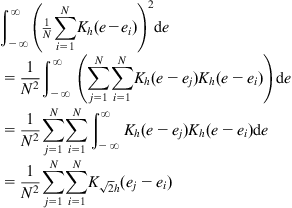

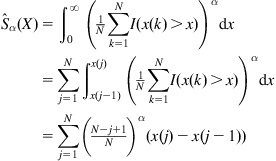

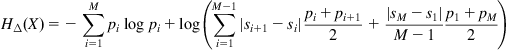

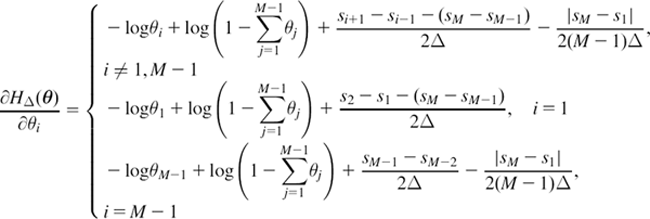

4.2.2 Empirical Error Entropies Based on KDE

To calculate the empirical error entropy, we usually adopt the resubstitution estimation method with error PDF estimated by KDE. This approach has some attractive features: (i) it is a nonparametric method, and hence requires no prior knowledge on the error distribution; (ii) it is computationally simple, since no numerical integration is needed; and (iii) if choosing a smooth and differentiable kernel function, the empirical error entropy (as a function of the error sample) is also smooth and differentiable (this is very important for the calculation of the gradient).

Suppose now a set of error samples ![]() are available. By KDE, the error density can be estimated as

are available. By KDE, the error density can be estimated as

![]() (4.48)

(4.48)

Then by resubstitution estimation method, we obtain the following empirical error entropies:

(4.49)

(4.49)

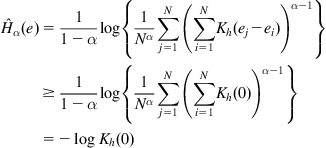

2. Empirical order-![]() Renyi entropy

Renyi entropy

(4.50)

(4.50)

where ![]() is the empirical order-

is the empirical order-![]() IP, i.e.,

IP, i.e.,

(4.51)

(4.51)

(4.52)

(4.52)

It is worth noting that for quadratic information potential (QIP) (![]() ), if choosing Gaussian kernel function, the resubstitution estimate will be identical to the integral estimate but with kernel width

), if choosing Gaussian kernel function, the resubstitution estimate will be identical to the integral estimate but with kernel width ![]() instead of

instead of ![]() . Specifically, if

. Specifically, if ![]() is given by Eq. (4.42), we can derive

is given by Eq. (4.42), we can derive

(4.53)

(4.53)

This result comes from the fact that the integral of the product of two Gaussian functions can be exactly evaluated as the value of the Gaussian function computed at the difference of the arguments and whose variance is the sum of the variances of the two original Gaussian functions. From Eq. (4.53), we also see that the QIP can be simply calculated by the double summation over error samples. Due to this fact, when using order-![]() IP, we usually set

IP, we usually set ![]() .

.

In the following, we present some important properties of the empirical error entropy, and our focus is mainly on the order-![]() Renyi entropy (or order-

Renyi entropy (or order-![]() IP) [64,67].

IP) [64,67].

where (a) is because that ![]() , the kernel function

, the kernel function ![]() satisfies

satisfies ![]() .

.

4.3 Identification Algorithms Under MEE Criterion

4.3.1 Nonparametric Information Gradient Algorithms

In general, information gradient (IG) algorithms refer to the gradient-based identification algorithms under MEE criterion (i.e., minimizing the empirical error entropy), including the batch information gradient (BIG) algorithm, sliding information gradient algorithm, forgetting recursive information gradient (FRIG) algorithm, and stochastic information gradient (SIG) algorithm. If the empirical error entropy is estimated by nonparametric approaches (like KDE), then they are called the nonparametric IG algorithms. In the following, we present several nonparametric IG algorithms that are based on ![]() -entropy and KDE.

-entropy and KDE.

4.3.1.1 BIG Algorithm

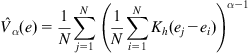

With the empirical ![]() -entropy as the criterion function, the BIG identification algorithm is derived as follows:

-entropy as the criterion function, the BIG identification algorithm is derived as follows:

(4.65)

(4.65)

where ![]() is the step-size,

is the step-size, ![]() is the number of training data,

is the number of training data, ![]() and

and ![]() denote, respectively, the first-order derivatives of functions

denote, respectively, the first-order derivatives of functions ![]() and

and ![]() . The gradient

. The gradient ![]() of the model output with respect to the parameter

of the model output with respect to the parameter ![]() depends on the specific model structure. For example, if the model is an ADALINE or FIR filter, we have

depends on the specific model structure. For example, if the model is an ADALINE or FIR filter, we have ![]() . The reason for the algorithm named as the BIG algorithm is because that the empirical error entropy is calculated based on all the training data.

. The reason for the algorithm named as the BIG algorithm is because that the empirical error entropy is calculated based on all the training data.

Given a specific ![]() function, we obtain a specific algorithm:

function, we obtain a specific algorithm:

In above algorithms, if the kernel function is Gaussian function, the derivative ![]() will be

will be

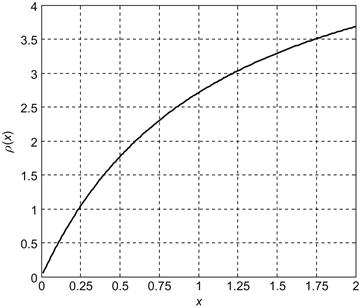

![]() (4.68)

(4.68)

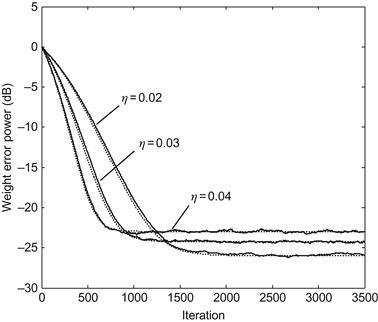

The step-size ![]() is a crucial parameter that controls the compromise between convergence speed and misadjustment, and has significant influence on the learning (identification) performance. In practical use, the selection of step-size should guarantees the stability and convergence rate of the algorithm. To further improve the performance, the step-size

is a crucial parameter that controls the compromise between convergence speed and misadjustment, and has significant influence on the learning (identification) performance. In practical use, the selection of step-size should guarantees the stability and convergence rate of the algorithm. To further improve the performance, the step-size ![]() can be designed as a variable step-size. In Ref. [105], a self-adjusting step-size (SAS) was proposed to improve the performance of QIP criterion, i.e.,

can be designed as a variable step-size. In Ref. [105], a self-adjusting step-size (SAS) was proposed to improve the performance of QIP criterion, i.e.,

![]() (4.69)

(4.69)

where ![]() and

and ![]() is a symmetric and unimodal kernel function (hence

is a symmetric and unimodal kernel function (hence ![]() ).

).

The kernel width ![]() is another important parameter that controls the smoothness of the performance surface. In general, the kernel width can be set manually or determined in advance by Silverman’s rule. To make the algorithm converge to the global solution, one can start the algorithm with a large kernel width and decrease this parameter slowly during the course of adaptation, just like in stochastic annealing. In Ref. [185], an adaptive kernel width was proposed to improve the performance.

is another important parameter that controls the smoothness of the performance surface. In general, the kernel width can be set manually or determined in advance by Silverman’s rule. To make the algorithm converge to the global solution, one can start the algorithm with a large kernel width and decrease this parameter slowly during the course of adaptation, just like in stochastic annealing. In Ref. [185], an adaptive kernel width was proposed to improve the performance.

The BIG algorithm needs to acquire in advance all the training data, and hence is not suitable for online identification. To address this issue, one can use the sliding information gradient algorithm.

4.3.1.2 Sliding Information Gradient Algorithm

The sliding information gradient algorithm utilizes a set of recent error samples to estimate the error entropy. Specifically, the error samples used to calculate the error entropy at time ![]() is as follows9 :

is as follows9 :

![]() (4.70)

(4.70)

where ![]() denotes the sliding data length (

denotes the sliding data length (![]() ). Then the error entropy at time

). Then the error entropy at time ![]() can be estimated as

can be estimated as

(4.71)

(4.71)

And hence, the sliding information gradient algorithm can be derived as

(4.72)

(4.72)

For the case ![]() (corresponding to QIP), the above algorithm becomes

(corresponding to QIP), the above algorithm becomes

![]() (4.73)

(4.73)

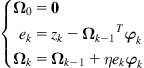

4.3.1.3 FRIG Algorithm

In the sliding information gradient algorithm, the error entropy can be estimated by a forgetting recursive method [64]. Assume at time ![]() the estimated error PDF is

the estimated error PDF is ![]() , then the error PDF at time

, then the error PDF at time ![]() can be estimated as

can be estimated as

![]() (4.74)

(4.74)

where ![]() is the forgetting factor. Therefore, we can calculate the empirical error entropy as follows:

is the forgetting factor. Therefore, we can calculate the empirical error entropy as follows:

(4.75)

(4.75)

If ![]() (

(![]() ), there exists a recursive form [186]:

), there exists a recursive form [186]:

![]() (4.76)

(4.76)

where ![]() is the QIP at time

is the QIP at time ![]() . Thus, we have the following algorithm:

. Thus, we have the following algorithm:

(4.77)

(4.77)

namely the FRIG algorithm. Compared with the sliding information gradient algorithm, the FRIG algorithm is computationally simpler and is more suitable for nonstationary system identification.

4.3.1.4 SIG Algorithm

In the empirical error entropy of (4.71), if dropping the outer averaging operator (![]() ), one may obtain the instantaneous error entropy at time

), one may obtain the instantaneous error entropy at time ![]() :

:

(4.78)

(4.78)

The instantaneous error entropy is similar to the instantaneous error cost ![]() , as both are obtained by removing the expectation operator (or averaging operator) from the original criterion function. The computational cost of the instantaneous error entropy (4.78) is

, as both are obtained by removing the expectation operator (or averaging operator) from the original criterion function. The computational cost of the instantaneous error entropy (4.78) is ![]() of that of the empirical error entropy of Eq. (4.71). The gradient identification algorithm based on the instantaneous error entropy is called the SIG algorithm, which can be derived as

of that of the empirical error entropy of Eq. (4.71). The gradient identification algorithm based on the instantaneous error entropy is called the SIG algorithm, which can be derived as

(4.79)

(4.79)

If ![]() , we obtain the SIG algorithm under Shannon entropy criterion:

, we obtain the SIG algorithm under Shannon entropy criterion:

(4.80)

(4.80)

If ![]() , we get the SIG algorithm under QIP criterion:

, we get the SIG algorithm under QIP criterion:

![]() (4.81)

(4.81)

The SIG algorithm (4.81) is actually the FRIG algorithm with ![]() .

.

4.3.2 Parametric IG Algorithms

In IG algorithms described above, the error distribution is estimated by nonparametric KDE approach. With this approach, one is often confronted with the problem of how to choose a suitable value of the kernel width. An inappropriate choice of width will significantly deteriorate the performance of the algorithm. Though the effects of the kernel width on the shape of the performance surface and the eigenvalues of the Hessian at and around the optimal solution have been carefully investigated [102], at present the choice of the kernel width is still a difficult task. Thus, a certain parameterized density estimation, which does not involve the choice of kernel width, sometimes might be more practical. Especially, if some prior knowledge about the data distribution is available, the parameterized density estimation may achieve a better accuracy than nonparametric alternatives.

Next, we discuss the parametric IG algorithms that adopt parametric approaches to estimate the error distribution. To simplify the discussion, we only present the parametric SIG algorithm.

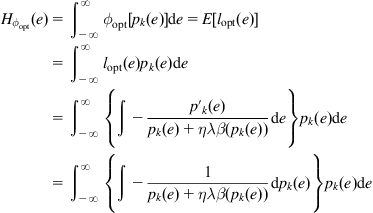

In general, the SIG algorithm can be expressed as

![]() (4.82)

(4.82)

where ![]() is the value of the error PDF at

is the value of the error PDF at ![]() estimated based on the error samples

estimated based on the error samples ![]() . By KDE approach, we have

. By KDE approach, we have

![]() (4.83)

(4.83)

Now we use a parametric approach to estimate ![]() . Let’s consider the exponential (maximum entropy) PDF form:

. Let’s consider the exponential (maximum entropy) PDF form:

(4.84)

(4.84)

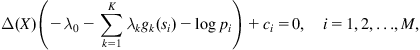

where the parameters ![]() (

(![]() ) can be estimated by some classical estimation methods like the ML estimation. After obtaining the estimated parameter values

) can be estimated by some classical estimation methods like the ML estimation. After obtaining the estimated parameter values ![]() , one can calculate

, one can calculate ![]() as

as

(4.85)

(4.85)

Substituting Eq. (4.85) into Eq. (4.82), we obtain the following parametric SIG algorithm:

(4.86)

(4.86)

If adopting Shannon entropy (![]() ), the algorithm becomes

), the algorithm becomes

(4.87)

(4.87)

The selection of the PDF form is very important. Some typical PDF forms are as follows [187–190]:

where ![]() ,

, ![]() is the standard deviation, and

is the standard deviation, and ![]() is the Gamma function:

is the Gamma function:

![]() (4.89)

(4.89)

In the following, we present the SIG algorithm based on GGD model.

The GGD model has three parameters: location (mean) parameter ![]() , shape parameter

, shape parameter ![]() , and dispersion parameter

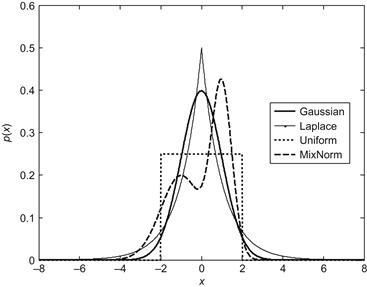

, and dispersion parameter ![]() . It has simple yet flexible functional forms and could approximate a large number of statistical distributions, and is widely used in image coding, speech recognition, and BSS, etc. The GGD densities include Gaussian (

. It has simple yet flexible functional forms and could approximate a large number of statistical distributions, and is widely used in image coding, speech recognition, and BSS, etc. The GGD densities include Gaussian (![]() ) and Laplace (

) and Laplace (![]() ) distributions as special cases. Figure 4.4 shows the GGD distributions for several shape parameters with zero mean and deviation

) distributions as special cases. Figure 4.4 shows the GGD distributions for several shape parameters with zero mean and deviation ![]() . It is evident that smaller values of the shape parameter correspond to heavier tails and therefore to sharper distributions. In the limiting cases, as

. It is evident that smaller values of the shape parameter correspond to heavier tails and therefore to sharper distributions. In the limiting cases, as ![]() , the GGD becomes close to the uniform distribution, whereas as

, the GGD becomes close to the uniform distribution, whereas as ![]() , it approaches an impulse function (

, it approaches an impulse function (![]() -distribution).

-distribution).

Utilizing the GGD model to estimate the error distribution is actually to estimate the parameters ![]() ,

, ![]() , and

, and ![]() based on the error samples. Up to now, there are many methods on how to estimate the GGD parameters [192]. Here, we only discuss the moment matching method (method of moments).

based on the error samples. Up to now, there are many methods on how to estimate the GGD parameters [192]. Here, we only discuss the moment matching method (method of moments).

The order-![]() absolute central moment of GGD distribution can be calculated as

absolute central moment of GGD distribution can be calculated as

![]() (4.90)

(4.90)

Hence we have

![]() (4.91)

(4.91)

The right-hand side of Eq. (4.91) is a function of ![]() , denoted by

, denoted by ![]() . Thus the parameter

. Thus the parameter ![]() can be expressed as

can be expressed as

![]() (4.92)

(4.92)

where ![]() is the inverse of function

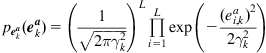

is the inverse of function ![]() . Figure 4.5 shows the curves of the inverse function

. Figure 4.5 shows the curves of the inverse function ![]() when

when ![]() .

.

According to Eq. (4.92), based on the moment matching method one can estimate the parameters ![]() ,

, ![]() , and

, and ![]() as follows:

as follows:

(4.93)

(4.93)

where the subscript ![]() represents that the values are estimated based on the error samples

represents that the values are estimated based on the error samples ![]() . Thus

. Thus ![]() will be

will be

(4.94)

(4.94)

Substituting Eq. (4.94) into Eq. (4.82) and letting ![]() (Shannon entropy), we obtain the SIG algorithm based on GGD model:

(Shannon entropy), we obtain the SIG algorithm based on GGD model:

(4.95)

(4.95)

where ![]() ,

, ![]() , and

, and ![]() are calculated by Eq. (4.93).

are calculated by Eq. (4.93).

To make a distinction, we denote “SIG-kernel” and “SIG-GGD” the SIG algorithms based on kernel approach and GGD densities, respectively. Compared with the SIG-kernel algorithm, the SIG-GGD just needs to estimate the three parameters of GGD density, without resorting to the choice of kernel width and the calculation of kernel function.

Comparing Eqs. (4.95) and (4.28), we find that when ![]() , the SIG-GGD algorithm can be considered as an LMP algorithm with adaptive order

, the SIG-GGD algorithm can be considered as an LMP algorithm with adaptive order ![]() and variable step-size

and variable step-size ![]() . In fact, under certain conditions, the SIG-GGD algorithm will converge to a certain LMP algorithm with fixed order and step-size. Consider the FIR system identification, in which the plant and the adaptive model are both FIR filters with the same order, and the additive noise

. In fact, under certain conditions, the SIG-GGD algorithm will converge to a certain LMP algorithm with fixed order and step-size. Consider the FIR system identification, in which the plant and the adaptive model are both FIR filters with the same order, and the additive noise ![]() is zero mean, ergodic, and stationary. When the model weight vector

is zero mean, ergodic, and stationary. When the model weight vector ![]() converges to the neighborhood of the plant weight vector

converges to the neighborhood of the plant weight vector ![]() , we have

, we have

![]() (4.96)

(4.96)

where ![]() is the weight error vector. In this case, the estimated values of the parameters in SIG-GGD algorithm will be

is the weight error vector. In this case, the estimated values of the parameters in SIG-GGD algorithm will be

(4.97)

(4.97)

Since noise ![]() is an ergodic and stationary process, if

is an ergodic and stationary process, if ![]() is large enough, the estimated values of the three parameters will tend to some constants, and consequently, the SIG-GGD algorithm will converge to a certain LMP algorithm with fixed order and step-size. Clearly, if noise

is large enough, the estimated values of the three parameters will tend to some constants, and consequently, the SIG-GGD algorithm will converge to a certain LMP algorithm with fixed order and step-size. Clearly, if noise ![]() is Gaussian distributed, the SIG-GGD algorithm will converge to the LMS algorithm (

is Gaussian distributed, the SIG-GGD algorithm will converge to the LMS algorithm (![]() ), and if

), and if ![]() is Laplacian distributed, the algorithm will converge to the LAD algorithm (

is Laplacian distributed, the algorithm will converge to the LAD algorithm (![]() ). In Ref. [28], it has been shown that under slow adaptation, the LMS and LAD algorithms are, respectively, the optimum algorithms for the Gaussian and Laplace interfering noises. We may therefore conclude that the SIG-GGD algorithm has the ability to adjust its parameters so as to automatically switch to a certain optimum algorithm.

). In Ref. [28], it has been shown that under slow adaptation, the LMS and LAD algorithms are, respectively, the optimum algorithms for the Gaussian and Laplace interfering noises. We may therefore conclude that the SIG-GGD algorithm has the ability to adjust its parameters so as to automatically switch to a certain optimum algorithm.

There are two points that deserve special mention concerning the implementation of the SIG-GGD algorithm: (i) since there is no analytical expression, the calculation of the inverse function ![]() needs to use look-up table or some interpolation method and (ii) in order to avoid too large gradient and ensure the stability of the algorithm, it is necessary to set an upper bound on the parameter

needs to use look-up table or some interpolation method and (ii) in order to avoid too large gradient and ensure the stability of the algorithm, it is necessary to set an upper bound on the parameter ![]() .

.

4.3.3 Fixed-Point Minimum Error Entropy Algorithm

Given a mapping ![]() , the fixed points are solutions of iterative equation

, the fixed points are solutions of iterative equation ![]() ,

, ![]() . The fixed-point (FP) iteration is a numerical method of computing fixed points of iterated functions. Given an initial point

. The fixed-point (FP) iteration is a numerical method of computing fixed points of iterated functions. Given an initial point ![]() , the FP iteration algorithm is

, the FP iteration algorithm is

![]() (4.98)

(4.98)

where ![]() is the iterative index. If

is the iterative index. If ![]() is a function defined on the real line with real values, and is Lipschitz continuous with Lipschitz constant smaller than 1.0, then

is a function defined on the real line with real values, and is Lipschitz continuous with Lipschitz constant smaller than 1.0, then ![]() has precisely one fixed point, and the FP iteration converges toward that fixed point for any initial guess

has precisely one fixed point, and the FP iteration converges toward that fixed point for any initial guess ![]() . This result can be generalized to any metric space.

. This result can be generalized to any metric space.

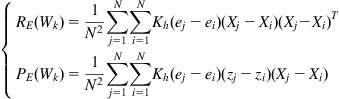

The FP algorithm can be applied in parameter identification under MEE criterion [64]. Let’s consider the QIP criterion:

![]() (4.99)

(4.99)

under which the optimal parameter (weight vector) ![]() satisfies

satisfies

![]() (4.100)

(4.100)

If the model is an FIR filter, and the kernel function is the Gaussian function, we have

![]() (4.101)

(4.101)

One can write Eq. (4.101) in an FP iterative form (utilizing ![]() ):

):

(4.102)

(4.102)

Then we have the following FP algorithm:

![]() (4.103)

(4.103)

where

(4.104)

(4.104)

The above algorithm is called the fixed-point minimum error entropy (FP-MEE) algorithm. The FP-MEE algorithm can also be implemented by using the forgetting recursive form [194], i.e.,

![]() (4.105)

(4.105)

where

(4.106)

(4.106)

This is the recursive fixed-point minimum error entropy (RFP-MEE) algorithm.

In addition to the parameter search algorithms described above, there are many other parameter search algorithms to minimize the error entropy. Several advanced parameter search algorithms are presented in Ref. [104], including the conjugate gradient (CG) algorithm, Levenberg–Marquardt (LM) algorithm, quasi-Newton method, and others.

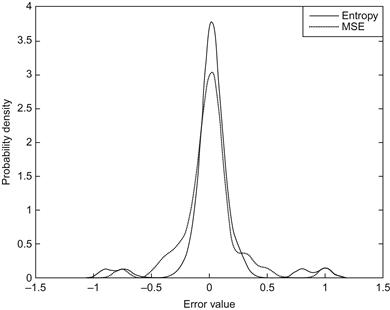

4.3.4 Kernel Minimum Error Entropy Algorithm

System identification algorithms under MEE criterion can also be derived in kernel space. Existing KAF algorithms are mainly based on the MSE (or least squares) criterion. MSE is not always a suitable criterion especially in nonlinear and non-Gaussian situations. Hence, it is attractive to develop a new KAF algorithm based on a non-MSE (nonquadratic) criterion. In Ref. [139], a KAF algorithm under the maximum correntropy criterion (MCC), namely the kernel maximum correntropy (KMC) algorithm, has been developed. Similar to the KLMS, the KMC is also a stochastic gradient algorithm in RKHS. If the kernel function used in correntropy, denoted by ![]() , is the Gaussian kernel, the KMC algorithm can be derived as [139]

, is the Gaussian kernel, the KMC algorithm can be derived as [139]

![]() (4.107)

(4.107)

where ![]() denotes the estimated weight vector at iteration

denotes the estimated weight vector at iteration ![]() in a high-dimensional feature space

in a high-dimensional feature space ![]() induced by Mercer kernel

induced by Mercer kernel ![]() and

and ![]() is a feature vector obtained by transforming the input vector

is a feature vector obtained by transforming the input vector ![]() into the feature space through a nonlinear mapping

into the feature space through a nonlinear mapping ![]() . The KMC algorithm can be regarded as a KLMS algorithm with variable step-size

. The KMC algorithm can be regarded as a KLMS algorithm with variable step-size ![]() .

.

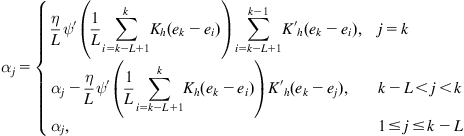

In the following, we will derive a KAF algorithm under the MEE criterion. Since the ![]() -entropy is a very general and flexible entropy definition, we use the

-entropy is a very general and flexible entropy definition, we use the ![]() -entropy of the error as the adaptation criterion. In addition, for simplicity we adopt the instantaneous error entropy (4.78) as the cost function. Then, one can easily derive the following kernel minimum error entropy (KMEE) algorithm:

-entropy of the error as the adaptation criterion. In addition, for simplicity we adopt the instantaneous error entropy (4.78) as the cost function. Then, one can easily derive the following kernel minimum error entropy (KMEE) algorithm:

(4.108)

(4.108)

The KMEE algorithm (4.108) is actually the SIG algorithm in kernel space. By selecting a certain ![]() function, we can obtain a specific KMEE algorithm. For example, if setting

function, we can obtain a specific KMEE algorithm. For example, if setting ![]() (i.e.,

(i.e., ![]() ), we get the KMEE under Shannon entropy criterion:

), we get the KMEE under Shannon entropy criterion:

(4.109)

(4.109)

The weight update equation of Eq. (4.108) can be written in a compact form:

![]() (4.110)

(4.110)

where ![]() ,

, ![]() , and

, and ![]() is a vector-valued function of

is a vector-valued function of ![]() , expressed as

, expressed as

(4.111)

(4.111)

The KMEE algorithm is similar to the KAPA [178], except that the error vector ![]() in KMEE is nonlinearly transformed by the function

in KMEE is nonlinearly transformed by the function ![]() . The learning rule of the KMEE in the original input space can be written as (

. The learning rule of the KMEE in the original input space can be written as (![]() )

)

![]() (4.112)

(4.112)

The learned model by KMEE has the same structure as that learned by KLMS, and can be represented as a linear combination of kernels centered in each data points:

![]() (4.113)

(4.113)

where the coefficients (at iteration ![]() ) are updated as follows:

) are updated as follows:

(4.114)

(4.114)

The pseudocode for KMEE is summarized in Table 4.2.

4.4 Convergence Analysis

Next, we analyze the convergence properties of the parameter identification under MEE criterion. For simplicity, we consider only the ADALINE model (which includes the FIR filter as a special case). The convergence analysis of error entropy minimization algorithms is, in general, rather complicated. This is mostly because

1. the objective function (the empirical error entropy) is not only related to the current error but also concerned with the past error values;

2. the entropy function and the kernel function are nonlinear functions;

3. the shape of performance surface is very complex (nonquadratic and nonconvex).

There are two ways for the convergence analysis of such algorithms: (i) using the Taylor series expansion near the optimal solution to obtain an approximate linearization algorithm, and then performing the convergence analysis for the linearization algorithm and (ii) applying the energy conservation relation to analyze the convergence behavior [106]. The first approach is relatively simple, but only the approximate analysis results near the optimal solution can be achieved. With the second approach it is possible to acquire rigorous analysis results of the convergence, but usually more assumptions are needed. In the following, we first briefly introduce the first analysis approach, and then focus on the second approach, performing the mean square convergence analysis based on the energy conservation relation. The following analysis is mainly aimed at the nonparametric IG algorithms with KDE.

4.4.1 Convergence Analysis Based on Approximate Linearization

In Ref. [102], an approximate linearization approach has been used to analyze the convergence of the gradient-based algorithm under order-![]() IP criterion. Consider the ADALINE model:

IP criterion. Consider the ADALINE model:

![]() (4.123)

(4.123)

where ![]() is the

is the ![]() -dimensional input vector,

-dimensional input vector, ![]() is the weight vector. The gradient based identification algorithm under order-

is the weight vector. The gradient based identification algorithm under order-![]() (

(![]() ) information potential criterion can be expressed as

) information potential criterion can be expressed as

![]() (4.124)

(4.124)

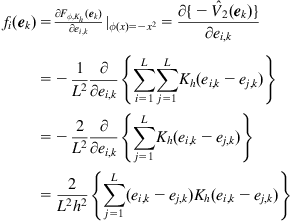

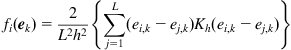

where ![]() denotes the gradient of the empirical order-

denotes the gradient of the empirical order-![]() IP with respect to the weight vector, which can be calculated as

IP with respect to the weight vector, which can be calculated as

(4.125)

(4.125)

As the kernel function ![]() satisfies

satisfies ![]() , we have

, we have

![]() (4.126)

(4.126)

So, one can rewrite Eq. (4.125) as

(4.127)

(4.127)

where ![]() .

.

If the weight vector ![]() lies in the neighborhood of the optimal solution

lies in the neighborhood of the optimal solution ![]() , one can obtain a linear approximation of the gradient

, one can obtain a linear approximation of the gradient ![]() using the first-order Taylor expansion:

using the first-order Taylor expansion:

![]() (4.128)

(4.128)

where ![]() is the Hessian matrix, i.e.,

is the Hessian matrix, i.e.,

(4.129)

(4.129)

Substituting Eq. (4.128) into Eq. (4.124), and subtracting ![]() from both sides, one obtains

from both sides, one obtains

![]() (4.130)

(4.130)

where ![]() is the weight error vector. The convergence analysis of the above linear recursive algorithm is very simple. Actually, one can just borrow the well-known convergence analysis results from the LMS convergence theory [18–20]. Assume that the Hessian matrix

is the weight error vector. The convergence analysis of the above linear recursive algorithm is very simple. Actually, one can just borrow the well-known convergence analysis results from the LMS convergence theory [18–20]. Assume that the Hessian matrix ![]() is a normal matrix and can be decomposed into the following normal form:

is a normal matrix and can be decomposed into the following normal form:

![]() (4.131)

(4.131)

where ![]() is an

is an ![]() orthogonal matrix,

orthogonal matrix, ![]() ,

, ![]() is the eigenvalue of

is the eigenvalue of ![]() . Then, the linear recursion (4.130) can be expressed as

. Then, the linear recursion (4.130) can be expressed as

![]() (4.132)

(4.132)

Clearly, if the following conditions are satisfied, the weight error vector ![]() will converge to the zero vector (or equivalently, the weight vector

will converge to the zero vector (or equivalently, the weight vector ![]() will converge to the optimal solution):

will converge to the optimal solution):

![]() (4.133)

(4.133)

Thus, a sufficient condition that ensures the convergence of the algorithm is

(4.134)

(4.134)

Further, the approximate time constant corresponding to ![]() will be

will be

![]() (4.135)

(4.135)

In Ref. [102], it has also been proved that if the kernel width ![]() increases, the absolute values of the eigenvalues will decrease, and the time constants will increase, that is, the convergence speed of the algorithm will become slower.

increases, the absolute values of the eigenvalues will decrease, and the time constants will increase, that is, the convergence speed of the algorithm will become slower.

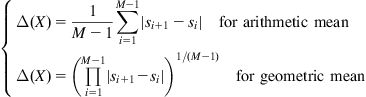

4.4.2 Energy Conservation Relation

Here, the energy conservation relation is not the well-known physical law of conservation of energy, but refers to a certain identical relation that exists among the WEP, a priori error, and a posteriori error in adaptive filtering algorithms (such as the LMS algorithm). This fundamental relation can be used to analyze the mean square convergence behavior of an adaptive filtering algorithm [196].

Given an adaptive filtering algorithm with error nonlinearity [29]:

![]() (4.136)

(4.136)

where ![]() is the error nonlinearity function (

is the error nonlinearity function (![]() corresponds to the LMS algorithm), the following energy conservation relation holds:

corresponds to the LMS algorithm), the following energy conservation relation holds:

(4.137)

(4.137)

where ![]() is the WEP at instant

is the WEP at instant ![]() (or iteration

(or iteration ![]() ),

), ![]() and

and ![]() are, respectively, the a priori error and a posteriori error:

are, respectively, the a priori error and a posteriori error:

![]() (4.138)

(4.138)

One can show that the IG algorithms (assuming ADALINE model) also satisfy the energy conservation relation similar to Eq. (4.137) [106]. Let us consider the sliding information gradient algorithm (with ![]() entropy criterion):

entropy criterion):

![]() (4.139)

(4.139)

where ![]() is the empirical error entropy at iteration

is the empirical error entropy at iteration ![]() :

:

(4.140)

(4.140)

where ![]() ,

, ![]() is the

is the ![]() th error sample used to calculate the empirical error entropy at iteration

th error sample used to calculate the empirical error entropy at iteration ![]() . Given the

. Given the ![]() -function and the kernel function

-function and the kernel function ![]() , the empirical error entropy

, the empirical error entropy ![]() will be a function of the error vector

will be a function of the error vector ![]() , which can be written as

, which can be written as

![]() (4.141)

(4.141)

This function will be continuously differentiable with respect to ![]() , provided that both functions

, provided that both functions ![]() and

and ![]() are continuously differentiable.

are continuously differentiable.

In ADALINE system identification, the error ![]() will be

will be

![]() (4.142)

(4.142)

where ![]() is the

is the ![]() th measurement at iteration

th measurement at iteration ![]() ,

, ![]() is the

is the ![]() th model output at iteration

th model output at iteration ![]() ,

, ![]() is the

is the ![]() th input vector11 at iteration

th input vector11 at iteration ![]() , and

, and ![]() is the ADALINE weight vector. One can write Eq. (4.142) in the form of block data, i.e.,

is the ADALINE weight vector. One can write Eq. (4.142) in the form of block data, i.e.,

![]() (4.143)

(4.143)

where ![]() ,

, ![]() , and

, and ![]() (

(![]() input matrix).

input matrix).

The block data ![]() can be constructed in various manners. Two typical examples are

can be constructed in various manners. Two typical examples are

Combining Eqs. (4.139), (4.141), and (4.143), we can derive (assuming ADALINE model)

(4.146)

(4.146)

where ![]() , in which

, in which

![]() (4.147)

(4.147)

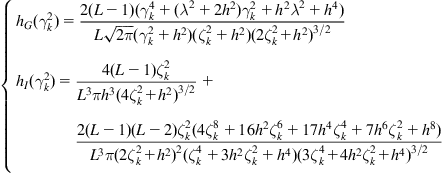

With QIP criterion (![]() ), the function

), the function ![]() can be calculated as (assuming

can be calculated as (assuming ![]() is a Gaussian kernel):

is a Gaussian kernel):

(4.148)

(4.148)

The algorithm in Eq. (4.146) is in form very similar to the adaptive filtering algorithm with error nonlinearity, as expressed in Eq. (4.136). In fact, Eq. (4.146) can be regarded, to some extent, as a “block” version of Eq. (4.136). Thus, one can study the mean square convergence behavior of the algorithm (4.146) by similar approach as in mean square analysis of the algorithm (4.136). It should also be noted that the objective function behind algorithm (4.146) is not limited to the error entropy. Actually, the cost function ![]() can be extended to any function of

can be extended to any function of ![]() , including the simple block mean square error (BMSE) criterion that is given by [197]

, including the simple block mean square error (BMSE) criterion that is given by [197]

![]() (4.149)

(4.149)

We now derive the energy conservation relation for the algorithm (4.146). Assume that the unknown system and the adaptive model are both ADALINE structures with the same dimension of weights.12 Let the measured output (in the form of block data) be

![]() (4.150)

(4.150)

where ![]() is the weight vector of unknown system and

is the weight vector of unknown system and ![]() is the noise vector. In this case, the error vector

is the noise vector. In this case, the error vector ![]() can be expressed as

can be expressed as

![]() (4.151)

(4.151)

where ![]() is the weight error vector. In addition, we define the a priori and a posteriori error vectors

is the weight error vector. In addition, we define the a priori and a posteriori error vectors ![]() and

and ![]() :

:

(4.152)

(4.152)

Clearly, ![]() and

and ![]() have the following relationship:

have the following relationship:

![]() (4.153)

(4.153)

Combining Eqs. (4.146) and (4.153) yields

![]() (4.154)

(4.154)

where ![]() is an

is an ![]() symmetric matrix with elements

symmetric matrix with elements ![]() .

.

Assume that the matrix ![]() is invertible (i.e.,

is invertible (i.e., ![]() ). Then we have

). Then we have

![]() (4.155)

(4.155)

And hence

![]() (4.156)

(4.156)

Both sides of Eq. (4.156) should have the same energy, i.e.,

![]() (4.157)

(4.157)

From Eq. (4.157), after some simple manipulations, we obtain the following energy conservation relation:

![]() (4.158)

(4.158)

where ![]() ,

, ![]() ,

, ![]() . Further, in order to analyze the mean square convergence performance of the algorithm, we take the expectations of both sides of (4.158) and write

. Further, in order to analyze the mean square convergence performance of the algorithm, we take the expectations of both sides of (4.158) and write

![]() (4.159)

(4.159)

The energy conservation relation (4.159) shows how the energies (powers) of the error quantities evolve in time, which is exact for any adaptive algorithm described in Eq. (4.146), and is derived without any approximation and assumption (except for the condition that ![]() is invertible). One can also observe that Eq. (4.159) is a generalization of Eq. (4.137) in the sense that the a priori and a posteriori error quantities are extended to vector case.

is invertible). One can also observe that Eq. (4.159) is a generalization of Eq. (4.137) in the sense that the a priori and a posteriori error quantities are extended to vector case.

4.4.3 Mean Square Convergence Analysis Based on Energy Conservation Relation

The energy conservation relation (4.159) characterizes the evolution behavior of the weight error power (WEP). Substituting ![]() into (4.159), we obtain

into (4.159), we obtain

![]() (4.160)

(4.160)

To evaluate the expectations ![]() and

and ![]() , some assumptions are given below [106]:

, some assumptions are given below [106]:

• A1: The noise ![]() is independent, identically distributed, and independent of the input

is independent, identically distributed, and independent of the input ![]() ;

;

• A2: The a priori error vector ![]() is jointly Gaussian distributed;

is jointly Gaussian distributed;

• A3: The input vectors ![]() are zero-mean independent, identically distributed;

are zero-mean independent, identically distributed;

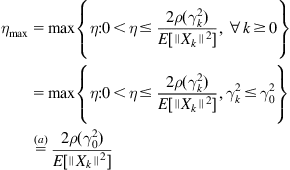

4.4.3.1 Sufficient Condition for Mean Square Convergence

From Eq. (4.168), it is easy to observe

(4.169)

(4.169)

Therefore, if we choose the step-size ![]() such that for all

such that for all ![]()

(4.170)

(4.170)

then the sequence of WEP ![]() will be monotonically decreasing (and hence convergent). Thus, a sufficient condition for the mean square convergence of the algorithm (4.146) would be