Information Measures

The concept of information is so rich that various definitions of information measures exist. Kolmogorov proposed three methods for defining an information measure: the probabilistic method, the combinatorial method, and the computational method [146]. Accordingly, information measures fall into three categories: probabilistic information (or statistical information), combinatorial information, and algorithmic information. This book focuses mainly on statistical information, which was first conceptualized by Shannon [44]. Shannon information theory, which can be viewed as a branch of mathematical statistics, provides a mathematical framework for designing optimal communication systems. Its core issues are how to measure the amount of information and how to describe information transmission. Motivated by the nature of data transmission in communication, Shannon proposed entropy, which measures the uncertainty contained in a probability distribution, as the definition of the information in a data source.
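To make the idea of entropy as a measure of uncertainty concrete, the following minimal sketch computes the Shannon entropy of a discrete distribution in nats. The function name `shannon_entropy` and the two example distributions are illustrative assumptions, not taken from the text.

```python
import math

def shannon_entropy(probs):
    """Entropy in nats of a discrete distribution given as a list of probabilities."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p == 0 contribute nothing, by the convention 0 * log 0 = 0.
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical sources over four symbols.
uniform = [0.25, 0.25, 0.25, 0.25]   # maximally uncertain
skewed = [0.97, 0.01, 0.01, 0.01]    # almost deterministic

print(shannon_entropy(uniform))  # equals log(4) nats
print(shannon_entropy(skewed))   # noticeably smaller
```

The uniform source attains the largest entropy for four symbols, while the nearly deterministic source carries far less uncertainty.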

2.3 Information Divergence

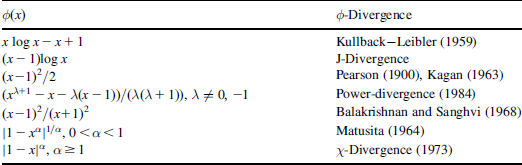

In statistics and information geometry, an information divergence measures the “distance” from one probability distribution to another. A divergence is, however, a much weaker notion than a distance in the mathematical sense: in particular, it need not be symmetric and need not satisfy the triangle inequality.
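The lack of symmetry can be checked numerically. The sketch below uses the KL-divergence between two discrete distributions as one example of a divergence; the function name `kl_divergence` and the distributions `p` and `q` are hypothetical choices made for illustration.

```python
import math

def kl_divergence(p, q):
    """D(p || q) in nats for discrete distributions on the same finite support."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf  # p puts mass where q has none
            total += pi * math.log(pi / qi)
    return total

# Two hypothetical distributions on a binary alphabet.
p = [0.5, 0.5]
q = [0.9, 0.1]

d_pq = kl_divergence(p, q)
d_qp = kl_divergence(q, p)
print(d_pq, d_qp)  # the two directions give different values
```

Both values are nonnegative and vanish only when the two distributions coincide, yet swapping the arguments changes the result, which is why a divergence is not a metric.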

2.4 Fisher Information

The most celebrated information measure in statistics is perhaps the one developed by R.A. Fisher (1921) to quantify the information that a distribution carries about its parameter.

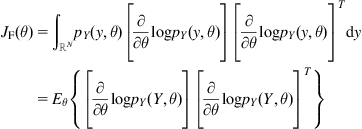

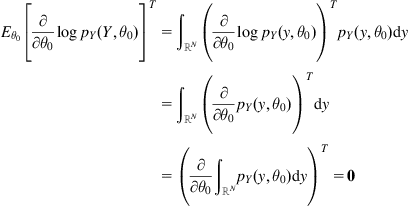

where ![]() stands for the expectation with respect to ![]() . From (2.47), one can see that the Fisher information measures the “average sensitivity” of the logarithm of the PDF to the parameter ![]() or the “average influence” of the parameter ![]() on the logarithm of the PDF. The Fisher information is also a measure of the minimum error in estimating the parameter of a distribution. This is illustrated in the following theorem.

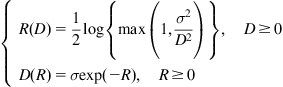
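As a numerical illustration of the “average sensitivity” reading of the Fisher information, the sketch below estimates the expected squared derivative of the log-PDF by Monte Carlo. It assumes a Gaussian model with known standard deviation `sigma` and unknown mean `theta`; this model, the symbol names, and the numerical values are assumptions chosen for the example. For this model the Fisher information about the mean has the closed form 1/sigma².

```python
import random

def score(x, theta, sigma):
    """Derivative of log N(x; theta, sigma^2) with respect to the mean theta."""
    return (x - theta) / sigma**2

def fisher_information_mc(theta, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of E[score^2] under the model with mean theta."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        x = rng.gauss(theta, sigma)
        total += score(x, theta, sigma) ** 2
    return total / n_samples

theta, sigma = 1.0, 2.0          # hypothetical parameter values
estimate = fisher_information_mc(theta, sigma)
exact = 1.0 / sigma**2           # closed form for the Gaussian mean
print(estimate, exact)
```

A smaller `sigma` makes the log-PDF more sensitive to the mean, so the Fisher information grows and the mean can be estimated more accurately from the same number of samples.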

2.5 Information Rate

The previous information measures, such as entropy, mutual information, and KL-divergence, are all defined for random variables. These definitions can be further extended to various information rates, which are defined for random processes.
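One standard instance of such a rate is the entropy rate of a stationary Markov chain, which averages the entropy of each row of the transition matrix under the stationary distribution. The sketch below is a minimal illustration; the function names, the transition matrices, and the use of power iteration (which presumes an ergodic chain) are assumptions made for the example.

```python
import math

def stationary_distribution(P, iters=1000):
    """Approximate the stationary distribution of transition matrix P by power iteration."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi

def entropy_rate(P):
    """Entropy rate (nats per step) of a stationary Markov chain with transition matrix P."""
    pi = stationary_distribution(P)
    n = len(P)
    return -sum(
        pi[i] * P[i][j] * math.log(P[i][j])
        for i in range(n)
        for j in range(n)
        if P[i][j] > 0
    )

# Hypothetical two-state chains: one with memory, one that is i.i.d.
P = [[0.9, 0.1],
     [0.5, 0.5]]
iid = [[0.5, 0.5],
       [0.5, 0.5]]

print(entropy_rate(P), entropy_rate(iid))
```

For the i.i.d. chain the rate reduces to the entropy of a single fair binary variable, log 2 nats, while the chain with memory has a lower rate because each state is partly predictable from the previous one.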

Appendix B α-Stable Distribution

α-stable distributions are a class of probability distributions that satisfy the generalized central limit theorem and extend the Gaussian distribution. The Gaussian, Cauchy, and Lévy distributions are special cases. Apart from these three, α-stable distributions do not have a PDF with a closed-form analytical expression. However, their characteristic functions can be written in the following form:

(B.1)

where ![]() is the location parameter, ![]() is the dispersion parameter, α is the characteristic factor, and ![]() is the skewness factor. The parameter α determines the tail behavior of the distribution: the smaller the value of α, the heavier the tail. The distribution is symmetric if the skewness factor is zero, in which case it is called the symmetric α-stable (SαS) distribution. The Gaussian and Cauchy distributions are α-stable distributions with α = 2 and α = 1, respectively.
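For reference, one widely used parameterization of the α-stable characteristic function that matches the four parameters named above is sketched below. The symbols μ (location), γ (dispersion), and β (skewness) are assumptions, and sign conventions for the skewness term differ between references, so this should be read as a plausible form rather than as the exact expression (B.1).

```latex
\psi(\omega) = \exp\left\{ j\mu\omega - \gamma|\omega|^{\alpha}
  \left[ 1 + j\beta\,\mathrm{sign}(\omega)\,S(\omega,\alpha) \right] \right\},
\qquad
S(\omega,\alpha) =
\begin{cases}
  \tan\left(\alpha\pi/2\right), & \alpha \neq 1, \\
  (2/\pi)\log|\omega|, & \alpha = 1 .
\end{cases}
```

Under this form, setting β = 0 gives ψ(ω) = exp(jμω − γ|ω|^α), which is the Gaussian characteristic function with variance 2γ when α = 2 and the Cauchy characteristic function when α = 1.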

When α < 2, the tail of the α-stable distribution decays more slowly than that of the Gaussian distribution, so it can be used to model outliers or impulsive noise. In this case the distribution has an infinite second-order moment, while its entropy remains finite.
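The heavier tails can be observed by simulation. The following sketch draws symmetric α-stable samples with zero location and unit dispersion using the Chambers-Mallows-Stuck method, a sampling technique that is not described in the text and is brought in here only for illustration. It then counts how often the samples exceed a threshold. The function names, the threshold, and the sample size are hypothetical.

```python
import math
import random

def sample_sas(alpha, rng):
    """One draw from a standard symmetric alpha-stable law (zero location,
    unit dispersion), via the Chambers-Mallows-Stuck method."""
    u = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)  # drawn in every case to keep the random stream aligned
    if alpha == 1.0:
        return math.tan(u)    # Cauchy case
    return (math.sin(alpha * u) / math.cos(u) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * u) / w) ** ((1.0 - alpha) / alpha))

def tail_fraction(alpha, threshold=10.0, n=20_000, seed=0):
    """Fraction of n samples whose magnitude exceeds the threshold."""
    rng = random.Random(seed)
    hits = sum(abs(sample_sas(alpha, rng)) > threshold for _ in range(n))
    return hits / n

for alpha in (2.0, 1.5, 1.0):
    print(alpha, tail_fraction(alpha))
```

With α = 2 the samples are Gaussian and essentially never exceed the threshold, whereas smaller values of α produce a visible share of large outliers, consistent with the impulsive-noise interpretation.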

Appendix C Proof of (2.17)

1. In this book, “log” always denotes the natural logarithm. The entropy is therefore measured in nats.

2. Strictly speaking, we should use subscripts to distinguish the PDFs ![]() , ![]() , and ![]() . For example, we can write them as ![]() , ![]() , ![]() . In this book, for simplicity, we often omit these subscripts when no confusion arises.

3. The detailed proofs of these properties can be found in information theory textbooks, such as “Elements of Information Theory” by Cover and Thomas [43].

4. The Cauchy distribution is a non-Gaussian α-stable distribution (see Appendix B).

5. Interested readers are referred to [150,151] for how to solve these equations.

6. Let ![]() be a measurable space and assume a real-valued function ![]() is defined on ![]() , i.e., ![]() . Then the function ![]() is called a Mercer kernel if and only if it is continuous, symmetric, and positive-definite. Here, ![]() is said to be positive-definite if and only if

![]()

where ![]() denotes any finite signed Borel measure, ![]() . If the equality holds only for the zero measure, then ![]() is said to be strictly positive-definite (SPD).

7. Unless mentioned otherwise, in this book a vector refers to a column vector.