Chapter 10

Imputation and Adjustment

10.1 Missing Data

Missing values may occur in a dataset either because they were not measured or because they got removed somewhere during data processing, for example, because values were deemed erroneous and deleted. In either case, the term ‘imputation’ is used to indicate the practice of completing a dataset that contains empty values.

At first sight, imputation is not all that different from any predictive modeling task. One constructs a model of an incomplete dataset, trains it on a subset of reliable data, and predicts unobserved values. There are some elements that set imputation apart from ‘general’ predictive modeling, however. The first is that imputation tasks are often specifically done with the purpose of inference in mind. That is, one is usually less interested in the specific value for a single record than in the properties of the mean, variance, or covariance structure of the whole dataset or population. Secondly, and this is arguably related to the first issue, there are a number of methods that are commonly used in imputation but rarely in predictive modeling. Examples include nearest-neighbor imputation or expectation–maximization-based techniques.

The literature on imputation methodology is vast and extensive, and excellent text books and review papers are available [See, e.g., Anderson (2002); Andridge and Little (2010); Donders et al. (2006); Kalton and Kasprzyk (1986); Schafer and Graham (2002); Zhang (2003)]. The current chapter therefore summarizes some of the most common issues and imputation methodology and focuses on methods available in R.

10.1.1 Missing Data Mechanisms

One commonly distinguishes three missing data mechanisms [attributed to Rubin (1976), but see Little and Rubin (2002) or Schafer and Graham (2002)] that determine the basic probability structure of the missing value locations in a data record. To set up the mechanisms, consider three random variables: the variable of interest ![]() , an auxiliary variable

, an auxiliary variable ![]() , and a missing value indicator

, and a missing value indicator ![]() . Here,

. Here, ![]() is a binary variable that indicates whether a realization of

is a binary variable that indicates whether a realization of ![]() is observed or not. One can imagine a dataset with realizations of these variables and model the joint probability

is observed or not. One can imagine a dataset with realizations of these variables and model the joint probability ![]() . Three models are distinguished, each of which we state in two equivalent representations as follows:

. Three models are distinguished, each of which we state in two equivalent representations as follows:

In the first model (MCAR: missing completely at random), it is assumed that the distribution of the missing value indicator is independent of both ![]() and

and ![]() . If the dataset is created as a simple random sample, the missing values merely make that sample smaller, possibly with a different fraction for

. If the dataset is created as a simple random sample, the missing values merely make that sample smaller, possibly with a different fraction for ![]() and

and ![]() .

.

In the second model, labeled ![]() (missing at random), it is assumed that the value of

(missing at random), it is assumed that the value of ![]() gives no information about whether it is observed or not. However, the distribution of

gives no information about whether it is observed or not. However, the distribution of ![]() depends on the value of

depends on the value of ![]() (and

(and ![]() 's distribution could depend on the value of

's distribution could depend on the value of ![]() ). Gelman and Hill (2006) distinguish further between dependence of

). Gelman and Hill (2006) distinguish further between dependence of ![]() on observed and unobserved variables

on observed and unobserved variables ![]() .

.

The last case, labeled NMAR (not missing at random), is not really a model at all. The two expressions on the right-hand side are simply rewrites of ![]() using the expression for conditional probabilities. In this case, the distribution of the missing value indicator may depend on both the values of

using the expression for conditional probabilities. In this case, the distribution of the missing value indicator may depend on both the values of ![]() and

and ![]() .

.

Although these models give a clear classification of probability models for the missing/observed status of a variable, it is in practice not possible to distinguish between them based on observed data. Suppose that one constructs a dataset with ![]() and

and ![]() independent (so

independent (so ![]() ). Now, remove all values of

). Now, remove all values of ![]() above a certain threshold. Clearly, this is the not missing at random case, since the distribution of

above a certain threshold. Clearly, this is the not missing at random case, since the distribution of ![]() depends on the value of

depends on the value of ![]() . However, an analyst who has no access to the missing realizations of

. However, an analyst who has no access to the missing realizations of ![]() will not be able to detect the correlation between the values of

will not be able to detect the correlation between the values of ![]() and

and ![]() . Indeed, it is impossible for the analyst to distinguish the NMAR situation from MCAR. Now suppose that

. Indeed, it is impossible for the analyst to distinguish the NMAR situation from MCAR. Now suppose that ![]() and

and ![]() are correlated so that larger values of

are correlated so that larger values of ![]() co-occur with larger values of

co-occur with larger values of ![]() . In that case,

. In that case, ![]() will be correlated with

will be correlated with ![]() , and the analyst observes a MAR situation.

, and the analyst observes a MAR situation.

The approach that is commonly taken is rather practical. To accommodate for MCAR and MAR situations, many popular imputation methods simply attempt to leverage all the auxiliary information available (e.g., MICE, missForest, to be discussed later). To correct for the NMAR case, one needs to make assumptions about or somehow model the data collection process. To assess, in approximation, the effect of a possible NMAR missing data mechanism on outcomes, one may have to resort to sensitivity analyses using a simulation of the missing value mechanism.

10.1.2 Visualizing and Testing for Patterns in Missing Data Using R

Effective summarization of missing values across a multivariate dataset can provide incentives for investigating the missing value mechanism. Ideally (apart from having complete data), missing values are distributed as MCAR. If there are patterns in missing data pointing to a MAR situation, those patterns require an explanation or, when relevant and possible, the data collection process can be altered to prevent such patterns from occurring.

The VIM package (Templ et al., 2012 2016) offers a number of visualizations and aggregations for pattern discovery in missing data. As an example we will use the retailers dataset that comes with the validate package. The VIM function aggr aggregates missing value patterns per variable and per record.

data("retailers",package="validate")

VIM::aggr(retailers[3:9], sortComb=TRUE, sortVar=TRUE, only.miss=TRUE)

##

## Variables sorted by number of missings:

## Variable Count

## other.rev 0.60000000

## staff.costs 0.16666667

## staff 0.10000000

## total.costs 0.08333333

## profit 0.08333333

## turnover 0.06666667

## total.rev 0.03333333The result is an overview of the fraction of missing values per variable. Here, the option to sort variables from high to low fractions of missing values is used (sortVar=TRUE). As a side effect, a plot of the aggregates, shown in Figure 10.1, is created. The visualization contains a barplot showing fractions of missing values per variable in panel (a) and a rectangular, space-filling plot indicating the occurrence of missing value combinations in panel (b). In the latter plot, each column in a space-filling grid of squares represents a variable, and each row represents a single occurring missing data pattern. A value that is missing is colored gray, and the observed values are colored light gray (by default). On the right there is a vertical bar chart that indicates for each row how often every pattern occurs. In this example, we also sort the graph by variables (decreasing left to right in fraction of missing values) and combination (sortComb=TRUE). Also, we specify that the bar chart heights should be relative to the number of patterns that contain at least one missing (only.miss=TRUE). The total fraction of complete records represented is printed at the right. The graph shows that the variable other revenue is missing most often, while the combination other revenue and staff is the most often missing combination in this dataset.

Figure 10.1 Percentages of missing values per variable (a) and occurrence of missing data patterns (b) in the retailers dataset, plotted with VIM::aggr.

To detect whether a variable's missing value mechanism is MAR with respect to a second variable, the missingness indicator of the first variable can be used to split the dataset into two groups. The observed distributions of the second variable for the two groups can then be compared to distinguish between MCAR and MAR. In the case of MCAR, one expects the distributions to be similar. With VIM::pbox, one chooses a single numerical variable and compares its distribution with respect to the missingness indicator of all other variables. Here, we compare the distribution of staff against the status (missing or present) of other variables.

VIM::pbox(retailers[3:9], pos=1)The result is shown in Figure 10.2. The leftmost boxplot shows the distribution of staff, with numbers indicating that there are 60 observations of which 6 are missing. The other boxplots, occurring in pairs, compare the distributions of staff, split according to the missingness of another variable. For example, the distribution of staff in the case where other.rev is observed (shown in light gray) appears to differ from the case where other.rev is missing. This indicates a possible MAR situation for other.rev with respect to staff. The widths of the boxplots indicate the number of observations used in producing the boxplot: a (very) thin boxplot indicates that the difference in distributions is supported by little evidence. The number of observations (top) and missing values (bottom) per group are printed below the boxes.

Figure 10.2 Parallel boxplots, comparing the distribution of staff conditional on the missingness of other variables.

To confirm or reject our suspicion that the locations of the distributions differ significantly, we perform the Student ![]() -test. Here, we use log-transformed data since economic data tends to follow highly skewed distribution (typically close to log-normal).

-test. Here, we use log-transformed data since economic data tends to follow highly skewed distribution (typically close to log-normal).

t.test(log(staff) ∼ is.na(other.rev), data=retailers)

##

## Welch Two Sample t-test

##

## data: log(staff) by is.na(other.rev)

## t = 2.7464, df = 46.014, p-value = 0.008572

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 0.1985149 1.2880867

## sample estimates:

## mean in group FALSE mean in group TRUE

## 2.329996 1.586695The low ![]() value indicates that the null hypotheses (means are equal across groups) may be rejected with a low probability (

value indicates that the null hypotheses (means are equal across groups) may be rejected with a low probability (![]() ) of error. The conclusion is that missingness of other.rev is to be treated as MAR with respect to staff.

) of error. The conclusion is that missingness of other.rev is to be treated as MAR with respect to staff.

One may wonder whether the reverse is also true: is the missingness of staff MAR with respect to the values observed in other.rev? A quick insight into this question can be obtained by drawing a so-called marginplot (here, using log-transformed variables to accommodate for their skew distributions).

dat <- log10(abs(retailers[c(3,5)]))

VIM::marginplot(dat, las=1, pch=16)The marginplot (Figure 10.3) shows a scatterplot of cases where both variables are observed. The margins show, in light gray, boxplots of the variable depicted on the respective axis. These are contrasted with boxplots (in gray) of the same variable, but for the case where the other variable is missing. So from the boxplots in the margins of the ![]() -axis, we read off that other.rev may be MAR with respect to staff. Similarly, from the boxplots in the

-axis, we read off that other.rev may be MAR with respect to staff. Similarly, from the boxplots in the ![]() -axis, we read off that staff might be MAR with respect to other.rev. The actual values used to produce the dark gray boxplots are also represented in the margins as dark gray dots. The number of missing values per variable and the number of co-occurring missing values are denoted in the margins as well.

-axis, we read off that staff might be MAR with respect to other.rev. The actual values used to produce the dark gray boxplots are also represented in the margins as dark gray dots. The number of missing values per variable and the number of co-occurring missing values are denoted in the margins as well.

Figure 10.3 Marginplot of other.rev against staff.

Exercises for Section 10.1

10.2 Model-Based Imputation

The literature on imputation methodology is extensive. Besides a broad range of general imputation methods, many methods have been developed with specific applications in mind. Examples include methodology for longitudinal data (Fitzmaurice et al., 2008) or social network data (see Huisman (2009) and references therein). Here, we focus on a number of well-established methods covering a broad range of applications that are readily available in R.

In a predictive model, the target variable ![]() is described by an estimating function or algorithm

is described by an estimating function or algorithm ![]() depending on one or more predictors

depending on one or more predictors ![]() and one or more parameters

and one or more parameters ![]() .

.

where ![]() is the residual of the model, the part of variation in

is the residual of the model, the part of variation in ![]() that is not described by

that is not described by ![]() . The predictor variables may be real-valued or categorical. In the latter case, a categorical variable taking

. The predictor variables may be real-valued or categorical. In the latter case, a categorical variable taking ![]() values is represented by

values is represented by ![]() binary dummy variables. Likewise, the predicted variable

binary dummy variables. Likewise, the predicted variable ![]() can be real-valued or categorical. If

can be real-valued or categorical. If ![]() is a binary variable, the possible values can be coded as 0 and 1. The form of

is a binary variable, the possible values can be coded as 0 and 1. The form of ![]() can be chosen to estimate the probability of

can be chosen to estimate the probability of ![]() taking the value 1, so it only takes values in the range

taking the value 1, so it only takes values in the range ![]() . If

. If ![]() can take

can take ![]() different values, we may label them as

different values, we may label them as ![]() . One then determines

. One then determines ![]() model functions

model functions ![]() , each estimating the probability

, each estimating the probability ![]() .

.

The values for ![]() are estimated by minimizing a loss function such as the negative log-likelihood over known values of

are estimated by minimizing a loss function such as the negative log-likelihood over known values of ![]() . Once the estimates

. Once the estimates ![]() are obtained, imputed values

are obtained, imputed values ![]() for numerical

for numerical ![]() are determined as

are determined as

with ![]() a vector of observed values for

a vector of observed values for ![]() and

and ![]() a chosen residual value. A common choice is to set

a chosen residual value. A common choice is to set ![]() , so the imputed value is the best estimate of

, so the imputed value is the best estimate of ![]() given

given ![]() ,

, ![]() , and the loss function. If one is interested in individual predictions only, setting

, and the loss function. If one is interested in individual predictions only, setting ![]() is the common choice. When dealing with imputation problems, one is often interested in reconstructing the (co)variance structure of a dataset. So, the other options include sampling

is the common choice. When dealing with imputation problems, one is often interested in reconstructing the (co)variance structure of a dataset. So, the other options include sampling ![]() from

from ![]() , where

, where ![]() is the estimated variance of

is the estimated variance of ![]() or sampling

or sampling ![]() (uniformly) from the observed set of residuals. Methods where

(uniformly) from the observed set of residuals. Methods where ![]() is sampled are referred to as stochastic imputation methods. If

is sampled are referred to as stochastic imputation methods. If ![]() is a categorical variable, the actual predicted value may be the one that is assigned the highest probability, so

is a categorical variable, the actual predicted value may be the one that is assigned the highest probability, so ![]() . Alternatively, one can sample a value from

. Alternatively, one can sample a value from ![]() assuming the

assuming the ![]() as probability distribution over the domain of

as probability distribution over the domain of ![]() .

.

If ![]() is a real-valued variable, a common model is the linear model

is a real-valued variable, a common model is the linear model

Here, ![]() usually (but not necessarily) represents the intercept, so

usually (but not necessarily) represents the intercept, so ![]() . Given a set of observations

. Given a set of observations ![]() , the most popular loss function to estimate

, the most popular loss function to estimate ![]() the sum of squares, so

the sum of squares, so

where ![]() represents the

represents the ![]() th row in

th row in ![]() . The resulting estimator can be interpreted as the conditional expectation of

. The resulting estimator can be interpreted as the conditional expectation of ![]() given

given ![]() or

or ![]() . There are several variations on the quadratic loss function including ridge regression (Hoerl and Kennard, 1970), lasso regression (Tibshirani, 1996), and their generalization: elasticnet regression (Zou and Hastie, 2005). Each of these methods forms an attempt to cope with high variability in the training data by adding terms that penalize the size of the

. There are several variations on the quadratic loss function including ridge regression (Hoerl and Kennard, 1970), lasso regression (Tibshirani, 1996), and their generalization: elasticnet regression (Zou and Hastie, 2005). Each of these methods forms an attempt to cope with high variability in the training data by adding terms that penalize the size of the ![]() (

(![]() ). Other robust alternatives include the class of

). Other robust alternatives include the class of ![]() -estimators. There, the loss function is adapted to decrease the contribution of highly influential records in the training set [see, e.g., Huber (2011) or Maronna et al. (2006)].

-estimators. There, the loss function is adapted to decrease the contribution of highly influential records in the training set [see, e.g., Huber (2011) or Maronna et al. (2006)].

If we denote the matrix ![]() , where the

, where the ![]() are columns of observed values (possibly including the intercept ‘variable’

are columns of observed values (possibly including the intercept ‘variable’ ![]() ), the imputed values can be written as

), the imputed values can be written as

It was demonstrated by Kalton and Kasprzyk (1986) and more extensively by de Waal et al. (2011, Chapter 7) that a surprising number of common imputation methods can be written in this form when the choices for the ![]() and

and ![]() are appropriately adapted. Methods that can be written in this form include (group) mean imputation, linear regression and ratio imputation, nearest-neighbor imputation, the deductive imputation method of Section 9.3.2, and several forms of stochastic imputation. If the (regularized) quadratic loss function is also allowed to vary, even more imputation methods can be written in this form. In particular, if the quadratic loss function is replaced with the least absolute deviation (LAD), we get

are appropriately adapted. Methods that can be written in this form include (group) mean imputation, linear regression and ratio imputation, nearest-neighbor imputation, the deductive imputation method of Section 9.3.2, and several forms of stochastic imputation. If the (regularized) quadratic loss function is also allowed to vary, even more imputation methods can be written in this form. In particular, if the quadratic loss function is replaced with the least absolute deviation (LAD), we get

One can show that in this case [See, e.g., Koenker (2005); Chen et al. (2008)]

Thus, imputing the conditional (group-wise) median can also be summarized under this notation.

Exercises for Section 10.2

10.3 Model-Based Imputation in R

The large amount of literature on imputation methodology is reflected in the large number of R packages implementing them. At the time of writing there are dozens of packages mentioning ‘impute’ or ‘imputation’ in their description. Here, we will demonstrate a number of imputation methods using the simputation package. The reason for choosing this particular package is it offers a consistent and (to R-users) familiar interface to many imputation models. The package relies mostly on other packages for computing the models and generating predictions. In some cases the backend can be chosen (e.g., one can make simputation use VIM for certain types of hotdeck imputations).

10.3.1 Specifying Imputation Methods with simputation

With the simputation package the specification of an imputation method always has the following form:

impute_<model-abbreviation>(dat, formula, [model-specific options], …)where <model-abbreviation> is replaced with an abbreviated name for the predictive model to be used (e.g., lm for linear models), dat is the dataset to be imputed, and formula specifies the relation between imputed and predicting variables. Depending on the method there may be some simputation-specific options, and all extra arguments (…) are passed to the underlying modeling functions.

The formula object is an expression of the form

imputed_variables ∼ predicting_variables [ | grouping_variables ]where imputed_variables specifies what variables should be imputed and predicting_variables specifies the combination of variables to be used as predictors. The terms enclosed in brackets are optional. The grouping_variables term can be used to specify a split-apply-combine strategy for imputation. The dataset is split according to the value combinations of grouping variables, the imputation model is estimated for each subset, values are imputed, and the dataset recombined.

Contrary to most modeling functions in R, the specification of imputed (dependent) variables is flexible and can contain multiple variables. The simputation package will simply loop over all variables to be imputed, estimating models as needed. For example, the specification

y ∼ foo + barspecifies that variable y should be imputed, using foo and bar as predictors. To impute multiple variables, one can just add variables on the left-hand side.

y1 + y2 + y3 ∼ foo + barHere, y1, y2, and y3 are imputed using foo and bar as predictors. The dot (.) stands for ‘every variable not mentioned earlier’, so

. ∼ foo + baris to be interpreted as impute every variable, using foo and bar as predictors. It depends on the imputation method whether that means that foo and bar can also be imputed. The simputation package will remove predictors from the list of imputed variables when necessary. Finally, it is also possible to remove variables. The formula

. - x ∼ foo + barmeans impute every variable except x using foo and bar as predictors.

The form that predicting_variables can take depends on the chosen imputation model. For example, in linear modeling, the option to model interaction effects (e.g., foo:bar) is relevant, while for other models it is not.

10.3.2 Linear Regression-Based Imputation

Linear regression imputation can be applied to impute numerical variables, using numerical and/or categorical variables and possibly their interaction effects as predictors. The model function is given by ![]() , where

, where ![]() is estimated with Eq. (10.6). In particular, we can partition the vector of

is estimated with Eq. (10.6). In particular, we can partition the vector of ![]() -values as

-values as ![]() , where

, where ![]() indicates where

indicates where ![]() is observed and

is observed and ![]() indicates where

indicates where ![]() is missing. Accordingly, the matrix

is missing. Accordingly, the matrix ![]() with predictor values can be partitioned in rows

with predictor values can be partitioned in rows ![]() where

where ![]() is observed and rows

is observed and rows ![]() where

where ![]() is missing. The value of

is missing. The value of ![]() is then estimated over the observed values of

is then estimated over the observed values of ![]()

after which the missing values can be estimated as

With the simputation package, linear model imputation can be performed with the impute_lm function. In the following paragraphs a few columns of the retailers dataset from the validate package will be used.

library(simputation)

library(magrittr) # for convenience

data(retailers, package="validate")

retl <- retailers[c(1,3:6,10)]

head(retl, n=3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 NA NA 1130 NA

## 2 sc3 9 1607 NA 1607 NA

## 3 sc3 NA 6886 -33 6919 NAWe will be interested in imputing the values for turnover, other.rev, and total.rev. The simputation package relies on lm for estimating linear models, which means that several classes of imputation methods can be specified with ease.

In mean imputation, missing values are replaced by the column mean. To impute turnover, other.rev, and total.rev with their respective means, we specify

impute_lm(retl, turnover + other.rev + total.rev ∼ 1) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 20279.48 4218.292 1130 NA

## 2 sc3 9 1607.00 4218.292 1607 NA

## 3 sc3 NA 6886.00 -33.000 6919 NAIt is well known that mean imputation leads to a gross underestimation of the variance of estimated means (when computed over the imputed dataset).

A slightly better procedure is to impute the group mean. Here, we impute missing variables using size (a size classification) as grouping variable.

impute_lm(retl, turnover + other.rev + total.rev ∼ 1 | size) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 1420.375 315.50 1130 NA

## 2 sc3 9 1607.000 6169.25 1607 NA

## 3 sc3 NA 6886.000 -33.00 6919 NABy specifying size after the vertical bar, we make sure that simputation does the split-apply-combine work over the grouping variable. The same result can be achieved as follows:

impute_lm(retl, turnover + other.rev + total.rev ∼ size) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 1420.375 315.50 1130 NA

## 2 sc3 9 1607.000 6169.25 1607 NA

## 3 sc3 NA 6886.000 -33.00 6919 NAwhere we let lm estimate a model with size as predictor. The latter method is slightly less robust. When one of the groups contains only missing values for one of the predicted variables, lm will stop, while the split-apply-combine procedure of simputation can handle such cases.

Ratio imputation uses the model ![]() , where

, where ![]() is the ratio of the mean of

is the ratio of the mean of ![]() and the mean of

and the mean of ![]() . It is equivalent to a linear model with a single predictor, no abscissa, weighted according to the reciprocal of the predictor. Here, the three variables are imputed with

. It is equivalent to a linear model with a single predictor, no abscissa, weighted according to the reciprocal of the predictor. Here, the three variables are imputed with staff (the number of employees) as predictor.

impute_lm(retl, turnover + other.rev + total.rev ∼ staff - 1

, weight=1/retl$staff) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 26187.55 26426.132 1130 NA

## 2 sc3 9 1607.00 3171.136 1607 NA

## 3 sc3 NA 6886.00 -33.000 6919 NARatio imputation is often used as a growth estimate, that is, in cases where a current value as well as a past value is known.

In linear regression imputation, one or more predictors may be used to impute a value based on a linear model. Below, the number of staff and turnover reported for value-added tax (vat) are used as predictors.

impute_lm(retl, turnover + other.rev + total.rev ∼ staff + vat

)%>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 NA NA 1130 NA

## 2 sc3 9 1607 NA 1607 NA

## 3 sc3 NA 6886 -33 6919 NAObserve that in the first three rows, nothing is imputed. The reason is that in those cases, the predictor vat is missing. This illustrates a general property of simputation. The package will leave values untouched when one of the predictors is missing and return the partially imputed dataset.

Each of these models imputes the expected value, given zero or more predictors. They can be made stochastic by adding to each estimated value a random residual ![]() , as denoted in Eq. (10.5). For model-based imputation methods,

, as denoted in Eq. (10.5). For model-based imputation methods, simputation supports three options: ![]() , this is the default;

, this is the default; ![]() with

with ![]() , the estimated variance of the residuals; and

, the estimated variance of the residuals; and ![]() , sampled from the observed residuals. They can be specified with the

, sampled from the observed residuals. They can be specified with the add_residual option.

# make results reproducible

set.seed(1)

# add normal residual

impute_lm(retl

, turnover + other.rev + total.rev ∼ staff

, add_residual = "normal") %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 1100.659 11737.95 1130 NA

## 2 sc3 9 1607.000 -12914.62 1607 NA

## 3 sc3 NA 6886.000 -33.00 6919 NA

# add observed residual

impute_lm(retl

, turnover + other.rev + total.rev ∼ staff

, add_residual = "observed") %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 7439.802 -659.214 1130 NA

## 2 sc3 9 1607.000 1015.605 1607 NA

## 3 sc3 NA 6886.000 -33.000 6919 NA10.3.3  -Estimation

-Estimation

The ![]() -estimation method aims to reduce the influence of outliers on linear model coefficients by replacing the loss function of Eq. (10.9) with a suitable function

-estimation method aims to reduce the influence of outliers on linear model coefficients by replacing the loss function of Eq. (10.9) with a suitable function ![]() so that

so that

where ![]() is the number of observed records

is the number of observed records ![]() . To find

. To find ![]() , one solves the system of equations for

, one solves the system of equations for ![]()

Here, we defined the so-called influence function ![]() . It determines the relative influence of each observation to the solution. If we set

. It determines the relative influence of each observation to the solution. If we set ![]() , then

, then ![]() (up to an unimportant additive constant) and Eq. (10.9) is returned.

(up to an unimportant additive constant) and Eq. (10.9) is returned.

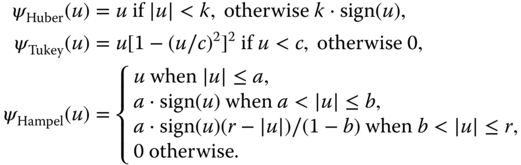

The influence function is chosen so that it is less sensitive for increasing values of ![]() as the standard quadratic loss function. A few popular choices are proposals by Huber et al. (1964), Hampel et al. (1986), and Tukey's bisquare function, which are also available in R through the

as the standard quadratic loss function. A few popular choices are proposals by Huber et al. (1964), Hampel et al. (1986), and Tukey's bisquare function, which are also available in R through the MASS package.

The parameters ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() are tuning parameters that determine the rate of increase as a function of

are tuning parameters that determine the rate of increase as a function of ![]() and the locations where

and the locations where ![]() levels off. Figure 10.4 shows the shape of the

levels off. Figure 10.4 shows the shape of the ![]() and

and ![]() functions of Huber, Tukey, and Hampel. The constants were chosen so that the regression estimators have an efficiency of 95% as described, for example, by Koller and Mächler (2016), that is,

functions of Huber, Tukey, and Hampel. The constants were chosen so that the regression estimators have an efficiency of 95% as described, for example, by Koller and Mächler (2016), that is, ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() , where

, where ![]() .

.

Figure 10.4 Three  - and

- and  -functions used in

-functions used in  -estimation (plotted so that the scales of the

-estimation (plotted so that the scales of the  axes are comparable). For comparison, the traditional function

axes are comparable). For comparison, the traditional function  is plotted with dotted lines.

is plotted with dotted lines.

With the simputation package, imputation based on ![]() -estimated linear regression parameters can be done with the

-estimated linear regression parameters can be done with the impute_rlm function. It uses the ![]() function of the

function of the MASS package for coefficient estimation.

impute_rlm(retl, turnover + other.rev + total.rev ∼ staff) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 12927.56 159.16574 1130 NA

## 2 sc3 9 1607.00 16.29018 1607 NA

## 3 sc3 NA 6886.00 -33.00000 6919 NAThe default is to use Huber's ![]() function with

function with ![]() . Extra arguments are passed through to

. Extra arguments are passed through to rlm. For example, rlm has the option to set method="MM". This sets a number of options ensuring that the regression estimator has a high breakdown point (qualitatively, the fraction of outliers that may be present in the data before the estimator gives unacceptable results).

impute_rlm(retl, turnover + other.rev + total.rev ∼ staff

, method="MM") %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 13529.59 75.34900 1130 NA

## 2 sc3 9 1607.00 13.24304 1607 NA

## 3 sc3 NA 6886.00 -33.00000 6919 NA10.3.4 Lasso, Ridge, and Elasticnet Regression

With impute_en, elasticnet regression is used to compute imputations. In linear elasticnet regression, the parameters are estimated as

where ![]() is the familiar Euclidean norm and

is the familiar Euclidean norm and ![]() the

the ![]() -norm or the sum over absolute values of the coefficients of its argument. The penalty term is defined in terms of

-norm or the sum over absolute values of the coefficients of its argument. The penalty term is defined in terms of ![]() , which denotes all coefficients except the intercept (when present). The parameter

, which denotes all coefficients except the intercept (when present). The parameter ![]() allows one to shift smoothly from ridge regression (

allows one to shift smoothly from ridge regression (![]() ) to lasso regression (

) to lasso regression (![]() ), while

), while ![]() determines the overall strength of the penalty. The characteristic difference between lasso and ridge regression is that in the case of correlated predictors, lasso regression tends to push one or more coefficients to zero, while ridge regression spreads the value of coefficients over multiple correlated variables.

determines the overall strength of the penalty. The characteristic difference between lasso and ridge regression is that in the case of correlated predictors, lasso regression tends to push one or more coefficients to zero, while ridge regression spreads the value of coefficients over multiple correlated variables.

The simputation package implements elasticnet imputation through the impute_en function, which depends on the glmnet package of Friedman et al. (2010).

impute_en(retl, turnover + other.rev + total.rev ∼ staff + size

, s=0.005, alpha=0.5) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 13360.82 -8354.709 1130 NA

## 2 sc3 9 1607.00 10476.443 1607 NA

## 3 sc3 NA 6886.00 -33.000 6919 NAHere, the predictor size is added since the glmnet package does not accept models with less than two predictors. The parameter s determines the size of the overall penalty factor ![]() used in predictions, and we (arbitrarily) set

used in predictions, and we (arbitrarily) set alpha=0.5.

10.3.5 Classification and Regression Trees

Decision tree models can be used in situations where the predicted variable is numeric and its dependence on the predictors is highly nonlinear, or when the predicted variable is categorical with probabilities that have complex dependence on predictor variables. In both the cases, the predictors can be numeric or categorical. Because decision tree models can be used to predict quantitative as well as qualitative variables, they are often referred to as classification and regression trees or CART, for short. The term was introduced by Breiman et al. (1984), and it also refers to a specific method for setting up the decision tree (which will be treated below).

Consider again a predicted variable ![]() (to be imputed) and a set of predictors

(to be imputed) and a set of predictors ![]() . The idea is to partition the set of all possible value combinations

. The idea is to partition the set of all possible value combinations ![]() into disjunct regions, such that the corresponding value of

into disjunct regions, such that the corresponding value of ![]() is as homogeneous as possible in each region. For numeric

is as homogeneous as possible in each region. For numeric ![]() , homogeneous usually means ‘a small variance’; for categorical data, it means ‘a high proportion of a single category’. Given a particular record

, homogeneous usually means ‘a small variance’; for categorical data, it means ‘a high proportion of a single category’. Given a particular record ![]() , one follows a binary decision tree

, one follows a binary decision tree ![]() to see in what region the record falls. The predicted value for numeric

to see in what region the record falls. The predicted value for numeric ![]() is then the mean value within the region (although more robust estimators are sometimes used as well), and the predicted value for categorical

is then the mean value within the region (although more robust estimators are sometimes used as well), and the predicted value for categorical ![]() is the category with the highest prevalence.

is the category with the highest prevalence.

Before considering how such a decision tree is constructed, consider the example of Figure 10.5. Here, we used the rpart package to build a predictive model for the staff variable in the retailers dataset. Depicted are two representations of the resulting model. In Figure 10.5(a), the decision tree is shown. Each nonterminal node contains two numbers and a decision rule. The root node represents 100% of the records, and the mean value for staff over all those records equals 12 (rounded). If we partition the dataset according to the rule total.rev < 3464, we get 83% records for which this rule holds, with a mean number of staff of 7.7 and 17% records for which this total.rev >= 3464, with a mean number of staff of 31. The latter case ends in a terminal node and thus corresponds with a single partition of the feature space. The group of records falling into this category are on the right of the second vertical line in Figure 10.5(b). The group of records on the left (with total.rev < 3464) are subdivided by having smaller or larger total.rev of 968. The latter are subdivided once more based on whether the size variable equals "sc2" (depicted in white in panel (b)). The terminal nodes of the tree contain the actual predictions made for each partition along with the size of the partition.

Figure 10.5 (a) A decision tree for estimating number of staff in the retailers dataset, computed with rpart. (b) The space partitioning. The vertical bars indicate the limits represented in the top node and the subdivision indicated by its left child node. In the middle region, white dots indicate records where size=="sc2".

When comparing this procedure with Eq. (10.5), we see that here, the model function is a procedure ![]() , that is parameterized by a decision tree

, that is parameterized by a decision tree ![]() . Based on the values of

. Based on the values of ![]() , the tree is traversed until a terminal node is reached and the prediction returned.

, the tree is traversed until a terminal node is reached and the prediction returned.

The tree itself is built up iteratively. Given a ![]() dataset

dataset ![]() and a set of values

and a set of values ![]() , the optimal split based on each variable

, the optimal split based on each variable ![]() is computed. Of those

is computed. Of those ![]() splits, the one resulting in the lowest error is chosen. This process is then repeated for each partition recursively. Note that for any dataset, it is in principle possible to create a perfect partition by growing the tree until each leaf has a single record in it (or only records with equal values for

splits, the one resulting in the lowest error is chosen. This process is then repeated for each partition recursively. Note that for any dataset, it is in principle possible to create a perfect partition by growing the tree until each leaf has a single record in it (or only records with equal values for ![]() ). In practice, one stops at some minimum number of records. The resulting (still large) tree is then pruned by removing leaf nodes from the bottom up. The final result is determined by a trade-off between error minimization and tree size

). In practice, one stops at some minimum number of records. The resulting (still large) tree is then pruned by removing leaf nodes from the bottom up. The final result is determined by a trade-off between error minimization and tree size ![]() :

:

Here, ![]() is the set of subtrees that can be obtained by pruning the initial tree. The function ‘error’ records the mismatch between prediction and observation, appropriate for the variable

is the set of subtrees that can be obtained by pruning the initial tree. The function ‘error’ records the mismatch between prediction and observation, appropriate for the variable ![]() (e.g., standard deviation for numerical variables end mismatch ratio for categorical variables). The term

(e.g., standard deviation for numerical variables end mismatch ratio for categorical variables). The term ![]() penalizes the number of nodes

penalizes the number of nodes ![]() . Here,

. Here, ![]() is referred to as the cost-complexity parameter. It is determined automatically by computing

is referred to as the cost-complexity parameter. It is determined automatically by computing ![]() for a series of values of

for a series of values of ![]() and applying cross-validation to select the best one [see also James et al. (2013, Chapter 8) or Hastie et al. (2001, Chapter 9)].

and applying cross-validation to select the best one [see also James et al. (2013, Chapter 8) or Hastie et al. (2001, Chapter 9)].

With the simputation package, CART-based imputation can be performed with the impute_cart. The specification of predictor variables tells impute_cart what variables can be used in the tree. In many cases, one can choose all variables except the predicted since decision trees have variable selection built-in.

impute_cart(retl, staff ∼ .) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75.00000 NA NA 1130 NA

## 2 sc3 9.00000 1607 NA 1607 NA

## 3 sc3 30.66667 6886 -33 6919 NAImputation took place in the third record. The imputation value can be traced by following the decision tree of Figure 10.5. The total revenue in the third record equals 6919. Since this is larger than 3464, we end in a leaf node immediately and predict a value of 30.67.

One advantage of CART models over linear models for imputation is their resilience against missing values in predictors. For a linear model, the imputation ![]() cannot be estimated when any of the

cannot be estimated when any of the ![]() happens to be missing unless it is somehow imputed. In a CART model, the actual decision tree contains more information than shown in Figure 10.5. Anticipating on possible missing predictors, each node stores one or more backup split rules based on other predictors present in the dataset. If during prediction some value

happens to be missing unless it is somehow imputed. In a CART model, the actual decision tree contains more information than shown in Figure 10.5. Anticipating on possible missing predictors, each node stores one or more backup split rules based on other predictors present in the dataset. If during prediction some value ![]() is found to be missing, its first so-called surrogate variable is used to decide the split. If the surrogate is also missing, the next one is used, and so on, until an observed surrogate is found, or no surrogates are left. In the latter case, the most populated child node is chosen. The loss of quality of prediction is smaller when surrogates are highly correlated with the primary splitting variables.

is found to be missing, its first so-called surrogate variable is used to decide the split. If the surrogate is also missing, the next one is used, and so on, until an observed surrogate is found, or no surrogates are left. In the latter case, the most populated child node is chosen. The loss of quality of prediction is smaller when surrogates are highly correlated with the primary splitting variables.

10.3.6 Random Forest

Random forest (Breiman, 2001) is an ensemble-based improvement over CART. It can be used to predict both qualitative and quantitative variables. The idea is to take bootstrap samples from the original data, and grow a decision tree for each sample. Moreover, at each split, a subset of the ![]() available predictors (typically about

available predictors (typically about ![]() is randomly chosen as possible splitting variables. Randomizing the available splitting variables is used to decrease correlation between the trees.

is randomly chosen as possible splitting variables. Randomizing the available splitting variables is used to decrease correlation between the trees.

Training a random forest model thus results in a set of ![]() trees

trees ![]() called a forest. If the predicted variable is numerical, the prediction is an aggregate over the individual predictions such as the mean,

called a forest. If the predicted variable is numerical, the prediction is an aggregate over the individual predictions such as the mean,

but in principle, it is possible to use a robust aggregate such as the median as well. For categorical variables, the majority vote over the trees is taken.

With the simputation package, random forest models can be employed for imputation as follows:

impute_rf(retl, staff ∼ .) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 NA NA 1130 NA

## 2 sc3 9 1607 NA 1607 NA

## 3 sc3 NA 6886 -33 6919 NARandom forest models are somewhat less resilient against missing predictors than the CART models discussed in the previous paragraph. The underlying Fortran code by Breiman and Cutler (2004) is able to use a rough imputation scheme on the training set to generate the forest (this can be set by passing na_action=na.rough fix; this will impute medians for numeric data and modes for categorical data). For prediction, however, not every variable needs to be present: the average can be taken over the subset of trees that do return a value. However, for small datasets such as in this example, it may occur that all trees return NA so no prediction is possible. Here, the problem can be partially resolved by removing a variable with little observations from the list of predictors.

impute_rf(retl, staff ∼ . - vat) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75.00000 NA NA 1130 NA

## 2 sc3 9.00000 1607 NA 1607 NA

## 3 sc3 20.23483 6886 -33 6919 NABesides simputation, there are other R packages implementing imputation based on random forests. The missForest package by Stekhoven and Bühlmann (2012) implements an iterative imputation procedure. For initiation, missing values get imputed using a simple rule. Next, a random forest is trained on the completed dataset, yielding updated imputation values. This second step is repeated until a convergence criterion has been satisfied. When installed, the missForest package can be interfaced via simputation using impute_mf.

impute_mf(retl, staff ∼ .) %>% head(3)

## missForest iteration 1 in progress…done!

## missForest iteration 2 in progress…done!

## size staff turnover other.rev total.rev vat

## 1 sc0 75.00 NA NA 1130 NA

## 2 sc3 9.00 1607 NA 1607 NA

## 3 sc3 18.15 6886 -33 6919 NAHere, all variables are imputed (iteratively) and used as predictors, but only the variables on the left-hand side for the formula are copied to the resulting dataset. With the formula . ∼ . all variables can be imputed.

10.4 Donor Imputation with R

In donor imputation, a missing value in one record is replaced with an observed value that is copied from another and somehow otherwise similar record. The record from which the value is copied is referred to as the ‘donor’ record; hence, the name of the method. Donor imputation is also referred to as hot deck imputation. The etymology of this term derives from the state of the art in computing when the method was first applied. Researchers would ‘hot deck impute’ by drawing from a deck of computer punch cards representing records (Andridge and Little, 2010; Cranmer and Gill, 2013).

When compared to model-based imputation, the advantage of donor imputation is that the imputed value is always an actually existing (observed) value. Statistical models always run the risk of predicting a value that is not (physically) possible, especially when extrapolating beyond the observed range of values. The downside of donor imputation is that in spite of its wide application, theoretical underpinning is not as strong as for model-based methods. Moreover, Andridge and Little (2010) conclude in their extensive review that no consensus exists on the best way to apply hot deck imputation methods, and note that ‘many multivariate hot deck methods seem relatively ad hoc’. Nevertheless, hot deck methods have been commonly applied for a long time in areas related to official statistics [see Ono and Miller (1969); Bailar and Bailar (1979); and Cox (1980) for some early applications and method comparisons] and to a lesser extend in medical or epidemiological settings. Method comparisons are given in, for example, Barzi and Woodward (2004); Engels and Diehr (2003); Perez et al. (2002); Reilly and Pepe (1997); Tang et al. (2005), and Twisk and de Vente (2002).

Hot deck imputation methods are commonly categorized along two dimensions. The first dimension distinguishes between methods where multiple missing values in a record are imputed from the same donor (multivariate donor imputation) and methods where a separate donor may be appointed for each missing variable. The main advantage of multivariate donor imputation is that one only imputes valid and existing value combinations, so that imputed values cannot introduce inconsistencies. The downside is that the number of possible donors may be greatly reduced as the number of missing values in a record increases. The hot deck donor imputation routines in simputation have an option called pool, which control this behavior. Its possible values are

"complete": |

Use only complete records as donor pool and perform multivariate imputation. This is the default |

"univariate": |

A new donor is sought for each variable. |

"multivariate": |

For each occurring pattern of missingness find suitable donors and perform multivariate imputation. |

The second dimension distinguishes between the various ways donor records are determined, and each of these methods (discussed next) can be executed in a univariate or multivariate fashion.

10.4.1 Random and Sequential Hot Deck Imputation

In random hot deck imputation, a donor is sampled from a donor pool. Often, a dataset is separated into imputation cells for which one or more auxiliary variables have the same values. With the simputation package, the imputation cells are determined by the right-hand side of the formula object specifying the model (we continue with the retl dataset constructed in the previous paragraph).

set.seed(1) # make reproducible

# random hot deck imputation (multivariate; complete cases are donor)

impute_rhd(retl, turnover + other.rev + total.rev ∼ size) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 359 9 1130 NA

## 2 sc3 9 1607 98350 1607 NA

## 3 sc3 NA 6886 -33 6919 NAIn the above example, the dataset is split according to the size class label, and data are imputed in univariate manner. That is, for each variable, a value is sampled from all observed values within the same size class. If multiple categorical variables are used to define imputation cells, the donor pools can quickly decrease in size, leading possibly to many imputations of the same value. Setting pool="univariate" can alleviate this issue to a small extend since per-variable donor pools are generally larger than multivariate donor pools.

# random hot deck imputation (univariate)

impute_rhd(retl, turnover + other.rev + total.rev ∼ size

, pool="univariate") %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 197 622 1130 NA

## 2 sc3 9 1607 33 1607 NA

## 3 sc3 NA 6886 -33 6919 NABy default, records are drawn uniformly from the pool, but one can pass a numeric vector prob assigning a probability to each record in the imputed data. Probabilities will be rescaled as necessary, depending on grouping and donor pool specification.

In sequential hot deck, one sorts the dataset using one or more variables, and missing values in a record are taken from the first preceding or ensuing record that has a value. If values are taken from preceding records, the method is referred to as last observation carried forward or LOCF in short, if values are taken from ensuing records, the method is referred to as next observation carried backward (NOCB). With the simputation package, sequential hot deck is executed with the impute_shd function. The ‘predictor variables’ in the formula are used to sort the data (with every variable after the first used as tie-breaker for the previous one).

impute_shd(retl, turnover + other.rev + total.rev ∼ staff) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 9067 622 1130 NA

## 2 sc3 9 1607 38 1607 NA

## 3 sc3 NA 6886 -33 6919 NAThe sort order is always ‘increasing’, but with the argument order, one can choose to use between "nocb" (the default) and "locb" imputation.

Both random and sequential hot decks are implemented in the simputation source code. Especially for large datasets and many groups, it is beneficial to use the faster implementation provided by the VIM package. This is possible for both impute_rhd and impute_shd by setting backend="VIM". Options specific to simputation (such as the order argument) will be ignored, but one can pass any argument of VIM::hotdeck to impute_rhd or impute_shd for detailed control over the imputation method.

10.4.2  Nearest Neighbors and Predictive Mean Matching

Nearest Neighbors and Predictive Mean Matching

In the ![]() -nearest-neighbor (knn) method, a similarity measure is used to find the knns to a record containing missing values. Next, a donor value is determined. Donor value determination can be done by randomly selecting from the

-nearest-neighbor (knn) method, a similarity measure is used to find the knns to a record containing missing values. Next, a donor value is determined. Donor value determination can be done by randomly selecting from the ![]() neighbors or, for example (in the case of categorical data), by choosing the majority value. A particularly popular similarity measure is that of Gower (1971). Given two records

neighbors or, for example (in the case of categorical data), by choosing the majority value. A particularly popular similarity measure is that of Gower (1971). Given two records ![]() and

and ![]() , each with

, each with ![]() variables that may be numeric, categorical, or missing, Gower's similarity measure

variables that may be numeric, categorical, or missing, Gower's similarity measure ![]() can be written as

can be written as

The values of ![]() and

and ![]() depend on the variable type. If the

depend on the variable type. If the ![]() th variable is numeric, then

th variable is numeric, then

Here, ![]() is the observed range of the

is the observed range of the ![]() th variable. If the

th variable. If the ![]() th variable is categorical, then

th variable is categorical, then ![]() is defined as

is defined as

For numerical and categorical variables, the importance weights ![]() may be chosen at will, but they are usually set to 1 or 0, where setting

may be chosen at will, but they are usually set to 1 or 0, where setting ![]() amounts to excluding the

amounts to excluding the ![]() th variable from the similarity calculation. For a dichotomous (

th variable from the similarity calculation. For a dichotomous (logical, in R) variable, ![]() is yet defined differently, namely,

is yet defined differently, namely,

while the weights are defined as

Here, we identify the logical outputs true with 1 and false with 0. The rationale is that a dichotomous variable only adds to the similarity when both variables are true. If any of the two variables is true they add to the weight in the denominator.

In the simputation package, knn imputation based on Gower's similarity is performed with the impute_knn function. The predictor variables in the formula argument specify which variables are used to determine Gower's similarity. Below, we use all variables by specifying the dot (.). The default value for ![]() , but by setting

, but by setting ![]() , values are copied directly from the nearest neighbor.

, values are copied directly from the nearest neighbor.

impute_knn(retl, turnover + other.rev + total.rev ∼ ., k=1) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 9067 622 1130 NA

## 2 sc3 9 1607 13 1607 NA

## 3 sc3 NA 6886 -33 6919 NALike for the random and sequential hot deck imputation procedures, the donor pool can be specified ("complete", "univariate", or "multivariate") and the VIM package can be used as computational backend.

Predictive mean matching (PMM) is a nearest-neighbor imputation method where the donor is determined by comparing predicted donor values with model-based predictions for the recipient's missing values. It can therefore be seen as a method that lies between the purely model-based and purely donor-based imputation methods. On one hand, it partially shares the benefits of both approaches, utilizing the power of predictive modeling while making sure only observed values are imputed. On the other hand, it inherits some of the intricacies of both worlds, such as issues with model selection and the possibility of small donor pools. In practice, PMM has become a popular method, and the popular mice package for multiple imputation (van Buuren and Groothuis-Oudshoorn, 2011) uses it as default imputation method.

In simputation, PMM is achieved with the impute_pmm function. Besides the data to be imputed and a predictive model-specifying formula, it takes one of the impute_ functions as an argument to preimpute the recipients with a chosen model. By default, impute_lm is used so the formula object must specify a linear model.

impute_pmm(retl, turnover + other.rev + total.rev ∼ staff) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 7271 30 1130 NA

## 2 sc3 9 1607 1831 1607 NA

## 3 sc3 NA 6886 -33 6919 NAHowever, one can switch to a robust linear model based on the ![]() -estimator as follows:

-estimator as follows:

impute_pmm(retl, turnover + other.rev + total.rev ∼ staff

, predictor=impute_rlm, method="MM") %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 9067 622 1130 NA

## 2 sc3 9 1607 13 1607 NA

## 3 sc3 NA 6886 -33 6919 NA10.5 Other Methods in the simputation Package

There are a few other imputation approaches supported by the simputation package that facilitate certain type of imputations.

The first method is a utility function allowing to replace missing values with a constant. For example,

impute_const(retl, other.rev ∼ 0) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 NA 0 1130 NA

## 2 sc3 9 1607 0 1607 NA

## 3 sc3 NA 6886 -33 6919 NASuch an imputation strategy implies strong assumptions that in some cases may nevertheless be reasonable. For example, one could assume that values that are not submitted by a respondent can be interpreted as ‘not applicable’ or in this case zero. Obviously, one should very carefully test such an assumption since they can introduce severe bias in estimates based on the data.

The second method is referred to by de Waal et al. (2011) as proxy imputation. Here, the missing value is estimated by copying a value from the same record, but from another variable. For example, we may estimate the variable total turnover in the retailers dataset by copying the amount of turnover reported to the tax office for vat.

impute_proxy(retl, total.rev ∼ vat) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 NA NA 1130 NA

## 2 sc3 9 1607 NA 1607 NA

## 3 sc3 NA 6886 -33 6919 NAThis method is useful only when both the economic definition of turnover and the legal definition used by the tax authorities coincide or are very close.

The simputation package is more flexible than De Waal et al.'s original definition and also allows for imputing functions of variables.

impute_proxy(retl

, turnover ∼ mean(turnover/total.rev,na.rm=TRUE) * total.rev) %>%

head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 1351.159 NA 1130 NA

## 2 sc3 9 1607.000 NA 1607 NA

## 3 sc3 NA 6886.000 -33 6919 NAHere, the right-hand side of the formula may evaluate to a vector of unit length or to a vector with length equal to the number of input rows. Proxy imputation also accepts grouping, so imputing the group mean can be done as follows:

impute_proxy(retl, turnover ∼ mean(turnover, na.rm=TRUE) | size)

%>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75 1420.375 NA 1130 NA

## 2 sc3 9 1607.000 NA 1607 NA

## 3 sc3 NA 6886.000 -33 6919 NA10.6 Imputation Based on the EM Algorithm

The purpose of the Expectation–Maximization algorithm (Dempster et al., 1977) is to estimate the maximum-likelihood estimate for parameters of a multivariate probability distribution in the presence of missing data. As such, it is not an imputation method. Imputations can be generated by computing the expected value for missing items conditional on the observed data or by sampling them from the conditional multivariate distribution.

Contrary to the model-based imputation methods discussed up until now, in the EM algorithm, there is no fixed distinction between predicting and predicted variables—one simply uses everything available to estimate the parameters of some model distribution. This also means that one needs to assume a distributional form (e.g., multivariate normal) for the combined variables in the dataset.

10.6.1 The EM Algorithm

Consider again a set of random variables ![]() with a joint probability distribution

with a joint probability distribution ![]() parameterized by a numeric vector

parameterized by a numeric vector ![]() . We denote a realization of

. We denote a realization of ![]() as a row vector

as a row vector ![]() and a set of

and a set of ![]() realizations as a

realizations as a ![]() matrix

matrix ![]() . A common way to estimate the parameters of

. A common way to estimate the parameters of ![]() given a set of observations is to assume a distributional form for

given a set of observations is to assume a distributional form for ![]() (say, multinormal) and then solve the following maximization problem.

(say, multinormal) and then solve the following maximization problem.

where ![]() is the space of possible values for

is the space of possible values for ![]() . Furthermore, we used Bayes' rule in the second line, and in the third line, we used that taking the logarithm does not alter the location of the maximum. If there is no prior knowledge about

. Furthermore, we used Bayes' rule in the second line, and in the third line, we used that taking the logarithm does not alter the location of the maximum. If there is no prior knowledge about ![]() , we may assume that

, we may assume that ![]() is uniformly distributed over

is uniformly distributed over ![]() , so

, so ![]() is constant. Since

is constant. Since ![]() is also independent of

is also independent of ![]() , the maximization problem can be simplified to

, the maximization problem can be simplified to

where we introduced the notation ![]() , which is referred to as the maximum-likelihood function. Correspondingly, Eq. (10.10) is referred to as the maximum-likelihood estimator. It returns the value of

, which is referred to as the maximum-likelihood function. Correspondingly, Eq. (10.10) is referred to as the maximum-likelihood estimator. It returns the value of ![]() such that

such that ![]() is the most likely observed data. To actually solve this equation, one substitutes a distribution (e.g., multinormal), equates the right-hand side to zero, and solves for

is the most likely observed data. To actually solve this equation, one substitutes a distribution (e.g., multinormal), equates the right-hand side to zero, and solves for ![]() .

.

If only part of the realizations ![]() are actually observed, this maximization problem cannot be solved. To move forward, we partition

are actually observed, this maximization problem cannot be solved. To move forward, we partition ![]() into the observed values

into the observed values ![]() and missing values

and missing values ![]() . Using Bayes' rule twice, we can write

. Using Bayes' rule twice, we can write

Again, dropping terms not depending on ![]() (and we already assumed that

(and we already assumed that ![]() is constant), we find that maximizing the likelihood function of Eq. (10.10) can equivalently be written as the maximization over the likelihood function

is constant), we find that maximizing the likelihood function of Eq. (10.10) can equivalently be written as the maximization over the likelihood function

Since ![]() is unknown, this expression cannot be maximized. The idea of Dempster et al. (1977) is to replace the maximum-likelihood function with its expected value with regard to the missing values.

is unknown, this expression cannot be maximized. The idea of Dempster et al. (1977) is to replace the maximum-likelihood function with its expected value with regard to the missing values.

Here, integration is over all unobserved variables, where ![]() indicates the domain of possible values for the variables in

indicates the domain of possible values for the variables in ![]() . In the second line, Eq. (10.11) was substituted. The integral in the second line is the negative entropy of the distribution

. In the second line, Eq. (10.11) was substituted. The integral in the second line is the negative entropy of the distribution ![]() . It is therefore usually denoted

. It is therefore usually denoted ![]() . In principle, one can numerically maximize the above expression to obtain

. In principle, one can numerically maximize the above expression to obtain ![]() . However, if

. However, if ![]() has a simple form, and we choose some value

has a simple form, and we choose some value ![]() , the integral

, the integral

can often be worked out explicitly. Next, an updated value for ![]() can be found by maximizing

can be found by maximizing ![]() as a function of

as a function of ![]() . The Expectation–Maximization algorithm is indeed an iteration over this procedure. The crucial result of Dempster et al. (1977) is that the value of

. The Expectation–Maximization algorithm is indeed an iteration over this procedure. The crucial result of Dempster et al. (1977) is that the value of ![]() must increase at each iteration and converge to a maximum under mild conditions, including cases where

must increase at each iteration and converge to a maximum under mild conditions, including cases where ![]() is a member of the regular exponential family.

is a member of the regular exponential family.

If ![]() is a member of the regular exponential family, the expressions to be evaluated by the algorithm can be simplified by expressing them in terms of (expected values of) sufficient statistics [e.g., de Waal et al. (2011, Chapter 8) or Schafer (1997, Chapter 5)]. The regular exponential family includes a wide range of commonly applied distributions including the (multivariate) normal, Bernoulli, exponential, (negative) binomial, Poisson, and gamma distributions [see, e.g., Brown (1986)]. Indeed, many implementations of the EM algorithm demand that

is a member of the regular exponential family, the expressions to be evaluated by the algorithm can be simplified by expressing them in terms of (expected values of) sufficient statistics [e.g., de Waal et al. (2011, Chapter 8) or Schafer (1997, Chapter 5)]. The regular exponential family includes a wide range of commonly applied distributions including the (multivariate) normal, Bernoulli, exponential, (negative) binomial, Poisson, and gamma distributions [see, e.g., Brown (1986)]. Indeed, many implementations of the EM algorithm demand that ![]() is from the regular exponential family. The

is from the regular exponential family. The Amelia package discussed below is restricted to models based on the multivariate normal distribution.

Advantages of the EM algorithm include that it is a simple and well-understood algorithm that is guaranteed to converge in principle. Given a distribution from the exponential family, the E and M steps can be computed very quickly. Also, since the algorithm provides an estimate for the full multivariate distribution, it has a good chance of correcting for the random (MAR) mechanism. A disadvantage is that computation may take many iterations since the convergence criterion (measured in terms of the difference in ![]() between iterations) decreases approximately linearly with the number of iterations (Schafer, 1997). Convergence can be especially slow when the fraction of missing values is high or when the model distribution is a poor description of the actual data distribution. Furthermore, the EM algorithm does not immediately provide a variance estimate for

between iterations) decreases approximately linearly with the number of iterations (Schafer, 1997). Convergence can be especially slow when the fraction of missing values is high or when the model distribution is a poor description of the actual data distribution. Furthermore, the EM algorithm does not immediately provide a variance estimate for ![]() .

.

10.6.2 EM Imputation Assuming the Multivariate Normal Distribution

Probably, one of the most implemented models for EM estimation is the model where numeric variables are distributed according to the multivariate normal distribution. This distribution is parameterized by the mean vector ![]() and covariance matrix

and covariance matrix ![]() , with the probability density function given by

, with the probability density function given by

Because of its relatively simple form, the update rules for the E and M steps can be worked out yielding expressions that eventually be evaluated using simple matrix algebra and linear system solving.

Before stating the algorithm, consider a record ![]() with observed values

with observed values ![]() and missing values

and missing values ![]() . Given an estimate for the mean vector and covariance matrix, these can be rearranged accordingly, so

. Given an estimate for the mean vector and covariance matrix, these can be rearranged accordingly, so

Here, ![]() is the estimated covariance matrix for observed variables,

is the estimated covariance matrix for observed variables, ![]() the estimated covariance matrix between observed and missing variables, and

the estimated covariance matrix between observed and missing variables, and ![]() the estimated covariance matrix for the missing variables. After a fair amount of tedious algebra and integration, one can show that the expected value(s) for the missing part of

the estimated covariance matrix for the missing variables. After a fair amount of tedious algebra and integration, one can show that the expected value(s) for the missing part of ![]() conditional on

conditional on ![]() ,

, ![]() , and

, and ![]() is given by

is given by

This equation can be used to impute a record once the parameters have been estimated (Procedure 10.6.2). The imputed dataset is obtained by applying Eq. (10.12) one last time on every record in the dataset.

impute_em(retl, ∼ .- size) %>% head(3)

## size staff turnover other.rev total.rev vat

## 1 sc0 75.00000 893.2151 1168.523 1130 7917.634

## 2 sc3 9.00000 1607.0000 4373.896 1607 1749.444

## 3 sc3 11.84416 6886.0000 -33.000 6919 1991.73010.7 Sampling Variance under Imputation

The goal of data analysis is often to infer the value of a population parameter, say ![]() . If the data is obtained by sampling from the population, an estimate

. If the data is obtained by sampling from the population, an estimate ![]() is obtained by applying some procedure to the observed dataset. In such cases, one is often interested in how much the estimator

is obtained by applying some procedure to the observed dataset. In such cases, one is often interested in how much the estimator ![]() would vary if the sampling-and-estimation procedure was to be repeated on the same population. The variation over all possible samples is usually measured by the sampling variance.

would vary if the sampling-and-estimation procedure was to be repeated on the same population. The variation over all possible samples is usually measured by the sampling variance.

Suppose that the parameter of interest is the population mean ![]() of a variable

of a variable ![]() . Given a sample, obtained using simple random sample without replacement, an unbiased estimate

. Given a sample, obtained using simple random sample without replacement, an unbiased estimate ![]() can be obtained by computing the sample mean. The sampling variance of

can be obtained by computing the sample mean. The sampling variance of ![]() , estimated from the same sample, is given by the well-known expression

, estimated from the same sample, is given by the well-known expression

where ![]() and

and ![]() are the sample and population size and

are the sample and population size and ![]() the observed sample values.

the observed sample values.

The important thing to realize here is that the expression for sampling variance depends on the sampling scheme (including size) and the expression or procedure used to obtain the estimator. This means that if part of the sampled data must be imputed, this imputation procedure should be considered part of the estimation procedure. This is easy to see from the abovementioned example. Suppose that some fraction of the observations ![]() are missing completely at random, and we impute them with the estimated mean (ignoring missing values). This clearly leads to negatively biased variance estimation since the terms in the sum of Eq. (10.13) corresponding to missing