Preliminary Study of TV Caption Presentation Method for Aphasia Sufferers and Supporting System to Summarize TV captions

Abstract: We conducted an experiment in which people with aphasia watched TV news with summarized TV captions made by speech-language-hearing therapists in accordance with our rules for summarizing captions. We found that aphasia sufferers subjectively preferred the summarized TV captions. To familiarize people with summarized captions, we have developed a supporting system that lets any volunteer summarize Japanese captions while keeping a consistent summarization style. A preliminary evaluation shows that some of the symbols applied automatically in the initial summarized sentences are helpful for manual summarization. Furthermore, we found that 40% is not a suitable length for the initial summarized sentences.

1 Introduction

Aphasia is a medical condition that affects an individual’s ability to process language, including both producing and recognizing language, as a result of a stroke or head injury. Although they have no visual impairment affecting reading and no hearing impairment, aphasia sufferers have several difficulties in understanding written text and speech.

To support their comprehension, the people who support them, such as speech-language-hearing therapists (STs) and family members, need to know communication techniques suited to everyday life. They can combine short sentences and words with symbols and pictures to enhance understanding, because aphasia sufferers with reading difficulties find it easier to understand words written in Kanji, which convey meaning, than in Kana, a syllabic script, and short, simple sentences rather than long, complex ones. Kane et al. (2013) explored ways to help adults with aphasia vote and learn about political issues by drawing on such knowledge. Kobayashi (2010) reports on volunteer activities, namely summary writing and point writing of speeches at conferences attended mainly by aphasia sufferers. These activities also rely on this knowledge of communication. In summary writing, volunteers type summarized sentences onto a projection screen during the conference; in point writing, they then give overviews as key points. They prepare summarized sentences before the conference and write new information on the projection and tablet screens during the conference. One problem with summary writing is the shortage of STs and volunteers relative to the number of aphasia sufferers. Another is that summary writing is difficult for anyone other than STs and volunteers because there are no specific guidelines for it.

Mai Yanagimura, Shingo Kuroiwa, Yasuo Horiuchi: Chiba University, Graduate School of Advanced Integration Science, 1-33, Yayoicho, Inage-ku, Chiba-shi, Chiba, 263-8522 Japan, {mai}@chiba-u.jp

Sachiyo Muranishi, Daisuke Furukawa: Kimitsu Chuo Hospital, 10-10 Sakurai, Kisarazu-shi, Chiba, 292-8535 Japan

A summarization technique needs to be applicable in daily life, specifically to TV captions. Therefore, we aim to develop an automatic caption summarization system. Napoles et al. (2011), Cohn et al. (2009), Knight et al. (2002), and Boudin et al. (2013) have researched automatic sentence compression methods in English and other languages. However, practical automatic caption summarization is difficult to achieve in Japanese. Ikeda et al. (2005) reported a readability of 36% and a meaning identification rate of 45% for the sentences they used. Although Norimoto et al. (2012) showed that 82% of the sentences they used could be summarized correctly, the remaining 18% had their meanings changed and no longer worked as sentences. These studies targeted deaf people and foreign exchange students. Carrol et al. (1998) developed an English sentence simplification system for people with aphasia that was divided into an analyzer and a simplifier. However, we could not find a sentence compression system for aphasia sufferers in Japanese.

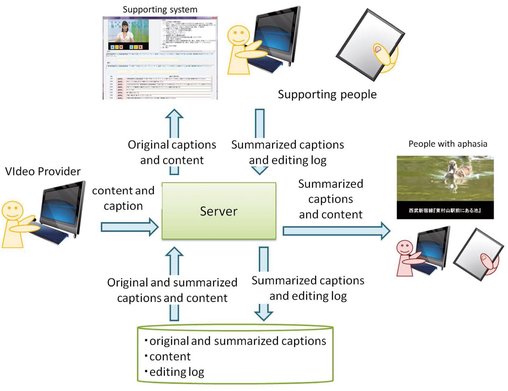

Therefore, before developing an automatic summarization system, our purpose here is to develop a supporting system that helps people summarize TV captions manually. Fig. 1 shows the concept of our system for providing videos with summarized captions. We build a server to manage original and summarized captions, videos, and editing logs. We obtain videos and original captions from video providers and provide them to supporting people, who use our supporting system to summarize the captions. They edit initial summarized text that our system generates automatically. We can then provide videos with summarized captions to people with aphasia. Although Pražák et al. (2012), Imai et al. (2010), and Hong et al. (2010) proposed captioning systems using speech recognition for deaf people, we found little research on systems for people with aphasia such as the one we are developing.

We also use sentence compression to generate the initial summarized text. Ex. 1 presents an original caption and its summarized version.

[Ex. 1]

Original caption:

(A replica car was unveiled today at a shrine in Kunitachi City that Prince Arisugawa-no-miya once visited.)

Summarized TV caption:


(Replica unveiled ≪Shrine in Kunitachi≫)

In such sentences, we need to paraphrase Kana into Kanji aggressively and use symbols to replace deleted words and phrases. Previous research did not address these features; therefore, we need additional methods to generate initial summarized text for people with aphasia.

Fig. 1: Concept of our project.

We then evaluate the effect of summarized TV captions, because we could not find a comprehensive study of their effects for aphasia sufferers in Japanese. Sec. 2 presents our guidelines for summarized captions. In Sec. 3, we experimentally compare summarized TV captions with original captions for aphasia sufferers. In Sec. 4, we detail a method to generate initial summarized captions for people with aphasia and evaluate the supporting system. Finally, Sec. 5 makes some concluding remarks.

2 Guidelines of Summarized TV Captions for Aphasia Sufferers

Drawing on STs’ knowledge of summary writing and communication, we have proposed the following guidelines for manually producing accessible summarized captions for adults with aphasia. The guidelines consist of 27 rules.

2.1 Overview of the Guidelines

Summarized TV captions have the following features.

- Shorten summarized TV captions to 50–70% of the length of the original captions

- Summarize as clearly and concisely as possible

- Paraphrase Kana into Kanji

- Use symbols to replace words and phrases
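The first guideline can be checked mechanically once a unit of length is fixed. The guidelines do not state the unit, so the sketch below assumes character counts with whitespace ignored (Japanese captions are usually written without spaces); the function names are hypothetical.

```python
def compression_ratio(original: str, summary: str) -> float:
    """Return len(summary) / len(original), counting characters.

    Assumption: caption length is measured in characters, ignoring whitespace.
    """
    orig_len = len("".join(original.split()))
    if orig_len == 0:
        raise ValueError("original caption is empty")
    return len("".join(summary.split())) / orig_len


def within_target(original: str, summary: str,
                  low: float = 0.5, high: float = 0.7) -> bool:
    """Check the 50-70% length guideline (both bounds inclusive)."""
    return low <= compression_ratio(original, summary) <= high


if __name__ == "__main__":
    original = "a" * 20  # placeholder 20-character caption
    summary = "a" * 12   # placeholder 12-character summary
    print(compression_ratio(original, summary))  # 0.6
    print(within_target(original, summary))      # True
```

A character ratio is only a proxy: the other three guidelines (clarity, Kanji, symbols) still require human judgment.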

We define specific rules in three categories: paraphrasing, deletion, and the use of symbols. Some of these rules are presented below.

2.2 Rules of Paraphrasing

These rules aim to transform writing in Kana into Kanji.

- (1) Into smaller chunks including more Kanji

[Ex.]

Original caption:

(They collided violently.)

Summarized caption:

(Crash.)

- (2) Into nouns from general verbs

[Ex.]

Original caption:

(They unexpectedly discovered a car.)

Summarized caption:

(… found.)

- (3) Into general expressions from honorific ones

[Ex.]

Original caption:

(Mr. Sato has arrived.)

Summarized caption:

(Sato came.)

- (4) Into a sentence ending with a noun or noun phrase

[Ex.]

Original caption:

(They are growing up healthily with the support of local people.)

Summarized caption:

(Growing up ← local people’s support.)

2.3 Rules of Deletion

These rules aim to reduce the amount of text written in Kana.

- (1) Delete fillers

[Ex.]

Original caption:

(Uh, we wish that, well, all 9 birds fly away safely.)

Summarized caption:

(⌜Nine birds fly away safely!⌟)

- (2) Delete postpositional particles

[Ex.]

Original caption:

(The leading role of the dog is to be played by a second-year high school student, Ms. Kumada Rina.)

Summarized caption:

(Lead role of dog = second-year HS student …)

- (3) Delete terms you judge can be inferred

[Ex.]

Original caption:

(This food event was held in Saitama Prefecture for the first time thanks to your enthusiastic encouragement.)

Summarized caption:

(≪Saitama Prefecture≫ hosts first food event.)

- (4) Delete terms you decide are not relevant to key content

[Ex.]

Original caption:

(Local people placed a notebook for writing messages to the baby spot-billed ducks, and station users wrote “Good luck” and “Grow up healthily”.)

Summarized caption:

(… placed.)
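Deletion rules (1) and (2) can be approximated once a caption has been tokenized. The sketch below assumes the tokens are already available as (surface, part-of-speech) pairs using IPADIC-style coarse tags such as those MeCab outputs (フィラー for fillers, 助詞 for particles). The hand-tokenized input and the function name are hypothetical, and in practice deciding which particles can safely be dropped is left to the human editor.

```python
from typing import List, Tuple

Token = Tuple[str, str]  # (surface form, coarse part-of-speech tag)

# Assumed IPADIC-style coarse tags; other dictionaries use different labels.
FILLER_TAG = "フィラー"
PARTICLE_TAG = "助詞"


def delete_fillers_and_particles(tokens: List[Token],
                                 drop_particles: bool = True) -> str:
    """Apply deletion rule (1) and, optionally, rule (2)."""
    kept: List[str] = []
    for surface, pos in tokens:
        if pos == FILLER_TAG:
            continue  # rule (1): fillers carry no content
        if drop_particles and pos == PARTICLE_TAG:
            continue  # rule (2): postpositional particles are written in Kana
        kept.append(surface)
    return "".join(kept)


if __name__ == "__main__":
    # Hand-tokenized toy input (not real MeCab output):
    # "えー 犬 の 主役 は" = "uh, the dog's lead role is"
    tokens = [("えー", FILLER_TAG), ("犬", "名詞"), ("の", PARTICLE_TAG),
              ("主役", "名詞"), ("は", PARTICLE_TAG)]
    print(delete_fillers_and_particles(tokens))  # 犬主役
```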

2.4 Rules of Symbols

We use symbols to paraphrase expressions and to mark emphasis effectively.

- (1) ≪ ≫ : Enclose location and time expressions

[Ex.]

Original caption:

(Baby spot-billed ducks are born every year in a pond in front of Higashi-murayama Station on the Seibu-Shinjuku Line.)

Summarized caption:

(≪Annually≫ baby ducks are born ≪Higashi-murayama station on Seibu-Shinjuku Line≫.)

- (2) : Enclose key terms to emphasize them

[Ex.]

Original caption:

(Nine newborn baby spot-billed ducks are growing up healthily with the support of local people.)

Summarized caption:

(… growing up ← local people’s support.)

- (3) ( ) : Enclose less important information

[Ex.]

Original caption:

(Nine newborn baby spot-billed ducks are growing up healthily with the support of local people.)

Summarized caption:

(… (…) growing up ← local people’s support.)

- (4) → : Paraphrase conjunctions and conjunctive particles that express addition

[Ex.]

Original caption:

(The nine baby birds have grown to palm size and are swimming in a pond in front of the station.)

Summarized caption:

(… grown up to palm-size → swimming ≪pond in front of station≫.)

- (5) ⇒ : Paraphrase the → nearest the end of the sentence into ⇒ when → is already used earlier in the sentence

[Ex.]

Original caption:

(Please take care, because low pressure will move north, leading to snow in the Kanto region.)

Summarized caption:

(Low pressure going up north → ≪Kanto region≫ snow ⇒ Take care.)

3 Evaluation of Summarized TV Captions

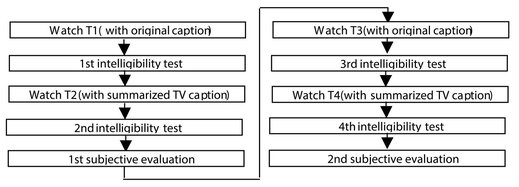

We evaluated summarized TV captions with people with aphasia. Fig. 2 shows the evaluation procedure. We manually summarized the TV captions of four TV news segments in accordance with the guidelines and presented two types of content (with the original or the summarized TV captions) to 13 aphasia sufferers. The summarized captions were assessed through intelligibility tests, subjective evaluations, and interviews. T1, T2, T3, and T4 denote the four TV news segments, which contained 36 sentences in total. Each segment was about 1 minute and 30 seconds long. Participants took an intelligibility test after watching each news segment with original or summarized captions; each participant took the intelligibility test four times, as shown in Fig. 2, answering questions on an answer sheet about the news they had just watched. In the subjective evaluations, after participants had watched two news segments, one with original captions and one with summarized captions, we asked “Which do you think is better and why: the TV caption or the summarized TV caption?” Each participant took the subjective evaluation twice, as shown in Fig. 2.

Fig. 2: Example procedure for one person.

We arranged the procedure to prevent order effects. The evaluation was conducted in a hospital where the participants were undergoing rehabilitation. The experiment lasted 30 minutes per person. The content covered topics such as birds and a car accident.

3.1 Experimental Results and Discussion

In the first subjective evaluation, 8 of the 13 participants replied “The summarized captions are better”, and in the second, 9 of the 13 did. We received positive comments from the aphasia sufferers, such as “They are precise” and “They’re easy to read”. These results suggest that summarized captions are suitable for giving an overview of the news rather than detailed information. Furthermore, although each segment lasted only about 1 minute and 30 seconds, some participants remarked in the interviews, “I get tired watching content with the original captions”. This suggests that original captions are not simple enough for people with aphasia to watch content for a long time. Therefore, summarized TV captions can be one effective option for closed captions for aphasia sufferers.
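For reference, the preference counts above correspond to the following shares. This is plain arithmetic on the reported counts (13 participants, two subjective evaluations) and is not a significance test; the helper name is hypothetical.

```python
def preference_share(count: int, total: int) -> float:
    """Share of participants who gave a particular answer."""
    if total <= 0:
        raise ValueError("total must be positive")
    return count / total


# Counts reported in Sec. 3.1: participants preferring summarized captions.
for label, count in (("first", 8), ("second", 9)):
    print(f"{label} evaluation: {count}/13 = {preference_share(count, 13):.1%}")
# first evaluation: 8/13 = 61.5%
# second evaluation: 9/13 = 69.2%
```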

Table 1 presents the average proportion of correct answers in the intelligibility test, grouped by whether participants said the summarized or the original TV captions were better. The scores for summarized TV captions were lower than expected, although a t-test showed no significant difference. We consider why the scores were lower with summarized captions, even among aphasia sufferers who preferred them. Some participants were confused by the symbols used in the summarized captions because we had not explained their meanings before the experiment; one remarked in the interview, “I don’t know the meanings of the symbols”. We need to familiarize aphasia sufferers with the meanings of the symbols.

Tab. 1: Average proportion of correct answers in the intelligibility test.

| Caption type | People who prefer summarized captions | People who prefer original captions |
|---|---|---|
| Original caption | 0.68 | 0.69 |
| Summarized caption | 0.65 | 0.54 |
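Tab. 1 can also be read as a per-group difference (summarized minus original). The short calculation below uses only the four values in the table; since the t-test found no significant difference, the gaps should not be over-interpreted. The dictionary layout and function name are illustrative.

```python
# Values from Tab. 1 (average proportion of correct answers).
SCORES = {
    "prefer summarized": {"original": 0.68, "summarized": 0.65},
    "prefer original": {"original": 0.69, "summarized": 0.54},
}


def caption_effect(group: str) -> float:
    """Summarized minus original score for one preference group."""
    s = SCORES[group]
    return round(s["summarized"] - s["original"], 2)


for group in SCORES:
    print(f"{group}: {caption_effect(group):+.2f}")
# prefer summarized: -0.03
# prefer original: -0.15
```

Both groups scored lower with summarized captions, and the drop is larger for those who preferred the original captions.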

Another reason is the inconsistent use of the brackets ![](). We proposed the following rule for ![](): enclose key terms with ![]() to emphasize them. Although ![]() and ![]() are the same key term (joyosha: car), ![]() was used for the former but not for the latter. This shows that even a single person may not apply the rules consistently, which is why our system supports people in summarizing captions. Ex. 1 presents a sentence that is difficult to understand because it uses too many arrows (→), which could hinder reading comprehension. We should consider in more detail how and how often to use → in order to generate better sentences like Ex. 2.

[Ex. 1]

(Big Rig collided with tractor-trailer → burning → man killed.)

[Ex. 2]

(Big Rig collided with tractor-trailer → burning man killed.)

4 Supporting System to Summarize Captions

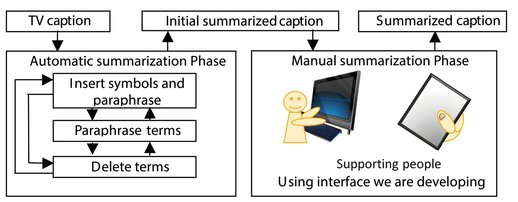

Sec. 3 showed that summarized captions are an option for closed captions. We therefore developed a system to promote summarized captions and to overcome the shortage of people who can summarize them. Fig. 3 shows the two-phase procedure of the system. In the automatic summarization phase, the system summarizes TV captions to generate initial summarized captions. Then, in the manual summarization phase, volunteers edit the initial summarized captions on a PC or tablet using an interface we are developing.

Fig. 3: Procedure of supporting system to summarize captions.
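The two phases can be pictured as one record per caption that flows from the automatic phase to the manual phase, with every manual edit appended to a log (the server keeps editing logs). This is a hedged sketch: all class and function names are hypothetical, and `summarize` is a placeholder parameter, not the system's actual rule engine.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EditLogEntry:
    editor: str
    before: str
    after: str


@dataclass
class CaptionRecord:
    """One caption as it moves through the two phases (hypothetical model)."""
    original: str
    initial: str = ""
    final: str = ""
    log: List[EditLogEntry] = field(default_factory=list)


def automatic_phase(record: CaptionRecord,
                    summarize: Callable[[str], str]) -> CaptionRecord:
    """Phase 1: generate the initial summarized caption."""
    record.initial = summarize(record.original)
    record.final = record.initial  # the editor starts from the initial text
    return record


def manual_phase(record: CaptionRecord, editor: str,
                 edited_text: str) -> CaptionRecord:
    """Phase 2: a volunteer edits the caption; the change is logged."""
    record.log.append(EditLogEntry(editor, record.final, edited_text))
    record.final = edited_text
    return record


if __name__ == "__main__":
    rec = CaptionRecord(original="original caption text")
    automatic_phase(rec, summarize=lambda s: s.upper())  # stand-in summarizer
    manual_phase(rec, editor="volunteer-1", edited_text="EDITED CAPTION")
    print(rec.final, len(rec.log))  # EDITED CAPTION 1
```

Keeping the initial text and the log separate from the final caption is one way to study later how volunteers change the automatic output.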

4.1 Rules for the Supporting System

This section details the methods used to generate initial summarized captions. The 27 rules in Sec. 2 are written in XML, and the system summarizes captions in accordance with them. We used headline-style summarization (Ikeda et al., 2005) and a deletion method (Norimoto et al., 2012) to apply the paraphrasing and deletion rules, such as the rule for ending a sentence with a noun or noun phrase. Moreover, we devised new methods to apply the symbol rules for ≪≫, ![](), →, and ⇒, because these symbols account for approximately 80% of all symbols in the summarized captions in Sec. 2. We used CaboCha (Kudo et al., 2003) as a Japanese dependency structure analyzer and MeCab (Kudo et al., 2004) as a part-of-speech and morphological analyzer. Furthermore, we manually built a database of 203 tagged conjunctions and conjunctive particles. Some of the methods for inserting or paraphrasing each symbol are described below.
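The rules are stored in XML, but the schema is not given here. Purely as an illustration, the sketch below invents a minimal schema (the element and attribute names are assumptions) and loads it with Python's standard `xml.etree.ElementTree`, grouping rule identifiers by category.

```python
import xml.etree.ElementTree as ET

# Hypothetical rule file: element and attribute names are invented.
RULES_XML = """
<rules>
  <rule id="symbol-location" category="symbol" symbol="≪≫">
    <description>Enclose location and time expressions</description>
  </rule>
  <rule id="symbol-addition" category="symbol" symbol="→">
    <description>Paraphrase conjunctions expressing addition</description>
  </rule>
  <rule id="delete-filler" category="deletion">
    <description>Delete fillers</description>
  </rule>
</rules>
"""


def load_rules(xml_text: str) -> dict:
    """Return {category: [rule id, ...]} in document order."""
    root = ET.fromstring(xml_text.strip())
    by_category: dict = {}
    for rule in root.findall("rule"):
        by_category.setdefault(rule.get("category"), []).append(rule.get("id"))
    return by_category


if __name__ == "__main__":
    print(load_rules(RULES_XML))
    # {'symbol': ['symbol-location', 'symbol-addition'], 'deletion': ['delete-filler']}
```

Storing rules as data rather than code would let new rules be added without changing the summarizer itself, which is one plausible motivation for an XML format.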

- I. ≪≫ : Enclose location and time expressions

If a chunk includes phrases tagged LOCATION or TIME by CaboCha, the system encloses the chunk with ≪≫.

- II. : Enclose key terms to emphasize them

If the TF-IDF score of a term, as defined by Norimoto et al. (2012), exceeds a threshold, the system encloses the term. Furthermore, all words except nouns inside the enclosed chunk are deleted.

- III. → : Paraphrase conjunctions and conjunctive particles that express addition

If a word matches a conjunction or conjunctive particle expressing addition in the database, the system replaces the word with an arrow →.

- IV. → : Paraphrase resultative conjunctions and conjunctive particles that express causality

If a word matches a resultative conjunction or a conjunctive particle expressing causality in the database, the system replaces the word with an arrow →.

- V. ⇒ : Paraphrase the → nearest the end of the sentence into ⇒ when → is already used in the sentence

If two or more → have been applied in a sentence, the system replaces the last → with ⇒.
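Rules I, III, IV, and V can be sketched on a pre-tokenized caption. This sketch assumes that tokenization and named-entity tagging have already been done and that CaboCha's output has been reduced to plain "LOCATION"/"TIME" labels. The four-entry dictionary is a hypothetical stand-in for the 203-entry database. Rule II is omitted because its bracket symbol and the exact TF-IDF definition are not reproduced here.

```python
from typing import Dict, List, Tuple

# A token is (surface form, named-entity label); the label is "LOCATION" or
# "TIME" when tagged upstream, otherwise "" (assumed simplification).
Token = Tuple[str, str]

# Hypothetical excerpt standing in for the 203-entry conjunction database:
# surface form -> relation the conjunction expresses.
CONJUNCTION_DB: Dict[str, str] = {
    "そして": "addition",    # "and then"
    "さらに": "addition",    # "furthermore"
    "ので": "causality",     # "because"
    "だから": "causality",   # "therefore"
}

ARROW, DOUBLE_ARROW = "→", "⇒"


def apply_symbol_rules(tokens: List[Token]) -> str:
    """Apply rules I, III, IV and V to a tokenized caption."""
    out: List[str] = []
    for surface, label in tokens:
        if label in ("LOCATION", "TIME"):
            out.append(f"≪{surface}≫")  # rule I: location/time expression
        elif CONJUNCTION_DB.get(surface) in ("addition", "causality"):
            out.append(ARROW)  # rules III and IV: conjunction -> arrow
        else:
            out.append(surface)
    # Rule V (as interpreted here): with two or more arrows,
    # the last one becomes a double arrow.
    arrow_positions = [i for i, s in enumerate(out) if s == ARROW]
    if len(arrow_positions) >= 2:
        out[arrow_positions[-1]] = DOUBLE_ARROW
    return "".join(out)


if __name__ == "__main__":
    # Toy version of the low-pressure example in Sec. 2.4, rule (5).
    tokens = [("低気圧北上", ""), ("そして", ""), ("関東", "LOCATION"),
              ("雪", ""), ("ので", ""), ("注意", "")]
    print(apply_symbol_rules(tokens))  # 低気圧北上→≪関東≫雪⇒注意
```

A plain string lookup ignores context; a real implementation would presumably consult the part-of-speech tags as well, since some particles are ambiguous.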

4.2 Preliminary Experiment to Evaluate Supporting System

We performed an experimental evaluation of the supporting system to determine the most suitable summarization percentage for the initial captions to be edited. We prepared initial summarized captions at lengths from 100% down to 40%, which appeared in the edit box and from which the user produced a summarized caption. In the 100% condition, all symbol and paraphrasing rules had been applied to the sentences before the experiment. One student summarized the same original captions used in Sec. 3 with the system, and we assessed the system through an interview with the user about the usefulness of each initial summarized sentence.
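How the 80%, 60%, and 40% versions were derived from the fully processed 100% version is not specified above. One plausible approach, assumed here purely for illustration, is to greedily delete the least important chunks until a character budget is met while preserving word order. The importance scores and the function name are hypothetical.

```python
from typing import List, Tuple

# (chunk text, importance score); scores are assumed to come from an
# upstream scorer, e.g. a TF-IDF-style weight.
ScoredChunk = Tuple[str, float]


def summarize_to_ratio(chunks: List[ScoredChunk], ratio: float) -> str:
    """Delete lowest-scoring chunks until length <= ratio * original length.

    Word order is preserved; among equal scores, later chunks go first.
    """
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    total = sum(len(text) for text, _ in chunks)
    budget = ratio * total
    keep = [True] * len(chunks)
    length = total
    # Visit chunks from least to most important.
    order = sorted(range(len(chunks)), key=lambda i: (chunks[i][1], -i))
    for i in order:
        if length <= budget:
            break
        keep[i] = False
        length -= len(chunks[i][0])
    return "".join(text for (text, _), k in zip(chunks, keep) if k)


if __name__ == "__main__":
    chunks = [("AAAA", 0.9), ("BB", 0.1), ("CCCC", 0.5)]  # toy data, 10 chars
    for r in (1.0, 0.8, 0.6, 0.4):
        print(r, summarize_to_ratio(chunks, r))
```

Because whole chunks are deleted, the achieved length can undershoot the target (here 0.6 and 0.4 both yield the same four-character output).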

Sentences in the 100%, 80%, and 60% conditions were chosen as useful. The student gave several reasons for this choice. One is that ≪≫ had already been applied correctly in the initial summarized sentences; our guidelines use ≪≫ to enclose location and time expressions. Ex. 1 shows a useful initial summarized sentence and its original sentence: ≪≫ is applied correctly as ![]() in the initial summarized caption, because ![]() (meaning: at Joshin-etsu Expressway in Gunma) in the original caption is a location expression.

Another reason is that → and ⇒ indicated where to begin summarizing. We use → to paraphrase conjunctions and conjunctive particles that express addition, and ⇒ to paraphrase the → nearest the end of a sentence when → is already used in it. In [Ex. 1], → and ⇒ are used in ![]() ⇒ (meaning: a traffic accident in which the truck collided with the trailer and went up in flames and a man died) in the initial caption. The student could instantly find where to begin summarizing around → and ⇒ without much hesitation.

[Ex. 1]

Original caption:

(We found a car between a heavy-duty truck and a trailer after a traffic accident in which the truck collided with the trailer and went up in flames and one person was killed, on the Joshin-etsu Expressway in Gunma Prefecture.)

Initial summarized caption:

(We found car between heavy duty truck and trailer after traffic accident which ![]() collided with ![]() → burning → a man dies ≪Joshin-etsu Expressway in Gunma≫.)

Most of the sentences judged not useful were in the 40% condition. The student remarked that it was annoying to re-insert words that had already been deleted from the initial summarized sentences when too many had been deleted. Ex. 2 presents an initial summarized caption in the 40% condition that was judged not useful, together with its original sentence. ![]() (the trailer driver suffered a minor neck injury) in the original caption was deleted from the initial summarized caption; too much had been deleted.

[Ex. 2]

Original caption:

(One person, believed to be the heavy truck driver, was found dead in the driver’s seat, and the trailer driver suffered a minor neck injury.)

Initial summarized caption: ![]() (driver in the seat and ![]() driver.)

These results suggest that the 40% condition need not be presented in the extended experiment we plan to perform.

5 Conclusion

In this paper, we discussed an evaluation of summarized TV captions and a supporting system to summarize them. First, we experimentally evaluated TV captions summarized for people with aphasia. Together with two speech-language-hearing therapists (STs), we proposed summarization guidelines to assist in summarizing TV captions in Japanese. Aphasia sufferers then compared captions summarized manually in accordance with the guidelines with the original captions. A majority of the participants preferred the summarized captions when watching the TV content. This suggests that summarized TV captions should be provided as one effective option for closed captions for aphasia sufferers.

To familiarize people with summarized captions, we have developed a supporting system that lets any volunteer summarize Japanese TV captions while keeping a consistent summarization style. Our system applies the paraphrasing and deletion rules using existing sentence compression methods from previous research, and we proposed new methods for applying symbols. The preliminary evaluation showed that initial summarized sentences helped the user summarize captions when ≪≫, →, and ⇒ had already been applied. We also found that sentences shortened to 40% need not be shown in the extended experiment we will perform soon. We will soon make our system available on the web and increase the number of volunteers who summarize captions with it.

Bibliography

Ikeda, S. (2005) Transforming a Sentence End into News Headline Style. In: Yamamoto, K. (ed.), Proceedings of the Third International Workshop on Paraphrasing (IWP2005), Jeju Island, South Korea, October 14, 2005, pp. 41–48.

Kobayashi, H. (2010) Participation restrictions in aphasia. Japanese Journal of Speech, Language, and Hearing Research, 7(1):73–80. (In Japanese)

Norimoto, T. (2012) Sentence Contraction of Subtitles by Analyzing Dependency Structure and Case Structure for VOD Learning System. In: Yigang, L., Koyama, N., Kitagawa, F. (eds.), IEICE Technical Report ET, 110(453):305–310. (In Japanese)

Kane, S.K. (2013) Design Guidelines for Creating Voting Technology for Adults with Aphasia. In: Galbraith, C. (ed.), ITIF Accessible Voting Technology Initiative #006.

Carrol, J. (1998) Practical Simplification of English Newspaper Text to Assist Aphasic Readers. In: Minnen, G., Canning, Y., Devlin, S., Tait, J. (eds.), Proceedings of the Workshop on Integrating AI and Assistive Technology, Madison, Wisconsin, July 26–27, 31, pp. 7–10.

Hong, R. (2010) Dynamic captioning: video accessibility enhancement for hearing impairment. In: Wang, M., Xu, M., Yan, S., Chua, T. (eds.), Proceedings of the International Conference on Multimedia, Firenze, Italy, October 25–29, 2010, pp. 421–430.

Pražák, A. (2012) Captioning of Live TV Programs through Speech Recognition and Re-speaking. In: Loose, Z., Trmal, J., Psutka, J.V., Psutka, J. (eds.), Text, Speech and Dialogue, Lecture Notes in Computer Science, 7499:513–519.

Imai, T. (2010) Speech recognition with a seamlessly updated language model for real-time closed-captioning. In: Homma, S., Kobayashi, A., Oku, T., Sato, S. (eds.), Proceedings of INTERSPEECH, Florence, Italy, 15 August 2011, pp. 262–265.

Napoles, C. (2011) Paraphrastic sentence compression with a character-based metric: tightening without deletion. In: Burch, C. C., Ganitkevitch, J., Durme, B. V. (eds.), Proceedings of the Workshop on Monolingual Text-To-Text Generation, Portland, OR, 24 June 2011, pp. 84–90.

Cohn, T. A. (2009) Sentence Compression as Tree Transduction. In: Lapata, M. (ed.), Journal of Artificial Intelligence Research, 34:637–674.

Knight, K. (2002) Summarization beyond sentence extraction: A probabilistic approach to sentence compression. In: Marcu, D. (ed.), Artificial Intelligence, 139(1):91–107.

Boudin, F. (2013) Keyphrase Extraction for N-best Reranking in Multi-Sentence Compression. In: Morin, E. (ed.), Proceedings of the North American Chapter of the Association for Computational Linguistics, Atlanta, USA, June 9–15, 2013, pp. 298–305.