CHAPTER 2

Create and manage Compute Resources

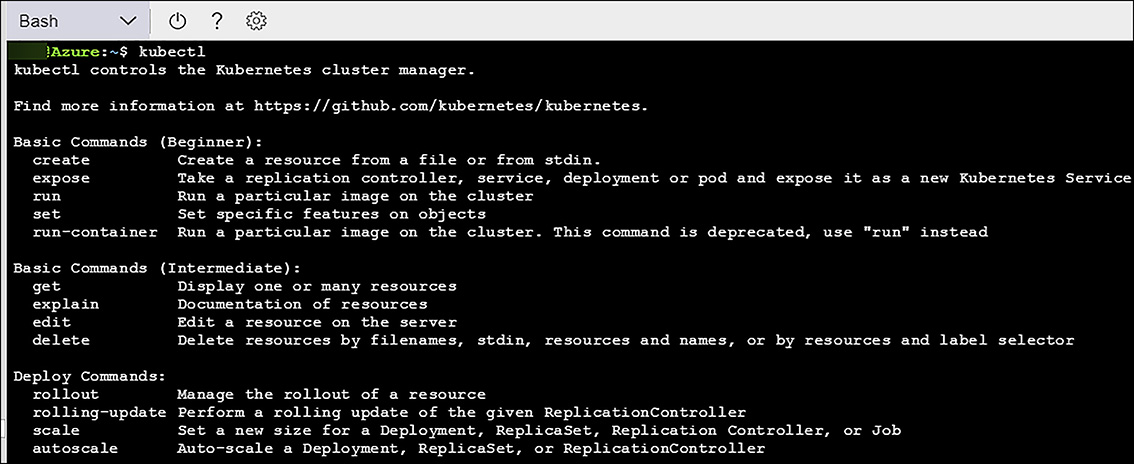

Microsoft Azure offers many features and services that can be used to create inventive solutions for almost any IT problem. Two of the most common services for designing these solutions are Microsoft Azure Virtual Machines (VMs) and VM Scale Sets. Virtual machines are one of the key compute options for deploying workloads in Microsoft Azure. They can provide the on-ramp for migrating workloads from on-premises environments (or other cloud providers) to Azure, because they are usually the most compatible with existing solutions. Their flexibility makes them a good fit for many workloads. For example, you have a choice of server operating systems, with various supported versions of Windows and Linux distributions. Azure virtual machines also give you full control over the operating system, along with advanced configuration options for networking and storage. VM Scale Sets provide similar capabilities to VMs, and add the ability to scale out certain types of workloads to handle large processing problems, or simply to optimize cost by running instances only when needed. The third option covered in this chapter is Azure Container Service (ACS). Azure Container Service optimizes the configuration of popular open source tools and technologies, specifically for Azure. ACS provides a solution that offers portability for both container-based workloads and application configuration. You select the size, number of hosts, and choice of orchestrator tools, and ACS handles everything else.

Skills covered in this chapter

Skill 2.1: Deploy workloads on Azure Resource Manager (ARM) virtual machines (VMs)

Skill 2.7: Manage containers with Azure Container Service (ACS)

Skill 2.1: Deploy workloads on Azure Resource Manager (ARM) virtual machines (VMs)

Microsoft Azure Virtual Machines is a flexible and powerful option for deploying workloads into the cloud. The support of both Windows and Linux-based operating systems allows for the deployment of a wide variety of workloads that traditionally run in an on-premises environment.

This skill covers how to:

Identify and run workloads in VMs

Create virtual machines

Connect to virtual machines

Identify and run workloads in VMs

Due to the flexible nature of virtual machines, they are the most common deployment target for workloads that are not explicitly designed with the cloud in mind. Azure virtual machines are based on Windows Server Hyper-V. However, not all Hyper-V features are directly supported, because much of the underlying networking and storage infrastructure differs considerably from a traditional Hyper-V deployment.

With that in mind, it should not come as a total surprise that not all workloads from Microsoft (including roles and features of Windows Server itself) are supported when running within Azure Virtual Machines. Microsoft Azure supports running all 64-bit versions of Windows Server, starting with Windows Server 2003. (In the event the operating system itself is no longer supported, as with Windows Server 2003, this support is limited to issues that don’t require operating system-level troubleshooting or patches.) Beyond Windows Server, much of the Microsoft server software portfolio is directly supported on Azure VMs, such as Exchange, CRM, System Center, and so on.

The best way to keep track of what is, and is not supported, is through the Microsoft support article at http://support.microsoft.com/kb/2721672. This article details which Microsoft workloads are supported within Azure. Also, the article is kept up-to-date as new workloads are brought online, or the support policy changes when new capabilities within Azure enhance what is supported.

There are several distributions of Linux that are endorsed and officially supported to run in Microsoft Azure Virtual Machines. At the time of this writing, the following distributions have been tested with the Microsoft Azure Linux Agent and have pre-defined images in the Azure Marketplace with the agent pre-configured. Table 2-1 shows the current endorsed Linux distributions.

TABLE 2-1 Endorsed Linux distributions for Azure VMs

| Distribution | Version |
| --- | --- |
| CentOS | CentOS 6.3+, 7.0+ |
| CoreOS | 494.4.0+ |
| Debian | Debian 7.9+, 8.2+ |
| Oracle Linux | 6.4+, 7.0+ |
| Red Hat Enterprise Linux | RHEL 6.7+, 7.1+ |
| SUSE Linux Enterprise | SLES/SLES for SAP 11 SP4, 12 SP1+ |
| openSUSE | openSUSE Leap 42.1+ |
| Ubuntu | Ubuntu 12.04, 14.04, 16.04, 16.10 |

This list is updated as new versions and distributions are on-boarded and can be accessed online at https://docs.microsoft.com/en-us/azure/virtual-machines/linux/endorsed-distros.

You can also bring your own custom version of Linux if you deploy the Microsoft Azure Linux agent to it. You should be aware that the Microsoft Azure support team offers various levels of support for open source technologies including custom distributions of Linux. For more details see https://support.microsoft.com/en-us/help/2941892/support-for-linux-and-open-source-technology-in-azure.

Running Linux on Microsoft Azure Virtual Machines requires an additional piece of software known as the Microsoft Azure Linux Agent (waagent). This software agent provides much of the base functionality for provisioning and communicating with the Azure Fabric Controller including the following:

Image provisioning

Creation of a user account

Configuring SSH authentication types

Deployment of SSH public keys and key pairs

Setting the host name

Publishing the host name to the platform DNS

Reporting SSH host key fingerprint to the platform

Resource disk management

Formatting and mounting the resource disk

Configuring swap space

Networking

Manages routes to improve compatibility with platform DHCP servers

Ensures the stability of the network interface name

Kernel

Configures virtual NUMA (disable for kernel <2.6.37)

Consumes Hyper-V entropy for /dev/random

Configures SCSI timeouts for the root device (which could be remote)

Diagnostics

Console redirection to the serial port

SCVMM deployments

Detects and bootstraps the VMM agent for Linux when running in a System Center Virtual Machine Manager 2012 R2 environment

Manages virtual machine extensions to inject components authored by Microsoft and partners into Linux VMs (IaaS) to enable software and configuration automation

VM Extension reference implementation at https://github.com/Azure/azure-linux-extensions

The Azure Fabric Controller communicates to this agent in two ways:

A boot-time attached DVD for IaaS deployments. This DVD includes an OVF-compliant configuration file that includes all provisioning information other than the actual SSH keypairs.

A TCP endpoint exposing a REST API used to obtain deployment and topology configuration.

MORE INFO MICROSOFT LINUX AGENT

For more information on how the Microsoft Azure Linux agent works and how to enable it on a Linux distribution see https://docs.microsoft.com/en-us/azure/virtual-machines/linux/agent-user-guide.

Create virtual machines

There are multiple ways to create virtual machines depending on your intended use. The easiest way to create an individual virtual machine is to use the Azure portal. If you need automated provisioning (or you just enjoy the command line), the Azure PowerShell cmdlets and the Azure cross-platform command-line tools (CLI) are a good fit. For more advanced automation, which could even include orchestration of multiple virtual machines, Azure Resource Manager templates can also be used. Each method brings its own capabilities and tradeoffs, and it is important to understand which tool fits which scenario.

To create a virtual machine using the Azure portal, first click the New button. You can then either search for an image or solution, or browse by clicking Compute, as shown in Figure 2-1. Within the Compute category you will see the featured images, and if none of those images is appropriate you can click the See all option to view a larger selection.

FIGURE 2-1 The Azure Marketplace view for virtual machines

Create an Azure VM (Azure portal)

The Azure portal allows you to provision virtual machines from a wide variety of virtual machine images, and pre-defined templates for entire solutions such as SQL Server Always On, or even a complete SharePoint farm using just your web browser. For individual virtual machines you can specify some, but not all, configuration options at creation time. Some options, such as configuring the load balancer, and specifying additional storage configuration such as adding data disks, are not available at creation time but can be set after the virtual machine is created. Using the Azure portal, you can create virtual machines individually or you can deploy an ARM template that can deploy many virtual machines (including other Azure resources as well). You can even use the Azure portal to export an ARM template from an existing deployed resource. Through the integrated Azure Cloud Shell, you can also execute commands from the command line that can also be used to provision virtual machines. After an image is selected, you navigate through several screens to configure the virtual machine.

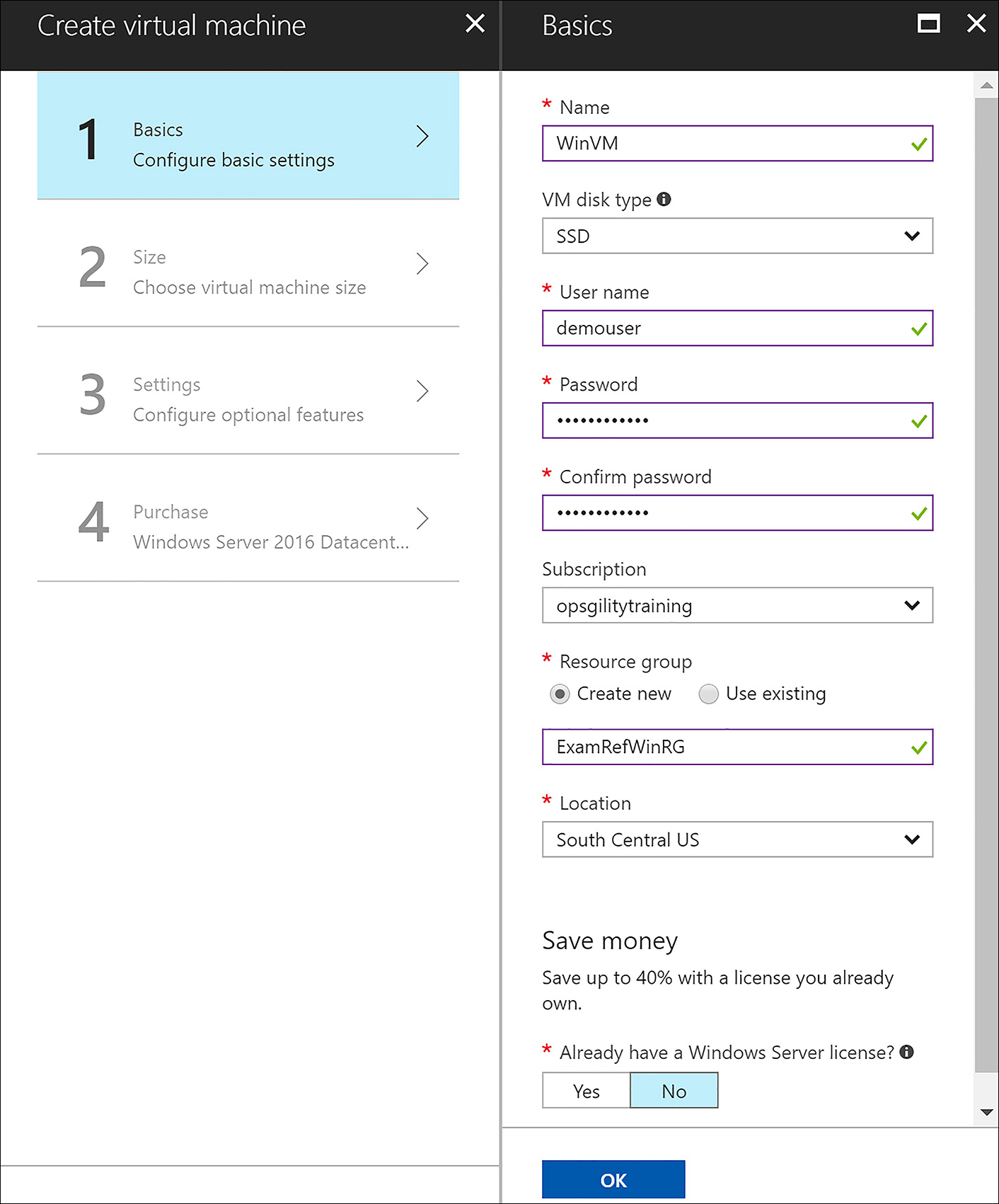

The first blade to complete is the Basics blade, as shown in Figure 2-2. The basics blade allows you to set the following configuration options:

The name of the virtual machine.

Standard hard disk drive storage (HDD) or Premium solid-state disk (SSD) based storage.

The administrator credentials.

User name and password for Windows and Linux.

Optionally an SSH key for Linux VMs.

The Azure subscription to create the VM in (if you have more than one).

The resource group name to deploy the virtual machine and its related resources in, such as network interface, public IP, etc.

The Azure region the virtual machine is created in.

If you already have licenses for Windows Server, you can save money by using them in Azure. This is known as the Hybrid Use Benefit, and it can cut your bill significantly.

FIGURE 2-2 The Basics blade of the portal creation process for a Windows-based virtual machine

You can specify an existing SSH public key or a password when creating a Linux VM. If the SSH public key option is selected, you must paste in the public key from your SSH key pair. You can create the key pair using the following command:

ssh-keygen -t rsa -b 2048

To retrieve the public key for your new key pair, run the following command:

cat ~/.ssh/id_rsa.pub

From there, copy all the data starting with ssh-rsa and ending with the last character on the screen and paste it into the SSH public key box, as shown in Figure 2-3. Ensure you don’t include any extra spaces.

FIGURE 2-3 The Basics blade of the portal creation process for a Linux-based virtual machine
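To catch stray whitespace or line breaks before pasting a key into the portal, a small local check can help. The following is a minimal shell sketch under stated assumptions: the function name is_clean_rsa_pubkey is hypothetical, and the check only validates the general shape of the text (ssh-rsa, Base64 data, optional comment), not the key material itself.

```shell
#!/bin/bash
# Hypothetical helper: succeeds only if the argument has the shape
# "ssh-rsa <base64> [comment]" on a single line with no stray whitespace.
is_clean_rsa_pubkey() {
  local key="$1"
  local newline='
'
  # Reject any embedded line break up front, because grep matches per line.
  case "$key" in
    *"$newline"*) return 1 ;;
  esac
  # ssh-rsa, one space, Base64 data, then optional comment words
  # separated by single spaces. Leading or trailing whitespace fails.
  printf '%s\n' "$key" | grep -Eq '^ssh-rsa [A-Za-z0-9+/]+={0,2}( [^[:space:]]+)*$'
}

# Usage, assuming the default key path written by ssh-keygen:
#   is_clean_rsa_pubkey "$(cat ~/.ssh/id_rsa.pub)" && echo "Key looks clean"
```

The command substitution in the usage line strips the trailing newline that cat prints, so a key file that ends with a normal line ending still passes.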

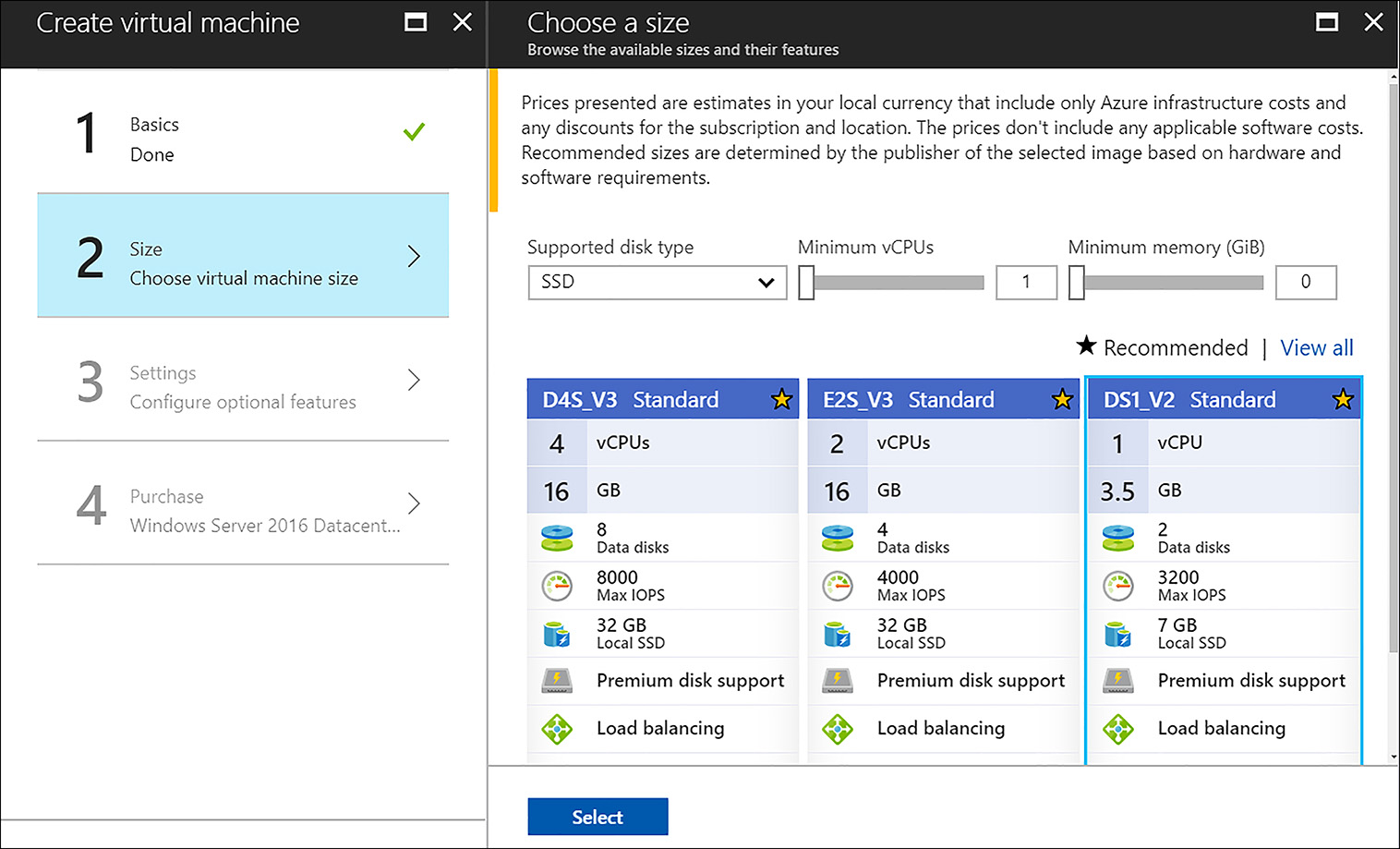

After setting the basic configuration for a virtual machine, you then specify the virtual machine size, as shown in Figure 2-4. The portal gives you the option of filtering the available instance sizes by specifying the minimum number of virtual CPUs (vCPUs) and the minimum amount of memory, as well as whether the instance size supports solid state disks (SSD) or only traditional hard disk drives (HDD).

FIGURE 2-4 Setting the size of the virtual machine

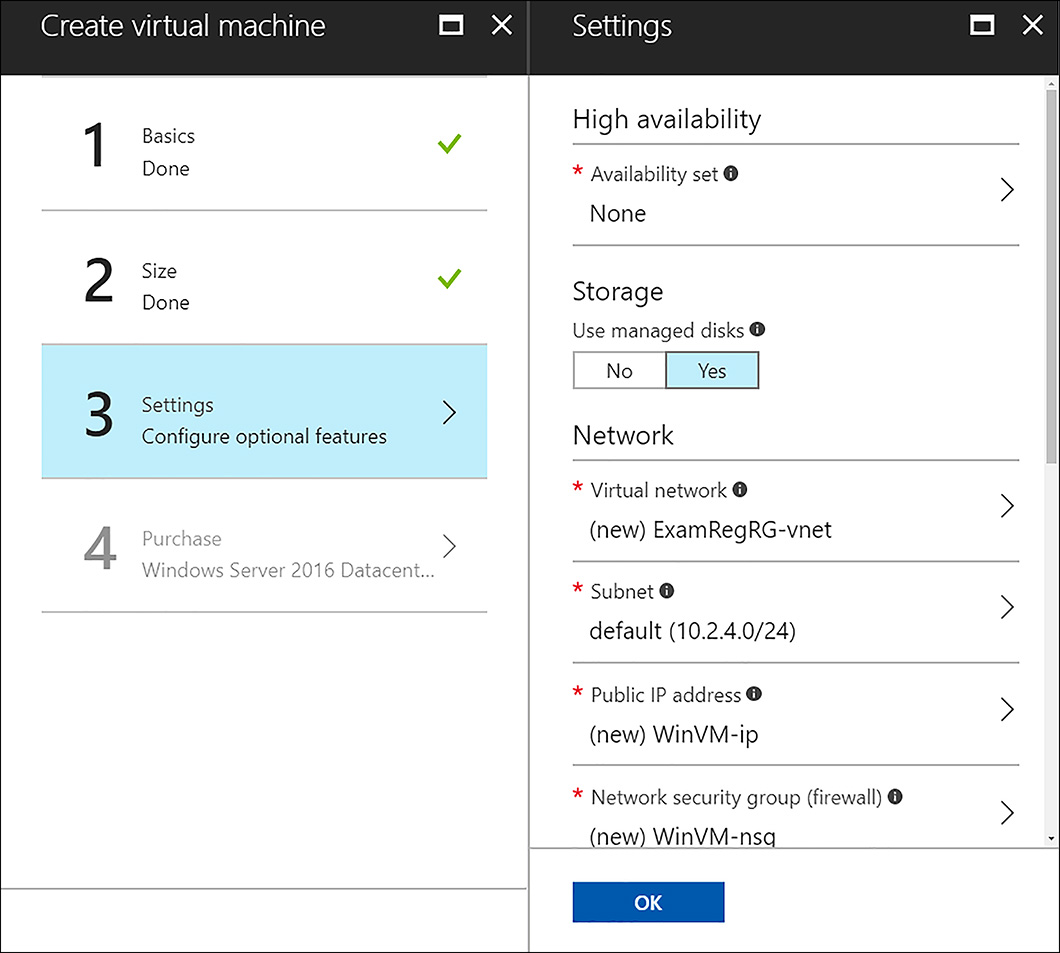

The Settings blade, shown in Figure 2-5, allows you to set the following configuration options:

Whether the virtual machine is part of an availability set

Whether to use managed or unmanaged disks

What virtual network and subnet the network interface should use

What public IP (if any) should be used

What network security group (if any) should be used (you can specify new rules here as well)

FIGURE 2-5 Specifying virtual machine configuration settings

The last step to create a virtual machine using the Azure portal is to read through and agree to the terms of use and click the purchase button, as shown in Figure 2-6. From there, the portal performs some initial validation of your template, as well as checks many of the resources against policies in place on the subscription and resource group you are targeting. If there are no validation errors the template is deployed.

FIGURE 2-6 Accepting the terms of use and purchasing

EXAM TIP


The link next to the purchase button allows you to download an Azure Resource Manager template and parameters file for the virtual machine you just configured in the portal. You can customize this template and use it for future automated deployments.

Create an Azure VM (PowerShell)

The PowerShell cmdlets are commonly used for automating common tasks such as stopping and starting virtual machines, deploying ARM templates, or for making configuration settings on a vast number of resources at the same time. Using the Azure PowerShell cmdlets, you can also create virtual machines programmatically. Let’s walk through creating a similar virtual machine to what was shown in the previous section using PowerShell.

The approach we’ll use is to programmatically create the resources needed for the virtual machine such as storage, networking, availability sets and so on, and then associate them with the virtual machine at creation time.

Before you can create or manage any resources in your Azure subscription using the Azure PowerShell cmdlets you must login by executing the Login-AzureRmAccount cmdlet (which is an alias to the Add-AzureRmAccount cmdlet).

Login-AzureRmAccount

A virtual machine and all its related resources such as network interfaces, disks, and so on must be created inside of an Azure Resource Group. Using PowerShell, you can create a new resource group with the New-AzureRmResourceGroup cmdlet.

$rgName = "Contoso"

$location = "West US"

New-AzureRmResourceGroup -Name $rgName -Location $location

This cmdlet requires you to specify the resource group name, and the name of the Azure region. These values are defined in the variables $rgName, and $location. You can use the Get-AzureRmResourceGroup cmdlet to see if the resource group already exists or not, and you can use the Get-AzureRmLocation cmdlet to view the list of available regions.

Azure virtual machines must be created inside of a virtual network. Like the portal, using PowerShell, you can specify an existing virtual network or you can create a new one. In the code example below, the New-AzureRmVirtualNetworkSubnetConfig cmdlet is used to create two local objects that represent two subnets in the virtual network. The virtual network is actually created within the call to New-AzureRmVirtualNetwork. It is passed in the address space of 10.0.0.0/16, and you could also pass in multiple address spaces similar to how the subnets were passed in using an array.

$subnets = @()

$subnet1Name = "Subnet-1"

$subnet2Name = "Subnet-2"

$subnet1AddressPrefix = "10.0.0.0/24"

$subnet2AddressPrefix = "10.0.1.0/24"

$vnetAddressSpace = "10.0.0.0/16"

$VNETName = "ExamRefVNET-PS"

$subnets += New-AzureRmVirtualNetworkSubnetConfig -Name $subnet1Name `

-AddressPrefix $subnet1AddressPrefix

$subnets += New-AzureRmVirtualNetworkSubnetConfig -Name $subnet2Name `

-AddressPrefix $subnet2AddressPrefix

$vnet = New-AzureRmVirtualNetwork -Name $VNETName `

-ResourceGroupName $rgName `

-Location $location `

-AddressPrefix $vnetAddressSpace `

-Subnet $subnets

Virtual Machines store their virtual hard disk (VHD) files in an Azure storage account. If you are using managed disks (see more in Skill 2.3) Azure manages the storage account for you. This example uses unmanaged disks so the code creates a new storage account to contain the VHD files. You can use an existing storage account for storage or create a new storage account. The PowerShell cmdlet Get-AzureRmStorageAccount returns an existing storage account. To create a new one, use the New-AzureRmStorageAccount cmdlet, as the following example shows.

$saName = "examrefstoragew123123"

$storageAcc = New-AzureRmStorageAccount -ResourceGroupName $rgName `

-Name $saName `

-Location $location `

-SkuName Standard_LRS

$blobEndpoint = $storageAcc.PrimaryEndpoints.Blob.ToString()

The $blobEndpoint variable is used in a later code snippet to specify the location of where the VMs disks are created.

Use the New-AzureRmAvailabilitySet cmdlet to create a new availability set, or use Get-AzureRmAvailabilitySet to retrieve an existing one. The availability set name below is an example value.

$avSetName = "ExamRefAVSet"

$avSet = New-AzureRmAvailabilitySet -ResourceGroupName $rgName `

-Name $avSetName `

-Location $location

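To connect to the virtual machine remotely, create a public IP address resource. The $ipName and $dnsName values below are examples; the DNS label must be unique within the Azure region.

$ipName = "ExamRefVM-IP"

$dnsName = "examrefdns123124"

$pip = New-AzureRmPublicIpAddress -Name $ipName `

-ResourceGroupName $rgName `

-Location $location `

-AllocationMethod Dynamic `

-DomainNameLabel $dnsName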

By default, adding a public IP to a VM’s network interface will allow in all traffic regardless of the destination port. To control this, create a network security group and only open the ports you will use. The example below creates an array that will be used for the rules and populates the array with the New-AzureRmNetworkSecurityRuleConfig cmdlet.

# Add a rule to the network security group to allow RDP in

$nsgRules = @()

$nsgRules += New-AzureRmNetworkSecurityRuleConfig -Name "RDP" `

-Description "RemoteDesktop" `

-Protocol Tcp `

-SourcePortRange "*" `

-DestinationPortRange "3389" `

-SourceAddressPrefix "*" `

-DestinationAddressPrefix "*" `

-Access Allow `

-Priority 110 `

-Direction Inbound

The New-AzureRmNetworkSecurityGroup cmdlet creates the network security group. The rules are passed in using the SecurityRules parameter.

$nsgName = "ExamRefNSG"

$nsg = New-AzureRmNetworkSecurityGroup -ResourceGroupName $rgName `

-Name $nsgName `

-SecurityRules $nsgRules `

-Location $location

Now that the public IP and the network security group are created, use the New-AzureRmNetworkInterface cmdlet to create the network interface for the VM. This cmdlet accepts the unique ID for the subnet, public IP, and the network security group for configuration.

$nicName = "ExamRefVM-NIC"

$nic = New-AzureRmNetworkInterface -Name $nicName `

-ResourceGroupName $rgName `

-Location $location `

-SubnetId $vnet.Subnets[0].Id `

-PublicIpAddressId $pip.Id `

-NetworkSecurityGroupId $nsg.ID

Now that all the resources are created that the virtual machine requires, use the New-AzureRmVMConfig cmdlet to instantiate a local configuration object that represents a virtual machine to associate them together. The virtual machine’s size and the availability set are specified during this call.

$vmName = "ExamRefVM"

$vmSize = "Standard_DS1_V2"

$vm = New-AzureRmVMConfig -VMName $vmName -VMSize $vmSize `

-AvailabilitySetId $avSet.Id

After the virtual machine configuration object is created there are several configuration options that must be set. This example shows how to set the operating system and the credentials using the Set-AzureRmVMOperatingSystem cmdlet. The operating system is specified by using either the Windows or the Linux parameter. The ProvisionVMAgent parameter tells Azure to automatically install the VM agent on the virtual machine when it is provisioned. The Credential parameter specifies the local administrator username and password with the values passed to the $cred object.

$cred = Get-Credential

Set-AzureRmVMOperatingSystem -Windows `

-ComputerName $vmName `

-Credential $cred `

-ProvisionVMAgent `

-VM $vm

The operating system image (or existing VHD) must be specified for the VM to boot. Setting the image is accomplished by calling the Set-AzureRmVMSourceImage cmdlet and specifying the Image publisher, offer, and SKU. These values can be retrieved by calling the cmdlets Get-AzureRmVMImagePublisher, Get-AzureRmVMImageOffer, and Get-AzureRmVMImageSku.

$pubName = "MicrosoftWindowsServer"

$offerName = "WindowsServer"

$skuName = "2016-Datacenter"

Set-AzureRmVMSourceImage -PublisherName $pubName `

-Offer $offerName `

-Skus $skuName `

-Version "latest" `

-VM $vm

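The network interface created earlier must also be attached to the configuration, which is done with the Add-AzureRmVMNetworkInterface cmdlet. The location of the operating system disk is then set with the Set-AzureRmVMOSDisk cmdlet. Because this example uses unmanaged disks, the VHD URI is built from the storage account's blob endpoint.

Add-AzureRmVMNetworkInterface -VM $vm -Id $nic.Id

$osDiskName = "ExamRefVM-osdisk"

$osDiskUri = $blobEndpoint + "vhds/" + $osDiskName + ".vhd"

Set-AzureRmVMOSDisk -Name $osDiskName `

-VhdUri $osDiskUri `

-CreateOption fromImage `

-VM $vm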

The final step is to provision the virtual machine by calling the New-AzureRmVM cmdlet. This cmdlet requires you to specify the resource group to create the virtual machine in, the location, and the virtual machine configuration, which is in the $vm variable.

New-AzureRmVM -ResourceGroupName $rgName -Location $location -VM $vm

EXAM TIP


In addition to knowing how to provision a virtual machine from an image, it is good to understand how to create from an existing disk using the Set-AzureRmVMOSDisk -CreateOption attach parameter (for more information see https://docs.microsoft.com/en-us/powershell/module/azurerm.compute/set-azurermvmosdisk) or using an existing managed operating system disk (for more information see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/create-vm-specialized).

Create an Azure VM (CLI)

The Azure CLI tools are used in a similar fashion to the PowerShell cmdlets. They are built to run cross platform on Windows, Mac, or Linux. The syntax of the CLI tools is designed to be familiar to users of a Bash scripting environment. Let’s walk through an example that creates the same resources as the previous PowerShell example, except creating a Linux-based virtual machine instead.

Like the PowerShell cmdlets, the CLI tools require you to log in before you can access Azure. The approach is slightly different: after you execute the az login command, the tools provide a link to open in the browser and a code to enter. After entering the code and your credentials, you are logged in at the command line.

az login

Create a new resource group by executing the az group create command and specifying a unique name and the region.

#!/bin/bash

rgName="Contoso"

location="WestUS"

az group create --name $rgName --location $location

The following command can be used to identify available regions that you can create resources and resource groups in.

az account list-locations

From here you have two options. You can create a virtual machine with a very simple syntax that generates much of the underlying configuration for you such as a virtual network, public IP address, storage account, and so on, or you can create and configure each resource and link to the virtual machine at creation time. Here is an example of the syntax to create a simple stand-alone virtual machine:

# Creating a simple virtual machine

vmName="myUbuntuVM"

imageName="UbuntuLTS"

az vm create --resource-group $rgName --name $vmName --image $imageName --generate-ssh-keys

EXAM TIP


The generate-ssh-keys parameter dynamically generates keys to connect to the Linux virtual machine for you if they are missing. The new keys are stored in ~/.ssh. You can also specify a user name and password using the admin-username and admin-password parameters if you set the authentication-type parameter to password (default is ssh).

To create all the resources from scratch, as shown in the section on creating a virtual machine using the PowerShell cmdlets, you can start with the virtual network. Use the az network vnet create command to create the virtual network. This command requires the name of the virtual network, a list of address prefixes, and the location to create the virtual network in.

vnetName="ExamRefVNET-CLI"

vnetAddressPrefix="10.0.0.0/16"

az network vnet create --resource-group $rgName -n $vnetName --address-prefixes $vnetAddressPrefix -l $location

The az network vnet subnet create command is used to add additional subnets to the virtual network. This command requires the resource group name, the name of the virtual network, the subnet name, and the address prefix for the subnet to create.

Subnet1Name="Subnet-1"

Subnet2Name="Subnet-2"

Subnet1Prefix="10.0.1.0/24"

Subnet2Prefix="10.0.2.0/24"

az network vnet subnet create --resource-group $rgName --vnet-name $vnetName -n $Subnet1Name --address-prefix $Subnet1Prefix

az network vnet subnet create --resource-group $rgName --vnet-name $vnetName -n $Subnet2Name --address-prefix $Subnet2Prefix
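Each subnet prefix must fall inside the address space of its virtual network. As a minimal sketch of that rule, the following shell functions check containment locally before any resources are created. The function names ip_to_int and cidr_contains are hypothetical helpers, not part of the Azure CLI, and the sketch handles IPv4 only.

```shell
#!/bin/bash
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  # Split the address on "." into four positional parameters.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if CIDR block $2 lies entirely inside $1.
cidr_contains() {
  local outer_ip="${1%/*}"
  local outer_len="${1#*/}"
  local inner_ip="${2%/*}"
  local inner_len="${2#*/}"
  # A subnet cannot be larger than the network that contains it.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  # Build the outer network mask, then compare the masked addresses.
  local mask=$(( (0xFFFFFFFF << (32 - $outer_len)) & 0xFFFFFFFF ))
  local outer_net=$(( $(ip_to_int "$outer_ip") & $mask ))
  local inner_net=$(( $(ip_to_int "$inner_ip") & $mask ))
  [ "$outer_net" -eq "$inner_net" ]
}

# Check the prefixes used in this walkthrough against the virtual network.
vnetAddressPrefix="10.0.0.0/16"
for prefix in "10.0.1.0/24" "10.0.2.0/24"; do
  if cidr_contains "$vnetAddressPrefix" "$prefix"; then
    echo "$prefix fits inside $vnetAddressPrefix"
  else
    echo "$prefix is outside $vnetAddressPrefix"
  fi
done
```

The sketch does not detect overlapping subnets; it only confirms that each prefix belongs to the virtual network's address space.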

The az storage account create command is used to create a new storage account. In this example, the code uses the new storage account to store the VHD files for the Linux VM created later.

storageAccountName="examrefstoragew124124"

az storage account create -n $storageAccountName --sku Standard_LRS -l $location --kind Storage --resource-group $rgName

The az vm availability-set create command is used to create a new availability set.

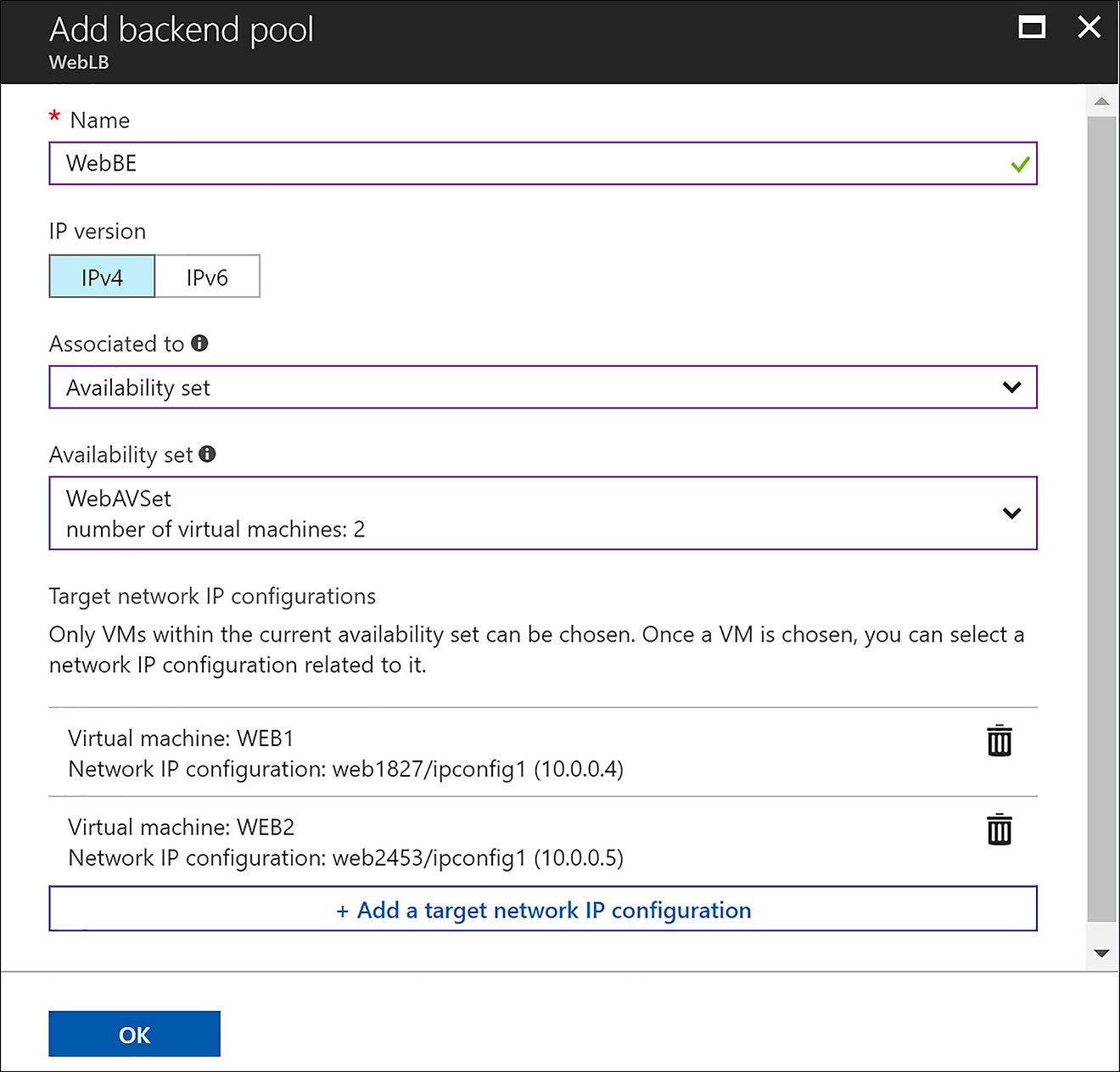

avSetName="WebAVSET"

az vm availability-set create -n $avSetName -g $rgName --platform-fault-domain-count 3 --platform-update-domain-count 5 --unmanaged -l $location

The az network public-ip create command is used to create a public IP resource. The allocation-method parameter can be set to dynamic or static.

dnsRecord="examrefdns123123"

ipName="ExamRefCLI-IP"

az network public-ip create -n $ipName -g $rgName --allocation-method Dynamic --dns-name $dnsRecord -l $location

The az network nsg create command is used to create a network security group.

nsgName="webnsg"

az network nsg create -n $nsgName -g $rgName -l $location

After the network security group is created, use the az network nsg rule create command to add rules. In this example, one rule allows inbound connections on port 22 for SSH, and another allows inbound HTTP traffic on port 80.

# Create a rule to allow in SSH

az network nsg rule create -n SSH --nsg-name $nsgName --priority 100 -g $rgName --access Allow --description "SSH Access" --direction Inbound --protocol Tcp --destination-address-prefix "*" --destination-port-range 22 --source-address-prefix "*" --source-port-range "*"

# Create a rule to allow in HTTP

az network nsg rule create -n HTTP --nsg-name webnsg --priority 101 -g $rgName --access Allow --description "Web Access" --direction Inbound --protocol Tcp --destination-address-prefix "*" --destination-port-range 80 --source-address-prefix "*" --source-port-range "*"
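When several rules differ only in name, priority, and port, the commands can be generated from a short list instead of being written out by hand. The following is a minimal dry-run sketch: it only prints the az commands and does not call Azure. The build_rule_cmd function and the name:priority:port entry format are hypothetical conventions chosen for this illustration, and the flags are the same ones used in the two commands above.

```shell
#!/bin/bash
nsgName="webnsg"
rgName="Contoso"

# Each entry is name:priority:port (a made-up format for this sketch).
rules="SSH:100:22 HTTP:101:80"

# Hypothetical helper: print the rule-creation command for one entry.
build_rule_cmd() {
  local name="${1%%:*}"
  local rest="${1#*:}"
  local priority="${rest%%:*}"
  local port="${rest#*:}"
  echo "az network nsg rule create -n $name --nsg-name $nsgName --priority $priority -g $rgName --access Allow --direction Inbound --protocol Tcp --destination-address-prefix \"*\" --destination-port-range $port --source-address-prefix \"*\" --source-port-range \"*\""
}

# Dry run: print one command per rule for review before executing anything.
for rule in $rules; do
  build_rule_cmd "$rule"
done
```

Printing the commands first makes it easy to review priorities and ports; each rule in a network security group needs its own priority value.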

The network interface for the virtual machine is created using the az network nic create command.

nicname="WebVMNic1"

az network nic create -n $nicname -g $rgName --subnet $Subnet1Name --network-security-group $nsgName --vnet-name $vnetName --public-ip-address $ipName -l $location

To create a virtual machine, you must specify whether it will boot from a custom image, a marketplace image, or an existing VHD. You can retrieve a list of marketplace images by executing the following command:

az vm image list

The command az image list is used to retrieve any of your own custom images you have captured.

Another important piece of metadata needed to create a virtual machine is the VM size. You can retrieve the available form factors that can be created in each region by executing the following command:

az vm list-sizes --location $location

The last step is to use the az vm create command to create the virtual machine. This command allows you to pass the name of the availability set, the virtual machine size, the image the virtual machine should boot from, and other configuration data such as the username and password, and the storage configuration.

imageName="Canonical:UbuntuServer:17.04:latest"

vmSize="Standard_DS1_V2"

containerName=vhds

user=demouser

vmName="WebVM"

osDiskName="WEBVM1-OSDISK.vhd"

az vm create -n $vmName -g $rgName -l $location --size $vmSize --availability-set $avSetName --nics $nicname --image $imageName --use-unmanaged-disk --os-disk-name $osDiskName --storage-account $storageAccountName --storage-container-name $containerName --admin-username $user --generate-ssh-keys

MORE INFO AZURE POWERSHELL CMDLETS AND CLI TOOL

The Azure PowerShell cmdlets and CLI tools can be downloaded and installed at https://azure.microsoft.com/en-us/downloads/. Scroll down to the Command-Line Tools section for installation links and documentation.

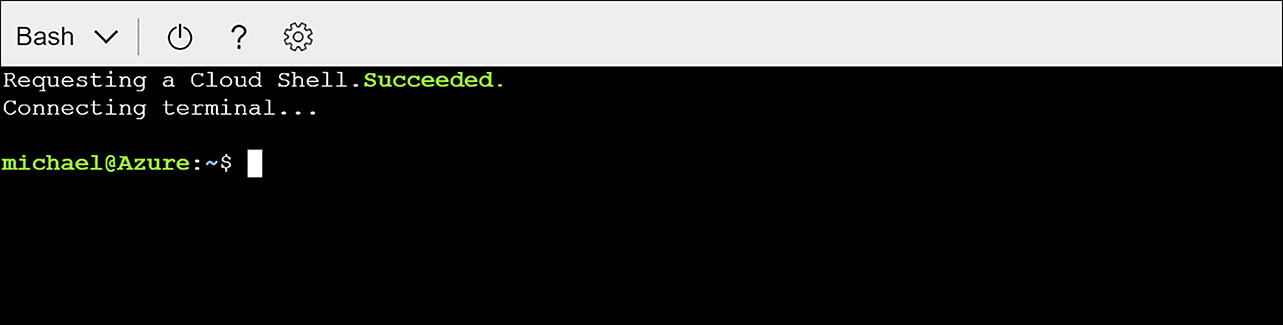

The Azure Cloud Shell, shown in Figure 2-7, is a feature of the Azure portal that provides access to an Azure command line (CLI or PowerShell) using the credentials you are already logged in with, without the need to install additional tools on your computer.

FIGURE 2-7 Starting the Cloud Shell

EXAM TIP


Stopping a virtual machine from the Azure portal, Windows PowerShell with the Stop-AzureRmVM cmdlet, or the az vm deallocate command puts the virtual machine in the Stopped (deallocated) state (az vm stop puts the VM in the Stopped state). It is important to understand the difference between Stopped (deallocated) and just Stopped. In the Stopped state a virtual machine is still allocated in Azure, and the operating system is simply shut down. You will still be billed for the compute time for a virtual machine in this state. A virtual machine in the Stopped (deallocated) state is no longer occupying physical hardware in the Azure region, and you will not be billed for the compute time (you are still billed for the underlying storage).
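The billing distinction in this tip can be written down as a small lookup. The sketch below is illustrative only: the function name compute_billed is hypothetical, and it assumes power state codes of the form PowerState/stopped and PowerState/deallocated, which is how the states are commonly reported in a VM's instance view. States the tip does not discuss are reported as unknown rather than guessed.

```shell
#!/bin/bash
# Hypothetical helper: report whether compute charges apply for a
# given power state code (assumed format: "PowerState/<state>").
compute_billed() {
  case "$1" in
    "PowerState/deallocated")
      # No physical hardware is occupied; only storage is billed.
      echo "no" ;;
    "PowerState/running"|"PowerState/stopped")
      # The VM is still allocated, even if the OS is shut down.
      echo "yes" ;;
    *)
      echo "unknown" ;;
  esac
}

for state in "PowerState/running" "PowerState/stopped" "PowerState/deallocated"; do
  echo "$state -> compute billed: $(compute_billed "$state")"
done
```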

Creating an Azure VM from an ARM template

Azure Resource Manager (ARM) templates provide the ability to define the configuration of resources like virtual machines, storage accounts, and so on in a declarative manner. ARM templates go beyond just providing the ability to create the resources; some resources such as virtual machines also allow you to customize them and create dependencies between them. This allows you to create templates that have capabilities for orchestrated deployments of completely functional solutions. Chapter 5, “Design and deploy ARM templates” goes in-depth on authoring templates. Let’s start with learning how to deploy them.

The Azure team maintains a list of ARM templates with examples for most resources. This list is located at https://azure.microsoft.com/en-us/resources/templates/, and is backed by a source code repository in GitHub. If you want to go directly to the source, to file a bug or for any other reason, you can access it at https://github.com/Azure/azure-quickstart-templates.

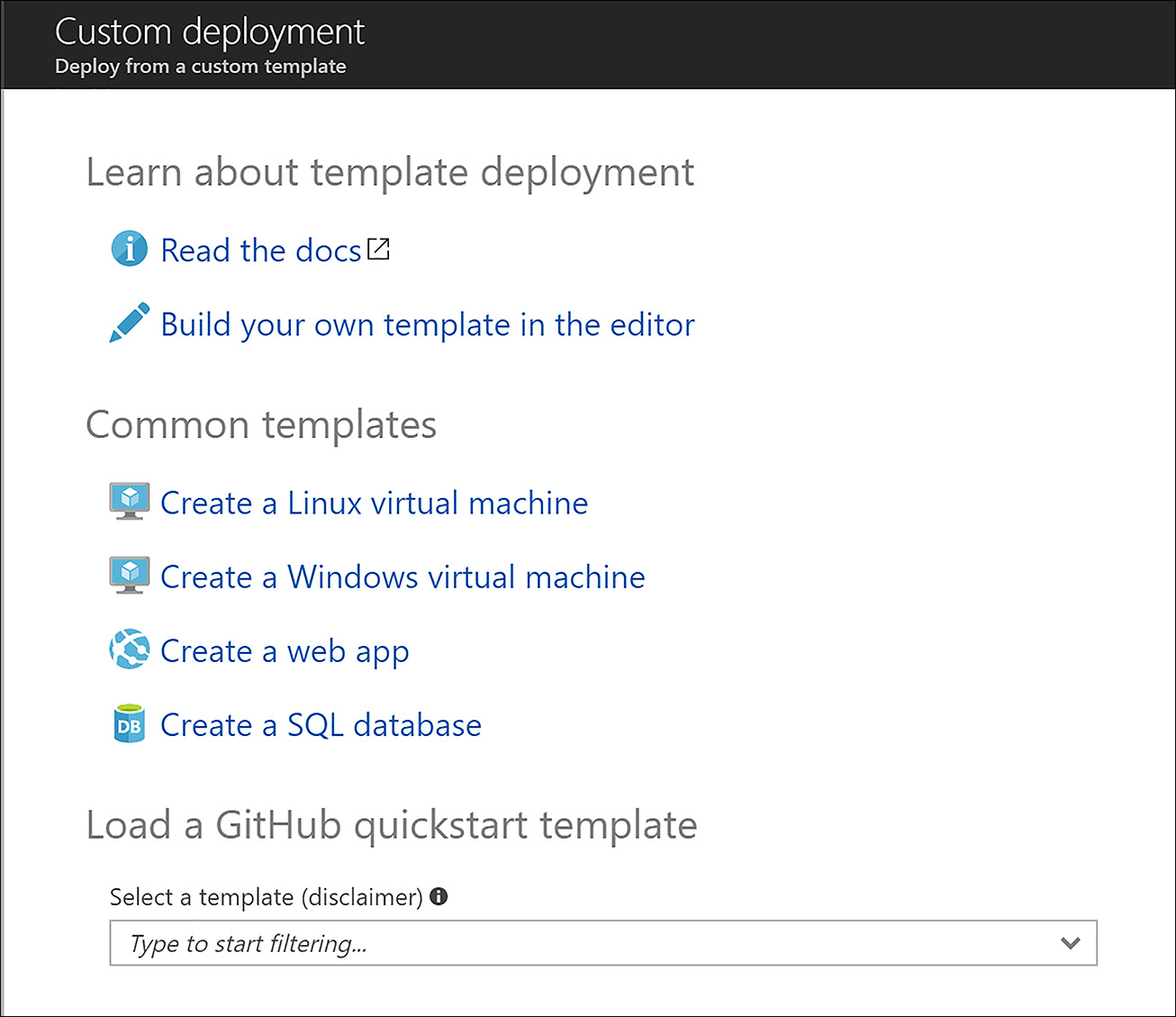

You can deploy ARM templates using the portal, the command line tools, or directly using the REST API. Let’s start with deploying a template that creates virtual machines using the portal. To deploy a template from the portal, click the NEW button and search for Template Deployment, as shown in Figure 2-8, and select the Template Deployment name from the search results, and then click Create.

FIGURE 2-8 The Template Deployment option

From there, you have the option to build your own template using the portal’s editor (you can paste your own template in or upload from a file using this option too), or choose from one of the most common templates. Last of all, you can search the existing samples in the quickstart samples repository and choose one of them as a starting point. Figure 2-9 shows the various options after clicking the template deployment search result.

FIGURE 2-9 Options for configuring a template deployment

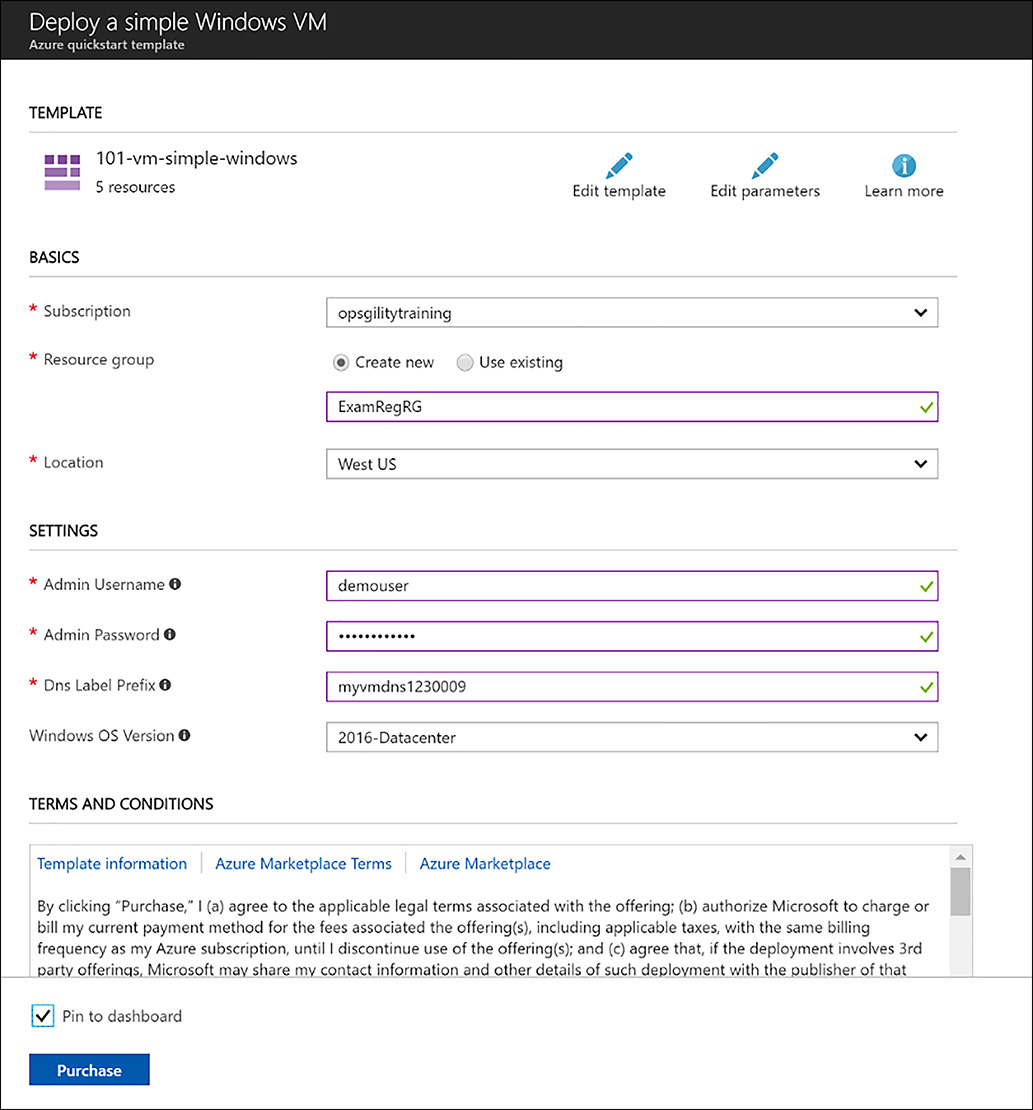

Choosing one of the common template links opens the next screen, which gives you the options for deploying the template. A template deployment requires you to specify a subscription and resource group, along with any parameters that the template requires. In Figure 2-10 the Admin Username, Admin Password, DNS Label Prefix, and Windows operating system version values are all parameters defined in the template.

FIGURE 2-10 Deploying a template

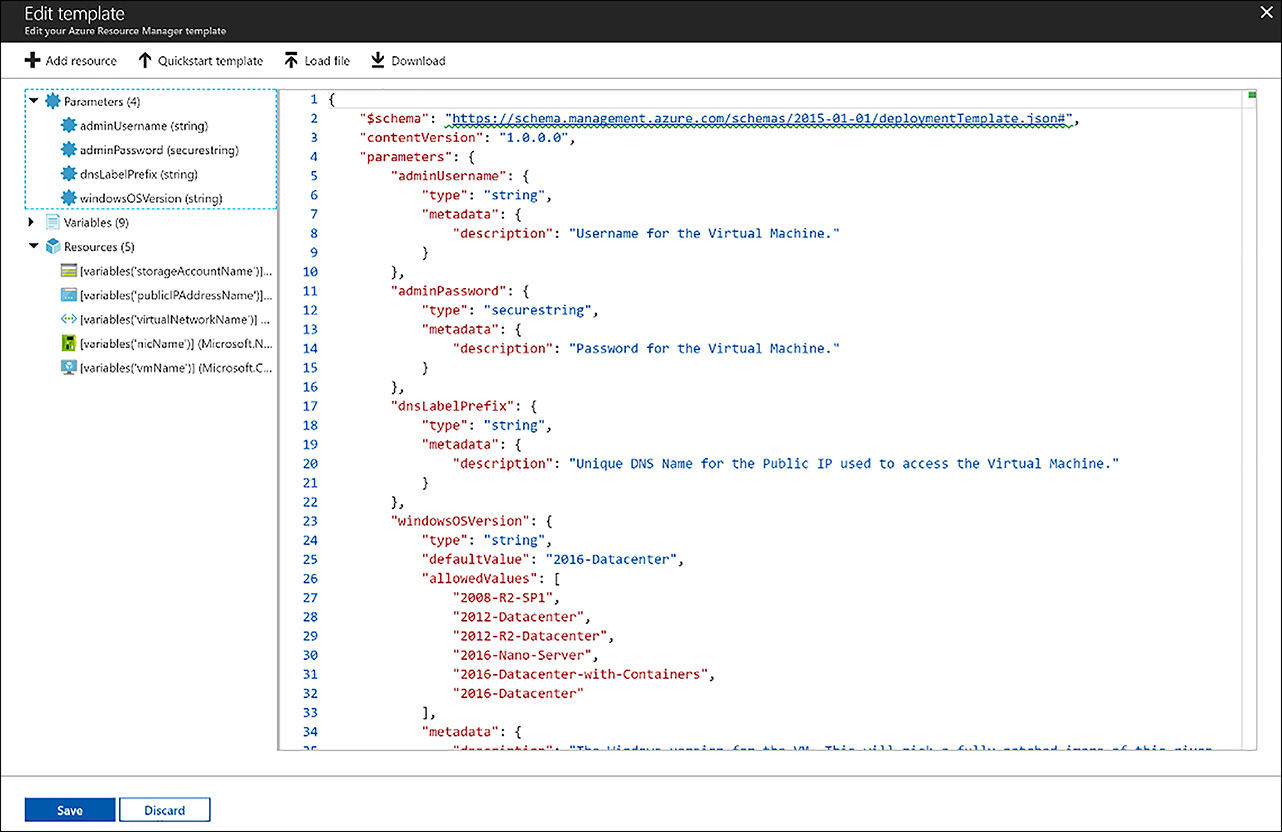

Clicking the Edit Template button opens the editor shown in Figure 2-11, where you can continue modifying the template. On the left navigation, you can see the parameters section that defines the four parameters shown in the previous screen, as well as the resource list, which defines the resources that the template will create. In this example, the template defines a storage account, public IP address, virtual network, network interface, and the virtual machine.

FIGURE 2-11 The template editor view

The editor also allows you to download the template as a JavaScript Object Notation (.json) file for further modification or for deployment using an alternative method.

The Edit Parameters button allows you to edit a JSON view of the parameters for the template, as shown in Figure 2-12. This file can also be downloaded and is used to provide different behaviors for the template at deployment time without modifying the entire template.

FIGURE 2-12 Editing template parameters using the Azure portal

Common examples of using a parameters file:

Defining different instance sizes or SKUs for resources based on the intended usage (small instances for test environments for example)

Defining different number of instances

Different regions

Different credentials
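As a rough illustration of the uses listed above, the following sketch writes a parameters file for a test environment. The file name, the parameter names (adminUsername, dnsLabelPrefix, windowsOSVersion), and every value are assumptions made for this example; check the parameters section of the template you are deploying for the names it actually defines. The script only writes a local file and does not contact Azure.

```shell
#!/bin/bash
# Write a hypothetical parameters file for a test environment.
# Parameter names and values are illustrative assumptions, not taken from a specific template.
paramFile="azuredeploy.parameters.test.json"

cat > "$paramFile" <<'EOF'
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "adminUsername": { "value": "contosoadmin" },
    "dnsLabelPrefix": { "value": "contoso-test-vm" },
    "windowsOSVersion": { "value": "2016-Datacenter" }
  }
}
EOF

echo "Wrote $paramFile"
```

A second file with different values (for example, a production DNS prefix) could then be kept alongside it, so the template itself stays unchanged between environments. The administrator password is deliberately left out of the file so that it is not stored on disk.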

The last step in deploying an ARM template using the portal is to click the Purchase button after reviewing and agreeing to the terms and conditions on the screen.

The Azure command line tools can also deploy ARM templates. The template files can be located locally on your file system or accessed via HTTP/HTTPS. A common approach is to store the templates in a source code repository or an Azure storage account to make it easy for others to deploy them.

This example uses the Azure PowerShell cmdlets to create a new resource group, specify the location and then deploy a template by specifying the URL from the Azure QuickStart GitHub repository.

# Create a Resource Group

$rgName = "Contoso"

$location = "WestUs"

New-AzureRmResourceGroup -Name $rgName -Location $location

# Deploy a Template from GitHub

$deploymentName = "simpleVMDeployment"

$templateUri = "https://raw.githubusercontent.com/Azure/azure-quickstart-templates/master/101-vm-simple-windows/azuredeploy.json"

New-AzureRmResourceGroupDeployment -Name $deploymentName `

-ResourceGroupName $rgName `

-TemplateUri $templateUri

If the template requires parameters without default values, the cmdlet will prompt you to input their values.

EXAM TIP

The parameters to a template can be passed to the New-AzureRmResourceGroupDeployment cmdlet in three ways. Use the TemplateParameterObject parameter for values that are defined directly in the script as a hashtable. Use the TemplateParameterFile parameter for values stored in a local .json file. Use the TemplateParameterUri parameter for values that are stored in a .json file at an HTTP endpoint.

The following example uses the Azure CLI tools to accomplish the same task.

#!/bin/bash

# Create the resource group

rgName="Contoso"

location="WestUS"

az group create --name $rgName --location $location

# Deploy the specified template to the resource group

deploymentName="simpleVMDeployment"

templateUri="https://raw.githubusercontent.com/Azure/azure-quickstart-templates/master/101-vm-simple-linux/azuredeploy.json"

az group deployment create --name $deploymentName --resource-group $rgName --template-uri $templateUri

EXAM TIP

The parameters to a template can be passed to the az group deployment create command using the parameters option, either as a JSON string defined directly in the script or as a local .json file referenced with the @ prefix (for example, --parameters @azuredeploy.parameters.json). The template-file and template-uri options identify the template itself: template-file for a template stored in a local .json file, and template-uri for a template stored in a .json file at an HTTP endpoint.
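The following sketch shows one way a local parameters file might be passed to the deployment command. It assumes a file named azuredeploy.parameters.json exists in the current folder (a hypothetical name), and it uses a small run helper, introduced only for this example, that prints the command instead of executing it unless DRY_RUN=0 is set.

```shell
#!/bin/bash
# Dry-run helper (an assumption for this sketch): prints each command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

rgName="Contoso"
deploymentName="simpleVMDeployment"
templateUri="https://raw.githubusercontent.com/Azure/azure-quickstart-templates/master/101-vm-simple-linux/azuredeploy.json"
paramFile="azuredeploy.parameters.json"   # hypothetical local parameters file

deploy_with_parameters() {
  # The @ prefix asks the CLI to read the parameter values from a local file.
  run az group deployment create \
    --name "$deploymentName" \
    --resource-group "$rgName" \
    --template-uri "$templateUri" \
    --parameters "@$paramFile"
}

deploy_with_parameters
```

Running the script as written only prints the command. Setting DRY_RUN=0 would execute it against your subscription, which requires a signed-in Azure CLI session.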

Connecting to virtual machines

There are many ways to connect to virtual machines. You should consider options such as connecting to VMs using their public IP addresses and protecting them with network security groups, and allowing only the port for the service you are connecting to. You should also understand how to connect to a VM on its private IP address. This introduces additional connectivity requirements such as ExpressRoute, Site-to-Site, or Point-to-Site to put your client on the same network as your VMs. These technologies are discussed in Chapter 4, “Implement Virtual Networks.” In this section we’ll review the most common tools to connect and manage your VMs.
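As a sketch of allowing only the port for the service you are connecting to, the following script builds a network security group rule that permits inbound RDP from a single client address. The NSG name, rule name, priority, and client address are hypothetical, and the run helper that prints rather than executes the command is an assumption made for this example. Option spellings for this command have varied between Azure CLI releases, so confirm them with az network nsg rule create --help before relying on them.

```shell
#!/bin/bash
# Dry-run helper (an assumption for this sketch): prints each command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

rgName="Contoso"
nsgName="ContosoNSG"        # hypothetical network security group name
clientIp="203.0.113.10"     # hypothetical client address (documentation range)

allow_rdp_from_client() {
  # Allow inbound TCP 3389 from one source address only.
  run az network nsg rule create \
    --resource-group "$rgName" \
    --nsg-name "$nsgName" \
    --name "Allow-RDP-From-Client" \
    --priority 1000 \
    --direction Inbound \
    --access Allow \
    --protocol Tcp \
    --source-address-prefixes "$clientIp" \
    --destination-port-ranges 3389
}

allow_rdp_from_client
```

For a Linux VM the same pattern would apply with port 22 in place of 3389.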

Connecting to a Windows VM with remote desktop

The default connectivity option for a Windows-based virtual machine is to use the remote desktop protocol (RDP) and a client such as mstsc.exe. This service listens on TCP port 3389 and provides full access to the Windows desktop. This service is enabled by default on all Windows-based VMs. The Azure portal provides a connect button that will appear enabled for virtual machines that have a public IP address associated with them, as shown in Figure 2-13.

FIGURE 2-13 The Connect button for an Azure VM

You can launch a remote desktop session from Windows PowerShell by using the Get-AzureRmRemoteDesktopFile cmdlet. This cmdlet performs the same validation as the Azure portal: the API it calls validates that a public IP address is associated with the virtual machine’s network interface. If a public IP exists, it generates an .rdp file that a Remote Desktop client can open. The .rdp file has the public IP address and the RDP port (3389) of the virtual machine embedded in it. Two parameters control what happens with the generated file.

Use the Launch parameter to retrieve the .rdp file and immediately open it with a Remote Desktop client. The following example launches Mstsc.exe (the Remote Desktop client), which prompts you to initiate the connection.

Get-AzureRmRemoteDesktopFile -ResourceGroupName $rgName -Name $vmName -Launch

The second option is the LocalPath parameter, as the following example shows. Use this parameter to save the .rdp file locally for later use.

Get-AzureRmRemoteDesktopFile -ResourceGroupName $rgName -Name $vmName -LocalPath $path

Connecting to a Windows VM with PowerShell remoting

It is also possible to connect to a Windows-based virtual machine using Windows Remote Management (WinRM), more commonly known as Windows PowerShell remoting. The Set-AzureRmVMOperatingSystem cmdlet supports two parameters that define the behavior of WinRM on a virtual machine at provision time.

WinRMHttps Enables connectivity over SSL using port 5986. If you connect to your virtual machine over the public internet, ensure this option is used to avoid man-in-the-middle attacks.

WinRMHttp Enables connectivity using port 5985 (no SSL required). Enable this option if you want to connect to your virtual machines using PowerShell remoting from other virtual machines on the same private network.

To ensure your virtual machine is secure from man-in-the-middle attacks, you must deploy a self-signed SSL certificate that both your local computer and the virtual machine trust. You can create the certificate using the New-SelfSignedCertificate cmdlet or makecert.exe. After the certificate is created, it must be added to an Azure Key Vault to secure it as a secret.

During provisioning, you reference the secret using the WinRMCertificateUrl parameter of the Set-AzureRmVMOperatingSystem cmdlet if you are creating the virtual machine with PowerShell. If you are using a template, you can specify the sourceVault and certificate information directly as part of the secrets configuration stored in the osProfile section.

"secrets": [

{

"sourceVault": {

"id": "<resource id of the Key Vault containing the secret>"

},

"vaultCertificates": [

{

"certificateUrl": "<URL for the certificate>",

"certificateStore": "<Name of the certificate store on the VM>"

}

]

}

],

MORE INFO CONFIGURING WINRM ON WINDOWS-BASED VIRTUAL MACHINES

For a complete walkthrough of enabling WinRM on your Windows-based virtual machines, see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/winrm.

Connecting to a Linux virtual machine using SSH

The default connectivity option for a Linux-based virtual machine is to use the secure shell (SSH) protocol. This service listens on TCP port 22 and provides full access to a command line shell. This service is enabled by default on all Linux-based VMs. When you click the Connect button on a Linux-based virtual machine with a public IP associated with it you see a dialog, like the one shown in Figure 2-14, advising you to use SSH to connect.

FIGURE 2-14 The connect dialog advising how to connect to a Linux VM using SSH

Use the following command to connect to a Linux VM using an SSH client from a bash shell.

ssh username@ipaddress

If the virtual machine is configured for password access, SSH then prompts you for the password for the user you specified. If you specified an SSH public key during the creation of the virtual machine, the client attempts to authenticate with the matching private key from the ~/.ssh folder.
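The key-based option can be sketched as follows. The script generates an RSA key pair in a temporary folder and prints the public half, which is the value you would supply when creating the VM. The key file name is hypothetical, the empty passphrase is used only to keep the demonstration non-interactive, and the final ssh command is left as a comment because username and ipaddress are placeholders.

```shell
#!/bin/bash
# Generate a demonstration SSH key pair in a temporary folder.
keyDir="$(mktemp -d)"
keyFile="$keyDir/azure_vm_key"   # hypothetical key file name

if command -v ssh-keygen >/dev/null 2>&1; then
  # 2048-bit RSA key; -N "" sets an empty passphrase for this demo only.
  ssh-keygen -q -t rsa -b 2048 -N "" -f "$keyFile"
  echo "Public key to supply when creating the VM:"
  cat "$keyFile.pub"
else
  echo "ssh-keygen not found; install an OpenSSH client first."
fi

# Connect by pointing the client at the private key (placeholders as in the text):
# ssh -i "$keyFile" username@ipaddress
```

In practice you would normally protect the private key with a passphrase and keep it in the ~/.ssh folder so the client finds it without the -i option.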

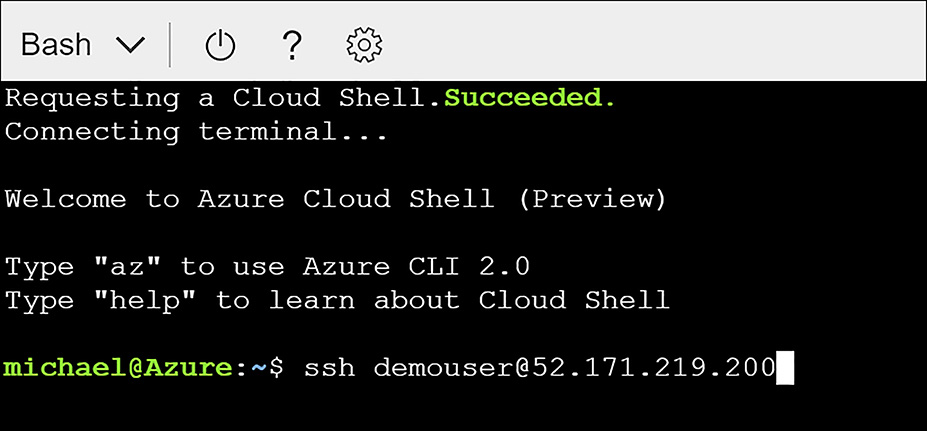

There are many options for SSH users on a Windows machine. For example, if you install the Windows Subsystem for Linux on Windows 10, you also get an SSH client that can be accessed from the bash command line. You can also install one of many GUI-based SSH clients, such as PuTTY. The Azure Cloud Shell shown in Figure 2-15 also provides an SSH client. So regardless of which operating system you are on, if you have a modern browser and can access the Azure portal, you can connect to your Linux VMs.

FIGURE 2-15 Connecting to a Linux VM using SSH from within the Azure Cloud Shell

MORE INFO OPTIONS FOR USING SSH FROM WINDOWS

There are plenty of options to connect to your Linux-based virtual machines from Windows. For more detail on SSH key management and some available clients, see https://docs.microsoft.com/en-us/azure/virtual-machines/linux/ssh-from-windows.

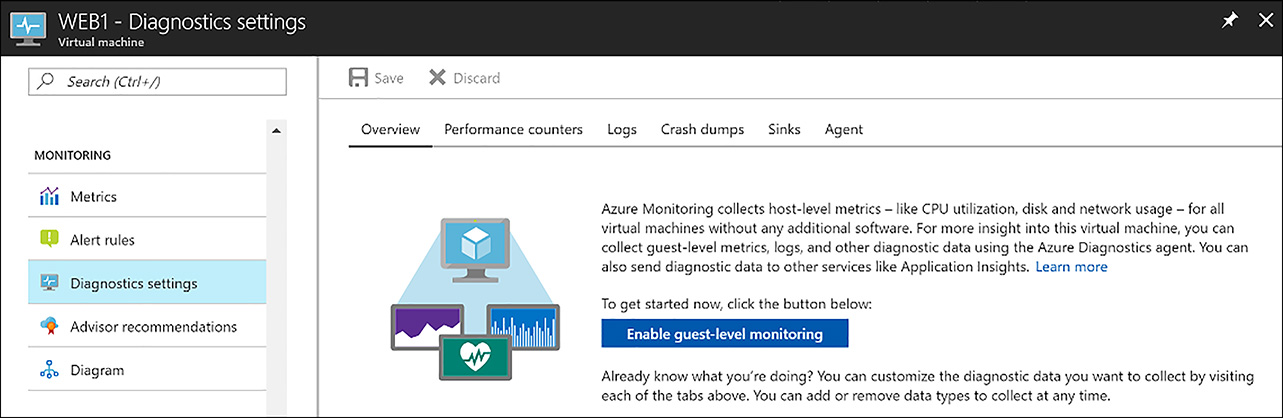

Skill 2.2: Perform configuration management

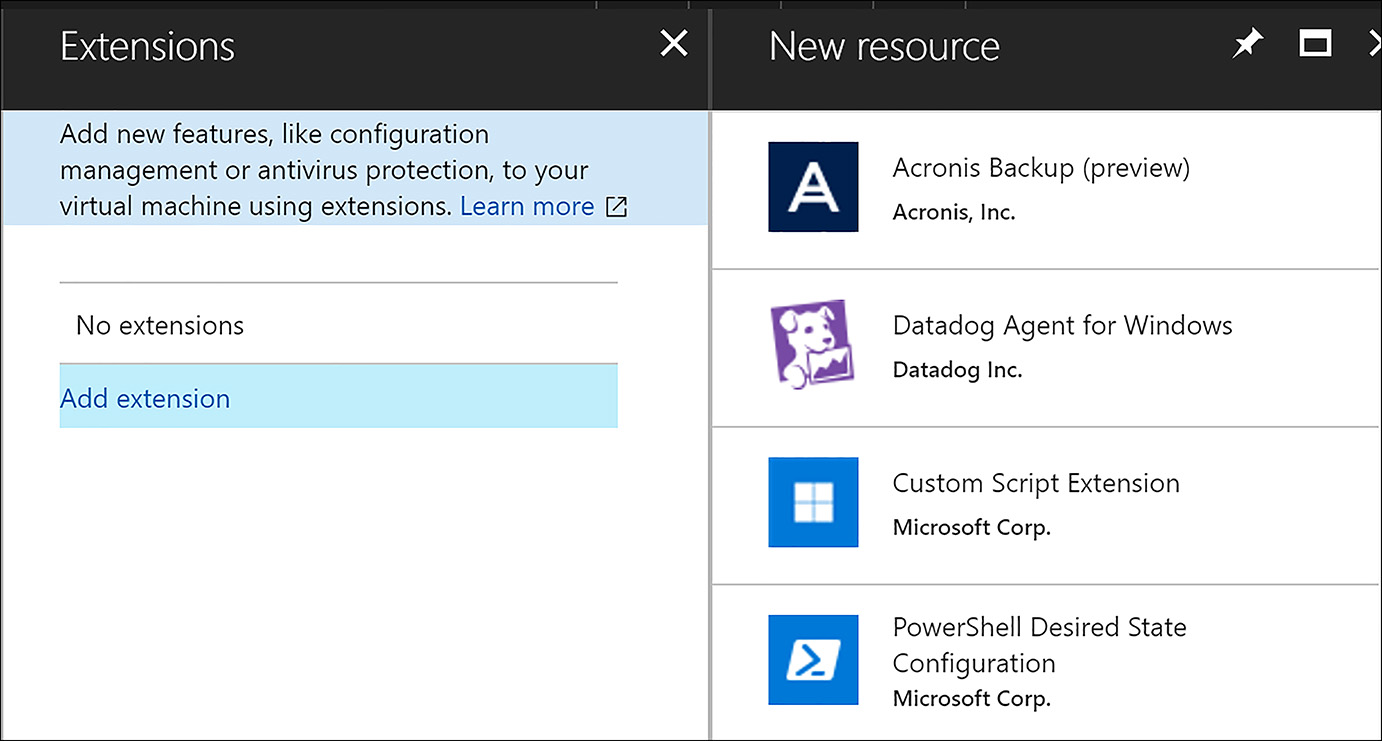

Azure virtual machines have a variety of built-in extensions that can enable configuration management as well as a variety of other operations such as installing software agents and even enabling remote debugging for live troubleshooting purposes. The two most common extensions for configuration management are the Windows PowerShell Desired State Configuration (DSC) extension and the more generic Custom Script Extension. Both extensions can be executed at provisioning time or after the virtual machine has already been started. The Windows PowerShell DSC Extension allows you to define the state of a virtual machine using the PowerShell Desired State Configuration language and apply it as well as perform continuous updates when integrated with the Azure Automation DSC service. The custom script extension can be used to execute an arbitrary command such as a batch file, regular PowerShell script, or a bash script. In addition to these extensions there are also more specific extensions that allow you to configure your virtual machines to use open source configuration management utilities such as Chef or Puppet and many others.

This skill covers how to:

Automate configuration management by using PowerShell Desired State Configuration (DSC) and VM Agent (custom script extensions)

Enable remote debugging

EXAM TIP

To use virtual machine extensions like Windows PowerShell DSC, Custom Script Extension, Puppet, and Chef on Windows, the Azure virtual machine agent must be installed on the virtual machine. By default, the agent is installed on virtual machines created after February 2014 (when the feature was added). However, you can choose not to install the agent on Windows-based virtual machines by omitting the ProvisionVMAgent parameter of the Set-AzureRmVMOperatingSystem cmdlet in PowerShell. If the agent is not installed at provisioning time, or if you have migrated a virtual hard disk from on-premises, you can manually install the agent on these virtual machines by downloading it from Microsoft at http://go.microsoft.com/fwlink/?LinkID=394789&clcid=0x409.

PowerShell Desired State Configuration

PowerShell Desired State Configuration (DSC) allows you to declaratively configure the state of the virtual machine. Using built-in resource providers or custom providers with a DSC script enables you to declaratively configure settings such as roles and features, registry settings, files and directories, firewall rules, and most settings available to Windows. One of the compelling features of DSC is that, instead of writing logic to detect and correct the state of the machine, the providers do that work for you and make the system state as defined in the script.

For example, the following DSC script declares that the Web-Server role should be installed, along with the Web-Asp-Net45 feature. The WindowsFeature code block represents a DSC resource. The resource has a property named Ensure that can be set to Present or Absent. In this example, the WindowsFeature resource verifies whether the Web-Server role is present on the target machine and if it is not, the resource installs it. It repeats the process for the Web-Asp-Net45 feature.

Configuration ContosoSimple

{

Node "localhost"

{

#Install the IIS Role

WindowsFeature IIS

{

Ensure = "Present"

Name = "Web-Server"

}

#Install ASP.NET 4.5

WindowsFeature AspNet45

{

Ensure = "Present"

Name = "Web-Asp-Net45"

}

}

}

In addition to the DSC resources included by default with PowerShell DSC, there is an open source DSC resource kit hosted in GitHub with many more resources that are maintained and updated by the Windows PowerShell engineering team, and of course you can write your own. To install a custom resource, download it and unzip it into the C:\Program Files\WindowsPowerShell\Modules folder. To learn about and download the latest DSC resource kit from Microsoft, see the following GitHub repo: https://github.com/PowerShell/DscResources.

The following example uses the xPSDesiredStateConfiguration module from the DSC resource kit to download a .zip file that contains the website content. This module can be installed using PowerShellGet by executing the following command from an elevated PowerShell prompt:

Install-Module -Name xPSDesiredStateConfiguration

# ContosoWeb.ps1

configuration Main

{

# Import the module that defines custom resources

Import-DscResource -Module xPSDesiredStateConfiguration

Node "localhost"

{

# Install the IIS role

WindowsFeature IIS

{

Ensure = "Present"

Name = "Web-Server"

}

# Install the ASP.NET 4.5 feature

WindowsFeature AspNet45

{

Ensure = "Present"

Name = "Web-Asp-Net45"

}

# Download the website content

xRemoteFile WebContent

{

Uri = "https://cs7993fe12db3abx4d25xab6.blob.core.windows.net/public/website.zip"

DestinationPath = "C:\inetpub\wwwroot"

DependsOn = "[WindowsFeature]IIS"

}

Archive ArchiveExample

{

Ensure = "Present"

Path = "C:\inetpub\wwwroot\website.zip"

Destination = "C:\inetpub\wwwroot"

DependsOn = "[xRemoteFile]WebContent"

}

}

}

Before the DSC script can be applied to a virtual machine, you must use the Publish-AzureRmVMDscConfiguration cmdlet to package the script into a .zip file. This cmdlet also imports any dependent DSC modules, such as xPSDesiredStateConfiguration, into the .zip file.

Publish-AzureRmVMDscConfiguration -ConfigurationPath .\ContosoWeb.ps1 -OutputArchivePath .\ContosoWeb.zip

The DSC configuration can then be applied to a virtual machine in several ways such as using the Azure portal, as shown in Figure 2-16.

FIGURE 2-16 Adding a VM extension

The Configuration Modules Or Script field expects the .zip file created by the call to the Publish-AzureRmVMDscConfiguration cmdlet. The Module-Qualified Name Of Configuration field expects the name of the script file (with the .ps1 extension), followed by a backslash and the name of the configuration in the script, which in the example shown in Figure 2-17 is ContosoWeb.ps1\Main.

FIGURE 2-17 Configuring a VM extension

One of the powerful features of PowerShell DSC is the ability to parameterize the configuration. This means you can create a single configuration that can exhibit different behaviors based on the parameters passed. The Configuration Data PSD1 file field is where you can specify these parameters in the form of a hashtable. You can learn more about how to separate configuration from environment data at https://docs.microsoft.com/en-us/powershell/dsc/separatingenvdata.

The PowerShell DSC extension also allows you to specify whether to use the latest version of the Windows Management Framework (WMF), which version of the DSC extension to use, and whether to automatically upgrade the minor version.

MORE INFO AZURE POWERSHELL DSC EXTENSION VERSIONS

You can find more about the DSC extension versions at https://blogs.msdn.microsoft.com/powershell/2014/11/20/release-history-for-the-azure-dsc-extension/. This blog post is actively maintained with new versions.

PowerShell DSC configurations can also be applied programmatically during a PowerShell deployment by using the Set-AzureRmVMDscExtension cmdlet. In the example below, the Publish-AzureRmVMDscConfiguration cmdlet is used to publish the packaged script to an existing Azure storage account before applying the configuration using the Set-AzureRmVMDscExtension cmdlet on an existing virtual machine.

$rgName = "Contoso"

$location = "westus"

$vmName = "DSCVM"

$storageName = "erstorage"

# Name of the configuration block defined inside ContosoWeb.ps1

$configurationName = "Main"

$archiveBlob = "ContosoWeb.ps1.zip"

$configurationPath = ".\ContosoWeb.ps1"

#Publish the configuration script into Azure storage

Publish-AzureRmVMDscConfiguration -ConfigurationPath $configurationPath `

-ResourceGroupName $rgName `

-StorageAccountName $storageName

#Set the VM to run the DSC configuration

Set-AzureRmVmDscExtension -Version 2.26 `

-ResourceGroupName $rgName `

-VMName $vmName `

-ArchiveStorageAccountName $storageName `

-ArchiveBlobName $archiveBlob `

-AutoUpdate:$false `

-ConfigurationName $configurationName

The PowerShell DSC extension can also be applied to a virtual machine created through an ARM template by extending and adding the resource configuration in the virtual machine’s resource section of the template. You learn more about authoring ARM templates in Chapter 5.

{

"name": "Microsoft.Powershell.DSC",

"type": "extensions",

"location": "[resourceGroup().location]",

"apiVersion": "2016-03-30",

"dependsOn": [

"[resourceId('Microsoft.Compute/virtualMachines', parameters('WebVMName'))]"

],

"tags": {

"displayName": "WebDSC"

},

"properties": {

"publisher": "Microsoft.Powershell",

"type": "DSC",

"typeHandlerVersion": "2.26",

"autoUpgradeMinorVersion": false,

"settings": {

"configuration": {

"url": "[parameters('DSCUri')]",

"script": "ContosoWeb.ps1",

"function": "Main"

},

"configurationArguments": {

"nodeName": "[parameters('WebVMName')]"

}

},

"protectedSettings": {

"configurationUrlSasToken": "[parameters('SasToken')]"

}

}

}

The previous examples apply the PowerShell DSC configuration only when the extension is executed. If the configuration of the virtual machine changes after the extension is applied, the configuration can drift from the state defined in the DSC configuration. The Azure Automation DSC service allows you to manage all your DSC configurations, resources, and target nodes from the Azure portal or from PowerShell. It also provides a built-in pull server so your virtual machines will automatically check on a scheduled basis for new configuration changes, or to compare the current configuration against the desired state and update accordingly.

MORE INFO AZURE AUTOMATION DSC

For more information on how to automatically apply PowerShell DSC configurations to your virtual machines see https://docs.microsoft.com/en-us/azure/automation/automation-dsc-overview.

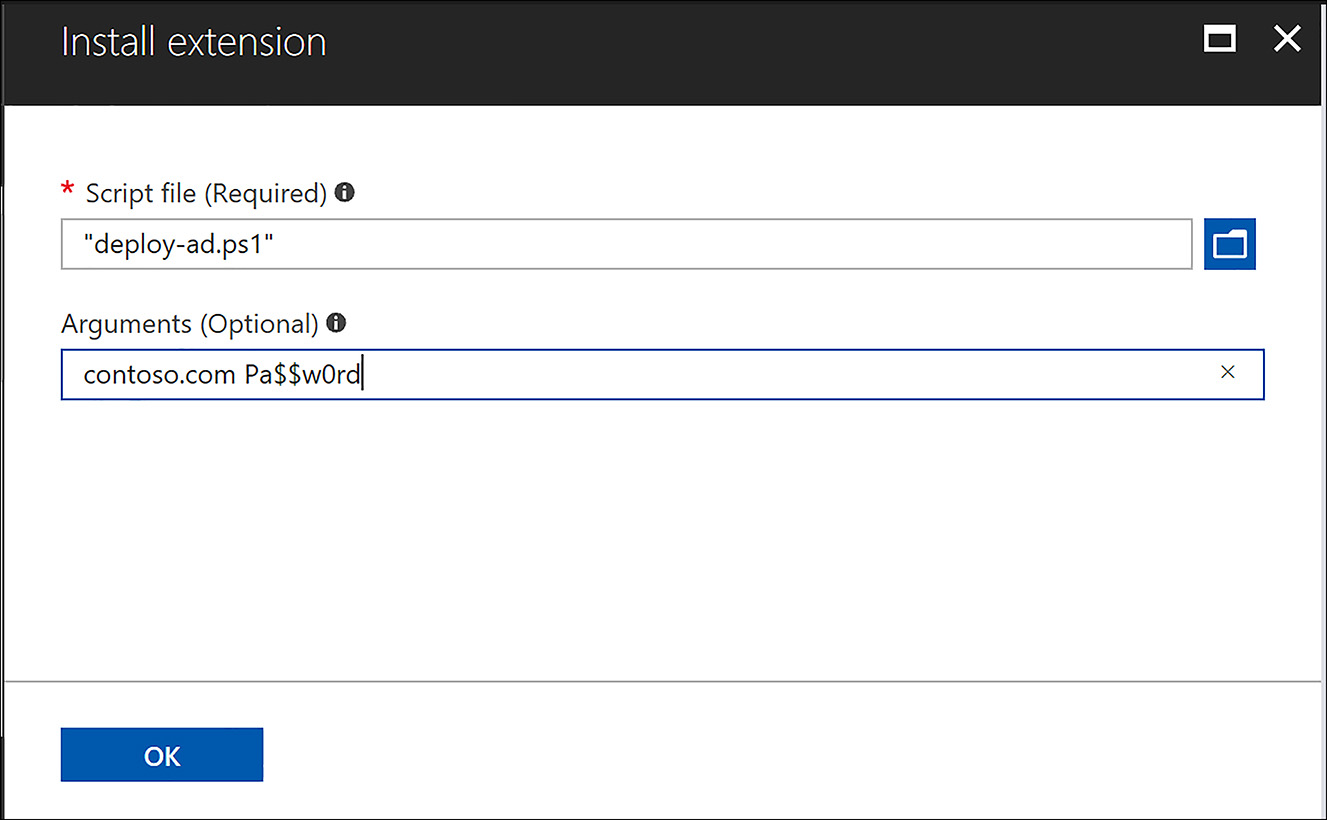

Using the custom script extension

The Azure custom script extension is supported on Windows and Linux-based virtual machines, and is ideal for bootstrapping a virtual machine to an initial configuration. To use the Azure custom script extension your script must be accessible via a URI such as an Azure storage account, and either accessed anonymously or passed with a shared access signature (SAS URL). The custom script extension takes as parameters the URI and the command to execute including any parameters to pass to the script. You can execute the script at any time the virtual machine is running.

Using the custom script extension (Azure portal)

To add the custom script extension to an existing virtual machine, open the virtual machine in the portal, click the Extensions link on the left, and choose the Custom Script Extension option. The script file is specified as well as any arguments passed to the script. Figure 2-18 shows how to enable this extension using the Azure portal.

FIGURE 2-18 Specifying the custom script extension configuration

Using the custom script extension (PowerShell)

Both the Azure PowerShell cmdlets and the Azure CLI tools can be used to execute scripts using the custom script extension. Starting with PowerShell, the following script deploys the Active Directory Domain Services role. It accepts two parameters: one is for the domain name and the other is for the administrator password.

# deploy-ad.ps1

param(

$domain,

$password

)

$smPassword = (ConvertTo-SecureString $password -AsPlainText -Force)

Install-WindowsFeature -Name "AD-Domain-Services" `

-IncludeManagementTools `

-IncludeAllSubFeature

Install-ADDSForest -DomainName $domain `

-DomainMode Win2012 `

-ForestMode Win2012 `

-Force `

-SafeModeAdministratorPassword $smPassword

You can use the Set-AzureRmVMCustomScriptExtension cmdlet to run this script on an Azure virtual machine. This scenario can be used for installing roles or any other type of iterative script you want to run on the virtual machine.

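$rgName = "Contoso"

$location = "WestUs"

$scriptName = "deploy-ad.ps1"

# $vmName, $storageAccount, and $password are assumed to be defined earlier in your session

$scriptUri = "http://$storageAccount.blob.core.windows.net/scripts/$scriptName"

$scriptArgument = "contoso.com $password"

# "DeployAD" is an illustrative name for the extension instance

Set-AzureRmVMCustomScriptExtension -ResourceGroupName $rgName `

-Location $location `

-VMName $vmName `

-Name "DeployAD" `

-FileUri $scriptUri `

-Argument $scriptArgument `

-Run $scriptName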

The FileUri parameter of the Set-AzureRmVMCustomScriptExtension cmdlet accepts the URI to the script, and the Run parameter tells the cmdlet the name of the script to run on the virtual machine. The script can also be specified using the StorageAccountName, StorageAccountKey, ContainerName, and FileName parameters that qualify its location in an Azure storage account.

Using the custom script extension (CLI)

You can also use the custom script extension for Linux-based virtual machines. The following example demonstrates a simple bash script that installs Apache and PHP. The script would need to be uploaded to an accessible HTTP location such as an Azure storage account or a GitHub repository for the custom script extension to access it and apply it to the virtual machine.

#!/bin/bash

# apache.sh

apt-get update

apt-get -y install apache2 php7.0 libapache2-mod-php7.0

apt-get -y install php-mysql

sudo a2enmod php7.0

apachectl restart

The following code example shows how this script can be applied to an Azure Virtual Machine named LinuxWebServer in the Contoso resource group.

rgName="Contoso"

vmName="LinuxWebServer"

az vm extension set --resource-group $rgName --vm-name $vmName --name CustomScript --publisher Microsoft.Azure.Extensions --settings ./cseconfig.json

The az vm extension set command can take the script to execute as a JSON-based configuration file, as the previous example demonstrates. The contents of this .json file are shown for reference:

{

"fileUris": [ "https://examplestorageaccount.blob.core.windows.net/scripts/apache.sh" ],

"commandToExecute": "./apache.sh"

}

EXAM TIP

There are many other ways of configuring and executing the custom script extension using the Azure CLI tools. The following article has several relevant examples that might appear in an exam: https://docs.microsoft.com/en-us/azure/virtual-machines/linux/extensions-customscript.

Like the PowerShell DSC extension, the custom script extension can be added to the resources section of an Azure Resource Manager template. The following example shows how to execute the same script using an ARM template instead of the CLI tools.

{

"name": "apache",

"type": "extensions",

"location": "[resourceGroup().location]",

"apiVersion": "2015-06-15",

"dependsOn": [

"[concat('Microsoft.Compute/virtualMachines/', parameters('scriptextensionName'))]"

],

"tags": {

"displayName": "installApache"

},

"properties": {

"publisher": "Microsoft.Azure.Extensions",

"type": "CustomScript",

"typeHandlerVersion": "2.0",

"autoUpgradeMinorVersion": true,

"settings": {

"fileUris": [

"https://examplestorageaccount.blob.core.windows.net/scripts/apache.sh"

],

"commandToExecute": "sh apache.sh"

}

}

}

MORE INFO TROUBLESHOOTING USING VIRTUAL MACHINE EXTENSION LOGS

If your custom script extension fails to execute, it’s a good idea to review the log files. On Windows, the logs are located at C:\WindowsAzure\Logs\Plugins\Microsoft.Compute.CustomScriptExtension. On Linux, they are located at /var/log/azure/Microsoft.Azure.Extensions.CustomScript.

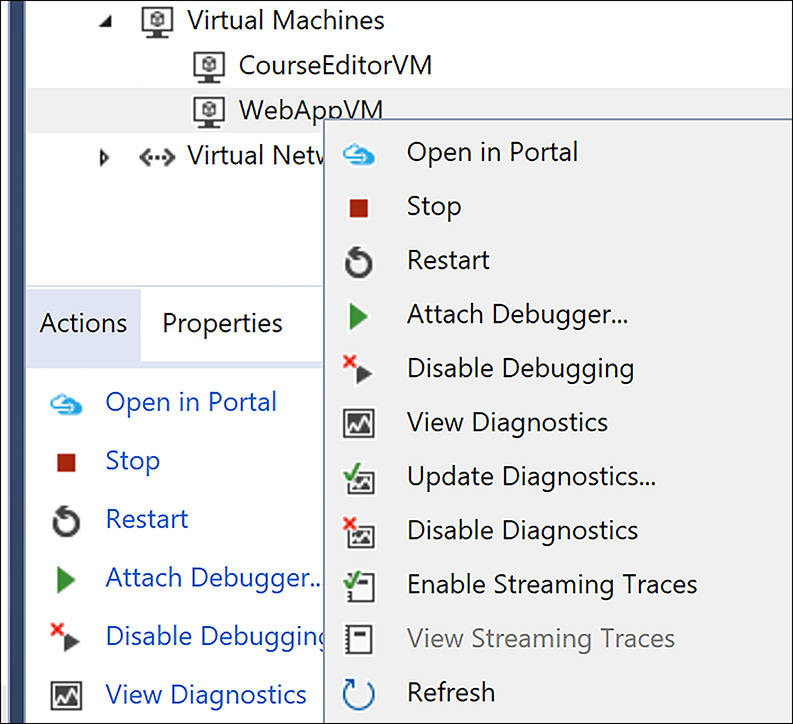

Enable remote debugging

Sometimes a problem with an application cannot be reproduced on a developer’s computer and only happens in a deployed environment. Azure Virtual Machines provides the ability to enable a developer using Visual Studio 2015 or above to attach a debugger directly to the offending process on the virtual machine and debug the problem as it happens.

To enable debugging, you should deploy a debug version of your application to the virtual machine, and then you can use the Visual Studio Cloud Explorer to find the virtual machine, right-click its name, and select the Enable Debugging option, as shown in Figure 2-19.

FIGURE 2-19 Enabling debugging with Visual Studio

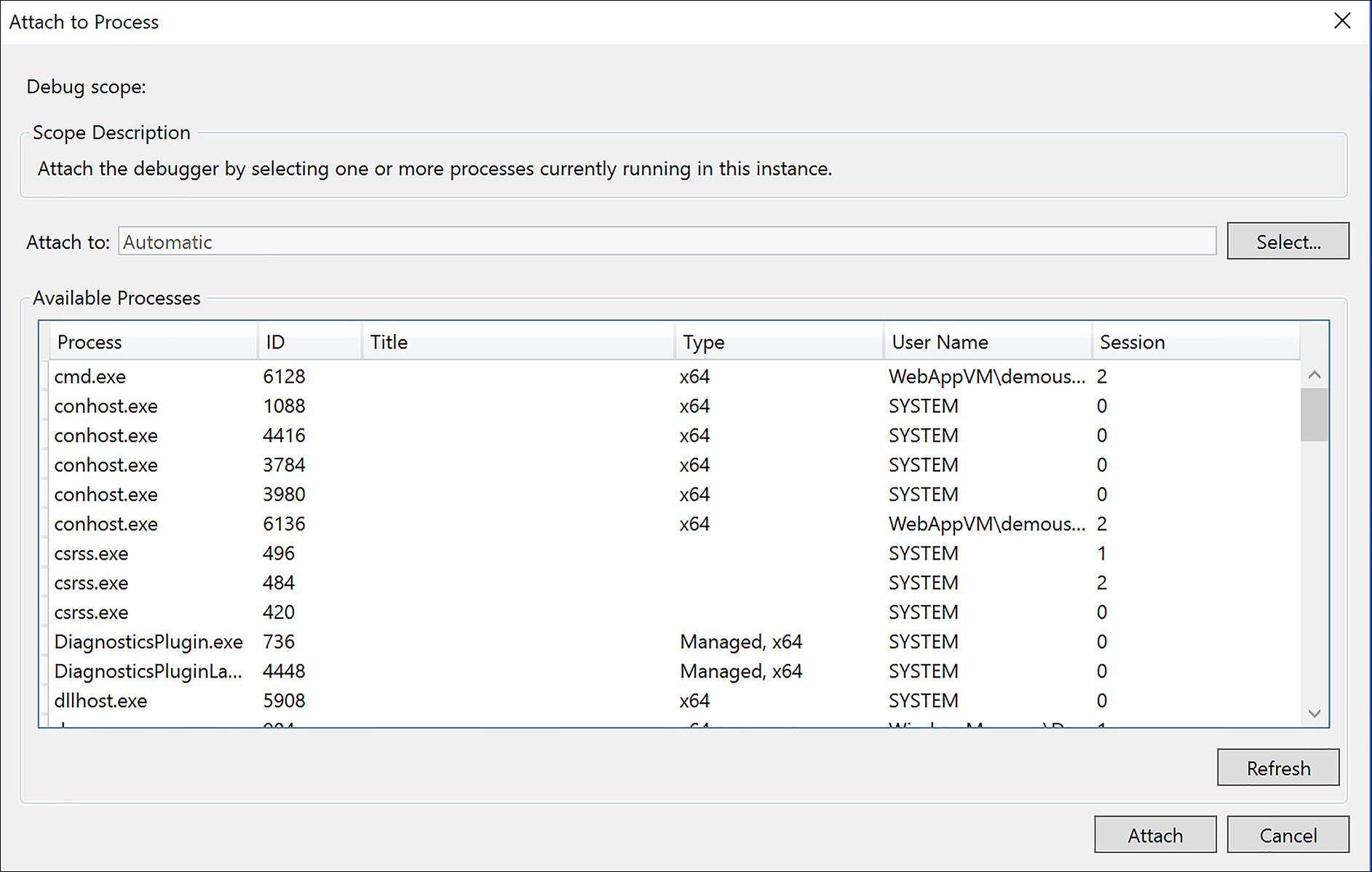

This step opens the necessary port on the network security group for your virtual machine, and then enables the virtual machine extension for remote debugging. After both tasks have completed, right-click the virtual machine once more and click the Attach Debugger option, as shown in Figure 2-20.

FIGURE 2-20 Attaching the debugger

Visual Studio prompts you to select the process on the virtual machine to debug, as shown in Figure 2-21. Select the process and click the Attach button. You are then able to set one or more breakpoints in the application and debug the problem directly on the offending virtual machine.

FIGURE 2-21 Selecting the process to debug

Skill 2.3: Design and implement VM Storage

There are many options to consider when designing a storage subsystem for your virtual machine infrastructure. Core requirements such as performance, durability, availability, security, and capacity must be considered, as well as what the requirements are for accessing the data from applications. Microsoft Azure offers a broad set of features and capabilities that solve each of these problems in their own way.

This skill covers how to:

Configure disk caching

Plan storage capacity

Configure operating system disk redundancy

Configure shared storage using Azure File service

Configure geo-replication

Encrypt disks

Implement ARM VMs with Standard and Premium storage

Virtual machine storage overview

It’s important to understand that there are many features and capabilities to plan and design for when implementing virtual machines in Microsoft Azure. In this section we summarize some of these features and terms before we go deeper with how to put the pieces together to create a storage solution for your virtual machine infrastructure.

Storage accounts and blob types

All persistent disks for an Azure Virtual Machine are stored in blob storage of an Azure Storage account. There are three types of blob files:

Block blobs are used to hold ordinary files up to about 4.7 TB.

Page blobs are used to hold random access files up to 8 TB in size. These are used for the VHD files that back VMs.

Append blobs are made up of blocks like the block blobs, but are optimized for append operations. These are used for things like logging information to the same blob from multiple VMs.

An Azure Storage account can be one of three types:

Standard The most widely used storage accounts are Standard storage accounts, which can be used for all types of data. Standard storage accounts use magnetic media to store data.

Premium Premium storage provides high-performance storage for page blobs, which are primarily used for VHD files. Premium storage accounts use SSD to store data. Microsoft recommends using Premium Storage for all your VMs.

Blob The Blob Storage account is a specialized storage account used to store block blobs and append blobs. You can’t store page blobs in these accounts; therefore you can’t store VHD files. These accounts allow you to set an access tier to Hot or Cool; the tier can be changed at any time. The hot access tier is used for files that are accessed frequently—you pay a higher cost for storage, but the cost of accessing the blobs is much lower. For blobs stored in the cool access tier, you pay a higher cost for accessing the blobs, but the cost of storage is much lower.

Table 2-2 provides a mapping of what services are available and which blobs are supported by the storage account type.

TABLE 2-2 Services by Azure storage account type

| Account Type | General-purpose Standard | General-purpose Premium | Blob storage, hot and cool access tiers |
| --- | --- | --- | --- |
| Services | Blob, File, Queue Services | Blob Service | Blob Service |
| Types of blobs | Block blobs, page blobs, and append blobs | Page blobs | Block blobs and append blobs |

Storage account replication

Each Azure storage account has built in replication to ensure the durability of its data. Depending on the storage account type these replication options can be changed for different types of behaviors.

Locally redundant storage (LRS) Each blob has three copies in the data center.

Geo-redundant storage (GRS) Each blob has three copies in the data center, and is asynchronously replicated to a second region for a total of six copies. In the event of a failure at the primary region, Azure Storage fails over to the secondary region.

Read-access geo-redundant storage (RA-GRS) The same as GRS, except you can access the replicated data (read only) regardless of whether a failover has occurred.

Zone redundant storage (ZRS) Each blob has three copies in the data center, and is asynchronously replicated to a second data center in the same region for a total of six copies. Note that ZRS is only available for block blobs (no VM disks) in general-purpose storage accounts. Also, once you have created your storage account and selected ZRS, you cannot convert it to any other type of replication, or vice versa.
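The replication option is chosen through the storage account SKU. The following sketch maps the abbreviations in the list above to the SKU names accepted by az storage account create and prints the resulting command. The storage account name is hypothetical (real names must be globally unique), and the run helper that prints instead of executing is an assumption made for this example.

```shell
#!/bin/bash
# Dry-run helper (an assumption for this sketch): prints each command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

rgName="Contoso"
location="westus"
storageName="contosostoragedemo"   # hypothetical; must be globally unique

# Map a replication option to its Standard storage account SKU name.
sku_for() {
  case "$1" in
    LRS)    echo "Standard_LRS" ;;
    GRS)    echo "Standard_GRS" ;;
    RA-GRS) echo "Standard_RAGRS" ;;
    ZRS)    echo "Standard_ZRS" ;;
    *)      echo "unknown replication option: $1" >&2; return 1 ;;
  esac
}

create_account() {
  # Stop before building the command if the option is not recognized.
  sku="$(sku_for "$1")" || return 1
  run az storage account create \
    --name "$storageName" \
    --resource-group "$rgName" \
    --location "$location" \
    --sku "$sku"
}

create_account RA-GRS
```

Premium storage accounts use the Premium_LRS SKU, which is why the mapping above covers only the Standard options.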

Azure disks

Azure VMs use three types of disks:

Operating System Disk (OS Disk) The C drive in Windows or /dev/sda on Linux. This disk is registered as a SATA drive and has a maximum capacity of 2048 gigabytes (GB). This disk is persistent and is stored in Azure storage.

Temporary Disk The D drive in Windows or /dev/sdb on Linux. This disk is used for short term storage for applications or the system. Data on this drive can be lost during a maintenance event, or if the VM is moved to a different host, because the data is stored on the local disk of the host.

Data Disk Registered as a SCSI drive. These disks can be attached to a virtual machine, the number of which depends on the VM instance size. Data disks have a maximum capacity of 4095 gigabytes (GB). These disks are persistent and stored in Azure Storage.

There are two types of disks in Azure: Managed or Unmanaged.

Unmanaged disks With unmanaged disks you are responsible for ensuring for the correct distribution of your VM disks in storage accounts for capacity planning as well as availability. An unmanaged disk is also not a separate manageable entity. This means that you cannot take advantage of features like role based access control (RBAC) or resource locks at the disk level.

Managed disks Managed disks handle storage for you by automatically distributing your disks in storage accounts for capacity and by integrating with Azure Availability Sets to provide isolation for your storage, just as availability sets do for virtual machines. Managed disks also make it easy to change between Standard and Premium storage (HDD to SSD) without the need to write conversion scripts.

MORE INFO DISKS AND VHDS

For more information on disks and VHDs, see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/about-disks-and-vhds.

Operating system images

In addition to using the VM images from the Azure marketplace, Azure also provides the ability to upload your own image or create a custom image directly in the cloud.

VM images are captured from an existing VM that has been prepared using the Windows program sysprep.exe or the Microsoft Azure Linux Agent (waagent) to make the operating system generalized. Generalized means that VM specific settings such as hostname, user accounts, domain join information, and so on are removed from the operating system so it is in a state to be provisioned on a new VM. Generalization does not remove customizations such as installation of software, patches, additional files, and folders. This capability is what makes VM images a great solution for providing pre-configured and tested solutions for VMs or VM Scale Sets.

Like Azure disks, there are managed and unmanaged images. Prior to the launch of Azure Managed Disks, unmanaged images were your only option. The primary problem that managed images solve over unmanaged images is storage account management. With unmanaged images, you can only create a new VM in the same storage account that the image resides in. This means that if you wanted to use the image in another storage account, you would have to use one of the storage tools to copy it to the new storage account first and then create the VM from it. Managed images solve this problem for the most part. Once a managed image exists, you can create a VM from it using managed disks without worrying about the storage account configuration. This applies only to VMs created in the same region. If you want to create the VM in a remote region, you must still copy the managed image to the remote region first.

To create a VM image, you first generalize the operating system. In Windows, you do this with the sysprep.exe tool, as shown in Figure 2-22. After this tool has completed execution, the VM is in a generalized state and shut down.

FIGURE 2-22 Using the System Preparation tool to generalize a Windows VM

The command to generalize a Linux VM using the waagent program is shown here:

sudo waagent -deprovision+user

After the VM is generalized, you then deallocate the VM, set its status to generalized, and then use the Save-AzureRmVMImage cmdlet to capture the VM (including operating system disks) into a container in the same storage account. This cmdlet saves the disk configuration (including URIs to the VHDs) in a .json file on your local file system.

Creating an unmanaged VM image (PowerShell)

The following example shows how to use the Azure PowerShell cmdlets to save an unmanaged image using the Save-AzureRmVMImage cmdlet.

# Deallocate the VM

$rgName = "Contoso"

$vmName = "ImageVM"

Stop-AzureRmVM -ResourceGroupName $rgName -Name $vmName

# Set the status of the virtual machine to Generalized

Set-AzureRmVm -ResourceGroupName $rgName -Name $vmName -Generalized

$containerName = "vmimage"

$vhdPrefix = "img"

$localPath = "C:\Local\ImageConfig"

Save-AzureRmVMImage -ResourceGroupName $rgName -Name $vmName `
-DestinationContainerName $containerName -VHDNamePrefix $vhdPrefix `
-Path $localPath

Creating a managed VM image (PowerShell)

This example shows how to create a managed VM image using PowerShell. This snippet uses the New-AzureRmImageConfig and New-AzureRmImage cmdlets.

# Deallocate the VM

$rgName = "Contoso"

$vmName = "ImageVM"

Stop-AzureRmVM -ResourceGroupName $rgName -Name $vmName

# Set the status of the virtual machine to Generalized

Set-AzureRmVm -ResourceGroupName $rgName -Name $vmName -Generalized

# Create a managed image from the VM

$imageName = "WinVMImage"

$vm = Get-AzureRmVM -ResourceGroupName $rgName -Name $vmName

$image = New-AzureRmImageConfig -Location $vm.Location -SourceVirtualMachineId $vm.Id

New-AzureRmImage -Image $image -ImageName $imageName -ResourceGroupName $rgName

Creating a managed VM image (CLI)

This example uses the az vm generalize and az image commands from the CLI tools to create a managed VM image.

# Create a Managed Image

rgName="Contoso"

vmName="ImageVM"

imageName="LinuxImage"

# Deallocate the VM

az vm deallocate --resource-group $rgName --name $vmName

# Set the status of the virtual machine to Generalized

az vm generalize --resource-group $rgName --name $vmName

az image create --resource-group $rgName --name $imageName --source $vmName

Creating a VM from an image

Creating a VM from an image is very similar to creating a VM using an Azure Marketplace image. There are differences depending on whether you start with an unmanaged image or a managed image. For example, when using an unmanaged image, you must ensure that the destination operating system and data disk URIs for your VM reference the same storage account that your image resides in, and then you reference the operating system image by its URI in the storage account.

To specify an image using PowerShell, set the -SourceImageUri parameter of the Set-AzureRmVMOSDisk cmdlet.

$vm = Set-AzureRmVMOSDisk -VM $vm -Name $osDiskName -VhdUri $osDiskUri `
-CreateOption fromImage -SourceImageUri $imageURI -Windows

Using the CLI tools, specify the URI using the image parameter of the az vm create command.

az vm create --resource-group $rgName --name $vmName --image $osDiskUri --generate-ssh-keys
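The same-storage-account requirement for unmanaged images can be checked before you attempt a deployment. The following is a minimal sketch, not part of the Azure tooling: the helper functions storage_account_of and same_storage_account, and the sample URIs, are hypothetical names introduced for illustration. It assumes blob URIs of the usual form https://<account>.blob.core.windows.net/<container>/<blob>.

```shell
#!/usr/bin/env bash

# Extract the storage account name from a blob URI of the form
# https://<account>.blob.core.windows.net/<container>/<blob>.vhd
storage_account_of() {
  local uri="$1"
  local host="${uri#*://}"   # drop the scheme
  host="${host%%/*}"         # keep only the host name
  echo "${host%%.*}"         # the first DNS label is the account name
}

# Succeeds only when both URIs point into the same storage account.
same_storage_account() {
  [ "$(storage_account_of "$1")" = "$(storage_account_of "$2")" ]
}

# Hypothetical URIs, for illustration only.
imageUri="https://contosostorage.blob.core.windows.net/system/img-osDisk.vhd"
osDiskUri="https://contosostorage.blob.core.windows.net/vhds/newvm-os.vhd"

if same_storage_account "$imageUri" "$osDiskUri"; then
  echo "OK: image and OS disk share a storage account"
else
  echo "Mismatch: copy the image to the target storage account first"
fi
```

If the check reports a mismatch, copy the image VHD to the destination storage account before creating the VM.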

To create a VM from a managed image with PowerShell, you first retrieve the image ID and pass it to Set-AzureRmVMSourceImage instead.

$image = Get-AzureRmImage -ImageName $imageName -ResourceGroupName $rgName

$vmConfig = Set-AzureRmVMSourceImage -VM $vmConfig -Id $image.Id

Using the CLI tools saves a step because it retrieves the image ID for you; you just need to specify the name of your managed image.

az vm create -g $rgName -n $vmName --image $imageName

EXAM TIP

Image management is an incredibly important topic, and having a solid understanding of the various options and techniques is undoubtedly valuable for passing the exam. Understanding how to create VMs from images and URIs, attach data disks, and copy disks from one storage account to another will certainly not hurt your chances. You can learn more about managing images using the CLI tools at https://docs.microsoft.com/en-us/azure/virtual-machines/linux/capture-image and using PowerShell at https://docs.microsoft.com/en-us/azure/virtual-machines/windows/capture-image-resource.

Virtual machine disk caching

Azure disks (operating system and data) have configurable cache settings that you should be aware of when designing systems for durability and performance. The caching behavior differs depending on whether you are using Standard storage or Premium storage.

Caching works on Standard storage by buffering reads and writes on the local physical disk of the host server that the virtual machine is running on. Virtual machines that use Azure Premium Storage have a multi-tier caching technology called BlobCache. BlobCache uses a combination of the virtual machine's RAM and local SSD for caching. This cache is available for the Premium Storage persistent disks and the VM local disks. By default, this cache setting is set to Read/Write for operating system disks and Read Only for data disks hosted on Premium storage. With disk caching enabled on the Premium storage disks, virtual machines can achieve extremely high levels of performance that exceed the underlying disk performance.

There are three settings that can be applied to your disks (Standard and Premium):

None Configure host-cache as None for write-only and write-heavy disks.

Read Only Configure host-cache as ReadOnly for read-only and read-write disks.

Read Write Configure host-cache as ReadWrite only if your application properly handles writing cached data to persistent disks when needed.
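The guidance in this list can be expressed as a simple lookup. The following is a minimal sketch only: the function name recommend_host_cache and the workload pattern labels are hypothetical, chosen for illustration, and the mapping simply restates the three recommendations above.

```shell
#!/usr/bin/env bash

# Hypothetical helper: maps a workload's I/O pattern to a host-cache setting.
# The pattern labels are assumptions made for this sketch.
recommend_host_cache() {
  local pattern="$1"
  case "$pattern" in
    write-only|write-heavy) echo "None" ;;
    read-only|read-write)   echo "ReadOnly" ;;
    # Only appropriate when the application itself ensures cached
    # writes reach the persistent disk when needed.
    read-write-flush-safe)  echo "ReadWrite" ;;
    *) echo "unknown pattern: $pattern" >&2; return 1 ;;
  esac
}

recommend_host_cache write-heavy   # prints None
recommend_host_cache read-write    # prints ReadOnly
```

Treat the result as a starting point; the right setting still depends on how your application handles writes.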

You can change the host caching setting at any time, but understand that when the cache setting is changed, the disk is detached and then reattached to the virtual machine. As a best practice, you should ensure that none of your applications are actively using the disk when you change the cache setting. Changing the operating system disk’s host cache setting results in the virtual machine being rebooted.

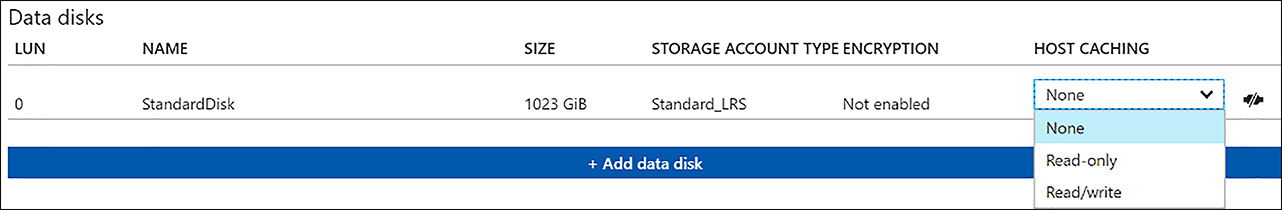

The host cache setting can be modified for a disk by using the Azure portal as shown in Figure 2-23, the command line tools, an ARM template, or via a call to the REST API.

FIGURE 2-23 Setting the Host Caching options

With PowerShell, use the Set-AzureRmVMDataDisk cmdlet to modify the cache setting of a disk. In the following example, an existing virtual machine configuration is returned using the Get-AzureRmVM cmdlet, the disk configuration is modified using Set-AzureRmVMDataDisk, and then the virtual machine is updated using the Update-AzureRmVM cmdlet. You would use the Set-AzureRmVMOSDisk cmdlet instead to update the operating system disk. The Set-AzureRmVMDataDisk cmdlet also supports a Name parameter if you would rather update the disk by name instead of using the LUN.

$rgName = "StorageRG"

$vmName = "StandardVM"

$vm = Get-AzureRmVM -ResourceGroupName $rgName -Name $vmName

Set-AzureRmVMDataDisk -VM $vm -Lun 0 -Caching ReadOnly

Update-AzureRmVM -ResourceGroupName $rgName -VM $vm

Using the Azure CLI, there are two commands to use depending on whether the virtual machine uses unmanaged or managed disks. Also, the host cache setting can only be specified when attaching a disk, by passing the caching parameter to az vm unmanaged-disk attach for unmanaged disks or to az vm disk attach for managed disks. This means you would need to detach and then reattach an existing VHD to modify the cache setting, or you can specify it during the creation of a new disk, as the following example demonstrates.

rgName="StorageRG"

vmName="StandardVM"

diskName="ManagedDisk"

az vm disk attach --vm-name $vmName --resource-group $rgName --size-gb 128 --disk $diskName --caching ReadWrite --new

To configure the disk cache setting using an ARM template, specify the caching property of the osDisk, or of each disk in the dataDisks collection, in the virtual machine’s storageProfile configuration. The following example shows how to set the cache setting on a data disk.

"dataDisks": [

{

"name": "datadisk1",

"diskSizeGB": "1023",

"lun": 0,

"caching": "ReadOnly",

"vhd": { "uri": "[variables('DISKURI')]" },

"createOption": "Empty"

}

]

Planning for storage capacity

Planning for storage capacity is a key exercise when you are deploying a new workload or migrating an existing workload. In Azure Storage, there are several considerations to be aware of. The first is the size of the disks themselves. For an Azure virtual machine, the maximum capacity of a disk is 4095 GB (4 TB). Currently, the maximum number of data disks you can attach to a single virtual machine is 64 with the G5/GS5 instance size, for a total storage capacity of approximately 256 TB (64 disks of 4 TB each).
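The upper bound follows directly from multiplying the two limits quoted above. The following is a minimal sketch of that arithmetic; the variable names are illustrative, and the conversion assumes 1 TB = 1024 GB, which matches the 4095 GB (4 TB) figure in the text.

```shell
#!/usr/bin/env bash

# Limits taken from the paragraph above.
max_disk_gb=4095     # maximum size of a single data disk, in GB
max_data_disks=64    # maximum data disks on the largest instance sizes

# Raw capacity if every data disk slot holds a maximum-size disk.
total_gb=$(( max_disk_gb * max_data_disks ))

# Convert to TB (1 TB = 1024 GB here), rounding to the nearest whole TB.
total_tb=$(( (total_gb + 512) / 1024 ))

echo "Total: ${total_gb} GB (about ${total_tb} TB)"
# prints: Total: 262080 GB (about 256 TB)
```

Smaller instance sizes support fewer data disks, so substitute the data disk limit of the size you actually plan to deploy.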

In addition to the size limitations, it is important to understand that capacity planning differs depending on whether you are using Standard or Premium storage, and whether you are using Managed or Unmanaged disks. From a capacity planning perspective, the primary difference is that with Unmanaged disks you must account for the capacity of the storage accounts that hold the disks, whereas with Managed disks you do not.

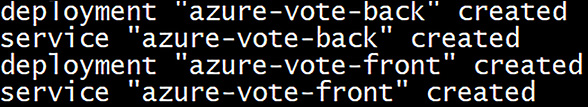

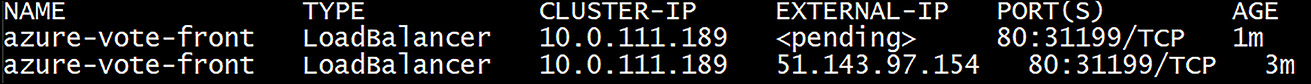

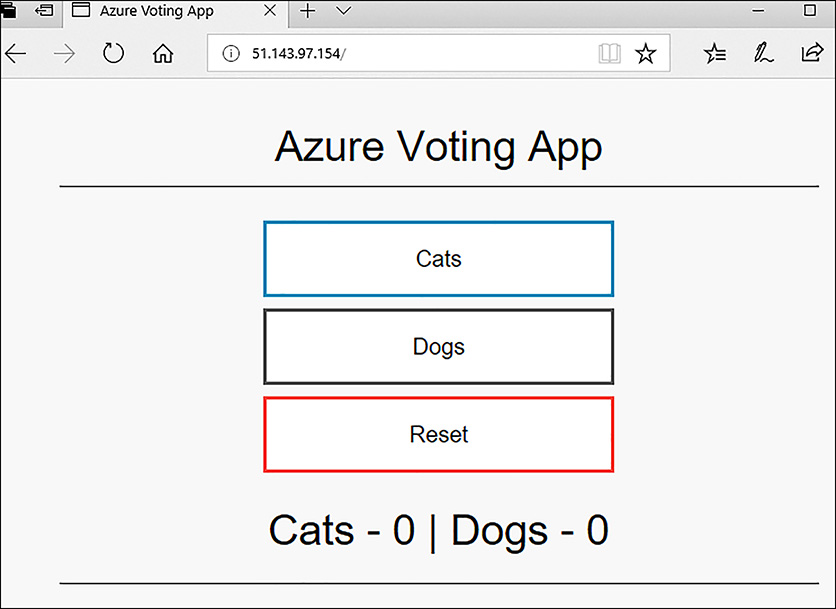

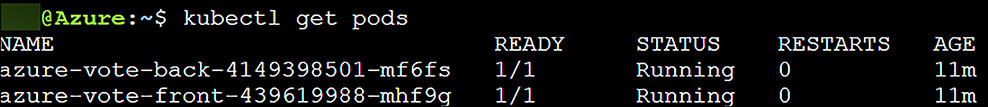

Capacity planning with Standard storage