During the years 1834 to 1845, Hamilton found a system of ordinary differential equations, now called the Hamiltonian canonical system, that is equivalent to the Euler-Lagrange equation (1744). He also derived the Hamilton-Jacobi equation (HJE), which was subsequently improved and modified by Jacobi in 1838 [114, 130]. Later, in 1952, Bellman developed the discrete-time equivalent of the HJE, which is called the dynamic programming principle [64], and the name Hamilton-Jacobi-Bellman equation (HJBE) was coined (see [287] for a historical perspective). To this day, the works of these three great mathematicians have remained the cornerstone of analytical mechanics and modern optimal control theory.

In mechanics, Hamilton-Jacobi theory (HJT) is an extension of Lagrangian mechanics and concerns itself with a directed search for a coordinate transformation in which the equations of motion can be easily integrated. The equations of motion of a given mechanical system can often be simplified considerably by a suitable transformation of variables such that all the new position and momentum coordinates are constants. A special type of transformation is chosen in such a way that the new equations of motion retain the same form as in the former coordinates; such a transformation is called canonical or contact and can greatly simplify the solution to the equations. In 1838, Hamilton developed the method for obtaining the desired transformation equations using what is today known as Hamilton’s principle. It turns out that the required transformation can be obtained by finding a smooth function S, called a generating function or Hamilton’s principal function, which satisfies a certain nonlinear first-order partial-differential equation (PDE) also known as the Hamilton-Jacobi equation (HJE).

Unfortunately, the HJE, being nonlinear, is very difficult to solve; thus, it might appear that little practical advantage has been gained in the application of the HJT. Nevertheless, under certain conditions, for instance when the Hamiltonian (to be defined later) is independent of time, it is possible to separate the variables in the HJE, and the solution can then always be reduced to quadratures. Hence, the HJE becomes a useful computational tool only when such a separation of variables can be achieved.
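To illustrate how separation of variables reduces the HJE to quadratures, the following sketch uses an assumed textbook example that is not taken from the text: a free particle of mass m with time-independent Hamiltonian H(q, p) = p²/(2m), for which the HJE reads ∂S/∂t + (1/2m)(∂S/∂q)² = 0. Separating S(q, t) = W(q) − E t gives W(q) = √(2mE) q by a single quadrature, and the code checks the residual of the HJE numerically with central differences. The function names and the values of m and E are illustrative assumptions.

```python
import math

# Assumed example (not from the text): free particle, H(q, p) = p**2 / (2*m).
# Hamilton-Jacobi equation: dS/dt + H(q, dS/dq) = 0.


def principal_function(q, t, m=1.0, E=0.5):
    """Separated solution S(q, t) = W(q) - E*t with W(q) = sqrt(2*m*E) * q."""
    return math.sqrt(2.0 * m * E) * q - E * t


def hje_residual(q, t, m=1.0, E=0.5, h=1e-5):
    """Return S_t + S_q**2 / (2m), with both derivatives by central differences."""
    s_t = (principal_function(q, t + h, m, E) - principal_function(q, t - h, m, E)) / (2.0 * h)
    s_q = (principal_function(q + h, t, m, E) - principal_function(q - h, t, m, E)) / (2.0 * h)
    return s_t + s_q**2 / (2.0 * m)


for q, t in [(0.0, 0.0), (1.3, 2.0), (-0.7, 5.0)]:
    print(f"q = {q:5.2f}, t = {t:4.1f}, HJE residual = {hje_residual(q, t):.1e}")
```

Here the separation constant E plays the role of the new (constant) momentum coordinate, which is exactly the kind of simplification a canonical transformation is meant to deliver.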

Subsequently, it was long recognized from the calculus of variations that the variational approach to the problems of mechanics could be applied equally efficiently to solve the problems of optimal control [47, 130, 164, 177, 229, 231]. Thus, terms like “Lagrangian,” “Hamiltonian” and “canonical equations” found their way into, and were assimilated by, the optimal control literature. Consequently, it is not surprising that the same HJE that governs the behavior of a mechanical system also governs the behavior of an optimally controlled system. In this context, time-optimal control problems (which deal with switching curves and surfaces, and can be implemented by relay switches) were extensively studied by mathematicians in the United States and the Soviet Union. In the period 1953 to 1957, Bellman, Pontryagin et al. [229, 231] and LaSalle [157] developed the basic theory of minimum-time problems and presented results concerning the existence, uniqueness and general properties of time-optimal control.

However, classical variational theory could not readily handle “hard” constraints usually associated with control problems. This led Pontryagin and co-workers to develop the famous maximum principle, which was first announced at the International Congress of Mathematicians held in 1958 at Edinburgh. Thus, while the maximum principle may be regarded as an outgrowth of the Hamiltonian approach to variational problems, the method of dynamic programming of Bellman may be viewed as an offshoot of the Hamilton-Jacobi approach to variational problems.

In recent years, beginning around 1990, there has been renewed interest in the application of the HJBE to the control of nonlinear systems. This has been motivated by the successful development of the H∞

Invariably, however, as in mechanics, the biggest bottleneck to the practical application of the nonlinear equivalent of the H∞

1.1 Historical Perspective on Nonlinear H∞

The breakthrough in the derivation of the elegant state-space formulas for the solution of the standard linear H∞

The solution of the output-feedback problem with dynamic measurement-feedback for affine nonlinear systems was presented by Ball et al. [53], Isidori and Astolfi [138, 139, 141], Lu and Doyle [191, 190] and Pavel and Fairman [223], while the solution for a general class of nonlinear systems was presented by Isidori and Kang [145]. At the same time, the solutions of the discrete-time state and dynamic output-feedback problems were presented by Lin and Byrnes [77, 74, 182, 183, 184] and Guillard et al. [125, 126]. Another approach to the discrete-time problem, using risk-sensitive control and the concept of information-state for output-feedback dynamic games, was also presented by James and Baras [151, 150, 149] for a general class of discrete-time nonlinear systems. The solution is expressed in terms of dissipation inequalities; however, the resulting controller is infinite-dimensional. In addition, a control Lyapunov-function approach to the global output-regulation problem via measurement-feedback, for a class of nonlinear systems in which the nonlinear terms depend on the output of the system, was also considered by Battilotti [62].

Furthermore, the solution of the problem for the continuous time-varying affine nonlinear systems was presented by Lu [189], while the mixed H2

Finally, the filtering problem for affine nonlinear systems has been considered by Berman and Shaked [66, 244], while the robust filtering problem was discussed by Nguang and Fu [210, 211] for continuous-time systems and by Xie et al. [279] for a class of discrete-time affine nonlinear systems.

A more general case of the problem though is the singular nonlinear H∞

A more recent contribution to the literature has considered a factorization approach to the problem, which had earlier been initiated by Ball and Helton [52, 49] but was discounted because of the inherent difficulties with the approach. This was also the case for the earlier approaches to the linear problem, which emphasized factorization and interpolation in lieu of state-space tools [290, 101]. These approaches are the J-j-inner-outer factorization and spectral-factorization proposed by Ball and van der Schaft [54], and a chain-scattering matrix approach considered by Baramov and Kimura [55], and Pavel and Fairman [224]. While the former approach tries to generalize the approach in [118, 117] to the nonlinear case (the solution is only given for stable invertible continuous-time systems), the latter approach applies the method of conjugation and chain-scattering matrix developed for linear systems in [163] to derive the solution of the nonlinear problem. An important outcome of the above endeavors using the factorization approach has been the derivation of state-space formulas for coprime-factorization and inner-outer factorization of nonlinear systems [240, 54], which were hitherto unavailable [52, 128, 188]. This has paved the way for employing these state-space factors in balancing, stabilization and design of reduced-order controllers for nonlinear systems [215, 225, 240, 33].

In the next section, we present the general setup and an overview of the nonlinear H∞

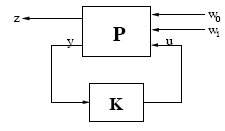

FIGURE 1.1

Feedback Configuration for Nonlinear H∞

1.2 General Set-Up for Nonlinear H∞

In this section, we present a general framework and an overview of nonlinear H∞

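$$
\text{CT:}\quad P^c:\begin{cases}\dot{x}=f(x,u,w), & x(t_0)=x_0\\ y=h_y(x,w)\\ z=h_z(x,u)\end{cases} \tag{1.1}
$$

$$
\text{DT:}\quad P^d:\begin{cases}x_{k+1}=f(x_k,u_k,w_k), & x(k_0)=x_0\\ y_k=h_y(x_k,w_k)\\ z_k=h_z(x_k,u_k), & k\in\mathbb{Z}\end{cases} \tag{1.2}
$$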

where x ∈ X

We begin with the following definitions.

Definition 1.2.1 A solution or trajectory of the system Pc at any time t ∈ ℜ from an initial state x(t0) = x0 due to an input u[t0,t] will be denoted by x(t, t0, x0, u[t0,t]) or ϕ(t, t0, x0, u[t0,t]). Similarly, a trajectory of Pd will be denoted by x(k, k0, x0, u[k0,k]) or ϕ(k, k0, x0, u[k0,k]).

Definition 1.2.2 The nonlinear system Pc is said to have locally L2

$$
\int_{t_0}^{T}\|z(t)\|^2\,dt\le\gamma^2\int_{t_0}^{T}\|w(t)\|^2\,dt+\kappa(x_0),\quad\forall\,T>t_0
$$

for some bounded function κ such that κ(0) = 0. The system has L2

Equivalently, the nonlinear system Pd has locally ℓ2-gain less than or equal to γ in N if, for any initial state x0 in N and fixed feedback u[k0,K], the response of the system due to any w[k0,K] ∈ ℓ2[k0, K) satisfies

$$
\sum_{k=k_0}^{K}\|z_k\|^2\le\gamma^2\sum_{k=k_0}^{K}\|w_k\|^2+\kappa(x_0),\quad\forall\,K>k_0,\ K\in\mathbb{Z}.
$$

The system has ℓ2-gain ≤ γ if N = X
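As a minimal sketch of the discrete-time gain inequality above, assume the hypothetical scalar system x_{k+1} = a x_k + w_k, z_k = x_k with x_0 = 0 (so κ(x_0) = 0) and no control input. For |a| < 1 this linear system has ℓ2-gain 1/(1 − |a|), so with a = 0.5 the inequality should hold for every K when γ = 2, and fail for some input when γ = 1. The helper names are illustrative only.

```python
import random

# Hypothetical scalar instance of Pd without control:
#   x_{k+1} = a * x_k + w_k,   z_k = x_k,   x_0 = 0  (so kappa(x_0) = 0).


def simulate(a, w):
    """Return z_0, ..., z_K for the input sequence w_0, ..., w_K, starting from x_0 = 0."""
    x, z = 0.0, []
    for wk in w:
        z.append(x)       # z_k = x_k
        x = a * x + wk    # x_{k+1} = a x_k + w_k
    return z


def satisfies_gain_bound(a, w, gamma, kappa_x0=0.0):
    """Check sum_{k<=K} z_k^2 <= gamma^2 * sum_{k<=K} w_k^2 + kappa(x_0) for every K."""
    z = simulate(a, w)
    energy_z = energy_w = 0.0
    for zk, wk in zip(z, w):
        energy_z += zk * zk
        energy_w += wk * wk
        if energy_z > gamma**2 * energy_w + kappa_x0 + 1e-12:
            return False
    return True


random.seed(0)
w_random = [random.gauss(0.0, 1.0) for _ in range(200)]
print(satisfies_gain_bound(0.5, w_random, gamma=2.0))   # gamma equal to the l2-gain
print(satisfies_gain_bound(0.5, [1.0] * 50, gamma=1.0))  # constant input, gamma too small
```

A finite simulation like this can only falsify a gain bound, never certify it; certification is what the dissipation-inequality and HJIE machinery of this chapter provides.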

Remark 1.2.1 Note that in the above definitions ‖.‖

Definition 1.2.3 The nonlinear system Pc or [f,hz] is said to be locally zero-state detectable in O ⊂X

Equivalently, Pd or [f, hy] is said to be locally zero-state detectable in O

Definition 1.2.4 The state-space X

Equivalently, the state-space of Pd is reachable from the origin x = 0 if for any state xk1 ∈X

Now, the state-feedback nonlinear H∞

$$
\|P^c\|_{H_\infty}=\sup_{0\neq w\in\mathcal{L}_2(0,\infty)}\frac{\|z\|_2}{\|w\|_2},\quad x(t_0)=0, \tag{1.3}
$$

equivalently the induced-norm from ℓ2 to ℓ2:

$$
\|P^d\|_{H_\infty}=\sup_{0\neq w\in\ell_2(0,\infty)}\frac{\|z\|_2}{\|w\|_2},\quad x(k_0)=0, \tag{1.4}
$$

where, for any v : [t0, T] ⊂ ℜ → ℜm or {vk} : [k0, K] ⊂ Z → ℜm,

$$
\|v\|^2_{2,[t_0,T]}\triangleq\int_{t_0}^{T}\sum_{i=1}^{m}|v_i(t)|^2\,dt,\qquad \|\{v_k\}\|^2_{2,[k_0,K]}\triangleq\sum_{k=k_0}^{K}\sum_{i=1}^{m}|v_{i,k}|^2.
$$
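For linear time-invariant systems, the induced ℓ2 → ℓ2 norm in (1.4) coincides with the peak magnitude of the frequency response on the unit circle, which gives a simple numerical check. The sketch below approximates that peak on a frequency grid; it assumes a stable single-input single-output transfer function G(z) = num(z)/den(z) with real coefficients (so a grid on [0, π] suffices), and the function names are hypothetical. No such frequency-domain formula is available for the nonlinear systems Pc and Pd, which is one reason the nonlinear theory relies on the HJIE instead.

```python
import cmath
import math


def polyval(coeffs, s):
    """Evaluate a polynomial with coefficients in descending powers at s (Horner's rule)."""
    acc = 0j
    for c in coeffs:
        acc = acc * s + c
    return acc


def hinf_norm_siso(num, den, n_grid=2001):
    """Approximate sup_omega |G(e^{j omega})| for G(z) = num(z) / den(z) on [0, pi].

    Assumes real coefficients (|G| is symmetric in omega) and a stable den.
    """
    peak = 0.0
    for i in range(n_grid):
        omega = math.pi * i / (n_grid - 1)
        zeta = cmath.exp(1j * omega)
        peak = max(peak, abs(polyval(num, zeta) / polyval(den, zeta)))
    return peak


# G(z) = 1 / (z - 0.5): the map w -> z of x_{k+1} = 0.5 x_k + w_k, z_k = x_k.
print(hinf_norm_siso([1.0], [1.0, -0.5]))  # prints 2.0 (peak at omega = 0)
```

The grid only approximates the supremum from below; a finer grid, or a bisection on γ, would be needed when the peak is sharp.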

The H∞

Thus, the problem can be formulated as a finite-time horizon (or infinite-horizon) minimax optimization problem with the following cost function (or more precisely functional) [57]:

$$
J^c(\mu,w)=\min_{\mu\in\mathcal{U}}\sup_{T}\sup_{w\in\mathcal{L}_2}\frac12\int_{t_0}^{T}\left[\|z(t)\|^2-\gamma^2\|w(t)\|^2\right]dt,\quad T>t_0 \tag{1.5}
$$

equivalently

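$$
J^d(\mu,w)=\min_{\mu\in\mathcal{U}}\sup_{K}\sup_{w\in\ell_2}\frac12\sum_{k=k_0}^{K}\left[\|z_k\|^2-\gamma^2\|w_k\|^2\right],\quad K>k_0,\ K\in\mathbb{Z} \tag{1.6}
$$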

subject to the dynamics P with internal (or closed-loop) stability of the system. It is seen that, by rendering the above cost function nonpositive, the L2

The above cost function or performance measure also has a differential game interpretation. It constitutes a two-person zero-sum game in which the minimizing player controls the input u while the maximizing player controls the disturbance w. Such a game has a saddle-point equilibrium solution if the value-function

$$
V^c(x,t)=\inf_{\mu\in\mathcal{U}}\sup_{w\in\mathcal{L}_2}\int_{t}^{T}\left[\|z(\tau)\|^2-\gamma^2\|w(\tau)\|^2\right]d\tau
$$

or equivalently

$$
V^d(x,k)=\inf_{\mu\in\mathcal{U}}\sup_{w\in\ell_2}\sum_{j=k}^{K}\left[\|z_j\|^2-\gamma^2\|w_j\|^2\right]
$$

is C1 and satisfies the following dynamic-programming equation (known as Isaacs’s equation or HJIE):

$$
-V^c_t(x,t)=\inf_{u}\sup_{w}\left\{V^c_x(x,t)f(x,u,w)+\|z(t)\|^2-\gamma^2\|w(t)\|^2\right\};\quad V^c(x,T)=0,\ x\in\mathcal{X}, \tag{1.7}
$$

or equivalently

$$
V^d(x,k)=\inf_{u_k}\sup_{w_k}\left\{V^d\big(f(x,u_k,w_k),\,k+1\big)+\|z_k\|^2-\gamma^2\|w_k\|^2\right\};\quad V^d(x,K+1)=0,\ x\in\mathcal{X}, \tag{1.8}
$$

where Vt denotes the partial derivative with respect to t and Vx the row vector of first partial derivatives with respect to x. A pair of strategies (μ⋆, ν⋆) provides, under a feedback-information pattern, a saddle-point equilibrium solution to the above game if

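$$
J^c(\mu^\star,w)\le J^c(\mu^\star,\nu^\star)\le J^c(\mu,\nu^\star)\quad\forall\,\mu\in\mathcal{U},\ w\in\mathcal{W}, \tag{1.9}
$$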

or equivalently

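$$
J^d(\mu^\star,w)\le J^d(\mu^\star,\nu^\star)\le J^d(\mu,\nu^\star)\quad\forall\,\mu\in\mathcal{U},\ w\in\mathcal{W}. \tag{1.10}
$$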

For the plant P, the above optimization problem (1.5) or (1.6), subject to the dynamics of P, reduces to that of solving the HJIE (1.7) or (1.8). However, the optimal control u⋆ may be difficult to write explicitly at this point because of the general form of the function f(·, ·, ·).

Therefore, in order to write explicitly the nature of the optimal control and worst-case disturbance in the above minimax optimization problem, we shall for the most part in this book, assume that the plant P is affine in nature and is represented by an affine state-space system of the form:

$$
P^{ca}:\begin{cases}\dot{x}=f(x)+g_1(x)w+g_2(x)u; & x(0)=x_0\\ z=h_1(x)+k_{12}(x)u\\ y=h_2(x)+k_{21}(x)w\end{cases} \tag{1.11}
$$

or

$$
P^{da}:\begin{cases}x_{k+1}=f(x_k)+g_1(x_k)w_k+g_2(x_k)u_k; & x_0=x^0\\ z_k=h_1(x_k)+k_{12}(x_k)u_k\\ y_k=h_2(x_k)+k_{21}(x_k)w_k, & k\in\mathbb{Z}_+\end{cases} \tag{1.12}
$$

where f: X → X , g1 : X →ℳn×r( X ), g2 : X →ℳn×p( X ),

Furthermore, since we are more interested in the infinite-time horizon problem, i.e., for a control strategy such that limT→∞ Jc(u,w) (resp. limK→∞ Jd(uk,wk))

$$
\min_{\mu}\sup_{w}\left\{V_x(x)\left[f(x)+g_1(x)w(t)+g_2(x)u(t)\right]+\frac12\left(\|z(t)\|^2-\gamma^2\|w(t)\|^2\right)\right\}=0;\quad V(0)=0,\ x\in\mathcal{X} \tag{1.13}
$$

or equivalently the discrete HJIE (DHJIE):

$$
V(x)=\min_{\mu}\sup_{w}\left\{V\big(f(x)+g_1(x)w+g_2(x)u\big)+\frac12\left(\|z\|^2-\gamma^2\|w\|^2\right)\right\};\quad V(0)=0,\ x\in\mathcal{X}. \tag{1.14}
$$

The problem of explicitly solving for the optimal control u⋆ and the worst-case disturbance w⋆ in the HJIE (1.13) (or the DHJIE (1.14)) will be the subject of discussion in Chapters 5 and 7 respectively.
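The explicit minimization and maximization in (1.13) can be carried out by hand in the linear-quadratic special case, which gives a feel for what "solving for u⋆ and w⋆" means. The sketch below assumes a hypothetical scalar plant with f(x) = a x, g1(x) = b1, g2(x) = b2 and z = (c x, u), i.e. h1(x) = (c x, 0) and k12 = (0, 1), and tries V(x) = ½ p x². The bracket in (1.13) is then convex in u and concave in w, giving u⋆ = −b2 p x and w⋆ = b1 p x/γ², and (1.13) reduces to the scalar algebraic Riccati equation (b2² − b1²/γ²) p² − 2 a p − c² = 0. The code takes the positive root and checks the residual of (1.13) and the closed-loop coefficient; all names and numbers are illustrative.

```python
import math


def scalar_hjie_solution(a, b1, b2, c, gamma):
    """Positive root p of (b2^2 - b1^2/gamma^2) p^2 - 2 a p - c^2 = 0 and the saddle-point gains."""
    r = b2**2 - b1**2 / gamma**2
    if r <= 0.0:
        raise ValueError("construction needs b2^2 - b1^2/gamma^2 > 0 (gamma too small)")
    p = (a + math.sqrt(a**2 + r * c**2)) / r
    k_u = -b2 * p            # u* = k_u x
    k_w = b1 * p / gamma**2  # w* = k_w x
    return p, k_u, k_w


def hjie_residual(x, p, a, b1, b2, c, gamma):
    """Bracket of (1.13) evaluated at u*, w* for V(x) = p x^2 / 2 and z = (c x, u)."""
    u = -b2 * p * x
    w = b1 * p * x / gamma**2
    v_x = p * x
    z_sq = (c * x) ** 2 + u**2
    return v_x * (a * x + b1 * w + b2 * u) + 0.5 * (z_sq - gamma**2 * w**2)


a, b1, b2, c, gamma = 1.0, 1.0, 1.0, 1.0, 2.0
p, k_u, k_w = scalar_hjie_solution(a, b1, b2, c, gamma)
closed_loop = a + b1 * k_w + b2 * k_u   # dx/dt = closed_loop * x under (u*, w*)
print(p, closed_loop, [hjie_residual(x, p, a, b1, b2, c, gamma) for x in (-2.0, 0.3, 1.7)])
```

With these numbers the closed-loop coefficient equals −√(a² + r c²) = −√1.75, so the saddle-point feedback is stabilizing; the function refuses values of γ for which b2² − b1²/γ² ≤ 0, where this construction breaks down.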

However, when the state information is not available for feedback, one may instead be interested in synthesizing a dynamic controller K which processes the output measurement y(t), t ∈ [t0, T] ⊂ [0, ∞) (equivalently yk, k ∈ [k0, K] ⊂ [0, ∞)) and generates a control action u = α([t0, t]) (resp. u = α([k0, k])) that renders locally the L2

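$$
K^c:\begin{cases}\dot{\xi}=\eta(\xi,y), & \xi(0)=\xi_0\\ u=\theta(\xi,y)\end{cases}
$$

$$
K^d:\begin{cases}\xi_{k+1}=\eta(\xi_k,y_k), & \xi_0=\xi^0\\ u_k=\theta(\xi_k,y_k), & k\in\mathbb{Z}_+,\end{cases}
$$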

where ξ : [0,∞) → X,η : O × Y → X, θ : O×Y → ℜp

Often this kind of controller will be like a carbon-copy of the plant, which is also called an observer-based controller, and the feedback interconnection of the controller K and plant P results in the following closed-loop system:

or equivalently

Then the problem of optimizing the performance (1.5) or (1.6) subject to the dynamics Pca ◦ Kc (respectively Pda ◦ Kd) becomes, by the dynamic programming principle, that of solving the following HJIE:

$$
\min_{u}\sup_{w}\left\{W_{(x,\xi)}(x,\xi)f^c(x,\xi)+\frac12\|z\|^2-\frac12\gamma^2\|w\|^2\right\}=0,\quad W(0,0)=0, \tag{1.17}
$$

or the DHJIE

$$
W(x,\xi)=\min_{u}\sup_{w}\left\{W\big(f^d(x,\xi)\big)+\frac12\|z\|^2-\frac12\gamma^2\|w\|^2\right\},\quad W(0,0)=0, \tag{1.18}
$$

where

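$$
f^c(x,\xi)=\begin{pmatrix}f(x)+g_1(x)w+g_2(x)\theta(\xi,y)\\ \eta(\xi,y)\end{pmatrix},
$$

$$
f^d(x_k,\xi_k)=\begin{pmatrix}f(x_k)+g_1(x_k)w_k+g_2(x_k)\theta(\xi_k,y_k)\\ \eta(\xi_k,y_k)\end{pmatrix},
$$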

in addition to the HJIE (1.13) or (1.14) respectively. The problem of designing such a dynamic controller and solving the associated optimization problem will be the subject of discussion in Chapters 6 and 7 respectively.

An alternative approach to the problem uses the theory of dissipative systems [131, 274, 263, 264], which we now introduce.

Definition 1.2.5 The nonlinear system Pc is said to be locally dissipative in M ⊂ X

$$
V(x(t_1))-V(x(t_0))\le\int_{t_0}^{t_1}s\big(w(t),z(t)\big)\,dt \tag{1.19}
$$

is satisfied for all t1 > t0, for all x(t0), x(t1) ∈ M. The system is dissipative if M = X

Equivalently, Pd is locally dissipative in M if there exists a C0 positive semidefinite function V : M → ℜ, V (0) = 0 such that

$$
V(x_{k_1})-V(x_{k_0})\le\sum_{k=k_0}^{k_1-1}s(w_k,z_k) \tag{1.20}
$$

is satisfied for all k1 > k0, for all xk1, xk0 ∈ M. The system is dissipative if M = X

Consider now the nonlinear system P and assume the states of the system are available for feedback. Consider also the problem of rendering locally the L2

Furthermore, if we assume the storage-function V in Definition 1.2.5 is C1(M), then we can go from the integral version of the dissipation inequalities (1.19), (1.20) to their differential or infinitesimal versions respectively, by differentiation along the trajectories of the system P and with t0 fixed (equivalently k0 fixed), and t1 (equivalently k1) arbitrary, to obtain:

$$
V_x(x)\left[f(x)+g_1(x)w+g_2(x)u\right]+\frac12\left(\|z\|^2-\gamma^2\|w\|^2\right)\le0,
$$

respectively

$$
V\big(f(x)+g_1(x)w+g_2(x)u\big)+\frac12\left(\|z\|^2-\gamma^2\|w\|^2\right)\le V(x).
$$
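The discrete-time infinitesimal dissipation inequality can be checked exactly when everything is quadratic. Assuming a hypothetical scalar system with no control (g2 = 0), f(x) = a x, g1 = b, z = c x and a candidate storage function V(x) = ½ p x², the left-hand side minus V(x) equals ½ [x w] M [x w]ᵀ with M = [[p a² − p + c², p a b], [p a b, p b² − γ²]], so the inequality holds for all (x, w) exactly when M is negative semidefinite. The function names and numbers below are illustrative.

```python
import random


def dissipation_matrix(p, a, b, c, gamma):
    """M such that V(a x + b w) + (c^2 x^2 - gamma^2 w^2)/2 - V(x) = [x w] M [x w]^T / 2, V = p x^2/2."""
    return [[p * a * a - p + c * c, p * a * b],
            [p * a * b, p * b * b - gamma**2]]


def is_neg_semidefinite_2x2(m, tol=1e-12):
    """A symmetric 2x2 matrix is negative semidefinite iff both diagonal entries <= 0 and det >= 0."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return m[0][0] <= tol and m[1][1] <= tol and det >= -tol


def supply_gap(x, w, p, a, b, c, gamma):
    """V(f(x) + g1(x) w) + (|z|^2 - gamma^2 |w|^2)/2 - V(x); dissipativity needs this <= 0."""
    x_next = a * x + b * w
    return 0.5 * p * x_next**2 + 0.5 * ((c * x) ** 2 - gamma**2 * w**2) - 0.5 * p * x**2


print(is_neg_semidefinite_2x2(dissipation_matrix(2.0, 0.5, 1.0, 1.0, 3.0)))
print(is_neg_semidefinite_2x2(dissipation_matrix(2.0, 0.5, 1.0, 1.0, 1.0)))

# Spot-check the quadratic-form claim on random (x, w) pairs.
random.seed(1)
worst = max(supply_gap(random.uniform(-5, 5), random.uniform(-5, 5), 2.0, 0.5, 1.0, 1.0, 3.0)
            for _ in range(1000))
print(worst)
```

With a = 0.5, b = c = 1 and p = 2 the test passes for γ = 3 but fails for γ = 1, in line with the ℓ2-gain of 2 for this system: no storage function can exist when γ is below the gain.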

Next, consider the problem of rendering the system dissipative with the minimum control action and in the presence of the worst-case disturbance. This is essentially the H∞

$$
\inf_{u\in\mathcal{U}}\sup_{w\in\mathcal{W}}\left\{V_x(x)\left[f(x)+g_1(x)w+g_2(x)u\right]+\frac12\left(\|z\|^2-\gamma^2\|w\|^2\right)\right\}\le0 \tag{1.21}
$$

or equivalently

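$$
\inf_{u\in\mathcal{U}}\sup_{w\in\mathcal{W}}\left\{V\big(f(x)+g_1(x)w+g_2(x)u\big)-V(x)+\frac12\left(\|z\|^2-\gamma^2\|w\|^2\right)\right\}\le0 \tag{1.22}
$$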

The above inequality (1.21) (respectively (1.22)) is exactly the inequality version of the equation (1.13) (respectively (1.14)) and is known as the HJI-inequality. Thus, the existence of a solution to the dissipation inequality (1.21) (respectively (1.22)) implies the existence of a solution to the HJIE (1.13) (respectively (1.14)).

Conversely, if the state-space of the system P is reachable from x = 0, has an asymptotically-stable equilibrium-point at x = 0 and an L2-gain ≤ γ, then the functions

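$$
V^c_a(x)=\sup_{T}\ \sup_{w\in\mathcal{L}_2[0,T),\ x(0)=x}\ -\frac12\int_0^T\left(\gamma^2\|w(t)\|^2-\|z(t)\|^2\right)dt,
$$

$$
V^c_r(x)=\inf_{T}\ \inf_{w\in\mathcal{L}_2(-T,0],\ x(0)=x,\ x(-T)=0}\ \frac12\int_{-T}^{0}\left(\gamma^2\|w(\tau)\|^2-\|z(\tau)\|^2\right)d\tau,
$$

or equivalently

$$
V^d_a(x)=\sup_{K}\ \sup_{w\in\ell_2[0,K),\ x_0=x}\ -\frac12\sum_{k=0}^{K}\left(\gamma^2\|w_k\|^2-\|z_k\|^2\right),
$$

$$
V^d_r(x)=\inf_{K}\ \inf_{w\in\ell_2[-K,0],\ x_0=x,\ x_{-K}=0}\ \frac12\sum_{k=-K}^{0}\left(\gamma^2\|w_k\|^2-\|z_k\|^2\right),
$$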

respectively, are well defined for all x ∈ M and satisfy the dissipation inequality (1.21) (respectively (1.22)) (see Chapter 3 and [264]). Moreover, Va(0) = Vr(0) = 0, 0 ≤ Va ≤ Vr. Therefore, there exists at least one solution to the dissipation inequality. The functions Va and Vr are also known as the available-storage and the required-supply respectively.

It is therefore clear from the foregoing that a solution to the disturbance-attenuation problem can be derived from this perspective. Moreover, the H∞-suboptimal control problem for the system P has been reduced to the problem of solving the HJIE (1.13) (respectively (1.14)) or the HJI-inequality (1.21) (respectively (1.22)), and hence we have shown that the differential-game approach and the dissipative-systems approach are equivalent. Similarly, the measurement-feedback problem can also be tackled using the theory of dissipative systems.

The above approaches to the nonlinear H∞-control problem for time-invariant affine state-space systems can be extended to more general time-invariant nonlinear state-space systems of the form (1.1) or (1.2), as well as to time-varying nonlinear systems. In this case, the finite-time horizon problem becomes the most relevant one. Indeed, we can consider an affine time-varying plant of the form

$$
P^{cat}:\begin{cases}\dot{x}(t)=f(x,t)+g_1(x,t)w(t)+g_2(x,t)u(t); & x(0)=\hat{x}_0\\ z(t)=h_1(x,t)+k_{12}(x,t)u(t)\\ y(t)=h_2(x,t)+k_{21}(x,t)w(t),\end{cases} \tag{1.23}
$$

or equivalently

$$
P^{dak}:\begin{cases}x_{k+1}=f(x_k,k)+g_1(x_k,k)w_k+g_2(x_k,k)u_k; & x_0=x^0\\ z_k=h_1(x_k,k)+k_{12}(x_k,k)u_k\\ y_k=h_2(x_k,k)+k_{21}(x_k,k)w_k, & k\in\mathbb{Z}\end{cases} \tag{1.24}
$$

with the additional argument “t” (respectively “k”) here denoting time-variation, and where all the variables have their usual meanings, while the functions f : X × ℜ → X, g1 : X × ℜ → ℳn×r(X × ℜ), g2 : X × ℜ → ℳn×p(X × ℜ), h1 : X × ℜ → ℜm, h2 : X × ℜ → ℜm, and k12, k21 of appropriate dimensions, are real C∞,0(X, ℜ) functions, i.e., they are smooth with respect to x and continuous with respect to t (respectively smooth with respect to xk). Furthermore, we may assume without loss of generality that the system has a unique equilibrium-point at x = 0, i.e., f(0, t) = 0 and hi(0, t) = 0, i = 1, 2 with u = 0, w = 0 (or equivalently f(0, k) = 0 and hi(0, k) = 0, i = 1, 2 with uk = 0, wk = 0).

Then, the finite-time horizon state-feedback H∞ suboptimal control problem for the above system Pa can be pursued along similar lines as in the time-invariant case, with the exception that the solution to the problem will be characterized by an evolution equation of the form (1.7) or (1.8) respectively. Briefly, the problem can be formulated analogously as a two-player zero-sum game with the following cost functional:

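$$
J(u,w)=\frac12\int_0^T\left[\|z(t)\|^2-\gamma^2\|w(t)\|^2\right]dt \tag{1.25}
$$

$$
J_k(u,w)=\frac12\sum_{j=0}^{K}\left[\|z_j\|^2-\gamma^2\|w_j\|^2\right] \tag{1.26}
$$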

subject to the dynamics Pcat (respectively Pdak) over some finite-time interval [0, T ] (respectively [0, K]) using state-feedback controls of the form:

$$
u=\beta(x,t),\quad \beta(0,t)=0
$$

or

$$
u_k=\beta(x_k,k),\quad \beta(0,k)=0
$$

respectively.

A pair of strategies (u⋆(x, t), w⋆(x, t)) provides, under a feedback-information pattern, a saddle-point solution to the above problem such that

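$$
J\big(u^\star(x,t),w(x,t)\big)\le J\big(u^\star(x,t),w^\star(x,t)\big)\le J\big(u(x,t),w^\star(x,t)\big) \tag{1.27}
$$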

or

$$
J_k\big(u^\star(x_k,k),w(x_k,k)\big)\le J_k\big(u^\star(x_k,k),w^\star(x_k,k)\big)\le J_k\big(u(x_k,k),w^\star(x_k,k)\big) \tag{1.28}
$$

respectively, if there exists a positive-definite C1 function V : X × [0, T] → ℜ+ (respectively V : X × [0, K] → ℜ+) satisfying the following HJIE:

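$$
\begin{aligned}
-V_t(x,t)&=\inf_{u}\sup_{w}\left\{V_x(x,t)\left[f(x,t)+g_1(x,t)w+g_2(x,t)u\right]+\frac12\left[\|z(t)\|^2-\gamma^2\|w(t)\|^2\right]\right\}\\
&=V_x(x,t)\left[f(x,t)+g_1(x,t)w^\star(x,t)+g_2(x,t)u^\star(x,t)\right]+\frac12\left[\|z^\star(x,t)\|^2-\gamma^2\|w^\star(x,t)\|^2\right];\quad V(x,T)=0,\ x\in\mathcal{X}
\end{aligned} \tag{1.29}
$$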

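or equivalently the recursive equations (DHJIE)

$$
\begin{aligned}
V(x,k)&=\inf_{u_k}\sup_{w_k}\left\{V\big(f(x,k)+g_1(x,k)w_k+g_2(x,k)u_k,\,k+1\big)+\frac12\left[\|z_k\|^2-\gamma^2\|w_k\|^2\right]\right\}\\
&=V\big(f(x,k)+g_1(x,k)w^\star(x,k)+g_2(x,k)u^\star(x,k),\,k+1\big)+\frac12\left[\|z^\star(x,k)\|^2-\gamma^2\|w^\star(x,k)\|^2\right];\\
&\qquad V(x,K+1)=0,\ k=1,\dots,K,\ x\in\mathcal{X},
\end{aligned} \tag{1.30}
$$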

respectively, where z⋆ (x, t) (equivalently z⋆ (x, k)) is the optimal output. Furthermore, a dissipative-system approach to the problem can also be pursued along similar lines as in the time-invariant case, and the output measurement-feedback problem could be tackled similarly.

FIGURE 1.2

Feedback Configuration for Nonlinear Mixed H2/H∞-Control

Notice, however, that the HJIE (1.29) and the DHJIE (1.30) are more involved, in the sense that they are time-varying evolution equations. In the case of (1.29), the solution is a single function of the two variables x and t that is also required to satisfy the boundary condition V(x, T) = 0 ∀x ∈ X; while in the case of the DHJIE (1.30), the solution is a set of K + 1 functions, the last of which is also required to satisfy the boundary condition V(x, K + 1) = 0 ∀x ∈ X. These equations are notoriously difficult to solve and will be the subject of discussion in the last chapter.
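To make the "set of K + 1 functions" concrete, the sketch below solves a time-invariant scalar linear-quadratic instance of the backward DHJIE recursion by dynamic programming. It assumes (hypothetically) f(x, k) = a x, g1 = b1, g2 = b2, z_k = (c x_k, u_k) and V(x, k) = ½ P_k x², so each stage reduces to a 2 × 2 linear system for the saddle-point gains u = k_u x and w = k_w x, while the boundary condition V(·, K + 1) = 0 gives P_{K+1} = 0. The recursion is only valid while γ² > P_{k+1} b1², which keeps each stage problem concave in w; the function name is illustrative.

```python
def dhjie_scalar_lq(a, b1, b2, c, gamma, K):
    """Backward recursion V(x, k) = P[k] x^2 / 2 for k = K, ..., 1, starting from P[K+1] = 0."""
    P = {K + 1: 0.0}
    for k in range(K, 0, -1):
        pn = P[k + 1]
        if pn * b1**2 >= gamma**2:
            raise ValueError("stage problem not concave in w: need gamma^2 > P[k+1] * b1^2")
        # Stationarity of pn (a x + b1 w + b2 u)^2 + c^2 x^2 + u^2 - gamma^2 w^2 in (u, w),
        # with u = k_u x and w = k_w x, gives A [k_u, k_w]^T = rhs.
        a00, a01 = 1.0 + pn * b2**2, pn * b1 * b2
        a10, a11 = pn * b1 * b2, pn * b1**2 - gamma**2
        r0, r1 = -pn * a * b2, -pn * a * b1
        det = a00 * a11 - a01 * a10            # strictly negative, so never zero
        k_u = (r0 * a11 - a01 * r1) / det
        k_w = (a00 * r1 - r0 * a10) / det
        a_cl = a + b1 * k_w + b2 * k_u         # x_{k+1} = a_cl x_k at the saddle point
        P[k] = c**2 + k_u**2 - gamma**2 * k_w**2 + pn * a_cl**2
    return P


P = dhjie_scalar_lq(a=0.5, b1=1.0, b2=1.0, c=1.0, gamma=2.0, K=10)
for k in sorted(P, reverse=True):
    print(k, P[k])
```

For a = 0.5, b1 = b2 = c = 1 and γ = 2 the first two backward steps give P_K = c² = 1 and P_{K−1} = 8/7. For a general nonlinear f no such finite-dimensional reduction is available, and each of the K + 1 functions must be computed on the whole state-space.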

1.2.1 Mixed H2/H∞-Control Problem

We now consider a different set-up for the H∞-control problem, namely, the problem of mixing two cost functions to achieve disturbance attenuation while at the same time minimizing the output energy of the system. It is well known that, in general, only the suboptimal H∞-control problem can be solved easily, and therefore H∞-controllers are hardly ever unique. However, H2-controllers [92, 292] can be designed optimally. It therefore follows that, by mixing the two criterion functions, one can achieve the benefits of both types of controllers while, at the same time, trying to recover some form of uniqueness for the controller. This philosophy led to the formulation of the mixed H2/H∞-control problem.

A typical set-up for this problem is shown in Figure 1.2 with w = (w0, w1), where the signal w0 is a Gaussian white-noise signal (or bounded-spectrum signal), while w1(t) is a bounded-power or energy signal. Thus, the induced norm from the input w0 to z is the L2-norm (respectively ℓ2-norm) of the plant P, i.e.,

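$$
\|P^c\|_{\mathcal{L}_2}\triangleq\sup_{0\neq w_0\in\mathcal{S}}\frac{\|z\|_{\mathcal{P}}}{\|w_0\|_{\mathcal{S}}},\qquad \|P^d\|_{\ell_2}\triangleq\sup_{0\neq w_0\in\mathcal{S}'}\frac{\|z\|_{\mathcal{P}'}}{\|w_0\|_{\mathcal{S}'}},
$$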

while the induced norm from w1 to z is the L∞-norm (respectively ℓ∞-norm) of P, i.e.,

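$$
\|P^c\|_{\mathcal{L}_\infty}\triangleq\sup_{0\neq w_1\in\mathcal{P}}\frac{\|z\|_2}{\|w_1\|_2},\qquad \|P^d\|_{\ell_\infty}\triangleq\sup_{0\neq w_1\in\mathcal{P}'}\frac{\|z\|_2}{\|w_1\|_2},
$$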

where

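$$
\begin{aligned}
\mathcal{P}&\triangleq\left\{w(t):\ w\in\mathcal{L}_\infty,\ R_{ww}(\tau),\ S_{ww}(j\omega)\ \text{exist for all } \tau \text{ and all } \omega \text{ resp.},\ \|w\|_{\mathcal{P}}^2<\infty\right\},\\
\mathcal{S}&\triangleq\left\{w(t):\ w\in\mathcal{L}_\infty,\ R_{ww}(\tau),\ S_{ww}(j\omega)\ \text{exist for all } \tau \text{ and all } \omega \text{ resp.},\ \|S_{ww}(j\omega)\|_\infty<\infty\right\},\\
\mathcal{P}'&\triangleq\left\{w:\ w\in\ell_\infty,\ R_{ww}(k),\ S_{ww}(j\omega)\ \text{exist for all } k \text{ and all } \omega \text{ resp.},\ \|w\|_{\mathcal{P}'}^2<\infty\right\},\\
\mathcal{S}'&\triangleq\left\{w:\ w\in\ell_\infty,\ R_{ww}(k),\ S_{ww}(j\omega)\ \text{exist for all } k \text{ and all } \omega \text{ resp.},\ \|S_{ww}(j\omega)\|_\infty<\infty\right\},\\
\|z\|_{\mathcal{P}}^2&\triangleq\lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T}\|z(t)\|^2\,dt,\qquad \|z\|_{\mathcal{P}'}^2\triangleq\lim_{K\to\infty}\frac{1}{2K}\sum_{k=-K}^{K}\|z_k\|^2,\\
\|w_0\|_{\mathcal{S}}^2&=\|S_{w_0w_0}(j\omega)\|_\infty,\qquad \|w_0\|_{\mathcal{S}'}^2=\|S_{w_0w_0}(j\omega)\|_\infty,
\end{aligned}
$$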

and Rww(τ), Sww(jω) (equivalently Rww(k), Sww(jω)) are the autocorrelation and power spectral-density matrices [152] of w(t) (equivalently wk) respectively. Notice also that ‖(·)‖P and ‖(·)‖S are seminorms. In addition, if the plant is stable, we replace the induced ℒ-norms (resp. ℓ-norms) above by their equivalent H-subspace norms.

Since minimizing the H∞-induced norm as defined above over the set of admissible controllers is a difficult problem (i.e., the optimal H∞-control problem is difficult to solve exactly [92, 101]), whereas minimizing the induced H2-norm and obtaining optimal H2-controllers is an easy problem, the objective of the mixed H2/H∞ design philosophy is to minimize ‖P‖ℒ2 (equivalently ‖P‖ℓ2) while rendering ‖Pc‖ℒ∞ ≤ γ⋆ (resp. ‖Pd‖ℓ∞ ≤ γ⋆) for some prescribed number γ⋆ > 0. Such a problem can be formulated as a two-player nonzero-sum differential game with two cost functionals:

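$$
J_1(u,w)=\int_{t_0}^{T}\left[\gamma^2\|w(t)\|^2-\|z(t)\|^2\right]dt, \tag{1.31}
$$

$$
J_2(u,w)=\int_{t_0}^{T}\|z(t)\|^2\,dt, \tag{1.32}
$$

or equivalently

$$
J_{1k}(u,w)=\sum_{j=k_0}^{K}\left[\gamma^2\|w_j\|^2-\|z_j\|^2\right], \tag{1.33}
$$

$$
J_{2k}(u,w)=\sum_{j=k_0}^{K}\|z_j\|^2 \tag{1.34}
$$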

for the finite-horizon problem. Here, the first functional is associated with the H∞-constraint criterion, while the second functional is related to the output energy of the system, or H2-criterion. Moreover, here we wish to solve an associated mixed H2/H∞ problem in which w consists of a single disturbance signal w ∈ W ⊂ ℒ2([t0,∞), ℜr) (equivalently w ∈ W ⊂ ℓ2([k0,∞), ℜr)). It can easily be seen that by making J1 ≥ 0 (respectively J1k ≥ 0), the H∞ constraint ‖P‖ℒ∞ ≤ γ is satisfied. Subsequently, minimizing J2 (respectively J2k) will achieve the H2/H∞ design objective. Moreover, if we also assume that U ⊂ ℒ2([t0,∞), ℜk) (equivalently U ⊂ ℓ2([k0,∞), ℜk)), then under closed-loop perfect information, a Nash-equilibrium solution to the above game is said to exist if we can find a pair of strategies (u⋆, w⋆) such that

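$$
J_1(u^\star,w^\star)\le J_1(u^\star,w)\quad\forall\,w\in\mathcal{W}, \tag{1.35}
$$

$$
J_2(u^\star,w^\star)\le J_2(u,w^\star)\quad\forall\,u\in\mathcal{U}, \tag{1.36}
$$

and equivalently for J1k(u⋆, w⋆) and J2k(u⋆, w⋆).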

Furthermore, by minimizing the first objective with respect to w and substituting the result in the second objective, which is then minimized with respect to u, the pair of Nash-equilibrium strategies can be found. A necessary and sufficient condition for optimality in such a differential game is provided by a pair of cross-coupled HJIEs (resp. DHJIEs). For the state-feedback problem and the case of the plant Pc given by equation (1.1) or Pd given by (1.2), the governing equations can be obtained by the dynamic-programming principle or the theory of dissipative systems to be

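$$
-Y_t(x,t)=\inf_{w\in\mathcal{W}}\left\{Y_x(x,t)f\big(x,u^\star(x),w(x)\big)+\gamma^2\|w(x)\|^2-\|z^\star(x)\|^2\right\},\quad Y(x,T)=0,\ x\in\mathcal{X},
$$

$$
-V_t(x,t)=\inf_{u\in\mathcal{U}}\left\{V_x(x,t)f\big(x,u(x),w^\star(x)\big)+\|z\|^2\right\},\quad V(x,T)=0,\ x\in\mathcal{X},
$$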

or

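$$
Y(x,k)=\min_{w_k\in\mathcal{W}}\left\{Y\big(f_k(x,u^\star_k(x),w_k),\,k+1\big)+\gamma^2\|w_k\|^2-\|z^\star_k(x)\|^2\right\};\quad Y(x,K+1)=0,\ k=1,\dots,K,\ x\in\mathcal{X},
$$

$$
V(x,k)=\min_{u_k\in\mathcal{U}}\left\{V\big(f_k(x,u_k,w^\star_k(x)),\,k+1\big)+\|z_k\|^2\right\};\quad V(x,K+1)=0,\ k=1,\dots,K,\ x\in\mathcal{X},
$$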

respectively, for some negative-(semi)definite function Y : X → ℜ and positive-(semi)definite function V : X → ℜ. The solution to the above optimization problem will be the subject of discussion in Chapter 11.
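In the linear-quadratic special case the cross-coupled equations reduce to two coupled algebraic equations, which shows how the two players' value functions interact. The sketch assumes a hypothetical stationary (infinite-horizon, so Yt = Vt = 0) scalar plant f(x, u, w) = a x + b1 w + b2 u with ‖z‖² = c² x² + u², the supply γ²‖w‖² − ‖z‖² in the Y-equation (as in the discrete-time version), and quadratic candidates Y(x) = q x², V(x) = p x². The two inner minimizations give u⋆ = −b2 p x and w⋆ = −b1 q x/γ², leaving 2p(a − b1² q/γ²) − b2² p² + c² = 0 and 2q(a − b2² p) − (b1²/γ²) q² − c² − b2² p² = 0. The code solves them by a naive fixed-point iteration, choosing at each step the root that makes the closed loop stable; the iteration scheme is an illustrative choice and its convergence is not guaranteed in general.

```python
import math


def mixed_h2hinf_scalar(a, b1, b2, c, gamma, iters=500, tol=1e-13):
    """Fixed-point iteration for the stationary scalar coupled equations (Y = q x^2, V = p x^2).

    V-equation: 2 p (a - beta q) - b2^2 p^2 + c^2 = 0,  beta = b1^2 / gamma^2
    Y-equation: 2 q (a - b2^2 p) - beta q^2 - c^2 - b2^2 p^2 = 0
    """
    if b1 == 0.0 or b2 == 0.0:
        raise ValueError("sketch assumes b1 != 0 and b2 != 0")
    beta = b1**2 / gamma**2
    p, q = 0.0, 0.0
    for _ in range(iters):
        a_tilde = a - beta * q                     # drift seen by the u-player
        p_new = (a_tilde + math.sqrt(a_tilde**2 + b2**2 * c**2)) / b2**2
        a_hat = a - b2**2 * p_new                  # drift seen by the w-player
        disc = a_hat**2 - beta * (c**2 + b2**2 * p_new**2)
        if disc < 0.0:
            raise ValueError("Y-equation has no real root; try a larger gamma")
        q_new = (a_hat + math.sqrt(disc)) / beta   # root giving closed loop -sqrt(disc) < 0
        converged = abs(p_new - p) < tol and abs(q_new - q) < tol
        p, q = p_new, q_new
        if converged:
            break
    return p, q


def residuals(p, q, a, b1, b2, c, gamma):
    """Residuals of the V- and Y-equations at (p, q)."""
    beta = b1**2 / gamma**2
    res_v = 2 * p * (a - beta * q) - b2**2 * p**2 + c**2
    res_y = 2 * q * (a - b2**2 * p) - beta * q**2 - c**2 - b2**2 * p**2
    return res_v, res_y


a, b1, b2, c, gamma = 1.0, 1.0, 1.0, 1.0, 2.0
p, q = mixed_h2hinf_scalar(a, b1, b2, c, gamma)
closed_loop = a - b2**2 * p - (b1**2 / gamma**2) * q   # under u* = -b2 p x, w* = -b1 q x / gamma^2
print(p, q, closed_loop, residuals(p, q, a, b1, b2, c, gamma))
```

The resulting q is negative and p positive, matching the sign requirements on Y and V stated above.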

1.2.2 Robust H∞-Control Problem

A primary motivation for the development of H∞-synthesis methods is the design of robust controllers to achieve robust performance in the presence of disturbances and/or model uncertainty due to modeling errors or parameter variations. For all the models and the design methods that we have considered so far, we have concentrated on the problem of disturbance-attenuation using either the pure H∞-criterion or the mixed H2/H∞-criterion.

In this section, we briefly review the second aspect of the theory, namely, robustness against model uncertainty.

A typical set-up for studying plant uncertainty and the design of robust controllers is shown in Figure 1.3 below. The third block labelled Δ in the diagram represents the model uncertainty. There are also other topologies for representing the uncertainty depending on the nature of the plant [6, 7, 33, 147, 223, 265, 284]; Figure 1.3 is the simplest of such representations. If the uncertainty is significant in the system, such as unmodelled dynamics or perturbation in the model, then the uncertainty can be represented as a dynamic system with input excited by the plant output and output as a disturbance input to the plant, i.e.,

FIGURE 1.3

Feedback Configuration for Robust Nonlinear H∞-Control

$$
\Delta^c:\begin{cases}\dot{\varphi}=\vartheta(\varphi,u,v), & \vartheta(0,0,0)=0,\ \varphi(t_0)=0\\ e=\varrho(\varphi,u,v), & \varrho(0,0,0)=0\end{cases}
$$

or

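$$
\Delta^d:\begin{cases}\varphi_{k+1}=\vartheta_k(\varphi_k,u_k,v_k), & \vartheta_k(0,0,0)=0,\ \varphi_{k_0}=0\\ e_k=\varrho_k(\varphi_k,u_k,v_k), & \varrho_k(0,0,0)=0.\end{cases}
$$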

The above basic model can be further decomposed (together with the plant) into a coprime factor model as is done in [34, 223, 265].

On the other hand, if the uncertainty is due to parameter variations caused by, for instance, aging or environmental conditions, then it can be represented as a simple norm-bounded uncertainty as in [6, 7, 261, 284]. For the case of the affine system Pca or Pda, such a model can be represented in the most general way in which the uncertainty or perturbation comes from the system input and output matrices as well as the drift vector-field f as

$$
P^{ca}_{\Delta}:\begin{cases}\dot{x}=[f(x)+\Delta f(x)]+g_1(x)w+[g_2(x)+\Delta g_2(x)]u; & x(0)=x_0\\ z=h_1(x)+k_{12}(x)u\\ y=[h_2(x)+\Delta h_2(x)]+k_{21}(x)w\end{cases}
$$

or

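$$
P^{da}_{\Delta}:\begin{cases}x_{k+1}=[f(x_k)+\Delta f(x_k)]+g_1(x_k)w_k+[g_2(x_k)+\Delta g_2(x_k)]u_k; & x_0=x^0\\ z_k=h_1(x_k)+k_{12}(x_k)u_k\\ y_k=[h_2(x_k)+\Delta h_2(x_k)]+k_{21}(x_k)w_k, & k\in\mathbb{Z}\end{cases}
$$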

respectively, where Δf, Δg2, Δh2 belong to some suitable admissible sets.

Whichever type of representation is chosen for the plant, the problem of robustly designing an H∞-controller or mixed H2/H∞-controller for the plant PcaΔ (respectively PdaΔ) can be pursued along similar lines as in the case of the nominal model Pca (respectively Pda), using either state-feedback or output-feedback, with some additional complexity due to the presence of the uncertainty. This problem will be discussed for the continuous-time state-feedback case in Chapter 5 and for the measurement-feedback case in Chapter 6.

More often than not, the states of a dynamic system are not directly accessible from its output. Therefore, it is necessary to design a scheme for estimating them for the purpose of feedback or other use. Such a scheme involves another dynamic system called an “observer” or “filter.” It is essentially an error-driven copy of the original system. It takes in the past and present output measurements y(t) of the system over a given time span [t0, t] ⊂ ℜ and generates an estimate of the desired output z, which can be the states or some suitable function of them. It is therefore required to be “causal” so that it is implementable.

FIGURE 1.4

Configuration for Nonlinear H∞-Filtering

A typical set-up for filtering is shown in Figure 1.4. The filter is denoted by F and is designed to minimize the worst-case gain from w to the error between the desired output z of the system and the estimated output ẑ; typically z = x in such applications. We can represent the plant P by an affine state-space model, with the subscript “f” added to denote a filtering model:

$$
P^{caf}:\begin{cases}\dot{x}=f(x)+g_1(x)w; & x(t_0)=x_0\\ z=h_1(x)\\ y=h_2(x)+k_{21}(x)w\end{cases} \tag{1.37}
$$

or equivalently

$$
P^{daf}:\begin{cases}x_{k+1}=f(x_k)+g_1(x_k)w_k; & x_{k_0}=x_0\\ z_k=h_1(x_k)\\ y_k=h_2(x_k)+k_{21}(x_k)w_k, & k\in\mathbb{Z}\end{cases} \tag{1.38}
$$

where all the variables and functions have their previous meanings and definitions, and w ∈ ℒ2[t0,∞) (or equivalently w ∈ l2[k0,∞)) is a noise signal that is assumed to corrupt the outputs y and z (respectively yk and zk) of the system. Then the objective is to design the filter such that

$$
\sup_{w\in\mathcal{L}_2(t_0,\infty)}\frac{\|z-\hat{z}\|_2^2}{\|w\|_2^2}\le\gamma^\star
$$

or equivalently

$$
\sup_{w\in\ell_2(k_0,\infty)}\frac{\|z_k-\hat{z}_k\|_2^2}{\|w_k\|_2^2}\le\gamma^\star
$$

for some prescribed number γ⋆ > 0 is achieved. If the filter F is configured so that it has dynamics identical to those of the system, then it can be shown that the solution to the filtering problem is characterized by a (D)HJIE that is dual to that of the state-feedback problem.

1.2.4 Organization of the Book

The book contains thirteen chapters and two appendices. It is loosely organized in two parts: Part I, comprising Chapters 1-4, covers mainly introductory and background material, while Part II, comprising Chapters 5-13, covers the main subject matter of the book, dealing with the various types and aspects of nonlinear H∞-control problems.

Chapter 1 is an introductory chapter. It covers historical perspectives and gives highlights of the contents of the book. Preliminary definitions and notations are also given to prepare the reader for what is to come. In addition, a brief introduction to differentiable manifolds (mainly definitions) and to Lyapunov-stability theory is included in the chapter to make the book self-contained.

Chapter 2 gives background material on the basics of differential games. It discusses discrete-time and continuous-time nonzero-sum and zero-sum games, from which all of the problems in nonlinear H∞-theory are derived and solved. Linear-quadratic (LQ) and two-person games are included as special cases and to serve as examples. No proofs are given for most of the results in this chapter, as they are standard and can be found in well-known references on the subject.

Chapter 3 is devoted to the theory of dissipative systems, which is also at the core of the subject of the book. The material presented, however, goes well beyond what is required for elucidating the subject matter. This is because the theory is not very well known in the control community, yet it pervades almost all the problems of modern control theory, from the LQ-theory and the positive-real lemma to the H∞-theory and the bounded-real lemma. As such, we have given a complete literature review on this subject and endeavored to include all relevant applications of this theory. A wealth of references is also included for further study.

Chapter 4 is also at the core of the book, and traces the origin of Hamilton-Jacobi theory and Hamiltonian systems to Lagrangian mechanics. The equivalence of Hamiltonian and Lagrangian mechanics is stressed, and the motivation behind the Hamiltonian approach is emphasized. The Hamilton-Jacobi equation is derived from variational principles, and the duality between it and Hamilton’s canonical equations is pointed out. In this regard, the method of characteristics for first-order partial-differential equations is also discussed in the chapter, and it is shown that Hamilton’s canonical equations are nothing but the characteristic equations of the HJE.

The concept of viscosity and other non-smooth solutions of the HJE, for cases where smooth solutions and/or Hamiltonians do not exist, is also introduced. Lastly, the Toda lattice, which is a particular integrable Hamiltonian system, is discussed as an example.

Chapter 5 starts the discussion of nonlinear H∞-theory with the state-feedback problem for continuous-time affine nonlinear time-invariant systems. The solution to this problem is derived from both the differential-game and the dissipative-systems perspectives. A parametrization of a class of full-information controllers is given, and the robust control problem in the presence of unmodelled and parametric uncertainty is also discussed. The approach is then extended to time-varying and time-delay systems, as well as to a more general class of nonlinear systems that are not necessarily affine. Finally, the H∞ almost-disturbance-decoupling problem for affine systems is discussed.

Chapter 6 continues the discussion of the previous chapter with the output-feedback problem for affine nonlinear systems. First, the output measurement-feedback problem is considered, and sufficient conditions for its solvability are given in terms of two uncoupled HJIEs with an additional side-condition. Moreover, it is shown that the controller that solves this problem is observer-based. A parametrization of all output-feedback controllers is given, and the results are extended to a more general class of nonlinear systems. The robust measurement-feedback problem in the presence of uncertainties is also considered. Finally, the static output-feedback problem is treated, and sufficient conditions for its solvability are presented in terms of an HJIE together with some algebraic conditions.

In Chapter 7 the discrete-time nonlinear H∞-control problem is discussed. Solutions to the full-information, state-feedback and output measurement-feedback problems are given, as well as parametrizations of classes of full-information state-feedback and output-feedback controllers. The extension of the solution to more general affine discrete-time systems is also presented. In addition, an approximate, explicit approach to solving the problem is given.

In Chapter 8 the nonlinear H∞-filtering problem is discussed. Solutions to both the continuous-time and the discrete-time problems are given in terms of dual HJIEs and a coupling condition. Some simulation results are also given to compare the performance of the nonlinear H∞-filter with that of the extended Kalman filter. The robust filtering problem is also discussed.

Chapter 9 discusses the generalization of the H∞-control problems to include singular or ill-posed problems, as well as the H∞-control of singularly perturbed nonlinear systems with small singular parameters. Both the singular state-feedback and measurement-feedback problems are discussed, and the case of cascaded systems is also addressed; however, only the continuous-time results are presented. Furthermore, the state-feedback control problem for nonlinear singularly perturbed continuous-time systems is presented, and a class of composite controllers is discussed. Chapter 10 continues the discussion of singularly perturbed systems and presents a solution to the H∞ infinite-horizon filtering problem for affine nonlinear singularly perturbed systems. Three types of filters, namely decomposition, aggregate and reduced-order filters, are presented, and sufficient conditions for the solvability of the problem with each filter are again given.

Chapters 11 and 12 are devoted to the mixed H2/H∞ nonlinear control and filtering problems, respectively. Only the state-feedback control problem is discussed in Chapter 11, and this is complemented by the filtering problem in Chapter 12. The output measurement-feedback problem is not discussed because of its complexity. Nevertheless, the treatment is exhaustive, and both the continuous-time and discrete-time results are presented.

Lastly, the book culminates in Chapter 13 with a discussion of some computational approaches for solving Hamilton-Jacobi equations, which are the cornerstones of the theory. Iterative as well as exact methods are discussed. The topic, however, is still evolving, and it is hoped that the chapter will be substantially improved in the future.