In this chapter, we discuss the nonlinear H∞

It would be seen that the underlying structure of the H∞

The performance of the Kalman-filter for linear systems has been unmatched, and it is still widely applied when the spectra of the noise signals are known. However, when the statistics of the noise or disturbances are not well known, the Kalman-filter performs only moderately well. In addition, the nonlinear extension of the Kalman-filter, the “extended Kalman-filter,” suffers from the usual problem with linearization: it performs well only locally around a certain operating point of the nonlinear system, and only under the same basic assumption that the noise inputs are white.

It is therefore reasonable to expect that a filter that is inherently nonlinear, and that makes no a priori assumptions on the spectra of the noise inputs except that they have bounded energies, would perform better for a nonlinear system. Moreover, previous statistical nonlinear filtering techniques developed using minimum-variance [172] as well as maximum-likelihood [203] criteria are infinite-dimensional, and the resulting filter differential equations are too complicated to solve. On the other hand, the nonlinear H∞

The linear H∞

8.1 Continuous-Time Nonlinear H∞

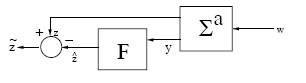

The general set-up for this problem is shown in Figure 8.1, where the plant is represented by an affine nonlinear system Σa, while F is the filter. The filter processes the measurement output y from the plant which is also corrupted by the noise signal w, and generates an estimate ˆz

FIGURE 8.1

Configuration for Nonlinear H∞

$$
\Sigma^a : \left\{ \begin{array}{rcl} \dot{x} &=& f(x) + g_1(x)w; \quad x(t_0) = x_0 \\ z &=& h_1(x) \\ y &=& h_2(x) + k_{21}(x)w \end{array} \right. \tag{8.1}
$$

where x ∈ X

The functions f : X

The objective is to synthesize a causal filter, F, for estimating the state x(t) (or a function of it z = h1(x)) from observations of y(τ) up to time t, over a time horizon [t0, T ], i.e., from

Yt≜{y(τ) : τ≤t}, t∈[t0,T],

such that the L2-gain from w to ˜z

$$
\int_{t_0}^{T} \|\tilde{z}(\tau)\|^2 \, d\tau \le \gamma^2 \int_{t_0}^{T} \|w(\tau)\|^2 \, d\tau, \quad T > t_0, \tag{8.2}
$$

for all w ∈ W, and for all x0 ∈ O. In addition, it is also required that with w ≡ 0, the penalty variable or estimation error satisfies limt→∞˜z(t)=0

More formally, we define the local nonlinear H∞

Definition 8.1.1 (Nonlinear H∞

ˆx(t) = F(Yt)

and (8.2) is satisfied for all γ ≥ γ⋆

Moreover, if the above conditions are satisfied for all x(t0) ∈ X

Remark 8.1.1 The problem defined above is the finite-horizon filtering problem. We have the infinite-horizon problem if we let T → ∞.
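The L2-gain requirement (8.2) can be checked numerically on sampled signals. The following is a minimal sketch, assuming scalar signals sampled on a uniform grid and a trapezoidal approximation of the integrals; the function names and the test signals are hypothetical and serve only as an illustration.

```python
def l2_energy(samples, dt):
    """Trapezoidal approximation of the integral of s(t)^2 over the sampled horizon."""
    squares = [s * s for s in samples]
    return dt * (sum(squares) - 0.5 * (squares[0] + squares[-1]))


def satisfies_l2_gain(z_tilde, w, dt, gamma):
    """True if the samples satisfy int |z~|^2 dt <= gamma^2 int |w|^2 dt."""
    return l2_energy(z_tilde, dt) <= gamma ** 2 * l2_energy(w, dt)


# Hypothetical scalar signals on [0, 1]: the estimation error is half the disturbance.
dt = 0.01
w = [1.0 for _ in range(101)]
z_tilde = [0.5 * wi for wi in w]

print(satisfies_l2_gain(z_tilde, w, dt, gamma=0.6))  # bound holds for gamma above 0.5
print(satisfies_l2_gain(z_tilde, w, dt, gamma=0.4))  # bound fails for gamma below 0.5
```

Such a check only tests (8.2) for one particular disturbance; the definition requires the bound for all w ∈ W and all admissible initial conditions.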

To solve the above problem, a structure is chosen for the filter F

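$$
\left. \begin{array}{rcl} \dot{\hat{x}} &=& f(\hat{x}) + L(\hat{x},t)\,(y(t) - h_2(\hat{x})), \quad \hat{x}(t_0) = \hat{x}_0 \\ \hat{z} &=& h_1(\hat{x}) \end{array} \right\} \tag{8.3}
$$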

where ˆx

˜z=z−ˆz=h1(x)−h1(ˆx),

and the problem can be formulated as a two-person zero-sum differential game as discussed in Chapter 2. The cost functional is defined as

$$
\hat{J}(w,L) \triangleq \frac{1}{2} \int_{t_0}^{T} \left( \|z(t)\|^2 - \gamma^2 \|w(t)\|^2 \right) dt, \tag{8.4}
$$

and we consider the problem of finding L⋆

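$$
\left\{ \begin{array}{rcl} \dot{x}^e &=& f^e(x^e) + g^e(x^e)w \\ \tilde{z} &=& h_1(x) - h_1(\hat{x}) \end{array} \right. \tag{8.5}
$$

where

$$
x^e = \begin{pmatrix} x \\ \hat{x} \end{pmatrix}, \quad f^e(x^e) = \begin{pmatrix} f(x) \\ f(\hat{x}) + L(\hat{x},t)\,(h_2(x) - h_2(\hat{x})) \end{pmatrix}, \quad g^e(x^e) = \begin{pmatrix} g_1(x) \\ L(\hat{x},t)\,k_{21}(x) \end{pmatrix}.
$$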

We then make the following assumption:

Assumption 8.1.1 The system matrices are such that

$$
k_{21}(x)\,g_1^T(x) = 0, \qquad k_{21}(x)\,k_{21}^T(x) = I.
$$

Remark 8.1.2 The first of the above assumptions means that the measurement-noise and the system-noise are independent; while the second is a normalization to simplify the problem.

To solve the above problem, we can apply the sufficient conditions given by Theorem 2.3.2 from Chapter 2, i.e., we consider the following HJIE:

$$
-Y_t(x^e,t) = \inf_{L} \sup_{w} \left\{ Y_{x^e}(x^e,t)\,(f^e(x^e) + g^e(x^e)w) - \frac{1}{2}\gamma^2 w^T w + \frac{1}{2} z^T z \right\}, \quad Y(x^e,T) = 0 \tag{8.6}
$$

for some smooth C1 (with respect to both its arguments) function Y : N̂ × N̂ × ℜ → ℜ

Lemma 8.1.1 Suppose there exists a pair of strategies (w, L) = (w⋆, L⋆) for which there exists a positive-definite C1 function Y : N̂ × N̂ × ℜ → ℜ+

To find the pair (w⋆, L⋆) that satisfies the HJIE, we proceed as in Chapter 5, by forming the Hamiltonian function Λ : T⋆ (X

$$
\Lambda(x^e,w,L,Y_{x^e}) = Y_{x^e}(x^e,t)\,(f^e(x^e) + g^e(x^e)w) - \frac{1}{2}\gamma^2 \|w\|^2 + \frac{1}{2}\|z\|^2. \tag{8.7}
$$

Then we apply the necessary conditions for the unconstrained optimization problem:

$$
(w^\star, L^\star) = \arg \left\{ \sup_{w} \min_{L} \Lambda(x^e,w,L,Y_{x^e}) \right\}.
$$

We summarize the result in the following theorem.

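Theorem 8.1.1 Consider the system (8.5), and suppose there exists a C1 (with respect to all its arguments) positive-definite function Y : N̂ × N̂ × ℜ → ℜ+ satisfying the HJIE

$$
\begin{aligned}
& Y_t(x^e,t) + Y_x(x^e,t)f(x) + Y_{\hat{x}}(x^e,t)f(\hat{x}) + \frac{1}{2\gamma^2} Y_x(x^e,t)\,g_1(x)g_1^T(x)\,Y_x^T(x^e,t) \\
&\quad - \frac{\gamma^2}{2}(h_2(x) - h_2(\hat{x}))^T (h_2(x) - h_2(\hat{x})) + \frac{1}{2}(h_1(x) - h_1(\hat{x}))^T (h_1(x) - h_1(\hat{x})) = 0, \quad Y(x^e,T) = 0,
\end{aligned} \tag{8.8}
$$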

together with the coupling condition

$$
Y_{\hat{x}}(x^e,t)\,L(\hat{x},t) = -\gamma^2 (h_2(x) - h_2(\hat{x}))^T. \tag{8.9}
$$

Then the matrix L(ˆx,t)

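Proof: Consider the Hamiltonian function $\Lambda(x^e, w, L, Y_{x^e})$. Since it is quadratic in w, we can apply the necessary condition for optimality, i.e.,

$$
\left. \frac{\partial \Lambda}{\partial w} \right|_{w = w^\star} = 0
$$

to get

$$
w^\star := \frac{1}{\gamma^2} \left( g_1^T(x)\,Y_x^T(x^e,t) + k_{21}^T(x)\,L^T(\hat{x},t)\,Y_{\hat{x}}^T(x^e,t) \right).
$$

Moreover, it can be checked that the Hessian matrix of Λ with respect to w, namely $-\gamma^2 I$, is negative-definite, and hence

$$
\Lambda(x^e, w^\star, L, Y_{x^e}) \ge \Lambda(x^e, w, L, Y_{x^e}) \quad \forall w \in \mathcal{W}.
$$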

However, Λ is linear in L, so we cannot apply the above technique to obtain L⋆. Instead, we use a completion of the squares method. Accordingly, substituting w⋆ in (8.7), we get

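$$
\begin{aligned}
\Lambda(x^e, w^\star, L, Y_{x^e}) ={}& Y_x(x^e,t)f(x) + Y_{\hat{x}}(x^e,t)f(\hat{x}) + Y_{\hat{x}}(x^e,t)\,L(\hat{x},t)\,(h_2(x) - h_2(\hat{x})) \\
&+ \frac{1}{2\gamma^2} Y_x(x^e,t)\,g_1(x)g_1^T(x)\,Y_x^T(x^e,t) + \frac{1}{2} z^T z \\
&+ \frac{1}{2\gamma^2} Y_{\hat{x}}(x^e,t)\,L(\hat{x},t)L^T(\hat{x},t)\,Y_{\hat{x}}^T(x^e,t).
\end{aligned}
$$

Now, completing the squares for L in the above expression, we get

$$
\begin{aligned}
\Lambda(x^e, w^\star, L, Y_{x^e}) ={}& Y_x(x^e,t)f(x) + Y_{\hat{x}}(x^e,t)f(\hat{x}) - \frac{\gamma^2}{2} \|h_2(x) - h_2(\hat{x})\|^2 \\
&+ \frac{1}{2\gamma^2} \left\| L^T(\hat{x},t)\,Y_{\hat{x}}^T(x^e,t) + \gamma^2 (h_2(x) - h_2(\hat{x})) \right\|^2 + \frac{1}{2} z^T z \\
&+ \frac{1}{2\gamma^2} Y_x(x^e,t)\,g_1(x)g_1^T(x)\,Y_x^T(x^e,t).
\end{aligned}
$$

Thus, taking L⋆ as in (8.9) renders the saddle-point conditions

$$
\Lambda(w, L^\star) \le \Lambda(w^\star, L^\star) \le \Lambda(w^\star, L)
$$

satisfied for all $(w, L) \in \mathcal{W} \times \Re^{n \times m} \times \Re$, and the HJIE (8.6) reduces to (8.8). By Lemma 8.1.1, we conclude that (w⋆, L⋆) is indeed a saddle-point solution for the game. Finally, it is straightforward to show from the HJIE (8.8) that the L2-gain condition (8.2) is also satisfied. □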
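The saddle-point inequalities in the proof can be spot-checked numerically at a single point (x, x̂). The sketch below assumes a scalar state with two noise channels, g1 = [g, 0] and k21 = [0, 1], so that Assumption 8.1.1 holds; all numerical values, the dictionary keys, and the function names are hypothetical.

```python
import random

GAMMA = 1.5  # hypothetical attenuation level


def hamiltonian(w, L, p):
    """Lambda of (8.7) for a scalar state and noise w = (w1, w2).

    Assumed data: g1 = [g, 0] and k21 = [0, 1], so k21 g1^T = 0 and
    k21 k21^T = 1, as Assumption 8.1.1 requires.
    """
    w1, w2 = w
    value = p["Yx"] * (p["f"] + p["g"] * w1)
    value += p["Yxh"] * (p["fh"] + L * (p["dh2"] + w2))
    return value - 0.5 * GAMMA**2 * (w1**2 + w2**2) + 0.5 * p["z"]**2


def worst_case_noise(L, p):
    """w* from the stationarity condition dLambda/dw = 0."""
    return (p["g"] * p["Yx"] / GAMMA**2, L * p["Yxh"] / GAMMA**2)


def optimal_gain(p):
    """L* from the coupling condition (8.9): Yxh * L = -gamma^2 * dh2."""
    return -GAMMA**2 * p["dh2"] / p["Yxh"]


# Hypothetical gradient and vector-field values at one point (x, xhat);
# dh2 stands for h2(x) - h2(xhat) and z for h1(x) - h1(xhat).
point = {"Yx": 0.8, "Yxh": -0.6, "f": -1.0, "fh": -0.5,
         "g": 0.7, "dh2": 0.3, "z": 0.2}
L_star = optimal_gain(point)
w_star = worst_case_noise(L_star, point)
saddle_value = hamiltonian(w_star, L_star, point)

rng = random.Random(0)
for _ in range(1000):
    w = (rng.uniform(-5, 5), rng.uniform(-5, 5))
    L = rng.uniform(-5, 5)
    # Left inequality: no disturbance does better than w* against L*.
    assert hamiltonian(w, L_star, point) <= saddle_value + 1e-12
    # Right inequality, with the noise playing its best response to L.
    assert saddle_value <= hamiltonian(worst_case_noise(L, point), L, point) + 1e-12
```

The value of the Hamiltonian at the saddle point coincides with the terms of (8.8) other than Y_t, which is how the HJIE (8.6) reduces to (8.8).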

Remark 8.1.3 By virtue of the side-condition (8.9), the HJIE (8.8) can be represented as

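$$
\begin{aligned}
& Y_t(x^e,t) + Y_x(x^e,t)f(x) + Y_{\hat{x}}(x^e,t)f(\hat{x}) + \frac{1}{2\gamma^2} Y_x(x^e,t)\,g_1(x)g_1^T(x)\,Y_x^T(x^e,t) \\
&\quad - \frac{1}{2\gamma^2} Y_{\hat{x}}(x^e,t)\,L(\hat{x},t)L^T(\hat{x},t)\,Y_{\hat{x}}^T(x^e,t) + \frac{1}{2}(h_1(x) - h_1(\hat{x}))^T (h_1(x) - h_1(\hat{x})) = 0, \quad Y(x^e,T) = 0,
\end{aligned} \tag{8.10}
$$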

Remark 8.1.4 The above result, Theorem 8.1.1, can also be obtained from a dissipative systems perspective. Indeed, it can be checked that a function Y (., .) satisfying the HJIE (8.8) renders the dissipation-inequality

(8.11)

satisfied for all xe(t0) and all w ∈ W. Conversely, it can also be shown (as in the previous chapters) that a function Y(., .) satisfying the dissipation-inequality (8.11) also satisfies, in more general terms, the HJI-inequality obtained from (8.8) by replacing “=” with “≤”. This observation allows us to solve a HJI-inequality instead, which is substantially easier than solving the HJIE.

For the case of the LTI system

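$$
\Sigma^l : \left\{ \begin{array}{rcl} \dot{x} &=& Ax + B_1 w, \quad x(t_0) = x_0 \\ z &=& C_1 x \\ y &=& C_2 x + D_{21} w \end{array} \right. \tag{8.12}
$$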

we have the following corollary.

Corollary 8.1.1 Consider the LTI system Σl (8.12) and the filtering problem for this system. Suppose there exists a symmetric positive-definite solution P to the Riccati-ODE:

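$$
\dot{P}(t) = AP(t) + P(t)A^T + B_1 B_1^T - P(t) \left[ C_2^T C_2 - \gamma^{-2} C_1^T C_1 \right] P(t), \quad P(T) = 0. \tag{8.13}
$$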

Then, the filter

$$
\dot{\hat{x}} = A\hat{x} + L(t)\,(y - C_2 \hat{x})
$$

solves the finite-horizon linear ℋ∞ filtering problem if the gain-matrix L(t) is taken as

$$
L(t) = P(t)\,C_2^T.
$$

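Proof: Assume $D_{21}D_{21}^T = I$, $D_{21}B_1^T = 0$, t0 = 0. Let P(t0) > 0, and consider the positive-definite function

$$
Y(x,\hat{x},t) = \frac{1}{2}\gamma^2 (x - \hat{x})^T P^{-1}(t)\,(x - \hat{x}). \tag{8.14}
$$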

Taking partial-derivatives and substituting in (8.8), we obtain

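$$
\begin{aligned}
& -\frac{\gamma^2}{2}(x - \hat{x})^T P^{-1}(t)\dot{P}(t)P^{-1}(t)(x - \hat{x}) + \gamma^2 (x - \hat{x})^T P^{-1}(t)A(x - \hat{x}) \\
&\quad + \frac{\gamma^2}{2}(x - \hat{x})^T P^{-1}(t)B_1 B_1^T P^{-1}(t)(x - \hat{x}) - \frac{\gamma^2}{2}(x - \hat{x})^T C_2^T C_2 (x - \hat{x}) \\
&\quad + \frac{1}{2}(x - \hat{x})^T C_1^T C_1 (x - \hat{x}) = 0.
\end{aligned} \tag{8.15}
$$

Splitting the second term in the left-hand-side into two (since it is a scalar, it equals its own transpose):

$$
\gamma^2 (x - \hat{x})^T P^{-1}(t)A(x - \hat{x}) = \frac{\gamma^2}{2}(x - \hat{x})^T P^{-1}(t)A(x - \hat{x}) + \frac{\gamma^2}{2}(x - \hat{x})^T A^T P^{-1}(t)(x - \hat{x}),
$$

and substituting in the above equation, we get upon cancellation,

$$
P^{-1}(t)\dot{P}(t)P^{-1}(t) = P^{-1}(t)A + A^T P^{-1}(t) + P^{-1}(t)B_1 B_1^T P^{-1}(t) - C_2^T C_2 + \gamma^{-2} C_1^T C_1. \tag{8.16}
$$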

Finally, multiplying the above equation from the left and from the right by P (t), we get the Riccati ODE (8.13). The terminal condition is obtained by setting t = T in (8.14) and equating to zero.

Furthermore, substituting in (8.9) we get after cancellation

$$
P^{-1}(t)L(t) = C_2^T \quad \text{or} \quad L(t) = P(t)\,C_2^T. \qquad \square
$$
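The algebra of this proof can be verified numerically in the scalar case. The sketch below assumes a scalar plant with hypothetical coefficients a, b1, c1, c2, takes Ṗ from the scalar form of (8.16), and evaluates the left-hand side of (8.15) and the coupling condition (8.9) at one sample point; the function names are illustrative only.

```python
def riccati_rhs(P, a, b1, c1, c2, gamma):
    """Scalar Pdot implied by (8.16) after multiplying both sides by P^2."""
    return 2.0 * a * P + b1**2 - (c2**2 - c1**2 / gamma**2) * P**2


def hjie_residual(x, xh, P, Pdot, a, b1, c1, c2, gamma):
    """Left-hand side of (8.15) for Y = (gamma^2 / 2) (x - xh)^2 / P."""
    e = x - xh
    Yt = -0.5 * gamma**2 * e**2 * Pdot / P**2
    Yx = gamma**2 * e / P   # partial derivative of Y with respect to x
    Yxh = -Yx               # partial derivative of Y with respect to xhat
    return (Yt + Yx * a * x + Yxh * a * xh
            + Yx * b1 * b1 * Yx / (2.0 * gamma**2)
            - 0.5 * gamma**2 * (c2 * e)**2
            + 0.5 * (c1 * e)**2)


# Hypothetical scalar plant data and one sample point (x, xhat).
a, b1, c1, c2, gamma = -1.0, 0.5, 1.0, 2.0, 1.2
x, xh, P = 1.3, 0.4, 0.7

Pdot = riccati_rhs(P, a, b1, c1, c2, gamma)
residual = hjie_residual(x, xh, P, Pdot, a, b1, c1, c2, gamma)

gain = P * c2                       # L = P C2^T in the scalar case
Yxh = -gamma**2 * (x - xh) / P
coupling_gap = Yxh * gain + gamma**2 * c2 * (x - xh)   # (8.9) as a residual

print(abs(residual) < 1e-12, abs(coupling_gap) < 1e-12)
```

Both residuals vanish up to rounding for any positive P, which reflects that the quadratic form (8.14) solves the HJIE whenever P(t) evolves according to the Riccati equation.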

Remark 8.1.5 Note that, if we use the HJIE (8.10) instead, or substitute $C_2^T = P^{-1}(t)L(t)$ in (8.16), we get the following Riccati ODE after simplification:

$$
\dot{P}(t) = A^T P(t) + P(t)A + P(t) \left[ \frac{1}{\gamma^2} C_1^T C_1 - L^T(t)L(t) \right] P(t) + B_1 B_1^T, \quad P(T) = 0.
$$

This result is the same as in reference [207]. In addition, cancelling (x − x̂) from (8.15), and then multiplying both sides by P(t), followed by factorization, results in the following alternative filter Riccati ODE

(8.17)

8.1.1 Infinite-Horizon Continuous-Time Nonlinear H∞-Filtering

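In this subsection, we discuss the infinite-horizon filter, in which case we let T → ∞. Since we are interested in finding time-invariant gains for the filter, we seek a time-independent function Y : N̂1 × N̂1 → ℜ+ such that the HJIE:

$$
\begin{aligned}
& Y_x(x^e)f(x) + Y_{\hat{x}}(x^e)f(\hat{x}) + \frac{1}{2\gamma^2} Y_x(x^e)\,g_1(x)g_1^T(x)\,Y_x^T(x^e) - \frac{\gamma^2}{2}(h_2(x) - h_2(\hat{x}))^T (h_2(x) - h_2(\hat{x})) \\
&\quad + \frac{1}{2}(h_1(x) - h_1(\hat{x}))^T (h_1(x) - h_1(\hat{x})) = 0, \quad Y(0) = 0, \quad x, \hat{x} \in \hat{N}_1
\end{aligned} \tag{8.18}
$$

is satisfied, together with the coupling condition

$$
Y_{\hat{x}}(x^e)\,L(\hat{x}) = -\gamma^2 (h_2(x) - h_2(\hat{x}))^T. \tag{8.19}
$$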

Or equivalently, the HJIE:

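$$
\begin{aligned}
& Y_x(x^e)f(x) + Y_{\hat{x}}(x^e)f(\hat{x}) + \frac{1}{2\gamma^2} Y_x(x^e)\,g_1(x)g_1^T(x)\,Y_x^T(x^e) - \frac{1}{2\gamma^2} Y_{\hat{x}}(x^e)\,L(\hat{x})L^T(\hat{x})\,Y_{\hat{x}}^T(x^e) \\
&\quad + \frac{1}{2}(h_1(x) - h_1(\hat{x}))^T (h_1(x) - h_1(\hat{x})) = 0, \quad Y(0) = 0, \quad x, \hat{x} \in \hat{N}_1
\end{aligned} \tag{8.20}
$$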

However, since the estimation here is carried out over an infinite horizon, it is necessary to ensure that the interconnected system (8.5) is stable with w = 0. This will in turn guarantee that we can find a smooth function Y(.) which satisfies the HJIE (8.18) and provides an optimal gain for the filter. One additional assumption is required, however: the system (8.1) must be locally asymptotically-stable. The following proposition summarizes this development.

Proposition 8.1.1 Consider the nonlinear system (8.1) and the infinite-horizon NLHIFP for it. Suppose the system is locally asymptotically-stable, and there exists a C1 positive-definite function Y : N̂1 × N̂1 → ℜ+ locally defined in a neighborhood of (x, x̂) = (0, 0) and satisfying the HJIE (8.18) together with the coupling condition (8.19), or equivalently the HJIE (8.20), for some matrix function $L(.) \in \mathcal{M}^{n \times m}$. Then, the infinite-horizon NLHIFP is locally solvable in N̂1 and the interconnected system is locally asymptotically-stable.

Proof: By Remark 8.1.4, any function Y satisfying (8.18)-(8.19) or (8.20) also satisfies the dissipation-inequality

(8.21)

for all t and all w ∈ W. Differentiating this inequality along the trajectories of the interconnected system with w = 0, we get

$$
\dot{Y}(x^e(t)) \le -\frac{1}{2}\|z\|^2.
$$

Thus, the interconnected system is stable. In addition, any trajectory xe(t) of the system starting in the neighborhood N̂1 of the origin xe = 0 such that $\dot{Y}(t) \equiv 0$ ∀t ≥ ts, is such that h1(x(t)) = h1(x̂(t)) and x(t) = x̂(t) ∀t ≥ ts. This further implies that h2(x(t)) = h2(x̂(t)), and therefore, it must be a trajectory of the free-system

$$
\dot{x}^e = \begin{pmatrix} f(x) \\ f(\hat{x}) \end{pmatrix}.
$$

By local asymptotic-stability of the free-system ˙x=f(x), we have local asymptotic-stability of the interconnected system. □

Remark 8.1.6 Note that, in the above proposition, it is not necessary to have a stable system for H∞ estimation. However, estimating the states of an unstable system is of no practical benefit.

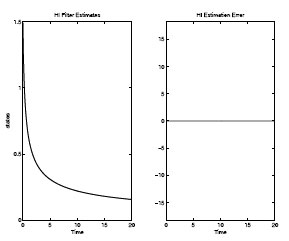

FIGURE 8.2

Nonlinear H∞-Filter Performance with Known Initial Condition

Example 8.1.1 Consider a simple scalar example

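$$
\dot{x} = -x^3, \qquad y = x + w, \qquad \tilde{z} = x - \hat{x}.
$$

We consider the infinite-horizon problem and the HJIE (8.18) together with (8.19). Substituting in these equations we get

$$
-x^3 Y_x - \hat{x}^3 Y_{\hat{x}} - \frac{\gamma^2}{2}(x - \hat{x})^2 + \frac{1}{2}(x - \hat{x})^2 = 0, \quad Y(0,0) = 0,
$$

$$
Y_{\hat{x}}\, l_\infty = -2\gamma^2 (x - \hat{x}).
$$

If we let γ = 1, then

$$
-x^3 Y_x - \hat{x}^3 Y_{\hat{x}} = 0
$$

and it can be checked that

$$
Y_x = x, \quad Y_{\hat{x}} = \hat{x}
$$

solve the HJI-inequality, and result in

$$
Y(x,\hat{x}) = \frac{1}{2}(x^2 + \hat{x}^2) \;\Rightarrow\; l_\infty^\star = -\frac{2(x - \hat{x})}{\hat{x}}.
$$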
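The claim that Y_x = x, Y_x̂ = x̂ solve the HJI-inequality for γ = 1 can be spot-checked on a grid. This is a minimal sketch using the plant data of the example (f(x) = −x³, g1 = 0, h1(x) = h2(x) = x); the grid and the function names are hypothetical choices.

```python
def hji_left_side(x, xh, gamma=1.0):
    """Left-hand side of (8.18) for the example with Yx = x and Yxh = xh.

    Plant data from Example 8.1.1: f(x) = -x^3, g1 = 0, h1(x) = h2(x) = x.
    """
    Yx, Yxh = x, xh
    return (Yx * (-x**3) + Yxh * (-xh**3)
            - 0.5 * gamma**2 * (x - xh)**2
            + 0.5 * (x - xh)**2)


def storage(x, xh):
    """Candidate function Y(x, xh) = (x^2 + xh^2) / 2."""
    return 0.5 * (x**2 + xh**2)


# Evaluate on a hypothetical grid over [-2, 2] x [-2, 2].
grid = [i / 10.0 for i in range(-20, 21)]
worst = max(hji_left_side(x, xh) for x in grid for xh in grid)
print(worst <= 0.0)  # the HJI-inequality holds at every grid point
```

For γ = 1 the left-hand side reduces to −x⁴ − x̂⁴, so it is strictly negative away from the origin; this is why the candidate satisfies the inequality rather than the equation.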

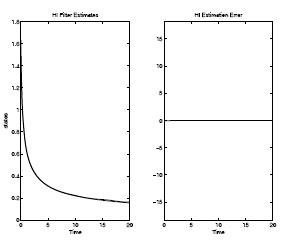

The results of simulation of the system with this filter are shown in Figures 8.2 and 8.3. A noise signal

w(t)=w0+0.1 sin(t)

where w0 is a zero-mean Gaussian white-noise with unit variance, is also added to the output.

FIGURE 8.3

Nonlinear H∞-Filter Performance with Unknown Initial Condition

Because the HJIE is difficult to solve in practice, it is sometimes useful to consider the linearized filter and solve the associated Riccati equation. Such a filter is a variant of the extended-Kalman filter, but differs from it in that the extended-Kalman filter solves a finite-horizon Riccati equation at every instant, while this filter solves an infinite-horizon Riccati equation. Accordingly, let

(8.22)

be a linearization of the system about x = 0. Then the following result follows trivially.

Proposition 8.1.2 Consider the nonlinear system (8.1) and its linearization (8.22). Suppose for some γ > 0 there exists a real positive-definite symmetric solution to the filter algebraic-Riccati equation (FARE):

(8.23)

or

(8.24)

for some matrix $L = PH_2^T$. Then, the filter

$$
\dot{\hat{x}} = F\hat{x} + L\,(y - H_2 \hat{x})
$$

solves the infinite-horizon NLHIFP for the system (8.1) locally on a small neighborhood O of x = 0 if the gain matrix L is taken as specified above.

Proof: The proof follows trivially from linearization. It can be checked that the function $V(x,\hat{x}) = \frac{1}{2}\gamma^2 (x - \hat{x})^T P^{-1} (x - \hat{x})$, with P a solution of (8.23) or (8.24), satisfies the HJIE (8.8) together with (8.9), or equivalently the HJIE (8.10), for the linearized system. □

Remark 8.1.7 To guarantee that there exists a positive-definite solution to the ARE (8.23) or (8.24), it is necessary for the linearized system (8.22) or [F, H1] to be detectable (see [292]).
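For a scalar linearization the filter gain can be computed in closed form. The sketch below rests on a stated assumption: it takes the algebraic Riccati equation to be the stationary form of (8.16), i.e. 2FP + G1² − (H2² − H1²/γ²)P² = 0, which may differ from the exact form of (8.23). The name G1 for the linearized noise-input coefficient and all numerical values are hypothetical.

```python
import math


def scalar_fare_solution(F, G1, H1, H2, gamma):
    """Positive root P of 2 F P + G1^2 - (H2^2 - H1^2 / gamma^2) P^2 = 0.

    Assumed equation: the stationary (Pdot = 0) scalar form of (8.16).
    """
    q = H2**2 - H1**2 / gamma**2
    if q <= 0.0:
        raise ValueError("gamma is too small for this closed-form root")
    return (F + math.sqrt(F**2 + q * G1**2)) / q


# Hypothetical linearized data.
F, G1, H1, H2, gamma = -1.0, 1.0, 1.0, 1.0, 2.0

P = scalar_fare_solution(F, G1, H1, H2, gamma)
L = P * H2                       # scalar version of L = P H2^T
error_pole = F - L * H2          # error dynamics with w = 0: de/dt = (F - L H2) e

print(P > 0.0, error_pole < 0.0)
```

With this gain the noise-free estimation error obeys a stable scalar equation, which is the property the infinite-horizon problem requires.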

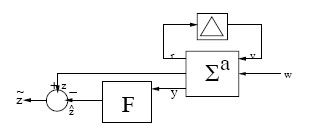

8.2 Continuous-Time Robust Nonlinear H∞-Filtering

In this section we discuss the continuous-time robust nonlinear H∞-filtering problem (RNLHIFP) in the presence of structured uncertainties in the system. This situation is shown in Figure 8.4, and arises when the system model is not known exactly, as is usually the case in practice. For this purpose, we consider the following model of the system with uncertainties:

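$$
\Sigma^{a,\Delta} : \left\{ \begin{array}{rcl} \dot{x} &=& f(x) + \Delta f(x,t) + g_1(x)w; \quad x(0) = 0 \\ z &=& h_1(x) \\ y &=& h_2(x) + \Delta h_2(x,t) + k_{21}(x)w \end{array} \right. \tag{8.25}
$$

where all the variables have their previous meanings. In addition, $\Delta f : \mathcal{X} \times \Re \to V^\infty(\mathcal{X})$, Δf(0, t) = 0, and $\Delta h_2 : \mathcal{X} \times \Re \to \Re^m$, Δh2(0, t) = 0, are the uncertainties of the system, which belong to the set of admissible uncertainties ΞΔ defined as follows.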

Assumption 8.2.1 The admissible uncertainties of the system are structured and matched, and they belong to the following set:

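$$
\begin{aligned}
\Xi_\Delta = \Big\{ \Delta f, \Delta h_2 \;\Big|\; & \Delta f(x,t) = H_1(x)F(x,t)E(x), \ \Delta h_2(x,t) = H_2(x)F(x,t)E(x), \ E(0) = 0, \\
& \int_0^\infty \left( \|E(x)\|^2 - \|F(x,t)E(x)\|^2 \right) dt \ge 0, \ \text{and} \\
& [k_{21}(x) \ \ H_2(x)]\,[k_{21}(x) \ \ H_2(x)]^T > 0 \ \ \forall x \in \mathcal{X}, \ t \in \Re \Big\}
\end{aligned}
$$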

where H1(.), H2(.), F (., .), E(.) have appropriate dimensions.
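The integral condition in Assumption 8.2.1 can be tested numerically for a candidate uncertainty. The sketch below assumes a scalar case with H1 = 1, E(x) = x, and F(x, t) = sin(t), evaluated along an assumed trajectory x(t) = e^{−t}; these choices, the finite horizon, and the rectangle-rule quadrature are all hypothetical.

```python
import math


def energy_margin(E_values, FE_values, dt):
    """Rectangle-rule value of int (|E|^2 - |F E|^2) dt over the samples."""
    return dt * sum(e * e - fe * fe for e, fe in zip(E_values, FE_values))


# Hypothetical scalar uncertainty: H1 = 1, E(x) = x, F(x, t) = sin(t),
# evaluated along an assumed trajectory x(t) = exp(-t) on [0, 10).
dt = 0.01
times = [k * dt for k in range(1000)]
x = [math.exp(-t) for t in times]
E = x
FE = [math.sin(t) * xi for t, xi in zip(times, x)]
delta_f = FE  # Delta f = H1 * F * E with H1 = 1

print(energy_margin(E, FE, dt) >= 0.0)  # admissible, since |sin(t)| <= 1
```

Any F with |F(x, t)| ≤ 1 passes this test pointwise, whereas a gain such as F = 2 makes the margin negative and is therefore not admissible.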

The problem is the following.

Definition 8.2.1 (Robust Nonlinear H∞-Filtering Problem (RNLHIFP)). Find a filter of the form

(8.26)

where ξ ∈ X is the state estimate, $y \in \Re^m$ is the system output, ẑ is the estimated variable, and the functions $a : \mathcal{X} \to V^\infty(\mathcal{X})$, a(0) = 0, $b : \mathcal{X} \to \mathcal{M}^{n \times m}$, $c : \mathcal{X} \to \Re^s$, c(0) = 0 are smooth C2 functions, such that the L2-gain from w to the estimation error $\tilde{z} = z - \hat{z}$ is less than or equal to a given number γ > 0, i.e.,

$$
\int_0^T \|\tilde{z}(t)\|^2 \, dt \le \gamma^2 \int_0^T \|w(t)\|^2 \, dt \tag{8.27}
$$

for all T > 0, all w ∈ L2[0, T ], and all admissible uncertainties. In addition with w ≡ 0, we have limt→∞ ˜z(t) = 0.

To solve the above problem, we first recall the following result [210], which gives sufficient conditions for the solvability of the NLHIFP for the nominal system (i.e., without the uncertainties Δf(x, t) and Δh2(x, t)). Without any loss of generality, we shall also assume for the remainder of this section that γ = 1.

FIGURE 8.4

Configuration for Robust Nonlinear H∞-Filtering

Theorem 8.2.1 Consider the nominal system (8.25) without the uncertainties Δf(x, t) and Δh2(x, t) and the NLHIFP for this system. Suppose there exists a positive-semidefinite function Ψ : Ñ × Ñ → ℜ locally defined in a neighborhood Ñ × Ñ of the origin (x, ξ) = 0 such that the following HJIE is satisfied

(8.28)

for all (x, ξ) ∈ Ñ × Ñ, where

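$$
\begin{aligned}
HJI(x,\xi) \triangleq{}& [\Psi_x(x,\xi) \ \ \Psi_\xi(x,\xi)] \left[ \tilde{f}(x,\xi) + \tilde{g}(x,\xi)\Psi_x^T(\xi,\xi) \right] \\
&+ \frac{1}{4} [\Psi_x(x,\xi) \ \ \Psi_\xi(x,\xi)]\, \tilde{k}(x,\xi)\tilde{k}^T(x,\xi) \begin{bmatrix} \Psi_x^T(x,\xi) \\ \Psi_\xi^T(x,\xi) \end{bmatrix} \\
&- \frac{1}{4} \Psi_x(\xi,\xi)\, g_1(\xi)k_{21}^T(\xi)R^{-1}(x)k_{21}(\xi)g_1^T(\xi)\, \Psi_x^T(\xi,\xi) \\
&- \tilde{h}_2^T(x,\xi)R^{-1}(x)\tilde{h}_2(x,\xi) + \tilde{h}_1^T(x,\xi)\tilde{h}_1(x,\xi) \\
&+ \frac{1}{2} \Psi_x(\xi,\xi)\, g_1(\xi)k_{21}^T(\xi)R^{-1}(x)\tilde{h}_2(x,\xi), \\
\tilde{b}(x,\xi) ={}& \frac{1}{2} b^T(\xi)\Psi_\xi^T(x,\xi) + R^{-1}(x) \left[ \frac{1}{2} k_{21}(x)g_1^T(x)\Psi_x^T(x,\xi) + \tilde{h}_2(x,\xi) \right],
\end{aligned}
$$

and

$$
\begin{aligned}
\tilde{f}(x,\xi) &= \begin{bmatrix} f(x) - g_1(x)k_{21}^T(x)R^{-1}(x)\tilde{h}_2(x,\xi) \\ f(\xi) \end{bmatrix}, \\
\tilde{g}(x,\xi) &= \begin{bmatrix} \frac{1}{2} g_1(\xi)k_{21}^T(\xi)R^{-1}(x)k_{21}(\xi)g_1^T(\xi) \\ \frac{1}{2} g_1(\xi)g_1^T(\xi) \end{bmatrix}, \\
\tilde{k}^T(x,\xi) &= \left[ g_1(x) \left( I - k_{21}^T(x)R^{-1}(x)k_{21}(x) \right)^{1/2} \ \ 0 \right], \\
\tilde{h}_1(x,\xi) &= h_1(x) - h_1(\xi), \\
\tilde{h}_2(x,\xi) &= h_2(x) - h_2(\xi), \\
R(x) &= k_{21}(x)k_{21}^T(x).
\end{aligned}
$$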

Then, the filter (8.26) with

$$
a(\xi) = f(\xi) - b(\xi)h_2(\xi), \qquad c(\xi) = h_1(\xi)
$$

solves the NLHIFP for the system (8.25).

Proof: The proof of this theorem can be found in [210].

Remark 8.2.1 Note that Theorem 8.2.1 provides alternative sufficient conditions for the solvability of the NLHIFP discussed in Section 8.1.

Next, before we can present a solution to the RNLHIFP, which is a refinement of Theorem 8.2.1, we transform the uncertain system (8.25) into a scaled or auxiliary system (see also [226]) using the matching properties of the uncertainties in ΞΔ:

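$$
\Sigma^{as,\Delta} : \left\{ \begin{array}{rcl} \dot{x}_s &=& f(x_s) + \left[ g_1(x_s) \ \ \frac{1}{\tau} H_1(x_s) \right] w_s; \quad x_s(0) = 0 \\ z_s &=& \begin{bmatrix} h_1(x_s) \\ \tau E(x_s) \end{bmatrix} \\ y_s &=& h_2(x_s) + \left[ k_{21}(x_s) \ \ \frac{1}{\tau} H_2(x_s) \right] w_s \end{array} \right. \tag{8.29}
$$

where xs ∈ X is the state-vector of the scaled system, $w_s \in \mathcal{W} \subset L_2([0,\infty), \Re^{r+v})$ is the noise input, τ > 0 is a scaling constant, and zs is the new controlled (or estimated) output, which has a fictitious component coming from the uncertainties. To estimate zs, we employ the filter structure (8.26) with an extended output: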

(8.30)

where all the variables have their previous meanings. We then have the following preliminary result which establishes the equivalence between the system (8.25) and the scaled system (8.29).

Theorem 8.2.2 Consider the nonlinear uncertain system (8.25) and the RNLHIFP for this system. There exists a filter of the form (8.26) which solves the problem for this system for all admissible uncertainties if, and only if, there exists a τ > 0 such that the same filter (8.30) solves the problem for the scaled system (8.29).

Proof: The proof of this theorem can be found in Appendix B.
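The construction of the scaled system (8.29) amounts to appending a fictitious noise channel and a fictitious output. The sketch below assembles the augmented maps for a scalar state, with row vectors stored as Python lists; the helper name `scaled_system` and the example data are hypothetical.

```python
def scaled_system(g1, k21, h1, H1, H2, E, tau):
    """Return the augmented input and output maps of the scaled system (8.29).

    Every argument except tau is a function of the state. Row vectors are
    plain Python lists, and H1, H2, E return scalars in this sketch.
    """
    def g_hat(x):
        return g1(x) + [H1(x) / tau]      # [g1, H1 / tau]

    def k_hat(x):
        return k21(x) + [H2(x) / tau]     # [k21, H2 / tau]

    def z_s(x):
        return [h1(x), tau * E(x)]        # [h1; tau E]

    return g_hat, k_hat, z_s


# Hypothetical scalar plant with two original noise channels.
g_hat, k_hat, z_s = scaled_system(
    g1=lambda x: [1.0, 0.0],
    k21=lambda x: [0.0, 1.0],
    h1=lambda x: x,
    H1=lambda x: 0.5,
    H2=lambda x: 0.2,
    E=lambda x: x,
    tau=2.0,
)

print(g_hat(1.0), k_hat(1.0), z_s(1.0))
# R_hat = k_hat k_hat^T must be positive for the filter formulas to apply.
print(sum(k * k for k in k_hat(1.0)) > 0.0)
```

The scaling constant τ trades off the size of the fictitious input against the size of the fictitious output, which is why Theorem 8.2.2 only asks for the existence of some τ > 0.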

We can now present a solution to the RNLHIFP in the following theorem, which gives sufficient conditions for the solvability of the problem.

Theorem 8.2.3 Consider the uncertain system (8.29) and the RNLHIFP for this system. Given a scaling factor τ > 0, suppose there exists a positive-semidefinite function Φ : Ñ1 × Ñ1 → ℜ locally defined in a neighborhood Ñ1 × Ñ1 of the origin (xs, ξ) = (0, 0) such that the following HJIE is satisfied:

(8.31)

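for all (xs, ξ) ∈ Ñ1 × Ñ1, where

$$
\begin{aligned}
\widetilde{HJI}(x_s,\xi) \triangleq{}& [\Phi_{x_s}(x_s,\xi) \ \ \Phi_\xi(x_s,\xi)] \left[ \hat{f}(x_s,\xi) + \hat{g}(x_s,\xi)\Phi_{x_s}^T(\xi,\xi) \right] \\
&+ \frac{1}{4} [\Phi_{x_s}(x_s,\xi) \ \ \Phi_\xi(x_s,\xi)]\, \hat{k}(x_s,\xi)\hat{k}^T(x_s,\xi) \begin{bmatrix} \Phi_{x_s}^T(x_s,\xi) \\ \Phi_\xi^T(x_s,\xi) \end{bmatrix} \\
&- \frac{1}{4} \Phi_{x_s}(\xi,\xi)\, \hat{g}_1(\xi)\hat{k}_{21}^T(\xi)\hat{R}^{-1}(x_s)\hat{k}_{21}(\xi)\hat{g}_1^T(\xi)\, \Phi_{x_s}^T(\xi,\xi) \\
&- \hat{h}_2^T(x_s,\xi)\hat{R}^{-1}(x_s)\hat{h}_2(x_s,\xi) + \hat{h}_1^T(x_s,\xi)\hat{h}_1(x_s,\xi) \\
&+ \frac{1}{2} \Phi_{x_s}(\xi,\xi)\, \hat{g}_1(\xi)\hat{k}_{21}^T(\xi)\hat{R}^{-1}(x_s)\hat{h}_2(x_s,\xi) + \tau^2 E^T(x_s)E(x_s), \\
\hat{b}(x_s,\xi) ={}& \frac{1}{2} b^T(\xi)\Phi_\xi^T(x_s,\xi) + R^{-1}(x_s) \left[ \frac{1}{2} k_{21}(x_s)g_1^T(x_s)\Phi_{x_s}^T(x_s,\xi) - \frac{1}{2} k_{21}(\xi)g_1^T(\xi)\Phi_{x_s}^T(\xi,\xi) + \hat{h}_2(x_s,\xi) \right],
\end{aligned}
$$

and

$$
\begin{aligned}
\hat{f}(x_s,\xi) &= \begin{bmatrix} f(x_s) - \hat{g}_1(x_s)\hat{k}_{21}^T(x_s)\hat{R}^{-1}(x_s)\hat{h}_2(x_s,\xi) \\ f(\xi) \end{bmatrix}, \\
\hat{g}(x_s,\xi) &= \begin{bmatrix} \frac{1}{2} \hat{g}_1(\xi)\hat{k}_{21}^T(\xi)\hat{R}^{-1}(x_s)\hat{k}_{21}(\xi)\hat{g}_1^T(\xi) \\ \frac{1}{2} \hat{g}_1(\xi)\hat{g}_1^T(\xi) \end{bmatrix}, \\
\hat{k}^T(x_s,\xi) &= \left[ \hat{g}_1(x_s) \left( I - \hat{k}_{21}^T(x_s)\hat{R}^{-1}(x_s)\hat{k}_{21}(x_s) \right)^{1/2} \ \ 0 \right], \\
\hat{k}_{21}(x_s) &= \left[ k_{21}(x_s) \ \ \tfrac{1}{\tau} H_2(x_s) \right], \\
\hat{g}_1(x_s) &= \left[ g_1(x_s) \ \ \tfrac{1}{\tau} H_1(x_s) \right], \\
\hat{h}_1(x_s,\xi) &= h_1(x_s) - h_1(\xi), \\
\hat{h}_2(x_s,\xi) &= h_2(x_s) - h_2(\xi), \\
\hat{R}(x_s) &= \hat{k}_{21}(x_s)\hat{k}_{21}^T(x_s).
\end{aligned}
$$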

Then the filter (8.30) with

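$$
a(\xi) = f(\xi) + \frac{1}{2} \hat{g}_1(\xi)\hat{g}_1^T(\xi)\Phi_{x_s}^T(\xi,\xi) - b(\xi) \left[ h_2(\xi) + \frac{1}{2} \hat{k}_{21}(\xi)\hat{g}_1^T(\xi)\Phi_{x_s}^T(\xi,\xi) \right], \qquad c(\xi) = h_1(\xi)
$$

solves the RNLHIFP for the system (8.25).

Proof: The result follows by applying Theorem 8.2.2 for the scaled system (8.29). □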

It should be observed that the filters constructed in Sections 8.1 and 8.2 can hardly be implemented in practice, because the gain matrices are functions of the original state of the system, which is the very quantity to be estimated. Thus, except for the linear case, such filters are of little practical interest. Based on this observation, in the next section we present another class of filters which can be implemented in practice.