3 State Estimation

Gauss's batch least squares is routinely used today, and it often gives accuracy that is superior to the best available EKF.

Fred Daum, [40], p. 65

Although SDEs provide accessible mathematical models and have become standard in many areas of science and engineering, feature extraction from high‐order processes is usually much more complex than from first‐order processes such as the Langevin equation. The state‐space representation avoids many inconveniences by replacing high‐order SDEs with first‐order vector SDEs. Estimation performed using the state‐space model solves two problems simultaneously: 1) filtering measurement noise and, in some cases, process noise (hence the term filter), and 2) solving the state‐space equations with respect to the process state (hence the term state estimator). For well‐defined linear models, state estimation is usually organized using optimal, optimal unbiased, and unbiased estimators. However, any uncertainty in the model, interference, and/or errors in the noise description may require a norm‐bounded state estimator to obtain better results. Methods of linear state estimation can be extended to nonlinear problems to obtain acceptable estimates in the case of smooth nonlinearities. Otherwise, special nonlinear estimators can be more accurate. In this chapter, we lay the foundations of state estimation and introduce the reader to the most widely used methods applied to linear and nonlinear stochastic systems and processes.

3.1 Linear Stochastic Process in State Space

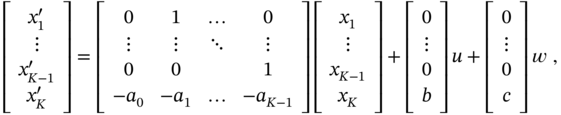

To introduce the state space approach, we consider a simple case of a single‐input, single‐output ![]()th‐order LTV system represented by the SDE

where ![](), ![](), and ![]() are known time‐varying coefficients; ![]() is the output; ![]() is the input; and ![]() is WGN. To represent (3.1) in state space, let us assign ![](), suppose that ![](), and rewrite (3.1) as

The ![]()th‐order derivative in (3.2) can be viewed as the ![]()th system state and the state variables ![]() assigned as

to be further combined into compact matrix forms

called state‐space equations, where time ![]() is omitted for simplicity. In a similar manner, a multiple‐input multiple‐output (MIMO) system can be represented in state space.

In applications, the system output ![]() is typically measured in the presence of additive noise ![]() and observed as ![](). Accordingly, a continuous‐time MIMO system can be represented in state space using the state equation and observation equation, respectively, as

where ![]() is the state vector, ![](), ![]() is the observation vector, ![]() is the input (control) signal vector, ![]() is the process noise, and ![]() is the observation or measurement noise. Matrices ![](), ![](), ![](), ![](), and ![]() are generally time‐varying. Note that the term ![]() appears in (3.4) only if the order of ![]() is the same as that of the system, ![](). Otherwise, this term must be omitted. Also, if a system has no input, then both terms containing ![]() in (3.3) and (3.4) must be omitted. In what follows, we will refer to the following definition of the state‐space linear SDE.

Linear SDE in state space: The model in (3.3) and (3.4) is called linear if all its noise processes are Gaussian.

3.1.1 Continuous‐Time Model

Solutions to (3.3) and (3.4) can be found in state space in two different ways for LTI and LTV systems.

Time‐Invariant Case

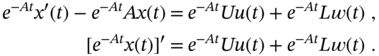

When modeling LTI systems, all matrices are constant, and the solution to the state equation (3.3) can be found by multiplying both sides of (3.3) from the left‐hand side by an integrating factor ![](),

Further integration from ![]() to ![]() gives

and the solution becomes

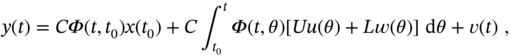

where the state transition matrix that projects the state from ![]() to ![]() is

and ![]() is called the matrix exponential. Note that the stochastic integral in (3.6) can be computed either in the Itô sense or in the Stratonovich sense [193].

Substituting (3.6) into (3.4) transforms the observation equation to

where the matrix exponential ![]() has the Maclaurin series

in which ![]() is an identity matrix. The matrix exponential ![]() can also be transformed using the Cayley‐Hamilton theorem [167], which states that ![]() can be represented with a finite series of length ![]() as

if a constant ![](), ![](), is specified properly.
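As a minimal sketch of the Maclaurin series for the matrix exponential, the following Python snippet sums the leading terms of the series for a small system matrix. The matrix `A` (a double integrator), the time step `tau`, and the helper name `expm_series` are illustrative assumptions and are not taken from the text. For this nilpotent `A` the series terminates after the linear term, so the result can be checked by hand.

```python
import numpy as np

def expm_series(A, tau, terms=20):
    """Approximate exp(A * tau) by the first `terms` terms of its Maclaurin series."""
    n = A.shape[0]
    result = np.eye(n)                 # zeroth-order term: identity matrix
    term = np.eye(n)
    for i in range(1, terms):
        term = term @ (A * tau) / i    # builds (A * tau)^i / i! incrementally
        result = result + term
    return result

# Hypothetical double-integrator system matrix and time step.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
tau = 0.5
Phi = expm_series(A, tau)              # equals I + A * tau because A @ A = 0
```

A fixed number of terms is adequate only when the norm of `A * tau` is moderate; for stiff or large matrices a dedicated routine is usually preferable.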

Time‐Varying Case

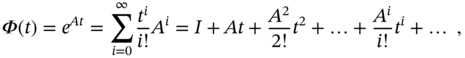

For equations (3.3) and (3.4) of the LTV system with time‐varying matrices, a proper integrating factor cannot be found to obtain solutions like (3.6) and (3.8) [167]. Instead, one can start with a homogeneous ordinary differential equation (ODE) [28]

Matrix theory suggests that if ![]() is continuous for ![](), then the solution to (3.13) for a known initial ![]() can be written as

where the fundamental matrix ![]() that satisfies the differential equation

is nonsingular for all ![]() and is not unique.

To determine ![](), we can consider ![]() initial state vectors ![](), obtain from (3.13) ![]() solutions ![](), and specify the fundamental matrix as ![](). Since each particular solution satisfies (3.13), it follows that matrix ![]() defined in this way satisfies (3.14).

Provided ![](), the solution to (3.13) can be written as ![](), where ![]() satisfies (3.15), and ![]() is some unknown function of the same class. Referring to (3.15), equation (3.3) can then be transformed to

and, from (3.16), we have

which, by known ![](), specifies function ![]() as

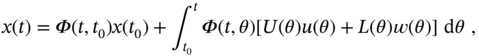

The solution to (3.3), by ![]() and (3.17), finally becomes

where the state transition matrix is given by

Referring to (3.18), the observation equation can finally be rewritten as

It should be noted that the solutions (3.6) and (3.18) are formally equivalent. The main difference lies in the definitions of the state transition matrix: (3.7) for LTI systems and (3.19) for LTV systems.

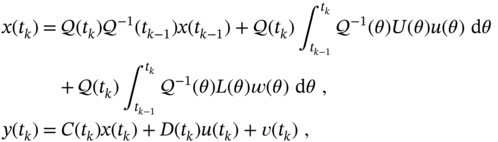

3.1.2 Discrete‐Time Model

The necessity of representing continuous‐time state‐space models in discrete time arises when numerical analysis is required or solutions are supposed to be obtained using digital blocks. In such cases, a solution to an SDE is considered at two discrete points ![]() and ![]() with a time step ![](), where ![]() is a discrete time index. It is also supposed that a digital ![]() accurately represents a discrete‐time ![](). Further, the FE or BE methods are used [26].

The FE method, also called the standard Euler method or Euler‐Maruyama method, relates the numerically computed stochastic integrals to ![]() and is associated with Itô calculus. The relevant discrete‐time state‐space model ![](), sometimes called a prediction model, is basic in control. A contradiction here is with the noise term ![]() that exists even though the initial state ![]() is supposed to be known and thus already affected by ![]().

The BE method, also known as an implicit method, relates all integrals to the current time ![](), which yields ![](). This state equation is sometimes called a real‐time model and is free of the contradiction inherent to the FE‐based state equation.

The FE and BE methods are uniquely used to convert continuous‐time differential equations to discrete time, and in the sequel we will use both of them. It is also worth noting that predictive state estimators fit better with the FE method and filters with the BE method.

Time‐Invariant Case

Consider the solution (3.6) and the observation (3.4), substitute ![]() with ![](), ![]() with ![](), and write

Because input ![]() is supposed to be known, assume that it is also piecewise constant on each time step and put ![]() outside the first integral. Next, substitute ![](), ![](), and ![](), and write

where ![](), ![](), and

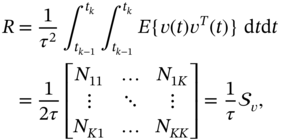

The discrete process noise ![]() given by (3.24) has zero mean, ![](), and the covariance ![]() specified using the continuous WGN covariance (2.91) by

Observe that ![]() is a matrix of the same class as ![](), which is a function of ![](), ![](). If ![]() is small enough, then this term can be set to zero. If also ![]() in (3.3), then ![]() establishes a rule‐of‐thumb relationship between the discrete and continuous process noise covariances.
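To make the relationship between the continuous and discrete process noise concrete, the sketch below integrates the standard expression for the discrete noise covariance, the integral over one time step of Φ(θ) B q Bᵀ Φ(θ)ᵀ, for a double integrator driven by white noise. The model, the symbols `B`, `q`, and `tau`, and the use of Simpson's rule are all assumptions made for illustration; the text's own covariance formula is assumed to have this form. The first‐order approximation `tau * q * B Bᵀ` is computed alongside to show what remains when higher‐order terms in the time step are dropped.

```python
import numpy as np

def transition(theta):
    """State transition matrix exp(A * theta) of a double integrator (closed form)."""
    return np.array([[1.0, theta], [0.0, 1.0]])

def discrete_noise_cov(tau, q, B, n=100):
    """Integrate Phi(theta) B q B^T Phi(theta)^T over [0, tau] with Simpson's rule."""
    thetas = np.linspace(0.0, tau, 2 * n + 1)   # odd number of nodes
    weights = np.ones(2 * n + 1)
    weights[1:-1:2] = 4.0                       # odd interior nodes
    weights[2:-1:2] = 2.0                       # even interior nodes
    h = tau / (2 * n)
    Q = np.zeros((2, 2))
    for w_i, th in zip(weights, thetas):
        G = transition(th) @ B                  # noise direction propagated to step end
        Q += w_i * q * (G @ G.T)
    return Q * h / 3.0

tau, q = 0.1, 2.0                               # hypothetical time step and noise PSD
B = np.array([[0.0], [1.0]])                    # noise enters the second state only
Q_exact = discrete_noise_cov(tau, q, B)
Q_rule = tau * q * (B @ B.T)                    # first-order (small-tau) approximation
```

For this model the integral evaluates to `q * [[tau**3/3, tau**2/2], [tau**2/2, tau]]`, so the two matrices differ only by terms of order `tau**2` and higher.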

The zero mean discrete observation noise ![]() (2.93) has the covariance ![]() defined as

where ![](), ![](), is a component of the PSD matrix ![]().

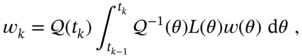

Time‐Varying Case

For LTV systems, solutions in state space are given by (3.18) and (3.20). Substituting ![]() with ![]() and ![]() with ![]() yields

where the fundamental matrix ![]() must be assigned individually for each model. Reasoning along similar lines as for LTI systems, these equations can be represented in discrete time as

where ![]() and the time‐varying matrices are given by

and noise ![]() has zero mean, ![](), and generally a time‐varying covariance ![](). Noise ![]() also has zero mean, ![](), and generally a time‐varying covariance ![]().

3.2 Methods of Linear State Estimation

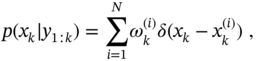

Provided adequate modeling of a real linear physical process in discrete‐time state space, state estimation can be carried out using methods of optimal linear filtering based on the following state‐space equations,

where ![]() is the state vector, ![]() is the input (or control) signal vector, ![]() is the observation (or measurement) vector, ![]() is the system (process) matrix, ![]() is the observation matrix, ![]() is the control (or input) matrix, ![]() is the disturbance matrix, and ![]() is the system (or process) noise matrix. The term with ![]() is usually omitted in (3.33), assuming that the order of ![]() is less than the order of ![](). At the other extreme, when the input disturbance appears to be severe, the noise components are often omitted in (3.32) and (3.33).

In many cases, system or process noise is assumed to be zero mean and white Gaussian ![]() with known covariance ![](). Measurement or observation noise ![]() is also often modeled as zero mean and white Gaussian with covariance ![](). Moreover, many problems suggest that ![]() and ![]() can be viewed as physically uncorrelated and independent processes. However, there are other cases when ![]() and ![]() are correlated with each other and exhibit different kinds of coloredness.

Filters of two classes can be designed to provide state estimation based on (3.32) and (3.33). Estimators of the first class ignore the process dynamics described by the state equation (3.32). Instead, they require multiple measurements of the quantity in question to approach the true value through statistical averaging. Such estimators have batch forms, but recursive forms may also be available. An example is the LS estimator. Estimators of the second class require both state‐space equations, so they are more flexible and universal. The recursive KF algorithm belongs to this class of estimators, but its batch form is highly inefficient in view of growing memory due to IIR. The batch FIR filter and LMF, both operating on finite horizons, are more efficient in this sense, but they cause computational problems when the batch is very large.

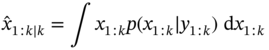

Regardless of the estimator structure, the notation ![]() means an estimate of the state ![]() at time index ![](), given observations of ![]() up to and including at time index ![](). The state ![]() to be estimated at the time index ![]() is represented by the following standard variables:

- ![]() is the a priori (or prior) state estimate at ![]() given observations up to and including at time index ![]();

- ![]() is the a posteriori (or posterior) state estimate at ![]() given observations up to and including at ![]();

- The a priori estimation error is defined by

- The a posteriori estimation error is defined by

- The a priori error covariance is defined by

- The a posteriori error covariance is defined by

In what follows, we will refer to these definitions in the derivation and study of various types of state estimators.

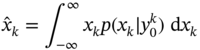

3.2.1 Bayesian Estimator

Suppose there is some discrete stochastic process ![](), ![]() measured as ![]() and assigned a set of measurements ![](). We may also assume that the a posteriori density ![]() is known at the past time index ![](). Of course, our interest is to obtain the a posteriori density ![]() at ![](). Provided ![](), the estimate ![]() can be found as the first‐order initial moment (2.10) and the estimation error as the second‐order central moment (2.14). To this end, we use Bayes' rule (2.43) and obtain ![]() as follows.

Consider the joint density ![]() and represent it as

Note that ![]() is independent of past measurements and write ![](). Also, the desired a posteriori pdf can be written as ![](). Then use Bayesian inference (2.47) and obtain

where ![]() is a normalizing constant, the conditional density ![]() represents the prior distribution of ![](), and ![]() is the likelihood function of ![]() given the outcome ![](). Note that the conditional pdf ![]() can be expressed via known ![]() using the rule (2.53) as

Provided ![](), the Bayesian estimate is obtained by (2.10) as

and the estimation error is obtained by (2.14) as

From the previous, it follows that the Bayesian estimate (3.41) is universal regardless of system models and noise distributions.

Bayesian estimator: Bayesian estimation can be universally applied to linear and nonlinear models with Gaussian and non‐Gaussian noise.

It is worth noting that for linear models the Bayesian approach leads to the optimal KF algorithm, which will be shown next.
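The Bayesian recursion described above (predict the prior density from the previous posterior, multiply by the likelihood, normalize, then take the first and second moments) can be sketched numerically on a grid. The scalar model, the grid range, and all parameter names (`F`, `Q`, `H`, `R`, `y`) below are hypothetical choices for illustration; a linear Gaussian model is used only so that the result is easy to verify, and the same steps apply to other densities.

```python
import numpy as np

def gauss(x, mean, var):
    """Gaussian pdf evaluated elementwise."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

# Grid over the scalar state (assumed range and resolution).
x = np.linspace(-10.0, 10.0, 801)
dx = x[1] - x[0]

# Known a posteriori density at the previous time index: N(0, 1).
post_prev = gauss(x, 0.0, 1.0)

# Prediction: integrate the transition density against the previous posterior.
F, Q = 1.0, 1.0
trans = gauss(x[:, None], F * x[None, :], Q)   # p(x_k | x_{k-1}) on the grid
prior = trans @ post_prev * dx

# Update: multiply by the likelihood of the new measurement and normalize.
H, R, y = 1.0, 1.0, 2.0
post = gauss(y, H * x, R) * prior
post /= post.sum() * dx

x_hat = np.sum(x * post) * dx                  # posterior mean (the estimate)
P = np.sum((x - x_hat) ** 2 * post) * dx       # posterior variance (the error)
```

With these values the grid result is close to a posterior mean of 4/3 and variance of 2/3, which is what the closed‐form Gaussian update gives for the same numbers.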

Linear Model

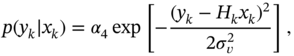

Let us look at an important special case and show how the Bayesian approach can be applied to a linear Gaussian model (3.32) and (3.33) with ![](). For clarity, consider a first‐order stochastic process. The goal is to obtain the a posteriori density ![]() in terms of the known a posteriori density ![]() and arrive at a computational algorithm.

To obtain the desired ![]() using (3.39), we need to specify ![]() using (3.40) and define the likelihood function ![](). Noting that all distributions are Gaussian, we can represent ![]() as

where ![]() is the known a posteriori estimate of ![]() and ![]() is the variance of the estimation error. Hereinafter, we will use ![]() as the normalizing coefficient.

Referring to (3.32), the conditional density ![]() can be written as

where ![]() is the variance of the process noise ![]().

Now, the a priori density ![]() can be transformed using (3.40) as

By substituting (3.43) and (3.44) and equating to unity the integral of the normally distributed ![](), (3.45) can be transformed to

Likewise, the likelihood function ![]() can be written using (3.33) as

where ![]() is the variance of the observation noise ![](). Then the required density ![]() can be represented by (3.39) using (3.46) and (3.47) as

which holds if the following obvious relationship is satisfied

By equating to zero the free term and terms with ![]() and ![](), we arrive at several conditions

which establish a recursive computational algorithm to compute the estimate ![]() (3.53) and the estimation error ![]() (3.50) starting with the initial ![]() and ![]() for known ![]() and ![](). This is a one‐state KF algorithm. What comes from its derivation is that the estimate (3.53) is obtained without using (3.41) and (3.42). Therefore, it is not a Bayesian estimator. Later, we will derive the ![]()‐state KF algorithm and discuss it in detail.
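A one‐state recursion of this kind can be sketched in a few lines of Python. The function below uses the standard scalar Kalman filter relations; the function name `kf_step`, the argument names, and the numerical values are assumptions for illustration, and the ordering of the steps is not claimed to match the numbering of the conditions in the text.

```python
def kf_step(x_prev, P_prev, y, F, H, Q, R):
    """One recursion of a scalar (one-state) Kalman filter."""
    x_prior = F * x_prev                      # a priori estimate
    P_prior = F * P_prev * F + Q              # a priori error variance
    K = P_prior * H / (H * P_prior * H + R)   # gain
    x_post = x_prior + K * (y - H * x_prior)  # a posteriori estimate
    P_post = (1.0 - K * H) * P_prior          # a posteriori error variance
    return x_post, P_post

x_hat, P = 0.0, 1.0                           # initial estimate and error variance
for y in [2.0, 1.5, 1.8]:                     # hypothetical measurements
    x_hat, P = kf_step(x_hat, P, y, F=1.0, H=1.0, Q=1.0, R=1.0)
```

Only the previous estimate and its error variance are carried between steps, which is what makes the algorithm recursive.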

3.2.2 Maximum Likelihood Estimator

From (3.39) it follows that the a posteriori density ![]() is given in terms of the a priori density ![]() and the likelihood function ![](). It is also worth knowing that the likelihood function plays a special role in the estimation theory, and its use leads to the ML state estimator. For the first‐order Gaussian stochastic process, the likelihood function is given by the expression (3.47). This function is maximized by minimizing the exponent power, and we see that the only solution following from ![]() is inefficient due to noise.

An efficient ML estimate can be obtained if we combine ![]() measurement samples of the fully observed vector ![]() into an extended observation vector ![]() with a measurement noise ![]() and write the observation equation as

where ![]() is the extended observation matrix. A relevant example can be found in sensor networks, where the desired quantity is simultaneously measured by ![]() sensors. Using (3.54), the ML estimate of ![]() can be found by maximizing the likelihood function ![]() as

For Gaussian processes, the likelihood function becomes

where ![]() is the extended covariance matrix of the measurement noise ![](). Since maximizing (3.56) with (3.55) means minimizing the power of the exponent, the ML estimate can equivalently be obtained from

By equating the derivative with respect to ![]() to zero,

using the identities (A.4) and (A.5), and assuming that ![]() is nonsingular, the ML estimate can be found as

which demonstrates several important properties when the number ![]() of measurement samples grows without bound [125]:

- It converges to the true value of ![]().

- It is asymptotically optimal unbiased, normally distributed, and efficient.

Maximum likelihood estimate [125]: There is no other unbiased estimate whose covariance is smaller than the finite covariance of the ML estimate as the number of measurement samples grows without bound.

It follows from the previous that as ![]() increases, the ML estimate (3.58) converges to the Bayesian estimate (3.41). Thus, the ML estimate has the property of unbiased optimality when ![]() grows unboundedly, and is inefficient when ![]() is small.
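For Gaussian measurement noise, maximizing the likelihood amounts to minimizing a quadratic form weighted by the inverse noise covariance, which gives a closed‐form batch estimate. The sketch below illustrates this with synthetic data; the stacked observation matrix `H_ext`, the noise variances, the true state, and the sample count are all hypothetical, and the variable names are not the text's notation.

```python
import numpy as np

rng = np.random.default_rng(0)
x_true = np.array([1.0, -2.0])                  # hypothetical constant state
n = 200                                         # number of measurement samples
H_ext = rng.normal(size=(n, 2))                 # stacked observation matrix (assumed)
sigma2 = rng.uniform(0.1, 2.0, size=n)          # per-sample noise variances
R_ext = np.diag(sigma2)                         # stacked noise covariance
Y = H_ext @ x_true + rng.normal(size=n) * np.sqrt(sigma2)

# Gaussian ML estimate: minimize (Y - H x)^T R^{-1} (Y - H x).
Ri = np.linalg.inv(R_ext)
info = H_ext.T @ Ri @ H_ext                     # must be nonsingular
x_ml = np.linalg.solve(info, H_ext.T @ Ri @ Y)
cov_ml = np.linalg.inv(info)                    # covariance of the ML estimate
```

Increasing `n` shrinks `cov_ml`, which is one way to see numerically why the estimate improves as the number of samples grows.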

3.2.3 Least Squares Estimator

Another approach to estimate ![]() from (3.54) is known as Gauss' least squares (LS) [54,185] or LS estimator,1 which is widely used in practice. In the LS method, the estimate ![]() is chosen such that the sum of the squares of the residuals ![]() minimizes the cost function ![]() over all ![]() to obtain

Reasoning similarly to (3.57), the estimate can be found to be

To improve (3.60), we can consider the weighted cost function ![](), in which ![]() is a symmetric positive definite weight matrix. Minimizing this cost yields the weighted LS (WLS) estimate

and we infer that the ML estimate (3.58) is a special case of the WLS estimate (3.61), in which ![]() is chosen to be the measurement noise covariance ![]().
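The batch LS and WLS estimates can be illustrated with a small line‐fitting problem. In this sketch the stacked observation matrix `M`, the true parameters, and the noise level are hypothetical. The weight `W` is assumed to multiply the residual directly in the cost; if the weighted cost is instead written with an inverse matrix, the roles of the weight and its inverse swap accordingly.

```python
import numpy as np

rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 50)
M = np.column_stack([np.ones_like(t), t])     # stacked observation matrix (line model)
x_true = np.array([0.5, 2.0])                 # hypothetical offset and slope
Y = M @ x_true + 0.1 * rng.normal(size=t.size)

# Batch LS estimate from the normal equations.
x_ls = np.linalg.solve(M.T @ M, M.T @ Y)

# WLS with a symmetric positive definite weight W in (Y - M x)^T W (Y - M x).
W = np.diag(rng.uniform(0.5, 2.0, size=t.size))
x_wls = np.linalg.solve(M.T @ W @ M, M.T @ W @ Y)

# Reference solution from NumPy's least-squares routine.
x_ref, *_ = np.linalg.lstsq(M, Y, rcond=None)
```

Solving the normal equations directly is fine for small, well‐conditioned problems like this one; an orthogonal‐decomposition solver such as `np.linalg.lstsq` is generally the more robust choice.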

3.2.4 Unbiased Estimator

If someone wants an unbiased estimator, whose average estimate is equal to the constant ![](), then the only performance criterion will be

To obtain an unbiased estimate, one can think of an estimate ![]() as the product of some gain ![]() and the measurement vector ![]() given by (3.54),

Since ![]() has zero mean, the unbiasedness condition (3.62) gives the unbiasedness constraint

By multiplying the constraint by the identity ![]() from the right‐hand side and discarding the nonzero ![]() on both sides, we obtain ![]() and arrive at the unbiased estimate

which is identical to the LS estimate (3.60). Since the product ![]() is associated with discrete convolution, the unbiased estimator (3.63) can be thought of as an unbiased FIR filter.

Recursive Unbiased (LS) Estimator

We have already mentioned that batch estimators (3.63) and (3.60) can be computationally inefficient and cause a delay when the number of data points ![]() is large. To compute the batch estimate recursively, one can start with ![](), ![](), associated with matrix ![](), and rewrite (3.63) as

where ![](). Further, matrix ![]() can be represented using the forward and backward recursions as [179]

and ![]() rewritten similarly as

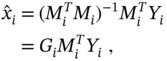

Combining this recursive form with (3.64), we obtain

Next, substituting ![]() taken from (3.64), combining with (3.66), and providing some transformations gives the recursive form

in which ![]() can be computed recursively by (3.65). To run the recursions, the variable ![]() must range as ![]() for the inverse in (3.64) to exist and the initial values calculated at ![]() using the original batch forms.
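The recursive scheme can be sketched with the familiar recursive least squares update, in which the inverse of the accumulated Gram matrix and the estimate are refreshed one sample at a time. The variable names (`G`, `h`, `x_hat`), the synthetic data, and the exact form of the recursion below are assumptions chosen for illustration and may differ in detail from the recursions referenced in the text. As the text notes, the recursion is started from the batch form once enough samples are available for the inverse to exist.

```python
import numpy as np

rng = np.random.default_rng(2)
K, n = 2, 30                                   # state size and number of samples (assumed)
x_true = np.array([1.0, -0.5])
H = rng.normal(size=(n, K))                    # row i is the observation matrix of sample i
y = H @ x_true + 0.05 * rng.normal(size=n)

# Initialize from the batch form on the first K samples so the inverse exists.
G = np.linalg.inv(H[:K].T @ H[:K])
x_hat = G @ H[:K].T @ y[:K]

# Recursive updates for the remaining samples.
for i in range(K, n):
    h = H[i:i + 1]                             # 1 x K row for the new sample
    G = np.linalg.inv(h.T @ h + np.linalg.inv(G))
    x_hat = x_hat + (G @ h.T).ravel() * (y[i] - h @ x_hat).item()

x_batch = np.linalg.solve(H.T @ H, H.T @ y)    # batch LS for comparison
```

After the last sample the recursive estimate agrees with the batch LS estimate up to rounding, while each step touches only one new measurement.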

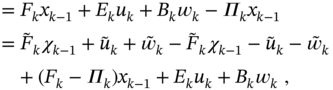

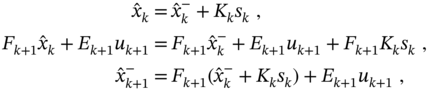

3.2.5 Kalman Filtering Algorithm

We now look again at the recursive algorithm in (3.50)–(3.53) associated with one‐state linear models. The approach can easily be extended to the ![]()‐state linear model

where the noise vectors ![]() and ![]() are uncorrelated. The corresponding algorithm was derived by Kalman in his seminal paper [84] and is now commonly known as the Kalman filter.2

The KF algorithm operates in two phases: 1) in the prediction phase, the a priori estimate and error covariance are predicted at ![]() using measurements at ![](), and 2) in the update phase, the a priori values are updated at ![]() to the a posteriori estimate and error covariance using measurements at ![](). This strategy assumes two available algorithmic options: the a posteriori KF algorithm and the a priori KF algorithm. It is worth noting that the original KF algorithm is a posteriori.

The a posteriori Kalman Filter

Consider a $K$‐state space model as in (3.68) and (3.69). The Bayesian approach can be applied similarly to the one‐state case, as was done by Kalman in [84], although there are several other ways to arrive at the KF algorithm. Let us show the simplest one.

The first thing to note is that the most reasonable prior estimate can be taken from (3.68) for the known estimate $\hat{x}_{k-1}$ and input $u_k$ if we ignore the zero mean noise $w_k$. This gives

which is also the prior estimate (3.52).

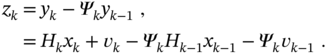

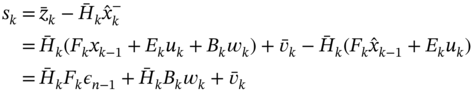

To update $\hat{x}_k^-$, we need to involve the observation (3.69). This can be done if we take into account the measurement residual

which is the difference between data $y_k$ and the predicted data $H_k \hat{x}_k^-$. The measurement residual covariance $S_k$ can then be written as

Since $z_k$ is generally biased, because $\hat{x}_k^-$ is generally biased, the prior estimate can be updated as

where the matrix $K_k$ is introduced to correct the bias in $\hat{x}_k^-$. Therefore, $K_k$ plays the role of the bias correction gain.

As can be seen, the relations (3.70) and (3.73), which are reasonably extracted from the state‐space model, are exactly the relations (3.52) and (3.53). Now notice that the estimate (3.73) will be optimal if we find $K_k$ such that the MSE is minimized. To do this, let us refer to (3.68) and (3.69) and define errors (3.34)–(3.37) involving (3.70)–(3.73).

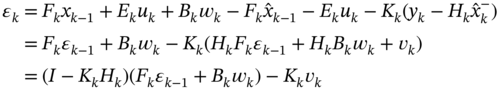

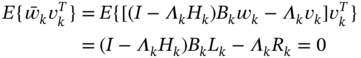

The prior estimation error $\epsilon_k^- = x_k - \hat{x}_k^-$ (3.34) can be transformed as

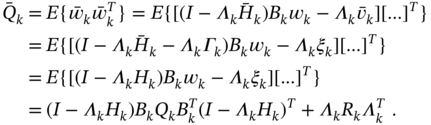

and, for mutually uncorrelated $\epsilon_{k-1}$ and $w_k$, the prior error covariance (3.36) can be transformed to

Next, the estimation error $\epsilon_k = x_k - \hat{x}_k$ (3.35) can be represented by

and, for mutually uncorrelated $\epsilon_{k-1}$, $w_k$, and $v_k$, the a posteriori error covariance (3.37) can be transformed to

What remains is to find the optimal bias correction gain $K_k$ that minimizes the MSE. This can be done by minimizing the trace of $P_k$, which is a convex function with a minimum corresponding to the optimal $K_k$. Using the matrix identities (A.3) and (A.6), the minimization of $\mathrm{tr}\,P_k$ by $K_k$ can be carried out if we rewrite the equation $\frac{\partial}{\partial K_k}\mathrm{tr}\,P_k = 0$ as

This gives the optimal bias correction gain

The gain $K_k$ (3.78) is known as the Kalman gain, and its substitution on the left‐hand side of the last term in (3.77) transforms the error covariance $P_k$ into

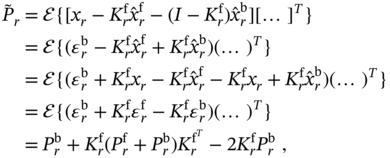

Another useful form of $P_k$ appears when we first refer to (3.78) and (3.72) and transform (3.77) as

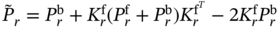

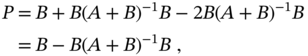

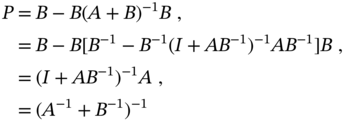

Next, inverting both sides of ![]() and applying (A.7) gives [185]

and another form of $P_k$ appears as

Using (3.80), the Kalman gain (3.78) can also be modified as [185]

The previously derived standard KF algorithm gives an estimate at $k$ utilizing data from zero to $k$. Therefore, it is also known as the a posteriori KF algorithm, the pseudocode of which is listed as Algorithm 1. The algorithm requires initial values $\hat{x}_0$ and $P_0$, as well as noise covariances $Q_k$ and $R_k$ to update the estimates starting at $k = 1$. Its operation is quite transparent, and recursions are easy to program, are fast to compute, and require little memory, making the KF algorithm suitable for many applications.
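The two-phase recursion described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than a transcription of Algorithm 1: it assumes the model $x_k = F x_{k-1} + E u_k + B w_k$, $y_k = H x_k + v_k$ with time-invariant matrices, the standard gain $K_k = P_k^- H^T S_k^{-1}$, and the short covariance update $P_k = (I - K_k H) P_k^-$. The function name `kf_a_posteriori` and the two-state position/velocity test model are hypothetical.

```python
import numpy as np

def kf_a_posteriori(y, u, F, E, B, H, Q, R, x0, P0):
    """A posteriori KF sketch for x_k = F x_{k-1} + E u_k + B w_k, y_k = H x_k + v_k.

    All matrices are assumed time-invariant here for brevity.
    """
    x = np.asarray(x0, dtype=float)
    P = np.asarray(P0, dtype=float)
    I = np.eye(x.size)
    estimates = []
    for y_k, u_k in zip(y, u):
        # Prediction phase: a priori estimate and error covariance
        x_prior = F @ x + E @ u_k
        P_prior = F @ P @ F.T + B @ Q @ B.T
        # Update phase: residual, its covariance, and the Kalman gain
        z = y_k - H @ x_prior
        S = H @ P_prior @ H.T + R
        K = P_prior @ H.T @ np.linalg.inv(S)
        x = x_prior + K @ z
        P = (I - K @ H) @ P_prior
        estimates.append(x)
    return np.array(estimates), P

# Hypothetical test model: position/velocity state, position is measured
F = np.array([[1.0, 1.0], [0.0, 1.0]])
E = np.zeros((2, 1))
B = np.array([[0.5], [1.0]])
H = np.array([[1.0, 0.0]])
Q = np.array([[0.01]])
R = np.array([[1.0]])

n = 50
y = np.arange(1, n + 1, dtype=float).reshape(n, 1)  # noise-free ramp, true state [k, 1]
u = np.zeros((n, 1))
est, P_last = kf_a_posteriori(y, u, F, E, B, H, Q, R,
                              x0=[5.0, 0.0], P0=100.0 * np.eye(2))
```

Starting from a deliberately wrong initial state, the estimate settles on the true ramp after a short transient. Only the previous estimate and covariance are stored between steps, which is the low memory footprint mentioned above.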

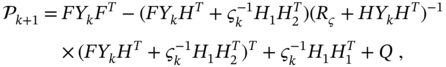

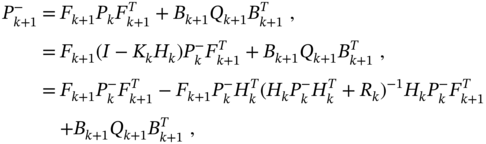

The a priori Kalman Filter

Unlike the a posteriori KF, the a priori KF gives an estimate at $k+1$ using data $y_k$ from $k$. The corresponding algorithm emerges from Algorithm 1 if we first substitute (3.79) in (3.74) and go to the discrete difference Riccati equation (DDRE)

which gives recursion for the covariance of the prior estimation error. Then the a priori estimate $\hat{x}_{k+1}^-$ can be obtained by the transformations

and the a priori KF is formalized with the pseudocode specified in Algorithm 2. As can be seen, this algorithm does not require data $y_{k+1}$ to produce an estimate at $k+1$. However, it requires future values ![]() and ![]() and is thus most suitable for LTI systems without input.
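A minimal sketch of the a priori recursion is given below for the case the text singles out, an LTI system without input. It is an illustration under assumptions, not Algorithm 2 itself: the Riccati step is written as $P_{k+1}^- = F (P_k^- - K_k H P_k^-) F^T + \bar{Q}$, where `Q_bar` is a hypothetical name for the process noise covariance already mapped to the state, and the function name `kf_a_priori` is also hypothetical.

```python
import numpy as np

def kf_a_priori(y, F, H, Q_bar, R, x_prior, P_prior):
    """A priori KF sketch (one-step predictor), time-invariant model, no input.

    Q_bar stands for the process noise covariance mapped to the state (B Q B^T).
    """
    x_prior = np.asarray(x_prior, dtype=float)
    P_prior = np.asarray(P_prior, dtype=float)
    predictions = []
    for y_k in y:
        S = H @ P_prior @ H.T + R
        K = P_prior @ H.T @ np.linalg.inv(S)
        # Estimate at k+1 obtained from data up to k
        x_prior = F @ (x_prior + K @ (y_k - H @ x_prior))
        # Riccati recursion for the prior error covariance
        P_prior = F @ (P_prior - K @ H @ P_prior) @ F.T + Q_bar
        predictions.append(x_prior)
    return np.array(predictions), P_prior

# Scalar random-walk check with F = H = Q_bar = R = 1
one = np.array([[1.0]])
y = np.zeros((200, 1))
pred, P_inf = kf_a_priori(y, one, one, one, one, x_prior=[0.0], P_prior=one)
```

For this scalar case the Riccati recursion has the fixed point $P^2 - P - 1 = 0$, so the prior error covariance converges to the golden ratio, roughly 1.618.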

Optimality and Unbiasedness of the Kalman Filter

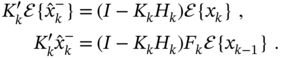

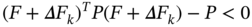

From the derivation of the Kalman gain (3.78) it follows that, by minimizing the MSE, the KF becomes optimal [36,220]. In Chapter, the optimality of KF will be supported by the batch OFIR filter, whose recursions are exactly the KF recursions. On the other hand, the KF originates from the Bayesian approach. Therefore, many authors also call it optimal unbiased, and the corresponding proof given in [54] has been mentioned in many works [9,58,185]. However, in Chapter it will be shown that the batch OUFIR filter has other recursions, which contradicts the proof given in [54].

To ensure that the KF is optimal, let us look at the explanation given in [54]. So let us consider the model (3.68) with ![]() and define an estimate ![]() as [54]

where ![]() and the gain ![]() still needs to be determined. Next, let us examine the a priori error ![]() and the a posteriori error

Estimate (3.82) was stated in [54], p. 107, to be unbiased if ![]() and ![](). By satisfying these conditions using the previous relations, we obtain ![]() and the KF estimate becomes (3.73). This fact was used in [54] as proof that the KF is optimal unbiased.

Now, notice that the prior error ![]() is always biased in dynamic systems, so ![](). Otherwise, there would be no need to adjust the bias by ![](). Then we redefine the prior estimate as

and average the estimation error as

The filter will be unbiased if ![]() is equal to zero, which gives

Substituting (3.84) into (3.82) gives the same estimate (3.73) with, however, different ![]() defined by (3.83). The latter means that KF will be 1) optimal, if ![]() is optimally biased, and 2) optimal unbiased, if ![]() has no bias. Now note that the Kalman gain $K_k$ makes an estimate optimal, and therefore there is always a small bias to be found in ![](), which contradicts the property of unbiased optimality and the claim that KF is unbiased.

Intrinsic Errors of Kalman Filtering

Looking at Algorithm 1 and assuming that the model matches the process, we notice that the required $\hat{x}_0$, $P_0$, $Q_k$, and $R_k$ must be exactly known in order for KF to be optimal. However, due to the practical impossibility of doing this at every $k$ and prior to running the filter, these values are commonly estimated approximately, and the question arises about the practical optimality of KF. Accordingly, we can distinguish two intrinsic errors caused by incorrectly specified initial conditions and poorly known covariance matrices.

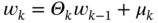

Figure 3.1 shows what happens to the KF estimate when $\hat{x}_0$ and $P_0$ are set incorrectly. With correct initial values, the KF estimate ranges close to the actual behavior over all $k$. Otherwise, incorrectly set initial values can cause large initial errors and long transients. Although the KF estimate approaches an actual state asymptotically, it can take a long time. Such errors also occur when the process undergoes temporary changes associated, for example, with jumps in velocity, phase, and frequency. The estimator's inability to track temporary uncertain behaviors causes transients that can last for a long time, especially in harmonic models [179].

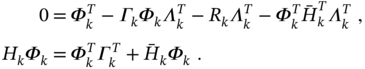

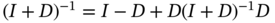

Now, suppose the error covariances are not known exactly and introduce the worst‐case scaling factor ![]() as ![]() or ![](). Figure 3.2 shows the typical root MSEs (RMSEs) produced by KF as functions of ![](), and we notice that ![]() makes the estimate noisy and ![]() makes it biased with respect to the optimal case of ![](). Although the most favorable case of ![]() and ![]() assumes that the effects will be mutually exclusive, in practice this rarely happens due to the different nature of the process and measurement noise. We add that the intrinsic errors considered earlier appear at the KF output regardless of the model and differ only in values.
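The effect of poorly known noise covariances can be illustrated with a scalar random walk. The sketch below is a toy experiment and does not reproduce Figure 3.2: the symbol `beta` for the scaling factor and the mismatch rule (the filter is designed for $\beta^2 Q$ and $R/\beta^2$ while the process has the true $Q$ and $R$) are assumptions made here for illustration. The gain is taken from the mismatched Riccati recursion, and the actual steady-state error is then propagated with the true covariances.

```python
import math

def steady_gain(Q, R, iters=500):
    """Steady-state Kalman gain for x_k = x_{k-1} + w_k, y_k = x_k + v_k."""
    P = 1.0
    for _ in range(iters):
        P_prior = P + Q
        K = P_prior / (P_prior + R)
        P = (1.0 - K) * P_prior
    return K

def actual_rmse(K, Q_true, R_true, iters=500):
    """Actual steady-state RMSE when a fixed gain K is applied to the true process."""
    P = 1.0
    for _ in range(iters):
        P_prior = P + Q_true
        # Joseph-type form, valid for any gain (not only the optimal one)
        P = (1.0 - K) ** 2 * P_prior + K ** 2 * R_true
    return math.sqrt(P)

Q_true, R_true = 0.1, 1.0
rmse = {}
for beta in (0.25, 0.5, 1.0, 2.0, 4.0):
    K = steady_gain(beta ** 2 * Q_true, R_true / beta ** 2)  # mismatched design
    rmse[beta] = actual_rmse(K, Q_true, R_true)
```

In this toy case the RMSE is smallest for `beta = 1` and grows in both directions: a gain that is too small lets the estimate lag behind the process, while a gain that is too large passes more measurement noise to the output.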

Figure 3.1 Typical errors in the KF caused by incorrectly specified initial conditions. Transients can last for a long time, especially in a harmonic state‐space model.

Figure 3.2 Effect of errors in noise covariances, ![]() and ![](), on the RMSEs produced by KF in the worst case: ![]() corresponds to the optimal estimate, ![]() makes the estimate more noisy, and ![]() makes it more biased.

3.2.6 Backward Kalman Filter

Some applications require estimating the past state of a process or retrieving information about the initial state and error covariance. For example, multi‐pass filtering (forward‐backward‐forward‐...) [231] updates the initial values using a backward filter, which improves accuracy.

The backward a posteriori KF can be obtained similarly to the standard KF if we represent the state equation (3.68) backward in time as

and then, reasoning along similar lines as for the a posteriori KF, define the a priori state estimate at ![](),

the measurement residual ![](), the measurement residual covariance

the backward estimate

the a priori error covariance

and the a posteriori error covariance

Further minimizing ![]() by ![]() gives the optimal gain

and the modified error covariance

Finally, the pseudocode of the backward a posteriori KF, which updates the estimates from ![]() to 0, is listed as Algorithm 3. The algorithm requires initial values ![]() and ![]() at ![]() and updates the estimates back in time from ![]() to zero to obtain estimates of the initial state $\hat{x}_0$ and error covariance $P_0$.

3.2.7 Alternative Forms of the Kalman Filter

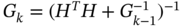

Although the KF Algorithm 1 is the most widely used, other available forms of KF may be more efficient in some applications. Collections of such algorithms can be found in [9,58,185] and some other works. To illustrate the flexibility and versatility of KF in various forms, next we present two versions known as the information filter and alternate KF form. Noticing that these modifications were shown in [185] for the FE‐based model, we develop them here for the BE‐based model. Some other versions of the KF can be found in the section “Problems”.

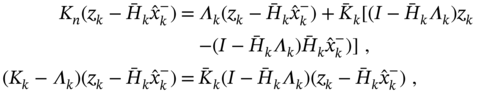

Information Filter

When minimal information loss is required instead of minimal MSE, the information matrix ![](), which is the inverse of the covariance matrix $P_k$, can be used. Since $P_k$ is symmetric and positive definite, it follows that the inverse of $P_k$ is unique and KF can be modified accordingly.

By introducing ![]() and ![](), the error covariance (3.80) can be transformed to

where, by (3.75) and ![](), matrix ![]() becomes

Using the Woodbury identity (A.7), one can further rewrite (3.86) as

and arrive at the information KF [185]. Given ![](), ![](), ![](), ![](), and ![](), the algorithm predicts and updates the estimates as

It is worth noting that the information KF is mathematically equivalent to the standard KF for linear models. However, its computational load is greater because more inversions are required in the covariance propagation.
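The equivalence claimed above can be checked numerically for a single step. The sketch below is not the book's algorithm listing: it uses the textbook information-form update (information matrix of the posterior equals the prior information plus $H^T R^{-1} H$, gain equals $P_k H^T R^{-1}$), the name `Y` for the information matrix, and `Q_bar` for the process noise covariance mapped to the state. All of these names and the numerical values are assumptions.

```python
import numpy as np

def covariance_step(x, P, y, F, H, Q_bar, R):
    """One step of the standard (covariance-form) KF, no input."""
    x_prior = F @ x
    P_prior = F @ P @ F.T + Q_bar
    K = P_prior @ H.T @ np.linalg.inv(H @ P_prior @ H.T + R)
    return x_prior + K @ (y - H @ x_prior), (np.eye(len(x)) - K @ H) @ P_prior

def information_step(x, Y, y, F, H, Q_bar, R):
    """One step of the information-form KF; Y is the inverse error covariance."""
    R_inv = np.linalg.inv(R)
    x_prior = F @ x
    # Prediction needs two inversions: back to covariance and forward again
    Y_prior = np.linalg.inv(F @ np.linalg.inv(Y) @ F.T + Q_bar)
    Y_post = Y_prior + H.T @ R_inv @ H
    K = np.linalg.inv(Y_post) @ H.T @ R_inv
    return x_prior + K @ (y - H @ x_prior), Y_post

F = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q_bar = 0.1 * np.eye(2)
R = np.array([[0.5]])
x0 = np.array([1.0, -1.0])
P0 = np.array([[2.0, 0.3], [0.3, 1.0]])
y1 = np.array([0.7])

x_cov, P_cov = covariance_step(x0, P0, y1, F, H, Q_bar, R)
x_inf, Y_inf = information_step(x0, np.linalg.inv(P0), y1, F, H, Q_bar, R)
```

Both forms return the same estimate, and inverting `Y_inf` recovers `P_cov`. The extra calls to `np.linalg.inv` in the prediction step are where the additional computational load comes from.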

Alternate Form of the KF

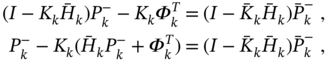

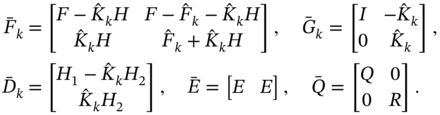

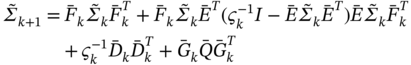

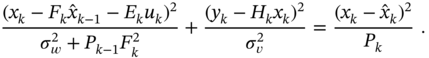

Another form of KF, called alternate KF form, has been proposed in [185] to facilitate obtaining optimal smoothing. The advantage is that this form is compatible with the game theory‐based $H_\infty$ filter [185]. The modification is provided by inverting the residual covariance (3.77) through the information matrix using (A.7). KF recursions modified in this way for a BE‐based model lead to the algorithm

It follows from [185] that matrix ![]() is equal to ![](). Therefore, this algorithm can be written in a more compact form. Given ![](), ![](), ![](), ![](), and ![](), the initial prior error covariance is computed by ![]() and the update equations for ![]() become

The proof of the equivalence of matrices (3.92) and (3.77) is postponed to the section “Problems”.

3.2.8 General Kalman Filter

Looking into the KF derivation procedure, it can be concluded that the assumptions regarding the whiteness and uncorrelatedness of Gaussian noise are too strict and that the algorithm is thus “ideal,” since white noise does not exist in real life. More realistically, one can think of system noise and measurement noise as colored processes and approximate them by a Gauss‐Markov process driven by white noise.

To make sure that such modifications are in demand in real life, consider a few examples. When noise passes through bandlimited and narrowband channels, it inevitably becomes colored and correlated. Any person traveling along a certain trajectory follows a control signal generated by the brain. Since this signal cannot be accurately modeled, deviations from its mean appear as system colored noise. A visual camera catches and tracks objects asymptotically, which makes the measurement noise colored.

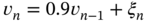

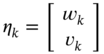

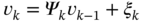

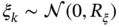

In Fig. 3.3a, we give an example of the colored measurement noise (CMN), and its simulation as the Gauss‐Markov process is illustrated in Fig. 3.3b. The example in Fig. 3.3a shows the signal strength colored noise discovered in the GPS receiver [210]. The noise here was extracted by removing the antenna gain attenuation from the signal strength measurements and then normalizing to zero relative to the mean. The simulated colored noise shown in Fig. 3.3b comes from the Gauss‐Markov sequence $v_n = 0.9 v_{n-1} + \xi_n$ with white Gaussian driving noise $\xi_n \sim \mathcal{N}(0, 16)$. Even a quick glance at the drawing (Fig. 3.3) allows us to think that both processes most likely belong to the same class and, therefore, colored noise can be of Markov origin.

![Schematic illustration of examples of CMN in electronic channels: (a) signal strength CMN in a GPS receiver [210] and (b) Gauss-Markov noise simulated by vn=0.9vn-1+ξn with ξn∼(0,16).](https://imgdetail.ebookreading.net/2023/10/9781119863076/9781119863076__9781119863076__files__images__c03f003.png)

Figure 3.3 Examples of CMN in electronic channels: (a) signal strength CMN in a GPS receiver (based on [210]) and (b) Gauss‐Markov noise simulated by $v_n = 0.9 v_{n-1} + \xi_n$ with $\xi_n \sim \mathcal{N}(0, 16)$.
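The simulated sequence of Fig. 3.3b is easy to reproduce. The sketch below generates $v_n = 0.9 v_{n-1} + \xi_n$ with the standard library only. It assumes that the value 16 in $\xi_n \sim (0, 16)$ is the variance of the driving noise (standard deviation 4) and that the sequence starts from zero. The function names and the seed are hypothetical.

```python
import random

def gauss_markov(n, psi=0.9, sigma=4.0, seed=0):
    """Simulate v_n = psi * v_{n-1} + xi_n with xi_n ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    v, out = 0.0, []
    for _ in range(n):
        v = psi * v + rng.gauss(0.0, sigma)
        out.append(v)
    return out

def lag1_autocorr(seq):
    """Sample lag-1 autocorrelation coefficient."""
    m = sum(seq) / len(seq)
    num = sum((a - m) * (b - m) for a, b in zip(seq[:-1], seq[1:]))
    den = sum((a - m) ** 2 for a in seq)
    return num / den

v = gauss_markov(20000)
rho = lag1_autocorr(v)
```

For a long record the lag-1 autocorrelation settles near the coloredness factor 0.9, which is what distinguishes this sequence from white noise.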

Now that we understand the need to modify the KF for colored and correlated noise, we can do it from a more general point of view than for the standard KF and come up with a universal linear algorithm called general Kalman filter (GKF) [185], which can have various forms. Evidence for the better performance of the GKF, even a minor improvement, can be found in many works, since white noise is unrealistic.

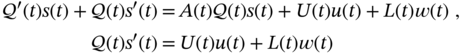

In many cases, the dynamics of CMN $v_k$ and colored process noise (CPN) $w_k$ can be viewed as Gauss‐Markov processes, which complement the state‐space model as

where ![]() and ![]() are the covariances of white Gaussian noise vectors ![]() and ![]() and cross‐covariances ![]() and ![]() represent the time‐correlation between ![]() and ![](). Equations (3.98) and (3.99) suggest that the coloredness factors ![]() and ![]() should be chosen so that ![]() and ![]() remain stationary. Since the model (3.97)–(3.100) is still linear with white Gaussian ![]() and ![](), the Kalman approach can be applied and the GKF derived in the same way as KF.

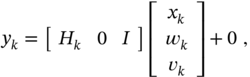

Two approaches have been developed for applying KF to (3.97)–(3.100). The first, the augmented states approach, was proposed by A. E. Bryson et al. in [24,25] and is the most straightforward. It proposes to combine the dynamic equations (3.97)–(3.99) into a single augmented state equation and represent the model as

where ![](). Obviously, the augmented equations (3.101) and (3.102) can be easily simplified to address only CPN, CMN, or noise correlation problems. An easily seen drawback is that the observation equation (3.102) has zero measurement noise. This makes it impossible to apply some KF versions that require inversion of the covariance matrix $R_k$, such as information KF and alternate KF form. Therefore, the KF is said to be ill conditioned for state augmentation [25].

The second approach, which avoids the state augmentation, was proposed and developed by A. E. Bryson et al. in [24,25] and is now known as Bryson's algorithm. This algorithm was later improved in [147] to what is now referred to as the Petovello algorithm. The idea was to use measurement differencing to convert the model with CMN to a standard model with new matrices, but with white noise. The same idea was adopted in [182] for systems with CPN using state differencing. Next, we show several GKF algorithms for time‐correlated and colored noise sources.

Time‐Correlated Noise

If noise has a very short correlation time compared to time intervals of interest, it is typically considered as white and its coloredness can be neglected. Otherwise, the system may experience strong noise interference [58].

When the noise sources are white, ![]() and ![](), but time correlated with ![](), equations (3.98) and (3.99) can be ignored, and (3.101) and (3.102) return to their original forms (3.32) and (3.33) with time‐correlated $w_k$ and $v_k$. There are two ways to apply KF for time‐correlated $w_k$ and $v_k$. We can either de‐correlate $w_k$ and $v_k$ or derive a new bias correction gain (Kalman gain) to guarantee the filter optimality.

Noise de‐correlation. To de‐correlate $w_k$ and $v_k$ and thereby make it possible to apply KF, one can use the Lagrange method [15] and combine (3.32) with the term ![](), where $y_k$ is a data vector and the Lagrange multiplier ![]() is yet to be determined. This transforms the state equation to

where we assign

and white noise ![]() has the covariance ![](),

To find a matrix ![]() such that ![]() and ![]() become uncorrelated, the natural statement ![]() leads to

and the Lagrange multiplier can be found as

Finally, replacing in (3.107) the product ![]() taken from (3.108) removes ![](), and (3.107) becomes

Given ![](), ![](), ![](), ![](), ![](), and ![](), the GKF estimates can be updated using Algorithm 1, in which ![]() should be substituted with ![]() (3.110) using ![]() given by (3.109) and ![]() with ![]() (3.105). We thus have

where ![]() is given by (3.104).

New Kalman gain. Another possibility to apply KF to models with time‐correlated $w_k$ and $v_k$ implies obtaining a new Kalman gain $K_k$. In this case, first it is necessary to change the error covariance (3.76) for correlated ![]() and ![]() as

where $P_k^-$ is given by (3.74) and $S_k$ by (3.77). Using (A.3) and (A.6), the derivative of the trace of $P_k$ with respect to $K_k$ can be found as

By equating the derivative to zero, ![](), we can find the optimal Kalman gain as

and notice that it becomes the original gain (3.78) for uncorrelated noise, ![](). Finally, (3.118) simplifies the error covariance (3.117) to

Given ![](), ![](), ![](), ![](), ![](), and ![](), the GKF algorithm for time‐correlated $w_k$ and $v_k$ becomes

As can be seen, when the correlation is removed by ![](), the algorithm (3.120)–(3.125) becomes the standard KF Algorithm 1. It is also worth noting that the algorithms (3.111)–(3.116) and (3.120)–(3.125) produce equal estimates and, thus, they are identical with no obvious advantages over each other. We will see shortly that the corresponding algorithms for CMN are also identical.
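The modified gain can be checked numerically. The sketch below does not reproduce (3.117)–(3.119); it is a generic derivation under the assumption that the prior error $\epsilon_k^-$ and the measurement noise $v_k$ have a known cross-covariance, denoted here by the hypothetical symbol `C`. With $\epsilon_k = (I - K H)\epsilon_k^- - K v_k$, the trace of the error covariance is minimized by $K = (P^- H^T + C) S^{-1}$, where $S = H P^- H^T + R + H C + C^T H^T$ is the residual covariance.

```python
import numpy as np

def posterior_cov(K, P_prior, H, R, C):
    """Error covariance for an arbitrary gain K when E{eps_prior v^T} = C."""
    A = np.eye(P_prior.shape[0]) - K @ H
    return A @ P_prior @ A.T + K @ R @ K.T - A @ C @ K.T - K @ C.T @ A.T

def correlated_gain(P_prior, H, R, C):
    """Gain that minimizes the trace of posterior_cov (assumed notation)."""
    S = H @ P_prior @ H.T + R + H @ C + C.T @ H.T
    return (P_prior @ H.T + C) @ np.linalg.inv(S)

P_prior = np.array([[2.0, 0.5], [0.5, 1.0]])
H = np.array([[1.0, 0.0]])
R = np.array([[1.0]])
C = np.array([[0.3], [0.1]])  # hypothetical cross-covariance

K_opt = correlated_gain(P_prior, H, R, C)
P_opt = posterior_cov(K_opt, P_prior, H, R, C)

# Any perturbed gain should give a larger trace
rng = np.random.default_rng(0)
base = np.trace(P_opt)
worse = [np.trace(posterior_cov(K_opt + 0.1 * rng.standard_normal(K_opt.shape),
                                P_prior, H, R, C)) for _ in range(20)]

# With zero correlation the gain reduces to the standard Kalman gain
K_std = P_prior @ H.T @ np.linalg.inv(H @ P_prior @ H.T + R)
K_zero = correlated_gain(P_prior, H, R, np.zeros_like(C))
```

At the optimal gain the covariance also collapses to the short form $P = P^- - K (H P^- + C^T)$, which mirrors the simplification described in the text.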

Colored Measurement Noise

The suggestion that CMN may be of Markov origin was originally made by Bryson et al. in [24] for continuous‐time and in [25] for discrete‐time tracking systems. Two fundamental approaches have also been proposed in [25] for obtaining GKF for CMN: state augmentation and measurement differencing. The first approach involves reassigning the state vector so that the white sources are combined in an augmented system noise vector. This allows using KF directly, but makes it ill‐conditioned, as shown earlier. Even so, state augmentation is considered to be the main solution to the problem caused by CMN [54,58,185].

For CMN, the state‐space equations can be written as

where ![]() and ![]() are zero mean white Gaussian with the covariances ![]() and ![]() and the property ![]() for all ![]() and ![](). Augmenting the state vector gives

where, as in (3.101), zero observation noise makes KF ill‐conditioned.
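The ill-conditioning can be seen in a small numerical sketch. The block layout below is the usual augmentation for CMN and an assumption about the ordering of the augmented state (the state first, then the colored noise), not a transcription of (3.128). The helper name `augment_for_cmn` and all numerical values are hypothetical.

```python
import numpy as np

def augment_for_cmn(F, B, H, Psi):
    """Stack the state x_k and the colored noise v_k into one state vector."""
    n, m, p = F.shape[0], H.shape[0], B.shape[1]
    F_a = np.block([[F, np.zeros((n, m))], [np.zeros((m, n)), Psi]])
    B_a = np.block([[B, np.zeros((n, m))], [np.zeros((m, p)), np.eye(m)]])
    H_a = np.hstack([H, np.eye(m)])  # y_k = H x_k + v_k, no additive noise left
    return F_a, B_a, H_a

F = np.array([[1.0, 1.0], [0.0, 1.0]])
B = np.array([[0.5], [1.0]])
H = np.array([[1.0, 0.0]])
Psi = np.array([[0.9]])
F_a, B_a, H_a = augment_for_cmn(F, B, H, Psi)

# Joint covariance of the white sources [w_k; xi_k]
Q_a = np.diag([0.01, 16.0])

# One KF step with zero measurement noise covariance
P_prior = F_a @ np.eye(3) @ F_a.T + B_a @ Q_a @ B_a.T
S = H_a @ P_prior @ H_a.T          # R = 0
K = P_prior @ H_a.T @ np.linalg.inv(S)
P_post = (np.eye(3) - K @ H_a) @ P_prior
```

After the update, `H_a @ P_post @ H_a.T` is zero, so the a posteriori covariance is singular along the measured direction. Forms of the filter that invert the measurement noise covariance cannot be used at all in this setting.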

To avoid increasing the state vector, one can apply the measurement differencing approach [25] and define a new observation ![]() as

By substituting ![]() taken from (3.126) and ![]() taken from (3.127), the observation equation (3.129) becomes

where we introduced

![](), and white noise ![]() with the properties

where

Now the modified model (3.126) and (3.130) contains white and time‐correlated noise sources ![]() and ![](), for which the prior estimate $\hat{x}_k^-$ is defined by (3.70) and the prior error covariance $P_k^-$ by (3.74).
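The measurement differencing step can be verified on one sample. The sketch below assumes the CMN model $x_k = F x_{k-1} + B w_k$, $y_k = H x_k + v_k$, $v_k = \Psi v_{k-1} + \xi_k$ without input and with invertible $F$. The names `Gamma`, `D`, and `v_bar` are hypothetical labels for the matrices that arise when $x_{k-1}$ is expressed through $x_k$; they are not claimed to match the notation of (3.130)–(3.134).

```python
import numpy as np

rng = np.random.default_rng(1)
F = np.array([[1.0, 1.0], [0.0, 1.0]])
B = np.array([[0.5], [1.0]])
H = np.array([[1.0, 0.0]])
Psi = np.array([[0.9]])

# One step of the assumed CMN model
x_prev = rng.standard_normal(2)
v_prev = rng.standard_normal(1)
w = rng.standard_normal(1)
xi = rng.standard_normal(1)
x = F @ x_prev + B @ w
v = Psi @ v_prev + xi
y_prev = H @ x_prev + v_prev
y = H @ x + v

# Differencing cancels the colored component Psi v_{k-1}
z = y - Psi @ y_prev

# Equivalent observation z = D x + v_bar, using x_{k-1} = F^{-1}(x_k - B w_k)
Gamma = Psi @ H @ np.linalg.inv(F)
D = H - Gamma
v_bar = Gamma @ B @ w + xi
```

The new noise `v_bar` is white but contains `w`, so it is correlated with the process noise at the same time index. This is why the differenced model has to be handled with one of the two techniques for time-correlated noise.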

For (3.130), the measurement residual can be written as

and the innovation covariance ![]() can be transformed as

The recursive GKF estimate can thus be written as

where the Kalman gain $K_k$ should be found for either time‐correlated or de‐correlated ![]() and ![](). Thereby, one can come up with two different, although mathematically equivalent, algorithms as discussed next.

Correlated noise. For time‐correlated ![]() and

and ![]() , KF can be applied if we find the Kalman gain

, KF can be applied if we find the Kalman gain ![]() by minimizing the trace of the error covariance

by minimizing the trace of the error covariance ![]() . Representing the estimation error

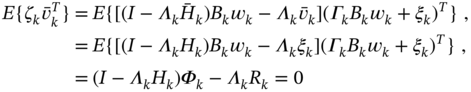

. Representing the estimation error ![]() with

with

and noticing that ![]() does not correlate with ![]() and ![](), we obtain the error covariance $P_k$ as

where $P_k^-$ is given by (3.120) and $S_k$ by (3.139).

Now the optimal Kalman gain $K_k$ can be found by minimizing $\mathrm{tr}\,P_k$ using (A.3), (A.4), and (A.6). Equating to zero the derivative of $\mathrm{tr}\,P_k$ with respect to $K_k$ gives

and we obtain

Finally, replacing the first component in the last term in (3.142b) with (3.144) gives

Given ![](), ![](), ![](), ![](), ![](), and ![](), the GKF equations become [183]

and we notice that ![]() gives ![](), ![](), ![](), and ![](), so that this algorithm reduces to the standard KF Algorithm 1.
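The trace-minimization step used above can be checked numerically. The following is a minimal sketch, not the algorithm of [183]: it assumes that the error covariance has the generic quadratic form P(K) = P⁻ − K Cᵀ − C Kᵀ + K S Kᵀ, where `C` stands for the cross-covariance between the prior error and the residual and `S` for the innovation covariance. The names `P_prior`, `C`, `S`, and the random test matrices are illustrative assumptions. Equating the derivative of the trace to zero then gives K = C S⁻¹.

```python
import numpy as np

def posterior_cov(P_prior, C, S, K):
    """Assumed generic form of the error covariance as a function of the gain K."""
    return P_prior - K @ C.T - C @ K.T + K @ S @ K.T

def optimal_gain(C, S):
    """Gain that zeroes the derivative of trace(P(K)): K S = C (S is symmetric)."""
    return np.linalg.solve(S.T, C.T).T

rng = np.random.default_rng(0)
n, m = 3, 2  # hypothetical state and measurement dimensions

# Draw a joint positive definite covariance of [prior error; residual]
# so that P_prior, C, and S are mutually consistent.
A = rng.standard_normal((n + m, n + m))
J = A @ A.T + 1e-3 * np.eye(n + m)
P_prior, C, S = J[:n, :n], J[:n, n:], J[n:, n:]

K_opt = optimal_gain(C, S)
trace_opt = np.trace(posterior_cov(P_prior, C, S, K_opt))

# Any perturbation D of the gain adds trace(D S D^T) > 0 to the cost.
trace_perturbed = [
    np.trace(posterior_cov(P_prior, C, S,
                           K_opt + 1e-2 * rng.standard_normal((n, m))))
    for _ in range(5)
]
print(trace_opt, min(trace_perturbed))
```

With white uncorrelated noise, the cross term is C = P⁻Hᵀ and S = HP⁻Hᵀ + R, so the same expression returns the standard Kalman gain.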

De‐correlated noise. Alternatively, efforts can be made to de‐correlate ![]() and ![]() by repeating steps (3.103)–(3.110) as shown next. Similarly to (3.103), rewrite the state equation as

where the following variables are assigned

and require that noise ![]() be white with the covariance

Matrix ![]() that makes ![]() and ![]() uncorrelated can be found by representing ![]() with

that gives the following Lagrange multiplier

Substituting ![]() taken from (3.157) into the last term in (3.156) removes ![](), and (3.156) takes the form

Given ![](), ![](), ![](), ![](), ![](), and ![](), the GKF equations become [183]

Again we notice that ![]() makes ![](), ![](), ![](), ![](), ![](), and ![](), and this algorithm reduces to KF Algorithm 1. Next, we will show that algorithms (3.146)–(3.151) and (3.160)–(3.165) give identical estimates and therefore are equivalent.

Equivalence of GKF Algorithms for CMN

The equivalence of the Bryson and Petovello algorithms for CMN was shown in [33], assuming time‐invariant noise covariances that do not distinguish between FE‐ and BE‐based models. We will now show that the GKF algorithms (3.146)–(3.151) and (3.160)–(3.165) are also equivalent [183]. Note that the algorithms can be said to be equivalent in the MSE sense if they give the same estimates for the same input; that is, for the given ![]() and ![](), the outputs ![]() and ![]() in both algorithms are identical.

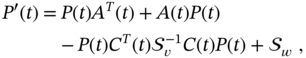

First, we represent the prior estimate ![]() (3.163) via the prior estimate ![]() (3.149) and the prior error covariance ![]() (3.160) via ![]() (3.146) as

where ![](). We now equate estimates (3.150) and (3.164),

substitute (3.166), combine with (3.153), transform as

and cancel the nonzero equal terms on both sides. This relates the gains ![]() and ![]() as

which is the main condition for both estimates to be identical.

If also (3.168) makes the error covariances identical, then the algorithms can be said to be equivalent. To make sure that this is the case, we equate (3.151) and (3.165) as

substitute (3.168) and (3.167), rewrite (3.169) as

introduce ![](), and arrive at

By rearranging the terms, this relationship can be transformed into

and, by substituting ![](), represented with

which, after tedious manipulations with matrices, becomes

By substituting ![]() taken from ![](), we finally end up with

Because ![]() is symmetric, ![](), and (3.131) means ![](), it follows that (3.171) is the equality ![](), and we conclude that the algorithms (3.146)–(3.151) and (3.160)–(3.165) are equivalent. It can also be shown that neither has a significant advantage over the other.

Colored Process Noise

Process noise becomes colored for various reasons, often when the internal control mechanism is not reflected in the state equation and thus becomes the error component. For example, the IEEE Standard 1139‐2008 [75] states that, in addition to white noise, the oscillator phase PSD has four independent CPNs with slopes ![](), ![](), ![](), and ![](), where f is the Fourier frequency. Unlike CMN, which needs to be filtered out, slow CPN behavior can be tracked, as in localization and navigation. But when solving the identification problem, both the CMN and the CPN are commonly removed. This important feature distinguishes GKFs designed for CMN and CPN.

A linear process with CPN can be represented in state space in the standard formulation of the Gauss‐Markov model with the equations

where ![](), ![](), ![](), and ![](). To obtain GKF for CPN, we assign ![](), ![](), and ![](), and assume that ![](), ![](), and ![]() are nonsingular. We also suppose that the noise vectors ![]() and ![]() are uncorrelated with the covariances ![]() and ![](), and choose the matrix ![]() so that CPN ![]() remains stationary.

The augmented state‐space model for CPN becomes

and we see that, unlike (3.101), this does not make the KF ill‐conditioned. On the other hand, colored noise ![]() repeated in the state ![]() can cause extra errors in the KF output under certain conditions.
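The state augmentation discussed above can be sketched numerically. The example below is only an illustration under an assumed model form: a state equation x_n = F x_{n-1} + E u_n + B w_n driven by Gauss‐Markov colored noise w_n = Θ w_{n-1} + μ_n with white μ_n, observed as y_n = H x_n + v_n. The function names `augment_cpn` and `is_stationary` and all numerical values are hypothetical. The stationarity check assumes that a time‐invariant Θ keeps w_n stationary when its spectral radius is below one.

```python
import numpy as np

def augment_cpn(F, E, B, Theta, H):
    """Stack the colored noise w into the state: z = [x; w]."""
    n, p = F.shape[0], Theta.shape[0]
    F_a = np.block([[F, B @ Theta],
                    [np.zeros((p, n)), Theta]])
    E_a = np.vstack([E, np.zeros((p, E.shape[1]))])
    B_a = np.vstack([B, np.eye(p)])          # input matrix of the white noise mu
    H_a = np.hstack([H, np.zeros((H.shape[0], p))])
    return F_a, E_a, B_a, H_a

def is_stationary(Theta):
    """Spectral radius test for the Gauss-Markov noise model."""
    return np.max(np.abs(np.linalg.eigvals(Theta))) < 1.0

# Hypothetical two-state example with a scalar input.
F = np.array([[1.0, 0.1], [0.0, 1.0]])
E = np.array([[0.005], [0.1]])
B = np.eye(2)
Theta = np.diag([0.9, 0.5])
H = np.array([[1.0, 0.0]])
F_a, E_a, B_a, H_a = augment_cpn(F, E, B, Theta, H)

# Drive the original and the augmented models with the same white noise.
rng = np.random.default_rng(1)
x, w, z = np.zeros(2), np.zeros(2), np.zeros(4)
for _ in range(50):
    u = np.array([1.0])
    mu = rng.standard_normal(2)
    w = Theta @ w + mu               # colored process noise
    x = F @ x + E @ u + B @ w        # original state equation
    z = F_a @ z + E_a @ u + B_a @ mu # augmented state equation
max_mismatch = np.max(np.abs(z - np.concatenate([x, w])))
print(is_stationary(Theta), max_mismatch)
```

The augmented state has dimension n + p, and the colored noise appears as part of the state vector, which is the repetition noted above.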

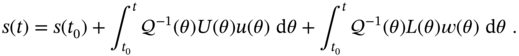

To avoid the state augmentation, we now reason similarly to the measurement differencing approach [25] and derive the GKF for CPN based on the model (3.172)–(3.174) using the state differencing approach [182].

Using the state differencing method, the new state ![]() can be written as

where ![](), ![](), ![](), and ![]() are still to be determined. The KF can be applied if we rewrite the new state equation as

and find the conditions under which the noise ![]() will be zero mean and white Gaussian, ![](). By referring to (3.176), the unknown variables can be found by setting the remainder in (3.175b) to zero,

Then we substitute ![]() taken from (3.175a) into (3.177), combine with ![]() taken from (3.172), and write

Although ![]() may hold for some applications, the case ![]() for arbitrary ![]() is isolated, and a solution to (3.178) can thus be found by simultaneously solving four equations

which suggest that the modified control signal ![]() is given by (3.180), and, for nonsingular ![](), ![](), and ![](), (3.181) gives

where ![]() is a weighted version of ![](). Next, substituting (3.183) into (3.179) and performing some transformations gives the backward and forward recursions for ![](),

It can be seen that ![]() becomes ![]() for white noise if ![]() and ![](), which follows from the backward recursion (3.184), but is not obvious from the forward recursion (3.185).

It follows that the choice of the initial ![]() is critical for running (3.185). Indeed, if we assume that ![](), then (3.185) gives ![](), which may go far beyond the proper matrix. But for the diagonal ![]() with equal components, ![]() is the only solution. On the other hand, ![]() gives ![](), which makes no sense. One way to specify ![]() is to assume that the process is time‐invariant up to ![](), convert (3.184) to the nonsymmetric algebraic Riccati equation (NARE) [107] or quadratic matrix equation

and solve (3.186) for ![](). However, the solution to (3.186) is generally not unique [107], and efforts must be made to choose the proper one.

So the modified state equation (3.176) becomes

with white Gaussian ![]() and matrix ![](), which is obtained recursively using (3.185) for the properly chosen fit ![]() of (3.186). To specify the initial ![](), we consider (3.175a) at ![]() and write ![](). Since ![]() is not available, we set ![]() and take ![]() as a reasonable initial state to run (3.187).

Finally, extracting ![]() from (3.175a), substituting it into the observation equation (3.174), and rearranging the terms, we obtain the modified observation equation

with respect to ![](), in which ![]() can be replaced by the estimate ![]().

Given ![](), ![](), ![](), ![](), ![](), and ![](), the GKF equations for CPN become

A specific feature of this algorithm is that the future values of ![]() and ![]() are required at ![](), which is obviously not a problem for LTI systems. It is also seen that ![]() results in ![]() and ![](), and the algorithm becomes the standard KF Algorithm 1.

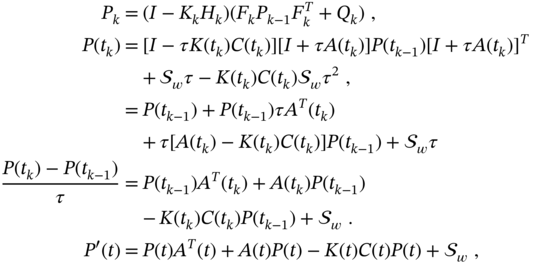

3.2.9 Kalman‐Bucy Filter

The digital world today requires fast and accurate digital state estimators. But when even the best achievable sampling results in a significant loss of information because of limits on the operating frequency of digital devices, there is no choice but to design and implement filters physically in continuous time.

The optimal filter for continuous‐time stochastic linear systems was obtained in [85] by Kalman and Bucy and is called the Kalman‐Bucy filter (KBF). The KBF can be derived in the standard way by minimizing the MSE. Another way is to convert the KF to continuous time, as shown next.

Consider a stochastic LTV process represented in continuous‐time state space by the following equations

where the initial ![]() is supposed to be known.

For a sufficiently short time interval ![](), we can assume that all matrices and the input signal are piecewise constant when ![]() and take ![](), ![](), ![](), and ![](). Following (3.6) and assuming that ![]() is small, we can then approximate ![]() with ![]() and write the solution to (3.196) as

Thus, the discrete analog of model (3.196) and (3.197) is

where we take ![]() ,

,

refer to (3.25) and (3.26), and define the noise covariances as ![]() and

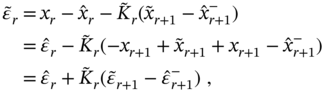

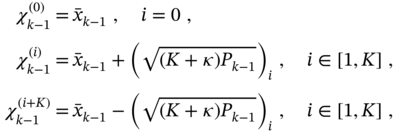

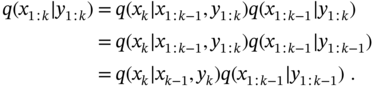

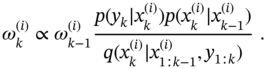

and ![]() , where