The Holistic Approach to Application Security

Fortunately, things don’t have to be this way. Instead of taking the doomed approach of trying to test security into the product at the very end, start at the very beginning and work security activities into every phase of the development lifecycle. I know that it sounds as if this would slow development down to a crawl, but the gains you make in avoiding the penetrate-and-patch cycles more than make up for the extra time you spend in design and development.

This kind of holistic approach to application security is the approach taken by some of the world’s most security-successful software companies including Symantec, EMC, and Microsoft. We’re sure that some of you reading this right now are shaking your heads in disbelief that we would hold up Microsoft as a security role model for you to follow. It’s true that Microsoft has had its fair share of security issues in the past, but since they’ve implemented their complete start-to-finish security process (the Microsoft Security Development Lifecycle, or SDL) across the company, their vulnerability rates, and the severity of those vulnerabilities that do sneak through, have both dramatically decreased. Now many security experts consider Microsoft products to have some of the best—if not the best—security in the world.

Note

In the interest of full disclosure, we should state that one of the authors of this book (Bryan) and its technical editor (Michael) have both previously worked on the Microsoft Security Development Lifecycle team. So we understand there may be an appearance of bias here. However, we believe that the statistics of vulnerabilities in Microsoft products before and after the introduction of the SDL throughout the company speak for themselves as to the effectiveness of the program, and for the effectiveness of holistic security programs in general.

We’ll be taking a closer look at Microsoft’s SDL and some similar programs from other organizations later in this chapter. But before that, we’ll take a look at some of the baseline activities that these programs have in common, and how to integrate these activities into your existing software development lifecycle process.

Training

Before your team even writes a single line of code, before they draw a single UML diagram, even before they start writing use cases and user stories, they can still be improving the security of the future product by learning about application security.

IMHO

There are some application security experts who believe that it’s counterproductive to train developers in security. “Let developers focus on development,” they say, and rely on tools (not to be too cynical, but usually tools their company is selling) to catch and prevent security defects. Now don’t get me wrong; I would love for us as an industry to get to this point. But I think this is more of a nirvana, end-state goal that we might start talking about realistically eight or ten years from now. A lot of companies have made a lot of excellent progress in terms of developer security tools, and I definitely do recommend using these tools, but right now there’s no tool mature enough to act as a complete replacement for developer education.

Of course it’s important for developers to stay current in security—they’re the ones potentially writing vulnerabilities into the product. But quality assurance testers can also benefit from training. Security testing is another of the key fundamentals in every secure development methodology, and having some in-house talent trained in security assessment is never a bad thing. This is often a path for incredible career advancement for these people too.

We’ve worked at several organizations where a junior-level QA engineer became interested in security testing, learned everything he could about application security, and then went on to become a world-class security penetration tester. But it’s all right if this doesn’t happen in your organization. Just getting testers to the point where they can operate security testing tools, analyze the results to weed out any false positives, and prioritize the remaining true positives, will be a huge benefit to your organization.

And for that matter, security training doesn’t need to be restricted to just developers and testers. In some of the most security-mature companies, annual security training is mandatory for everyone in a technical role, including engineering managers and program managers. A program manager may not be writing any vulnerable Java or Python code, but if he’s writing application requirements or use cases with inherent security design flaws, then that’s just as bad—in fact, it may actually be worse since the “defect” is getting introduced into the system at an even earlier point in the lifecycle.

Normally, when people think of training, they envision sitting in a classroom for an instructor-led course, sometimes lasting two or three days. And this is actually a very good form of training—if you can afford it. But in tough economic times, the first two programs that organizations usually cut from their budgets are travel and training. Since we consider ourselves more as engineers than as businesspeople, we’d prefer not to get into the debate over the wisdom of this approach. If you have the money to bring an application security expert consultant onto your site to deliver hands-on training for your team, then that’s great. If you have the money to send some or all of your team to Las Vegas for the annual BlackHat and DefCon conferences, that’s great too, and as an added benefit they’ll have plenty of opportunity to network and make connections within the security community. But if not, then don’t just give up security training this year; instead, choose a more affordable alternative.

One of the best times to take security training is right before a project kicks off: It gets everyone in a security-focused frame of mind from the very start. However, if you’re working with one of the many web application development teams who use an Agile development methodology like Scrum, it may be impractical to schedule training for this team between projects. If a “project” is only a one-week-long sprint, then there’s unlikely to be enough available time between the end of one sprint and the start of the next for training. In this case, a “just-in-time” training approach is probably more appropriate.

For example, if you know that the upcoming sprint is going to focus particularly heavily on data processing tasks, or maybe you have some new hires on the team who’ve never been trained on data security before, this is probably a good chance to get everyone refreshed on SQL injection techniques and defenses. Again, a day-long in-house training session from an expert consultant would be great if you can afford the money and time; but if not, then at least get everyone to read up on some of the relevant free online training materials such as those available from OWASP or the Web Application Security Consortium (WASC).

Threat Modeling

For smaller projects, developers might be able to get away with writing the application directly from a requirements document or, less formally, a requirement review meeting. But for projects of any significant size, the project developers and architects create design models of the application before they start writing code. Even though the new application or feature being developed only exists as an idea at this point in time, it’s still a great time to subject it to a security design review. Remember, the earlier you find potential problems, the better. If your design review finds a problem now and saves the development team 100 hours of rework later in the project cycle, the time spent on that review will have paid for itself many times over.

One of the best ways to review an application design for potential inherent security flaws is to threat model it. Threat modeling is the ultimate pessimist’s game. For every component in the system and every interaction between those components, you ask yourself: What could possibly go wrong here? How could someone break what we’re building? And most importantly of all, how can we prevent them from doing that?

There are many different approaches to threat modeling. You can take an asset-centric perspective (what do we have that’s worth defending?), an attacker-centric perspective (who might want to attack us, and how might they do it?), or a software-centric perspective (where are all the potential weaknesses within our application?). A complete review of any of these approaches is beyond the scope of this book, and would fill complete books (in fact, there are complete books on these topics). However, much of the existing guidance and documentation around threat modeling is aimed at helping you to threat model thick-client “box products” and not web applications. The same threat modeling principles apply to both, but you’ll want to apply a slightly different emphasis to focus on certain types of threats. (The same also goes for cloud applications, which often have the hybrid functionality of both desktop applications and web applications.)

For box products, almost any update that needs to be made after the product ships is a major production. A patch will need to be created, tested, and deployed to the users. Per our discussion on vulnerability costs earlier in the chapter, the average web application vulnerability might cost $4,000 to fix, which is not trivial. But fixing a similar vulnerability in a box product might cost orders of magnitude more. Because of this, threat modeling guidance understandably tries to encourage the modeler to be as thorough as possible. However, for web applications, it’s more worthwhile to concentrate your attention on identifying those problems that would take a significant amount of rework to fix.

IMHO

The real value of threat modeling web applications comes in finding design-level problems that can’t be found by any automated analysis tool or prevented with any secure coding library. For example, it’s probably not a great use of your time to identify reflected cross-site scripting threats on your web pages during a threat modeling exercise. On the other hand, if you’re adding some unique functionality to your application—maybe you want to pay your users a small bonus every time they refer a friend to sign up for your site—then it’s definitely worthwhile to threat model some abuse case scenarios around that functionality. (What if someone registers 100 accounts on Gmail and refers all of these accounts as friends?) Again, I recommend that you focus more on deeper issues rather than on garden-variety implementation-level problems.

The Microsoft SDL Threat Modeling Tool

Microsoft has made threat modeling a mandatory activity, required for all product teams as part of its Security Development Lifecycle (SDL) process. Early on, Microsoft discovered that sitting teams of software engineers and architects in a room and telling them to “think like attackers” was doomed to failure. Developers are builders; they’re not breakers. Without training and knowledge of the application security space, there’s no way that a developer would be able to accurately identify potential threats and mitigations for those threats. It would be like asking them to “think like a surgeon” and then lying down to let them take your appendix out. Probably not a wise move!

On the other hand, while Microsoft does employ a large number of very highly trained security professionals, they can’t afford to have those security experts sit down and build every team’s threat models themselves. There are just too many products, with too many developers, spread out over too wide a geographic area to make this approach feasible.

To escape being caught between the “rock” of having developers untrained in security doing the threat modeling and the “hard place” of not being able to send security experts all over the world, Microsoft developed a threat modeling tool, the SDL Threat Modeling Tool, with much of the knowledge of how to build effective threat models built into it.

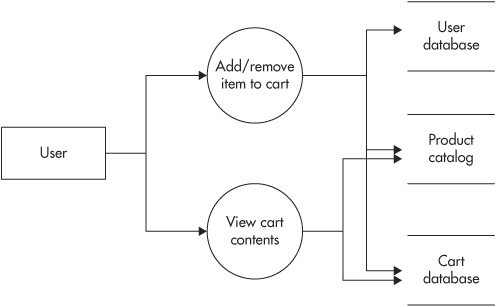

To use the SDL Threat Modeling Tool, you start by creating a high-level representation of your architecture design in data flow diagram (DFD) format. You represent processes in your application with circles, external entities with rectangles, datastores with parallel lines, and connections between components with arrows. Figure 9-3 shows an example of a simple, high-level data flow diagram for a shopping cart application.

Figure 9-3 A sample data flow diagram for an online shopping cart

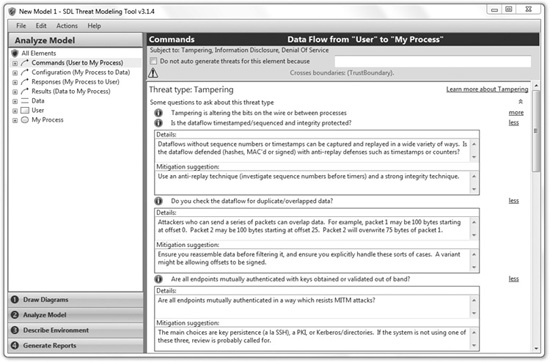

Once you’ve finished creating your DFD, the SDL Threat Modeling Tool will automatically generate some high-level potential threats for the elements you’ve modeled. These threats are categorized according to the STRIDE classification: spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege. For example, if you’ve created a datastore object in your DFD, the tool might prompt you to consider the potential information disclosure effects of any undo or recovery functionality. You can see an example of this in Figure 9-4.

Figure 9-4 The SDL Threat Modeling Tool identifies potential threats based on your DFD.
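To make the idea of generating threats from DFD elements more concrete, here is a minimal Python sketch. It is not the SDL Threat Modeling Tool's actual logic: the mapping from element type to STRIDE categories is a simplified assumption, and the element names are hypothetical stand-ins for a shopping cart diagram.

```python
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


# Assumption: a simplified mapping of DFD element types to the STRIDE
# categories worth asking about. A real tool applies far richer rules.
APPLICABLE = {
    "external_entity": [Stride.SPOOFING, Stride.REPUDIATION],
    "process": list(Stride),
    "datastore": [
        Stride.TAMPERING,
        Stride.INFORMATION_DISCLOSURE,
        Stride.DENIAL_OF_SERVICE,
    ],
    "data_flow": [
        Stride.TAMPERING,
        Stride.INFORMATION_DISCLOSURE,
        Stride.DENIAL_OF_SERVICE,
    ],
}


def candidate_threats(elements):
    """Return one prompt per (element, applicable STRIDE category) pair."""
    prompts = []
    for name, kind in elements:
        for category in APPLICABLE[kind]:
            prompts.append(f"{name}: consider {category.value.lower()}")
    return prompts


# Hypothetical elements from a shopping cart data flow diagram.
SHOPPING_CART = [
    ("Customer", "external_entity"),
    ("Web application", "process"),
    ("Orders database", "datastore"),
]

if __name__ == "__main__":
    for prompt in candidate_threats(SHOPPING_CART):
        print(prompt)
```

Each prompt is only a question for the team to discuss; deciding whether the threat is real, and what to do about it, is still a human job.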

Having this security knowledge built into a tool is a great way to get started with threat modeling. You don’t have to be a security expert in order to create useful threat models; you just have to be able to draw a data flow diagram of your application. The SDL Threat Modeling Tool is available for free download from microsoft.com (http://www.microsoft.com/security/sdl/adopt/threatmodeling.aspx).

The SDL Threat Modeling Tool requires both a fairly recent version of Windows (Vista, Windows 7, Windows Server 2003, or Windows Server 2008) and Visio 2007 or 2010.

Elevation of Privilege: A Threat Modeling Card Game

While the SDL Threat Modeling Tool is an effective way for people with little or no security expertise to threat model their applications, there’s actually an even easier “tool” to use that’s also put out by the Microsoft SDL team. At the annual RSA security conference in 2010, Microsoft released “Elevation of Privilege,” a threat modeling card game! The game is played with a literal deck of special Elevation of Privilege cards, with suits based on the STRIDE threat categories. For example, you can see the Queen of Information Disclosure card in Figure 9-5.

Figure 9-5 The Elevation of Privilege card for the Queen of Information Disclosure (Image licensed under the Creative Commons Attribution 3.0 United States License; to view the full content of this license, visit http://creativecommons.org/licenses/by/3.0/us/)

Elevation of Privilege gameplay is loosely based on the game Spades. Players are dealt cards, which they then play in rounds of tricks. Every player who can identify a potential threat in their application corresponding to the card played earns a point, and the player who wins the trick with the best card also earns a bonus point. (It’s up to teams to decide what the prize is for the winner—something small like a gift certificate for lunch at a local restaurant might be a good idea.)

Elevation of Privilege is a fun and very easy way to get started threat modeling, and just like the SDL Threat Modeling Tool, it too is freely available. Microsoft has been giving away card decks at security conferences such as RSA and BlackHat, but if you can’t make it out to one of those (or if they run out of card decks), you can download a PDF of the deck from microsoft.com (http://www.microsoft.com/security/sdl/adopt/eop.aspx) and print your own.

However you create your threat model, whether you use a specially designed software program like the Microsoft SDL Threat Modeling Tool, play an interactive game like Elevation of Privilege, or just get your team together in a room and draw diagrams on a whiteboard, the entire exercise of identifying threats is pointless unless you also identify mitigations for those threats and record those mitigations to ensure that the development team implements them during the coding phase of the project.

For example, a mitigation to the threat of an attacker gaining access to the application database might be to encrypt all the columns in the database containing users’ sensitive personal information. This task needs to be recorded and assigned to the appropriate person on the development team so that it’s not forgotten after the threat modeling session is complete. Even if the mitigation that you decide on for a threat is just to accept the associated risk (for example, you might decide that the performance degradation from encrypting the sensitive information is too great and the possibility of an attacker gaining access is too small to justify encrypting the data), you should still record this decision and your rationale so you can refer to it later if need be.
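One lightweight way to keep these decisions from getting lost is to record each threat in a structured form that can be checked automatically. The sketch below is only an illustration; the `ThreatRecord` fields and the validation rules are our own assumptions, not part of any particular threat modeling tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    MITIGATE = "mitigate"
    ACCEPT_RISK = "accept risk"


@dataclass
class ThreatRecord:
    """One threat from a threat modeling session (hypothetical schema)."""
    threat: str
    decision: Decision
    action: str = ""
    owner: Optional[str] = None
    rationale: str = ""

    def problems(self) -> list:
        """List anything that would let this decision be forgotten later."""
        issues = []
        if self.decision is Decision.MITIGATE:
            if not self.action:
                issues.append("mitigation has no recorded action")
            if not self.owner:
                issues.append("mitigation has no owner")
        if self.decision is Decision.ACCEPT_RISK and not self.rationale:
            issues.append("accepted risk has no recorded rationale")
        return issues


# The database example from the text, once as a mitigation, once as accepted risk.
encrypt_columns = ThreatRecord(
    threat="Attacker gains access to the application database",
    decision=Decision.MITIGATE,
    action="Encrypt columns holding users' sensitive personal information",
    owner="database developer",  # hypothetical assignee
)
accept_without_reason = ThreatRecord(
    threat="Attacker gains access to the application database",
    decision=Decision.ACCEPT_RISK,
)
```

Here `encrypt_columns.problems()` returns an empty list, while `accept_without_reason.problems()` reports the missing rationale, which is exactly the gap you want to catch before the session ends.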

Secure Coding Libraries

At last, we get to the fun part of developing software: actually writing the code! This is where all of your security training pays off—the developers all understand the dangers involved in writing insecure code and are educated in the ways in which they can prevent vulnerabilities from getting into the application. And hopefully all, or at least the vast majority, of design-level security vulnerabilities were properly identified during your threat modeling exercises, and appropriate mitigations were added to the developer task list. You’re in great shape to start writing a secure web application, but you can still further decrease the odds that any vulnerabilities will get through by mandating and standardizing on secure coding libraries.

We’ve talked about the importance of using secure coding libraries throughout this book. Sometimes it’s just tricky to add functionality to an application in a way that doesn’t introduce security vulnerabilities. Wiki and message board features are great examples. It’d be nice to allow users to write wiki articles and post messages to blogs in HTML, but if you do this, then the risk of introducing cross-site scripting vulnerabilities into the application goes up dramatically. I’ve seen a lot of people try to solve this problem by filtering out certain HTML elements and attributes in order to create a list of safe tags. But there are many of these unsafe elements and attributes, and many ways to encode them. If you miss just one variant, you’ll still be vulnerable.
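To see why the "filter out the bad tags" approach fails, consider this deliberately naive Python sketch. The filter and the sample inputs are our own illustration, not code from any real library; the point is only that a filter that removes `script` tags leaves many other attack variants untouched.

```python
import re

# A naive denylist: remove anything that looks like a <script> or </script> tag.
SCRIPT_TAG = re.compile(r"<\s*/?\s*script[^>]*>", re.IGNORECASE)


def naive_filter(html: str) -> str:
    """Strip script tags in a single pass (intentionally inadequate)."""
    return SCRIPT_TAG.sub("", html)


SAMPLES = [
    # Caught: the obvious case.
    "<script>alert(1)</script>",
    # Missed: script runs from an event handler attribute, no script tag needed.
    "<img src=x onerror=alert(1)>",
    # Missed: script runs from a javascript: URL.
    '<a href="javascript:alert(1)">click</a>',
    # Missed: removing the inner tags reassembles a working outer tag.
    "<scr<script>ipt>alert(1)</scr<script>ipt>",
]

if __name__ == "__main__":
    for sample in SAMPLES:
        print(repr(sample), "->", repr(naive_filter(sample)))
```

Only the first sample is neutralized. The last one is the nastiest: the filter's own output is a complete script element.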

Cryptography is another great example. Any security professional will tell you that the first rule of cryptography is never to write your own cryptographic algorithms. And just as with HTML element filtering, I’ve seen a lot of people try to develop custom encryption algorithms. Usually they base these algorithms on some combination of bit-shifting and XOR, with maybe some rot13 thrown in for good measure. All of these amateur home-rolled algorithms have been trivially easy to defeat. Unless you know exactly what you’re doing—that is, you have a degree in cryptology and years of experience in the field—don’t try to write new encryption routines; leave it to the professionals.

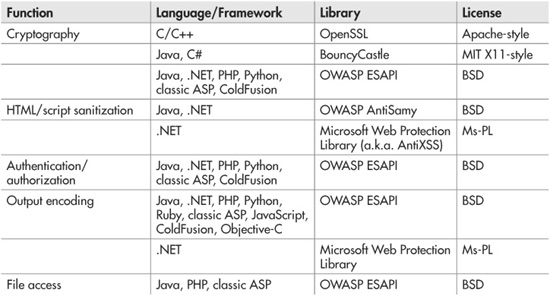

The good news for both XSS defense and cryptography (and many other application security issues) is that other people have already solved these problems and have shared their solutions in the form of secure coding libraries. Want to sanitize user-inputted HTML to remove potential XSS attacks? Use the OWASP AntiSamy library or the Microsoft Anti-XSS library. Want to encrypt data so that only people who know a password can read it? Use the standard PBKDF2 (Password-Based Key Derivation Function 2) and AES (Advanced Encryption Standard) algorithms implemented in the OpenSSL library. Depending on what language and platform you’re programming for, these functions may even be built directly into the programming framework you’re already using. Table 9-1 lists just some of the available secure coding libraries for common application functions and frameworks.

Table 9-1 Some Common Secure Coding Libraries by Function and Framework
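As an example of a function that is already built into a framework, Python's standard library exposes PBKDF2 through `hashlib.pbkdf2_hmac`. The sketch below covers only the key derivation step; the iteration count, salt size, and key length are assumptions you should tune for your own environment, and the AES encryption itself should come from a vetted cryptography library rather than hand-written code.

```python
import hashlib
import os

# Assumptions for illustration; choose values appropriate to your hardware
# and current guidance.
ITERATIONS = 600_000
SALT_BYTES = 16
KEY_BYTES = 32  # 256 bits, a suitable size for an AES-256 key


def new_salt() -> bytes:
    """Generate a random salt; store it alongside the encrypted data."""
    return os.urandom(SALT_BYTES)


def derive_key(password: str, salt: bytes, iterations: int = ITERATIONS) -> bytes:
    """Derive a fixed-length key from a password using PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256",
        password.encode("utf-8"),
        salt,
        iterations,
        dklen=KEY_BYTES,
    )
```

A caller would generate a salt once with `new_salt()`, call `derive_key(password, salt)`, and hand the resulting 32 bytes to the AES implementation of a trusted library. The same password and salt always produce the same key, which is what lets the data be decrypted later.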

Sometimes it’s not so much that the vulnerability you’re concerned about is especially difficult to defend against; sometimes it’s just that there’s no room for error. SQL injection defense is a good example of this scenario. It’s not difficult to defend against SQL injection attacks. All you have to do is to parameterize your database queries. But you have to do this consistently, 100 percent of the time. If you miss even one parameter on one query, you may still be vulnerable. There’s a good chance an attacker will find this hole, and you don’t get second chances with SQL injection vulnerabilities: once your data’s been stolen, it can’t be “un-stolen.”
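The difference between a concatenated query and a parameterized one is easy to show with Python's built-in `sqlite3` module. The table, data, and function names here are hypothetical; the sketch just contrasts the two styles against the same input.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def find_user_unsafe(conn, name):
    """Vulnerable: user input is pasted directly into the SQL text."""
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    """Parameterized: the input is passed as data and never parsed as SQL."""
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


# A classic injection payload: closes the string and adds an always-true clause.
PAYLOAD = "nobody' OR '1'='1"

if __name__ == "__main__":
    print("unsafe:", find_user_unsafe(conn, PAYLOAD))  # every row leaks
    print("safe:  ", find_user_safe(conn, PAYLOAD))    # no rows match
```

With the payload above, the concatenated query returns every row in the table, while the parameterized query simply looks for a user literally named `nobody' OR '1'='1` and finds none.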

Again, standardizing on secure coding libraries can help. It doesn’t change the fact that you still have to review your code, but the review becomes a simple check that certain API calls are absent. For example, if you’re using C# or any other .NET-based language, you could set up an automated test to run as part of every build that checks for any instantiation of framework database classes such as System.Data.SqlClient.SqlCommand. (Microsoft’s free .NET static analysis tool FxCop would be excellent for this purpose.) If the test finds that any code is directly calling these classes, then you know that someone forgot to use the secure database access library, and you can flag that code as a bug.

Checking the code in this manner—just looking to see whether something is used or not used—is much easier and much less prone to error than testing to see whether it was used correctly. The outcome of the test is much more “black or white” than “shades of gray.”
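A "used or not used" check can be as simple as a text search run during the build. The following Python sketch is a rough stand-in for what a proper analyzer such as FxCop would do: it scans C# source text for direct `SqlCommand` instantiation. The regular expression, the function names, and the sample source are all our own assumptions, and a text search like this will miss aliased or reflected usage.

```python
import re
from pathlib import Path

# Matches "new SqlCommand(" with or without the full namespace.
FORBIDDEN = re.compile(r"\bnew\s+(?:System\.Data\.SqlClient\.)?SqlCommand\s*\(")


def forbidden_lines(source: str) -> list:
    """Return the 1-based line numbers that instantiate SqlCommand directly."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if FORBIDDEN.search(line)
    ]


def scan_tree(root: Path) -> dict:
    """Map each offending .cs file under root to its offending line numbers."""
    results = {}
    for path in sorted(root.rglob("*.cs")):
        hits = forbidden_lines(path.read_text(encoding="utf-8"))
        if hits:
            results[str(path)] = hits
    return results


# Hypothetical C# snippet: line 2 bypasses the approved data access library.
SAMPLE = """var repo = new OrderRepository();
var cmd = new SqlCommand(sql, connection);
var other = new System.Data.SqlClient.SqlCommand(sql);
"""
```

For `SAMPLE`, `forbidden_lines` reports lines 2 and 3. A build script could call `scan_tree` on the source directory and fail the build whenever the result is non-empty, excluding only the one module that is allowed to talk to the database directly.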

Code Review

One security lifecycle task that might surprise you is manual code review—not because of its presence, but rather because of its absence. It’s true that many organizations do mandate peer review of all code that gets checked into the source control repository. Until another developer or a designated security reviewer examines the new code and approves it as being free from vulnerabilities, that code won’t be accepted as part of the build. However, not every security-mature organization does this, and you should probably consider manual code review as an optional “bonus” activity rather than a core component of your security process.

The reason for this is that many teams find that manual code review generally gives very poor results considering the effort that gets put into it. Manual code review is a pretty tedious process, and human beings (especially highly creative ones like computer programmers) aren’t good at performing tedious tasks for extended periods of time. Reading hundreds of lines of code looking for security vulnerabilities is like trying to find a needle in a haystack, except that you don’t know how many needles are even there. There could be 1, or 20, or none.

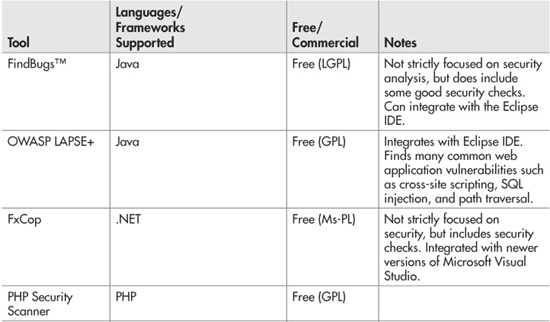

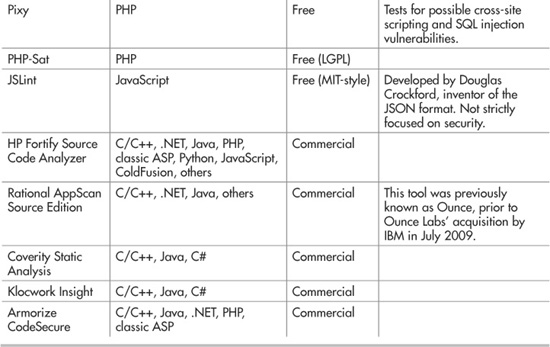

Instead of manually reviewing every line of code in your application for potential security issues, a better way to use your time is to run an automated static analysis tool as part of your development process. These tools are designed to relieve you of the tedious grunt work of poring over source code by hand. Unlike human beings, analysis tools don’t get tired or bored or hungry and will happily run for hours or days on end if need be. Table 9-2 lists some popular static analysis tools, both open-source and commercial.

Table 9-2 Some Static Analysis Tools by Language and License

Note

When we say “static analysis tools,” we mean to include both static source analysis tools and static binary analysis tools in this category. Both of these types of tools work similarly: Source analyzers work directly off the application’s raw source code, while binary analyzers work off the compiled application binaries. The key is that the analysis tool doesn’t actually run the application (or watch while the application runs). This is in contrast with dynamic analysis tools, which do work by executing the application.

You can fire up a static analysis tool and run it against your code on an as-needed basis, for instance, if you’ve just finished coding a particularly tricky or sensitive module and you want a “second opinion” on your work. But it’s far more effective to set up a recurring scheduled scan on a central server. Configure the analyzer to run every Saturday morning, or every night at midnight, and check the results when you get in. (As a nice bonus, some of these tools will also integrate with defect-tracking products like Bugzilla and will automatically create bug issues from any potential vulnerabilities they find in the code.)

The best solution of all is to integrate a static analyzer directly into your build process or source code repository. Every time a build is kicked off or code is checked in, the analyzer runs to test it for vulnerabilities. This way, problems are found almost immediately after they’re first created, and there’s very little chance there will be significant rework required to go back and make the necessary changes.

Even though static analysis tools remove a lot of the manual effort from the code review process, they can’t remove all of it to make the process completely “hands-off.” Historically, static analysis tools have been especially prone to reporting false positive results; that is, saying that there are defects when the code in question actually works correctly and securely. You or someone on your team will need to review the results to determine whether the reported issues are true positives (that is, real vulnerabilities) or just false positives. Over time, this triage process will become easier as you become more familiar with the tool and configure it more precisely to your application and its codebase. But especially when you first start using the tool, be sure to budget some extra time to analyze the analysis.
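One common way to keep triage manageable is to record the findings you have already judged to be false positives and have the build complain only about anything new. The Python sketch below assumes a hypothetical, tool-neutral `Finding` format; real analyzers each have their own report formats and suppression mechanisms, which you should prefer where they exist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A single analyzer result (hypothetical, tool-neutral format)."""
    rule: str
    file: str
    line: int


def new_findings(reported, triaged_false_positives):
    """Return reported findings that nobody has triaged yet."""
    known = set(triaged_false_positives)
    return [finding for finding in reported if finding not in known]


def build_should_fail(reported, triaged_false_positives) -> bool:
    """Fail the build only when there is something new to look at."""
    return bool(new_findings(reported, triaged_false_positives))


# Hypothetical data: one finding already triaged, one newly introduced.
BASELINE = [Finding("sql-injection", "Reports.cs", 88)]
TODAY = [
    Finding("sql-injection", "Reports.cs", 88),
    Finding("xss", "Profile.cs", 12),
]
```

With this data, `new_findings(TODAY, BASELINE)` contains only the `xss` finding. Matching on line numbers is brittle, since an unrelated edit can shift a triaged finding to a new line, so treat this as a starting point rather than a finished design.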

You do have one more option available to you for static analysis, which is to subscribe to a third-party source analysis service. With this model, you send your source (or your compiled binary, depending on the service) to an external security organization that runs the analysis tools and triages the results for you. There’s a considerable upside to this approach, which is that you spend less time analyzing tool results since the service is doing that work. Your team also won’t need to have the specialized security knowledge required to accurately determine which issues are false positives and which are true positives. The downside (besides the cost of the service) is that you have to send your source code off-site. For some organizations this is no problem at all, and for others it’s a deal-breaker. If you’re interested in working with one of these analysis services, you’ll have to decide for yourself which camp you fall into.

Security Testing

Although we did discourage the “penetrate-and-patch” approach to application security at the start of this chapter, security testing is a critical part of a holistic security program. It shouldn’t be the only part of your security program—there’s an old and very true saying that you can’t test security into an application—but it should still be there nonetheless. If you’re new to security, your first inclination will probably be to apply the same types of techniques to security testing that your team currently uses for functional testing. But following this approach is unlikely to succeed, for several different reasons.

First, the very nature of security testing is different from functional testing. When you test your features’ functionality, you’re generally checking for a positive result; you’re checking that the application does do something it’s supposed to. For example, you might test to be sure that a shopping cart total is updated correctly whenever a user adds an item to his shopping cart, and that sales tax is applied if appropriate. But when you test for security, you’re usually checking for a negative result; you’re checking that the application doesn’t do something it’s not supposed to. For example, you might test that an attacker can’t break into the application database through SQL injection. But this negative test covers a lot more ground than the positive functional test. There are a lot of ways that an attacker may be able to execute a SQL injection attack, and if you want to test this as thoroughly as possible, you’ll need some special tools to do it.
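The contrast between a positive test and a negative test shows up clearly in code. In this Python sketch, the `login` function, the table layout, and the payload list are all hypothetical. The positive test checks one expected behavior, while the negative test has to try many hostile inputs, and even then the list is nowhere near exhaustive.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with one known account."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (username TEXT, password_hash TEXT)")
    conn.execute(
        "INSERT INTO accounts VALUES (?, ?)", ("alice", "hash-of-alice-password")
    )
    return conn


def login(conn, username, password_hash):
    """Hypothetical login check using a parameterized query."""
    row = conn.execute(
        "SELECT 1 FROM accounts WHERE username = ? AND password_hash = ?",
        (username, password_hash),
    ).fetchone()
    return row is not None


# A tiny sample of injection strings; real tools try far more variants.
INJECTION_PAYLOADS = [
    "' OR '1'='1",
    "' OR 1=1 --",
    "alice' --",
    "'; DROP TABLE accounts; --",
]


def test_valid_login_succeeds():
    """Positive test: the application does what it is supposed to do."""
    assert login(make_db(), "alice", "hash-of-alice-password")


def test_injection_never_logs_in():
    """Negative test: no hostile input should ever be accepted."""
    conn = make_db()
    for payload in INJECTION_PAYLOADS:
        assert not login(conn, payload, payload)
        assert not login(conn, "alice", payload)
    # The table must also survive every attempt.
    assert conn.execute("SELECT COUNT(*) FROM accounts").fetchone()[0] == 1
```

Four payloads are enough to make the point but not enough to be thorough, which is why the specialized tools discussed next exist.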

We’ve said this several times throughout this book, but it bears repeating because it’s so important to the topic of web application security testing: hackers use more tools than just web browsers to attack your site. A web browser is really just a glorified user interface for sending and receiving HTTP messages, but browsers put limitations on the types and content of HTTP messages they can send. Attackers don’t like limitations. They will use any means they can to break into your site. This means they’ll not only poke around your site with a web browser, but also with automated black-box scanning tools and with HTTP proxy tools (such as the Fiddler tool that we discussed earlier in the database security chapter) that they can use to send any attack message they want. If you don’t want attackers to find vulnerabilities in your site before you do, you’re going to have to test your application with the same types of tools that they do.

Black-Box Scanning

A black-box web application scanner is a type of automated testing tool that detects vulnerabilities in a target application not by analyzing its source code, but rather by analyzing its HTTP responses, just as a real attacker would. These tools are called black-box scanners because, unlike a static analysis tool, they have no access to or knowledge of the application’s underlying source code: to the scanner, the application is just an unknown “black box.” Requests go in and responses come out, but what happens in the middle is a mystery.

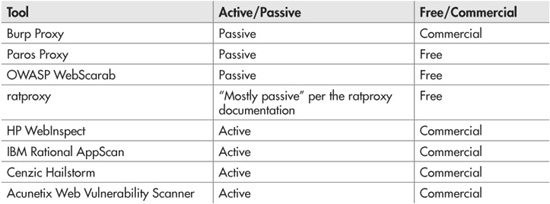

Some black-box scanners work as “active” scanners that automatically generate and send their own HTTP request attacks against the target application, and some work strictly as “passive” scanners that just watch HTTP traffic while a human tester interacts with the live application. Table 9-3 shows some of the more popular examples of both of these kinds of tools (both open-source and commercial).

Table 9-3 Some Black-Box Analysis Tools by Type (Active/Passive) and License

As always, there are pros and cons to both the active and passive approaches. Active scanners can be more thorough since they can make requests much faster than a human tester can, and since they often have security knowledge built in, they know how to construct SQL injection attacks and cross-site scripting attacks, where a person might not necessarily have this knowledge. On the other hand, it’s sometimes difficult for active scanners to be able to automatically traverse through complex application workflows such as multistep login forms. You also have to be more careful using an active scanner: there’s a chance its attacks could succeed, and you don’t want it to corrupt your application or bring it down.
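To give a feel for what a passive scanner does, the Python sketch below inspects the headers of a response it has merely observed and sends nothing of its own. The specific checks (cookie flags and one hardening header) are our own small sample chosen for illustration; real passive scanners apply many more rules.

```python
def passive_findings(response_headers):
    """Inspect observed response headers, given as (name, value) pairs."""
    findings = []
    header_names = {name.lower() for name, _ in response_headers}

    for name, value in response_headers:
        if name.lower() != "set-cookie":
            continue
        cookie_name = value.split("=", 1)[0].strip()
        # Everything after the first ';' is a cookie attribute.
        attributes = {part.strip().lower() for part in value.split(";")[1:]}
        if "httponly" not in attributes:
            findings.append(f"cookie {cookie_name!r} lacks HttpOnly")
        if "secure" not in attributes:
            findings.append(f"cookie {cookie_name!r} lacks Secure")

    if "x-content-type-options" not in header_names:
        findings.append("missing X-Content-Type-Options header")

    return findings


# Hypothetical headers captured while a tester browsed the application.
OBSERVED = [
    ("Content-Type", "text/html"),
    ("Set-Cookie", "session=abc123; Path=/; HttpOnly"),
]
```

For `OBSERVED`, the function reports that the `session` cookie lacks the `Secure` flag and that the `X-Content-Type-Options` header is missing. Because nothing is sent to the application, a check like this carries none of the corruption or downtime risk of an active scan, but it can only comment on the traffic the tester happened to generate.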

You might wonder why you’d want to bother with any kind of black-box scanner, active or passive, if you’re already using a white-box scanner (that is, a static analysis tool that does have access to the underlying application source code) earlier in the project lifecycle. The answer is that black-box and white-box scanners are both good at finding different types of vulnerabilities.

Static analysis tools generally work on one specific source code language or, at best, maybe a couple of different languages. If different modules of your application are written in different languages—a pretty common occurrence—then any modules written in a language that the scanner doesn’t understand won’t be analyzed. Black-box scanners don’t have this problem. Since black-box scanners don’t have any access to the application source, they don’t care what languages or frameworks you’re using. Whether you’re using just vanilla PHP (for example) or a combination of F#, Scala, and Go, a black-box scanner will happily analyze your application either way.

However, this strength of black-box analysis is also one of its biggest weaknesses. The downside of the black-box approach is that without access to the source code, it’s entirely possible for the scanner to miss analyzing large portions of the application. Say for example that your application has a page called “admin.php” that’s not linked to any other page on the site. (A page like this might be meant for organization insiders only, who would know the page is there without it having to be linked from anywhere else.)

There might be horrible security vulnerabilities on this page (not least of which being that someone could potentially access administrative-level functionality just by guessing that the page exists), but unless the black-box scanner happens to blindly check for that page, it’ll never even find the page, much less the vulnerabilities.
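The admin.php blind spot can be modeled in a few lines of Python. The site map and the list of guessed paths below are hypothetical; the sketch only shows why following links never reaches an unlinked page, while blindly probing common names can.

```python
from collections import deque

# Hypothetical site: each page maps to the pages it links to.
# Nothing links to /admin.php.
SITE = {
    "/index.php": ["/cart.php", "/login.php"],
    "/cart.php": ["/index.php"],
    "/login.php": [],
    "/admin.php": ["/index.php"],
}

# A short list of names a scanner might guess (an assumption for illustration).
COMMON_PATHS = ["/admin.php", "/backup.zip", "/test.php"]


def crawl(site, start):
    """Return every page reachable from start by following links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in site.get(page, []):
            if link in site and link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


def probe(site, guesses):
    """Return the guessed paths that actually exist on the site."""
    return [path for path in guesses if path in site]
```

Here `crawl(SITE, "/index.php")` finds three pages and never sees `/admin.php`, while `probe(SITE, COMMON_PATHS)` finds it on the first guess. An attacker can guess just as easily as a scanner can, so an unlinked page is not a protected page.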

Just as with source analysis, you can subscribe to black-box analysis services, where a team of third-party consultants will run a scanner, weed out any false positives, triage the results according to risk, and provide you with mitigation recommendations. These services can be well worth the money spent, but if the budget is simply not there, then you might want to investigate some of the free black-box scanning tools.

Security Incident Response Planning

Even though you may follow all of the development practices and recommendations that we’ve given here around embedding security into every phase of your development lifecycle, unfortunately it’s still not a guarantee that some very determined attacker won’t find a way to break your application. While you work to avoid getting hacked, you also have to make a plan as to what you’ll do if the worst does happen.

Some web development organizations, particularly those following Agile development methodologies, don’t like this advice. “You Ain’t Gonna Need It,” they say, quoting the YAGNI principle of waiting until you definitely need a feature or plan before starting to work on it. “Who knows if we’ll ever get attacked? Working on a plan now could end up just being wasted time. We’ll deal with this issue if it ever happens.” That’s fine—if you don’t mind getting your team together for an ad-hoc scrum meeting at 3:00 A.M. on Christmas morning. Attackers know that offices are closed for holidays, and they don’t care about your personal time. It’s actually advantageous for them to launch attacks at inconvenient times for you, since it’ll take you longer to respond. This is why you need to have a plan ahead of time, so that you can minimize the attacker’s window of opportunity.