Classifying and Prioritizing Threats

In a perfect world, we would tell you that all security vulnerabilities are equally serious. We would tell you that if there’s even the slightest chance of a single attacker being able to compromise a single user for even the smallest nuisance attack, that you should hold off the product release until every single possible vulnerability has been eliminated from the code. And if anyone ever does manage to find a vulnerability in your application, we would tell you to drop everything else you’re doing and go fix the problem.

But of course, we don’t live in a perfect world, and a hard-line approach to security like this is completely unrealistic: you’d never actually ship any code. You need a method to prioritize threats, to know which problems to spend the most time on, so that you can get the most benefit from the time you have. We’ll discuss several methods for this next, but first, since you can’t accurately prioritize a threat unless you can accurately describe it, we’ll discuss some popular ways of categorizing threats.

STRIDE

STRIDE is a threat classification system originally designed by Microsoft security engineers. STRIDE does not attempt to rank or prioritize vulnerabilities—we’ll look at a couple of systems that do this later in this chapter—instead, the purpose of STRIDE is only to classify vulnerabilities according to their potential effects. This is immensely useful information to have when threat modeling an application (as we’ll discuss in the chapter on secure development methodologies, later in this book), but first, let’s explain exactly what goes into STRIDE.

STRIDE is an acronym, standing for:

![]() Spoofing

Spoofing

![]() Tampering

Tampering

![]() Repudiation

Repudiation

![]() Information Disclosure

Information Disclosure

![]() Denial of Service

Denial of Service

![]() Elevation of Privilege

Elevation of Privilege

Spoofing vulnerabilities allow an attacker to claim to be someone they’re not, or in other words, to assume another user’s identity. For example, let’s say that you’re logged in to your bank account at www.bank.cxx. If an attacker could find a way to obtain your authentication token for the bank web site, maybe by exploiting a cross-site scripting vulnerability on the site or maybe just by “sniffing” unencrypted Wi-Fi traffic, then he could spoof the bank site by using your authentication credentials and claiming to be you.

Tampering vulnerabilities let an attacker change data that should only be readable to them (or in fact, not even readable to them). For instance, a SQL injection vulnerability in an electronics store web site might allow an attacker to tamper with the catalog prices. One-dollar laptops and plasma televisions might sound good to you and me, but to the site owners, it would be disastrous. And tampering threats can apply not just to data at rest, but data in transit as well. Again, an attacker eavesdropping on an unsecured wireless network could alter the contents of users’ requests going to web servers or the servers’ responses back to the users.

Repudiation vulnerabilities let the user deny that they ever performed a given action. Did you buy 100 shares of Company X at $100/share, only to watch its price slide down to $50 that same day? Or did you just get buyer’s remorse after ordering a PlayStation 3 the day before Sony announced the PlayStation 4? A repudiation vulnerability might let you cancel those transactions out and deny that they ever happened. If you think this sounds like a pretty good deal to you, also consider that you might be on the losing end of this vulnerability! Maybe you purchased those $100 shares of Company X and the price skyrocketed to $250, but now the seller denies ever having made the transaction.

Information disclosure vulnerabilities allow an attacker to read data that they’re not supposed to have access to. Information disclosure threats can come in many forms, which is not surprising when you stop to think about all the different places you can store data: databases obviously, but also file systems, XML documents, HTTP cookies, other browser-based storage mechanisms like the HTML5 localStorage and sessionStorage objects, and probably many other places as well. Any of these represent a potential target for an information disclosure attack. And just as with tampering threats, information disclosure threats can target data in transit, not just data at rest. An attacker may not be able to read cookies directly off his victim’s hard drive, but if he can read them as they’re sent across the network, that’s just as good to him.

Denial-of-service attacks are some of the oldest attacks against web applications. Put simply, denial-of-service (or DoS) attacks attempt to knock out a targeted application so that users can’t access it any more. Usually the way attackers go about this is to attempt to consume large amounts of some constrained server resource, such as network bandwidth, server memory, or disk storage space. DoS attackers can use unsophisticated brute-force methods—like enlisting a bunch of their friends (or a botnet) to all hit the target at the same time as part of a distributed denial-of-service (DDoS) attack—or they can use highly sophisticated and asymmetric methods like sending exponential expansion “bombs” to XML web services.

IMHO

In my opinion, the importance and impact of denial-of-service attacks are highly underestimated by many software development organizations. I’ve been in threat modeling sessions where teams spent hours struggling to identify and mitigate every possible information disclosure vulnerability edge case in the system, but they glossed over DoS threats in a matter of just a few minutes. Not to diminish the importance of information disclosure or any of the other STRIDE categories, but DoS is much more than just a nuisance attack. If customers can’t get to your business, then before long you’ll be out of business. One of the primary reasons that corporate security officers and corporate information officers express a fear of moving their operations to the cloud is that they won’t be able to access their data 100 percent of the time. A successful DoS attack could quickly confirm their fears, and in the long run could do a lot more damage to your organization’s reputation for trustworthiness (and subsequently its business) than a tampering or repudiation attack ever could.

The final STRIDE element, elevation of privilege, is generally considered to be the most serious type of all of the STRIDE categories. Elevation of privilege (EoP) vulnerabilities allow attackers to perform actions they shouldn’t normally be able to do. We talked earlier about spoofing vulnerabilities allowing attackers to impersonate other users—but imagine how much worse a spoofing vulnerability would be if it allowed an attacker to impersonate a site administrator. An elevation of privilege vulnerability like that could potentially put every other STRIDE category into play: the attacker with administrative rights could read and write sensitive site data, edit server logs to repudiate transactions, or damage the system in such a way that it would be unable to serve requests. This is why EoP is considered the king of threat categories; it opens the door to anything an attacker might want to do to harm your system.

![]() Note

Note

While all EoP vulnerabilities are serious, not all of them are created equal. Some EoP vulnerabilities might allow an attacker just to elevate privilege from an anonymous user to an authenticated user, or from an authenticated user to a “power user.”

When you use STRIDE to classify a vulnerability, also keep in mind that a single vulnerability may have multiple STRIDE effects. Again, as we just mentioned, any vulnerability with an elevation of privilege component is likely also subject to spoofing, tampering, and all of the other components. A SQL injection vulnerability could allow an attacker to both read sensitive data from a database (information disclosure) and write data to that database (tampering). If the attacker could use the injection vulnerability to execute the xp_cmdshell stored procedure, then EoP—and subsequently all of STRIDE—is also a possibility. (We’ll discuss this attack in more detail in Chapter 7.)

IIMF

As a more simplified alternative to STRIDE, you might want to consider classifying potential vulnerabilities according to the IIMF model: interception, interruption, modification, and fabrication. Interception is equivalent to the STRIDE category of information disclosure (an attacker can read data he’s not supposed to, either at rest or in transit) and interruption is equivalent to the STRIDE category of denial-of-service (an attacker can prevent legitimate users from being able to get to the system). Modification and fabrication are both subtypes of tampering: modification vulnerabilities allow an attacker to change existing data, and fabrication vulnerabilities allow an attacker to create his own forged data.

So IIMF covers the T, I, and D of STRIDE, but where does that leave spoofing, repudiation, and elevation of privilege? For repudiation, since these kind of attacks generally involve tampering with log files (in order to erase or disguise the fact that a transaction took place), even in a strict STRIDE perspective, repudiation attacks could be easily considered just a subtype of tampering. In terms of IIMF, repudiation attacks would be considered to be modification attacks: an attacker modifies the system log to erase the history of his actions.

As for spoofing and elevation of privilege, in some ways these two threats could be considered the same type of attack. Some security professionals and security analysis tools will refer to both spoofing and EoP as privilege escalation threats: spoofing vulnerabilities being classified as horizontal privilege escalations (where the attacker gains no extra rights but can assume the identity of another user of equal privileges), and EoP vulnerabilities being classified as vertical privilege escalations (where the attacker does gain extra rights, such as elevating from a standard to an administrative user account). Furthermore—not to get too philosophical about it—but both horizontal and vertical privilege escalations are just a means to some other end. It doesn’t really matter just that an attacker impersonates another user, or that he impersonates an administrator; it matters what he does once he obtains that access. Maybe he’ll use that access to read confidential data or to place fraudulent orders into the system, but those could be called interception and fabrication attacks. Again, you probably shouldn’t worry too much about this fairly academic distinction. Whether you prefer the simplicity of IIMF or the specificity of STRIDE, either approach will serve you well.

CIA

A closely related concept to IIMF is CIA: not the Central Intelligence Agency (or the Culinary Institute of America, for that matter), but rather the triad of confidentiality, integrity, and availability. Where interruption, interception, modification, and fabrication are types of threats, confidentiality, integrity, and availability are the aspects of the system that we want to protect. In other words, CIA are the traits we want the system to have, and IIMF are the ways attackers break CIA.

A confidential system is one where secret or sensitive data is successfully kept out of the hands of unauthorized users. For example, you probably consider your home address to be a sensitive piece of data—maybe not as sensitive as your bank account number or your credit card number, but you probably wouldn’t want your address published all over the Internet for anyone to see. You’ll give it to a shopping web site so that they know where to send your purchases, but you expect that they’ll keep it away from people who don’t need to know it. This doesn’t mean that they’ll keep it away from everyone—the shipping company will need it, for example—but the web site’s merchandise vendors don’t need it, and the hackers in Russia certainly don’t need it either. Interception is the IIMF method these attackers will use to gain access to your secret data and break the site’s confidentiality pledge (even if that’s only a tacit pledge).

If confidentiality is the ability to keep unauthorized users from reading data, integrity is the ability to keep unauthorized users from writing data. “Writing data” here includes both changing existing data (which would be a modification attack) and creating new data wholesale (which would be a fabrication attack).

Finally, an available system is one that’s there when you need it and want it. Availability means the system is up and running and handling requests in a reasonable amount of time, and is not vulnerable to an interruption (denial-of-service) attack. As we said earlier, the importance of availability cannot be overstated. Put yourself in the shoes of a CSO, CIO, or CTO whose business relies on a third-party Software-as-a-Service (SaaS) web application or cloud service. (In fact, many readers probably won’t have to use their imagination at all for this!) It takes a great deal of faith to give up direct control of your business process and your data and let an outside organization manage it for you, even when you know that they can do it better and cheaper than you can do it yourself. It’s a little like the difference between driving in a car and flying in an airplane. You’re statistically much more likely to be involved in an accident while driving from your house to the airport than you are while flying across the country, but people get much more nervous about the flight than the drive. You could make a good case that this is due to the severity of the risks involved, but (in our honest opinion) it’s also about the element of relinquishing control.

Beyond confidentiality, integrity, and availability, some people also add authenticity and nonrepudiation as high-level security goals (CIA-AN). Authenticity is the ability of the system to correctly identify who is using it, to make sure that users (and other processes) are who they say they are.

![]() Note

Note

An “authentic” user (or system)—one where the application has correctly identified who is using it—is not necessarily the same thing as an “authorized” user/system—one who has the permission to do the things he’s trying to do. Again, authentication and authorization are extremely important topics in web application security, and each of them gets its own chapter later in this book.

Lastly, nonrepudiation is the ability of the system to ensure that a user cannot deny an action once he’s performed it. Nonrepudiation controls are the solution to the repudiation attacks (such as when a user denies that he ever made a purchase or a stock trade) that we discussed earlier in the STRIDE section.

Common Weakness Enumeration (CWE)

The Common Weakness Enumeration (or CWE) is a list of general types of software vulnerabilities, such as:

![]() SQL injection (CWE-89)

SQL injection (CWE-89)

![]() Buffer overflow (CWE-120)

Buffer overflow (CWE-120)

![]() Missing encryption of sensitive data (CWE-311)

Missing encryption of sensitive data (CWE-311)

![]() Cross-site request forgery (CWE-352)

Cross-site request forgery (CWE-352)

![]() Use of a broken or risky cryptographic algorithm (CWE-327)

Use of a broken or risky cryptographic algorithm (CWE-327)

![]() Integer overflow (CWE-190)

Integer overflow (CWE-190)

The CWE list (maintained by the MITRE Corporation) is more specific than the general concepts of STRIDE or IIMF, and is more akin to the OWASP Top Ten list we discussed in the opening chapter on web application security concepts. In fact, MITRE (in cooperation with the SANS Institute) publishes an annual list of the top 25 most dangerous CWE issues.

One helpful aspect of CWE is that it serves as a common, vendor-neutral taxonomy for security weaknesses. A security consultant or analysis tool can report that the web page www.bank.cxx/login.jsp is vulnerable to CWE-759, and everyone understands that this means that the page uses a one-way cryptographic hash function without applying a proper salt value. Furthermore, the CWE web site (cwe.mitre.org) also contains a wealth of information on how to identify and mitigate the CWE issues as well.

Note that CWE should not be confused with CVE, or Common Vulnerabilities and Exposures, which is another list of security issues maintained by the MITRE Corporation. CVEs are more specific still, representing specific vulnerabilities in specific products. For example, the most recent vulnerability in the CVE database as of this writing is CVE-2011-2883, which is the 2,883rd vulnerability discovered in 2011. CVE-2011-2883 is a vulnerability in the ActiveX control nsepa.ocx in Citrix Access Gateway Enterprise Edition that can allow man-in-the-middle attackers to execute arbitrary code.

DREAD

Like STRIDE, DREAD is another system originally developed by Microsoft security engineers during the “security push”—a special security-focused development milestone phase—for Microsoft Visual Studio .NET. However, where STRIDE is meant to classify potential threats, DREAD is meant to rank them or score them according to their potential risk. DREAD scores are composed of five separate subscores, one for each letter of D-R-E-A-D:

![]() Damage potential

Damage potential

![]() Reproducibility (or Reliability)

Reproducibility (or Reliability)

![]() Exploitability

Exploitability

![]() Affected users

Affected users

![]() Discoverability

Discoverability

The damage potential component of the DREAD score is pretty straightforward: If an attacker was able to pull off this attack, just how badly would it hurt you? If it’s just a minor nuisance attack, say maybe it just slowed your site’s response time by one-half of one percent, then the damage potential for that attack would be ranked as the lowest score, one out of ten. But if it’s an absolutely devastating attack, for example if an attacker could extract all of the personal details and credit card numbers of all of your application’s users, then the damage potential would be ranked very high, say nine or ten out of ten.

The reproducibility (or reliability) score measures how consistently an attacker would be able to exploit the vulnerability once he’s found it. If it works every time without fail, that’s a ten. If it only randomly works one time out of 100 (or one time out of 256, as might be the case for a buffer overflow attack against an application using a randomized address space layout), then the reproducibility score might be only one or two.

Exploitability refers to the ease with which the attack can be executed: how many virtual “hoops” would an attacker have to jump through to get his attack to work? For an attack requiring only a “script kiddie” level of sophistication, the exploitability score would be a ten. For an attack that requires a successful social engineering exploit of an administrative user within a five-minute timeframe of the time the attack was launched, the exploitability score would be much lower.

The affected users score is another pretty straightforward measure: the more users that could be impacted by the attack, the higher the score. For example, a denial-of-service attack that takes down the entire web site for every user would have a very high affected-users score. However, if the attack only affected the site’s login logic (and therefore would only affect registered and authenticated users), then the affected-users score would be lower. Now, it’s likely that the registered/authenticated users are the ones that you care most about providing access to, but presumably you would account for this in the damage potential metric of the DREAD score.

Finally, the second “D” in DREAD is for the discoverability score—in other words, given that a vulnerability exists in the application, how likely is it that an attacker could actually find it? Glaringly obvious vulnerabilities like login credentials or database connection strings left in HTML page code would score high, whereas something more obscure, like an LDAP injection vulnerability on a web service method parameter, would score low.

Once you’ve established a score for each of the DREAD parameters for a given vulnerability, you add each of the individual parameter scores together and then divide by five to get an overall average DREAD rating. It’s a simple system in principle, but unfortunately, it’s not very useful in actual practice.

One problem with DREAD is that all of the factors are weighted equally. An ankle-biter attack rated with a damage potential of one but all other factors of ten has a DREAD score of 8.2 (41/5). But if we then look at another vulnerability with a damage potential of ten and a discoverability of one—maybe an attack that reveals the bank account numbers of every user in the system, if you know exactly how to execute it—then that comes out to the exact same DREAD score of 8.2. This is not a great way to evaluate risk; most people would probably agree that the high-damage attack is a bigger threat than the high-discoverability attack.

And speaking of discoverability, another common (and valid) criticism of DREAD is the fact that discoverability is even a factor for consideration. Security is the ultimate pessimist’s field: there’s a good argument to be made that you should always assume the worst is going to happen. And in fact, when you score each of the other DREAD parameters, you do assume the worst. You don’t rate damage potential based on the likely effects of the attack; you rate damage potential based on the worst possible effects of the attack. So why hedge your bets with a discoverability parameter?

The worst aspect of DREAD, though, is that each of the DREAD component ratings are totally subjective. I might look at a potential threat and rate it with a damage potential score of eight, but you might look at the exact same threat and rate its damage potential as two. It’s not that one of us is right and the other is wrong—it’s that it’s totally a matter of opinion, as if we were judging contestants on Dancing with the Stars instead of triaging security vulnerabilities.

And remember that rating risks is not just an intellectual exercise. Suppose that we were using DREAD scores to prioritize which threats we would address during the current development cycle, and which would have to wait until the next release six months from now. Depending on which one of us first identified and first classified the threat, it might get fixed in time or it might not.

Because of these shortcomings, DREAD has fallen out of favor even at Microsoft. We’ve included it here because you’re likely to read about it in older security books or in online documentation, but we wouldn’t recommend that you use it yourself.

Common Vulnerability Scoring System (CVSS)

A more commonly used metric for rating vulnerabilities is the Common Vulnerability Scoring System, or CVSS. CVSS is an open standard, originally created by a consortium of software vendors and nonprofit security organizations, including:

![]() Carnegie Mellon University’s Computer Emergency Response Team Coordination Center (CERT/CC)

Carnegie Mellon University’s Computer Emergency Response Team Coordination Center (CERT/CC)

![]() Cisco

Cisco

![]() U.S. Department of Homeland Security (DHS)/MITRE

U.S. Department of Homeland Security (DHS)/MITRE

![]() IBM Internet Security Systems

IBM Internet Security Systems

![]() Microsoft

Microsoft

![]() Qualys

Qualys

![]() Symantec

Symantec

![]() Note

Note

CVSS should not be confused with either the Common Vulnerabilities and Exposures (CVE) or Common Weakness Enumeration (CWE) classification and identification systems that we discussed earlier in this chapter.

CVSS is currently maintained by the Forum of Incident Response and Security Teams (FIRST). Like DREAD, CVSS scores go to ten (CVSS scores actually start at zero, not one as DREAD does), which is the most serious rating. But the components that make up a CVSS score are much more thorough and objective than those that go into a DREAD score.

At the highest level, a CVSS score is based on three separate parts. The first and most heavily weighted part is a “base equation” score that reflects the inherent characteristics of the vulnerability. These inherent characteristics include objective criteria such as:

![]() Does the attack require local access, or can it be performed across a network?

Does the attack require local access, or can it be performed across a network?

![]() Does the attack require authentication, or can it be performed anonymously?

Does the attack require authentication, or can it be performed anonymously?

![]() Is there any impact to the confidentiality of a potential target system? If so, is it a partial impact (for example, the attacker could gain access to a certain table in a database) or is it complete (the entire file system of the targeted server could be exposed)?

Is there any impact to the confidentiality of a potential target system? If so, is it a partial impact (for example, the attacker could gain access to a certain table in a database) or is it complete (the entire file system of the targeted server could be exposed)?

![]() Is there any impact to the integrity of a potential target system? If so, is it partial (an attacker could alter some system files or database rows) or complete (an attacker could write any arbitrary data to any file on the target)?

Is there any impact to the integrity of a potential target system? If so, is it partial (an attacker could alter some system files or database rows) or complete (an attacker could write any arbitrary data to any file on the target)?

![]() Is there any impact to the availability of a potential target system? If so, is it partial (somewhat reduced response time) or complete (the system is totally unavailable to all users)?

Is there any impact to the availability of a potential target system? If so, is it partial (somewhat reduced response time) or complete (the system is totally unavailable to all users)?

The answers to these questions are answered more objectively than setting DREAD ratings; if ten different people were handed the same issue and asked to classify it according to the CVSS base rating, they’d probably come up with identical answers or at least very closely agreeing answers.

For many organizations, the CVSS base rating score will be sufficient to appropriately triage a potential vulnerability. However, if you want, you can extend the base rating by applying a temporal score modification (characteristics of a vulnerability that may change over time) and another additional environmental score modification (characteristics of a vulnerability that are specific to your particular organization’s environment).

The questions that determine the temporal score are also objectively answered questions, but the answers to the questions can change as attackers refine their attacks and defenders refine their defenses:

![]() Is there a known exploit for the vulnerability? If so, is it a proof-of-concept exploit or an actual functional exploit? If functional, is it actively being delivered in the wild right now?

Is there a known exploit for the vulnerability? If so, is it a proof-of-concept exploit or an actual functional exploit? If functional, is it actively being delivered in the wild right now?

![]() Does the vendor have a fix available? If not, has a third party published a fix or other workaround?

Does the vendor have a fix available? If not, has a third party published a fix or other workaround?

![]() Has the vendor confirmed that the vulnerability exists, or is it just a rumor on a blog or other “underground” source?

Has the vendor confirmed that the vulnerability exists, or is it just a rumor on a blog or other “underground” source?

Again, these are straightforward questions to answer and everyone’s answers should agree, but a vulnerability’s temporal score on Monday may be dramatically different from its temporal score that following Friday—especially if there’s a big security conference going on that week!

Finally, you can use the environment score questions (also optional, like the temporal score) to adjust a vulnerability’s overall CVSS score to be more specific to your organization and your system setup. The environmental metrics include questions like:

![]() Could there be any loss of revenue for the organization as a result of a successful exploit of this vulnerability? Could there be any physical or property damage? Could there be loss of life? (While this may seem extreme, it might actually be possible for some systems such as medical monitors or air traffic control.)

Could there be any loss of revenue for the organization as a result of a successful exploit of this vulnerability? Could there be any physical or property damage? Could there be loss of life? (While this may seem extreme, it might actually be possible for some systems such as medical monitors or air traffic control.)

![]() Could any of the organization’s systems be affected by the attack? (You might answer no to this question if the vulnerability only affects Oracle databases, for example, and your organization only uses SQL Server.) If so, what percentage of systems could be affected?

Could any of the organization’s systems be affected by the attack? (You might answer no to this question if the vulnerability only affects Oracle databases, for example, and your organization only uses SQL Server.) If so, what percentage of systems could be affected?

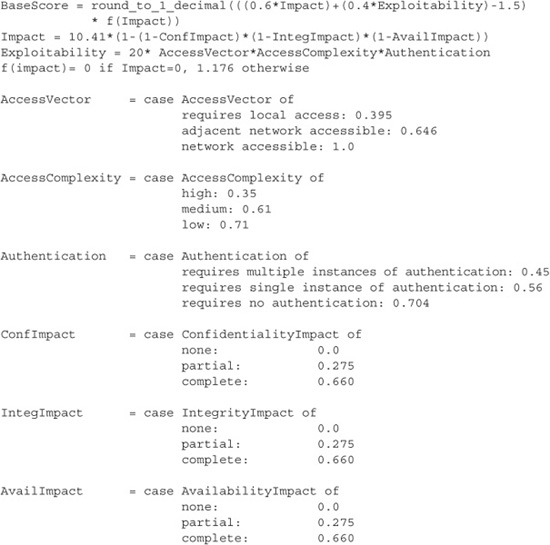

Once you’ve answered the relevant questions, you’re ready to calculate the final CVSS score. For CVSS—again, unlike DREAD—the individual components have different weight when determining the end score. The questions concerning the potential confidentiality, integrity, and availability impact of the vulnerability have more importance to the end CVSS rating than the questions concerning attack vectors (that is, local vs. remote access) and authentication. In fact, the equations used to calculate the scores are surprisingly complex. We include the formula for the base CVSS score in the “Into Action” sidebar just so you can see how the individual components are weighted, but instead of performing these calculations manually when you’re scoring actual vulnerabilities, you’ll be better off using one of the online CVSS calculators such as the one hosted on the National Vulnerability Database (NVD) site at http://nvd.nist.gov/cvss.cfm?calculator.

We’ve Covered

Input validation

![]() Avoiding blacklist validation techniques

Avoiding blacklist validation techniques

![]() The correct use of whitelist validation techniques

The correct use of whitelist validation techniques

![]() Regular expression validation for complex patterns

Regular expression validation for complex patterns

![]() The importance of validating input on the server, not just the client

The importance of validating input on the server, not just the client

![]() Disable seldom-used or non-critical features by default

Disable seldom-used or non-critical features by default

![]() Allow users to opt in to extra functionality

Allow users to opt in to extra functionality

Classifying and prioritizing threats

![]() STRIDE

STRIDE

![]() IIMF

IIMF

![]() CIA and CIA-AN

CIA and CIA-AN

![]() CWE

CWE

![]() DREAD

DREAD