Baking Security In

One great advantage that we have as web application developers is the ability to quickly and easily make changes to our applications. Compared to box-product application developers, who have to issue patches in order to fix bugs (and then hope that as many users as possible actually install those patches), we can edit the server code and every user will automatically pick up the changes. This convenience is great and enables us to be nimble and responsive, but unless we’re disciplined about it, it can be a double-edged sword.

The Earlier, the Better

Since it’s relatively easy to make fixes in web applications, there can be a temptation to take a strictly reactive approach to application security: If someone finds a vulnerability in your product, you’ll just go fix it then—but no point in spending time and worrying about something that may not happen in the first place. This attitude is further encouraged by the fact that most web application product teams have extremely short and tight development schedules. We’ve worked with some teams who use agile development methodologies, and whose entire release lifecycle from the planning stage to deployment on the production server is only one week long. It’s really tough to convince these kinds of hummingbird-quick teams that they should be spending their preciously short development time hardening their applications against potential attacks. But those teams that will listen ultimately have an easier time and spend less time on security than those that refuse.

It’s intuitive to most people that the earlier in a project’s lifecycle you find a bug (including a security vulnerability), the easier—and therefore cheaper—it will be to fix. This only makes sense: The later you find a problem, the greater the chance that you’ll have a lot of work to undo and redo. For example, you learned in the previous chapter that it’s completely insecure for a web application to perform potentially sensitive operations like applying discounts in client-side code. (Remember the MacWorld Conference platinum pass discount fiasco.) If someone knowledgeable in security had looked at this design at the very start of the project, he probably could have pointed out the inherent security flaw, and the application design could have been reworked fairly quickly. But once developers start writing code, problems become much more expensive to fix. Code modules may need to be scrapped and rewritten from scratch, which is time-consuming and puts an even greater strain on already tight schedules.

IMHO

There’s another, less tangible downside to scrapping code, which is that it’s demoralizing for the developers who wrote it. Speaking from experience early in my career, it’s difficult to see code you sweated over and worked on over late nights of pizza and coffee get dumped in the trash bin through no fault of your own, just because the project requirements that were handed to you from project management were later found to be faulty.

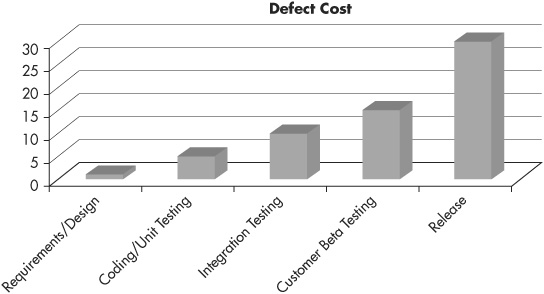

So we all understand that it’s better to get bad news about a potential problem early, but how much better is it? If you could quantify the work involved, how much extra cost would be incurred if you found a vulnerability during the application testing phase versus finding it during the requirements-gathering phase? Twice as much? Three times as much? Actually, according to the report “The Economic Impacts of Inadequate Infrastructure for Software Testing” published by the National Institute of Standards and Technology (NIST; http://www.nist.gov/director/planning/upload/report02-3.pdf), a defect found in the testing phase of the lifecycle is 15 times more expensive to fix than one found in the architectural design stage. And if the defect actually makes it past testing and into production, that number shoots up further still: Vulnerabilities found in production are on average 30 times more expensive to fix than if they’d been found at the start of the project. Figure 9-1 shows NIST’s chart of relative costs to fix software defects depending on when in the product lifecycle they’re originally found. Another study, conducted by Barry Boehm of the University of Southern California and Victor Basili of the University of Maryland (http://www.cs.umd.edu/projects/SoftEng/ESEG/papers/82.78.pdf), found an even greater disparity: Software defects that are detected after release can cost 100 times as much to fix as they would have cost during the requirements and design phase.

Jeremiah Grossman, CTO and founder of WhiteHat Security, estimates that an organization spends about 40 person-hours of work to fix a single vulnerability in a production web site (http://jeremiahgrossman.blogspot.com/2009/05/mythbusting-secure-code-is-less.html). At an average of $100 per hour for development and test time, that works out to $4,000 per vulnerability. And again, vulnerabilities that require a substantial amount of redesign and reprogramming to be fixed can cost significantly more. Properly fixing a cross-site request forgery vulnerability can take 100 hours (this figure also from Grossman), at a cost of $10,000. If we assume that these estimates and the NIST statistic on relative cost-to-fix are accurate, then a fix that costs $10,000 in production would have cost only about one-thirtieth as much, or roughly $330, at the start of the project. In other words, almost $9,700 of the cost of that CSRF vulnerability could have been avoided if the organization had found it early enough in the development lifecycle.
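The arithmetic behind these figures can be reproduced in a few lines. The following is only a sketch: the hourly rate, the hour estimates, and the 30x multiplier are the numbers quoted above, while the function names are made up for illustration.

```python
# Figures quoted in the text (Grossman's estimates and the NIST multiplier).
HOURLY_RATE = 100            # dollars per hour of development and test time
PRODUCTION_MULTIPLIER = 30   # production fix vs. start-of-project fix (NIST)


def production_cost(hours, rate=HOURLY_RATE):
    """Cost in dollars to fix a vulnerability found in production."""
    return hours * rate


def avoidable_cost(cost_in_production, multiplier=PRODUCTION_MULTIPLIER):
    """Portion of the production cost that an early fix would have saved."""
    early_cost = cost_in_production / multiplier
    return cost_in_production - early_cost


typical_fix = production_cost(40)    # average vulnerability: 4000
csrf_fix = production_cost(100)      # CSRF vulnerability: 10000

print(typical_fix, csrf_fix, round(avoidable_cost(csrf_fix)))
# prints: 4000 10000 9667
```

Swapping in your own team's hourly rate gives a rough estimate of what late discovery costs your organization, subject to the same assumptions.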

Figure 9-1 Relative cost to fix software defects depending on detection phase of application development lifecycle (NIST)

However, while you may be able to put a dollar amount on the labor involved in fixing a vulnerability, it’s harder to put a price tag on the user trust you’ll lose if your application is compromised by an attacker. In some cases it may not even be possible to recover from an attack. You can fix the code that led to a SQL injection vulnerability, but if your users’ data was stolen, you can’t “un-steal” it for them; it’s gone forever.

The Penetrate-and-Patch Approach

It’s always encouraging to watch organizations get application security religion. Sometimes this happens because the CTO attends a particularly compelling security-focused session at a technology conference, or maybe someone sends him a copy of a web application security book (hint, hint). Sometimes this happens because the organization gets compromised by an attack, and people want to know what went wrong and how to stop it from happening again. But whatever sets them on the path to improving their application security, their first inclination is usually to begin by adding some security testing to their application release acceptance testing criteria.

Now, this is not necessarily a bad place to start. Testing for security vulnerabilities is always important, no matter how big or small your organization is. And if you lack the appropriate in-house expertise to perform security testing yourself, you can always contract with a third party to perform an external penetration test (or pentest) of your application.

But although adding security testing to your release process isn’t a bad place to start, it is a bad place to end; or in other words, don’t make release testing the only part of the application lifecycle where you work on security. Remember the NIST statistic we showed just a minute ago. The earlier you find and address problems, the better. But release testing by its very nature comes at the end of the lifecycle—the most expensive part of the lifecycle. And you also have to consider the fact that it’s unlikely that the pentest will come up completely clean and report that your site has absolutely no security vulnerabilities.

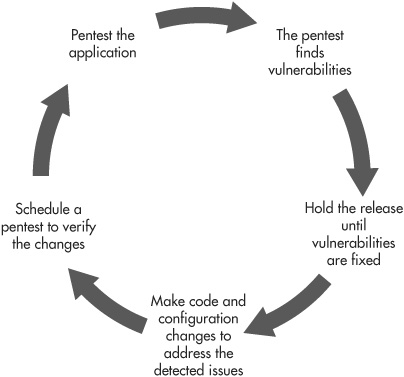

A more likely scenario is that the pentest will find several issues that need to be addressed. The same WhiteHat Security report that found that the average vulnerability costs $4,000 to fix also found that the average web site has seven such vulnerabilities. You’ll want to fix any defects that the pentest turns up, but then you’ll also want to retest the application. The retest ensures not only that you correctly fixed the vulnerabilities that were found, but also that you didn’t inadvertently introduce any new ones.

If the retest does reveal that the issues weren’t fixed correctly, or if it turns up any new issues, then you’ll need to start up yet another round of fixing and retesting. At this point, the costs of these “penetrate-and-patch” cycles are probably really starting to add up, as you can see in Figure 9-2. Worse, the application release is being held up, and it’s likely that there are a lot of frustrated and unhappy people in the organization who are now going to start seeing security as a roadblock that gets in the way of getting things done.

Figure 9-2 The spiraling costs of the “penetrate-and-patch” approach to application security
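To get a feel for how quickly these cycles add up, here is a rough sketch of the running total. The $4,000 cost per fix and the seven initial findings come from the figures quoted above. The pentest fee and the number of issues found in each retest are purely hypothetical values chosen for illustration, and the function name is invented.

```python
COST_PER_FIX = 4_000     # from the text: average cost to fix one vulnerability
INITIAL_FINDINGS = 7     # from the text: average vulnerabilities per web site


def penetrate_and_patch_cost(findings_per_round, pentest_fee):
    """Total cost in dollars of successive test-and-fix rounds.

    findings_per_round: vulnerabilities reported in each test round
    pentest_fee: flat fee per test round (hypothetical value)
    """
    total = 0
    for findings in findings_per_round:
        # Every round pays for the test itself plus a fix for each finding.
        total += pentest_fee + findings * COST_PER_FIX
    return total


# Hypothetical scenario: the first test finds 7 issues, the retest finds 2
# (incomplete fixes or new bugs), and a final retest comes back clean.
rounds = [INITIAL_FINDINGS, 2, 0]
print(penetrate_and_patch_cost(rounds, pentest_fee=5_000))
# prints: 51000
```

Even in this mild scenario, the two extra rounds add $18,000 to the $33,000 cost of the first round, before counting the cost of the delayed release.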