Chapter 5

5G Fundamentals

Perhaps very few market segments have gone through the type of market adoption seen in the mobile industry. 5G does not start the mobility revolution from scratch but rather builds on the concepts and innovations of previous generations. For instance, near-real-time services have already been available in 4G, enabling real-time gaming and augmented reality applications for leisure. 5G is expected to push this further with enhanced reliability and low-latency capabilities for applications such as industrial automation, self-driving vehicles, remote medical care, and other mission-critical applications.

Similarly, mobile bandwidth has already been steadily growing to allow live video or video-on-demand over LTE, as well as multipoint videoconferencing. 5G aims to enable enhanced mobile broadband for everyone.

The fundamentals covered thus far in the previous chapters lay the foundation for the generational leap to 5G. This chapter brings those fundamentals together and dives deeper into the innovations that shape 5G. This chapter continues to focus on three domains of the mobile communication network—RAN, mobile core, and transport—and explores how each of these evolves to create a complete 5G experience.

5G Radio Access Network

Among the many parts comprising a fifth-generation mobile network, not many are entirely novel. Undeniably, significant innovations distinguish 5G radio technology from its predecessors, but all of these essential innovations are deeply rooted in technologies developed for previous generations of networks. After all, LTE proved its ambitious name by giving a solid foundation for mobile radio network evolution in the long term. Proper understanding of these innovations is fundamental for 5G mobile communication network (MCN) capacity planning, architectural definitions, and technology selection.

Air Interface Enhancement

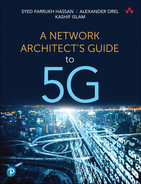

The radio access technology of 5G networks is called 5G New Radio (5G NR), or NR for short. Although it is based on the same Orthogonal Frequency-Division Multiple Access (OFDMA) technology, a few important innovations distinguish it from LTE radio access. Unlike LTE with its fixed subcarrier spacing in Orthogonal Frequency Division Multiplexing (OFDM), NR supports multiple spacings with wider subcarriers. In addition to 15 kHz, NR also supports OFDM subcarrier widths of 30, 60, 120, and 240 kHz.1 The radio frame of NR has the same duration of 10 ms as in LTE and is divided into 10 subframes. However, the similarities in the frame structure between LTE and NR end here, and further subdivision of an NR subframe differs significantly from that of LTE. There are two slots per subframe in LTE, with each normally carrying seven OFDM symbols (or six in some cases). In contrast, 5G NR slots carry 14 OFDM symbols.

Subcarrier width and OFDM symbol duration are inversely proportional; therefore, the use of wider subcarriers effectively reduces the duration of an OFDM symbol. With a fixed number of OFDM symbols per slot, a 1 ms NR subframe can have anything from one slot (with 15 kHz subcarrier spacing) to 16 slots (with 240 kHz spacing).2 The use of wider subcarrier spacing and shorter slots decreases radio interface latency, thereby benefiting URLLC scenarios. Figure 5-1 illustrates the difference in NR framing structure depending on subcarrier spacing.
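The relationship between subcarrier spacing and slot count can be sketched in a few lines of Python. This is an illustration only: the function name is hypothetical, and the symbol duration it reports is an average that includes the cyclic prefix (the slot duration divided by 14), not the exact per-symbol timing from the specification.

```python
SYMBOLS_PER_SLOT = 14  # fixed number of OFDM symbols in an NR slot


def nr_numerology(scs_khz):
    """Return (slots per 1 ms subframe, slot duration in ms,
    average OFDM symbol duration in microseconds)."""
    if scs_khz not in (15, 30, 60, 120, 240):
        raise ValueError("unsupported subcarrier spacing")
    # Doubling the subcarrier spacing halves the symbol (and slot) duration,
    # so the number of slots in a 1 ms subframe doubles.
    slots_per_subframe = scs_khz // 15
    slot_ms = 1.0 / slots_per_subframe
    symbol_us = slot_ms * 1000.0 / SYMBOLS_PER_SLOT
    return slots_per_subframe, slot_ms, symbol_us


for scs in (15, 30, 60, 120, 240):
    slots, slot_ms, symbol_us = nr_numerology(scs)
    print(f"{scs:>3} kHz: {slots:>2} slots/subframe, "
          f"slot = {slot_ms:.4f} ms, symbol ~ {symbol_us:.2f} us")
```

The loop shows the progression from one 1 ms slot at 15 kHz to sixteen 62.5-microsecond slots at 240 kHz, which is the source of the latency benefit described above.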

FIGURE 5-1 5G NR Frames, Slots, and OFDMA Subcarriers

In both LTE and NR, slots are used to schedule data over the radio interface, but NR can schedule data in a more efficient way. Besides using more diverse slot durations, 5G NR offers flexible use of individual symbols within a slot. Contrary to LTE, the use of slots and OFDM symbols within each slot is not rigidly prescribed by the 5G specification. The control information, data, and receipt acknowledgment can be sent within a single slot, significantly reducing latency in the network and allowing faster retransmissions of lost data.

Just like in previous generations, both FDD and TDD duplexing methods can be used in NR. When FDD duplexing is used, all the slots in the frame are used for either downlink or uplink communication in their respective bands. On the other hand, a frame in TDD duplexing may contain slots for both downlink and uplink communications. The TDD concept is taken even further in NR, with each individual OFDM symbol being used for downlink or uplink communication within a single slot. A number of slot formats combining uplink, downlink, and flexible (either downlink or uplink) symbols are defined in the 3GPP specification “5G; NR; Physical layer procedures for control.”3 As a result, the receiver does not have to wait for the beginning of a new slot to start receiving data after control information, and then for another slot to send an acknowledgment back. Everything can be done within a single slot, provided the chunk of data is short enough. Such a slot is said to be self-contained, as it has all necessary information to decode and use the data it delivers. This helps not only TDD but FDD as well, as all necessary information is delivered in a self-contained slot.

To improve latency characteristics of NR for ultra- and very-low-latency scenarios even further, 3GPP’s “Study on new radio access technology” proposes the use of a mini-slot with a smaller number of OFDM symbols.4 A mini-slot can have as few as one OFDM symbol and can be scheduled immediately, without waiting for the beginning of a new regular slot. In some cases, mini-slots can preempt data already scheduled for transmission via a process called puncturing, reducing latency for URLLC use cases even further.

5G NR Channel Widths and Carrier Aggregation

To boost the peak rates and overall cell capacity, 3GPP defined wider channels for 5G NR, ranging from 5 MHz to as wide as 400 MHz, depending on the frequency range used. For sub-7 GHz bands, also known as Frequency Range 1 (FR1), channel widths can be between 5 MHz and 100 MHz.5 Channel widths of 50 MHz through 400 MHz are specified for the bands above 24 GHz, known as Frequency Range 2 (FR2).6

Not every combination of subcarrier spacing and channel width is supported by 3GPP specifications. For example, 15 kHz subcarrier spacing can be used in channels 5–50 MHz wide but is not supported with wider channels. Moreover, subcarrier spacings of 15 kHz and 30 kHz are not supported in FR2 at all, due to significantly different propagation characteristics of mmWave, as was mentioned in Chapter 2, “Anatomy of Mobile Communication Networks.” Subcarrier spacings of 15 kHz and 30 kHz, with their longer OFDM symbol duration, are effective in combating inter-symbol interference in macrocells covering large areas but would make transceivers unnecessarily complex and expensive in mmWave bands. A comprehensive list of supported subcarrier spacings and channel widths is defined in 3GPP’s “5G; NR; User Equipment radio transmission and reception.”7, 8

Besides the wider channels defined in NR, the width of the guard bands is also optimized, providing a slight increase in the number of usable subcarriers in each channel compared to LTE. For example, a 20 MHz channel with 15 kHz spacing in NR can have 1272 subcarriers, versus 1200 subcarriers in the same 20 MHz LTE channel.9 These subcarriers are organized in sets of 12, each set forming a resource block. Resource blocks were briefly introduced in Chapter 3, “Mobile Networks Today,” but unlike LTE, resource blocks in NR are not defined as a number of subcarriers per subframe. Instead, 5G NR defines a resource block as just 12 OFDMA subcarriers, without relation to time. This allows more flexible use of resource blocks within a slot, as was described in the previous section. Figure 5-2 shows a high-level view of the mapping of 12 OFDMA subcarriers to a resource block and of multiple resource blocks in a single NR channel. For example, there are 106 resource blocks in a 20 MHz NR channel.10
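The subcarrier counts quoted above follow directly from the number of resource blocks. The short sketch below reproduces the arithmetic; the function name is hypothetical, and the LTE figure of 100 resource blocks is inferred from the 1200 subcarriers mentioned in the text.

```python
SUBCARRIERS_PER_RB = 12  # a resource block is 12 subcarriers in both LTE and NR


def occupied_spectrum(resource_blocks, scs_khz):
    """Return (number of subcarriers, occupied bandwidth in MHz)."""
    subcarriers = resource_blocks * SUBCARRIERS_PER_RB
    return subcarriers, subcarriers * scs_khz / 1000.0


nr_sc, nr_mhz = occupied_spectrum(106, 15)    # NR, 20 MHz channel
lte_sc, lte_mhz = occupied_spectrum(100, 15)  # LTE, 20 MHz channel

print(f"NR : {nr_sc} subcarriers, {nr_mhz:.2f} MHz of 20 MHz used")
print(f"LTE: {lte_sc} subcarriers, {lte_mhz:.2f} MHz of 20 MHz used")
```

With the same 15 kHz spacing, the NR channel carries signal in roughly 19.08 MHz of the 20 MHz channel versus 18 MHz for LTE; the difference is the narrower guard band.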

FIGURE 5-2 Carrier Aggregation in 5G NR

When the bandwidth of an individual channel is not sufficient, up to 16 channels can be aggregated using the carrier aggregation (CA) technique, just like in LTE. Individual channels become component carriers in a larger, aggregated channel, also shown in Figure 5-2. To be part of an aggregated channel, component carriers do not need to be contiguous and can belong to different frequency bands. The resulting aggregated channels provide significantly more resource blocks to schedule transmissions, thereby allowing higher data rates for individual mobile devices. The total amount of aggregated bandwidth depends on the frequency range and subcarrier spacing, as well as the mobile device class.11, 12 In the best possible scenario, an LTE-Advanced subscriber can achieve almost 4 Gbps peak data rate using eight MIMO layers and five aggregated channels.13 NR, in turn, can offer almost 600 Mbps over a single 100 MHz channel with a single MIMO layer. With 400 MHz channels in FR2 bands, a single MIMO layer transmission can offer more than 2 Gbps peak data rates.14 With 4×4 or 8×8 MIMO and CA, peak data rates for an individual subscriber can greatly exceed IMT-2020’s requirement of 20 Gbps.
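The single-layer figures above can be approximated with the peak data rate formula from 3GPP TS 38.306. The sketch below is a rough downlink estimate under assumptions that are not stated in the text: 256QAM (8 bits per symbol), a maximum code rate of 948/1024, a scaling factor of 1, 273 resource blocks for a 100 MHz channel at 30 kHz spacing, 264 resource blocks for a 400 MHz channel at 120 kHz spacing, and overhead values of 0.14 (FR1) and 0.18 (FR2). The function names are hypothetical.

```python
def nr_peak_rate_mbps(layers, n_prb, mu, overhead,
                      qm=8, scaling=1.0, r_max=948 / 1024):
    """Approximate peak rate of one carrier, in Mbps.

    mu is the numerology index: subcarrier spacing = 15 kHz * 2**mu.
    """
    symbol_s = 1e-3 / (14 * 2 ** mu)      # average OFDM symbol duration
    subcarriers = n_prb * 12               # 12 subcarriers per resource block
    bits_per_s = (layers * qm * scaling * r_max
                  * subcarriers / symbol_s * (1 - overhead))
    return bits_per_s / 1e6


def aggregated_rate_mbps(carriers):
    """Carrier aggregation: sum the rates of the component carriers."""
    return sum(nr_peak_rate_mbps(**c) for c in carriers)


fr1 = nr_peak_rate_mbps(layers=1, n_prb=273, mu=1, overhead=0.14)
fr2 = nr_peak_rate_mbps(layers=1, n_prb=264, mu=3, overhead=0.18)
print(f"100 MHz FR1, 1 layer: {fr1:.0f} Mbps")   # about 584 Mbps
print(f"400 MHz FR2, 1 layer: {fr2:.0f} Mbps")   # about 2155 Mbps

# Two aggregated 400 MHz carriers with eight layers each (illustrative only).
ca = aggregated_rate_mbps(
    [dict(layers=8, n_prb=264, mu=3, overhead=0.18)] * 2)
print(f"2 x 400 MHz, 8 layers: {ca / 1000:.1f} Gbps")
```

Under these assumptions the results line up with the "almost 600 Mbps" and "more than 2 Gbps" figures, and they scale linearly with the number of MIMO layers and component carriers.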

Beyond Orthogonal Frequency-Division Multiple Access

The work to enhance radio transmission efficiency does not stop with OFDMA. Multiple vendors and academia continue their research on Non-Orthogonal Multiple Access (NOMA) techniques in hopes of adopting them in fifth- or sixth-generation mobile networks. NOMA was introduced under different names in a number of different studies, including LTE releases.15 As the name implies, NOMA does not rely on orthogonal subcarriers; instead, it leverages interference cancellation techniques at the receiver. In simple terms, a receiver first detects one, typically stronger, transmission, which constitutes a single layer of a composite signal. Once the stronger signal is retrieved, its original form is reconstructed and subtracted from the composite transmission, thereby revealing a weaker signal.16 Implementing NOMA is challenging but could provide some increase in spectral efficiency. Although the gain in efficiency is moderate, when amplified by a high number of layers in multi-user MIMO transmissions, it can provide significant benefits for future air interface implementations.
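The detect, reconstruct, and subtract sequence described above can be illustrated with a toy example. This is a minimal sketch under strong simplifying assumptions: two BPSK streams, a noise-free channel, and an arbitrary 80/20 power split. The function names and parameters are hypothetical and do not represent any standardized NOMA scheme.

```python
import math


def superpose(strong_bits, weak_bits, p_strong=0.8, p_weak=0.2):
    """Combine two BPSK streams into one composite signal (no noise)."""
    a_s, a_w = math.sqrt(p_strong), math.sqrt(p_weak)
    return [a_s * (1 if s else -1) + a_w * (1 if w else -1)
            for s, w in zip(strong_bits, weak_bits)]


def sic_receive(composite, p_strong=0.8):
    """Recover both streams by successive interference cancellation."""
    a_s = math.sqrt(p_strong)
    strong, weak = [], []
    for sample in composite:
        s = 1 if sample >= 0 else -1      # detect the stronger layer first
        residual = sample - a_s * s       # reconstruct it and subtract it
        w = 1 if residual >= 0 else -1    # the weaker layer is now visible
        strong.append(1 if s == 1 else 0)
        weak.append(1 if w == 1 else 0)
    return strong, weak


strong_tx = [1, 0, 1, 1, 0, 0]
weak_tx = [0, 0, 1, 0, 1, 1]
strong_rx, weak_rx = sic_receive(superpose(strong_tx, weak_tx))
print(strong_rx == strong_tx, weak_rx == weak_tx)
```

The scheme works here only because the stronger layer dominates the sign of every composite sample; with noise or a smaller power difference, errors in the first detection step propagate to the weaker layer, which is part of what makes practical NOMA challenging.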

5G NR Advanced Antenna Functions

The air interface enhancements in 5G NR are not limited to wider channels and new frequency bands, but also bring advanced features such as beamforming, Coordinated Multi-Point (CoMP), and multi-radio connectivity, offering better reliability and data rates for subscribers. 5G base stations use advanced antenna systems to simplify installation and provide even more layers for multi-user MIMO transmissions, achieving unprecedented cell capacities and targeted communication with mobile devices.

Active Antennas

Strictly speaking, an antenna is passive hardware designed to emit and receive radio waves. In its simplest form, it is just a piece of wire forming a dipole antenna. Since the early days of mobile communication networks, antennas have undergone phenomenal transformations, and today’s advanced antennas look very different from those simple pieces of wire used a century ago. The ever-increasing demands for efficiency, speed, and reliability of radio communications led to many innovations and ingenious solutions in those recognizable elements of modern life—antennas.

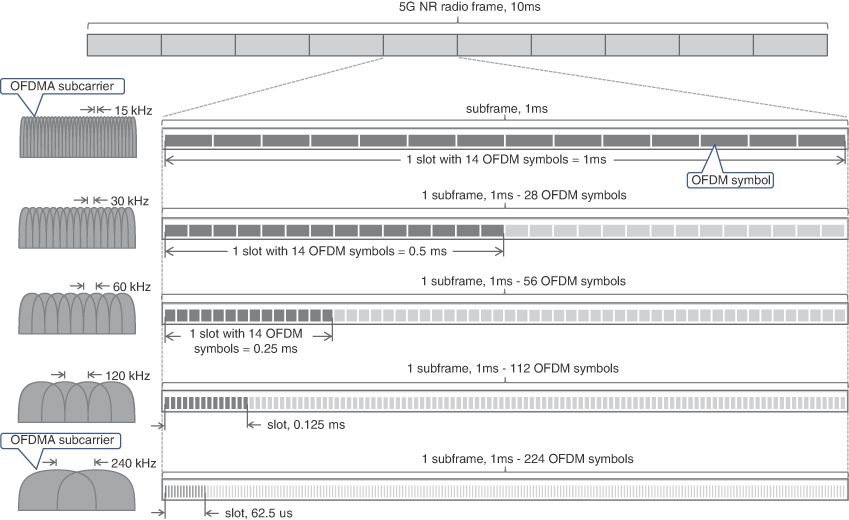

Antennas today are extremely sophisticated pieces of hardware. Virtually all installations today have antennas and radio heads (a.k.a. RRUs) mounted in close proximity on the radio towers, avoiding long spans of RF cables and associated energy losses. Nevertheless, RRUs and antennas are still connected with multiple RF jumper cables. These external cables are susceptible to environmental degradation, introduce losses, and are cumbersome to maintain, especially with a higher number of MIMO layers used in today’s systems. Eliminating these jumpers and putting RRUs inside the antenna enclosure created what is known today as an active antenna. Use of active antennas dramatically reduces the number of cables needed, making installation simpler, cleaner, and less prone to wear and tear. Figure 5-3 depicts a comparison between a passive and active antenna.

FIGURE 5-3 Passive and Active Antennas

Beamforming

Early attempts to adjust antenna radiation patterns without the use of bulky reflector hardware have led to beamforming and null-forming techniques, which facilitate dynamically adjusting the antenna radiation patterns and reusing the frequency spectrum with high efficiency. Coordinated use of beamforming and null-forming by neighboring base stations significantly reduces interference, thereby allowing many individual subscribers to enjoy high data rates. Beamforming is one of the fundamental features empowering 5G NR radio access technology.

Antennas used in today’s radio access are collections of fancy-shaped elements, intricately arranged into patterns inside the antenna’s radio-transparent enclosure called a radome. Nevertheless, the foundational principles of today’s advanced antenna operation are not overly complicated: everything starts with a dipole. The radiation pattern of a simple vertically installed dipole antenna has a donut shape with radio waves propagating in all directions except vertical (although, in the real world, radio waves may scatter in the vertical direction, albeit greatly attenuated). A typical mobile antenna used in previous generation networks had a number of dipole elements, all connected in parallel to a single antenna port. An RF signal from a BBU’s radio transceiver would be radiated by the dipoles, each forming a donut-shaped electromagnetic field around them. The composite signal of multiple dipoles and the steel plate behind them shape the signal, redirecting it perpendicular to this plate and the column of dipoles, effectively forming a horizontal beam. It is said that an antenna has gain in a particular direction. This simple process helps divert otherwise wasted energy in the desired direction, while shielding the space behind the antenna from the unwanted interference. More technically, this beam is called the main lobe of an antenna radiation pattern, and its width is determined by the number, shape, and relative positioning of individual antenna elements. An antenna with many elements can form quite a narrow beam, but unfortunately the narrower the main lobe is, the more complex-shaped side lobes are created at the same time. Radio engineers and antenna designers seek an optimal balance in antenna radiation patterns, creating a variety of antennas for different implementation scenarios. Figure 5-4 shows an example of a sector antenna with a column of short dipoles, with side and top views of a combined radiation pattern.

FIGURE 5-4 Simple Sector Antenna with Fixed Radiation Pattern

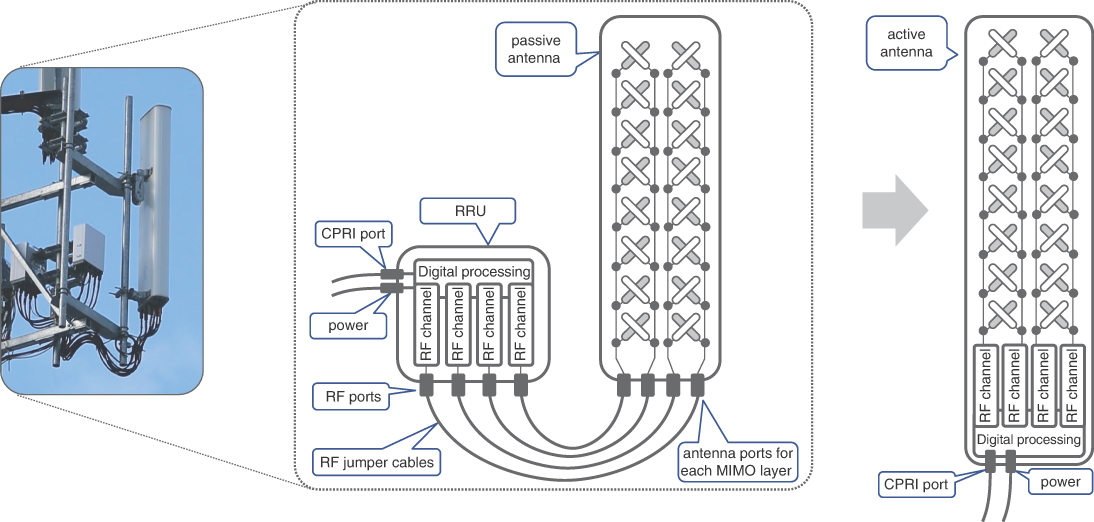

A popular antenna design features a vertical column of dipoles, redirecting otherwise wasted energy into a vertically focused beam, while covering a whole sector horizontally. These antennas with narrowly focused beams have to be tilted downward to ensure strong signal reception throughout the cell. Instead of mechanical tilting, the antennas once again rely upon the effect of constructive and destructive interference of radio waves emitted by closely placed antenna elements. Each of these antenna elements is connected to an individual analog phase-shifting device that delays the RF signal by a carefully selected, minuscule amount of time to influence where in space the interference peaks and troughs of the resulting radio waves occur. In simple terms, the effect of shifting phases for individual antenna elements is the tilt of the radiation pattern in the vertical direction, as displayed in Figure 5-5.

The amount of phase shift for each antenna element can be adjusted, allowing the vertical direction of the beam to change; this is commonly referred to as an electric tilt. It is important to note that the whole column of antenna elements is fed by a single RF circuit, which is sometimes also called an RF chain. Although the RF chain shown in Figure 5-5 primarily refers to a transmitter, a typical RF chain is composed of both the transmitter and receiver.

FIGURE 5-5 Radiation Pattern of an Antenna with Electric Tilt

The electric tilt is just a way to adjust the vertical angle of an antenna radiation pattern or, more technically, to control the antenna’s gain in the vertical direction. It is also sometimes called analog beamforming, as it steers the beam in a downward direction with the use of analog phase shifters. The use of analog phase shifters, instead of mechanical tilting, might sound like a complex solution for a simple task, but in reality, the phase shifters are rather simple, inexpensive devices, usually controlled by a stepper motor inside the antenna enclosure.17 The amount of tilt is typically controlled through the BBU, via a special tilt control circuit on the antenna. Analog beamforming allows for tilting the antenna radiation pattern with great precision post-installation, without touching the antenna physically.
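The effect of a progressive phase shift on a column of elements can be checked numerically. The sketch below models an idealized column of identical, isotropic elements spaced half a wavelength apart; the element count, the spacing, the angle convention (positive angles point below the horizon), and all function names are assumptions made for illustration.

```python
import cmath
import math


def tilt_phase_step(tilt_deg, spacing_wavelengths=0.5):
    """Phase difference (radians) between adjacent elements for a given tilt."""
    return 2 * math.pi * spacing_wavelengths * math.sin(math.radians(tilt_deg))


def array_gain(angle_deg, phase_step, elements=8, spacing_wavelengths=0.5):
    """Magnitude of the summed field of the column in one direction."""
    # Path-length phase difference between adjacent elements in this direction.
    path = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(angle_deg))
    # Contributions add up fully where the applied shift cancels the path term.
    return abs(sum(cmath.exp(1j * k * (path - phase_step))
                   for k in range(elements)))


def peak_direction(phase_step, elements=8, spacing_wavelengths=0.5):
    """Scan -90..+90 degrees in 0.1 degree steps and return the strongest angle."""
    angles = [i / 10 for i in range(-900, 901)]
    return max(angles, key=lambda a: array_gain(
        a, phase_step, elements, spacing_wavelengths))


step = tilt_phase_step(6.0)
print(f"phase step per element: {math.degrees(step):.1f} degrees")
print(f"main lobe points at   : {peak_direction(step):.1f} degrees")
```

With no phase shift the main lobe stays at 0 degrees (the horizon); changing only the per-element phase step moves it, which is exactly what the phase shifters do without any mechanical movement of the antenna.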

Analog beamforming was instrumental in improving overall energy efficiency of the radio interface, but it provided little utility to segregate different users or user groups. Indeed, the beams formed by most legacy LTE antennas are vertically narrow, but they still have horizontally wide radiation patterns, thereby illuminating the whole sector. As a result, all mobile devices within the sector have to share the limited number of resource blocks assigned to the base station, thus limiting the transmission data rates. In order to achieve a higher reuse of frequency, or rather resource blocks within a sector, 5G NR technology uses more advanced three-dimensional beamforming (3D beamforming) technology.

3D beamforming relies on the same principle of using multiple elements to form the beam, but this time the beams are formed by two-dimensional arrays, or planar arrays, of antenna elements. With the right spacing between antenna elements, it is possible to create a beam narrow in both the vertical and horizontal dimensions. By applying different phase shifts to the antenna elements in such a planar array, the resulting beam can be steered both vertically and horizontally, while illuminating only a small part of the sector occupied by one or more target mobile devices. By controlling the antenna gain and allowing steering in both the vertical and horizontal directions, 3D beamforming allows more independent transmissions using the same frequency, thus providing higher cell capacity and higher data rates. Figure 5-6 provides a simplified view of 3D beamforming.

FIGURE 5-6 3D Beamforming

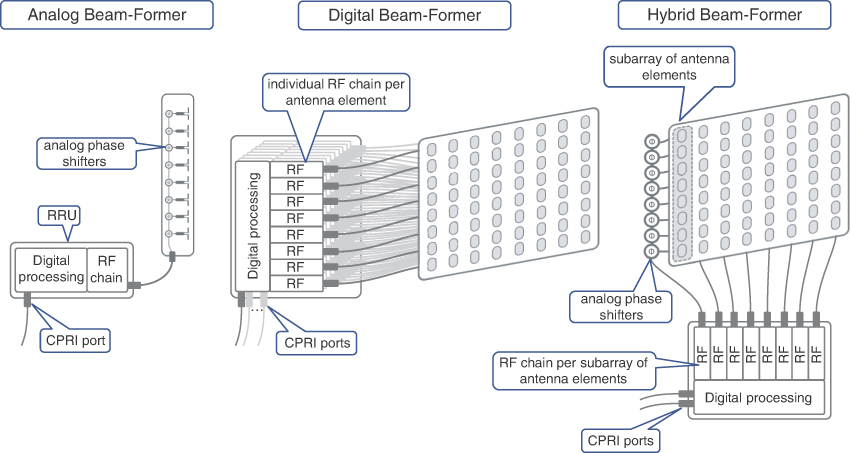

Antennas supporting beamforming sometimes are also referred to as beamformers. A typical beamformer is a planar antenna with many elements organized in columns and rows. In an ideal scenario, 3D beamforming can be implemented with all individual antenna elements connected to independent RF chains. These RF chains allow each element to act as an independent antenna transmitting the same signal with different phase shifts. The amount of phase shift for each antenna element is controlled digitally and applied in its respective RF chain. This is in contrast to analog beamforming, where a single RF chain is used to feed multiple antenna elements while the phase shifts are applied by analog circuits. The technique of applying phase shifts digitally in individual RF chains is called digital beamforming; it offers great precision in beam width and direction and allows beams to follow individual subscribers.
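A digital beamformer of this kind can be modeled as a grid of complex weights, one per element and RF chain. The following sketch assumes an idealized planar array of isotropic elements with half-wavelength spacing in both dimensions; the coordinate convention (azimuth measured from boresight, elevation from the horizon) and the function names are hypothetical.

```python
import cmath
import math


def direction_phase(row, col, azimuth_deg, elevation_deg, spacing=0.5):
    """Phase (radians) of a plane wave at element (row, col) for a direction."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return 2 * math.pi * spacing * (
        col * math.cos(el) * math.sin(az) + row * math.sin(el))


def steering_weights(rows, cols, azimuth_deg, elevation_deg):
    """One digitally applied phase shift per element (one RF chain each)."""
    return [[cmath.exp(-1j * direction_phase(r, c, azimuth_deg, elevation_deg))
             for c in range(cols)] for r in range(rows)]


def gain(weights, azimuth_deg, elevation_deg):
    """Magnitude of the combined signal in the given direction."""
    return abs(sum(
        w * cmath.exp(1j * direction_phase(r, c, azimuth_deg, elevation_deg))
        for r, row in enumerate(weights) for c, w in enumerate(row)))


weights = steering_weights(rows=4, cols=8, azimuth_deg=20, elevation_deg=-5)
print(f"toward the target device : {gain(weights, 20, -5):.1f}")
print(f"toward a different device: {gain(weights, -20, -5):.1f}")
```

All 32 elements add up coherently only in the steered direction, while a device elsewhere in the sector receives a small fraction of that signal. Recomputing the weights is all that is needed to repoint the beam.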

Unfortunately, controlling dozens or hundreds of antenna elements with individual radio chains is often not feasible and might also be cost prohibitive in the case of mmWave bands. Therefore, for a more practical approach, hybrid beamforming is more commonly used. Hybrid beamforming combines analog beamforming, which steers beams in the vertical direction, with horizontal steering achieved via digitally controlled phase shifts applied by individual RF chains. In this scenario, an antenna is divided into a number of different vertical subarrays of antenna elements, which might have different, preset vertical tilts. Each subarray, in turn, is controlled by an independent RF chain. A few subarrays with the same vertical tilt are used to create a beam, which can be dynamically steered in a horizontal direction, illuminating a particular spot in the sector and covering a single mobile device or a cluster of them. When the beams are narrow enough and the side lobes of a particular beam do not interfere with other mobile devices, it is possible to reuse OFDMA subcarriers within a single sector for multiple transmissions at once. Figure 5-7 shows different beamformers.

FIGURE 5-7 Analog, Digital, and Hybrid Beamformers

Hybrid beamforming is a cost-effective yet flexible solution for 3D beamforming. Individual mobile devices as well as base stations use special reference signals for sounding purposes, thus probing which beams within the sector are optimal for a transmission. Sounding signals are transmitted every few tens of milliseconds over each beam, and based on the feedback from the sounding signal, a mobile device can be switched to another beam, if appropriate.

Massive Multiple Input Multiple Output (mMIMO)

Fundamental for 5G radio technology is the principle of multiple simultaneous data transmissions using the same carrier frequency, known as multiple input multiple output (MIMO), the basics of which were covered in Chapter 3. MIMO technology has been instrumental in increasing spectral efficiency and boosting peak data rates for individual subscribers as well as the entire cell capacity in previous generation mobile networks. 4×4 and 8×8 MIMO systems are commonly used in LTE systems, yet increasing the order of MIMO beyond these numbers is not straightforward. The path to densification of spatial multiplexing collides with the size limitations imposed on the handheld mobile devices. Specifically, the limiting factor is how many antennas can be packed into a single mobile device. Antennas cannot be miniaturized infinitely, and the distance between multiple antennas is determined by the carrier frequency and, therefore, cannot be arbitrary. This limits the number of parallel transmissions in the case of single-user MIMO. Nevertheless, when many mobile devices are served by a cell, it is possible to apply the principles of MIMO and multiplex data streams belonging to different subscribers using the same frequency. This approach is called multi-user MIMO (MU-MIMO), and it helps to significantly increase cell capacity as well as improve data rates for multiple subscribers simultaneously.

MU-MIMO is not limited by the size of an individual mobile device; hence, more antennas can be used at the base station to further increase the order of spatial multiplexing of data streams for different subscribers. When a MU-MIMO system uses a large number of antennas at the base station and more than one antenna is used to communicate with a single antenna at the mobile device, such a system is called massive MIMO (mMIMO).18 However, there are many other definitions of mMIMO systems used by industry engineers. These definitions might focus on various aspects of technology but, as a rule of thumb, a system with 16 or more independent antennas, each one connected to a respective individual RF chain, is considered a mMIMO system.

Strictly speaking, a mMIMO system can be constructed in many ways (for example, a line of antennas distributed over the roof of a building or even stretched over multiple building roofs). As long as all these antennas illuminate a single sector and are part of the same base station, this would be a mMIMO system. In reality, however, the most common mMIMO implementation is based on planar beamformers with many antenna elements. Use of beamformers in mMIMO systems is so popular that sometimes beamforming is used as a synonym for mMIMO, which, of course, is not technically accurate. Beamforming is just one of the applications of a mMIMO system.

Interestingly enough, modern beamformers (both analog and hybrid) use a clever technique to conserve precious space on the cell tower by reducing antenna size. This technique leverages the electromagnetic phenomenon of polarization, where electric and magnetic fields oscillate in a specific direction. Two polarized radio waves are said to be cross-polarized and do not interfere when their respective electric and magnetic fields oscillate in orthogonal directions. When two antenna elements are cross-polarized, they emit radio waves with little to no interference. Thus, these antenna elements can be placed one behind the other, effectively squeezing two antennas into one.

One important consideration of MIMO transmissions using hybrid and digital beamforming techniques should be noted here. As was explained in Chapter 3, the concept of MIMO transmission is the use of different spatially diverse paths to transmit multiple data streams, with each distinct data stream representing a separate MIMO layer. In digital and hybrid beamforming techniques, however, multiple RF chains are used to form a single beam, and all these RF chains transmit, in fact, the same data. Therefore, the number of independent MIMO layers offered by hybrid and digital beamformers is not equal to the number of RF chains. This can be further exemplified by the antennas shown in Figure 5-8, which shows 4×4 MIMO (often called 4T4R to signify the transmit and receive RF chains respectively) and 16×16 (also called 16T16R) mMIMO antennas.

FIGURE 5-8 4T4R MIMO and 16T16R mMIMO Antennas

The legacy 4T4R MIMO antenna shown in Figure 5-8 is used as a four-layer MIMO system: two columns with two different polarizations each create a total of four independent antennas. Each of these four antennas is connected to an individual RF chain and may transmit or receive distinct data streams. On the other hand, the 16T16R mMIMO antenna shown in the same figure is a hybrid beamformer. In this particular example, the antenna is organized into vertical subarrays of four antenna elements each. There are eight subarrays of each polarization, with a total of 16 independent subarrays. Each subarray relies on analog phase shifters to achieve downward tilt and is connected to an individual RF chain. The total number of RF chains required to drive this antenna is 16, defining its 16T16R mode of operation. However, to implement horizontal beam steering using this antenna, a few individual RF chains, connected to subarrays of the same polarization in a single row, have to transmit the same data, applying various phase shifts digitally. Hence, in the case of four subarrays used in horizontal beam steering, this particular organization of the 16T16R beamformer can offer four independent MIMO layers.

It is possible to use the same beamformer with wider horizontal beams and less precision in horizontal steering by reducing the number of RF chains for each beam to only two. This way, the same antenna can provide up to eight independent MIMO layers. The number of RF chains and MIMO layers is critical for proper radio access network dimensioning. Chapter 10, “Designing and Implementing 5G Network Architecture,” covers the topic of xHaul dimensioning in greater detail.
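The trade-off between beam precision and the number of layers reduces to simple division. The helper below is a deliberately simplified model with a hypothetical name; it only captures the counting argument from the text and ignores channel conditions and device capabilities, which also limit the usable number of layers in practice.

```python
def independent_layers(rf_chains, chains_per_beam):
    """Upper bound on MIMO layers when several RF chains form each beam."""
    if chains_per_beam <= 0 or rf_chains % chains_per_beam:
        raise ValueError("RF chains must divide evenly among beams")
    return rf_chains // chains_per_beam


print(independent_layers(4, 1))    # legacy 4T4R: every chain is its own layer
print(independent_layers(16, 4))   # 16T16R, four subarrays per beam
print(independent_layers(16, 2))   # 16T16R, two subarrays per beam (wider beams)
```

For dimensioning purposes, the same 16T16R hardware therefore has to be treated as a four-layer or an eight-layer system depending on how its RF chains are grouped.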

Some obvious benefits of mMIMO application in 5G networks include improved spectral efficiency, faster and more robust transmission, and energy efficiency among others. On the flip side, it can be challenging to implement with FDD, due to the lack of channel reciprocity, as explained in Chapter 2. Also, it might be expensive and hard to build mMIMO systems in mmWave bands.

Multi-Radio Connectivity

Strictly speaking, the transmission of subscriber data over multiple radios is not a new 5G feature. Ever since the introduction of MIMO, multiple radios have been used to transmit data over spatially diverse paths, but such transmissions normally utilize radios residing on the same cell site/tower and connected to the same BBU. Similarly, the carrier aggregation technique relies on multiple radios, and although it is possible to leverage remote radio units (RRUs) placed on different towers, these have to belong to the same eNodeB or its 5G equivalent, the gNodeB. In other words, there is only one RF scheduler, controlling the allocation of resource blocks over the air interface for a given mobile device.

Another example of multi-radio connectivity introduced in LTE Release 11 is Coordinated Multi-Point (CoMP) transmission.19 Substantial interference from the neighboring cells near the cell borders is always a serious challenge for mobile radio engineers and is traditionally addressed by using appropriate frequency reuse patterns. Restrictive frequency reuse patterns help to mitigate interference problems but dramatically reduce spectral efficiency. As explained in Chapter 3, LTE networks allowed moving away from strict allocations of frequencies in the whole cell by dividing cells into inner and outer parts. The same frequency channels can be used in the inner parts of the neighboring cells, yet the outer parts still have to follow stringent rules of frequency reuse patterns. One of the goals of the CoMP transmission technique is to solve this challenge. CoMP defines various scenarios ranging from coordination only within a single site, mainly between multiple sectors of the same eNB, to coordination between neighboring cells, which could be a combination of either macro-macro or macro-pico cells.

The basis of CoMP technology is the coordinated scheduling of radio resources used by neighboring radios or, in 3GPP terminology, transmission points (TPs). Multiple TPs can be represented by a collection of RRUs, which can be controlled by the same or different eNBs. In the latter case, the scheduling information must be exchanged over the X2 interface to achieve the desired cooperation across eNBs. Nevertheless, all coordinated transmissions are considered by the mobile device as if they are controlled by a single RF scheduler.

A few different approaches to implement radio resource scheduling by multiple TPs are defined under the CoMP umbrella:

Joint transmission: Multiple TPs can send the same symbols on the same frequency, for the same subscriber, and if coordinated properly, can greatly improve signal quality received by a mobile device. However, such coherent joint transmission requires very accurate coordination and, thus, is very sensitive to the latency between participating TPs and/or eNBs.

Coordinated scheduling/coordinated beamforming: Neighboring TPs coordinate the scheduling of transmissions on certain beams so that a low-gain point (null) of each TP’s radiation pattern points in the direction of a subscriber attached to a neighboring cell that participates in the coordination. The benefit of this approach is increased spectral efficiency, and it can also be combined with the joint transmission method.

Dynamic point selection: A transmission point sending the data to the subscriber can change every subframe, with one TP used at a time. Dynamic point selection enables reacting to quickly changing transmission conditions by changing the transmission point, thus improving overall reliability.

Figure 5-9 illustrates these CoMP approaches.

FIGURE 5-9 CoMP Transmission Scheduling Methods
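Of the three approaches, dynamic point selection is the easiest to express in code. The sketch below picks one transmission point per subframe; using the best reported SINR as the selection criterion, as well as the TP names and sample values, are assumptions made for illustration, since the text only says that the selection reacts to changing transmission conditions.

```python
def dynamic_point_selection(sinr_db_per_subframe):
    """For each subframe, pick the single TP with the best reported SINR."""
    return [max(report, key=report.get) for report in sinr_db_per_subframe]


# Hypothetical per-subframe SINR reports (dB) for a device near a cell edge.
reports = [
    {"TP-A": 12.0, "TP-B": 7.5},
    {"TP-A": 6.0, "TP-B": 9.0},
    {"TP-A": 3.5, "TP-B": 11.0},
]
print(dynamic_point_selection(reports))
```

Only one TP transmits to the device in any given subframe, but the serving point follows the radio conditions as the device moves away from TP-A and toward TP-B.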

CoMP transmission is an effective way to improve signal quality, reliability, and data rates at the edges of cells and in picocells within macrocells. CoMP techniques can be instrumental in improving signal robustness in 5G URLLC scenarios, preventing potential signal dropouts in complex industrial environments involving mission-critical applications of M2M communications. On the other hand, CoMP techniques impose tight latency and reliability requirements on backhaul for inter-eNB/gNB coordination. CoMP is generally considered to rely on an ideal backhaul and hence can greatly benefit from C-RAN architectures, where BBUs are co-located and have virtually no latency between them. Application of CoMP in 5G networks is an area of active research among 3GPP members.20

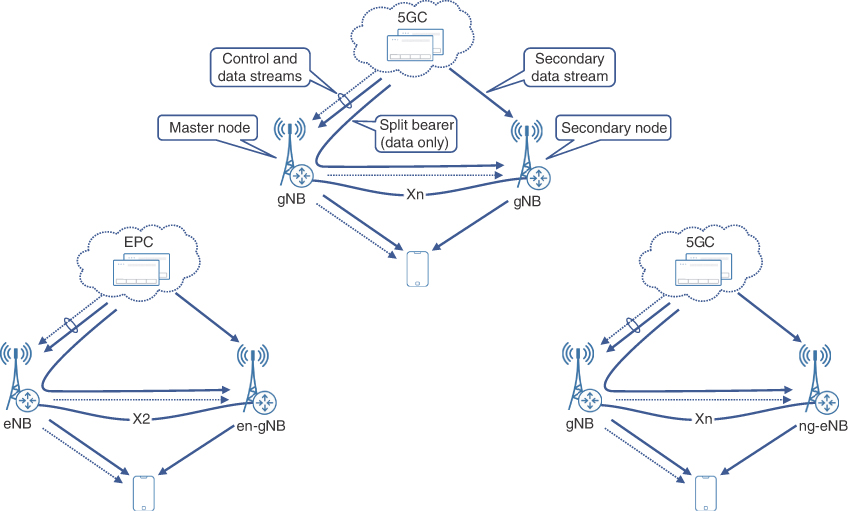

Dual connectivity is another form of multi-radio connectivity that was introduced for LTE in 3GPP Release 12. This approach was further generalized and expanded in Releases 15 and 16 and called Multi-Radio Dual Connectivity (MR-DC).21 Unlike CoMP, where coordination and scheduling happen using a single RF scheduler, MR-DC involves higher layers of the 5G protocol stack (covered in more detail in the upcoming sections). The RF schedulers therefore operate independently in the two participating RAN nodes, such as an eNB or gNB, which makes operation possible in non-ideal backhaul environments. However, this connectivity model requires mobile devices to support simultaneous communications with two separate eNBs/gNBs.

It is anticipated that current large mobile network deployments will coexist with 5G networks for quite some time and will require well-defined procedures to transition from the previous generation to a 5G network. Some of these deployment challenges can be addressed by choosing between standalone (SA) and non-standalone (NSA) 5G deployments; in the NSA case, 5G NR can communicate with the previous-generation packet core, the Evolved Packet Core (EPC).

MR-DC can operate in such heterogeneous environments and use:

en-gNB: A 5G NR RAN node, which is capable of establishing user-plane communication with EPC and works as a secondary node with the control plane established over the X2 interface via the main eNB.

ng-eNB: An LTE RAN node, which can establish control- and user-plane communication with 5G Core. These nodes also establish communication with gNB nodes over 5G’s Xn interface.

MR-DC defines two roles for RAN nodes: master node (MN) and secondary node (SN). MN is responsible for all regular tasks of a typical eNB or gNB in a single connectivity deployment; that is, it establishes both control- and data-plane connections with the mobile core, controls data transfers, and schedules radio resources. Additionally, MN establishes a control-plane connection with the SN over the X2 interface in the case of eNB—or its equivalent, Xn interface, in the case of gNB. The secondary node also establishes a user-plane connection with the mobile core, allowing both the MN and SN to schedule their own radio resources separately, through the use of respective radio bearers. However, 3GPP defines another way of delivering user data, through the use of a split bearer. This mechanism allows the bearer to be terminated at the MN, while pooling the radio resources of both the MN and SN. The user data stream is then split between the MN and SN and is transported over the X2/Xn interface between these two RAN nodes. The use of split bearers can result in a significant amount of user-plane data exchanged over X2/Xn interfaces.
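To get a feel for how much user-plane traffic a split bearer can push across the X2/Xn interface, consider the rough sketch below. It assumes the MN divides downlink traffic between the two radio links in proportion to their capacity; that rule, the function name, and all rates are illustrative assumptions rather than 3GPP-specified behavior.

```python
def split_bearer_load(total_mbps: float, mn_capacity_mbps: float,
                      sn_capacity_mbps: float) -> dict:
    """Divide a split bearer's downlink traffic between MN and SN radios.

    The bearer terminates at the MN, so whatever share is sent through
    the SN radio must first cross the X2/Xn interface.
    The proportional-to-capacity rule is an assumption for illustration.
    """
    if min(total_mbps, mn_capacity_mbps, sn_capacity_mbps) < 0:
        raise ValueError("rates must be non-negative")
    pooled = mn_capacity_mbps + sn_capacity_mbps
    if pooled == 0:
        raise ValueError("no radio capacity available")
    carried = min(total_mbps, pooled)  # cannot exceed pooled radio capacity
    via_sn = carried * sn_capacity_mbps / pooled
    return {
        "via_mn_radio_mbps": carried - via_sn,
        "via_sn_radio_mbps": via_sn,
        "x2_xn_user_plane_mbps": via_sn,  # same traffic, seen on the transport
    }


# Hypothetical case: an LTE master node with an NR secondary node.
load = split_bearer_load(total_mbps=600, mn_capacity_mbps=200,
                         sn_capacity_mbps=800)
print(load)
```

With these made-up numbers, most of the bearer's traffic is delivered by the SN radio, so most of it also traverses X2/Xn. This is why the transport between the two RAN nodes has to be dimensioned with split bearers in mind.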

Note

Use of the term master is only in association with the official terminology used in industry specifications and standards, and in no way diminishes Pearson’s commitment to promoting diversity, equity, and inclusion and challenging, countering, and/or combating bias and stereotyping in the global population of the learners we serve.

A number of different permutations for dual connectivity are specified by 3GPP, such as whether RAN nodes connect to 5G Core or to legacy EPC, if a gNB or an eNB is a master node, and what is being used as a secondary node. Figure 5-10 shows a few options of using MR-DC with EPC and 5G Core (5GC) network.

FIGURE 5-10 Multi-Radio Dual Connectivity Options

It is important to note that carrier aggregation can be used along with MR-DC connectivity options; however, the number of component carriers aggregated over two cells or cell groups cannot exceed 32, which is defined as the maximum number of component carriers in 5G NR.22

Dynamic Spectrum Sharing

Transition to a newer radio access technology is always a challenging and complex endeavor. Thanks to 5G NR capabilities to communicate with EPC, it is possible to implement gradual deployment of 5G NR technology. Yet, this provides little relief to the spectrum repurposing aspect of this transition.

Spectrum repurposing, or reallocation of bands and frequencies from older to newer radio access technology, can be complicated, especially in the areas of high traffic. In a simple and direct approach, the newer radio access technology uses a separate set of frequencies, when coexisting with the older technology. Although it might be a feasible approach to deploy 5G NR using high bands, as these are not used by LTE, it requires much more planning when low and mid bands have to be reused. The low- and mid-band frequencies are typically a very scarce resource, as these provide the sweet spot between capacity and optimal propagation. Providing 5G radios with their own set of mid- and low-band channels typically requires carving those channels out of current LTE allocations. This task requires careful resource planning by radio engineers, as it is not easy to find an optimal balance between 5G and 4G resources. Repurposing spectrum statically could result in an imbalance, where the previous generation users might not get enough RF resources, while resources assigned to another radio access technology are wasted in the absence of a substantial number of subscribers.

Dynamic spectrum sharing (DSS) helps to avoid such suboptimal scenarios and offers flexible distribution of radio resources, adjusting to the fluid ratio between 5G and 4G subscribers in a cell.

DSS leverages the fact that both LTE and NR use OFDM and can be deployed with the same subcarrier frequency spacing and symbol duration. In fact, NR was designed with such interoperability in mind. In DSS setup, LTE and NR radio nodes, serving the same cell, rely on coordination between their radio resource schedulers, such that resource blocks belonging to the same channel can be used by one of these radios, as needed. This allows the use of the same channel on both radios, without subdividing for hard allocations to a particular radio: NR or LTE.
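The contrast with a static split can be sketched in a few lines. The example below is a simplified illustration, assuming the two schedulers exchange their resource block (RB) demand for each scheduling interval and divide a shared channel accordingly; the `share_resource_blocks` function and the demand figures are hypothetical, and the actual coordination interface is vendor specific.

```python
def share_resource_blocks(total_rbs: int, lte_demand: int,
                          nr_demand: int) -> tuple:
    """Split a shared channel's resource blocks between LTE and NR.

    Demands are the RBs each scheduler would like in this interval.
    If the total demand fits, each radio gets what it asked for;
    otherwise the channel is divided in proportion to demand.
    Simplification: signals that must always be transmitted are ignored.
    """
    if total_rbs < 0 or lte_demand < 0 or nr_demand < 0:
        raise ValueError("RB counts must be non-negative")
    demand = lte_demand + nr_demand
    if demand <= total_rbs:
        return lte_demand, nr_demand
    lte_rbs = round(total_rbs * lte_demand / demand)
    return lte_rbs, total_rbs - lte_rbs


# Hypothetical 100-RB channel over three intervals as users migrate to NR.
for lte, nr in [(150, 50), (80, 20), (30, 90)]:
    print((lte, nr), "->", share_resource_blocks(100, lte, nr))
```

A static 50/50 allocation would leave 20 RBs idle on the LTE side in the last interval while NR users are starved; the dynamic split hands those RBs to NR instead.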

DSS is an area of active research in 3GPP.23 Most current deployments rely on a proprietary interface between the eNB and gNB for scheduler coordination, thus limiting the choice of RAN node vendors to match those already deployed.24

Vehicle-to-Everything Communication

Originally introduced as an LTE study item, Cellular Vehicle-to-Everything, or C-V2X, became a standard in Release 14 and was adopted for 5G NR implementations in Release 16.25, 26 C-V2X communication is aimed at providing additional communication channels between vehicles, and between vehicles and other road users as well as parts of road infrastructure.

C-V2X uses a concept of a sidelink, effectively turning a vehicle into a RAN node by extending connection from nearby eNB/gNB to other mobile devices. It is also possible for a single vehicle to coordinate direct communications between a group of vehicles, thus creating a mesh of direct interconnections, enabling robust communication between multiple vehicles and a network.

The Vehicle-to-Everything communication approach encompasses a number of different use cases: Vehicle-to-Network (V2N), Vehicle-to-Vehicle (V2V), Vehicle-to-Infrastructure (V2I), and Vehicle-to-Pedestrian (V2P). Vehicles communicating with each other and with the road infrastructure may coordinate different parameters, such as speed, lane selection, and even path, based on dynamic conditions on the road. Such communication and coordination make transportation much more effective and safer, especially in the case of self-driving cars. New safety features, such as “Do not pass warning,” can be a result of V2V communication, warning a driver or a self-driving car about the upcoming traffic on non-divided highways.27 All these features require strict coordination and impose new and stringent requirements on the underlying transport network, addressed by the implementation of URLLC-type services. C-V2X communications can also improve safety of pedestrians by warning nearby cars about their presence, complementing obstacle-detection systems currently adopted by some vehicles.

C-V2X communications standards and implementations are still in their infancy, but this is an area of active research and growth. It is anticipated that vehicular communications will become widely adopted and enable future innovations in transportation.

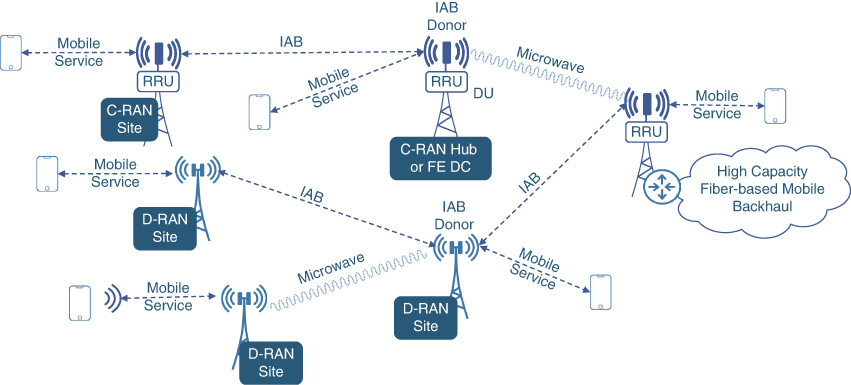

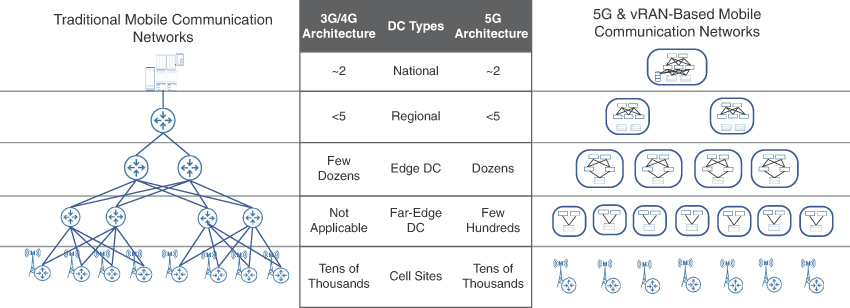

RAN Virtualization and Decomposition

Centralized RAN (C-RAN) architecture was proposed around 2010 by China Mobile Research Institute, in collaboration with hardware vendors such as Intel, ZTE, Nokia, Huawei, among others.28 It was originally geared toward offering a sustainable green solution for RAN, while offering cost savings through BBU pooling at a centralized location. It had been shown that cell sites are responsible for more than 70% of overall power consumption in a mobile service provider’s network, with over 50% of each cell site’s power consumption going toward air conditioning.29 The C-RAN cost reductions come from a leaner cell site footprint, significantly lower cooling and power costs, and lower maintenance costs due to reduced truck rolls to every cell site in case of BBU issues. Although C-RAN started primarily as a green initiative, the BBU pooling offered other advantages such as improvement in RAN utilization through increased spectral efficiency, reduced interchannel interference, and simplified use of CoMP transmission.

Despite these benefits of real estate and power savings as well as RAN efficiency that mobile operators could realize by using C-RAN architectures, they still had to deploy the same number of these chassis-based BBUs and would not be able to optimize BBU usage across cell sites. This was because the BBU was still a purpose-built, vendor-specific, physical hardware and was allocated to its specific cell site. Based on the subscriber load, some BBUs might be underutilized while others might be oversubscribed, depending on factors such as the cell site location they are serving and time of the day. The net result was the pooling of physical BBUs in a central location, but not the sharing of baseband-processing resources across cell sites. The transition toward the virtualization of RAN components offered a solution to this inefficiency.
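The difference between pooling physical BBUs and actually sharing baseband resources can be made concrete with a small calculation. The sketch below compares the capacity needed when every site is sized for its own peak against the capacity needed when sites draw from one shared pool. The load profiles are invented for illustration, and load is expressed in arbitrary units.

```python
def required_capacity(load_per_site):
    """Compare dedicated versus shared baseband capacity.

    load_per_site: list of per-site load lists, one value per time
    period (all sites must cover the same periods).
    Returns (dedicated, shared) capacity in the same arbitrary units.
    """
    # Dedicated BBUs: each site must be sized for its own peak.
    dedicated = sum(max(site) for site in load_per_site)
    # Shared pool: sized only for the peak of the combined load.
    shared = max(sum(period) for period in zip(*load_per_site))
    return dedicated, shared


# Hypothetical profiles: a business district peaks mid-day,
# a residential area peaks in the morning and evening.
business = [20, 90, 80, 30]
residential = [70, 20, 30, 85]
dedicated, shared = required_capacity([business, residential])
print(dedicated, shared)
```

Because the two sites peak at different times, the shared pool needs noticeably less total capacity than the sum of the individual peaks. Physical, per-site BBUs cannot exploit this even when they sit in the same room.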

Centralized, Cloud, and Virtualized RAN

Network Functions Virtualization (NFV) was initially proposed in 2012 (around the same time as 3GPP Release 11) by a consortium of service providers as a solution for cost and complexities associated with vendor-locked hardware.30 The solution proposed through NFV was to decouple the hardware and software layers and, by using virtualization techniques, remove the vendor lock-in for devices performing network functions. Dynamic scalability, deployment velocity, and easy upgradability through use of orchestration and automation tools were also among the goals of NFV.

General-purpose compute functions were the first to be virtualized and decoupled from hardware. Soon after, new networking startups started offering virtual networking functions (VNFs) for various networking roles such as firewall, NAT, and route reflectors. These initial VNF implementations were predominantly focused on functions that relied heavily on software processes; functions that depended more on the hardware took longer to be virtualized. BBU functions were also considered for virtualization and dubbed virtual BBU (vBBU).

New RAN equipment vendors such as Altiostar, COTS hardware vendors such as Dell, and silicon providers such as Intel partnered to offer vBBU solutions. Though it was demonstrated that vBBU would offer many benefits over specialized hardware, it was not commonly deployed as a single VNF but rather split into two RAN components—the distributed unit (DU) and the centralized unit (CU), which are discussed in detail in the next section.

The effort to drive RAN architecture toward the use of software-based, virtualized RAN components was given the name Virtual RAN (vRAN). The vRAN architectures gained industry momentum around the time when virtualization of applications was often equated with a transition to cloud-based environment. This perception led to the terms Virtual RAN and Cloud RAN being used interchangeably. Some vendors preferred the term Cloud RAN, whereas others opted to use vRAN terminology. More recently, however, a clear distinction has started to emerge between the two terminologies and the underlying architecture they represent. Virtual RAN (vRAN) now refers to the generic drive towards the use of software-based RAN components instead of physical hardware such as the BBU. On the other hand, Cloud RAN is now considered a subset of vRAN where the software-based RAN components are designed to be cloud-native. Later sections of this chapter will expand on the characteristics of cloud-native applications.

Note that the term Cloud RAN can also be abbreviated as C-RAN, which can easily be confused with Centralized RAN. Due to the similar abbreviations, and a lack of clear definitions, casual industry observers sometimes use the terms Centralized RAN and Cloud RAN interchangeably, which is not accurate. Centralized RAN refers to an architecture where the BBU or its decomposed RAN components (that is, the CU and DU) are moved away from the cell site and placed in one or more central locations. Cloud RAN, as mentioned above, is a subset of vRAN, and refers to an implementation where the RAN components are virtualized to be cloud-native. The virtualized RAN components (whether for vRAN or Cloud RAN) are referred to as virtualized CU (vCU) and virtualized DU (vDU), and could be placed at the cell site (that is, a D-RAN architecture) or in one or more DCs (that is, a Centralized RAN architecture).

It is helpful to think of Distributed/Centralized and Virtual/Cloud RAN architectures as orthogonal dimensions. Distributed or centralized RAN deployments could use physical RAN components (for example, a BBU or CU and DU appliance) or software-based RAN components (that is, a vRAN or Cloud RAN deployment). As mentioned previously, the software-based RAN components are usually decomposed into a vCU and vDU. Similarly, a vRAN deployment (including Cloud RAN deployment) could use a Distributed RAN model (where RAN components are distributed across Cell Sites) or a Centralized RAN model (where RAN components, that is, the CU and/or DU are placed in one or more Data Centers).

This book will use the term vRAN when referring to the architecture pertaining to software-based RAN components, which implicitly covers Cloud-RAN as well. The terms Centralized RAN and Cloud RAN will not be abbreviated from this point on to avoid any confusion. The term decomposed RAN will be used to refer to splitting of BBU into a CU and DU, which may or may not be virtualized and may be placed at the cell site, remotely at a data center, or a combination of the two. The placement of decomposed RAN components is discussed in the next section.

Virtualized RAN (vRAN) Architecture

A BBU performs a great deal of specialized digital signal processing, converting radio traffic from the RU into IP, and vice versa. The functions performed by the BBU can be classified into two broad categories: real-time and non-real-time. Real-time functions include scheduling, interference coordination, MIMO precoding and beamforming, modulation, and error correction, while non-real-time functions include bearer configuration, connection management, subscriber handovers, data encryption, header compression, and various operations, administration, and maintenance (OAM) tasks. In short, BBU tasks associated with direct traffic processing and forwarding are considered real-time functions, whereas management and control-related functions are classified as non-real-time.

Redesigning the BBU with its functions split across two separate entities based on this classification made it easier to virtualize. This was further supported by the performance improvements of available COTS hardware. The real-time functions are grouped in the DU, whereas the non-real-time functions are grouped in the CU. The decomposed vRAN architecture, that is, the virtualized CU and DU, is quickly becoming the go-to solution for new RAN deployments.

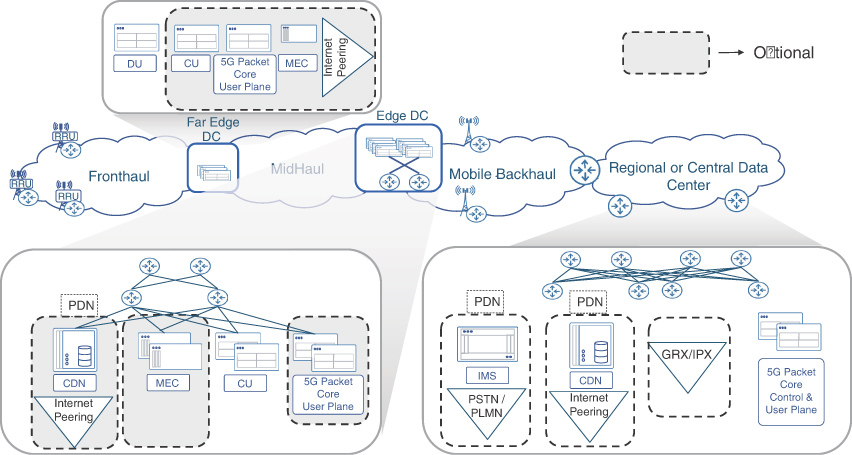

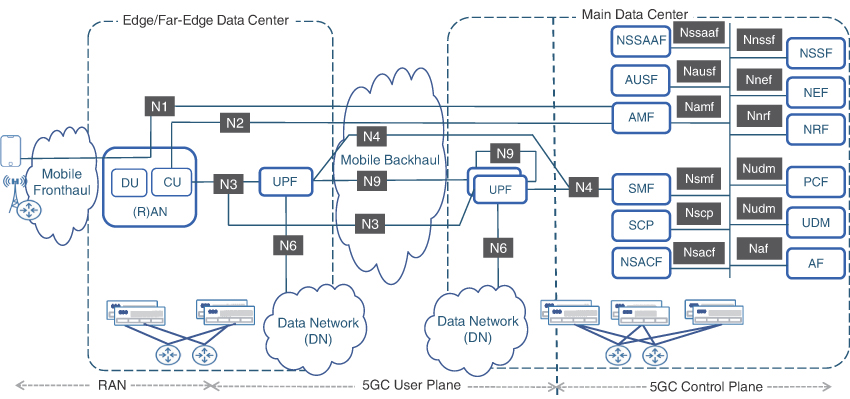

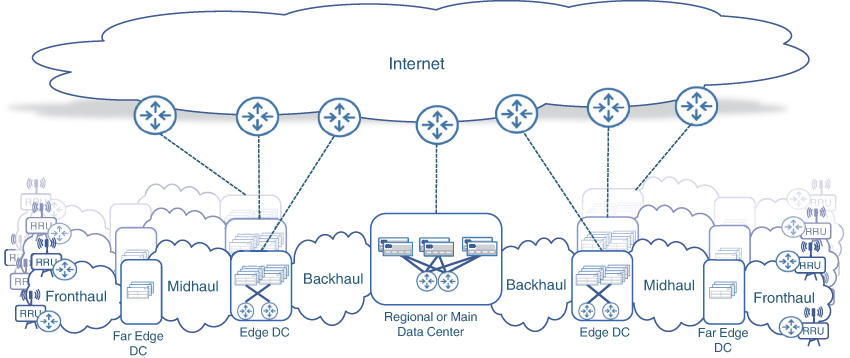

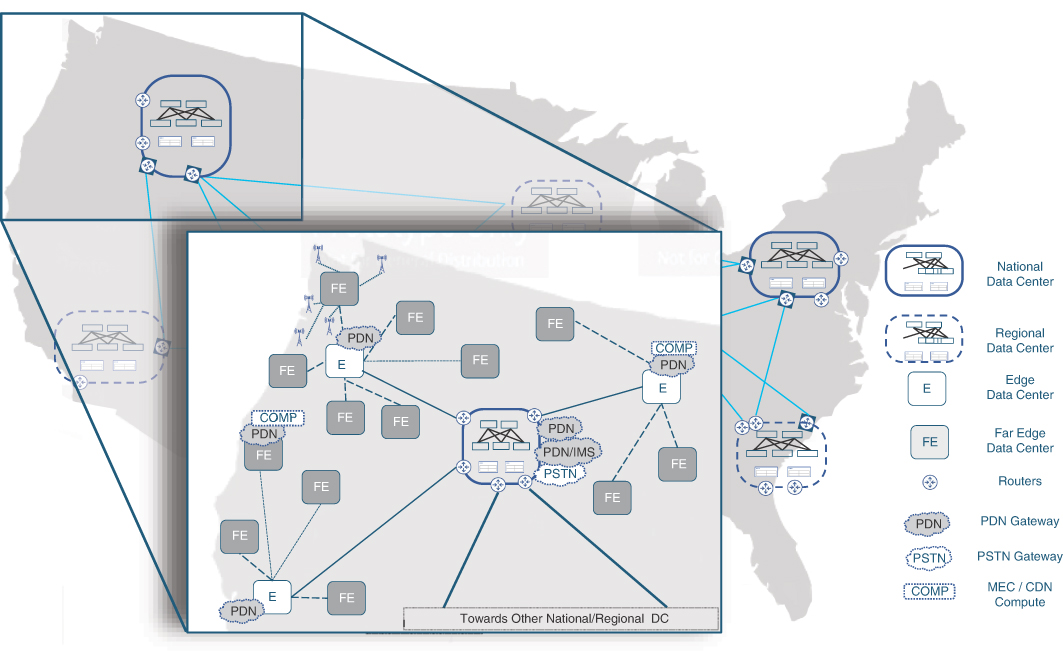

Given that the DU would be required to perform most real-time functions, it needs to be placed close to the cell sites to ensure low-latency communications with the RRU. Hence, the DUs should be hosted at the far edges of a mobile communication network, closer to the cell sites. The data center hosting the DU functions, depending on the number of cell sites it services, could be composed of just a few servers connected to the aggregation routers or a few racks of equipment. In a vRAN architecture, this DC is referred to as a far-edge data center. The transport network connecting the cell sites and the far-edge DC is still called the fronthaul, with CPRI-based RU to DU communications that could use either packet-based or WDM-based transport, as previously described in Chapter 3. Newer RAN components may use an enhanced version of CPRI, called eCPRI, which is discussed later in this chapter. As such, a fronthaul network might need to carry both CPRI and eCPRI traffic.

CU, on the other hand, is responsible for non-real-time functions and could tolerate higher latency compared to the RU-DU connectivity. So even though CU is part of the RAN infrastructure, it can be placed further from the RU compared to the DU. The CUs could be pooled in edge data centers that are placed farther away from the cell sites in comparison with a far-edge DC, but still much closer than a traditional centralized DC. The network that connects the CUs to DUs is called the midhaul. The umbrella term xHaul, which was previously used for a combination of backhaul and fronthaul networks in Centralized RAN, is also used to refer to midhaul. Figure 5-11 shows the traditional D-RAN, present-day Centralized RAN, and the next generation vRAN architectures and their corresponding xHaul domains.

FIGURE 5-11 Distributed, Centralized, and Virtualized RAN

Table 5-1 outlines the latency requirements and typical distance allowed for fronthaul, midhaul, and backhaul networks.31, 32

TABLE 5-1 xHaul Networks and Their Characteristics

| xHaul Domain | Purpose | Max One-Way Latency* | Max/Typical Distance |
|---|---|---|---|
| Fronthaul | RU–DU (5G)/BBU (4G) connectivity | ~100 µs (4G/LTE); ~150 µs (5G) | 20/10 km |
| Midhaul | DU–CU connectivity | A few milliseconds | 200/40–100 km |
| Backhaul | BBU (4G)/CU (5G)–5GC connectivity | A few milliseconds | 200/40–100 km |

* These numbers are guidelines by various standard bodies. Exact latency tolerance between RAN components should be provided by the equipment vendor and must be validated in test labs before deployment.
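The relationship between a latency budget and the distance it permits can be approximated with simple arithmetic. The sketch below assumes a propagation delay of roughly 5 µs per kilometer of optical fiber, a common rule of thumb that is not taken from the table, and treats any equipment delay as a separate, caller-supplied figure.

```python
FIBER_DELAY_US_PER_KM = 5.0  # rule-of-thumb assumption for optical fiber


def max_fiber_distance_km(one_way_budget_us: float,
                          equipment_delay_us: float = 0.0) -> float:
    """Estimate the fiber length that fits in a one-way latency budget.

    equipment_delay_us covers switching, queuing, and processing along
    the path; whatever remains is available for propagation.
    """
    remaining = one_way_budget_us - equipment_delay_us
    if remaining < 0:
        raise ValueError("equipment delay alone exceeds the latency budget")
    return remaining / FIBER_DELAY_US_PER_KM


print(max_fiber_distance_km(100))        # 100 µs budget, fiber only
print(max_fiber_distance_km(150, 50))    # 150 µs budget, 50 µs in equipment
print(max_fiber_distance_km(1000))       # 1 ms budget, fiber only
```

Under this assumption, a 100 µs budget spent entirely on propagation corresponds to 20 km, which lines up with the fronthaul maximum in Table 5-1. Any delay added by transport equipment shortens the usable distance, which is one reason typical distances are well below the maximum.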

An xHaul implementation in vRAN can use any combination of fronthaul, midhaul, and backhaul networks, depending on the placement of the CU and DU relative to the RU. For instance, the DU and CU can both be co-located, but away from the cell site, in which case only a fronthaul network exists between the RU and the data center hosting the DU and CU. In other instances, the DU might be placed at the cell site with the RU. In this case, the fronthaul is collapsed within the cell site, while a midhaul network connects the cell site to the CU hosted in the edge DC. Another example of a co-located RU and DU is the Active Antenna System for mmWave bands, where the DU is integrated into the RU and antenna panel. This allows all real-time functions to be performed at the cell site, thus eliminating the need for a fronthaul network. Lastly, deploying the physical or virtual BBU, or its decomposed components (DU and CU), at the cell sites results in a traditional D-RAN-like architecture with a backhaul network connecting the cell site containing both the DU and CU to the 5G Core network. Figure 5-12 illustrates these various deployment models.

FIGURE 5-12 DU and CU Placement in Various vRAN Deployment Models
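The placement rules described above reduce to a small decision procedure. The following sketch derives the xHaul segments from where the DU and CU are hosted; the `Site` enumeration and `xhaul_segments` function are hypothetical names, and the sketch assumes the 5G Core is hosted elsewhere so that a backhaul segment toward it always exists.

```python
from enum import Enum


class Site(Enum):
    """Hosting locations, ordered by increasing distance from the RU."""
    CELL_SITE = 0
    FAR_EDGE_DC = 1
    EDGE_DC = 2


def xhaul_segments(du: Site, cu: Site) -> list:
    """Return the transport segments between sites, from RU toward core."""
    if cu.value < du.value:
        raise ValueError("the CU cannot sit closer to the RU than the DU")
    segments = []
    if du is not Site.CELL_SITE:
        segments.append("fronthaul")  # RU and DU are in different locations
    if cu is not du:
        segments.append("midhaul")    # DU and CU are in different locations
    segments.append("backhaul")       # CU toward the 5G Core (assumed remote)
    return segments


print(xhaul_segments(Site.FAR_EDGE_DC, Site.EDGE_DC))  # fully decomposed
print(xhaul_segments(Site.CELL_SITE, Site.EDGE_DC))    # DU at the cell site
print(xhaul_segments(Site.CELL_SITE, Site.CELL_SITE))  # D-RAN-like
```

Each call mirrors one of the deployment models in the text: a collapsed fronthaul simply disappears from the list, and co-locating the DU and CU removes the midhaul.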

When a transformation to vRAN is being planned, there might be instances where a C-RAN hub could be rebranded as either an edge or far-edge DC, depending on its location and distance from existing or newly installed cell sites. In other cases, as cellular networks and cell sites continue to grow, there may be a need for new data centers. In a vRAN environment, edge DCs are typically fewer in number than far-edge DCs and, thus, CUs located in these edge DCs may provide services to cell sites over a larger geographical area. The edge DCs also tend to be larger than far-edge DCs, resembling a traditional data center spine-leaf architecture, and could be used to house additional components, not just the CU. There typically is a one-to-many relationship between the CUs and DUs, and both these functions are part of the 5G base station, known as gNodeB (gNB).

gNodeB

NodeB in 3G and evolved NodeB (eNodeB or eNB) in 4G LTE have been responsible for terminating the subscriber’s air interface and performing baseband traffic processing. In 5G networks, this functionality is performed by a gNodeB (gNB), short for next-generation NodeB, where n is simply omitted for better readability. There are fundamental architectural differences between the gNB and its predecessors, most notable being the decomposition of BBU functions. Next Generation RAN (NG-RAN) architecture, originally introduced in 3GPP Release 14 and refined in subsequent releases, conceptualizes the gNB architecture where the functions of BBU are split into the centralized unit (CU) and distributed unit (DU). 3GPP Release 15 officially named these functions the gNB-CU and the gNB-DU.

As per 3GPP specifications, a gNB can contain one gNB-CU and one or more gNB-DUs. 3GPP does not limit the number of DUs in a gNB but rather leaves it up to individual deployments. The number of gNB-DUs in a gNB is typically a derivative of processing capabilities and resources available on the gNB-CU.

The DUs connect to the CU using an F1 interface, while the CU connects to the 5G Core using NG interfaces, a collective name for the interfaces from 5G RAN to 5G Core. Various NG interfaces are discussed in the “5G Core Network” section.

An Xn-C interface, equivalent of an X2 interface in 4G LTE, is used for connectivity between gNBs and terminates on the gNB-CU. It must be reiterated that all these interfaces, like other 3GPP-defined interfaces, are logical in nature and use the underlying network infrastructure to establish connectivity.

For better scale and more granular separation of functions, the gNB-CU can be further decomposed into gNB-CU-CP (control plane) and gNB-CU-UP (user plane). The gNB-CU-CP is primarily responsible for control and management tasks of the gNB-CU’s functions, such as selecting the appropriate gNB-CU-UP for user data and establishing an F1 interface connection between the gNB-CU-UP and gNB-DU. On the other hand, gNB-CU-UP transports user data between the gNB-DU and gNB-CU and provides functions aimed at improving data speed, efficiency, and reliability through data retransmission in case of radio link outage, status reporting, and redundant data discarding.

A gNB-CU can consist of a single gNB-CU-CP instance and one or more gNB-CU-UP instance(s), thus allowing both the control and user planes to scale and operate independently of each other. The gNB-CU-CP and its corresponding gNB-CU-UPs communicate through the E1 interface. The F1 interface between the DU and CU is subdivided into F1-Control Plane (F1-CP) and F1-User Plane (F1-UP) to provide connection from gNB-DU to gNB-CU-CP and gNB-CU-UP, respectively. A gNB-DU could connect to a single gNB-CU-CP and one or multiple gNB-CU-UPs, as long as all those gNB-CU-UPs are managed by the same gNB-CU-CP. Figure 5-13 provides an overview of these various gNB components and interfaces.34

FIGURE 5-13 gNodeB Components and Interfaces
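The composition rules for a gNB can be captured in a small data model. The sketch below is only an illustration of the relationships described above (one gNB-CU-CP, one or more gNB-CU-UPs, one or more gNB-DUs, and DUs restricted to CU-UPs managed by their own CU-CP); the class names, field names, and instance names are hypothetical and do not correspond to any 3GPP-defined data structure.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CuUp:
    name: str
    managed_by: str  # name of the gNB-CU-CP controlling it over E1


@dataclass
class Du:
    name: str
    cu_cp: str  # control-plane peer over F1
    cu_ups: List[str] = field(default_factory=list)  # user-plane peers over F1


@dataclass
class GNodeB:
    cu_cp: str  # a gNB-CU has a single gNB-CU-CP
    cu_ups: List[CuUp]
    dus: List[Du]

    def validate(self) -> List[str]:
        """Return a list of rule violations (empty if consistent)."""
        errors = []
        if not self.cu_ups:
            errors.append("a gNB-CU needs at least one gNB-CU-UP")
        if not self.dus:
            errors.append("a gNB needs at least one gNB-DU")
        managed = set()
        for up in self.cu_ups:
            if up.managed_by == self.cu_cp:
                managed.add(up.name)
            else:
                errors.append(f"{up.name} is not managed by {self.cu_cp}")
        for du in self.dus:
            if du.cu_cp != self.cu_cp:
                errors.append(f"{du.name} is attached to {du.cu_cp}, "
                              f"not {self.cu_cp}")
            for up_name in du.cu_ups:
                if up_name not in managed:
                    errors.append(f"{du.name} uses {up_name}, which "
                                  f"{self.cu_cp} does not manage")
        return errors


gnb = GNodeB(
    cu_cp="cu-cp-1",
    cu_ups=[CuUp("cu-up-1", "cu-cp-1"), CuUp("cu-up-2", "cu-cp-1")],
    dus=[Du("du-1", "cu-cp-1", ["cu-up-1"]),
         Du("du-2", "cu-cp-1", ["cu-up-1", "cu-up-2"])],
)
print(gnb.validate())  # an empty list means no rule was violated
```

Modeling the control and user planes as separate objects reflects the point of the decomposition: CU-UP instances can be added or removed without touching the single CU-CP that manages them.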

Together, the gNB-DU and the gNB-CU (along with the RRU) make up the gNB in the Next Generation RAN (NG-RAN) architecture. However, exactly how the BBU functions are split between the CU and DU has been a topic of intense discussion in various standardization bodies and organizations. As these splits have significant implications on the overall network architecture, the next section will discuss the various BBU functional split options across the RU, DU, and CU.

Understanding the RAN Functional Splits

The relocation of the baseband unit (BBU) from the cell site to a centralized location was the beginning of RAN decomposition in mobile networks that was further accelerated with the introduction of BBU functional splits. The CU and DU, or rather their more commonly used virtualized versions, are an integral part of current and future vRAN deployments. The capabilities of CU and DU depend on the functional split option implemented. The functional split option, more commonly called the split option, refers to precisely how the 5G protocol stack gets divided between the CU and the DU, and whether or not some of the baseband processing is offloaded to the RU. To understand the split options, it’s useful to first understand the functions performed by the BBU at various layers of the 5G protocol stack.

5G Protocol Stack Overview

The 5G protocol stack consists of three layers, which can be loosely correlated, though not directly mapped, with the bottom three layers of the OSI model.35, 36 Layers in the 5G protocol stack are mostly similar to those in 4G, but the tasks performed at each layer have been enhanced and new functions added to support 5G services. As shown in Figure 5-14, the lowest layer of the 5G protocol stack is called the physical layer (PHY). Layer 2 is further subdivided into the Media Access Control (MAC), Radio Link Control (RLC), Packet Data Convergence Protocol (PDCP), and Service Data Adaptation Protocol (SDAP) layers. The Radio Resource Control (RRC) and IP encapsulated PDCP frames make up Layer 3 of the 5G protocol stack.

FIGURE 5-14 5G Protocol Stack and Functions Performed by BBU

The physical layer (PHY) performs functions such as upstream and downstream link adaptation, modulation and encoding, signal power adjustment, and assisting user equipment in initial cell search and signal acquisition. The physical layer defines the frame format based on the FDD or TDD duplexing schema and the use of appropriate encoding—OFDMA in the downlink and SC-FDMA for the uplink—which have previously been discussed in detail. The physical layer is responsible for assisting in advanced antenna functions such as MIMO layer processing, beamforming, and transmission diversity management.37

The MAC layer, which is a sub-layer of Layer 2, is responsible for scheduling, prioritizing, multiplexing, and demultiplexing data to and from the mobile devices. 5G’s MAC layer has also been enhanced to support additional physical layer features such as beamforming and transmission diversity. Data integrity verification and error correction through retransmissions or Forward Error Correction (FEC) are also done at the MAC layer.

The RLC layer, also part of Layer 2, is primarily responsible for segmentation, reassembly, and buffering of protocol data units (PDUs). In certain modes of operation, the RLC sub-layer might require acknowledgment of receipt from the remote device, thereby adding transmission overhead but providing additional capabilities such as duplicate traffic detection and protocol-level error correction.38

The third sub-layer within Layer 2, the PDCP layer, is responsible for the actual data transmission for both the control-plane and user-plane traffic over the radio bearers. This sub-layer also provides data security and integrity through encryption as well as header compression and duplicate traffic detection. The PDCP sub-layer may customize processing for various 5G service types (for example, header compression and decompression may not be implemented for URLLC services to reduce latency).

In 4G LTE implementation, traffic from the PDCP sub-layer is passed on as IP data to the packet core. 5G specifications, however, add another sub-layer called SDAP in the protocol stack. SDAP’s sole purpose is to build upon the existing QoS capabilities. It provides QoS for user-plane traffic by mapping a traffic flow to a radio bearer based on its requirements.39 Layer 3 of the 5G protocol stack is the RRC layer, which is primarily focused on management function and does not explicitly participate in traffic forwarding. RRC is responsible for establishing, maintaining, and releasing radio bearers (both signaling and data bearers). Mobility functions such as mobile device handover and cell-selection functions are managed by the RRC layer as well. RRC also closes the feedback loop with the PHY layer by analyzing measurements of radio signals and ensuring appropriate resources are allocated on the air interface.

Based on the tasks and functions performed by each individual layer, it is easy to infer that lower layers such as physical, MAC, and RLC are responsible for more real-time functions, such as encoding, scheduling, segmentation, and reassembly, that require near constant communication with the RRU. On the other hand, the higher layers—PDCP, SDAP, and RRC—are involved with non-real-time tasks such as data compression, encryption, and configuration management.

This clear distinction forms the basis of the CU-DU functional splits, where the real-time functions (performed by the lower layers, that is, PHY, MAC, and RLC) are delegated to the DU, whereas the non-real-time functions of the PDCP and upper layers are assigned to the CU. In practice, additional variations of both the high-layer and low-layer splits were introduced, as explored in the next section.
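The basic division of labor can be written down as a simple lookup. The sketch below assigns each protocol layer to the DU or CU according to the real-time versus non-real-time distinction made above; the names `LAYERS`, `REAL_TIME`, and `place_layers` are illustrative, and the list order is for presentation only.

```python
# Layers of the 5G protocol stack as named in the text, listed bottom-up.
LAYERS = ["PHY", "MAC", "RLC", "PDCP", "SDAP", "RRC"]

# Layers the text associates with real-time processing.
REAL_TIME = {"PHY", "MAC", "RLC"}


def place_layers(layers=LAYERS, real_time=REAL_TIME) -> dict:
    """Group layers by hosting unit: real-time to the DU, the rest to the CU."""
    placement = {"DU": [], "CU": []}
    for layer in layers:
        unit = "DU" if layer in real_time else "CU"
        placement[unit].append(layer)
    return placement


placement = place_layers()
print(placement)
```

Passing a different `real_time` set moves the boundary between the two units, which is essentially what choosing a different functional split does.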

Split Options

RAN has historically been a closed, proprietary system where RAN equipment vendors would engineer their products as they saw fit. There is widespread agreement among standards bodies, equipment vendors, and mobile network operators regarding the benefits of a decomposed vRAN architecture and the need for a CU and DU functional split, but there are also different opinions on how to split the BBU functionality between the two. Given the complexities of the tasks performed by the BBU and the closed nature of the RAN ecosystem, 3GPP defined multiple split options in Release 14. This allowed the RAN equipment vendors the flexibility to implement the BBU functional split based on their individual product architecture, while still adhering to the 3GPP specifications. These split options, aptly named Options 1 through 8, provide a possibility to split the BBU functionality into CU and DU at every individual layer in the 5G protocol stack and, in some cases, within the layer itself, as shown in Figure 5-15. It’s worth noting that some split options, such as Options 2, 3, and 7, have multiple sub-split options; however, Figure 5-15 shows only the sub-splits for Option 7, which is more commonly used in the industry.40

FIGURE 5-15 3GPP-Defined BBU Functional Split Options

The BBU functionality is split between the CU and DU based on the split option chosen by the RAN equipment manufacturer. Each of the split options represents a deliberate decision on how processing will be distributed between the CU and DU. If more processing is done on the DU, less processing is needed on the CU, and vice versa. The distribution of functions between the two (that is, the choice of split option) also determines the bandwidth required between the CU and DU. A brief description and typical uses of each split option are mentioned in the list that follows:41

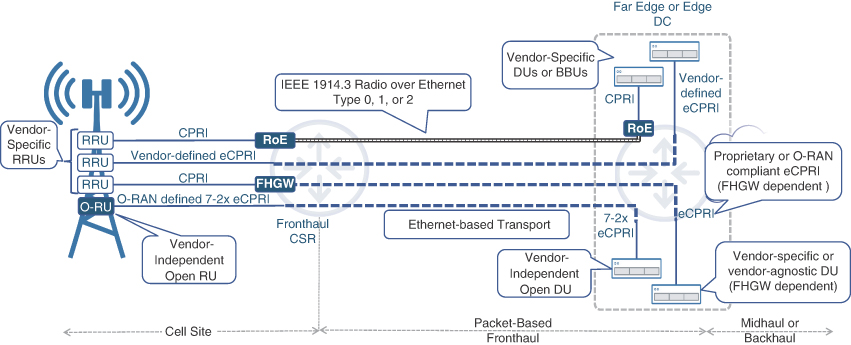

Split Option 8: PHY–RF Layer Split: This is not a new split option but rather a formalization of the traditional deployment where the RF processing is done on the RU, while 5G protocol stack processing is performed at a centralized location in the BBU. Option 8 continues to use CPRI transport between the RRU and BBU and is already used in Centralized RAN deployments. This option requires very high amounts of bandwidth across the fronthaul network and has strict latency requirements between the RRU and BBU, limiting the maximum distance to 20 km or less.

Split Option 7: Intra-PHY Layer Split: This option splits the PHY layer functionality between the DU and CU. Option 7 defines even more granular split choices within the PHY layer called Options 7-1, 7-2, and 7-3. Each of these split options defines a list of PHY layer tasks, such as encoding, modulation, MIMO, and beamforming processing, to be divided between the DU and CU. 3GPP allows the use of different sub-options in uplink and downlink directions for added efficiency. For instance, Option 7-1 or 7-2 could be used for uplink, and at the same time Option 7-3 could be used for downlink. Using any of these split options greatly reduces the bandwidth required between the DU and CU, but does not relax latency restrictions between the two. Option 7, along with Option 6, is one of the split options favored by 3GPP.

Split Option 6: MAC–PHY Layer Split: This is where all MAC and higher layer functions reside in the CU, while PHY layer functions are delegated to the DU. Option 6 further reduces the bandwidth requirements between the CU and DU but does not offer much in terms of relaxing latency requirements.

Split Option 5: Intra-MAC Layer Split: This option splits the functions performed by the MAC layer into High MAC and Low MAC. The CU performs the High MAC functions, such as centralized scheduling and intercell interference coordination, along with RLC and PDCP functions, whereas the DU performs the Low MAC, PHY, and RF functions. Option 5 relaxes the latency requirements between the CU and DU, thus allowing longer distances, but is more complex to implement and thus not favored over Split Options 6 and 7.

Split Option 4: RLC–MAC Layer Split: This option delegates the functions of the RLC, PDCP, and RRC layers to the CU, whereas the DU performs the MAC and lower-layer functions. Option 4 is not used, as it does not offer any discernible advantages over other split options.

Split Option 3: Intra-RLC Layer Split: This option further divides the RLC layer functions of segmentation, reassembling, buffering, and error correction into High RLC and Low RLC. In this split option, the High RLC, PDCP, and RRC functions reside in the CU, whereas the Low RLC, MAC, PHY, and RF functions reside in the DU.

Split Option 2: PDCP–RLC Layer Split: This option has been recommended by 3GPP for standardizing the CU–DU split. Using Option 2, the PDCP and RRC layers reside in the CU, whereas the DU performs the functions of the RLC and lower layers. The functionality offered by this split option has previously been finalized in 4G LTE, which makes Option 2 much more feasible for standardization with marginal incremental effort. Option 2 requires much less bandwidth than its lower-layer counterparts and offers much better latency tolerance between the CU and DU. Option 2 has become the industry standard for implementing the so-called Higher Layer Split (HLS) between the CU and DU. More details on HLS and its counterpart, the Lower Layer Split (LLS), are covered in the next section.

Split Option 1: RRC–PDCP Layer Split: This option is also allowed by 3GPP. In this split option, only the RRC functions are implemented in the CU while the rest of the functions of the 5G protocol stack are performed by the DU. If implemented, this option results in a complex and bulky DU and thus is typically not used.
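The eight options above can be read as cut points in a single ordered protocol stack. The following minimal Python sketch illustrates this idea, assuming a simplified nine-entry stack. The names `STACK` and `split_stack`, as well as the High/Low labels, are illustrative and not taken from any 3GPP specification, and sub-splits such as 7-1, 7-2, and 7-3 are not modeled.

```python
# Simplified protocol stack, ordered from the highest layer to the lowest.
# The High/Low names are illustrative labels for the intra-layer splits.
STACK = [
    "RRC", "PDCP", "High RLC", "Low RLC",
    "High MAC", "Low MAC", "High PHY", "Low PHY", "RF",
]


def split_stack(option: int) -> tuple[list[str], list[str]]:
    """Return (central_side, remote_side) layers for split Options 1-8."""
    if not 1 <= option <= 8:
        raise ValueError("split option must be between 1 and 8")
    # Option n places the first n stack entries on the central side.
    return STACK[:option], STACK[option:]


for option in (2, 6, 8):
    central, remote = split_stack(option)
    print(f"Option {option}: central={central} | remote={remote}")
```

In this simplified model, a lower option number leaves more of the stack on the remote side, which is why Option 1 results in a bulky remote unit while Option 8 leaves only the RF functions there.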

Single vs. Dual Split Architecture

The split options can be grouped into two categories: Options 1 and 2 map to the non-real-time functions and are classified as Higher Layer Splits (HLS), while Options 3 through 8, which map to real-time functions, are called Lower Layer Splits (LLS).

In a typical 5G MCN deployment, both the HLS and LLS options are utilized simultaneously, resulting in disaggregating the BBU into the DU and CU, as well as offloading some of the functionality to the RU—all working together to implement a decomposed, virtualized RAN architecture. HLS is used to implement the CU functions, whereas one of the LLS options is used to further disaggregate the functions of lower layers between the DU and RU. For instance, if the RAN equipment vendor chooses to implement HLS Option 2 for CU and LLS Option 6 for DU, then:

The CU must implement the PDCP and RRC layer functions.

The DU must implement the MAC and RLC layer functions.

The RU must implement the PHY layer functions.

Similarly, if Option 7, which is an intra-PHY split, is used for the DU, then the RU must implement some of the PHY functionality, while the DU implements the rest of the PHY layer functions, along with the MAC and RLC functions. In other words, depending on the LLS option used, the RU now needs to perform additional processing of the RF signal before sending it over to the DU. The same is true in the reverse direction. The use of dual split options is also documented in the IEEE 1914.1 standard. Figure 5-16 shows various single split and dual split architecture scenarios.

FIGURE 5-16 Single vs. Dual Split vRAN Architectures
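The dual split placement described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: only Option 2 is modeled as the HLS and only Options 6, 7, and 8 as the LLS, the intra-PHY split is reduced to two hypothetical halves ("High PHY" and "Low PHY"), and the names `CUT_POINT` and `dual_split` are invented for this example.

```python
# Simplified stack, highest layer first. "High PHY" and "Low PHY" are
# illustrative labels for the two halves of an intra-PHY (Option 7) split.
STACK = ["RRC", "PDCP", "RLC", "MAC", "High PHY", "Low PHY", "RF"]

# Index in STACK at which each modeled split option cuts the stack.
CUT_POINT = {2: 2, 6: 4, 7: 5, 8: 6}


def dual_split(hls: int, lls: int) -> dict[str, list[str]]:
    """Place layers on the CU, DU, and RU for an HLS/LLS combination."""
    if hls != 2:
        raise ValueError("this sketch models only Option 2 as the HLS")
    if lls not in (6, 7, 8):
        raise ValueError("this sketch models only Options 6, 7, and 8 as the LLS")
    hi, lo = CUT_POINT[hls], CUT_POINT[lls]
    return {"CU": STACK[:hi], "DU": STACK[hi:lo], "RU": STACK[lo:]}


for lls in (6, 7, 8):
    print(f"HLS 2 + LLS {lls}: {dual_split(2, lls)}")
```

With HLS Option 2 and LLS Option 6, the sketch reproduces the placement listed earlier: PDCP and RRC on the CU, RLC and MAC on the DU, and the PHY functions (together with RF) on the RU.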

Allowing the DU to offload some of its functionality to the RU through an LLS option serves a few purposes. First, it provides the RAN equipment vendor with the flexibility to distribute the real-time functions of the BBU between the RU and DU as they see fit. Second, offloading some of the DU functions to the RU reduces the bandwidth required in the fronthaul network. Lastly, certain LLS options may be better suited for the latency-sensitive nature of communication between the RU and DU. On the flip side, moving too much processing to the RU may make it bulkier, more power-hungry, and more susceptible to overheating. The RUs are typically external, pole-mounted devices, where such changes in the hardware form factor could be undesirable. The key is therefore to choose an LLS option that strikes an optimal balance between too much and too little processing on the RU. To that end, Options 8 and 7 have emerged as the frontrunners of industry adoption and have also been the focus of standardization by various industry bodies.

Choosing a Suitable Split Option

While 3GPP has standardized the use of Option 2 for HLS, it did not definitively recommend a specific LLS option.42 Over the past few years, Option 7 has emerged as the frontrunner of various standardization efforts. To a lesser degree, Option 6 is also discussed, but rarely, if ever, considered for implementation. To better appreciate the feasibility of Option 7, one must recall that Option 8 still uses CPRI traffic, which requires massive amounts of bandwidth in the fronthaul network.

CPRI carries uncompressed, digitized RF data as constant bitrate (CBR) traffic; that is, the signal is continually transmitted at a predetermined bitrate regardless of whether there is user data present. The amount of traffic carried over a single CPRI interface depends upon the CPRI rate option used to meet the RF design requirements. Over the years, as more spectrum was unlocked and wider channels allowed more bandwidth and capacity, higher bitrate CPRI options were introduced. Both the RU and BBU/DU must agree on one of these CPRI rate options to establish connectivity between the two. These rate options are a measure of the line bitrate supported by a CPRI implementation and must not be confused with the BBU functional split options that define how the BBU functionality is split between the CU and DU. The CPRI rate options, as defined in the CPRI specifications, are as follows:43

CPRI Rate Option 1: 614.4Mbps

CPRI Rate Option 2: 1228.8Mbps (1.22 Gbps)

CPRI Rate Option 3: 2457.6Mbps (2.45 Gbps)

CPRI Rate Option 4: 3072.0Mbps (3.07 Gbps)

CPRI Rate Option 5: 4915.2Mbps (4.91 Gbps)

CPRI Rate Option 6: 6144.0Mbps (6.14 Gbps)

CPRI Rate Option 7: 9830.4Mbps (9.83 Gbps)

CPRI Rate Option 7a: 8110.08Mbps (8.11 Gbps)

CPRI Rate Option 8: 10137.6Mbps (10.13 Gbps)

CPRI Rate Option 9: 12165.12Mbps (12.16 Gbps)

CPRI Rate Option 10: 24330.24Mbps (24.33 Gbps)
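To make the constant-bitrate nature of CPRI more concrete, the following Python sketch estimates the line rate of an uncompressed I/Q sample stream and then picks the smallest rate option from the list above that can carry it. The input values (a 30.72 Msps sample rate, commonly cited for a 20 MHz LTE carrier, and 15-bit samples), the 16/15 control-word overhead, and the 8b/10b line coding are assumptions used for illustration and are not taken from this chapter; the function names are hypothetical. The higher rate options use a different line coding, which this sketch does not model.

```python
# CPRI line rates in Mbps, as listed above.
CPRI_RATE_MBPS = {
    "1": 614.4, "2": 1228.8, "3": 2457.6, "4": 3072.0, "5": 4915.2,
    "6": 6144.0, "7": 9830.4, "7a": 8110.08, "8": 10137.6,
    "9": 12165.12, "10": 24330.24,
}


def cpri_line_rate_mbps(sample_rate_msps, sample_bits, antennas):
    """Estimate the CBR line rate for uncompressed I/Q data (assumed model)."""
    iq_payload = sample_rate_msps * sample_bits * 2 * antennas  # I and Q samples
    with_control = iq_payload * 16 / 15  # assumed: 1 control word per 15 data words
    return with_control * 10 / 8         # assumed: 8b/10b line coding


def smallest_rate_option(required_mbps):
    """Return the lowest-rate option that meets the requirement, or None."""
    fits = [(rate, name) for name, rate in CPRI_RATE_MBPS.items()
            if rate >= required_mbps - 1e-6]  # tolerance for float rounding
    return min(fits)[1] if fits else None


rate = cpri_line_rate_mbps(30.72, 15, antennas=2)
print(f"{rate:.1f} Mbps -> CPRI rate option {smallest_rate_option(rate)}")
```

Note that nothing in the calculation depends on how much user data is present: the rate is fixed by the sample rate, sample width, and antenna count alone, which is what makes CPRI traffic constant bitrate.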

CPRI bandwidth has not been an issue in traditional D-RAN deployments, where the RRU and the BBU both reside at the cell site and are connected through dedicated fiber optic cables. However, with each individual CPRI interface in excess of 10 or 20 Gbps of CBR traffic, as is the case with CPRI rate 8 and higher, transporting raw CPRI traffic over the fronthaul access network presents a significant challenge. This challenge is compounded in cases where multiple sectors are used on the cell site or if multiple frequency bands are used, each of which might require a dedicated CPRI interface. In these cases, the cumulative bandwidth requirement from a single cell site location may exceed tens or sometimes hundreds of gigabits per second. Additionally, with the availability of mmWave frequencies in 5G and advanced antenna features such as mMIMO, the sheer amount of bandwidth required to transport CPRI traffic over fronthaul makes Option 8 bandwidth-prohibitive. However, Split Option 8 will continue to be useful for both 4G and some 5G deployments. It is expected to coexist alongside Option 7-x for vRAN deployments.
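The cumulative effect described above is simple multiplication, as the following sketch shows. The site parameters (three sectors, four frequency bands, one CPRI rate option 8 link per sector per band) are hypothetical values chosen for illustration, and `site_fronthaul_gbps` is not a function from any library.

```python
def site_fronthaul_gbps(sectors, bands, cpri_rate_mbps):
    """Aggregate CBR fronthaul load, assuming one CPRI link per sector per band."""
    return sectors * bands * cpri_rate_mbps / 1000


# Hypothetical site: 3 sectors, 4 frequency bands, CPRI rate option 8 per link.
total = site_fronthaul_gbps(sectors=3, bands=4, cpri_rate_mbps=10137.6)
print(f"Aggregate fronthaul load: {total:.1f} Gbps")
```

Because CPRI is CBR traffic, this aggregate load is present at all times, regardless of how many subscribers are active on the cell site.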

eCPRI

To deal with the challenges presented by traditional CPRI transport in the fronthaul network, the coalition of Huawei, Nokia, Ericsson, and NEC proposed the evolution of CPRI standards called eCPRI in 2017.44 The drivers for creating a new eCPRI standard were multifold; chief among them was the need for significant reduction in CPRI bandwidth overall, use of flexible bandwidth scale based on user data instead of CBR, and the adoption of packet-based transport using Ethernet and IP technologies with advanced networking and OAM features.

Note

The term eCPRI was never spelled out in the original specification and was thus sometimes (incorrectly) called Ethernet CPRI due to current implementations of eCPRI using Ethernet (Layer 2) as the transport. However, eCPRI was intended to be an evolution or enhancement to traditional CPRI, and, as such, both evolved CPRI and enhanced CPRI are typically acceptable definitions. Besides, as eCPRI allows both Ethernet (Layer 2) and IP (Layer 3) encapsulation, calling it Ethernet CPRI would be neither technically accurate nor future-proof should vendors choose IP-based eCPRI implementations.

A critical distinction between CPRI and eCPRI is the lack of control, management, and synchronization traffic in the latter. Control and management of the RU (for example, initialization and configuration) make up a small portion of the overall traffic and could be implemented independently of user data transport. As defined by the CPRI forum, eCPRI carries only the user data, or the U-plane traffic, and some real-time traffic control information, whereas the control and management traffic, referred to as the C-plane and M-plane, is implemented independently over Ethernet (VLANs or L2 VPNs) or IP (L3 VPNs). Additionally, eCPRI does not carry embedded synchronization information between the RU and DU. Synchronization between the two is achieved by implementing the S-plane using an external clock source and propagating timing information through the fronthaul network. The concepts of timing and synchronization are discussed in Chapter 9, “Essential Technologies for 5G-Ready Networks: Timing and Synchronization.”

Just like CPRI, the eCPRI implementations continued to lack cross-vendor interoperability and stayed a closed, proprietary system. In alignment with 3GPP, eCPRI encourages the use of the popular intra-PHY Option 7 due to its advantages in implementing antenna functions such as MIMO, multipoint connectivity, and carrier aggregation, but does not explicitly restrict the vendors from implementing other split options. The following split options are supported in the eCPRI specifications:45

eCPRI Split Option A: The equivalent of 3GPP Split Option 1

eCPRI Split Option B: The equivalent of 3GPP Split Option 2

eCPRI Split Option C: The equivalent of 3GPP Split Option 4

eCPRI Split Option D: The equivalent of 3GPP Split Option 6

eCPRI Split Option ID: The equivalent of 3GPP Split Option 7-3

eCPRI Split Options IID and IIU: The equivalent of 3GPP Split Option 7-2

eCPRI Split Option E: The equivalent of 3GPP Split Option 8

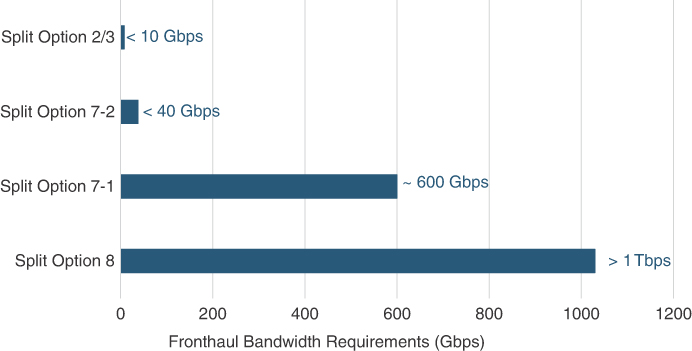

The choice of split option has an impact on the overall fronthaul bandwidth requirements. The more processing is done on the RU, that is, the higher in the protocol stack the split is placed (and the lower its option number), the lower the bandwidth requirements for the fronthaul network. While the actual fronthaul bandwidth requirements will vary based on the individual deployment scenario, Figure 5-17 highlights the significant bandwidth savings based on the choice of split option implemented. The use case shown in the figure assumes an aggregated RF bandwidth of 500 MHz, with 64T64R mMIMO, but 4×4 MIMO layers and 64 QAM modulation. This implementation would require more than 1 Tbps (terabits per second) for Split Option 8, 600 Gbps with Split Option 7-1, and 38 Gbps for Split Option 7-2. If Split Option 2 or 3 is used, the bandwidth requirement falls below 10 Gbps, similar to traditional backhaul.46

FIGURE 5-17 Example of Required Fronthaul Bandwidth Across Split Options
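A quick calculation puts the figures quoted for this use case into perspective. The sketch below uses only the three values stated above; because Option 8 is quoted as "more than 1 Tbps," 1000 Gbps is used as a lower bound, so the resulting factors are minimums. The names `FRONTHAUL_GBPS` and `reduction_factor` are illustrative.

```python
# Approximate fronthaul bandwidth in Gbps for the use case in Figure 5-17.
# Option 8 is quoted as "more than 1 Tbps"; 1000 is used as a lower bound.
FRONTHAUL_GBPS = {"8": 1000, "7-1": 600, "7-2": 38}


def reduction_factor(from_option, to_option):
    """Return how many times less bandwidth to_option needs than from_option."""
    return FRONTHAUL_GBPS[from_option] / FRONTHAUL_GBPS[to_option]


for option in ("7-1", "7-2"):
    factor = reduction_factor("8", option)
    print(f"Option 8 -> Option {option}: at least {factor:.1f}x less bandwidth")
```

The step from Option 7-1 to Option 7-2 accounts for most of the savings in this example, which helps explain the industry interest in the Option 7-x family.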

The introduction of eCPRI helped modernize legacy CPRI transport by utilizing Ethernet and IP for transport and by lowering the bandwidth requirements between the RU and DU. However, it did nothing to provide open interfaces and interoperability across vendors. While new entrants in the vRAN space were looking to take advantage of virtualization and revolutionize the RAN networks, mobile networks were still subject to vendor lock-in, with limited to no interoperability between RUs and DUs provided by different RAN vendors. Mobile network operators, wanting to drive interoperability in vRAN, created the Open RAN (O-RAN) Alliance in an effort to collectively influence the industry and speed up the pace of their 5G deployments.

Open RAN

The RAN industry has been dominated by a small number of incumbents, which has shrunk even further through various mergers and acquisitions, resulting in a select few (Huawei, Ericsson, and Nokia) exerting a near-total monopoly on all RAN deployments.47 The evolution to vRAN has created a lot of ambiguity in the industry in choosing the best-suited split options.