Chapter 12: Segmentation and classification of hand symbol images using classifiers

Jatinder Kaur¹, Nitin Mittal¹, Sarabpreet Kaur², Rajshree Srivastava³, and Sandeep Raj⁴

¹Department of ECE, Chandigarh University, Mohali, Punjab, India

²Department of ECE, Chandigarh Group of Colleges, Mohali, Punjab, India

³Department of CSE, DIT University, Dehradun, Uttarakhand, India

⁴Department of ECE, IIIT Bhagalpur, Bhagalpur, Bihar, India

Abstract

The earliest form of human communication relied on signs, and sign-based communication remains predominant among mute people; numerous variants of gesture-based communication exist. This chapter proposes a method for the classification of hand symbols. First, the quality of an acquired image is improved through preprocessing. Then, a segmentation technique is proposed for extraction of the region of interest, and a feature extraction algorithm is applied to generate a feature vector. Finally, classifiers are trained with appropriate feature vectors and tested in terms of accuracy. The results of five classifiers are compared on the basis of image features.

Keywords

Classification; Clustering; Feature extraction; Hand symbol; Segmentation

1. Introduction

Hand symbols commonly act as a communication bridge when interacting with mute people and as a supporting tool for those in difficult conditions. To keep communication balanced, the requirements of the mute community need to be monitored so that its members can be helped at the early stages of their development [1]. Delays in the advancement of systems that support mute people cause a large loss in their early growth, because these delays can leave them unable to express their views and ideas. Hand gesture recognition studies the observation of hand symbols in the presence of artifacts, which mainly include hand occlusion and varying lighting conditions [2]. Continued development of sign recognition systems is crucial for their sustained improvement. Efficient recognition of hand symbols is difficult to achieve because it demands both accuracy and precision, and performing every stage of a hand symbol recognition system manually requires considerable expertise. Hence, detection is moving toward automation, with traditional manual methods being replaced by smart detection [3].

Image processing techniques are gaining importance as detection techniques become automated. There are numerous reasons to recognize hand gestures automatically. Knowledge of the extent of symbol datasets is required for management-level decisions, because it is directly linked to people with special needs [4].

Although existing methods have attained a significant level of accuracy in hand symbol classification, there is still considerable room for improvement with respect to the following issues:

- The input sample dataset must be valid.

- The dataset must contain samples of every letter of the alphabet so that sentences can be formed easily.

- Segmentation must be optimal.

- Adequate feature extraction and selection are necessary to build a feature matrix.

- Classification of hand symbols must be efficient.

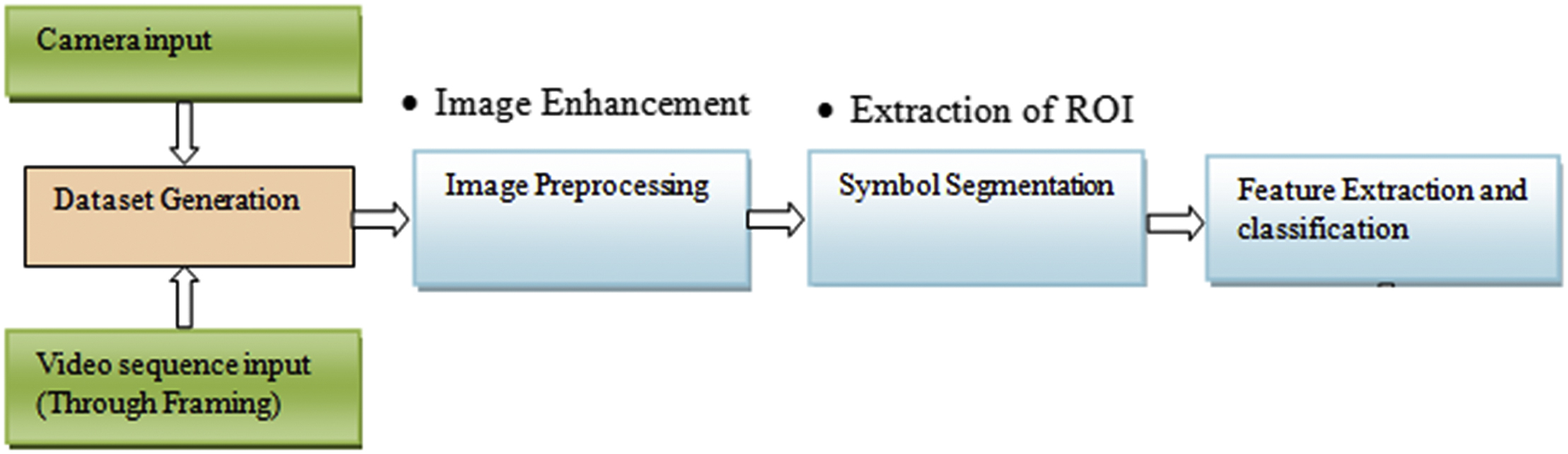

The aim of this work is to apply an appropriate algorithm to the detection of hand gestures through image processing. Optimal performance can be achieved by using more advanced and appropriate image processing algorithms at the different stages of the system. The resulting algorithm can act as an efficient alternative in practical scenarios. Its utility depends on efficient training of the classifier, which in turn depends on the availability of a dataset that meets the requirements just stated (Fig. 12.1).

2. Literature review

Khan and Ibraheem [5] studied the essential aspects of communication via hand gestures and identified methods that could help structure sign language vocabulary for gesture-based communication. Their main aim was to report the significance of unaddressed problems, the related difficulties, and likely solutions in the practical implementation of sign language translation.

Mohandes et al. [6] presented a recognition system for Arabic sign language. They used a color-based approach in which the signer wore colored gloves. A Gaussian skin color model was used to detect the face, and the centroid of the face was taken as a reference point for tracking the movement of the hands. The feature set included geometric values of the hands such as centroid, angle, and area. The recognition stage was implemented using a hidden Markov model.

Ross and Govindarajan [7] applied feature-level fusion and evaluated it on two biometric modalities, face and hand. Information from the feature level and the match level was consolidated. The strategy was examined in different fusion scenarios, including intermodal fusion, using both strong and weak classifiers.

Jiang et al. [8] used RGB and depth image datasets to extract shape features from input images. The size of the shape feature vector was reduced by applying discriminant analysis, which improved the discriminative capacity of the shape features by selecting an adequate number of features. The concepts of multimodal input and of the image feature extraction method were explained thoroughly.

Zhu [9] proposed a two-phase feature fusion strategy for bimodal biometrics. In the first phase, linear discriminant analysis was performed to obtain transformed features. In the second phase, the transformed features were combined as complex vectors, which offered more flexibility for adding inputs than the conventional fusion method.

3. Hand symbol classification mechanism

The classification of hand symbols starts with the acquisition of a sample image. The input can be captured with a camera or taken from an existing dataset. This input must pass through a preprocessing operation before it moves on to the subsequent stages.

The following are the stages of hand symbol classification:

3.1. Image preprocessing stage

The main objective of the preprocessing stage is to enhance the visual quality of an input image by applying image enhancement techniques and, if required, to smooth the image through noise removal. At this stage, the image is also converted into the color space required by the segmentation method.
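The enhancement and noise-removal steps can be sketched as follows. The chapter does not name the filters it uses, so this is a minimal sketch that assumes a 3x3 median filter for noise removal and a linear contrast stretch for enhancement, applied to a grayscale image stored as a list of rows of integers. The function names are illustrative, not the chapter's MATLAB code.

```python
from statistics import median_low


def median_filter3(img):
    """3x3 median filter on a grayscale image given as a list of rows of ints.

    Border pixels use only the neighbours that fall inside the image, and the
    lower median is taken so that every output value is an existing pixel value.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = median_low(window)
    return out


def contrast_stretch(img, out_max=255):
    """Linearly map the darkest pixel to 0 and the brightest pixel to out_max."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    return [[round((p - lo) * out_max / (hi - lo)) for p in row] for row in img]


noisy = [[10, 10, 10], [10, 200, 10], [10, 10, 10]]  # one isolated bright "salt" pixel
print(median_filter3(noisy))  # the salt pixel is replaced by its neighbourhood median
print(contrast_stretch([[50, 100], [150, 50]]))
```

A median filter is a common choice for impulse noise because it removes isolated outliers without averaging them into neighbouring pixels.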

3.2. Segmentation stage

The preprocessed image samples are then passed as input to the segmentation stage [10,11]. The main goal of segmentation is to extract a region of interest from the input image. The result is a set of segments that together make up the complete image [12].

Researchers have explored various strategies for image analysis [13]. Some strategies are general purpose and others are application specific. The efficiency of the segmentation method affects the overall efficiency of the system [14]. Some segmentation techniques are based on a clustering mechanism [15], and region-based segmentation methods have also been proposed in the literature [16]. Region-based methods are further classified as either region-growing or region-splitting segmentation [17].
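Clustering-based segmentation is mentioned above [15] without naming an algorithm. As one possible illustration, assuming k-means as the clustering method, gray levels can be grouped into k clusters; with k = 2 this separates a bright hand from a dark background. The function name and the evenly spaced initialization are illustrative choices.

```python
def kmeans_gray(pixels, k=2, iters=20):
    """1-D k-means on a flat list of gray values (requires k >= 2).

    Returns the cluster centres and, for each pixel, the index of its cluster.
    """
    lo, hi = min(pixels), max(pixels)
    # Spread the initial centres evenly between the darkest and brightest value.
    centres = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    def nearest(p):
        return min(range(k), key=lambda c: abs(p - centres[c]))

    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in pixels:
            groups[nearest(p)].append(p)
        # Move each centre to the mean of its group (keep it if the group is empty).
        new = [sum(g) / len(g) if g else centres[i] for i, g in enumerate(groups)]
        if new == centres:  # assignments no longer change: converged
            break
        centres = new
    return centres, [nearest(p) for p in pixels]


centres, labels = kmeans_gray([10, 12, 11, 200, 210, 205])
print(centres, labels)  # [11.0, 205.0] [0, 0, 0, 1, 1, 1]
```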

3.2.1. Thresholding methods

Thresholding methods convert a grayscale image into a binary image with only two colors, black and white. This is done by choosing a threshold value T. Pixels are grouped into two clusters according to their intensity: one cluster contains all pixels whose gray value is at most T, and the other contains all pixels whose gray value is greater than T:

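$$
g(x, y) =
\begin{cases}
\text{white}, & f(x, y) > T \\
\text{black}, & f(x, y) \le T
\end{cases}
$$

where f(x, y) is the gray value of the pixel at (x, y) and g(x, y) is its output color.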
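The thresholding rule translates directly into code. The chapter does not say how T is chosen; the sketch below assumes Otsu's method, a widely used automatic choice that picks the T maximizing the between-class variance of the two clusters. Images are lists of rows of integer gray levels in 0-255, and the function names are illustrative.

```python
def threshold(img, t):
    """Binarise a grayscale image: 1 (white) where the value exceeds t, else 0 (black)."""
    return [[1 if p > t else 0 for p in row] for row in img]


def otsu_threshold(img, levels=256):
    """Return the T that maximises the between-class variance (Otsu's method).

    Class 0 holds gray values <= T and class 1 holds values > T, matching the
    rule above. The score is the between-class variance times N^2, which does
    not change which T wins.
    """
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0 = sum0 = 0  # pixel count and intensity sum of class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so t does not split the image
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


img = [[10, 10, 200], [10, 200, 200]]
t = otsu_threshold(img)
print(t, threshold(img, t))  # 10 [[0, 0, 1], [0, 1, 1]]
```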

3.2.2. Histogram-based image segmentation

Histogram-based segmentation computes a histogram over all the pixels of an image and groups the pixels according to the peaks and valleys of that histogram. The method is not easy to apply, because significant peaks and valleys can be difficult to identify in the histogram of a real image.
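The peak-and-valley idea can be sketched under assumptions that the chapter does not state: the histogram is smoothed with a small moving average to suppress spurious peaks, the two highest peaks at least `min_sep` gray levels apart are taken as the object and background modes, and the lowest point between them is returned as the threshold. The function returns `None` when two such peaks cannot be found, which is exactly the difficulty noted above. All names and default values are hypothetical.

```python
def histogram(img, levels=256):
    """Count how many pixels take each gray level."""
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    return hist


def moving_average(values, radius=2):
    """Smooth a sequence with a centred window of width 2 * radius + 1."""
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius): min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def valley_threshold(img, levels=256, radius=2, min_sep=10):
    """Gray level of the deepest valley between the two dominant histogram peaks."""
    h = moving_average(histogram(img, levels), radius)
    # Candidate peaks: higher than the left neighbour and not lower than the right one.
    peaks = [i for i in range(1, levels - 1) if h[i] > h[i - 1] and h[i] >= h[i + 1]]
    peaks.sort(key=lambda i: h[i], reverse=True)
    if not peaks:
        return None
    first = peaks[0]
    second = next((p for p in peaks[1:] if abs(p - first) >= min_sep), None)
    if second is None:
        return None  # only one dominant mode: no usable valley
    lo, hi = sorted((first, second))
    return min(range(lo, hi + 1), key=lambda i: h[i])


bimodal = [[10] * 5, [200] * 5]
print(valley_threshold(bimodal))             # a gray level between 10 and 200
print(valley_threshold([[5, 5], [5, 5]]))    # None
```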

3.2.3. Feature extraction and selection for making a feature vector

Feature extraction is one of the most significant parts of the classification strategy and plays a vital role in computer vision and pattern recognition tasks. Feature selection depends heavily on the quality of the segmented image. The extracted features are organized into a codebook that the classifier refers to [18]. They must be selected so as to minimize redundancy among the parameters.
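One simple way to keep redundancy low, offered here as an assumption rather than the chapter's selection rule, is a greedy correlation filter: a feature is kept only if its absolute Pearson correlation with every previously kept feature is below a limit (0.95 here, an arbitrary choice). Each feature is given as a column of values across the training samples, and the feature names are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equally long lists (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / sqrt(va * vb)


def drop_redundant(columns, names, max_abs_corr=0.95):
    """Greedy filter: keep a feature only if it is not strongly correlated
    with any feature kept before it. columns[j] holds feature j for all samples."""
    kept = []
    for j, col in enumerate(columns):
        if all(abs(pearson(col, columns[k])) < max_abs_corr for k in kept):
            kept.append(j)
    return [names[j] for j in kept]


columns = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 1, 3, 2]]
print(drop_redundant(columns, ["area", "area_scaled", "skewness"]))
# ['area', 'skewness']: the second column is an exact multiple of the first
```

The greedy order matters: earlier columns are preferred, so features believed to be more informative should be listed first.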

3.2.4. Types of features

1. Color features: This feature set is extracted from the enhanced image provided by the preprocessing stage. Color features are global parameters of the enhanced image and are important because the hand region differs in color from the rest of the image. Their extraction requires different color spaces, such as HSV (hue, saturation, value) and Lab. Examples of color features include the mean, standard deviation, skewness, and entropy of each color channel.

2. Geometric features: These are computed from the segmented region of interest, i.e., the hand region. They are local features of the segmented area, which is one of the most prominent cues for classification. Examples include area, aspect ratio, and orientation.

3. Zernike moment features: Zernike moments are obtained by projecting the image onto Zernike polynomials, which are orthogonal to each other over the unit disk. Because of this orthogonality, the moments describe properties of an image without redundancy or overlap of information between them. Thus they can be used to extract features that describe the shape attributes of an object.
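The three feature families can be sketched as below. This is a minimal illustration, not the chapter's MATLAB implementation, and it rests on several assumptions: HSV (via Python's standard `colorsys` module) stands in for the color spaces, entropy uses a 16-bin histogram, aspect ratio is the bounding-box width divided by height, orientation comes from second-order central moments, and the Zernike moment Z_nm = ((n+1)/π) Σ f(x, y) V*_nm(ρ, θ) ΔA is computed on a square binary mask mapped onto the unit disk. All function names are illustrative.

```python
import cmath
import colorsys
from math import atan2, degrees, factorial, log2, pi, sqrt


def channel_stats(values, bins=16):
    """[mean, std, skewness, entropy] of one channel whose values lie in [0, 1]."""
    n = len(values)
    mean = sum(values) / n
    std = sqrt(sum((v - mean) ** 2 for v in values) / n)
    skew = sum((v - mean) ** 3 for v in values) / n / std ** 3 if std > 0 else 0.0
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    entropy = -sum(c / n * log2(c / n) for c in counts if c)
    return [mean, std, skew, entropy]


def hsv_features(pixels):
    """12 color features (H, S, V stats in that order) of (r, g, b) pixels in 0-255."""
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]
    return [f for ch in range(3) for f in channel_stats([p[ch] for p in hsv])]


def geometric_features(mask):
    """[area, aspect ratio (bounding-box width / height), orientation in degrees]."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask has no foreground pixels")
    area = len(pts)
    xs, ys = [x for x, _ in pts], [y for _, y in pts]
    cx, cy = sum(xs) / area, sum(ys) / area
    aspect = (max(xs) - min(xs) + 1) / (max(ys) - min(ys) + 1)
    # Second-order central moments give the direction of the major axis.
    mu20 = sum((x - cx) ** 2 for x in xs) / area
    mu02 = sum((y - cy) ** 2 for y in ys) / area
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / area
    orientation = 0.5 * degrees(atan2(2 * mu11, mu20 - mu02))  # image y axis points down
    return [area, aspect, orientation]


def radial_poly(n, m, rho):
    """Zernike radial polynomial R_nm(rho)."""
    m = abs(m)
    return sum(
        (-1) ** s * factorial(n - s)
        / (factorial(s) * factorial((n + m) // 2 - s) * factorial((n - m) // 2 - s))
        * rho ** (n - 2 * s)
        for s in range((n - m) // 2 + 1)
    )


def zernike_magnitude(mask, n, m):
    """|Z_nm| of a square binary mask mapped onto the unit disk.

    Pixels whose centres fall outside the disk are ignored. |Z_nm| is, up to
    discretisation, unchanged when the shape is rotated about the image centre.
    """
    if abs(m) > n or (n - abs(m)) % 2:
        raise ValueError("Zernike moments need |m| <= n and n - |m| even")
    size = len(mask)
    z = 0j
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if not v:
                continue
            # Map the pixel centre into the square [-1, 1] x [-1, 1].
            xn, yn = (2 * x + 1 - size) / size, (2 * y + 1 - size) / size
            rho = sqrt(xn * xn + yn * yn)
            if rho <= 1:
                # For f = 1, accumulate conj(V_nm) = R_nm(rho) * exp(-i m theta).
                z += radial_poly(n, m, rho) * cmath.exp(-1j * m * atan2(yn, xn))
    return abs((n + 1) / pi * z * (2 / size) ** 2)  # (2 / size) ** 2 is the pixel area
```

Concatenating `hsv_features`, `geometric_features`, and a few `zernike_magnitude` values for different (n, m) yields one feature vector per image.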

3.3. Classification stage

An appropriate feature vector is the basic requirement of a classifier. Classification draws on a wide range of decision-theoretic approaches to object recognition [19]. The classification process consists of grouping pixels and assigning a label to each group. A classifier is trained on features computed from the image data: it first analyzes the statistical values of various image features and then arranges the data into categories [20].

3.3.1. Classification phases

The training phase is the learning phase of the classifier, in which the classifier is trained on the features of the training images of each class [21]. The testing phase applies a decision strategy that lets the classifier assign a class to each image sample provided at its input [22,23].
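The two phases can be illustrated with a deliberately simple classifier. The sketch uses a nearest-centroid rule, which is not one of the classifiers compared in this chapter: training computes the mean feature vector of each class, and testing assigns a sample to the class whose centroid is closest in Euclidean distance. Accuracy is the fraction of test samples labeled correctly. Names and data are hypothetical.

```python
from math import dist


def train_nearest_centroid(features, labels):
    """Training phase: average the feature vectors of each class."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        sums[label] = [s + v for s, v in zip(acc, vec)]
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, vec):
    """Testing phase: the decision rule picks the class with the nearest centroid."""
    return min(centroids, key=lambda label: dist(centroids[label], vec))


def accuracy(centroids, features, labels):
    """Fraction of samples whose predicted class matches the true label."""
    hits = sum(predict(centroids, v) == y for v, y in zip(features, labels))
    return hits / len(labels)


# Hypothetical 2-D feature vectors for two hand symbols, "A" and "B".
train_x = [[0, 0], [0, 1], [5, 5], [5, 6]]
train_y = ["A", "A", "B", "B"]
model = train_nearest_centroid(train_x, train_y)
print(model)  # {'A': [0.0, 0.5], 'B': [5.0, 5.5]}
print(accuracy(model, [[0.2, 0.1], [4.8, 5.2], [4.0, 4.0]], ["A", "B", "B"]))  # 1.0
```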

4. Proposed work

This chapter proposes a complete pipeline for applying classifiers to hand symbol images. Each hand symbol image from the dataset is preprocessed to enhance its visual quality. In the proposed methodology, several classifiers are tested on the input images and compared in terms of accuracy.

Image segmentation is used for extraction of the region of interest. The hand symbol image is segmented using the color-based segmentation method. Through this proposed methodology, three classes of features are extracted from the acquired input. The extracted features belong to the classes of color feature, Zernike moment feature, and geometric feature (Fig. 12.2).

5. Results and discussion

The proposed method is tested on an image database of static gestures acquired with a Creative Senz3D camera, as described in the literature [24]. Implementation is done in MATLAB, and performance is evaluated at two stages: first the preprocessing stage is assessed in terms of performance metrics, and then the final classification stage [25]. The dataset includes hand gestures performed by four different people, each making 11 different hand symbols repeated 30 times, for a total of 4 × 11 × 30 = 1320 image samples. Color, confidence, and depth frames are available for each sample, and the intrinsic parameters of the Creative Senz3D camera are also provided.

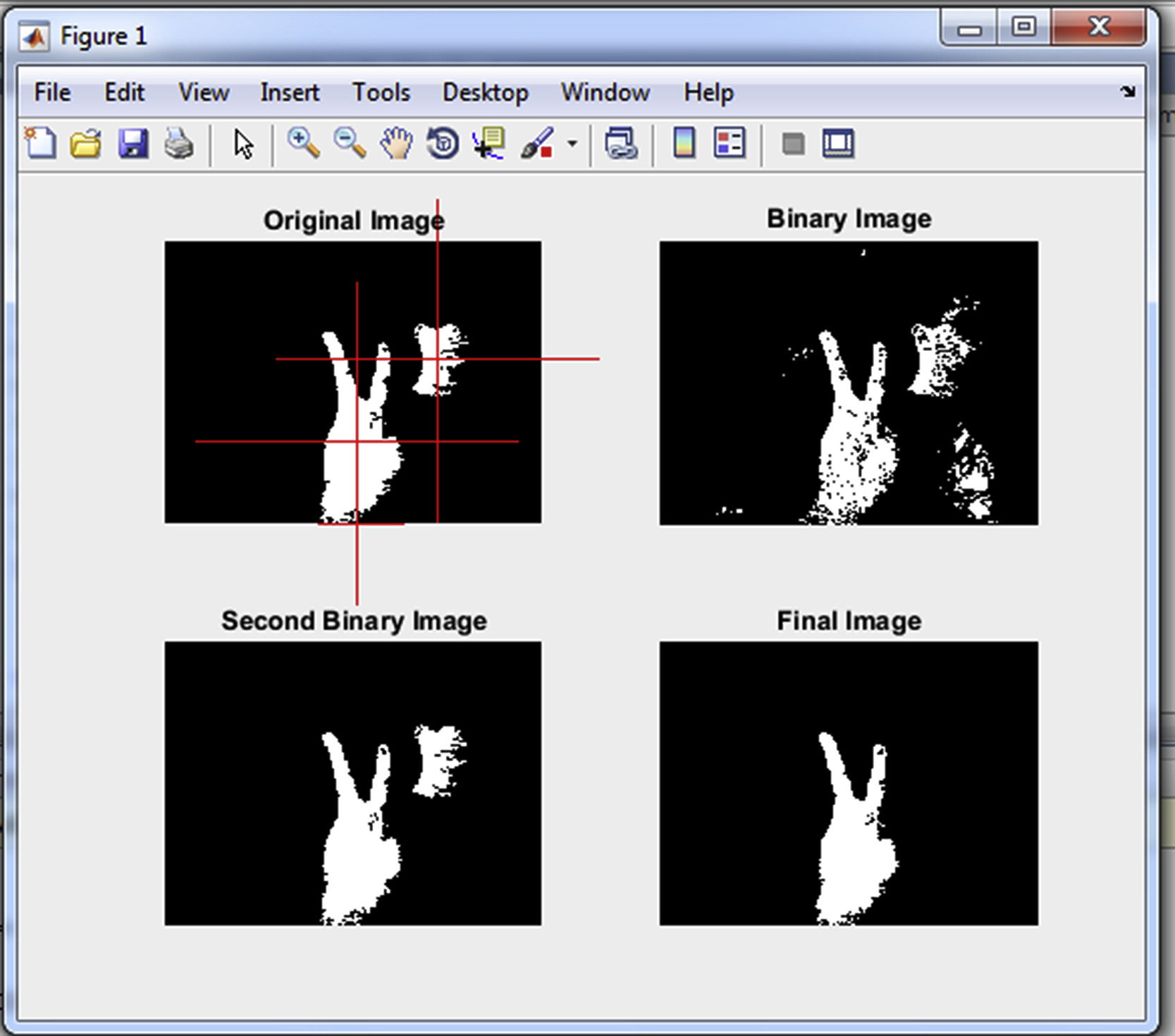

A number of segmentation algorithms have been proposed in the literature for a variety of applications. The choice of segmentation technique depends on the type of application and on the region of interest needed for further processing. The main goal here is to select the region of interest and separate it from the background. The color-based segmentation method is applied to the images: RGB color images are converted into the YCbCr color space, which is then transformed into a binary image. Finally, background subtraction is applied to the binary images to segment them.
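A sketch of this pipeline under stated assumptions: the RGB-to-YCbCr conversion uses the full-range ITU-R BT.601 (JPEG) coefficients; a pixel is marked as skin when its Cb and Cr values fall within 77-127 and 133-173, ranges commonly cited in the skin-detection literature but not given in this chapter; and background subtraction is modeled, as an assumption, by keeping only skin pixels whose luma differs from a background frame by more than `diff_t = 30`. Pixels are (r, g, b) tuples in 0-255, and the function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range ITU-R BT.601 (JPEG) conversion of one 0-255 RGB pixel."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def skin_mask(img, cb_range=(77, 127), cr_range=(133, 173)):
    """Binary mask: 1 where (Cb, Cr) falls inside the assumed skin ranges."""
    mask = []
    for row in img:
        out = []
        for r, g, b in row:
            _, cb, cr = rgb_to_ycbcr(r, g, b)
            inside = cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]
            out.append(1 if inside else 0)
        mask.append(out)
    return mask


def subtract_background(mask, frame, background, diff_t=30):
    """Keep mask pixels whose luma Y differs from the background frame by more than diff_t."""
    return [
        [
            1 if m and abs(rgb_to_ycbcr(*f)[0] - rgb_to_ycbcr(*bg)[0]) > diff_t else 0
            for m, f, bg in zip(mask_row, frame_row, bg_row)
        ]
        for mask_row, frame_row, bg_row in zip(mask, frame, background)
    ]


skin, blue, grey = (220, 170, 140), (0, 0, 255), (50, 50, 50)
frame = [[skin, blue, skin]]
background = [[grey, grey, skin]]  # the last pixel is skin-coloured but static
mask = skin_mask(frame)
print(mask)  # [[1, 0, 1]]
print(subtract_background(mask, frame, background))  # [[1, 0, 0]]
```

Thresholding only Cb and Cr, not Y, is a common design choice because it makes the skin test less sensitive to lighting intensity.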

The segmented image will thus serve as input to the feature extraction stage.

Feature extraction is performed on the segmented images of all three gestures. Appropriate features are extracted from the selected segmented and enhanced images, producing a feature vector that is used to train the classifiers.

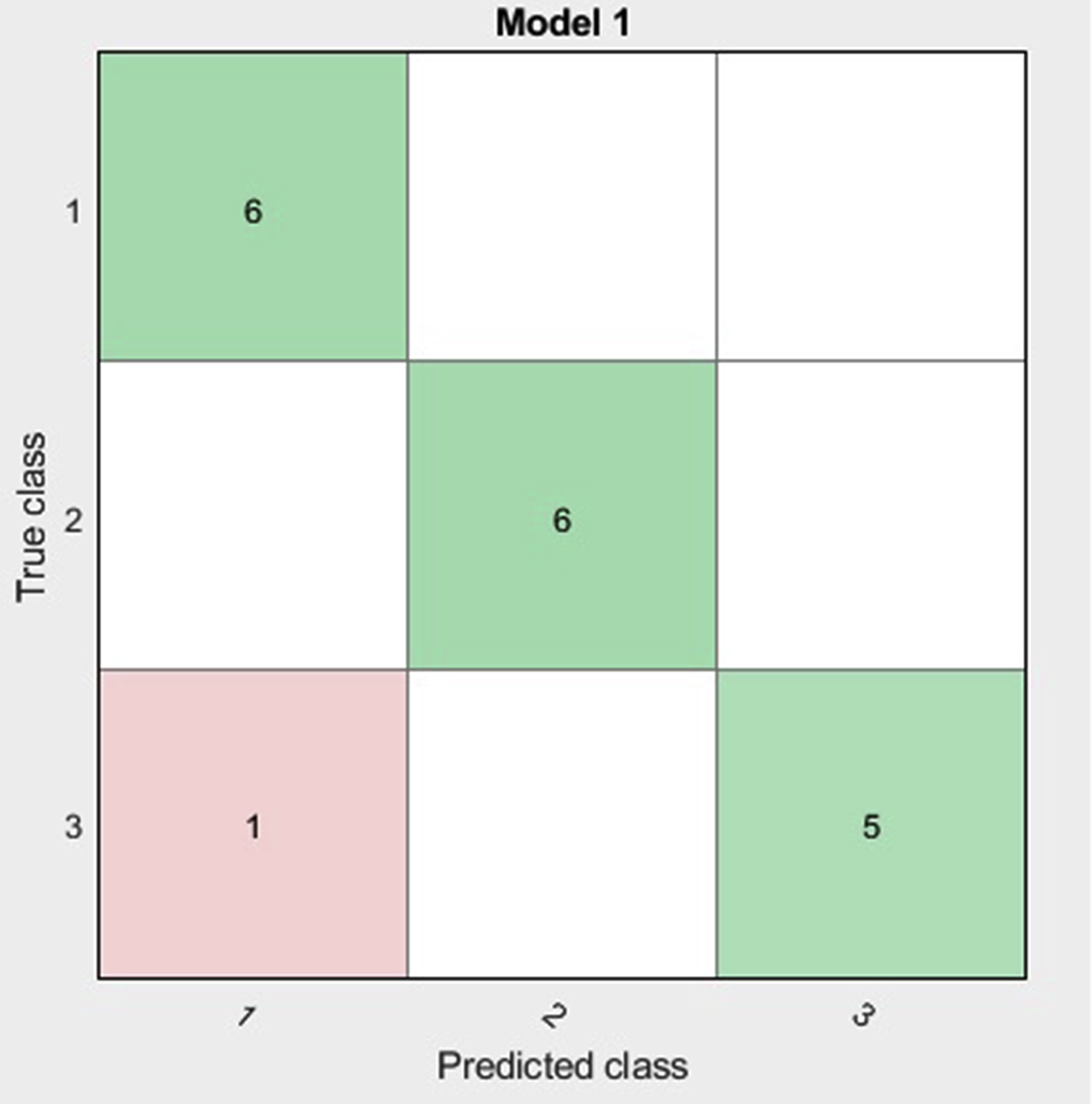

To train the classifiers, a set of 18 images belonging to three hand symbols is selected from the complete alphabet set. The accuracy of four classifiers is tested on the features extracted from three sets of sample inputs to compare their performance (Fig. 12.3).

The feature configurations shown in Table 12.1 are applied to each classifier, and the accuracy of each classifier is listed in Table 12.2.

The comparison in Table 12.2 shows that the support vector machine (SVM) classifier is the best choice for classifying the features extracted from the image dataset of six samples each of three hand symbols. The decision tree classifier performs poorly on the feature matrix of the test dataset (Fig. 12.4).

Figs. 12.5 and 12.6 show the performance of the SVM classifier as a confusion matrix and a receiver operating characteristic (ROC) curve, respectively.
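Both evaluation tools can be computed from a classifier's outputs, as sketched below. The confusion matrix counts, for each true class (row), how often each class was predicted (column). The ROC curve is defined for a binary decision, so for a multiclass SVM it is usually drawn per class (one class versus the rest) from the classifier's decision scores; the labels and scores below are hypothetical. The area under the curve (AUC) is computed with the trapezoidal rule.

```python
def confusion_matrix(true, pred, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    cm = [[0] * len(classes) for _ in classes]
    for t, p in zip(true, pred):
        cm[index[t]][index[p]] += 1
    return cm


def roc_points(scores, positives):
    """(FPR, TPR) points from sweeping a threshold down through the scores.

    positives[i] is 1 (or True) if sample i belongs to the positive class.
    Tied scores are added together, producing a single point.
    """
    pairs = sorted(zip(scores, positives), key=lambda sp: -sp[0])
    n_pos = sum(positives)
    n_neg = len(positives) - n_pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    for i, (score, is_pos) in enumerate(pairs):
        if is_pos:
            tp += 1
        else:
            fp += 1
        if i == len(pairs) - 1 or pairs[i + 1][0] != score:
            points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(confusion_matrix(["A", "B", "B", "C"], ["A", "B", "C", "C"], ["A", "B", "C"]))
# [[1, 0, 0], [0, 1, 1], [0, 0, 1]]
print(auc(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])))  # 0.75
```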

6. Conclusion

In this research work, a complete and effective mechanism for hand symbol recognition was proposed. The image processing-based model reduced human effort and eased the difficulties of hand symbol recognition. A preprocessing stage enhanced the visual quality of the acquired input image; the preprocessed image then went through segmentation and feature extraction, after which classification was performed. In this implementation, the classifiers were tested on 18 sample inputs, and the best results were achieved with the SVM classifier. This work could be extended by applying optimization and decision-making algorithms to feature selection and classification.
