Chapter 11

Architecture Evaluation Framework

James N. Martin

The Aerospace Corporation, El Segundo, CA, USA

True genius resides in the capacity for evaluation of uncertain, hazardous, and conflicting information.

Winston Churchill

11.1 Introduction

This chapter describes key concepts related to the development and use of an architecture evaluation framework, along with a recommended set of steps that could be followed when applying these concepts. By architecture, we mean the fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution (ISO/IEC/IEEE 42010, 2010). The evaluation we strive for determines whether the architecture being examined is suitable for the expected situation and whether it maximizes the satisfaction of stakeholder concerns. A number of these ideas regarding how to deal with stakeholder concerns are expounded upon in Firesmith's “Method Framework for Engineering System Architectures” (Firesmith et al., 2008).

11.1.1 Architecture in the Decision Space

Architecture is one area within the overall “Decision Space” for tradespace analysis, as shown in Figure 11.1. The Decision Space represents the range of choices that can be examined during the life of a system. This space is divided into different areas of concern, starting with the operational area of concern, followed by the architectural area of concern. The architecture decision space is the focus of this chapter. Once the architecture is established, decisions must then be made regarding the one or more designs that “conform” to the architecture. Even after the design is done, there could be decisions regarding production, testing, deployment, logistics support, decommissioning, and so on.

Figure 11.1 Role of architecture in the decision space

It is often good practice to establish the concept of operations, which lies further upstream in the Decision Space, before the architecture is created. Alternative operational concepts should be examined at the front end of exploring the Decision Space. This could entail a decision, for example, between a manned and an unmanned mission to Mars, or between an autonomous and a remotely controlled spacecraft. These are “concepts” that can be explored in their own tradespace prior to considering how an architecture can best support such an operation.

As we noted earlier, the design of a system must not only honor the tenets of its architecture, but also address many other details that go beyond the scope of the “fundamental concepts and properties” of the system that are expressed in the architecture. So, the design space is further downstream from the architecture space in the overall Decision Space. The rest of this chapter is focused on the establishment of an architecture evaluation framework to aid in exploration of the Architecture Space and describes how to evaluate architecture alternatives within that space using this framework.

11.1.2 Architecture Evaluation

Architecture evaluation is making a judgment or determination about the value, worth, significance, importance, or quality of an architecture. The evaluation effort is aimed at answering one or both of these questions:

- What is the quality of an architecture?

- How well does an architecture address stakeholder concerns?

Quality is a measure of how good or bad something is. An architecture that is being evaluated is usually not “bad” but rather may display varying degrees of goodness. When we evaluate an architecture to determine its value, worth, significance, and so on, we are doing this in terms of who is impacted and how well the architecture addresses the concerns of impacted stakeholders.

We usually examine more than one architecture alternative to ensure that we have explored the potential tradespace more completely. A common mistake is failing to identify and examine alternative architectural approaches (Firesmith et al., 2008). The “tradespace” can be defined as the set of enterprise, program, and system parameters, attributes, and characteristics required to satisfy a variety of stakeholder concerns throughout a system's life cycle while remaining within relevant constraints. These dimensions of the tradespace are often in tension. Increasing performance, for example, often requires increasing cost, which runs counter to the interests of stakeholders concerned about cost. There are many other potentially opposing dimensions in the tradespace: security versus usability, safety versus performance, speed versus accuracy, manufacturability versus maintainability, reliability versus testability, and so on. Our aim during architecture evaluation is to identify the key trade-offs and to understand their implications for delivering the most balanced and robust architectural solution.
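To make tensions in the tradespace concrete, here is a minimal sketch that screens alternatives on two opposing dimensions and keeps only those that no other alternative dominates (a Pareto filter). It assumes just two dimensions, performance (higher is better) and cost (lower is better); the alternative names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alternative:
    name: str
    performance: float  # higher is better (hypothetical units)
    cost: float  # lower is better (hypothetical units)


def dominates(a: Alternative, b: Alternative) -> bool:
    """True if a is no worse than b on both dimensions and strictly better on at least one."""
    no_worse = a.performance >= b.performance and a.cost <= b.cost
    strictly_better = a.performance > b.performance or a.cost < b.cost
    return no_worse and strictly_better


def pareto_front(alternatives: list[Alternative]) -> list[Alternative]:
    """Keep the alternatives that no other alternative dominates."""
    return [a for a in alternatives
            if not any(dominates(b, a) for b in alternatives if b is not a)]


candidates = [
    Alternative("A", performance=0.90, cost=120.0),
    Alternative("B", performance=0.80, cost=80.0),
    Alternative("C", performance=0.75, cost=95.0),  # dominated by B
    Alternative("D", performance=0.60, cost=50.0),
]
print([alt.name for alt in pareto_front(candidates)])  # ['A', 'B', 'D']
```

A non-dominated set does not pick a winner; it only shows where the genuine trade-offs lie, which is where the evaluation's judgment has to be applied.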

Another aim of architecture evaluation is to determine the best way to achieve the greatest amount of goodness given all the constraints and conditions we must contend with. Alexander, who is famous for his concept of architectural patterns, provides a good discussion on how this can be done in his Notes on the Synthesis of Form (Alexander, 1964). Constraints to be dealt with could be financial, technological, sociological, political, and so on. Conditions could entail such things as equitable distribution of work effort, minimal impact on the environment, limited time for doing the evaluation, acceptable risk to the enterprise, and so on.

Architecture evaluation in the context of other architecture-related processes is illustrated in Figure 11.2 (ISO/IEC 42020 2016 draft). Conceptualized architectures are evaluated to determine the suitability of an architecture or to help select among alternatives. As more complete models and views of the architecture are elaborated, these can be evaluated to determine their correctness and completeness with regard to the architecture objectives. Architecture evaluation supports architecture governance by helping identify the possible, most viable, and most promising ways forward for an enterprise's portfolio of programs, projects, and systems.

Figure 11.2 Architecture evaluation in context of other architecture processes

11.1.3 Architecture Views and Viewpoints

An architecture is used to characterize the fundamental concepts and properties about a system that address the most important concerns of key stakeholders as illustrated in Figure 11.3. Architecture “viewpoints” are used to help frame the concerns of stakeholders who have an interest in the system, and architecture views and models (specified in the viewpoints) are used to depict key attributes of the architecture under evaluation.

Figure 11.3 Addressing stakeholder concerns through the use of views and models

An architecture viewpoint is essentially a “specification” of a particular architecture view that would be found useful in examining the architecture to see how well it addresses the particular stakeholder concerns represented by that viewpoint. A viewpoint can be thought of as a template to be used when developing architecture views. Example viewpoints are the following:

- Operational viewpoint

- Maintenance viewpoint

- Users' viewpoint

- Builders' viewpoint

- Regulators' viewpoint

- Programmatic viewpoint

- Policy viewpoint

- Service providers' viewpoint

- Service consumers' viewpoint.

Views are created according to these viewpoints and are often generated from models of the architecture. For example, an operational view can be composed of one or more of the following models: operational concept diagram, mission reference profile, operational node interaction diagram, operational interchange matrix, operational sequence diagram, conceptual information model, operational activity taxonomy, operational parameter influence diagram, and so on. Similarly, a builder view can be composed of one or more of the following models: system context diagram, functional flow diagram, control flow diagram, state transition diagram, system data model, system block diagram, system breakdown structure, system integration sequence diagram, and so on. The key characteristics of architecture views and viewpoints are specified in the international standard on architecture description practices (ISO/IEC/IEEE 42010 2011).
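Because a viewpoint acts as a template for views, one way to picture the relationship is as a pair of simple data structures, with a check that a view uses only the model kinds its viewpoint specifies. This is a hypothetical sketch: the class names, the concerns listed, and the model identifiers are illustrative assumptions, not structures defined by ISO/IEC/IEEE 42010.

```python
from dataclasses import dataclass, field


@dataclass
class Viewpoint:
    """Template for views: the concerns it frames and the model kinds it specifies."""
    name: str
    concerns: set[str]
    model_kinds: set[str]


@dataclass
class View:
    """A view built according to one viewpoint, holding models keyed by model kind."""
    viewpoint: Viewpoint
    models: dict[str, str] = field(default_factory=dict)  # model kind -> model identifier

    def nonconforming_model_kinds(self) -> set[str]:
        """Model kinds present in this view that its viewpoint does not specify."""
        return set(self.models) - self.viewpoint.model_kinds


operational = Viewpoint(
    name="Operational",
    concerns={"mission effectiveness", "operator workload"},  # hypothetical concerns
    model_kinds={"operational concept diagram", "mission reference profile",
                 "operational sequence diagram"},
)
draft_view = View(operational, {
    "operational concept diagram": "OCD-v2",  # hypothetical identifiers
    "state transition diagram": "STD-01",  # a builder-view model kind
})
print(draft_view.nonconforming_model_kinds())  # {'state transition diagram'}
```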

11.1.4 Stakeholders

Stakeholders are individuals or groups that could be impacted – positively or negatively – by the architecture or by the system designs that are derived from the architecture. The impact on a stakeholder can be determined by finding out what “concerns” them. Hence, the concept of stakeholder concerns is crucial in performing an effective architecture evaluation.

User needs and mission needs are often the primary focus of discussion and analysis, but the range of stakeholders goes well beyond users and the mission. It is important to examine thoroughly all the kinds of individuals and groups that could be impacted by the architecture. Examples of stakeholders include users, operators, acquirers, owners, suppliers, developers, builders, and maintainers. Stakeholders also include evaluators and authorities engaged in certifying the system for a variety of purposes, such as the readiness of the system for use, the system's regulatory compliance, the system's compliance with various levels of security policy, and the system's fulfillment of legal provisions. Furthermore, it is important to identify stakeholders further upstream and downstream, such as company shareholders, supply chain companies, raw material producers, the general public (for environmental impact), taxpayers (for pocketbook impact), and future generations (for financial impact). On the other hand, it is sometimes wise to deliberately exclude some stakeholders, depending on the overall objectives of the evaluation and its intended scope. For example, some stakeholders might be excluded because the evaluation is purposefully limited in its areas of concern.

11.1.5 Stakeholder Concerns

A concern is something that interests someone because it is important or affects them in some way. This impact could be either positive or negative: it could lead to a benefit for them, or it could be detrimental. Concerns are not specified in any detail but are merely used as dimensions of the problem space that will be characterized during the architecture evaluation process. The detail comes later, when these concerns are translated into measurable value assessment objectives and criteria and architecture analysis objectives and criteria. These measurable attributes are discussed later, since they are key components of the architecture evaluation framework that is the main focus of this chapter.

Concerns are not the same as requirements. The nature of some concerns is often not amenable to the requirements process, since requirements must be precise and unambiguous, must be verifiable in a technical manner, and must be usable by engineers in their design process. Firesmith deals with this in the way he addresses “architecturally significant requirements” (2008). Usually, only the acquirer is authorized to specify requirements on the system, and the acquirer (if there is one) is only one of many stakeholders. During architecture evaluation, one should focus on a small number of concerns for each stakeholder. Most often, there is really just one concern per stakeholder that is most instrumental in getting the architecture right. Sometimes there might be two or three, but rarely more. Later, during the engineering of the system, the design can address more detailed considerations. For architecture evaluation, however, the focus is on those concerns that most affect the architectural features and functions.

Examples of concerns to consider in the assessment include the following items:


Concerns are often expressed as “stories” told by the stakeholders. We can capture these stories in narratives or in various other forms such as use-case diagrams, storyboards, influence diagrams, annotated timelines, mission reference profiles, and marketing prospectuses. These stories are often essential to giving “voice to the customer,” so to speak. It is usually important to capture them in their pure form to avoid imposing our own biases on them, which might lead us to deliver the “right” solution to the wrong problem.

11.1.6 Architecture versus Design

An architecture should be created with stakeholders' concerns in mind. Many of these concerns never make it into the requirements (for the reasons stated earlier). Design, on the other hand, is driven by requirements that have been vetted through the architecture and more detailed analyses of feasibility. Architecture focuses on suitability and desirability, whereas design focuses on compatibility with technologies and other design elements and feasibility of construction and integration.

System architecture deals with high-level principles, concepts, and characteristics represented by general views and models, excluding as much as possible details about implementation technologies and their assimilation such as mechanics, electronics, software, chemistry, human operations, and/or services.

In product line architectures, the architecture necessarily spans several designs. The architecture serves to make the product line cohesive and ensures compatibility and interoperability across the product line. Even for a single product system, the design of the product will likely change over time while the architecture remains constant.

An effective architecture should be as design-agnostic as possible to allow for maximum flexibility in the design tradespace. An effective architecture will also highlight and support trade-offs between conflicting or opposing requirements and concerns. This does not mean that design implementability is ignored during the architecting process. One often learns something about potential designs while evaluating the architecture. Some architectures, although elegant and seemingly addressing the concerns very well, will be discovered to be brittle or possibly unachievable given the available technologies and design approaches. Sometimes design problems are not discovered until well into the design process. In such a case, the architecture evaluation may need to be redone to see what was missed and possibly to identify alternative architectures that avoid this particular design problem. In some cases, the project might need to be abandoned in favor of more promising ventures.

11.1.7 On the Uses of Architecture

An architecture can be used in ways other than merely as a driver of the system design. An architecture can also be used:

- As the basis for operational impact analysis

- In support of bid/no-bid and make/buy decisions

- To determine where to invest in technologies

- In long-term planning to identify follow-on projects

- When training operators and end users

- To support safety and security analyses

- When reviewing features and functions with stakeholders to get their buy-in.

11.1.8 Standardizing on an Architecture Evaluation Strategy

This chapter describes the key principles and concepts relevant to the development and use of an architecture evaluation framework. These concepts are intended for inclusion in the international standard that addresses architecture evaluation (ISO/IEC 42030 2016 draft, intended for publication in 2018). These concepts deal with the strategy by which architecture evaluations are organized and recorded for the purpose of:

- Evaluating the quality of architectures,

- Verifying that an architecture addresses stakeholders' concerns, or

- Supporting decision-making informed by the architectures related to the situation of interest.

This strategy addresses the planning, execution, and documentation of architecture evaluations. It prescribes the structure, properties, and work products of architecture evaluations. This approach also specifies provisions that prescribe desired properties of architecture evaluation methods in order to usefully support architecture evaluations. It provides the basis on which to compare, select, or create value assessment and architecture analysis methods.

11.2 Key Considerations in Evaluating Architectures

When one evaluates an architecture, it is important to make a clear distinction between the factors that contribute to satisfaction of stakeholder concerns and the factors that affect the key measures of what the system does and how well it does it. The former is concerned with how well the fundamental objectives will be met, while the latter is focused on how well the means objectives will be met. These two concepts of “value” are often confused. Planning the effort in a systematic manner can help avoid the confusion and clarify this distinction. As will be shown, the plan drives the overall evaluation effort, which produces a report of what took place and highlights the key findings and recommendations from the effort.

An evaluation framework such as the one shown in Figure 11.4 can help structure the evaluation effort and serve as an easy way to explain to the sponsor what will be accomplished and how this will produce good results. It can help keep the focus on the objectives of the evaluation rather than getting bogged down in the minutiae of the analysis that is to be employed.

Figure 11.4 Architecture evaluation framework

11.2.1 Plan-Driven Evaluation Effort

It is important that thoughtful consideration is given to the way in which the evaluation effort will be performed. The discipline of planning can help immensely in providing a structured way of scoping and organizing the work. It is important to develop a plan and have this plan reviewed by the sponsor of the effort to ensure that the evaluation objectives are clear and understood by all parties involved.

The plan should consider which approach is the best one to be taken, sometimes even employing more than one approach for the overall effort. The approach will apply one or more value assessment methods that provide essential information needed to support the evaluation effort. The plan should also specify the value assessment and architecture analysis methods to be used. The typical contents of an architecture evaluation plan are as follows:

- Purpose and scope

- Evaluation objectives, constraints, criteria, priorities

- Schedule and required resources

- Evaluation frameworks to be used

- Evaluation approaches and methods to be used

- Roles and responsibilities of evaluators

- Required inputs and reference materials

- Expected outputs and deliverables.

The plan should determine how the evaluation results are documented and with whom the report will be shared. The evaluation report should contain the following elements:

- Purpose and scope (which might have changed from the original plan)

- Value assessment objectives and criteria used

- Architecture analysis objectives and criteria used

- Participants involved in the effort (either directly or indirectly)

- Inputs used and their sources

- Frameworks used

- Approaches and methods used

- Method results and rationale

- Observations and findings generated

- Risks and opportunities identified

- Recommendations and regrets.

11.2.2 Objectives-Driven Evaluation

As noted in Chapter 2, it is important to understand the distinction between fundamental objectives and means objectives. In the approach described in this chapter, the fundamental objectives are called Value Assessment Objectives, while the means objectives are called Architecture Analysis Objectives.

The Architecture Evaluation Objectives are what the architecture evaluation effort is trying to achieve. For example, the objective for the architecture evaluation effort could be one of the following:

- Examine the enterprise portfolio to determine adjustments for better alignment of programs and projects with enterprise goals and objectives.

- Examine alternative system designs to establish the basis for a new project.

- Examine system designs proposed by competing contractors to determine the winning bid.

- Determine the best way to incorporate a new technology coming out of the laboratory.

- Find the best way to replace a system in the field that is no longer cost-effective.

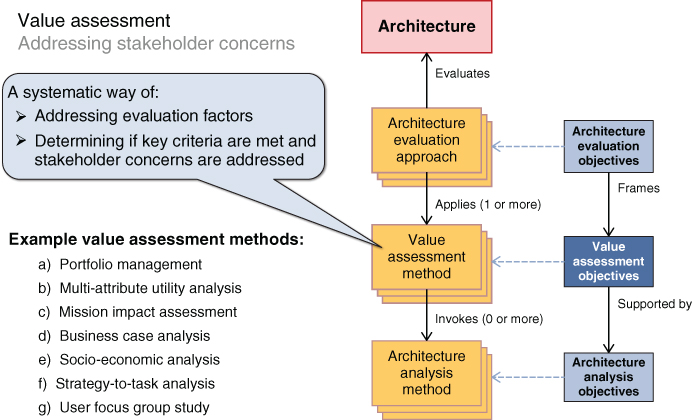

The evaluation methods – namely the Value Assessment Methods and the Architecture Analysis Methods – will use the objectives driving them at that level to establish the assessment and analysis criteria to be used, as illustrated in Figure 11.5.

Figure 11.5 Objectives-driven architecture evaluation

11.2.3 Assessment versus Analysis

Analysis deals with “what” the system does: how well, how often, when, where, and under what conditions. It does not deal directly with the value associated with stakeholder concerns (where “value” signifies worth, importance, significance, or quality). Analysis does not answer the “So What?” question; instead, it focuses on the matter-of-fact results of running models and performing analytics.

Assessment deals with the “goodness” of the architecture, where goodness is defined in terms of how well stakeholder concerns are addressed. Assessment is primarily focused on answering the “So What?” question. Not all concerns are “technical” in nature. Sometimes there can be “sociopolitical” factors to consider, such as equitable distribution of labor, minimal impact to the environment, securing future funding for the program, minimal impact to jobs in particular governmental districts, and providing “early wins” to the naysayers to help avoid negative pressures.

Architects in general are more likely than system designers to care about nontechnical factors. This is one of the key reasons this approach places so much emphasis on stakeholder “concerns” rather than on stakeholder requirements. The designers and other engineers will take the architecture they are given and optimize the design for the requirements they receive. In fact, this approach can serve as the basis for model-based systems engineering (MBSE), in which the requirements themselves are (at least at the top level) based on the models of the architecture.

Example stakeholders to consider in the assessment include users, operators, maintainers, owners, sponsors, acquirers, developers, builders, integrators, suppliers, industrial base, labor force, third parties (e.g., environmental impacts), evaluators, policy makers, certification authorities, and auditors.

Value assessment and architecture analysis have rather different goals and results, as shown in Table 11.1. They also differ in breadth and basis of work: assessment is typically a single, unified activity, while analysis is usually spread among multiple, separate activities. The overall analysis effort is usually divided into more specific analytical tasks focused on individual concerns or on particular system properties or characteristics. Assessment integrates the various analysis results and, taking note of the competing concerns, must thoroughly examine the tradespace to find the architecture that is most balanced and robust.

Table 11.1 Distinctions between Value Assessment and Architecture Analysis

| Characteristics | Value Assessment | Architecture Analysis |
|---|---|---|
| Goal Orientation | Fundamental (or ends) objectives (often multilevel) | Means objectives (often multilevel) |
| Results | Passes “judgment” | Matters of fact |
| Breadth | Single, unified activity | Multiple, separate activities |
| Basis of work | Synthesis of analysis results | Technical and other analyses |
| Scope | Utility, value, worth, priorities, ranking, trade-offs | Ways and means |
| Focus | Effectiveness, efficiencies, equities | Performance determination, limits identification (bounds) |
| Typical figures of merit | Measures of effectiveness (MOEs), return on investment (ROI), break-even point, key success factors (KSFs) | Measures of performance (MOPs), key performance parameters (KPPs), technical performance measurements (TPMs), quality metrics |
| Key items of interest | Competing concerns, performing trade-offs, achieving balance and robustness | Individual concerns, determining system properties and characteristics |
| Primary questions | So what? Who cares? What impacts? Why? Why not? | What, where, when, how, how much, how often? |

Assessment is concerned with utility and value, worth and priorities, ranking and trade-offs (i.e., the fundamental objectives, elsewhere sometimes called the “ends” objectives), whereas analysis is more concerned with the ways and means of achieving the architectural objectives (i.e., the means objectives). Assessment will focus on how effective and efficient the architecture will be, leading to its use of things such as measures of effectiveness (MOEs) and key success factors (KSFs). Analysis, on the other hand, will often focus on things like measures of performance (MOPs), key performance parameters (KPPs), technical performance measurements (TPMs), and other quality metrics.
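The split between analysis and assessment can be sketched as two functions: one returns a matter-of-fact MOP, and the other passes judgment by mapping that MOP onto a value scale a stakeholder cares about. Everything here is a hypothetical illustration: the latency model, the threshold and objective values, and the linear value function between them are assumptions chosen only to show the division of labor.

```python
def analyze_latency_ms(relay_nodes: int, base_ms: float = 40.0, per_node_ms: float = 15.0) -> float:
    """Analysis: returns a matter-of-fact MOP (end-to-end latency) from a hypothetical model."""
    return base_ms + per_node_ms * relay_nodes


def assess_latency(latency_ms: float, threshold_ms: float, objective_ms: float) -> float:
    """Assessment: maps the MOP onto a 0..1 value scale (an assumed linear value function).

    Latency at or worse than the threshold is worth 0; at or better than the objective, 1.
    """
    if latency_ms >= threshold_ms:
        return 0.0
    if latency_ms <= objective_ms:
        return 1.0
    return (threshold_ms - latency_ms) / (threshold_ms - objective_ms)


mop = analyze_latency_ms(relay_nodes=3)  # 85 ms: a fact, not a judgment
value = assess_latency(mop, threshold_ms=120.0, objective_ms=60.0)
print(f"MOP {mop:.0f} ms -> value {value:.2f}")  # MOP 85 ms -> value 0.58
```

Keeping the two steps separate means the same analysis result can be reassessed if stakeholders revise the threshold or objective, without rerunning the analysis.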

11.3 Architecture Evaluation Elements

The key elements of an architecture evaluation are as follows:

- Architecture evaluation approach

- Value assessment methods

- Architecture analysis methods.

11.3.1 Architecture Evaluation Approach

An architecture evaluation approach is a way to deal with the architecture to help determine key characteristics, properties, knowledge or skills of future, current, or past systems related to that architecture. The evaluation approach is a “line of attack” to be used in evaluating the architecture. Sometimes a multipronged approach is justified, using more than one approach to address different aspects of the situation for that particular evaluation effort. The distinction between approach and method is illustrated in Figure 11.6 and explained in greater detail later. In most cases, a single approach is satisfactory for a particular evaluation effort. However, there are cases where using multiple approaches can be helpful or sometimes even the only practical way to proceed.

Figure 11.6 Architecture evaluation approaches: choosing one or more “lines of attack”

Evaluation approaches come in various forms, such as modeling and simulation, prototype demonstration, system experiment, model walkthrough, technical analysis, quality workshop, expert panel, user symposium, concept review, customer focus group, and independent audit.

11.3.2 Architecture Evaluation Objectives

The architecture evaluation approach is driven by the architecture evaluation objectives and is carried out by applying one or more value assessment methods. The approach integrates the assessment results, identifies key findings, and develops recommendations. If more than one approach was used, the findings from each separate approach are examined to identify areas of agreement or conflict, and any discrepancies found are resolved if necessary. A synthesis of the results is captured in an evaluation report and communicated to key stakeholders. If applicable, the results are reported to the decision-maker, who may either make a decision based on the findings and recommendations or, in some cases, ask for some part of the evaluation to be redone.
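When several approaches are used, the comparison of their findings could be sketched as grouping verdicts by alternative and separating agreements from conflicts. The verdict labels (“keep”, “drop”), the approach names used as keys, and the alternative names are hypothetical; resolving a conflict remains a judgment for the evaluators, not something the code decides.

```python
from collections import defaultdict


def reconcile(findings: dict[str, dict[str, str]]) -> tuple[dict[str, str], dict[str, dict[str, str]]]:
    """Group verdicts by alternative and separate agreements from conflicts.

    findings maps approach name -> {alternative name -> verdict}. An alternative
    examined by only one approach is treated as agreed.
    """
    by_alternative: dict[str, dict[str, str]] = defaultdict(dict)
    for approach, verdicts in findings.items():
        for alternative, verdict in verdicts.items():
            by_alternative[alternative][approach] = verdict

    agreed: dict[str, str] = {}
    conflicts: dict[str, dict[str, str]] = {}
    for alternative, verdicts in by_alternative.items():
        if len(set(verdicts.values())) == 1:
            agreed[alternative] = next(iter(verdicts.values()))
        else:
            conflicts[alternative] = dict(verdicts)  # left for the evaluators to resolve
    return agreed, conflicts


agreed, conflicts = reconcile({
    "modeling and simulation": {"Alt-1": "keep", "Alt-2": "drop"},
    "concept review": {"Alt-1": "keep", "Alt-2": "keep"},
})
print(agreed)     # {'Alt-1': 'keep'}
print(conflicts)  # {'Alt-2': {'modeling and simulation': 'drop', 'concept review': 'keep'}}
```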

11.3.3 Evaluation Approach Examples

Here is an example of how this could be applied. Assume the following evaluation objectives:

- Recommend changes to portfolio of systems and technologies

- Recommend architecture studies to be conducted

Given these objectives, the following approaches might be chosen:

- Modeling and simulation (performance of current systems)

- Prototype demonstration (benefits of promising technologies)

- System experiment (impact of changing the concept of operations)

- Concept review (feedback from key stakeholders after looking at future architecture alternatives and changing conditions and scenarios)

- Back-of-the-envelope calculations (to quickly determine which areas to focus on).

An examination of the performance of currently deployed systems could be an important consideration, since these systems might be underperforming against their specifications, not performing well relative to the competition, or not performing well against the actions of adversaries. It could be helpful to talk with the research department, which may have built some advanced prototypes with promising features that could be employed in new systems. One could also examine an old system to see whether it can be used with different operational methods to gain some advantage; this approach could be useful since it will likely involve low cost and risk. During a concept review, a set of architectural diagrams and mock-ups can be shown to users and operators to get their reactions to the new features and functions. Finally, one should consider doing a “back-of-the-envelope” calculation to determine which alternatives can be abandoned without expending a large amount of effort examining them in detail.

So, one should be wary of picking only one approach for evaluating an architecture when multiple approaches might be more cost-effective and timely. The tendency is to use only modeling and simulation when there are often faster, better, and cheaper ways to get the same or better architecture evaluation results.
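A back-of-the-envelope screen could look like the following sketch. It assumes each alternative has only a rough pessimistic and optimistic estimate of a single figure of merit (higher is better) and drops an alternative when even its optimistic estimate falls below another alternative's pessimistic estimate. The function name, the single-figure simplification, and the numbers are hypothetical.

```python
def screen(estimates: dict[str, tuple[float, float]]) -> list[str]:
    """Return the alternatives worth detailed study.

    estimates maps alternative -> (pessimistic, optimistic) value of one figure of merit,
    higher is better, with pessimistic <= optimistic. An alternative is dropped when its
    optimistic value is below the best pessimistic value among all alternatives, that is,
    below some other alternative's pessimistic value.
    """
    best_floor = max(pessimistic for pessimistic, _ in estimates.values())
    return [name for name, (_, optimistic) in estimates.items() if optimistic >= best_floor]


rough = {"Alt-1": (0.6, 0.9), "Alt-2": (0.2, 0.5), "Alt-3": (0.4, 0.7)}
print(screen(rough))  # ['Alt-1', 'Alt-3']
```

The screen is deliberately conservative: it discards only alternatives that cannot win even under favorable assumptions, leaving the closer calls for more detailed evaluation.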

11.3.4 Value Assessment Methods

The architecture evaluation approach chosen will dictate which value assessment methods are applicable. Whereas an approach is a general, often informal, way in which to do something, a method is a more systematic way of doing something, embodied in an orderly and logical arrangement, often represented as a series of steps. A method that is used often will commonly be captured in a specified procedure and posted in a library for later reuse.

A value assessment method is used to determine whether the architecture meets the value assessment objectives, which were specified by the architecture evaluation approach. Each value assessment method specifies the criteria to be used in examination of architecture(s) and applies value assessment criteria to address specific evaluation factors that are derived from one or more value assessment objectives. When necessary, each evaluation factor can be decomposed into lower level factors. This method also will determine if key criteria are met and to what degree stakeholder concerns are addressed.

As illustrated in Figure 11.7, value assessment methods come in various forms, such as portfolio management process, multiattribute utility analysis, mission impact assessment, business case analysis, socioeconomic analysis, strategy-to-task analysis, and user focus group study.

Figure 11.7 Value assessment methods: addressing stakeholder concerns
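Multiattribute utility analysis, one of the methods listed above, is often applied in an additive form: each alternative's single-attribute utilities (on a 0 to 1 scale) are combined using stakeholder-derived weights. The sketch below assumes that additive form, which presumes the attributes are preferentially independent; the attributes, weights, and scores are hypothetical.

```python
def weighted_utility(utilities: dict[str, float], weights: dict[str, float]) -> float:
    """Additive multiattribute utility: weighted average of single-attribute utilities (0..1)."""
    total_weight = sum(weights.values())
    return sum(weights[attr] * utilities[attr] for attr in weights) / total_weight


weights = {"mission effectiveness": 0.5, "affordability": 0.3, "schedule": 0.2}
alternatives = {
    "Alt-1": {"mission effectiveness": 0.9, "affordability": 0.4, "schedule": 0.6},
    "Alt-2": {"mission effectiveness": 0.7, "affordability": 0.8, "schedule": 0.7},
}
ranking = sorted(alternatives,
                 key=lambda name: weighted_utility(alternatives[name], weights),
                 reverse=True)
for name in ranking:
    print(name, round(weighted_utility(alternatives[name], weights), 2))
```

With these hypothetical numbers, Alt-2 (about 0.73) outranks Alt-1 (about 0.69) even though Alt-1 scores higher on mission effectiveness, which is the kind of trade-off the assessment is meant to surface.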

A value assessment method can use manual or tool-based techniques and other suitable enablers. Additional resources such as test environments, discrete event simulations, queuing theory models, and Petri nets, among other architecture model evaluation techniques, can also be used to perform the evaluation. These same methods can often be applied to operational concepts or to system designs as well. However, when they are applied to something other than an architecture, the value assessment objectives and criteria often differ from those applied to the architecture.

11.3.5 Value Assessment Criteria

Each one of the value assessment criteria applied by the value assessment method is aligned with one or more value assessment objectives. Full coverage of all the objectives in the scope of the architecture evaluation can require the use of multiple value assessment methods.
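The alignment rule can be checked mechanically: every criterion should trace to at least one value assessment objective, and every objective should be covered by some criterion from the selected methods. In this hypothetical sketch, the objective labels, criterion names, and alignments are invented for illustration; an uncovered objective signals that another method may be needed.

```python
def check_alignment(objectives: set[str],
                    methods: dict[str, dict[str, set[str]]]) -> tuple[set[str], list[str]]:
    """Return (objectives no criterion covers, criteria aligned with no objective).

    methods maps method name -> {criterion -> objectives that criterion is aligned with}.
    """
    covered: set[str] = set()
    orphan_criteria: list[str] = []
    for method, criteria in methods.items():
        for criterion, aligned in criteria.items():
            relevant = aligned & objectives
            if not relevant:
                orphan_criteria.append(f"{method}: {criterion}")
            covered |= relevant
    return objectives - covered, orphan_criteria


objectives = {"systems to add, modify, or drop", "technologies to adopt"}
methods = {
    "portfolio management process": {"portfolio balance": {"systems to add, modify, or drop"}},
    "mission impact assessment": {
        "mission utility": {"systems to add, modify, or drop"},
        "operator preference": set(),  # aligned with nothing in scope
    },
}
uncovered, orphans = check_alignment(objectives, methods)
print(uncovered)  # {'technologies to adopt'}
print(orphans)    # ['mission impact assessment: operator preference']
```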

Benefits should be calculated as net benefits, where losses are “subtracted” from gains, to ensure a comprehensive look at the overall benefit to the wide diversity of stakeholders. Furthermore, some stakeholders might gain while others lose on the same architecture criteria. It is important to get a balanced view of the landscape of stakeholder concerns, not just to focus on those who benefit.

Of course, determination of net benefit is rarely as easy as doing the simple math. Often, the benefits and costs are evaluated on incommensurate scales. The value assessment method should specify how the measurement scales are used, the protocols for taking the measurements, and how the measurements can be compared. There might need to be a protocol for converting from one scale to another to make comparisons more feasible and accurate.
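A minimal Python sketch of the net-benefit idea, assuming the simplest possible conversion protocol: each criterion gets a linear value function anchored at a hypothetical "worst" and "best" point on its native scale, so that measurements in different units land on a common 0-to-1 scale. The criterion names, anchors, stakeholder assignments, and numbers are illustrative assumptions, not data from this chapter. Gains and losses are computed relative to a baseline, so a negative total flags a stakeholder who loses under the candidate architecture.

```python
from collections import defaultdict

# Hypothetical criteria: name -> (worst, best) anchors on the native scale.
# The anchors define an assumed linear conversion to a common 0..1 scale.
CRITERIA = {
    "throughput_msgs_per_s": (100.0, 500.0),  # higher is better
    "annual_cost_musd": (50.0, 10.0),         # lower is better
}


def to_common_scale(x, worst, best):
    """Linearly map x so that worst -> 0.0 and best -> 1.0, clipped to [0, 1]."""
    if best == worst:
        raise ValueError("best and worst anchors must differ")
    v = (x - worst) / (best - worst)
    return min(1.0, max(0.0, v))


def net_benefit(baseline, candidate, affected):
    """Sum, per stakeholder, the change in common-scale value versus the baseline.

    affected maps each criterion to the stakeholders who feel its effect.
    Positive contributions are gains; negative contributions are losses.
    """
    totals = defaultdict(float)
    for criterion, (worst, best) in CRITERIA.items():
        delta = (to_common_scale(candidate[criterion], worst, best)
                 - to_common_scale(baseline[criterion], worst, best))
        for stakeholder in affected[criterion]:
            totals[stakeholder] += delta
    return dict(totals)


baseline = {"throughput_msgs_per_s": 200.0, "annual_cost_musd": 20.0}
candidate = {"throughput_msgs_per_s": 400.0, "annual_cost_musd": 30.0}
affected = {
    "throughput_msgs_per_s": ["operators", "acquirer"],
    "annual_cost_musd": ["acquirer", "taxpayers"],
}
print(net_benefit(baseline, candidate, affected))
# {'operators': 0.5, 'acquirer': 0.25, 'taxpayers': -0.25}
```

Reporting only an aggregate would hide the taxpayers' loss behind the operators' and acquirer's gains, which is why the breakdown is kept per stakeholder.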

11.3.5.1 Information Needs

The information needed by the value assessment method can be specified in the form of information need statements. Information products will be used by the value assessment method to inform the production of value assessment results.

Some of the information needed could come from architecture analyses. The use of architecture analysis methods is optional since it could be possible to get all the information needed by the value assessment method from sources such as an architecture description, subject matter experts, and system experiments. There is no need to conduct analyses when the information can be readily obtained elsewhere. Analysis can often be time-consuming and expensive and should be used prudently.

11.3.5.2 Value Assessment Example

Here is an example of how this could be applied. Assume the following evaluation objectives:

- Recommend changes to portfolio of systems and technologies

- Recommend architecture studies to be conducted

The relevant value assessment objectives might be the following:

- Determine which systems to add, modify, or drop

- Determine which technologies to adopt

Given these objectives, the following value assessment methods might be chosen:

- Portfolio management

- Mission impact assessment

- Business case analysis
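Because full coverage of the value assessment objectives can require several methods, it can help to record which objectives each method's criteria address and check for gaps. The sketch below does that for the example; the shorthand objective keys and the mapping of methods to objectives are hypothetical assumptions made for illustration, since the example does not specify them.

```python
# Value assessment objectives from the example (keys are shorthand).
OBJECTIVES = {
    "systems": "Determine which systems to add, modify, or drop",
    "technologies": "Determine which technologies to adopt",
}

# Hypothetical mapping: which objectives each method's criteria address.
COVERAGE = {
    "Portfolio management": {"systems"},
    "Mission impact assessment": {"systems"},
    "Business case analysis": {"systems", "technologies"},
}


def uncovered(selected_methods):
    """Return the objectives not addressed by any of the selected methods."""
    covered = set()
    for method in selected_methods:
        covered |= COVERAGE[method]
    return set(OBJECTIVES) - covered


print(uncovered(["Portfolio management", "Mission impact assessment"]))
# {'technologies'}
print(uncovered(list(COVERAGE)))  # all three methods
# set()
```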

Portfolio management is a technique for grouping programs and projects into “baskets” and determining the right mix within each basket. The portfolio management process could be fed the results of mission impact assessment and business case analysis since this information can be used to make portfolio adjustment recommendations.
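The chapter does not prescribe how the right mix within a basket is determined. One simple formulation, swapped in here purely for illustration, is a 0/1 knapsack: choose the subset of candidate systems that maximizes total mission impact (as fed by mission impact assessment) without exceeding a budget (as fed by business case analysis). The candidate names, integer costs, and impact scores are hypothetical.

```python
def best_mix(candidates, budget):
    """0/1 knapsack over one basket.

    candidates: list of (name, cost, impact), with cost a non-negative integer
    (e.g., whole $M). Returns (total_impact, selected_names) that maximizes
    total impact with total cost <= budget.
    """
    # best[b] holds the best (impact, names) found so far for a budget of b.
    best = [(0.0, ())] * (budget + 1)
    for name, cost, impact in candidates:
        # Walk budgets downward so each candidate is picked at most once.
        for b in range(budget, cost - 1, -1):
            prev_impact, prev_names = best[b - cost]
            if prev_impact + impact > best[b][0]:
                best[b] = (prev_impact + impact, prev_names + (name,))
    return best[budget]


# Hypothetical candidates: (name, cost in $M, mission impact score).
basket = [("A", 4, 7.0), ("B", 3, 5.0), ("C", 2, 3.0), ("D", 5, 8.0)]
print(best_mix(basket, 7))  # (12.0, ('A', 'B'))
```

Dynamic programming over integer budgets is adequate for a handful of candidates; constraints that couple several baskets would need a richer model.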

Mission impact is often assessed using some kind of mission models that represent the ways the mission is or will be conducted along with various scenarios and contingencies accounted for. This can be as complicated as a large set of computer-based simulations or could be as simple as a “board game” where the architecture is used as the basis for the game play.
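At the simple end of that spectrum, a mission model can be little more than a table of scenarios with assumed probabilities and an estimate of how well each architecture supports the mission in each one. The sketch below uses hypothetical scenario names, probabilities, and effectiveness scores on a 0-to-1 scale, and reports both the probability-weighted expected effectiveness and the worst case, since an architecture that wins on average can still fare badly in a contingency.

```python
import math

# Hypothetical scenarios and their assumed probabilities of occurrence.
SCENARIOS = {"nominal": 0.7, "degraded_comms": 0.2, "contingency": 0.1}


def mission_impact(effectiveness, scenarios=SCENARIOS):
    """Return (expected, worst_case) mission effectiveness across scenarios."""
    if not math.isclose(sum(scenarios.values()), 1.0):
        raise ValueError("scenario probabilities must sum to 1")
    expected = sum(p * effectiveness[s] for s, p in scenarios.items())
    worst_case = min(effectiveness[s] for s in scenarios)
    return expected, worst_case


# Hypothetical effectiveness of two architectures in each scenario (0..1).
ARCH_X = {"nominal": 0.9, "degraded_comms": 0.6, "contingency": 0.3}
ARCH_Y = {"nominal": 0.8, "degraded_comms": 0.75, "contingency": 0.6}

for name, eff in [("X", ARCH_X), ("Y", ARCH_Y)]:
    expected, worst = mission_impact(eff)
    print(f"{name}: expected={expected:.2f}, worst case={worst:.2f}")
# X: expected=0.78, worst case=0.30
# Y: expected=0.77, worst case=0.60
```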

Business case analysis is quite commonly used in the corporate boardroom to assess business proposals to determine likelihood of success, potential gains and losses, relevant risks and hazards, and alternative pathways to proceed. This method aids decision-making by identifying and comparing alternatives through the examination of mission and business impacts (both financial and nonfinancial), risks, and sensitivities. The strength of the business case is every bit as important as the value inherent in the proposed architecture, which is why architecture evaluation should not place its entire emphasis on value metrics alone.
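The financial part of a business case is often summarized with net present value (NPV), the sum over periods t of each cash flow CF_t divided by (1 + r)^t for a discount rate r. NPV is used here only as one familiar example, not as a measure this chapter prescribes, and it captures none of the nonfinancial impacts mentioned above. The cash flows are hypothetical; sweeping the discount rate is a minimal form of the sensitivity examination the method calls for.

```python
def npv(cash_flows, rate):
    """Net present value; cash_flows[t] occurs at the end of period t (t=0 is now)."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def rate_sensitivity(cash_flows, rates):
    """NPV at each discount rate, to see where the business case changes sign."""
    return {r: npv(cash_flows, r) for r in rates}


# Hypothetical cash flows ($M): up-front investment, then four years of returns.
flows = [-100.0, 30.0, 40.0, 50.0, 20.0]
for r, value in rate_sensitivity(flows, [0.0, 0.10, 0.15, 0.20]).items():
    print(f"r={r:.2f}  NPV={value:7.2f}")
# r=0.00  NPV=  40.00
# r=0.10  NPV=  11.56
# r=0.15  NPV=   0.64
# r=0.20  NPV=  -8.64
```

The sign change between 15% and 20% shows how a business case that looks strong at one discount rate can fail at another.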

11.3.6 Architecture Analysis Methods

An architecture analysis method examines an architecture in the context of specific evaluation factors. Those factors derive from the architecture analysis objectives specified by the value assessment method, which in turn align with the concerns that define the scope of the architecture evaluation.

As illustrated in Figure 11.8, architecture analysis methods come in various forms, such as functional analysis, object-oriented analysis, performance analysis, behavioral analysis, cost and schedule analysis, risk and opportunity analysis, failure modes, effects and criticality analysis (FMECA), focus group surveys, and Delphi method.

Figure 11.8 Architecture analysis methods: measuring architecture attributes

To address the architecture analysis objectives that support the architecture evaluation objectives, the architecture analysis method needs to identify the architecture attributes of interest and the information about them that is to be analyzed using the specified architecture analysis criteria.

11.3.6.1 Architecture Analysis Criteria

Architecture analysis criteria can be thought of as conditions on the attributes. The outcomes of applying the attribute criteria are information products that help in determining the degree to which the architecture addresses the stakeholder concerns. Architecture analysis methods use manual or tool-based techniques and other suitable enablers to measure and analyze attributes. Additional resources such as test environments are sometimes required to perform the analysis. Examples of relevant criteria for some analysis objectives are shown in Table 11.2.

Table 11.2 Architecture Analysis Objectives and Criteria Examples

| Architecture Analysis Objectives | Architecture Analysis Criteria |
|---|---|
| Determine minimum safe operating condition | Apply the XYZ safety spectrum framework and report the safety level according to the standard levels in the framework |
| Determine operational throughput | Architecture must enable throughput at least as high as currently deployed systems to be further considered in the analysis |
| Minimize training time | Determine training hours per year to maintain operator certification levels per standard practice |
| Determine maximum operating speed | Architecture must enable performance at least as high as currently deployed systems plus an additional 20% |
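Two of the criteria in Table 11.2 are stated relative to currently deployed systems, which makes them straightforward to encode as conditions on attributes. The sketch below assumes hypothetical attribute names (`throughput`, `max_speed`), baseline values, and alternatives, and treats the throughput criterion as a gate, as the table's wording ("to be further considered in the analysis") suggests.

```python
# Hypothetical attribute values for the currently deployed system.
BASELINE = {"throughput": 1000.0, "max_speed": 250.0}

# Criteria from Table 11.2 expressed as conditions on attributes.
CRITERIA = {
    "Determine operational throughput":
        lambda arch: arch["throughput"] >= BASELINE["throughput"],
    "Determine maximum operating speed":
        lambda arch: arch["max_speed"] >= 1.2 * BASELINE["max_speed"],  # +20%
}
GATE = "Determine operational throughput"


def evaluate(alternatives):
    """Apply every criterion to every alternative: {alt: {objective: passed}}."""
    return {name: {obj: check(attrs) for obj, check in CRITERIA.items()}
            for name, attrs in alternatives.items()}


def still_considered(results):
    """Alternatives that pass the gating criterion stay in the analysis."""
    return [name for name, passed in results.items() if passed[GATE]]


# Hypothetical architecture alternatives.
ALTERNATIVES = {
    "A": {"throughput": 1200.0, "max_speed": 310.0},
    "B": {"throughput": 1000.0, "max_speed": 290.0},
    "C": {"throughput": 900.0, "max_speed": 400.0},
}
results = evaluate(ALTERNATIVES)
print(still_considered(results))  # ['A', 'B']
```

Alternative C is dropped despite its high speed because it fails the throughput gate, while B stays in the analysis but fails the speed criterion.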

Information sources for an architecture analysis method can include such things as architecture description, system description, requirements documents, use case descriptions, design documents, prototypes, system engineering and test plan, deployed systems, results from related analysis, current system/program risks, issues and management concerns, system analysis results, mission analysis results, business analysis results, stakeholder analysis results, and modeling and simulation results.

11.3.6.2 Measurement Scales and Protocols

The architecture analysis method could specify a measurement protocol or some other way for gathering information on the attributes and could specify an analysis protocol or some other approach for processing this information to analyze one or more architecture attributes. It does this by applying architecture analysis criteria specified by the method to address the information needs of the higher level value assessment method. This is illustrated in Figure 11.9.

Figure 11.9 Architecture measurement scales and protocols

The architecture analysis method, where appropriate, will specify measurement scales and protocols in support of the analysis scales and protocols. The Measurement Process from ISO/IEC 15939 can be used as a basis for defining and applying measurement scales and protocols. This International Standard “identifies the activities and tasks that are necessary to successfully identify, define, select, apply and improve measurement within an overall project or organizational measurement structure. It also provides definitions for measurement terms commonly used within the system and software industries” (ISO/IEC 15939, 2007). The key elements of the standard's measurement process are illustrated in Figure 11.10.

Figure 11.10 Key elements in the measurement process of ISO/IEC 15939
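ISO/IEC 15939 builds information products up in layers: base measures obtained by applying a measurement method to an attribute, derived measures computed from base measures by a measurement function, and indicators produced by an analysis model whose values are interpreted against decision criteria. The sketch below walks that chain once for a hypothetical architecture measure (the fraction of requirements allocated to architecture elements); the measure, the counts, and the green/yellow/red thresholds are illustrative assumptions, not content of the standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BaseMeasure:
    """Result of applying a measurement method to a single attribute."""
    name: str
    value: float
    unit: str


def ratio(numerator: BaseMeasure, denominator: BaseMeasure) -> float:
    """Measurement function: combine two base measures into a derived measure."""
    if denominator.value == 0:
        raise ValueError(f"{denominator.name} must be nonzero")
    return numerator.value / denominator.value


def indicator(value: float, green: float, yellow: float) -> str:
    """Analysis model with decision criteria: map a derived measure to a rating."""
    if value >= green:
        return "green"
    if value >= yellow:
        return "yellow"
    return "red"


allocated = BaseMeasure("requirements allocated to elements", 160.0, "count")
total = BaseMeasure("total requirements", 180.0, "count")
coverage = ratio(allocated, total)  # derived measure, about 0.89
print(indicator(coverage, green=0.95, yellow=0.80))  # yellow
```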

11.4 Steps in an Architecture Evaluation Process

There is no definitive process for conducting an architecture evaluation. The concepts described in this chapter can be incorporated into a variety of methodologies or corporate procedural guidelines. However, the following steps represent one way of using these ideas in an architecture evaluation effort.

- Determine purpose and scope of the architecture

- Identify stakeholders and concerns

- Establish evaluation objectives

- Identify or develop architecture alternatives

- Identify and implement architecture evaluation approaches

- Define and implement value assessment methods

- Define and implement architecture analysis methods

- Synthesize and present findings and recommendations to decision-maker

- Document architecture features and functions

- Communicate results and incorporate feedback

These steps will address all the concepts described in this chapter and provide a reasonable path toward implementing them in an architecture evaluation effort.

11.5 Example Evaluation Taxonomy

An evaluation framework can help organize the effort using the concepts discussed so far. Part of the framework would be a taxonomy of the approaches, assessment methods, and analysis methods. An example follows. In this example, the evaluation objectives are to (i) maximize the mission impact–cost ratio, (ii) maximize the business impact–cost ratio, and (iii) minimize environmental impact. Given these objectives, three evaluation approaches are employed: (i) system experiment, (ii) modeling and simulation, and (iii) prototype demonstration. Under the modeling and simulation approach, for example, three of the value assessment methods used are (i) environmental impact assessment, (ii) mission impact assessment, and (iii) business impact assessment.

11.5.1 Business Impact Factors

As illustrated in Figure 11.11, within the business impact assessment, three architecture analysis methods are employed: (i) cost and schedule analysis, (ii) risk and opportunity analysis, and (iii) return on investment.

Figure 11.11 Business impact methods example

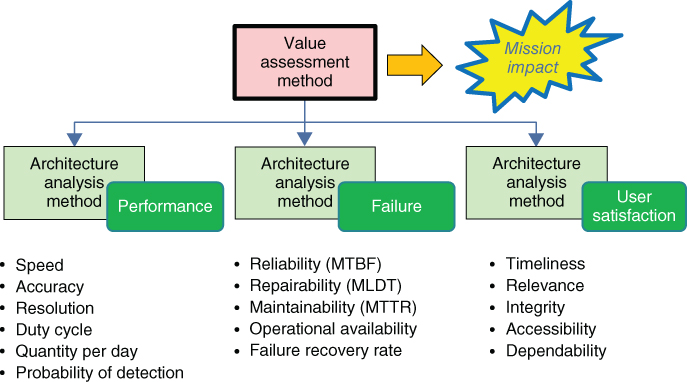

11.5.2 Mission Impact Factors

Within the mission impact assessment, three architecture analysis methods are employed: (i) performance analysis, (ii) failure analysis, and (iii) user satisfaction (Figure 11.12).

Figure 11.12 Mission impact methods example
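The taxonomy in this example can be held as a simple nested structure, which also makes unpopulated branches visible. The sketch below uses only the approaches and methods named in 11.5; branches the example does not populate (the system experiment and prototype demonstration approaches, and the analysis methods under environmental impact assessment) are left empty rather than invented.

```python
# Taxonomy from the 11.5 example:
# evaluation approach -> value assessment method -> architecture analysis methods.
TAXONOMY = {
    "System experiment": {},
    "Modeling and simulation": {
        "Environmental impact assessment": [],
        "Mission impact assessment": [
            "Performance analysis", "Failure analysis", "User satisfaction"],
        "Business impact assessment": [
            "Cost and schedule analysis", "Risk and opportunity analysis",
            "Return on investment"],
    },
    "Prototype demonstration": {},
}


def paths(taxonomy):
    """Yield (approach, value method, analysis method); None marks an empty level."""
    for approach, value_methods in taxonomy.items():
        if not value_methods:
            yield (approach, None, None)
        for value_method, analyses in value_methods.items():
            if not analyses:
                yield (approach, value_method, None)
            for analysis in analyses:
                yield (approach, value_method, analysis)


all_paths = list(paths(TAXONOMY))
gaps = [p for p in all_paths if None in p]
print(len(all_paths), len(gaps))  # 9 3
```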

11.5.3 Architecture Attributes

Using the same example as earlier, some possibly relevant architecture attributes of interest are shown as follows. As can be seen from this particular example, the large number of things to keep track of and to roll up into the overall evaluation results can be overwhelming. This is why a well-structured evaluation framework can be helpful in getting the work done in an efficient and effective manner (Figure 11.13).

Figure 11.13 Architecture attributes example

The roll up of results from the architecture analysis level to the value assessment level will be specified in the value assessment method. This roll up is often not merely additive. Sometimes complex mathematics or models are required to do proper integration of the results from one level to another. This is also true as you roll up value assessment results into the evaluation approach level. The evaluation approach should be defined in such a way that you know beforehand how the integration will occur.
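To see why the roll up is often not merely additive, compare three ways of combining analysis-level results into one value assessment score. The weighted sum lets a strong result compensate for a weak one, the weighted geometric mean penalizes a weak result more heavily, and the weakest-link rule lets the worst result dominate. The scores, equal weights, and choice of rules are illustrative assumptions; Chapter 10 covers the mathematical techniques properly.

```python
import math

# Hypothetical analysis-level scores on a 0..1 scale for one architecture.
SCORES = {"performance": 0.9, "failure": 0.9, "user_satisfaction": 0.1}
WEIGHTS = {"performance": 1 / 3, "failure": 1 / 3, "user_satisfaction": 1 / 3}


def weighted_sum(scores, weights):
    """Fully compensatory: a high score can offset a low one."""
    return sum(weights[k] * scores[k] for k in scores)


def weighted_geometric_mean(scores, weights):
    """Partially compensatory: a near-zero score drags the result down sharply.

    Assumes the weights sum to 1.
    """
    return math.prod(scores[k] ** weights[k] for k in scores)


def weakest_link(scores):
    """Non-compensatory: the worst result determines the roll up."""
    return min(scores.values())


print(f"{weighted_sum(SCORES, WEIGHTS):.2f}")             # 0.63
print(f"{weighted_geometric_mean(SCORES, WEIGHTS):.2f}")  # 0.43
print(f"{weakest_link(SCORES):.2f}")                      # 0.10
```

The same three analysis results yield 0.63, 0.43, or 0.10 depending on the rule, which is why the value assessment method should fix the roll-up rule before results come in.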

This chapter describes how to build an architecture evaluation framework that can be used in the concept exploration activities. See Chapter 10 for details on how to apply mathematical techniques to rolling up the results during concept exploration. Integrating the results at each level in the framework is essential in progressing from the factors examined at the Architecture Analysis level to the next layer during Value Assessment where you need to understand how the architecture supports the “value proposition” as portrayed by the evaluation objectives and criteria.

11.6 Summary

Evaluating an architecture is an essential step toward exploring the entire Decision Space. The Decision Space represents the range of choices that can be examined during the life of a system. This chapter describes a standard approach for doing architecture evaluation along with guidance on how to use these concepts. Using a structured evaluation framework can help ensure that the full tradespace is considered and that all key stakeholder concerns are properly addressed. It is important to make decisions based on the fundamental objectives that tie to the key stakeholder concerns rather than focusing exclusively on the means objectives that are more closely related to the system requirements. This evaluation can serve to validate proposed requirements for the system and to ensure that a more balanced solution is used as the basis for system design.

11.7 Key Terms

- Architecture: Fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution (ISO/IEC/IEEE 42010:2011).

- Architecture Analysis Method: A way to examine an architecture in the context of specific analysis criteria. Architecture analysis methods come in various forms, such as functional analysis, object-oriented analysis, performance analysis, behavioral analysis, cost and schedule analysis, risk and opportunity analysis, failure modes, effects and criticality analysis (FMECA), focus group surveys, and Delphi method.

- Architecture Attribute: Quality or feature regarded as a characteristic or an inherent part of an architecture as a whole, of the system(s) of interest that conforms to the architecture or of the environment in which the system(s) are situated.

- Architecture Evaluation: Making a judgment or determination about the value, worth, significance, importance, or quality of an architecture.

- Architecture Evaluation Framework: Conventions, principles, and practices for evaluating architectures that are established specific to a domain of application, a collection of concerns to be examined, or a methodology or set of mechanisms to be applied.

- Architecture Evaluation Objective: Something toward which the architecture evaluation work is to be directed, a strategic position to be attained, a purpose to be achieved, or a set of questions to be answered by the architecture evaluation effort (adapted from A Guide to the Project Management Body of Knowledge (PMBOK® Guide) — Fourth Edition).

- Concern: Interest in a system relevant to one or more of its stakeholders (ISO/IEC/IEEE 42010:2011).

- Criterion: Principle, standard, rule, or test on which a judgment or decision can be based (adapted from A Guide to the Project Management Body of Knowledge (PMBOK® Guide) — Fourth Edition).

- Evaluation Approach: A general way to deal with the architecture to determine key characteristics, properties, knowledge or skills of future, current, or past systems related to that architecture that are relevant to the evaluation. Approaches come in various forms, such as modeling and simulation, prototype demonstration, system experiment, model walkthrough, technical analysis, quality workshop, expert panel, user symposium, concept review, customer focus group, and independent audit.

- Stakeholder: Individual, team, organization, or classes thereof having an interest in a system (ISO/IEC/IEEE 42010:2011). Examples of stakeholders include users, operators, acquirers, owners, suppliers, architects, developers, builders and maintainers, evaluators, and authorities engaged in certifying the system for a variety of purposes, such as the readiness of the system for use, the regulatory compliance of the system, the system's compliance with various levels of security policy, and the system's fulfillment of legal provisions.

- Value Assessment Method: A way to determine whether the architecture meets the assessment criteria. Value assessment methods come in various forms, such as portfolio management process, multiattribute utility analysis, mission impact assessment, business case analysis, socioeconomic analysis, strategy-to-task analysis, and user focus group study.

11.8 Exercises

- 11.1 Identify the stakeholders and their concerns for a family car. Identify the stakeholders and their concerns for a commercial cargo truck. Compare and contrast these lists. Which stakeholders will gain and which ones will lose in each case? What are the key differences between concerns for a car and for a truck?

- 11.2 Explain in your own words what architecture is. Explain the difference between architecture and design. Why is it important to make this distinction?

- 11.3 Explain the difference between fundamental objectives and means objectives. Identify the fundamental objectives and means objectives for a family car. Identify the fundamental objectives and means objectives for a commercial cargo truck.

- 11.4 Explain the distinction between value assessment criteria and architecture analysis criteria. Define the value assessment criteria for a family car. Define the value assessment criteria for a commercial cargo truck. Define the architecture analysis criteria for these two cases.

- 11.5 Identify some possible architecture evaluation approaches that can be used when evaluating the architecture of a family car. Which approaches would be most appropriate? Map the stakeholder concerns for a family car to the relevant approaches. For each concern, which approach would be most appropriate and why?

- 11.6 Identify some possible value assessment methods that can be used when evaluating the architecture of a family car. Which value assessment methods would be most appropriate? Map the stakeholder concerns for a family car to the relevant methods. For each concern, which value assessment method would be most appropriate and why?

- 11.7 Identify some possible architecture analysis methods that can be used when evaluating the architecture of a family car. Which architecture analysis methods would be most appropriate? Map the stakeholder concerns for a family car to the relevant methods. For each concern, which architecture analysis method would be most appropriate and why? Identify the architecture attributes most relevant to each architecture analysis method chosen.

References

- Alexander, C. (1964) Notes on the Synthesis of Form, Harvard University Press.

- Firesmith, D., Capell, P., Falkenthal, D., Hammons, C.B., Latimer, D.T., and Merendino, T. (2008) The Method Framework for Engineering System Architectures, Auerbach Publications.

- ISO/IEC 15939 (2007) Systems and Software Engineering — Measurement Process, ISO.

- ISO/IEC 42020 (In press, publication expected in 2017) Systems and software engineering — Architecture Processes, ISO.

- ISO/IEC 42030 (In press, publication expected in 2018) Systems and software engineering — Architecture Evaluation, ISO.

- ISO/IEC/IEEE 42010 (2011) Systems and software engineering — Architecture Description, ISO.