Chapter 6. Understanding metadata

Conquering your fears of extracting text from files in a few lines of Java code has hopefully put Tika on your personal must-have list. The ease and simplicity with which Tika can turn an afternoon’s parsing work into a smorgasbord of content handler plugins and event-based text processing is likely fresh on your mind. If not, head back to chapter 5 and relive the memories.

Looking ahead, sometimes before you’ve even obtained the textual content within the files you’re interested in, you may be able to weed out which files you’re not interested in, based on a few simple criteria, and save yourself a bunch of time (and processing power).

Take, for example, the use case presented in figure 6.1.

Figure 6.1. The search engine process and metadata. Metadata about a page, including its title, a short description, and its link are used to determine whether to “click” the link and obtain the content.

Figure 6.1 shows a user’s perspective on a search engine. Much of the time is spent inspecting links—a critical step in the search engine process is deciding which links to follow (and which not to follow). Considering that some web pages may be larger than others, and unduly waste precious time and resources, a user’s goal is to leverage the few pieces of metadata, or data about data, available in the web page’s title, description, and so on, to determine whether the web page is worth visiting.

In this scenario, a user is searching for web pages about a new book on software technology written by a pair of good-looking gentlemen. The user is interested in purchasing the book and is ultimately searching for the first link returned from the Google search, but wants to consider at least a few other relevant web pages that may also yield the desired outcome. How should the user go about using the web pages returned from their query?

The user (often unconsciously) examines the few snippets of information available for each result in the list. These snippets of information include the web page’s title, description, and URL. Whether they realize it or not, they’re using metadata to make their decision. Clicking can be expensive, as sometimes web pages are large, and contain large numbers of scripts or media files that must be fetched by the browser upon requesting the web page.

This scenario illustrates the power of metadata as an effective summary of the information stored in web pages. But metadata isn’t limited to a few simple fields—it can be much richer, and include date/time ranges, value ranges for particular data values, spatial locations, and a host of other properties.

Consider a set of PDF research paper files stored on a local hard disk. As a developer, you may be writing a program whose goal is to process papers written by a particular author (or set of authors), and to only consider those papers produced by a particular version of the PDF generation software. Since some of the files may be quite large, and contain fancy diagrams, tables, and figures, you may be interested in a quick means of deciding whether or not the PDF files are of interest to your program.

Metadata comes to the rescue again in this scenario, as often PDF files contain explicit document metadata that rapidly (without reaching into the file and extracting its textual content which may not even ultimately answer your question) exposes fields such as Author and PDFVersion, and that allows you to engage in the weeding out process quickly and with low memory footprint.

So, how does Tika help you deal with metadata? We’ll spend the rest of this chapter answering that question in great detail. We’ll first focus on exploring the existing metadata standards, and how Tika leverages these standards to provide a common vocabulary and representation of metadata across file formats. As the old saying goes, “The best thing about standards is that there are so many to choose from,” but no need to worry—we’ll point out which standards are more generic, and which are specific to concrete types of files.

Either way, you’ll want to keep Tika close by, as it’ll allow you to easily leverage all kinds of metadata standards, and to convert between them using metadata transformations. Capturing and exposing metadata from documents would be of little use without some quality control, so we’ll explain how Tika helps you in that regard. Throughout the chapter, we’ll build on top of the LuceneIndexer from chapter 5, focusing on making it metadata-aware.

To begin, let’s discuss a few of the existing metadata standards to give you a feel for what types of metadata fields and relationships are available to you as a software developer, and more importantly, as a Tika user.

6.1. The standards of metadata

So metadata is useful summary information, usually generated along with the document itself, that allows you to make informed decisions without having to reach into the document and extract its text, which can be expensive. What are some of the types of things that you can do with metadata? And are there any existing metadata guidelines or specifications that you can leverage to help figure this out?

As it turns out, the answer to both questions is, yes! This section will teach you about the two canonical types of metadata standards as well as different uses of standards, including data quality assessment and validation, as well as metadata unification.

6.1.1. Metadata models

Though PDF file and HTML page properties are useful for making decisions such as Do I want to read this research paper? or Is this the web page I was looking for?, the property names themselves don’t tell you everything you need to know in order to make use of them. For example, is PDFVersion an integer or an alphanumeric? This would be useful to know because it would allow you to compare different PDFVersion attributes. What about Author? Is it multivalued, meaning that a paper can have multiple authors, or is it only single-valued?

To answer these questions, we usually turn to metadata standards or metadata models. Standards describe all sorts of information about metadata such as cardinality (of fields), relationships between fields, valid values and ranges, and field definitions, to name a few. Some representative properties of metadata standards are given in table 6.1.

Table 6.1. Relevant components of a metadata standard (or metadata model). Metadata standards help to differentiate between metadata fields, allow for their comparison and validation, and ultimately clearly describe the use of metadata fields in software.

|

Property |

Definition |

|---|---|

| Name | The name of the metadata field, such as Author or Title. The name is useful for humans to discern the meaning of the property, but doesn’t help a software program to disambiguate metadata properties. |

| Definition | The definition of the metadata property, typically in human consumable form. If the metadata property were Target Name and the domain of discourse were planetary science, a definition might be, “The celestial body that the mission and its instruments are observing.” |

| Valid values | The allowed or valid values for a particular metadata property. Valid values may identify a particular numerical range, for example, between 1 and 100. Valid values may also identify a controlled-value set of allowed values; for example, if the metadata property were CalendarMonth, a three-character representation of the 12 calendar months in a year, we may have a valid value set of {Jan, Feb, Mar, Apr, May, Jun, Jul, Aug, Sep, Oct, Nov, Dec}. |

| Relationships | Indicates that this property may have a relationship with another property, such as requiring its presence. For example, if the Latitude field is present, so should the Longitude field. |

| Cardinality | Prescribes whether the metadata property is multivalued, for example, metadata describing a file’s set of MimeType names. |

The International Standards Organization (ISO) has published a reference standard for the description of metadata elements as part of metadata models, numbered ISO-11179. Found at http://metadata-stds.org/11179/, ISO-11179 prescribes a generally accepted mechanism for defining metadata models.

Multitudes of metadata models are out there, and they can be loosely classified as either general models or content-specific models, as depicted in figure 6.2.

Figure 6.2. Classes of metadata models. Some are general, such as ISO-11179 and Dublin Core. Others are content-specific: they’re unique to a particular file type, and only contain metadata elements and descriptions which are relevant to the content type.

Tika supports both general and content-specific metadata standards.

General Metadata Standards

General metadata standards are applicable to all known file types. The attributes, relationships, valid values, and definitions of these models focus on the properties that all electronic documents share (title, author, format, and so forth). Some examples of these models include ISO-11179 and Dublin Core, http://dublincore.org/. ISO-11179 defines the important facets of metadata attributes that are part of a metadata model. Dublin Core is a general metadata model consisting of less than 20 attributes (Creator, Publisher, Format) which are said to describe any electronic resource. Finally, the Extensible Metadata Platform (XMP: see http://www.adobe.com/products/xmp/) is a generic standard that provides a unified way for storing and transmitting metadata information based on various different metadata schemas such as Dublin Core or the more content-specific ones described next.

Content-Specific Metadata Standards

Content-specific metadata standards are defined according to the important relationships and attributes associated with specific file types and aren’t exclusively generic. For example, attributes, values, and relationships associated with Word documents such as the number of words or number of tables aren’t likely to be relevant to other file types, such as images. Other examples of content-specific metadata standards are Federal Geographic Data Committee (FGDC), a model for describing spatial data files, and the XMP dynamic media schema (xmpDM), a metadata model for digital media such as images, audio, and videos.

You can get a list of which standard metadata models your version of Tika supports via the --list-met-models option we saw in chapter 2:

java -jar tika-app-1.0.jar --list-met-models

The full output is a long list of supported metadata, so instead of going through it all, let’s focus on a few good examples of both generic and content-specific metadata models. In appendix B you’ll find a full description of all the metadata models and keys supported by Tika.

6.1.2. General metadata standards

Most electronic files available via the internet have a common set of metadata properties, the conglomerate of which are part of what we call general metadata models or general standards for metadata. General models describe electronic resources at a high level as in who authored the content, what format(s) the content represented is in, and the like.

To illustrate, let’s look at some of the properties of the Dublin Core metadata model as supported by Tika. Recall the command we showed in the previous section. By using a simple grep command, we can augment the --list-met-models output to isolate only the Dublin Core part:

java -jar tika-app-1.0.jar --list-met-models | grep -A16 DublinCore

This produces the following output:

DublinCore CONTRIBUTOR COVERAGE CREATOR DATE DESCRIPTION FORMAT IDENTIFIER LANGUAGE MODIFIED PUBLISHER RELATION RIGHTS SOURCE SUBJECT TITLE TYPE

Looking at these attributes, it’s clear that most or all of them are representative of all electronic documents. Think back to table 6.1. What would the valid values be for something like the FORMAT attribute? Most of the time the metadata field is filled with a valid MIME media type as we discussed in chapter 4. What would the cardinality be for something like the AUTHOR attribute? A document may be authored by multiple people, so the cardinality is one or more values.

We’ll cover ways that Tika can help you codify the information from table 6.1 on a per-property basis later in section 6.1.4. For now, let’s focus in on content-specific metadata models.

6.1.3. Content-specific metadata standards

Generic metadata standards and models are great because they address two fundamentally important facets of capturing and using metadata:

- Filling in at least some value per field— Content-specific metadata standards provide at least some value for each field (for example, for a PDF file the values for FORMAT and TITLE might be application/pdf and mypdffile.pdf, respectively), reducing the generic nature of the metadata.

- Being easily comparable— Mainly due to having some default value, the actual attributes themselves are so general that they’re more likely to mean the same thing (it’s clear what TITLE is referring to in a document).

On the other hand, content-specific metadata standards and models are less likely to fulfill either of these properties. First, they aren’t guaranteed to fill in any values of any of their particular fields. Take MS Office files and their field, COMPANY, derived from the same grep trickery we showed earlier:

java -jar target/tika-app-1.0.jar --list-met-models | grep -A28 MSOffice MSOffice APPLICATION_NAME APPLICATION_VERSION AUTHOR CATEGORY CHARACTER_COUNT CHARACTER_COUNT_WITH_SPACES COMMENTS COMPANY CONTENT_STATUS CREATION_DATE EDIT_TIME KEYWORDS LAST_AUTHOR LAST_PRINTED LAST_SAVED LINE_COUNT MANAGER NOTES PAGE_COUNT PARAGRAPH_COUNT PRESENTATION_FORMAT REVISION_NUMBER SECURITY SLIDE_COUNT TEMPLATE TOTAL_TIME VERSION WORD_COUNT

COMPANY will only be filled out if the user entered a company name when installing MS Office on the computer that created the file. So, if you didn’t fill out the Company field when registering MS Office, and you begin sharing MS Word files with your other software colleagues, they won’t be able to use Tika to see what company you work for. (For privacy-minded people, this is a good thing!)

As for being easily comparable, this is another area where content-specific metadata models don’t provide a silver bullet. The LAST_MODIFIED field in the HttpHeaders metadata model doesn’t correspond directly to the MODIFIED field in the DublinCore model, nor does it correspond to the LAST_SAVED field from the MSOffice metadata model. So, content-specific metadata model attributes aren’t easily comparable across metadata models.

Most document formats in existence today have a content- or file-specific metadata model associated with them (even in the presence of a general model, like Dublin Core). In addition to common models such as XMP, there are a slew of MS Office metadata formats, various models for science files such as Climate Forecast for climate sciences and FITS for astrophysics. A bunch of formats are out there, and their specifics are outside the scope of this book. The good news is this: Tika already supports a great number of existing content-specific metadata models, and if it doesn’t support the one you need, it’s extensible so you can add your own. We’ll show you how throughout the rest of the chapter.

Let’s not get too far ahead of ourselves though. First we’ll tell you a bit about metadata quality, and how it influences all sorts of things like comparing metadata, understanding it, and validating it.

6.2. Metadata quality

Metadata is like the elephant in the room that no one wants to be the first to identify: how did it get there? What’s it going to do? What can we do with it?

Metadata comes from a lot of different actors in the information ecosystem. In some cases, it’s created when you save files in your favorite program. In other cases, other software that touches the files and delivers them to you over the internet annotates the file with metadata. Sometimes, your computer OS will create metadata for your file.

With so many hands touching a file’s metadata, it’s likely that the quality and abundance of the metadata captured about your files will be of varying quality. This can lead to problems when trying to leverage the metadata captured about a file, for example, for validation, or for making decisions about what to do with the file.

In this section, we’ll walk you through the challenges of metadata quality and then see how Tika comes to the rescue, helping you more easily compare and contrast the collected metadata about your files. Onward!

6.2.1. Challenges/Problems

The biggest thing we’ve glossed over until now is how metadata gets populated. In many cases, the application that generates a particular file is responsible for annotating a file with metadata. An alternative is that the user may explicitly fill out metadata about the file on their own when authoring it. Many software project management tools (such as MS Project, or FastTrack on Mac OS X) prompt a user to fill out basic metadata fields (Title, Duration, Start Project Date, End Project Date, and so on) when authoring the file.

Sometimes, downstream software programs author metadata about files. A classic example is when a web server returns metadata about the file content it’s delivering back to a user request. The web server isn’t the originator of the file, but it has the ability to tell a requesting user things such as file size, content type (or MIME type), and other useful properties. This is depicted in figure 6.3.

Figure 6.3. A content creator (shown in the upper left portion of the figure) may author some file in Microsoft Word. During that process, Word annotates the file with basic MSOffice metadata. After the file is created, the content creator may publish the file on an Apache HTTPD web server, where it will be available for downstream users to acquire. When a downstream user requests the file from Apache HTTPD, the web server will annotate the file with other metadata.

With all of these actors in the system, it’s no wonder that metadata quality, or the examination and assessment of captured metadata for file types, is a big concern. In any of the steps in figure 6.3, the metadata for the file could be changed or simply not populated, affecting some downstream user of the file or some software that must make sense of it later.[1] What’s more, even if the metadata is populated, it’s often difficult to compare metadata captured in different files, even if the metadata captured represents the same terminology. This is often due to each metadata model using its own terms, potentially its own units for those terms, and ultimately its own definitions for those terms as well.

1 In a way, this is one of the main things that makes metadata extraction, and libraries that do so like Tika, so darned useful. So maybe we Tika community members should be happy this occurs!

Metadata quality is of prime importance, especially in the case of correlating metadata for files of different types, and most often different metadata models. As a writer of software that must deal with thousands of different file types and metadata models every day, it’s no easy challenge to tackle metadata correlation. You’re probably getting used to this broken record by now, but here comes Tika to save the day again!

6.2.2. Unifying heterogeneous standards

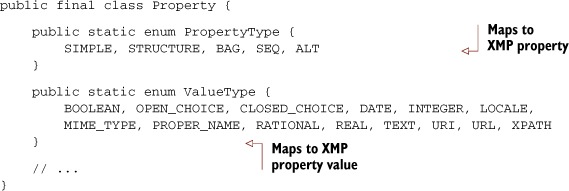

Lucky for us, Tika’s metadata layer is designed with metadata quality in mind. Tika provides a Property class based on the XMP standard for capturing metadata attributes. XMP defines a property (called PropertyType in Tika) as some form of metadata captured about an annotated document. XMP also defines property values that are captured for each metadata property. In Tika we call XMP property values ValueTypes. Let’s take a quick look at a snippet of the Tika Property class.

Listing 6.1. Property class and support for XMP-like metadata

The PropertyType and ValueType enums allow Tika to define a metadata attribute’s cardinality (is it a SIMPLE value, or a sequence of them—called SEQ for shorthand), its controlled vocabulary (a CLOSED_CHOICE or simple OPEN_CHOICE), and its units (a REAL or an INTEGER). Using Tika and its Property class, you can decide whether LAST_MODIFIED in the HttpHeaders metadata model is roughly equivalent in terms of units, controlled vocabulary, and cardinality to that of LAST_SAVED in the MSOffice metadata model.

These capabilities are useful in comparing metadata properties (recall from table 6.1 that these are important things to capture for each metadata element), in validating them, in understanding them, and in dealing with heterogeneous metadata models and formats. Tika’s goal is to allow you to curate high-quality metadata in your software application.

So now that you’re familiar with metadata models, Tika’s support for the different properties of metadata models, and most of the important challenges behind dealing with metadata models, it’s time to learn about Tika’s metadata APIs in greater detail.

6.3. Metadata in Tika

In this section, we’ll jump into Tika’s code-level support for managing instances of metadata—the actual information captured in metadata, informed by the metadata models. Specifically we’ll explore Tika’s org.apache.tika.metadata package and its Metadata and Property classes, and their relationships. These classes will become your friend: transforming metadata and making it viewable by your end users is going to be something that you’ll have to get used to. Never fear! Tika’s here to help.

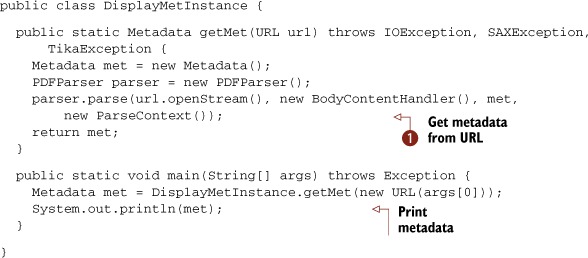

We’ve talked a lot so far about metadata models, but we’ve done little to show what instances of those models look like. Metadata instances are actual metadata attributes, prescribed by a model, along with their values that are captured for files. In other words, instances are the actual metadata captured for each file that you run through Tika. Let’s get ourselves some metadata to work with in the following listing. When given a URL, the program will obtain the metadata corresponding to the content available from that URL.

Listing 6.2. Metadata instances in Tika

The output from this program is a Java String representation of the Tika Metadata instance. Run listing 6.2 on a PDF file (passed in via the URL parameter identified

![]() ) and the output may look like the following (reformatted for easier viewing):

) and the output may look like the following (reformatted for easier viewing):

created=Sun Jul 25 09:32:47 PDT 2010

producer=pdfeTeX-1.21a

creator=TeX

xmpTPg:NPages=20

PTEX.Fullbanner=This is pdfeTeX, Version 3.141592-1.21a-2.2 (Web2C 7.5.4)

kpathsea version 3.5.6

Creation-Date=2010-07-25T16:32:47Z

Content-Type=application/pdf

The preceding example contains seven metadata attributes—created, producer, creator, xmpTPg:NPages, PTEX.Fullbanner, Creation-Date, and Content-Type—and seven corresponding metadata values. The values and attributes used in this Metadata object instance in Tika were selected by the PdfParser class in Tika. Recall from chapter 5 that each Tika Parser class not only extracts text using XHTML as the representation, but also extracts and populates Metadata, provided to it in its parse() method:

void parse(

InputStream stream, ContentHandler handler,

Metadata metadata, ParseContext context)

throws IOException, SAXException, TikaException;

Each Tika Parser is responsible for using the metadata models defined in the Tika metadata package to determine which metadata attributes to populate. Looking at the example, metadata attributes such as Content-Type come from the HttpHeaders model, others such as creator are defined by the DublinCore model, and for flexibility, Tika allows other attributes (and values) to be populated that may not yet have a defined metadata model in Tika’s org.apache.tika.metadata package.

At its core, Tika provides a Metadata class and a map of keys and their multiple values to record metadata for files it examines. Let’s wave the magnifying class over it and see what’s inside.

6.3.1. Keys and multiple values

Tika’s Metadata class, shown in figure 6.4, provides all of the necessary methods and functionality for recording metadata instances extracted from files. The class inherits and implements the set of metadata models (such as DublinCore) shown in the periphery of the diagram. The core class, org.apache.tika.metadata.Metadata, is a key/multivalued structure that allows users to record metadata attributes as keys using the set(Property,...) methods (which use the Property class previously discussed). The Metadata class allows a user to add multiple values for the same key (as in the case of Metadata.AUTHOR having multiple author values) using the add(String, String) method. The class also provides other methods that allow for introspection, including names(), which returns the set of recorded attribute names in the Metadata object instance, and the isMultiValued(String) method that allows users to test whether a particular metadata attribute has more than one value recorded. The Metadata class has a set of XMP-compatible Property attributes as we mentioned earlier, where each property is defined by its PropertyType (think attribute name) and its associated PropertyValue (think attribute value). Property classes provide facilities for validating and checking attributes, their units, and types, and for cross-comparing them.

Figure 6.4. The code-level organization of the Tika metadata framework. A core base class, Metadata, provides methods for getting and setting metadata properties, checking whether they’re multivalued, and representing metadata in the correct units.

To implement various metadata models, the Metadata class implements several model interfaces, such as ClimateForecast, TIFF, and Geographic, each of which provide unique metadata attributes to the Metadata object instance. The Metadata class has a one-to-many relationship with the Property class. Each Property provides necessary methods for validating metadata values, for example, making sure that they come from a controlled value set, or checking their units. This is accomplished using the XMP-like PropertyType and PropertyValue helper classes.

Tika’s Parser implementations can use the Metadata class, as can users of Tika who are interested in recording metadata and leveraging commonly defined metadata attributes and properties. Because of its general nature, the Metadata class serves as a great intermediate container of metadata, and can help you generate a number of different views of the information you’ve captured, including RSS and other formats.

6.3.2. Transformations and views

Since metadata extraction can be time-consuming, often involving the integration of parsing libraries, MIME detection strategies, text extraction, and other activities, once you have metadata for a corpus of files, you’ll probably want to hold on to it for a while. But the metadata community is constantly defining new metadata standards that somehow better support a particular user community’s needs.

Because of this, you’ll inevitably be asked the question, “Can you export file X’s metadata in my new Y metadata format?” It’s worth considering what that request entails. Before you jump across the table and attack the person who caused you more work, consider how Tika can be of service to help defuse this situation.

Representing Metadata Instances

Often, new metadata models are simply formatting variations and different representations of existing attributes defined in common metadata models, and already captured by Tika. Let’s take the Really Simple Syndication (RSS) format as an example. RSS has been a commonly used XML-based format since the early 2000s, and is integrated into most web browsers and news websites as a means of publishing and subscribing to frequently changing content. RSS defines a channel as a set of recently published items. Each RSS document usually contains a single Channel tag (such as News Items or Hot Deals!), and several Item tags which correspond to the recent documents related to that channel.

Transforming Recorded Metadata

As it turns out, turning basic key/multivalued metadata into RSS isn’t as challenging as you may think. The same is true for changing basic key/multivalued metadata into a number of similar XML-based formats, or views of your captured metadata. The steps involved usually boil down to the following:

1. Map the metadata attribute keys into the view’s tag or item names— This process amounts to deciding for each metadata attribute in your recorded metadata what the corresponding view tag’s name is (for example, Metadata.SOURCE maps to RSS’s link tag).

2. Extract the metadata values for each mapped view tag and format accordingly— For each mapped view tag, grab the values in the recorded metadata and shovel them into the view’s output XML representation (for example, shove the value for Metadata.SOURCE into the value for the link tag, and enclose it within an outer item tag).

Now that you know how to represent metadata instances and values in Tika, and how to transform that recorded metadata, let’s take a real use case and revisit the LuceneIndexer example from chapter 5. We’ll augment it to explicitly record DublinCore metadata, and then use that to feed an RSS service that shows the files recently indexed in the last 5 minutes. Sound difficult? Nah, you’ve got Tika!

6.4. Practical uses of metadata

Remember the LuceneIndexer from chapter 5? It was a powerful but simple example that showed how you could use the Tika facade class to automatically select a Tika Parser for any file type that you encountered in a directory, and then index the content of that file type inside of the Apache Lucene search engine.

One thing that we skipped in that prior example was using Tika to extract not just the textual content from the files we encountered, but the metadata as well. Now that you’re a metadata master, you’re ready for this next lesson in your Tika training. We’ll show you two variations where we augment the LuceneIndexer with explicit recording of metadata fields. In the first example, we’ll specifically index DublinCore metadata, leveraging Tika’s support for metadata models. In the second example, you’ll see how easily Tika can be used to extract content-specific metadata for any type of file encountered.

6.4.1. Common metadata for the Lucene indexer

Let’s hop to it. The following listing rethinks the indexDocument(File) function from the existing LuceneIndexer and records some explicit file metadata using the DublinCore model.

Listing 6.3. Extending the Lucene indexer with generic metadata

public void indexWithDublinCore(File file) throws Exception {

Metadata met = new Metadata();

met.add(Metadata.CREATOR, "Manning");

met.add(Metadata.CREATOR, "Tika in Action");

met.set(Metadata.DATE, new Date());

met.set(Metadata.FORMAT, tika.detect(file));

met.set(DublinCore.SOURCE, file.toURL().toString());

met.add(Metadata.SUBJECT, "File");

met.add(Metadata.SUBJECT, "Indexing");

met.add(Metadata.SUBJECT, "Metadata");

met.set(Property.externalClosedChoise(Metadata.RIGHTS,

"public", "private"), "public");

InputStream is = new FileInputStream(file);

tika.parse(is, met);

try {

Document document = new Document();

for (String key : met.names()) {

String[] values = met.getValues(key);

for (String val : values) {

document.add(new Field(key, val, Store.YES, Index.ANALYZED));

}

writer.addDocument(document);

}

} finally {

is.close();

}

}

It’s worth pointing out that Tika’s Metadata class explicitly supports recording metadata as Java core types—String, Date, and others. If you record metadata using a non-String type, Tika will perform validation for you on the field. At the time of this writing, Tika’s support explicitly focuses on Date properties, but additional support is being added to handle the other types. Note the use of the add(String,String) method to record metadata that contains multiple values (like Metadata.CREATOR). The example in listing 6.3 also includes the use of an XMP-style explicit closed choice, recording whether the indexed file is public or private, in terms of its security rights (defined in DublinCore as DublinCore.RIGHTS).

Listing 6.3 is great because it uses a common, general metadata model like Dublin Core to record metadata (we saw in section 6.3.2 what this can buy us), but our example is limited to only using one set of metadata attributes. What if we wanted to use metadata attributes from all of the different metadata models that Tika supports?

Listing 6.4. Extending the Lucene indexer with content-specific metadata

In this example, we leverage the Tika facade class and its parse(InputStream, Metadata) method, an entry point into all of Tika’s underlying Parser implementations. By leveraging the Tika facade, we allow any of Tika’s Parsers to be called, and to contribute metadata in their respective metadata models to the underlying file that we’re indexing in Lucene. Pretty easy, huh?

Now that you have support for arbitrary metadata indexing and the ability to build up your corpus of metadata, let’s see how you can easily transform that metadata and satisfy the inevitable questions you’ll get from some of your downstream users. Just remember, Tika wants you to build bridges to your users, not choke them!

6.4.2. Give me my metadata in my schema!

Suppose one of your users asked you to produce an RSS-formatted report of all of the files your server received within the last 5 minutes. It turns out a hacker got into the system and the security team is doing a forensic audit trying to figure out whether the hacker has created any malicious files on the system.

Assume that you thought so highly of the metadata-aware LuceneIndexer that you created here that you put a variant of it into production on your system long before the hacker got in. So, you have an index that you incrementally add to, usually on the hour, which contains file metadata and free-text content for all files on your system.

In a few lines of Tika-powered code, we’ll show you how to generate an RSS feed from your Tika-enabled metadata index. First, you’ll need to get a listing of the files that have appeared within the last 5 minutes. The following takes care of this for you and provides the general framework for our RSS report.

Listing 6.5. Getting a list of recent files from the Lucene indexer

public String generateRSS(File indexFile) throws CorruptIndexException,

IOException {

StringBuffer output = new StringBuffer();

output.append(getRSSHeaders());

try {

reader = IndexReader.open(new SimpleFSDirectory(indexFile));

IndexSearcher searcher = new IndexSearcher(reader);

GregorianCalendar gc = new java.util.GregorianCalendar();

gc.setTime(new Date());

String nowDateTime = ISO8601.format(gc);

gc.add(java.util.GregorianCalendar.MINUTE, -5);

String fiveMinsAgo = ISO8601.format(gc);

TermRangeQuery query = new TermRangeQuery(Metadata.DATE.toString(),

fiveMinsAgo, nowDateTime, true, true);

TopScoreDocCollector collector =

TopScoreDocCollector.create(20, true);

searcher.search(query, collector);

ScoreDoc[] hits = collector.topDocs().scoreDocs;

for (int i = 0; i < hits.length; i++) {

Document doc = searcher.doc(hits[i].doc);

output.append(getRSSItem(doc));

}

} finally {

reader.close();

}

output.append(getRSSFooters());

return output.toString();

}

The key Tika-enabled part of listing 6.5 is the use of a standard metadata attribute, DublinCore.DATE, as the metadata key to perform the query. Since Lucene doesn’t enforce a particular metadata schema, your use of the metadata-enabled LuceneIndexer allows you to use a common vocabulary and presentation for dates that’s compatible with Lucene’s search system. For each Lucene Document found from the Lucene Query, listing 6.6 demonstrates how to use Tika to assist in unmarshalling the Lucene Document into an RSS-compatible item, surrounded by an enclosing RSS-compatible channel tag (provided by the getRSSHeaders() method and the getRSSFooters() called in the following listing).

Listing 6.6. Using Tika metadata to convert to RSS

public String getRSSItem(Document doc) {

StringBuffer output = new StringBuffer();

output.append("<item>");

output.append(emitTag("guid", doc.get(DublinCore.SOURCE), "isPermalink",

"true"));

output.append(emitTag("title", doc.get(Metadata.TITLE), null,

null));

output.append(emitTag("link", doc.get(DublinCore.SOURCE), null,

null));

output.append(emitTag("author", doc.get(Metadata.CREATOR), null,

null));

for (String topic : doc.getValues(Metadata.SUBJECT)) {

output.append(emitTag("category", topic, null, null));

}

output.append(emitTag("pubDate", rssDateFormat.format(ISO8601.

parse(doc

.get(Metadata.DATE.toString()))), null, null));

output.append(emitTag("description", doc.get(Metadata.TITLE), null,

null));

output.append("</item>");

return output.toString();

}

The getRSSItem(Document) method shown in listing 6.6 is responsible for executing the processed we saw in listing 6.1, effectively using Tika’s standard DublinCore metadata and its values and reformatting it to the RSS format and syntax. Scared of RSS reports, or metadata model–happy end users? Not anymore!

6.5. Summary

Phew! We just used Tika to leverage general and content-specific metadata models (Dublin Core and RSS, respectively), validated the outgoing RSS metadata by leveraging Tika’s Metadata class and its underlying Property class, and built up a search engine index generated in large part by standardizing on Tika metadata and its models. After we constructed the index, we showed you how to transform your recorded metadata instances in Dublin Core into the language of RSS, using Tika APIs. Metadata is grand, isn’t it?

In this chapter, we’ve covered a multitude of things related to the world of metadata. Let’s review.

First, we defined what metadata is (data about data), and its ultimate utility in the world of text extraction, content analysis, and all things Tika. We also defined what a metadata model is, and its important facets: attributes, relationships between those attributes, and information about the attributes, such as their formats, cardinality, definitions, and of course their names! After these definitions, we looked at some of the challenges behind metadata management, and how Tika can help.

- Metadata comparison and quality— We discussed a bunch of different metadata models, and classified them into two areas: general models (like Dublin Core) and content-specific models (HttpHeaders, or MS Word) that are directly related to specific types of files and content. We also highlighted the importance of metadata quality and dealing with metadata transformation.

- Implementation-level support for metadata— With a background on metadata models and standards, we took a deep dive into Tika’s support for representing metadata, looking at Tika’s Metadata class. We discussed the simplicity and power of a basic key/multivalued text-based structure for metadata, and how Tika leverages this simplicity to provide powerful representation of metadata captured in both general and content-specific models for all the files that you feed through the system.

- Metadata representation, validation and transformation in action— The latter part of the chapter focused in on practical examples of Tika’s Metadata class, and we brought the LuceneIndexer example from chapter 5 back to life, showing you how to augment the LuceneIndexer with common Dublin Core metadata, and how to extend the code to include property-specific metadata attributes (Date-related, Integer-related, and more). Finally, we showed you how to turn the metadata extracted by the LuceneIndexer into different metadata views, including some common XML formats you’re probably familiar with (RSS), and even some that you probably aren’t (remember: the metadata community is not out to cause you more work—well, maybe they are!).

It’s been a great ride! Now that you’re a bona fide Tika metadata expert, the time has come to understand how an important piece of per-file metadata gets populated by Tika: the file’s language. It’s a lot harder than it looks, and more involved than simply calling set(Property, String) on Tika’s Metadata class—so much so that it warrants its own chapter, which is next up on your Tika training. Enjoy!